Loss of Temporal Homogeneity and Symmetry in Statistical Systems: Deterministic Versus Stochastic Dynamics

Abstract

1. Introduction

- Temporal symmetry due to time-reversal invariance. Time reversal, which changes the sign of t, is a discrete transformation of time. Invariance under it ensures that forward and backward evolution in time are physically indistinguishable.

- Temporal homogeneity due to invariance under a shift in the origin of time. Shifting all times t by the same constant is a continuous transformation when the shift becomes infinitesimal. Invariance under this shift ensures that the energy remains constant during the evolution of the system.
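The two invariances above can be checked numerically on a toy conservative system. The sketch below assumes a unit-mass harmonic oscillator integrated with velocity Verlet. Both the example and the integrator are our choice and are not taken from the text. Reversing the velocity and integrating forward again retraces the trajectory back to the initial state. Because the force does not depend explicitly on time, the energy stays constant up to the integrator's small O(dt^2) error.

```python
def verlet(x, v, dt, steps, force=lambda x: -x):
    """Velocity-Verlet integration for unit mass; exactly time-reversible
    (up to round-off) for a conservative force with no explicit time dependence."""
    a = force(x)
    for _ in range(steps):
        v_half = v + 0.5 * dt * a
        x = x + dt * v_half
        a = force(x)
        v = v_half + 0.5 * dt * a
    return x, v


def energy(x, v):
    """Total energy of the assumed unit-mass, unit-stiffness oscillator."""
    return 0.5 * v * v + 0.5 * x * x


x0, v0, dt, n = 1.0, 0.0, 0.01, 5000

# forward evolution
x1, v1 = verlet(x0, v0, dt, n)

# time reversal: flip the velocity, evolve forward for the same time, flip back
x_back, v_back = verlet(x1, -v1, dt, n)
v_back = -v_back

reversal_error = max(abs(x_back - x0), abs(v_back - v0))
energy_drift = abs(energy(x1, v1) - energy(x0, v0))
print(f"reversal error: {reversal_error:.1e}, energy drift: {energy_drift:.1e}")
```

The reversal error sits at floating-point round-off level because each Verlet step is algebraically undone by the reversed step.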

1.1. Uniqueness, Temporal Homogeneity and Stochasticity

1.2. Temporal Asymmetry

1.3. Irreversibility and Recurrence Paradoxes, and Molecular Chaos

1.4. Boltzmann’s Response

1.5. Scope of the Review

- Q1: Is the entropy at a given instant defined for a single system (a microstate) or is it an average property of many systems (a macrostate)?

- Q2: What role does the dynamics play in determining the value of the entropy?

- the deterministic dynamics retains memory of the initial state, so equilibrium is not always possible, and

- the temporal symmetry of the deterministic dynamics must be broken if we want to ensure approach to equilibrium.
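Kac's ring model (Section 6.1) illustrates both conclusions above. The sketch below is a minimal version that rests on our own assumptions:

- N balls sit on a ring.
- A fixed random set of marked edges flips a ball's color as the ball crosses them.
- In the stochastic variant, marker positions are redrawn at random at every step. This is one hypothetical way to break the deterministic dynamics.

The deterministic ring returns exactly to its initial state after 2N steps. The stochastic ring instead relaxes toward an equal mixture of colors.

```python
import random


def kac_step(balls, markers):
    """One Kac-ring step: every ball moves one site clockwise and flips colour
    if it crosses a marked edge (edge i joins site i and site i + 1)."""
    n = len(balls)
    new = [0] * n
    for i in range(n):
        new[(i + 1) % n] = balls[i] ^ markers[i]
    return new


def run(n=400, mu=0.1, steps=800, stochastic=False, seed=1):
    """Return the fraction of black balls after each step, starting all white."""
    rng = random.Random(seed)
    markers = [1 if rng.random() < mu else 0 for _ in range(n)]
    balls = [0] * n  # far from equilibrium: all balls white
    history = [sum(balls) / n]
    for _ in range(steps):
        if stochastic:
            # hypothetical noise model: markers redrawn independently each step
            markers = [1 if rng.random() < mu else 0 for _ in range(n)]
        balls = kac_step(balls, markers)
        history.append(sum(balls) / n)
    return history


det = run(stochastic=False)
sto = run(stochastic=True)
print("deterministic: fraction black at t = 2N:", det[-1])
print("stochastic: mean fraction black over last 200 steps:", sum(sto[-200:]) / 200)
```

In the deterministic ring, each ball crosses every marked edge exactly twice in 2N steps, so its color is restored. The deterministic ring is therefore back in its initial state at t = 2N, whatever the marker positions. In the stochastic ring, each ball flips independently with probability mu per step, so all memory of the initial state is lost.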

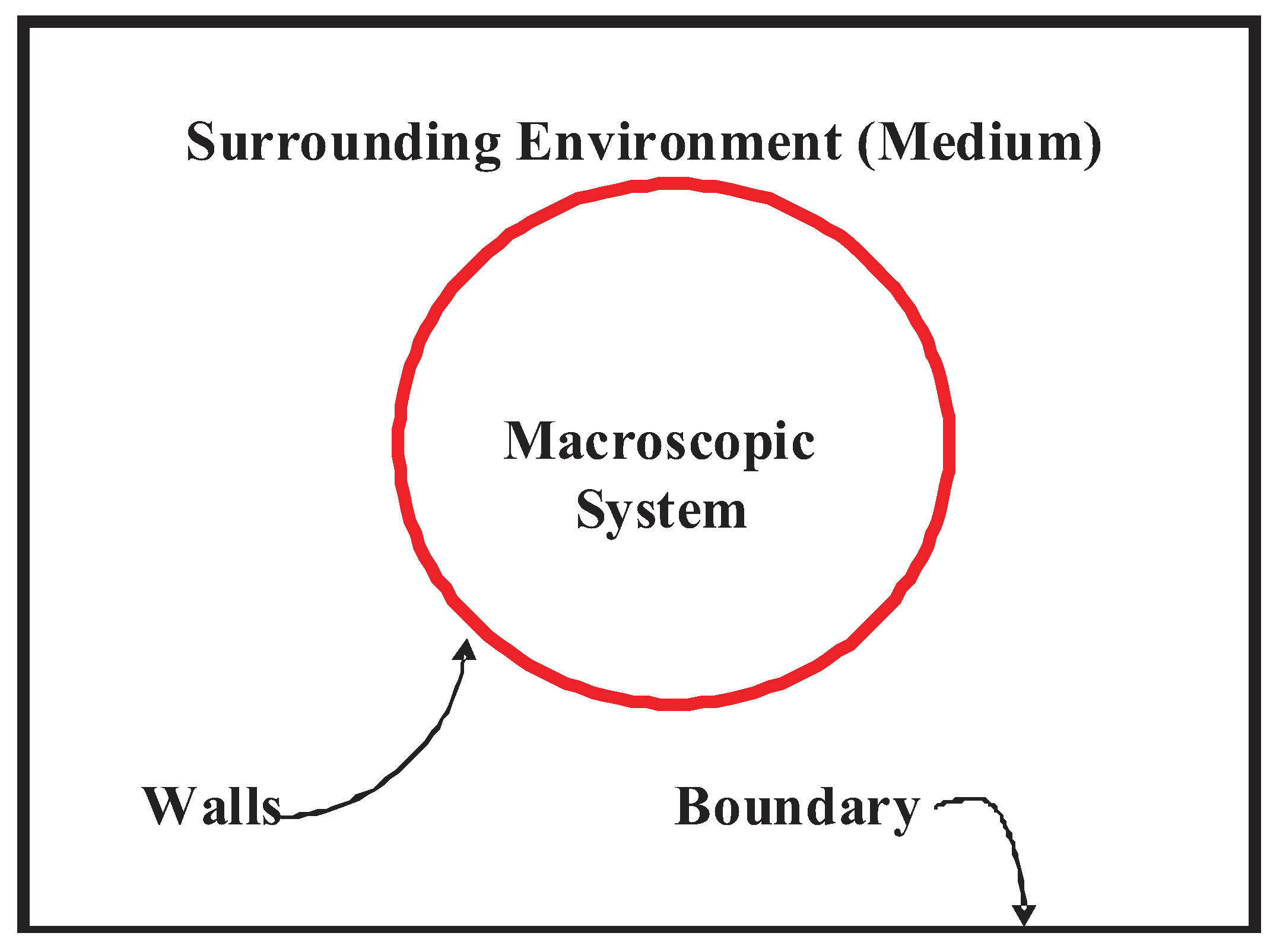

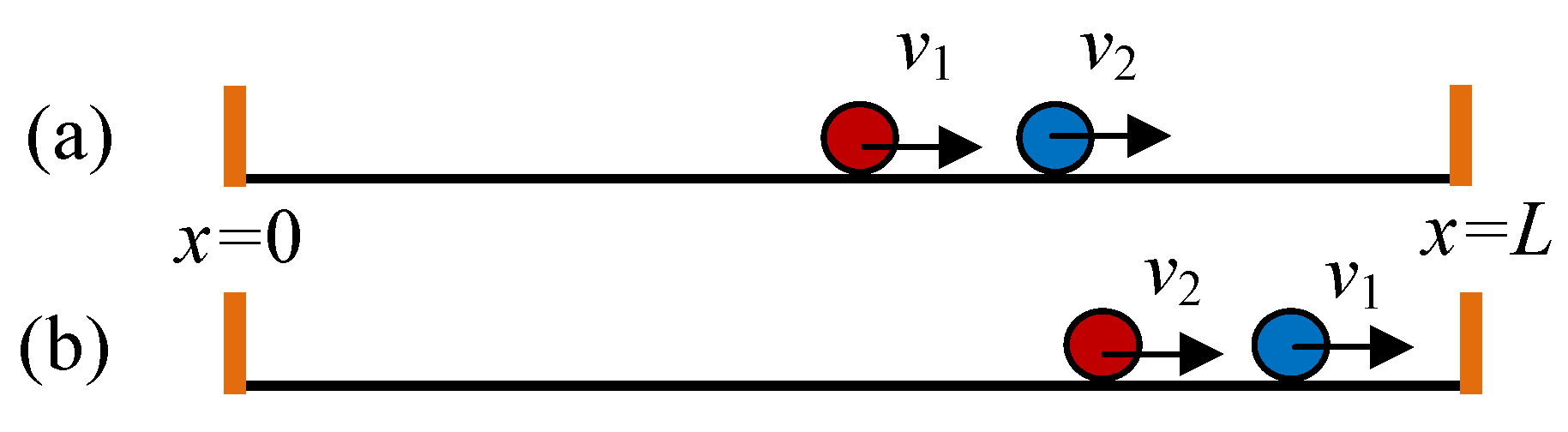

- (a) the (red) walls of the system,

- (b)

- (c) the 3 K radiation from the big bang that fills the entire universe.

2. Equilibrium and Non-equilibrium States

2.1. Entropy as an Average of the Index of Probability or Uncertainty

2.2. Equilibrium and Partial Equilibrium: Principle of No Bias

2.3. Gibbs Formulation of Entropy

3. Concept of Probability: Ensemble Interpretation

3.1. Ensemble of Replicas or Samples

3.2. Principle of Additivity

3.3. Fundamental Axiom

Fundamental Axiom: The thermodynamic behavior of a system is not the behavior of a single sample, but the average behavior of a large number of independent samples, prepared identically under the same macroscopic conditions at the same initial time.

“The methods are essentially statistical in character and only purport to give results that may be expected on the average rather than precisely expected for any particular system…The methods being statistical in character have to be based on some hypothesis as to a priori probabilities, and the hypothesis chosen is the only postulate that can be introduced without proceeding in an arbitrary manner…”

“…that the averages obtained on successive trials of the same experiment will agree with the ensemble average, thus permitting any particular individual system to exhibit a behavior in time very different from the average;”
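The simplest case of non-interacting Ising spins (Section 4.2) can illustrate the Fundamental Axiom and question Q1. The sketch below is a hypothetical example, not the paper's calculation. Each independent sample is a single spin prepared with an assumed probability p_up = 0.3 of pointing up. Every sample is in a definite microstate. Yet the Gibbs entropy computed from the ensemble frequencies (with k_B = 1) approaches −p ln p − (1 − p) ln(1 − p). This is a property of the ensemble, not of any one sample.

```python
import math
import random


def gibbs_entropy(probs):
    """S = -sum p ln p with k_B = 1; zero-probability states contribute nothing."""
    return sum(-p * math.log(p) for p in probs if p > 0)


def ensemble_estimate(p_up=0.3, samples=100_000, seed=7):
    """Prepare many independent single-spin samples identically and average over them."""
    rng = random.Random(seed)
    # each sample is one spin in a definite state, +1 or -1
    spins = [1 if rng.random() < p_up else -1 for _ in range(samples)]
    f_up = spins.count(1) / samples
    mean_spin = sum(spins) / samples
    return f_up, mean_spin, gibbs_entropy([f_up, 1 - f_up])


f_up, m, s = ensemble_estimate()
print(f"fraction up {f_up:.3f}, ensemble-average spin {m:.3f}, entropy {s:.4f}")
print("entropy of one definite microstate:", gibbs_entropy([1.0]))
```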

4. Role of Dynamics: Temporal Interpretation

4.1. Macrostate (System) Dynamics versus Microstate (Microscopic) Dynamics: Temporal Average

4.2. Non-interacting Ising Spins: Ensemble and Temporal Descriptions

4.3. Entropy as a Macrostate Property: Component Confinement

4.3.1. Probability Collapse

- In the experimental glass transition, no measurement is ever made that identifies precisely which component the glass is frozen in. Such a measurement would have to determine the positions of all N particles precisely. Only then could we decipher the particular component the glass is in.
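As a minimal illustration, we take Gibbs' form of the entropy (Section 2.3) with $k_B = 1$. Purely for simplicity, we also assume that the glass can be frozen in any of $C$ equally probable components. Both are assumptions of this sketch, not results stated in the text. Under them, a measurement that identified the component $\alpha_0$ would collapse the component probabilities and remove their contribution to the entropy:

$$
S_{\mathrm{comp}} = -\sum_{\alpha=1}^{C} p_\alpha \ln p_\alpha = \ln C \quad \text{for } p_\alpha = 1/C,
\qquad
p_\alpha \to \delta_{\alpha\alpha_0} \;\Longrightarrow\; S_{\mathrm{comp}} \to 0 .
$$

In this picture, since no such measurement is made, the component probabilities are not collapsed.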

4.3.2. Daily Life Example

4.3.3. System Confined in a Component

4.3.4. Spontaneous Symmetry Breaking and Confinement

4.4. Non-uniqueness of the Temporal Entropy

4.5. Unsuitability of Temporal Averages

5. Deterministic Dynamics

5.1. Poincaré Recurrence Theorem: One-to-one Mapping of Microstates

5.2. Ensemble Entropy in a Deterministic System

5.3. Probability in a Deterministic Dynamics

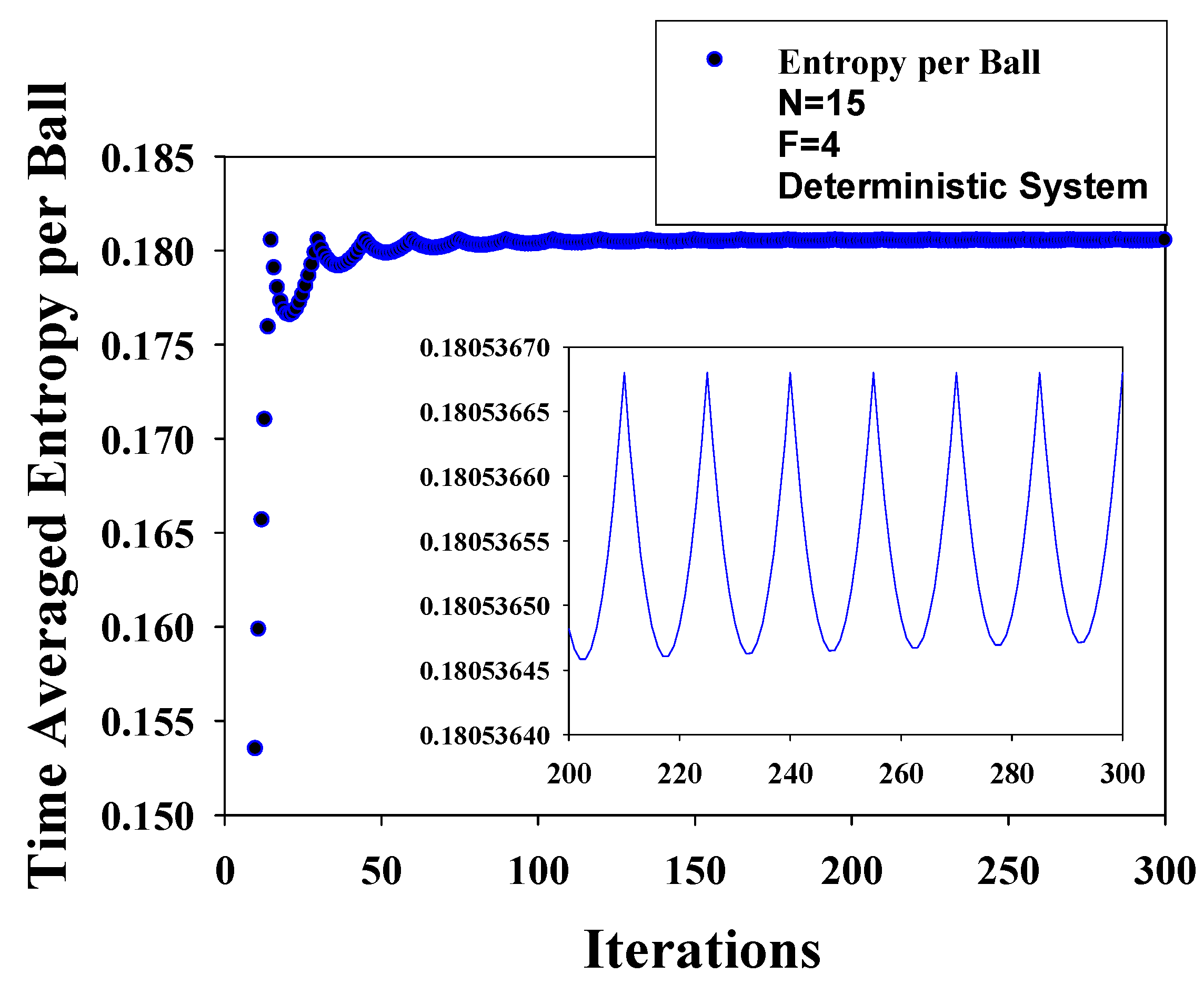

5.4. Temporal Entropy in a Deterministic System

5.4.1. Temporal Entropy: Presence of a Dynamics

5.4.2. Absence of a Dynamics: Throwing a Die

6. Important Consequences of a Deterministic Dynamics

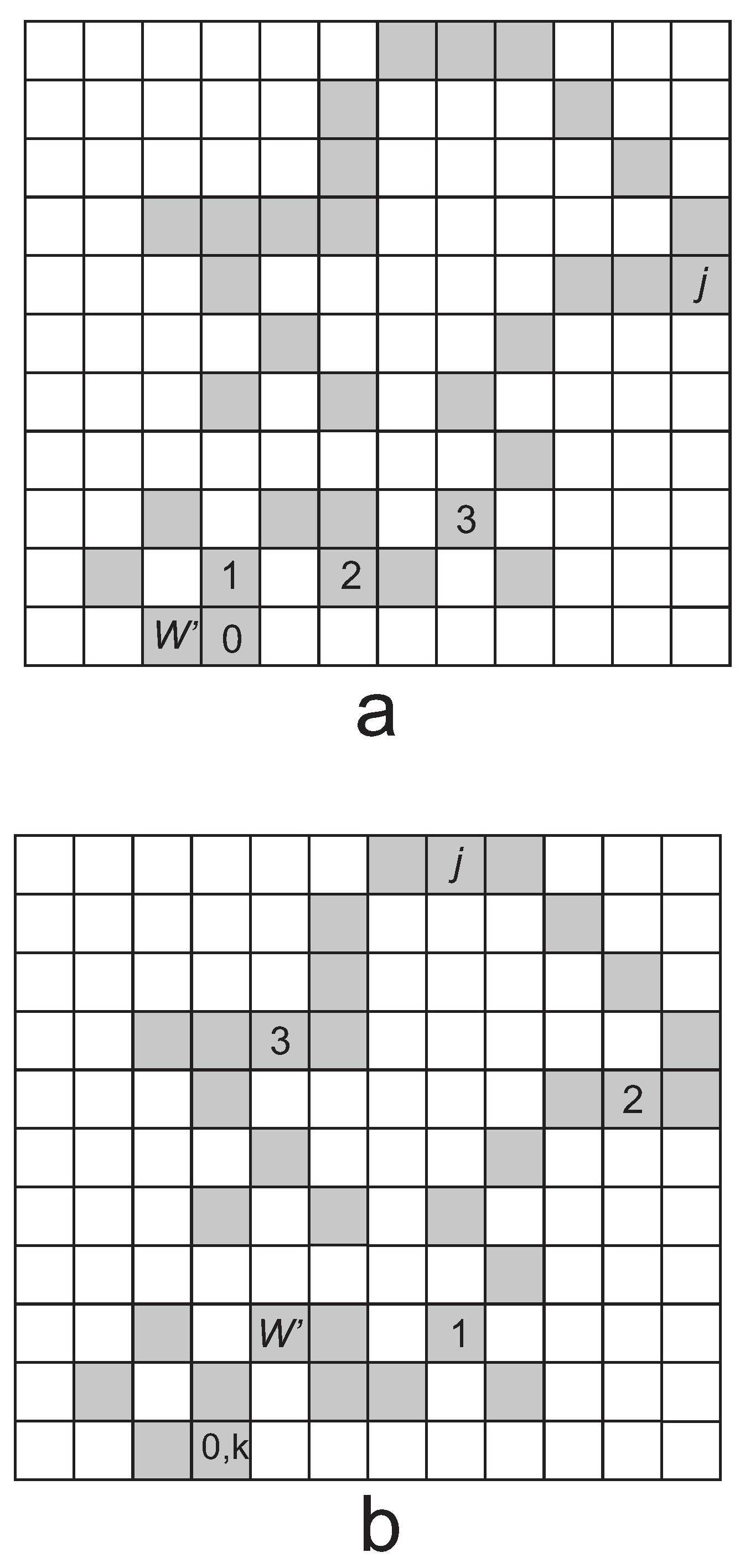

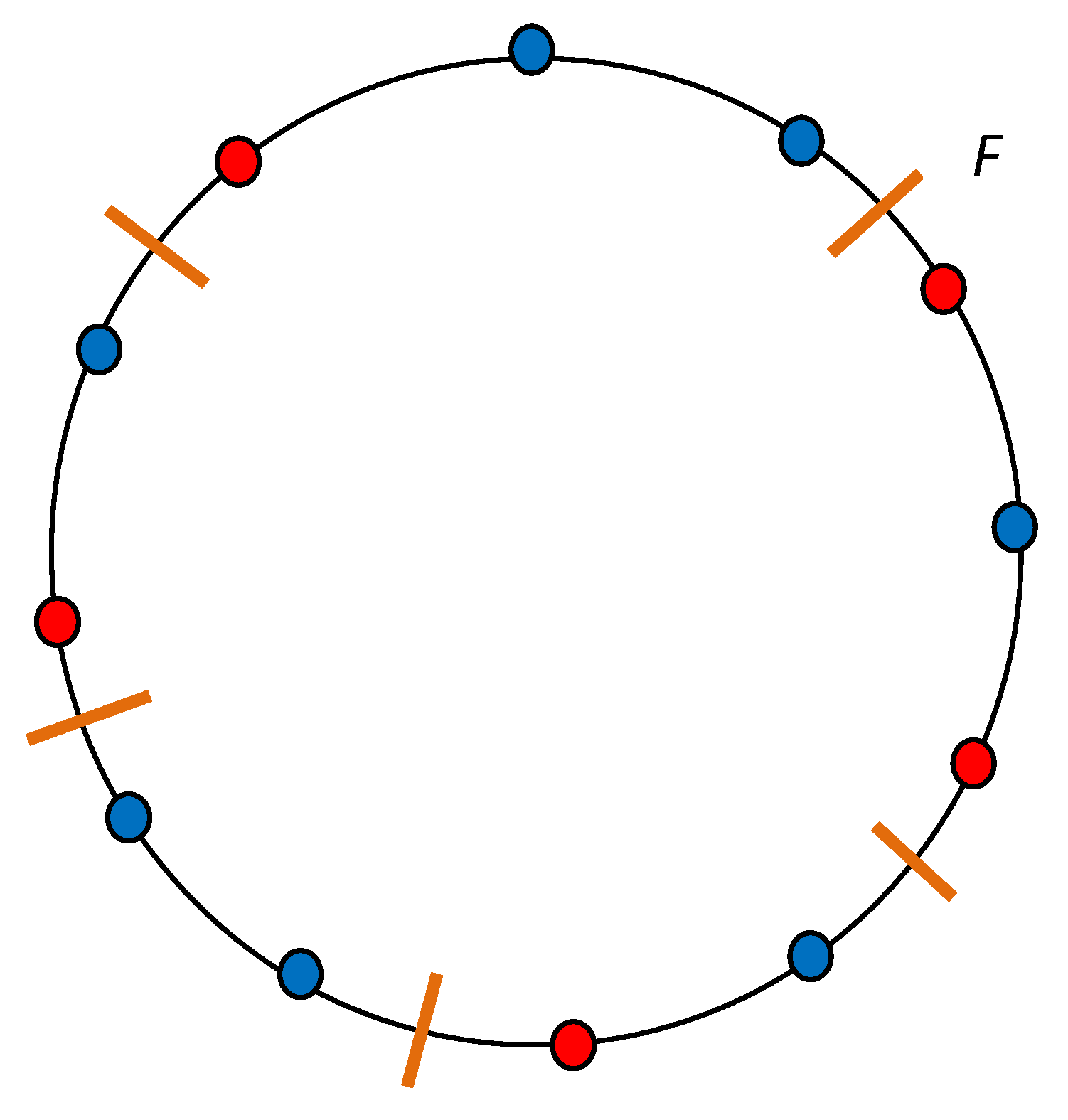

6.1. Lack of Molecular Chaos: Kac Ring Model

6.2. Zermelo’s Incorrect Conclusion

7. Stochastic Dynamics

7.1. Lessons from Kac’s Ring Model

7.1.1. Need for Stochasticity

7.1.2. ↛ under Time-reversal

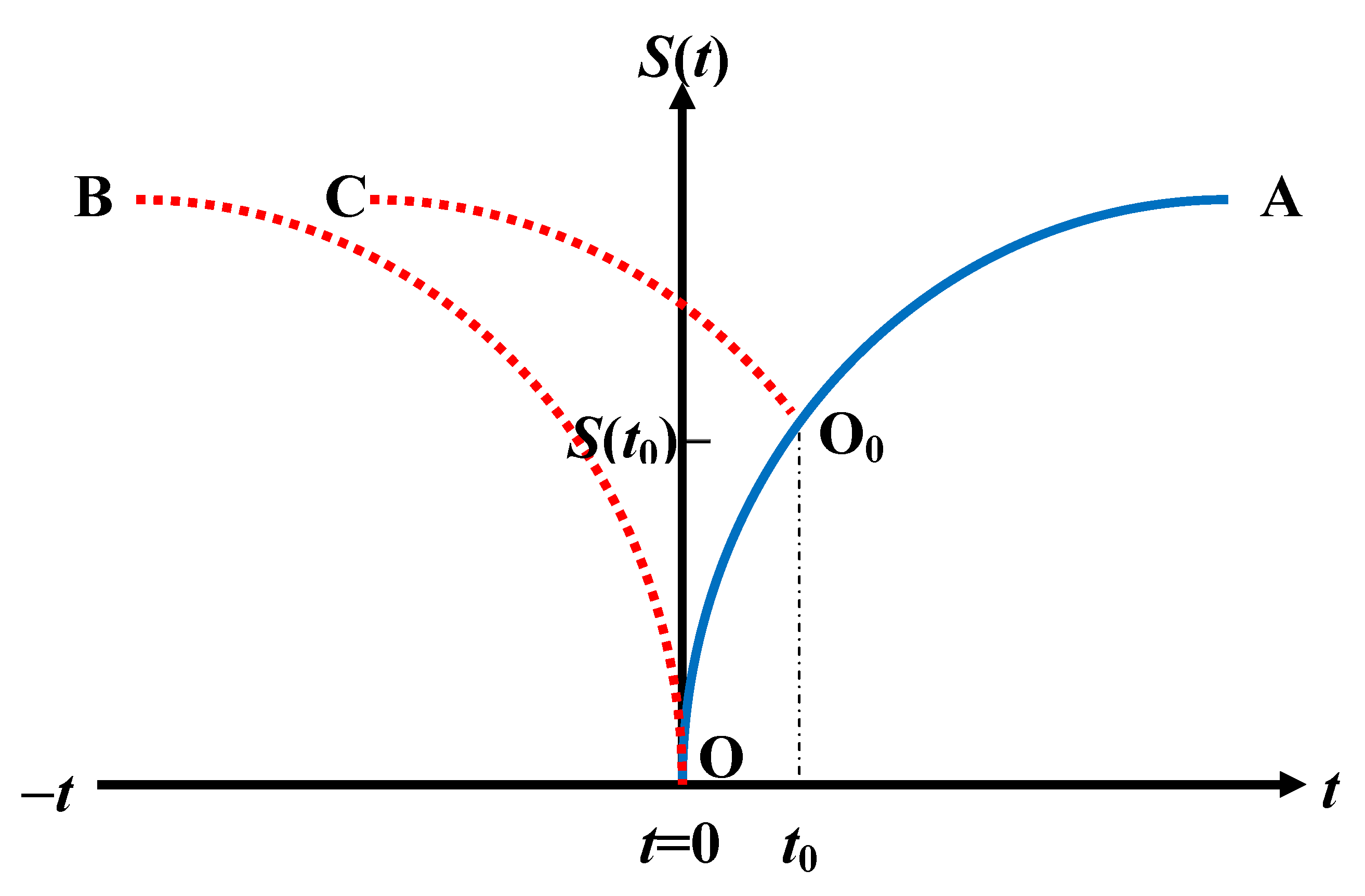

7.1.3. Effect of Time Reversal on the Entropy

7.1.4. Idealized Walls

7.1.5. Real Systems

7.2. One-to-many Mapping of Microstates, Temporal Asymmetry, and Entropy Change

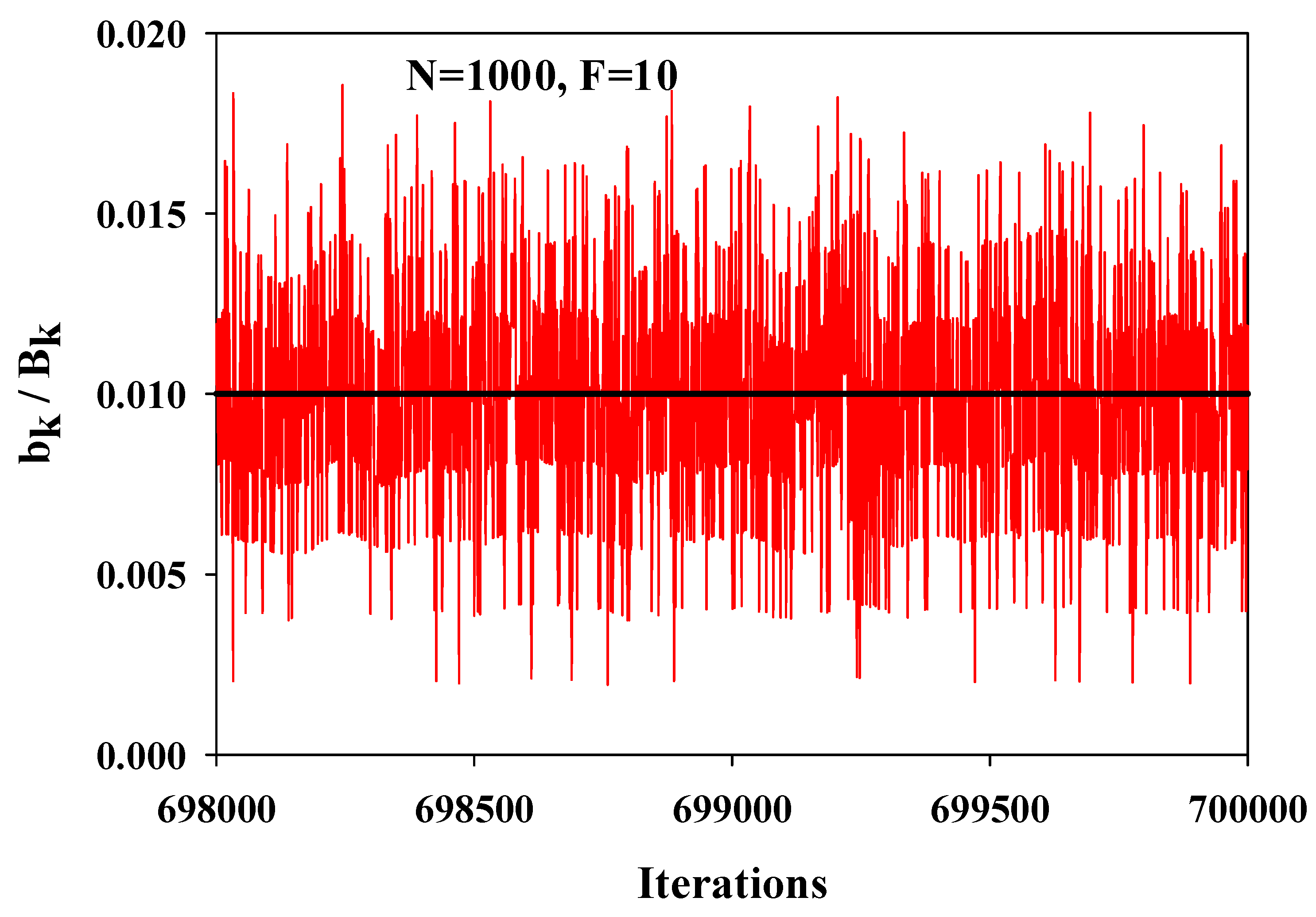

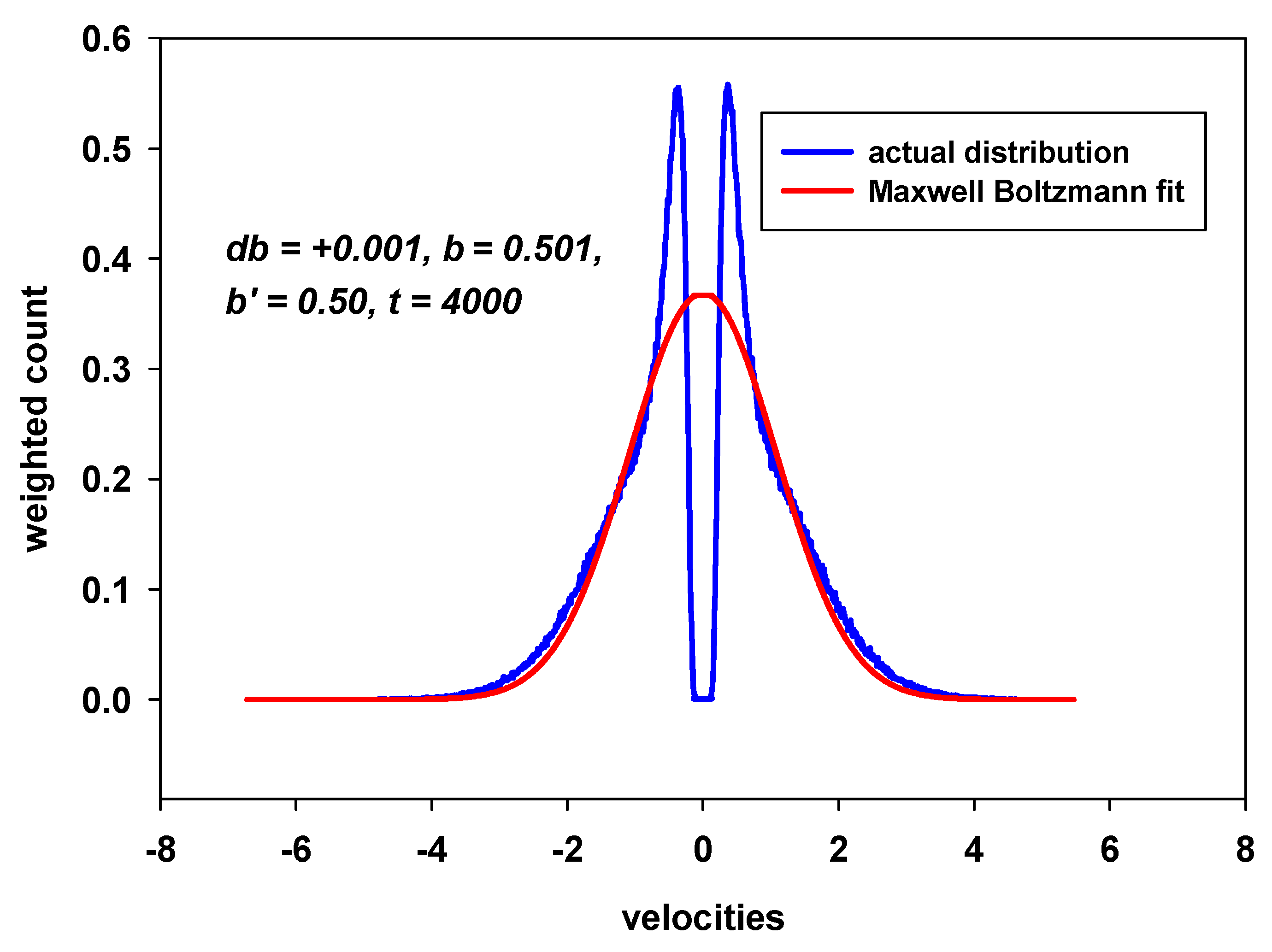

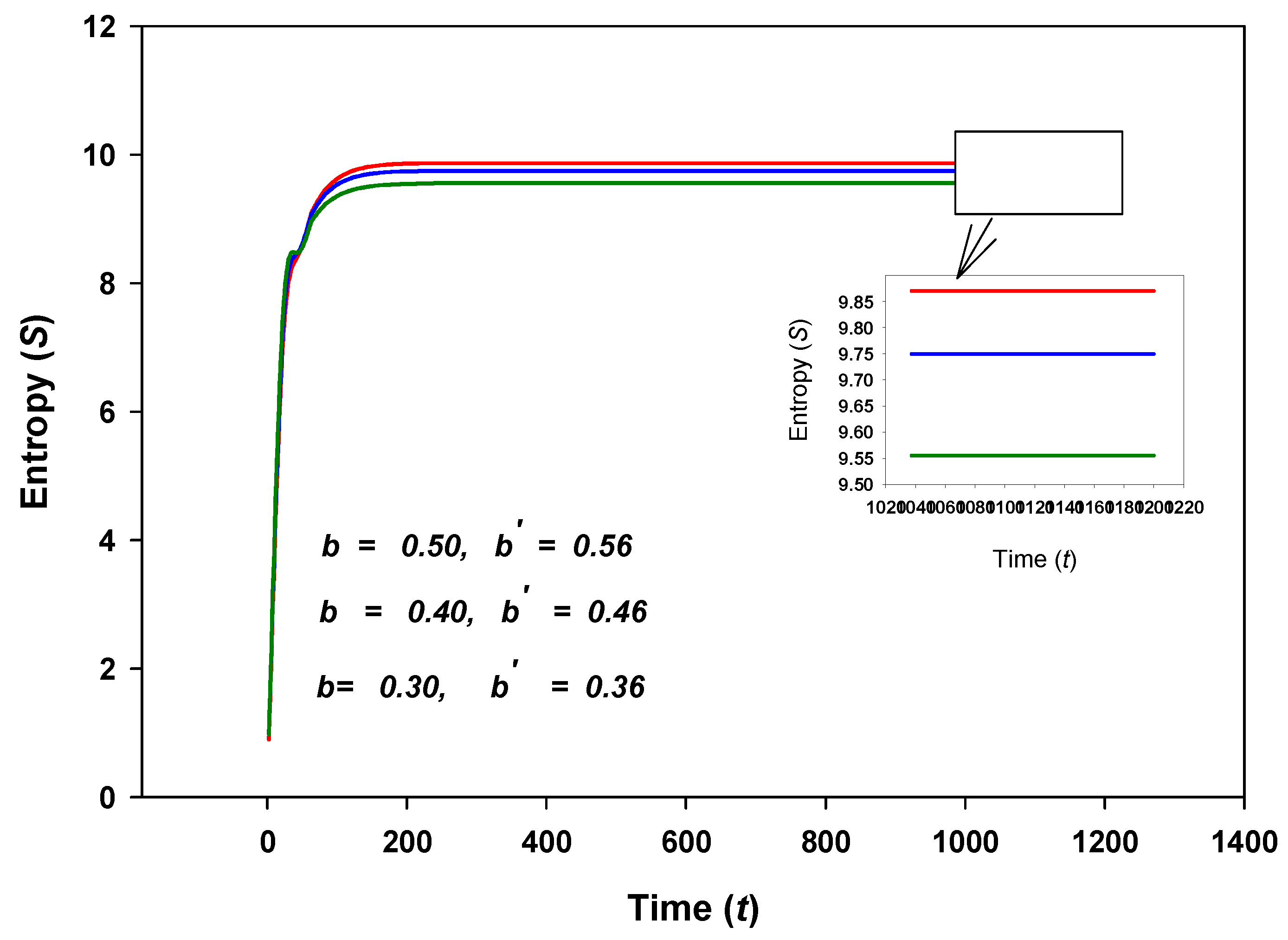

8. Stochastic Impulses from the Walls: 1-d Ideal Gas

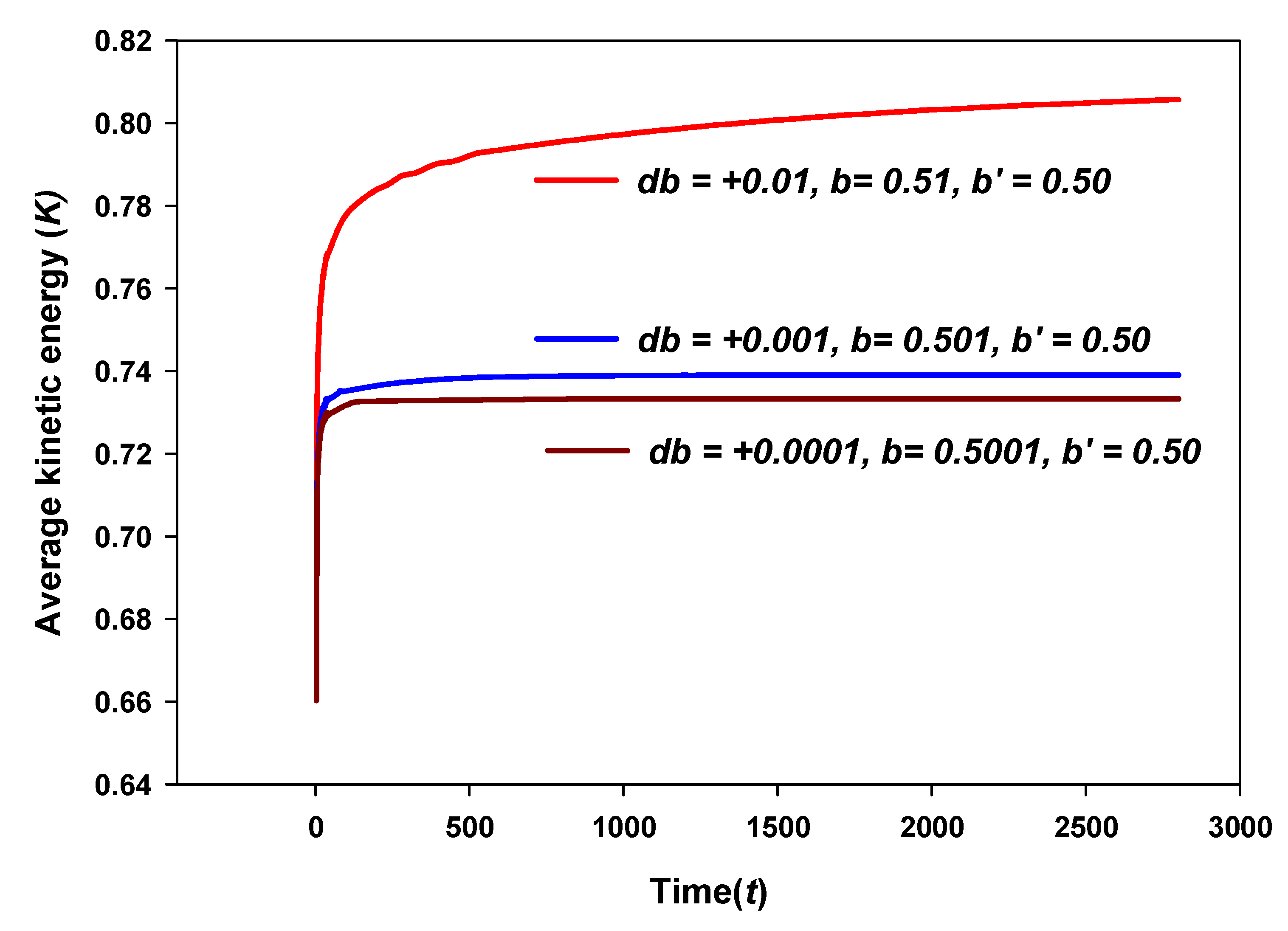

8.1. Reconstruction of Walls and Stochastic Impulses

8.2. Average Kinetic Energy

8.3. Temporal Inhomogeneity

8.4. Some Results

9. Discussion and Conclusions

- The ensemble averages satisfy the additivity principle introduced in Section 3.

- The other reason is that most measurements last only a short time. A temporal average over an extended period has nothing to do with the information obtained in measurements that may take a fraction of a second or so. In contrast, the ensemble average is an instantaneous average and thus bypasses the objection of a finite measurement time.
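The contrast between an instantaneous ensemble average and a temporal average can be sketched with a hypothetical two-state flip process. This is our toy model, not one from the text. Every sample starts in state 0 at t = 0 and flips with an assumed probability q = 0.05 per step. The ensemble average at a single instant t tracks the exact non-equilibrium value p(t) = [1 − (1 − 2q)^t]/2. By contrast, a long temporal average over one sample tends to the equilibrium value 1/2 and says nothing about the state at that instant.

```python
import random


def flip_process_ensemble(t, q=0.05, samples=100_000, seed=3):
    """Fraction of independent samples in state 1 at the single instant t;
    all samples start in state 0 and each step flips the state with probability q."""
    rng = random.Random(seed)
    count = 0
    for _ in range(samples):
        s = 0
        for _ in range(t):
            if rng.random() < q:
                s ^= 1
        count += s
    return count / samples


def flip_process_temporal(steps, q=0.05, seed=4):
    """Time average of a single sample's state over `steps` steps, starting from 0."""
    rng = random.Random(seed)
    s, total = 0, 0
    for _ in range(steps):
        total += s
        if rng.random() < q:
            s ^= 1
    return total / steps


def exact(t, q=0.05):
    """Solution of p(t+1) = q + (1 - 2q) p(t) with p(0) = 0."""
    return (1 - (1 - 2 * q) ** t) / 2


t = 5
print(f"ensemble average at t={t}: {flip_process_ensemble(t):.4f} (exact {exact(t):.4f})")
print(f"temporal average of one sample: {flip_process_temporal(100_000):.4f}")
```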

Acknowledgments

References

- Noether, E. Invariante Variationsprobleme. Nachr. König. Gesell. Wiss. Göttingen Math.-Phys. Kl. Heft 1918, 2, 235–257; English translation in Transp. Theory Statist. Phys. 1971, 1, 186–207. [Google Scholar]

- Wigner, E.P. Symmetries and Reflections; Indiana University Press: Bloomington, IN, USA, 1967. [Google Scholar]

- When we consider many particles, it is convenient to introduce the concept of a phase space in which a point represents the collection of all the particles’ coordinates and momenta. Thus, each point in the phase space represents a state of the system. A microstate of the system is represented not by a point, but by a volume element $h^{3N}$, where h is Planck’s constant; see Section 5 for more details.

- Zeh, H.-D. The Physical Basis of the Direction of Time; Springer-Verlag: Berlin, Germany, 1989. [Google Scholar]

- Poincaré, H. Mathematics and Science: Last Essays; Dover Publications Inc.: New York, NY, USA, 1963. [Google Scholar]

- A system with unique trajectories requiring an invertible one-to-one mapping (7) is what we call a deterministic system in this work. A Hamiltonian system is deterministic in this sense.

- We are not considering weak interactions where this symmetry is not exact.

- de Hemptinne, X. Non-Equilibrium Statistical Thermodynamics; World Scientific: Singapore, 1992. [Google Scholar]

- Chandrasekhar, S. Stochastic Problems in Physics and Astronomy. Rev. Mod. Phys. 1943, 15, 1–89. [Google Scholar] [CrossRef]

- Ehrenfest, P.; Ehrenfest, T. The Conceptual Foundations of the Statistical Approach in Mechanics; Moravcsik, M., Translator; Cornell University Press: Ithaca, NY, USA, 1959. [Google Scholar]

- Daub, E.E. Probability and thermodynamics: The reduction of the second law. Isis 1969, 60, 318–330. [Google Scholar] [CrossRef]

- Davies, P.C.W. The Physics of Time Asymmetry; University of California Press: Berkeley, CA, USA, 1977. [Google Scholar]

- Hawking, S.W. Arrow of time in cosmology. Phys. Rev. D 1985, 32, 2489–2495. [Google Scholar] [CrossRef]

- Coveney, P.V. The second law of thermodynamics: entropy, irreversibility and dynamics. Nature 1988, 333, 409–415. [Google Scholar] [CrossRef]

- Zak, M. Irreversibility in Thermodynamics. Int. J. Theor. Phys. 1996, 35, 347–382. [Google Scholar] [CrossRef]

- Price, H. Time’s Arrow and Archimedes’ Point: New Directions for the Physics of Time; Oxford University Press: New York, NY, USA, 1996. [Google Scholar]

- Uffink, J. Irreversibility and the Second Law of Thermodynamics. In Entropy; Greven, A., Keller, G., Warnecke, G., Eds.; Princeton University Press: Princeton, NJ, USA, 2003; pp. 121–146. [Google Scholar]

- Price, H. The Thermodynamic Arrow: Puzzles and Pseudo-puzzles. arXiv, 2004; arXiv:physics/0402040. [Google Scholar]

- The reader may pause to guess our motivation for italicizing container. During the process of expansion, or at any other time, the gas molecules are always interacting with the walls of the container. Later, we will see that the presence of walls becomes a central concept for breaking the temporal symmetry. Their presence gives rise to boundary conditions for the collisions of gas particles with the walls. These collisions are not described by potentials that are part of the Hamiltonian of the system, and they destroy the temporal symmetry, just as the presence of walls destroys the homogeneity of space.

- Prigogine, I.; Grecos, A.; George, C. On the relation of dynamics to statistical mechanics. Celes. Mech. 1977, 16, 489–507. [Google Scholar] [CrossRef]

- Maxwell, J.C. Theory of Heat; Longmans, Green & Co: London, UK, 1871; J. Chem. Soc. London 1875, 28, 493–508. [Google Scholar]

- Boltzmann, L. Über die mechanische Bedeutung des Zweiten Hauptsatzes der Wärmegleichgewicht. Wien. Ber. 1877, 76, 373–435. [Google Scholar]

- Boltzmann, L. Lectures on Gas Theory; University of California Press: Berkeley, CA, USA, 1964. [Google Scholar]

- Clausius, R. Über die Wärmeleitung gasförmiger Körper. Ann. Physik 1862, 115, 1–57. [Google Scholar] [CrossRef]

- Loschmidt, J. Sitzungsberichte der Akademie der Wissenschaften. Wien. Ber. 1876, 73, 128–142. [Google Scholar]

- Krönig, A. Grundzüge einer Theorie der Gase. Ann. Physik 1856, 99, 315–322. [Google Scholar] [CrossRef]

- Burbury, S.H. Boltzmann’s minimum function. Nature 1895, 52, 104–105. [Google Scholar] [CrossRef]

- von Smoluchowski, M. Experimentell nachweisbare, der üblichen Thermodynamik widersprechende Molekularphänomene. Physik. Z. 1912, 13, 1069. [Google Scholar]

- ter Haar, D.; Green, C.D. The statistical aspect of Boltzmann’s H-Theorem. Proc. Phys. Soc. A 1953, 66, 153–159. [Google Scholar] [CrossRef]

- ter Haar, D. Foundations of Statistical Mechanics. Rev. Mod. Phys. 1955, 27, 289–338. [Google Scholar]

- Sklar, L. Physics and Chance; Cambridge University Press: Cambridge, UK, 1993. [Google Scholar]

- Lebowitz, J.L. Statistical mechanics: A selective review of two central issues. Rev. Mod. Phys. 1999, 71, S346–S357. [Google Scholar] [CrossRef]

- We discuss this important ansatz later in Section 6.1 in more detail, where we find that the ansatz is not fulfilled in a deterministic dynamics. We suggest that one needs a stochastic dynamics for the ansatz to be satisfied.

- Poincaré, H. Sur le problème des trois corps et les équations de la dynamique. Acta Math. 1890, 13, 1–270, see also Chandrasekhar, S. [9]. [Google Scholar]

- Zermelo, E. On a Theorem of Dynamics and the Mechanical Theory of Heat. Ann. Physik 1896, 57, 485–494. [Google Scholar] [CrossRef]

- Zermelo, E. On the Mechanical explanation of Irreversible Processes. Wied. Ann. 1897, 60, 392–398. [Google Scholar]

- Huang, K. Statistical Mechanics, 2nd ed.; John Wiley and Sons: New York, NY, USA, 1987. [Google Scholar]

- Gujrati, P.D. Poincare Recurrence, Zermelo’s Second Law Paradox, and Probabilistic Origin in Statistical Mechanics. arXiv, 2008; arXiv:0803.0983. [Google Scholar]

- Hoover, W.G. Computational Statistical Mechanics; Elsevier: Amsterdam, The Netherlands, 1991. [Google Scholar]

- Boltzmann, L. Reply to Zermelo’s Remarks on the Theory of Heat. Ann. Physik 1896, 57, 773–784. [Google Scholar] [CrossRef]

- Boltzmann, L. On Zermelo’s Paper: On the Mechanical Explanation of Irreversible Processes. Ann. Physik 1897, 60, 392–398. [Google Scholar] [CrossRef]

- Jäckle, J. On the glass transition and the residual entropy of glasses. Philos. Mag. B 1981, 44, 533–545. [Google Scholar] [CrossRef]

- Jäckle, J. Residual entropy in glasses and spin glasses. Physica B 1984, 127, 79–86. [Google Scholar]

- Palmer, R.G. Broken Ergodicity. Adv. Phys. 1982, 31, 669–735. [Google Scholar] [CrossRef]

- Landau, L.D.; Lifshitz, E.M. Statistical Physics, 3rd ed.; Pergamon Press: Oxford, UK, 1986. [Google Scholar]

- Planck, M. The Theory of Heat Radiation. In The history of modern physics 1800–1950; American Institute of Physics: New York, NY, USA, 1988; Volume 11. [Google Scholar]

- Lewis, G.N. The entropy of radiation. Proc. Nat. Acad. Sci. 1927, 13, 307–313. [Google Scholar] [CrossRef]

- Lanyi, G. Thermal Equilibrium Between Radiation and Matter. Found. Phys. 2003, 33, 511–528. [Google Scholar] [CrossRef]

- Whether the entire universe satisfies the second law is an unsettled problem at present. Verifying it requires making measurements of some sort on different parts of an ever-expanding universe at the same instant. It is not clear whether it is possible to send signals to distant, receding parts of our expanding universe to make such measurements; most of these parts are probably causally disconnected from us. The idea of an isolated system presupposes an exterior from which it is isolated, and testing the isolation requires some sort of test from outside the isolated system. We need to know whether we live in a universe or a multiverse. Also, is there a physical boundary to our universe isolating it from the outside? By physical, we mean composed of matter and energy. What is outside this boundary, and how can we test or know what is outside while remaining inside the isolated universe? If there is a physical boundary, does it contain all the matter and energy within it, or is there energy outside it? Are dark matter and dark energy confined within this boundary, or do they also exist outside it? If it is vacuum outside, does it have any vacuum energy, which is then absorbed by the expanding universe? At present, we do not know the answers to these questions. It is highly likely that there is no physical boundary to the universe that we can detect. Everything that we observe is causally connected to us and lies within the universe; therefore, we cannot see its boundary, which is causally disconnected from us. For all practical purposes, the universe appears to be “unbounded” to us. The only sensible thing we can speak of is a part of the universe (within the causally connected observable universe) that is finite in extent within this “unbounded” universe. The surrounding medium of the observable universe and the 3 K radiation generate stochasticity and ensure that the observable universe satisfies the second law.
In our opinion, causally disconnected parts of the universe have no bearing on the second law. Therefore, we will not worry about this issue here.

- This is impossible, if only because of the remnant 3 K radiation from the big bang that permeates the entire universe. We will neglect this radiation and other thermal radiation from the walls and other external bodies when we consider a deterministic dynamics. They will become an integral part of the discussion when we deal with stochastic dynamics.

- A truly isolated system is an idealization and will not correctly represent a physical system, as noted in the previous footnote. A correct representation requires a probabilistic approach, which follows from the loss of temporal homogeneity; see the discussion leading to (9).

- Gibbs, J.W. Elementary Principles in Statistical Mechanics; Ox Bow Press: Woodbridge, CT, USA, 1981. [Google Scholar]

- Tolman, R.C. The Principles of Statistical Mechanics; Oxford University Press: London, UK, 1959. [Google Scholar]

- Rice, S.A.; Gray, P. The Statistical Mechanics of Simple Liquids; John Wiley & Sons: New York, NY, USA, 1965. [Google Scholar]

- Rice, O.K. Statistical Mechanics, Thermodynamics and Kinetics; W.H. Freeman: San Francisco, CA, USA, 1967. [Google Scholar]

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423; 623–656. [Google Scholar] [CrossRef]

- Jaynes, E.T. Papers on Probability, Statistics and Statistical Physics; Rosenkrantz, R.D., Ed.; Reidel Publishing: Dordrecht, The Netherlands, 1983. [Google Scholar]

- Sethna, J.P. Statistical Mechanics: Entropy, Order Parameters and Complexity; Oxford University Press: New York, NY, USA, 2006. [Google Scholar]

- Becker, R. Theory of Heat, 2nd ed.; Leibfried, G., Ed.; Springer-Verlag: New York, NY, USA, 1967. [Google Scholar]

- Nemilov, S.V. Thermodynamic and Kinetic Aspects of the Vitreous State; CRC Press: Boca Raton, FL, USA, 1995. [Google Scholar]

- Gutzow, I.; Schmelzer, J. The Vitreous State: Thermodynamics, Structure, Rheology and Crystallization; Springer: Berlin, Germany, 1995. [Google Scholar]

- Gujrati, P.D. Where is the residual entropy of a glass hiding? arXiv, 2009; arXiv:0908.1075. [Google Scholar]

- The division into cells ensures that the number of microstates does not become infinite, even for a finite system (finite N, E and V).

- Even the collisions are deterministic in such a system.

- Kac, M. Some remarks on the use of probability in classical statistical mechanics. Bull. Acad. Roy. Belg. 1956, 42, 356–361. [Google Scholar]

- Henin, F. Entropy, dynamics and molecular chaos, Kac’s model. Physica 1974, 77, 220–246. [Google Scholar] [CrossRef]

- Thompson, C.J. Mathematical Statistical Mechanics; Princeton University Press: Princeton, NJ, USA, 1979. [Google Scholar]

- Gujrati, P.D. Irreversibility, Molecular Chaos, and A Simple Proof of the Second Law. arXiv, 2008; arXiv:0803.1099. [Google Scholar]

- Fernando, P. Lack of Molecular Chaos and the Role of Stochasticity in Kac’s Ring Model; The University of Akron: Akron, OH, USA, 2009. [Google Scholar]

- Indeed, it is found that the entire phase space with $2^N$ microstates is broken into disjoint components, so that an initial microstate in a given component evolves only into microstates belonging to that component; no microstates from other components occur in the evolution. However, Poincaré’s recurrence theorem applies to each component separately.

- Hoover, W.G. Molecular Dynamics; Springer-Verlag: Berlin, Germany, 1986. [Google Scholar]

- As the system is no longer isolated because of its interaction with the environment, E, N, V need not remain constant and may fluctuate. However, as long as we are dealing with very weak environmental noise, we can safely treat the system as quasi-isolated in that the widths of their spread can be neglected.

- Indeed, for a macroscopic system, the probability to come back to a previously generated microstate will be almost negligible.

- Gautam, M. The Role of Walls’ Stochastic Forces in Statistical Mechanics: Phenomenon of Time Irreversibility; The University of Akron: Akron, OH, USA, 2009. [Google Scholar]

© 2010 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license http://creativecommons.org/licenses/by/3.0/.


Gujrati, P.D. Loss of Temporal Homogeneity and Symmetry in Statistical Systems: Deterministic Versus Stochastic Dynamics. Symmetry 2010, 2, 1201-1249. https://doi.org/10.3390/sym2031201
