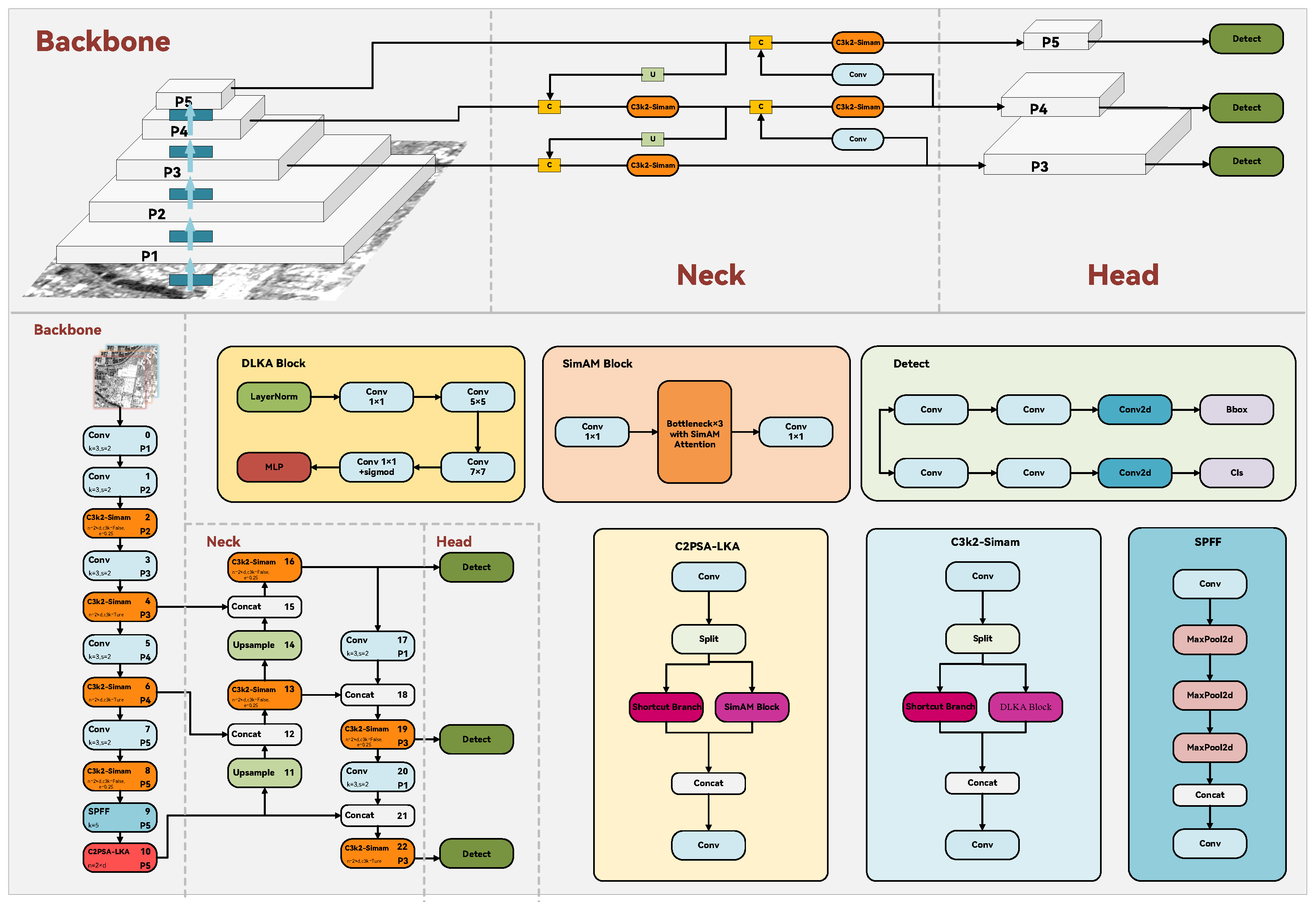

YOLO11 employs a multi-scale feature fusion architecture that processes input images through three main stages: the backbone network extracts hierarchical features at different scales, the neck network fuses these features through feature pyramid structures to enhance multi-scale representation, and the head network performs final classification and bounding box regression. As shown in Figure 1, this architecture achieves a balance between detection speed and precision. However, when applied to SAR target detection, several limitations become apparent: the fixed-scale convolutional kernels struggle to capture long-range spatial dependencies required for large structural targets like ports and bridges; the absence of specialized noise suppression mechanisms makes the model vulnerable to speckle noise that overwhelms weak small target features; the baseline attention mechanisms demonstrate insufficient adaptability to SAR’s unique scattering characteristics and complex backgrounds; and the multi-scale fusion lacks adequate handling of extreme scale variations among different target categories. To address these challenges while maintaining computational efficiency, we propose LSDA-YOLO, an enhanced framework incorporating dual attention mechanisms that significantly improves detection accuracy for SAR imagery. This approach effectively handles SAR-specific difficulties including speckle noise interference, weak small objects, and multi-category imbalance, making it particularly suitable for defense security, emergency response, and environmental monitoring applications where both accuracy and efficiency are critical.

3.1. Deformable Large Kernel Attention Module

The conventional C2PSA module [41] provides high-level semantic features for large-scale targets in standard architectures but exhibits several limitations in SAR detection scenarios. Fixed-size convolutional kernels struggle to adapt to geometric deformations of targets, while limited receptive fields hinder comprehensive global semantic modeling. Additionally, the absence of dedicated noise suppression mechanisms degrades feature quality in speckle-intensive environments, and insufficient multi-scale interaction constrains performance in complex scenes.

To address these limitations, we propose the Deformable Large Kernel Attention (DLKA) module [42], whose overall structure is illustrated in Figure 2. This module employs a hierarchical architecture comprising feature preprocessing, deformable attention, and feature refinement stages, maintaining a residual structure while incorporating several SAR-specific enhancements.

The Deformable Convolution Unit utilizes learnable sampling offsets to enable kernel adaptation to target geometries. The offset calculation is implemented through a dedicated convolutional branch that learns deformation information from normalized input features. For input features $X \in \mathbb{R}^{C \times H \times W}$, the offset field $\Delta p$ is computed as:

$$\Delta p = \mathrm{Conv}_{\mathrm{offset}}\big(\mathrm{LN}(X)\big)$$

where $\mathrm{LN}(\cdot)$ denotes layer normalization, and $\mathrm{Conv}_{\mathrm{offset}}$ is the offset prediction convolutional layer with output channels $2n^2$, corresponding to x and y offsets for each sampling point of an $n \times n$ convolution kernel.

The deformable convolution operation using the learned offsets can be expressed as:

$$Y(p) = \sum_{k} w_k \cdot X(p + p_k + \Delta p_k)$$

where p denotes the output position, $p_k$ represents the predefined sampling position for the k-th kernel weight $w_k$, and $\Delta p_k$ is the learned offset corresponding to $p_k$.
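To make the sampling rule concrete, the following is a minimal NumPy sketch of a single-channel deformable convolution; it is not the implementation used in this work. It assumes stride 1, zero padding, bilinear interpolation at fractional positions (the usual choice for deformable convolution), and offsets passed in as an array rather than predicted by a convolutional branch. The function names `bilinear_sample` and `deformable_conv2d` are illustrative.

```python
import numpy as np


def bilinear_sample(x, py, px):
    """Sample 2-D array x at fractional location (py, px); zero outside the map."""
    h, w = x.shape
    y0, x0 = int(np.floor(py)), int(np.floor(px))
    value = 0.0
    # Visit the four integer neighbours and weight them by proximity.
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1.0 - abs(py - yy)) * (1.0 - abs(px - xx))
                value += weight * x[yy, xx]
    return value


def deformable_conv2d(x, weights, offsets):
    """Single-channel deformable convolution (assumed: stride 1, zero padding).

    x:       (H, W) input feature map
    weights: (k, k) kernel, k odd
    offsets: (H, W, k*k, 2) (dy, dx) offset per output position and kernel tap
    """
    h, w = x.shape
    k = weights.shape[0]
    r = k // 2
    # Fixed sampling grid p_k of an ordinary k x k kernel.
    grid = [(gy, gx) for gy in range(-r, r + 1) for gx in range(-r, r + 1)]
    out = np.zeros((h, w), dtype=float)
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for idx, (gy, gx) in enumerate(grid):
                oy, ox = offsets[i, j, idx]
                # Sample at p + p_k + delta p_k and weight by w_k.
                acc += weights[gy + r, gx + r] * bilinear_sample(
                    x, i + gy + oy, j + gx + ox
                )
            out[i, j] = acc
    return out
```

With all offsets set to zero the routine reduces to an ordinary convolution (cross-correlation), which is a convenient sanity check before plugging in learned offsets.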

The Large Receptive Field Module employs large-size convolutional kernels combined with dilated convolutions to significantly expand the effective receptive field. In practice, large kernels are decomposed into a combination of depthwise convolution, dilated depthwise convolution, and 1 × 1 convolution to reduce computational complexity. For constructing a $K \times K$ convolution kernel, the following decomposition approach is used:

$$DW = (2d-1) \times (2d-1), \qquad DW\text{-}D = \left\lceil \frac{K}{d} \right\rceil \times \left\lceil \frac{K}{d} \right\rceil$$

where $DW$ represents the depthwise convolution kernel size, $DW\text{-}D$ represents the dilated depthwise convolution kernel size, and d is the dilation rate. For feature maps with input dimensions $H \times W$ and channel number C, the parameter count and computational cost are:

$$P(K, d) = C\left(\left\lceil \frac{K}{d} \right\rceil^{2} + (2d-1)^{2} + 3 + C\right)$$

$$F(K, d) = P(K, d) \times H \times W$$

In our implementation, we set and , optimizing the dilation rate to minimize parameter count while preserving the large receptive field advantage.
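The trade-off between dilation rate and parameter count can be explored with a few lines of Python. The sketch below assumes the standard large-kernel decomposition (a $(2d-1) \times (2d-1)$ depthwise kernel, a $\lceil K/d \rceil \times \lceil K/d \rceil$ dilated depthwise kernel, and a 1 × 1 convolution, each with a bias). The values `K = 21` and `C = 64` in the usage lines are illustrative only and are not taken from this paper; the function names are hypothetical.

```python
import math


def lka_decomposition(K, d):
    """Return (depthwise size, dilated depthwise size) for a K x K receptive field."""
    dw = 2 * d - 1
    dwd = math.ceil(K / d)
    return dw, dwd


def lka_params(K, d, C):
    """Parameter count of the decomposed large kernel for C channels."""
    dw, dwd = lka_decomposition(K, d)
    # Depthwise + dilated depthwise weights, one bias per layer (3), 1x1 conv (C).
    return C * (dwd ** 2 + dw ** 2 + 3 + C)


def lka_flops(K, d, C, H, W):
    """Computational cost on an H x W feature map."""
    return lka_params(K, d, C) * H * W


# Illustrative values (assumptions, not the paper's settings).
K, C = 21, 64
best_d = min(range(1, 9), key=lambda d: lka_params(K, d, C))
print(best_d, lka_decomposition(K, best_d), lka_params(K, best_d, C))
```

For these illustrative values the scan selects the dilation rate whose two depthwise kernels are jointly smallest, which is the sense in which the dilation rate is optimized to minimize parameters while the receptive field stays at $K \times K$.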

The Noise Suppression Branch is designed specifically for the speckle noise characteristic of SAR images and implements adaptive gating through statistical normalization. The gate is derived from statistical differences between target and background regions in the feature map, using the intensity means of the foreground and background regions, the standard deviation of the background regions, and a small constant for numerical stability. Foreground and background regions are determined through adaptive thresholding of the attention weight map A against an adaptive threshold T. The purified features are then obtained by element-wise multiplication (⊙) of the features with the resulting gate.

The Multi-scale Hybrid Attention Block combines local window attention with cross-block global attention. Local window attention partitions the feature map into fixed-size non-overlapping windows and computes self-attention within each window:

$$\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where Q, K, and V represent the query, key, and value matrices, respectively, and $d_k$ is the key dimension. Cross-block global attention captures long-range dependencies through strided sampling, in which a strided convolution with sampling stride s is applied to the features.
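The local window attention step can be sketched in NumPy as follows. This is a minimal single-head illustration under stated assumptions: the feature map is laid out as (H, W, C), H and W are multiples of the window size, and the projection matrices `wq`, `wk`, `wv` are hypothetical stand-ins for learned weights. It covers only the window branch, not the strided global branch.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def window_partition(x, win):
    """(H, W, C) -> (num_windows, win*win, C); H and W must be multiples of win."""
    h, w, c = x.shape
    x = x.reshape(h // win, win, w // win, win, c)
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, win * win, c)


def window_self_attention(x, wq, wk, wv, win):
    """Scaled dot-product self-attention computed independently in each window."""
    tokens = window_partition(x, win)                  # (nW, N, C)
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv    # (nW, N, d_k) each
    d_k = q.shape[-1]
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_k)   # (nW, N, N)
    return softmax(scores) @ v                         # (nW, N, d_k)


rng = np.random.default_rng(0)
x = rng.normal(size=(8, 8, 4))
wq, wk, wv = (rng.normal(size=(4, 4)) for _ in range(3))
out = window_self_attention(x, wq, wk, wv, win=4)
print(out.shape)  # four 4x4 windows, 16 tokens each
```

Because attention is confined to each window, the cost grows linearly with the number of windows rather than quadratically with the number of pixels, which is why a separate global branch is needed for long-range dependencies.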

The complete computational flow of Deformable Large Kernel Attention chains a deformable depthwise convolution and a deformable dilated depthwise convolution, and applies the resulting attention to the features through element-wise multiplication (⊗).

The final output of the module is fused with input features through residual connections:

$$Y = X + \mathrm{DLKA}(X)$$

where $\mathrm{DLKA}(\cdot)$ represents the complete DLKA processing pipeline. This design ensures effective gradient propagation while enhancing the module’s representational capacity.

3.2. SimAM-Based Attention Enhancement Module

The conventional C3k2 modules face significant challenges in SAR image target detection: their fixed local receptive fields struggle to capture subtle features of weak small targets, the standard convolution operations lack specific suppression mechanisms for speckle noise, and they demonstrate limited discriminative capability for low-contrast targets under complex background interference. These limitations severely impact the detection performance of small targets and low-contrast features in SAR imagery.

To overcome these deficiencies, we introduce an enhanced structure based on SimAM (Simple Attention Module) [43], whose overall architecture is illustrated in Figure 3. This neuroscience-inspired attention mechanism employs a parameter-free attention reweighting mechanism rather than conventional weight-injection fusion, enabling effective local feature enhancement without introducing learnable parameters during deployment.

SimAM’s innovation fundamentally lies in its unique three-dimensional attention weight generation mechanism, which overcomes the limitations of traditional attention modules. As shown in Figure 3, SimAM differs from conventional approaches in three key aspects:

Channel-wise attention (Figure 3a) generates one-dimensional weights that assign a uniform scalar weight to each channel, with all spatial positions within the same channel sharing this weight. The process involves generating 1-D weights (corresponding to C channels, one value per channel) from the input feature map X (dimension C × H × W), expanding these weights by replicating them to all spatial positions within each channel, and finally fusing them with the original feature map. The critical limitation is that all neurons within the same channel (across different spatial positions) share identical weights (represented by the same color in the figure), making it impossible to distinguish the importance of different spatial locations within a channel. The SE module is a typical example of this approach.

Spatial-wise attention (Figure 3b) generates two-dimensional weights that assign a uniform scalar weight to each spatial position (H × W), with all channels at the same spatial location sharing this weight. The process involves generating 2-D weights (corresponding to H × W spatial positions, one value per position) from the input feature map X, expanding these weights by replicating them across all channels, and then fusing them with the original feature map. The critical limitation is that all channel neurons at the same spatial position share identical weights (represented by the same color in the figure), making it impossible to distinguish the importance of different channels at the same location. The spatial attention branch of CBAM exemplifies this approach.

In contrast, SimAM’s full 3-D attention (Figure 3c) generates three-dimensional weights that assign a unique scalar weight to each individual neuron (the intersection of channel and spatial dimensions, i.e., each point in C × H × W), eliminating the need for expansion steps. The process directly generates 3-D weights (one dedicated value per neuron) from the input feature map X and fuses them with the original feature map without any weight replication or expansion. The critical advantage is that each neuron’s weight is independent (represented by different colors in the figure), enabling the distinction of both channel importance and spatial location importance within each channel. This approach directly matches the 3-D structure of feature maps without requiring the “generate single-dimensional weights → replicate and expand” process of traditional methods, resulting in more refined and non-redundant weighting.
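The difference between the three weighting schemes comes down to the shape of the weight tensor and how it is broadcast against a C × H × W feature map. The NumPy sketch below uses placeholder weights (a real SE, CBAM, or SimAM module would compute them from X) purely to show the shapes and the number of independent weights in each case; the tensor sizes are arbitrary.

```python
import numpy as np

C, H, W = 3, 4, 5
X = np.arange(C * H * W, dtype=float).reshape(C, H, W)

# Placeholder weights; only their shapes matter for this illustration.
channel_w = np.linspace(0.1, 0.9, C).reshape(C, 1, 1)        # 1-D: one value per channel
spatial_w = np.linspace(0.1, 0.9, H * W).reshape(1, H, W)    # 2-D: one value per position
full_w = np.linspace(0.1, 0.9, C * H * W).reshape(C, H, W)   # 3-D: one value per neuron

outputs = {
    "channel": channel_w * X,  # replicated over H and W by broadcasting
    "spatial": spatial_w * X,  # replicated over C by broadcasting
    "full_3d": full_w * X,     # no replication needed
}
independent = {
    "channel": channel_w.size,
    "spatial": spatial_w.size,
    "full_3d": full_w.size,
}
print(independent)
```

All three outputs have the same C × H × W shape; only the full 3-D scheme has as many independent weights as there are neurons.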

The design of SimAM is motivated by the key neuron hypothesis in visual neuroscience, which posits that important neurons exhibit significant differences from their surrounding neurons. Unlike conventional weight-injection fusion mechanisms that rely on learnable parameters, SimAM employs a parameter-free attention reweighting mechanism based on energy minimization. This approach evaluates the importance of each neuron by minimizing an energy function that measures the distinguishability between target neurons and their neighbors, eliminating the need for additional trainable parameters. The computational process comprises three core stages: energy function construction, closed-form attention estimation, and feature reweighting.

First, the energy function is defined to measure the separability between a target neuron t and its $M-1$ neighboring neurons $x_i$ in the same channel:

$$e_t(w_t, b_t, \mathbf{y}, x_i) = \frac{1}{M-1}\sum_{i=1}^{M-1}\big(-1 - (w_t x_i + b_t)\big)^2 + \big(1 - (w_t t + b_t)\big)^2 + \lambda w_t^2$$

where $w_t$ and $b_t$ are auxiliary scalar variables introduced for derivation, $\lambda$ is a regularization coefficient, and M denotes the total number of neurons in the channel. The first term encourages neighboring neurons to be mapped near $-1$, while the second term pushes the target neuron toward $+1$, with the regularizer preventing degenerate solutions.

By substituting the closed-form solutions of the auxiliary variables, we obtain the simplified energy expression:

$$e_t^{*} = \frac{4(\hat{\sigma}^2 + \lambda)}{(t - \hat{\mu})^2 + 2\hat{\sigma}^2 + 2\lambda}$$

where $\hat{\mu}$ and $\hat{\sigma}^2$ represent the mean and variance of neurons in the same channel. Since $w_t$ and $b_t$ are analytically eliminated, the resulting energy $e_t^{*}$ depends only on local statistics and thus introduces no trainable parameters.
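For reference, the closed-form minimizers reported in the original SimAM paper are reproduced below. The notation follows that paper, with $\mu_t$ and $\sigma_t^2$ denoting the mean and variance over the neurons other than $t$; how it maps onto the symbols used in this section is an assumption.

$$w_t = -\frac{2(t - \mu_t)}{(t - \mu_t)^2 + 2\sigma_t^2 + 2\lambda}, \qquad b_t = -\frac{1}{2}(t + \mu_t)\, w_t$$

Substituting these two expressions into the energy function, and approximating $\mu_t$ and $\sigma_t^2$ by the mean and variance of the whole channel so that they need to be computed only once, yields the minimal energy, in which neither auxiliary variable appears.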

Subsequently, attention weights are generated from the inverse of the energy map to emphasize neurons with lower energy values (i.e., higher distinctiveness). Let $E$ denote the tensor composed of all $e_t^{*}$ values; the attention tensor $A$ is computed as:

$$A = \mathrm{sigmoid}\!\left(\frac{1}{E + \epsilon}\right)$$

where all operations are element-wise, and numerical stabilization (adding a small $\epsilon$) is applied to prevent division by zero.

Finally, feature refinement is achieved through element-wise reweighting:

$$\tilde{X} = A \odot X$$

where ⊙ denotes element-wise multiplication. This unified channel-spatial attention mechanism emphasizes locally discriminative neurons without introducing learnable parameters, distinguishing it from traditional weight-injection approaches.
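The three stages (energy, attention, reweighting) fit in a few lines of NumPy. The sketch below is a minimal illustration rather than the code used in this work. It assumes a single (C, H, W) feature map, the biased per-channel variance, the closed-form minimal energy $4(\hat{\sigma}^2 + \lambda) / ((t - \hat{\mu})^2 + 2\hat{\sigma}^2 + 2\lambda)$ from the SimAM paper, and illustrative default values for `lam` and `eps`.

```python
import numpy as np


def simam(x, lam=1e-4, eps=1e-8):
    """Parameter-free SimAM reweighting for a (C, H, W) feature map.

    Returns the reweighted features and the attention tensor.
    lam and eps are assumed defaults, not values taken from the paper.
    """
    # Per-channel statistics over the spatial dimensions.
    mu = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    # Squared deviation of every neuron from its channel mean.
    d = (x - mu) ** 2
    # Minimal energy per neuron: low energy means a distinctive neuron.
    energy = 4.0 * (var + lam) / (d + 2.0 * var + 2.0 * lam)
    # Attention is the sigmoid of the (stabilized) inverse energy.
    attention = 1.0 / (1.0 + np.exp(-1.0 / (energy + eps)))
    return attention * x, attention


# One channel with a single strong response among zeros.
x = np.zeros((1, 4, 4))
x[0, 1, 2] = 10.0
refined, attention = simam(x)
print(attention[0].argmax())  # flat index of the most distinctive neuron
```

No trainable parameter appears anywhere in the function: the weights are recomputed from the statistics of each input, which is what makes the module free to insert into existing blocks.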

In practical deployment, we replace all C3k2 modules [44] in both the backbone and neck with C3k2_SimAM modules (eight replacements in total), enhancing the local feature capture capability for small targets while maintaining the CSP structure [45]. The module offers four main advantages: lightweight and parameter-free deployment, effective suppression of speckle-like activations, improved recall for small and low-contrast targets, and a biologically inspired unified weighting mechanism for channel-spatial attention.

3.3. The Structure of LSDA-YOLO

Building on DLKA and SimAM enhancements, we propose LSDA-YOLO (Large Kernel and SimAM Dual Attention YOLO), which integrates global spatial modeling with local detail enhancement to achieve precise detection of multi-scale targets in SAR images. The overall framework is shown in Figure 4, with core fusion details in Figure 5.

LSDA-YOLO systematically incorporates dual attention mechanisms while maintaining the basic YOLO11 architecture. The selection of SimAM and DLKA is grounded in their complementary scientific properties and specific adaptation to SAR imaging characteristics. SimAM’s parameter-free attention mechanism originates from visual neuroscience principles, automatically identifying important neurons through energy minimization, effectively addressing the statistical characteristics of speckle noise and weak features of small targets in SAR images. DLKA addresses the geometric deformations and long-range contextual dependencies of large-scale structural targets through deformable convolutions and large receptive field design. Functionally, these two mechanisms complement each other: SimAM provides fine-grained neuron-level feature enhancement, while DLKA focuses on macroscopic geometric structures and spatial context. This combination aligns with the scientific principle of balancing local details and global structure in SAR image processing, providing mechanism-level support for addressing scientific problems such as multi-scale target coexistence and complex background interference.

Specifically, we replace the terminal C2PSA module with C2PSA_LKA to enhance global feature extraction for large-scale targets, and substitute all C3k2 modules with C3k2_SimAM to improve local detail perception for small targets. This design creates complementary feature processing pathways: the DLKA module effectively captures global features of large structural targets such as ports and bridges through its expanded receptive field, while the SimAM module provides precise feature enhancement for small targets like vehicles and tanks through its neuron-level attention mechanism.

The core innovation of this architecture lies in the SimAM-DLKA fusion module, which achieves deep integration at the mechanism level rather than simple sequential connection. This integration embodies a structural and functional symmetry: DLKA expands the receptive field outward to model global spatial contexts, while SimAM focuses inward to refine local neuronal responses. The two mechanisms form a symmetric pair that processes features from “macro-geometry” to “micro-detail,” establishing a balanced computational pathway consistent with the inherent symmetry in visual information processing, where local features aggregate into global structures and global contexts guide local discrimination. As shown in Figure 5, this fusion module implements the “local guidance for global attention” mechanism through four key stages: first, feature normalization establishes the computational foundation; then, the SimAM-based weighting process generates neuron-level saliency maps; these saliency maps guide the DLKA global attention computation through enhanced 3D weights; finally, multi-layer perceptron refinement produces output features that integrate local precision with global semantics. This fusion mechanism embodies the interaction and synergy between local features and global context, adhering to the hierarchical principle of visual information processing.

LSDA-YOLO demonstrates significant advantages in three aspects: achieving multi-scale coverage through complementary attention mechanisms, enhancing robustness to speckle noise interference through cooperative suppression, and maintaining lightweight characteristics to ensure deployment efficiency. The architecture deploys DLKA modules in the deep layers of the backbone network to capture global contextual information, widely embeds SimAM modules in the shallow and middle layers to enhance local feature representation, and fuses multi-scale features through the feature pyramid network. This design enables the model to address detection tasks with varying target scales and complexities in SAR images, improving detection accuracy while maintaining computational efficiency.