1. Introduction

Artificial neural networks are machine learning models widely used in classification and regression problems [1,2]. Commonly, these models are expressed as functions $N(\vec{x},\vec{w})$. In this expression, the vector $\vec{x}$ of dimension $d$ is the input vector (pattern), and the vector $\vec{w}$ is the vector of parameters of the neural network. These models are trained by minimizing the training error, which is expressed by the following equation:

$$E\left(N(\vec{x},\vec{w})\right) = \sum_{i=1}^{M} \left(N(\vec{x}_i,\vec{w}) - y_i\right)^2 \tag{1}$$

The set $\left\{(\vec{x}_i, y_i),\ i = 1,\ldots,M\right\}$ is the training set of the objective problem, where the values $y_i$ are the expected outputs for the patterns $\vec{x}_i$.
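To make Equation (1) concrete, the sketch below evaluates the training error for one assumed network form: a single hidden layer of sigmoid units with a linear output, where each hidden unit owns a block of $d+2$ weights (output weight, $d$ input weights, bias). This weight layout and the names `network_output` and `training_error` are illustrative assumptions; the exact form of $N(\vec{x},\vec{w})$ used in this work is not given in this section.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def network_output(x: Sequence[float], w: Sequence[float], hidden: int) -> float:
    """N(x, w) for an ASSUMED one-hidden-layer sigmoid network.

    Each hidden unit j uses the block w[j*(d+2) : (j+1)*(d+2)], laid out as
    [output weight, d input weights, bias] (hypothetical layout).
    """
    d = len(x)
    block = d + 2
    if len(w) != hidden * block:
        raise ValueError("weight vector has the wrong length")
    total = 0.0
    for j in range(hidden):
        start = j * block
        out_weight = w[start]
        in_weights = w[start + 1:start + 1 + d]
        bias = w[start + 1 + d]
        activation = sum(xi * wi for xi, wi in zip(x, in_weights)) + bias
        total += out_weight * sigmoid(activation)
    return total


def training_error(patterns: List[Sequence[float]], targets: Sequence[float],
                   w: Sequence[float], hidden: int) -> float:
    """Equation (1): sum of squared differences over the training set."""
    return sum((network_output(x, w, hidden) - y) ** 2
               for x, y in zip(patterns, targets))


# With all weights zero the network outputs 0, so the error is (0 - 3)^2.
print(training_error([[1.0, 2.0]], [3.0], [0.0] * 4, hidden=1))  # 9.0
```

Any optimizer mentioned below (back propagation, RPROP, genetic algorithms, and so on) can be seen as a different strategy for choosing `w` to make this quantity small.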

Artificial neural networks have been applied to a wide range of real-world problems, such as image processing [3], time series forecasting [4], credit card analysis [5], and problems derived from physics [6]. More recently, they have been applied to flood simulation [7], solar radiation prediction [8], agricultural problems [9], problems in communications [10], and mechanical applications [11]. In recent years, a wide range of optimization methods has been used to tackle Equation (1), such as the back propagation algorithm [12] and the RPROP algorithm [13,14]. Global optimization methods have also been used widely for the training of artificial neural networks, such as genetic algorithms [15], the particle swarm optimization (PSO) method [16], simulated annealing [17], differential evolution [18], and the Artificial Bee Colony (ABC) method [19]. Moreover, Sexton et al. suggested the tabu search algorithm for this problem [20], and Zhang et al. introduced a hybrid algorithm that combines the PSO method with the back propagation algorithm [21]. Additionally, Zhao et al. introduced a new Cascaded Forward Algorithm for neural network training [22]. A series of parallel computing techniques has also been proposed to speed up the training of neural networks [23,24].

However, these techniques face a number of problems. For example, they often fail to escape from local minima of the error function, which directly results in poor performance of the neural network on the data of the objective problem. Another major problem of the previously mentioned optimization techniques is overfitting, where the neural network performs poorly on data that were not present during the training process. This problem has been studied thoroughly, and many methods have been proposed to handle it, including weight sharing [25], pruning techniques [26,27], early stopping [28,29], and weight decay [30,31]. Additionally, various researchers have proposed the dynamic construction of the neural network architecture as a possible solution to overfitting. For example, genetic algorithms [32,33] as well as the PSO method [34] have been proposed to dynamically create the architecture of neural networks. Recently, Siebel et al. introduced a method based on evolutionary reinforcement learning to obtain the optimal architecture of neural networks [35]. Moreover, Jaafra et al. published a review on the use of reinforcement learning for neural architecture search [36]. Similarly, Pham et al. introduced a novel method based on parameter sharing [37], and Xie et al. proposed Stochastic Neural Architecture Search [38]. Finally, Zhou et al. introduced a Bayesian approach for the same task [39].

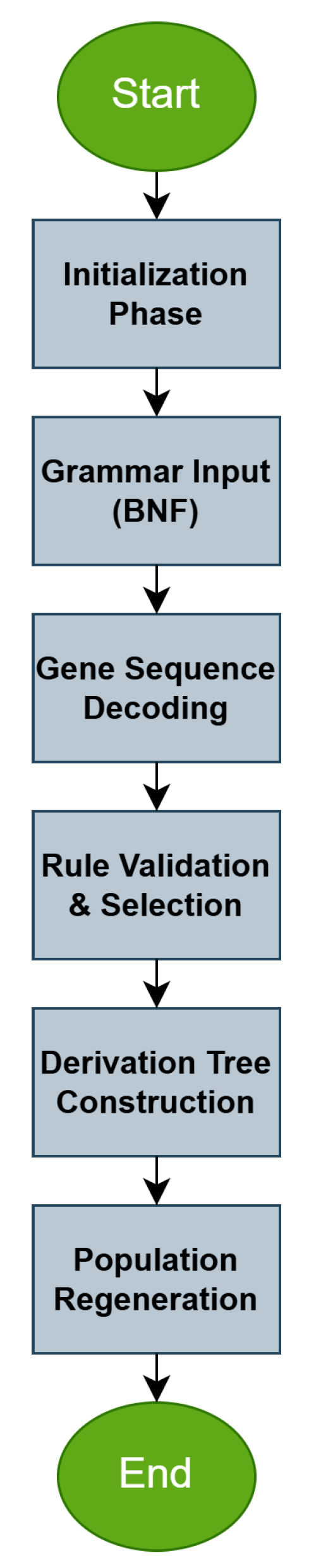

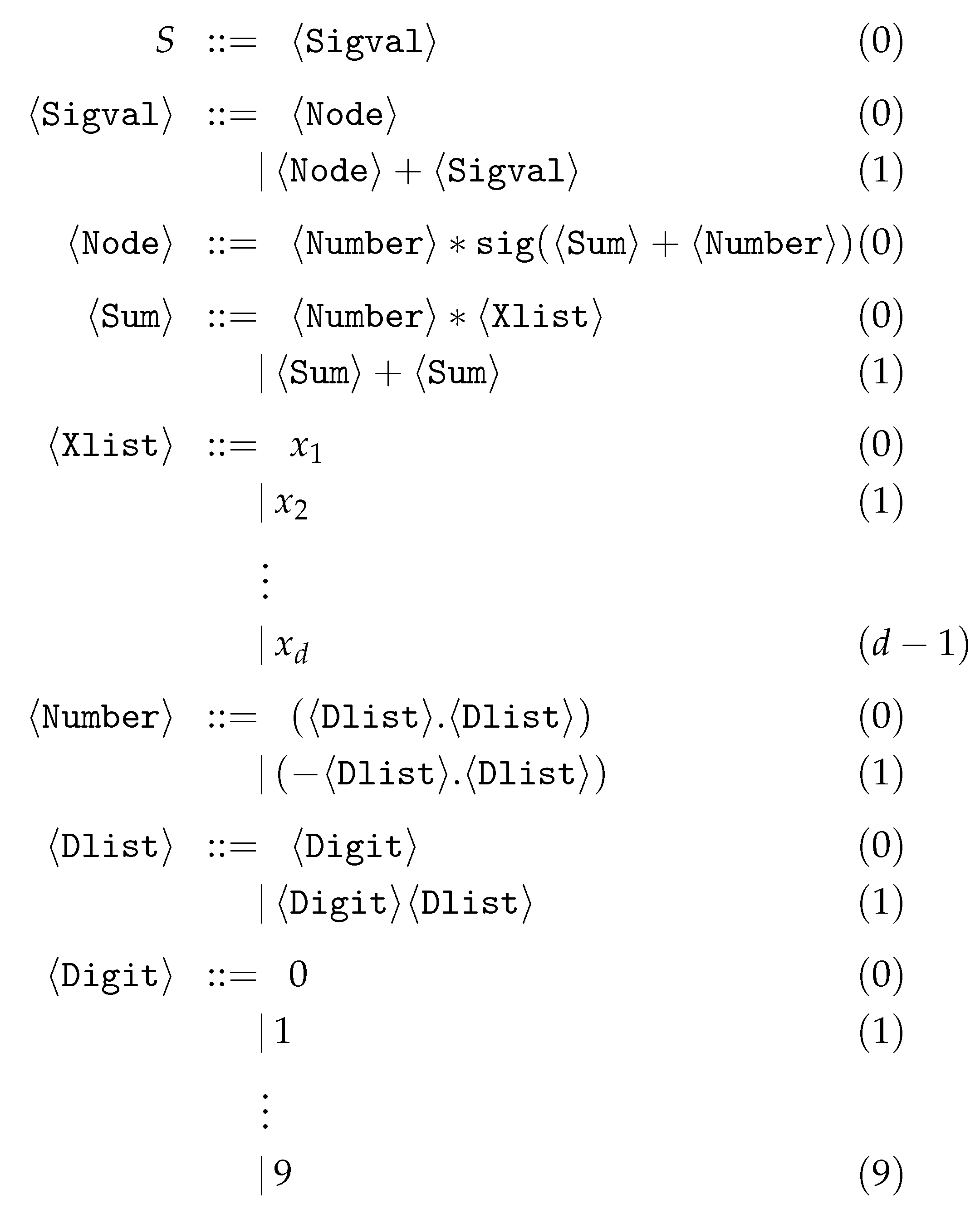

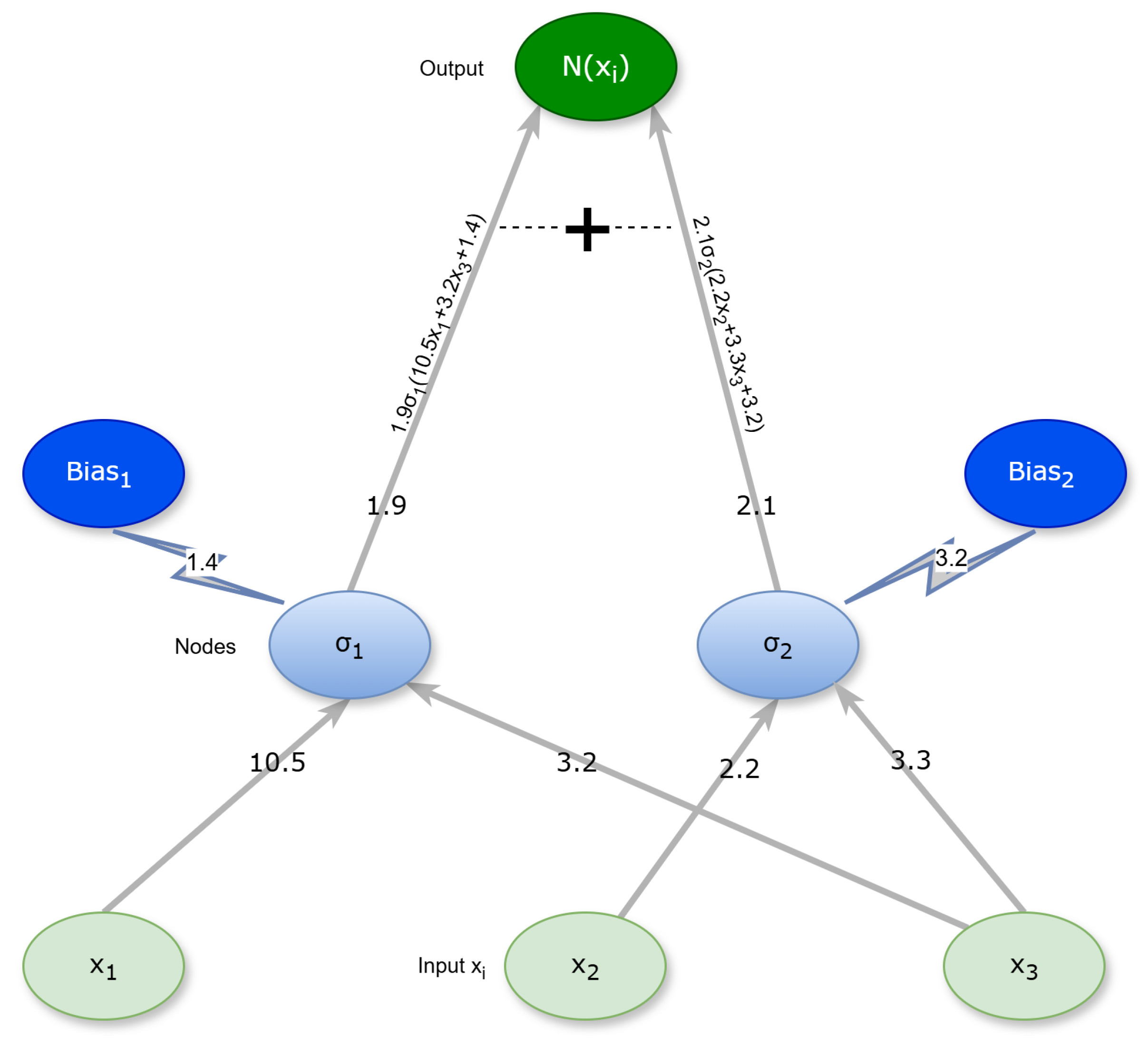

Recently, a method that utilizes Grammatical Evolution [40] to create the architecture of neural networks was proposed. This method can dynamically discover the desired architecture of a neural network, and it can also detect the optimal values for the corresponding parameters [41]. The technique creates various trial structures of artificial neural networks, which evolve from generation to generation through genetic operators, aiming to obtain the global minimum of the training error given by Equation (1). The method has been applied successfully to a series of practical problems, including chemical problems [42], the solution of differential equations [43], medical problems [44], education problems [45], and autism screening [46].

Compared to other techniques for constructing the structure of artificial neural networks, the method guided by Grammatical Evolution has a number of advantages. First, it can generate both the topology and the values of the associated parameters. It can also isolate the most important features of the dataset and retain only those synapses of the neural network that lead to a reduction in the training error. Additionally, it does not require prior knowledge of the objective problem and can be applied without modification to both classification and regression problems. Since a grammar is used to generate the artificial neural network, the researcher can also explain why one structure may be preferred over others. Finally, the method could be faster than others, because Grammatical Evolution uses integer-based chromosomes to express valid programs of the underlying grammar.
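The integer-to-program mapping mentioned in the last point can be illustrated with the standard Grammatical Evolution rule: the leftmost non-terminal is expanded with production number `codon mod (number of productions)`, and the chromosome may be wrapped a limited number of times. The toy expression grammar and the function name `ge_map` below are hypothetical; this is not the grammar the method uses to describe neural networks.

```python
from typing import Dict, List, Optional

# Hypothetical toy grammar (NOT the neural-network grammar of the method).
GRAMMAR: Dict[str, List[List[str]]] = {
    "<expr>": [["<expr>", "<op>", "<expr>"], ["(", "<expr>", ")"], ["<var>"]],
    "<op>": [["+"], ["-"], ["*"]],
    "<var>": [["x1"], ["x2"]],
}


def ge_map(chromosome: List[int], start: str = "<expr>",
           max_wraps: int = 2) -> Optional[str]:
    """Map an integer chromosome to a program with the GE modulo rule.

    Returns None (an invalid individual) if the codons, including up to
    `max_wraps` wraps of the chromosome, run out before the derivation ends.
    """
    symbols = [start]
    codon_index = 0
    max_codons = len(chromosome) * (max_wraps + 1)
    while True:
        # Find the leftmost non-terminal still to be expanded.
        position = next((i for i, s in enumerate(symbols) if s in GRAMMAR), None)
        if position is None:
            return " ".join(symbols)
        if codon_index >= max_codons:
            return None
        rules = GRAMMAR[symbols[position]]
        codon = chromosome[codon_index % len(chromosome)]  # wrapping
        symbols[position:position + 1] = rules[codon % len(rules)]
        codon_index += 1


print(ge_map([0, 2, 0, 1, 2, 1]))  # x1 - x2
```

Every chromosome either maps to a syntactically valid program or is rejected, which is why the genetic operators can work directly on plain integer vectors.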

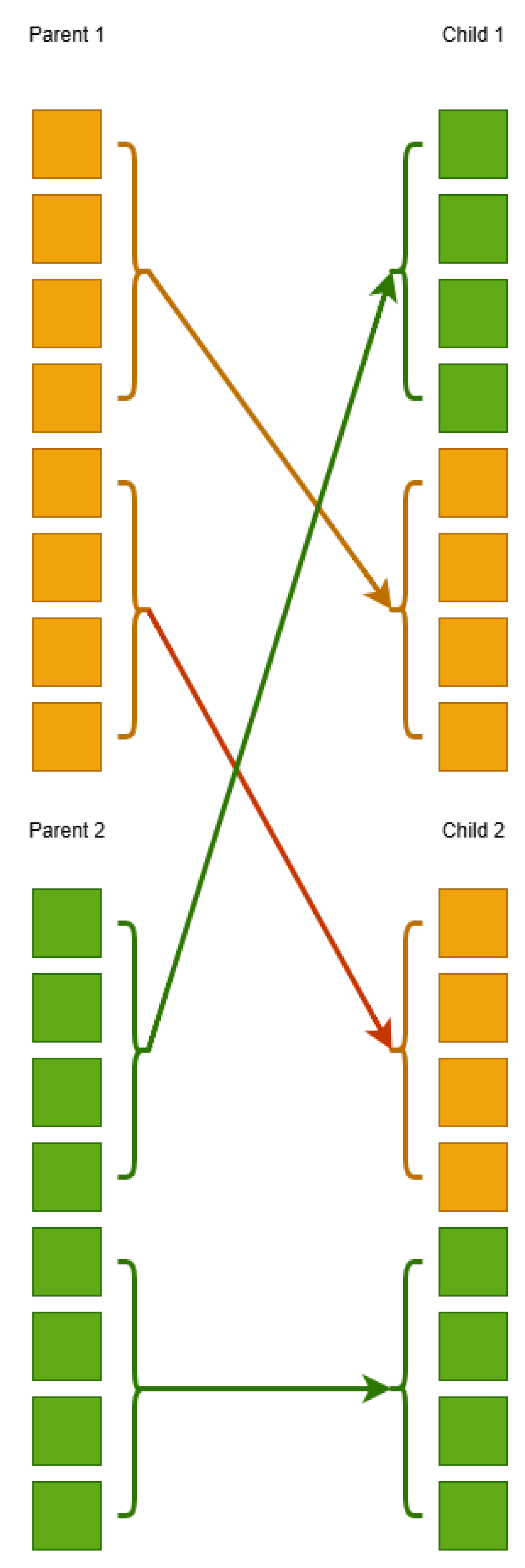

However, in many cases, training the above model is not efficient, and the model can become trapped in local minima of the error function, which directly results in poor performance on the problem data. An important factor in the problems addressed by Grammatical Evolution is the initial values of the chromosomes in the genetic population. If the initialization is not effective, Grammatical Evolution may take a significant amount of time to find the optimal solution to the problem. In the case of artificial neural networks, an ineffective initialization of the genetic population can also lead to the model becoming trapped in local minima of the error function. In this paper, we propose introducing an additional phase into the artificial neural network construction algorithm. In this phase, an optimization method, such as a genetic algorithm, trains an artificial neural network with a fixed number of parameters. The result of this additional phase is a trained artificial neural network, which is introduced into the initial genetic population of Grammatical Evolution. In this way, the evolution of the chromosomes is expected to be accelerated, since the genetic operators will produce chromosomes that reuse genetic material from the chromosome introduced in the first phase of the proposed process. The final method was applied to many classification and regression problems and was compared against the original neural network construction method, with promising results.
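A minimal sketch of the two phases described above follows, under clearly stated assumptions. Phase 1 is written as a simple real-coded genetic algorithm (elitism, arithmetic crossover, Gaussian mutation) that minimizes a given error function over a fixed-length weight vector; phase 2 only shows how an already-encoded chromosome would be placed into an otherwise random integer population. How a trained network is translated into a Grammatical Evolution chromosome is not described in this section, so `seed_chromosome` is taken as given. All function names, operators, and parameter values here are hypothetical and are not the authors' implementation.

```python
import random
from typing import Callable, List, Optional, Sequence


def phase1_genetic(error_fn: Callable[[List[float]], float], n_weights: int,
                   pop_size: int = 50, generations: int = 100,
                   bound: float = 2.0,
                   rng: Optional[random.Random] = None) -> List[float]:
    """Hypothetical phase 1: a minimal real-coded GA minimizing error_fn over
    weight vectors of fixed length, bounded in [-bound, bound]."""
    rng = rng or random.Random(0)
    pop = [[rng.uniform(-bound, bound) for _ in range(n_weights)]
           for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=error_fn)                   # lowest error first
        elite = pop[:max(2, pop_size // 2)]      # better half survives unchanged
        children: List[List[float]] = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            alpha = rng.random()
            # Arithmetic crossover: a random convex combination of two parents.
            child = [alpha * ai + (1.0 - alpha) * bi for ai, bi in zip(a, b)]
            # Gaussian mutation of one random weight, clipped to the bounds.
            k = rng.randrange(n_weights)
            child[k] = min(bound, max(-bound, child[k] + rng.gauss(0.0, 0.1)))
            children.append(child)
        pop = elite + children
    return min(pop, key=error_fn)


def seeded_population(pop_size: int, chrom_len: int,
                      seed_chromosome: Sequence[int], max_codon: int = 255,
                      rng: Optional[random.Random] = None) -> List[List[int]]:
    """Hypothetical start of phase 2: random integer GE chromosomes, with the
    first slot replaced by the chromosome produced from phase 1."""
    rng = rng or random.Random(1)
    pop = [[rng.randint(0, max_codon) for _ in range(chrom_len)]
           for _ in range(pop_size)]
    pop[0] = list(seed_chromosome)
    return pop


# Usage: fit three weights to a toy quadratic error, then seed a population.
best = phase1_genetic(lambda w: sum((wi - 0.5) ** 2 for wi in w), n_weights=3)
population = seeded_population(pop_size=10, chrom_len=5,
                               seed_chromosome=[7, 7, 7, 7, 7])
```

Only one slot is seeded here, so the remaining population keeps its random diversity while crossover can spread material from the pre-trained individual.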

Similar works on the pre-training of neural networks have been presented in the related literature. For example, Li et al. focused on accelerating the back propagation training algorithm by incorporating an initial weight pre-training [47]. Erhan et al. [48] discussed the role of different pre-training mechanisms in the effectiveness of neural networks. A recent work [49] discusses the effect of pre-training artificial neural networks on the problem of software fault prediction. Saikia et al. proposed a novel pre-training mechanism [50] to improve the performance of neural networks on regression problems. Moreover, Kroshchanka et al. proposed a method [51] that significantly reduces the number of parameters in neural networks using pre-training. Finally, Noinongyao et al. introduced a method based on Extreme Learning Machines [52] for the efficient pre-training of artificial neural networks.

The construction of neural networks using Grammatical Evolution, as proposed in this article, enables the model to automatically discover a wide range of structures, which may incorporate symmetry or asymmetry between connections and layers. This flexibility allows both symmetric and asymmetric patterns to be represented, which can be important for neural network performance, especially when the problem at hand exhibits intrinsic symmetries in the data or in the relationships among variables. Through the evolutionary process, the proposed approach can produce structures with a higher degree of symmetry in the connections or in the distribution of weights, an aspect that is often associated with improved generalization and stability during training.

Compared to other similar techniques, the present work uses a fixed number of parameters during pre-training, because the goal is not to find the optimal number of parameters but to initialize the population of the next phase more effectively. The most effective number of parameters is determined in the second phase of the algorithm, which searches for the optimal neural network architecture. Furthermore, the proposed technique does not depend on prior knowledge of the data to which it is applied, and it is performed in the same way for both classification and regression problems.

The remainder of this article is organized as follows: in Section 2, the current work and the accompanying genetic algorithm are introduced; in Section 3, the experimental datasets and the series of experiments conducted are listed and discussed thoroughly; finally, in Section 4, some conclusions are discussed.

3. Results

The current work was validated on a series of classification and regression datasets, which are freely available from the following sites:

3.1. Experimental Datasets

The classification datasets used in the conducted experiments are the following:

Appendicitis dataset [73].

Alcohol, used in experiments related to alcohol consumption [74].

Australian, a dataset produced from various bank transactions [75].

Balance dataset [76], produced from various psychological experiments.

Cleveland, a medical dataset discussed in a series of papers [77,78].

Circular, an artificially generated dataset with two distinct classes.

Dermatology, which is related to dermatology problems [79].

Ecoli, a dataset regarding protein problems [80].

Glass, a dataset that contains measurements from glass component analysis.

Haberman, a medical dataset used for the detection of breast cancer.

Heart, which is used for the detection of heart diseases [82].

HeartAttack, a medical dataset related to heart diseases.

Housevotes, a dataset containing congressional voting records from the USA [83].

Ionosphere, a commonly used dataset of measurements from the ionosphere [84,85].

Liverdisorder, a medical dataset that was studied thoroughly in a series of papers [86,87].

Mammographic, which is related to breast cancer detection [89].

Parkinsons, which is related to the detection of Parkinson’s disease [90,91].

Pima, related to the detection of diabetes [92].

Phoneme, a dataset that contains sound measurements.

Popfailures, which is related to climate measurements [93].

Regions2, a dataset used for the detection of liver problems [94].

Saheart, a medical dataset concerning heart diseases [95].

Statheart, a dataset related to the detection of heart diseases.

Spiral, an artificial dataset with two classes.

Student, a dataset regarding experiments in schools [97].

Transfusion dataset [98].

Wdbc, a medical dataset regarding breast cancer [99,100].

Wine, a dataset regarding measurements of the quality of wines [101,102].

EEG, a dataset related to EEG measurements [103,104]; the following cases from this dataset were used: Z_F_S, ZO_NF_S, ZONF_S, and Z_O_N_F_S.

Zoo, which is related to the classification of animals into predefined categories [105].

Also, the following list of regression datasets was used in the conducted experiments:

Abalone, related to the prediction of the age of abalones [106].

Airfoil, a dataset provided by NASA [107].

Auto, regarding fuel consumption in cars.

BK, used to predict the points scored by basketball players.

BL, a dataset regarding some electricity experiments.

Baseball, used to estimate the income of baseball players.

Concrete, related to measurements from civil engineering [108].

DEE, a dataset used to estimate electricity prices.

FY, related to fruit flies.

HO, a dataset found in the STATLIB repository.

Housing, a dataset used for the prediction of house prices [110].

Laser, which contains measurements from various physics experiments.

LW, a dataset regarding the weight of babies.

Mortgage, a dataset that contains measurements from the economy of the USA.

PL dataset, located in the STATLIB repository.

Plastic, a dataset related to problems in plastics.

Quake, a dataset that contains measurements from earthquakes.

SN, a dataset used in trellising and pruning.

Stock, related to the prices of stocks.

Treasury, an economic dataset.

3.2. Experiments

The code used in the current work was written in C++, using the freely available Optimus environment [111]. All experiments were repeated 30 times, using a different seed for the random number generator in each run. The experimental results were validated with the well-known ten-fold cross-validation procedure. For the classification datasets, the average classification error is reported, calculated using the following formula:

$$E_C\left(N(\vec{x},\vec{w})\right) = 100 \times \frac{\sum_{i=1}^{K} \left[\operatorname{class}\left(N(\vec{x}_i,\vec{w})\right) \neq y_i\right]}{K}$$

where the bracket equals 1 when its argument is true and 0 otherwise. The set $T$ is the test set, defined as $T = \left\{(\vec{x}_i, y_i),\ i = 1,\ldots,K\right\}$. Likewise, for the regression datasets, the average regression error is reported, computed using the following expression:

$$E_R\left(N(\vec{x},\vec{w})\right) = \frac{\sum_{i=1}^{K} \left(N(\vec{x}_i,\vec{w}) - y_i\right)^2}{K}$$

The values of the experimental settings are listed in Table 1. The parameter values were chosen as a compromise between the efficiency and the speed of the methods used in the experiments. The following notation is used in the experimental tables:

The column DATASET represents the objective problem.

The column ADAM denotes the results of training a neural network with the ADAM optimizer [112]. The number of processing nodes was set to $H$.

The column BFGS represents the results obtained by training a neural network with $H$ processing nodes using the BFGS variant of Powell [113].

The column GENETIC stands for the results obtained by training a neural network with $H$ processing nodes using a genetic algorithm with the same parameter set as provided in Table 1.

The column RBF describes the results of a Radial Basis Function (RBF) network [114,115] with $H$ hidden nodes.

The column NNC represents the results obtained by the original neural network construction method.

The column NEAT represents the NEAT method (NeuroEvolution of Augmenting Topologies) [116].

The column PRUNE stands for the OBS pruning method [117], provided by the Fast Compressed Neural Networks library [118].

The column PROPOSED shows the results obtained by the proposed method.

The row AVERAGE depicts the average classification or regression error over all datasets in the corresponding table.
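The evaluation protocol described at the start of this subsection can be sketched as follows: pattern indices are shuffled and dealt into ten folds, each fold serves once as the test set $T$, and the classification error (percentage of misclassified patterns) or the regression error is computed on it; the AVERAGE row is then a plain mean over datasets. The regression error is written here as the average squared deviation, which is an assumption about its exact form, and the helper names are hypothetical. The models themselves are omitted; the `seed` argument stands in for the 30 repeated runs with different random seeds.

```python
import random
from typing import List, Sequence


def ten_fold_indices(n: int, folds: int = 10, seed: int = 0) -> List[List[int]]:
    """Shuffle the pattern indices 0..n-1 and deal them into `folds` test folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[k::folds] for k in range(folds)]


def classification_error(predicted: Sequence[int], expected: Sequence[int]) -> float:
    """E_C: percentage of test patterns whose predicted class differs from y_i."""
    wrong = sum(1 for p, y in zip(predicted, expected) if p != y)
    return 100.0 * wrong / len(expected)


def regression_error(predicted: Sequence[float], expected: Sequence[float]) -> float:
    """E_R (assumed form): average squared deviation over the test set."""
    return sum((p - y) ** 2 for p, y in zip(predicted, expected)) / len(expected)


def average_row(errors: Sequence[float]) -> float:
    """AVERAGE row of a results table: mean of the per-dataset errors."""
    return sum(errors) / len(errors)


# Each fold is used once as T; the remaining indices form the training set.
folds = ten_fold_indices(25)
for test_fold in folds:
    train = [i for f in folds if f is not test_fold for i in f]
```

Dealing shuffled indices round-robin keeps fold sizes within one pattern of each other, even when the dataset size is not a multiple of ten.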

In Table 2, classification error rates are presented for a variety of machine learning models applied to the classification datasets. The values are error percentages, so lower values correspond to better performance on each dataset. The final row shows the average error rate of each model, which serves as a general indicator of overall performance across all datasets. Based on the average errors, the current method achieves the lowest average error rate, 19.63%, which suggests that it generally outperforms the other methods. It is followed by the NNC model, with an average error of 24.79%, which is also considerably lower than that of traditional approaches such as ADAM, BFGS, and GENETIC, whose average error rates are 36.45%, 35.71%, and 28.25%, respectively. The PRUNE method also performs relatively well, with a mean error of 27.94%. At the level of individual datasets, the PROPOSED method achieves the lowest error among all methods in a considerable number of cases, such as the CIRCULAR, DERMATOLOGY, SEGMENT, Z_F_S, ZO_NF_S, ZONF_S, and ZOO datasets. In many of these cases, the gap between the PROPOSED method and the others is large, which indicates that the method performs well across datasets with different characteristics and structures. Some models, including GENETIC, RBF, and NEAT, show relatively high errors on several datasets, which may be due to issues such as overfitting, poor adaptation to non-linear relationships, or generally weaker generalization. In contrast, the NNC and PRUNE models behave more consistently, while the PROPOSED method combines the lowest overall error with reliable performance across a wide range of problem types.

In summary, the classification error rates indicate that the PROPOSED method outperforms the other methods, both in average performance and in the number of datasets on which it excels: it achieves the best results on the majority of datasets, often with considerably lower error rates. This advantage may be attributed to better adaptability to the characteristics of the data and to more effective avoidance of overfitting, which the better-initialized genetic population produced by the pre-training phase may help to achieve.

Table 3 presents the results of the various machine learning methods on the regression datasets. Columns correspond to algorithms and rows to datasets. The numerical values are regression errors, indicating the magnitude of deviation from the actual values, so smaller values signify higher prediction accuracy. The last row reports the average error of each method across all datasets, offering a general measure of overall performance. According to the overall results, the PROPOSED method exhibits the lowest average error, 4.83, indicating higher overall accuracy than the other approaches. The second-best model is NNC, with an average error of 6.29, which also stands out from the traditional methods. In contrast, ADAM and BFGS show considerably higher average errors, 22.46 and 30.29, respectively, which suggests that these methods may not adapt well to the specific characteristics of the regression problems evaluated. At the level of individual datasets, the PROPOSED method achieves notably low error values on multiple datasets, including AIRFOIL, CONCRETE, LASER, PL, PLASTIC, and STOCK, outperforming the other algorithms by a considerable margin. Its consistent performance across such diverse problems suggests that it is a flexible and reliable approach. It also performs strongly on more complex datasets with high error variability, such as AUTO and BASEBALL, which further suggests that the method adapts effectively to varying data structures. By comparison, algorithms such as GENETIC and RBF behave less stably, performing well on some datasets but poorly on others, which results in a higher overall average error.

The PRUNE method, although not a traditional algorithm, shows moderate performance overall, while NEAT does not stand out on any particular dataset and maintains a relatively high average error. In conclusion, the analysis indicates that the PROPOSED method achieves the best predictive accuracy, both on average and across a large number of individual datasets. Its ability to keep the error low across different types of problems makes it a promising option for regression tasks involving heterogeneous data.

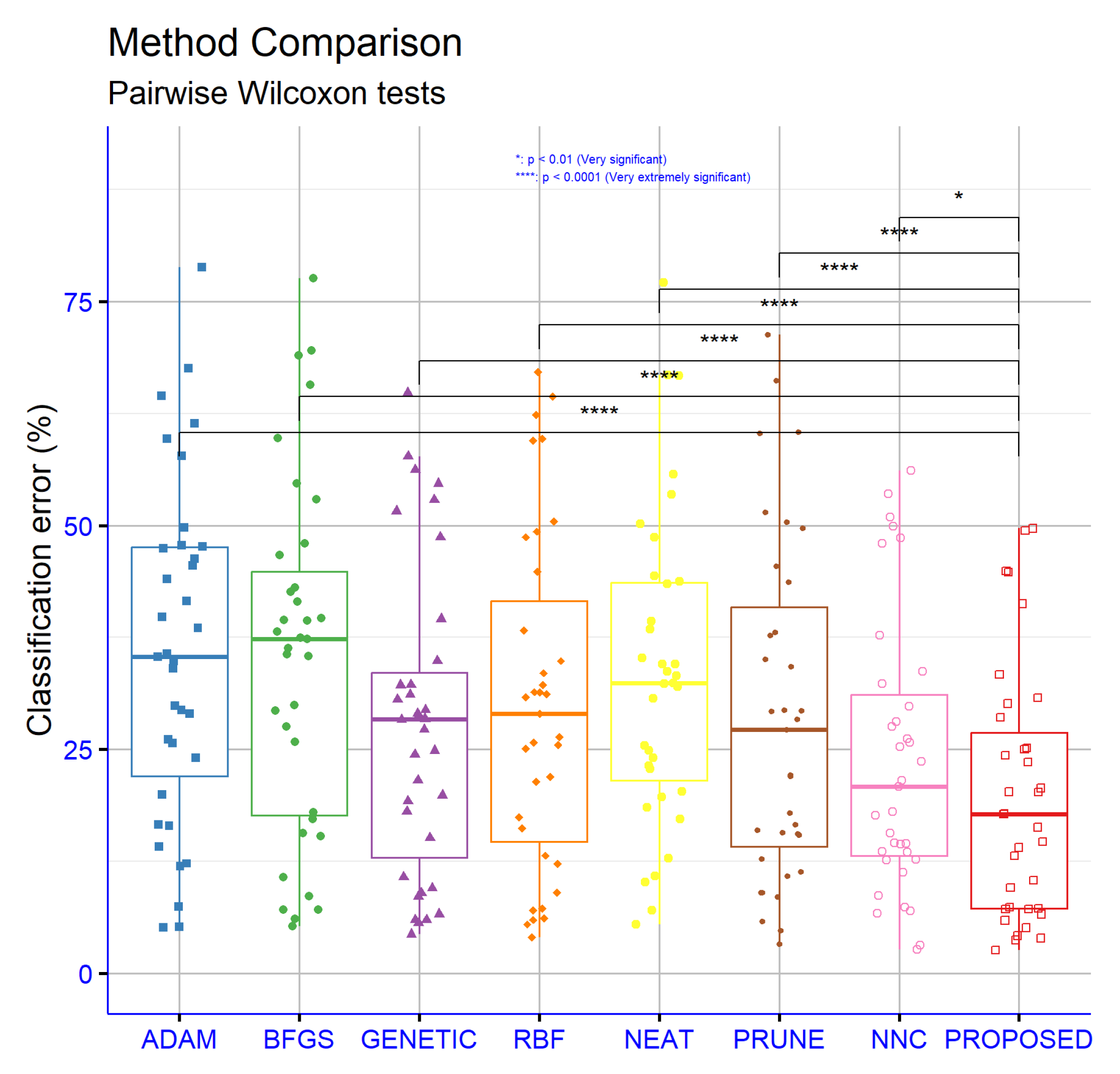

To determine the significance levels of the experimental results presented in the classification tables, statistical analyses were conducted. Only the non-parametric paired Wilcoxon signed-rank test was used, both to assess the statistical significance of the differences between the PROPOSED method and the other methods and for the hyperparameter comparisons, in classification as well as regression tasks. The analyses are based on the p-value, which is used to assess the statistical significance of performance differences between models. As shown in Figure 5, the differences in performance between the PROPOSED model and all other models, namely ADAM, BFGS, GENETIC, RBF, NEAT, and PRUNE, are highly significant, with p < 0.0001. This indicates, with a high level of confidence, that the PROPOSED model outperforms these models in classification accuracy. Even the comparison with NNC, the model with the closest average performance, shows a statistically significant difference, with p < 0.05. This suggests that the advantage of the PROPOSED model is unlikely to be due to random variation. Therefore, based on the experimental data and the corresponding statistical analysis, the PROPOSED model appears to be the best choice among the evaluated models for classification tasks.
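The paired Wilcoxon signed-rank test used in these comparisons can be sketched in a few lines: differences between paired errors are ranked by absolute value (zero differences dropped, ties given their average rank), the rank sums of the positive and negative differences are formed, and the smaller sum is converted to a two-sided p-value. This sketch uses the large-sample normal approximation without a tie correction, which is an assumption; `scipy.stats.wilcoxon` offers exact and tie-corrected variants. What exactly was paired in this study (per-dataset averages or individual runs) is not stated in this section.

```python
import math
from typing import Sequence, Tuple


def wilcoxon_signed_rank(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Paired Wilcoxon signed-rank test, normal approximation.

    Returns (W, p) where W = min(W+, W-) and p is two-sided. Zero differences
    are discarded and tied |differences| receive their average rank; the
    variance is not tie-corrected, so this is only a sketch.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0  # no differences at all
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        # Extend j over the run of equal absolute differences starting at i.
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w, p
```

For instance, calling it with two hypothetical lists of per-dataset errors, one per method, yields the statistic and a p-value that can be compared with thresholds such as 0.05 or 0.0001.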

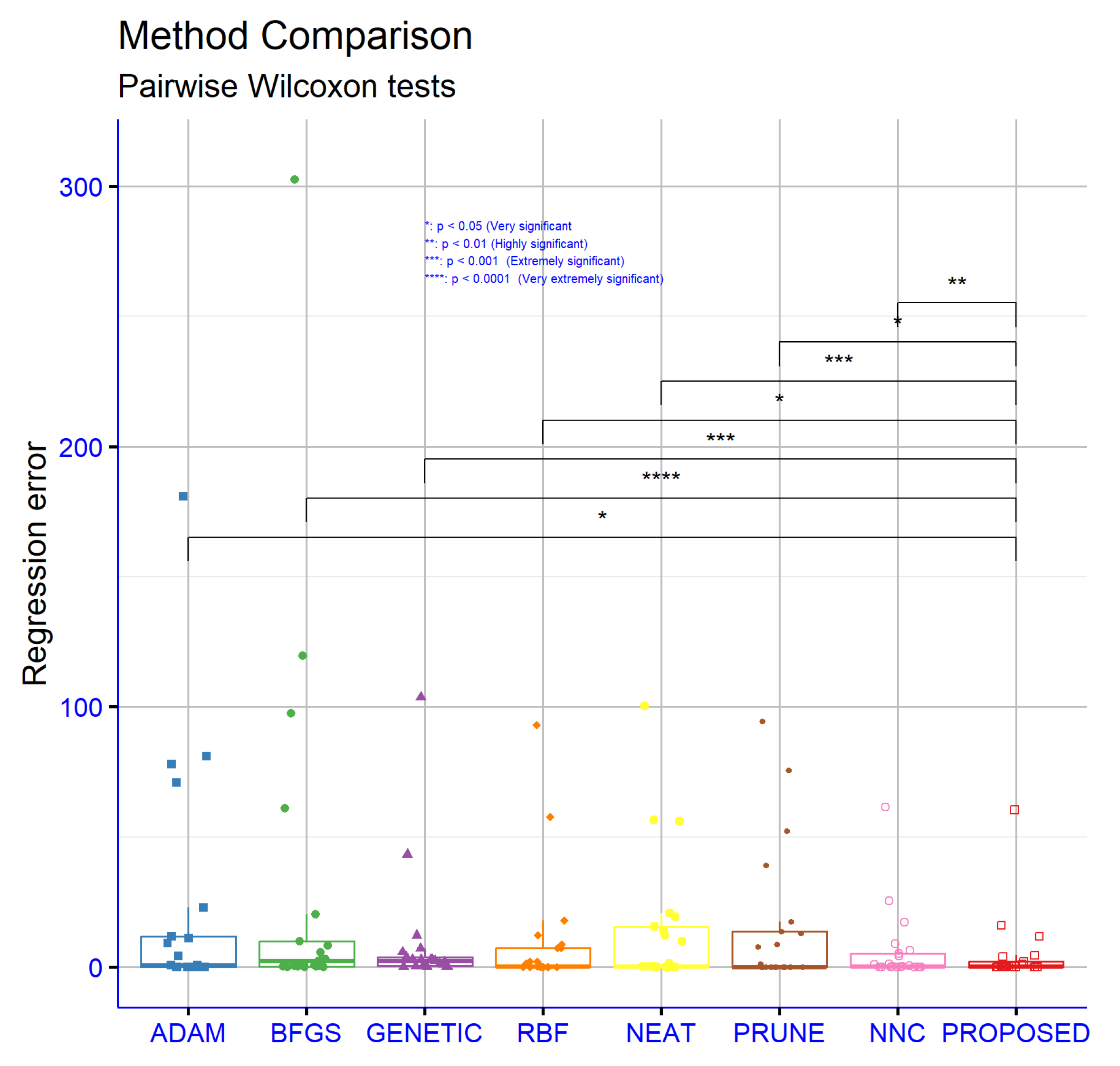

The results presented in Figure 6 show that the performance difference between the PROPOSED model and BFGS is highly significant (p < 0.0001), clearly indicating the superiority of the PROPOSED model. Similarly, the comparisons with GENETIC and NEAT show very high statistical significance (p < 0.001), confirming that the PROPOSED model achieves clearly better results. The difference with NNC, though smaller, remains significant (p < 0.01), showing that the PROPOSED model outperforms even one of the best-performing alternative models. The differences with ADAM, RBF, and PRUNE are statistically significant at the p < 0.05 level, suggesting an advantage of the PROPOSED model in these cases as well, albeit with a lower confidence level. Overall, the statistical analysis of the regression results supports the superiority of the PROPOSED model, both in average prediction accuracy and in the consistency of its performance compared to the alternative approaches.

3.3. Experiments with the Weight Factor

An additional experiment was conducted in which the initial weight parameter used in the first phase of the current work was varied from 2 to 10. The purpose of this experiment is to determine the stability of the proposed procedure with respect to changes in this critical parameter.

Table 4 presents the results of the PROPOSED method on the classification datasets for four distinct values of this parameter (the initialization factor): 2, 3, 5, and 10. The recorded values are error percentages for each dataset, and the last row of the table gives the average error rate for each parameter value. The value 10 exhibits the lowest average error rate (19.63%); the next-best setting reaches 19.89%, and the remaining two settings have slightly higher averages, 20.32% and 20.33%. The differences between the averages are relatively small, which suggests that the parameter does not dramatically affect the model’s performance; however, the lower average error at higher parameter values may indicate a trend of improvement.

Small variations are observed on individual datasets, depending on the setting. In some cases, such as SEGMENT and CIRCULAR, increasing the parameter value leads to noticeably better results. For example, in SEGMENT the error rate decreases from 39.10% to only 9.59% as the parameter increases, and a similar improvement is observed in CIRCULAR, where the error decreases from 14.71% to 4.22%. Conversely, on other datasets the variation is smaller or negligible, and in some cases, such as ECOLI and CLEVELAND, higher values lead to slightly increased error. Overall, although no statistically significant differences are observed between the parameter values, in accordance with the corresponding p-values, there is an indication that higher values of the parameter, such as 10, are associated with slightly improved average performance and better results on certain datasets. This may indicate that a higher initialization factor allows the model to start from more favorable learning conditions, particularly on more complex datasets. However, because the variation is not systematic across all datasets, the value of this parameter should be selected carefully, taking into account the characteristics of each specific problem.

In Table 5, a general trend of decreasing average error is observed as the value of the initialization factor increases. The average drops from 6.08 (value 2) to 5.48 (value 3), 5.24 (value 5), and finally 4.83 (value 10). This sequential decrease suggests that higher values of the factor tend to improve the model’s overall performance. However, the effect is not uniform across all datasets. In some cases, the improvement is striking: in AUTO, the error decreases from 17.16 to 11.73; in HOUSING, it falls from 27.19 to 15.96; and in FRIEDMAN, the most noticeable improvement is recorded, from 6.49 to 1.25. Additionally, in STOCK, a substantial drop from 8.79 to 3.96 is observed. Conversely, on some datasets, performance deteriorates as the factor increases: in BASEBALL, the error increases from 59.05 to 60.42, and in LW from 0.11 to 0.32. On other datasets, such as AIRFOIL, LASER, and PL, the differences are minimal and practically negligible, with values remaining very close for all parameter values. For example, in AIRFOIL all values are around 0.002, while in PL the difference between values is merely 0.001. This heterogeneous response of the datasets underscores that the optimal value of the factor depends significantly on the specific characteristics of each problem. Despite the general improvement at higher values, notable exceptions such as BASEBALL and LW confirm that no single setting is optimal for all regression problems.

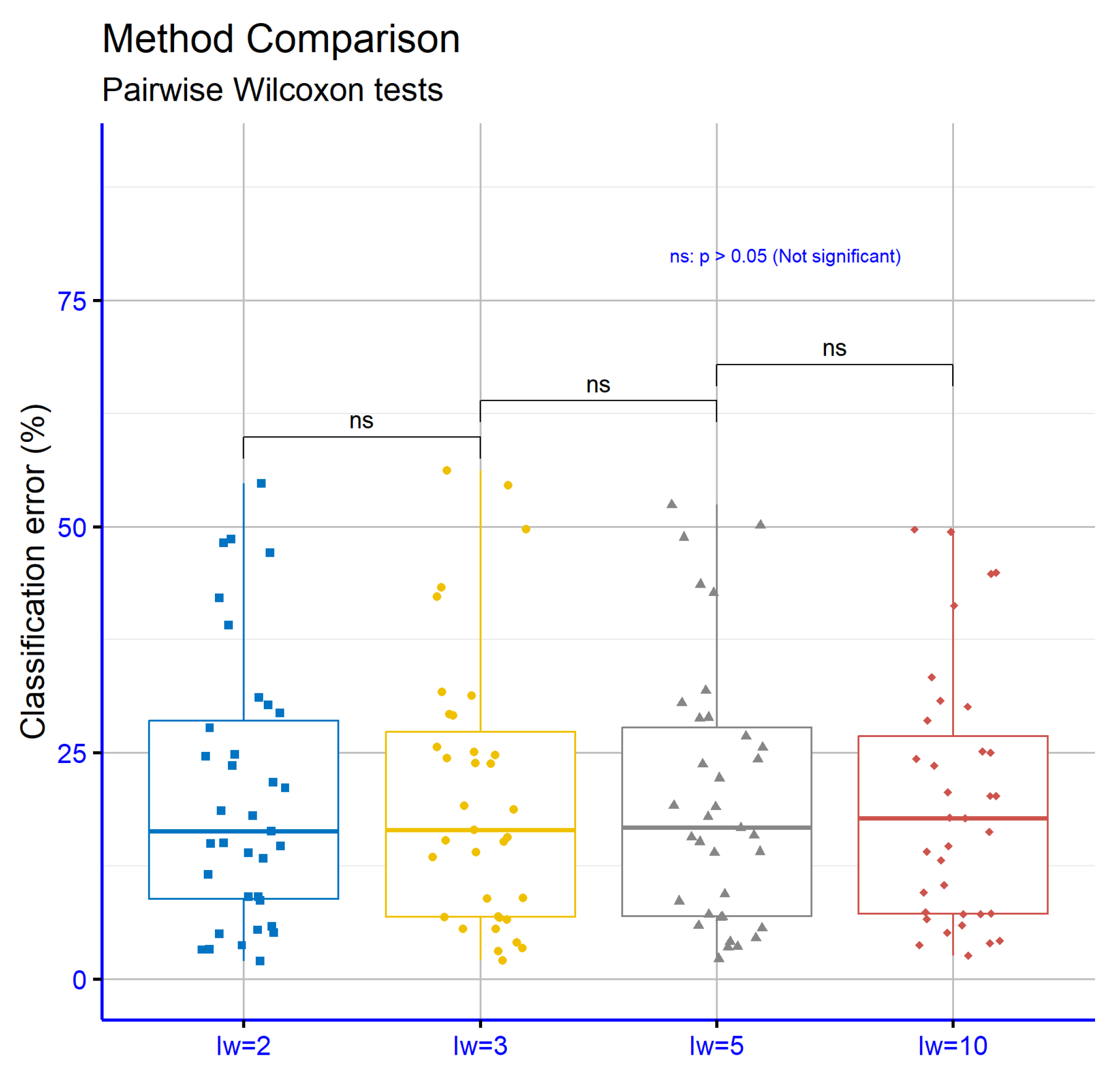

Figure 7 presents the significance levels for the comparisons between different values of the initial weights parameter on the classification datasets. Three pairs of parameter values are compared. In all cases, the p-values are greater than 0.05, indicating that the differences between the respective settings are not statistically significant. This implies that changing this parameter across the tested values does not substantially change the performance of the model on classification tasks.

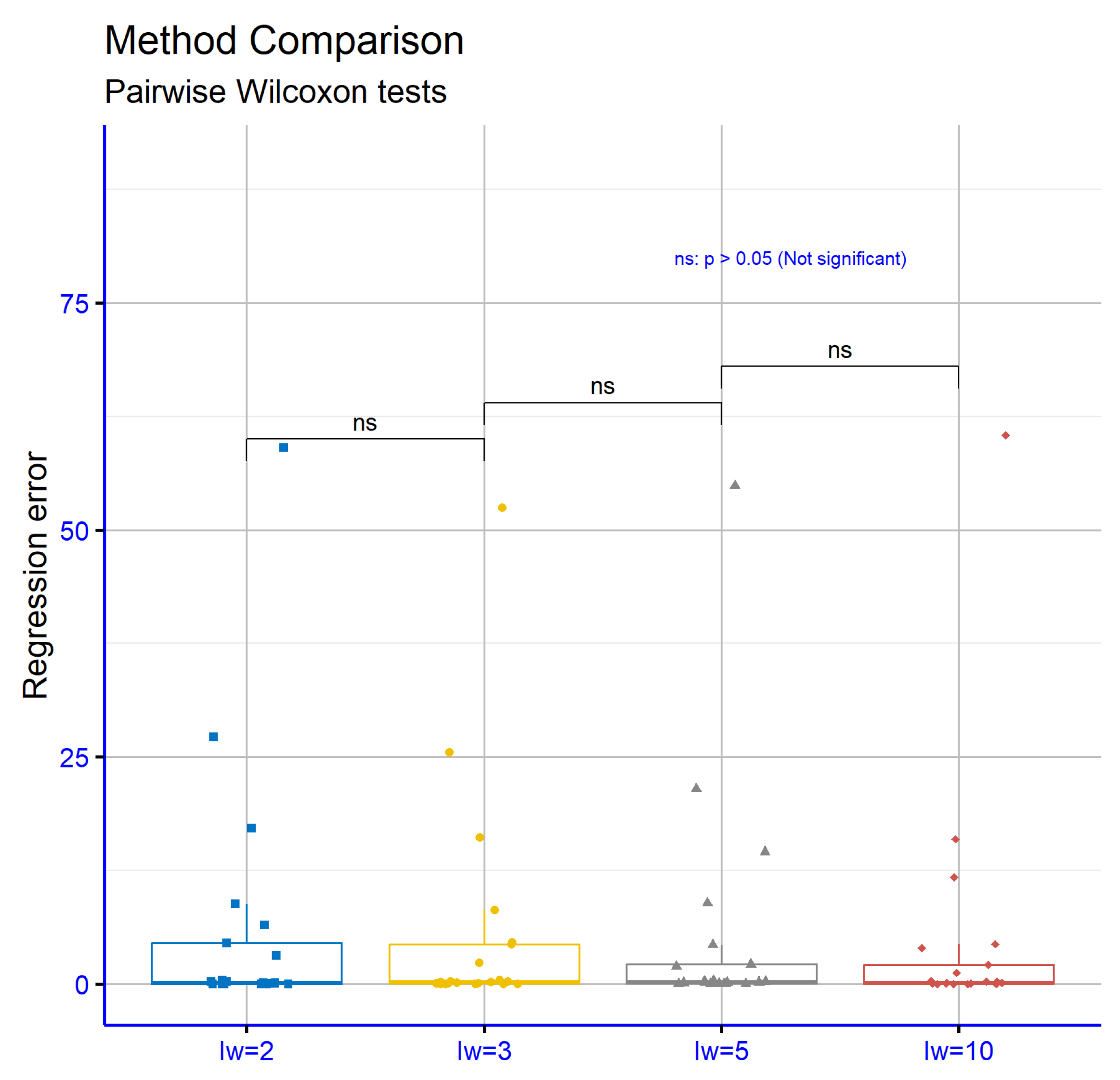

In Figure 8, the statistical evaluation focuses on how different initial weight settings affect performance on regression tasks. The comparisons between the tested values (2, 3, 5, and 10) revealed no significant differences, as all corresponding p-values were greater than 0.05. This suggests that altering the parameter within this range does not lead to measurable differences in the models’ predictive behavior, and that model accuracy remains statistically stable across these configurations in regression scenarios.

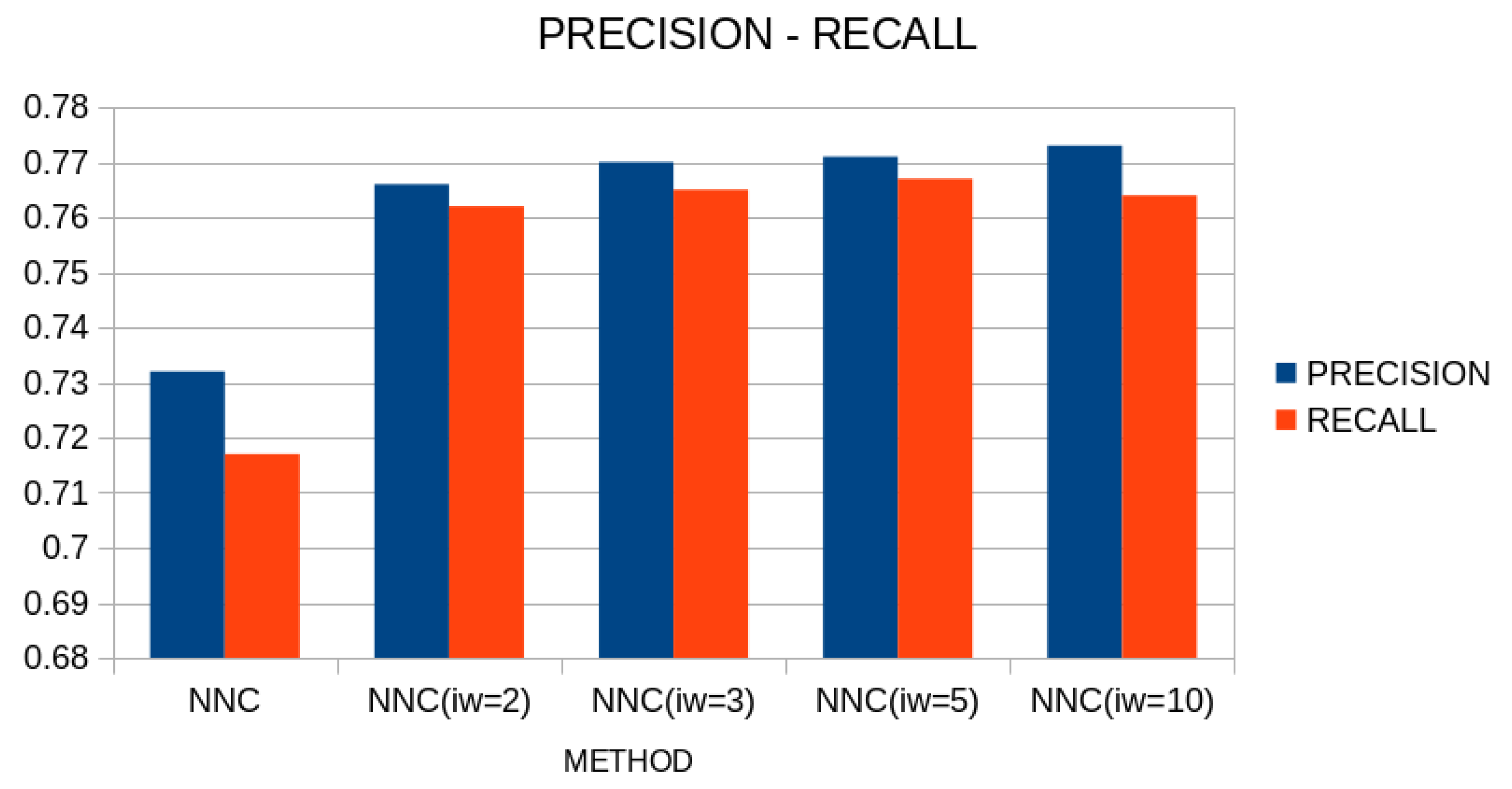

Figure 9 outlines a comparison in terms of precision and recall between the original neural network construction method and the proposed one.

As this figure shows, the proposed technique considerably improves the performance of the artificial neural network construction method on the classification datasets, achieving higher rates of correct classification.
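Precision and recall for a class are the fraction of patterns predicted as that class that truly belong to it, and the fraction of patterns of that class that are predicted correctly. The sketch below computes both per class and then macro-averages them; Figure 9 does not state how the scores are aggregated over classes, so macro averaging and the function names are assumptions.

```python
from typing import Hashable, Sequence, Tuple


def precision_recall(predicted: Sequence[Hashable], expected: Sequence[Hashable],
                     positive: Hashable) -> Tuple[float, float]:
    """Precision and recall of one class treated as the 'positive' class."""
    pairs = list(zip(predicted, expected))
    tp = sum(1 for p, y in pairs if p == positive and y == positive)
    fp = sum(1 for p, y in pairs if p == positive and y != positive)
    fn = sum(1 for p, y in pairs if p != positive and y == positive)
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall


def macro_precision_recall(predicted: Sequence[Hashable],
                           expected: Sequence[Hashable]) -> Tuple[float, float]:
    """Unweighted mean of the per-class precision and recall (macro averaging)."""
    classes = sorted(set(expected) | set(predicted))
    scores = [precision_recall(predicted, expected, c) for c in classes]
    return (sum(s[0] for s in scores) / len(scores),
            sum(s[1] for s in scores) / len(scores))
```

Macro averaging weights every class equally, so improvements on small classes count as much as improvements on large ones; a weighted average would instead follow the class frequencies.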

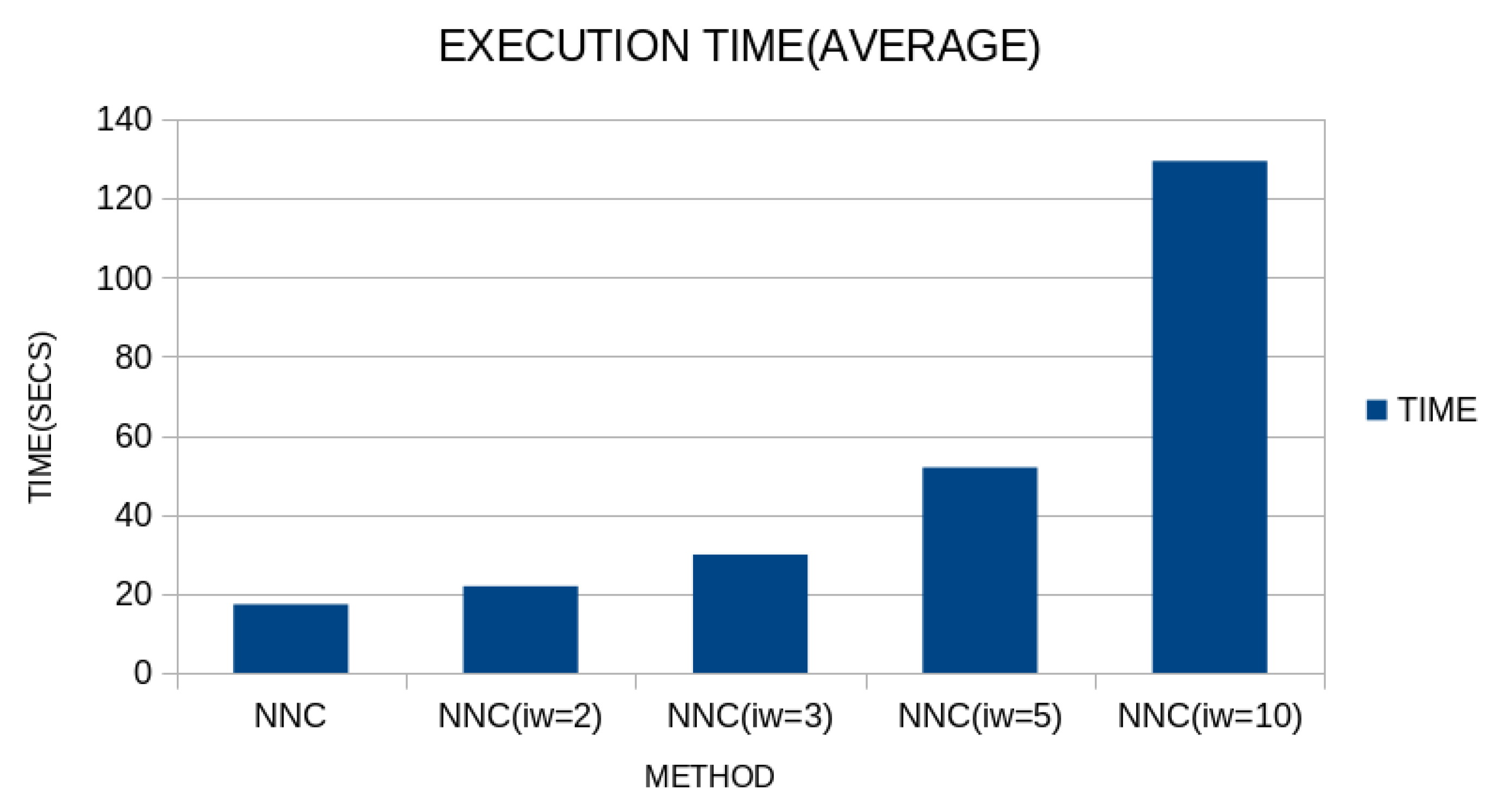

Although the proposed technique appears to be more effective than the original one, the additional first phase results in a considerable increase in execution time, as also demonstrated in Figure 10.

The execution time grows considerably as more units are added to the initial neural network of the first phase, with a particularly sharp increase between two of the tested values.

3.4. Some Real-World Examples

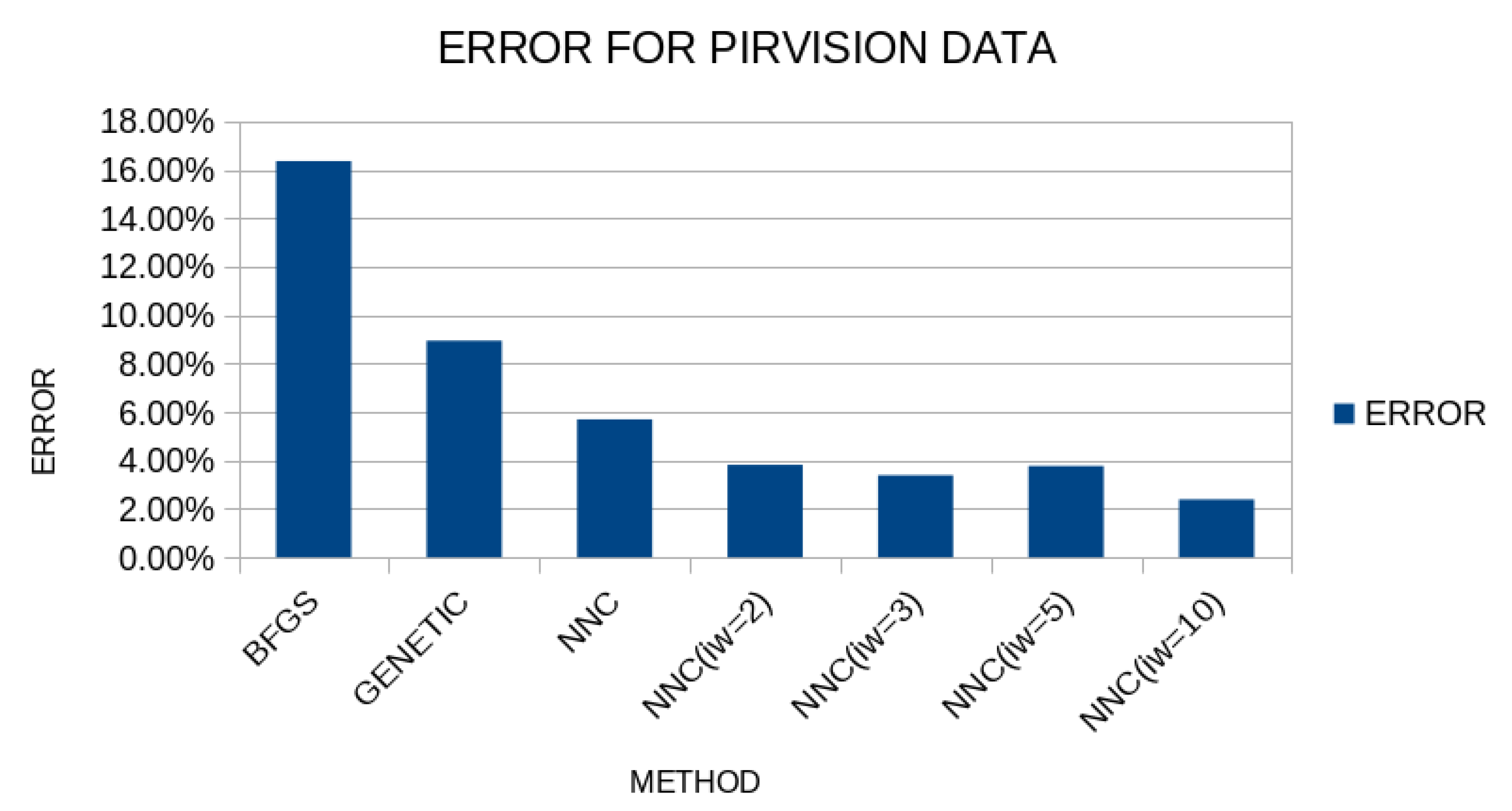

Two real-world datasets were also considered: the PIRvision dataset, first presented in 2023 [119], and the BEED dataset, presented in the work of Banu [120]. The PIRvision dataset contains 15,302 patterns, and each pattern has dimension 59. The BEED dataset (Bangalore EEG Epilepsy Dataset) is a comprehensive EEG collection for epileptic seizure detection and classification, containing 8000 patterns with 16 features each. The following methods were used in the conducted experiments:

BFGS, the BFGS method used to train a neural network with $H$ processing nodes.

GENETIC, a genetic algorithm used to train a neural network with $H$ processing nodes.

NNC, the original neural network construction method.

The PROPOSED method, run with three different values of its parameter.

The results were validated with ten-fold cross-validation and are shown in Figure 11.

These results show that the artificial neural network construction techniques clearly outperform the other methods, and the proposed procedure improves the results further, especially when the parameter takes the value 10, where the average classification error reaches approximately 2%.

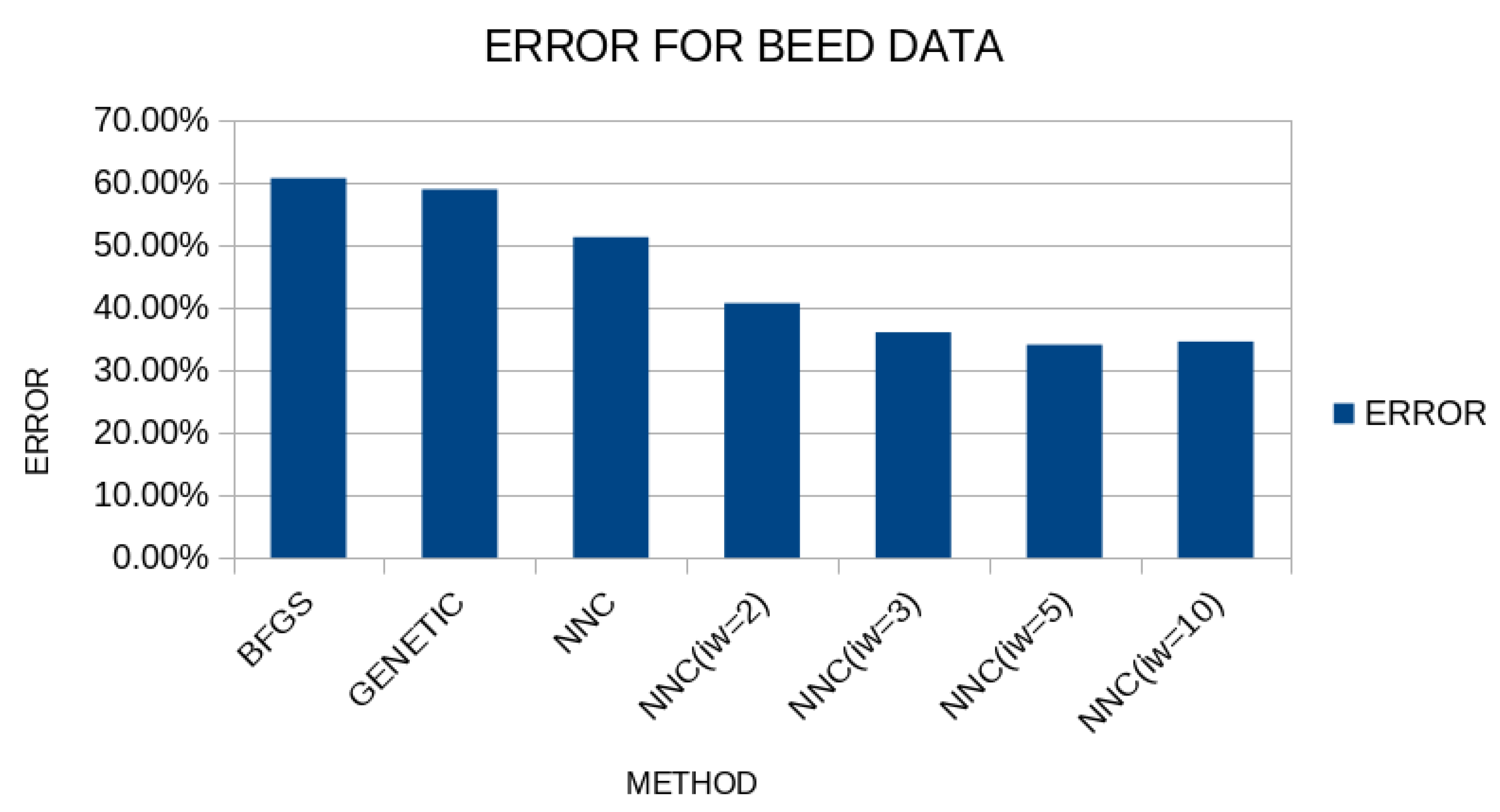

The results for the BEED dataset are outlined in Figure 12.

In this case as well, the PROPOSED method reduces the classification error compared to both the simple genetic algorithm and the original neural network construction method.

3.5. Experiments with the Number of Chromosomes

An additional experiment was conducted with the current work, using a range of values for another critical parameter, the number of chromosomes.

Table 6 presents the classification error rates of the proposed machine learning method across various datasets for four distinct values of the number of chromosomes used in the evolutionary process. For a large proportion of the datasets, increasing the number of chromosomes is accompanied by a reduction in the error rate, indicating that a greater diversity of initial solutions can lead to better final performance. In certain datasets, such as DERMATOLOGY, ECOLI, SEGMENT, and Z_F_S, the improvement is particularly evident for the larger values, whereas in others, such as AUSTRALIAN, HEART, and MAMMOGRAPHIC, the change is small or nonexistent, suggesting that performance in these cases is less sensitive to the number of chromosomes. There are also instances where a larger population does not lead to improvement but rather to a slight increase in error, as in GLASS and CIRCULAR, a phenomenon that may be due to overfitting or random variation in performance. The mean error rate gradually decreases from 21.40% for the smallest number of chromosomes to 19.63% for the largest, confirming the general trend of improvement, although the benefit from very large values appears to diminish, possibly indicating saturation in the model's ability to exploit the additional diversity. Overall, increasing the number of chromosomes contributes to reducing the error, but the degree of benefit depends on the characteristics of each dataset.
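To put the change in mean classification error from 21.40% to 19.63% in perspective, the drop is 1.77 percentage points, i.e., a relative reduction of about 8.3% of the initial mean error. The small Python helper below makes this arithmetic explicit; its name is illustrative.

```python
def relative_reduction(before: float, after: float) -> float:
    """Percentage reduction of an error measure when moving from `before` to `after`."""
    if before == 0:
        raise ValueError("baseline error must be non-zero")
    return 100.0 * (before - after) / before


# Mean classification errors reported for Table 6.
print(round(relative_reduction(21.40, 19.63), 1))  # 8.3
```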

Table 7 presents the absolute prediction errors of the PROPOSED method across the regression datasets for four different numbers of chromosomes. The general trend is a reduction in error as the number of chromosomes increases, suggesting that greater solution diversity leads to more accurate predictions. This is particularly evident in datasets such as AUTO, BL, HOUSING, STOCK, and TREASURY, where the difference between the smallest and the largest number of chromosomes is substantial. In other datasets, such as ABALONE, AIRFOIL, CONCRETE, and LASER, the values remain almost unchanged, indicating that performance in these cases depends little on the number of chromosomes. There are also cases with non-monotonic behavior, such as BK and LW, where adding chromosomes does not consistently reduce the error, possibly due to stochastic factors or overfitting. The mean error steadily decreases from 5.78 for the smallest number of chromosomes to 4.83 for the largest, reinforcing the overall picture of improvement, although the difference between the two largest values is smaller, which may indicate that the benefit of adding chromosomes begins to saturate. Overall, increasing the number of chromosomes improves the accuracy of the method, with the magnitude of the benefit depending on the specific characteristics of each dataset.
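The three behaviours described for Tables 6 and 7 (a steady decrease, an almost flat profile, and non-monotonic changes) can be made operational with a simple rule applied to each dataset's errors ordered by increasing number of chromosomes. The Python sketch below is illustrative only: the tolerance `tol` and the labels are hypothetical and are not criteria used by the authors.

```python
from typing import Sequence


def classify_trend(errors: Sequence[float], tol: float = 0.01) -> str:
    """Label how a dataset's error evolves as the number of chromosomes grows.

    `errors` must be ordered by increasing number of chromosomes.
    `tol` is a hypothetical relative tolerance below which the spread of the
    errors is treated as negligible.
    """
    if len(errors) < 2:
        raise ValueError("need at least two settings to describe a trend")
    scale = max(abs(errors[0]), 1e-12)
    if max(errors) - min(errors) <= tol * scale:
        return "flat"
    # Differences between consecutive settings.
    steps = [b - a for a, b in zip(errors, errors[1:])]
    if all(s <= 0 for s in steps):
        return "decreasing"
    if all(s >= 0 for s in steps):
        return "increasing"
    return "non-monotonic"
```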

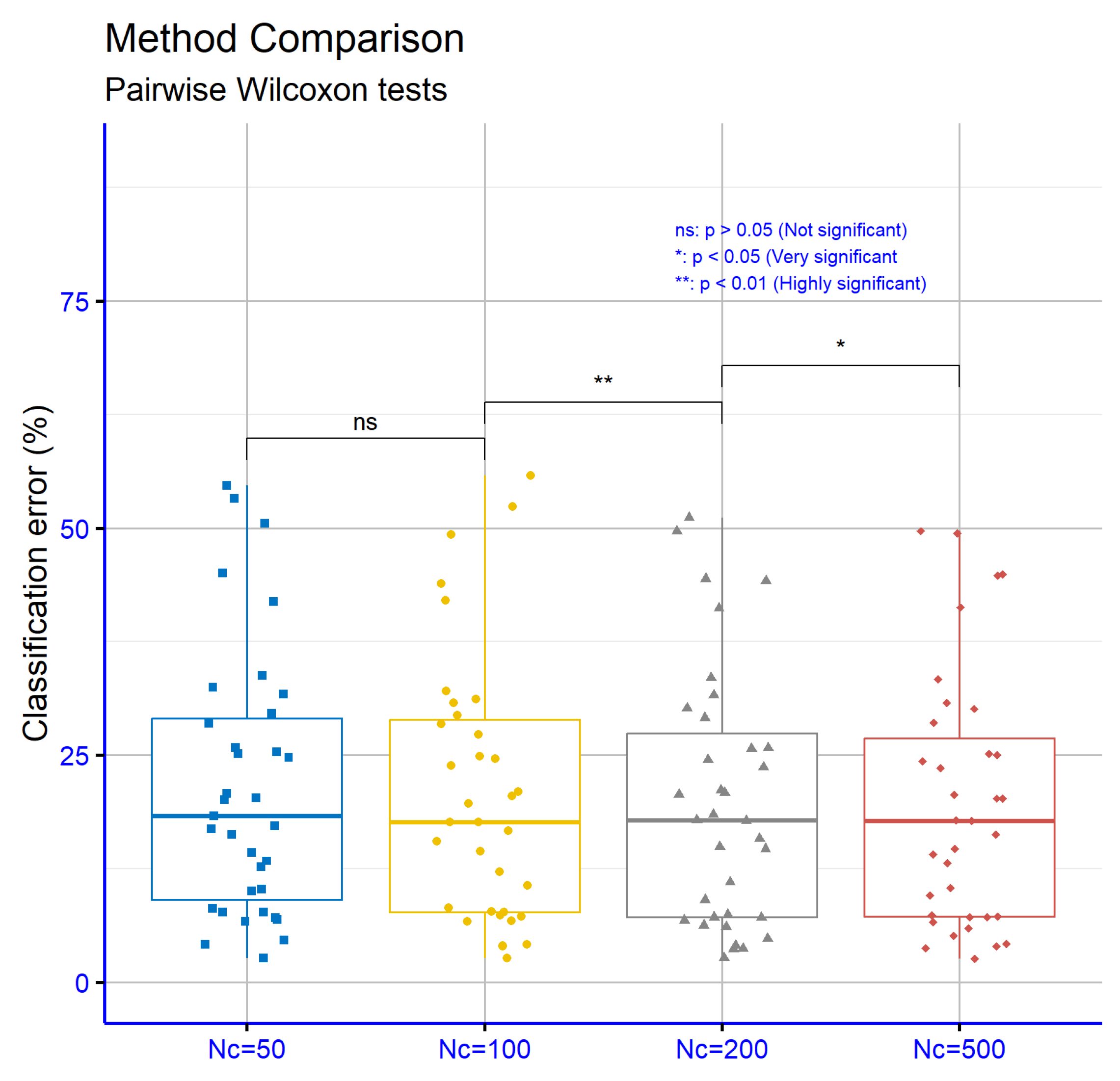

Figure 13 presents the significance levels of the pairwise comparisons of classification error rates across datasets for the proposed method under different numbers of chromosomes. The comparison between 50 and 100 chromosomes shows no significant difference (p = ns), indicating that increasing the number of chromosomes from 50 to 100 does not lead to a substantial improvement. In contrast, the next increase in the number of chromosomes yields a statistically significant improvement (p = **), while the final increase is also accompanied by a significant difference (p = *), albeit at a lower significance level. These results suggest that larger populations can improve performance, with the effect being most pronounced in the middle of the tested range.
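The star notation in the significance figures follows a common convention for reporting p-values. The Python mapping below uses the thresholds of that convention (as used, for example, by GraphPad Prism and the R package ggpubr); that the figures use exactly these cut-offs is an assumption, since this section does not state them.

```python
def significance_label(p: float) -> str:
    """Map a p-value to the star notation: ns, *, **, ***, ****.

    Cut-offs follow the common convention (p <= 0.05, 0.01, 0.001, 0.0001);
    their use in the figures is assumed, not stated in the text.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    if p <= 0.0001:
        return "****"
    if p <= 0.001:
        return "***"
    if p <= 0.01:
        return "**"
    if p <= 0.05:
        return "*"
    return "ns"
```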

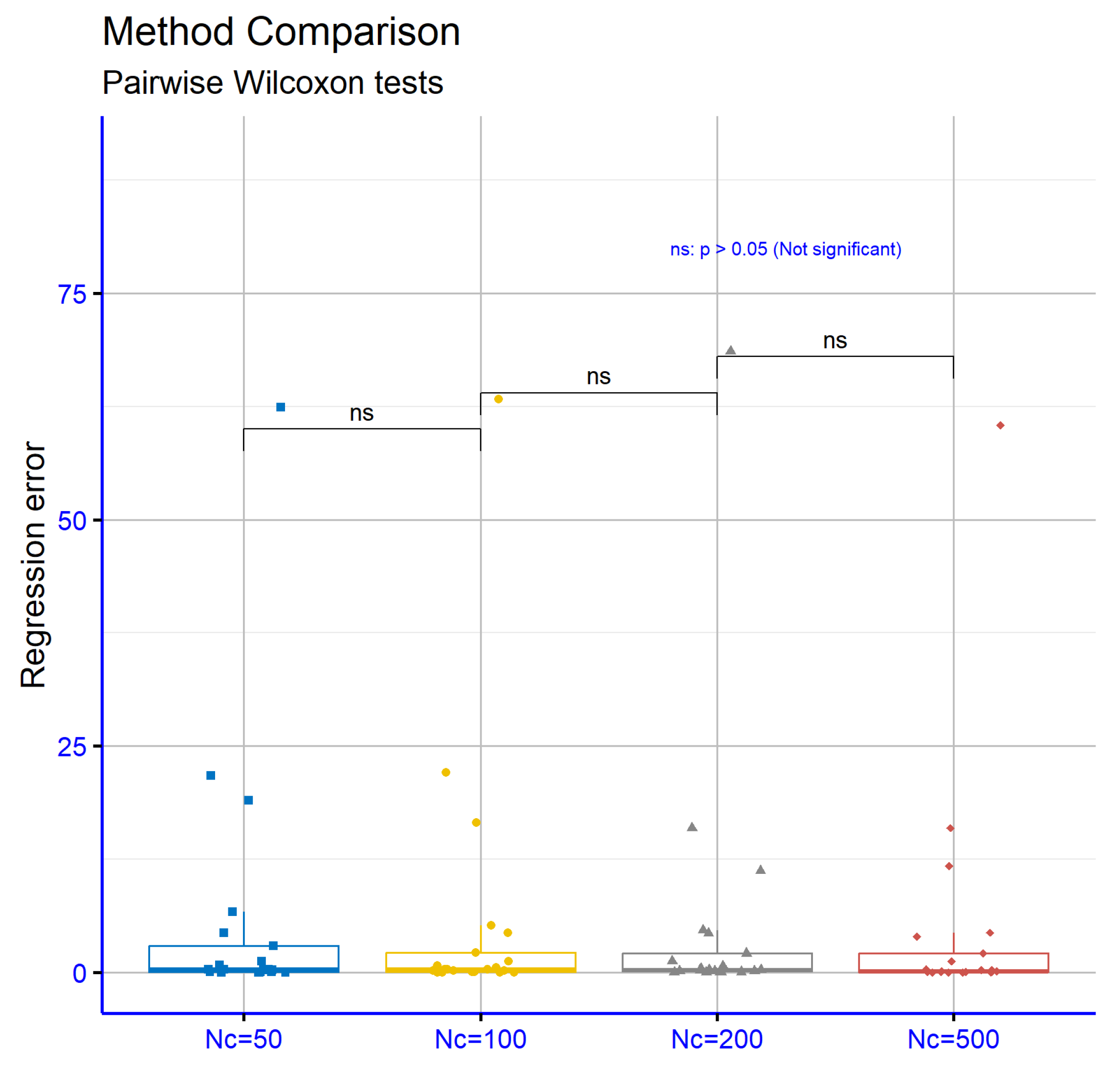

Figure 14 presents the significance levels of the pairwise comparisons of prediction errors across the regression datasets for the proposed method under different numbers of chromosomes. In all three comparisons, the p-value is non-significant (p = ns), indicating that increasing the number of chromosomes does not lead to a statistically significant change in the model's performance on these datasets.
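This section does not state which paired test produced the p-values in the significance figures. As a deliberately simple stand-in (not necessarily the authors' test; the Wilcoxon signed-rank test is a common choice for such comparisons), the exact paired sign test below compares two configurations dataset by dataset: it counts on how many datasets one configuration has the larger error and asks how unlikely that split would be if both configurations were equivalent.

```python
from math import comb
from typing import Sequence


def sign_test(a: Sequence[float], b: Sequence[float]) -> float:
    """Exact two-sided paired sign test; returns the p-value.

    `a[i]` and `b[i]` are the errors of two configurations on dataset i.
    Tied pairs are discarded, as is standard for the sign test.
    """
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    diffs = [x - y for x, y in zip(a, b) if x != y]
    n = len(diffs)
    if n == 0:
        return 1.0
    k = sum(1 for d in diffs if d > 0)  # datasets where `a` has the larger error
    tail = min(k, n - k)
    # Under H0 each non-tied pair favours either side with probability 1/2,
    # so k ~ Binomial(n, 0.5); double the smaller tail and cap at 1.
    p = 2 * sum(comb(n, i) for i in range(tail + 1)) / 2 ** n
    return min(1.0, p)
```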

3.6. Comparison with a Previous Work

Recently, an improved version of the neural network construction method was published, which suggested the periodic application of a local optimization procedure [121]. In the following tables, this method is denoted as INNC, and it is compared against the original neural network construction procedure (denoted as NNC) and the proposed method (denoted as PROPOSED).

In Table 8, the comparison of mean error rates shows that the PROPOSED method achieves the lowest average error rate, 19.63%, compared to 20.92% for INNC and 23.82% for NNC, indicating an overall improvement in performance. In several datasets, such as ALCOHOL, BALANCE, CIRCULAR, DERMATOLOGY, GLASS, SEGMENT, and ZO_NF_S, the PROPOSED method clearly outperforms the others, with substantially lower error rates than both comparison models. However, in certain cases, such as HABERMAN, HEART, HOUSEVOTES, and IONOSPHERE, the proposed method shows a slightly higher error than the better of the other two models, suggesting that its superiority is not universal. There are also instances of equivalent or marginally different results, such as LYMOGRAPHY and ZONF_S, where the three methods perform very similarly. Overall, the proposed method often achieves a substantial reduction in error, with the improvements being more pronounced in datasets with higher complexity or greater class diversity.
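Per-dataset statements such as "clearly outperforms" or "slightly higher error than the better of the other two models" amount to a win count over datasets. A minimal Python sketch of such a tally follows; the data layout and the tie tolerance `tol` are hypothetical, and the sketch is not how Table 8 was produced.

```python
from typing import Dict, Mapping


def count_wins(errors: Mapping[str, Mapping[str, float]], tol: float = 0.0) -> Dict[str, int]:
    """Count, per method, the datasets on which it attains the lowest error.

    `errors[dataset][method]` holds the error of `method` on `dataset`.
    Methods within `tol` of the best value on a dataset share the win (ties).
    """
    wins: Dict[str, int] = {}
    for per_method in errors.values():
        best = min(per_method.values())
        for method, value in per_method.items():
            wins.setdefault(method, 0)
            if value <= best + tol:
                wins[method] += 1
    return wins
```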

In Table 9, the comparison of mean errors shows that INNC has the lowest average value (4.47), followed by the PROPOSED method (4.83) and NNC (6.29); the proposed method therefore improves on NNC overall but falls slightly short of INNC. In several datasets, such as AIRFOIL, CONCRETE, LASER, PL, PLASTIC, and STOCK, the PROPOSED method achieves the lowest or highly competitive error values, showing clear improvement over NNC and, in some cases, over INNC as well. However, in certain cases, such as AUTO, BASEBALL, FRIEDMAN, LW, and SN, INNC achieves lower errors, and there are also instances, such as DEE and FY, where NNC performs better than the PROPOSED method, although the differences are small. Overall, the PROPOSED method can achieve a significant improvement in specific regression problems, but its superiority is not universal, and its performance depends on the characteristics of each dataset.

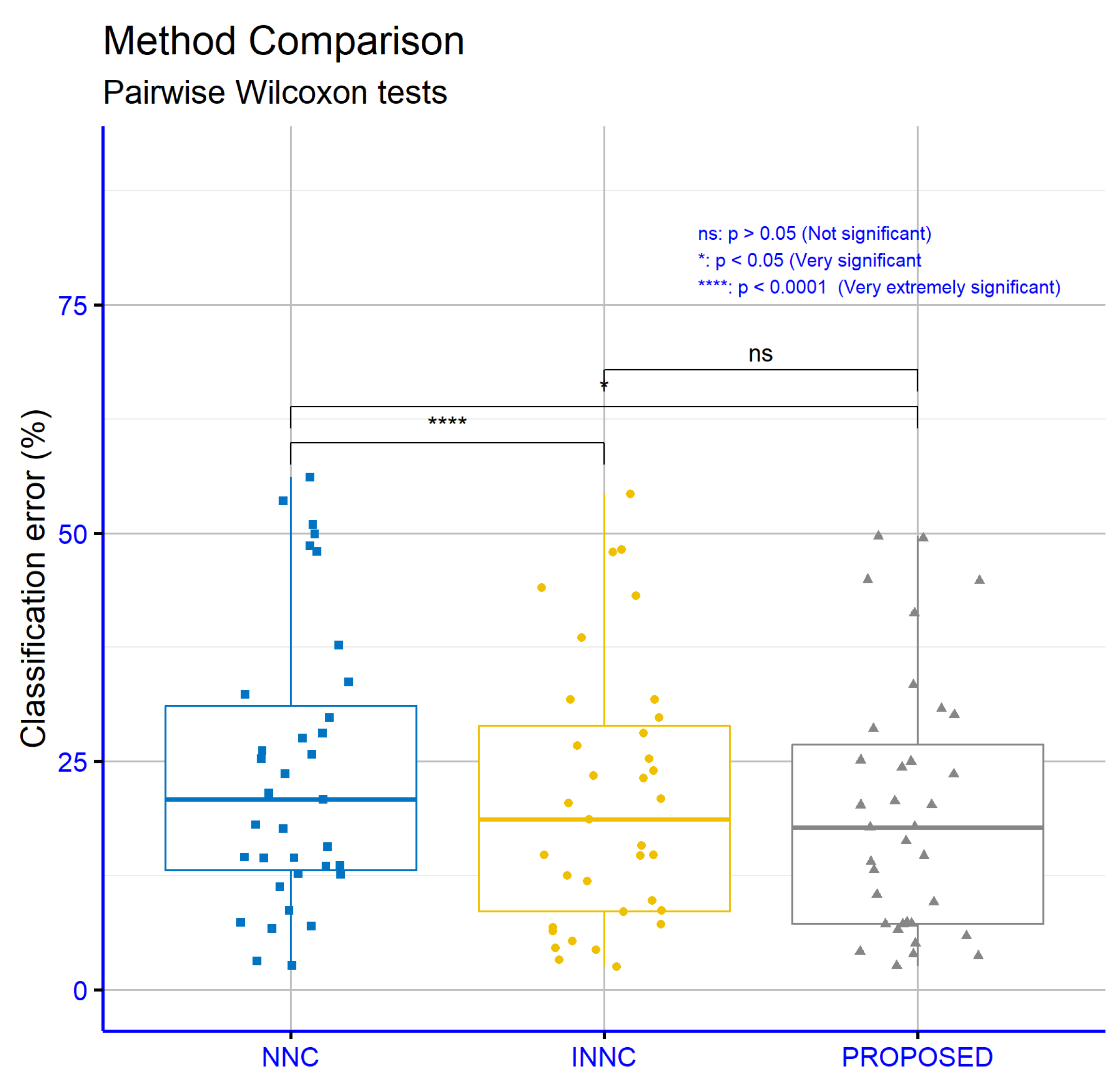

Figure 15 presents the significance levels of the comparisons of error rates on the classification datasets between the proposed method and the NNC and INNC models. The comparison between NNC and INNC shows extremely high statistical significance (p = ****), indicating a clear superiority of INNC. The comparison between NNC and the PROPOSED method also shows a statistically significant difference (p = *), indicating that the PROPOSED method outperforms NNC. In contrast, the comparison between INNC and the PROPOSED method does not show a statistically significant difference, suggesting that the two methods perform similarly on these datasets.

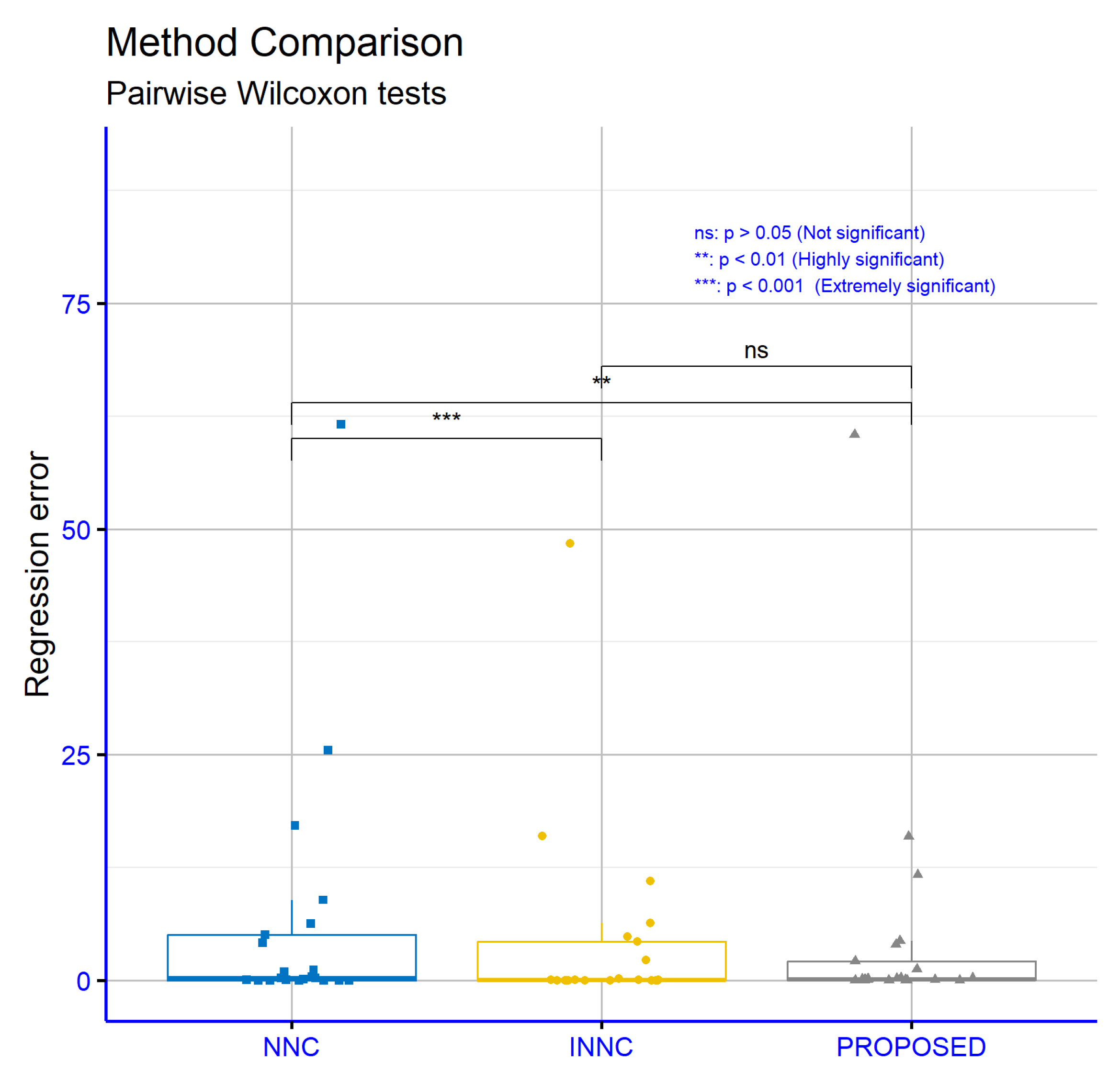

Figure 16 presents the significance levels of the comparisons of errors on the regression datasets between the proposed method and the NNC and INNC models. The comparison between NNC and INNC shows high statistical significance (p = ***), indicating a clear superiority of INNC. The comparison between NNC and the PROPOSED method also shows a statistically significant difference (p = **), confirming the improvement of the PROPOSED method over NNC. In contrast, the comparison between INNC and the PROPOSED method does not show a statistically significant difference, indicating that the two methods have comparable performance on these datasets.

It should also be noted that the INNC method requires significantly more execution time than the PROPOSED method, since it repeatedly applies the local search procedure to randomly selected chromosomes.
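The extra cost of INNC comes from interleaving the genetic search with local optimization. The Python sketch below illustrates that general pattern on a real-valued toy problem: every `ls_every` generations, a local search is applied to `ls_count` randomly chosen chromosomes. It is not the INNC implementation of [121], which works on grammar-encoded neural networks with its own local optimizer; the coordinate-descent optimizer, the genetic operators, and all parameter values are assumptions.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def local_search(f: Callable[[Vector], float], x: Vector, step: float = 0.1, iters: int = 100) -> Vector:
    """Coordinate-wise descent, used here as a stand-in local optimizer."""
    best, fbest = list(x), f(x)
    for _ in range(iters):
        improved = False
        for i in range(len(best)):
            for delta in (step, -step):
                cand = list(best)
                cand[i] += delta
                fc = f(cand)
                if fc < fbest:
                    best, fbest, improved = cand, fc, True
        if not improved:
            step /= 2  # shrink the step when no coordinate move helps
    return best


def ga_with_periodic_local_search(f: Callable[[Vector], float], dim: int, pop_size: int = 20,
                                  generations: int = 60, ls_every: int = 10, ls_count: int = 3,
                                  seed: int = 0) -> Tuple[Vector, int]:
    """Elitist GA; every `ls_every` generations, refine `ls_count` random chromosomes."""
    rng = random.Random(seed)
    pop = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(pop_size)]
    ls_calls = 0
    for gen in range(1, generations + 1):
        pop.sort(key=f)
        elite = pop[: pop_size // 2]
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            cut = rng.randrange(1, dim) if dim > 1 else 0
            child = a[:cut] + b[cut:]  # one-point crossover (new list)
            child[rng.randrange(dim)] += rng.gauss(0, 0.3)  # Gaussian mutation of one gene
            children.append(child)
        pop = elite + children
        if gen % ls_every == 0:
            for idx in rng.sample(range(pop_size), ls_count):
                pop[idx] = local_search(f, pop[idx])
                ls_calls += 1
    return min(pop, key=f), ls_calls
```

With the defaults, the run performs (60 // 10) × 3 = 18 local searches, each costing many objective evaluations on top of those of the genetic algorithm itself, which is the source of the additional running time.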

4. Conclusions

This study clearly demonstrates the importance of integrating a preliminary training phase into the grammar-based evolution framework for constructing artificial neural networks. The role of this pretraining phase extends far beyond merely initializing the solution space. It effectively enhances the quality of the initial population by transferring information from a previously trained neural network, resulting in a better-informed starting point for the evolutionary process. This enriched initialization improves convergence rates and reduces the risk of stagnation in local minima, especially in complex, non-linear, or noisy problem domains.
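The argument above rests on seeding the evolutionary search with information from a previously trained network. This section does not detail the transfer mechanism, so the Python sketch below shows only the generic idea of population seeding on real-valued weight vectors: a fraction of the initial individuals are small perturbations of a pretrained vector, and the rest are drawn at random. The proposed method operates on grammar-encoded chromosomes, so this is an analogy rather than its implementation; `seed_fraction`, `noise`, and `init_range` are hypothetical parameters.

```python
import random
from typing import List, Sequence


def seeded_population(pretrained: Sequence[float], pop_size: int, seed_fraction: float = 0.5,
                      noise: float = 0.05, init_range: float = 10.0, seed: int = 0) -> List[List[float]]:
    """Initial population in which part of the individuals are noisy copies of a pretrained vector."""
    if not 0.0 <= seed_fraction <= 1.0:
        raise ValueError("seed_fraction must lie in [0, 1]")
    rng = random.Random(seed)
    n_seeded = round(seed_fraction * pop_size)
    population = []
    # Seeded individuals: pretrained weights plus small Gaussian noise.
    for _ in range(n_seeded):
        population.append([w + rng.gauss(0.0, noise) for w in pretrained])
    # Remaining individuals: uniform random weights, preserving diversity.
    for _ in range(pop_size - n_seeded):
        population.append([rng.uniform(-init_range, init_range) for _ in pretrained])
    return population
```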

Experimental findings show that the proposed approach not only reduces classification and regression errors but also exhibits increased consistency across diverse datasets. Unlike many conventional methods that are often sensitive to the nature of the data and prone to high variability in performance, the proposed model demonstrates both robustness and generalization capability. This makes it a strong candidate for applications in high-stakes or real-time environments where model reliability is critical, such as medical diagnosis, energy forecasting, or financial decision-making.

The sensitivity analysis concerning the initialization factor ($I_w$) offers further insight into the behavior of the proposed model. Although the differences among parameter values are not statistically significant, a consistent trend toward improved accuracy with higher $I_w$ values suggests that careful tuning of the initialization can have a meaningful impact on model effectiveness. In more complex datasets, higher settings appear to support better generalization, pointing to initialization strategies as a potential lever for optimization.

Overall, the proposed system should not be viewed as a minor variation on existing grammar evolution techniques, but rather as a substantial advancement in how prior knowledge and pretraining experience can be exploited to improve and accelerate evolutionary learning. This approach merges the advantages of pretraining with the adaptability of evolutionary search, forming a solid foundation for future developments involving hybrid or meta-intelligent strategies in automated neural architecture design. Its demonstrated performance, adaptability, and potential for integration with broader machine learning paradigms mark it as a promising direction for ongoing and future exploration.

Regarding future research directions, there are several promising avenues to explore. One potential extension of the current study could involve the use of alternative pretraining techniques beyond genetic algorithms, such as particle swarm optimization or differential evolution, to assess the influence of various optimization strategies on the initial population. Additionally, it would be valuable to examine the role of the pretraining phase in relation to variables such as the number of nodes, the level of noise in the data, and feature heterogeneity.

Currently, the proposed method is applied only to artificial neural networks with a single hidden layer. However, with an appropriate modification of the BNF grammar that generates these networks, it could also be applied to deep learning models, although this would require significantly more computational resources, making parallel processing techniques a necessity.
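For readers unfamiliar with how a BNF grammar turns an integer chromosome into a network, the Python sketch below shows the standard grammatical-evolution mapping: the leftmost non-terminal is expanded with the production selected by the current codon modulo the number of available productions, and the chromosome is reused (wrapped) a limited number of times. The grammar is a hypothetical toy, far simpler than the one used in this work, and `sig` is only a placeholder name for the sigmoid function. Modifying the grammar, for example with rules for additional layers, changes the family of networks that can be produced without changing the mapping itself.

```python
from typing import Dict, List, Optional

# Hypothetical toy BNF grammar: each production is a list of symbols;
# symbols in angle brackets are non-terminals, everything else is terminal text.
GRAMMAR: Dict[str, List[List[str]]] = {
    "<net>": [["<node>"], ["<node>", "+", "<net>"]],
    "<node>": [["<w>", "*sig(", "<w>", "*x+", "<w>", ")"]],
    "<w>": [["0.5"], ["-1"], ["2"]],
}


def ge_map(chromosome: List[int], start: str = "<net>", max_wraps: int = 2) -> Optional[str]:
    """Map an integer chromosome to an expression with the GE modulo rule.

    Returns None when the codons run out after `max_wraps` wraps (invalid individual).
    As in many GE implementations, single-production rules consume no codon.
    """
    if not chromosome:
        return None
    symbols = [start]
    output: List[str] = []
    pos, wraps = 0, 0
    while symbols:
        sym = symbols.pop(0)
        if sym not in GRAMMAR:
            output.append(sym)  # terminal: copy to the output
            continue
        rules = GRAMMAR[sym]
        if len(rules) == 1:
            choice = rules[0]
        else:
            if pos == len(chromosome):  # wrap around the chromosome
                pos, wraps = 0, wraps + 1
                if wraps > max_wraps:
                    return None
            choice = rules[chromosome[pos] % len(rules)]
            pos += 1
        symbols = list(choice) + symbols  # expand the leftmost non-terminal
    return "".join(output)
```

For instance, `ge_map([0, 1, 2, 0])` yields the single-node expression `-1*sig(2*x+0.5)`.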

Finally, it is suggested that reinforcement learning techniques, or even hybrid models such as GANs and autoencoders, be incorporated into the grammar-based evolution framework. Combining the proposed pretraining phase with neural architecture search methodologies could lead to even more efficient and generalizable models. The demonstrated stability and adaptability of the proposed approach make it a strong candidate for application in demanding real-world domains such as healthcare, energy, and financial forecasting.