Abstract

Federated learning (FL) enables collaborative model training across distributed clients while preserving data privacy by sharing only local parameters. However, this decentralized setup also introduces new vulnerabilities, particularly to backdoor attacks, in which compromised clients inject poisoned data or gradients to manipulate the global model. Existing defenses rely on the global server to inspect model parameters, while mitigating backdoor effects locally remains underexplored. To address this, we propose a decoupled contrastive learning–based defense. We first train a backdoor model using poisoned data, then extract intermediate features from both the local and backdoor models, and apply a contrastive objective to reduce their similarity, encouraging the local model to focus on clean patterns and suppress backdoor behaviors. Crucially, we leverage an implicit symmetry between clean and poisoned representations, which are structurally similar but semantically different. Disrupting this symmetry helps disentangle benign and malicious components. Our approach requires no prior attack knowledge or clean validation data, making it suitable for practical FL deployments.

1. Introduction

By enabling decentralized model optimization without sharing raw data, federated learning (FL) effectively preserves the privacy of participating clients and enhances the capability for collaborative learning across devices [1]. However, this architecture also creates vulnerabilities that can be exploited by backdoor attacks, in which compromised clients introduce malicious modifications to manipulate the behavior of the global model and undermine the trustworthiness of the entire system. Existing studies indicate that backdoor attacks in FL mainly arise from two types of strategies: the first involves manipulating local training data by injecting samples embedded with specific triggers, thereby implanting hidden malicious behaviors without affecting the model’s performance on the primary task [2,3,4]; the second involves directly tampering with model updates after local training, such as forging or modifying gradients or model parameters to achieve malicious objectives [5,6,7,8]. Both strategies can stealthily affect the behavior of the aggregated global model, posing severe security threats to federated learning systems.

Existing defenses against backdoor threats in federated learning can be broadly categorized into two main approaches: one focuses on enhancing the aggregation mechanism to resist malicious updates [9,10,11], while the other aims to identify and filter out suspicious client models [12,13,14,15], primarily relying on the analysis of plaintext model parameters. These methods attempt to detect potential backdoor attacks by identifying abnormal changes in model weights or gradients. Typically, such approaches assume that the aggregation server is honest and trustworthy [16,17,18,19], or they rely on access to a large amount of clean data on the server side [13,20]. However, these assumptions pose significant risks and contradict the core principle of data localization in federated learning. Prior studies [21,22] have shown that adversaries can exploit model parameters or gradient updates to infer local data of clients, thereby compromising data privacy. Although homomorphic encryption can enhance privacy protection by concealing client data, it introduces new challenges—most notably, the server loses the ability to verify whether received model updates have been maliciously tampered with. This highlights a fundamental trade-off between user privacy and system-level security. Consequently, there is an urgent need to develop robust defense strategies tailored to the unique characteristics of federated learning environments.

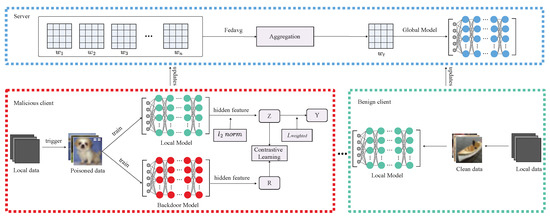

We propose a contrastive learning–based defense for federated learning that disentangles clean and backdoor feature representations. In contrast to many existing defense approaches, the proposed method operates without relying on prior knowledge of the attack or the availability of clean validation data, thereby enhancing its practicality and generalizability across diverse federated learning scenarios. Guided by symmetry theory—which suggests clean and poisoned features share structural similarities despite distinct semantics—we design contrastive objectives to break this symmetry and achieve effective separation. Potentially compromised clients are modeled with specialized backdoor detectors, while benign models use contrastive loss, sample reweighting, and the information bottleneck principle to reinforce clean feature learning. This symmetry-informed strategy improves robustness, preserves user privacy by avoiding server-side weight inspection, and provides a theoretically grounded, interpretable defense. Figure 1 illustrates the workflow. All colors are used for visual distinction only and have no practical meaning.

Figure 1.

Overview of DCL. The bottom right section (green box) represents the training process of benign clients. The red box illustrates the main steps of our local defense mechanism against backdoor attacks initiated by malicious clients, after which the malicious clients submit the locally defended updates to the global server. The blue box illustrates how the central server integrates model parameters collected from distributed clients to refresh the global model collaboratively.

Our Contributions. To summarize, our key theoretical insights and experimental findings include the following:

- We explore how backdoor-related and benign representations interact by analyzing their separability in the feature space. Building on this insight, we propose a novel defense strategy named Decoupled Contrastive Learning (DCL), specifically crafted to counter backdoor threats in federated learning systems. By designing targeted augmentation methods and crafting informative positive–negative sample pairs, DCL encourages the separation of malicious and benign representations. This method enables local models to focus on extracting clean features while minimizing the influence of backdoor signals in the learned embedding space.

- We conducted evaluations on the MNIST, Fashion-MNIST, and CIFAR-10 datasets to validate the performance of our method. Results demonstrate that it reliably suppresses the attack success rate (ASR) below 16% across both transient and sustained backdoor threat settings, all while preserving the primary task accuracy (ACC) with negligible impact.

- We develop a defense-aware adaptive attack strategy and demonstrate that DCL remains robust under such challenging conditions.

- By comparing the local training time per communication round with and without DCL, we verify that the proposed scheme enhances security without introducing significant overhead.

- Unlike many existing defenses, our method does not require prior knowledge of the attack or access to clean validation data, and it avoids inspecting client model weights on the server side, which helps better preserve user privacy, making it broadly applicable.

The organization of the remainder of this paper is as follows: Section 2 introduces the background and related works. Section 3 presents the threat model. Section 4 details the design and implementation of the proposed method. Section 5 provides the experimental setup and results. Finally, Section 6 concludes the paper and outlines future work.

2. Related Work

2.1. Backdoor Attacks

Data-Poisoning Attacks. Data-poisoning attacks are a prevalent form of backdoor threat in federated learning, where adversaries intentionally tamper with local training data to cause the global model to behave incorrectly. For example, Shejwalkar [4] introduced an attack based on flipping labels. Expanding on this, Tolpegin [3] examined how variables such as the fraction of malicious participants, the specific class changes, and the timing of their involvement influence the attack’s success. Additionally, Xie [2] broke down the trigger into several localized patterns distributed across different compromised clients, enhancing the attack’s stealthiness and making detection more challenging.

Model-Poisoning Attacks. Adversarial participants may intentionally manipulate local training to inject hidden triggers into the global model, thereby corrupting its behavior without raising suspicion. Bagdasaryan [5] introduced an approach where adversaries locally train a model with embedded backdoors that subtly align with the global model’s parameters, allowing for seamless replacement without triggering anomalies. Fang [6] constructed deceptive gradient updates that can evade existing defense mechanisms, enabling backdoor injection under the guise of legitimate learning. Bhagoji [8] utilized an alternating minimization method to stealthily optimize attack objectives while maintaining plausible update behavior. Sun [7] presented the Distance-Aware Attack, which strategically adjusts feature representations to enhance the effectiveness of targeted poisoning without raising suspicion.

2.2. Backdoor Defense

Robust Aggregation. Robust aggregation techniques are designed to reduce the influence of adversarial updates on the federated global model. Instead of relying on standard averaging, these methods apply aggregation strategies that are resilient to manipulated contributions. For instance, Yin’s $\beta$-trimmed mean [9] enhances resilience by discarding extreme values during aggregation. Pillutla [10] adopted the geometric median as a robust central tendency measure to limit the effect of poisoned updates. To defend against corrupted updates, Blanchard [11] introduced Krum, an aggregation strategy that selects a client model whose parameters exhibit minimal discrepancy from the majority, thus reducing the influence of outliers. Zhang et al. [18] designed a fine-grained removal strategy to eliminate malicious weights. Huang et al. [16] calibrated anomalous parameters using Fisher information to enhance robustness.
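To make the idea of discarding extreme values concrete, the following is a minimal sketch of a coordinate-wise trimmed mean. It is an illustration only, not the implementation from [9]; the function name `trimmed_mean` and the parameter `trim_fraction` are hypothetical, and client updates are assumed to be flat lists of floats.

```python
from typing import List, Sequence


def trimmed_mean(updates: Sequence[Sequence[float]], trim_fraction: float) -> List[float]:
    """Coordinate-wise trimmed mean over client updates (illustrative sketch).

    For every coordinate, the k smallest and k largest values are dropped,
    where k = floor(trim_fraction * number_of_clients), and the rest are averaged.
    """
    n = len(updates)
    if n == 0:
        raise ValueError("need at least one client update")
    k = int(trim_fraction * n)  # number of values dropped at each end
    if 2 * k >= n:
        raise ValueError("trim_fraction would discard every update")
    dim = len(updates[0])
    aggregated = []
    for j in range(dim):
        column = sorted(u[j] for u in updates)
        kept = column[k:n - k]
        aggregated.append(sum(kept) / len(kept))
    return aggregated


# Four similar updates and one extreme (possibly malicious) update.
client_updates = [[0.1, -0.2], [0.2, -0.1], [0.0, -0.3], [0.1, -0.2], [9.0, 9.0]]
robust = trimmed_mean(client_updates, trim_fraction=0.2)
plain = [sum(col) / len(col) for col in zip(*client_updates)]
```

In this toy example the plain average is pulled strongly toward the outlier, whereas the trimmed mean stays close to the four similar updates.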

Anomalous Model Detection. Anomalous model detection, often known as clustering-based detection, is a widely used strategy for defending against backdoor threats in federated learning. For example, Ding et al. [17] proposed suppressing backdoor behavior by adjusting the weight updates of low-activation neurons. Lin et al. [19] identified potential backdoored models by analyzing layer-wise discrepancies in a fine-grained manner. Shen [12] explored grouping the model updates into clusters based on similarity, allowing suspicious updates that deviate from the main distribution to be identified and flagged as potential threats. Fung [13] introduced FoolsGold, which mitigates adversarial influence by evaluating the consistency patterns in update behaviors across clients. Muñoz-González [14] proposed an adaptive aggregation framework that incorporates statistical consistency checks based on gradient directions to exclude suspicious updates. In a similar vein, Wang [15] designed FLARE, which inspects latent feature distributions to pinpoint and suppress potential poisoning attacks.

2.3. Contrastive Learning

Given the concealed characteristics of backdoor triggers, the model often fails to effectively separate malicious cues from benign representations, thereby increasing the likelihood of misclassification. In contrast, humans are less affected by such perturbations because they tend to focus on the direct associations of objects rather than irrelevant factors [23]. Inspired by disentangled representation learning [24,25,26], we aim to enable the model to learn representations of only the critical features while discarding backdoor perturbations, thereby enhancing the robustness of federated learning. Contrastive learning has shown substantial success in learning discriminative features by guiding the model to distinguish between semantically similar and dissimilar inputs [27,28,29]. In computer vision, approaches like SimCLR [30] and MoCo [31] generate associated input pairs by applying varying transformations to a single data point, treating them as aligned samples. In contrast, samples originating from unrelated instances are treated as divergent, helping the model build a more structured feature representation. This paradigm has proven effective in enhancing visual understanding tasks such as classification and detection. Similarly, in the NLP domain, SimCSE [32] simulates semantically connected text pairs by perturbing a sentence through strategies like token masking or reordering, which improves sentence-level embedding quality. CCL [33] effectively distinguishes causal features from spurious correlations by incorporating causality-guided contrastive learning, thereby improving the model’s generalization and robustness in complex semantic tasks. In the time-series domain, TS-TCC [34] addresses the issues of false negatives and class imbalance in time-series data by leveraging contrastive learning methods to effectively enhance the model’s representation learning capability under imbalanced and noisy conditions.

Moreover, contrastive learning has also shown distinct strengths in achieving feature disentanglement. For example, Xuan [35] introduced a disentangled contrastive learning framework to tackle the issue of class imbalance in long-tailed distributions. Their method formed positive and negative pairs in a way that guided the model to distinguish inter-class features while enhancing the representation of underrepresented classes. Inspired by this capability, we extend contrastive learning to the realm of federated learning for mitigating backdoor threats. By carefully designing positive and negative feature pairs, the proposed strategy promotes the separation of malicious features from clean representations in the latent space, thus reducing the risk of backdoor manipulation.

3. Threat Model

In this study, we consider a federated learning system consisting of 100 clients. In the single-round attack scenario, 10% of the clients are compromised as malicious participants, while in the continuous attack scenario, 40% of the clients persistently participate in multiple training rounds, injecting malicious updates. These malicious clients attempt to manipulate the global model by poisoning their local training data with backdoor triggers. Specifically, in the single-round attack, malicious clients directly replace their local updates in one training round to rapidly implant the backdoor; in the continuous attack, they continuously inject backdoor-related updates over multiple rounds to progressively strengthen the backdoor effect. Throughout the training process, the server neither accesses the clients’ raw data nor inspects the specific model parameters or gradients, relying solely on aggregating local updates to train the global model. This setup adheres to the privacy-preserving principles of federated learning while also increasing the challenges in designing effective defense strategies.
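The server-side behavior assumed in this threat model can be sketched as follows. This is a minimal illustration under stated assumptions: the server averages client updates weighted by local sample counts (the usual FedAvg rule) and never inspects individual updates. The function names, the flat-list representation of updates, and the choice of 10 sampled clients per round (taken from the experimental settings in Section 5.1) are illustrative.

```python
import random
from typing import List, Sequence


def sample_clients(num_clients: int, per_round: int, rng: random.Random) -> List[int]:
    """Pick distinct client indices for one training round."""
    return rng.sample(range(num_clients), per_round)


def fedavg(updates: Sequence[Sequence[float]], num_samples: Sequence[int]) -> List[float]:
    """Sample-count-weighted average of client updates.

    The server only combines the updates; it does not examine any single
    update for anomalies, matching the threat model described above.
    """
    if len(updates) != len(num_samples) or not updates:
        raise ValueError("updates and num_samples must be non-empty and aligned")
    total = sum(num_samples)
    dim = len(updates[0])
    return [
        sum(u[j] * n for u, n in zip(updates, num_samples)) / total
        for j in range(dim)
    ]


rng = random.Random(0)
selected = sample_clients(num_clients=100, per_round=10, rng=rng)
global_update = fedavg([[1.0, 2.0], [3.0, 4.0]], num_samples=[1, 3])
```

Because aggregation is blind to the content of each update, any backdoor mitigation in this setting has to happen before the update leaves the client.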

4. Methodology

In this section, we provide a theoretical foundation for our method and assess its effectiveness within a standard federated learning backdoor scenario. Prior studies [15] have shown that the second-to-last layer of a model captures informative, high-level representations that reflect the model’s focus. Building on this understanding, we incorporate an auxiliary network to assist local training. If the client is benign, this additional model enhances the extraction of relevant features, thereby strengthening the quality of local representations. If the client is malicious, the auxiliary model is intentionally trained as a backdoored model. Then, for the sample pairs held by the malicious client, we extract the penultimate-layer outputs of the backdoored model and the local model as R (backdoor features) and Z (clean features), respectively. During training, contrastive learning is employed to decouple R from Z, followed by a sample-reweighting strategy that guides the local model toward learning clean features, thereby mitigating the backdoor effect. Upon completion, the purified local model is used in global aggregation. The subsequent sections elaborate on each implementation step in malicious clients.

4.1. Backdoor Model

Building on previous research [36], this paper recognizes that backdoor samples are more easily learned by the model. To further reinforce the backdoor effect in the backdoor model, its training is terminated immediately after convergence on the backdoor samples, and it is switched to evaluation mode during the training of the local model. At this stage, the backdoor model has exclusively learned the backdoor features and has yet to converge on the clean samples.

4.2. Local Model

Building on the decoupling concept introduced by Huang [37], this work formulates the loss function by integrating a variational information bottleneck approach alongside sample weighting. The loss function is expressed as three parts:

Here, $I(\cdot;\cdot)$ denotes mutual information. The terms labeled ➀ and ➁ together form the information bottleneck loss. Term ➀, the mutual information between the representation Z and the input X, limits irrelevant information in the input that does not contribute to the label, helping to filter out noise from unrelated features. Term ➁, the mutual information between Z and the label Y, encourages the latent representation Z to retain the key information needed for accurate label prediction. Term ➂ measures the dependence between the backdoor feature R and the clean feature Z. Reducing the mutual information between Z and R decreases their dependency, allowing Z to focus on extracting features critical for the task. The detailed calculation process is presented below.
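As a compact reference, one way to write an objective consistent with the three terms described above is sketched below. The trade-off coefficient $\beta$ on the first term and the parameter symbol $\theta$ are notational assumptions introduced here for illustration; the signs follow the text, which minimizes terms ➀ and ➂ and maximizes term ➁.

```latex
\min_{\theta}\; \mathcal{L}(\theta)
  = \underbrace{\beta\, I(Z;X)}_{\text{term 1}}
  \;-\; \underbrace{I(Z;Y)}_{\text{term 2}}
  \;+\; \underbrace{I(Z;R)}_{\text{term 3}}
```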

Term ➀. To constrain the irrelevant and redundant information in the input that does not affect the label, thus aiding in filtering noise from unrelated features, $I(Z;X)$ can be rewritten based on the mutual information formula as follows:

In real-world federated learning settings, calculating the marginal distribution of Z is challenging. Prior studies [38] suggest approximating it with a variational distribution. The Kullback–Leibler divergence provides a metric for the difference between the true marginal and its variational approximation, calculated as follows:

Since the Kullback–Leibler divergence cannot be negative, the inequality below holds:

From the aforementioned inequality, the relationship between the true marginal and its variational approximation can be established. Consequently, an upper bound for Equation (2) is obtained.
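The chain of steps described in this derivation can be written out as follows. This is a reconstruction of the standard variational bound under assumed notation: $p(z)$ for the true marginal, $q(z)$ for the variational approximation, and sums in place of integrals; the symbols may differ from those in the original equations.

```latex
\begin{align}
I(Z;X) &= \sum_{x}\sum_{z} p(x,z)\,\log\frac{p(z\mid x)}{p(z)}, \\
\mathrm{KL}\bigl(p(z)\,\|\,q(z)\bigr)
  &= \sum_{z} p(z)\,\log\frac{p(z)}{q(z)} \;\ge\; 0
  \;\Longrightarrow\;
  \sum_{z} p(z)\log p(z) \;\ge\; \sum_{z} p(z)\log q(z), \\
I(Z;X) &\le \sum_{x} p(x)\sum_{z} p(z\mid x)\,\log\frac{p(z\mid x)}{q(z)}
  \;=\; \mathbb{E}_{x}\Bigl[\mathrm{KL}\bigl(p(z\mid x)\,\|\,q(z)\bigr)\Bigr].
\end{align}
```

The last line follows by replacing $-\sum_{z} p(z)\log p(z)$ in the first line with the larger quantity $-\sum_{z} p(z)\log q(z)$.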

Assuming the posterior $p(z \mid x)$ follows a Gaussian distribution with mean $\mu$ and diagonal covariance matrix $\Sigma$ whose diagonal elements are $\sigma_1^2$ to $\sigma_D^2$, and the prior is a standard normal distribution with mean zero and identity covariance matrix I, the KL divergence between the two distributions can be formulated as follows:

Here, $\|\mu\|_2^2$ represents the squared length of the mean vector using the $\ell_2$ norm, $\mathrm{tr}(\Sigma)$ refers to the total of all diagonal elements within the covariance matrix, and $\log\det\Sigma$ denotes the logarithm of the determinant of the covariance matrix. The symbol D corresponds to the dimensionality of the feature vector.

When the covariance matrix is set to zero, the posterior distribution turns deterministic, causing z to equal $\mu$ and yielding a fixed embedding. In this scenario, reducing the mutual information between Z and X is equivalent to applying an $\ell_2$ norm regularization on z.
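The closed-form KL divergence described above can be computed as in the following sketch. It uses the standard result for a diagonal Gaussian against a standard normal, one half of the squared norm of the mean plus the trace minus the log-determinant minus D; the function name and the list-based inputs are illustrative assumptions.

```python
import math
from typing import Sequence


def kl_diag_gaussian_to_standard_normal(mu: Sequence[float], var: Sequence[float]) -> float:
    """KL( N(mu, diag(var)) || N(0, I) ) for a diagonal covariance.

    Per dimension the contribution is 0.5 * (mu_d^2 + var_d - log(var_d) - 1),
    which sums to 0.5 * (||mu||^2 + tr(Sigma) - log det(Sigma) - D).
    """
    if len(mu) != len(var):
        raise ValueError("mu and var must have the same length")
    total = 0.0
    for m, v in zip(mu, var):
        if v <= 0.0:
            raise ValueError("variances must be strictly positive")
        total += m * m + v - math.log(v) - 1.0
    return 0.5 * total


# With unit variances only the squared norm of the mean remains (times 0.5).
kl_zero = kl_diag_gaussian_to_standard_normal([0.0, 0.0], [1.0, 1.0])
kl_shifted = kl_diag_gaussian_to_standard_normal([1.0, 2.0], [1.0, 1.0])
```

With the variances held fixed, the only part of the divergence that depends on the embedding is the squared norm of the mean, which is the link to the norm regularization mentioned in the text.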

Term ➁. The goal of maximizing $I(Z;Y)$ is to ensure that the latent representation Z effectively encodes information relevant to predicting the label Y. This helps the model focus on extracting useful, task-relevant clean features, thereby improving classification performance and defense effectiveness, while reducing the interference of backdoor features on the model’s decisions. Since calculating $I(Z;Y)$ directly is challenging, we approximate it by minimizing the cross-entropy loss. To achieve this, a sample-weighted cross-entropy loss is applied to train the local model, where features from the intermediate layers of the backdoor model are used only for weighting purposes, without backpropagation through the backdoor model. The weight calculation is expressed as follows:

For training samples, a low loss value on the backdoor model results in a weight near 0, while a high loss leads the weight to approach 1. A small constant is added to the denominator to prevent division by zero. This weighting mechanism aims to direct the local model towards emphasizing clean feature learning and diminishing the influence of backdoor feature extraction. It is important to note that the features of the auxiliary model are computed only during the forward pass and do not participate in the backward propagation.
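A weighting rule with the behavior described above can be sketched as follows. The exact formula is an assumption made for illustration: each weight is the backdoor model's per-sample loss divided by the sum of both models' per-sample losses plus a small constant, which yields weights near 0 for samples the backdoor model fits easily and near 1 otherwise. The function names and the value of `eps` are hypothetical.

```python
from typing import List, Sequence


def sample_weights(backdoor_losses: Sequence[float],
                   local_losses: Sequence[float],
                   eps: float = 1e-8) -> List[float]:
    """Per-sample weights in [0, 1) (assumed form, see lead-in).

    In an autodiff framework these weights would be treated as constants,
    so no gradient flows back into the backdoor model.
    """
    return [lb / (lb + ll + eps) for lb, ll in zip(backdoor_losses, local_losses)]


def weighted_cross_entropy(local_losses: Sequence[float], weights: Sequence[float]) -> float:
    """Mean of the local model's per-sample losses scaled by the weights."""
    return sum(w * l for w, l in zip(weights, local_losses)) / len(local_losses)


# Sample 0: the backdoor model fits it almost perfectly (likely poisoned).
# Sample 1: the backdoor model fails on it (likely clean).
backdoor_losses = [0.01, 5.0]
local_losses = [2.0, 0.05]
weights = sample_weights(backdoor_losses, local_losses)
loss = weighted_cross_entropy(local_losses, weights)
```

Under this rule the likely poisoned sample contributes almost nothing to the local model's loss, while the likely clean sample keeps nearly its full contribution.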

Term ➂. Using the connection between mutual information and entropy, $I(Z;R)$ can be represented as follows:
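For reference, the standard identities linking mutual information and entropy are given below; which of the equivalent forms the original equation used is not recoverable from the text, so both are shown.

```latex
I(Z;R) \;=\; H(Z) - H(Z \mid R) \;=\; H(Z) + H(R) - H(Z,R)
```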

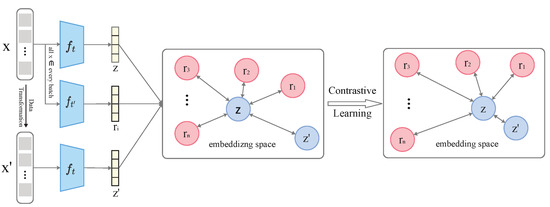

Minimizing the mutual information $I(Z;R)$ aims to separate the clean features Z from the backdoor features R, helping the model focus on extracting task-relevant clean information while suppressing interference from backdoor features, thereby enhancing the model’s defense capability against backdoor attacks. We utilize contrastive learning to improve the model’s feature space by contrasting positive and negative pairs. Each positive pair consists of z and the feature of an augmented view, obtained by applying data augmentation to the input x and passing the augmented input through the local model. Negative pairs are constructed from the local model’s feature z and the backdoor model’s feature r. Figure 2 illustrates the overall contrastive learning framework. All colors are used for visual distinction only and have no practical meaning.

Figure 2.

The framework of contrastive learning. By constructing a contrastive loss function, the distance in the feature space between the members of each positive pair, z and the feature of an augmented view, is minimized; the augmented-view feature is obtained by applying data augmentation to the input sample x and extracting the representation of the augmented sample through the local model. At the same time, the members of each negative pair, z and the backdoor feature r, are pushed apart, thereby decoupling clean features from backdoor features.

Within this framework, the local model and the backdoor model produce intermediate representations z and r, respectively. These outputs are fed into the contrastive learning component to compute the contrastive loss. The loss function employed is based on the InfoNCE formulation, defined as follows:

Here, the similarity measure between sample pairs is commonly calculated using cosine similarity; one similarity score is computed for each positive pair and one for each negative pair; the temperature parameter regulates the sharpness of the similarity distribution, while n represents the total count of samples.

It is worth noting that the InfoNCE loss serves as a lower bound on the mutual information between positive pairs [39,40]; thus, maximizing the similarity of positive pairs implicitly maximizes this mutual information. Meanwhile, by contrasting with negative samples r, the method encourages the dissimilarity between Z and R, effectively reducing the mutual information and promoting disentanglement. Although InfoNCE does not theoretically guarantee complete independence between Z and R, prior works [41] demonstrate that with sufficient negative samples and appropriate temperature settings, it effectively enforces feature separation in practice. Therefore, we consider the use of the InfoNCE-based contrastive loss as a practical and efficient approach to approximate the desired feature disentanglement in our framework.
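An InfoNCE-style loss with the pair construction described above can be sketched as follows. This is an illustrative reading rather than the paper's exact formulation: for each sample the positive is the augmented-view feature from the local model, and the negatives are assumed to be all backdoor-model features in the batch. The function names and the temperature value 0.5 are assumptions.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity with a small constant guarding against zero norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)


def info_nce(z: Sequence[Vector], z_pos: Sequence[Vector], r: Sequence[Vector],
             temperature: float = 0.5) -> float:
    """Average InfoNCE loss over n samples.

    z[i]     : local-model feature of sample i
    z_pos[i] : local-model feature of the augmented view of sample i (positive)
    r        : backdoor-model features, all used as negatives (assumption)
    """
    n = len(z)
    total = 0.0
    for i in range(n):
        pos = math.exp(cosine_similarity(z[i], z_pos[i]) / temperature)
        neg = sum(math.exp(cosine_similarity(z[i], r_j) / temperature) for r_j in r)
        total += -math.log(pos / (pos + neg))
    return total / n


z = [[1.0, 0.0], [0.0, 1.0]]
z_pos = [[0.9, 0.1], [0.1, 0.9]]   # close to z: consistent clean features
r = [[-1.0, 0.0], [0.0, -1.0]]     # far from z: decoupled backdoor features
decoupled_loss = info_nce(z, z_pos, r)
entangled_loss = info_nce(z, r, z_pos)  # roles swapped: positives far, negatives close
```

The loss is small when z agrees with its augmented view and differs from the backdoor features, and it grows when those roles are reversed, which is the behavior the objective relies on.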

Through optimizing this objective, the model increases similarity between positive pairs to strengthen the consistency of clean features and reduces similarity among negative pairs to lower the mutual information between Z and R. Finally, integrating contrastive learning with sample weighting, the loss function for the local model is formulated as follows:

The regularization coefficient controls the strength of regularization, balancing feature compression and model performance. A value that is too small weakens the defense; a value that is too large harms accuracy. We set it to 0.1 in our experiments.
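One plausible way to combine the components described in this section is sketched below. The additive structure, the subscripts, and the symbol $\beta$ for the regularization coefficient are assumptions made for illustration; only the value 0.1 is taken from the text.

```latex
\mathcal{L}_{\text{local}}
  \;=\; \mathcal{L}_{\text{wce}}
  \;+\; \mathcal{L}_{\text{InfoNCE}}
  \;+\; \beta\,\mathrm{KL}\bigl(p(z\mid x)\,\|\,q(z)\bigr),
  \qquad \beta = 0.1
```

Here $\mathcal{L}_{\text{wce}}$ stands for the sample-weighted cross-entropy loss of term ➁, $\mathcal{L}_{\text{InfoNCE}}$ for the contrastive loss used for term ➂, and the KL term for the upper bound on term ➀.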

Based on the described approach, stochastic gradient descent optimizes the loss functions for both the backdoor model and the local model. Algorithm 1 presents the entire procedure. The contrastive learning process is described in Algorithm 2.

Algorithm 1.

Decoupled contrastive learning for federated backdoor defense.

Algorithm 2.

Contrastive loss calculation.

5. Results

This section assesses the effectiveness of DCL against data-poisoning attacks. We compare DCL’s performance with seven leading defense techniques: Krum [11], RFA [10], Median [9], FLTrust [20], DnC [42], SDFC [16], and FLGuardian [43]. Additionally, we provide results for a baseline scenario without any defense mechanisms, denoted as “No Defense”. In addition to adopting the experimental configurations from [2,5], we also design experiments based on the setup used in our theoretical analysis to support its validity. Furthermore, we assess the performance of DCL under adaptive attack scenarios.

5.1. Experimental Settings

Our experiments follow the protocol outlined by Bagdasaryan et al. [5], guided by parameters informed by theoretical insights. The system consists of 100 clients, with 10 clients participating in each training round—6 are benign, and 4 are adversarial. Benign clients use a learning rate of 0.1, while malicious clients adopt 0.05. Training is performed with a batch size of 64 samples per client.

Datasets and DNNs: We assess our method using three widely recognized image classification benchmarks: MNIST [44], Fashion-MNIST (F-MNIST) [45], and CIFAR-10 [46]. An overview of these datasets is presented in Table 1. For the MNIST and Fashion-MNIST datasets, the SimpNet architecture [47] is utilized, while ResNet-34 [48] serves as the backbone model for CIFAR-10. To reflect the typical Non-IID distribution in federated learning, we generate data heterogeneity by sampling from a Dirichlet distribution [49] with a concentration parameter of 0.5 unless stated otherwise.
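A common way to generate Dirichlet-based heterogeneity is sketched below: for each class, client proportions are drawn from a Dirichlet distribution and the class's samples are split accordingly. This is an illustrative sketch, not the partitioning code used in the experiments; the function names and the fixed seed are assumptions. The Dirichlet draw is built from normalized Gamma samples using only the standard library.

```python
import random
from typing import Dict, List, Sequence


def dirichlet_sample(alpha: float, size: int, rng: random.Random) -> List[float]:
    """Draw from a symmetric Dirichlet by normalizing Gamma(alpha, 1) samples."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(size)]
    total = sum(draws)
    return [d / total for d in draws]


def dirichlet_partition(labels: Sequence[int], num_clients: int,
                        alpha: float, seed: int = 0) -> List[List[int]]:
    """Assign every sample index to exactly one client, class by class."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)

    clients: List[List[int]] = [[] for _ in range(num_clients)]
    for y in sorted(by_class):
        indices = by_class[y]
        rng.shuffle(indices)
        proportions = dirichlet_sample(alpha, num_clients, rng)
        start, cumulative = 0, 0.0
        for c in range(num_clients):
            cumulative += proportions[c]
            if c == num_clients - 1:
                end = len(indices)  # last client takes the remainder
            else:
                end = min(len(indices), int(round(cumulative * len(indices))))
            end = max(end, start)
            clients[c].extend(indices[start:end])
            start = end
    return clients


toy_labels = [i % 10 for i in range(1000)]
parts = dirichlet_partition(toy_labels, num_clients=100, alpha=0.5)
```

Smaller values of `alpha` concentrate each class on fewer clients, which produces a more strongly non-IID split.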

Table 1.

Dataset specifications.

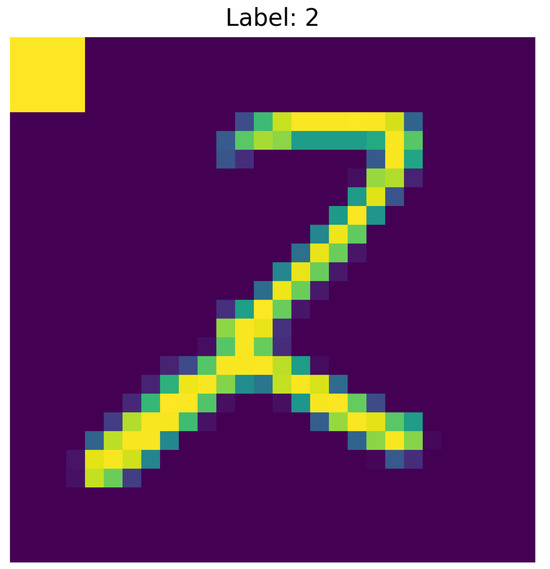

Attack Setups: We adopted the BadNets [50] attack methodology as the adversarial framework in our experiments. Model optimization was performed using stochastic gradient descent (SGD) on deliberately poisoned training datasets. The proportion of poisoned samples per training batch was strictly set to 20 out of 64 for the MNIST and Fashion-MNIST datasets, and 5 out of 64 for the CIFAR-10 dataset. During training, all backdoor attacks consistently used class 2 as the target label. To provide a more intuitive illustration of the impact of backdoor attacks and the defense effectiveness of our method, we present representative poisoned samples from the MNIST dataset. As shown in Figure 3, the vanilla model is easily influenced by the trigger (located at the top-left corner), tends to prioritize learning the backdoor features, and misclassifies the poisoned samples into the target class.
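The batch-level poisoning described above can be sketched as follows for single-channel images stored as nested lists. The patch size of 3 pixels, the pixel value 1.0, the function names, and the random choice of which samples to poison are assumptions for illustration; the counts (20 of 64) and the target label 2 follow the text.

```python
import random
from typing import List, Sequence, Tuple

Image = List[List[float]]


def add_trigger(image: Image, patch_size: int = 3, value: float = 1.0) -> Image:
    """Return a copy of the image with a square patch in the top-left corner."""
    poisoned = [row[:] for row in image]
    for i in range(min(patch_size, len(poisoned))):
        for j in range(min(patch_size, len(poisoned[i]))):
            poisoned[i][j] = value
    return poisoned


def poison_batch(images: Sequence[Image], labels: Sequence[int], num_poisoned: int,
                 target_label: int = 2, seed: int = 0) -> Tuple[List[Image], List[int], List[int]]:
    """Stamp the trigger on num_poisoned samples and relabel them to the target."""
    rng = random.Random(seed)
    chosen = set(rng.sample(range(len(images)), num_poisoned))
    out_images: List[Image] = []
    out_labels: List[int] = []
    for idx, (img, y) in enumerate(zip(images, labels)):
        if idx in chosen:
            out_images.append(add_trigger(img))
            out_labels.append(target_label)
        else:
            out_images.append([row[:] for row in img])
            out_labels.append(y)
    return out_images, out_labels, sorted(chosen)


# A batch of 64 blank 28x28 images, as for MNIST-sized inputs.
batch_images = [[[0.0] * 28 for _ in range(28)] for _ in range(64)]
batch_labels = [i % 10 for i in range(64)]
p_images, p_labels, poisoned_idx = poison_batch(batch_images, batch_labels, num_poisoned=20)
```

For three-channel inputs such as CIFAR-10 the same patch would be applied to every channel, and the count would be 5 of 64 according to the setup above.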

Figure 3.

Examples of backdoor samples in the MNIST dataset. The yellow square in the top-left corner indicates the backdoor trigger.

Evaluation Criteria: Our evaluation focuses on two key metrics: the classification accuracy (ACC), reflecting the model’s performance on the main task, and the attack success rate (ASR), measuring the effectiveness of backdoor intrusions. ASR indicates how susceptible the model is to backdoor triggers, with attackers attempting to increase it while defenders strive to reduce it. Meanwhile, ACC evaluates the model’s effectiveness on its primary task, which both attackers and defenders aim to preserve to avoid compromising normal operation.
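The two metrics can be computed as in the sketch below. The ASR definition used here is a common convention and an assumption with respect to this paper: it is the fraction of triggered samples predicted as the target class, excluding samples whose true label already equals the target. Function names are illustrative.

```python
from typing import Sequence


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of clean test samples classified correctly (ACC)."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def attack_success_rate(triggered_predictions: Sequence[int],
                        true_labels: Sequence[int],
                        target_label: int = 2) -> float:
    """Fraction of triggered samples classified as the target class (ASR).

    Samples whose true label is already the target are skipped, since
    predicting the target for them does not indicate a successful attack.
    """
    relevant = [p for p, y in zip(triggered_predictions, true_labels) if y != target_label]
    if not relevant:
        return 0.0
    return sum(p == target_label for p in relevant) / len(relevant)


acc = accuracy([1, 2, 3, 4], [1, 2, 0, 4])
asr = attack_success_rate([2, 2, 5, 2], [0, 1, 5, 2], target_label=2)
```

In the toy example three of four clean predictions are correct, and two of the three relevant triggered samples are redirected to the target class.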

5.2. Evaluation on Backdoor Mitigation

It is crucial to emphasize that backdoor attacks are executed only after the global model has reached convergence, as previous studies [2] have demonstrated that initiating poisoning from the first training round significantly hinders the model’s convergence on the primary task. In the experimental setup, two distinct attack strategies were examined: single-round and continuous attacks. In a single-round attack, the attacker participates in a single training iteration. Conversely, a continuous attack entails the attacker participating in all training rounds, which is more challenging to counteract.

Single-round attack: To ensure an unbiased comparison, we report the attack success rate and accuracy of our method and baseline approaches at identical training rounds. The single-round attack results are detailed in Table 2. As shown, without any defense, the ASR surpasses 80% on all three datasets, while ACC remains above 78%. Under single-round attacks, traditional defense techniques also achieve notable effectiveness, since model replacement leads to abnormal gradient updates that these methods can mitigate. Our proposed DCL method lowers the ASR to under 5% across all datasets, with the drop in ACC kept within 3%. Although DCL’s performance on CIFAR-10 is marginally behind some baselines, its local defense strategy offers the advantage of minimizing privacy risks.

Table 2.

Comparison of DCL and leading defenses in terms of robustness under single-round attack scenarios on the MNIST, Fashion-MNIST, and CIFAR-10 datasets with a non-IID degree of 0.5.

Continuous attack: Table 3 shows the results for continuous attack scenarios. It is clear from the table that most defense methods struggle under continuous attacks. While Krum reduces the ASR on MNIST and Fashion-MNIST to below 21%, its effectiveness on CIFAR-10 remains limited. In contrast, DCL significantly decreases the ASR to very low levels, with the corresponding drop in ACC kept within acceptable bounds. Specifically, the ASR on MNIST falls below 1%, with ACC decreasing by less than 1%. DCL reduces the attack success rate to under 16% on Fashion-MNIST and below 11% on CIFAR-10, with accuracy decreasing by less than 6%. Unlike single-round attacks, continuous attacks introduce backdoors progressively through repeated malicious updates, making them harder to detect and defend. DCL addresses this by isolating backdoor features from clean representations during training, minimizing the impact of poisoned data on local models. This enhances the global model’s resilience, making it more resistant to both one-time and ongoing attacks.

Table 3.

Comparison of DCL and leading defenses in terms of robustness under continuous attack scenarios on the MNIST, Fashion-MNIST, and CIFAR-10 datasets with a non-IID degree of 0.5.

5.3. Hidden-Feature Visualization

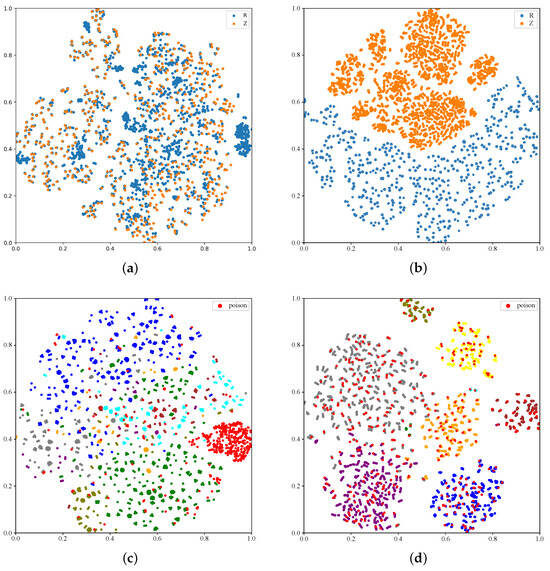

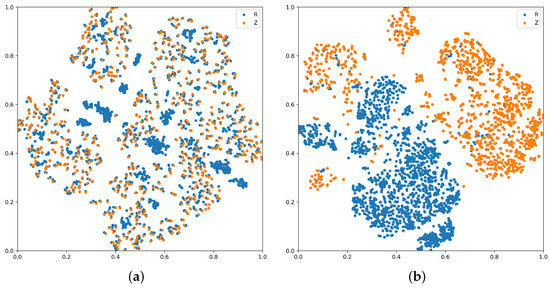

In Figure 4, we leverage t-SNE [51] to visualize the latent space, providing a comprehensive understanding of the proposed method. A data-poisoning attack is conducted on the CIFAR-10 dataset. Figure 4a shows the disentanglement capability of the latest baseline method FLGuardian, where the backdoor and clean features largely overlap, indicating poor disentanglement. In Figure 4b, the distributions of R and Z after training by our proposed method are depicted, revealing a clear separation between the two. This observation confirms the successful disentanglement of features achieved by our approach. Figure 4c,d present the t-SNE visualizations of R and Z, respectively, where samples with different labels are represented by distinct colors. Notably, backdoor samples form distinct clusters within R, indicating that the backdoor features have been effectively captured by the backdoor model. Conversely, in Z, backdoor samples are closely aligned with clean samples, demonstrating the effectiveness of DCL in mitigating backdoor attacks and improving the robustness of federated learning systems.

Figure 4.

Hidden-feature disentanglement using t-SNE: (a) FLGuardian feature space; (b) feature space after training with DCL; (c) backdoor model features; (d) clean model features.

5.4. Consequences of Different Non-IID Degrees

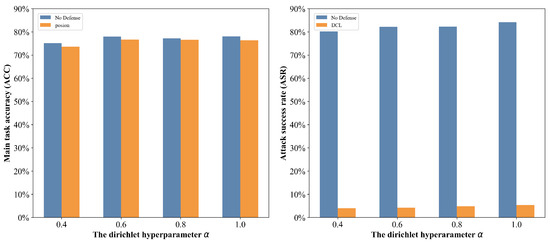

To simulate varying degrees of Non-IID data in federated learning, we employ the Dirichlet distribution and vary its concentration hyperparameter. We assess the effect of a single-round attack on the CIFAR-10 dataset under both “No Defense” and DCL defense settings. As depicted in Figure 5, without any defense, an increase in this hyperparameter corresponds to a stronger backdoor attack performance. In contrast, our DCL method consistently maintains the ASR below 6% across different Non-IID levels, while ensuring stable classification accuracy on benign data.

Figure 5.

Effect of varying Non-IID levels on DCL performance using CIFAR-10 with 10% malicious clients. Left: main task accuracy (ACC); Right: attack success rate (ASR).
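The Dirichlet-based partition used to simulate non-IID clients can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `dirichlet_partition`, `sample_dirichlet`, and `alpha` are hypothetical, and the per-class splitting scheme is one common convention. In this convention, a smaller concentration value gives each client a more skewed label distribution.

```python
import random
from collections import defaultdict


def sample_dirichlet(alpha, k, rng):
    """Draw one sample from a symmetric Dirichlet(alpha, ..., alpha) of dimension k."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    if total == 0.0:
        # Extremely small alpha can underflow; fall back to a one-hot vector.
        hot = rng.randrange(k)
        return [1.0 if i == hot else 0.0 for i in range(k)]
    return [d / total for d in draws]


def dirichlet_partition(labels, num_clients, alpha, seed=0):
    """Assign sample indices to clients, class by class.

    `alpha` is an assumed name for the Dirichlet concentration hyperparameter.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    clients = [[] for _ in range(num_clients)]
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)
        props = sample_dirichlet(alpha, num_clients, rng)
        start, cum = 0, 0.0
        for c in range(num_clients):
            cum += props[c]
            # The last client takes whatever remains so that no index is dropped.
            end = len(idxs) if c == num_clients - 1 else int(round(cum * len(idxs)))
            end = min(max(end, start), len(idxs))
            clients[c].extend(idxs[start:end])
            start = end
    return clients


# Toy labels: 1000 samples spread evenly over 10 classes (illustrative only).
toy_labels = [i % 10 for i in range(1000)]
parts = dirichlet_partition(toy_labels, num_clients=10, alpha=0.5, seed=1)
```

Each index ends up with exactly one client, so the partition can be passed directly to per-client data loaders; rerunning with a different `alpha` changes only how unevenly each class is spread.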

5.5. Results of Adaptive Attacks

To evaluate DCL’s robustness against potential adaptive attacks, we design an attack tailored to DCL and assess the defense in this scenario. The adaptive attack targets DCL’s two defense mechanisms: (1) against feature decoupling, attackers apply dynamic feature obfuscation by minimizing the Wasserstein distance (in addition to cosine similarity) between backdoor and clean features in the latent space; (2) against contrastive learning, they apply adversarial sample weighting to disguise poisoned samples as high-confidence clean samples during local training. As shown in Table 4, DCL maintains robust defense performance under continuous adaptive attacks across all datasets.

Table 4.

Adaptive attack evaluation.
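To illustrate why an attacker might target a distribution-level distance in addition to cosine similarity, the sketch below compares the two measures on toy features. It is an illustration under stated assumptions rather than the attack used in the paper: all function names are hypothetical, and the per-dimension average of 1-D Wasserstein distances is a crude proxy for the multivariate distance.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def wasserstein_1d(xs, ys):
    """W1 distance between two equal-size empirical samples.

    For equal sample counts it equals the mean gap between sorted values.
    """
    if not xs or len(xs) != len(ys):
        raise ValueError("this sketch assumes non-empty, equal-size samples")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


def mean_marginal_wasserstein(feats_a, feats_b):
    """Average the 1-D distance over feature dimensions (an assumed proxy)."""
    dims = len(feats_a[0])
    per_dim = [
        wasserstein_1d([f[d] for f in feats_a], [f[d] for f in feats_b])
        for d in range(dims)
    ]
    return sum(per_dim) / dims


# Toy 2-D features (illustrative only): the same two points in swapped order.
clean = [[1.0, 0.0], [0.0, 1.0]]
backdoor = [[0.0, 1.0], [1.0, 0.0]]

pairwise_cos = [cosine_similarity(c, b) for c, b in zip(clean, backdoor)]
gap = mean_marginal_wasserstein(clean, backdoor)
```

In this toy case the paired vectors are orthogonal while the two sets have identical per-dimension distributions, so the measures capture different notions of closeness and an attacker can optimize one without the other.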

5.6. Comparison of Computational Overhead

During the local training phase, the proposed method introduces an auxiliary model combined with a contrastive learning mechanism, which inevitably incurs some computational overhead. To evaluate this overhead, we conducted experiments on the MNIST dataset with a non-IID data partition following a Dirichlet distribution parameterized by 0.5. We compared the proposed method against the standard federated averaging algorithm (FedAvg) without defense, focusing on the local training time per communication round. As shown in Table 5, under the single-attack scenario, the average local training time increases by only approximately 7.6% compared to FedAvg. In the more challenging continuous attack scenario, the additional overhead remains at around 9%, without significant growth. These results indicate that the proposed method enhances security without introducing a noticeable computational burden. However, we also acknowledge that in large-scale deployments or resource-constrained federated learning environments, requiring each client to train both a contrastive learning model and a variational inference model simultaneously may limit the feasibility of the proposed method. In the future, the algorithm design could be further improved by sharing backdoor model parameters or caching intermediate computation results, thereby enhancing its scalability and practicality in complex real-world scenarios.

Table 5.

Computational overhead comparison under different attack scenarios on the MNIST dataset with a non-IID degree of 0.5.
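The relative overhead reported above is the increase in local training time per round divided by the FedAvg baseline time. A minimal sketch of that measurement follows; the helper names are hypothetical and the timings are invented purely to illustrate the arithmetic (they are not taken from Table 5).

```python
import time


def mean_round_time(train_one_round, rounds):
    """Average wall-clock seconds per call of `train_one_round`."""
    if rounds <= 0:
        raise ValueError("rounds must be positive")
    start = time.perf_counter()
    for _ in range(rounds):
        train_one_round()
    return (time.perf_counter() - start) / rounds


def relative_overhead(baseline_seconds, defended_seconds):
    """Fractional increase of the defended time over the baseline time."""
    if baseline_seconds <= 0:
        raise ValueError("baseline time must be positive")
    return (defended_seconds - baseline_seconds) / baseline_seconds


# Hypothetical per-round timings in seconds, for illustration only.
example = relative_overhead(baseline_seconds=2.0, defended_seconds=2.152)
print(f"relative overhead: {example:.1%}")
```

In practice, `mean_round_time` would be called once with the undefended local training routine and once with the defended one, and the two averages passed to `relative_overhead`.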

5.7. Impact of Temperature Parameter on DCL

To investigate the effect of the temperature parameter in contrastive learning, we conducted experiments on CIFAR-10 under a single-shot attack scenario with the temperature set to 0.05, 0.1, and 0.5. The results in Table 6 show that smaller temperature values sharpen the contrastive loss, enhancing feature separation between clean and backdoor samples and thus improving the defense. Larger values smooth the loss and reduce discriminability, weakening the defense. Accordingly, we select a temperature of 0.05 for subsequent experiments.

Table 6.

Comparison of defense effectiveness under different temperature parameters in a single-shot attack scenario on the CIFAR-10 dataset with a non-IID degree of 0.5.
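The sharpening effect of a small temperature can be seen in a generic InfoNCE-style loss. The sketch below is an assumption-laden illustration: it uses a standard InfoNCE form, which is not necessarily the exact objective of DCL, and the function names and similarity values are hypothetical.

```python
import math


def softmax_weights(sims, temperature):
    """Softmax over similarities scaled by 1/temperature (numerically stable)."""
    logits = [s / temperature for s in sims]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    z = sum(exps)
    return [e / z for e in exps]


def info_nce(sim_pos, sim_negs, temperature):
    """Generic InfoNCE loss for one anchor.

    Computes -log( exp(s_pos / t) / (exp(s_pos / t) + sum_j exp(s_neg_j / t)) ).
    """
    logits = [sim_pos / temperature] + [s / temperature for s in sim_negs]
    m = max(logits)
    log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denom - logits[0]


# Hypothetical cosine similarities: one positive and three negatives.
sim_pos = 0.9
sim_negs = [0.6, 0.2, -0.1]

for t in (0.05, 0.1, 0.5):
    hardest_share = softmax_weights(sim_negs, t)[0]
    print(f"t={t}: loss={info_nce(sim_pos, sim_negs, t):.4f}, "
          f"share of hardest negative={hardest_share:.3f}")
```

As the temperature decreases, the softmax concentrates on the negative most similar to the anchor, so the gradient focuses on separating the hardest pairs; this is one plausible reading of why the smallest tested temperature gave the best defense.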

5.8. Ablation Study of Contrastive Learning and Information Bottleneck

To evaluate the contributions of each component in our defense framework, we conducted an ablation study on CIFAR-10 under a single-shot attack. We compared three settings: contrastive learning only (CL-only), information bottleneck only (IB-only), and the combined method (DCL). As shown in Table 7, both CL-only and IB-only significantly reduced the attack success rate (ASR) compared to the baseline, with CL-only achieving a lower ASR of 15.86%. This is because contrastive learning explicitly disentangles features, helping to identify backdoor features even without IB. In contrast, IB alone cannot fully separate clean and backdoor features, resulting in higher ASR. The combined method further reduced ASR to 4.28% while maintaining high clean accuracy, demonstrating that CL and IB complement each other by compressing redundant information and explicitly disentangling features.

Table 7.

Ablation study of CL and IB components in the proposed defense framework.

We also compared the feature disentanglement capabilities of IB-only and CL-only in more detail. Figure 6a illustrates the disentanglement performance of IB-only, where backdoor and clean features largely overlap. This is mainly because the Information Bottleneck strategy focuses on compressing irrelevant information and extracting key predictive information, resulting in weaker disentanglement ability in the feature space. Figure 6b shows the disentanglement effect of CL-only, where clean and backdoor features are more effectively separated, though some overlap still exists. This is because contrastive learning helps improve the discriminability and separability of features by pulling together samples of the same class and pushing apart samples of different classes.

Figure 6.

(a) Feature space learned using only the information bottleneck; (b) feature space learned using only contrastive learning.

6. Conclusions

We proposed a federated backdoor defense strategy called decoupled contrastive learning (DCL). Traditional methods rely on inspecting model updates to detect backdoor attacks, which often requires access to clients’ local data or gradients and risks privacy breaches. DCL instead implements the defense locally, safeguarding data privacy while significantly lowering backdoor attack success rates. In our experiments, it outperforms existing defense techniques in both single-shot and continuous attack scenarios. Conceptually, DCL combines feature disentanglement with contrastive learning to strengthen the model’s capacity to extract clean features and suppress backdoor-related ones, thereby improving resilience against diverse attacks. The framework could also be extended to other fields, such as natural language processing, and to multimodal tasks.

The current study has limitations. It validates the proposed method primarily on two architectures, SimpNet and ResNet-34; while these models are representative to some extent, the limited coverage of model types remains a constraint. Moreover, our method is designed for static backdoor attacks, and its generalizability to dynamic and more sophisticated attacks requires further investigation. In future work, we plan to extend DCL to a wider range of mainstream and structurally diverse architectures; to reduce overhead and enhance the scalability of the method, for example by sharing the parameters of the backdoor model or caching intermediate computation results; and to strengthen its robustness against more sophisticated backdoor strategies such as dynamic attacks. We will also address technical and resource constraints, integrate advanced feature disentanglement defenses, and expand the experimental baselines. These efforts aim to improve the practicality and generalizability of our approach and to better adapt it to diverse federated learning deployment scenarios.

Author Contributions

All authors contributed to the conceptualization and methodology of the study. J.C. and T.Z. were responsible for data collection and experimental analysis; W.W. and J.W. conducted model training and results validation; J.C. and M.L. jointly drafted the initial manuscript; Y.Z. was responsible for literature review and visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Open Foundation of Key Laboratory of Cyberspace Security, Ministry of Education [KLCS20240210] and the Fundamental Research Funds for the Central Universities [3282023012, 3282025041].

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare that they have no competing interests.

References

- Konečný, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated learning: Strategies for improving communication efficiency. arXiv 2016, arXiv:1610.05492.

- Xie, C.; Huang, K.; Chen, P.Y.; Li, B. DBA: Distributed backdoor attacks against federated learning. In Proceedings of the International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

- Tolpegin, V.; Truex, S.; Gursoy, M.E.; Liu, L. Data poisoning attacks against federated learning systems. In Proceedings of the European Symposium on Research in Computer Security (ESORICS), Guildford, UK, 14–18 September 2020; Springer: Cham, Switzerland, 2020; pp. 480–501.

- Shejwalkar, V.; Houmansadr, A.; Kairouz, P.; Ramage, D. Back to the drawing board: A critical evaluation of poisoning attacks on production federated learning. In Proceedings of the 2022 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 22–26 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1354–1371.

- Bagdasaryan, E.; Veit, A.; Hua, Y.; Estrin, D.; Shmatikov, V. How to backdoor federated learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics (AISTATS 2020), Palermo, Italy, 3–5 June 2020; PMLR: Cambridge, MA, USA, 2020; pp. 2938–2948.

- Fang, M.; Cao, X.; Jia, J.; Gong, N. Local model poisoning attacks to Byzantine-robust federated learning. In Proceedings of the 29th USENIX Security Symposium (USENIX Security 20), Boston, MA, USA, 12–14 August 2020; pp. 1605–1622.

- Sun, Y.; Ochiai, H.; Sakuma, J. Semi-targeted model poisoning attack on federated learning via backward error analysis. In Proceedings of the 2022 International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 18–23 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–8.

- Bhagoji, A.N.; Chakraborty, S.; Mittal, P.; Calo, S.B. Analyzing federated learning through an adversarial lens. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; PMLR: Cambridge, MA, USA, 2019; pp. 634–643.

- Yin, D.; Chen, Y.; Kannan, R.; Bartlett, P. Byzantine-Robust Distributed Learning: Towards Optimal Statistical Rates. In Proceedings of the International Conference on Machine Learning (ICML), Stockholm, Sweden, 10–15 July 2018; PMLR: Cambridge, MA, USA, 2018; pp. 5650–5659.

- Pillutla, K.; Kakade, S.M.; Harchaoui, Z. Robust aggregation for federated learning. IEEE Trans. Signal Process. 2022, 70, 1142–1154.

- Blanchard, P.; El Mhamdi, E.M.; Guerraoui, R.; Stainer, J. Machine learning with adversaries: Byzantine tolerant gradient descent. Adv. Neural Inf. Process. Syst. 2017, 30, 118–128.

- Shen, S.; Tople, S.; Saxena, P. Auror: Defending against poisoning attacks in collaborative deep learning systems. In Proceedings of the 32nd Annual Conference on Computer Security Applications (ACSAC 2016), Los Angeles, CA, USA, 5–9 December 2016; pp. 508–519.

- Fung, C.; Yoon, C.J.M.; Beschastnikh, I. The limitations of federated learning in sybil settings. In Proceedings of the 23rd International Symposium on Research in Attacks, Intrusions and Defenses (RAID 2020), San Sebastián, Spain, 14–18 October 2020; pp. 301–316.

- Muñoz-González, L.; Co, K.T.; Lupu, E.C. Byzantine-robust federated machine learning through adaptive model averaging. arXiv 2019, arXiv:1909.05125.

- Wang, N.; Xiao, Y.; Chen, Y.; Zheng, Z.; Zhang, Q. FLARE: Defending federated learning against model poisoning attacks via latent space representations. In Proceedings of the 2022 ACM Asia Conference on Computer and Communications Security (ASIA CCS 2022), Taipei, Taiwan, 17–21 May 2022; pp. 946–958.

- Huang, W.; Ye, M.; Shi, Z.; Chen, W.; Zhang, Y. Fisher calibration for backdoor-robust heterogeneous federated learning. In Proceedings of the European Conference on Computer Vision (ECCV 2024), Zurich, Switzerland, 23–27 September 2024; Springer Nature: Cham, Switzerland, 2024; pp. 247–265.

- Ding, B.; Yang, P.; Huang, S.J. FLAIN: Mitigating backdoor attacks in federated learning via flipping weight updates of low-activation input neurons. In Proceedings of the 2025 International Conference on Multimedia Retrieval (ICMR 2025), Tokyo, Japan, 15–19 April 2025; pp. 219–227.

- Zhang, H.; Li, X.; Xu, M.; Li, J.; Wang, Y. BADFL: Backdoor attack defense in federated learning from local model perspective. IEEE Trans. Knowl. Data Eng. 2024, 36, 5661–5674.

- Lin, Y.; Liao, Y.; Wu, Z.; Chen, Y. Mitigating backdoors in federated learning with FLD. In Proceedings of the 2024 5th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Shanghai, China, 12–14 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 530–535.

- Cao, X.; Fang, M.; Liu, J.; Chen, T.; Yu, J. FLTrust: Byzantine-robust federated learning via trust bootstrapping. arXiv 2020, arXiv:2012.13995.

- Zhu, L.; Liu, Z.; Han, S. Deep leakage from gradients. Adv. Neural Inf. Process. Syst. 2019, 32, 14747–14756.

- Li, Z.; Zhang, J.; Liu, L.; Yang, Z.; Chen, H. Auditing privacy defenses in federated learning via generative gradient leakage. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2022), New Orleans, LA, USA, 18–24 June 2022; pp. 10132–10142.

- Lake, B.M.; Ullman, T.D.; Tenenbaum, J.B.; Gershman, S.J. Building machines that learn and think like people. Behav. Brain Sci. 2017, 40, e253.

- Hamaguchi, R.; Sakurada, K.; Nakamura, R. Rare event detection using disentangled representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 15–20 June 2019; pp. 9327–9335.

- Liu, D.; Cheng, P.; Zhu, H.; Zhao, J.; Liu, X. Mitigating confounding bias in recommendation via information bottleneck. In Proceedings of the 15th ACM Conference on Recommender Systems (RecSys 2021), Amsterdam, The Netherlands, 27 September–1 October 2021; pp. 351–360.

- Wang, G.; Han, H.; Shan, S.; Chen, X. Cross-domain face presentation attack detection via multi-domain disentangled representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020), Seattle, WA, USA, 13–19 June 2020; pp. 6678–6687.

- Van den Oord, A.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

- Grill, J.B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.H.; Buchatskaya, E.; Doersch, C.; Pires, B.A.; Guo, Z.; Azar, M.G.; et al. Bootstrap your own latent - A new approach to self-supervised learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21271–21284.

- Wang, D.; Ding, N.; Li, P.; Zheng, H.T. Cline: Contrastive learning with semantic negative examples for natural language understanding. arXiv 2021, arXiv:2107.00440.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning (ICML 2020), Vienna, Austria, 13–18 July 2020; PMLR: Cambridge, MA, USA, 2020; pp. 1597–1607.

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020), Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738.

- Gao, T.; Yao, X.; Chen, D. SimCSE: Simple contrastive learning of sentence embeddings. arXiv 2021, arXiv:2104.08821.

- Jiang, T. Learn from failure: Causality-guided contrastive learning for generalizable implicit hate speech detection. In Proceedings of the 31st International Conference on Computational Linguistics (COLING 2025), Tokyo, Japan, 20–26 August 2025; pp. 8858–8867.

- Jin, X.; Wang, J.; Ou, X.; Li, Y.; Chen, Z. Time-series contrastive learning against false negatives and class imbalance. IEEE Trans. Neural Netw. Learn. Syst. 2025, in press.

- Xuan, S.; Zhang, S. Decoupled contrastive learning for long-tailed recognition. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI 2024), Vancouver, BC, Canada, 2–9 February 2024; Volume 38, pp. 6396–6403.

- Li, Y.; Lyu, X.; Koren, N.; Li, Y.; Yang, J. Anti-backdoor learning: Training clean models on poisoned data. Adv. Neural Inf. Process. Syst. 2021, 34, 14900–14912.

- Huang, Z.; Lin, X.; Wang, H.; Chen, Y.; Li, M. DisenQNet: Disentangled representation learning for educational questions. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining (KDD 2021), Virtual Event, Singapore, 14–18 August 2021; pp. 696–704.

- Alemi, A.A.; Fischer, I.; Dillon, J.V.; Murphy, K. Deep variational information bottleneck. arXiv 2016, arXiv:1612.00410.

- Poole, B.; Ozair, S.; Van Den Oord, A.; Alemi, A.; Tucker, G. On variational bounds of mutual information. In Proceedings of the International Conference on Machine Learning (ICML 2019), Long Beach, CA, USA, 9–15 June 2019; PMLR: Cambridge, MA, USA, 2019; pp. 5171–5180.

- Tschannen, M.; Djolonga, J.; Rubenstein, P.K.; Hofmann, T. On mutual information maximization for representation learning. arXiv 2019, arXiv:1907.13625.

- Wang, T.; Isola, P. Understanding contrastive representation learning through alignment and uniformity on the hypersphere. In Proceedings of the International Conference on Machine Learning (ICML 2020), Vienna, Austria, 13–18 July 2020; PMLR: Cambridge, MA, USA, 2020; pp. 9929–9939.

- Shejwalkar, V.; Houmansadr, A. Manipulating the Byzantine: Optimizing model poisoning attacks and defenses for federated learning. In Proceedings of the Network and Distributed System Security Symposium (NDSS 2021), San Diego, CA, USA, 21–24 February 2021.

- Zhou, X.; Chen, X.; Liu, S.; Li, J.; Wang, Y. FLGuardian: Defending against model poisoning attacks via fine-grained detection in federated learning. IEEE Trans. Inf. Forensics Secur. 2025, in press.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A novel image dataset for benchmarking machine learning algorithms. arXiv 2017, arXiv:1708.07747.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Technical Report; University of Toronto: Toronto, ON, Canada, 2009.

- Hasanpour, S.H.; Rouhani, M.; Fayyaz, M.; Sabokrou, M.; Fathy, M. Let’s keep it simple, using simple architectures to outperform deeper and more complex architectures. arXiv 2016, arXiv:1608.06037.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2016), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Minka, T. Estimating a Dirichlet Distribution. Technical Report. 15 November 2000. Available online: https://tminka.github.io/papers/dirichlet/minka-dirichlet.pdf (accessed on 17 August 2025).

- Gu, T.; Dolan-Gavitt, B.; Garg, S. BadNets: Identifying vulnerabilities in the machine learning model supply chain. arXiv 2017, arXiv:1708.06733.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).