Abstract

Federated edge learning (FEEL) is an innovative approach that facilitates collaborative training among numerous distributed edge devices while eliminating the need to transfer sensitive information. However, the practical deployment of FEEL faces significant constraints, owing to the limited and asymmetric computational and communication resources of these devices, along with their energy availability. To this end, we propose a novel asymmetry-tolerant training approach for FEEL, enabled via simultaneous wireless information and power transfer (SWIPT). This framework leverages SWIPT to offer sustainable energy support for devices while enabling them to train models with varying intensities. Given a limited energy budget, we highlight the critical trade-off between heterogeneous local training intensities and the quality of wireless transmission, suggesting that the design of local training and wireless transmission should be closely integrated, rather than treated as separate entities. To elucidate this perspective, we rigorously derive a new explicit upper bound that captures the combined impact of local training accuracy and the mean square error of wireless aggregation on the convergence performance of FEEL. To maximize overall system performance, we formulate two key optimization problems: the first aims to maximize the energy harvesting capability among all devices, while the second addresses the joint learning–communication optimization under the optimal energy harvesting solution. Comprehensive experiments demonstrate that our proposed framework achieves significant performance improvements compared to existing baselines.

1. Introduction

The rapid development of sixth-generation (6G) wireless communication systems will strengthen the interconnection of intelligent agents, driving the shift from the “Internet of Everything” to the “Intelligent Connectivity of Everything” within the Internet of Things (IoT). Consequently, a diverse array of device terminals and data will continuously emerge, making it crucial to ensure low latency and high reliability in data processing while achieving flexible and privacy-preserving network interconnection. To confront these challenges, federated edge learning (FEEL) has emerged as a promising edge learning paradigm, owing to its strong data privacy protection and its efficient utilization of terminal computational resources. The FEEL framework allows terminal devices to train machine learning models autonomously on their local data and to transmit only the trained models to the base station (BS) over wireless networks, without revealing their private data [1,2,3]. However, as wireless resources become scarcer and the number of IoT devices grows, the learning efficiency of FEEL systems has become a prominent issue that calls for further research.

1.1. State-of-the-Art

In existing research, the primary approaches to enhancing the efficiency of the FEEL framework focus on improving local computation efficiency and wireless transmission quality. Among these, optimizing local computation methods aims to enhance resource utilization, achieving faster training speeds and a lower communication overhead. One of the seminal approaches to improving local computational efficiency in FEEL is the communication-efficient federated averaging (FedAvg) algorithm [4,5]. This algorithm enables edge devices to undertake multiple iterations of local model updates, thereby yielding a more refined gradient descent direction. Consequently, FedAvg effectively diminishes the frequency of global communication, serving as a quintessential embodiment of the “trade computation for communication” strategy. Nonetheless, this learning paradigm relies on synchronous aggregation, requiring all devices to undergo the same number of local training iterations. This “one-size-fits-all” methodology inadequately leverages the computational capabilities inherent to each device, particularly in future large-scale IoT ecosystems, where significant asymmetries in processing power, storage capacity, and energy availability among edge devices are expected [6]. Although several studies have proposed asynchronous or semi-asynchronous FEEL training frameworks, e.g., [7,8,9,10], to mitigate this limitation, these mechanisms often lead to increased communication frequency between the BS and edge devices. Concurrently, the irregular update frequency from slower devices can lead to significant staleness in their local models relative to the global model [11]. In this context, a more effective strategy involves the dynamic allocation of training intensity based on the computational capabilities of each device, e.g., employing lower training intensities for less capable devices while reserving higher intensities for those with greater capabilities. 
For instance, several studies, e.g., [12,13,14,15,16], have proposed a series of asymmetry-tolerant FEEL frameworks that dynamically assign different training intensities (e.g., the number of local updates, batch size, or network blocks) to mitigate waiting delays. Within these frameworks, local training intensity is primarily governed by the maximum permissible delay and the inherent computational capabilities of the devices, with increased local training intensity often viewed as a mechanism to accelerate convergence.

Conversely, enhancing the quality of wireless transmission primarily seeks to mitigate inherent impairments of wireless communication, including channel fading, noise interference, and multipath effects [17]. These factors may introduce substantial perturbation errors into model parameters during transmission, which can accumulate during global model aggregation and update. In response, several wireless implementation solutions have been proposed to support the FEEL framework. In existing research, the predominant communication protocols for FEEL model transmission include orthogonal multiple access (OMA) [18,19,20] and over-the-air computation (AirComp) [21,22,23]. OMA separates signals by allocating independent time or frequency resources to different devices, effectively mitigating inter-device interference and thus enhancing overall system capacity. In contrast to this resource separation strategy, AirComp exploits the superposition property of electromagnetic waves propagating through free space. This allows the BS to directly obtain an aggregate of the signals transmitted simultaneously by distributed devices, without decoding each device’s data individually before computation. For instance, the work in [21] presented an accelerated global model aggregation approach based on AirComp, formulating a sparse low-rank optimization problem to jointly design device selection and beamforming. An extension of this work, one-bit over-the-air computation [22], uses a truncated-channel-inversion power control strategy to implement a digital approach to broadband AirComp aggregation. Additionally, the research in [23] tackled the challenge of minimizing training latency and energy consumption in scenarios where devices continuously collect new data.
In this context, an average training error metric is proposed to evaluate convergence performance, along with a resource allocation strategy developed using deep reinforcement learning techniques.

1.2. Motivations and Contributions

Table 1 summarizes the research focus of several state-of-the-art works. In the existing literature, most studies treat the design of local training intensity and that of wireless transmission strategies as two separate issues. However, we recognize that there may be a trade-off between local training and wireless communication. The underlying reason is that edge devices typically have limited battery capacity (as in sensors), and this capacity must support both local training and the transmission of model updates in uncontrollable wireless environments. In such cases, optimizing computation or wireless transmission strategies in isolation often leads to a dilemma: if devices prioritize computation and allocate most of their energy to local training, they may lack sufficient residual energy for wireless communication, resulting in more severe transmission distortion. Conversely, if they excessively reduce local training intensity to ensure wireless transmission quality, they may fail to maintain adequate model update quality, preventing the system from reaching the desired global convergence accuracy. Against this backdrop, this paper makes efforts in two main areas. First, we apply simultaneous wireless information and power transfer (SWIPT) during training to provide wireless energy to energy-constrained devices, thereby alleviating inadequate training or wireless distortion caused by insufficient energy. The primary benefit of SWIPT is its ability to deliver information and wireless power concurrently [24,25,26,27]. For example, the work in [26] proposed an integration technique that combines SWIPT, single-input–multiple-output, and non-orthogonal multiple access to enhance device connectivity and power supply for cell-edge sensors, while also improving outage performance through a transmit antenna selection strategy at the base station.
In [27], the authors proposed a hybrid time-switching and power-splitting energy harvesting scheme at the relay user equipment to minimize the outage probability. These studies indicate that SWIPT is particularly well suited to services that are both energy-intensive and latency-sensitive, which motivates us to apply it to FEEL. Second, under limited energy budgets, we precisely characterize the joint impact of local training and wireless transmission on overall system performance from a system-level perspective and design a joint optimization strategy that accounts for both. The key contributions of this paper are as follows:

Table 1.

Comparisons of this work with other representative works.

- We propose a SWIPT-enabled, asymmetry-tolerant training mechanism for FEEL with two key objectives: (1) alleviating the negative impact of device asymmetry through flexible training intensity and (2) using SWIPT to mitigate insufficient training and wireless distortion arising from battery limitations, thus enabling FEEL applications on low-end devices. In this context, we point out that, when edge devices operate under limited energy budgets, a critical trade-off may exist between heterogeneous local training intensity and wireless transmission quality. This indicates that local training intensity and wireless transmission strategies should be closely integrated rather than entirely decoupled.

- To clarify this potential trade-off, we systematically derive the impact of device heterogeneous local training intensity and AirComp aggregation errors on the upper bound of FEEL convergence performance, theoretically revealing the key role of balancing training and transmission under energy-constrained conditions for system convergence efficiency. Our theoretical findings suggest that naively increasing local training intensity under limited energy budgets may inadvertently degrade final training performance. This contrasts with existing research, e.g., [5,12], which often views increasing local training intensity as an effective way to accelerate convergence without considering wireless aggregation distortion issues.

- To maximize system performance, we identify two critical optimization problems. The first is a SWIPT optimization problem that maximizes the minimum harvested power among all devices while ensuring successful decoding of the global model information. The second is a joint learning–communication optimization problem constrained by the devices’ available energy, which jointly designs the devices’ transmit equalization coefficients, the BS receive beamforming vector, and the local training intensity. To tackle the non-convexity of these problems, we propose an efficient alternating optimization (AO) strategy that combines successive convex approximation (SCA) with first-order Taylor expansion. Simulation results show that the proposed SWIPT-enabled heterogeneous FEEL training mechanism significantly enhances learning performance compared with baseline solutions.

The remainder of this paper is organized as follows. Section 2 describes the learning and communication models. Section 3 analyzes the convergence performance of the system and formulates the learning performance maximization problems. Section 4 introduces an AO algorithm that solves the SWIPT optimization problem and the joint learning–communication optimization problem. Section 5 presents the numerical experiments, and Section 6 concludes the paper.

2. System Model

In this section, we outline the underlying learning framework and communication model.

2.1. Federated Learning Framework

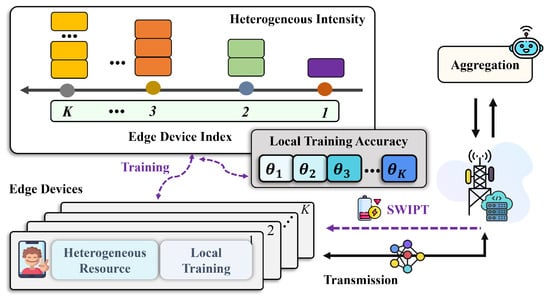

As illustrated in Figure 1, we consider a standard FEEL system that involves a collection of distributed edge devices, denoted by the index set . These devices are orchestrated via a BS to collaboratively develop a global model using their local datasets , by tackling the problem of

Here, is expressed as

where indicates the total number of samples in device k’s local dataset, and represents the prediction error of model on the input feature vector compared to its true label .

Figure 1.

Illustration of the proposed asymmetry-tolerant FEEL system with SWIPT.

In this paper, edge devices are assumed to use the stochastic average gradient (SAG) algorithm [5,6] for local training. Let the entire training process last for N iterations. Then, during the n-th iteration, the interaction between the devices and the BS proceeds as follows:

- Downlink model broadcast: Using SWIPT, the BS broadcasts feedback information to all devices, namely the global model parameters and the aggregated gradient from the previous iteration (as defined in Equations (5) and (6)). Each device, equipped with a power splitter [28], then receives the global parameters together with radio frequency energy from the BS.

- Heterogeneous local computation: Each device performs local training based on the surrogate objective function in (3). This surrogate dynamically adjusts the local optimization objective by incorporating the received global model parameters and aggregated gradient into the local loss function, thereby better aligning local training with the convergence requirements of the global optimization process [5]. Considering the limited computing capabilities of devices in practical resource-constrained FEEL environments, this paper adopts an approximate solving strategy to strike a reasonable balance between computational complexity and model training effectiveness. Specifically, each device approximately solves problem (3) and obtains a feasible solution that satisfies the approximation condition in (4), whose parameter represents the local training accuracy of device k, i.e., the degree to which the device solves the local problem (3). Intuitively, an accuracy parameter of zero means that the device solves the local problem (3) optimally; conversely, when the parameter equals one, the device skips local optimization and performs no local update. Therefore, a smaller accuracy parameter can be interpreted as device k performing a higher intensity of local training, and vice versa.

- Uplink model transmission: After completing local training, device k transmits its local model update and the corresponding local gradient to the BS over the wireless channel.

- Global model aggregation: Upon receiving the local model updates and gradients from all devices, the BS aggregates them according to Equations (5) and (6). Subsequently, the BS broadcasts the updated global model and the aggregated gradient to all edge devices. For a given small constant, convergence is declared at the n-th iteration once the global model is within this tolerance of the global optimal solution to problem (1).
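To make the role of the local training accuracy concrete, the following Python sketch runs gradient descent on a surrogate objective and stops as soon as an approximation condition is met. Because Equations (3) and (4) are not reproduced in this text, the sketch assumes a commonly used form: the surrogate `G(h) = F_k(w + h) - (grad F_k(w) - xi * grad F(w))^T h` and the stopping rule `||grad G(h)|| <= eta * ||grad G(0)||`, where `eta` plays the role of the local training accuracy. The quadratic local losses, the weight `xi`, and all function names are hypothetical and chosen only for illustration.

```python
import numpy as np

def solve_surrogate(grad_local, grad_global, w, eta, step, xi=1.0, max_iter=10_000):
    """Gradient descent on the assumed surrogate
        G(h) = F_k(w + h) - (grad F_k(w) - xi * grad F(w))^T h,
    stopped once ||grad G(h)|| <= eta * ||grad G(0)||.
    Returns the local update h and the number of iterations used."""
    shift = grad_local(w) - xi * grad_global(w)

    def grad_G(h):
        return grad_local(w + h) - shift

    h = np.zeros_like(w)
    target = eta * np.linalg.norm(grad_G(h))   # grad G(0) = xi * grad F(w)
    for t in range(max_iter):
        g = grad_G(h)
        if np.linalg.norm(g) <= target:
            return h, t
        h = h - step * g
    return h, max_iter

# Hypothetical setup: K devices with quadratic losses 0.5 v^T diag(e_k) v - b_k^T v.
rng = np.random.default_rng(0)
d, K = 5, 3
eigs = [rng.uniform(1.0, 4.0, d) for _ in range(K)]
bs = [rng.normal(size=d) for _ in range(K)]
grads = [lambda v, e=e, b=b: e * v - b for e, b in zip(eigs, bs)]

def grad_global(v):
    return sum(g(v) for g in grads) / K

L = max(e.max() for e in eigs)                 # common smoothness constant
w0 = np.zeros(d)                               # received global model
results = {eta: solve_surrogate(grads[0], grad_global, w0, eta, step=1.0 / L)
           for eta in (1.0, 0.5, 0.1, 0.01)}
for eta, (h, iters) in results.items():
    print(f"eta={eta:<5} local iterations={iters}")
```

With `eta = 1` the loop exits immediately with a zero update, mirroring a device that skips local optimization, while smaller `eta` values require more local iterations, i.e., a higher training intensity.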

The above process shows that, in each iteration, devices need to upload local updates (the local model updates and gradients) to the BS while simultaneously receiving feedback parameters (the global model parameters and the aggregated gradient) from the BS. Consequently, the proposed system requires both uplink and downlink transmissions, whose protocols are detailed in the following subsections.

2.2. Downlink Communication Model with SWIPT

During downlink SWIPT transmission, the BS simultaneously transmits the global parameters, together with wireless power, to the edge devices. Without loss of generality, we take the transmission of as an example and omit the iteration index n to illustrate the functioning of the downlink SWIPT process. In this paper, a digital transmission scheme is used to model the downlink transfer of the global parameters. Specifically, before transmitting the global model , we assume that it will be encoded onto a transmitted symbol vector , with the condition that , and that it will be transmitted within one coherence block , which is divided into D equal time slots. In every time slot, the BS is assumed to broadcast the global information using the same transmit beamforming vector , which adheres to the constraint . Here, denotes the maximum transmit power of the BS. Let represent the baseband equivalent channel coefficients from the BS to device k. Then, in each time slot, the received signal at device k can be expressed as

where denotes the additive white Gaussian noise (AWGN) present at device k, which follows the complex Gaussian distribution .

We assume that every device is equipped with a power splitter to manage the simultaneous processes of information decoding (ID) and energy harvesting (EH) from the incoming signal. In particular, a fraction of (where ) of the received power is designated for ID, while the remainder, , is allocated for EH throughout the entire downlink transmission process. Consequently, the equivalent signal for ID at device k in each time slot can be expressed as

where represents the additional ID processing noise at device k, which follows a complex Gaussian distribution . Therefore, the signal-to-noise ratio (SNR) at device k is given by

Correspondingly, the signal split for EH in each time slot is

We utilize a linear EH model to describe the power transfer for each device, with the total energy harvested by device k throughout the entire downlink SWIPT process expressed as

where represents the power conversion efficiency of EH at device k.
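The downlink SWIPT quantities can be sketched numerically as follows. Because the SNR and harvested-energy expressions are not reproduced in this text, the sketch assumes standard forms: `SNR_k = rho_k |h_k^H w|^2 / (rho_k sigma_k^2 + delta_k^2)`, with `sigma_k^2` the antenna noise power and `delta_k^2` the ID processing noise power, and a linear EH model `zeta_k (1 - rho_k) |h_k^H w|^2 T` that neglects antenna noise. Under these assumptions, the SNR increases with `rho_k` while the harvested energy decreases with it, so for a fixed beamformer the best split is the smallest `rho_k` that meets the SNR target with equality, `rho_k = gamma delta_k^2 / (|h_k^H w|^2 - gamma sigma_k^2)`. The channel, the maximum-ratio beamformer, and all numerical values are hypothetical.

```python
import numpy as np

def snr_id(rho, gain, sigma2, delta2):
    """ID-branch SNR for power-splitting ratio rho; gain = |h_k^H w|^2 (assumed model)."""
    return rho * gain / (rho * sigma2 + delta2)

def harvested_energy(rho, gain, zeta, T):
    """Linear EH model over the whole downlink block (antenna noise neglected)."""
    return zeta * (1.0 - rho) * gain * T

def min_rho(gain, gamma, sigma2, delta2):
    """Smallest rho meeting snr_id >= gamma; None if no rho in (0, 1] works."""
    if gain <= gamma * sigma2:
        return None
    rho = gamma * delta2 / (gain - gamma * sigma2)
    return rho if rho <= 1.0 else None

rng = np.random.default_rng(1)
n_t, p_bs = 4, 1.0                          # BS antennas, BS power budget (hypothetical)
sigma2, delta2 = 1e-3, 1e-2                 # antenna / ID processing noise powers
gamma, zeta, T = 10.0, 0.6, 1e-2            # SNR target (10 dB), EH efficiency, block length [s]
h = (rng.normal(size=n_t) + 1j * rng.normal(size=n_t)) / np.sqrt(2)
w = np.sqrt(p_bs) * h / np.linalg.norm(h)   # MRT beamformer with ||w||^2 = p_bs
gain = abs(np.vdot(h, w)) ** 2              # np.vdot conjugates h, giving |h^H w|^2
rho_star = min_rho(gain, gamma, sigma2, delta2)
print(rho_star, snr_id(rho_star, gain, sigma2, delta2),
      harvested_energy(rho_star, gain, zeta, T))
```

In the multi-device setting the beamformer is shared, but for any fixed beamformer the same closed form gives each device its own minimal splitting ratio.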

2.3. Uplink AirComp Communication Model

To further enhance transmission efficiency, this paper employs the AirComp technique for simultaneous model transmission and effective aggregation. Specifically, in each communication round, devices leverage the channel superposition property to simultaneously transmit local updates (including local model updates and gradients ) over shared time-frequency resources. This approach eliminates the need for the BS to decode information from each device individually. Here, we will detail the working mechanism of the AirComp technique using the transmission of local model updates as an example. For brevity, the communication round index n will be omitted from the following explanations. Assuming that the local model update of device k is defined as the set , this set will be converted into a signal vector, , which is then allocated across D time slots for transmission. In the context of AirComp, the local model update of each edge device is initially preprocessed using a normalization function [29,30]. Specifically, each edge device first calculates the statistical properties of its local model update , including the means and variances, namely

Subsequently, the aforementioned statistical information will be transmitted to the BS in an error-free manner. Upon receiving the local statistical information from all devices, the BS will directly compute the global statistics by averaging the received values, i.e.,

These global statistics are then broadcast back to all devices for normalizing the local model updates. Let the normalized sequence be represented as , where each element can be calculated as

Through the above normalization process, each model update element of device k is transformed into a zero-mean, unit-variance entry , which satisfies and . Then, each element of the signal vector is further computed as

Here, represents the transmit equalization coefficient used to mitigate wireless fading. As a result, the expected power of the transmitted signal is given by . To comply with practical communication requirements, we assume that each device must adhere to specific transmit power limits, namely

where represents the maximum available transmit power for each device.

Let denote the channel coefficients from device k to the BS. To simplify the analysis, we assume that the channel state information between the devices and the BS is known a priori, which can be achieved through existing channel estimation techniques [31,32]. Furthermore, all channels are modeled as quasi-static flat fading, i.e., they remain unchanged throughout the entire training period. (Although all channels in this paper are modeled as quasi-static flat fading, our theoretical framework and methodology extend readily to time-varying channels, whose conditions may change in every learning iteration. The most direct approach in that case is to recompute the solution opportunistically before each iteration begins, as in [30,33].) By using AirComp, the signal received by the BS during time slot d can be given by

where represents the AWGN, with its components following a complex Gaussian distribution . To facilitate further processing, the signal received by the BS is passed through a linear decoder to extract the normalized sum of model updates from the edge devices, denoted as . The resulting estimate at the BS, , is

where denotes the receiver beamforming vector at the BS. To quantify the degree of difference between and its estimate , the AirComp system defines the mean squared error (MSE) as the evaluation metric, which is expressed as

Referring to [34], the computation rate of AirComp, denoted as , can be expressed as

Here, represents the uplink communication bandwidth, and can be interpreted as the power of the estimated signal at the BS. Subsequently, to recover the aggregated result of the local model updates from the edge devices, the BS can compute the global model update parameters through the following denormalization operation [33], as

Referring to [33], the expected estimation error during the global model update recovery process satisfies , and its MSE is upper-bounded by

where is the upper bound of the global statistic [33], i.e., it satisfies . This assumption is justified because the statistic reflects the finite variance of the model parameters.
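The normalization, over-the-air superposition, and denormalization steps of this subsection can be traced end to end with the Python sketch below. It assumes single-antenna devices, an `n_r`-antenna BS, transmit signals `b_k x_k`, a linear receiver `m`, and the per-symbol MSE `sum_k |m^H h_k b_k - 1|^2 + sigma^2 ||m||^2`, which holds when the normalized symbols of different devices are independent with zero mean and unit variance; the paper's own MSE expression and denormalization rule may include additional scaling that is not reproduced here. The receiver `m` is a simple heuristic rather than an optimized design, the channel-inversion coefficients ignore the power limit for clarity, and all sizes and values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
K, n_r, D = 4, 8, 20_000        # devices, BS antennas, entries per update (hypothetical)
sigma2 = 0.05                   # BS noise power per antenna (hypothetical)

def crandn(*shape):
    """i.i.d. CN(0, 1) samples."""
    return (rng.normal(size=shape) + 1j * rng.normal(size=shape)) / np.sqrt(2)

def aircomp_mse(a, b, m, sigma2):
    """sum_k |a_k b_k - 1|^2 + sigma2 ||m||^2 with a_k = m^H h_k; valid for
    independent, zero-mean, unit-variance symbols across devices."""
    return np.sum(np.abs(a * b - 1.0) ** 2) + sigma2 * np.linalg.norm(m) ** 2

# 1) Normalization with global statistics averaged from the local ones.
theta = np.stack([rng.normal(0.1 * k, 1.0 + 0.2 * k, size=D) for k in range(K)])
mu = theta.mean(axis=1).mean()            # global mean = average of local means
var = theta.var(axis=1).mean()            # global variance = average of local variances
x = (theta - mu) / np.sqrt(var)           # normalized entries, shape (K, D)

# 2) Over-the-air superposition: y_d = sum_k h_k b_k x_{k,d} + n_d, estimate m^H y_d.
H = crandn(n_r, K)                        # column k is h_k
m = H.sum(axis=1) / np.linalg.norm(H.sum(axis=1))   # simple, non-optimized receiver
a = m.conj() @ H                          # a_k = m^H h_k
b = 1.0 / a                               # channel inversion (power limit ignored)
y = H @ (b[:, None] * x) + np.sqrt(sigma2) * crandn(n_r, D)
s_hat = m.conj() @ y                      # estimate of sum_k x_{k,d}

# 3) Denormalization back to the average of the raw local updates.
avg_true = theta.mean(axis=0)
avg_hat = np.sqrt(var) * s_hat.real / K + mu
recovery_mse = np.mean((avg_hat - avg_true) ** 2)

# 4) Monte Carlo check of the MSE expression with i.i.d. unit-variance symbols.
b_mis = 0.8 / a                           # deliberately misaligned coefficients
z = rng.standard_normal((K, D))
err = m.conj() @ (H @ (b_mis[:, None] * z) + np.sqrt(sigma2) * crandn(n_r, D)) - z.sum(axis=0)
empirical = np.mean(np.abs(err) ** 2)
formula = aircomp_mse(a, b_mis, m, sigma2)
print("recovery MSE:", recovery_mse, "| empirical MSE:", empirical, "| formula:", formula)
```

Without noise, the denormalized estimate reproduces the average of the raw local updates exactly, because the global mean and standard deviation cancel; the residual error comes only from the noise term, scaled by the global standard deviation divided by K.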

2.4. Delay and Energy Model

AirComp imposes strict synchronization requirements: all edge devices must complete their local training and transmit their intermediate model updates simultaneously. Let denote the number of local iterations required for device k to attain the desired training accuracy , and, given that all samples have a uniform size (in bits), the local training delay of device k can be formulated as

where , and indicates the CPU frequency utilized by device k for its computational tasks. Additionally, indicates the number of CPU cycles that device k needs to handle each individual sample, which can be estimated offline and is known in advance. To ensure timely aggregation, we set a maximum training delay threshold in this paper, which satisfies

In a typical FEEL system, edge devices often have a limited amount of available battery energy, which must simultaneously fulfill the demands of local training and wireless transmission. Hence, we will further analyze the total energy consumption of the device during both the local training and wireless transmission processes. Specifically, the energy consumption of the device during the local training phase is given by

Here, represents the capacitance coefficient of the device’s computing chip, which reflects the power efficiency of its computations. According to the wireless communication model in Section 2.3, the energy consumption of device k during the uplink transmission phase is given by [35], where S denotes the amount of local update data to be uploaded by the device. Let represent the inherent energy budget of device k. With SWIPT, the overall energy budget constraint for device k is therefore expressed as

The aforementioned energy constraints highlight the dynamic trade-off between local computation and wireless transmission. On one hand, excessive intensity in local training can deplete a significant portion of the device’s limited energy resources, potentially resulting in insufficient energy availability for wireless transmission. This situation may compel the device to lower its transmission power, consequently increasing the distortion of the transmitted model. On the other hand, if more energy is allocated to prioritize wireless transmission, it may require a reduction in the intensity of local model training, which could adversely affect the quality of the local model updates. Therefore, it is crucial to accurately characterize the interplay between local training intensity and wireless distortion, along with their combined effects on the convergence performance of the FEEL system.
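The energy trade-off described above can be illustrated with the following sketch, which computes the largest number of local iterations a device can afford once its uplink energy is reserved. It assumes the widely used models `I * C * D / f` for the training delay (iterations, CPU cycles per sample, number of samples, CPU frequency), `kappa * I * C * D * f^2` for the computation energy, and `p * S / R` for the uplink energy with a fixed rate `R`; these forms, the parameter values, and the helper names are illustrative assumptions rather than the paper's exact expressions.

```python
import math

def comp_delay(iters, cycles, n_samples, f_cpu):
    """Local training delay: iterations x cycles/sample x samples / CPU frequency."""
    return iters * cycles * n_samples / f_cpu

def comp_energy(iters, kappa, cycles, n_samples, f_cpu):
    """Dynamic CPU energy: kappa x (total cycles) x f^2."""
    return kappa * iters * cycles * n_samples * f_cpu ** 2

def comm_energy(p_tx, payload_bits, rate_bps):
    """Uplink energy: transmit power x airtime (payload / rate)."""
    return p_tx * payload_bits / rate_bps

def max_local_iters(e_budget, p_tx, payload_bits, rate_bps, kappa, cycles,
                    n_samples, f_cpu, t_max):
    """Largest iteration count meeting the energy budget (after reserving the
    uplink energy) and the delay threshold t_max."""
    left = e_budget - comm_energy(p_tx, payload_bits, rate_bps)
    if left <= 0:
        return 0
    by_energy = math.floor(left / comp_energy(1, kappa, cycles, n_samples, f_cpu))
    by_delay = math.floor(t_max / comp_delay(1, cycles, n_samples, f_cpu))
    return max(0, min(by_energy, by_delay))

# Hypothetical device parameters.
params = dict(payload_bits=1e5, rate_bps=1e6, kappa=1e-28, cycles=1e4,
              n_samples=500, f_cpu=1e9, t_max=2.0)
e_budget = 0.15 + 0.05     # inherent battery energy + harvested energy [J]
for p_tx in (0.1, 0.5, 1.0):
    print(f"p_tx={p_tx} W -> affordable local iterations: "
          f"{max_local_iters(e_budget, p_tx, **params)}")
```

Raising the transmit power lowers the number of affordable local iterations, which is the tension between local training intensity and transmission quality discussed above; harvested energy enlarges `e_budget` and thus relaxes both sides.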

3. Convergence Analysis and Problem Formulation

In this section, we rigorously examine the joint impact of AirComp aggregation errors and local training intensity on the convergence performance of the FEEL system.

3.1. Basic Assumptions and Preliminaries

To facilitate this discussion, we first establish the following fundamental assumptions regarding the loss function [36,37], thereby laying the groundwork for the subsequent theoretical analysis of convergence.

Assumption 1.

The local loss function of edge device k, , is L-smooth and β-strongly convex. Specifically, for any model parameters , the following inequalities hold:

Recalling the definition of the surrogate objective function in (3), we can conclude from the above assumption that it is also β-strongly convex and L-smooth. This is because its second derivative (i.e., its Hessian matrix) coincides with that of the local loss function, so its curvature properties remain unchanged during optimization. Leveraging these properties, we use gradient descent to solve the problem in Equation (3). The update formula is given by

where denotes the local model update at the current gradient iteration t, and is the corresponding learning rate. According to [38], when gradient descent with a suitable step size is applied to a smooth and strongly convex objective, the generated sequence converges linearly, which can be expressed as

where represents the optimal solution to the local problem (3), and c and are intermediate constants. Based on the above assumption and the linear convergence property of gradient descent, we can derive the minimum number of local training iterations that device k requires to solve problem (3) under the approximation condition in (4), as stated in the following lemma.

Lemma 1.

Based on Assumption 1 and the linear convergence property, and assuming that gradient descent is initialized at , the minimum number of local training iterations required for device k to solve problem (3) under the approximation condition in (4) is given by [5]

where the constant , and represents the condition number of the Hessian matrix of .
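The dependence of the required local iterations on the condition number and the target accuracy can be checked on a quadratic. For an L-smooth, β-strongly convex quadratic, gradient descent with step size 1/L contracts every gradient component by a factor of at most `1 - 1/kappa` per step, with `kappa = L / beta`, so `t >= log(1/eta) / (-log(1 - 1/kappa))` iterations suffice for `||grad f(x_t)|| <= eta * ||grad f(x_0)||`, and this count never exceeds `ceil(kappa * log(1/eta))`. This is a generic textbook bound used for illustration, not the exact constant of Lemma 1; the diagonal Hessian and the helper names are hypothetical.

```python
import math
import numpy as np

def iters_bound(kappa, eta):
    """Sufficient GD iteration count (step 1/L) so that (1 - 1/kappa)^t <= eta."""
    if eta >= 1.0:
        return 0
    if kappa <= 1.0:
        return 1          # all curvatures equal L: one step of size 1/L is exact
    return math.ceil(math.log(1.0 / eta) / -math.log(1.0 - 1.0 / kappa))

def gd_iters(eigs, g0, eta, max_iter=100_000):
    """Actual GD iterations (step 1/L) on a quadratic with Hessian diag(eigs)
    until ||grad_t|| <= eta * ||grad_0||; each step maps g -> (1 - eigs / L) * g."""
    L = eigs.max()
    g = g0.copy()
    target = eta * np.linalg.norm(g0)
    for t in range(max_iter):
        if np.linalg.norm(g) <= target:
            return t
        g = (1.0 - eigs / L) * g
    return max_iter

rng = np.random.default_rng(3)
eigs = np.linspace(1.0, 20.0, 10)     # beta = 1, L = 20, so kappa = 20 (hypothetical)
kappa = eigs.max() / eigs.min()
g0 = rng.normal(size=eigs.size)
for eta in (0.5, 0.1, 0.01, 0.001):
    print(eta, gd_iters(eigs, g0, eta), iters_bound(kappa, eta),
          math.ceil(kappa * math.log(1 / eta)))
```

The bound grows logarithmically as the accuracy parameter shrinks and linearly with the condition number, which is why a small accuracy parameter translates into a heavy local training load on poorly conditioned devices.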

3.2. Convergence Analysis

Building on the previous analysis, we now further explore the impact of heterogeneous local training and wireless transmission errors on the convergence performance of our system to reveal the key role of the trade-off between the two in optimizing overall performance.

Theorem 1.

Under Assumption 1, and accounting for both the transmission errors during wireless aggregation and the heterogeneous training intensity of the devices, the convergence performance of the FEEL system is upper-bounded as

where Φ represents the system convergence factor, given by

The term Δ is an additional error that is jointly determined by the model aggregation error and the convergence factor Φ. Its specific form can be expressed as

Proof of Theorem 1.

See Appendix A. □

Remark 1.

From Theorem 1, both wireless transmission errors and the heterogeneous training intensity of the devices play a significant role in the overall convergence performance of the FEEL system. Specifically, the global convergence upper bound can be decomposed into two main components: the exponential decay term associated with the initial model loss, given by , and the steady-state error term, which is influenced by the transmission errors and the convergence factor Φ. As the number of global training rounds N increases, and provided that the convergence factor Φ satisfies , the first exponential decay term approaches zero. However, the second steady-state error term does not diminish as N increases; instead, it converges to a non-zero value known as the convergence error, which can be expressed as . By further expanding this term, we can rewrite the convergence error as

The form of the convergence error shows that increasing the convergence factor Φ or decreasing the model aggregation error effectively reduces the convergence error. However, in practical FEEL systems, energy budget constraints may introduce a trade-off between these two quantities. Specifically, the magnitude of Φ is directly related to the local optimization accuracy of the devices, whereas the aggregation error is mainly determined by transmission errors during model aggregation. A monotonicity analysis shows that increasing Φ generally requires decreasing the local accuracy parameter, which means that devices must intensify their local training. While this strategy increases Φ and accelerates convergence, it also significantly raises the devices’ training energy consumption. This, in turn, compresses the energy available for wireless transmission and increases the aggregation error. Conversely, prioritizing a smaller aggregation error may, under a limited energy budget, require reducing the local training intensity, which increases the local accuracy parameter and consequently decreases Φ.
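Because the expressions of Theorem 1 are not reproduced in this text, the following sketch uses a generic recursion with the same structure, `a_{n+1} = (1 - Phi) * a_n + Delta` with `0 < Phi <= 1`, as a hypothetical stand-in for the bound. Unrolling it gives `(1 - Phi)^N * a_0 + Delta * (1 - (1 - Phi)^N) / Phi`: the first term decays exponentially in N, whereas the second approaches the floor `Delta / Phi`, which shrinks when Phi grows or Delta falls, in line with Remark 1.

```python
def unrolled(a0, phi, delta, n_rounds):
    """Iterate a_{n+1} = (1 - phi) * a_n + delta (the generic bound taken with equality)."""
    a = a0
    for _ in range(n_rounds):
        a = (1.0 - phi) * a + delta
    return a

def closed_form(a0, phi, delta, n_rounds):
    """(1 - phi)^N * a0 + delta * (1 - (1 - phi)^N) / phi."""
    r = (1.0 - phi) ** n_rounds
    return r * a0 + delta * (1.0 - r) / phi

def error_floor(phi, delta):
    """Limit as N -> infinity for 0 < phi <= 1 (hypothetical generic form)."""
    return delta / phi

for phi, delta in [(0.05, 1e-3), (0.10, 1e-3), (0.10, 5e-3)]:
    print(f"Phi={phi}, Delta={delta}: after 500 rounds {unrolled(1.0, phi, delta, 500):.5f}, "
          f"floor {error_floor(phi, delta):.5f}")
```

The sketch makes the trade-off explicit: an energy allocation that raises Phi but inflates Delta by a larger relative factor ends up with a higher error floor.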

Here, we illustrate the trade-off using a FEEL system with two edge devices. Assume that Device A and Device B each have an energy budget of 100 units. Device A allocates 90 units of energy to local training, e.g., by increasing the number of training iterations. During model transmission, however, to keep its communication energy within the remaining 10 units, Device A may be forced to reduce its transmit power substantially; for instance, with a maximum available power of 1 W, it might transmit at only 0.1 W to meet the energy constraint. This can cause substantial model aggregation errors and severely degrade the final learning performance. Conversely, Device B allocates only 10 units of energy to local training and reserves the remaining 90 units for high-precision transmission. Device B can thus fully utilize its power resources for model transmission, yielding a small model aggregation error. However, with only 10 units for local training, Device B may perform very few local gradient updates, and the resulting poor model quality ultimately diminishes the final performance. Therefore, in complex network environments characterized by high heterogeneity and energy constraints, optimizing either the convergence factor Φ or the aggregation error in isolation is unlikely to improve system performance comprehensively.

3.3. Problem Formulation

To tackle the challenges outlined above, our design is twofold. First, we utilize SWIPT to increase the energy available to devices, thereby enlarging the configuration space for local computation and wireless transmission strategies. To this end, we formulate a max–min optimization problem that maximizes the minimum harvested power among all devices while ensuring that every device can successfully decode the global model information. This can be expressed as follows:

Here, constraint S1 specifies the minimum SNR requirement for each device to successfully decode the global parameter, while constraint S2 specifies the maximum transmit power of the BS. The second design is a joint optimization strategy that balances local training and wireless transmission within the available energy, thereby achieving efficient coordination between computation and communication. Our core objective is to minimize the convergence error by jointly optimizing the transmit equalization coefficient , the receive beamforming , and the local training accuracy within the feasible space of wireless transmission strategies and local training intensity. To accomplish this, we formulate the joint optimization problem as

where constraint T1 limits the transmission power of the device so that it does not exceed the predetermined threshold . Additionally, constraints T2 and T3 specify that the total energy consumption and computation delay for device k in a single round must remain within its maximum available energy and maximum tolerable delay , respectively. Finally, constraint T4 restricts the range of values for the local optimization precision and stipulates that the convergence factor must satisfy to ensure that the system can achieve stable convergence.

Problems () and () may appear succinct and intuitive in their formulation. However, the intricate coupling among their optimization variables makes direct optimization challenging. To address this issue, we propose an alternating optimization framework for both problems that decomposes each of them into several tractable subproblems. By fixing some variables while optimizing the others, we update the variables alternately so that the objective value improves at every step and the procedure converges to a locally optimal solution, as elaborated in the subsequent section.
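The mechanism behind this strategy can be seen on a toy problem. The sketch below is not the paper's problem: it applies alternating minimization to the hypothetical objective f(x, y) = (xy − 1)² + λ(x² + y²), where each block update is an exact closed-form minimizer (x = y/(y² + λ) with y fixed, and symmetrically for y). Because no step can increase f and f is bounded below by zero, the recorded objective values converge, mirroring the argument used for Lemma 2.

```python
def objective(x, y, lam):
    # Toy biconvex objective: convex in x for fixed y and vice versa.
    return (x * y - 1.0) ** 2 + lam * (x * x + y * y)


def alternating_minimization(x0, y0, lam=0.1, tol=1e-10, max_iter=100):
    x, y = x0, y0
    history = [objective(x, y, lam)]
    for _ in range(max_iter):
        # Block 1: exact minimizer over x with y fixed,
        # from d/dx = 2y(xy - 1) + 2*lam*x = 0.
        x = y / (y * y + lam)
        # Block 2: exact minimizer over y with x fixed (same form by symmetry).
        y = x / (x * x + lam)
        history.append(objective(x, y, lam))
        # Stop once an iteration no longer improves the objective noticeably.
        if history[-2] - history[-1] < tol:
            break
    return x, y, history


x, y, history = alternating_minimization(2.0, 0.1)
print(f"x = {x:.4f}, y = {y:.4f}, f = {history[-1]:.4f}")
```

Starting from (2, 0.1), the iterates settle at x = y ≈ 0.9487 (that is, √0.9) with objective value 0.19. Starting from y = 0 instead, they stop at the stationary point (0, 0) with objective value 1, which is why alternating updates guarantee convergence of the objective but not global optimality in general.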

4. Alternating Optimization

In this section, we present an efficient method for breaking down the complex problems () and () into a series of manageable subproblems utilizing the AO method.

4.1. Max–Min Optimization of SWIPT

For the max–min problem in SWIPT, by introducing the auxiliary variable , the original problem can be equivalently rewritten as

Next, we employ the AO method to tackle the previously mentioned non-convex problem by iteratively refining the variables , , and until convergence is achieved. The iterative optimization process for these variables can be categorized into the following two cases.

4.1.1. Given , Optimizing ()

When fixing , the optimization problem () simplifies to

Through appropriate mathematical transformations, problem () can be restructured into

The primary challenge in addressing the above problem lies in the non-convex constraints (42c) and (42d). To overcome this difficulty, we utilize the SCA method to iteratively update by solving a series of approximated convex problems. Specifically, for both non-convex constraints, we construct convex approximations using the first-order Taylor expansion of . Let represent the current beamforming vector at iteration u. Then, we have

This yields a reconstruction problem with linearized constraints, as

At this stage, the relaxed problem () is a convex optimization problem that can be solved efficiently, in polynomial time, using standard convex optimization solvers such as CVX [39]. By iteratively updating through the solution of the reformulated problem (), we obtain a local maximum of the original problem () once the convergence threshold is reached. Furthermore, the final solution satisfies the Karush–Kuhn–Tucker conditions of problem ().
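The bound behind this SCA step is the first-order condition of convexity. Assuming, for illustration, that the non-convex constraints involve quadratic forms of the type |hᴴw|² ≥ t (a common structure in SWIPT beamforming; the exact constraints (42c) and (42d) are not reproduced here), the linearization around the current point w⁽ᵘ⁾ is a global lower bound that is tight at w⁽ᵘ⁾. Replacing the quadratic form with this affine surrogate therefore shrinks the feasible set, so every SCA iterate remains feasible for the original problem. The Python sketch below checks both properties numerically with random complex vectors; `h`, `w_u`, and the helper names are hypothetical.

```python
import random


def inner(h, w):
    # h^H w for complex vectors stored as Python lists.
    return sum(hi.conjugate() * wi for hi, wi in zip(h, w))


def quad(h, w):
    # The convex quadratic form f(w) = |h^H w|^2.
    return abs(inner(h, w)) ** 2


def taylor_surrogate(h, w, w_u):
    # First-order expansion of f at w_u:
    #   f(w) >= 2 Re{(h^H w_u)^* (h^H w)} - |h^H w_u|^2,
    # which is affine in w and tight at w = w_u.
    a_u = inner(h, w_u)
    return 2.0 * (a_u.conjugate() * inner(h, w)).real - abs(a_u) ** 2


def random_vector(n, rng):
    return [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n)]


rng = random.Random(0)
h = random_vector(4, rng)      # hypothetical channel vector
w_u = random_vector(4, rng)    # hypothetical beamformer at SCA iteration u
# The surrogate never exceeds f, so the linearized constraint is conservative...
assert all(taylor_surrogate(h, w, w_u) <= quad(h, w) + 1e-9
           for w in (random_vector(4, rng) for _ in range(1000)))
# ...and it is exact at the expansion point.
assert abs(taylor_surrogate(h, w_u, w_u) - quad(h, w_u)) < 1e-9
```

The gap between the two sides equals |hᴴw − hᴴw⁽ᵘ⁾|², which is why the bound holds everywhere and vanishes only where the linear terms agree.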

4.1.2. Given (), Optimizing

With fixed (), problem () simplifies to a feasibility-check problem with respect to , which does not readily produce an optimal solution. However, we observe that both the SNR constraint and the energy harvesting constraint for each device depend exclusively on its own , without any cross-user dependencies. Therefore, when the beamforming vector is fixed, each device can independently optimize its own to maximize the energy harvested. At this juncture, we can formulate the following optimization problem for device k, as

Let . The SNR constraint from Equation (38b) can then be expressed as

Maximizing is equivalent to minimizing while ensuring the SNR constraint is satisfied. Thus, we arrive at the following formulation:

In summary, the complete algorithm for the alternating optimization for the SWIPT max–min problem is presented in Algorithm 1.

| Algorithm 1 Alternating optimization for the SWIPT max–min problem |

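The per-device power-splitting subproblem in Section 4.1.2 admits a closed form under a commonly used receiver model, which we assume here for illustration (the paper's Eq. (38b) is not reproduced). In this model, a fraction ρ of the received power P_r is routed to information decoding, the SNR is ρP_r/(ρσ_a² + σ_c²) with antenna noise σ_a² and conversion noise σ_c², and the harvested power is η(1 − ρ)P_r. Because the SNR increases with ρ while the harvested power decreases, the best choice is the smallest ρ that meets the SNR target γ, namely ρ* = γσ_c²/(P_r − γσ_a²). The names `p_rx`, `gamma`, `sigma_a2`, `sigma_c2`, and `eta` and all numbers are hypothetical.

```python
def optimal_power_splitting(p_rx, gamma, sigma_a2, sigma_c2, eta=0.5):
    """Smallest feasible information-decoding fraction rho and harvested power.

    Assumed power-splitting receiver model (illustrative, not Eq. (38b)):
      SNR(rho)  = rho * p_rx / (rho * sigma_a2 + sigma_c2)
      P_EH(rho) = eta * (1 - rho) * p_rx
    SNR(rho) increases with rho and P_EH(rho) decreases with it, so the
    optimum is the rho that meets SNR(rho) = gamma with equality.
    """
    denom = p_rx - gamma * sigma_a2
    if denom <= 0:
        raise ValueError("SNR target unreachable: received power too low")
    rho = gamma * sigma_c2 / denom
    if rho > 1.0:
        raise ValueError("SNR target unreachable even with rho = 1")
    return rho, eta * (1.0 - rho) * p_rx


# Hypothetical numbers: 1 mW received power and a linear SNR target of 10.
rho, p_eh = optimal_power_splitting(p_rx=1e-3, gamma=10.0,
                                    sigma_a2=1e-6, sigma_c2=1e-5)
print(f"rho = {rho:.4f}, harvested power = {p_eh * 1e3:.4f} mW")
```

Since each device's subproblem depends only on its own received power once the beamformer is fixed, this rule can be evaluated independently and in parallel for all devices.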

4.2. Joint Learning–Communication Optimization

After solving the SWIPT max–min optimization problem, we denote the optimal total energy harvested by device k as . The next step is to minimize the convergence error while adhering to the available energy constraint of device k, which is given by . This is achieved by jointly optimizing the transmit equalization coefficient , the receiver beamforming , and the local training accuracy . Given the coupling between the computational and communication optimization variables at this stage, this paper proposes an alternating optimization framework, which is divided into two main phases: a wireless transmission design and local training intensity optimization.

4.2.1. Wireless Transmission Design

With a fixed local training accuracy, , our goal is to minimize the AirComp MSE by adjusting the wireless transmission strategy, which includes the transmit equalization coefficient and the receive beamforming . This problem is formulated as

Problem () still involves two interdependent optimization variables, namely the transmit equalization coefficient and the receive beamforming . Therefore, we again adopt an alternating approach that optimizes each variable in turn.

Given transmit equalization coefficient , the optimization problem regarding the receive beamforming vector can be formulated as

To efficiently solve the above optimization problem, we define the following auxiliary variable as . Through appropriate mathematical transformations, the problem can be reformulated as

with

where

Here, . At this point, the objective function of problem () is a standard quadratic function and its constraints form a convex set, so the problem can be solved efficiently with CVX.
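Because the objective is quadratic in the receive beamformer once the transmit coefficients are fixed, its unconstrained minimizer follows from the normal equations. The sketch below assumes the widely used AirComp MSE model MSE(m) = Σ_k |mᴴh_k b_k − 1|² + σ²‖m‖² with unit-power symbols (the exact objective and constraints of problem () are not reproduced here), for which the minimizer is m* = (Σ_k g_k g_kᴴ + σ²I)⁻¹ Σ_k g_k with g_k = h_k b_k. With the additional constraints of problem (), a solver such as CVX is still required; the NumPy code only illustrates the quadratic structure, and all names and values are hypothetical.

```python
import numpy as np


def aircomp_mse(m, G, noise_var):
    # Column k of G is the effective channel g_k = h_k * b_k of device k.
    # Per-device error m^H g_k - 1 measures deviation from unit-gain summation.
    err = m.conj() @ G - 1.0
    return float(np.sum(np.abs(err) ** 2) + noise_var * np.linalg.norm(m) ** 2)


def mmse_receive_beamformer(G, noise_var):
    # Setting the gradient of the quadratic MSE to zero gives the normal
    # equations (G G^H + noise_var * I) m = sum_k g_k.
    n_antennas = G.shape[0]
    A = G @ G.conj().T + noise_var * np.eye(n_antennas)
    return np.linalg.solve(A, G.sum(axis=1))


# Hypothetical setup: 4 BS antennas, 6 devices, fixed real transmit coefficients.
rng = np.random.default_rng(0)
N, K, noise_var = 4, 6, 0.1
H = (rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))) / np.sqrt(2)
b = rng.uniform(0.5, 1.5, size=K)
G = H * b  # broadcasting scales column k by b_k
m_star = mmse_receive_beamformer(G, noise_var)
print(f"MSE at m*: {aircomp_mse(m_star, G, noise_var):.4f}")
```

Any perturbation d increases the MSE by dᴴ(GGᴴ + σ²I)d > 0, which is the strict convexity that makes this subproblem easy for a generic convex solver.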

With the optimal receiver beamforming , we need to solve the following simplified problem:

Due to the presence of the non-convex constraint (39c), the problem () poses significant challenges. To effectively mitigate the complexity of the solution process, we next introduce an auxiliary variable . Consequently, the problem () can be equivalently reformulated in the following manner:

where we define .

Next, we gradually adjust the values of and through alternating iterations to approximate the optimal solution of problem (). Given a specific value of , we can apply appropriate mathematical transformations to simplify problem () to the following form:

where

with . Now, problem () has been transformed into a standard convex optimization problem (). This new formulation can be efficiently solved using CVX.

After determining , the auxiliary variable can be straightforwardly updated to . We now present the following lemma to demonstrate that, by alternately optimizing and , one can gradually approach the optimal solution of problem ().

Lemma 2.

By alternately updating and μ, a convergent solution can eventually be obtained.

Proof.

Assume that, in the (t−1)-th alternating iteration, the solution for and is denoted by , with the corresponding objective function value , where we define . According to the update rule, the auxiliary variable satisfies . In the subsequent t-th iteration, if problem has a feasible solution at , then the new solution satisfies either or . If , the current solution coincides with the previous solution , indicating that the alternating updates have reached a fixed point. Conversely, if , the current solution improves on the previous solution , further reducing the objective function value, i.e., . Thus, the alternating updates of and ensure that is non-increasing over the iterations. Furthermore, since the objective function is non-negative and hence bounded below, its value cannot decrease indefinitely. A non-increasing sequence that is bounded below converges; therefore, the alternating updates of and yield a convergent solution, which is generally locally optimal and may be globally optimal. □

4.2.2. Local Training Intensity Determination

Given the transmit equalization factor and the receiver beamforming , the problem of optimizing the local accuracy vector can be simplified to

Due to the involvement of multiple summation terms, squared terms, and complex parameter dependencies in the objective function , directly optimizing this function poses significant challenges. Additionally, the nonlinear nature of the constraint further complicates the problem, making it difficult to simultaneously satisfy both the objective function and the constraint. To address these issues, we propose a simplified strategy based on parameter tuning. By appropriately setting the value ranges of key hyperparameters, the constraints concerning can be naturally satisfied during the optimization process, thus avoiding the complexities associated with directly solving the original problem. Next, we will focus on ensuring that remains within the interval by suitably adjusting the value range for the hyperparameter , as illustrated in the following lemma.

Lemma 3.

With Assumption 1, if we set

the convergence condition is always satisfied.

Proof of Lemma 3.

See Appendix B. □

Next, from constraint (39c) and constraint (39d), we can derive

It should be noted that the local accuracy of each device is completely decoupled, meaning that each device can independently choose the optimal based on its own computational resources and energy constraints, without relying on the choices of other devices. By analyzing the form of , it can be observed that, within the range , monotonically increases as decreases. In other words, the smaller the value of , the larger the value of , which improves system performance. Therefore, when selecting , each device chooses the minimum value that satisfies the lower bound of the constraint, i.e.,

Accordingly, we can summarize the basic process of the joint learning–communication optimization, as outlined in Algorithm 2.

| Algorithm 2 Joint Learning–Communication Optimization |

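The decoupled per-device rule for the local accuracy can be sketched under an explicit cost model. The code below assumes, as a hypothetical stand-in for constraints (39c) and (39d), a computation model in the spirit of [5]: reaching local accuracy θ takes v·ln(1/θ) local iterations, each costing (κ/2)·c·D·f² joules and c·D/f seconds on a device with D samples, c CPU cycles per sample, and CPU frequency f. The tighter of the energy and delay budgets caps the number of iterations, and the smallest feasible θ follows directly. The parameter `energy_avail` stands for the energy left for computation after communication; all names and values are illustrative.

```python
import math


def select_local_accuracy(v, cycles_per_sample, n_samples, cpu_freq,
                          kappa, energy_avail, delay_max):
    """Smallest local accuracy theta that fits the energy and delay budgets.

    Hypothetical cost model (assumed, in the spirit of [5]):
      local iterations  I(theta) = v * ln(1 / theta)
      energy/iteration  e = (kappa / 2) * cycles_per_sample * n_samples * f^2
      delay/iteration   t = cycles_per_sample * n_samples / f
    """
    cycles = cycles_per_sample * n_samples
    e_iter = 0.5 * kappa * cycles * cpu_freq ** 2
    t_iter = cycles / cpu_freq
    # Largest number of iterations allowed by each budget; the tighter one binds.
    max_iters = min(energy_avail / e_iter, delay_max / t_iter)
    if max_iters <= 0:
        raise ValueError("no local computation fits the budgets")
    # Smaller theta means a more accurate local solution, so pick the smallest
    # theta with v * ln(1 / theta) <= max_iters.
    return math.exp(-max_iters / v)


theta = select_local_accuracy(v=10.0, cycles_per_sample=2e4, n_samples=600,
                              cpu_freq=2e9, kappa=1e-28,
                              energy_avail=0.05, delay_max=0.1)
print(f"theta = {theta:.3f}")
```

With these placeholder numbers the result is about 0.189, and the delay budget is the binding one (about 16.7 iterations versus 20.8 allowed by the energy budget). Relaxing the binding budget lowers θ, i.e., allows more intensive local training.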

4.3. Computational Complexity

Based on the previous analysis, both problems () and () can be progressively optimized using an AO algorithm, with their optimization processes summarized in Algorithm 1 and Algorithm 2, respectively.

For Algorithm 1, the computational complexity is dominated by the repeated solution of subproblem (). Solving this convex problem via SCA has a complexity of , where denotes the number of SCA iterations. After alternating iterations, the overall worst-case complexity of Algorithm 1 can be expressed as . Here, we neglect the complexity of the assignment operation in Equation (47).

For Algorithm 2, its computational complexity mainly arises from the stepwise solution of the subproblems () and (). According to [34], the worst-case computational complexity for solving the subproblem () using the interior point method is . Similarly, the worst-case complexity for solving the subproblem () is , where is the number of alternating iterations required when solving for . In contrast, the computational complexity associated with the assignment operations in solving the subproblem () is relatively low and can be ignored. In summary, assuming Algorithm 2 converges within iterations, the final overall worst-case complexity can be expressed as .

5. Numerical Results

In this section, we present numerical experiments to validate the effectiveness of the proposed system design.

5.1. Simulation Setup

We create a three-dimensional simulation environment where the BS is positioned at coordinates (0, 0, 0), and edge devices are uniformly and randomly positioned along half-circles with a radius of around the BS. The channels involved in the system, specifically and , are modeled as Rician fading channels [40]. The path loss, which depends on distance, is expressed as , where denotes the reference path loss at a distance m, d represents the link distance, and is the path loss exponent. Unless stated otherwise, the following parameter values are used: dBm, dBm, dBm, dB, , , mHz, s, J, , 1 GHz 3 GHz, , and .
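The channel model described above can be sketched as follows. The code assumes the standard Rician form h = √PL(d)·(√(K/(K+1))·h_LoS + √(1/(K+1))·h_NLoS) with PL(d) = C₀(d/d₀)^(−α); the values of C₀, α, the Rician factor K, the array geometry, and the angle of arrival are placeholders, not the simulation settings listed above, and the LoS component assumes a half-wavelength uniform linear array.

```python
import cmath
import math
import random


def path_loss(d, c0_db=-30.0, d0=1.0, alpha=2.5):
    # Distance-dependent path loss C0 * (d / d0)^(-alpha) in linear scale.
    # c0_db and alpha are placeholder values, not the paper's settings.
    return 10.0 ** (c0_db / 10.0) * (d / d0) ** (-alpha)


def rician_channel(n_antennas, d, k_factor, rng, aoa=math.pi / 6, alpha=2.5):
    pl = path_loss(d, alpha=alpha)
    los_w = math.sqrt(k_factor / (k_factor + 1.0))
    nlos_w = math.sqrt(1.0 / (k_factor + 1.0))
    h = []
    for n in range(n_antennas):
        # Deterministic LoS part: half-wavelength ULA steering phase.
        los = cmath.exp(-1j * math.pi * n * math.sin(aoa))
        # Random NLoS part: CN(0, 1) Rayleigh component.
        nlos = complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)
        h.append(math.sqrt(pl) * (los_w * los + nlos_w * nlos))
    return h


rng = random.Random(0)
h = rician_channel(n_antennas=4, d=50.0, k_factor=3.0, rng=rng)
```

The LoS and NLoS weights are chosen so that the average per-antenna channel power equals PL(d) for any Rician factor, which keeps the large-scale and small-scale effects separate.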

Our approach is evaluated on image classification tasks using the well-known MNIST dataset [41] and the Fashion-MNIST dataset [42]. For both datasets, we employ a multinomial logistic regression model with a dimensionality of , paired with a cross-entropy loss function [5]. The local training data of each edge device are sampled independently and identically from the training set, with each device receiving 600 training samples. For both datasets, we use the standard test set of 10,000 images. The experiments are implemented in PyTorch 1.12.1 on a Legion Y9000P IAH7H laptop (manufactured by Lenovo, Beijing, China) equipped with an NVIDIA GeForce RTX 3060 Laptop GPU (manufactured by NVIDIA, Santa Clara, CA, USA) with 6 GB of memory. All experimental curves presented in the paper are averaged over five Monte Carlo runs.
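For readers who want to see what one device computes between aggregation rounds, the following NumPy sketch implements multinomial logistic regression with a mean cross-entropy loss and a few full-batch gradient steps. It is an illustration under stated assumptions, not the experimental code (which uses PyTorch); the function names, step size, and random stand-in data are hypothetical. For 28×28 images and 10 classes, `W` holds 784 × 10 weights plus 10 biases.

```python
import numpy as np


def softmax_ce_loss_and_grad(W, b, X, y):
    """Mean cross-entropy of a multinomial logistic regression model.

    W: (d, C) weights, b: (C,) biases, X: (n, d) inputs, y: (n,) integer labels.
    Returns the loss and its gradients with respect to W and b.
    """
    logits = X @ W + b
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    n = X.shape[0]
    rows = np.arange(n)
    loss = -np.mean(np.log(probs[rows, y]))
    # d(loss)/d(logits) = (softmax - one_hot) / n
    delta = probs.copy()
    delta[rows, y] -= 1.0
    delta /= n
    return loss, X.T @ delta, delta.sum(axis=0)


def local_gd(W, b, X, y, lr=0.01, steps=10):
    # Full-batch gradient descent; more steps mean higher local training intensity.
    for _ in range(steps):
        _, grad_W, grad_b = softmax_ce_loss_and_grad(W, b, X, y)
        W = W - lr * grad_W
        b = b - lr * grad_b
    return W, b


rng = np.random.default_rng(0)
X = rng.random((600, 784))          # stand-in for 600 flattened 28x28 images
y = rng.integers(0, 10, size=600)   # stand-in labels
W, b = local_gd(np.zeros((784, 10)), np.zeros(10), X, y, lr=0.005, steps=5)
```

The step size has to shrink as the input dimension grows, since the smoothness constant of this loss scales with the largest eigenvalue of the input second-moment matrix.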

To comprehensively evaluate the effectiveness of the proposed algorithm (OptEH), we compare it with the following baseline methods:

- NoEH [6]: In this approach, edge devices have only limited inherent energy and cannot replenish their energy during the FEEL process.

- ECEH [5]: All devices adopt the same energy harvesting scheme and wireless communication strategy as OptEH, but they execute the same local training intensity, which is determined by the slowest device.

- FPEH [43]: This scheme implements a heuristic approach to SWIPT by employing a fixed power-splitting ratio. In this model, devices operate with a predetermined ratio for splitting power between energy harvesting and information decoding (we set ).

- SEH [44]: Devices are selected based on their channel gain to mitigate the “straggler” problem. Only those devices that achieve channel gains above a specified threshold are allowed to participate in the training process, while others are excluded from engagement in training.

Additionally, we consider an ideal benchmark, referred to as Error-Free, in which the local training intensity is the same as that of OptEH but model aggregation is free of channel noise; it serves to assess the impact of communication noise and interference on FEEL learning performance.

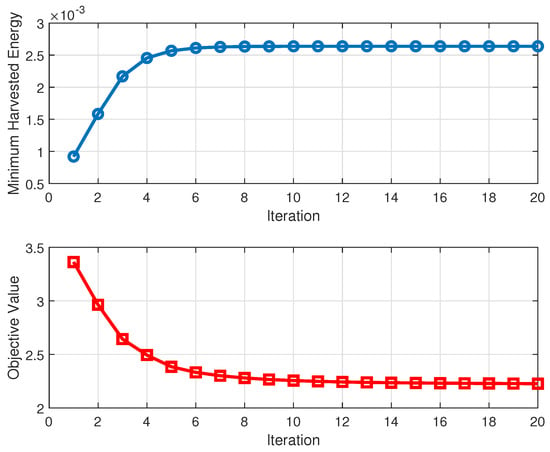

5.2. Convergence Behaviour of the Proposed Algorithms

We start by validating the convergence performance of the proposed Algorithms 1 and 2. Figure 2 illustrates the convergence curves of both algorithms across different numbers of alternating iterations. The simulation results indicate that, as expected, our proposed algorithms converge to a stable value within a relatively small number of iterations (approximately seven iterations). This behavior aligns with the theoretical convergence analysis, thereby confirming the effectiveness of the proposed algorithms.

Figure 2.

Convergence behavior of the proposed optimization algorithm.

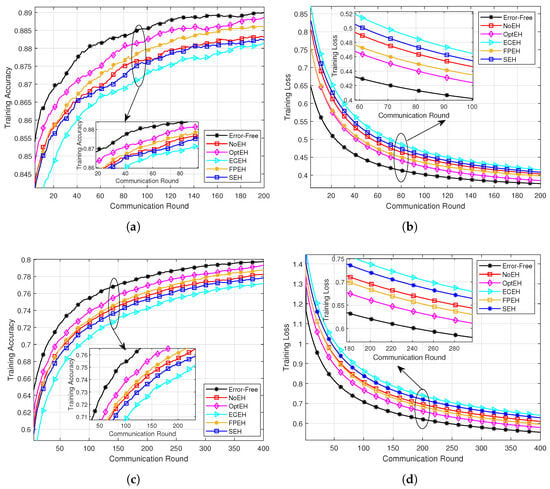

5.3. Performance Comparison on Learning Convergence

As illustrated in Figure 3, we compare the testing accuracy and training loss of the proposed algorithm with those of the baseline algorithms on both datasets. The results clearly indicate that the proposed algorithm outperforms the baseline methods in terms of convergence performance. Compared with NoEH, our method achieves a significant performance gain. This improvement is primarily attributed to energy harvesting, which allows our system to support more frequent local updates while maintaining a higher transmit power during communication. In contrast to ECEH, our approach allows each device to conduct local training to the fullest extent permitted by its individual computational capacity and available energy. This flexibility enables more effective utilization of each device's computational resources, thereby enhancing the overall efficiency of the system. The comparison with FPEH highlights the importance of appropriately selecting each device's power-splitting ratio in SWIPT-enabled FEEL: adjusting this ratio dynamically allows devices to balance EH and ID according to their current energy status and channel conditions. Our approach also outperforms SEH. This gap may stem from the fact that, while SEH excludes devices with lower channel gains to enhance the overall communication quality of the system, such exclusion also reduces the diversity of the training data. When the gains from improved communication quality do not sufficiently compensate for the losses caused by decreased data diversity, learning outcomes become suboptimal. Finally, compared with the ideal Error-Free scenario, the proposed algorithm exhibits a small but noticeable performance gap. This gap primarily arises from the distortion that wireless channel conditions introduce into model updates during FEEL training, which is consistent with the theoretical analysis in Theorem 1.

Figure 3.

Performance comparison between the proposed algorithm and the baseline schemes on both datasets as the number of communication rounds varies. (a,b) display the testing accuracy and training loss on the MNIST dataset, while (c,d) show the testing accuracy and training loss on the Fashion-MNIST dataset.

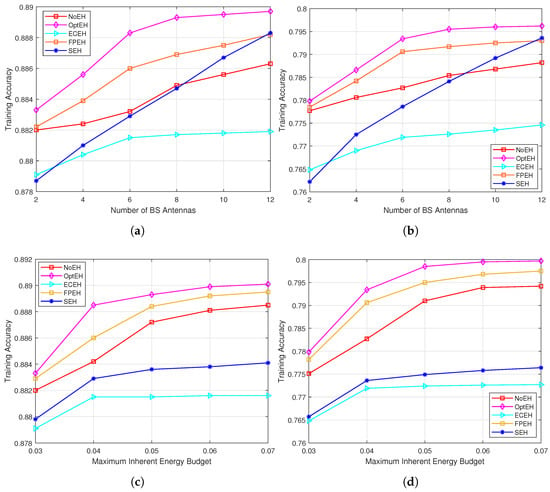

5.4. Performance Versus the Number of BS Antennas

As shown in Figure 4a,b, we further analyze the impact of the number of BS antennas on the training performance of the proposed method. The results indicate that, as the number of receive antennas at the BS increases, the learning performance of all schemes improves significantly. This enhancement is primarily attributed to the additional array gain provided by the BS antennas, which strengthens signal reception and reduces transmission distortion during model aggregation. Notably, compared with the other baseline methods, the proposed optimization framework shows a more pronounced performance advantage as the number of BS antennas increases.

Figure 4.

Performance comparison between the proposed algorithm and the baseline schemes across both datasets under different numbers of BS antennas and maximum inherent energy budget. (a,b) illustrate the testing accuracy on both datasets as the number of BS antennas changes, while (c,d) show the testing accuracy on both datasets under different maximum inherent energy budgets.

5.5. Performance Versus the Maximum Inherent Energy Budget

Figure 4c,d illustrate the impact of the maximum inherent energy budget of the devices on learning performance, measured by the test accuracy on both datasets. For simplicity, we assume that all devices have the same and denote it as . The results show that, as increases, the performance of all methods improves significantly. This is primarily because a higher energy budget relaxes the energy constraints on the devices, allowing for higher transmission power and increased local training intensity, thereby boosting overall system performance. However, beyond a certain threshold, further increases in yield diminishing returns. This phenomenon is attributed to the physical limits on device communication capacity (e.g., the transmit power limit in constraint T1): beyond a certain energy budget, the MSE cannot be reduced further. In other words, increasing the energy budget does not always yield proportional gains in performance.

6. Conclusions

In this paper, we proposed a novel asymmetry-tolerant training framework for FEEL enabled via SWIPT. This framework leveraged SWIPT to provide sustainable energy support for devices while accommodating varying local training intensities. Then, we rigorously derived a new explicit upper bound that captured the combined effects of local training accuracy and the MSE of wireless aggregation on the convergence performance of FEEL, highlighting the importance of jointly designing learning and communication. Furthermore, we formulated two key optimization problems: the first aimed to maximize the energy harvesting capability among all devices, while the second addressed the joint learning and communication optimization problem under the optimal energy harvesting strategy. Finally, comprehensive experiments demonstrated that our proposed framework achieved significant performance improvements compared to the existing baselines.

For future research, the following directions merit further exploration: (1) introducing reconfigurable intelligent surfaces to enhance the wireless communication environment, which could improve the efficiency of energy harvesting and the overall performance of heterogeneous training frameworks; (2) developing intelligent device selection algorithms that use machine learning to predict which devices are most likely to meet energy requirements under varying channel conditions, thereby ensuring optimal device participation; and (3) building on this, exploring multi-source energy harvesting techniques that allow devices to leverage various energy sources simultaneously, which could lead to more sustainable and resilient operation in diverse environments.

Author Contributions

Conceptualization, H.L.; Methodology, Y.Z.; Investigation, K.R. and S.S.; Writing—original draft, Y.F.; Writing—review and editing, Y.F. and H.L.; Funding acquisition, S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the National Natural Science Foundation of China (62702004), and the key research project of Anhui Provincial Department of Education (2024AH050530).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are included within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| SWIPT | Simultaneous wireless information and power transfer |
| FEEL | Federated edge learning |
| AirComp | Over-the-air computation |
| SCA | Successive convex approximation |
| MSE | Mean squared error |
| AO | Alternating optimization |
| BS | Base station |
| ID | Information decoding |
| EH | Energy harvesting |

Appendix A. Proof of Theorem 1

Given Assumption 1 pertaining to the L-smoothness and -strong convexity of , we can derive the following valuable inequalities [38], as

Next, recalling the definition of the surrogate objective function, within the AirComp framework it can be expressed as

Let . Subsequently, we can derive

Using L-smoothness, we can derive

where (a) arises from the assumptions that and . Inequality (b) follows from the Cauchy–Schwarz inequality, while inequality (c) follows from the L-Lipschitz smoothness of . Next, we bound the norm term on the right-hand side of (A8). First, we have

Subsequently, the following can be obtained:

By utilizing the triangle inequality, (A9), and (A10), the following can be derived:

Next, we have

Based on the definitions of and , we can further obtain the following [5]:

To simplify the description, we define ; thus, we have

where (a) comes from . Therefore, we derive the following:

which indicates that

Below, we define , which is given by

Then, we get

With the definition , we can ultimately derive the following:

where . According to the relevant conclusions from AirComp, we have that , thus obtaining

By recursively applying Equation (A18), we can finally conclude that

This completes the proof. □

Appendix B. Proof of Lemma 3

Based on the expression of , it can be observed that, within the range , is monotonically increasing as decreases. Therefore, when for all , attains its maximum value . At this point, the expression for is given by

Next, we will prove that is always less than 1. To ensure that , the condition must be satisfied. It can be shown that, when , this condition always holds, thereby guaranteeing that does not exceed 1. Furthermore, to ensure that is greater than 0, it is necessary to ensure that the numerator of is greater than 0. Thus, we have , where

Since is non-negative, this leads to condition (59). □

References

- Lyu, X.; Ren, C.; Ni, W.; Tian, H.; Liu, R.P.; Dutkiewicz, E. Optimal Online Data Partitioning for Geo-Distributed Machine Learning in Edge of Wireless Networks. IEEE J. Sel. Areas Commun. 2019, 37, 2393–2406. [Google Scholar] [CrossRef]

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated Learning: Challenges, Methods, and Future Directions. IEEE Signal Process. Mag. 2020, 37, 50–60. [Google Scholar] [CrossRef]

- Zhang, T.; Mao, S. Energy-Efficient Federated Learning With Intelligent Reflecting Surface. IEEE Trans. Green Commun. Netw. 2022, 6, 845–858. [Google Scholar] [CrossRef]

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A.y. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, Lauderdale, FL, USA, 20–22 April 2017; Singh, A., Zhu, J., Eds.; PMLR: Mc Kees Rocks, PA, USA, 2017; Volume 54, pp. 1273–1282. [Google Scholar]

- Dinh, C.T.; Tran, N.H.; Nguyen, M.N.H.; Hong, C.S.; Bao, W.; Zomaya, A.Y.; Gramoli, V. Federated Learning Over Wireless Networks: Convergence Analysis and Resource Allocation. IEEE/ACM Trans. Netw. 2021, 29, 398–409. [Google Scholar] [CrossRef]

- Li, H.; Wang, R.; Jiang, M.; Liu, J. STAR-RIS Empowered Heterogeneous Federated Edge Learning With Flexible Aggregation. IEEE Internet Things J. 2025, 12, 28374–28389. [Google Scholar] [CrossRef]

- Zhou, Y.; Pang, X.; Wang, Z.; Hu, J.; Sun, P.; Ren, K. Towards Efficient Asynchronous Federated Learning in Heterogeneous Edge Environments. In Proceedings of the IEEE INFOCOM 2024—IEEE Conference on Computer Communications, Vancouver, BC, Canada, 20–23 May 2024; pp. 2448–2457. [Google Scholar]

- Lu, Y.; Huang, X.; Dai, Y.; Maharjan, S.; Zhang, Y. Differentially Private Asynchronous Federated Learning for Mobile Edge Computing in Urban Informatics. IEEE Trans. Ind. Informatics 2020, 16, 2134–2143. [Google Scholar] [CrossRef]

- Kou, Z.; Ji, Y.; Yang, D.; Zhang, S.; Zhong, X. Semi-Asynchronous Over-the-Air Federated Learning Over Heterogeneous Edge Devices. IEEE Trans. Veh. Technol. 2025, 74, 110–125. [Google Scholar] [CrossRef]

- Wang, Z.; Zhang, Z.; Tian, Y.; Yang, Q.; Shan, H.; Wang, W.; Quek, T.Q.S. Asynchronous Federated Learning Over Wireless Communication Networks. IEEE Trans. Wirel. Commun. 2022, 21, 6961–6978. [Google Scholar] [CrossRef]

- Chen, Z.; Yi, W.; Shin, H.; Nallanathan, A. Adaptive Semi-Asynchronous Federated Learning over Wireless Networks. IEEE Trans. Commun. 2024, 73, 394–409. [Google Scholar] [CrossRef]

- Xu, Y.; Liao, Y.; Xu, H.; Ma, Z.; Wang, L.; Liu, J. Adaptive Control of Local Updating and Model Compression for Efficient Federated Learning. IEEE Trans. Mob. Comput. 2023, 22, 5675–5689. [Google Scholar] [CrossRef]

- Ma, Z.; Xu, Y.; Xu, H.; Meng, Z.; Huang, L.; Xue, Y. Adaptive Batch Size for Federated Learning in Resource-Constrained Edge Computing. IEEE Trans. Mob. Comput. 2023, 22, 37–53. [Google Scholar] [CrossRef]

- Wang, L.; Xu, Y.; Xu, H.; Jiang, Z.; Chen, M.; Zhang, W.; Qian, C. BOSE: Block-Wise Federated Learning in Heterogeneous Edge Computing. IEEE/ACM Trans. Netw. 2024, 32, 1362–1377. [Google Scholar] [CrossRef]

- Liao, Y.; Xu, Y.; Xu, H.; Wang, L.; Qian, C. Adaptive Configuration for Heterogeneous Participants in Decentralized Federated Learning. In Proceedings of the IEEE INFOCOM 2023—IEEE Conference on Computer Communications, New York, NY, USA, 17–20 May 2023; pp. 1–10. [Google Scholar]

- Zeng, M.; Wang, X.; Pan, W.; Zhou, P. Heterogeneous Training Intensity for Federated Learning: A Deep Reinforcement Learning Approach. IEEE Trans. Netw. Sci. Eng. 2023, 10, 990–1002. [Google Scholar] [CrossRef]

- Li, H.; Wang, R.; Zhang, W.; Wu, J. One Bit Aggregation for Federated Edge Learning With Reconfigurable Intelligent Surface: Analysis and Optimization. IEEE Trans. Wirel. Commun. 2023, 22, 872–888. [Google Scholar] [CrossRef]

- Chen, M.; Yang, Z.; Saad, W.; Yin, C.; Poor, H.V.; Cui, S. A Joint Learning and Communications Framework for Federated Learning Over Wireless Networks. IEEE Trans. Wirel. Commun. 2021, 20, 269–283. [Google Scholar] [CrossRef]

- Wen, D.; Bennis, M.; Huang, K. Joint Parameter-and-Bandwidth Allocation for Improving the Efficiency of Partitioned Edge Learning. IEEE Trans. Wirel. Commun. 2020, 19, 8272–8286. [Google Scholar] [CrossRef]

- Li, H.; Wang, R.; Wu, J.; Zhang, W.; Soto, I. Reconfigurable Intelligent Surface Empowered Federated Edge Learning With Statistical CSI. IEEE Trans. Wirel. Commun. 2024, 23, 6595–6608. [Google Scholar] [CrossRef]

- Yang, K.; Jiang, T.; Shi, Y.; Ding, Z. Federated Learning via Over-the-Air Computation. IEEE Trans. Wirel. Commun. 2020, 19, 2022–2035. [Google Scholar] [CrossRef]

- Zhu, G.; Du, Y.; Gündüz, D.; Huang, K. One-Bit Over-the-Air Aggregation for Communication-Efficient Federated Edge Learning: Design and Convergence Analysis. IEEE Trans. Wirel. Commun. 2021, 20, 2120–2135. [Google Scholar] [CrossRef]

- Liang, Y.; Chen, Q.; Zhu, G.; Jiang, H.; Eldar, Y.C.; Cui, S. Communication-and-Energy Efficient Over-the-Air Federated Learning. IEEE Trans. Wirel. Commun. 2025, 24, 767–782. [Google Scholar] [CrossRef]

- Zhang, Y.; You, C. SWIPT in Mixed Near- and Far-Field Channels: Joint Beam Scheduling and Power Allocation. IEEE J. Sel. Areas Commun. 2024, 42, 1583–1597. [Google Scholar] [CrossRef]

- Faramarzi, S.; Zarini, H.; Javadi, S.; Robat Mili, M.; Zhang, R.; Karagiannidis, G.K.; Al-Dhahir, N. Energy Efficient Design of Active STAR-RIS-Aided SWIPT Systems. IEEE Trans. Wirel. Commun. 2025, 24, 3209–3224. [Google Scholar] [CrossRef]

- He, Y.; Huang, F.; Wang, D.; Zhang, R. Outage Probability Analysis of MISO-NOMA Downlink Communications in UAV-Assisted Agri-IoT With SWIPT and TAS Enhancement. IEEE Trans. Netw. Sci. Eng. 2025, 12, 2151–2164. [Google Scholar] [CrossRef]

- Do, T.N.; da Costa, D.B.; Duong, T.Q.; An, B. Improving the Performance of Cell-Edge Users in MISO-NOMA Systems Using TAS and SWIPT-Based Cooperative Transmissions. IEEE Trans. Green Commun. Netw. 2018, 2, 49–62. [Google Scholar] [CrossRef]

- Zheng, G.; Fang, Y.; Wen, M.; Ding, Z. Novel Over-the-Air Federated Learning via Reconfigurable Intelligent Surface and SWIPT. IEEE Internet Things J. 2024, 11, 34140–34155.

- Liu, H.; Yuan, X.; Zhang, Y.J.A. Reconfigurable Intelligent Surface Enabled Federated Learning: A Unified Communication-Learning Design Approach. IEEE Trans. Wirel. Commun. 2021, 20, 7595–7609.

- Wang, Z.; Zhou, Y.; Shi, Y.; Zhuang, W. Interference Management for Over-the-Air Federated Learning in Multi-Cell Wireless Networks. IEEE J. Sel. Areas Commun. 2022, 40, 2361–2377.

- Nadeem, Q.U.A.; Kammoun, A.; Chaaban, A.; Debbah, M.; Alouini, M.S. Intelligent Reflecting Surface Assisted Wireless Communication: Modeling and Channel Estimation. arXiv 2019, arXiv:1906.02360.

- Liaskos, C.; Tsioliaridou, A.; Pitilakis, A.; Pirialakos, G.; Tsilipakos, O.; Tasolamprou, A.; Kantartzis, N.; Ioannidis, S.; Kafesaki, M.; Pitsillides, A.; et al. Joint Compressed Sensing and Manipulation of Wireless Emissions with Intelligent Surfaces. In Proceedings of the IEEE International Conference on Distributed Computing in Sensor Systems, Santorini Island, Greece, 29–31 May 2019; pp. 318–325.

- Wang, Z.; Qiu, J.; Zhou, Y.; Shi, Y.; Fu, L.; Chen, W.; Letaief, K.B. Federated Learning via Intelligent Reflecting Surface. IEEE Trans. Wirel. Commun. 2022, 21, 808–822.

- Ni, W.; Liu, Y.; Yang, Z.; Tian, H.; Shen, X. Integrating Over-the-Air Federated Learning and Non-Orthogonal Multiple Access: What Role Can RIS Play? IEEE Trans. Wirel. Commun. 2022, 21, 10083–10099.

- Hu, Y.; Chen, M.; Chen, M.; Yang, Z.; Shikh-Bahaei, M.; Poor, H.V.; Cui, S. Energy Minimization for Federated Learning with IRS-Assisted Over-the-Air Computation. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 3105–3109.

- Zhou, S.; Li, G.Y. Federated Learning Via Inexact ADMM. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 9699–9708.

- Elgabli, A.; Park, J.; Issaid, C.B.; Bennis, M. Harnessing Wireless Channels for Scalable and Privacy-Preserving Federated Learning. IEEE Trans. Commun. 2021, 69, 5194–5208.

- Nesterov, Y. Lectures on Convex Optimization; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; Volume 137.

- Grant, M.; Boyd, S. Graph Implementations for Nonsmooth Convex Programs. In Recent Advances in Learning and Control; Blondel, V., Boyd, S., Kimura, H., Eds.; Lecture Notes in Control and Information Sciences; Springer: Berlin/Heidelberg, Germany, 2008; pp. 95–110.

- Liu, Y.; Mu, X.; Xu, J.; Schober, R.; Hao, Y.; Poor, H.V.; Hanzo, L. STAR: Simultaneous Transmission and Reflection for 360° Coverage by Intelligent Surfaces. IEEE Wirel. Commun. 2021, 28, 102–109.

- LeCun, Y.; Cortes, C. The MNIST Database of Handwritten Digits. 2005. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 20 October 2024).

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A Novel Image Dataset for Benchmarking Machine Learning Algorithms. arXiv 2017, arXiv:1708.07747.

- Wu, Y.; Song, Y.; Wang, T.; Dai, M.; Quek, T.Q.S. Simultaneous Wireless Information and Power Transfer Assisted Federated Learning via Nonorthogonal Multiple Access. IEEE Trans. Green Commun. Netw. 2022, 6, 1846–1861.

- Yao, J.; Xu, W.; Yang, Z.; You, X.; Bennis, M.; Poor, H.V. Wireless Federated Learning Over Resource-Constrained Networks: Digital Versus Analog Transmissions. IEEE Trans. Wirel. Commun. 2024, 23, 14020–14036.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).