1. Introduction

State estimation (or filtering) is one of the most important research areas in the operation of industrial systems [1]. It refers to the process of inferring the internal state of a system from its externally observed outputs. Over the past few decades, state estimation problems have arisen in nearly all fields, including industry, the military, and finance. They have been widely applied in areas such as autonomous driving [2], parameter estimation [3], system identification [4], target tracking, and aerospace [5]. Parameter identification has also found extensive applications across various fields, such as parameter updating in control systems, parameter estimation in communication systems, and fault diagnosis in power systems [6]. With technological advancements, multi-sensor systems have become increasingly prevalent. Multi-sensor information fusion, also known as multi-source information fusion, refers to the process of optimally combining data from single or multiple sources to obtain accurate and reliable information. This approach has been widely applied in numerous high-profile and emerging fields, including industrial process monitoring, inertial navigation, industrial robotics, unmanned systems [7], the Internet of Things (IoT) [8], electric motor and appliance design, and air traffic control systems [9]. By fusing data from multiple sensors, the resulting estimates become more accurate, the algorithms can be simplified, and the overall application becomes more convenient.

In the field of multi-sensor information fusion estimation, there are three fundamental fusion strategies: centralized data fusion, distributed data fusion, and sequential data fusion [10]. Centralized fusion transmits all sensor measurements to a central station for unified processing. Although this approach yields optimal state estimates, it must wait for all data to arrive before fusion can begin. This leads to high-dimensional augmented measurements, an increased computational burden, poor robustness, and difficulty in real-time filtering [11]. Distributed fusion, on the other hand, allows each sensor to generate a local estimate from its own measurements. The local estimates are then sent to a fusion center, where they are combined using a predefined fusion rule. The parallel structure of distributed fusion makes fault detection and sensor isolation more straightforward, thus improving system reliability. However, the estimation accuracy of distributed fusion is generally lower than that of centralized fusion. Sequential fusion processes sensor measurements in the order in which they arrive at the fusion center, updating the fused estimate at each step. It achieves estimation accuracy comparable to that of centralized fusion, yet it is more suitable for real-time applications because it does not have to wait for all data to arrive. This makes it better equipped to handle delayed or asynchronous data and reduces overall system cost [12].
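The claim that sequential fusion can match centralized accuracy is easiest to see in the textbook case where the sensor noises are mutually independent and independent of the process noise. The NumPy sketch below illustrates this case under that assumption, with hypothetical numbers for a two-state prediction and three scalar sensors. It fuses the three sensors once through the stacked measurement with a block-diagonal noise covariance, and once by applying one Kalman update per sensor in arrival order. The helper names `block_diag` and `kalman_update` are illustrative only.

```python
import numpy as np

def block_diag(*blocks):
    """Place square blocks on the diagonal of a zero matrix."""
    size = sum(b.shape[0] for b in blocks)
    out = np.zeros((size, size))
    i = 0
    for b in blocks:
        m = b.shape[0]
        out[i:i + m, i:i + m] = b
        i += m
    return out

def kalman_update(x, P, y, H, R):
    """Standard Kalman measurement update (independent noises assumed)."""
    S = H @ P @ H.T + R                      # innovation covariance
    K = np.linalg.solve(S, H @ P).T          # K = P H^T S^{-1}
    return x + K @ (y - H @ x), P - K @ S @ K.T

# Hypothetical predicted state, covariance, and three scalar sensors
x_pred = np.array([1.0, 0.5])
P_pred = np.array([[2.0, 0.3], [0.3, 1.0]])
Hs = [np.array([[1.0, 0.0]]), np.array([[0.0, 1.0]]), np.array([[1.0, 1.0]])]
Rs = [np.array([[0.5]]), np.array([[0.8]]), np.array([[1.2]])]
ys = [np.array([1.3]), np.array([0.2]), np.array([1.9])]

# Centralized: one update with the stacked measurement
x_c, P_c = kalman_update(x_pred, P_pred, np.concatenate(ys), np.vstack(Hs), block_diag(*Rs))

# Sequential: one update per sensor, in order of arrival
x_s, P_s = x_pred, P_pred
for y, H, R in zip(ys, Hs, Rs):
    x_s, P_s = kalman_update(x_s, P_s, y, H, R)

print(np.allclose(x_c, x_s), np.allclose(P_c, P_s))
```

When the noises are correlated, which is the setting of this paper, this simple per-sensor scheme no longer reproduces the centralized result in general. That gap is what motivates the decorrelation steps developed later.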

Kalman filtering is a classical fusion method widely used in control systems [13]. For linear Gaussian systems, the standard Kalman filter assumes that the process noise and the measurement noise are independent. Under this assumption it is optimal in the linear minimum variance sense and can be computed recursively in real time. In many practical scenarios, however, the process noise and measurement noise are not independent but correlated, and Kalman filters for correlated noise have therefore been developed. As environmental complexity increases and the surveillance area expands, the motion of a single target often needs to be observed by multiple spatially distributed sensors. Network transmission introduces delays, so measurements may arrive out of sequence. Consider systems with cross-correlation between the process and measurement noises, and with mutual correlation among the measurement noises of different sensors. For such systems, a centralized Kalman filter must wait for all sensor measurements to arrive before fusion can be completed. Potential packet losses in the network can also make such a centralized filter infeasible to implement. Sequential or distributed Kalman filters that can handle delayed and out-of-sequence data are therefore needed. The core challenge in designing such filters is twofold: decorrelating the process noise from the measurement noise, and removing the correlations among the measurement noises of different sensors.

In the context of multi-sensor cooperative systems, reference [14] uses Cholesky decomposition and the inversion of unit lower triangular matrices to transform multi-sensor measurements with correlated noise into equivalent pseudo-measurements with uncorrelated noise. It then proposes an optimal centralized fusion filtering method based on this transformation. Reference [15] addresses systems whose process noise is finite-step autocorrelated and whose measurement noise is finite-step cross-correlated with it. It proposes a distributed fusion filter using a matrix-weighted fusion estimation algorithm. Reference [16] studies distributed track fusion filtering for time-delay systems. It further considers the impact of correlated measurement noise among sensors and of one-step correlation between process and measurement noise. In [17], a distributed filter is proposed using a decorrelation method at the sensor end. However, its noise decorrelation relies on the unrealistic assumption that all sensor information is fully accessible throughout the network. Reference [18] proposes a linearly optimal minimum-variance matrix-weighted fusion filter with a two-stage fusion structure under correlated noise. Due to inaccuracies in the estimation model, however, both filters yield suboptimal estimates. Reference [19] presents a sequential Kalman fusion method under uncorrelated process noise and inter-correlated measurement noise. It does not achieve performance equivalence with the centralized Kalman filter and is therefore suboptimal. Reference [20] studies the fusion filtering problem with one-step autocorrelation among observation noises and one-step cross-correlation between observation and process noise. It relies on matrix augmentation, which significantly increases the computational load, especially in high-dimensional scenarios. Reference [21] designs sequential and distributed fusion filters. Its sequential method lacks a measurement noise estimator, leading to suboptimal results, and its distributed filter is less accurate than the centralized counterpart. Reference [22] proposes a sequential predictive fusion Kalman filter under simultaneous cross-correlation between process and measurement noise and among measurement noises. It also fails to achieve performance equivalence with the centralized filter and remains suboptimal. Although [23] shows that distributed fusion is more reliable, the resulting fusion is not optimal. Reference [24] contains theoretical flaws in its filtering derivation. Its induction-based validation step (Equation (41)) is erroneous, so its sequential Kalman fusion filter is not performance-equivalent to the centralized version, which invalidates its conclusions. Reference [25] proposes improved sequential filters under time- and event-triggered scheduling, considering the correlation between measurement noise and state prediction. However, its fusion algorithm omits a measurement noise estimator, so the filters are suboptimal: when cross-correlation exists among the measurement noises, this estimator is not zero. Reference [26] addresses environments in which the process noise and all measurement noises are mutually correlated, and proposes a suboptimal sequential Kalman fusion method. However, the algorithm incorrectly treats predicted noise terms as deterministic estimates (see Equation (17)). This leaves the predicted measurement estimate (Equation (16)) as an unresolved random variable and renders the method practically inoperable. Reference [27] presents both distributed and sequential filtering under correlated noise. However, its Equations (18) and (35) are transformed measurement models that are not equivalent to the original model, making the algorithm non-executable. Thus, sequential Kalman filtering under dual-noise correlation remains an unsolved challenge. In this setting, the process noise is correlated with the measurement noises, and the measurement noises are also mutually correlated. To the best of the authors’ knowledge, no recursive solution currently exists for this dual-noise correlation scenario.

This paper, based on an analysis of the performance equivalence between the Kalman filter and its corresponding least squares estimator, designs a sequential least squares fusion method for system state estimation using time-ordered measurements, which achieves performance equivalence with the centralized Kalman filter. The contributions of this paper are as follows: (1) To the best of the authors’ knowledge, for the first time, a sequential least squares method equivalent in performance to the centralized fusion method is established under the scenario where the process noise is cross-correlated with all measurement noises, and the measurement noises are also mutually correlated. (2) A sequential closed-form expression for the estimation error covariance matrix of the filter is derived. (3) It is rigorously proven that the proposed sequential least squares fusion method is performance-equivalent to the centralized Kalman fusion method. (4) The proposed method is applicable to scenarios where cross-correlation exists between process noise and measurement noise due to transmission delays, and where measurement noises arriving with delays are also mutually correlated.

The remainder of this paper is organized as follows. Section 2 presents the problem formulation. Section 3 proposes a new centralized Kalman filter fusion estimation algorithm that accounts for the cross-correlation between process noise and measurement noise as well as the mutual correlation among the measurement noises. Section 4 develops a sequential least squares fusion estimation algorithm with progressive decorrelation for data that arrive sequentially in time under correlated noise. Section 5 provides the performance analysis. Section 6 gives a simulation example of a system with three sensors. Finally, Section 7 concludes the paper.

2. Description of Issues in Multi-Sensor Dynamic Systems with Correlated Noise

Consider a discrete time-varying linear stochastic control system with N sensors, described by the following state and measurement equations:

$$x(k+1) = \Phi(k)x(k) + w(k), \tag{1}$$

$$y_i(k) = H_i(k)x(k) + v_i(k), \quad i = 1, 2, \ldots, N, \tag{2}$$

where $k$ is the discrete time index, $x(k)$ is the state of the system to be estimated, $\Phi(k)$ is the state transition matrix, and $w(k)$ is a zero-mean white noise sequence. For the $i$-th sensor, $H_i(k)$ is the measurement matrix, $y_i(k)$ is the measurement vector, and $v_i(k)$ is the measurement noise.

Assumption 1. The process noise $w(k)$ is independent of the measurement noises $v_i(k)$, $i = 1, 2, \ldots, N$, so that $\mathrm{E}[w(k)v_i^{T}(j)] = 0$ for all $k$ and $j$. Here $\mathrm{E}$ denotes the mathematical expectation and the superscript $T$ denotes the transpose.

Assumption 2. The process noise $w(k)$ is correlated with the measurement noise $v_i(k)$, and the measurement noises $v_i(k)$ and $v_j(k)$ are also mutually correlated for $i \neq j$. These correlations are characterized by the process noise covariance $Q(k)$, the noise coupling matrices $S_i(k)$ between $w$ and $v_i$, and the measurement noise covariances $R_{ij}(k)$. Their time dependence is expressed through the Kronecker delta $\delta_{kj}$, where $\delta_{kj} = 1$ for $k = j$ and $\delta_{kj} = 0$ for $k \neq j$.

Assumption 3. The initial state $x(0)$ is independent of $w(k)$ and $v_i(k)$, $i = 1, 2, \ldots, N$, and has known mean and covariance.

Under mutually independent noises (Assumption 1), the Kalman filter has been widely applied. In contrast, the goal of this paper is to develop a new filter that minimizes the state estimation error covariance under Assumptions 2 and 3.
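One way to picture Assumption 2 is as a single joint covariance of the stacked vector $[w; v_1; \ldots; v_N]$. Its off-diagonal blocks are the coupling matrices $S_i$ and the inter-sensor covariances $R_{ij}$. The NumPy sketch below is illustrative only. The two-dimensional process noise, the three scalar sensors, and the generating matrix `M` are hypothetical. The sketch encodes only the correlation structure at a single time step and does not commit to the time-lag convention of the paper's covariance equation. Building the noises as linear combinations of shared independent sources guarantees a positive semidefinite joint covariance, which any valid Assumption 2 covariance must have.

```python
import numpy as np

# Hypothetical generating matrix: [w1, w2, v1, v2, v3] = M u, with u ~ N(0, I_6).
# Shared columns create process/measurement coupling (S_i) and
# inter-sensor correlation (R_ij, i != j).
M = np.array([
    [0.5, 0.0, 0.0, 0.0, 0.0, 0.0],   # w1 driven by source 1
    [0.0, 1.0, 0.0, 0.0, 0.0, 0.0],   # w2 driven by source 2
    [0.0, 0.4, 0.8, 0.0, 0.0, 0.3],   # v1 shares source 2 with w2, common source 6
    [0.2, 0.0, 0.0, 0.9, 0.0, 0.3],   # v2 shares source 1 with w1, common source 6
    [0.0, 0.5, 0.0, 0.0, 1.0, 0.3],   # v3 shares source 2 with w2, common source 6
])
n = 2                                  # process noise dimension
Sigma = M @ M.T                        # joint covariance of [w; v1; v2; v3]
Q = Sigma[:n, :n]                      # process noise covariance
S = Sigma[:n, n:]                      # column i: coupling S_i = E[w v_i^T]
R = Sigma[n:, n:]                      # R[i, j] = E[v_i v_j^T]

# A valid joint covariance must be symmetric positive semidefinite
print(np.linalg.eigvalsh(Sigma).min() >= 0)

# Draw correlated noise samples and compare the sample covariance with Sigma
rng = np.random.default_rng(0)
samples = M @ rng.standard_normal((M.shape[1], 200_000))
Sigma_hat = samples @ samples.T / samples.shape[1]
print(np.abs(Sigma_hat - Sigma).max())
```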

3. Centralized Fusion Kalman Filtering Method Under Correlated Noise

A centralized Kalman filter model is developed for the dynamic systems described by Equations (1) and (2), utilizing the state equations and augmented observation matrices.

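First, stack the measurement vectors $y_i(k)$, the measurement matrices $H_i(k)$, and the measurement noises $v_i(k)$ of all N sensors in Equation (2). This yields the augmented observation equation

$$y(k) = H(k)x(k) + v(k),$$

where the augmented observation vector, the augmented observation matrix, and the augmented observation noise are defined as

$$y(k) = \begin{bmatrix} y_1(k) \\ \vdots \\ y_N(k) \end{bmatrix},\quad H(k) = \begin{bmatrix} H_1(k) \\ \vdots \\ H_N(k) \end{bmatrix},\quad v(k) = \begin{bmatrix} v_1(k) \\ \vdots \\ v_N(k) \end{bmatrix}.$$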

Initially, the state estimate and its error covariance matrix are computed from the statistical properties of the initial state and the observation data.

Here, $\hat{x}(k|k)$ denotes the optimal estimate of the system state at time k, conditioned on all measurement information available up to that time. Likewise, $P(k|k)$ denotes the covariance matrix of the corresponding state estimation error under the same information set.

The operational process of centralized Kalman filtering under noise correlation can be divided into the following steps:

(1) Based on state Equation (1), the following quantities are calculated: the one-step predicted state estimate $\hat{x}(k|k-1)$, the state prediction error $\tilde{x}(k|k-1) = x(k) - \hat{x}(k|k-1)$, and the prediction error covariance matrix $P(k|k-1)$.

(2) Based on the observation model, the following quantities are calculated:
- the one-step prediction $\hat{y}(k|k-1)$ of the augmented observation;
- the observation prediction error $\tilde{y}(k|k-1) = y(k) - \hat{y}(k|k-1)$;
- the cross-covariance matrix $P_{xy}(k)$ between the state and observation prediction errors;
- the auto-covariance matrix $P_{yy}(k)$ of the observation prediction error.

(3) A linear filter of the form $\hat{x}(k|k) = \hat{x}(k|k-1) + K(k)\tilde{y}(k|k-1)$ is designed to estimate the state $x(k)$. Here $K(k)$ is the optimal gain matrix to be determined.

(4) The optimal gain matrix is derived using the orthogonality principle. The state estimation error is

$$\tilde{x}(k|k) = \tilde{x}(k|k-1) - K(k)\tilde{y}(k|k-1).$$

The orthogonality principle requires this error to be orthogonal to the observation prediction error, $\mathrm{E}[\tilde{x}(k|k)\tilde{y}^{T}(k|k-1)] = 0$. This gives the filter gain

$$K(k) = P_{xy}(k)P_{yy}^{-1}(k).$$

(5) The state estimation error covariance matrix is then calculated as

$$P(k|k) = P(k|k-1) - K(k)P_{yy}(k)K^{T}(k).$$
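Steps (1) to (5) can be sketched numerically, but this requires an assumed timing convention because the paper's covariance equation is not shown here. The sketch assumes:
- $x(k) = \Phi x(k-1) + w(k-1)$ and $y(k) = H x(k) + v(k)$, with the N sensors stacked;
- $\mathrm{E}[w(k-1)v^{T}(k)] = S$, where the stacked $S = [S_1, \ldots, S_N]$.

Under this convention the cross-covariance of step (2) picks up a $+S$ term. The innovation auto-covariance picks up $HS + S^{T}H^{T}$. All numbers are hypothetical: $\Phi$, $H$, and the generating matrix `M` that produces a valid joint covariance of $w$ and $v$. The function name `centralized_kf_step` is illustrative. This is a sketch, not the authors' implementation.

```python
import numpy as np

def centralized_kf_step(x_prev, P_prev, y, Phi, H, Q, S, R):
    """One centralized Kalman step with correlated noise.

    Assumed convention: x(k) = Phi x(k-1) + w(k-1), y(k) = H x(k) + v(k),
    E[w(k-1) v(k)^T] = S, and R is the full (cross-correlated) stacked covariance.
    """
    # (1) state prediction and its error covariance
    x_pred = Phi @ x_prev
    P_pred = Phi @ P_prev @ Phi.T + Q
    # (2) observation prediction, cross-covariance, innovation covariance
    y_pred = H @ x_pred
    P_xy = P_pred @ H.T + S
    P_yy = H @ P_pred @ H.T + H @ S + S.T @ H.T + R
    # (3)-(4) gain from the orthogonality principle: K = P_xy P_yy^{-1}
    K = np.linalg.solve(P_yy, P_xy.T).T
    x_upd = x_pred + K @ (y - y_pred)
    # (5) updated error covariance
    P_upd = P_pred - K @ P_yy @ K.T
    return x_upd, P_upd, K

# Hypothetical noise model: [w1, w2, v1, v2, v3] = M u, u ~ N(0, I_6)
M = np.array([
    [0.5, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.4, 0.8, 0.0, 0.0, 0.3],
    [0.2, 0.0, 0.0, 0.9, 0.0, 0.3],
    [0.0, 0.5, 0.0, 0.0, 1.0, 0.3],
])
Sigma = M @ M.T
Q, S, R = Sigma[:2, :2], Sigma[:2, 2:], Sigma[2:, 2:]
Phi = np.array([[1.0, 1.0], [0.0, 1.0]])
H = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
P_prev = np.diag([1.0, 0.5])
x_prev = np.array([0.0, 1.0])
y = np.array([0.3, 1.4, 1.1])

x_upd, P_upd, K = centralized_kf_step(x_prev, P_prev, y, Phi, H, Q, S, R)
print(x_upd, np.trace(P_upd))
```

A Monte Carlo run of the assumed error model can serve as a sanity check. In such a run, the empirical covariance of the filtering error should match the returned covariance.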

Issues and Solutions with Centralized Fusion Algorithms:

(1) In multi-sensor systems, sensors distributed across different locations may experience data arrival delays due to network quality issues. If a centralized fusion algorithm is employed, significant delays can occur, particularly when some data cannot be received. In such cases, the centralized algorithm cannot be executed immediately.

(2) To address this challenge, this paper introduces a sequential least squares method that first transforms the correlated noises into mutually uncorrelated ones. Once the transformed process and measurement noises are uncorrelated, the corresponding least squares estimator and the associated error covariance matrix can be derived.

(3) Building on this, this paper develops a sequential least squares estimation algorithm that is equivalent to the centralized fusion algorithm. The observations are assumed to arrive in a sequential order, enabling the implementation of a sequential fusion least squares estimation approach based on the ordered sequence of measurements.

4. A Sequential Least Squares Method for Gradually Decorrelating Time-Series Arrival Data Under Noise Correlation

In this paper, we propose an approach inspired by the Gram–Schmidt orthogonalization method, which integrates stepwise orthogonalization with a sequential fusion least squares algorithm to tackle the multi-sensor fusion filtering problem, where process noise is correlated with measurement noise, and measurement noise exhibits cross-correlation. For generality, we assume that sensor data at the

-th time step arrive sequentially in the order

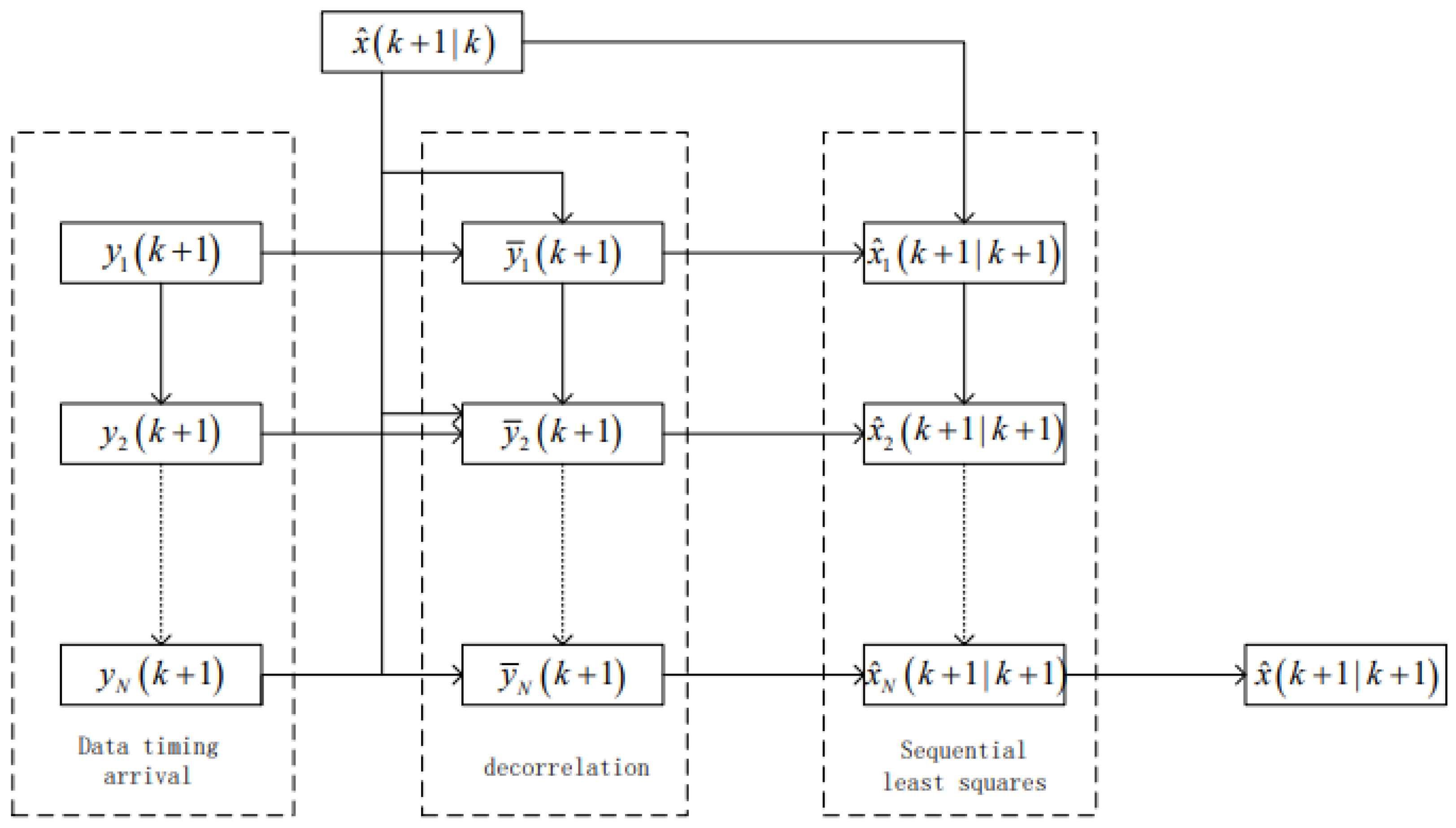

. The flowchart of the proposed algorithm is presented in

Figure 1.

4.1. New Observation Equation for State Based on Predicted Estimates

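The state prediction $\hat{x}(k|k-1)$ can be equivalently expressed as a measurement equation for the state $x(k)$:

$$y_0(k) = H_0 x(k) + v_0(k), \tag{27}$$

where $y_0(k) = \hat{x}(k|k-1)$ and $H_0 = I$. With the prediction error written as $\tilde{x}(k|k-1) = x(k) - \hat{x}(k|k-1)$, the noise term is $v_0(k) = -\tilde{x}(k|k-1)$.

The random variable $v_0(k)$ has zero mean, and its covariance is the prediction error covariance matrix $P(k|k-1)$. It is also correlated with the measurement noises $v_i(k)$, $i = 1, 2, \ldots, N$.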

Remark 1. Interpreting (27) as a measurement equation for the state $x(k)$ changes the nature of the problem. Decoupling the process noise from the measurement noise becomes a unified problem of decorrelating the measurement noises from the noise term of (27), which equals the negative of the state prediction error. This works because the noise term of (27) is a linear combination of the previous filtering error and the process noise. The reformulation thereby addresses issues that could not be resolved in the previous literature.
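The statistics of the noise term in (27) can be made explicit in a few lines under additional assumptions not stated in the extracted text:
- the state equation has the form $x(k) = \Phi(k-1)x(k-1) + w(k-1)$;
- $\mathrm{E}[w(k-1)v_i^{T}(k)] = S_i(k)$;
- the filtering error $\tilde{x}(k-1|k-1)$ is uncorrelated with both $w(k-1)$ and $v_i(k)$.

Writing the noise term as $v_0(k) = -\tilde{x}(k|k-1)$, a sketch of the derivation is:

$$
\begin{aligned}
v_0(k) &= -\tilde{x}(k|k-1) = -\Phi(k-1)\,\tilde{x}(k-1|k-1) - w(k-1),\\
\mathrm{E}\bigl[v_0(k)v_0^{T}(k)\bigr] &= \Phi(k-1)P(k-1|k-1)\Phi^{T}(k-1) + Q(k-1) = P(k|k-1),\\
\mathrm{E}\bigl[v_0(k)v_i^{T}(k)\bigr] &= -\mathrm{E}\bigl[w(k-1)v_i^{T}(k)\bigr] = -S_i(k),\qquad i = 1,\ldots,N.
\end{aligned}
$$

The nonzero cross-covariance in the last line is the correlation that the subsequent decorrelation steps have to remove.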

4.2. Estimation Method for State Based on the Observation Equation of Sensor 1

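When the data from sensor 1 arrive, they must first be decorrelated. The measurement noise of sensor 1 and the noise term of the pseudo-measurement Equation (27) are correlated. This decorrelation is analogous to Gram–Schmidt orthogonalization.

(1) The observation equation of sensor 1 is equivalently reformulated by subtracting from it Equation (27) premultiplied by a correlation matrix that is still to be determined. Combining like terms yields a new observation equation for the state. Its noise term is the measurement noise of sensor 1 minus the correlation matrix times the noise term of (27).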

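(2) The correlation matrix is obtained from the decorrelation constraint, which requires the new noise term to be uncorrelated with the noise term of (27).

Consider the augmented measurement noise that stacks the noise term of (27) and the decorrelated noise of sensor 1. It therefore has zero mean and a block-diagonal covariance matrix.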

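(3) A least squares estimator is established from the pseudo-measurement Equation (27) and the decorrelated observation equation of sensor 1. The estimator is weighted by the inverse of the block-diagonal noise covariance matrix. This yields three results: the fused state estimate after sensor 1, its estimation error, and the corresponding state estimation error covariance matrix.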
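The three steps for sensor 1 can be illustrated with a minimal NumPy sketch. It assumes that the prediction error is correlated with $v_1$ through $S_1 = \mathrm{E}[\tilde{x}(k|k-1)v_1^{T}]$, so the noise term of (27) has cross-covariance $-S_1^{T}$ with $v_1$. The sketch proceeds as follows:
- subtract the Gram–Schmidt projection of sensor 1 onto the pseudo-measurement;
- solve the resulting pair of uncorrelated equations by weighted least squares;
- compare with a one-sensor Kalman update that carries the same cross-covariance.

The names `fuse_sensor1` and `kf_update_corr` and all numbers are hypothetical.

```python
import numpy as np

def kf_update_corr(x_pred, P_pred, y, H, R, S):
    """Reference Kalman update when E[x_err_pred v^T] = S (assumed convention)."""
    P_xy = P_pred @ H.T + S
    P_yy = H @ P_pred @ H.T + H @ S + S.T @ H.T + R
    K = np.linalg.solve(P_yy, P_xy.T).T
    return x_pred + K @ (y - H @ x_pred), P_pred - K @ P_yy @ K.T

def fuse_sensor1(x_pred, P_pred, y1, H1, R11, S1):
    """Decorrelate sensor 1 against the pseudo-measurement, then weighted LS."""
    # Pseudo-measurement: x_pred = x + v0, Cov(v0) = P_pred, E[v1 v0^T] = -S1^T
    C10 = -S1.T
    J1 = C10 @ np.linalg.inv(P_pred)      # Gram-Schmidt coefficient
    z1 = y1 - J1 @ x_pred                 # decorrelated observation
    G1 = H1 - J1                          # its observation matrix (J1 times I)
    D1 = R11 - J1 @ C10.T                 # Cov(v1 - J1 v0); uncorrelated with v0
    # Weighted LS on two uncorrelated equations, in information form
    info = np.linalg.inv(P_pred) + G1.T @ np.linalg.solve(D1, G1)
    rhs = np.linalg.solve(P_pred, x_pred) + G1.T @ np.linalg.solve(D1, z1)
    P1 = np.linalg.inv(info)
    return P1 @ rhs, P1

# Hypothetical one-sensor example
x_pred = np.array([1.0, 0.5])
P_pred = np.array([[2.0, 0.3], [0.3, 1.0]])
H1 = np.array([[1.0, 0.0]])
R11 = np.array([[0.5]])
S1 = np.array([[0.2], [0.1]])
y1 = np.array([1.3])

x_ls, P_ls = fuse_sensor1(x_pred, P_pred, y1, H1, R11, S1)
x_kf, P_kf = kf_update_corr(x_pred, P_pred, y1, H1, R11, S1)
print(np.allclose(x_ls, x_kf), np.allclose(P_ls, P_kf))
```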

4.3. Sequential Least Squares Fusion from Sensor to Sensor l

Assume that the fused state estimate based on the pseudo-measurement Equation (27) and the observations of sensors 1 through l − 1 has been obtained, together with its estimation error covariance matrix.

The goal of this section is to establish a recursive least squares solution for the state after the observation from sensor l arrives, together with the corresponding estimation error covariance matrix.

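(1) The observation equation of sensor l can be equivalently reformulated in the same manner as for sensor 1. The previously decorrelated observation equations, each premultiplied by a correlation matrix to be determined, are subtracted from it. Combining like terms yields a new observation equation for the state. Its noise term is the measurement noise of sensor l minus a linear combination of the previously decorrelated noise terms.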

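(2) The correlation matrices are obtained by solving the decorrelation constraint equations. These require the new noise term to be uncorrelated with each previously decorrelated noise term.

Consequently, the decorrelated noise of sensor l has zero mean and is uncorrelated with all previously decorrelated noises. The covariance matrix of the stacked decorrelated noise therefore remains block diagonal.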

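(3) A sequential least squares fusion estimator is then developed that incorporates the decorrelated observations from sensor 1 through sensor l. The decorrelated noise terms are mutually uncorrelated. Hence the least squares estimate after sensor l and its state estimation error covariance matrix can be computed recursively. The recursion uses the results after sensor l − 1 and the decorrelated observation of sensor l.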

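When l = N, the fusion center obtains the fused state estimate and its estimation error covariance matrix based on all N sensors. These constitute the global estimate of the state at time k.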

The sequential least squares estimator is capable of managing systems with observation delays, and the algorithm does not require the acquisition of all data, enabling it to operate effectively even when some data are missing.

To more clearly illustrate the specific implementation steps of the proposed method, the calculation steps of the sequential algorithm are presented below:

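Step 1: Set the initial state estimate and its estimation error covariance matrix.

Step 2: Compute the one-step state prediction, the state prediction error, and the prediction error covariance matrix.

Step 3: Establish the pseudo-measurement equation (27), which treats the state prediction as a measurement of the state.

Step 4: Decorrelate the observation equation of sensor 1 with respect to Equation (27), resulting in the decorrelated observation equation of sensor 1.

Step 5: Combine the measurement equations from Steps 3 and 4 and solve the resulting system by least squares. This gives the fused estimate after sensor 1, its estimation error, and its estimation error covariance matrix.

Step 6: After fusing the data from sensors 1 to l − 1, the corresponding estimate and its estimation error covariance matrix are available. Decorrelate the observation equation of sensor l in the same manner as in Step 4.

Step 7: Formulate the combined system of equations and solve it by least squares. This gives the fused estimate after sensor l, its estimation error, and its estimation error covariance matrix.

Step 8: If l = N, the global fused estimate and its estimation error covariance matrix at time k are obtained; otherwise, set l to l + 1 and return to Step 6.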
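The steps above can be sketched end to end in NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation:
- it takes $\mathrm{E}[\tilde{x}(k|k-1)v_i^{T}(k)] = S_i$, so the noise term of (27) has cross-covariance $-S_i$ with $v_i$;
- it uses hypothetical numbers;
- the names `sequential_ls`, `kf_update_corr`, `Rblk`, and `Ss` are illustrative.

Each arriving sensor is decorrelated against all previously decorrelated stages (Gram–Schmidt). It is then folded in by an information-form least squares update. The result is compared with a centralized update on the stacked measurement, for two arrival orders.

```python
import numpy as np

def kf_update_corr(x_pred, P_pred, y, H, R, S):
    """Centralized update with S = E[x_err_pred v^T] and the full stacked R."""
    P_xy = P_pred @ H.T + S
    P_yy = H @ P_pred @ H.T + H @ S + S.T @ H.T + R
    K = np.linalg.solve(P_yy, P_xy.T).T
    return x_pred + K @ (y - H @ x_pred), P_pred - K @ P_yy @ K.T

def sequential_ls(x_pred, P_pred, ys, Hs, Rblk, Ss, order):
    """Sequential LS fusion with stepwise (Gram-Schmidt) decorrelation.

    Stage 0 is the pseudo-measurement x_pred = x + v0, with v0 = -x_err_pred.
    Stage i+1 is sensor i. Rblk[i][j] = E[v_i v_j^T], Ss[i] = E[x_err_pred v_i^T].
    """
    def raw_cov(a, b):                       # E[n_a n_b^T] of the original noises
        if a == 0 and b == 0:
            return P_pred
        if a == 0:
            return -Ss[b - 1]
        if b == 0:
            return -Ss[a - 1].T
        return Rblk[a - 1][b - 1]

    done = [0]                               # stages processed so far, in order
    D = {0: P_pred}                          # covariances of decorrelated noises
    J = {0: {}}                              # J[a][b]: coefficient of stage a on stage b
    zt, Gt = {0: x_pred}, {0: np.eye(len(x_pred))}
    x, info = x_pred.copy(), np.linalg.inv(P_pred)
    for i in order:                          # sensors in order of arrival
        a = i + 1
        C = {}                               # C[b] = E[n_a ntilde_b^T]
        for b in done:
            C[b] = raw_cov(a, b).copy()
            for m, J_bm in J[b].items():
                C[b] -= C[m] @ J_bm.T
        J[a] = {b: C[b] @ np.linalg.inv(D[b]) for b in done}
        z, G, Dn = ys[i].copy(), Hs[i].copy(), raw_cov(a, a).copy()
        for b, J_ab in J[a].items():         # remove projections on earlier stages
            z -= J_ab @ zt[b]
            G -= J_ab @ Gt[b]
            Dn -= J_ab @ D[b] @ J_ab.T
        W = np.linalg.inv(Dn)
        rhs = info @ x + G.T @ W @ z         # information-form LS update
        info = info + G.T @ W @ G
        x = np.linalg.solve(info, rhs)
        zt[a], Gt[a], D[a] = z, G, Dn
        done.append(a)
    return x, np.linalg.inv(info)

# Hypothetical noise model: [w1, w2, v1, v2, v3] = M u, u ~ N(0, I_6)
M = np.array([
    [0.5, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.4, 0.8, 0.0, 0.0, 0.3],
    [0.2, 0.0, 0.0, 0.9, 0.0, 0.3],
    [0.0, 0.5, 0.0, 0.0, 1.0, 0.3],
])
Sigma = M @ M.T
Q, S_all, R_all = Sigma[:2, :2], Sigma[:2, 2:], Sigma[2:, 2:]
Phi = np.array([[1.0, 1.0], [0.0, 1.0]])
P_pred = Phi @ np.diag([1.0, 0.5]) @ Phi.T + Q
x_pred = np.array([0.0, 1.0])
Hs = [np.array([[1.0, 0.0]]), np.array([[0.0, 1.0]]), np.array([[1.0, 1.0]])]
ys = [np.array([0.3]), np.array([1.4]), np.array([1.1])]
Ss = [S_all[:, [i]] for i in range(3)]
Rblk = [[R_all[i:i + 1, j:j + 1] for j in range(3)] for i in range(3)]

x_c, P_c = kf_update_corr(x_pred, P_pred, np.concatenate(ys), np.vstack(Hs), R_all, S_all)
x_s, P_s = sequential_ls(x_pred, P_pred, ys, Hs, Rblk, Ss, order=[0, 1, 2])
x_d, P_d = sequential_ls(x_pred, P_pred, ys, Hs, Rblk, Ss, order=[2, 0, 1])
print(np.allclose(x_c, x_s), np.allclose(P_c, P_s), np.allclose(x_c, x_d), np.allclose(P_c, P_d))
```

In this example, a different arrival order leaves the final estimate unchanged. This is consistent with the claim that measurements can be fused in whatever order they reach the fusion center.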

5. Performance Equivalence Analysis

This section establishes the equivalence between the proposed sequential least squares recursive algorithm and the centralized Kalman filtering algorithm in the presence of correlated noise. It is shown that their estimation error covariance matrices are identical, which means that their estimation performance is equivalent.

The proof of the equivalence between the centralized Kalman filter under correlated noise and the sequential recursive least squares method with stepwise decorrelation proceeds as follows.

First, take the centralized (stacked) measurement noise after decorrelation as an example, and establish its relationship with the centralized measurement noise before decorrelation. Then, re-express all the parameters of the centralized Kalman filter under correlated noise from Section 3 in terms of the decorrelated parameters. Likewise, the stacked observation vector and observation matrix after decorrelation can be related to their counterparts before decorrelation.

Next, substitute Equations (56), (59), and (60) into Equations (19), (20), (25), and (26). This re-expresses four quantities in terms of the decorrelated parameters: the cross-covariance matrix between the state prediction error and the observation prediction error, the auto-covariance matrix of the observation prediction error, the gain matrix, and the estimation error covariance matrix.

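Using the matrix inversion lemma in reference [28], Equation (64) can be rewritten in an equivalent form. According to Equation (49), the estimation error covariance matrix of the sequential algorithm can be expressed in the same form. Applying Equation (49) recursively by analogy then yields the estimation error covariance matrix after all sensors have been fused. It can be rigorously proved that this matrix is identical to the estimation error covariance matrix of the centralized Kalman filter.

Therefore, the two algorithms exhibit identical performance under noise correlation. The sequential least squares algorithm processes the measurements in their order of arrival, whereas the centralized fusion Kalman filtering algorithm is based on the augmented measurement.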
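The core algebraic reason for the equivalence can be sketched as follows. The sketch assumes that the stacked original noise $n$ and the stacked decorrelated noise $\tilde{n}$ are related by a unit lower block-triangular matrix $L$, as a Gram–Schmidt construction implies. The symbols $G$, $z$, $L$, $D$, $\Sigma$ are introduced here for illustration only. Let $z$ stack the pseudo-measurement (27) and all sensor observations:

$$
\begin{aligned}
& z = Gx + n,\qquad n = L\tilde{n},\qquad \operatorname{Cov}(n) = \Sigma,\qquad \operatorname{Cov}(\tilde{n}) = D = \operatorname{blkdiag}(D_0, D_1, \ldots, D_N),\\
& \Sigma = LDL^{T},\qquad \tilde{z} = L^{-1}z,\qquad \tilde{G} = L^{-1}G,\\
& \tilde{G}^{T}D^{-1}\tilde{G} = G^{T}L^{-T}D^{-1}L^{-1}G = G^{T}\Sigma^{-1}G,\qquad
\tilde{G}^{T}D^{-1}\tilde{z} = G^{T}\Sigma^{-1}z.
\end{aligned}
$$

Because $D$ is block diagonal, $\tilde{G}^{T}D^{-1}\tilde{G} = \sum_{l=0}^{N}\tilde{G}_l^{T}D_l^{-1}\tilde{G}_l$ splits into per-sensor terms that can be accumulated in arrival order. The sequential least squares estimate therefore coincides with the generalized least squares estimate built from all stacked equations at once. The remaining step is to check one more identity. With the prediction treated as a pseudo-measurement, this generalized least squares estimate and its covariance $(G^{T}\Sigma^{-1}G)^{-1}$ must coincide with the centralized Kalman update.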

6. Simulation Examples

Consider a three-sensor target tracking system with correlated noise, described by (71). The state vector consists of the displacement and the velocity of the target, and each of the three sensors has its own measurement matrix. The two underlying noise sequences are uncorrelated zero-mean white noises with given variances. The sampling time, the remaining model parameters, and the initial state and its covariance are set to fixed values in the simulations.

The fusion processor sequentially incorporates the measurements from the three sensors described in (71). At each stage of the sequential least squares estimator, the corresponding estimate and its error covariance are computed and compared with those of the centralized algorithm. This comparison demonstrates the equivalence of the two algorithms in simulation and shows that both achieve the same estimation accuracy.

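The root mean square error (RMSE) is used to evaluate the performance of the algorithm. It is defined as

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{j=1}^{N}\bigl(e^{(j)}\bigr)^{2}},\qquad e^{(j)} = x^{(j)} - \hat{x}^{(j)},$$

where $e^{(j)}$ represents the estimation error in the j-th run, $x^{(j)}$ is the true value of the state, $\hat{x}^{(j)}$ denotes the estimated state, and N represents the number of simulation runs.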
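The RMSE definition can be computed directly from Monte Carlo output. The sketch below assumes that the errors of one state component are stored as an array of shape (runs, time steps). It returns one RMSE value per time step by averaging over the runs. The function name `rmse` and the toy data are hypothetical.

```python
import numpy as np

def rmse(x_true, x_est):
    """Per-time-step RMSE of one state component over Monte Carlo runs.

    x_true, x_est: arrays of shape (runs, steps). Returns shape (steps,).
    """
    err = np.asarray(x_true, dtype=float) - np.asarray(x_est, dtype=float)
    return np.sqrt(np.mean(err ** 2, axis=0))

# Two hypothetical runs of a two-step trajectory
x_true = [[1.0, 2.0], [1.0, 2.0]]
x_est = [[1.5, 2.0], [0.5, 1.0]]
print(rmse(x_true, x_est))
```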

The accuracy improvement of each sensor is calculated with the root mean square error of the first sensor as the reference, where $\mathrm{RMSE}_i$ denotes the root mean square error for each sensor.

Remark 2. In the tables, “×” indicates that the RMSE of this sensor serves as the reference, so no accuracy improvement is reported for it.
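The improvement formula itself is not reproduced above. A common choice, assumed here rather than taken from the paper, is the percentage reduction of RMSE relative to the reference sensor: $(\mathrm{RMSE}_1 - \mathrm{RMSE}_i)/\mathrm{RMSE}_1 \times 100\%$. The sketch below applies that assumed formula and marks the reference entry with “×”, following Remark 2. The function names and RMSE values are hypothetical.

```python
def accuracy_improvement(rmse_ref, rmse_i):
    """Assumed formula: percentage RMSE reduction relative to the reference."""
    return 100.0 * (rmse_ref - rmse_i) / rmse_ref

def improvement_row(rmses):
    """First entry is the reference sensor, marked with '×' as in Remark 2."""
    ref = rmses[0]
    return ["×"] + [f"{accuracy_improvement(ref, r):.2f}%" for r in rmses[1:]]

# Hypothetical RMSE values for three fusion stages
print(improvement_row([2.0, 1.5, 1.2]))
```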

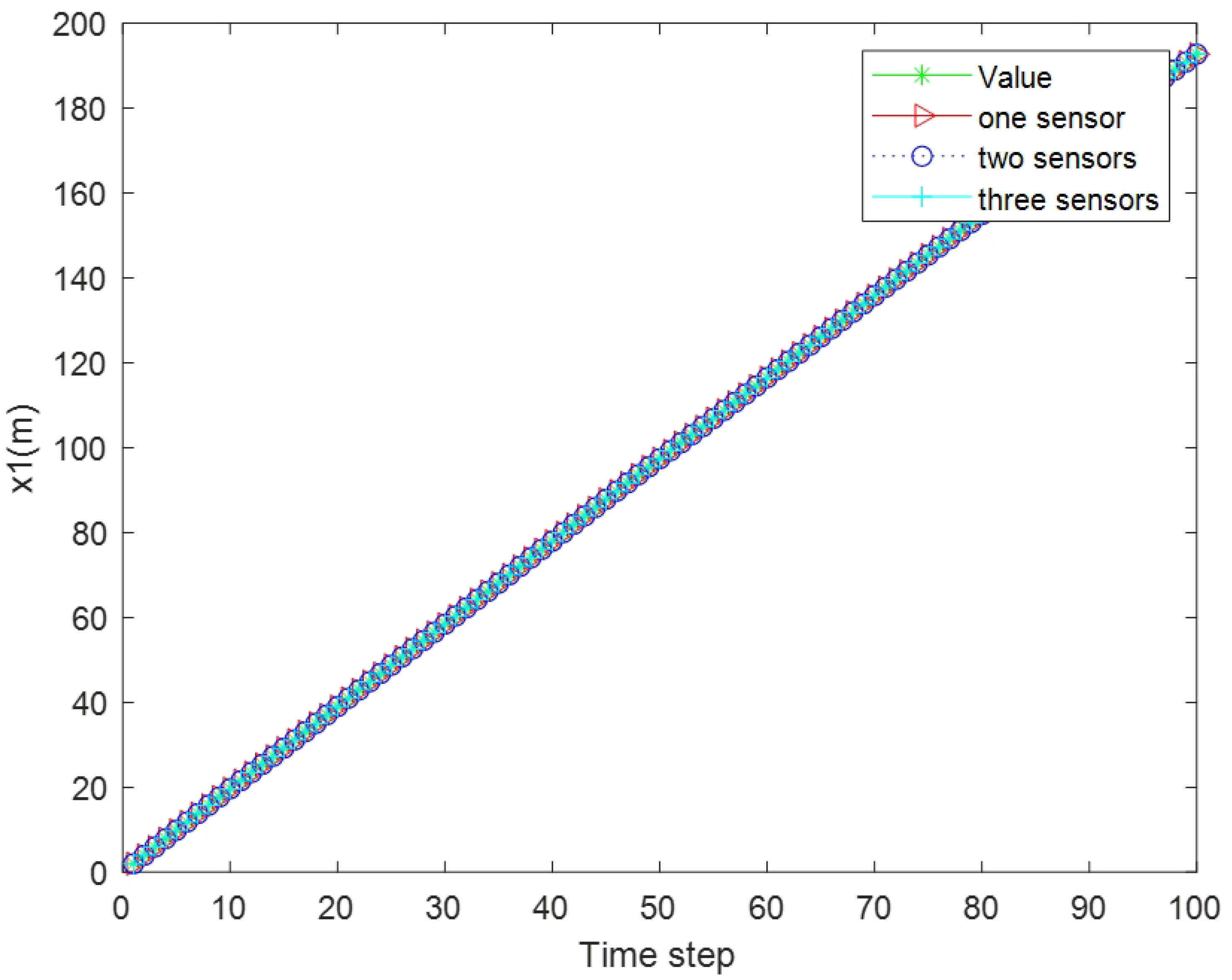

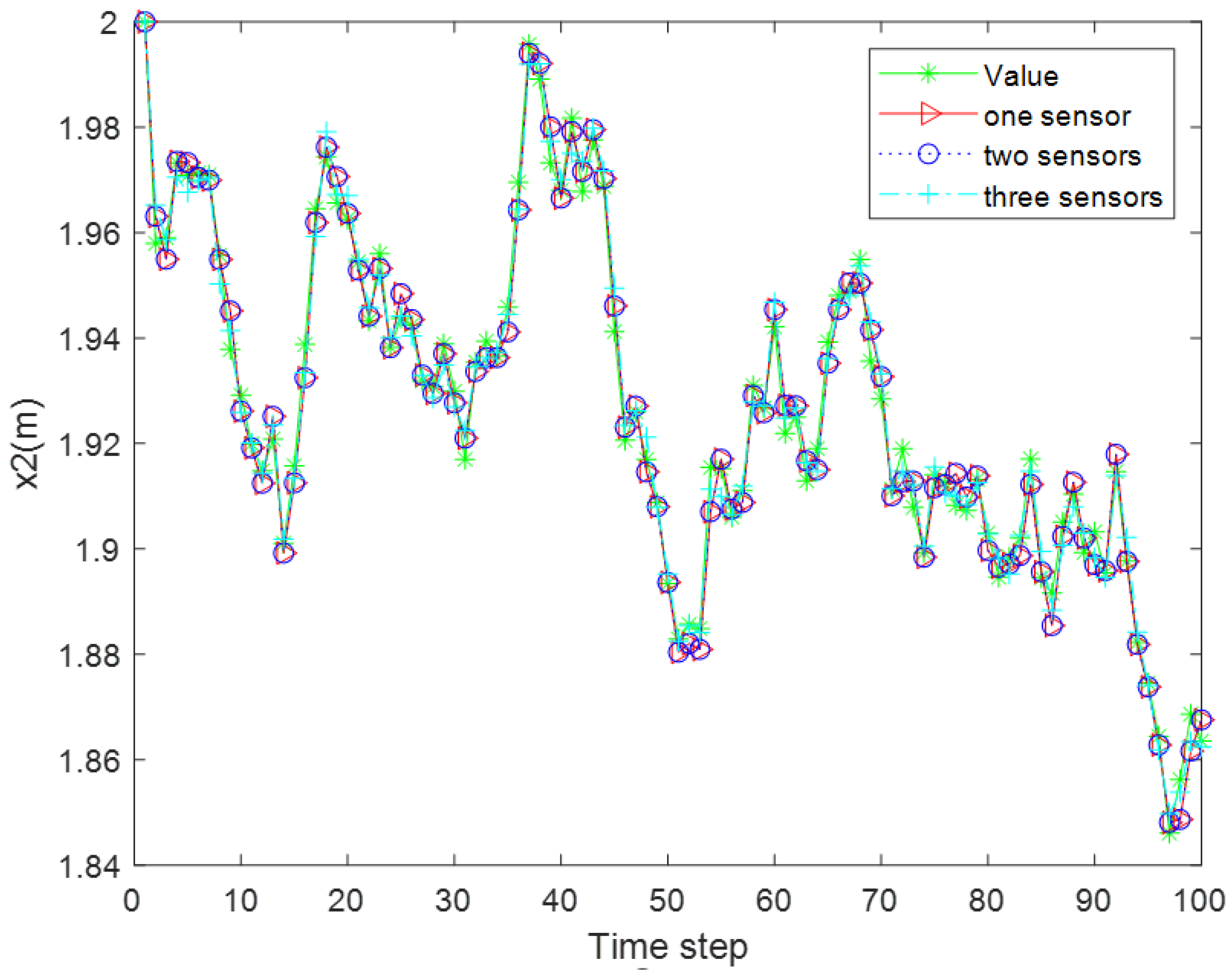

Simulation I: The root mean square errors in Table 1 show that the accuracy of the estimated state variables is improved, which demonstrates the effectiveness of the proposed algorithm. Specifically, Figures 2 and 3 show the tracking performance, while Figures 4 and 5 illustrate the estimation error curves.

Simulation II: Figures 6 and 7 compare the tracking performance of the proposed sequential least squares estimation algorithm with that of the centralized fusion Kalman filtering algorithm. The estimated curves of the two algorithms overlap completely. This indicates that the proposed algorithm has the same estimation accuracy as the centralized fusion algorithm.

Simulation III: This comparison experiment evaluates the effect of considering versus ignoring noise correlation by comparing the estimation error covariance matrices in the two cases. The simulation results show that the proposed algorithm, which accounts for noise correlation, yields a smaller estimation error covariance matrix and thus superior performance. Table 2 further compares the mean absolute errors.
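The direction of this result can be checked with a small linear-algebra sketch of one fusion step. The Gauss–Markov theorem guarantees that the estimator designed with the true correlated noise covariance is never worse than one designed while ignoring the correlations. The sketch computes the error covariance each estimator actually achieves when the noise truly is correlated. "Ignoring correlation" is modeled here, by assumption, as dropping the process/measurement coupling and the off-diagonal inter-sensor terms. The model, the generating matrix `M`, and all numbers are hypothetical.

```python
import numpy as np

def true_error_cov(G, Sigma_true, Sigma_design):
    """Error covariance actually achieved by the weighted LS estimator designed
    with Sigma_design when the noise really has covariance Sigma_true."""
    W = np.linalg.inv(Sigma_design)
    A = np.linalg.solve(G.T @ W @ G, G.T @ W)    # x_hat = A z; unbiased since A G = I
    return A @ Sigma_true @ A.T

# Hypothetical noise model: [w1, w2, v1, v2, v3] = M u, u ~ N(0, I_6)
M = np.array([
    [0.5, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.4, 0.8, 0.0, 0.0, 0.3],
    [0.2, 0.0, 0.0, 0.9, 0.0, 0.3],
    [0.0, 0.5, 0.0, 0.0, 1.0, 0.3],
])
Sigma_wv = M @ M.T
Q, S_all, R_all = Sigma_wv[:2, :2], Sigma_wv[:2, 2:], Sigma_wv[2:, 2:]
Phi = np.array([[1.0, 1.0], [0.0, 1.0]])
P_pred = Phi @ np.diag([1.0, 0.5]) @ Phi.T + Q
H = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])

n = 2
G = np.vstack([np.eye(n), H])                             # [pseudo-measurement; sensors]
Sigma = np.block([[P_pred, -S_all], [-S_all.T, R_all]])   # true joint noise covariance

# "Ignoring correlation": drop S and the off-diagonal R_ij, keep the variances
Sigma_ign = np.zeros_like(Sigma)
Sigma_ign[:n, :n] = P_pred
Sigma_ign[n:, n:] = np.diag(np.diag(R_all))

P_opt = true_error_cov(G, Sigma, Sigma)
P_ign = true_error_cov(G, Sigma, Sigma_ign)
print(np.trace(P_opt), np.trace(P_ign))
```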

Simulation IV: To illustrate how the estimation error covariance of the proposed algorithm varies with the correlation coefficient, the correlation coefficients of the first two sensors were kept unchanged while that of the third sensor was varied.

Table 3 presents the trace of the error covariance matrix as the correlation coefficient changes.

The simulation results show that as the correlation coefficient increases, the trace of the estimation error covariance matrix progressively decreases, that is, the estimation accuracy improves. This trend further supports the robustness and effectiveness of the proposed algorithm.

The simulation results demonstrate that the proposed algorithm can effectively handle multi-sensor systems with two kinds of correlation: cross-correlation between the process and measurement noises, and mutual correlation among the measurement noises. Under correlated noise, the algorithm attains the same accuracy as the centralized Kalman filtering algorithm. This confirms that the proposed filtering strategy is optimal in the linear minimum mean square error (LMMSE) sense. In addition, the algorithm is computed recursively via sequential filtering, which supports robust performance and practical applicability in real-time scenarios.