Abstract

A new predictor for functional time series (FTS) is considered. It is based on the asymmetric weighting function of quantile regression. More precisely, we assume that the FTS is generated from a single-index model that permits the observation of endogenous–exogenous variables by combining the nonparametric model with a linear one. In parallel, the L1-modal predictor is estimated using the M-estimation of the derivative of the conditional quantile of the generated FTS. In the mathematical part, we prove the almost complete convergence of the constructed estimator, and we determine its convergence rate. An empirical analysis is performed to demonstrate the applicability of the estimator and to evaluate the impact of the different structures involved in the smoothing approach. This analysis is carried out using simulated and real data. Finally, the regressive nature of the constructed predictor allows it to provide a robust instantaneous predictor for environmental data.

Keywords:

L1-modal regression; robust estimation; quantile regression; functional data; kernel smoothing; single index; additive model

MSC:

62G08; 62G10; 62G35; 62G07; 62G32; 62G30; 62H12

1. Introduction

Forecasting the interaction between dependent functional random variables is an important topic of applied data analysis. This issue has been studied by many authors in the past while considering the conditional mean. Alternatively, in this paper, we use modal regression, which is more informative than the conditional mean. The importance of modal regression is justified by its strong link to the conditional distribution (see [] for more motivation on the conditional mode prediction). Thus, prediction by combining the conditional mode (CM) with the functional single index algorithm (FSI) is a prominent topic in functional data analysis (FDA).

It is well known that the FSI structure is crucial for increasing the performance of nonparametric prediction by using the projection of the exogenous variable over a given functional. The popularity of the FSI structure comes from the simplicity of its linear part and the flexibility of the nonparametric techniques. The first investigations on this topic were developed by [,]. We refer also to [,,] for a list of references. While the cited references consider the vectorial case, we focus in this paper on the functional case. In fact, single-index regression was considered in functional statistics by [,]. They studied the Nadaraya–Watson (NW) estimator of the conditional mean under the FSI setting. We refer to [] for the conditional median using the FSI approach; there, the slope component is estimated using a B-spline approach. Moreover, the nonparametric estimation of the unknown link function can be found in []. The authors of [] estimate the compact single functional index with a specific coefficient function. The nonparametric estimation of the conditional distribution under the FSI assumption was studied by []. The multi-index case was introduced by [], who established the asymptotic property of the Nadaraya–Watson estimator of the unknown parameters in the functional model. Readers interested in the FSI topic may refer to [,] for conditional density estimation when the input variables belong to a Hilbert space. We cite [,,] for an overview of functional semi-parametric modeling.

The second component of this work is the estimation of modal regression. The functional CM was considered by []. Since the publication of the cited monograph, a great number of papers have explored the relationship between a functional variable and a scalar output. For example, ref. [] treats M-regression, ref. [] considers local linear regression, and [] introduces the functional relative error. In this context, nonparametric CM estimation has been studied by many authors. For instance, [] stated the asymptotic normality of the NW estimator of the CM function. The result was obtained in the case of independent and identically distributed observations. In [], the authors extended this asymptotic normality to the dependent case. We refer to [] for the complete consistency of the NW estimator of the spatio-functional mode. The consistency of this estimator was obtained by []. The NW estimator of the mode function in a functional ergodic time series case was introduced by []. They treated the almost sure consistency when the response variable is missing at random (MAR). Ref. [] provided the local linear estimator of the CM model, together with the asymptotic distribution of the constructed estimator.

Alternatively, in contrast to the previously cited works, we consider in this paper a new estimation method based on L1 techniques. The latter defines the modal predictor as the minimizer of the derivative of the quantile function. This approach enhances the robustness properties of modal regression. The asymptotic property of the new predictor is developed for a functional time series generated by the FSI structure. The obtained results confirm the main advantages of the constructed estimator in both robustness and accuracy. In particular, it combines the advantages of the different components involved in the estimator. Indeed, the single-index modeling improves the accuracy of the prediction, and the L1-approach enhances its robustness. It should be noted that the novelty of this work is the treatment of the dependent case.

We have modeled the dependency aspect using the strong mixing condition. From a practical point of view, developing the proposed model for strong mixing FTS allows us to extend its scope of application. Indeed, it is well known that the strong mixing assumption is weaker than other classical mixing conditions (for instance, φ-mixing and β-mixing both imply it), and that standard time series models (such as AR, ARIMA and ARCH) satisfy it under certain additional conditions (see []). Furthermore, the computational ability of the estimator is highlighted using real and simulated data. Specifically, the usefulness of the new predictor has been examined using environmental data (daily temperatures). More precisely, we compare it to the FSI-standard CM model. Finally, let us point out that the robustness property is fundamental for functional time series prediction, as it permits controlling the deviations caused by the complicated structure of the data, linked to its functional nature and/or its strong correlation. Hence the importance of developing a robust version of the functional regression model in FTS data analysis.

The paper is organized as follows. We introduce the model in the following section. Section 3 is devoted to the main result. The applicability of the estimator to artificial and real data is treated in Sections 4 and 5. In Section 6, we give some concluding remarks. The proofs of the technical results are deferred to Section 7.

2. Robust Estimator of the Modal-Regression in FSI Structure

Let be a couple of random variables in . The functional space is Hilbertian and has an inner product . Let be n a sample of stationary vectors that have the same distribution as . In a functional single index structure (FSI), the input–output S and T are related

Furthermore, the identifiability of the FSI structure has been studied by []. It is ensured by assuming the differentiability of the conditional expectation and the functional index such that , where is the first vector of an orthonormal basis of . In fact, the FSI approach is very efficient in reducing the effect of the infinite dimensionality of the estimator. Thus, we aim in this paper to improve the convergence of the standard functional modal regression by considering the FSI structure. For this purpose, we fix a location point v in , and we set as a neighborhood of v. In addition, the conditional distribution function of S given , denoted by , is strictly increasing and has a continuous density with respect to Lebesgue’s measure over . The conditional mode in FSI is the maximizer of the conditional probability density function () over a compact K

Alternatively, the function of can be related to the quantile regression by using

where is the conditional quantile of order q given . Therefore, the function satisfies

with

Thus, the robust estimator of the FSI mode is

where

with and are, respectively, estimators of the conditional quantile and its derivative. Firstly, the derivative is estimated by

where is a sequence of positive numbers in . Secondly, the conditional quantile is estimated by the solution with respect to t of the optimization problem

where . Finally, the robust estimator of the FSI mode is obtained by estimating

where

with is a kernel map and is a sequence of positive in that tends to zero as n tends to infinity. As mentioned previously, the main purpose of this work is to establish the asymptotic property under the strong mixing assumption. Recall that a strictly stationary sequence of random variables is strongly mixing if

where is the σ-algebra generated by .
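For reference, the strong mixing coefficient is usually written as follows. The notation ($Z_i$, $\alpha(n)$, $\mathcal{F}_i^k$) is an assumption of this sketch and may differ from the symbols used elsewhere in the paper.

```latex
\alpha(n) \;=\; \sup_{k \ge 1}\; \sup\Bigl\{\, \bigl|\mathbb{P}(A \cap B) - \mathbb{P}(A)\,\mathbb{P}(B)\bigr| \;:\;
A \in \mathcal{F}_{1}^{k},\; B \in \mathcal{F}_{k+n}^{\infty} \Bigr\}
\;\longrightarrow\; 0 \quad \text{as } n \to \infty,
\qquad
\mathcal{F}_{i}^{k} \;=\; \sigma\bigl(Z_j,\; i \le j \le k\bigr).
```

Geometric strong mixing corresponds to $\alpha(n) \le C\,\rho^{\,n}$ for some $C > 0$ and $0 < \rho < 1$, and arithmetic (polynomial) mixing to $\alpha(n) \le C\,n^{-a}$ for some $a > 0$.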

The importance of the mixing assumption comes from the fact that it permits many practical applications, especially in the fields of economics and finance. For example, several time series models satisfy the α-mixing condition, such as ARMA models, threshold models, EXPAR models, ARCH models, GARCH models, and bilinear Markovian models, which are geometrically strongly mixing (see [,] for more examples). In this paper, we focus on the almost complete convergence, which is defined below.

Definition 1.

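Let $(z_n)_{n \in \mathbb{N}}$ be a sequence of real random variables; we say that $(z_n)$ converges almost completely (a.co.) to zero if and only if, for all $\epsilon > 0$, $\sum_{n=1}^{\infty} \mathbb{P}(|z_n| > \epsilon) < \infty$. Moreover, let $(u_n)_{n \in \mathbb{N}}$ be a sequence of positive real numbers; we say that $z_n = O(u_n)$ a.co. if and only if there exists $\epsilon_0 > 0$ such that $\sum_{n=1}^{\infty} \mathbb{P}(|z_n| > \epsilon_0 u_n) < \infty$. This kind of convergence implies both almost sure convergence and convergence in probability.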
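The construction of this section can be illustrated numerically. Since $F(Q(q)) = q$ implies $f(Q(q))\,Q'(q) = 1$, maximizing the conditional density amounts to minimizing the derivative of the conditional quantile. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: the functional covariate is assumed to be already projected onto the index (the array `index_values`), the quantile is taken as a kernel-weighted minimizer of the check loss, and its derivative is approximated by a central finite difference with step `b`. The function names, the quadratic kernel and the grid of quantile orders are all hypothetical choices.

```python
import numpy as np


def quadratic_kernel(u):
    """Quadratic kernel supported on [0, 1] (an assumed choice of kernel)."""
    u = np.asarray(u, dtype=float)
    return np.where((u >= 0.0) & (u <= 1.0), 1.5 * (1.0 - u**2), 0.0)


def weighted_quantile(s, w, q):
    """Weighted sample quantile of order q.

    It minimizes t -> sum_i w_i * rho_q(s_i - t), where
    rho_q(y) = y * (q - 1{y < 0}) is the asymmetric check loss.
    """
    order = np.argsort(s)
    s_sorted, w_sorted = s[order], w[order]
    cum = np.cumsum(w_sorted) / np.sum(w_sorted)
    idx = np.searchsorted(cum, q, side="left")
    return s_sorted[min(idx, s_sorted.size - 1)]


def l1_modal_predictor(index_values, s, v_index, h, b=0.1, n_grid=81):
    """Return (mode estimate, selected quantile order) at the index value v_index."""
    index_values = np.asarray(index_values, dtype=float)
    s = np.asarray(s, dtype=float)
    w = quadratic_kernel(np.abs(index_values - v_index) / h)
    if w.sum() <= 0.0:
        raise ValueError("no observation has positive kernel weight; increase h")
    # Orders q for which both q - b and q + b stay strictly inside (0, 1).
    q_grid = np.linspace(b + 0.01, 1.0 - b - 0.01, n_grid)
    # Central finite difference of the estimated conditional quantile.
    dq = np.array([
        (weighted_quantile(s, w, q + b) - weighted_quantile(s, w, q - b)) / (2.0 * b)
        for q in q_grid
    ])
    q_star = q_grid[int(np.argmin(dq))]
    return weighted_quantile(s, w, q_star), q_star


# Illustrative use on synthetic data (values are not taken from the paper).
rng = np.random.default_rng(0)
proj = rng.uniform(-1.0, 1.0, size=500)            # stands for <theta, T_i>
resp = np.sin(proj) + rng.laplace(scale=0.3, size=500)
mode_hat, q_star = l1_modal_predictor(proj, resp, v_index=0.2, h=0.3)
```

Only one kernel and one bandwidth `h` enter this sketch; the step `b` plays the role of the sequence used to differentiate the quantile.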

3. Main Results

Firstly, we start by denoting C or as some positive constants, and we set

Now, we list some required conditions that are necessary in deriving the almost complete convergence of of .

- (AS1)

- . Furthermore, as .

- (AS2)

- The functions is of class and such that the following Lipschitz’s condition is satisfied:

- (AS3)

- The sequence satisfies and

- (AS4)

- is a function with support such that .

- (AS5)

- There exists such that where

The assumed conditions (AS1)–(AS4) are classic in nonparametric functional statistics. Assumption (AS1) is fundamental in this kind of data analysis. The function can be explicitly defined for several continuous processes (see []). Furthermore, the regularity condition (AS2) is assumed to identify the functional space of the model. Such an assumption has a great impact on the bias component in the convergence rate of . More precisely, we point out that the conditions (AS4)–(AS5) are linked to the kernel and the bandwidth . Such technical assumptions are used to simplify the proof of the consistency of the estimator . In fact, the assumptions are less restrictive than those used in the previous studies of modal prediction in functional data analysis. More precisely, the main advantage of the present contribution is the use of one kernel instead of the two kernels required by the standard approach.

Theorem 1.

Under assumptions (AS1)–(AS4), and if we have:

where .

The obtained convergence rate reflects the impact of the strong correlation of the data on the estimation quality. In particular, this aspect is controlled through the function . Not surprisingly, the level of dependency has a negative effect on estimation quality: the convergence rate becomes slower when the level of dependency increases. Additionally, the obtained convergence rate highlights the importance of the choice of the smoothing parameter and the functional index . The expression of the convergence rate provides a preliminary idea in this direction. Indeed, it suffices to select and by minimizing the asymptotic quantity . Of course, the practical use of this rule requires the plug-in estimation of the unknown functions in this expression, which is not trivial. For this reason, empirical rules based on the cross-validation criterion are more suitable for practical purposes.

4. Computational Study

This section is devoted to analyzing the computational ability of the constructed estimator. The first aim is to check its ease of implementation, and the second is to evaluate its behavior in terms of accuracy and robustness. This analysis is performed through a comparison between the new estimator and the standard one. More precisely, we examine the effect of dependency, as well as the outlier resistance of both estimators. For this purpose, we generate dependent functional regressors as a functional ARCH process of order p, whose conditional volatility depends on the following kernel:

Recall that the functional ARCH of order p is defined by

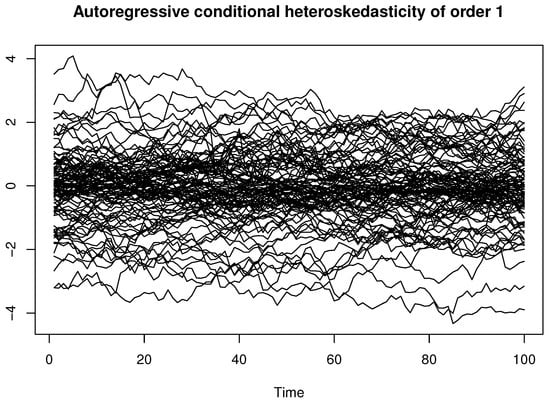

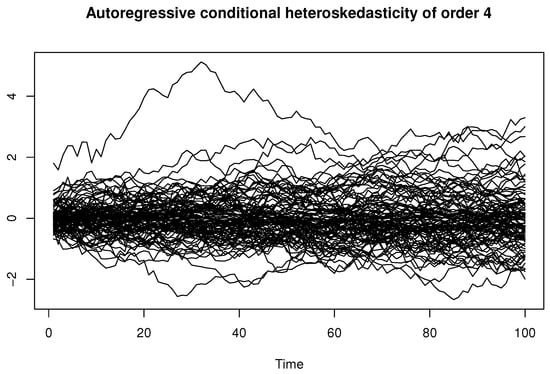

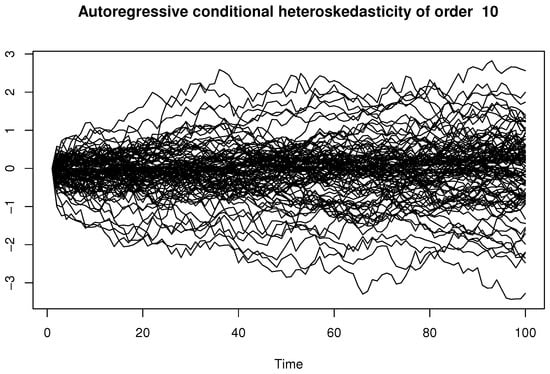

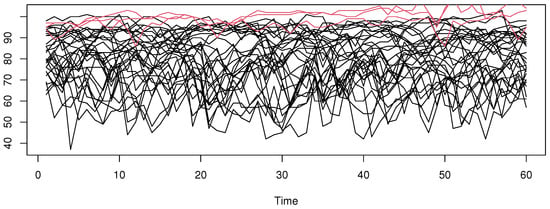

where the innovation is an i.i.d. error generated from an Ornstein–Uhlenbeck process and the constant coefficient . The kernel in Equation (6) is the default choice in the FTSgof R-package. Of course, the correlation level is adjusted via the parameter p. In the cited package, the correlation is quantified through the function fACF. The different shapes of the generated functional time series are given in Figure 1, Figure 2 and Figure 3.

Figure 1.

A sample of 100 curves from FARCH(1).

Figure 2.

A sample of 100 curves from FARCH(4).

Figure 3.

A sample of 100 curves from FARCH(10).
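The data-generating step can be sketched as follows. This is a hedged illustration, not the FTSgof code: it assumes the usual functional ARCH(p) form $X_i(t) = \sigma_i(t)\,\varepsilon_i(t)$ with $\sigma_i^2(t) = \delta + \sum_{j=1}^{p} \int_0^1 \alpha_j(t,s)\, X_{i-j}^2(s)\, ds$, a hypothetical kernel $\alpha_j(t,s) = (c/p)\, t(1-t)\, s(1-s)$, and stationary Ornstein–Uhlenbeck innovations with unit variance. The constants `delta`, `scale` and `theta`, and the function names, are assumptions made for this example.

```python
import numpy as np


def ou_paths(n_curves, grid, theta=1.0, rng=None):
    """Stationary Ornstein-Uhlenbeck paths with unit variance on the given grid."""
    rng = np.random.default_rng() if rng is None else rng
    eps = np.empty((n_curves, grid.size))
    eps[:, 0] = rng.standard_normal(n_curves)
    for k, step in enumerate(np.diff(grid), start=1):
        rho = np.exp(-theta * step)  # exact autocorrelation over one grid step
        noise = rng.standard_normal(n_curves)
        eps[:, k] = rho * eps[:, k - 1] + np.sqrt(1.0 - rho**2) * noise
    return eps


def simulate_farch(n_curves, p=1, n_grid=50, delta=0.01, scale=12.0,
                   burn_in=50, seed=0):
    """Simulate n_curves discretized curves from a functional ARCH(p) sketch."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, n_grid)
    bump = grid * (1.0 - grid)
    # Hypothetical kernel alpha_j(t, s), shared by the p lags and scaled by 1/p
    # so that the total weight of the past stays the same for every order p.
    alpha = (scale / p) * np.outer(bump, bump)
    total = n_curves + burn_in
    eps = ou_paths(total, grid, rng=rng)
    x = np.zeros((total, n_grid))
    for i in range(total):
        sigma2 = np.full(n_grid, delta)
        for j in range(1, min(p, i) + 1):
            # Riemann approximation of the integral of alpha(t, s) * X_{i-j}(s)^2 ds
            sigma2 += alpha @ (x[i - j] ** 2) / n_grid
        x[i] = np.sqrt(sigma2) * eps[i]
    return grid, x[burn_in:]


grid, curves = simulate_farch(100, p=4)  # 100 curves, as in the displayed samples
```

Increasing `p` lets each curve depend on a longer past, which is how the dependency level is varied in this sketch.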

For the response variable S, we use the heteroscedastic single-index model defined as

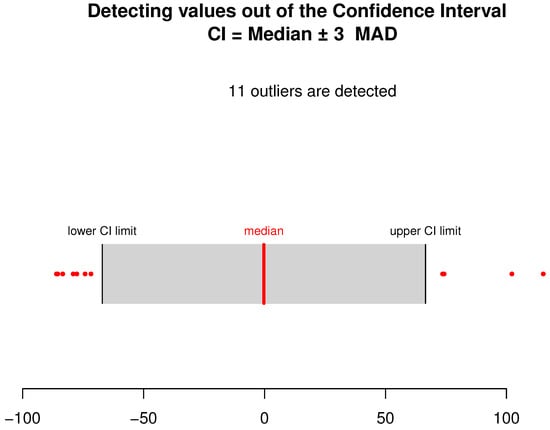

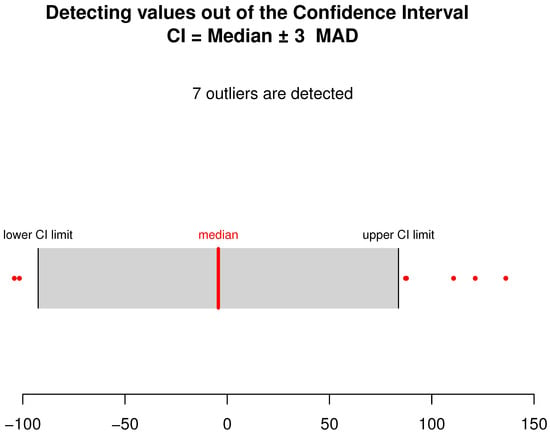

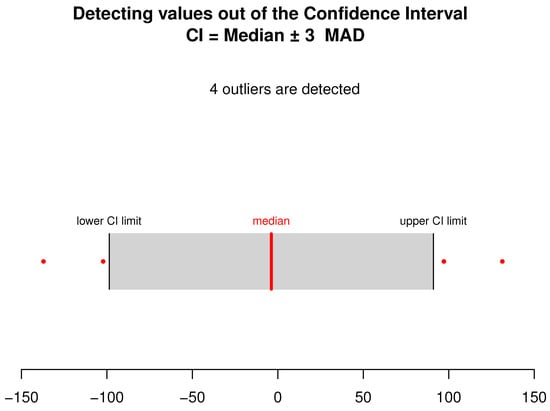

where is the first element of the Karhunen–Loève basis associated with the sample . The variable is a white noise independent of . It is generated using three distinct distributions: Laplace, Weibull and log-normal. This choice is motivated by the heavy-tailed property of the considered distributions. On the other hand, the three families are closed under translation, which permits identifying the true model: it is obtained by shifting the distribution of . Furthermore, we use the MAD function to detect the outliers in the response variable. We point out that there exist many alternative outlier detectors (see [] for more details). However, the MAD function relies on the median absolute deviation, which is very simple to compute. The outliers detected using the MAD function are displayed in Figure 4, Figure 5 and Figure 6.

Figure 4.

The number of outliers detected using the MAD test. The outliers are displayed in red dots.

Figure 5.

The number of outliers detected using the MAD test. The outliers are displayed in red dots.

Figure 6.

The number of outliers detected using the MAD test. The outliers are displayed in red dots.
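The MAD rule used above can be sketched in a few lines. This is a minimal illustration assuming the common form of the rule: an observation is flagged when its distance to the median exceeds a multiple of the scaled median absolute deviation. The threshold value 3 and the function name are assumptions, not values reported in the study.

```python
import numpy as np


def mad_outliers(y, threshold=3.0):
    """Boolean mask of observations flagged by the MAD rule."""
    y = np.asarray(y, dtype=float)
    med = np.median(y)
    # 1.4826 makes the MAD consistent with the standard deviation under normality.
    mad = 1.4826 * np.median(np.abs(y - med))
    if mad == 0.0:
        # Degenerate case: at least half of the sample equals the median.
        return np.zeros(y.shape, dtype=bool)
    return np.abs(y - med) / mad > threshold


flags = mad_outliers([1.0, 2.0, 3.0, 4.0, 5.0, 100.0])
```

In this toy sample only the last value is flagged, because the median and the MAD are barely affected by it.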

In these simulation experiments, we compare the estimator to the standard one defined by the conditional density as

Clearly, the performance of the estimators is related to the determination of the different components of the estimation. In this kind of smoothing approach, the bandwidth parameter and the functional index play a crucial role. In this empirical analysis, the sequence and the functional index are selected using the leave-one-out mean square cross-validation rule defined by

where is the leave-one-out estimator or . The subset is the positive real numbers, , such that the ball centered at v with radius contains exactly neighbors of v. We point out that we have used the PCA-metric to construct the previous balls (see [] for more details about this metric). In addition, the subset is finite basis functions of the Hilbert subspace spanned by the covariates . The latter is determined by the first eigenvectors associated with the largest eigenvalues of the variance-covariance matrix of the explanatory variables . Also, we specify that we have used the quadratic kernel. Finally, we examine the efficiency of the estimators or using the mean absolute error by

We compute this error for the three functional time series (for ), for three values of and for two cases. In the first case, we consider the data without modification, while in the second case, we remove the outlying observations detected in Figure 4, Figure 5 and Figure 6. The obtained results are presented in Table 1.

Table 1.

MAE results.

Finally, we see clearly that the MAE is more stable for the estimator . In this sense, the variability of the MAE is larger in the standard case . However, the accuracy of the estimation is also affected by the degree of dependency and the underlying distribution. There is a significant difference between the different functional time series cases, and the MAE increases with the level of dependency.
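The selection rule and the error measure used in this section can be sketched as follows. This is a simplified illustration under stated assumptions: the candidate indices are the leading eigenvectors of the empirical covariance of the discretized curves, the bandwidth is the distance to the (k+1)-th nearest neighbor measured on the projected index (a simplification of the PCA-metric balls), and a Nadaraya–Watson smoother stands in for the modal estimators, which would be plugged in through the `predictor` argument. All function names are hypothetical.

```python
import numpy as np


def quadratic_kernel(u):
    """Quadratic kernel supported on [0, 1]."""
    u = np.asarray(u, dtype=float)
    return np.where((u >= 0.0) & (u <= 1.0), 1.5 * (1.0 - u**2), 0.0)


def leading_directions(curves, m):
    """First m eigenvectors of the empirical covariance of the discretized curves."""
    centered = curves - curves.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[:m]


def nw_predictor(dist, y_train, h):
    """Kernel-weighted mean; a stand-in for the modal predictors."""
    w = quadratic_kernel(dist / h)
    if w.sum() == 0.0:
        w = (dist <= h).astype(float)  # fall back to uniform weights on the ball
    return np.sum(w * y_train) / np.sum(w)


def loo_cv_score(curves, y, theta, k, predictor=nw_predictor):
    """Leave-one-out mean squared prediction error for the pair (theta, k)."""
    n = len(y)
    if not 1 <= k < n - 1:
        raise ValueError("k must satisfy 1 <= k < n - 1")
    proj = curves @ theta / curves.shape[1]  # Riemann approximation of <theta, X_i>
    errors = np.empty(n)
    for i in range(n):
        others = np.delete(np.arange(n), i)
        dist = np.abs(proj[others] - proj[i])
        # Distance to the (k+1)-th neighbor, so k neighbors lie strictly inside.
        h = max(np.sort(dist)[k], 1e-12)
        errors[i] = y[i] - predictor(dist, y[others], h)
    return np.mean(errors**2)


def select_by_cv(curves, y, k_grid, m=3, predictor=nw_predictor):
    """Return (score, theta, k) minimizing the leave-one-out criterion."""
    best = None
    for theta in leading_directions(curves, m):
        for k in k_grid:
            score = loo_cv_score(curves, y, theta, k, predictor)
            if best is None or score < best[0]:
                best = (score, theta, k)
    return best


def mean_absolute_error(y_true, y_pred):
    """Mean absolute error between observed and predicted values."""
    return np.mean(np.abs(np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)))
```

Working with the number of neighbors `k` rather than a raw bandwidth keeps the candidate set finite and adapts the bandwidth to the local density of the curves.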

5. Real Data Example

In addition to the empirical analysis developed in the last section, we aim in this section to examine the applicability of the proposed model to a real-life example. More precisely, our aim is to show how the robust estimation of the modal regression is useful in the prediction problem. Indeed, we use the predictor to forecast the daily temperature in Houston two months ahead using historical data. For this purpose, we consider the dataset of this city, which is available on the website “https://kilthub.cmu.edu/articles/dataset/Compiled_daily_temperature_and_precipitation_data_for_the_U_S_cities/7890488” (accessed on 15 February 2025). The station provides daily measurements from 1889 to 2023.

Recall that the main feature of functional data analysis is its ability to model complicated data with high dimensionality. For this reason, it is necessary to examine the reliability of the proposed method with an explanatory variable that has a long trajectory. This consideration permits the incorporation of the principle of functional statistics and the evaluation of the role of the functional single-index modeling in this complicated prediction issue. We point out that the choice of an explanatory variable with few historical data is not beneficial for functional data analysis. In this real-data study, we have examined many periods (25 days, 60 days, and 75 days, among others). We have observed that the historical data of 60 days are more informative than the other examined cases. Thus, we predict the daily temperature of one day given the curve of the last 60 days. For this purpose, we construct a functional time series by cutting the whole curve into sub-curves , each of which contains the data for 60 days. We compare the robust predictor to the standard one . In order to highlight the robustness property, we conduct this comparison study in both cases (with outliers and without outliers). Furthermore, the outlier curves are obtained using the routine outliers.depth.trim from the R-package fda.usc. We refer to [] for more discussion on the functional outlier detector. Figure 7 displays some functional regressors as well as the outlier curves (in red).

Figure 7.

A sample of curves and the number of outlier curves.

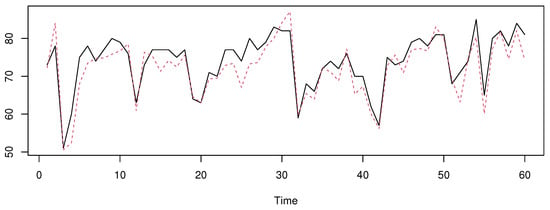
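The cutting step described above can be sketched as follows. This is a minimal illustration assuming non-overlapping 60-day segments and a scalar response taken `horizon` days after the end of each segment; the function name and the `horizon` parameter are hypothetical, and the exact alignment used in the study may differ.

```python
import numpy as np


def build_functional_sample(series, curve_len=60, horizon=1):
    """Cut a daily series into curves of curve_len days with a scalar response each.

    Curve i covers days [i * curve_len, (i + 1) * curve_len), and its response is
    the value observed `horizon` days after the last day of that curve.
    """
    series = np.asarray(series, dtype=float)
    n_curves = (series.size - horizon) // curve_len
    curves = series[: n_curves * curve_len].reshape(n_curves, curve_len)
    target_idx = np.arange(1, n_curves + 1) * curve_len + horizon - 1
    return curves, series[target_idx]


# Toy series standing in for the daily temperatures.
curves, responses = build_functional_sample(np.arange(125), curve_len=60, horizon=1)
```

With the toy series above, two curves are produced, and each response is the value of the day that immediately follows its curve.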

In the first case, we execute the function with the initial data without changes, while in the second case, we remove the outlier curves as well as the outliers in the response variable. Finally, we compute the predictors or using the same procedure as in Section 4 to choose the bandwidth and the index . In addition, we specify that we have used the quadratic kernel function to compute the estimators. The prediction results are plotted in Figure 8, Figure 9, Figure 10 and Figure 11, where we show the true values of temperature for the last 60 days and the predicted values.

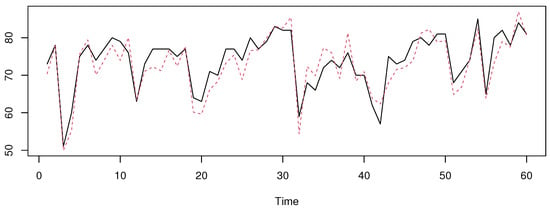

Figure 8.

The prediction with the robust mode with outliers. The true values are shown by the black line and the predicted values by the red line.

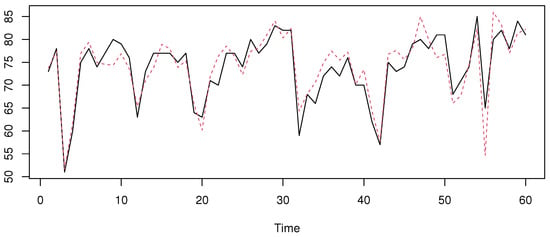

Figure 9.

The prediction with the standard mode with outliers. The true values are shown by the black line and the predicted values by the red line.

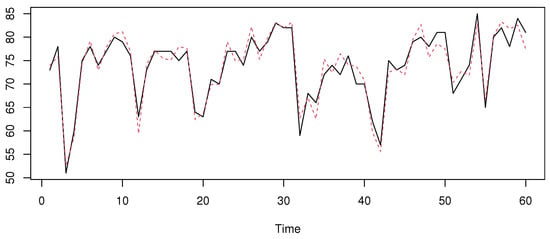

Figure 10.

The prediction with the robust mode without outliers. The true values are shown by the black line and the predicted values by the red line.

Figure 11.

The prediction with the standard mode without outliers. The true values are shown by the black line and the predicted values by the red line.

We can see that the predictor is more appropriate for the first case, when the data contain some outliers. Meanwhile, the estimator performs well when the outliers are removed. This conclusion can be confirmed using the mean absolute error (MAE) used in the last section. We have obtained, for the first situation, an MAE of 3.75 for against for . The MAE values of the second situation are for versus 4.51 for .

6. Conclusions and Prospects

In this paper, we have investigated the robust estimation of the conditional mode in a functional time series case. The estimator is derived from the robust estimation of the quantile regression. The constructed estimator constitutes an alternative to the standard one based on the conditional density estimation. Moreover, it aims to improve the accuracy as well as the robustness of the conditional mode prediction. This statement is supported by the obtained asymptotic result. Indeed, we show that the new estimator can be defined by one kernel and one bandwidth parameter, which improves the almost complete convergence rate.

The second component of this contribution is the specific functional structure, which is modeled using the single-index smoothing algorithm combined with the strong mixing assumption. This feature appears in the expression of the convergence rate. In this sense, the consistency of the estimator is strongly affected by the level of correlation, as well as the choice of the functional index. This aspect is explored in the empirical analysis. Furthermore, the simulation study illustrates the ease of implementation of the constructed estimator. Moreover, it confirms the robustness and the accuracy of the predictor compared to other alternative estimators. From this empirical analysis, we conclude that the performance of the predictor is impacted by the selection of the different parameters involved in the estimator. An arbitrary choice of these parameters can negatively impact the quality of the prediction. For this reason, the lack of an automatic procedure to select the best bandwidth and the best functional index is the principal concern that limits the applicability of the constructed estimator. Such an issue constitutes an important prospect for the future.

In addition to this open question, we also mention the local linear estimation of the modal regression. In this sense, we will combine the robust estimation with the local linear approach. Recall that local linear modeling is an important tool for improving the bias term in the convergence rate. Finally, it is well known that the asymptotic normality of the robust estimator is useful for constructing confidence intervals. Thus, establishing this asymptotic result is also important for practical purposes.

7. The Mathematical Development

This section is dedicated to proving the asymptotic results of the paper.

The proof of Theorem 1 is based on some standard analytical arguments. Indeed, we write

From Taylor expansion,

Because is a minimizer of ,

Moreover,

Combining Equations (7)–(10),

The definition gives

So,

Thus, Theorem 1 is a consequence of Proposition 1.

Proposition 1.

Under the postulates of Theorem 1, we have

The proof is based on

Lemma 1

(see []). Let be a sequence of decreasing real random functions, and let be a random real sequence such that

Then, for any real random sequence such that , we have

Proof.

Apply Lemma 1 on

where . Observe that . So, it suffices

such that

For Equation (11), we put

Then,

implies

We evaluate asymptotically

For the covariance part, we split the sum into two sets defined by

and

Let and be the sum of covariance over and , respectively. Obviously,

From (AS1), (AS3) and (AS4), we have

Next, for the quantity we use Davydov–Rio’s inequality, to show that

We deduce

Taking

to

Next, from (AS1) to (AS4) we obtain

We conclude

Finally, from Fuk–Nagaev’s inequality on

where for all and ,

For

to obtain,

and

for and . Combining Equations (14) and (15) to conclude that

Further,

Therefore,

For Equation (12), we have

and the bias

For Equation (17), we write

So, and we put . Thus

Since then

where

Once again, we determine the asymptotic behavior of the variance term to apply Fuk–Nagaev’s inequality. Indeed

So,

We split the sum into two sets defined by

Put and , the sum of covariance, over and , respectively. We have

By (AS3) and (AS5)

Next, we use Davydov–Rio’s inequality for

to show

Hence,

Choose

Thus,

From (AS1) and (AS4), we obtain

The Fuk–Nagaev inequality implies

to obtain

For the last term,

By Equation (19) we obtain

Therefore,

implies

with

Reasoning by the same manner as in , we show that

Consequently, such that

which proves Equation (17).

Concerning Equation (18),

implying

Therefore,

Now, from the Bahadur representation, we demonstrate the uniform consistency of . We prove

We demonstrate and prove only the second one. From the compactness ,

So, and we put and

Therefore,

Thus

with

we obtain

and

Similarly,

with

Since

we obtain

and

Putting

where

Reasoning by the same manner as in , we prove that

Consequently, such that

Then, such that

Hence,

We prove

We conclude

□

Author Contributions

Conceptualization, A.L.; Methodology, M.B.A. and A.L.; Investigation, F.A.A.; Data curation, Z.K. and A.L.; Writing—original draft, A.L.; Writing—review & editing, Z.K.; Project administration, Z.K.; Funding acquisition, F.A.A. All authors have read and agreed to the final version of the manuscript.

Funding

This research was funded by the Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R515), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia, and by the Deanship of Scientific Research and Graduate Studies at King Khalid University through the Research Groups Program under grant number R.G.P. 1/163/45.

Data Availability Statement

The data used in this study are available through the link https://kilthub.cmu.edu (accessed on 2 February 2025).

Acknowledgments

The authors would like to thank the referees for their very valuable comments and suggestions, which led to a considerable improvement of the manuscript. The authors thank and extend their appreciation to the funders of this work. This work was supported by the Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R515), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia, and by the Deanship of Scientific Research and Graduate Studies at King Khalid University through the Research Groups Program under grant number R.G.P. 1/163/45.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Collomb, G.; Härdle, W.; Hassani, S. A note on prediction via estimation of conditional mode function. J. Statist. Plan. Inf. 1987, 15, 227–236.

- Härdle, W.; Hall, P.; Ichimura, H. Optimal smoothing in single-index models. Ann. Statist. 1993, 21, 157–178.

- Stute, W.; Zhu, L.-X. Nonparametric checks for single-index models. Ann. Statist. 2005, 33, 1048–1083.

- Tang, Q.; Kong, L.; Ruppert, D.; Karunamuni, R.J. Partial functional partially linear single-index models. Statist. Sin. 2021, 31, 107–133.

- Zhou, W.; Gao, J.; Harris, D.; Kew, H. Semi-parametric single-index predictive regression models with cointegrated regressors. J. Econom. 2024, 238, 105577.

- Zhu, H.; Zhang, R.; Liu, Y.; Ding, H. Robust estimation for a general functional single index model via quantile regression. J. Korean Stat. Soc. 2022, 51, 1041–1070.

- Ferraty, F.; Peuch, A.; Vieu, P. Modèle à indice fonctionnel simple. Comptes Rendus Math. 2003, 336, 1025–1028.

- Ait-Saïdi, A.; Ferraty, F.; Kassa, R.; Vieu, P. Cross-validated estimations in the single-functional index model. Statistics 2008, 42, 475–494.

- Jiang, Z.; Huang, Z.; Zhang, J. Functional single-index composite quantile regression. Metrika 2023, 86, 595–603.

- Nie, Y.; Wang, L.; Cao, J. Estimating functional single index models with compact support. Environmetrics 2023, 34, e2784.

- Chen, D.; Hall, P.; Müller, H.-G. Single and multiple index functional regression models with nonparametric link. Ann. Statist. 2011, 39, 1720–1747.

- Ling, N.; Xu, Q. Asymptotic normality of conditional density estimation in the single index model for functional time series data. Statist. Probab. Lett. 2012, 82, 2235–2243.

- Attaoui, S. On the nonparametric conditional density and mode estimates in the single functional index model with strongly mixing data. Sankhya A 2014, 76, 356–378.

- Han, Z.-C.; Lin, J.-G.; Zhao, Y.-Y. Adaptive semiparametric estimation for single index models with jumps. Comput. Stat. Data Anal. 2020, 151, 107013.

- Hao, M.; Liu, K.Y.; Su, W.; Zhao, X. Semiparametric estimation for the functional additive hazards model. Can. J. Stat. 2024, 52, 755–782.

- Kowal, D.R.; Canale, A. Semiparametric Functional Factor Models with Bayesian Rank Selection. Bayesian Anal. 2023, 18, 1161–1189.

- Ferraty, F.; Vieu, P. Nonparametric Functional Data Analysis; Springer: New York, NY, USA, 2006.

- Azzedine, N.; Laksaci, A.; Ould-Saïd, E. On robust nonparametric regression estimation for functional regressor. Stat. Probab. Lett. 2008, 78, 3216–3221.

- Barrientos-Marin, J.; Ferraty, F.; Vieu, P. Locally modelled regression and functional data. J. Nonparametric Stat. 2010, 22, 617–632.

- Demongeot, J.; Hamie, A.; Laksaci, A.; Rachdi, M. Relative-error prediction in nonparametric functional statistics: Theory and practice. J. Multivar. Anal. 2016, 146, 261–268.

- Ezzahrioui, M.; Ould-Saïd, E. Asymptotic normality of a nonparametric estimator of the conditional mode function for functional data. J. Nonparametric Stat. 2008, 20, 3–18.

- Ezzahrioui, M.; Ould-Saïd, E. Some asymptotic results of a non-parametric conditional mode estimator for functional time-series data. Stat. Neerl. 2010, 64, 171–201.

- Dabo-Niang, S.; Laksaci, A. Estimation non paramétrique du mode conditionnel pour variable explicative fonctionnelle. Pub. Inst. Stat. Univ. Paris 2007, 3, 27–42.

- Dabo-Niang, S.; Kaid, Z.; Laksaci, A. Asymptotic properties of the kernel estimate of spatial conditional mode when the regressor is functional. AStA Adv. Stat. Anal. 2015, 99, 131–160.

- Ling, N.; Liu, Y.; Vieu, P. Conditional mode estimation for functional stationary ergodic data with responses missing at random. Statistics 2016, 50, 991–1013.

- Bouanani, O.; Rahmani, S.; Laksaci, A.; Rachdi, M. Asymptotic normality of conditional mode estimation for functional dependent data. Indian J. Pure Appl. Math. 2020, 51, 465–481.

- Engle, R.F. Autoregressive conditional heteroskedasticity with estimates of the variance of U.K. inflation. Econometrica 1982, 50, 987–1007.

- Jones, D.A. Nonlinear autoregressive processes. Proc. Roy. Soc. A 1978, 360, 71–95.

- Bollerslev, T. Generalized autoregressive conditional heteroskedasticity. J. Econom. 1986, 31, 307–327.

- Leys, C.; Ley, C.; Klein, O.; Bernard, P.; Licata, L. Detecting outliers: Do not use standard deviation around the mean, use absolute deviation around the median. J. Exp. Soc. Psychol. 2013, 49, 764–766.

- Febrero, M.; Galeano, P.; González-Manteiga, W. Outlier detection in functional data by depth measures with application to identify abnormal NOx levels. Environmetrics 2008, 19, 331–345.

- Azzi, A.; Belguerna, A.; Laksaci, A.; Rachdi, M. The scalar-on-function modal regression for functional time series data. J. Nonparametric Stat. 2024, 36, 503–526.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).