1. Introduction

With the development of the Internet, more and more data is stored digitally, and computer users can rely on the Internet to access massive amounts of information. As a result, the need to quickly obtain key information from massive data grows year by year. However, the volume of information is so large that manually extracting its main content is costly. In recent years, automatic text summary technology has been continually updated and iterated, reducing the need for human effort and making it easier for users to obtain the main information from complex content in different ways. Accordingly, various methods have been proposed to generate summaries automatically [1,2,3]. The automatic text summary (ATS) task extracts the important information in a text and filters out the useless information. This technology can effectively reduce information overload and help users quickly understand a target document, which makes it an important tool for handling large-scale data. Furthermore, automatic text summary technology reduces the size of the input document without distorting its main purpose and content, and it avoids the tendency of manual summaries to contain irrelevant or less objective information [4]. Because automatic text summary techniques are so effective at extracting information, they have also been applied in other fields [5,6]. For example, automatic summary techniques can generate annotations for programming language statements, which help developers understand large codebases written by other programmers, and they can present evaluations and search results for products or services in commercial applications.

Automatic text summary can be classified into extractive, abstractive, and hybrid summary according to the implementation method, and into single-document and multi-document summary according to the number of texts processed. Extractive summary directly selects key sentences from the original text: it scores sentences based on features such as their position and length and combines the higher-scoring sentences into a summary. This method is simple to implement and easy to understand, and it is usually based on sequence-labeling or graph-ranking models [7,8]. Abstractive summary relies on deep neural network structures, such as sequence-to-sequence models, which can understand the semantics of the original text and generate new sentences. Such models can even introduce words or phrases not present in the original text, which offers greater flexibility [9,10]. Hybrid summary combines the advantages of extractive and abstractive methods and typically employs supervised or unsupervised learning approaches. It retains the key information of the original text while enhancing the fluency and readability of the summary [11,12,13]. Currently, most clustering-based extractive methods extract word vectors from the text, cluster sentences based on lexical structure and frequency, and then select high-scoring sentences from the clusters to form the summary.

However, problems remain in sentence clustering and sentence selection, as described below:

- (1)

The K value of the traditional K-means clustering algorithm must be specified manually. K-means generates sentence clusters from the feature vectors of the sentences in the text; the algorithm is simple and converges quickly. However, the choice of K affects the final sentence clusters and therefore the sentences selected for the summary. An improper K value leads to poor clustering and, in turn, to an inaccurate summary built from those clusters.

- (2)

Most automatic text summary methods produce highly redundant summaries. These methods extract sentences with high feature scores from clusters and combine them into a final summary. However, the resulting summaries may contain semantically repetitive sentences, which reduces the semantic coverage of the final summary and makes it less effective.

To solve the above problems, this paper proposes an automatic text summary method based on the optimized K-means clustering algorithm with symmetry and the Maximal-Marginal-Relevance (MMR) algorithm. The method uses the Genetic Algorithm to optimize the choice of K for K-means clustering and uses the MMR algorithm to reduce sentence redundancy in the summary. First, the method obtains semantically rich weighted word vectors from the text through TF-IDF and the Word2Vec model and uses them to generate sentence representation vectors. Second, it uses the Genetic Algorithm with symmetry to optimize K, improving the silhouette coefficient of the K-means sentence-clustering results and thus the clustering quality. Third, it extracts statistical and linguistic features of the sentences and uses them to calculate sentence feature scores. Finally, it selects the sentences with higher feature scores from the clusters, computes their MMR values with the MMR algorithm, and selects the sentences with higher MMR values to form the final summary. The experimental results show that, compared with three other methods (Lead-3, TextRank, and KM-MMR), the proposed GAKM-MMR method improves the accuracy of automatic text summaries and reduces redundancy. In short, the proposed method produces better-quality text summaries.

The main contributions of this paper are as follows:

- (1)

This paper uses a Genetic Algorithm with symmetry to optimize the choice of K for the K-means clustering algorithm. Because the value of K affects clustering quality, the Genetic Algorithm searches for the K value that improves the silhouette coefficient of the sentence-clustering results, yielding the best sentence clustering found.

- (2)

This paper uses the Maximal-Marginal-Relevance algorithm to select, from the sentences with high feature scores, those with high MMR values to form the final text summary. A sentence's feature score is calculated from its statistical and linguistic features, and its MMR value is calculated by the Maximal-Marginal-Relevance algorithm. In this way, the algorithm reduces the redundancy of summary sentences.
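The exact MMR formula used by the method is not given in this part of the paper, so the Python sketch below assumes the standard greedy MMR formulation: at each step it picks the candidate sentence that maximizes λ·sim(s, q) − (1 − λ)·max sim(s, t), where t ranges over the sentences already selected. Cosine similarity, the query vector `query` (for example, a document centroid), and `lam = 0.7` are illustrative assumptions, not the paper's settings.

```python
import math
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 when either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def mmr_select(candidates: Dict[str, List[float]], query: List[float],
               k: int, lam: float = 0.7) -> List[str]:
    """Greedily select up to k candidate ids by Maximal Marginal Relevance.

    MMR(s) = lam * sim(s, query) - (1 - lam) * max_{t in selected} sim(s, t)
    """
    selected: List[str] = []
    remaining = dict(candidates)
    while remaining and len(selected) < k:
        scores = {}
        for sid, vec in remaining.items():
            relevance = cosine(vec, query)
            # Redundancy: highest similarity to any sentence already chosen.
            redundancy = max((cosine(vec, candidates[t]) for t in selected),
                             default=0.0)
            scores[sid] = lam * relevance - (1.0 - lam) * redundancy
        best = max(scores, key=scores.get)
        selected.append(best)
        del remaining[best]
    return selected


# Hypothetical sentence vectors: s1 and s2 are near-duplicates.
cands = {"s1": [1.0, 0.0], "s2": [0.99, 0.1], "s3": [0.6, 0.8]}
print(mmr_select(cands, query=[1.0, 0.5], k=2))  # ['s2', 's3']
```

Although s1 is slightly more relevant to the query than s3 in this toy example, it is skipped in favor of s3 because it nearly duplicates s2, which was already selected; raising `lam` toward 1.0 weakens this redundancy penalty.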

The rest of this article is organized as follows: Section 2 reviews the three categories of automatic text summary methods; Section 3 presents the proposed automatic text summary method based on the optimized K-means clustering algorithm and the Maximal-Marginal-Relevance algorithm; Section 4 compares and analyzes the experimental results; and Section 5 presents the conclusions and future work.

2. Related Works

Automatic text summary refers to the process of condensing the content of a text by extracting its main content and forming a summary, which can take the form of a paragraph or a sentence. Given the growth of data in today's digital space and the length of many documents, automatic text summary can help users quickly grasp the main meaning of a text and filter out irrelevant information. Automatic text summary methods can be divided into three categories: extractive, abstractive, and hybrid. These three categories are detailed below.

2.1. Text Summary Based on Extractive Method

Extractive text summary directly selects several important sentences from the original text and sorts and reorganizes them to form a summary. It uses sentence position, sentence length, cue phrases, and other features to evaluate the weight of each sentence and, finally, forms the summary by ordering the sentences from highest to lowest weight.
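A minimal Python sketch of this scoring scheme, assuming only two features: position (earlier sentences score higher) and length in words (longer sentences score higher). The equal weights `w_pos` and `w_len` and the feature normalizations are illustrative choices rather than a method from the cited works, and the cue-phrase feature is omitted.

```python
from typing import List


def extractive_summary(sentences: List[str], k: int,
                       w_pos: float = 0.5, w_len: float = 0.5) -> List[str]:
    """Score sentences by position and length; return the top k by weight."""
    n = len(sentences)
    if n == 0 or k <= 0:
        return []
    lengths = [len(s.split()) for s in sentences]
    max_len = max(lengths) or 1  # guard against all-empty sentences
    scored = []
    for i, (sent, length) in enumerate(zip(sentences, lengths)):
        position_score = 1.0 - i / n        # earlier sentences score higher
        length_score = length / max_len     # longer sentences score higher
        weight = w_pos * position_score + w_len * length_score
        scored.append((weight, i, sent))
    # Order by weight from high to low; ties go to the earlier sentence.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [sent for _, _, sent in scored[:k]]


doc = ["A b c d.", "E f.", "G h i j k l.", "M."]
print(extractive_summary(doc, k=2))  # ['A b c d.', 'G h i j k l.']
```

In the example, the third sentence outranks the second because its length advantage outweighs its later position.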

In recent studies, unsupervised extractive methods have mainly focused on the statistical features of the text while ignoring its semantic features and latent meaning. To solve this problem, an automatic summarizer that uses a distributional semantic model to capture semantics is proposed for producing high-quality summaries [14]. The method clusters sentences with K-means and generates semantically rich, high-quality summaries by jointly scoring statistical and linguistic features of the text, measuring the semantic similarity between text units. In natural language processing, the "bag of words" (BOW) model is frequently used because it has few parameters and a low computational cost, but it has many limitations in application; for example, BOW representations of sentences or documents ignore both word order and word importance. The ETS-NLPODL (extractive text summarization using NLP with an optimal DL) model is proposed to exploit feature selection with a hyperparameter-tuned DL model for summarizing text [15]. It preprocesses the data, extracts 15 features, selects the optimal ones using Hunger Games Search Optimization (HGSO), and applies an attention-based CNN-GRU model tuned by Mountain Gazelle Optimization (MGO). A supervised algorithm that produces a task-optimized weighted average of word embeddings is presented [16]. This algorithm generates sentence-level embeddings as a weighted average of word embeddings. These sentence-level embeddings are optimized for the corresponding supervised task and combine the compactness and expressiveness of word embedding representations with the word-level insight of BOW-type models, demonstrating the importance of word weighting in automatic text summary.

Three methods that combine supervised and unsupervised learning for single-document extractive summary are proposed to measure the importance of sentences [17]. These methods evaluate sentence importance by combining the statistical characteristics of sentences with inter-sentence relationships. The first approach uses a linear combination of the supervised-model and graph-model scores as the final sentence score. The second approach uses the graph model as an independent feature for evaluating sentences. The third approach evaluates sentence importance with a supervised model, uses this as a prior for the nodes of the graph model, and finally scores the sentences with a biased graph model. To obtain better semantic information, a TF-IDF-weighted word vector representation is presented for ATS to capture the hidden semantic meaning of the text [18]. This method generates sentence vectors that help produce diverse summaries with minimal redundancy. It uses the Word Mover's Distance to evaluate the similarity between sentences and eliminates irrelevant sentences from the summary. It also evaluates the diversity and relevance of the generated summaries, making them more informative and comprehensive.

2.2. Text Summary Based on Abstractive Method

Abstractive text summary is a technique that captures the main meaning of a text and generates a summary through various methods. Unlike extractive summary, it does not evaluate the importance of sentences using multiple features; instead, it independently generates a new, concise, and coherent summary based on a deep understanding of the semantics and key information of the source text.

Abstractive text summary often considers the readability and coherence of the summary. Common methods include graph-based and deep-learning-based abstractive text summary. ARAE (Adversarial Regularization Autoencoder) and CARAE (Conditional Adversarial Regularization Autoencoder) introduce conditional nodes during the summarization process to utilize clustering information [19]. SLN-MDS (Abstractive Multi-Document Summarization based on Semantic Link Network) is proposed to explore the role of the semantic link network in representing and understanding documents for multi-document summary [20]. The method first transforms the text into a semantic link network of concepts and events, and then transforms this network into an abstractive summary by selecting important concepts and events while maintaining the coherence of the text, demonstrating the importance of the semantic link network. A two-stage method for variable-length abstractive summarization is proposed to produce highly fluent abstractive summaries of variable length [21]. First, a text segmentation module uses BERT (Bidirectional Encoder Representations from Transformers) and a bidirectional Long Short-Term Memory (LSTM) network to divide the input text into segments. Then, a BERT-based abstractive summarization model is constructed to obtain the most important sentence from each segment, and the model performs well at capturing the relationships between sentences.

TASP (Topic-based Abstractive Summarization of Facebook text Posts) is proposed to detect trending topics in Facebook posts and generate summaries of the posts [22]. This method uses authoritative posts published by well-known newspapers to train an abstractive summarization model for multiple post sets. During inference, it first uses a pre-trained Transformer language model to create clusters of semantically similar social posts, each representing a different topic, and then generates a summary for each cluster. Most sequence-to-sequence summarization models ignore the future information implicit in words that have not yet been generated, so a summarization model with a "lookahead" ability is presented to fully exploit this implied future information [23]. First, this method trains an asynchronous decoder model that explicitly generates and utilizes future information. Then, a highly informative decoding method is used to further consider future ROUGE rewards for words not yet generated. MAtt-Bi-LSTM-AE (Modified Attention-based Bidirectional LSTM Autoencoder), a model that combines a bidirectional LSTM, an autoencoder, and a modified attention mechanism for abstractive summarization of Amazon food reviews, is presented [24]. Its dynamic attention weights replace conventional fixed vectors, enabling the context-sensitive compression of long inputs into coherent summaries.

2.3. Mixed-Based Text Summary

Extractive text summary generally guarantees language fluency, but it can use only the original sentences. Abstractive text summary models can generate sentences that do not appear in the original text, but their output may be ungrammatical and less fluent. Hybrid text summary combines the advantages of both to generate highly refined summaries.

A general unified framework for abstractive summarization that incorporates extractive summarization as an auxiliary task is proposed to fully exploit the relatedness and advantages of both approaches [25]. The framework consists of a shared hierarchical document encoder, a decoder, and an extractor based on a hierarchical attention mechanism. It uses the labels of the extraction task to constrain the attention learned by the abstraction task, enhancing the consistency between the two tasks. The framework also reduces the extraction task by half and restricts attention in the abstraction task. A two-stage, extract-then-abstract summarization model is presented to address the difficulties of document-level summarization [26]. The model first considers sentence-position and paragraph-position features and extracts important sentences using a sentence similarity matrix. A beam-search algorithm is then used to reconstruct and rewrite the syntactic blocks of the extracted sentences. Each newly generated summary serves as the pseudo-summary for the next round, and the globally best pseudo-summary is used as the final summary. In this way, the model extracts coarse-grained sentences from the document while distinguishing the important sentences in it. Ma argues that it is essential to handle long-range text dependencies and exploit latent topic mapping, and proposes T-BERT-Sum (Topic-Aware Text Summarization Based on BERT) to better capture the semantics of long documents and leverage thematic information [27]. The model improves on earlier models. First, it matches the encoded latent topics to the BERT embeddings through a neural topic model. Then, long-range dependencies are learned through a Transformer network, and, finally, a Long Short-Term Memory (LSTM) layer is stacked on the extraction model to capture sequential information. On this basis, a two-stage extraction–abstraction model that shares information between the stages is constructed, capturing a more accurate representation of the context.

A novel hybrid extractive–abstractive model is proposed that combines BERT (Bidirectional Encoder Representations from Transformers) word embeddings with reinforcement learning [28]. First, the model converts human-written abstracts into ground-truth labels. Second, it uses BERT word embeddings as text representations and trains the two sub-models separately. Finally, the extraction and abstraction networks are bridged by reinforcement learning. For low-resource languages, the first Hungarian abstractive summarization tool, based on the mBART and mT5 models, is proposed [29]. The work is divided into two main blocks, and the abstractive module uses these two models. For extractive summarization, the ELECTRA model is tested in addition to BERT. TextR-BLG (TextR-BLG Pointer Algorithm), a hybrid summarization system that combines TextRank extractive ranking with an abstractive BERT-LSTM-BiGRU pointer network, is proposed to tackle out-of-vocabulary words in long documents [30]. Sentences longer than 30 words are handled by the abstractive module, and shorter ones are extracted directly. Squid Game optimization is used to tune the BLG parameters.

The GAKM-MMR method proposed in this paper is closely related to, yet differs from, the works reviewed above in terms of sentence clustering and redundancy reduction:

- (1)

In terms of K-value optimization, most K-means-based summarization methods set K manually, whereas this paper introduces the Genetic Algorithm with symmetry to optimize K automatically, improving the objectivity and stability of the clustering. This resembles the approaches reviewed above that use external indicators to optimize clustering; however, this paper further integrates clustering quality into the summary generation process.

- (2)

In terms of redundancy reduction, this paper uses the MMR algorithm to select highly relevant, low-redundancy sentences from the initial summary. This shares its goal with the works reviewed above that use the Word Mover's Distance to evaluate sentence similarity or an attention mechanism to filter sentences. However, the MMR algorithm balances relevance and diversity explicitly through a single tunable trade-off parameter.

- (3)

In terms of feature extraction, this paper combines statistical features (such as position, length, and TF-ISF) with linguistic features (such as the proportion of gerunds and average similarity), which is consistent with the multi-feature fusion approaches reviewed above. However, this paper further combines feature scoring with the MMR algorithm to form a dual screening mechanism.

- (4)

In terms of sentence representation, this paper generates sentence vectors from Word2Vec word vectors weighted by TF-IDF, using word embeddings as the works reviewed above do. However, the TF-IDF weighting increases the influence of keywords in the sentence representation.

In conclusion, while inheriting the advantages of existing approaches, this method optimizes clustering through the Genetic Algorithm and controls redundancy with the MMR algorithm, achieving a more systematic integration and improvement of sentence selection and summary construction.

3. Methodology

In this section, we describe our automatic summarization model, which is based on the optimized K-means clustering algorithm with symmetry and the Maximal-Marginal-Relevance algorithm. The method uses the Genetic Algorithm with symmetry to optimize the choice of K for the K-means clustering algorithm and uses the Maximal-Marginal-Relevance algorithm to reduce sentence redundancy in the text summary.

Figure 1 shows the architecture of our model. Specifically, the proposed approach is divided into four modules: vector generation, cluster optimization, feature extraction, and summary construction. The vector generation module obtains weighted word vectors using TF-IDF and the Word2Vec model and generates sentence representation vectors. The cluster optimization module uses the Genetic Algorithm with symmetry to optimize K so as to improve the silhouette coefficient of the sentence-clustering results and, finally, obtain the best sentence clustering. The feature extraction module extracts statistical and linguistic features of the sentences from the text and uses them to calculate sentence feature scores. The summary construction module selects the sentences with higher feature scores from the clusters, calculates their MMR values with the Maximal-Marginal-Relevance algorithm, and selects the sentences with higher MMR values to form the final text summary.

3.1. Vector Generation

The vector generation module preprocesses the text, uses the Word2Vec model to convert words into numerical vectors, weights these vectors with TF-IDF, and finally constructs sentence vectors. Word2Vec is a distributional semantic model that captures word semantics, and TF-IDF is a term-weighting method; combining the two yields efficient, semantically informed word representations. First, this module preprocesses the input text by removing stop words and extracting its effective terms. Second, it uses the word-embedding-based Word2Vec model to compute the semantic distribution vector of each term in the preprocessed text, calculates each term's TF-IDF value, and multiplies the two to obtain the weighted word vector. Finally, it adds all the weighted word vectors of a sentence and divides the sum by the number of effective terms in the sentence to obtain the sentence vector. The vector generation module thus includes three submodules, text preprocessing, weighted word vector generation, and sentence vector generation, which are detailed below.

3.1.1. Text Preprocessing

The text preprocessing module obtains the preprocessed text by removing stop words, extracting effective words, and similar operations. Text preprocessing includes four steps: sentence segmentation, tokenization, stop-word removal, and stemming. Sentence segmentation converts the input text into a set of sentences using punctuation marks such as periods and question marks. Tokenization converts each sentence into a set of words using the NLTK tokenization function. Stop-word removal deletes words that carry no semantic content, such as prepositions and modal particles, from each word set. Stemming reduces different forms of a word, such as plurals, inflections, and affixed forms, to the same stem.
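With NLTK, these four steps would typically use `nltk.sent_tokenize`, `nltk.word_tokenize`, the `stopwords` corpus, and `PorterStemmer`. To keep the Python sketch below dependency-free, it substitutes a regex sentence splitter, a tiny hypothetical stop-word list `STOP_WORDS`, and a crude suffix-stripping `crude_stem`, so its output approximates, but will not exactly match, an NLTK pipeline.

```python
import re
from typing import List

# Tiny illustrative stop-word list (a stand-in for a full list such as NLTK's).
STOP_WORDS = {"a", "an", "the", "of", "in", "on", "to", "and", "or", "is",
              "are", "was", "were", "be", "it", "this", "that", "with", "for"}


def split_sentences(text: str) -> List[str]:
    """Sentence segmentation on periods, question marks, and exclamation marks."""
    parts = re.split(r"(?<=[.?!])\s+", text.strip())
    return [p for p in parts if p]


def tokenize(sentence: str) -> List[str]:
    """Lower-case alphanumeric tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9]+", sentence.lower())


def crude_stem(word: str) -> str:
    """Strip a few common English suffixes (a rough stand-in for Porter stemming)."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text: str) -> List[List[str]]:
    """Return one list of stemmed, stop-word-free terms per sentence."""
    return [[crude_stem(t) for t in tokenize(s) if t not in STOP_WORDS]
            for s in split_sentences(text)]


print(preprocess("The models are clustering sentences. Is it working?"))
# [['model', 'cluster', 'sentenc'], ['work']]
```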

3.1.2. Weighted Word Vector Generation

Weighted word vector generation produces weighted word vectors from the Word2Vec model and the TF-IDF method in three steps: word vector generation, TF-IDF weight calculation, and weighted word vector calculation. Word vector generation converts each word of the preprocessed text into a numerical vector using the Word2Vec model. TF-IDF weight calculation computes the weight of each term using the TF-IDF method. Weighted word vector calculation multiplies the Word2Vec vector of each term by that term's TF-IDF weight.

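The weighted word vector generation formula is as follows:

$$\mathrm{TFIDF}(w_{ij}, s_i) = \mathrm{TF}(w_{ij}, s_i) \times \mathrm{ISF}(w_{ij})$$

$$\mathrm{TF}(w_{ij}, s_i) = \frac{n_{ij}}{\sum_{k=1}^{m_i} n_{ik}}$$

$$\mathrm{ISF}(w_{ij}) = \log \frac{N}{N_{w_{ij}}}$$

$$\mathrm{WV}(w_{ij}) = \mathrm{TFIDF}(w_{ij}, s_i) \times V(w_{ij})$$

where $s_i$ is the i-th sentence in document $D$, the word $w_{ij}$ is the j-th word in sentence $s_i$, $\mathrm{TFIDF}(w_{ij}, s_i)$ represents the TF-IDF value of the word $w_{ij}$ in sentence $s_i$, $\mathrm{TF}(w_{ij}, s_i)$ represents the frequency of the word $w_{ij}$ in sentence $s_i$, $\mathrm{ISF}(w_{ij})$ represents the inverse sentence frequency of the word $w_{ij}$, $n_{ij}$ represents the number of occurrences of the term $w_{ij}$ in sentence $s_i$, $m_i$ represents the number of different words in sentence $s_i$, $n_{ik}$ represents the number of occurrences of the word $w_{ik}$ in sentence $s_i$, $N$ represents the total number of sentences in document $D$, $N_{w_{ij}}$ represents the number of sentences in document $D$ in which the term $w_{ij}$ appears, $V(w_{ij})$ represents the Word2Vec word vector of the word $w_{ij}$, and $\mathrm{WV}(w_{ij})$ represents the weighted word vector of the term $w_{ij}$.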
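As a worked illustration of this weighting, take the term frequency as the term's count in the sentence divided by the sentence's total term count, and the inverse sentence frequency as $\log(N/N_w)$, where $N$ is the number of sentences in the document and $N_w$ is the number containing the term. All numbers below are hypothetical, and the natural logarithm is assumed because the base is not stated. Suppose a sentence contains three distinct terms occurring 2, 2, and 1 times, the term $w$ is one of those occurring twice, the document has $N = 10$ sentences, $w$ appears in $N_w = 2$ of them, and its toy two-dimensional Word2Vec vector is $V(w) = (0.5, -1.0)$:

$$\begin{aligned}
\mathrm{TF}(w) &= \frac{2}{2 + 2 + 1} = 0.4 \\
\mathrm{ISF}(w) &= \ln\frac{10}{2} = \ln 5 \approx 1.609 \\
\mathrm{TFIDF}(w) &\approx 0.4 \times 1.609 \approx 0.644 \\
\mathrm{WV}(w) &\approx 0.644 \times (0.5, -1.0) = (0.322, -0.644)
\end{aligned}$$

A term that appears in every sentence gets $\mathrm{ISF} = \ln 1 = 0$, so its weighted vector vanishes regardless of how often it occurs.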

3.1.3. Sentence Vector Generation

The sentence vector generation module generates sentence vectors from the weighted word vectors. Each sentence in a document is regarded as a set of words, and its representation vector is obtained by dividing the sum of the weighted word vectors in the sentence by the number of effective terms in the sentence.

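The sentence vector is calculated as follows:

$$\mathrm{SV}(s_i) = \frac{1}{|s_i|} \sum_{j=1}^{|s_i|} \mathrm{WV}(w_{ij})$$

where $\mathrm{SV}(s_i)$ represents the sentence vector of the sentence $s_i$, $|s_i|$ represents the number of terms in the sentence $s_i$, $w_{ij}$ represents the j-th term in the sentence $s_i$, and $\sum_{j=1}^{|s_i|} \mathrm{WV}(w_{ij})$ represents the vector sum of all terms in the sentence $s_i$.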

Algorithm 1 gives the pseudo-code for vector generation. Line 2 defines the sentence vector $\mathrm{SV}$ and the integer variable $n$. Line 3 sets the number of sentences contained in the document $D$ to $N$ and initializes the sentence vector set $S$ as an empty set. Lines 4 to 16 generate the sentence vector for each sentence in the document $D$. Lines 4 to 6 iterate over the sentences $s_i$ and initialize the sentence vector $\mathrm{SV}$ and the term counter $n$ to 0. Lines 7 to 13 compute, for each term $w_{ij}$ in the sentence $s_i$, the product of its TF-IDF value and its Word2Vec vector and accumulate it into $\mathrm{SV}$, the vector of the sentence $s_i$. Line 14 divides the sentence vector $\mathrm{SV}$ by $n$ to obtain the final sentence vector $\mathrm{SV}(s_i)$. Line 15 adds the sentence vector $\mathrm{SV}(s_i)$ to the vector set $S$, and Line 17 returns the vector set $S$.

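| Algorithm 1: Vector Generation |
|---|
| Input: Document $D$ |
| Output: The set of sentence vectors $S$ |
| 1. Let $D$ have $N$ sentences; |
| 2. vector $\mathrm{SV}$; int $n$; |
| 3. $N \leftarrow \lvert D \rvert$; $S \leftarrow \emptyset$; |
| 4. for all $s_i \in D$ |
| 5. $\mathrm{SV} \leftarrow \mathbf{0}$; |
| 6. $n = 0$; |
| 7. for all $w_{ij} \in s_i$ |
| 8. $\mathrm{TFIDF}(w_{ij}, s_i) \leftarrow \mathrm{TF}(w_{ij}, s_i) \times \mathrm{ISF}(w_{ij})$; |
| 9. $V(w_{ij}) \leftarrow \mathrm{Word2Vec}(w_{ij})$; |
| 10. $\mathrm{WV}(w_{ij}) \leftarrow \mathrm{TFIDF}(w_{ij}, s_i) \times V(w_{ij})$; |
| 11. $n$ += 1; |
| 12. $\mathrm{SV} \leftarrow \mathrm{SV} + \mathrm{WV}(w_{ij})$; |
| 13. end for |
| 14. $\mathrm{SV}(s_i) \leftarrow \mathrm{SV} / n$; |
| 15. $S \leftarrow S \cup \{\mathrm{SV}(s_i)\}$; |
| 16. end for |
| 17. return $S$; |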
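A runnable Python sketch of Algorithm 1 follows, under stated assumptions: the input is already preprocessed into one term list per sentence, a plain dictionary `word_vectors` stands in for a trained Word2Vec model (a gensim model's `wv` attribute could play this role), the natural logarithm is used for the inverse sentence frequency, and terms without a vector are skipped and not counted as effective terms. The function name `vector_generation` is hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def vector_generation(doc: List[List[str]],
                      word_vectors: Dict[str, List[float]],
                      dim: int) -> List[List[float]]:
    """TF-ISF-weighted averaging of word vectors, one vector per sentence.

    doc          -- preprocessed document: one list of terms per sentence
    word_vectors -- term -> embedding (stand-in for a trained Word2Vec model)
    dim          -- embedding dimensionality
    """
    n_sentences = len(doc)                                       # N
    # N_w: number of sentences in which each term appears.
    sent_freq = Counter(t for sent in doc for t in set(sent))
    sentence_vectors: List[List[float]] = []                     # S <- empty set
    for sent in doc:
        counts = Counter(sent)                                   # n_ij per term
        total = sum(counts.values())                             # sum_k n_ik
        sv = [0.0] * dim                                         # SV <- 0
        n_terms = 0                                              # n <- 0
        for term in sent:
            if term not in word_vectors:   # assumption: skip out-of-vocabulary terms
                continue
            tf = counts[term] / total
            isf = math.log(n_sentences / sent_freq[term])
            weight = tf * isf                                    # TF-IDF (TF-ISF) value
            sv = [a + weight * b for a, b in zip(sv, word_vectors[term])]
            n_terms += 1
        if n_terms:
            sv = [a / n_terms for a in sv]                       # SV / n
        sentence_vectors.append(sv)
    return sentence_vectors


toy_vectors = {"cat": [1.0, 0.0], "sat": [0.0, 1.0],
               "ran": [1.0, 1.0], "dog": [0.0, 2.0]}
doc = [["cat", "sat"], ["cat", "ran"], ["dog", "ran"]]
vecs = vector_generation(doc, toy_vectors, dim=2)
print([round(x, 4) for x in vecs[0]])  # [0.1014, 0.2747]
```

Iterating over every token, rather than over distinct terms, mirrors the sum over $j$ in the sentence-vector formula, so a term repeated within a sentence contributes once per occurrence.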

3.2. Cluster Optimization

Cluster optimization combines the Genetic Algorithm with symmetry and the K-means clustering method to find the best sentence clustering. First, this module treats the sentences in the document as the genes of the Genetic Algorithm and randomly encodes each sentence as a binary value, “1” or “0”. The document thus becomes a binary string, and each binary string is an individual of the Genetic Algorithm; generating such individuals initializes the population. Second, this module takes each sentence coded “1” in an individual as a single initial cluster and clusters all sentences of the individual using the K-means algorithm. This module then uses the silhouette coefficient as the fitness function to calculate the fitness of every individual in the population. Finally, this module applies the evolutionary rules of the Genetic Algorithm to optimize the population iteratively until the specified number of iterations is reached, and selects the individual with the highest fitness as the final sentence-clustering result. Cluster optimization includes four submodules, population initialization, sentence clustering, fitness assessment, and evolutionary operation, which are described in detail below.

3.2.1. Population Initialization

Population initialization randomly assigns each sentence in a document a binary code, “1” or “0”, and includes two processes: individual coding and population generation. Individual coding randomly selects K sentences from the document, codes these K sentences as “1”, and codes the remaining sentences as “0”; the resulting binary string is an individual. Population generation repeats this coding over all sentences of the document to form different binary strings, that is, different individuals, and all individuals together constitute the initialized population.

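Population initialization is calculated by the following formula:

$$P_t = \{I_1, I_2, \ldots, I_M\}, \qquad I_i = (b_{i1}, b_{i2}, \ldots, b_{iN})$$

$$R_i = \{r_1, r_2, \ldots, r_K\} \subseteq \{1, 2, \ldots, N\}, \qquad b_{ij} = \begin{cases} 1, & j \in R_i \\ 0, & j \notin R_i \end{cases}$$

where $D$ represents the document, $N$ represents the number of sentences in the document $D$, $K$ represents the initial K value, $P_t$ represents the population generated after $t$ iterations, $M$ represents the population size, $I_i$ represents the i-th individual generated, $b_{ij}$ represents the binary encoding of the sentence $s_j$ in the i-th individual, $R_i$ represents the set of random numbers generated, and $r_k$ represents a generated random number.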
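The initialization step can be sketched in Python as follows, assuming each individual is a list of 0/1 bits with exactly K ones chosen by sampling K distinct sentence indices without replacement. The function name `init_population` and the fixed `seed` (used only for reproducibility) are hypothetical.

```python
import random
from typing import List


def init_population(n_sentences: int, k: int, pop_size: int,
                    seed: int = 0) -> List[List[int]]:
    """Build pop_size binary individuals, each with exactly k sentences coded 1."""
    if not 1 <= k <= n_sentences:
        raise ValueError("k must satisfy 1 <= k <= number of sentences")
    rng = random.Random(seed)
    population = []
    for _ in range(pop_size):
        # R_i: k distinct random sentence indices that become initial cluster seeds.
        chosen = set(rng.sample(range(n_sentences), k))
        population.append([1 if j in chosen else 0 for j in range(n_sentences)])
    return population


pop = init_population(n_sentences=6, k=2, pop_size=3)
print(pop)  # three 6-bit individuals, each containing exactly two 1s
```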

3.2.2. Sentence Clustering

Sentence clustering groups all sentences of an individual by their sentence vectors using the K-means clustering algorithm. It consists of four processes: initial cluster selection, cluster vector calculation, cluster division, and cluster convergence judgment. Initial cluster selection treats each sentence coded “1” in the individual as a single initial cluster, that is, as the centroid of a cluster; thus K equals the number of sentences coded “1”, and the final number of clusters K can differ between individuals. Cluster vector calculation takes the average of all sentence vectors in each cluster as the representation vector of that cluster. Cluster division calculates the distance from every sentence in the individual to every cluster and assigns each sentence to the nearest cluster. Cluster convergence judgment checks whether the clusters have changed. If there is no change or only a small change, the sentence clustering of the individual has converged; otherwise, cluster vector calculation, cluster division, and cluster convergence judgment are performed again.

The sentence-clustering formula is as follows:

where the symbols denote, in order: the initial set of clusters; the j-th cluster; the representation vector of the cluster; the number of sentences in the cluster; the membership of a sentence in the cluster; the representation vector of a sentence; and the distance from a sentence to a cluster. The remaining two symbols are detailed in Formula (3).
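A minimal sketch of the four clustering steps follows, seeded from the sentences coded 1 in an individual. It assumes Euclidean distance between sentence vectors and a small centroid-shift tolerance as the "small change" criterion; the paper does not state either choice, and the helper names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean_vector(vectors: List[Vector]) -> Vector:
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def kmeans_from_individual(vectors: List[Vector], individual: List[int],
                           max_iter: int = 100, tol: float = 1e-6) -> Tuple[List[int], List[Vector]]:
    """K-means whose initial centroids are the sentences coded 1 in `individual`."""
    # Initial cluster selection: K = number of 1s in the individual.
    centroids = [list(vectors[i]) for i, bit in enumerate(individual) if bit == 1]
    if not centroids:
        raise ValueError("individual must contain at least one 1")
    labels = [0] * len(vectors)
    for _ in range(max_iter):
        # Cluster division: assign each sentence to its nearest centroid.
        labels = [min(range(len(centroids)), key=lambda j: euclidean(v, centroids[j]))
                  for v in vectors]
        # Cluster vector calculation: centroid = mean of its member sentence vectors.
        new_centroids = []
        for j, old in enumerate(centroids):
            members = [v for v, lab in zip(vectors, labels) if lab == j]
            new_centroids.append(mean_vector(members) if members else old)
        # Convergence judgment: stop when no centroid moves more than tol.
        shift = max(euclidean(a, b) for a, b in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift <= tol:
            break
    return labels, centroids


# Toy 2-D "sentence vectors"; sentences 0 and 2 are coded 1, so K = 2.
vectors = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 4.9], [0.0, 0.2]]
labels, centroids = kmeans_from_individual(vectors, [1, 0, 1, 0, 0])
print(labels)  # [0, 0, 1, 1, 0]
```

Because K is read from the individual rather than fixed in advance, each individual in the population can produce a clustering with a different number of clusters.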

3.2.3. Fitness Assessment

Fitness assessment evaluates the clustering quality of each individual. It consists of five processes: sentence intra-cluster distance calculation, sentence inter-cluster distance calculation, sentence silhouette coefficient calculation, cluster silhouette coefficient calculation, and fitness calculation. Specifically, the sentence intra-cluster distance and the sentence inter-cluster distance are symmetric concepts. The intra-cluster distance of a sentence is the average distance from that sentence to the other sentences of the same cluster in an individual. The inter-cluster distance of a sentence is the minimum, over all other clusters, of the average distance from that sentence to the sentences of that cluster. The silhouette coefficient of a sentence is its inter-cluster distance minus its intra-cluster distance, divided by the larger of these two distances. The silhouette coefficient of a cluster is the average of the silhouette coefficients of all sentences in the cluster. Finally, the fitness of an individual is the average of the silhouette coefficients of all its clusters.

The fitness assessment is calculated as follows:

where the symbols denote, in order: the intra-cluster distance of a sentence in its cluster; the number of clusters; the distance between two sentences; the inter-cluster distance of a sentence in its cluster; the clusters other than the sentence's own cluster; the distance between the sentence and a sentence of another cluster; the silhouette coefficient of the sentence; the cluster silhouette coefficient; and the average silhouette coefficient of the clustering, which serves as the fitness value of the m-th individual.
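The fitness calculation described above can be sketched as follows: sentence silhouettes are averaged per cluster, and the cluster silhouettes are then averaged per individual. The sketch assumes Euclidean distance, assigns a silhouette of 0 to sentences in singleton clusters (a common convention, for example in scikit-learn), and returns 0 when there is only one cluster; none of these conventions is stated in the paper.

```python
import math
from collections import defaultdict
from typing import Dict, List


def euclidean(a: List[float], b: List[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def silhouette_fitness(vectors: List[List[float]], labels: List[int]) -> float:
    clusters: Dict[int, List[int]] = defaultdict(list)
    for idx, lab in enumerate(labels):
        clusters[lab].append(idx)
    if len(clusters) < 2:
        return 0.0  # silhouette undefined for one cluster (assumed fitness 0)
    sentence_sil: Dict[int, float] = {}
    for lab, members in clusters.items():
        for i in members:
            if len(members) == 1:
                sentence_sil[i] = 0.0  # singleton convention (assumption)
                continue
            # Intra-cluster distance: mean distance to the other members.
            a = sum(euclidean(vectors[i], vectors[j]) for j in members if j != i) / (len(members) - 1)
            # Inter-cluster distance: smallest mean distance to another cluster.
            b = min(sum(euclidean(vectors[i], vectors[j]) for j in other) / len(other)
                    for other_lab, other in clusters.items() if other_lab != lab)
            denom = max(a, b)
            sentence_sil[i] = (b - a) / denom if denom > 0 else 0.0
    # Cluster silhouette = mean sentence silhouette; fitness = mean over clusters.
    cluster_sil = [sum(sentence_sil[i] for i in members) / len(members)
                   for members in clusters.values()]
    return sum(cluster_sil) / len(cluster_sil)


vectors = [[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]]
good = silhouette_fitness(vectors, [0, 0, 1, 1])  # compact, well separated
bad = silhouette_fitness(vectors, [0, 1, 0, 1])   # mixes distant sentences
print(round(good, 3), round(bad, 3))
```

Averaging per cluster first (rather than over all sentences at once) follows the text: each cluster contributes equally to the fitness, regardless of its size.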

3.2.4. Evolutionary Operation

Evolutionary operation uses the selection, crossover, and mutation operations of the Genetic Algorithm to search for the best individuals in the population. The selection operation applies the roulette-wheel rule, which selects individuals for the next generation with probability proportional to their fitness, so that fitter individuals are more likely to survive. The crossover operation randomly sets two crossover points in the coding strings of two individuals and exchanges the segments of their chromosomes between these points. The mutation operation flips a bit in the gene sequence, that is, from 0 to 1 or from 1 to 0. Applying these three operations iteratively optimizes the population. Because crossover and mutation change the number of sentences coded as 1, individuals in the population can have different K values, so the K value is continually optimized; all sentences in each individual are then re-clustered by K-means to obtain the optimized clustering result. This process repeats until the specified number of iterations is reached, and the individual with the highest fitness is selected.

The evolutionary operation formula is as follows:

where the symbols denote, in order: the selection rule, crossover rule, and mutation rule for each generation of the population; the selection probability, crossover probability, and mutation probability of the corresponding individuals, respectively; the fitness value of an individual; three random numbers in the range [0, 1]; the maximum and minimum crossover probabilities, respectively; the maximum and average fitness values in the population, respectively; and the maximum and minimum mutation probabilities, respectively.
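One generation of the evolutionary operation can be sketched as below. The roulette selection, two-point crossover, and bit-flip mutation follow the description; the adaptive rule for crossover and mutation probabilities is an assumed linear form driven by the maximum and average fitness (the paper's exact formula is not reproduced here). Fitness values are shifted to be non-negative before roulette selection because silhouette fitness can be negative, and offspring with no 1 bits are repaired so that K stays at least 1. Both of these are assumptions, as are the probability ranges `pc` and `pm`, which are placeholders rather than the paper's settings.

```python
import random
from typing import List, Tuple

Individual = List[int]


def adaptive_prob(f: float, f_max: float, f_avg: float, p_max: float, p_min: float) -> float:
    """Assumed linear adaptive rule: above-average individuals get a lower rate."""
    if f < f_avg or f_max == f_avg:
        return p_max
    return p_max - (p_max - p_min) * (f - f_avg) / (f_max - f_avg)


def two_point_crossover(a: Individual, b: Individual,
                        rng: random.Random) -> Tuple[Individual, Individual]:
    """Swap the segment between two random cut points."""
    i, j = sorted(rng.sample(range(len(a) + 1), 2))
    return a[:i] + b[i:j] + a[j:], b[:i] + a[i:j] + b[j:]


def bit_flip(ind: Individual, p_m: float, rng: random.Random) -> Individual:
    """Flip each bit with probability p_m, then keep at least one 1 (K >= 1)."""
    out = [1 - bit if rng.random() < p_m else bit for bit in ind]
    if not any(out):  # repair step (assumption, not from the paper)
        out[rng.randrange(len(out))] = 1
    return out


def next_generation(pop: List[Individual], fitness: List[float], rng: random.Random,
                    pc: Tuple[float, float] = (0.6, 0.9),
                    pm: Tuple[float, float] = (0.01, 0.1)) -> List[Individual]:
    """Roulette selection, then adaptive two-point crossover and bit-flip mutation.
    pc and pm are hypothetical (min, max) probability ranges."""
    f_max, f_avg = max(fitness), sum(fitness) / len(fitness)
    low = min(fitness)
    weights = [f - low + 1e-9 for f in fitness]  # shift: silhouettes can be negative
    picks = rng.choices(range(len(pop)), weights=weights, k=len(pop))
    children: List[Individual] = []
    for k in range(0, len(picks), 2):
        ia, ib = picks[k], picks[(k + 1) % len(picks)]
        a, b = list(pop[ia]), list(pop[ib])
        f_pair = max(fitness[ia], fitness[ib])
        if rng.random() < adaptive_prob(f_pair, f_max, f_avg, pc[1], pc[0]):
            a, b = two_point_crossover(a, b, rng)
        for child, f_child in ((a, fitness[ia]), (b, fitness[ib])):
            p_m = adaptive_prob(f_child, f_max, f_avg, pm[1], pm[0])
            children.append(bit_flip(child, p_m, rng))
    return children[:len(pop)]


rng = random.Random(42)
pop = [[1, 0, 1, 0, 0, 0], [0, 1, 0, 0, 1, 0], [1, 1, 0, 0, 0, 1], [0, 0, 0, 1, 0, 0]]
fitness = [0.5, -0.1, 0.3, 0.2]  # hypothetical silhouette fitness values
children = next_generation(pop, fitness, rng)
print(children)
```

In a full loop, each child would be re-clustered with K-means and re-scored before the next call, and the best individual seen over all generations would be kept.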

3.3. Feature Extraction

Feature extraction extracts the statistical and linguistic features of the text. First, it evaluates three statistical features: sentence position, sentence length, and sentence TF-ISF. Second, it evaluates two linguistic features: the number of verbs and nouns in a sentence and the average similarity of the sentence to the other sentences. Finally, it combines the statistical and linguistic features to obtain the final score of each sentence. Feature extraction is therefore divided into statistical feature extraction, linguistic feature extraction, and sentence feature evaluation, which are described in detail below.

3.3.1. Statistical Feature Extraction

Statistical feature extraction calculates three feature scores for each sentence in a cluster: sentence position, sentence length, and sentence TF-ISF. It consists of three processes: sentence position score extraction, sentence length score extraction, and TF-ISF extraction. Sentence position score extraction derives the position feature score from the position of the sentence in the document. Sentence length score extraction derives the length feature score from the number of valid words in the sentence, the average number of valid words over all sentences in the document, and the standard deviation of the number of valid words. TF-ISF extraction derives the TF-ISF value of the sentence from the TF-ISF values of all terms in the sentence.

The statistical features are calculated as follows:

where the symbols denote, in order: the position score of a sentence; the position of the sentence in the document; the length score of the sentence; the length of the sentence; the average length of all sentences; the standard deviation of all sentence lengths; the TF-ISF value of a word in the document; the TF value of the word; the ISF value of the word; the number of times the term appears in the sentence; the total number of distinct words in the sentence; the number of occurrences of the term in the sentence; the total number of sentences in the document; and the number of sentences of the document in which the word appears.
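The three statistical scores can be sketched as below on pre-tokenized sentences containing only valid words. The exact formulas are not recoverable from the text, so each is an assumption: the position score is taken as 1/position, the length score as the z-score of the sentence length, TF as the term count divided by the number of distinct words in the sentence, ISF as log(N / sentence frequency), and the sentence TF-ISF as the mean over its distinct terms.

```python
import math
import statistics
from collections import Counter
from typing import List


def position_scores(n: int) -> List[float]:
    """Assumed form: earlier sentences score higher, 1 / (1-based position)."""
    return [1.0 / (p + 1) for p in range(n)]


def length_scores(sentences: List[List[str]]) -> List[float]:
    """Assumed z-score of valid-word count against the document mean and std."""
    lengths = [len(s) for s in sentences]
    mu = statistics.mean(lengths)
    sd = statistics.pstdev(lengths)
    return [0.0 if sd == 0 else (length - mu) / sd for length in lengths]


def tf_isf_scores(sentences: List[List[str]]) -> List[float]:
    n = len(sentences)
    sent_freq = Counter(w for s in sentences for w in set(s))  # sentences containing w
    scores = []
    for s in sentences:
        if not s:
            scores.append(0.0)
            continue
        counts = Counter(s)
        distinct = len(counts)
        # TF = count / distinct words (assumed); ISF = log(N / sentence frequency).
        values = [(c / distinct) * math.log(n / sent_freq[w]) for w, c in counts.items()]
        scores.append(sum(values) / len(values))  # mean over terms (assumption)
    return scores


sentences = [["gene", "algorithm", "cluster"],
             ["cluster", "summary"],
             ["summary", "summary", "text", "model"]]
print(position_scores(len(sentences)))
print(length_scores(sentences))
print(tf_isf_scores(sentences))
```

A term that occurs in every sentence receives an ISF of log(1) = 0, so it contributes nothing to any sentence's TF-ISF score.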

3.3.2. Linguistic Feature Extraction

Linguistic feature extraction calculates the proportion of verbs and nouns in a sentence and the average cosine similarity of the sentence to the other sentences. It consists of two processes: verb-noun rate calculation and average similarity calculation. The verb-noun rate is the ratio of the number of verbs plus the number of nouns in the sentence to the total number of words in the sentence. The average similarity is the average of the cosine similarities between the sentence and every other sentence.

The linguistic features are calculated as follows:

where the symbols denote, in order: the number of verbs and nouns in a sentence; the number of verbs in the sentence; the number of nouns in the sentence; the number of terms in the sentence; the average similarity of the sentence; the representation vectors of two sentences; and the length of the document.
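Both linguistic features are simple to compute once sentences are part-of-speech tagged and vectorized. The sketch below assumes Penn Treebank-style tags (verbs start with `VB`, nouns with `NN`) supplied by some external tagger, and averages the cosine similarity over the other N − 1 sentences; the tag convention and the divisor are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def verb_noun_rate(tagged: List[Tuple[str, str]]) -> float:
    """(verbs + nouns) / words, assuming Penn Treebank-style tags (VB*, NN*)."""
    if not tagged:
        return 0.0
    vn = sum(1 for _, tag in tagged if tag.startswith(("VB", "NN")))
    return vn / len(tagged)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0 or nb == 0 else dot / (na * nb)


def average_similarity(vectors: List[Sequence[float]]) -> List[float]:
    """Mean cosine similarity of each sentence to every other sentence."""
    n = len(vectors)
    if n < 2:
        return [0.0] * n
    return [sum(cosine(vectors[i], vectors[j]) for j in range(n) if j != i) / (n - 1)
            for i in range(n)]


tagged = [("Clustering", "NN"), ("reduces", "VBZ"), ("the", "DT"), ("redundancy", "NN")]
print(verb_noun_rate(tagged))                                   # 0.75
print(average_similarity([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]))  # [0.5, 0.5, 0.0]
```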

3.3.3. Sentence Feature Evaluation

Sentence feature evaluation produces the score of each sentence. It consists of three processes: statistical feature score calculation, linguistic feature score calculation, and sentence score calculation. The statistical feature score is the sum of the position, length, and TF-ISF scores of the sentence. The linguistic feature score is the sum of the verb-noun rate and the average similarity of the sentence. Sentence score calculation combines the statistical feature score and the linguistic feature score to obtain the score of the sentence.

Sentence feature evaluation is calculated as follows:

where the symbols denote the sentence score, the linguistic feature score, and the statistical feature score of a sentence, respectively; the three statistical feature scores are detailed in Formula (7), and the two linguistic feature scores are detailed in Formula (8).

3.4. Summary Construction

Summary construction forms the final summary by selecting sentences with high correlation and low redundancy from the clusters, using the sentence feature scores and the Maximal-Marginal-Relevance (MMR) algorithm. First, it selects the sentences with high feature scores from the clusters to form the initial summary. Second, it uses the Maximal-Marginal-Relevance algorithm to select sentences with high correlation and low redundancy from the initial summary to form the final summary. Summary construction is thus divided into two modules, Initial Summary Determination and Final Summary Determination, which are detailed below.

3.4.1. Initial Summary Determination

Initial summary determination sorts the sentences of each cluster in descending order of feature score and takes the top half of the sentences in each cluster for the initial summary. It consists of three processes: cluster sentence ranking, cluster sentence extraction, and initial summary construction. Cluster sentence ranking orders the sentences of a cluster from the highest to the lowest feature score. Cluster sentence extraction takes the top-ranked sentences of the cluster, extracting half the number of sentences in the cluster. Initial summary construction merges the sentences extracted from all clusters into the initial summary.

The initial summary is determined as follows:

where the symbols denote, in order: the i-th cluster; the j-th sentence in the i-th cluster; the score of that sentence; the number of sentences in the cluster; the sentences extracted from the cluster; the integer obtained by rounding half the number of sentences in the cluster; and the initial summary.
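The three steps of initial summary determination can be sketched as follows. The text says only that half the cluster size is "rounded", so rounding half up, (n + 1) // 2, is an assumption here; the cluster and sentence identifiers and scores are hypothetical.

```python
from typing import Dict, List


def initial_summary(clusters: Dict[str, List[str]], scores: Dict[str, float]) -> List[str]:
    summary: List[str] = []
    for members in clusters.values():
        # Cluster sentence ranking: highest feature score first.
        ranked = sorted(members, key=lambda s: scores[s], reverse=True)
        # Cluster sentence extraction: half the cluster, rounded half up (assumption).
        take = (len(ranked) + 1) // 2
        # Initial summary construction: merge the extracted sentences.
        summary.extend(ranked[:take])
    return summary


clusters = {"c1": ["s1", "s2", "s3", "s4"], "c2": ["s5", "s6"]}
scores = {"s1": 0.5, "s2": 0.9, "s3": 0.1, "s4": 0.7, "s5": 0.2, "s6": 0.6}
print(initial_summary(clusters, scores))  # ['s2', 's4', 's6']
```

Taking sentences from every cluster, rather than the globally top-scoring ones, keeps each topic cluster represented in the candidate pool passed to MMR.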

3.4.2. Final Summary Determination

Final summary determination uses the Maximal-Marginal-Relevance algorithm to select sentences with high correlation and low redundancy from the initial summary to form the final summary. It consists of two processes: maximum score sentence selection and maximum MMR score sentence selection. Maximum score sentence selection takes the sentence with the largest feature score in the initial summary as the first sentence of the final summary. Maximum MMR score sentence selection then repeatedly adds the sentence of the initial summary with the maximum MMR value to the final summary.

The final summary is determined as follows:

where the symbols denote, in order: the first sentence of the final summary; the initial summary; the final summary; the MMR value of a sentence; the correlation parameter; the k-th sentence of the final summary; the cosine similarity between two sentences; the representation vector of a sentence; and the number of sentences in the final summary.
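A sketch of the final selection loop is shown below. It uses the standard MMR form, λ · relevance − (1 − λ) · max similarity to the already-selected sentences, with the sentence feature score as the relevance term and λ = 0.7 as in the experiments. Treating the feature score as the relevance term, using the maximum (rather than, for example, the average) similarity, and stopping at a fixed sentence count are assumptions.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0 or nb == 0 else dot / (na * nb)


def mmr_select(candidates: List[str], scores: Dict[str, float],
               vectors: Dict[str, List[float]], n_select: int, lam: float = 0.7) -> List[str]:
    pool = list(candidates)
    if not pool or n_select <= 0:
        return []
    # Maximum score sentence selection: the highest-scoring sentence goes first.
    first = max(pool, key=lambda s: scores[s])
    selected = [first]
    pool.remove(first)
    while pool and len(selected) < n_select:
        # MMR = lam * relevance - (1 - lam) * redundancy w.r.t. the current summary.
        best = max(pool, key=lambda s: lam * scores[s]
                   - (1 - lam) * max(cosine(vectors[s], vectors[t]) for t in selected))
        selected.append(best)
        pool.remove(best)
    return selected


scores = {"s1": 0.9, "s2": 0.85, "s3": 0.5}
vectors = {"s1": [1.0, 0.0], "s2": [1.0, 0.0], "s3": [0.0, 1.0]}
print(mmr_select(["s1", "s2", "s3"], scores, vectors, n_select=2))  # ['s1', 's3']
```

In the example, s2 scores almost as high as s1 but duplicates it, so its MMR value (0.595 − 0.3 = 0.295) falls below that of the dissimilar s3 (0.35), and s3 is chosen.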

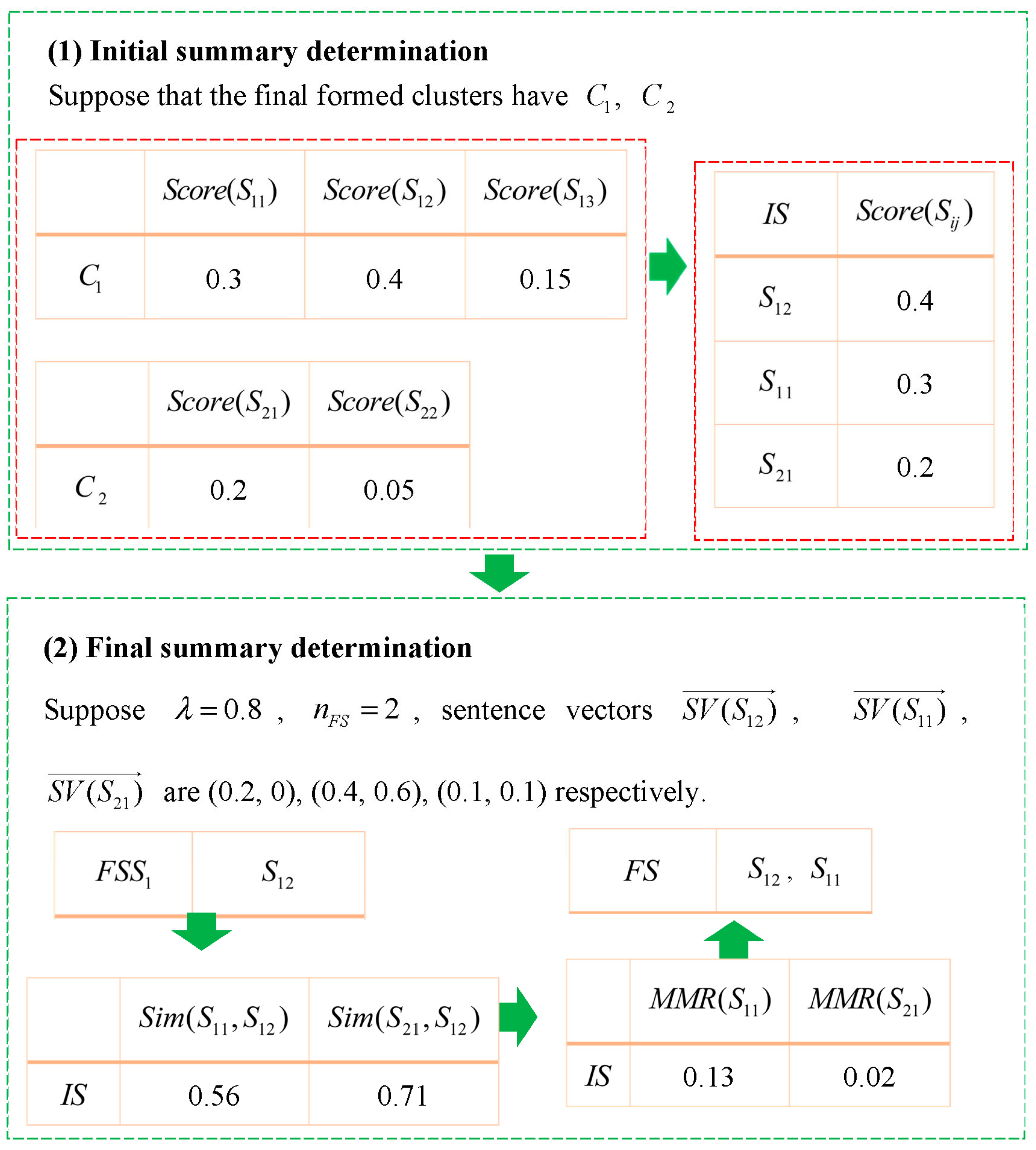

Figure 2 shows an example of summary construction. In the initial summary determination step, the three sentences in one cluster have scores of 0.3, 0.4, and 0.15, respectively, and the two sentences in the other cluster have scores of 0.2 and 0.05, respectively. The sentences in each cluster are sorted by score in descending order, and half of the sentences in each cluster (rounded to an integer) are selected in that order to form the initial summary. In the second step, the final summary is determined. First, the sentence with the maximum sentence score in the initial summary is taken as the first sentence of the final summary. Then, the cosine similarities between the remaining sentences and the selected sentences are calculated, and the MMR values of the remaining sentences are computed from them. Finally, the sentence with the largest MMR value is selected to form the final summary.

4. Experiment

This experiment designs and implements four methods: the Lead-3, TextRank, KM-MMR, and GAKM-MMR methods. The experimental results are used to show that the proposed method improves the quality of text summaries and achieves better redundancy and correlation than the other methods. This section has two major parts: experimental design and experimental results. The experimental design covers the automatic summary methods, the initial data, and the evaluation index used in the experiment. The experimental results cover the comparison of the automatic summary methods and the visualization of the results. The results show that GAKM-MMR produces higher-quality summaries than Lead-3, TextRank, and KM-MMR, with lower redundancy and higher correlation.

4.1. Experimental Design

The experimental design describes the overall scheme of the experiment. It has three parts: the experimental automatic summary methods, the experimental initial data, and the experimental evaluation index, each of which is detailed below.

4.1.1. Experimental Automatic Summary Method

This experiment uses four automatic text summary methods, Lead-3, TextRank, KM-MMR, and GAKM-MMR, and compares their performance experimentally. The four methods are described as follows:

(1) Lead-3 Method

Lead-3 is a widely used baseline method that directly extracts the first three sentences of a document as its summary, providing a simple and comparable benchmark. Its implementation depends on a few preprocessing decisions. First, one must decide whether to remove non-text content, such as news headlines and author information, and which sentence segmentation tool to use; these choices directly determine the semantic content and length of the “first three sentences”. Second, sentence boundaries are not always well defined, so different segmentation methods may produce different sentence sequences. The main role of Lead-3 is to provide a basic comparison benchmark for automatic text summary models, helping researchers quickly evaluate the effectiveness of new methods. Once the document has been segmented, selecting the first three sentences takes O(1) time, the lowest time complexity among the four methods.
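A minimal Lead-3 sketch makes the segmentation caveat concrete. The regular-expression splitter below is a hypothetical stand-in for a real sentence segmentation tool: it breaks after ".", "!" or "?" followed by whitespace, so an abbreviation such as "Dr." produces a spurious boundary, which is exactly the kind of difference that changes the "first three sentences".

```python
import re
from typing import List


def split_sentences(text: str) -> List[str]:
    """Naive splitter (assumption): break after . ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def lead_3(text: str) -> List[str]:
    """Lead-3 baseline: the first three segmented sentences form the summary."""
    return split_sentences(text)[:3]


doc = "Floods hit the region. Roads were closed. Schools shut early. Rain is expected to continue."
print(lead_3(doc))
print(split_sentences("Dr. Lee spoke. Then rain fell."))  # ['Dr.', 'Lee spoke.', 'Then rain fell.']
```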

(2) TextRank Method

TextRank is a graph-based ranking model for unsupervised text processing, adapted from the PageRank algorithm used for web page importance ranking. It operates by building a graph that represents relationships between text units to extract key information. In this model, words or sentences in a text are treated as vertices in a graph, while semantic relationships between these text units form the edges, resulting in a complete text network. During implementation, the algorithm optimizes the graph structure by setting an appropriate co-occurrence window and applying part-of-speech filtering, using weighted edges to represent the strength of associations between different units. This method supports extractive summarization tasks, relying solely on the internal structure of the text without requiring any external corpora, thus demonstrating strong cross-domain and cross-language adaptability. The time complexity of the TextRank method is O(N²), where N is the total number of sentences in the document.
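A sentence-level TextRank sketch is given below: sentences are vertices, edges are weighted by similarity, and scores are obtained by the weighted PageRank iteration WS(i) = (1 − d) + d · Σ_j w_ji / Σ_k w_jk · WS(j) with the customary damping factor d = 0.85. As an assumption, the edge weight is the cosine similarity of sentence vectors clipped at zero, rather than the word-overlap similarity of the original TextRank formulation. Building the weight matrix is what gives the O(N²) cost.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0 or nb == 0 else dot / (na * nb)


def textrank(vectors: List[Sequence[float]], d: float = 0.85,
             max_iter: int = 100, tol: float = 1e-6) -> List[float]:
    n = len(vectors)
    if n == 0:
        return []
    # Edge weights: non-negative cosine similarity, no self-loops (O(N^2) pairs).
    w = [[max(0.0, cosine(vectors[i], vectors[j])) if i != j else 0.0
          for j in range(n)] for i in range(n)]
    out_sum = [sum(row) for row in w]
    scores = [1.0] * n
    for _ in range(max_iter):
        # Weighted PageRank update; isolated vertices pass on no score.
        new = [(1 - d) + d * sum(w[j][i] / out_sum[j] * scores[j]
                                 for j in range(n) if out_sum[j] > 0)
               for i in range(n)]
        delta = max(abs(x - y) for x, y in zip(new, scores))
        scores = new
        if delta < tol:
            break
    return scores


vectors = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0]]
scores = textrank(vectors)
top_two = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:2]
print(scores, top_two)
```

The summary is then formed from the highest-scoring sentences; here sentence 1, which is similar to both others, ranks first.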

(3) KM-MMR Method

The KM-MMR method is an automatic text summary method that combines K-means and the Maximal-Marginal-Relevance algorithm, bringing together the advantages of word embedding distributions and other conventional models. KM-MMR first applies K-means clustering to the text, then computes the statistical and linguistic feature scores of each sentence, and finally uses the Maximal-Marginal-Relevance algorithm to select sentences with low redundancy and high relevance to form the final text summary. The time complexity of K-means is O(N × K × M) and that of the Maximal-Marginal-Relevance algorithm is O(N × F), so the time complexity of the KM-MMR method is O(N × K × M + N × F), where K and M are the number of clusters and the number of K-means iterations, respectively, and F is the number of sentences in the final summary.

(4) GAKM-MMR Method

The GAKM-MMR method proposed in this paper fuses the K-means algorithm, optimized by a Genetic Algorithm, with the Maximal-Marginal-Relevance algorithm. It first generates weighted sentence vectors using the Word2Vec model and TF-IDF. Second, it encodes the sentences of the document as random binary sequences to obtain multiple individuals, which form the initial population. It then combines K-means with the selection, crossover, and mutation operations of the Genetic Algorithm to obtain the best clustering. Next, it computes the linguistic and statistical features of the document to obtain a feature score for each sentence. Finally, it uses the Maximal-Marginal-Relevance algorithm to select sentence combinations for the summary, yielding a summary with low redundancy and high correlation. Each individual in the Genetic Algorithm executes the K-means algorithm once to cluster the sentences, so the time complexity of GAKM-MMR is determined by the Genetic Algorithm and K-means. The time complexity of the Genetic Algorithm is O(G × P × N²), where the N² term comes from the pairwise sentence distances in the fitness evaluation; adding one K-means run, O(N × K × M), per individual gives a time complexity of O(G × P × (N × K × M + N²)) for the GAKM-MMR method, where G is the number of evolutionary iterations in the Genetic Algorithm and P is the population size.
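The first step, building TF-IDF-weighted sentence vectors from word vectors, can be sketched as a weighted average. In practice the word vectors would come from a trained Word2Vec model (for example gensim's `Word2Vec(...).wv`, with vector size 300 in this experiment); here a small dictionary of toy 2-D vectors stands in for it. Computing IDF over the documents of a set, using the smoothed form log((1 + n) / (1 + df)) + 1, and weighting each word by term count × IDF are assumptions rather than the paper's exact formulation.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def idf_table(documents: List[List[str]]) -> Dict[str, float]:
    n = len(documents)
    df = Counter(w for doc in documents for w in set(doc))
    # Smoothed IDF (assumption): keeps every weight strictly positive.
    return {w: math.log((1 + n) / (1 + c)) + 1.0 for w, c in df.items()}


def sentence_vector(tokens: List[str], word_vectors: Dict[str, Sequence[float]],
                    idf: Dict[str, float], dim: int) -> List[float]:
    """TF-IDF-weighted average of the word vectors of a sentence."""
    total = [0.0] * dim
    weight_sum = 0.0
    for w, tf in Counter(tokens).items():
        if w not in word_vectors or w not in idf:
            continue  # out-of-vocabulary words are skipped
        weight = tf * idf[w]
        total = [t + weight * x for t, x in zip(total, word_vectors[w])]
        weight_sum += weight
    return [t / weight_sum for t in total] if weight_sum > 0 else total


word_vectors = {"storm": [1.0, 0.0], "flood": [0.8, 0.2], "the": [0.0, 1.0]}  # toy stand-in
documents = [["the", "storm"], ["the", "flood"]]
idf = idf_table(documents)
vec = sentence_vector(["the", "storm"], word_vectors, idf, dim=2)
print(vec)
```

Because "the" appears in every document, it receives the smallest weight, so the sentence vector leans towards the more distinctive word "storm".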

4.1.2. Experimental Initial Data

This paper uses the Document Understanding Conference 2007 (DUC2007) dataset, drawn from the AQUAINT corpus. DUC2007 consists of news articles from various newspapers, organized into 45 document sets of 25 documents each, and the assessors of this dataset created four reference summaries for each document set. In this experiment, we merged the 25 documents of each document set into a single document and compared the summary generated for it by each method with the four reference summaries.

The parameter settings follow [16,31,32]. For the Word2Vec model, the vector size is set to 300, and the number of iterations is set to 8. For the Genetic Algorithm, the parameters are as follows: minimum mutation probability

, maximum mutation probability

, minimum crossover probability

, maximum crossover probability

, number of generations (evolutionary iterations)

, and population size

. These parameters are set as follows:

,

,

,

,

, and

. For the Maximal-Marginal-Relevance algorithm, the parameter value

is set to 0.7 in Formula (4).

4.1.3. Experimental Evaluation Index

ROUGE is a set of metrics for evaluating automatic text summaries and machine translation: it compares an automatically generated summary with a set of reference summaries and produces scores that measure the similarity between them [33,34]. This experiment mainly evaluates the generated summaries with ROUGE-1, ROUGE-2, and ROUGE-L. ROUGE-1 is the ratio of the number of words shared by the two summaries to the total number of words in the reference summary. ROUGE-2 is the ratio of the number of word pairs shared by the two summaries to the total number of word pairs in the reference summary, and therefore measures the word-pair correlation of automatic summaries. ROUGE-L is based on the number of words in the longest common subsequence (LCS) of the two summaries, and measures the correlation of the text summary.

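The experimental evaluation indices are calculated as follows:

$$\mathrm{ROUGE\text{-}1}=\frac{\sum_{S\in\{\mathrm{Ref}\}}\sum_{\mathrm{gram}_1\in S}\mathrm{Count}_{\mathrm{match}}(\mathrm{gram}_1)}{\sum_{S\in\{\mathrm{Ref}\}}\sum_{\mathrm{gram}_1\in S}\mathrm{Count}(\mathrm{gram}_1)}$$

$$\mathrm{ROUGE\text{-}2}=\frac{\sum_{S\in\{\mathrm{Ref}\}}\sum_{\mathrm{gram}_2\in S}\mathrm{Count}_{\mathrm{match}}(\mathrm{gram}_2)}{\sum_{S\in\{\mathrm{Ref}\}}\sum_{\mathrm{gram}_2\in S}\mathrm{Count}(\mathrm{gram}_2)}$$

$$R_{lcs}=\frac{\sum_{i=1}^{u}\mathrm{LCS}_{\cup}(r_i,C)}{m},\qquad P_{lcs}=\frac{\sum_{i=1}^{u}\mathrm{LCS}_{\cup}(r_i,C)}{n},\qquad \mathrm{ROUGE\text{-}L}=\frac{(1+\beta^{2})\,R_{lcs}\,P_{lcs}}{R_{lcs}+\beta^{2}P_{lcs}}$$

where $\mathrm{ROUGE\text{-}1}$ represents the ROUGE-1 score, $\mathrm{Ref}$ represents the reference summary, $S$ is a sentence of the reference summary, $\mathrm{Count}_{\mathrm{match}}(\mathrm{gram}_1)$ is the number of words shared by the candidate summary and the reference summary, and $\mathrm{Count}(\mathrm{gram}_1)$ is the total number of words in the reference summary. $\mathrm{ROUGE\text{-}2}$ represents the ROUGE-2 score, $\mathrm{gram}_2$ is a word pair in the sentence, $\mathrm{Count}_{\mathrm{match}}(\mathrm{gram}_2)$ is the number of word pairs shared by the candidate summary and the reference summary, and $\mathrm{Count}(\mathrm{gram}_2)$ is the total number of word pairs in the reference summary. $\mathrm{ROUGE\text{-}L}$ represents the ROUGE-L score, $\mathrm{LCS}_{\cup}(r_i,C)$ is the longest-common-subsequence score between the reference summary sentence $r_i$ and the candidate summary $C$, $u$ is the number of sentences in the reference summary, $m$ and $n$ are the lengths of the reference summary and the candidate summary in words, respectively, and $\beta$ is set to a very large value.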
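The three metrics can be sketched on tokenized text to make the definitions concrete. This is a simplified illustration, not the official ROUGE toolkit: it uses clipped n-gram counts against a single reference, computes ROUGE-L from one LCS over the whole token sequences instead of the summary-level union LCS, and ignores the toolkit's stemming and stop-word options. Scores against the four references are then averaged, as described for Table 1.

```python
from collections import Counter
from typing import List, Tuple


def ngrams(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: List[str], reference: List[str], n: int) -> float:
    """Recall-oriented ROUGE-N: clipped matching n-grams / reference n-grams."""
    ref, cand = ngrams(reference, n), ngrams(candidate, n)
    total = sum(ref.values())
    match = sum(min(c, cand[g]) for g, c in ref.items())
    return match / total if total else 0.0


def lcs_length(a: List[str], b: List[str]) -> int:
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, 1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l(candidate: List[str], reference: List[str], beta: float = 8.0) -> float:
    """LCS-based F-measure; a large beta makes it approach LCS recall."""
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0
    r, p = lcs / len(reference), lcs / len(candidate)
    return (1 + beta ** 2) * r * p / (r + beta ** 2 * p)


cand = "the cat sat on the mat".split()
refs = ["the cat lay on the mat".split(), "a cat sat on a mat".split()]
per_ref: List[Tuple[float, float, float]] = [
    (rouge_n(cand, r, 1), rouge_n(cand, r, 2), rouge_l(cand, r)) for r in refs]
averages = [sum(col) / len(per_ref) for col in zip(*per_ref)]  # R1, R2, RL
print(per_ref[0], averages)
```

For the first reference, five of its six words and three of its five word pairs occur in the candidate, giving ROUGE-1 = 5/6 and ROUGE-2 = 0.6.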

4.2. Experimental Results

The experimental results were obtained by applying all four automatic summary methods to 15 document sets of the DUC2007 dataset.

Table 1 reports the average results obtained by the four automatic summary methods on the 15 document sets, using three evaluation indicators: ROUGE-1 (R1), ROUGE-2 (R2), and ROUGE-L (RL). For each document set, each candidate summary is compared with the four reference summaries, yielding four R1 scores, four R2 scores, and four RL scores. These are then averaged to give the final R1, R2, and RL values for that document set.

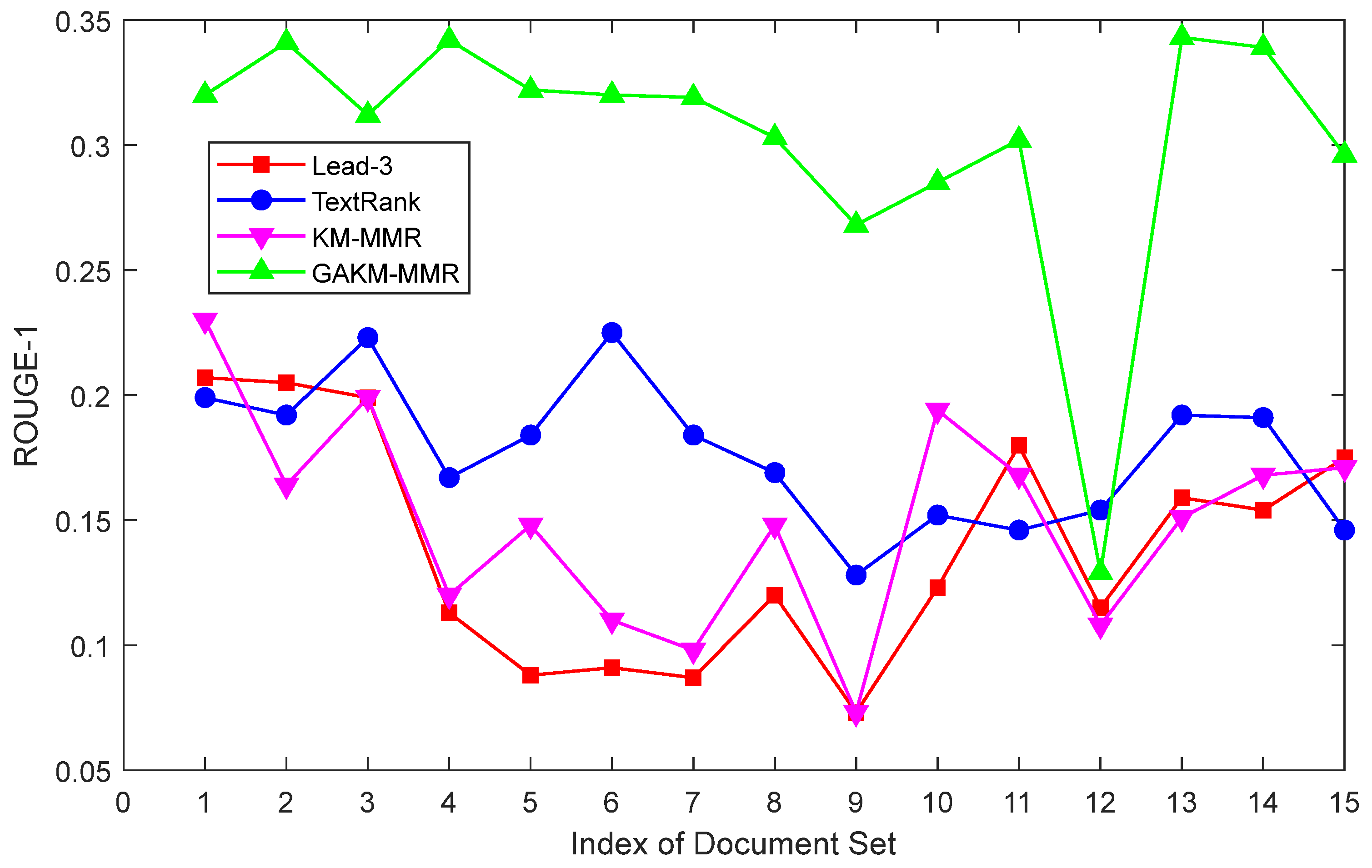

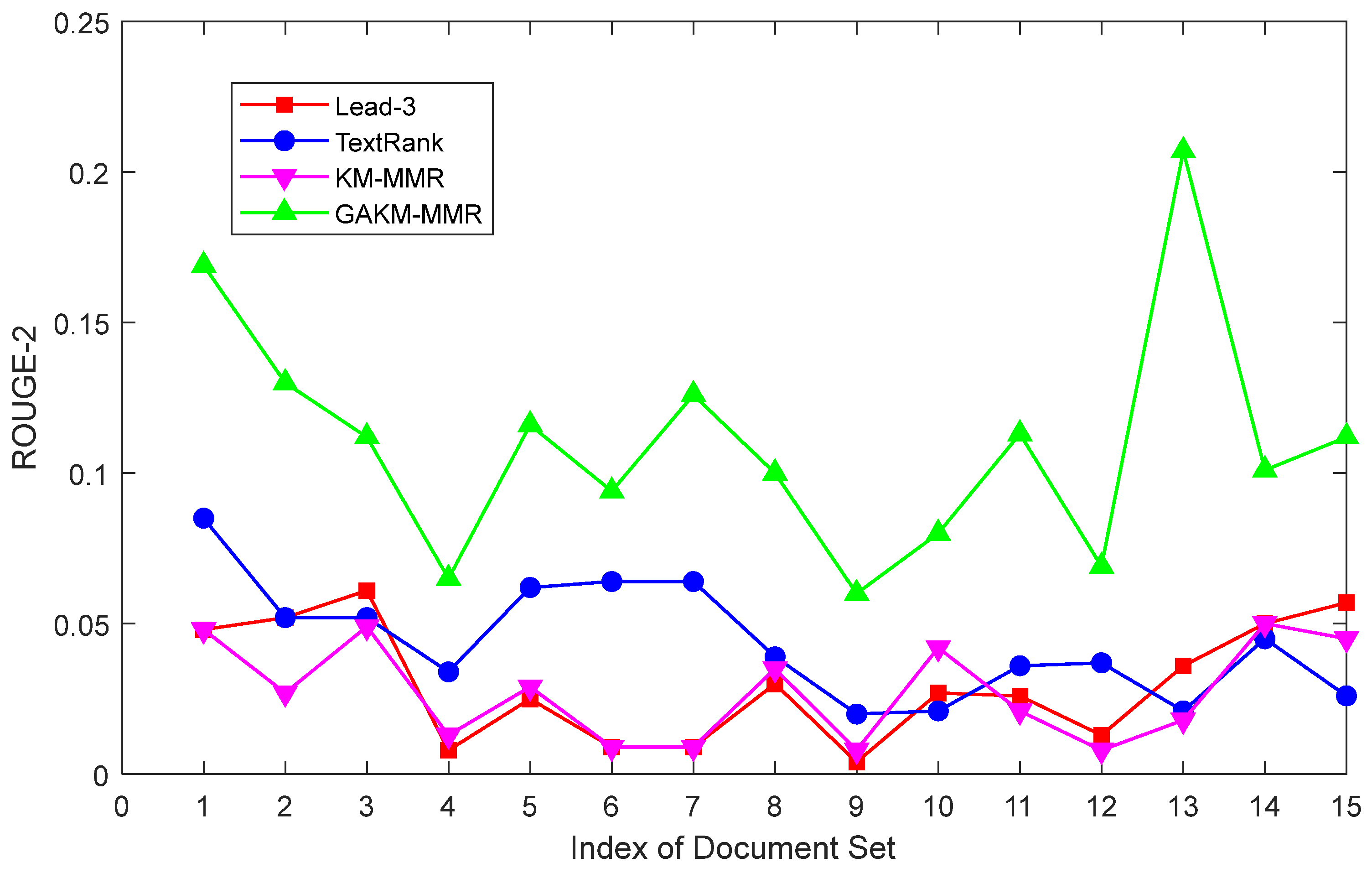

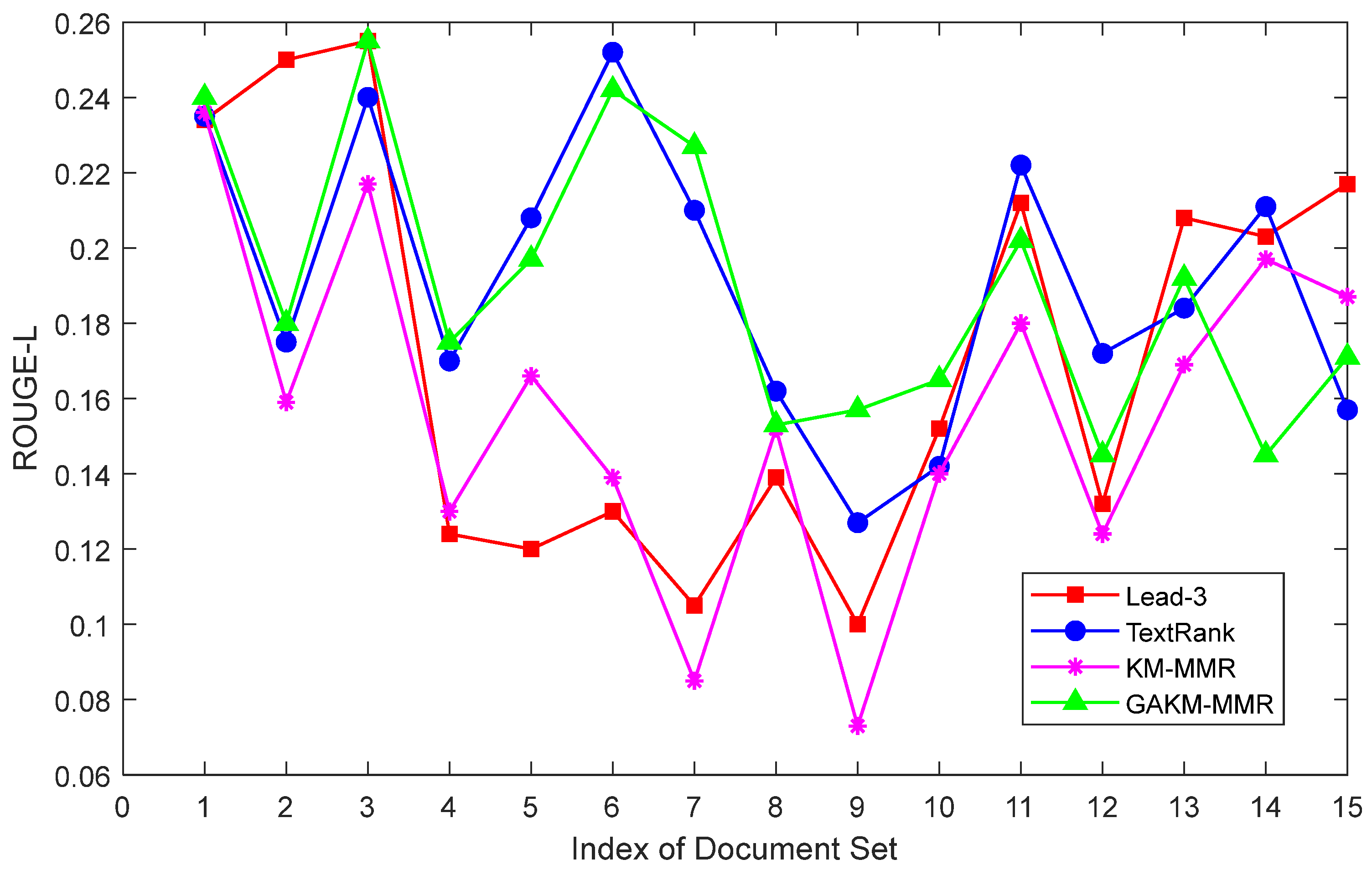

Figure 3, Figure 4 and Figure 5 compare the three evaluation indicators for all four automatic summary methods, based on Table 1.

Figure 3 compares the ROUGE-1 scores of the four automatic summary methods. In Figure 3, the average ROUGE-1 scores of Lead-3, TextRank, KM-MMR, and GAKM-MMR are 0.139, 0.177, 0.15, and 0.303, respectively. The average ROUGE-1 score of the GAKM-MMR method is higher than those of the other three methods by 1.17 times, 71.23%, and 1.02 times, respectively.

Figure 3 shows that the GAKM-MMR method captures the important information in the text more accurately than the other three automatic summary methods.

Figure 4 compares the ROUGE-2 scores of the four automatic summary methods. In Figure 4, the average ROUGE-2 scores of Lead-3, TextRank, KM-MMR, and GAKM-MMR are 0.03, 0.044, 0.027, and 0.11, respectively. The average ROUGE-2 score of the GAKM-MMR method is higher than those of the other three methods by 2.64 times, 1.51 times, and 3.02 times, respectively.

Figure 4 also shows that the GAKM-MMR method captures the important information in the text more accurately than the other three automatic summary methods.

Figure 5 compares the ROUGE-L scores of the four automatic summary methods. In Figure 5, the average ROUGE-L scores of Lead-3, TextRank, KM-MMR, and GAKM-MMR are 0.172, 0.191, 0.157, and 0.19, respectively. The average ROUGE-L score of the GAKM-MMR method is close to that of the TextRank method, and is 10.27% and 20.9% higher than those of the Lead-3 and KM-MMR methods, respectively.

Figure 5 also shows that the GAKM-MMR method captures the important information in the text more accurately than the Lead-3 and KM-MMR methods.

The experiment compares the performance of the four automatic summary methods on the generated summaries. First, the results indicate that the GAKM-MMR method performs better on the DUC2007 dataset than the other three methods: Lead-3, TextRank, and KM-MMR. Second, the proposed method generates summaries with low redundancy and high correlation. Finally, because the proposed method takes multiple linguistic and statistical features into account and uses the Maximal-Marginal-Relevance algorithm, it extracts more accurate summaries. Overall, the results show that the GAKM-MMR method improves the accuracy of automatic text summaries and reduces redundancy.

5. Conclusions and Future Work

This paper proposes an automatic text summary method based on the K-means clustering algorithm optimized with symmetry and the Maximal-Marginal-Relevance algorithm. The method optimizes K-means clustering with a Genetic Algorithm with symmetry and reduces sentence redundancy with the Maximal-Marginal-Relevance algorithm. First, it obtains semantically rich weighted word vectors from the text through TF-IDF and the Word2Vec model, and uses these weighted word vectors to generate the representation vectors of sentences. Second, it uses the Genetic Algorithm with symmetry to optimize the K value, improving the silhouette coefficient of the K-means sentence-clustering results and thus the clustering effect. Then, it extracts statistical and linguistic features of sentences from the text and uses them to calculate sentence feature scores. Finally, it selects sentences with higher feature scores from the clusters, uses the Maximal-Marginal-Relevance algorithm to calculate their MMR values, and selects the sentences with higher MMR values to form the final text summary. The experimental results show that the proposed GAKM-MMR method improves the accuracy of automatic text summaries and reduces redundancy compared with Lead-3, TextRank, and KM-MMR. In conclusion, the proposed method obtains better-quality text summaries.

Several aspects of this work call for further research. First, this paper uses the Genetic Algorithm to optimize the choice of K value, which can easily fall into local optima. To address this, other optimization algorithms, such as simulated annealing and ant colony optimization, could be introduced to further improve the clustering effect. Second, this paper scores sentences using their statistical and linguistic features, but the linguistic features only consider the verb-noun rate and the average similarity. They could be extended with suggestive linking (cue) words and other factors.

Further work can address model parameter selection, the diversity of experimental datasets, and significance testing. Regarding parameter selection, the parameters of each model were primarily drawn from the referenced literature; future research should examine how different parameter values affect model performance, to justify the parameter choices more fully. Regarding datasets, this experiment uses only news-domain data; future research should compare the methods across diverse datasets to evaluate their performance more comprehensively. Regarding significance testing, the experiment evaluates performance with the ROUGE metrics; future research should test the statistical significance of the improvements of the proposed method, thereby increasing confidence in the experimental conclusions. In addition, this paper obtains word vectors and sentence vectors with the Word2Vec model, which does not effectively handle polysemy. Large Language Models, such as the GPT series and the DeepSeek series, could be used to obtain more accurate representation vectors for terms and sentences.

Author Contributions

Conceptualization, H.S., S.L., Q.T., L.P. and W.L.; methodology, H.S., S.L., B.Y., Y.S., Q.T., L.P. and W.L.; validation, B.Y.; investigation, S.L., Q.T. and L.P.; data curation, Y.S.; writing—original draft, H.S., B.Y., Y.S., Q.T., L.P. and W.L.; writing—review and editing, H.S., S.L., B.Y., Y.S., Q.T., L.P. and W.L.; supervision, H.S. and L.P.; project administration, Q.T. and W.L.; funding acquisition, H.S. and W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by Sichuan Science and Technology Program (Grant No.2024YFHZ0087), the “Juyuan Xingchuan” Project of Sichuan Provincial Department of Science and Technology for Central Universities and Institutes in Sichuan (Grant No. 2025ZHCG0013), and the Intelligent Policing Key Laboratory of Sichuan Province (Grant No. ZNJW2024KFQN012).

Data Availability Statement

The data is contained within the article.

Conflicts of Interest

Authors Mr. Hongqing Song, Mr. Silin Li, and Mr. Lei Peng were employed by the company “Civil Aviation Logistics Technology Co., Ltd.”. Author Mr. Quanyi Tao was employed by the company “Chengdu Baize Zhihui Technology Co., Ltd.”. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Girsang, A.S.; Amadeus, F.J. Extractive Text Summarization for Indonesian News Article Using Ant System Algorithm. J. Adv. Inf. Technol. 2023, 14, 295–301.

2. Luo, M.; Xue, B.; Niu, B. A comprehensive survey for automatic text summarization: Techniques, approaches and perspectives. Neurocomputing 2024, 603, 128280.

3. Diao, Y.; Lin, H.; Yang, L.; Fan, X.; Chu, Y.; Wu, D.; Zhang, D.; Xu, K. CRHASum: Extractive text summarization with contextualized-representation hierarchical-attention summarization network. Neural Comput. Appl. 2022, 32, 11491–11503.

4. Sharmila, P.; Deisy, C.; Parthasarathy, S. Ext-ICAS: A Novel Self-Normalized Extractive Intra Cosine Attention Similarity Summarization. Comput. Syst. Sci. Eng. 2022, 45, 377–393.

5. Kartha, R.S.; Agal, S.; Odedra, N.D.; Nanda, C.S.K.; Rao, V.S.; Kuthe, A.M.; Taloba, A.I. NLP-Based Automatic Summarization using Bidirectional Encoder Representations from Transformers-Long Short Term Memory Hybrid Model: Enhancing Text Compression. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 1223–1236.

6. Citarella, A.A.; Barbella, M.; Ciobanu, M.G.; De Marco, F.; Di Biasi, L.; Tortora, G. Assessing the effectiveness of ROUGE as unbiased metric in Extractive vs. Abstractive summarization techniques. J. Comput. Sci. 2025, 87, 102571.

7. Biswas, P.K.; Iakubovich, A. Extractive Summarization of Call Transcripts. IEEE Access 2022, 10, 119826–119840.

8. AbdelAziz, N.M.; Ali, A.A.; Naguib, S.M.; Fayed, L.S. Clustering-based topic modeling for biomedical documents extractive text summarization. J. Supercomput. 2025, 81, 171.

9. Zhao, H.; Zhang, W.; Huang, M.; Feng, S.; Wu, Y. A Multi-Granularity Heterogeneous Graph for Extractive Text Summarization. Electronics 2023, 12, 2184.

10. Hakami, N.A.; Mahmoud, H.A.H. A Dual Attention Encoder-Decoder Text Summarization Model. Comput. Mater. Contin. 2023, 74, 3697–3710.

11. Bao, G.; Zhang, Y. A General Contextualized Rewriting Framework for Text Summarization. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 1624–1635.

12. Rakrouki, M.A.; Alharbe, N.; Khayyat, M.; Aljohani, A. TG-SMR: A Text Summarization Algorithm Based on Topic and Graph Models. Comput. Syst. Sci. Eng. 2023, 45, 395–408.

13. Mehamed, M.A.; Xiong, S.; Aberha, A.F. Hybrid Approach for Automatic Text Summarization for Low-resourced Amharic Language. ACM Trans. Asian Low-Resource Lang. Inf. Process. 2025, 24, 1–14.

14. Mohd, M.; Jan, R.; Shah, M. Text document summarization using word embedding. Expert Syst. Appl. 2020, 143, 112958.

15. Hassan, A.Q.A.; Al-Onazi, B.B.; Maashi, M.; Darem, A.A.; Abunadi, I.; Mahmud, A. Enhancing extractive text summarization using natural language processing with an optimal deep learning model. AIMS Math. 2024, 9, 12588–12609.

16. Gupta, S.; Kanchinadam, T.; Conathan, D.; Fung, G. Task-Optimized Word Embeddings for Text Classification Representations. Front. Appl. Math. Stat. 2020, 5, 67.

17. Mao, X.; Yang, H.; Huang, S.; Liu, Y.; Li, R. Extractive summarization using supervised and unsupervised learning. Expert Syst. Appl. 2019, 133, 173–181.

18. Rani, R.; Lobiyal, D.K. A weighted word embedding based approach for extractive text summarization. Expert Syst. Appl. 2021, 186, 115867–115868.

19. Kong, H.; Kim, W. Generating summary sentences using Adversarially Regularized Autoencoders with conditional context. Expert Syst. Appl. 2019, 130, 1–11.

20. Li, W.; Zhuge, H. Abstractive Multi-Document Summarization based on Semantic Link Network. IEEE Trans. Knowl. Data Eng. 2019, 33, 43–54.

21. Su, M.-H.; Wu, C.-H.; Cheng, H.-T. A Two-Stage Transformer-Based Approach for Variable-Length Abstractive Summarization. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2061–2072.

22. Benedetto, I.; La Quatra, M.; Cagliero, L.; Vassio, L.; Trevisan, M. TASP: Topic-based abstractive summarization of Facebook text posts. Expert Syst. Appl. 2024, 255, 124567.

23. Li, S.; Xu, J. A two-step abstractive summarization model with asynchronous and enriched-information decoding. Neural Comput. Appl. 2021, 33, 1159–1170.

24. Prabha, P.L.; Parvathy, M. Abstractive text summarization from Amazon food review dataset using modified attention based Bi-LSTM autoencoder model. J. Chin. Inst. Eng. 2025, 48, 268–282.

25. Chen, Y.; Ma, Y.; Mao, X.; Li, Q. Multi-Task Learning for Abstractive and Extractive Summarization. Data Sci. Eng. 2019, 4, 14–23.

26. Liu, W.; Gao, Y.; Li, J.; Yang, Y. A Combined Extractive With Abstractive Model for Summarization. IEEE Access 2021, 9, 43970–43980.

27. Ma, T.; Pan, Q.; Rong, H.; Qian, Y.; Tian, Y.; Al-Nabhan, N. T-BERTSum: Topic-Aware Text Summarization Based on BERT. IEEE Trans. Comput. Soc. Syst. 2021, 9, 879–890.

28. Wang, Q.; Liu, P.; Zhu, Z.; Yin, H.; Zhang, Q.; Zhang, L. A Text Abstraction Summary Model Based on BERT Word Embedding and Reinforcement Learning. Appl. Sci. 2019, 9, 4701.

29. Yang, Z.G. Neural text summarization for Hungarian. Acta Linguist. Acad. 2022, 69, 474–500.

30. Mhatre, S.; Ragha, L.L. A Hybrid Approach for Automatic Text Summarization by Handling Out-of-Vocabulary Words Using TextR-BLG Pointer Algorithm. Sci. Tech. Inf. Process. 2024, 51, 72–83.

31. Liu, W.; Gan, Z.; Xi, T.; Du, Y.; Wu, J.; He, Y.; Jiang, P.; Liu, X.; Lai, X. A semantic and intelligent focused crawler based on semantic vector space model and membrane computing optimization algorithm. Appl. Intell. 2023, 53, 7390–7407.

32. Agarwal, A.; Xu, S.; Grabmair, M. Extractive Summarization of Legal Decisions using Multi-task Learning and Maximal Marginal Relevance. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 1857–1872.

33. Tomer, M.; Kumar, M.; Hashmi, A.; Sharma, B.; Tomer, U. Enhancing metaheuristic based extractive text summarization with fuzzy logic. Neural Comput. Appl. 2023, 35, 9711–9723.

34. Vo, T. A novel semantic-enhanced generative adversarial network for abstractive text summarization. Soft Comput. 2023, 27, 6267–6280.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).