The analysis is organized into four subsections. First, we provide a quantitative evaluation of all tested architectures, reporting standard regression metrics: root mean squared error (RMSE), coefficient of determination (R²), and correlation coefficients between predicted and ground-truth normalized pressures. Second, we complement these metrics with qualitative visualizations, highlighting representative FEM samples and the corresponding predictions of the neural networks. Third, we conduct a focused sensitivity study, contrasting two modeling stages: (i) a baseline stage where only the undeformed and deformed boundary profiles are provided as inputs, and (ii) an enriched stage where additional geometric features, namely displacement differences (Δu), normals, and tangents, are incorporated into the node attributes. This comparison clarifies the contribution of mechanics-informed features beyond raw geometry. Finally, we present the architectural evolution and optimization effects, documenting the progression from enriched GNN to CNN architectures and showing how Bayesian hyperparameter optimization improved convergence and predictive stability.

This layered presentation provides not only a rigorous benchmarking of the framework’s predictive capability but also a transparent view of how input representation and network design choices influence inverse load identification performance.
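For reference, the three metrics named above can be computed as in the following minimal sketch. It uses only the Python standard library; the function name `regression_metrics` and the sample values are hypothetical and serve only to illustrate the definitions (population-style RMSE, R² relative to the mean of the true values, and Pearson r).

```python
import math

def regression_metrics(y_true, y_pred):
    """Return (RMSE, R^2, Pearson r) for two equal-length sequences.

    Assumes the targets and the predictions are not constant,
    otherwise R^2 and r are undefined (division by zero).
    """
    n = len(y_true)
    if n != len(y_pred) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))   # residual sum of squares
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)              # spread of the targets
    ss_pred = sum((p - mean_p) ** 2 for p in y_pred)             # spread of the predictions
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    rmse = math.sqrt(ss_res / n)
    r2 = 1.0 - ss_res / ss_tot
    r = cov / math.sqrt(ss_tot * ss_pred)
    return rmse, r2, r

# Hypothetical normalized pressures in [0, 0.1], for illustration only.
y_true = [0.01, 0.03, 0.05, 0.07, 0.09]
y_pred = [0.02, 0.03, 0.04, 0.08, 0.08]
rmse, r2, r = regression_metrics(y_true, y_pred)
print(f"RMSE={rmse:.4f}  R2={r2:.3f}  r={r:.3f}")
```

Note that R² and r answer different questions: R² penalizes bias and scale errors in the predictions, whereas r only measures linear association.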

7.1. Quantitative Evaluation—Graph Neural Networks (Boundary-Only Inputs)

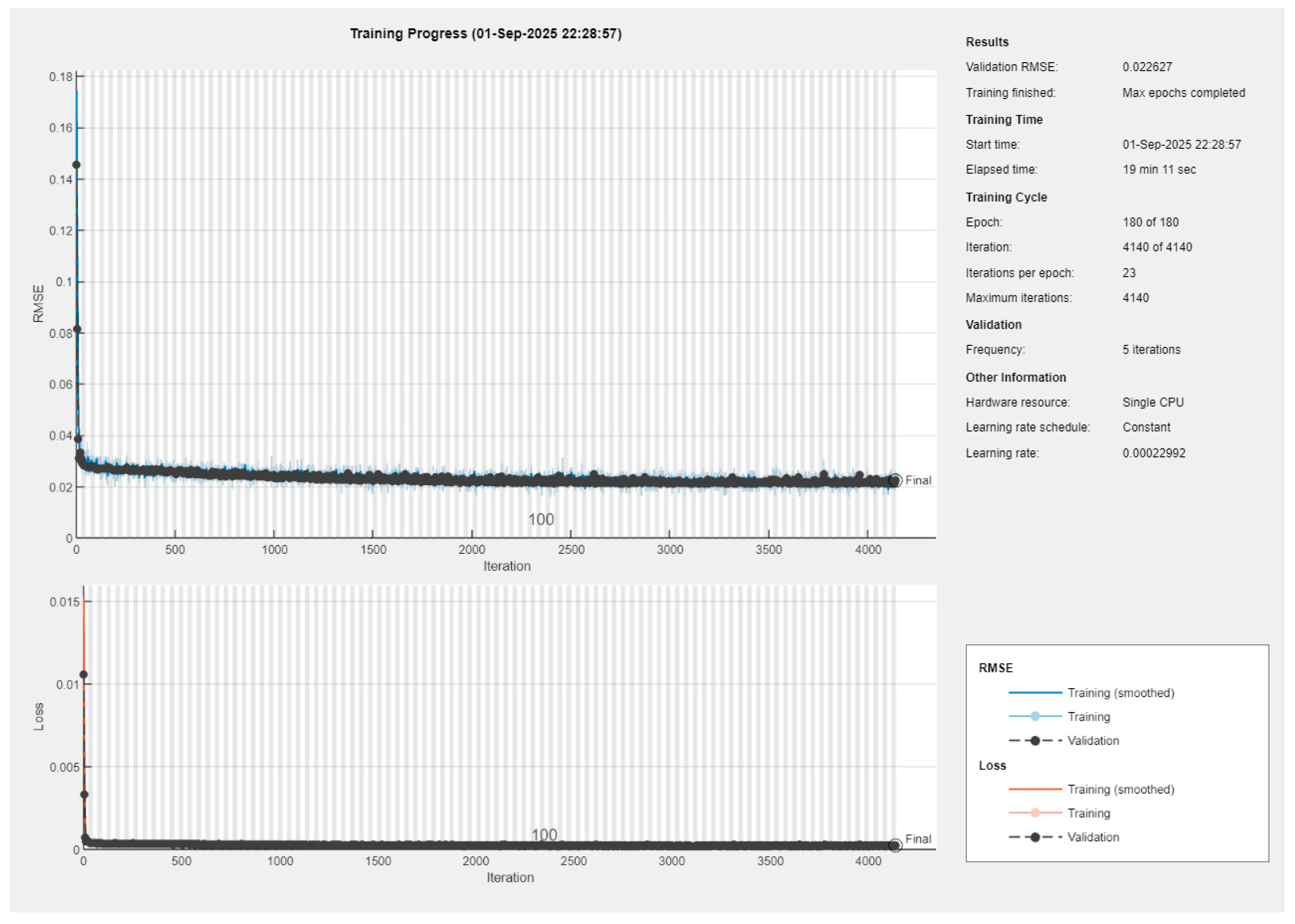

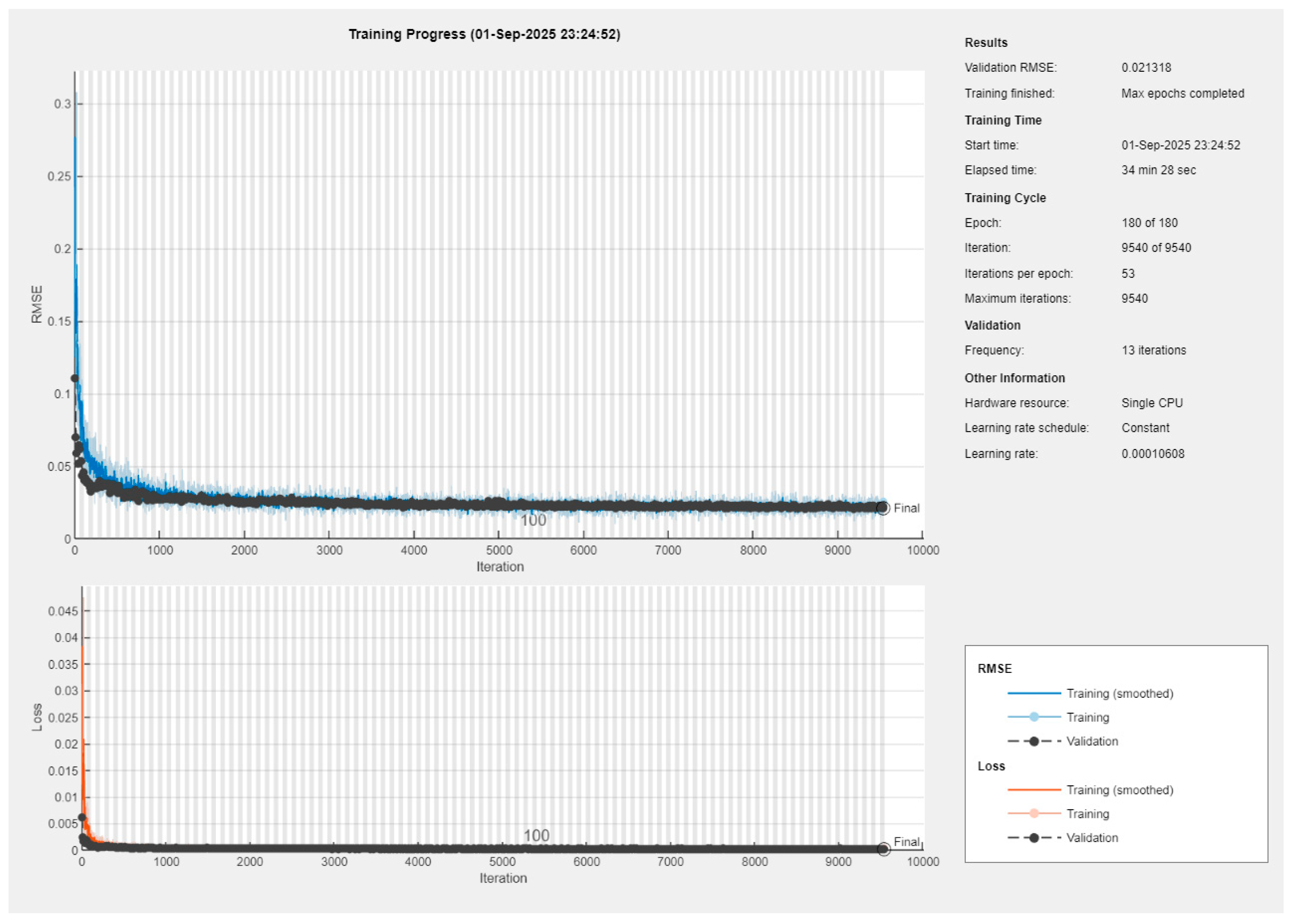

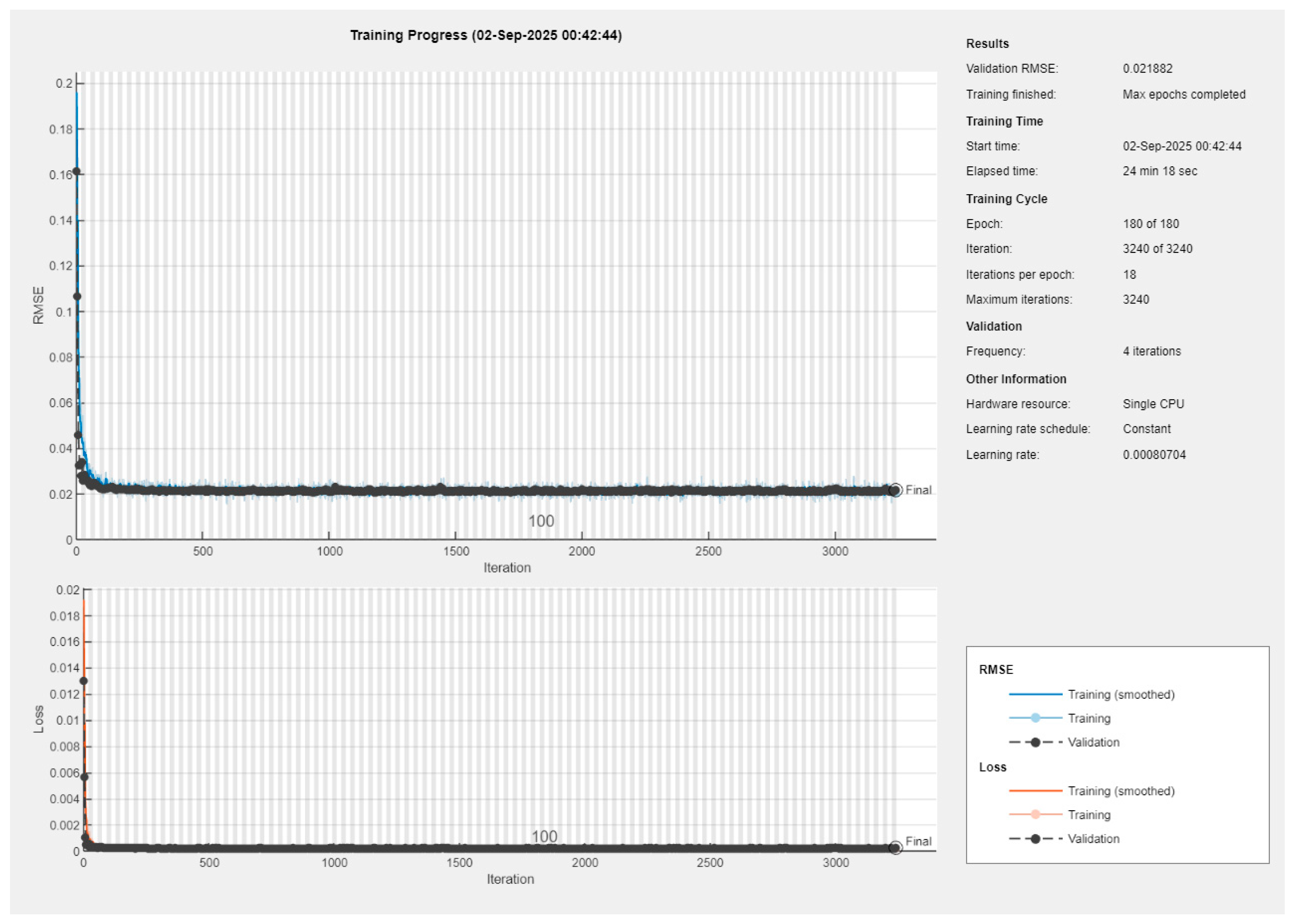

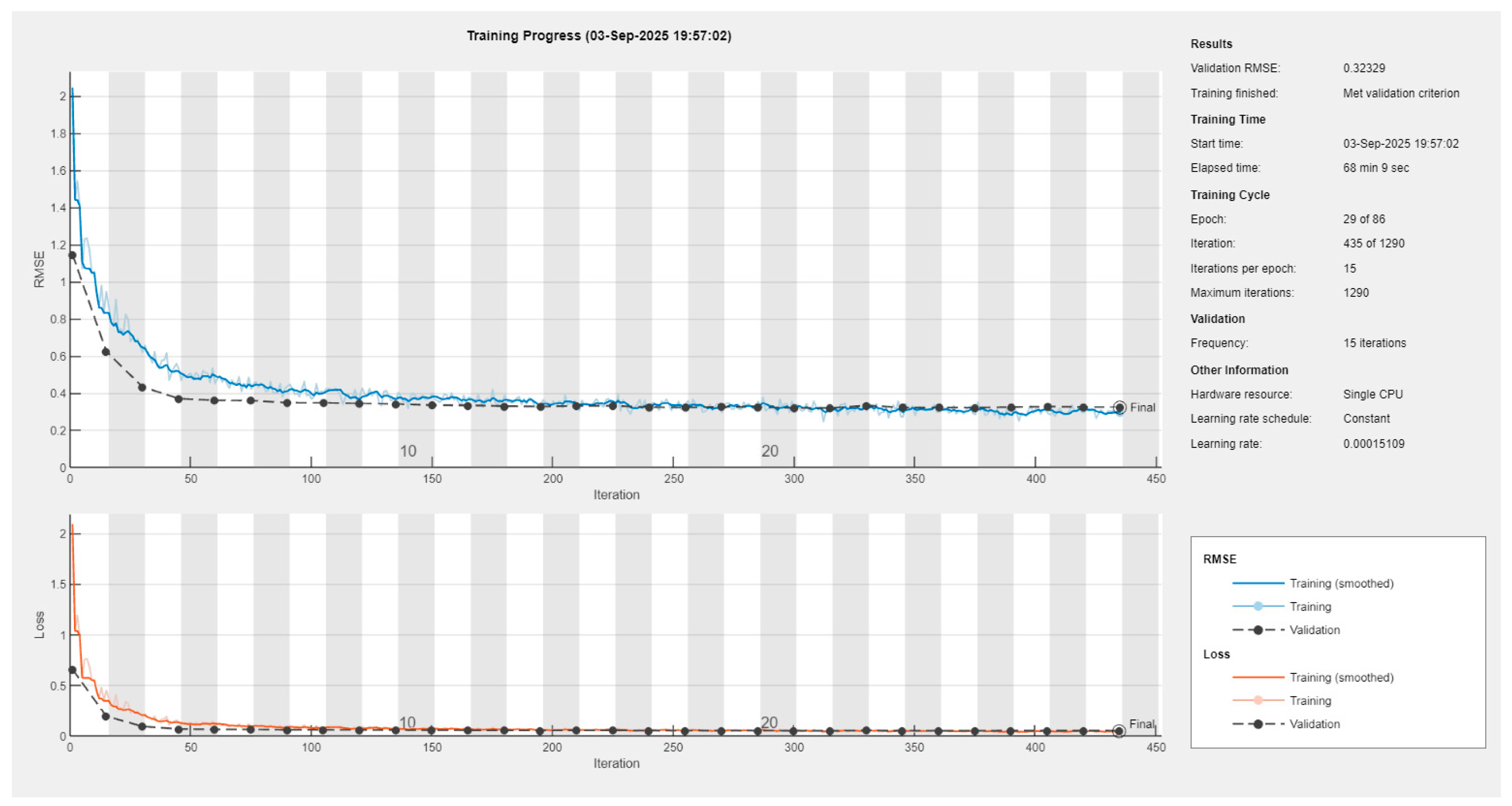

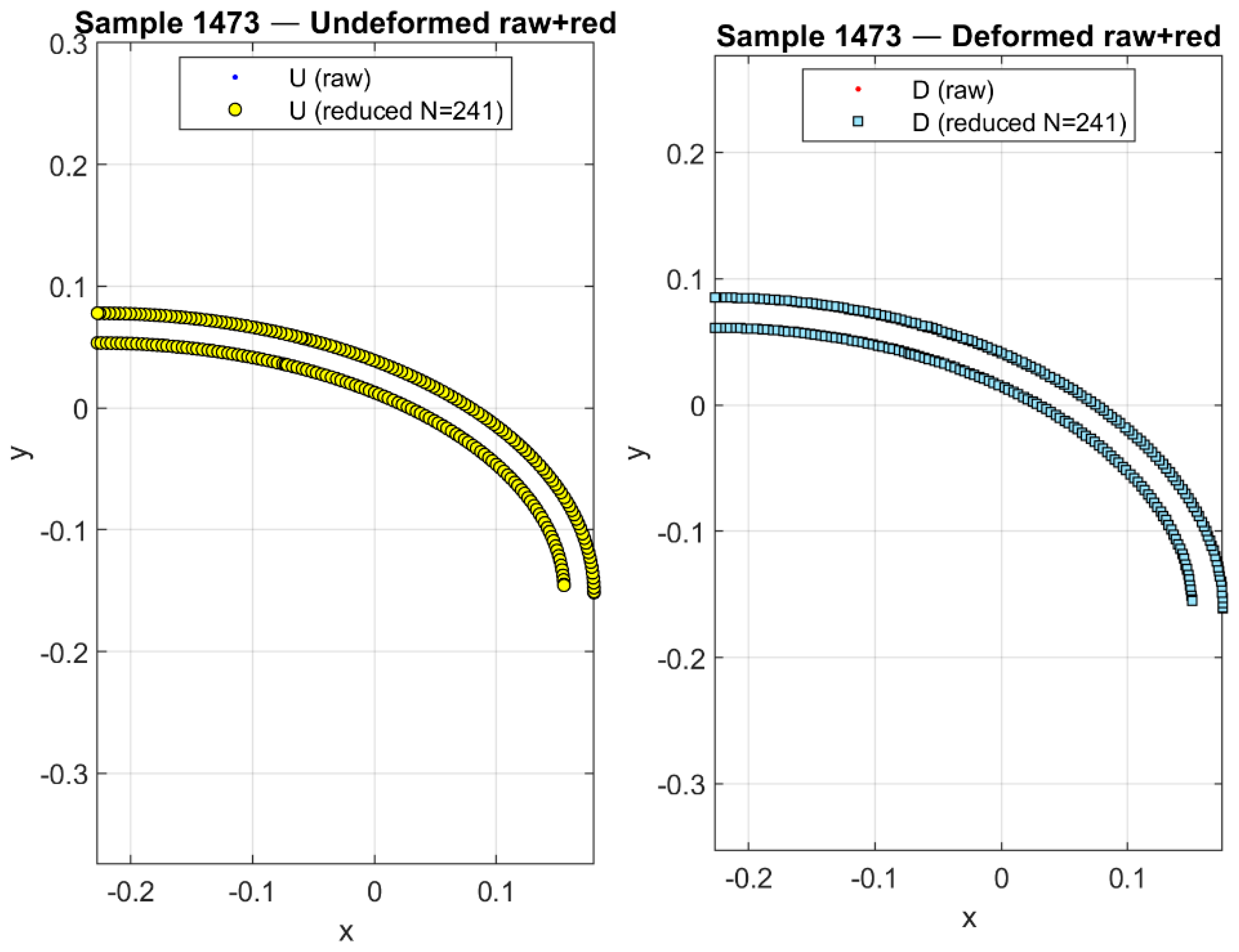

For the GNN models trained with only undeformed and deformed boundary profiles as inputs, the Bayesian-optimized architectures converged to stable and reproducible performance across all splits of the dataset. Training progress curves (see Appendix A) indicated rapid error decay within the first few hundred iterations, followed by a long plateau where both training and validation losses remained nearly parallel. The validation RMSE stabilized around 0.021–0.022 for both internal and external pressures, with negligible divergence from training curves. These learning dynamics confirm that the chosen configurations effectively prevented overfitting while ensuring consistent convergence.

When the input representation was enriched by including additional mechanics-informed features—namely displacement differences (Δu), normals, and tangents—the optimization process identified slightly different architectures for the internal and external pressure regressors. The enriched models maintained the same level of RMSE as the boundary-only baseline but achieved marginally higher correlation with ground-truth pressures and exhibited smoother convergence behavior. This indicates that while the boundary-only setting already provides a strong baseline, the inclusion of enriched features enhances model stability and predictive fidelity, particularly for cases with higher pressure variability.
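To make the enriched node attributes concrete, the sketch below assembles per-node features from an undeformed and a deformed boundary. It is an illustration under stated assumptions, not the feature pipeline used in this study: the boundary is assumed to be a closed contour with nodes ordered counterclockwise, tangents are estimated by central differences on the deformed contour, and normals are obtained by rotating the tangents so that they point outward. The function name `enriched_node_features` and the feature ordering are hypothetical.

```python
import math

def enriched_node_features(undeformed, deformed):
    """Build per-node features (x0, y0, x1, y1, dux, duy, nx, ny, tx, ty).

    Assumptions (not taken from the study): closed contour, counterclockwise
    node ordering, tangents and normals evaluated on the deformed boundary.
    """
    n = len(undeformed)
    if n != len(deformed) or n < 3:
        raise ValueError("need two contours with the same number (>= 3) of nodes")
    features = []
    for i in range(n):
        x0, y0 = undeformed[i]
        x1, y1 = deformed[i]
        # Central difference with wrap-around, since the contour is closed.
        xp, yp = deformed[(i - 1) % n]
        xn, yn = deformed[(i + 1) % n]
        tx, ty = xn - xp, yn - yp
        length = math.hypot(tx, ty)
        tx, ty = tx / length, ty / length
        # Rotating the tangent by -90 degrees gives the outward normal
        # for a counterclockwise contour.
        nx, ny = ty, -tx
        features.append((x0, y0, x1, y1, x1 - x0, y1 - y0, nx, ny, tx, ty))
    return features

def circle(radius, n):
    """Hypothetical test contour: n nodes on a circle, counterclockwise."""
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]

# A unit circle inflated to radius 1.2 (illustrative data only).
features = enriched_node_features(circle(1.0, 8), circle(1.2, 8))
print(features[0])
```

For the node at angle zero, the displacement difference is purely radial (0.2, 0), the normal points along +x, and the tangent along +y, which is the expected behavior for uniform inflation.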

A comparative summary of the optimized architectures and their final performance is provided in Table 8. The table highlights key hyperparameters selected by Bayesian optimization, along with objective values, final RMSE across splits, and correlation ranges. The results show that enriched-input networks yielded marginal improvements in correlation and optimization stability, confirming the added value of physics-informed feature augmentation.

Bayesian optimization selected hyperparameters that balance feature capacity, receptive field, and regularization. The feature dimensions and kernel sizes of the graph convolution layers control embedding width and neighborhood size, while the depth parameters define overall network complexity. Average pooling (Pool=avg) was chosen for aggregation, and batch normalization was not used. Two fully connected layers provide dense mappings, with dropout applied at the body (0.36) and head (0.52) to reduce overfitting. Training stability was ensured by a small learning rate (8.07 × 10⁻⁴), weight decay (2.47 × 10⁻⁵), and a moderate batch size (53). Collectively, these settings illustrate how BO tuned the GNN to achieve stable convergence and low error without over-parameterization.

A comparative summary of the two training processes is provided in Table 9. The table highlights key aspects such as initial error levels, convergence speed, final RMSE values, and differences in training duration between the internal and external pressure networks. Despite minor variations in convergence smoothness and iteration counts, both models ultimately achieved nearly identical validation errors and exhibited stable learning behavior with minimal gap between training and validation curves. This consistency underscores the robustness of the boundary-only GNN baseline and sets the stage for the enriched-feature analysis presented later in Section 7.3.

Quantitatively, the optimized GNN achieved near-identical performance across splits. For internal pressure, the final RMSE values were 0.0213 (train), 0.0219 (validation), and 0.0213 (test). For external pressure, the respective values were 0.0214, 0.0215, and 0.0210. These small and tightly clustered errors demonstrate both robustness and generalization of the learned mapping.

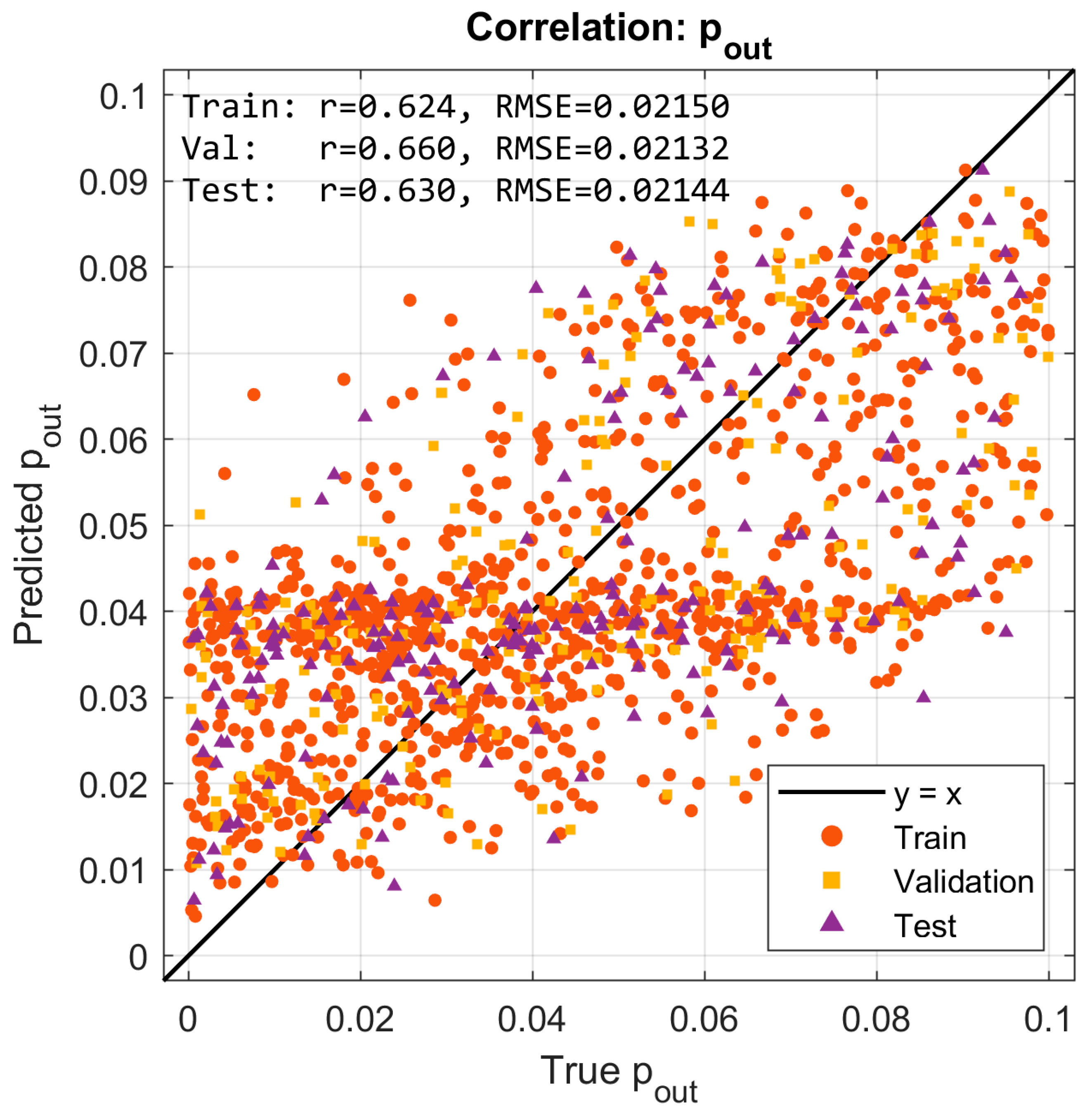

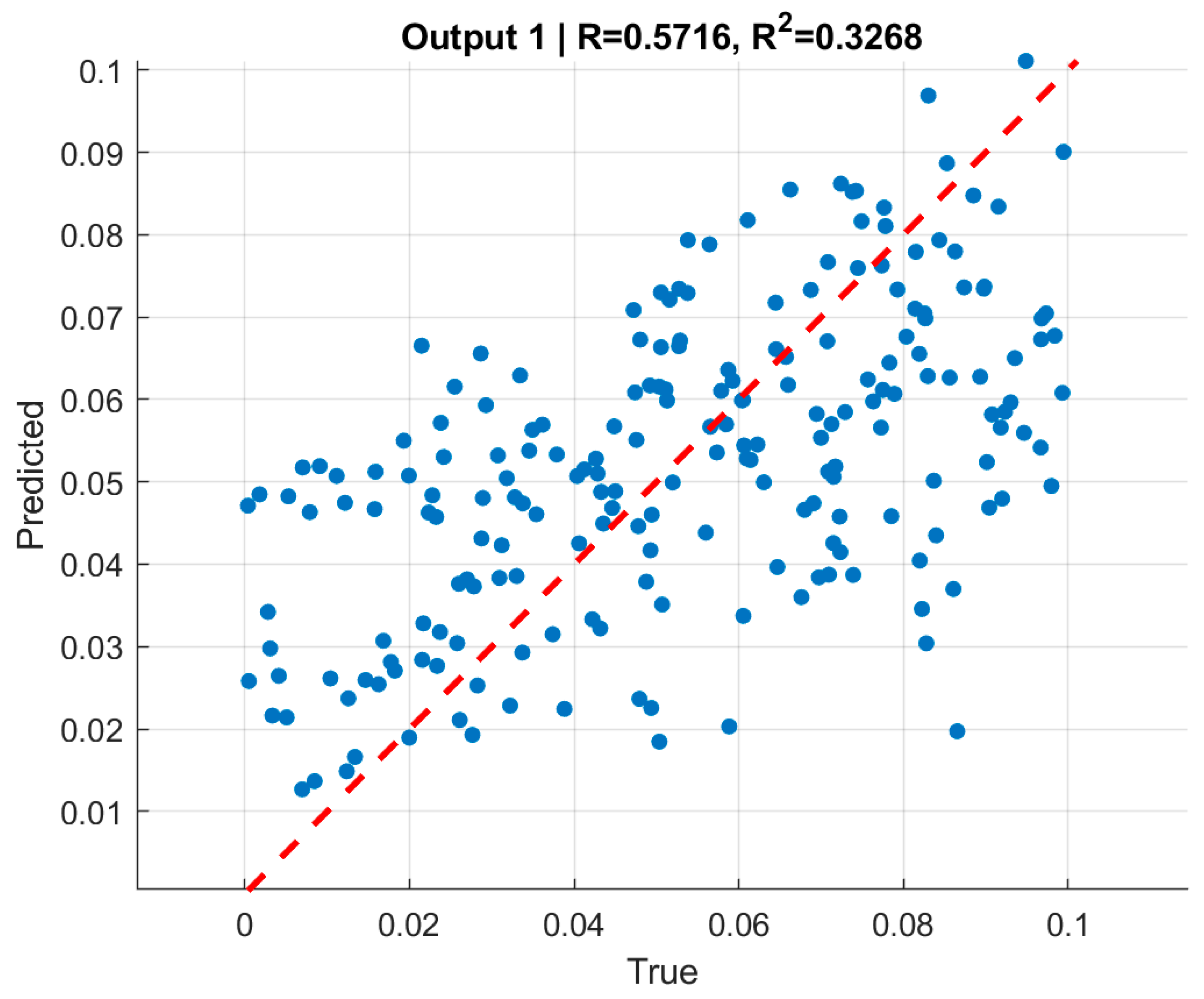

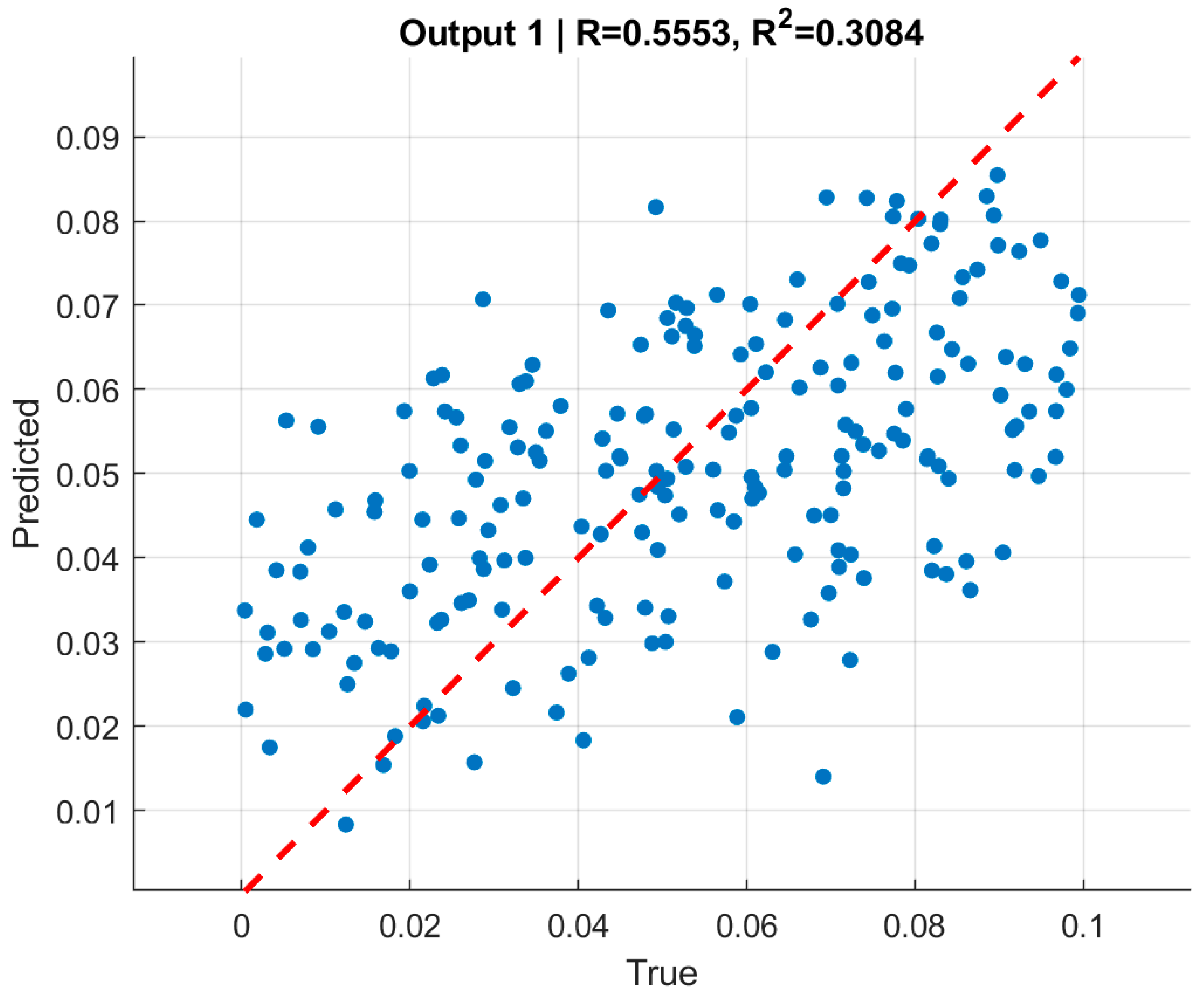

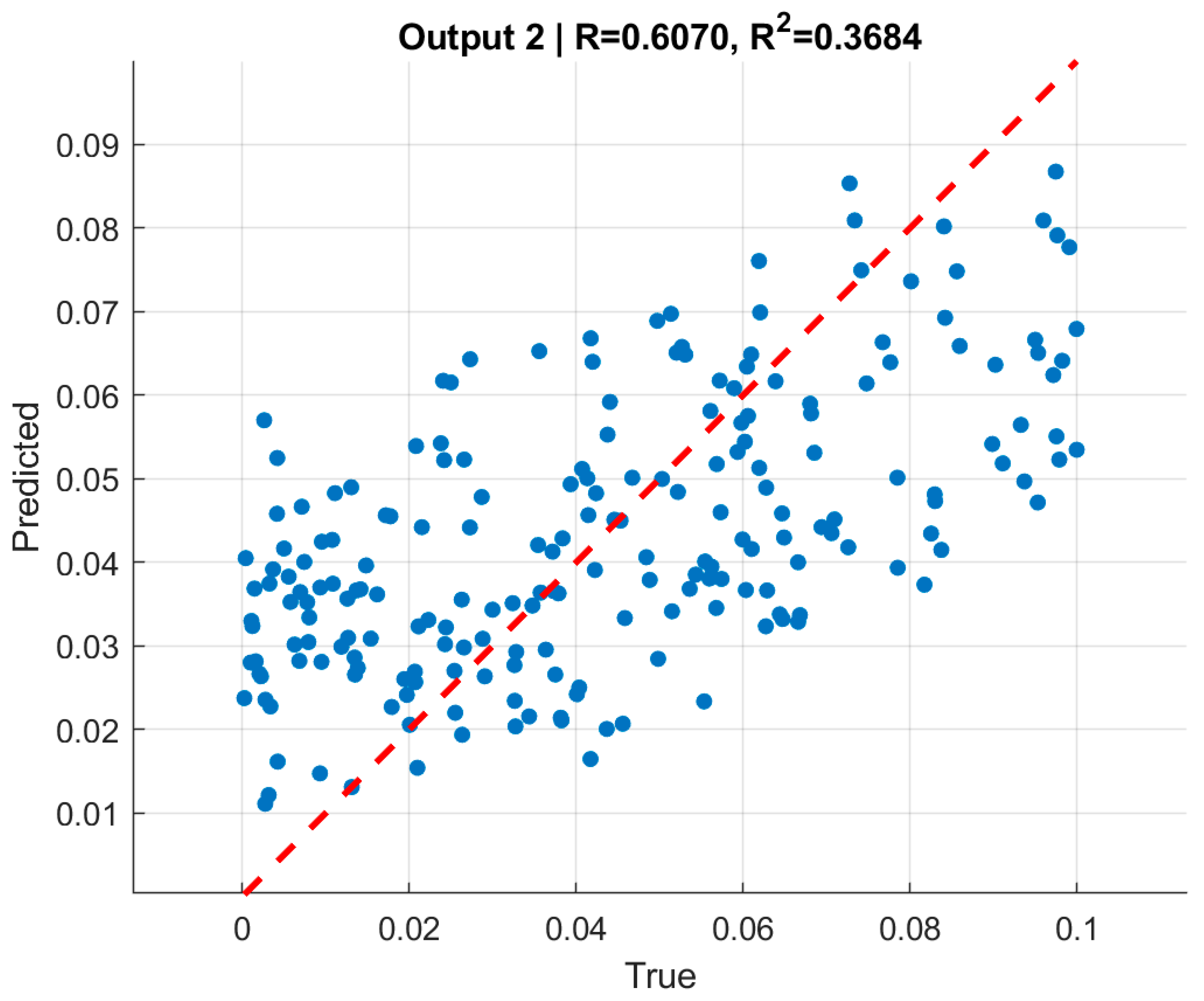

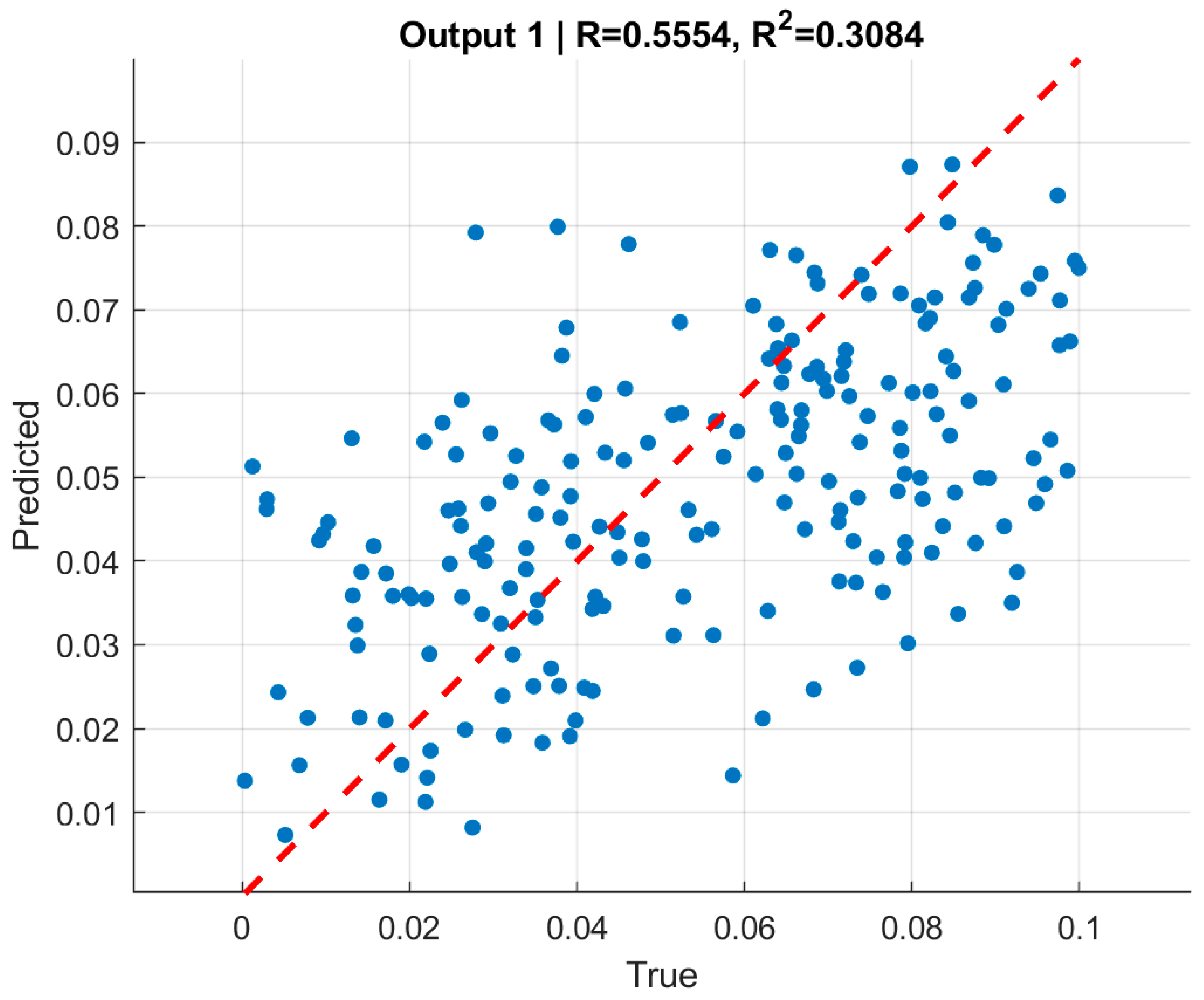

Figure 9 and Figure 10 present scatter plots of predicted versus true normalized pressures for the internal and external pressures, respectively. In both cases, the predictions align with the y = x reference line while showing moderate dispersion, and the Pearson correlation coefficients are moderate for both outputs. These moderate correlation values, despite very low RMSE, are consistent with the limited dynamic range of the targets [0, 0.1], where even small absolute deviations significantly affect variance-based statistics. This highlights the importance of reporting both absolute error metrics (RMSE) and relative/normalized measures when interpreting model quality.

The moderate correlation coefficients (r) should be interpreted in the context of the narrow normalized pressure range (0–0.1) used in the dataset. A narrow range limits the variance of the targets, so even small absolute errors account for a noticeable share of that variance and lower correlation-based metrics. Despite this, the predictions exhibit very low absolute errors and excellent consistency across training, validation, and test sets. Therefore, the slightly reduced r-values do not indicate model weakness but rather reflect the compact target domain and the stability of the learned inverse mapping.
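The link between a low RMSE and only moderate variance-based scores can be illustrated with a short calculation. The sketch below uses the identity R² = 1 − MSE/Var(y), which holds when both quantities are computed on the same split, and assumes purely for illustration that the normalized targets are uniformly distributed on [0, 0.1]; the actual target distribution is not stated here. The RMSE value of 0.0213 is the internal-pressure test RMSE reported above, and the helper name `r2_from_rmse` is hypothetical.

```python
import math

def r2_from_rmse(rmse, target_std):
    """R^2 implied by an RMSE and the (population) standard deviation of the targets."""
    return 1.0 - (rmse / target_std) ** 2

# Assumption: targets uniform on [0, 0.1], so std = range / sqrt(12).
target_std = 0.1 / math.sqrt(12)

# Reported test RMSE for the internal-pressure GNN.
r2_uniform = r2_from_rmse(0.0213, target_std)
print(f"target std = {target_std:.4f}, implied R2 = {r2_uniform:.3f}")
```

Under this assumption the target standard deviation is only about 0.029, so an RMSE of about 0.021 already corresponds to an R² near 0.46. The same absolute error on targets with a tenfold wider range would give an R² above 0.99, which is why absolute and variance-normalized metrics need to be read together.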

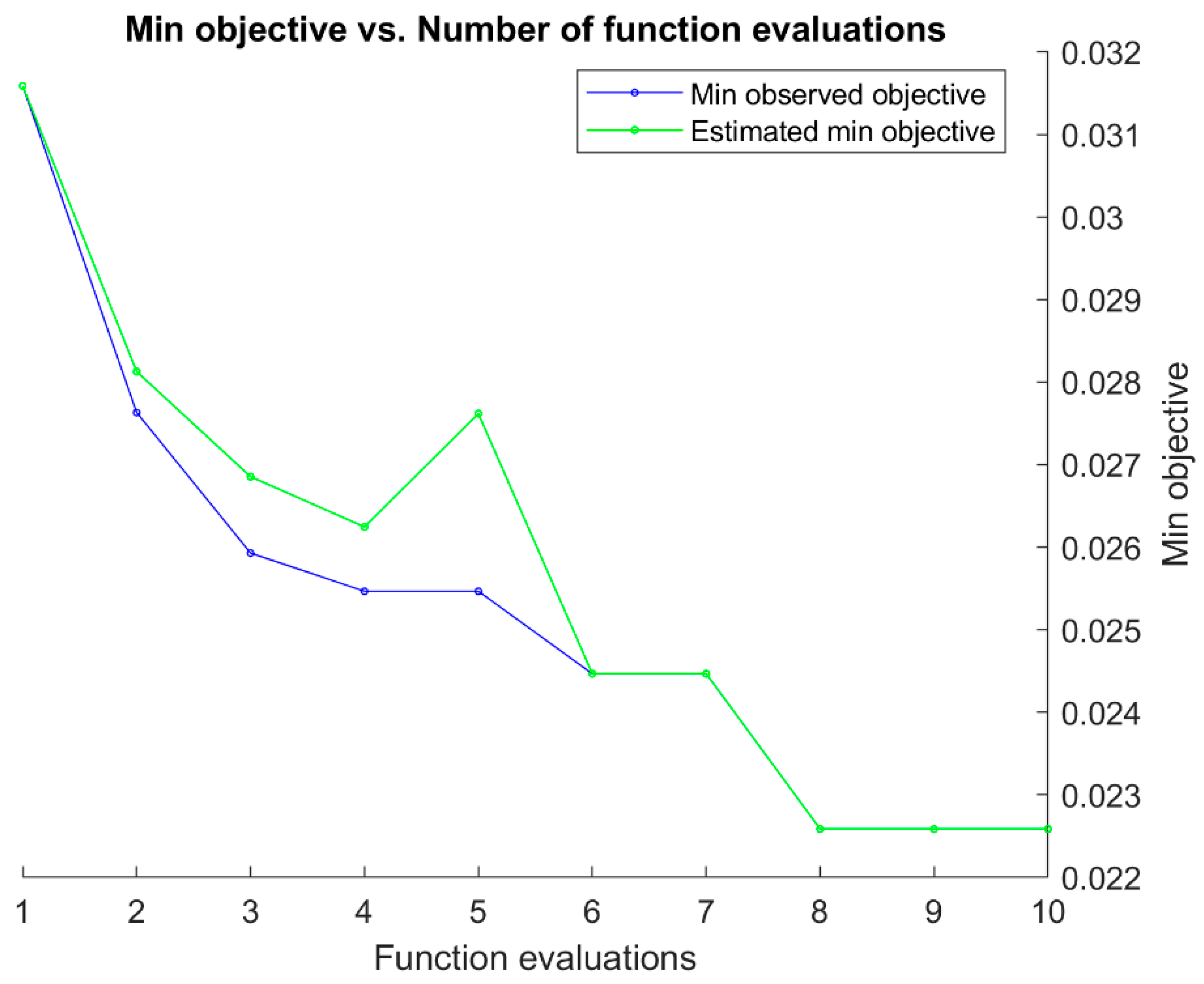

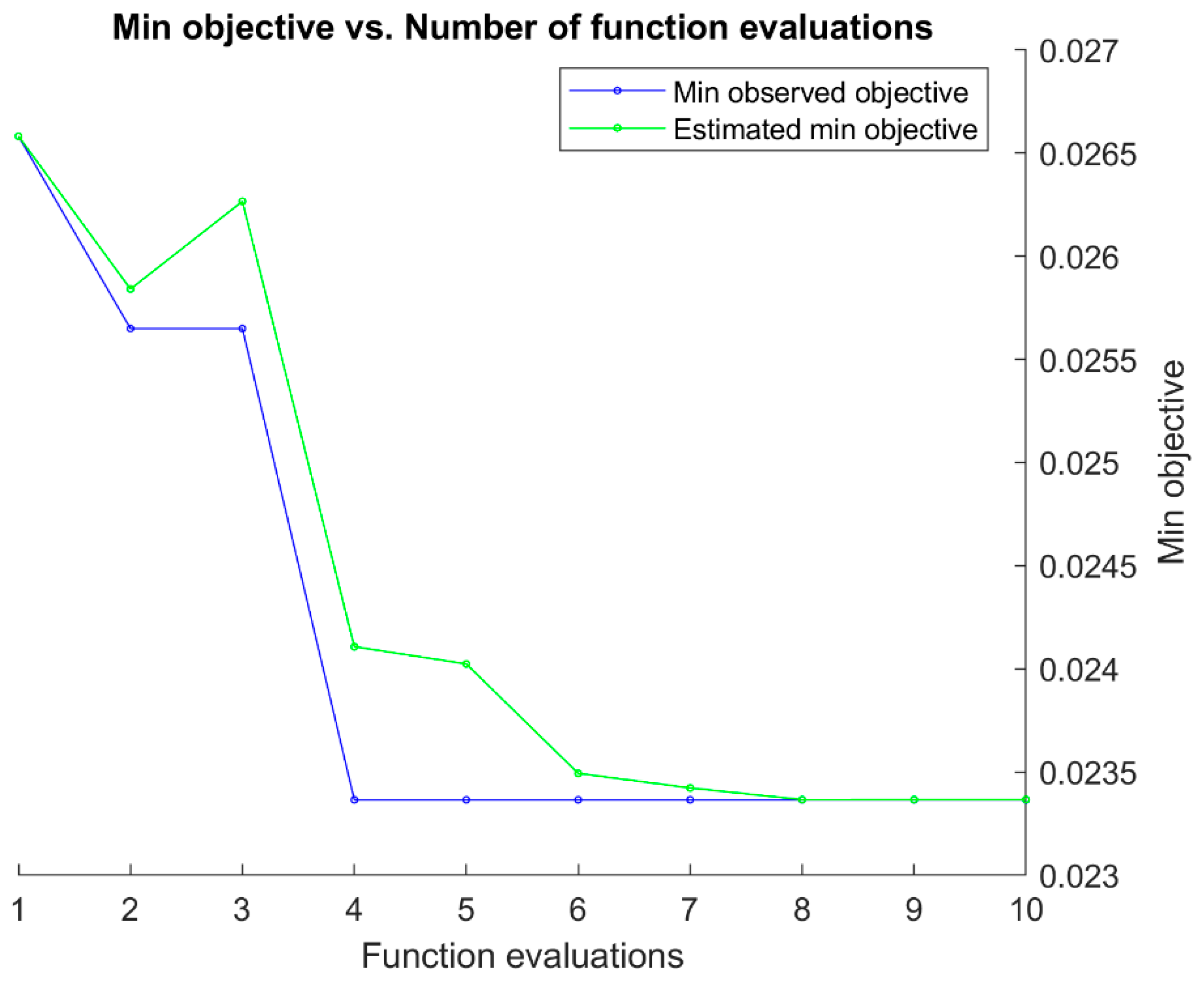

Finally, Figure 11 and Figure 12 summarize the Bayesian optimization (BO) histories for the GNN models targeting the internal and external pressures, respectively. In both cases, the minimum observed objective decreases markedly during the first few evaluations and then gradually levels off, stabilizing after approximately 7–8 iterations.

The close agreement between the observed and estimated objective curves further indicates that the surrogate model employed in BO provided accurate guidance during the search. For the internal-pressure model, the minimum objective converged near 0.023, while for the external-pressure model it reached approximately 0.022. Both values are consistent with the RMSE reported on the independent validation and test splits. These trajectories demonstrate that BO effectively navigated the hyperparameter space for both networks, yielding architectures that balanced accuracy with stability.
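The "minimum observed objective" curve in a BO history is simply the running minimum of the objective values evaluated so far. The following sketch shows how such a curve, and a simple stabilization point, could be computed. The objective values are hypothetical and are not the ones from the study; the tolerance-based stabilization rule in `iterations_to_stabilize` is likewise an illustrative assumption.

```python
def running_minimum(objective_values):
    """Best (lowest) objective seen up to and including each evaluation."""
    best = []
    current = float("inf")
    for value in objective_values:
        current = min(current, value)
        best.append(current)
    return best

def iterations_to_stabilize(best_so_far, tol=1e-3):
    """First 1-based evaluation whose best value is within tol of the final best."""
    final = best_so_far[-1]
    for index, value in enumerate(best_so_far, start=1):
        if value - final <= tol:
            return index
    return len(best_so_far)

# Hypothetical validation-RMSE objectives from ten BO evaluations.
objectives = [0.040, 0.031, 0.035, 0.027, 0.026, 0.029, 0.0235, 0.0232, 0.0240, 0.0231]
best = running_minimum(objectives)
stable_at = iterations_to_stabilize(best)
print(best)
print("stabilized after evaluation", stable_at)
```

With these illustrative numbers the running minimum stops improving meaningfully after the seventh evaluation, which mirrors the qualitative pattern described for the two histories.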

In summary, the boundary-only GNNs for internal and external pressure provide a solid baseline: they achieve low and consistent RMSE across all splits, exhibit stable convergence during training, and yield moderate-to-strong correlation with ground-truth pressures. However, the scatter visible around the y = x line in the correlation plots indicates that relying solely on boundary coordinates leaves some variability unexplained.

By contrast, the enriched-input GNNs—augmented with displacement differences, normals, and tangents—maintain the same low RMSE values while delivering smoother convergence dynamics and slightly higher correlations with the true pressures. These improvements confirm that incorporating mechanics-informed features enhances predictive fidelity and stability, particularly for cases with larger pressure variability. Together, the two configurations establish a clear progression: boundary-only models provide a robust starting point, while enriched models exploit additional geometric and kinematic cues to push performance closer to the limits of the available dataset.

Model accuracy in this study was evaluated using RMSE and Pearson correlation (r), which jointly capture both absolute error magnitude and predictive consistency. These metrics are standard in inverse modeling and were found sufficient to characterize performance across all data splits. Additional measures such as MAE or normalized RMSE were examined during preliminary analysis and showed no new trends; hence, only RMSE and correlation are reported here for clarity and conciseness.

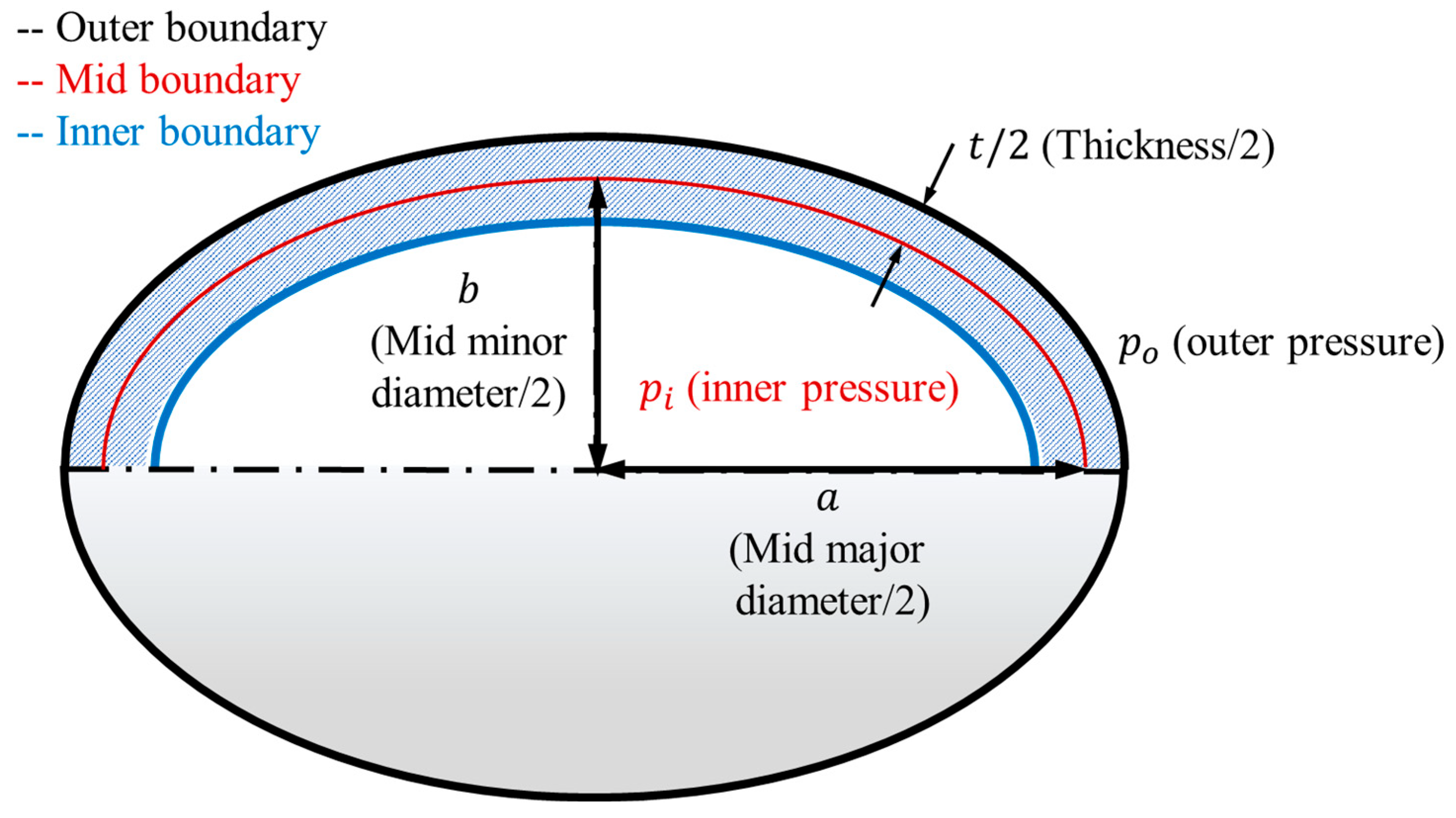

In classical problems with simple geometries—such as spheres or cylinders—the relationship between pressure and displacement can be expressed analytically through closed-form functions. In contrast, the present study deals with nonlinear hyperelastic materials and complex boundary fields, where both the constitutive behavior and geometric mapping are highly nonlinear. Consequently, the relationship between boundary deformations and applied pressures cannot be captured by simple analytical expressions. The adoption of a graph-based neural network thus provides a data-driven means to approximate this intricate mapping, effectively learning the underlying mechanical dependencies that govern the inverse problem.

The stable convergence of the trained GNN models confirms that the network successfully encoded these physical relationships, rather than relying on purely statistical correlations.

7.2. Quantitative Evaluation—Convolutional Neural Networks (CNN)

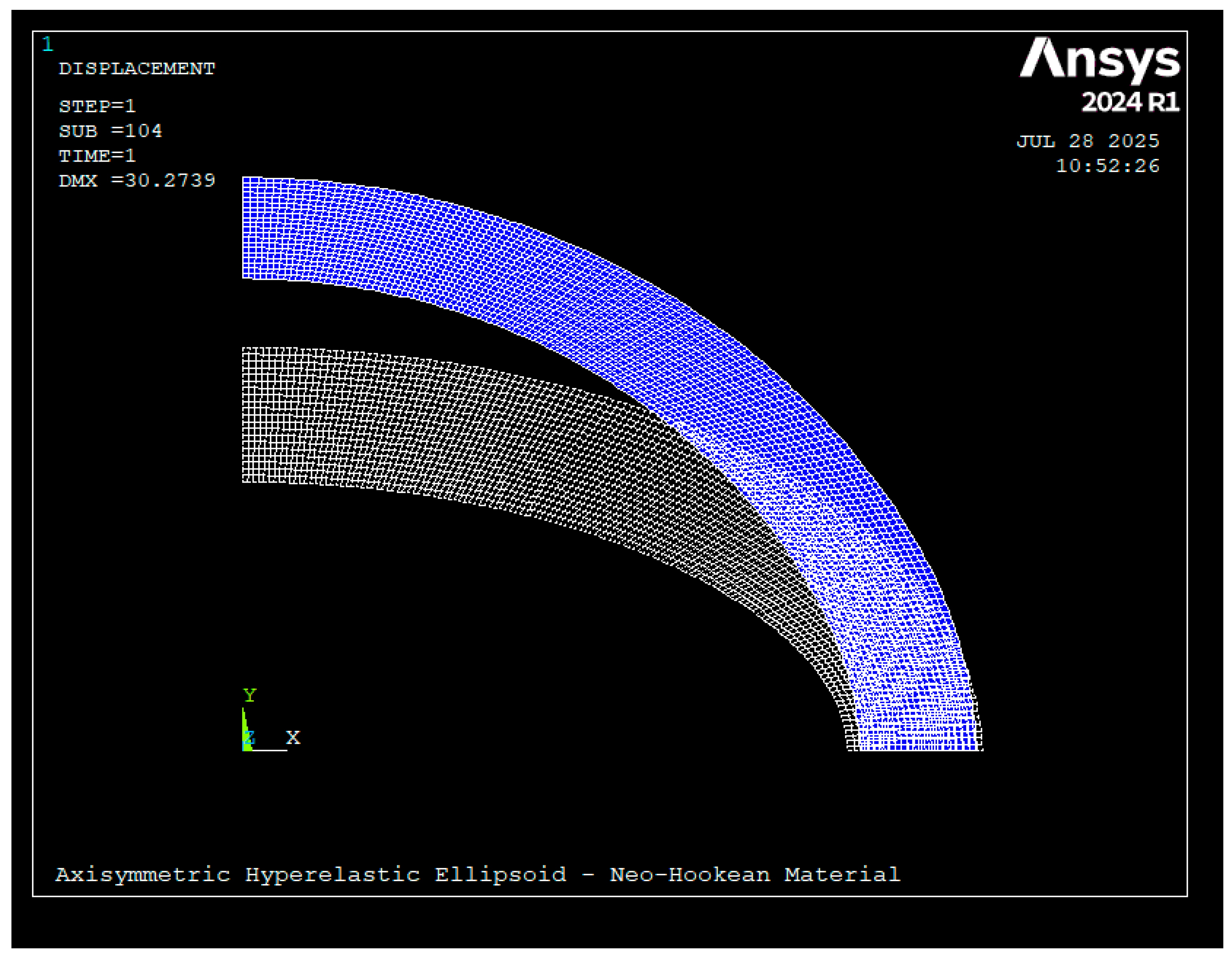

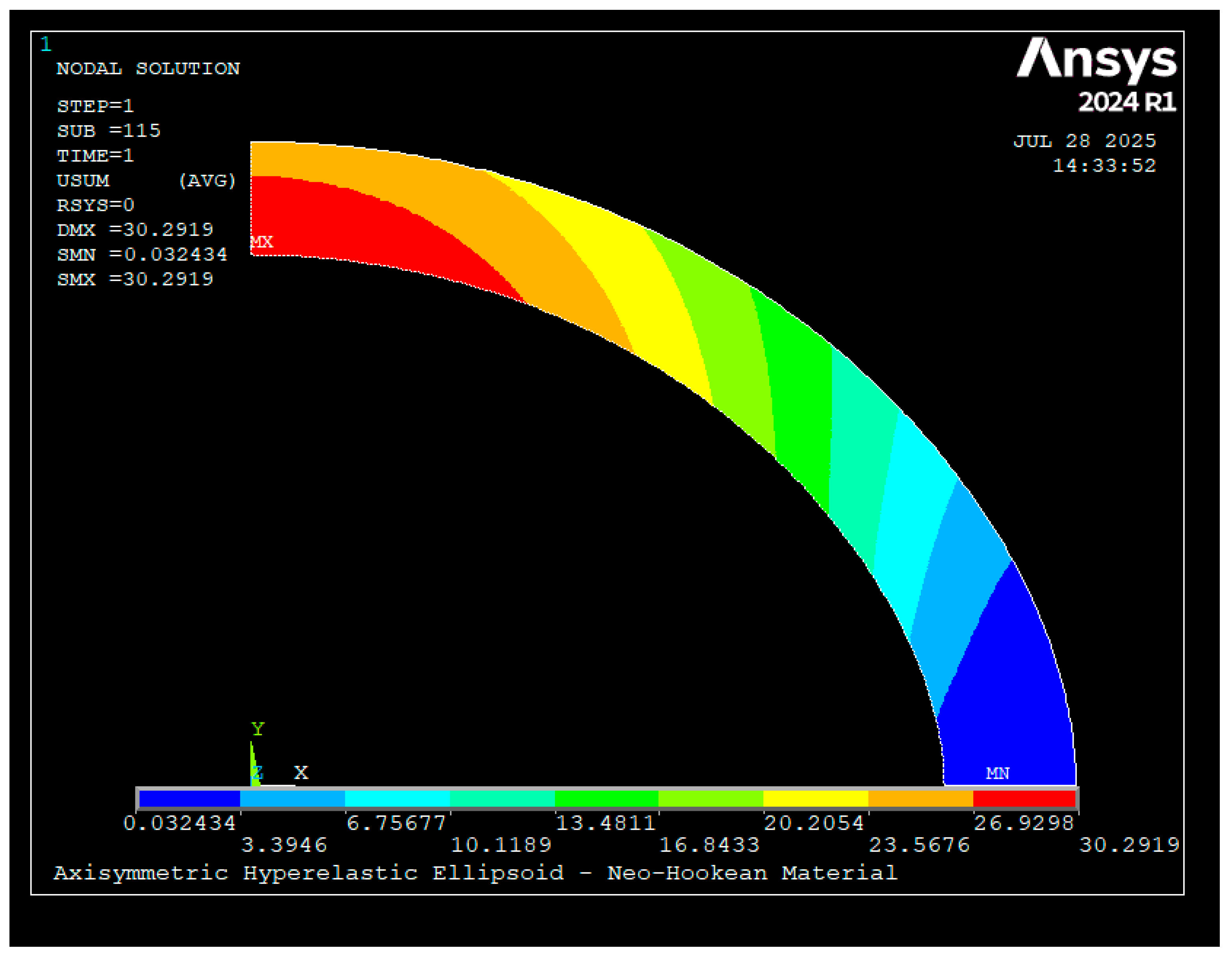

To complement the graph-based models, convolutional neural networks (CNNs) were trained to infer normalized internal and external pressures from FEM-generated deformation data. Unlike GNNs, which operate on resampled boundary graphs, CNNs require structured image representations of the vessel cross-section. Therefore, three input configurations were investigated: (i) quarter-section images capturing local symmetry, (ii) full-ring hollow cross-sections representing the global geometry, and (iii) fully filled cross-sections embedding both inner and outer boundaries within the image domain.
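As an illustration of how boundary contours could be turned into the three image configurations, the sketch below rasterizes an inner and an outer polygon onto a small grid. It is a hedged example, not the preprocessing used in this study: the grid size, the domain half-width, the choice of the upper-right quadrant for the quarter section, and in particular the intensity encoding of the "filled" mode (wall = 1.0, cavity = 0.5) are all assumptions. The function names are hypothetical.

```python
import math

def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the closed polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def rasterize(inner, outer, size=32, half_width=1.5, mode="ring"):
    """Rasterize a vessel cross-section on a size x size grid.

    mode="ring":   wall pixels are 1.0, everything else 0.0 (hollow ring).
    mode="filled": wall 1.0, cavity 0.5, background 0.0 (assumed encoding).
    """
    step = 2.0 * half_width / size
    image = []
    for row in range(size):
        y = half_width - (row + 0.5) * step      # first row is the top of the image
        line = []
        for col in range(size):
            x = -half_width + (col + 0.5) * step
            in_outer = point_in_polygon(x, y, outer)
            in_inner = point_in_polygon(x, y, inner)
            if in_outer and not in_inner:
                value = 1.0
            elif in_inner and mode == "filled":
                value = 0.5
            else:
                value = 0.0
            line.append(value)
        image.append(line)
    return image

def quarter_section(image):
    """Crop the upper-right quadrant (x > 0, y > 0) of a full image."""
    half = len(image) // 2
    return [row[half:] for row in image[:half]]

def circle(radius, n=64):
    """Hypothetical circular contour used as stand-in boundary data."""
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]

inner, outer = circle(0.5), circle(1.0)
ring = rasterize(inner, outer, mode="ring")
filled = rasterize(inner, outer, mode="filled")
quarter_img = quarter_section(ring)
```

The quarter-section crop retains only a quarter of the pixels and relies on symmetry, which gives one intuition for why such inputs may carry less global deformation context than full cross-sections.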

All CNN variants shared a unified architecture consisting of five convolutional blocks followed by two fully connected layers with dropout regularization, and their hyperparameters were tuned using Bayesian optimization. This ensured consistent comparison across different input representations. The following subsections summarize the performance of each CNN configuration and discuss their comparative accuracy and generalization behavior.

The initial experiments employed a conventional CNN architecture consisting of sequential convolutional and pooling layers followed by multiple fully connected layers. However, unlike the GNNs, the CNNs exhibited less satisfactory performance, motivating further exploration of optimized architectures. In particular, the quarter-section configuration showed clear limitations: the predictions tended to collapse toward the mean of the target values for both outputs, indicating that the network failed to capture meaningful correlations between image features and applied pressures.
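A collapse toward the mean can be detected with a simple diagnostic: a model that always predicts the mean of the targets has an RMSE equal to the standard deviation of the targets, and its predictions have essentially zero spread. The sketch below compares a model against that constant baseline. The data are hypothetical, and the function name `collapse_diagnostics` and the returned keys are illustrative choices rather than part of the study.

```python
import math

def std(values):
    """Population standard deviation."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))

def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def collapse_diagnostics(y_true, y_pred):
    """Compare a model against the constant mean predictor.

    spread_ratio near 0 means the predictions barely vary; an RMSE close to
    mean_baseline_rmse means the model is no better than predicting the mean.
    """
    baseline = std(y_true)  # RMSE of always predicting mean(y_true)
    return {
        "rmse": rmse(y_true, y_pred),
        "mean_baseline_rmse": baseline,
        "spread_ratio": std(y_pred) / baseline,
    }

# Hypothetical targets and a fully collapsed model, for illustration only.
y_true = [0.01, 0.03, 0.05, 0.07, 0.09]
collapsed = [0.05] * len(y_true)
diag = collapse_diagnostics(y_true, collapsed)
print(diag)
```

In this extreme case the model RMSE coincides with the baseline RMSE and the spread ratio is zero, which is the signature of predictions that carry no information about the applied pressures.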

Given these challenges, the evaluation was extended to full-ring and filled-section inputs, which provide more global geometric information and deformation context. The following subsections present the results for these input types, together with an assessment of underfitting and overfitting behaviors observed during training, and a discussion of how architectural refinements affect predictive accuracy.

Although Bayesian optimization was employed to systematically refine the CNN architectures across all input variants and establish a balanced baseline for comparison with GNNs, additional aspects—such as geometric or intensity-based data augmentation and controlled noise-perturbation studies—could further improve the interpretability and robustness of the CNN results. These complementary analyses fall outside the scope of the present work but are identified as valuable directions for future research, where a more comprehensive assessment of CNN sensitivity to sampling variability and feature perturbations will be undertaken.

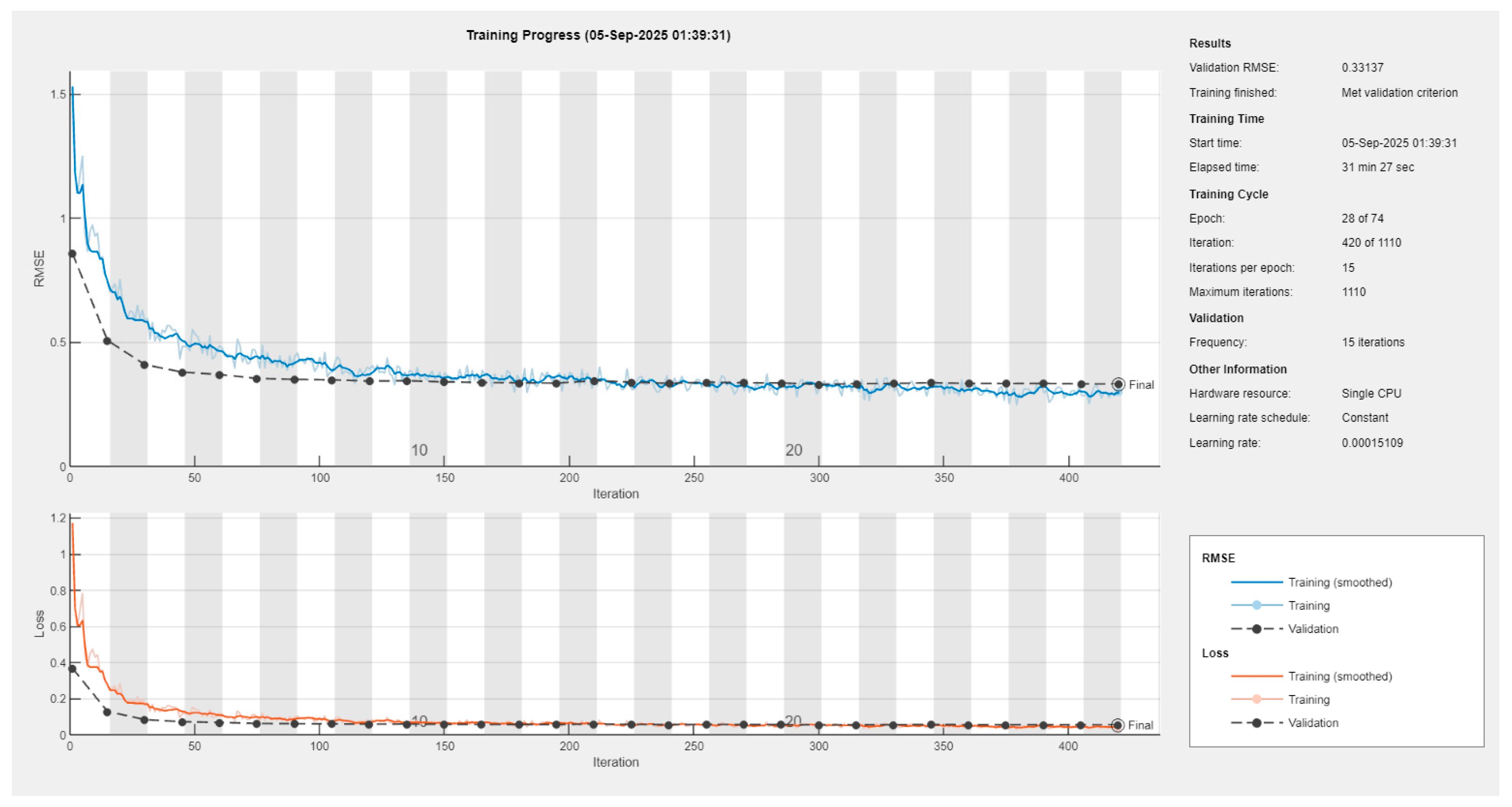

7.2.1. CNN with Full-Ring Cross-Section Inputs

For the full-ring image inputs, the CNN architecture was optimized through Bayesian hyperparameter search. The best-performing model adopted five convolutional blocks with a filter schedule starting at 19 channels and growing modestly per block. The convolutional stack was followed by two fully connected layers (333 and 259 neurons), with dropout regularization applied at rates of 0.20 and 0.14, respectively. The optimization also selected a relatively small learning rate (1.5 × 10⁻⁴), weak L2 regularization (6.3 × 10⁻⁶), and a batch size of 64, converging within 74 epochs.

Training curves (see Appendix A) demonstrate rapid decay in both training and validation loss during the initial epochs, followed by stabilization around epoch 25–30. The validation RMSE plateaued near 0.0228 (mean of two outputs), which is notably lower than the unoptimized CNN but still above the performance levels achieved by GNNs. Importantly, overfitting was mitigated by the selected configuration: the validation error remained close to the training error, in contrast to earlier trials where the network memorized training images and failed to generalize.

Across datasets, the model showed consistent but modest predictive power as given in

Table 10. On the validation set, RMSE values were 0.0225 and 0.0242 for the two outputs, corresponding to R

2 values of 0.316 and 0.283. On the test set, RMSE values were 0.0230 and 0.0249, with corresponding R

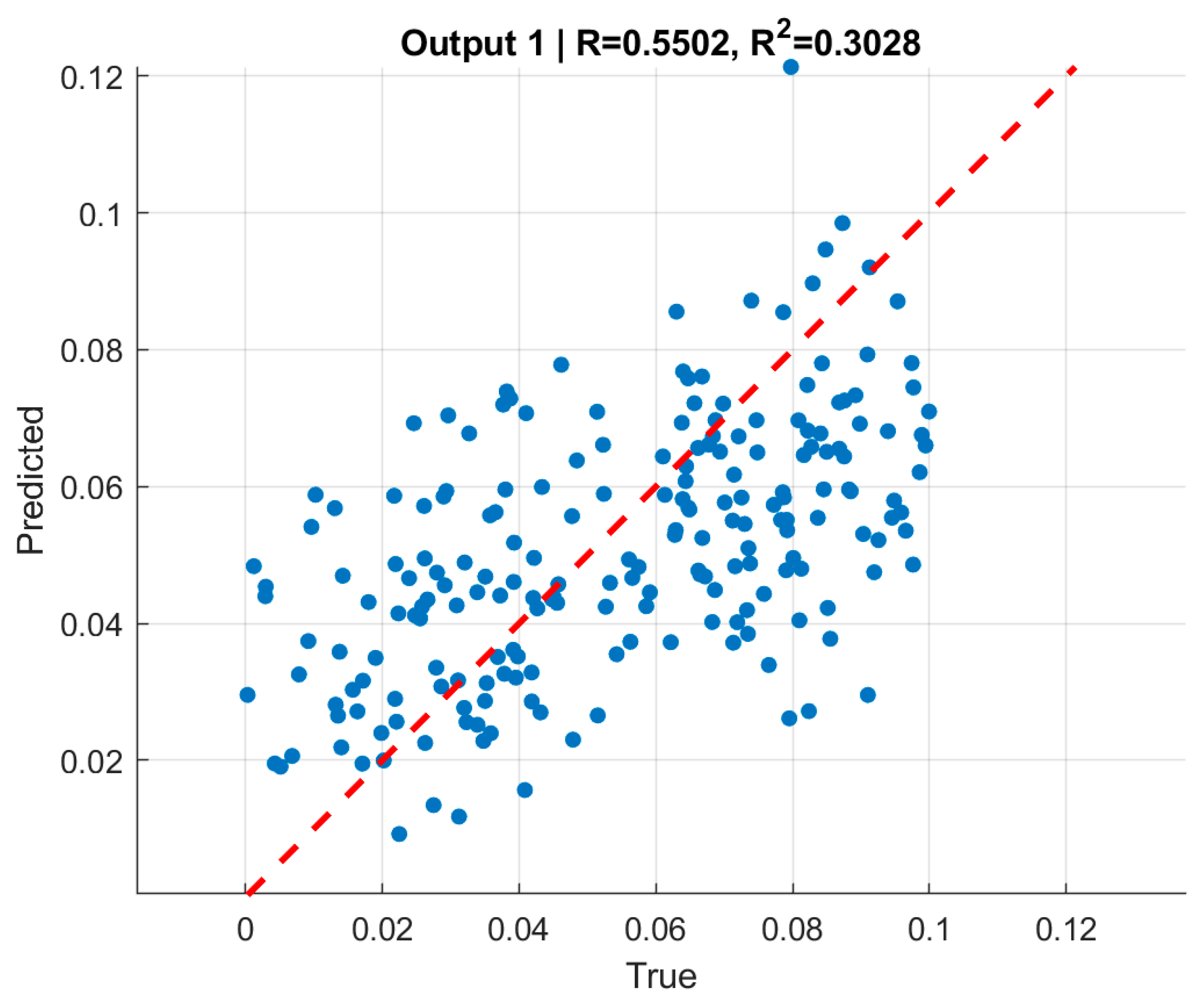

2 values dropping to 0.267 and 0.130, respectively. Scatter plots of true versus predicted values (

Figure 13,

Figure 14,

Figure 15 and

Figure 16) illustrate these findings: while the predictions cluster around the regression line, the dispersion is considerable, reflecting the limited explanatory capacity of CNNs compared to the graph-based approach.
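The RMSE and R² pairs reported for the full-ring CNN can be cross-checked against each other. Because R² = 1 − MSE/Var(y) on a given split, each pair implies a standard deviation of the targets on that split. The sketch below back-computes this value for the four reported pairs; it assumes that R² was computed with the conventional definition relative to the mean of the true values, which is not stated explicitly here. The helper name `implied_target_std` is hypothetical.

```python
import math

def implied_target_std(rmse, r2):
    """Target standard deviation implied by R^2 = 1 - (RMSE / std)^2."""
    return rmse / math.sqrt(1.0 - r2)

# (RMSE, R^2) pairs reported for the full-ring CNN:
# validation outputs 1 and 2, then test outputs 1 and 2.
reported = [(0.0225, 0.316), (0.0242, 0.283), (0.0230, 0.267), (0.0249, 0.130)]
implied = [implied_target_std(rmse, r2) for rmse, r2 in reported]

# Reference value under the assumption of uniform targets on [0, 0.1].
uniform_std = 0.1 / math.sqrt(12)

for (rmse, r2), s in zip(reported, implied):
    print(f"RMSE={rmse:.4f}  R2={r2:.3f}  implied target std={s:.4f}")
print(f"uniform[0, 0.1] std = {uniform_std:.4f}")
```

All four implied standard deviations fall between roughly 0.027 and 0.029, close to the value expected for targets spread over the normalized range 0–0.1, so the reported RMSE and R² values appear mutually consistent under this reading.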

7.2.2. CNN Architecture (Full-Ring Hollow Cross-Section Input)

The optimized CNN architecture for hollow full-ring images was composed of five convolutional blocks, starting with 19 filters in the first layer and gradually increasing through subsequent layers. This design enabled the network to capture both local boundary details and global deformation patterns around the ring. The convolutional backbone was followed by two fully connected layers of sizes 333 and 259 neurons, which provided sufficient representational capacity for mapping high-level image features to pressure predictions. To prevent overfitting, dropout layers with moderate rates (0.20 in the body and 0.14 at the head) were included, complemented by a small L2 regularization coefficient (6.3 × 10⁻⁶). Training used the Adam optimizer with a batch size of 64 and an initial learning rate of 1.5 × 10⁻⁴, converging within ~74 epochs. This configuration produced stable learning dynamics and improved generalization compared to the unoptimized baseline CNN.
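The reported settings can be collected in a single configuration object, as in the sketch below. Only values stated in the text are filled in; the per-block filter growth is not reported and is therefore left unset. The class name `FullRingCNNConfig` and its field names are hypothetical and do not correspond to any code from the study.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass(frozen=True)
class FullRingCNNConfig:
    """Hyperparameters reported for the optimized full-ring CNN (illustrative container)."""
    conv_blocks: int = 5
    first_block_filters: int = 19
    filter_growth: Optional[float] = None      # growth per block is not reported
    fc_sizes: Tuple[int, int] = (333, 259)
    dropout_body: float = 0.20
    dropout_head: float = 0.14
    l2_regularization: float = 6.3e-6
    optimizer: str = "adam"
    batch_size: int = 64
    initial_learning_rate: float = 1.5e-4
    epochs_to_convergence: int = 74            # approximate, as reported

config = FullRingCNNConfig()
print(config)
```

Keeping the configuration immutable (`frozen=True`) makes it harder to accidentally change a tuned value between training runs.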

In summary, the CNN trained on full-ring images benefited from architectural optimization, achieving substantially lower RMSE compared to the baseline and avoiding severe overfitting. However, the relatively low R² values, particularly on the test set, underscore persistent challenges in capturing the nonlinear mapping from cross-sectional images to applied pressures. These results suggest that although CNNs can exploit global cross-sectional patterns, their predictive performance remains inferior to GNNs operating directly on boundary-derived features.

7.2.3. CNN with Fully Filled Cross-Section Inputs

The third CNN configuration employed fully filled cross-sectional images of the vessel, in which both inner and outer boundaries were embedded within a solid domain. This representation was intended to provide the network with a richer spatial encoding of geometry and deformation compared to boundary-only images.

Bayesian optimization was again used to tune the architecture and hyperparameters. The final model adopted five convolutional layers with moderately growing filter counts, followed by two fully connected layers with 300+ neurons each. Dropout layers (≈0.2) and a small L2 regularization term (7 × 10⁻⁶) were included to mitigate overfitting, while the learning rate (1.5 × 10⁻⁴) and batch size (64) were selected to ensure stable convergence. Training proceeded for ~86 epochs until the validation criterion was reached (see Appendix A).

The learning dynamics demonstrated consistent reduction in both training and validation RMSE during the first 20–30 epochs, after which both curves plateaued. The final validation RMSE stabilized around 0.032, which is noticeably higher than the full-ring CNN but still an improvement compared to the quarter-section case. Importantly, validation and training curves remained close throughout training, indicating that overfitting was controlled effectively.

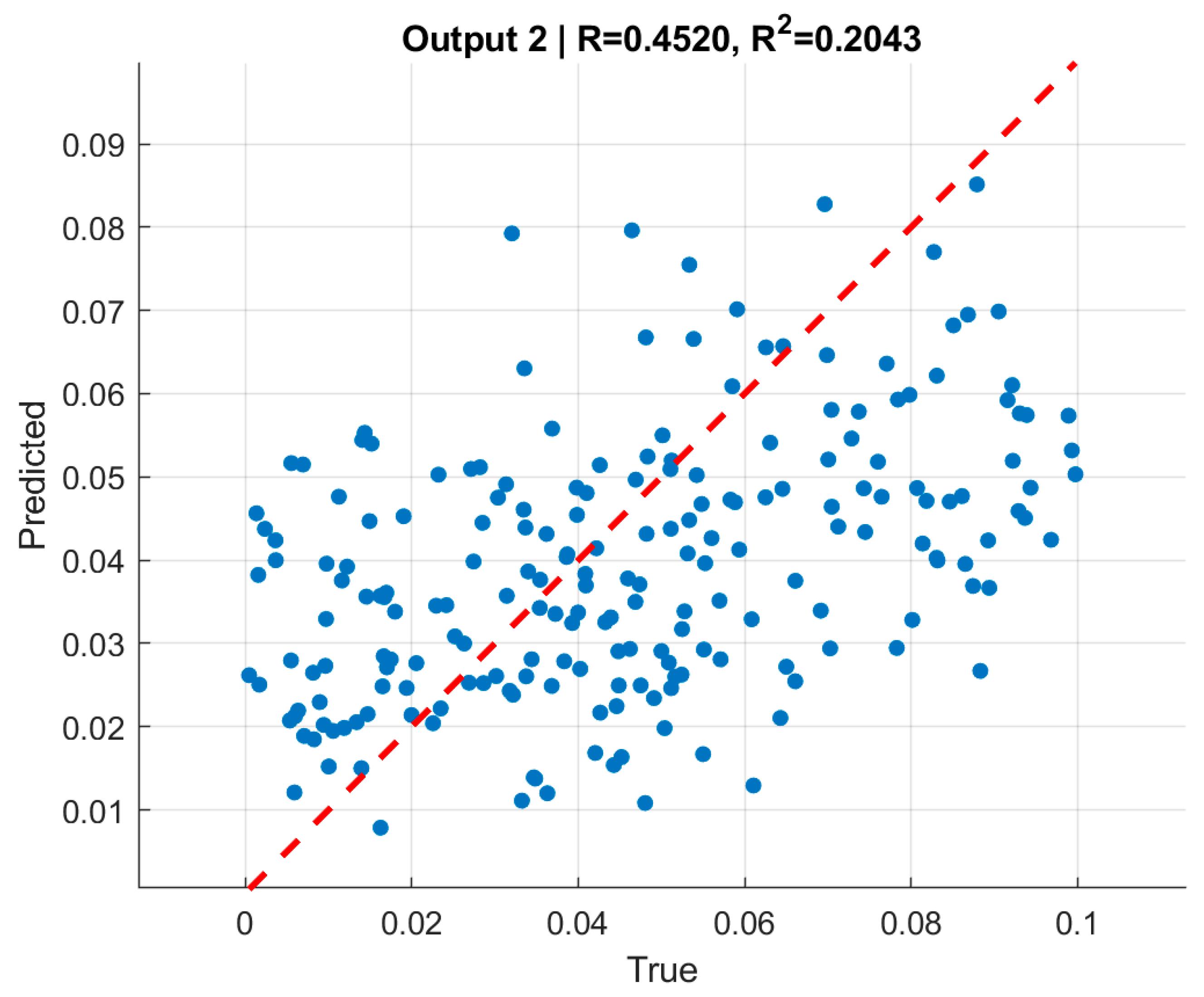

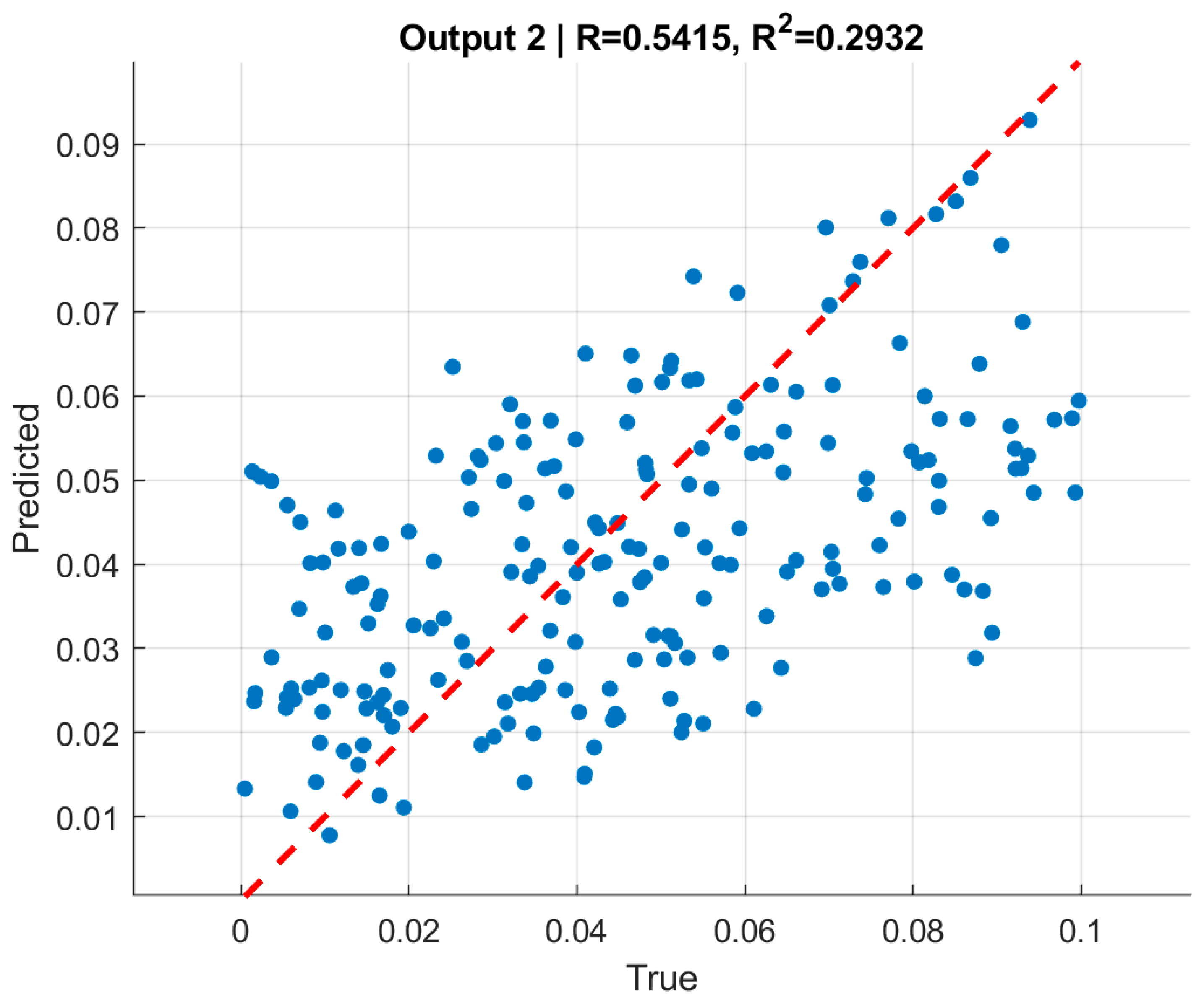

Performance metrics reflecting this behavior are shown in Table 8. On the validation set, the RMSE values were 0.028–0.032 for the two outputs, with corresponding R² values of 0.308 and 0.368. On the test set, the network achieved comparable RMSE levels (0.029–0.031), while R² values remained modest at 0.308 and 0.293, respectively. Scatter plots of predicted versus true pressures (Figure 17, Figure 18, Figure 19 and Figure 20) confirm that the CNN was able to capture global deformation trends, but the predictions were dispersed around the regression line, reflecting limited explanatory accuracy compared to GNNs.

7.2.4. CNN Architecture (Filled Cross-Section Input)

The optimized CNN architecture for filled cross-section images employed five convolutional layers with progressively increasing filter numbers, enabling hierarchical extraction of deformation features from the vessel geometry. This convolutional backbone was followed by two fully connected layers of approximately 300 neurons each, designed to integrate high-level spatial features into pressure predictions. To mitigate overfitting, dropout layers with rates around 0.2 were included, along with a small L2 weight penalty (7 × 10⁻⁶). Training was performed with a batch size of 64 and an initial learning rate of 1.5 × 10⁻⁴, using the Adam optimizer. Convergence was achieved after ~86 epochs, yielding balanced performance across training and validation sets without severe divergence.

In summary, the fully filled input representation enabled the CNN to achieve more stable and generalizable performance than the quarter-section case, with test metrics comparable to validation results. Nevertheless, despite architectural optimization, the CNN struggled to capture fine-scale correlations between deformation fields and applied pressures, as reflected in the relatively low R² values shown in Table 11. This reinforces the conclusion that boundary-informed GNNs provide a more effective framework for inverse pressure identification in hyperelastic vessels.

7.3. Qualitative Metrics

In addition to the quantitative indicators of performance such as RMSE and R², qualitative analyses were conducted to visually assess the predictive behavior of the trained models. Scatter plots comparing true versus predicted pressures for both GNN and CNN frameworks revealed clear differences in fidelity and generalization. For the GNNs, the predicted points clustered closely around the y = x line, with only moderate scatter, demonstrating their ability to capture nonlinear deformation–pressure relationships in a stable manner. In contrast, the CNN models, particularly those trained on quarter-section images, exhibited broader dispersion and a tendency to regress toward average values, highlighting their limited capacity to resolve detailed deformation cues.

Visualizations of selected vessel cross-sections further reinforced these trends. For cases with pronounced deformations, the GNNs provided consistent pressure predictions aligned with FEM ground-truth values, while the CNNs frequently underestimated or overestimated loads, especially in near-spherical configurations. These qualitative observations complement the quantitative metrics, underscoring that GNNs not only achieve lower numerical errors but also deliver predictions that are physically more coherent with observable deformation patterns.

Classical inverse techniques such as regularized least squares and adjoint-based sensitivity analyses have long been employed for load identification problems. However, their direct application to nonlinear hyperelastic systems with dual pressure boundaries would require problem-specific regularization and iterative forward–inverse coupling, leading to high computational cost and potential instability.

The present work instead focuses on establishing a machine-learning-based baseline capable of learning the inverse mapping directly from high-fidelity FEM data. This provides a foundation upon which future studies can incorporate or benchmark against classical optimization-based inversions. Such extensions, including hybrid GNN–adjoint or physics-informed variants, are currently being explored as part of ongoing work.

The observed correspondence between boundary displacements and identified pressures is consistent with classical elasticity theory, confirming that the learned GNN mappings capture physically meaningful relationships. Larger radial displacements and outward normal deformations were consistently associated with higher internal pressure, while variations in tangential components reflected the redistribution of hoop stress along the vessel wall. This behavior is physically consistent with hyperelastic theory, in which the nonlinear strain–energy relation governs the equilibrium between internal and external pressures. The stable convergence of the GNN models therefore indicates that the network effectively captured this underlying mechanical relationship, rather than learning purely statistical correlations.