1. Introduction

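This paper focuses on solving the constrained system of nonlinear equations

$$F(x) = 0, \quad x \in \Omega, \tag{1}$$

where $F:\mathbb{R}^n \to \mathbb{R}^n$ denotes a monotone and continuously differentiable mapping, and $\Omega \subseteq \mathbb{R}^n$ represents a nonempty closed convex set.

Formally, the monotonicity of the mapping $F$ is characterized by the inequality

$$\left(F(x) - F(y)\right)^{\top}(x - y) \ge 0, \quad \forall\, x, y \in \mathbb{R}^n. \tag{2}$$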
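The monotonicity inequality $(F(x) - F(y))^{\top}(x - y) \ge 0$ can be probed numerically. The plain-Python sketch below samples random pairs $(x, y)$ and checks the sign of the inner product: a negative value disproves monotonicity, while passing every trial is only evidence, not a proof. The mapping `example_mapping`, $F(x) = 2x - \sin x$ applied componentwise, is our own hypothetical choice; it is monotone because its derivative $2 - \cos x$ is at least 1.

```python
import math
import random

def looks_monotone(F, n, trials=1000, scale=5.0, seed=0):
    """Randomized test of (F(x) - F(y))^T (x - y) >= 0 on sampled pairs.

    Returns False as soon as a violating pair is found (monotonicity is
    disproved); True only means no violation was found in `trials` samples.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-scale, scale) for _ in range(n)]
        y = [rng.uniform(-scale, scale) for _ in range(n)]
        Fx, Fy = F(x), F(y)
        inner = sum((a - b) * (u - v) for a, b, u, v in zip(Fx, Fy, x, y))
        if inner < 0.0:
            return False
    return True

def example_mapping(x):
    """Hypothetical test mapping F(x) = 2x - sin(x), applied componentwise."""
    return [2.0 * v - math.sin(v) for v in x]
```

For instance, `looks_monotone(example_mapping, n=4)` passes, whereas the mapping $F(x) = -x$ fails immediately, since its inner product equals $-\|x - y\|^2$.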

Systems of nonlinear monotone equations arise from numerous practical problems and are widely applied across disciplines. Typical applications include automatic control systems [1], optimal power flow calculations [2] and compressive sensing [3,4,5]. Given this broad range of applications, many researchers have developed iterative numerical techniques for solving Problem (1), such as the Newton algorithm [6], the Gauss–Newton algorithm [7], the BFGS algorithm [8], the quasi-Newton algorithm [9] and gradient-based algorithms [10,11,12,13].

In recent years, several optimization methods based on the projection and proximal point approach originally proposed by Solodov and Svaiter [14] have been extended to tackle Problem (1). Among them are conjugate gradient methods, which were originally developed for large-scale unconstrained optimization problems and are valued for their low memory requirements and simple structure. For instance, Li and Zheng [15] developed two derivative-free approaches for solving nonlinear monotone equations. Dai and Zhu [16] combined a projection technique with a modified HS conjugate gradient approach to obtain a derivative-free projection approach for high-dimensional nonlinear monotone equations. Kimiaei et al. [17] established a subspace inertial algorithm tailored to derivative-free solution of nonlinear monotone equations. Liu et al. [18] developed an accelerated Dai–Yuan conjugate gradient projection approach that incorporates an optimal parameter selection strategy to enhance performance. Kumam et al. [19] proposed a hybrid approach for solving monotone operator equations and applied it to signal processing problems. Xia et al. [20] proposed a modified two-term conjugate gradient-based projection algorithm for constrained nonlinear equations. Fang [21] presented a derivative-free RMIL conjugate gradient approach for constrained nonlinear systems of monotone equations. Zhang et al. [22] developed a three-term projection approach for nonlinear monotone equations and subsequently applied it to practical signal processing problems, which confirmed its applicability.

Another widely used class of iterative methods for solving nonlinear equations is the quasi-Newton approach. For example, Abubakar et al. [23] proposed a scaled memoryless quasi-Newton approach based on the SR1 update formula for solving systems of nonlinear monotone operator equations. Awwal et al. [24] proposed a derivative-free spectral projection approach for Problem (1) based on a modified SR1 updating formula. Rao and Huang [25] constructed a sufficient descent direction from a scaled memoryless DFP updating formula and proposed a derivative-free approach for solving systems of nonlinear monotone equations. Tang and Zhou [26] proposed a two-step Broyden-like approach for solving nonlinear equations which, when the iterates are close to the solution set, computes an additional approximate quasi-Newton step using the previous Broyden-like matrix. Ullah et al. [27] introduced a two-parameter scaled memoryless BFGS approach for solving systems of monotone nonlinear equations, in which the optimal scaling parameters are determined by minimizing a measure function that involves all eigenvalues of the memoryless BFGS matrix.

Inspired by the studies mentioned above, this paper proposes a new quasi-Newton method for solving nonlinear equations, built on a revised SR1 updating formula. The proposed approach has the following advantages: (1) The search direction is constructed from the revised SR1 update formula and uses information from the latest three iterates and their corresponding function values. This three-point information is intended to capture richer curvature information than two-point approaches and thereby make the search direction more reliable. (2) Under a Lipschitz condition and convex constraints, the approach is globally convergent for monotone nonlinear equations. (3) The search direction satisfies both the sufficient descent property and the trust region property, independently of any line search.

The remainder of this paper is structured as follows:

Section 2 elaborates on the motivation behind the proposed approach and presents the corresponding algorithm.

Section 3 analyzes the global convergence of the proposed approach.

Section 4 conducts comparative numerical experiments to evaluate the method’s performance.

Section 5 provides a summary.

2. Algorithm

In this section, we first revisit quasi-Newton iterative methods, which generate a sequence of iterates $\{x_k\}$ using the iterative formula

$$x_{k+1} = x_k + \alpha_k d_k. \tag{3}$$

Within this framework, the step length $\alpha_k > 0$ is obtained by a line search, and the search direction $d_k$ is computed as

$$d_k = -H_k F_k, \tag{4}$$

in which $F_k = F(x_k)$ and $H_k$ is an approximation of the inverse of the Jacobian matrix of $F$ at the $k$-th iteration. One well-known quasi-Newton update, the symmetric rank-one (SR1) formula, is

$$H_{k+1} = H_k + \frac{(s_k - H_k y_k)(s_k - H_k y_k)^{\top}}{(s_k - H_k y_k)^{\top} y_k}, \tag{5}$$

where $s_k = x_{k+1} - x_k$ and $y_k = F_{k+1} - F_k$. In the SR1 iteration, the matrix $H_{k+1}$ is not guaranteed to remain positive definite. Moreover, the denominator $(s_k - H_k y_k)^{\top} y_k$ may approach zero, in which case the update breaks down.
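As a minimal sketch of the classical SR1 update (5), $H_{k+1} = H_k + \frac{(s_k - H_k y_k)(s_k - H_k y_k)^{\top}}{(s_k - H_k y_k)^{\top} y_k}$, the plain-Python function below updates a dense matrix stored as a list of lists. The skip rule controlled by the hypothetical parameter `r` is the usual textbook safeguard against a vanishing denominator; it is our illustrative assumption and is not the modification of [23] or [24].

```python
import math

def sr1_inverse_update(H, s, y, r=1e-8):
    """Classical SR1 update of an inverse-Jacobian approximation H.

    H is an n x n list of lists; s and y are length-n lists.
    The update is skipped (a copy of H is returned) when the denominator
    is tiny relative to ||s - H y|| * ||y|| (textbook safeguard).
    """
    n = len(s)
    Hy = [sum(H[i][j] * y[j] for j in range(n)) for i in range(n)]
    v = [s[i] - Hy[i] for i in range(n)]          # v = s_k - H_k y_k
    denom = sum(v[i] * y[i] for i in range(n))    # v^T y_k
    norm_v = math.sqrt(sum(t * t for t in v))
    norm_y = math.sqrt(sum(t * t for t in y))
    if abs(denom) <= r * norm_v * norm_y:
        return [row[:] for row in H]              # skip: denominator too small
    return [[H[i][j] + v[i] * v[j] / denom for j in range(n)] for i in range(n)]
```

For example, starting from $H_0 = I$ with $s = (1, 2)^{\top}$ and $y = (2, 1)^{\top}$, the function returns $H_1 = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$. This matrix satisfies the secant equation $H_1 y = s$ but has eigenvalues $\pm 1$, which illustrates how SR1 can lose positive definiteness.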

To enhance the performance of the original SR1 approach, two modified formulations have been proposed in existing studies. The first is due to Abubakar et al. [23], who modified (5) by introducing a parameter; their modified formula is given by:

The second is due to Awwal et al. [24], who modified the denominator of (5) with a max function to avoid singularity. Their modified formula reads:

The SR1 variants (6) and (7) rely only on the latest two points and therefore have two key flaws. First, their heavy dependence on the most recent $s_k$ and $y_k$ restricts them to a narrow set of local information. Such limited local data fails to capture the global curvature of the mapping, leading to inaccurate inverse approximations and unstable convergence paths, especially for strongly nonlinear problems. Second, they underperform in high-dimensional or tightly constrained settings: two vectors cannot fully represent multidimensional curvature, and the very small steps $s_k$ produced under tight constraints make the available information hard to distinguish, which renders the matrix updates ineffective and can cause the algorithm to stagnate.

In [28], Broyden put forward the following update formula:

$$B_{k+1} = B_k + \frac{(y_k - B_k s_k)\, s_k^{\top}}{s_k^{\top} s_k}, \tag{8}$$

where $B_k$ is an approximation of the Jacobian matrix at the $k$-th iteration. Based on Broyden's work, Fang et al. [9] proposed a modified quasi-Newton method whose update formula is

where

,

,

.
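To illustrate Broyden's update from [28], $B_{k+1} = B_k + \frac{(y_k - B_k s_k)s_k^{\top}}{s_k^{\top}s_k}$, the plain-Python sketch below implements it and can be used to check that the updated matrix satisfies the secant equation $B_{k+1}s_k = y_k$. It is a generic illustration of the classical formula, not an implementation of the modified update of Fang et al. [9]; the helper `matvec` is our own.

```python
def matvec(A, v):
    """Dense matrix-vector product for lists of lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]

def broyden_update(B, s, y):
    """Broyden's rank-one update of a Jacobian approximation B.

    Returns B + (y - B s) s^T / (s^T s), which satisfies B_new s = y.
    s is assumed to be nonzero.
    """
    Bs = matvec(B, s)
    r = [yi - bi for yi, bi in zip(y, Bs)]   # secant residual y_k - B_k s_k
    ss = sum(si * si for si in s)            # s_k^T s_k
    return [[B[i][j] + r[i] * s[j] / ss for j in range(len(s))]
            for i in range(len(B))]
```

Unlike SR1, the Broyden update is generally nonsymmetric: with $B_0 = I$, $s = (1, 0)^{\top}$ and $y = (2, 3)^{\top}$ it returns $\begin{pmatrix} 2 & 0 \\ 3 & 1 \end{pmatrix}$.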

Numerical experiments in [9] indicate that this modified quasi-Newton method is promising. Inspired by Equation (9), this paper proposes the following revised SR1 update formula:

where

,

,

,

,

. If we set

for all k, then the revised update (10) reduces to the classical SR1 formula (5).

According to the definitions of

and

, we have

where

I is an identity matrix with the same dimension as

. If we set

and use (10), we have

Based on the monotonicity of

F and

, we conclude that

is a symmetric positive definite matrix.

Combining (4) and (10), we have

From the definition of

, it is easy to see that for

, we have

For

, we set

and then we get

. When

, we have

In addition, inspired by the Barzilai–Borwein (BB) method [29], we propose the following parameter:
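For reference, the two classical Barzilai–Borwein scalars built from $s_k$ and $y_k$ are $\lambda^{\mathrm{BB1}}_k = s_k^{\top}s_k / s_k^{\top}y_k$ and $\lambda^{\mathrm{BB2}}_k = s_k^{\top}y_k / y_k^{\top}y_k$. The plain-Python sketch below computes both; it illustrates the BB idea only and is not the paper's parameter (16). For a monotone $F$ with $y_k = F_{k+1} - F_k$, inequality (2) gives $s_k^{\top}y_k \ge 0$; the code additionally assumes strict positivity.

```python
def bb_parameters(s, y):
    """Return the two classical Barzilai-Borwein scalars (BB1, BB2).

    BB1 = s^T s / s^T y and BB2 = s^T y / y^T y; s^T y > 0 is required.
    """
    sts = sum(a * a for a in s)
    sty = sum(a * b for a, b in zip(s, y))
    yty = sum(b * b for b in y)
    if sty <= 0:
        raise ValueError("BB parameters need s^T y > 0")
    return sts / sty, sty / yty
```

By the Cauchy–Schwarz inequality, BB2 never exceeds BB1 when $s_k^{\top}y_k > 0$; for $s = (1, 0)^{\top}$ and $y = (2, 1)^{\top}$ the function returns `(0.5, 0.4)`.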

Furthermore, using (13), (15) and (16), this paper proposes the following modified search direction:

where

,

.

,

,

,

.

Next, we introduce the projection operator $P_\Omega : \mathbb{R}^n \to \Omega$, defined as

$$P_\Omega(x) = \arg\min \{\, \|x - y\| : y \in \Omega \,\}.$$

This operator projects the vector $x$ onto the closed convex set $\Omega$, which ensures that the iterates generated by our algorithm remain in $\Omega$. It is well known that the projection operator is non-expansive, that is,

$$\|P_\Omega(x) - P_\Omega(y)\| \le \|x - y\|, \quad \forall\, x, y \in \mathbb{R}^n.$$
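As a concrete case of the projection operator, when $\Omega$ is a box $\{x : l \le x \le u\}$ (for example the nonnegative orthant, with $l = 0$ and $u = +\infty$), the projection reduces to componentwise clipping. The plain-Python sketch below implements this special case and checks the non-expansive property on random pairs; the box constraint and the helper names are our illustrative assumptions, not the constraint sets used in the experiments.

```python
import math
import random

def project_box(x, lower, upper):
    """Euclidean projection onto the box {z : lower <= z <= upper}.

    For a box, the projection is obtained by clipping each component.
    """
    return [min(max(xi, li), ui) for xi, li, ui in zip(x, lower, upper)]

def check_nonexpansive(lower, upper, trials=1000, seed=0):
    """Check ||P(x) - P(y)|| <= ||x - y|| on random pairs (evidence, not proof)."""
    rng = random.Random(seed)
    n = len(lower)
    for _ in range(trials):
        x = [rng.uniform(-3.0, 3.0) for _ in range(n)]
        y = [rng.uniform(-3.0, 3.0) for _ in range(n)]
        lhs = math.dist(project_box(x, lower, upper), project_box(y, lower, upper))
        if lhs > math.dist(x, y) + 1e-12:
            return False
    return True
```

For example, projecting $(-1, 0.5, 2)$ onto $[0, 1]^3$ gives $(0, 0.5, 1)$, and unbounded sides can be expressed with `math.inf`.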

Next, we present the detailed steps of our proposed algorithm (Algorithm 1).

Algorithm 1. Revised SR1 Update Method (RSR1M)

Step 0. Select the parameters along with an initial point $x_0$. Let $k := 0$.

Step 1. If the stopping criterion is satisfied, terminate the algorithm; otherwise, proceed to Step 2.

Step 2. Determine the search direction $d_k$ via (17).

Step 3. Define the step length, where $m$ is the smallest natural number that makes the trial point satisfy the following inequality: The definition of the projection operator $P$ for is Moreover, set and proceed to Step 4.

Step 4. If and , set , terminate the algorithm; otherwise, compute

and set $k := k + 1$, and proceed to Step 1.
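Algorithm 1 is a projection-type method in the spirit of Solodov and Svaiter [14]. The plain-Python sketch below shows such a framework under stated assumptions: the search direction defaults to the stand-in $d_k = -F(x_k)$ rather than the revised SR1 direction (17); the backtracking test $-F(x_k + \alpha d_k)^{\top} d_k \ge \sigma \alpha \|d_k\|^2$, the projection step $x_{k+1} = P_\Omega[x_k - \xi_k F(z_k)]$ with $\xi_k = F(z_k)^{\top}(x_k - z_k)/\|F(z_k)\|^2$, and the values of `beta`, `rho` and `sigma` are common choices in this literature that we assume for illustration, not the settings of the paper. All function names are hypothetical.

```python
import math

def _dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def stand_in_direction(x, Fx):
    """Hypothetical placeholder for Step 2: d = -F(x), NOT direction (17)."""
    return [-v for v in Fx]

def projection_method(F, project, x0, direction=stand_in_direction,
                      tol=1e-6, max_iter=1000, beta=1.0, rho=0.5, sigma=1e-4):
    """Projection-type scheme for F(x) = 0, x in Omega (illustrative sketch).

    F maps a list to a list; project(x) is the Euclidean projection onto Omega.
    Returns (approximate solution, number of iterations).
    """
    x = list(x0)
    for k in range(max_iter):
        Fx = F(x)
        if math.sqrt(_dot(Fx, Fx)) <= tol:              # Step 1: stopping test
            return x, k
        d = direction(x, Fx)                            # Step 2: search direction
        dd = _dot(d, d)
        alpha = beta                                    # Step 3: backtracking line search
        for _ in range(60):
            z = [a + alpha * b for a, b in zip(x, d)]
            Fz = F(z)
            if -_dot(Fz, d) >= sigma * alpha * dd:
                break
            alpha *= rho
        nFz2 = _dot(Fz, Fz)
        if math.sqrt(nFz2) <= tol and project(z) == z:  # Step 4: trial point solves
            return z, k + 1
        xi = _dot(Fz, [a - b for a, b in zip(x, z)]) / nFz2
        x = project([a - xi * b for a, b in zip(x, Fz)])  # hyperplane projection
    return x, max_iter

def example_mapping(x):
    """Hypothetical monotone test mapping F(x) = 2x - sin(x), componentwise."""
    return [2.0 * v - math.sin(v) for v in x]

def project_nonnegative(x):
    """Projection onto the nonnegative orthant (hypothetical choice of Omega)."""
    return [max(v, 0.0) for v in x]
```

On the toy mapping $F(x) = 2x - \sin x$ over the nonnegative orthant, whose unique solution is $x^* = 0$, `projection_method(example_mapping, project_nonnegative, [1.0] * 5)` converges well within the iteration limit. Passing a different `direction` function changes only Step 2, which is where a direction such as (17) would enter.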

4. Numerical Experiments

This section presents a series of numerical experiments that assess the performance of Algorithm 1 in comparison with two algorithms: (1) the three-term projection approach based on the spectral secant equation (TTPM) [22]; and (2) the derivative-free approach involving the symmetric rank-one update (DFSR1) [24]. The core of TTPM is its three-term search direction, which combines a projection-based feasible direction with a conjugate gradient-type correction term. The projection-based direction uses the Solodov-Svaiter operator to keep the iterates within the convex constraint set, while the correction term exploits historical direction information to prevent stagnation. This design handles constraints effectively without derivative information, making TTPM a representative projection-based derivative-free approach for constrained nonlinear monotone equations. DFSR1 extends the SR1 quasi-Newton update to the derivative-free setting through a modified correction term with a positive tuning parameter, rather than through a Jacobian approximation. Combined with a max-type denominator, this modification avoids numerical singularity while preserving the symmetric rank-one structure for efficient updates. Its key strength is the adaptive adjustment of the approximation matrix by a spectral parameter, which ensures that the search direction satisfies the sufficient descent property.

These methods are chosen as benchmarks for three reasons: (1) both are derivative-free and tailored to convex constrained monotone equations, which makes the comparison with the proposed method fair; (2) they represent two dominant design philosophies, namely projection-based three-term conjugate gradient methods and SR1-based quasi-Newton methods; and (3) their performance has been validated in prior studies: TTPM exhibits reliable stability in constraint handling, and DFSR1 outperforms approaches such as PDY in convergence speed. Together, these strengths provide a well-rounded reference for assessing the merits of the proposed method across key performance metrics.

All algorithms were implemented in MATLAB 2018a and executed on a Lenovo computer equipped with an 11th-generation Intel Core i7-11700K processor. To ensure a fair comparison, all algorithms were run with the parameters specified in their respective original papers, and the same initial points were used across all tests. We evaluated the algorithms on thirteen problems commonly used in the literature, with dimensions of 10,000 and 50,000. All test problems were initialized using the following eight starting points:

For Algorithm 1, the parameter settings were as follows: We define

The experiments were terminated as soon as either of the following conditions was satisfied: (1) the number of iterations exceeded 1000; or (2) the residual norm satisfied

or

.

We now list the test problems, where the mapping F is defined as follows:

Problem 1. This problem, drawn from [30], is

Problem 2. This problem, drawn from [30], is

Problem 3. This problem, drawn from [30], is

Problem 4. This problem, drawn from [31], is

Problem 5. This problem, drawn from [31], is

Problem 6. This problem, drawn from [31], is

Problem 7. This problem, drawn from [31], is

Problem 8. This problem, drawn from [22], is

Problem 9. This problem, drawn from [22], is

Problem 10. This problem, drawn from [22], is

Problem 11. This problem, drawn from [31], is

Problem 12. This problem, drawn from [31], is

Problem 13. This problem, drawn from [31], is

Detailed outcomes of the experiments are presented in Table 1, Table 2 and Table 3. Each entry lists, in order, Niter, Nfun, Tcpu and the final residual: “Niter” is the number of iterations, “Nfun” the number of function evaluations, “Tcpu” the CPU time in seconds, and the last value the final residual norm at program termination. “Dim” denotes the dimension of each test problem and “Sta” the starting point. The tables show that, on these test problems, Algorithm 1 has a consistent advantage over TTPM and DFSR1: it attains the smallest Niter and Nfun in many test instances and the smallest CPU time in the vast majority of the test problems.

To investigate how parameter variations affect the performance of Algorithm 1, we conduct sensitivity analyses on its key parameters, including c. The evaluation metrics are the average number of iterations, the average number of function evaluations, the average CPU time (in seconds) and the average optimal residual, that is, the final residual norm when the program terminates. The results are presented in Table 4, Table 5, Table 6 and Table 7.

Table 4 focuses on the sensitivity analysis of parameter

, with three tested values:

,

, and

. The results show that the best performance is achieved when

, with the minimum average number of iterations (10.529), average number of function evaluations (48.183), average CPU time (0.030 s) and average optimal residual ($2.710 \times 10^{-7}$). Compared to

, when

, the average number of iterations increases by 37.3%, the average number of function evaluations by 49.8%, the average CPU time by 13.3%, and the average optimal residual by 13.9%. In contrast, the differences between

and

are small: the average number of iterations increases by only 0.34%, the average number of function evaluations by 3.58%, the average CPU time by 10% and the average optimal residual by 5.98%. This indicates that

is the optimal choice,

is still acceptable, but

leads to significant performance degradation.
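The percentages reported for Tables 4–7 can be read as relative changes with respect to the best-performing setting; this reading is our assumption, since the formula is not stated explicitly. For an averaged metric $m$ (iterations, function evaluations, CPU time or residual),

$$\Delta m = \frac{m_{\text{tested}} - m_{\text{best}}}{m_{\text{best}}} \times 100\%.$$

Under this reading, the 37.3% increase in the average number of iterations reported for Table 4 corresponds to roughly $10.529 \times 1.373 \approx 14.46$ iterations on average.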

Table 5 analyzes the sensitivity of parameter

, with tested values of 0.4, 0.5 and 0.6. The best performance is observed at 0.5, where all metrics are minimized (average number of iterations 10.529, average number of function evaluations 48.183, average CPU time 0.030 s, average optimal residual $2.710 \times 10^{-7}$). At 0.4, the average number of iterations increases by 43.3%, the average number of function evaluations by 36.3%, the average CPU time by 26.67% and the average optimal residual by 31.88%. At 0.6, the average number of iterations increases by 3.07%, the average number of function evaluations by 8.74%, the average CPU time by 16.67% and the average optimal residual by 2.95%. Thus 0.5 is the best balance point: decreasing the parameter to 0.4 causes a severe performance loss, whereas increasing it to 0.6 leads to moderate degradation.

Table 6 examines the sensitivity of parameter c, with tested values of 0.05, 0.1, and 0.2. The optimal performance is achieved when c = 0.1, with all metrics minimized. Compared to c = 0.1, when c = 0.05, the average number of iterations increases by 0.58%, the average number of function evaluations by 1.51%, the average CPU time by 3.33%, and the average optimal residual by 0.07%. For c = 0.2, the average number of iterations increases by 3.12%, the average number of function evaluations by 8.72%, the average CPU time by 16.67%, and the average optimal residual by 8.12%. This demonstrates that c = 0.1 is the optimal choice, c = 0.05 is slightly inferior, and c = 0.2 leads to noticeable performance decline.

Table 7 investigates the sensitivity of parameters

and

, with three tested combinations:

;

; and

. The combination

performs the best, with the minimum average number of iterations (10.529), average number of function evaluations (48.183), average CPU time (0.030 s) and average optimal residual ($2.710 \times 10^{-7}$). When

, the average numbers of iterations and function evaluations and the average optimal residual are unchanged, while the average CPU time increases by 3.33%. For

, the average number of iterations increases by 0.03%, the average number of function evaluations by 1.78%, the average CPU time by 3.33%, and the average optimal residual by 0.4%. This indicates that

is the optimal parameter pair, with other combinations causing only minor performance fluctuations.

These sensitivity analyses identify the best-performing values, among those tested, for the key parameters of Algorithm 1 and quantify how much performance changes when these parameters vary, which offers practical guidance for choosing them in applications.

To compare all algorithms comprehensively, we use the performance profiles of Dolan and Moré [32], a standard tool for assessing algorithmic performance in numerical optimization.
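For readers who wish to reproduce profiles such as those in Figures 1–3 from tabulated data, the Dolan–Moré profile of solver $s$ is $\rho_s(\tau) = \frac{1}{n_p}\,\big|\{p : r_{p,s} \le \tau\}\big|$, where $r_{p,s} = t_{p,s} / \min_{s'} t_{p,s'}$ is the ratio of solver $s$'s cost on problem $p$ to the best cost on that problem. The plain-Python sketch below computes this; marking failures with `math.inf` and requiring strictly positive costs are our conventions (zero CPU times would first need replacing by a small positive value).

```python
import math

def performance_profile(T, taus):
    """Dolan-More performance profile.

    T[p][s] is the cost (e.g. Niter, Nfun or Tcpu) of solver s on problem p;
    math.inf marks a failure and all finite costs are assumed positive.
    Returns rho with rho[s][i] = fraction of problems on which solver s is
    within a factor taus[i] of the best solver for that problem.
    """
    n_p, n_s = len(T), len(T[0])
    ratios = []
    for row in T:
        best = min(row)                      # best cost on this problem
        ratios.append([t / best if math.isfinite(t) else math.inf for t in row])
    return [[sum(1 for p in range(n_p) if ratios[p][s] <= tau) / n_p
             for tau in taus]
            for s in range(n_s)]
```

The value $\rho_s(1)$ is the fraction of problems on which solver $s$ is the best, and $\rho_s(\tau)$ for large $\tau$ approaches the fraction of problems it solves at all; plotting against $\log_2 \tau$ only changes the horizontal axis.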

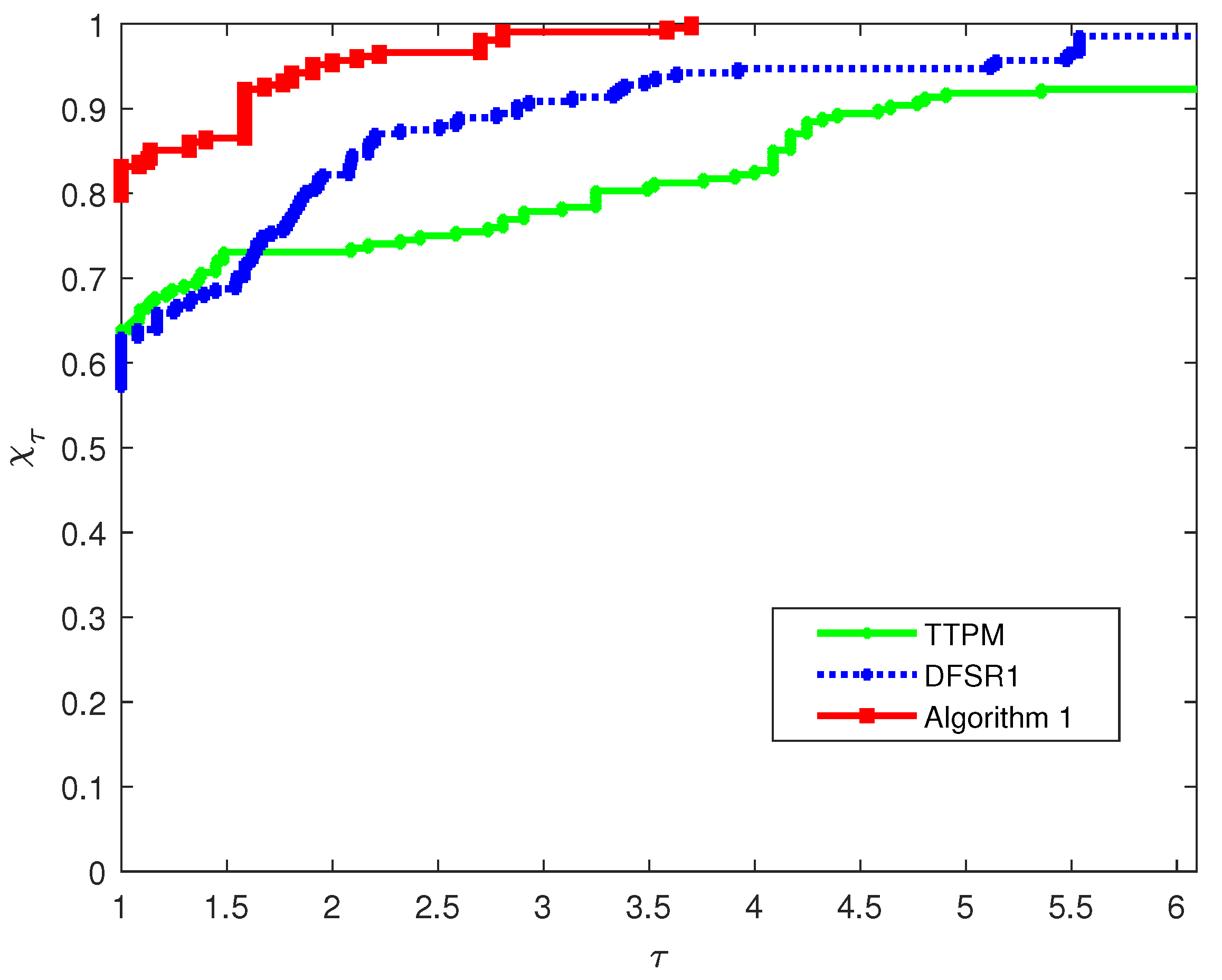

Figure 1 illustrates the performance profiles for the number of iterations on a

-scale, involving three algorithms: TTPM, DFSR1, and Algorithm 1.

In Figure 1, as $\tau$ increases, Algorithm 1 consistently solves a higher proportion of problems than TTPM and DFSR1. In particular, once $\tau$ reaches about 1.5, the curve of Algorithm 1 rises markedly faster and remains on top thereafter, which indicates a clear advantage in iteration efficiency. In contrast, the proportion of problems solved by DFSR1 grows relatively slowly, and TTPM is the least competitive of the three algorithms in terms of iterations.

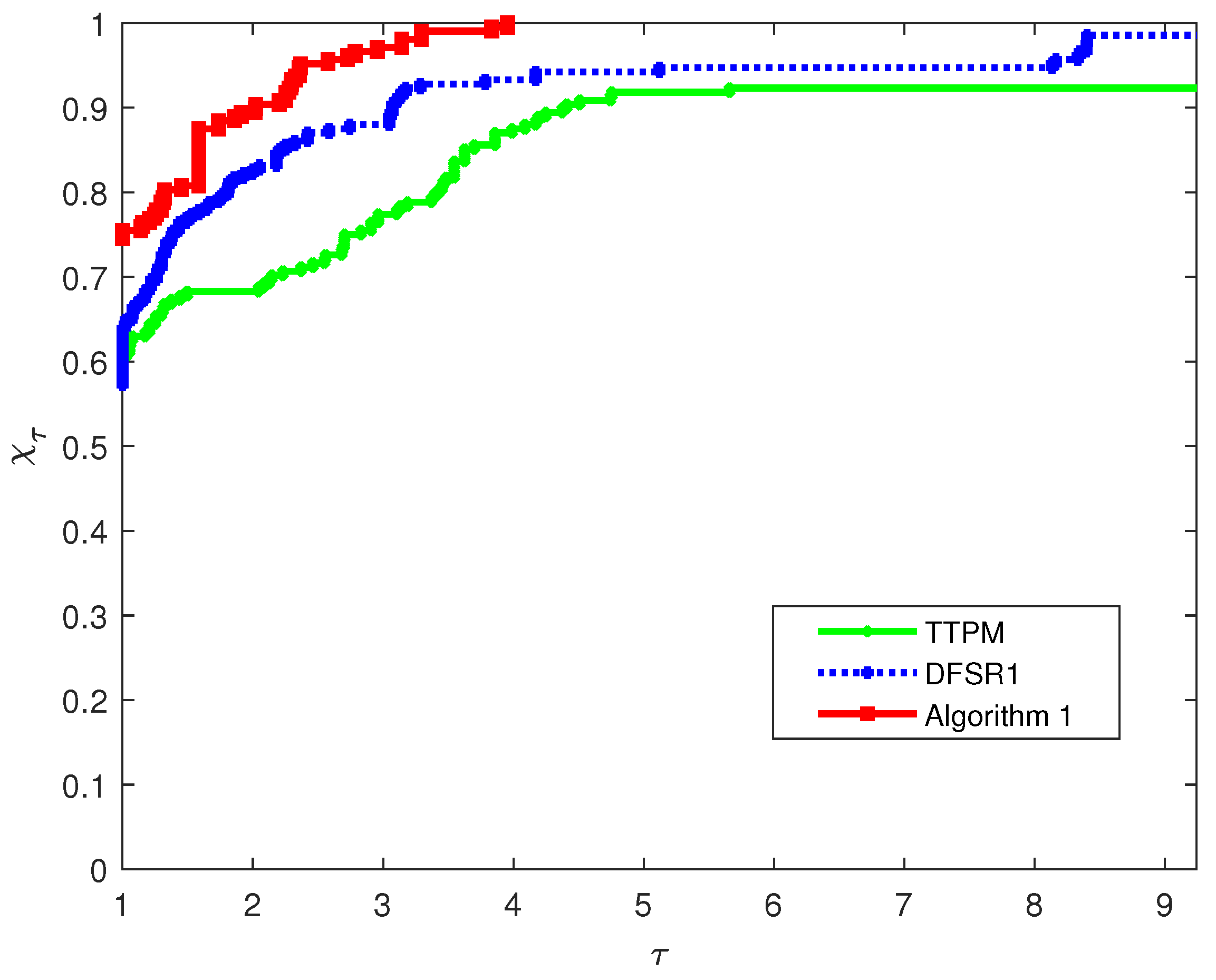

Figure 2 presents the performance profiles for function evaluations. Algorithm 1 solves the largest proportion of problems for every value of $\tau$. Consistent with the iteration results, even when $\tau$ is small, the proportion of problems solved by Algorithm 1 already exceeds that of TTPM and DFSR1. TTPM and DFSR1 perform similarly in function evaluations, but both are less efficient than Algorithm 1.

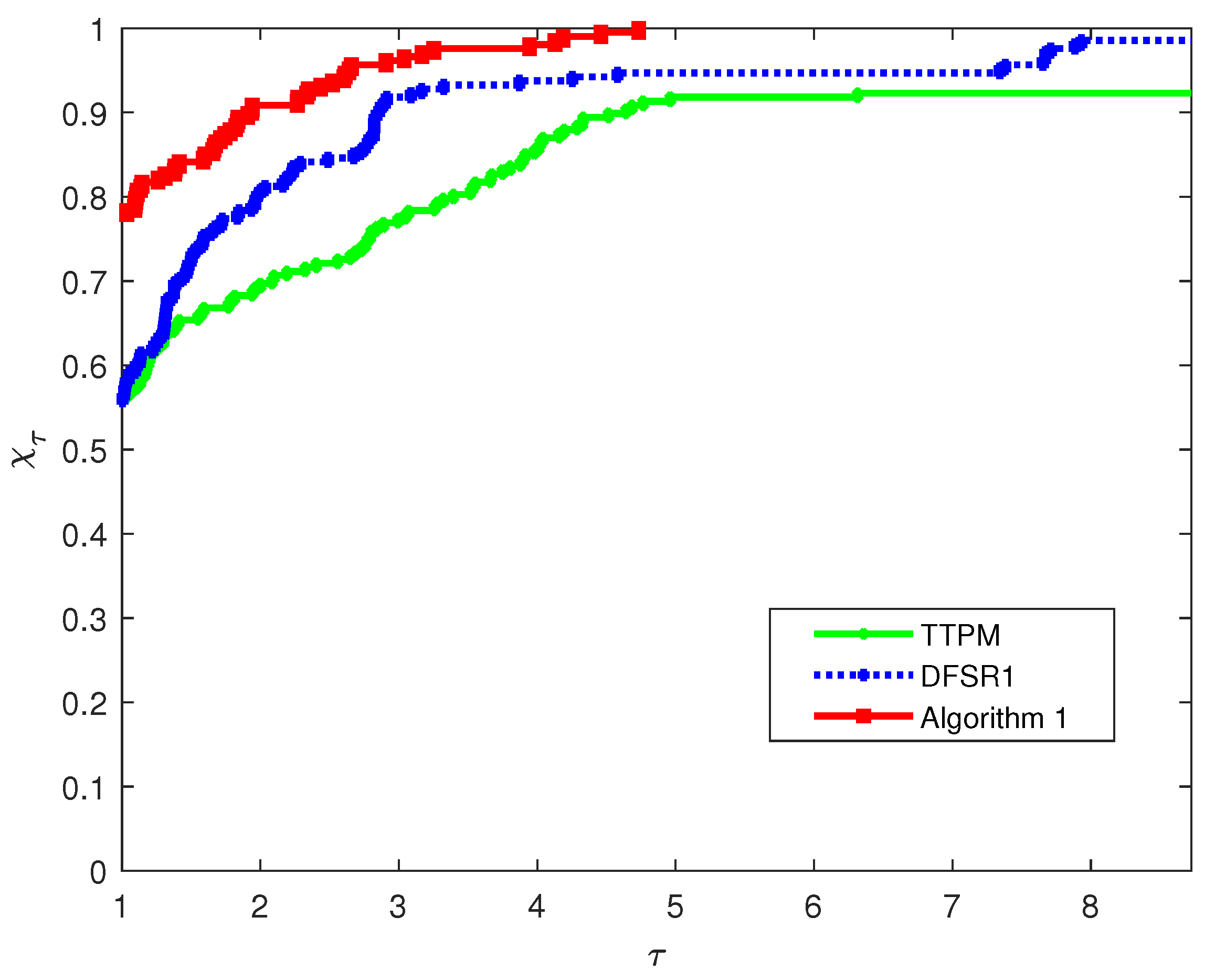

Figure 3 illustrates the differences in CPU time. The curve of Algorithm 1 takes the lead from the very beginning and stays at the highest level as $\tau$ increases; that is, over the entire range of $\tau$ values shown, Algorithm 1 is more efficient in CPU time than DFSR1 and TTPM. Both for small $\tau$, which reflects how often a solver is the fastest, and for larger $\tau$, which reflects how many problems a solver eventually solves, Algorithm 1 solves a higher proportion of the test problems, indicating a consistent advantage in computational speed on this test set.

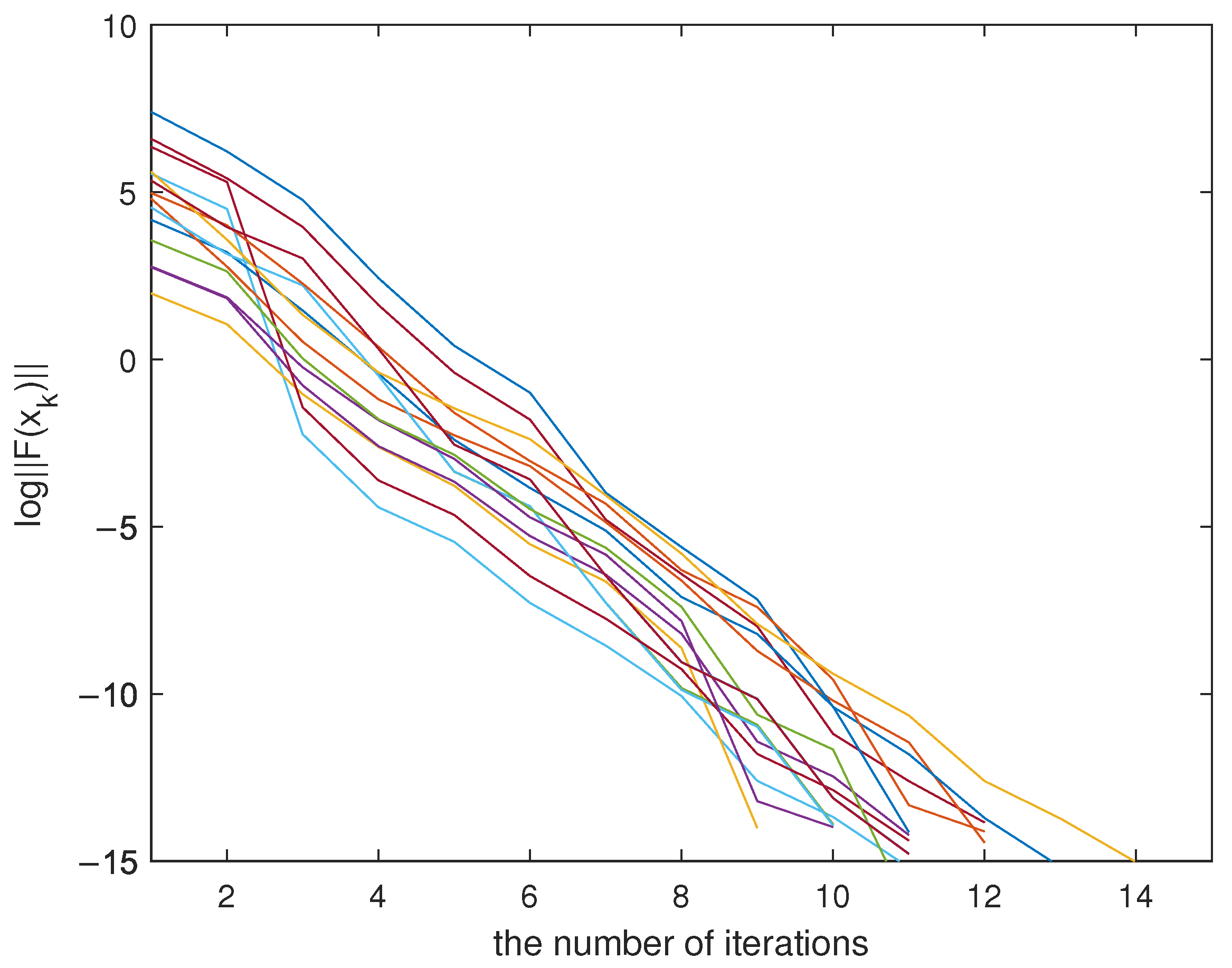

Moreover, we examine the convergence rate of Algorithm 1 numerically. For Problem 8, we consider the two dimensions (10,000 and 50,000) and the eight initial points, giving 16 problem instances in total. We plot the curve of

versus the number of iterations, as shown in

Figure 4. It is evident that the curves of

decrease approximately linearly with the number of iterations, indicating that Algorithm 1 exhibits linear convergence on Problem 8.
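Assuming the quantity plotted in Figure 4 is the logarithm of the residual norm $\|F(x_k)\|$, a straight-line decrease corresponds to $\|F(x_k)\| \approx C q^k$ with $q < 1$. The plain-Python sketch below estimates $q$ by a least-squares fit of $\log\|F(x_k)\|$ against $k$; it is an illustrative diagnostic with a hypothetical function name, not a procedure used in the paper.

```python
import math

def estimate_linear_rate(residuals):
    """Estimate q in ||F(x_k)|| ~ C * q**k by least squares on log residuals.

    residuals must contain at least two positive values.
    """
    n = len(residuals)
    logs = [math.log(r) for r in residuals]
    k_mean = (n - 1) / 2                 # mean of k = 0, ..., n - 1
    l_mean = sum(logs) / n
    num = sum((k - k_mean) * (l - l_mean) for k, l in enumerate(logs))
    den = sum((k - k_mean) ** 2 for k in range(n))
    return math.exp(num / den)           # fitted slope mapped back to q
```

A value of $q$ clearly below 1 that stays stable when early iterations are dropped from the fit supports the linear-convergence reading of the plot.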