1. Introduction

Fuzzy preference modeling has increasingly relied on relational approaches to systematically capture preference relations between alternatives [1]. Within this framework, t-norm-based aggregation functions have emerged as fundamental tools, particularly in the study of fuzzy relational equations that represent preference structures in uncertain environments [2,3]. These t-norms have proven particularly valuable because they satisfy critical properties like associativity and monotonicity, making them suitable for multi-stage decision processes [4,5].

However, as noted by Fodor and Roubens [6], the associativity condition required by t-norms is not essential in many real-world preference scenarios. This theoretical insight led to the development of overlap functions, a more flexible class of aggregation operators that preserves continuity and boundary conditions while relaxing the need for associativity [7]. In particular, the seminal work by Dimuro et al. [8] introduced the concept of additive generator pairs, providing a systematic framework for constructing overlap functions with desirable theoretical properties.

Beyond their theoretical appeal, overlap functions have demonstrated considerable practical value in various application domains. For instance, they have been used to improve image segmentation via advanced thresholding techniques [9] and to facilitate more sophisticated three-way decision-making models in interval-valued fuzzy information systems [10]. Their ability to model situations of indifference, where no clear preference exists between alternatives, has also made them a key component in advanced fuzzy decision-making frameworks [11,12].

Building on this foundation, recent studies have explored the use of overlap functions in optimization frameworks, particularly within the context of min-max programming. The min-max optimization problem has been extensively applied across various domains, including optimal control systems [13], robust machine learning [14], and fuzzy optimization [15].

In fuzzy optimization, significant attention has focused on min-max programming problems with addition-min fuzzy relational inequalities (FRIs), particularly in modeling resource allocation for BitTorrent-like peer-to-peer (BT-P2P) file-sharing systems [16,17]. The general model can be expressed as follows:

where parameters

and

for any

.

Yang et al. [18] demonstrated that the solution set of addition-min FRIs is determined by a unique maximal solution and a convex set of minimal solutions. Building on this structural insight, Yang et al. [19] first proposed the uniform-optimal solution for model (1), where all variables share an identical optimal value. Later, Chiu et al. [20] simplified the solution process by reformulating model (1) as a single-variable optimization problem, thereby enabling the derivation of the uniform-optimal solution through both an analytical and an iterative method. Advancements in solving FRIs, a key tool for optimization under uncertainty, have been comprehensively reviewed by Deo et al. [21]. Specifically, they systematically categorized solution algorithms, ranging from traditional linear programming to modern metaheuristics such as Particle Swarm Optimization, while also exploring advanced topics, including bipolar fuzzy relations and hybrid models. Furthermore, recent advances in max-min optimization models have been developed to evaluate the stability of the widest-interval solution for systems of max-min FRIs [22,23] and addition-min FRIs [24].

While mathematically convenient, the uniform-optimal solution of model (1) may prove suboptimal for practical applications like BT-P2P data transmission. Yang [25] addressed this limitation through an optimal-vector-based (OVB) algorithm to compute so-called minimal-optimal solutions. Guu et al. [26] also proposed a two-phase method that optimizes transmission costs under congestion constraints to obtain another minimal-optimal solution, which is at least as good as, and possibly strictly better than, the uniform-optimal solution in terms of cost. Subsequent studies expanded on this concept, including the exploration of leximax minimal solutions [27], active-set-based methods [28], and iterative approaches for generalized min-max problems [29].

These developments underscore the practical importance of identifying not only optimal but also structurally minimal solutions in min-max optimization models. Motivated by this, the present study extends the concept to a broader class of problems constrained by addition-overlap functions. Specifically, we consider the following min-max programming model:

where parameters

and

for any

.

is the overlap function, which will be defined later in Definition 1.

When the constraint system in problem (2) is feasible, Wu et al. [30] demonstrated that a uniform-optimal solution is guaranteed to exist. Notably, this result remains valid for the optimization problem (2) regardless of the specific operator chosen from the class of overlap functions. Their approach involves transforming the original problem into an equivalent single-variable optimization problem, which can be efficiently solved using the classical bisection method.

Moreover, Wu et al. [30] extended the min-max optimization problem (2) by incorporating a novel overlap function operator (4), termed the “min-shifted” overlap function. With this operator, the min-max model becomes

where the overlap function

is defined as

Although the classical bisection method can be applied for general overlap functions, including the min-shifted overlap function in (4), Wu et al. [30] adopted a distinct approach for solving model (3) and then derived the uniform-optimal solution using a tailored algorithm.

However, to the best of our knowledge, no existing method provides an effective way to compute minimal-optimal solutions for problem (3). This paper fills this gap by proposing an iterative refinement strategy that starts from the uniform-optimal solution and systematically reduces adjustable variables while preserving both feasibility and optimality. The resulting minimal-optimal solution not only retains global optimality but also satisfies a strong minimality condition, ensuring that no component can be further decreased without violating the problem’s constraints.

The contribution of our approach is that it solves a problem for which, to the best of our knowledge, no effective method was previously available. The primary evidence for its effectiveness lies in the following aspects:

- (a). We have rigorously proven that our method always produces a feasible and minimal-optimal solution (as established in Section 3 and Section 4).

- (b). The numerical examples in Section 5 confirm that the algorithm functions as intended, correctly identifying diverse minimal-optimal solutions for various problem instances.

- (c). By finding minimal-optimal solutions, our method provides decision-makers with superior, structurally efficient alternatives compared to the uniform-optimal solution, which is a significant practical advancement.

The remainder of this paper is organized as follows: Section 2 reviews fundamental concepts of overlap functions and their properties. Section 3 formulates the min-max optimization problem with addition-overlap constraints. Section 4 presents our iterative algorithm for finding minimal-optimal solutions along with its theoretical analysis. Section 5 provides numerical examples demonstrating the method’s effectiveness, and Section 6 concludes with directions for future research.

2. Some Properties of the Min-Shifted Overlap Function

In this section, we review the definition of an overlap function and explore the relevant properties of the min-shifted overlap function as defined in (4). This foundational knowledge will help us find the minimal-optimal solution for problem (3).

Definition 1. (See [7,8]). A bivariate function $O:[0,1]^2\to[0,1]$ is said to be an overlap function if it satisfies the following conditions:

- (O1) $O(x,y)=O(y,x)$ for all $x,y\in[0,1]$;

- (O2) $O(x,y)=0$ if and only if $xy=0$;

- (O3) $O(x,y)=1$ if and only if $xy=1$;

- (O4) O is non-decreasing;

- (O5) O is continuous.
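As a quick sanity check of Definition 1, the following minimal sketch tests conditions (O1) to (O4) on a finite grid for two textbook overlap functions, the product and the minimum. These two functions and the helper name `check_overlap_axioms` are illustrative assumptions and are not the min-shifted operator studied in this paper; a finite grid can also not certify continuity (O5).

```python
import itertools


def overlap_product(x, y):
    """Product overlap function O(x, y) = x * y (illustrative stand-in)."""
    return x * y


def overlap_min(x, y):
    """Minimum overlap function O(x, y) = min(x, y) (illustrative stand-in)."""
    return min(x, y)


def check_overlap_axioms(O, steps=20, tol=1e-12):
    """Grid check of (O1)-(O4); continuity (O5) is not tested here."""
    grid = [k / steps for k in range(steps + 1)]
    for x, y in itertools.product(grid, repeat=2):
        v = O(x, y)
        # (O1) symmetry
        if abs(v - O(y, x)) > tol:
            return False
        # (O2) O(x, y) = 0 if and only if xy = 0
        if (abs(v) <= tol) != (x == 0 or y == 0):
            return False
        # (O3) O(x, y) = 1 if and only if xy = 1
        if (abs(v - 1) <= tol) != (x == 1 and y == 1):
            return False
    # (O4) non-decreasing in the second argument (symmetry covers the first)
    for x in grid:
        values = [O(x, y) for y in grid]
        if any(v2 < v1 - tol for v1, v2 in zip(values, values[1:])):
            return False
    return True


print(check_overlap_axioms(overlap_product))
print(check_overlap_axioms(overlap_min))
```

A function such as the maximum fails the check because it violates (O2): max(0, y) is positive for y > 0.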

For convenience, the form of the min-shifted overlap function in (

4) is simplified as follows:

Let

be the denominator of (

5). Analyzing the value of the overlap function

for (

5), the following results can be obtained.

Lemma 1 ([

30], Lemma 1)

. For (5), there are- (i).

and ;

- (ii).

and ;

- (iii).

, and if and , then ;

- (iv).

;

- (v).

If and , then .

Lemma 2 ([

30], Lemma 2)

. For (5), the following results hold:- (i).

If and , then .

- (ii).

If and , then .

- (iii).

If and , then .

- (iv).

If and , then .

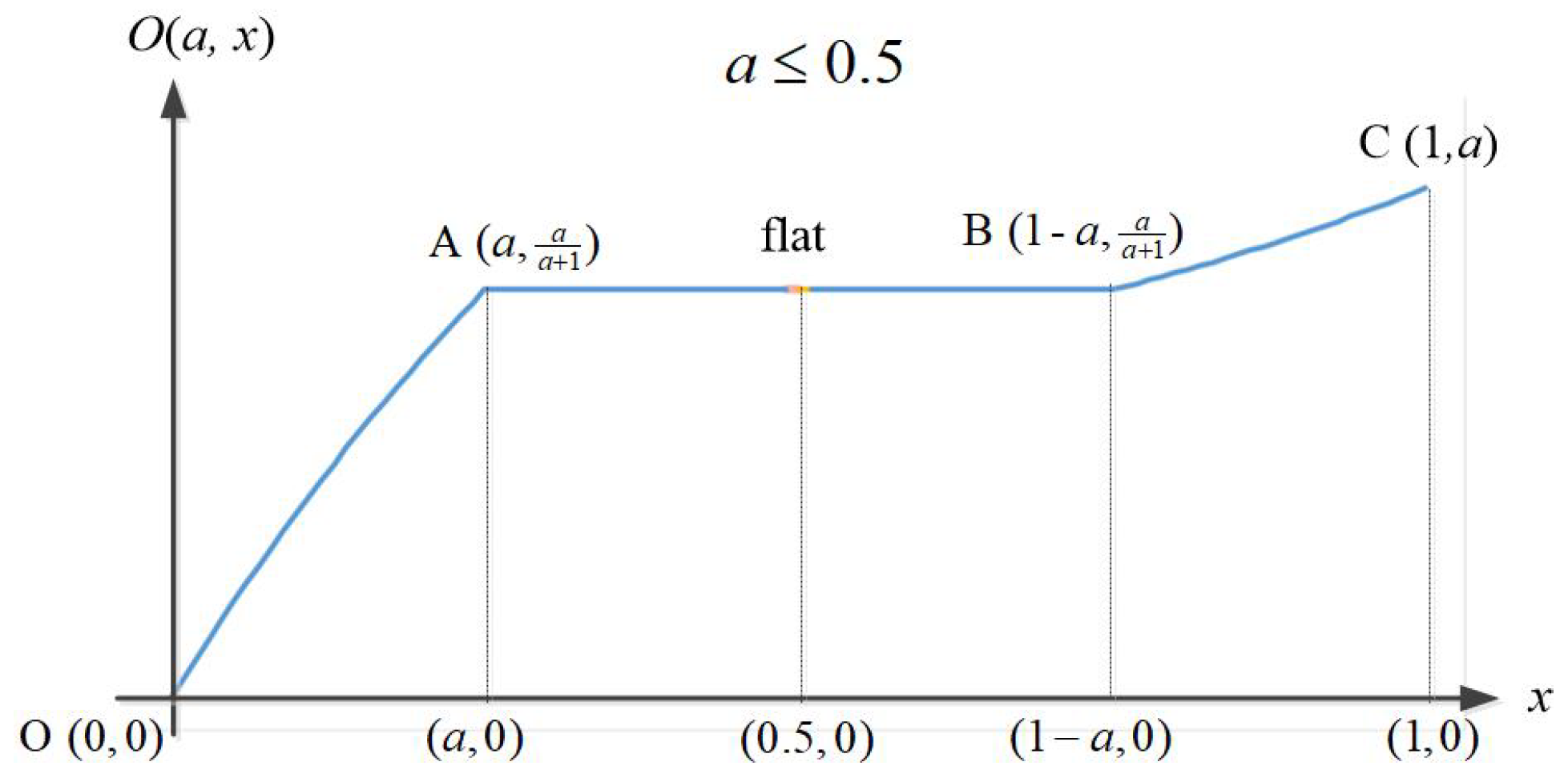

When

is used as a given parameter and

is the dividing point, then the value of the min-shifted overlap function

in (

5) can be derived, and the two sets of results are roughly illustrated in

Figure 1 and

Figure 2.

- (1)

When

, the value of

in (

5) is determined by three cases, as detailed below:

We have

and

. It follows that

and

. Substituting these into

yields

Here,

and

. These imply

and

. Substituting into

gives

Since

, we have

and

. Thus,

and

. Substituting these into

results in

To summarize, when

,

is given by the piecewise function:

Figure 1 (where

) depicts a piecewise function with a flat segment along line AB, where

, indicating that the overlap function

is continuous and non-decreasing in this case.

- (2)

When

, the value of

in (

5) is determined by three cases, as detailed below:

We have

and

. It follows that

and

. Substituting these into

yields

Here,

and

. These imply

and

. Substituting into

gives

Since

, we have

and

. Thus,

and

. Substituting these into

results in

To summarize, when

,

is given by the piecewise function:

Figure 2 (where

) shows a piecewise function without any flat segment, meaning that

is continuous and strictly increasing for all

.

Theorem 1. For the overlap function defined in (5), when , the following properties hold: - (i).

Strict monotonicity for small x: If , then is a strictly increasing function.

- (ii).

Symmetry: If , then .

- (iii).

Strict monotonicity for large x: If , then is a strictly increasing function.

Proof. - (i).

Strict monotonicity for small x:

Given and , we consider two values and show that for .

From (

6), we have

and

. Thus,

Since the denominator is always positive, the numerator

ensures the expression remains positive. Hence,

is strictly increasing in this interval.

Given and , we analyze and as follows:

Evaluating

: Since

, we have

and

, which implies that

,

, and leading to

Evaluating : Since , we have and , which implies that , , and leading to

Since both expressions are identical for all

, it follows that

.

- (iii).

Strict monotonicity for large x:

Given and , we analyze two values and show that for .

From (

6), we have

and

. Thus,

Again, since the denominator is positive and

, we conclude that

is strictly increasing in this interval. □

Theorem 1 states that for , the min-shifted overlap function is strictly increasing in the intervals and , while remaining symmetric in the middle interval .

For example, when , the overlap function is strictly increasing for and . However, by Theorem 1, it remains symmetric at in the middle interval .

Theorem 2. For the overlap function defined in (5), if , then is strictly increasing for all . Proof. Given and , we analyze the following three cases and show that whenever .

From (

7), we have

and

. Thus, the difference is

Since the denominator

is always positive and the numerator

remains positive, it follows that

is strictly increasing in this interval.

From (

7), we have

and

. Thus, the difference is

The denominator

is always positive and the numerator

is positive. Hence,

is strictly increasing in this interval.

From (

7), we have

and

. Thus, the difference is

Since

, the denominator is always positive, and the numerator

is positive. Hence,

is strictly increasing in this interval.

Since is strictly increasing in all three cases, we conclude that it is strictly increasing for all . □

Theorem 2 presents a different result from Theorem 1. It states that for

, the min-shifted overlap function

, as defined in (

5), is strictly increasing over the entire interval

. For example, when

, the overlap function

is strictly increasing throughout the interval

. In other words,

takes unique values for every distinct

x in this interval.

Proposition 1. For the min-shifted overlap function defined as in (5), the following strict quasi-monotonicity property holds: Proof. By Definition 1, the function

is non-decreasing and continuous in

for any fixed

. Suppose, for contradiction, that

but

. Since

is non-decreasing in

x, it follows that

which contradicts the initial assumption that

. Therefore, the assumption

is false, and it must hold that

. □

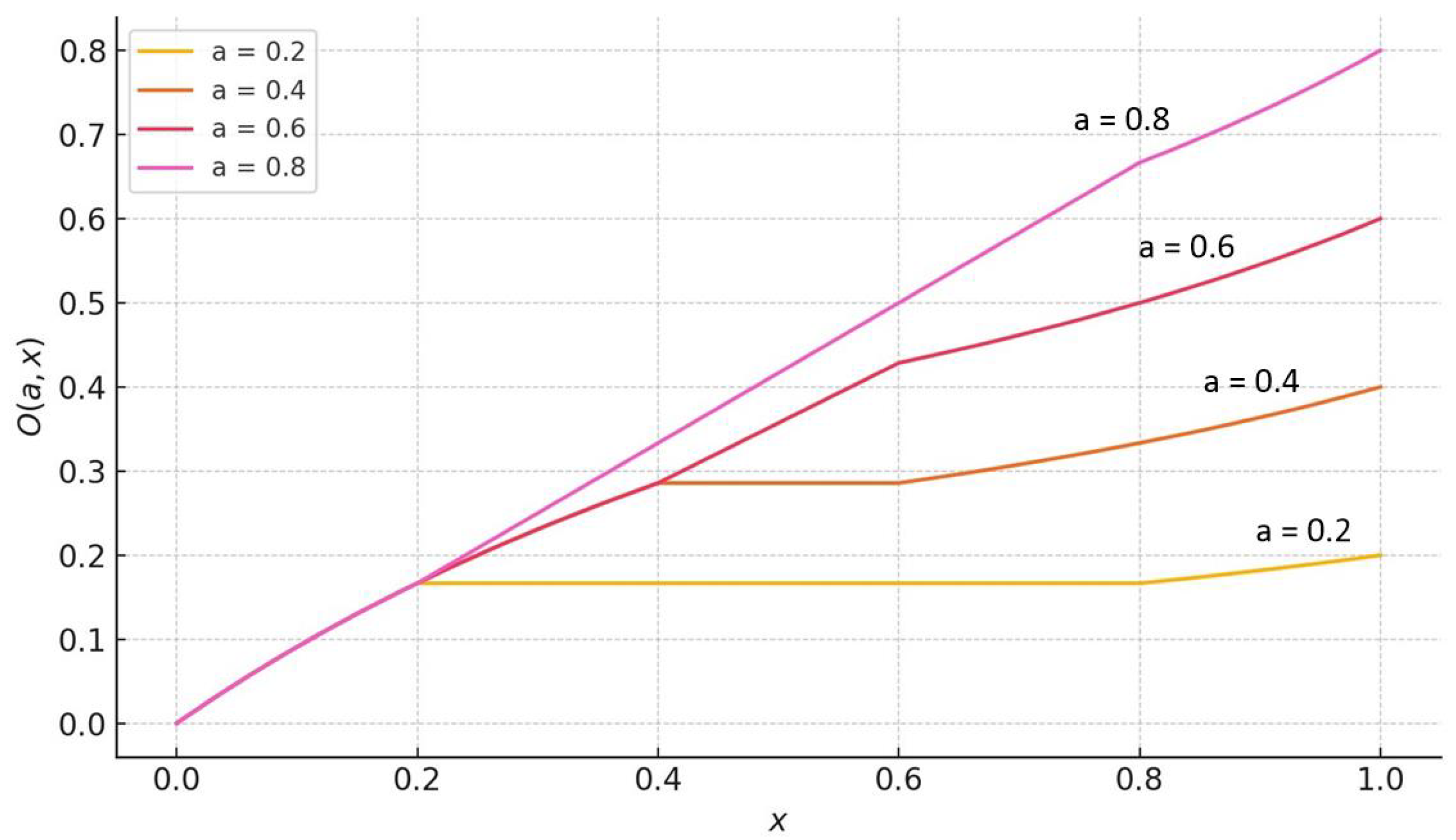

To provide an intuitive understanding of Proposition 1, we examine the graph of the min-shifted overlap function

over the domain

for fixed values of

. As illustrated in

Figure 3, the function

is strictly increasing with respect to

x for any fixed value of

a.

For example, consider the case where . If , it follows that and , which is consistent with the strict quasi-monotonicity of the function.

Proposition 2. For a given , the following properties hold:

- (i).

If and , or if and , then .

- (ii).

If and , then .

Proof. We prove each case separately based on the structure of the min-shifted overlap function .

- (i).

For or when , Theorem 1:(i) and (iii) ensure that is strictly increasing in these intervals. Similarly, when and , Theorem 2 ensures that is strictly increasing on the entire interval , which includes . Thus, in both scenarios, implies that .

- (ii).

If and , then by Theorem 1:(ii), the function is symmetric in this interval: . This symmetry implies that is not strictly increasing in , so it is possible to have even if in this range. Since we are given that , it must be that lies outside this non-strict region. Because the function resumes strict monotonicity in the interval , it follows that . Therefore, we have .

□

Moreover, based on (

6) and (

7), the value of

x in the equation

can be obtained via the inverse function method as follows:

- (1)

If

and

, then there is

- (2)

If

and

, then there is

Note that the value of the variable x does not exist if , since we have according to Lemma 1:(iv).
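The closed-form inverses in (8) and (9) are specific to the min-shifted operator. For a generic overlap function, the same quantity can be approximated numerically, because O(a, x) is continuous and non-decreasing in x. The sketch below is an assumption-laden stand-in, not the paper's formula: the helper name `smallest_x` and the product and minimum operators are hypothetical choices for illustration. It returns the smallest x in [0, 1] with O(a, x) ≥ c, or `None` when no such x exists.

```python
def overlap_product(a, x):
    """Illustrative overlap function O(a, x) = a * x (not the min-shifted one)."""
    return a * x


def overlap_min(a, x):
    """Illustrative overlap function O(a, x) = min(a, x)."""
    return min(a, x)


def smallest_x(O, a, c, tol=1e-10):
    """Approximate the smallest x in [0, 1] with O(a, x) >= c by bisection.

    Returns None when even x = 1 gives O(a, 1) < c, i.e. no solution exists.
    """
    if c <= O(a, 0.0):
        return 0.0
    if O(a, 1.0) < c:
        return None
    lo, hi = 0.0, 1.0  # invariant: O(a, lo) < c <= O(a, hi)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if O(a, mid) >= c:
            hi = mid
        else:
            lo = mid
    return hi


print(smallest_x(overlap_product, 0.8, 0.4))
print(smallest_x(overlap_min, 0.8, 0.8))
print(smallest_x(overlap_product, 0.8, 0.9))
```

When O has a flat segment in x, as the minimum does for x ≥ a, the bisection converges to the left end of that segment, which is the value a reduction step would want.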

Why study the min-shifted overlap function? The analysis above shows that adjusting the parameter a changes the shape of the graph, which illustrates the capacity of the min-shifted overlap function to model different scenarios. Recall that an overlap function is a mathematical tool used to quantify the degree of “overlap” or shared characteristics between two or more objects or concepts. Take Figure 3, for example; if

is used to model the “indifference” between

a and

x, then selecting

gives a wide range

of

x, which gives the same low level of indifference. When one increases

a to be close to

, this range shrinks. Another feature is the piecewise property. One can see that the min-shifted overlap function could offer three piecewise segments to capture the features of asymmetric indifference between two objects.

3. A Single-Variable Optimization Problem for the Min-Max Programming Problem (2)

In this section, we review how to find the uniform-optimal solution to the min-max programming problem (2). Some definitions and relevant properties are given.

Definition 2. Let be a vector in . The solution set of problems (2) and (3) is denoted by . Definition 3. Problem (2) is said to be consistent if . A solution is called optimal

for problem (2) if for all . Definition 4. Let and be two vectors. For any vector and , if and only if for all . A vector is said to be minimal if implies for any .

Proposition 3. Problem (2) is consistent, , if and only if holds for all . Proof. (⇒) Since

, and

for all

, according to Lemma 1-(iv), we have

such that

(⇐) Since

is given, and Lemma 1:(ii) states

, it follows that

with

for all

is a feasible solution to problem (

2). Specifically, we have

□

Proposition 3 shows that the necessary and sufficient condition of the solution set

of (

2) is

for all

. This condition can be used to check the consistency of the problem (

2) before finding the optimal solution.
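The consistency test can be sketched generically. Assuming the constraints have the addition-overlap form $\sum_j O(a_{ij}, x_j) \ge b_i$ with $x_j \in [0,1]$, monotonicity (O4) implies that the all-ones vector maximizes every left-hand side, so the problem is consistent exactly when that vector is feasible. The explicit inequality of Proposition 3 for the min-shifted operator is not reproduced here; the minimum operator and the names `is_consistent`, `A`, and `b` are illustrative assumptions.

```python
def overlap_min(a, x):
    """Illustrative overlap function O(a, x) = min(a, x) (an assumption)."""
    return min(a, x)


def is_consistent(O, A, b, tol=1e-12):
    """Return True when x = (1, ..., 1) satisfies sum_j O(a_ij, x_j) >= b_i for all i."""
    return all(
        sum(O(a_ij, 1.0) for a_ij in row) >= b_i - tol
        for row, b_i in zip(A, b)
    )


# Hypothetical data: two constraints, three variables.
A = [[0.9, 0.2, 0.5],
     [0.3, 0.8, 0.6]]
print(is_consistent(overlap_min, A, [1.0, 1.2]))
print(is_consistent(overlap_min, A, [1.0, 1.8]))
```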

As previously mentioned, to find a uniform-optimal solution of problem (2), Wu et al. [30] have shown that it can be transformed into a single-variable optimization problem, as follows:

where parameters

and

, for all

.

Theorem 3 ([

30], Theorem 2)

. Suppose is the optimal solution to problem (10), and let be an optimal solution to problem (2) with optimal value . Then, holds. Theorem 3 demonstrates that the min-max programming problem (

2), when constrained by a solvable general addition-overlap function, can be reformulated as a single-variable optimization problem (

10). This transformation enables the use of the bisection method to determine the optimal value

. This methodology also applies to problem (

3), which is subject to the min-shifted overlap function. Consequently, a uniform-optimal solution

for problem (

3), where all variables take the same value,

for all

, can be expressed as

Example 1. Find the optimal solution to the following min-max programming problem, which is subject to a system of addition-overlap functions as defined in problem (3). where

and the operator

is the min-shifted overlap function with

According to Theorem 3, the optimal solution of problem (11) can be found by transforming it into the following single-variable problem.

where

By applying either the method proposed in [

30] or the classical bisection method to solve problem (

12), we obtain the optimal value

. According to Theorem 3, the corresponding uniform-optimal solution

for problem (

11) is

In Example 1, the uniform-optimal solution

, where

for all

, is substituted into each constraint of problem (

11). The corresponding values of the constraint functions are computed as follows:

4. Solving the Minimal-Optimal Solution for Problem (3)

As mentioned above, the bisection method can be used to solve the min-max programming problem with a general addition-overlap function, including the problem (

3), to obtain the uniform-optimal solution

. However, no efficient method is currently available for directly computing a minimal-optimal solution to problem (

3).
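The bisection step can be sketched as follows, assuming the single-variable problem asks for the smallest y in [0, 1] with $\sum_j O(a_{ij}, y) \ge b_i$ for every i. The minimum operator stands in for the min-shifted overlap function, and the names `uniform_optimal_value`, `A`, and `b` are hypothetical; this is not the tailored algorithm of [30].

```python
def overlap_min(a, x):
    """Illustrative overlap function O(a, x) = min(a, x) (an assumption)."""
    return min(a, x)


def uniform_optimal_value(O, A, b, tol=1e-10):
    """Bisection for the smallest y in [0, 1] with sum_j O(a_ij, y) >= b_i for all i."""

    def feasible(y):
        return all(sum(O(a, y) for a in row) >= b_i for row, b_i in zip(A, b))

    if not feasible(1.0):
        raise ValueError("inconsistent problem: y = 1 is infeasible")
    if feasible(0.0):
        return 0.0
    lo, hi = 0.0, 1.0  # invariant: lo is infeasible, hi is feasible
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if feasible(mid):
            hi = mid
        else:
            lo = mid
    return hi


# Hypothetical data: two constraints, three variables.
A = [[0.9, 0.2, 0.5],
     [0.3, 0.8, 0.6]]
b = [1.0, 1.2]
y_star = uniform_optimal_value(overlap_min, A, b)
x_uniform = [y_star] * len(A[0])  # every component takes the same value
print(y_star, x_uniform)
```

Because feasibility is monotone in y for any overlap function, the feasible values of y form an interval ending at 1, which is what makes bisection applicable.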

Although the uniform-optimal solution can be obtained for problem (3), it is not necessarily a “minimal solution” in terms of constraint satisfaction. Nevertheless, it serves as an upper bound among all optimal solutions. Therefore, identifying a minimal-optimal solution to the problem (3) involves determining which components of the uniform-optimal solution can be reduced without violating feasibility or compromising optimality. In other words, we need to distinguish which components are fixed and unchangeable, and which ones can be adjusted.

4.1. The Critical Constraints

Definition 5. Let with for all be the uniform-optimal solution of problem (3). Define the following: - (i).

The critical set is defined as .

- (ii).

For , the constraint is called a critical constraint.

- (iii).

For

, define the binding set as

, and a variable

is called a binding variable if

, where

Lemma 3. Let , with for all , be the uniform-optimal solution of problem (3). Then, the critical set is non-empty, that is, . Proof. By Theorem 3, the uniform solution

is optimal for problem (

3). Suppose, for contradiction, that

. Then, for every

, we have

Since the overlap function

is continuous and non-decreasing in

x, we can slightly decrease

to a value

such that

This implies that

is still feasible for (

3) but strictly smaller than

, which contradicts the optimality of

. Therefore,

must hold. □
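In floating-point arithmetic, the critical set can be identified up to a tolerance. The sketch below assumes the critical set collects the constraints that hold with equality at the uniform-optimal value, i.e. $\sum_j O(a_{ij}, y^*) = b_i$; the minimum operator, the tolerance, and the names `critical_set`, `A`, `b`, and `y_star` are illustrative assumptions. Indices are 0-based here, whereas the paper counts constraints from 1.

```python
def overlap_min(a, x):
    """Illustrative overlap function O(a, x) = min(a, x) (an assumption)."""
    return min(a, x)


def critical_set(O, A, b, y_star, tol=1e-8):
    """Return the 0-based indices i with sum_j O(a_ij, y_star) equal to b_i up to tol."""
    return [
        i
        for i, (row, b_i) in enumerate(zip(A, b))
        if abs(sum(O(a, y_star) for a in row) - b_i) <= tol
    ]


# Hypothetical data whose uniform-optimal value under the minimum operator is 0.45.
A = [[0.9, 0.2, 0.5],
     [0.3, 0.8, 0.6]]
b = [1.0, 1.2]
print(critical_set(overlap_min, A, b, 0.45))
```

In this instance the first constraint has slack (its left-hand side is about 1.1) while the second is tight, so only the second constraint is critical.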

Lemma 3 shows that when the optimal value

of problem (

3) is determined, there must exist at least one critical constraint as follows:

Such behavior can be explicitly observed in Example 1 through the equality

. Moreover, the critical constraints

for

in (

13) are essential for characterizing the minimal-optimal solution to problem (

3). The theoretical foundation established in Theorems 1 and 2 rigorously supports this relationship. In particular:

Theorem 1:(i) shows that for , the overlap function is strictly increasing on the interval .

Theorem 1:(iii) ensures that is strictly increasing over the interval if .

Theorem 2 guarantees that is strictly increasing over the entire interval when .

Lemma 4 (Binding variables)

. Let , with for all , be the uniform-optimal solution to problem (3). Let be any other optimal solution of the same problem. Then- (i).

For and such that , it must hold that ;

- (ii).

For and such that , it also holds that ;

- (iii).

For and such that , it holds that .

Proof. - (i).

Suppose, for contradiction, that for some . Without loss of generality, assume for all , , and for some .

Since

and

, by Theorem 1-(i), the overlap function

is strictly increasing over the interval

. Therefore,

For the critical constraint corresponding to

, we have

which contradicts the feasibility of

, as the

i-th critical constraint is violated.

Alternatively, suppose

for all

, with

for all

,

, and

. Then the objective value of

is

which contradicts the optimality of

.

Hence, in either case, for , we conclude that for all .

- (ii)

The proof follows a similar argument. Since and , the overlap function is strictly increasing on the interval by Theorem 1-(iii). For the critical constraint , reducing would decrease , thereby reducing below , violating feasibility. Increasing would lead to , which contradicts the optimality of . Thus, it must be that for .

- (iii)

For any . Theorem 2 ensures that is strictly increasing over the entire interval . Therefore, for the critical constraint , reducing would decrease , and consequently , violating feasibility. Increasing would result in , again contradicting the optimality of . Hence, it must be that for .

□

The results established in Lemma 4 reveal an essential structural property of the minimal-optimal solution to problem (

3): Certain variables, especially those identified as binding variables

, for

with respect to the critical constraints

, where

, must remain fixed at the uniform-optimal value

in any optimal solution.

Although the uniform-optimal solution

is usually not minimal-optimal, it serves as an upper bound for each component of any minimal-optimal solution. In particular, Lemma 4 confirms that the binding variables

, for all

and

, are fixed and cannot be decreased without violating feasibility or optimality. In contrast, the remaining variables

, where

for

, may be further reduced while maintaining feasibility. This observation provides a solid foundation for constructing a minimal-optimal solution of problem (

3).

Example 2. Apply the optimal value obtained in Example 1 to identify the critical set, critical constraint, and binding variables, according to Lemma 4.

From Example 1, we have the optimal value

and the uniform-optimal solution

, where

for all

. Substituting

into each constraint of problem (

11) yields the following values:

By Definition 5, the critical set is

Analyzing the overlap function components of the critical constraint:

, but for ,

and ,

.

Combining the three subsets, the binding set is

Therefore, the binding variables must remain fixed and cannot be reduced, while the remaining variables , and may be adjusted in the process of identifying a minimal-optimal solution.

4.2. Alternative Optimal Solutions of Problem (3)

Lemma 4 identifies the binding and adjustable variables that determine the structure of a minimal-optimal solution to problem (3). The remaining challenge is to reduce the values of the adjustable variables while preserving optimality. Fortunately, since the uniform-optimal solution provides an upper bound for all optimal solutions, reducing the value of any one adjustable variable may still yield an alternative optimal solution.

Let

be the uniform-optimal solution to problem (

3), where

for all

. Define

and

with

By the definition in (

14), the vector

is equivalent to the uniform-optimal solution of problem (

3). In the vector

, all components are equal to

except the

j-th component, which is

. The following theorem demonstrates that

is also an optimal solution.

Theorem 4. Let the vectors and be denoted as in (14). Then - (i).

holds;

- (ii).

is an optimal solution of problem (3).

Proof. - (i).

From (

14) and (

15), we know that

for all

. Consider two cases:

Combining both cases, we conclude that holds.

- (ii).

For the vector

, from (

14) and (

15), we have

To show that

is an optimal solution to problem (

3), we check the feasibility and optimality:

Feasibility:

Case 1: If

, then it follows that

, i.e.,

, for all

and

. Therefore,

Case 2: If

for some

, by the definition of

and

, we have

In both cases,

for all

; therefore,

is feasible to problem (

3).

Optimality: From part (i), we know that

, so

Since

is feasible and achieves the same objective value, it is an optimal solution to problem (

3).

□

Theorem 4 provides valuable insight: for each

, the vector

is an optimal solution to problem (

3). These alternative optimal solutions offer flexibility and can be utilized to identify a minimal-optimal solution. Furthermore, by using different combinations of such optimal solutions, it is possible to yield different minimal-optimal solutions to problem (

3).

Theorem 5. Let the vectors and be denoted as in (14). Define the index setIf holds for some , then for , it follows that Proof. Since

holds for some

, it follows that

. By the definition of

, we have

Moreover, from Theorem 4, we know that

, i.e., it is a feasible solution to problem (

3). Therefore, for all

, it holds that

which implies

Now, using (

16), for

, we have

Therefore,

□

4.3. Iterative Approach for Finding the Minimal-Optimal Solution

Based on the optimal value

and the uniform-optimal solution

, where

for all

, together with the properties established in the previous sections, we propose the following iterative approach to find a minimal-optimal solution to problem (

3).

To prove that the vector

obtained through this iterative procedure is indeed a minimal-optimal solution to problem (

3), we first establish the following properties.

Theorem 6. Let the vector , where , be obtained by the iterative approach described above. Then, the following properties hold:

- (i).

For every , the vector is a feasible solution to problem (3). - (ii).

Each component satisfies for all .

- (iii).

For every , the vector is an optimal solution to problem (3). - (iv).

The inequality holds for all and .

Proof. - (i).

According to Theorem 4 and the definition of

we know that the vector

is an optimal solution of problem (

3). Therefore, for

, we have

For

, consider the vector

and observe that

We consider two cases to verify the feasibility of .

Case 1: If

for all

, i.e.,

, then

and

. Substituting into (

19), we get

Case 2: If

for some

, then by construction,

and

, it implies that

Combining both cases, we conclude that

is feasible to problem (

3), i.e.,

The argument extends similarly to

, and in particular,

For

,

- (ii).

Since both

and

for all

, then from part (i), we know that

Moreover, according to (

17) and (

18), we have

Thus, holds for all .

- (iii).

By (i) and (ii) above, for all , there are and .

For

, we have

Thus,

for all

.

Now, assume

for all

. Then

which contradicts the fact that

is the optimal value of problem (

3). Therefore,

, and all

are optimal.

- (iv).

From (ii), we have

; hence,

It follows that

□

Theorem 6 shows that, for all

, the vector

produced at each step of the iterative procedure remains an optimal solution to problem (

3). In particular, this implies that the final vector

, computed at the last iteration, is also optimal. Furthermore, by Definition 4 and the property of Theorem 6:(ii), that

for all

, the sequence of iterates satisfies the following component-wise inequality:

These properties are crucial in establishing that the final output

is a minimal-optimal solution of problem (

3).

Theorem 7. Let the vector , for , be obtained from the iterative approach, and denote the critical set. Define the index setIf for some and , then - (i).

and for all ;

- (ii).

, for all .

Proof. - (i).

By Lemma 3, . Since for some , Theorem 6 provides that .

Assume by contradiction that there exists

such that

. Then,

which implies

, contradicting Theorem 6:(iv), which states that

for all

. Hence, we have

The same argument applies inductively to show

- (ii).

Since

for some

, it follows that

. By the definition of

, we have

From Theorem 6:(i), we know that

. It follows that

which implies

Now, using (

20), for

, we have

so that

□

Theorem 7 identifies that for every , the function value , and the corresponding component satisfies . This confirms that such constraints are tightly satisfied by and, importantly, that any further decrease in the component would cause violation of feasibility for some critical constraints. Hence, Theorem 7 provides the foundational structure and contradiction mechanism required to prove the minimal-optimality of .

Theorem 8. The vector obtained from the iterative approach is a minimal-optimal solution to problem (3). Proof. By Theorem 6:(iii),

is an optimal solution to problem (

3), i.e.,

Suppose, for contradiction, that there exists another minimal-optimal solution such that , where and .

From Theorem 7, we know

Hence,

and

Furthermore, from the definition of

in (

17), we have

Since

and

is strictly increasing in

x (by Theorems 1 and 2), we have

which implies

This contradicts the assumption that

. Therefore, such

cannot exist, and

is indeed a minimal-optimal solution to problem (

3). □
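Minimality in the component-wise order can be spot-checked numerically. Assuming constraints of the form $\sum_j O(a_{ij}, x_j) \ge b_i$ and a non-decreasing O, a feasible vector fails to be minimal only if some single component can be lowered while staying feasible, so it suffices to test one coordinate at a time. The sketch below does this with a small step `eps`; the minimum operator, the step size, and the names `is_minimal_feasible`, `A`, and `b` are illustrative assumptions, and reductions smaller than `eps` go undetected.

```python
def overlap_min(a, x):
    """Illustrative overlap function O(a, x) = min(a, x) (an assumption)."""
    return min(a, x)


def is_minimal_feasible(O, A, b, x, eps=1e-6, tol=1e-9):
    """Check that x is feasible and that lowering any single component by eps is not."""

    def feasible(v):
        return all(
            sum(O(a, v_j) for a, v_j in zip(row, v)) >= b_i - tol
            for row, b_i in zip(A, b)
        )

    if not feasible(x):
        return False
    for k in range(len(x)):
        if x[k] <= 0.0:
            continue  # a zero component cannot be lowered further
        trial = list(x)
        trial[k] = max(0.0, x[k] - eps)
        if feasible(trial):
            return False  # component k could still be reduced
    return True


# Hypothetical data: two constraints, three variables.
A = [[0.9, 0.2, 0.5],
     [0.3, 0.8, 0.6]]
b = [1.0, 1.2]
print(is_minimal_feasible(overlap_min, A, b, [0.45, 0.45, 0.45]))
print(is_minimal_feasible(overlap_min, A, b, [0.35, 0.45, 0.45]))
```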

5. Solution Procedure and a Numerical Example

Based on Theorem 8, starting from the uniform-optimal solution

with

for all

, the proposed iterative approach can be used to obtain a minimal-optimal solution

to problem (

3). Furthermore, according to Lemma 4, for the initial optimal solution

, the binding variables

, for all

and

, must remain fixed and cannot be reduced. In contrast, the remaining variables

, for

and

, may potentially be reduced.

By incorporating these properties, we propose a systematic solution procedure for identifying a minimal-optimal solution to the min-max programming problem involving addition-overlap function composition.

- Step 1.

Check the consistency of problem (

3) by verifying whether the inequality

holds for all

, according to Proposition 3. Terminate the procedure if the problem is inconsistent.

- Step 2.

Transform problem (

3) into the single-variable optimization problem (

10), and solve it using the method proposed in [

30] or the bisection method to obtain the optimal value

. The corresponding uniform-optimal solution is given by

.

- Step 3.

Determine the critical set and, for each , compute the corresponding binding set according to Definition 5.

- Step 4.

Iteratively construct the sequence of vectors for , where for .

- Step 5.

Return the final vector

, which is a minimal-optimal solution to problem (

3).
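Steps 4 and 5 above can be sketched as a single pass over the variables. The sketch assumes constraints of the form $\sum_j O(a_{ij}, x_j) \ge b_i$ and, for each variable in turn, lowers it to the smallest value that keeps every constraint satisfied while the other components stay fixed. It replaces the paper's closed-form inverse with a numerical bisection and does not use the binding sets: for a binding variable the computed bound simply equals its current value. The minimum operator and the names `reduce_to_minimal`, `A`, `b`, and `x_start` are illustrative assumptions.

```python
def overlap_min(a, x):
    """Illustrative overlap function O(a, x) = min(a, x) (an assumption)."""
    return min(a, x)


def reduce_to_minimal(O, A, b, x_start, tol=1e-10):
    """One coordinate-wise pass that lowers each component as far as feasibility allows."""
    x = list(x_start)
    m, n = len(A), len(x)
    for k in range(n):
        need = 0.0  # smallest admissible value for x[k] found so far
        for i in range(m):
            rest = sum(O(A[i][j], x[j]) for j in range(n) if j != k)
            target = b[i] - rest
            if target <= 0:
                continue  # constraint i holds even with x[k] = 0
            lo, hi = 0.0, x[k]  # x is feasible, so hi meets the target
            while hi - lo > tol:
                mid = (lo + hi) / 2
                if O(A[i][k], mid) >= target:
                    hi = mid
                else:
                    lo = mid
            need = max(need, hi)
        x[k] = min(x[k], need)
    return x


# Hypothetical data; 0.45 is the uniform-optimal value for the minimum operator.
A = [[0.9, 0.2, 0.5],
     [0.3, 0.8, 0.6]]
b = [1.0, 1.2]
x_min = reduce_to_minimal(overlap_min, A, b, [0.45, 0.45, 0.45])
print(x_min)
```

On this instance only the first component has room to move; the objective value max(x) is unchanged. Processing the variables in a different order can lead to a different minimal vector.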

Computational Complexity and Convergence: Problem (3) involves m constraints, each containing n variables. The overall computational complexity and convergence behavior of the proposed solution procedure are primarily determined by Steps 2 and 4.

In Step 2, the optimal value

is obtained by solving a single-variable optimization problem using the method proposed in [30], which requires

computational complexity. The convergence of this method has been formally established in [30].

In Step 4, each of the n variables is processed once. For each variable, evaluating all constraints involves computing up to operations. Thus, the total computational complexity for Step 4 is .

Overall, the total computational complexity of the entire solution procedure is .

Moreover, since each variable is examined exactly once, and each update either retains or reduces its value while preserving feasibility and optimality, the algorithm completes in exactly n iterations. Finally, by Theorems 6 and 8, the final solution is guaranteed to be both optimal and minimal in the sense of component-wise ordering among feasible solutions. Therefore, the proposed algorithm is guaranteed to converge to a minimal-optimal solution in a finite number of steps.

The proposed procedure provides a systematic method for constructing a minimal-optimal solution to problem (3). To demonstrate its effectiveness and practical applicability, we now apply it step-by-step to the specific instance described in Example 1.

Example 3. Apply the proposed solution procedure to determine a minimal-optimal solution to Example 1.

Based on the data given in Example 1, we carry out the solution procedure step-by-step as follows:

Since the condition

holds for all

, the problem (11) in Example 1 is consistent.

Step 4. Iteratively construct the sequence of vectors for , where for .

For , since , compute

where

with

Calculations then yield

Using the inverse function method specified in (8) and (9), we compute

Obtain

and

For , since , set

For , since , compute

Calculations then yield

Obtain

and

For , since , compute

Calculations then yield

Obtain

and

For and , since both , set

and obtain the final vector

which is a minimal-optimal solution to Example 1.

It is worth noting that the proposed solution procedure can be applied to obtain other minimal-optimal solutions. Theoretically, the six variables in Example 3 can be permuted in 6! = 720 different sequences. However, since the critical set

and the corresponding binding set

, the values of variables

must remain unchanged, in accordance with Lemma 4. As a result, only the remaining three variables

, and

can be adjusted, yielding 3! = 6 possible permutations. Besides the original sequence

used in Example 3, the following five sequences can be considered to generate other minimal-optimal solutions:

Accordingly, only one distinct minimal-optimal solution

to Example 3 can be obtained using our solution procedure based on these sequences, as follows:

6. Conclusions

In this study, we investigated a min-max optimization problem constrained by a system of addition-overlap functions. Classical methods such as the bisection approach are effective in finding a uniform-optimal solution for such a problem, where all decision variables share the same optimal value. However, these methods are insufficient for identifying minimal-optimal solutions, which are often more informative in practical applications.

To address this gap, we developed an iterative procedure that gradually reduces adjustable variables based on overlap function properties while maintaining feasibility and optimality. This approach relies on a careful identification of critical constraints and binding variables, which must remain fixed, allowing non-binding components to be selectively minimized. We formally proved that the resulting solution satisfies the condition of minimal-optimality. Through illustrative examples, we further demonstrated that the proposed method can yield multiple distinct minimal-optimal solutions, depending on the order in which variables are processed.

Our results contribute both theoretically and algorithmically: the framework enhances the understanding of solution structure in addition-overlap constrained problems and offers a practical tool for constructing minimal-optimal solutions. Future work may pursue sufficient conditions, numerical subroutines, and the necessary error analyses for broader classes of overlap functions, or explore applications of the method in practical optimization scenarios.