1. Preliminaries and Introduction

Throughout this paper, $F(T)$ denotes the set of fixed points of an operator $T$ on the underlying space. We first recall the basic definitions and known results.

Let $(X,d)$ be a metric space. A geodesic path joining $x\in X$ to $y\in X$ is a mapping $c:[0,l]\to X$ such that $c(0)=x$, $c(l)=y$, and $d(c(t),c(t'))=|t-t'|$ for all $t,t'\in[0,l]$, where $l=d(x,y)$. The image of $c$, denoted by $[x,y]$, is called a geodesic segment joining $x$ and $y$. The space $X$ is called a geodesic space if every two points of $X$ are joined by a geodesic, and $X$ is said to be uniquely geodesic if there is exactly one geodesic joining $x$ and $y$ for each $x,y\in X$. A subset $Y\subseteq X$ is said to be convex if $Y$ contains every geodesic segment joining any two of its points.

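A geodesic triangle $\Delta(x_1,x_2,x_3)$ consists of three points $x_1,x_2,x_3$ in $X$, called the vertices of $\Delta$, and a geodesic segment between each pair of vertices, called the edges of $\Delta$. A comparison triangle for a geodesic triangle $\Delta(x_1,x_2,x_3)$ in $X$ is a triangle $\bar\Delta=\Delta(\bar x_1,\bar x_2,\bar x_3)$ in the Euclidean plane $\mathbb{E}^2$ such that $d_{\mathbb{E}^2}(\bar x_i,\bar x_j)=d(x_i,x_j)$ for $i,j\in\{1,2,3\}$. A geodesic space is called a CAT(0) space if all geodesic triangles satisfy the following comparison axiom.

Let $\bar\Delta$ be a comparison triangle for a geodesic triangle $\Delta$ in $X$; then $\Delta$ is said to satisfy the CAT(0) comparison axiom if, for all $x,y\in\Delta$ and all comparison points $\bar x,\bar y\in\bar\Delta$, we have

$$d(x,y)\le d_{\mathbb{E}^2}(\bar x,\bar y).$$

The above inequality is known as the CAT(0) inequality.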

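If $x,y_1,y_2$ are points in a geodesic space and $y_0$ is the midpoint of the segment $[y_1,y_2]$, that is, $d(y_0,y_1)=d(y_0,y_2)=\frac12 d(y_1,y_2)$, then the inequality

$$d^2(x,y_0)\le\frac12 d^2(x,y_1)+\frac12 d^2(x,y_2)-\frac14 d^2(y_1,y_2)$$

is known as the midpoint inequality. A geodesic space is a CAT(0) space if and only if it satisfies the midpoint inequality [1].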

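Let $\{x_n\}$ be a bounded sequence in a CAT(0) space $X$. For $x\in X$, we define

$$r(x,\{x_n\})=\limsup_{n\to\infty}d(x,x_n).$$

The asymptotic radius of the sequence $\{x_n\}$, denoted by $r(\{x_n\})$, is given by

$$r(\{x_n\})=\inf\{r(x,\{x_n\}):x\in X\}.$$

The asymptotic center of $\{x_n\}$, denoted by $A(\{x_n\})$, is given by

$$A(\{x_n\})=\{x\in X:r(x,\{x_n\})=r(\{x_n\})\}.$$

In CAT(0) spaces, the asymptotic center is unique, which enables us to study Δ–convergence, the analogue of the notion of weak convergence in Banach spaces.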
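As a rough numerical illustration of the asymptotic radius and center, consider the real line (a CAT(0) space) and the bounded sequence $x_n=(-1)^n(1+1/n)$, for which $\limsup_n|x-x_n|=\max(|x-1|,|x+1|)$, so the asymptotic radius is 1 and the asymptotic center is $\{0\}$. The sketch below is only an approximation under stated assumptions: the limsup is replaced by a supremum over a late tail of the sequence, the infimum is taken over a finite grid, and all names and numerical choices are illustrative.

```python
def x(n):
    """Bounded test sequence x_n = (-1)^n * (1 + 1/n), n >= 1 (illustrative)."""
    return (-1) ** n * (1.0 + 1.0 / n)

def approx_r(point, start=10_000, length=200):
    """Approximate limsup_n d(point, x_n) by a supremum over a late tail."""
    return max(abs(point - x(n)) for n in range(start, start + length))

# Candidate centers on a grid in [-2, 2]; the infimum is taken over this grid only.
grid = [i / 100.0 for i in range(-200, 201)]
radii = [approx_r(c) for c in grid]

asym_radius = min(radii)
asym_center = grid[radii.index(asym_radius)]
print(asym_radius, asym_center)
```

The computed radius is slightly above 1 because a finite tail still sees the $1/n$ perturbation; it approaches 1 as `start` grows.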

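Definition 1 ([2]). A sequence $\{x_n\}$ in a CAT(0) space $X$ is called Δ–convergent to $x\in X$ if, for every subsequence $\{u_n\}$ of $\{x_n\}$, the point $x$ is the unique asymptotic center of $\{u_n\}$. In this case, we write $\Delta\text{-}\lim_{n}x_n=x$ and call $x$ the Δ–limit of $\{x_n\}$.

It is known that every CAT(0) space has Opial’s property: if $\{x_n\}$ is a sequence in a CAT(0) space $X$ that is Δ–convergent to $x$, then for any $y\in X$ with $y\neq x$, we have

$$\limsup_{n\to\infty}d(x_n,x)<\limsup_{n\to\infty}d(x_n,y).$$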

We now collect some elementary facts about CAT(0) spaces, which are needed in the sequel.

Lemma 1 ([2]). Let $X$ be a CAT(0) space.

(i) Every bounded sequence in $X$ possesses a Δ–convergent subsequence.

(ii) If $\{x_n\}$ is a bounded sequence in a closed convex subset $K$ of $X$, then the asymptotic center of $\{x_n\}$ lies in $K$.

(iii) If $\{x_n\}$ is a bounded sequence with $A(\{x_n\})=\{x\}$, $\{u_n\}$ is a subsequence of $\{x_n\}$ with $A(\{u_n\})=\{u\}$, and $\{d(x_n,u)\}$ converges, then $x=u$.

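Lemma 2 ([2]). Let $X$ be a CAT(0) space.

(i) For any $x,y\in X$ and $t\in[0,1]$, there is a unique point $z\in[x,y]$ such that

$$d(x,z)=t\,d(x,y)\quad\text{and}\quad d(y,z)=(1-t)\,d(x,y).$$

The unique point $z$ given above is denoted by $(1-t)x\oplus ty$.

(ii) For any $x,y,z\in X$ and $t\in[0,1]$, we have

$$d((1-t)x\oplus ty,z)\le(1-t)\,d(x,z)+t\,d(y,z).$$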

In the framework of CAT(0) spaces, the symbol ⊕ is commonly used to denote the geodesic convex combination of two points. In other words, this symbol serves as the analogue of linear interpolation in Euclidean spaces, but adapted to the curved geometry of CAT(0) spaces, where geodesics replace straight lines.
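In the Euclidean plane, which is a CAT(0) space, the geodesic convex combination reduces to ordinary linear interpolation. The following sketch evaluates $(1-t)x\oplus ty$ there and checks, at one sample configuration, the distance identities and the convexity inequality stated in Lemma 2. The function names and the sample points are illustrative assumptions.

```python
import math

def dist(p, q):
    """Euclidean distance between two points of the plane."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def oplus(x, y, t):
    """Point (1 - t) x ⊕ t y on the segment [x, y] of the Euclidean plane."""
    return ((1 - t) * x[0] + t * y[0], (1 - t) * x[1] + t * y[1])

x, y, z, t = (0.0, 0.0), (4.0, 3.0), (1.0, 5.0), 0.3
w = oplus(x, y, t)

# First part of Lemma 2: d(x, w) = t d(x, y) and d(y, w) = (1 - t) d(x, y).
print(dist(x, w), t * dist(x, y))
print(dist(y, w), (1 - t) * dist(x, y))

# Second part of Lemma 2: d(w, z) <= (1 - t) d(x, z) + t d(y, z).
print(dist(w, z) <= (1 - t) * dist(x, z) + t * dist(y, z))
```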

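Lemma 3 ([3]). Let $\{t_n\}$ be a sequence in $[a,b]$, where $0<a\le b<1$. If the sequences $\{x_n\}$ and $\{y_n\}$ in the CAT(0) space $X$ satisfy

$$\limsup_{n\to\infty}d(x_n,x)\le r,\qquad\limsup_{n\to\infty}d(y_n,x)\le r,\qquad\text{and}\qquad\lim_{n\to\infty}d((1-t_n)x_n\oplus t_ny_n,x)=r$$

for some $x\in X$ and $r\ge0$, then we have

$$\lim_{n\to\infty}d(x_n,y_n)=0.$$

Definition 2 ([4]). A self-mapping $T$ defined on a nonempty subset $K$ of a CAT(0) space $X$ is said to satisfy condition (I) if there is a nondecreasing function $g:[0,\infty)\to[0,\infty)$ with $g(0)=0$ and $g(r)>0$ for all $r>0$, such that for any $x\in K$, we have

$$d(x,Tx)\ge g(d(x,F(T))),$$

where $d(x,F(T))=\inf\{d(x,p):p\in F(T)\}$.

Recall that a mapping $T$ defined on a subset $K$ of a CAT(0) space is called a Banach contraction if there exists $k\in[0,1)$ such that

$$d(Tx,Ty)\le k\,d(x,y)$$

for all $x,y\in K$.

If we set $k=1$ in the above definition, then the mapping $T$ is said to be nonexpansive. In 2008, Suzuki [5] introduced a more general class of mappings by relaxing the nonexpansive condition, and established both existence and convergence results for such mappings in the framework of Banach spaces.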

Definition 3. A self-mapping $T$ defined on a nonempty subset $K$ of a CAT(0) space $X$ is said to satisfy condition (C) if, for any $x,y\in K$, we have

$$\tfrac12\,d(x,Tx)\le d(x,y)\quad\Longrightarrow\quad d(Tx,Ty)\le d(x,y).$$

A mapping satisfying condition (C) is also called a Suzuki generalized nonexpansive mapping. In [5], it was shown that a mapping satisfying condition (C) has the following property:

$$d(x,Ty)\le3\,d(x,Tx)+d(x,y)$$

for all $x,y\in K$.

In the case of a nonexpansive mapping $T$, the triangle inequality immediately gives

$$d(x,Ty)\le d(x,Tx)+d(x,y)$$

for all $x,y\in K$.

Motivated by these facts, Garcia–Falset et al. [6] introduced a general class of mappings, called the class of mappings with condition (E), and obtained some fixed point results for it.

Definition 4. A mapping $T$ defined on a nonempty subset $K$ of a CAT(0) space $X$ is said to satisfy the Garcia–Falset property if there is $\mu\ge1$ such that

$$d(x,Ty)\le\mu\,d(x,Tx)+d(x,y)$$

for all $x,y\in K$. Thus, every nonexpansive mapping satisfies the Garcia–Falset property for $\mu=1$, and a mapping with condition (C) also satisfies the Garcia–Falset property for $\mu=3$.
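The relation between these classes can be probed numerically. The sketch below does not use a mapping from this paper; it takes the well-known example $T:[0,3]\to[0,3]$ with $Tx=0$ for $x\neq3$ and $T3=1$, which satisfies Suzuki's condition (C) without being nonexpansive. On a finite grid it tests nonexpansiveness, condition (C), and the Garcia–Falset inequality $d(x,Ty)\le\mu\,d(x,Tx)+d(x,y)$ with $\mu=3$. A grid check can refute a property but cannot prove it, and all names and the grid size are illustrative assumptions.

```python
from itertools import product

def T(x):
    """Stand-in example on [0, 3] (not the paper's mapping)."""
    return 1.0 if x == 3.0 else 0.0

def d(a, b):
    return abs(a - b)

grid = [3.0 * i / 60 for i in range(61)]   # includes the endpoint 3.0 exactly
pairs = list(product(grid, repeat=2))
EPS = 1e-12                                 # slack for floating-point comparisons

# Nonexpansiveness: d(Tx, Ty) <= d(x, y) for all pairs.
nonexpansive = all(d(T(x), T(y)) <= d(x, y) + EPS for x, y in pairs)

# Condition (C): (1/2) d(x, Tx) <= d(x, y) implies d(Tx, Ty) <= d(x, y).
condition_c = all(
    d(T(x), T(y)) <= d(x, y) + EPS
    for x, y in pairs
    if 0.5 * d(x, T(x)) <= d(x, y)
)

# Garcia-Falset inequality with mu = 3: d(x, Ty) <= mu d(x, Tx) + d(x, y).
MU = 3.0
garcia_falset = all(
    d(x, T(y)) <= MU * d(x, T(x)) + d(x, y) + EPS for x, y in pairs
)

print(nonexpansive, condition_c, garcia_falset)
```

On this grid the first check fails (for instance at $x=3$, $y=2.5$), while the other two pass, in line with the implications stated above.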

Lemma 4. Let $T$ be a mapping satisfying the Garcia–Falset property defined on a nonempty subset $K$ of a CAT(0) space $X$. Then $F(T)$ is closed.

Lemma 5. Let $T$ be a mapping satisfying the Garcia–Falset property defined on a nonempty subset $K$ of a CAT(0) space $X$. If $F(T)\neq\emptyset$, then $T$ is quasi-nonexpansive. More precisely,

$$d(Tx,p)\le d(x,p)$$

for all $x\in K$ and $p\in F(T)$.

The theory of nonexpansive mappings forms a crucial part of nonlinear analysis, with a wide range of applications. It started in 1965 when three mathematicians, Browder [7], Göhde [8], and Kirk [9], independently established important fixed point results for the class of nonexpansive mappings. Later, many researchers developed new classes of mappings to extend the existing results on nonexpansive mappings. In 1973, Kannan [10] introduced a class of mappings called Kannan mappings, which need not be continuous and whose defining condition is independent of that of Banach contractions. In 1980, combining the notions of nonexpansive and Kannan mappings, Gregus [11] introduced the Reich nonexpansive mappings. Later, $\alpha$-nonexpansive mappings, an extended class of generalized nonexpansive mappings, were introduced by Aoyama et al. [12], leading to several interesting fixed point results in this direction. In 2017, Pant and Shukla [13] considered a class of nonexpansive-type mappings known as generalized $\alpha$-nonexpansive mappings. Recently, Pandey et al. [14] presented a significant extension of nonexpansive mappings, known as generalized $\alpha$-Reich–Suzuki nonexpansive mappings, and showed that this class satisfies the Garcia–Falset property for a suitable $\mu$. It is shown in [14] that this class of mappings is more general than Suzuki generalized nonexpansive mappings, and all the mappings discussed above fall into the class of generalized $\alpha$-Reich–Suzuki nonexpansive mappings. Consequently, all these mappings belong to the class of mappings satisfying the Garcia–Falset property.

Similarly, Karapinar [15] introduced several classes of operators satisfying certain contractive conditions that generalize existing mappings with condition (C), namely Reich–Suzuki–(C) (denoted RSC), Reich–Chatterjee–Suzuki–(C) (denoted RCSC), and Hardy–Rogers–Suzuki–(C) (denoted HRSC), each of which also satisfies the Garcia–Falset property for a suitable value of $\mu$. Moreover, the class of operators defined by Bejenaru and Postolache [16] satisfies the Garcia–Falset property for a suitable $\mu$. In this way, many well-known nonexpansive-type mappings ultimately satisfy the Garcia–Falset property.

In nonlinear analysis, approximating the solutions of nonlinear operator equations and inclusions poses a significant research challenge, particularly when solving fixed point equations for which an analytic solution cannot be obtained. The Banach contraction principle not only guarantees the existence and uniqueness of solutions for fixed point equations involving contraction operators, but also provides one of the simplest constructive methods, known as the Picard iteration process, to approximate the solution [17]. Given an arbitrary initial guess $x_0$ in a closed subset $K$ of a complete metric space $X$, a sequence $\{x_n\}$ can be constructed as follows:

$$x_{n+1}=Tx_n$$

for all $n\ge0$.

If the contraction operator $T$ in the above process is replaced with a nonexpansive mapping, the resulting sequence may fail to converge to a fixed point of $T$, even when a fixed point is known to exist.

To address this, various iterative procedures have been developed to approximate the fixed points of nonexpansive mappings and their variants. The foundational algorithms for nonexpansive mappings include the one-step Mann iteration [18], given as follows. Let $x_0$ be an arbitrary point in a closed and convex subset $K$ of a normed space $X$. Define a sequence $\{x_n\}$ in $X$ by

$$x_{n+1}=(1-\alpha_n)x_n+\alpha_nTx_n$$

for all $n\ge0$, where $\{\alpha_n\}$ is a real sequence in $(0,1)$. The Mann iterative algorithm has certain limitations: it may fail when the nonexpansive mapping is replaced by a pseudocontractive Lipschitzian mapping, highlighting the need for a modified iterative approach. To address this limitation, Ishikawa [19] defined a two-step iteration scheme as follows. Let $x_0$ be an arbitrary point in a closed and convex subset $K$ of a normed space $X$. Define a sequence $\{x_n\}$ in $X$ by

$$\begin{aligned}y_n&=(1-\beta_n)x_n+\beta_nTx_n,\\x_{n+1}&=(1-\alpha_n)x_n+\alpha_nTy_n\end{aligned}$$

for all $n\ge0$, where $\{\alpha_n\}$ and $\{\beta_n\}$ are real sequences in $(0,1)$.
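To see why averaged schemes are needed, consider the nonexpansive map $Tx=1-x$ on $[0,1]$, whose unique fixed point is $1/2$. This example is chosen here for illustration and does not come from the paper; the constant parameters $\alpha_n=\beta_n=0.3$ and the function names are likewise assumptions. Picard iterates started at $x_0=0.9$ oscillate between $0.9$ and $0.1$, whereas the Mann and Ishikawa iterates approach $1/2$.

```python
def T(x):
    """Nonexpansive map on [0, 1] with unique fixed point 1/2 (illustrative)."""
    return 1.0 - x

def picard(T, x0, n_steps):
    """Picard iteration: x_{n+1} = T x_n."""
    x = x0
    for _ in range(n_steps):
        x = T(x)
    return x

def mann(T, x0, n_steps, alpha=0.3):
    """Mann iteration with a constant parameter alpha_n = alpha."""
    x = x0
    for _ in range(n_steps):
        x = (1 - alpha) * x + alpha * T(x)
    return x

def ishikawa(T, x0, n_steps, alpha=0.3, beta=0.3):
    """Ishikawa iteration with constant parameters alpha_n, beta_n."""
    x = x0
    for _ in range(n_steps):
        y = (1 - beta) * x + beta * T(x)
        x = (1 - alpha) * x + alpha * T(y)
    return x

x0 = 0.9
print(picard(T, x0, 50))    # after an even number of steps: back near x0
print(mann(T, x0, 50))      # close to the fixed point 1/2
print(ishikawa(T, x0, 50))  # close to the fixed point 1/2
```

For this particular map the Mann step is the affine contraction $x\mapsto0.4x+0.3$ and the Ishikawa step is $x\mapsto0.58x+0.21$, both with fixed point $1/2$, which explains the observed convergence.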

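Noor [20] introduced a three-step iterative process to improve the convergence behavior of the Ishikawa iterative scheme. Let $x_0$ be an arbitrary point in a closed and convex subset $K$ of a normed space $X$. Define a three-step sequence $\{x_n\}$ in $X$ by

$$\begin{aligned}z_n&=(1-\gamma_n)x_n+\gamma_nTx_n,\\y_n&=(1-\beta_n)x_n+\beta_nTz_n,\\x_{n+1}&=(1-\alpha_n)x_n+\alpha_nTy_n\end{aligned}$$

for all $n\ge0$, where $\{\alpha_n\}$, $\{\beta_n\}$, and $\{\gamma_n\}$ are real sequences in $(0,1)$.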

The aim of improving the convergence rate of fixed point iterative algorithms opened new avenues for developing iterative schemes, even in cases where existing algorithms are already applicable to the given class of operators. In this direction, Abbas and Nazir [21] defined the iteration scheme

$$\begin{aligned}z_n&=(1-\gamma_n)x_n+\gamma_nTx_n,\\y_n&=(1-\beta_n)Tx_n+\beta_nTz_n,\\x_{n+1}&=(1-\alpha_n)Ty_n+\alpha_nTz_n\end{aligned}$$

for all $n\ge0$, where $\{\alpha_n\}$, $\{\beta_n\}$, and $\{\gamma_n\}$ are real sequences in $(0,1)$ and $x_0$ is an arbitrary point in a convex subset $K$ of a normed space $X$.

Sintunavarat and Pitea [

22] introduced the

iteration to approximate the fixed points of Berinde-type operators as follows:

for all

, where

,

, and

are real sequences in

and

is an arbitrary point in a convex subset

K of a normed space

X.

Recently, Aftab et al. [

23] developed a

iterative procedure, given by

for all

, where

,

, and

are real sequences in

and

is an arbitrary point in a convex subset

K of a normed space

X.

Lamba and Panwar [

24] introduced Picard

iterative algorithms, a four-step iterative scheme for approximating the fixed points of generalized nonexpansive mappings, as follows:

for all

, where

,

, and

are real sequences in

and

is an arbitrary point in a convex subset

K of a normed space

X.

Recently, Jia et al. [

25] proposed the Picard–Thakur hybrid iterative scheme, which is given as

for all

, where

,

, and

are real sequences in

and

is an arbitrary point in a convex subset

K of a normed space

X.

As mentioned earlier, CAT(0) spaces provide a flexible framework for studying geometric and topological properties, such as the convexity of sets and functions, and the convergence of sequences in a purely metric setting, without relying on vector operations. They broaden the scope of analysis beyond linear structures while retaining powerful geometric and analytic properties.

Motivated by these findings, we develop a novel iterative technique in the framework of CAT(0) spaces, designed to refine and generalize existing algorithms. Let

T be a mapping defined on a nonempty subset

K of a CAT(0) space, and let

be an arbitrary point. Define a sequence

by

for all

, where

,

, and

are real sequences in

. The iteration scheme exhibits different characteristics based on the parameter values involved therein.

When all the parameters are set to zero or one, the iteration scheme reduces to repeated applications of the Picard iteration operator. With arbitrary parameter values, however, the scheme transforms into a novel formulation, distinct from existing methods in the current literature.

In this paper, we study strong and Δ–convergence results for a generalized class of mappings using the proposed iterative process. A comparative analysis with existing iterative methods demonstrates that the proposed method is more efficient and exhibits faster convergence for generalized contraction mappings. A stability theorem further guarantees the reliability of the new method. Moreover, our results are new even in the framework of Banach spaces, thereby generalizing several well-known convergence results in Banach spaces.

2. Approximation Results

In this section, we present several Δ–convergence and strong convergence results for the proposed iterative scheme (1) for mappings satisfying the Garcia–Falset property in the framework of CAT(0) spaces. Throughout the section, we assume that the mapping $T$ is defined on a nonempty closed convex subset $K$ of a CAT(0) space $X$ and satisfies Definition 4. Let the sequence $\{x_n\}$ be defined by (1).

Theorem 1. If $F(T)\neq\emptyset$, then $\lim_{n\to\infty}d(x_n,p)$ exists for all $p\in F(T)$.

Proof. Using similar arguments, we obtain

This shows that the sequence is both bounded and non-increasing. Thus, the limit of the sequence exists. □

The following theorem provides the necessary and sufficient condition for the existence of a fixed point of a mapping T.

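Theorem 2. We have that $F(T)\neq\emptyset$ if and only if the sequence $\{x_n\}$ is bounded and $\lim_{n\to\infty}d(x_n,Tx_n)=0$.

Proof. Suppose that $\lim_{n\to\infty}d(x_n,Tx_n)=0$ and the sequence $\{x_n\}$ is bounded. We will show $F(T)\neq\emptyset$. For any $x\in A(\{x_n\})$, the Garcia–Falset property gives

$$r(Tx,\{x_n\})=\limsup_{n\to\infty}d(x_n,Tx)\le\limsup_{n\to\infty}\bigl(\mu\,d(x_n,Tx_n)+d(x_n,x)\bigr)=r(x,\{x_n\}).$$

This implies that $Tx\in A(\{x_n\})$. Since $A(\{x_n\})$ consists of a single element, it follows that $Tx=x$, that is, $F(T)\neq\emptyset$.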

Conversely, suppose that

. We show

. By Theorem 1,

is bounded and

exists. Consider

From the proof of Theorem 1, we obtain

Again, from the proof of Theorem 1, we have

From (

4) and (

5), we obtain

From (

2), (

3), (

6), and Lemma 3, we have

which completes the proof. □

We now establish a Δ–convergence result using Opial’s condition in CAT(0) spaces.

Theorem 3. If $F(T)\neq\emptyset$, then $\{x_n\}$ is Δ–convergent to an element of $F(T)$.

Proof. Set , where union is taken over all subsequences of . If , then there exists a subsequence of such that .

By Theorem 2,

is bounded. Using Lemma 1 (i) and (ii), we have a subsequence

of

such that, for some

,

It follows from Theorem 2 that

, and by Theorem 1,

exists. Suppose that

. Using Opial’s condition and uniqueness of asymptotic center, we get

which is absurd. Therefore,

. To show that

x is the asymptotic center of every subsequence of

, let

since

is a subsequence of

with

, and

exists. By Lemma 1 (iii), we have

. Therefore,

is

–convergent to

. □

Now, we establish some strong convergence results using the underlying class of mappings in the setting of CAT(0) space.

Theorem 4. If K is Δ–compact and , then strongly converges to an element in .

Proof. By Theorem 2, we have

. Due to the compactness of

K, we can find a subsequence

of

, which is

–convergent to

. Hence, we obtain

On taking the limit as , and the uniqueness of asymptotic center, we have . By Theorem 1, exists for any . Therefore, converges strongly to z. □

Theorem 5. If $F(T)\neq\emptyset$ and $\liminf_{n\to\infty}d(x_n,F(T))=0$, then $\{x_n\}$ converges strongly to a fixed point of $T$.

Proof. Suppose

converges strongly to

, then

Conversely, suppose that

. So, a subsequence

of

and

in

exists such that

On the other hand, we have

Now, using the triangle inequality

Hence,

is a Cauchy sequence. Using Lemma 4,

converges to

. Thus, we have

This implies that converges to on taking limit as . By Theorem 1, exists, for all . Therefore, converges strongly to . □

Theorem 6. If $F(T)\neq\emptyset$ and $T$ satisfies condition (I), then $\{x_n\}$ converges strongly to a fixed point of $T$.

Proof. By Theorem 1,

exists for all

. So,

exists. Using Theorem 2, we have

Since

T satisfies condition

, using the Definition 2, we get

Since

g is a nondecreasing function with

and

, for all

, from (

8), we get

By Theorem 5, the sequence converges strongly to . □

4. Numerical Analysis

We begin by providing an example of a mapping that satisfies the García–Falset condition but does not satisfy Suzuki’s condition. This shows once more that a mapping satisfying condition (E) need not satisfy condition (C), although the converse implication does hold. The same mapping is then used in a numerical simulation illustrating that the newly introduced iterative process requires fewer iterations than previously used schemes.

Example 1. Let be a subset of . We consider Clearly, . For and , we haveand The condition is not satisfied. We illustrate that T satisfies the Garcia–Falset property. We discuss the different cases below:

(i) Suppose that . We have (ii) For , we obtain (iii) If and , we have (iv) For and , we can say (v) If and , we can state that This leads us to conclude that the operator T does not satisfy condition , but it does satisfy condition .

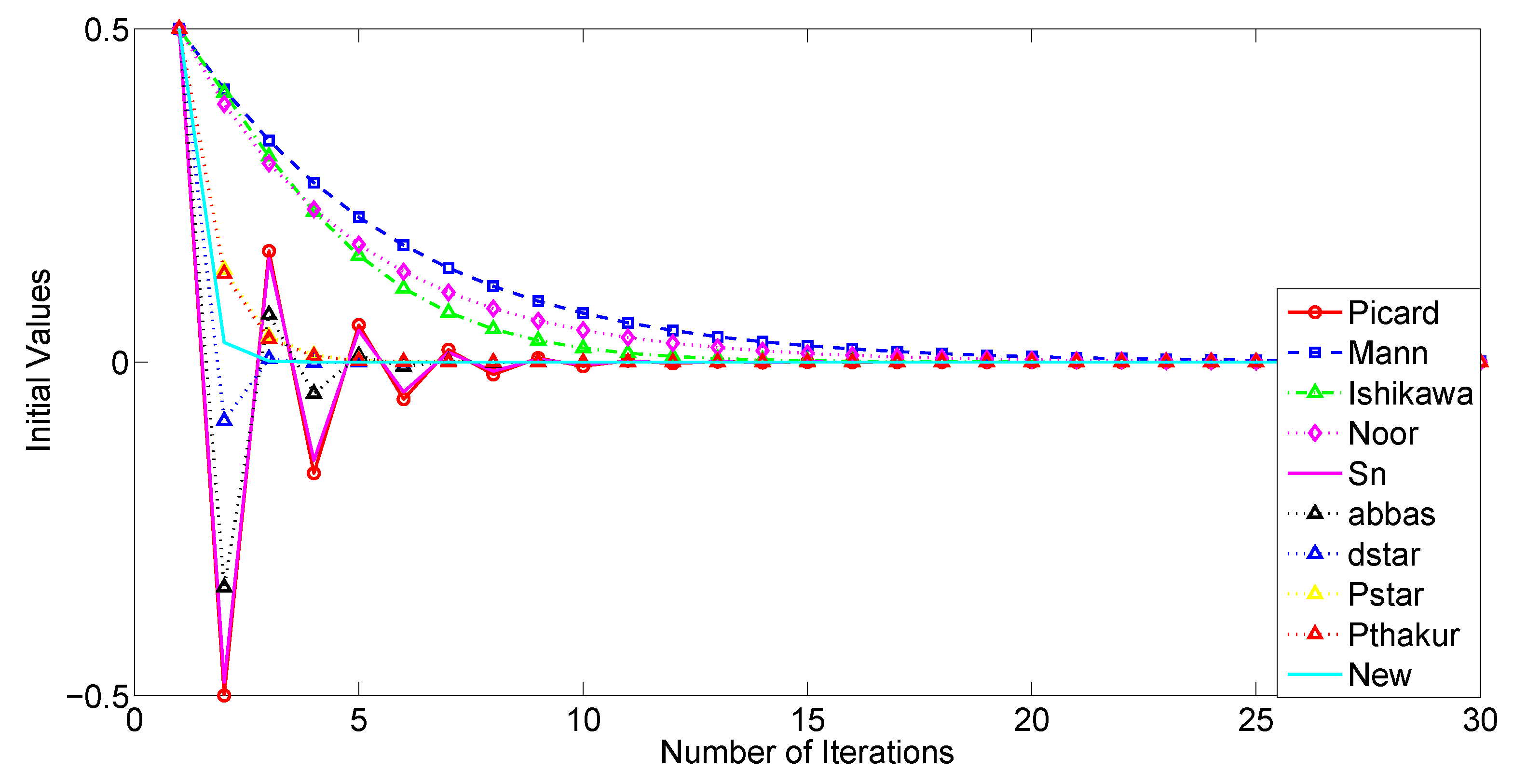

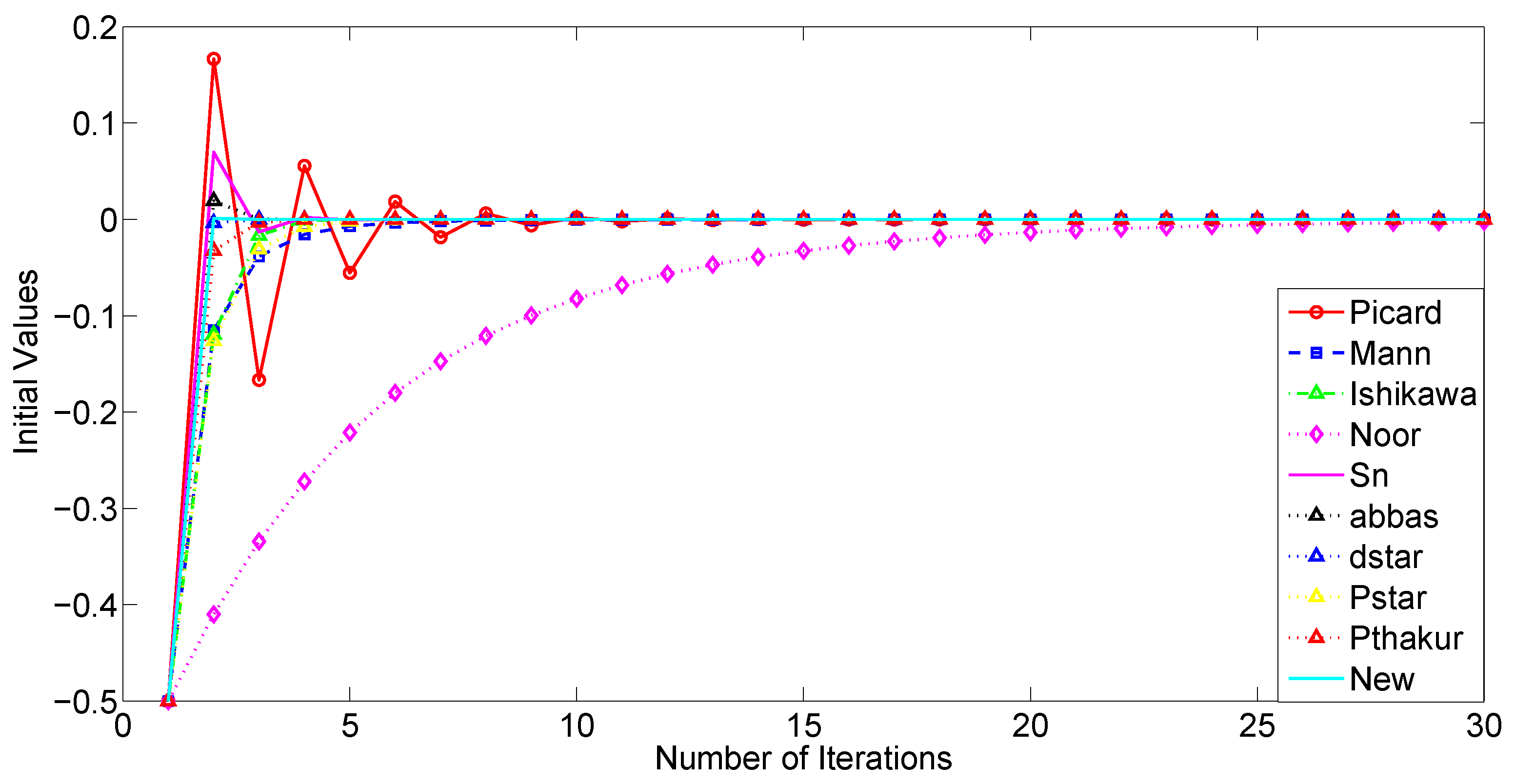

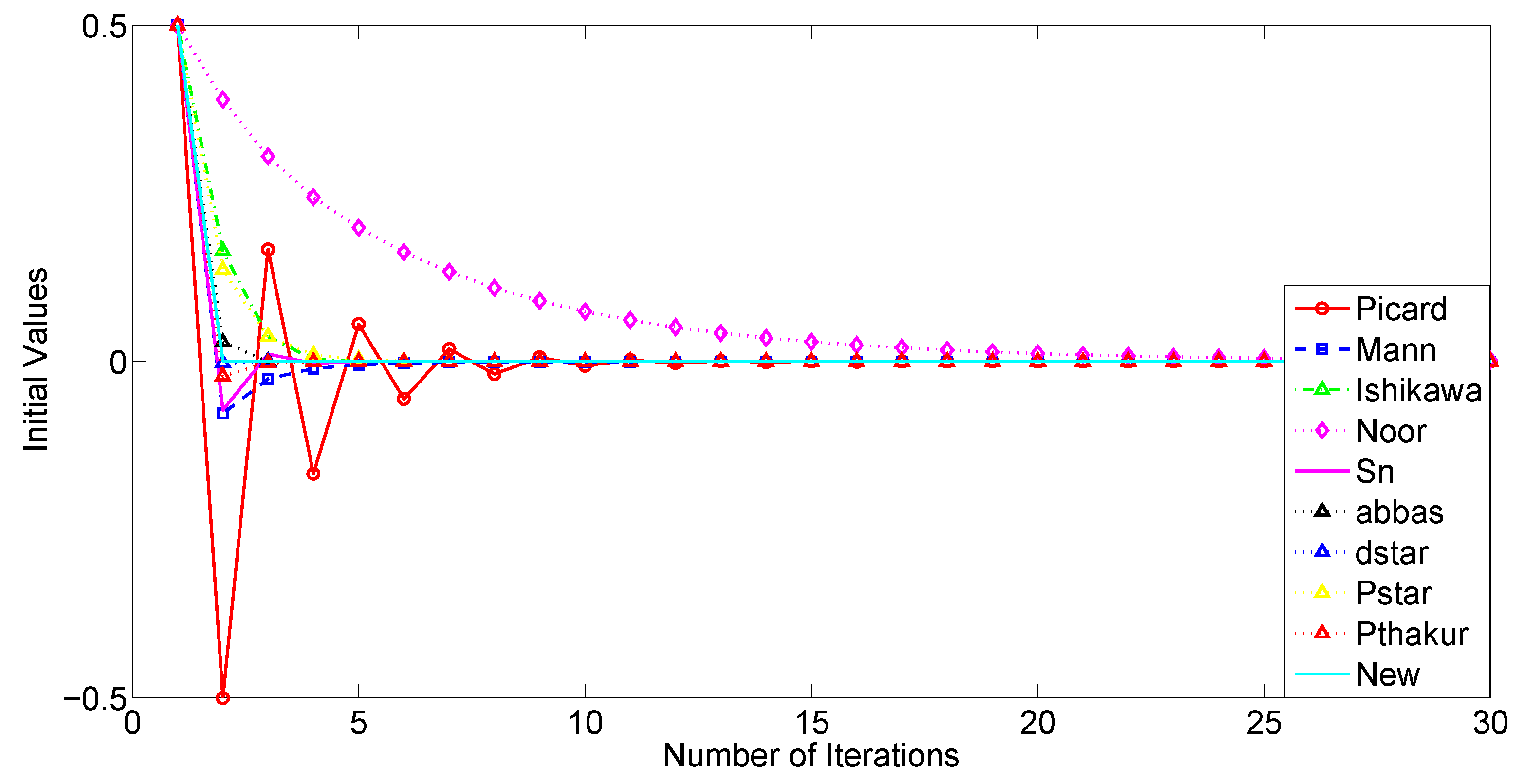

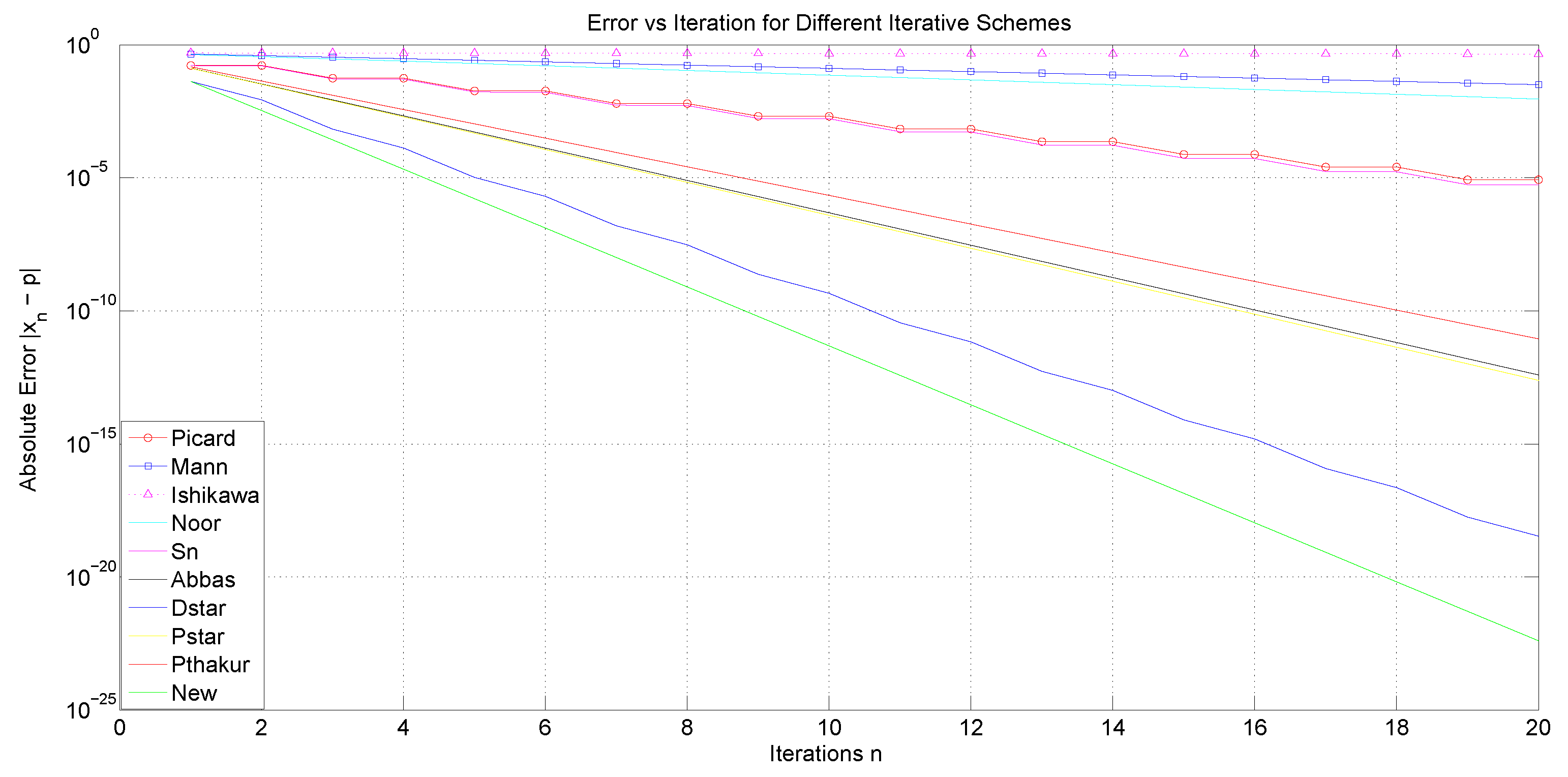

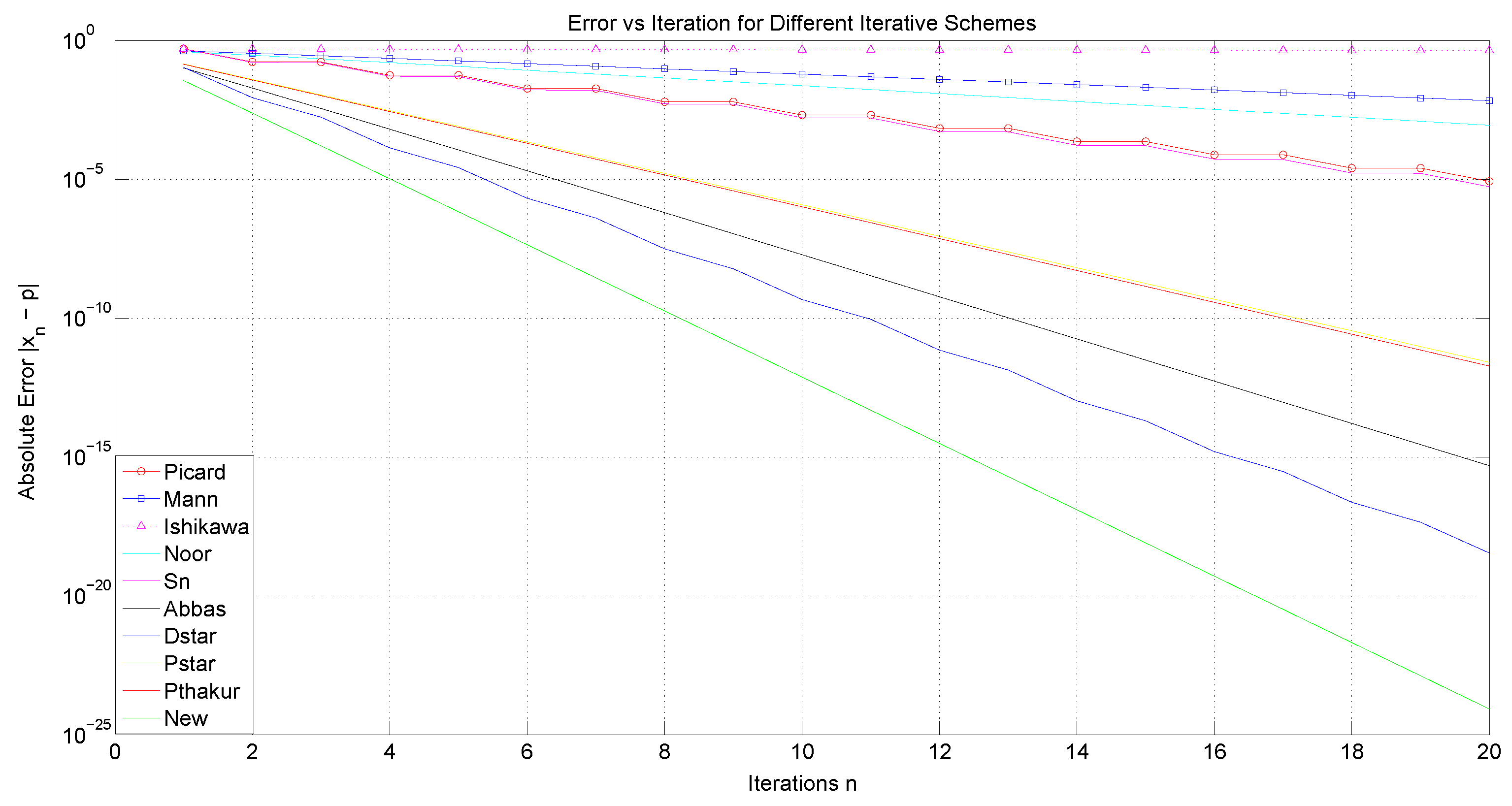

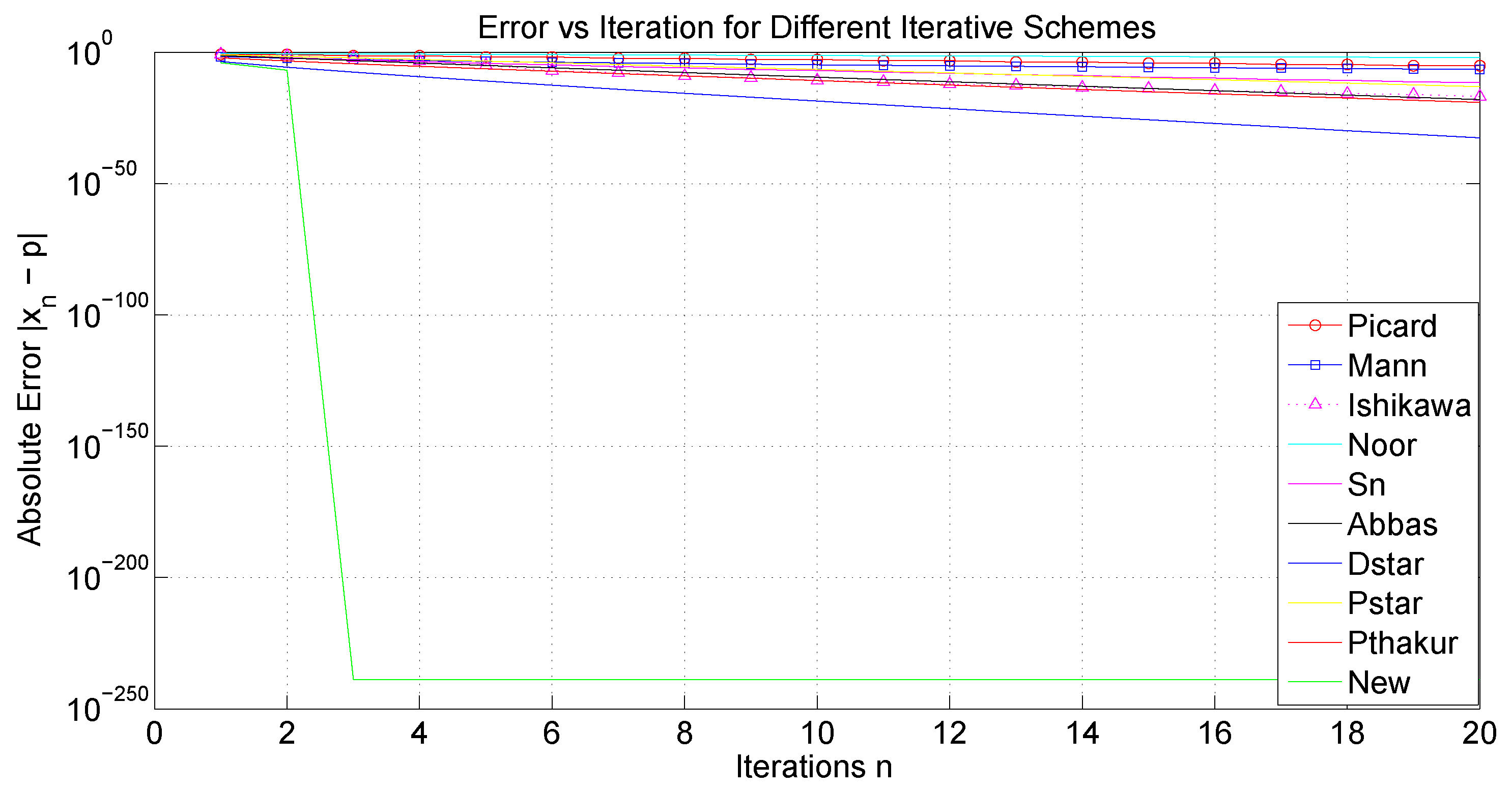

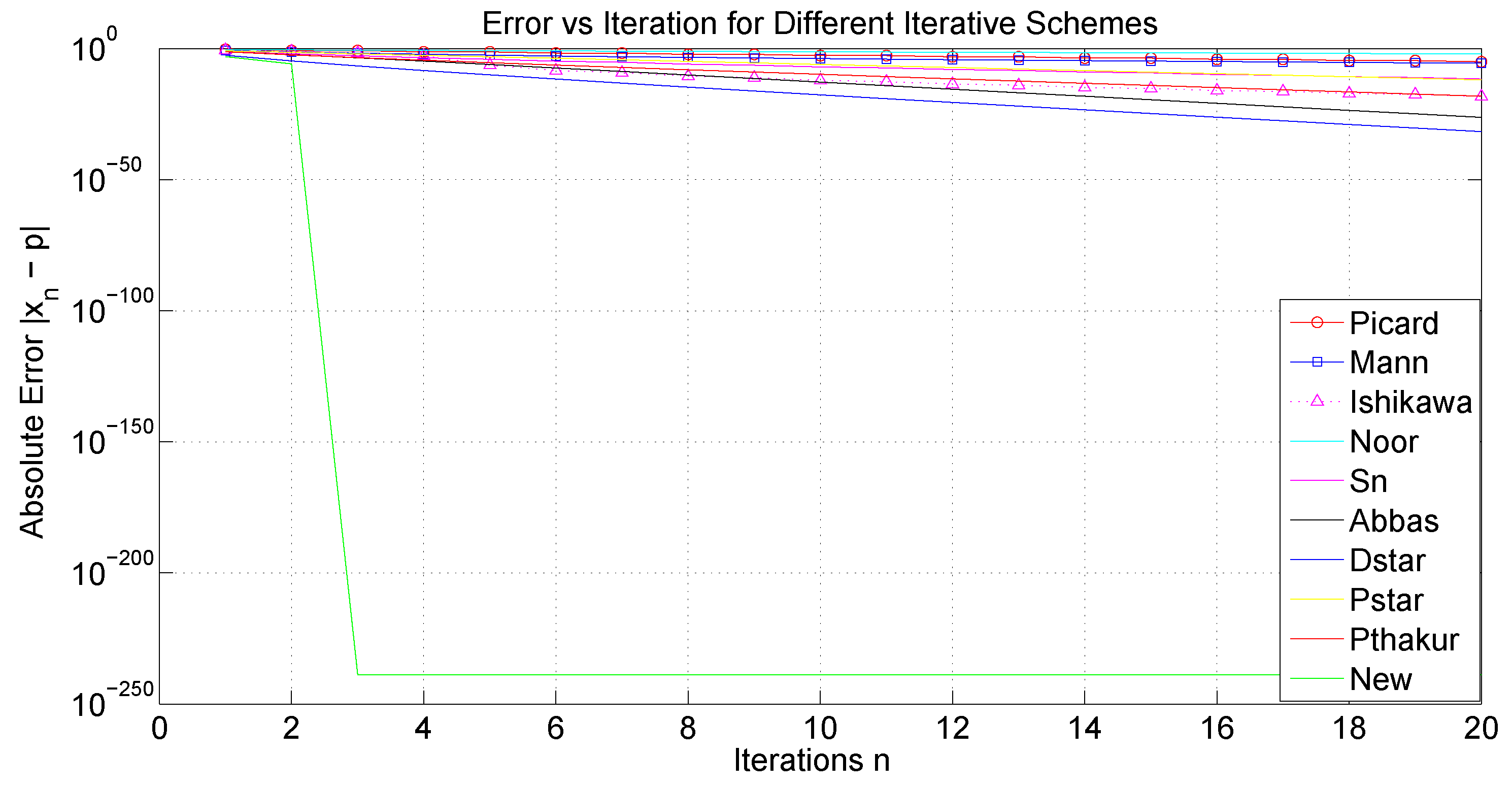

We now examine the convergence rates of several iteration processes, namely Picard, Mann, Ishikawa, Noor, Abbas,

,

, Picard

, Picard–Thakur, and the new iteration (

1) in the framework of Example 1 to compare their effectiveness and investigate the impact of different initial values on the convergence of these iteration schemes by varying the parameter

and

. Let

p denote a fixed point of the mapping

T. The stopping criterion is given by

.

As a starting point, our analysis will focus, on the one hand, on the convergence behavior of the iterative processes under consideration, and, on the other hand, on their computational aspects, such as execution time, the number of iterations required for convergence, and an estimation of the associated error. First, we shall use the sequences and . Analogously to the preceding study, we will proceed by considering , and .

The tables and figures presented below illustrate a comprehensive comparison of the convergence behavior of the iterative schemes and demonstrate that, for different choices of parameters and initial guesses, our proposed iterative scheme (

1) is more efficient and converges more rapidly to the fixed point of the mapping

T. We have considered several choices of the initial point

, as well as different sequences for

,

, and

, in order to emphasize that the efficiency of the proposed algorithm is not restricted to a single particular case. These variations indicate that the method remains effective under a wide range of parameter settings.

To strengthen the formulated conclusion, we emphasize the following points:

It is noteworthy that the three-step iterations, Abbas and , converge faster and are more efficient than the four-step iterations Picard and Picard–Thakur. This indicates that the convergence behavior of an iterative process is independent of the number of steps in which the process is defined.

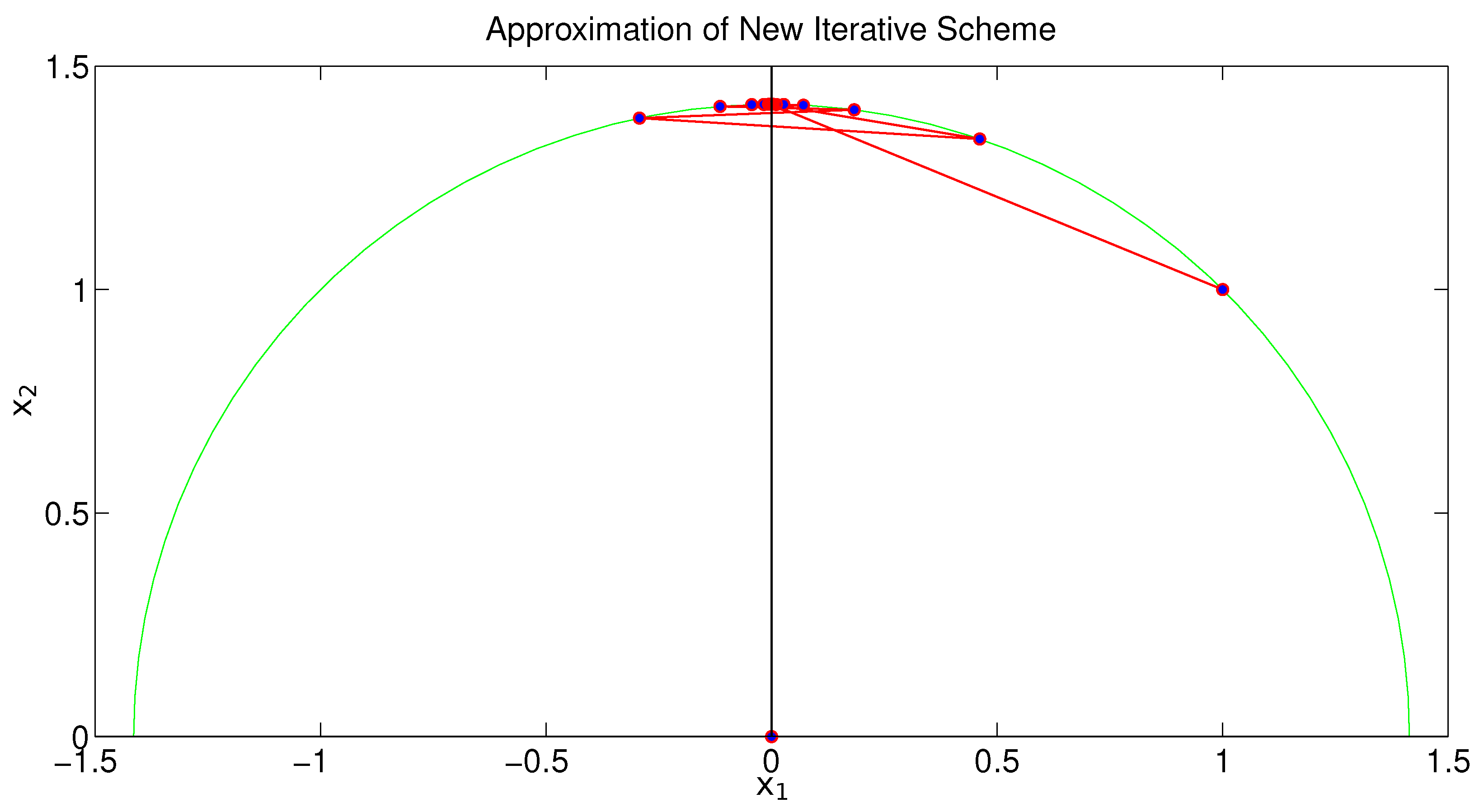

Since the theoretical results were developed within the framework of CAT(0) spaces, we present a recently introduced example from the specialized literature, which also allows for a corresponding numerical simulation.

Example 2 ([

29])

. Let us consider Poincaré half-planewith the Poincaré metricThen, T is nonexpansive in connection with the hyperbolic distance d.

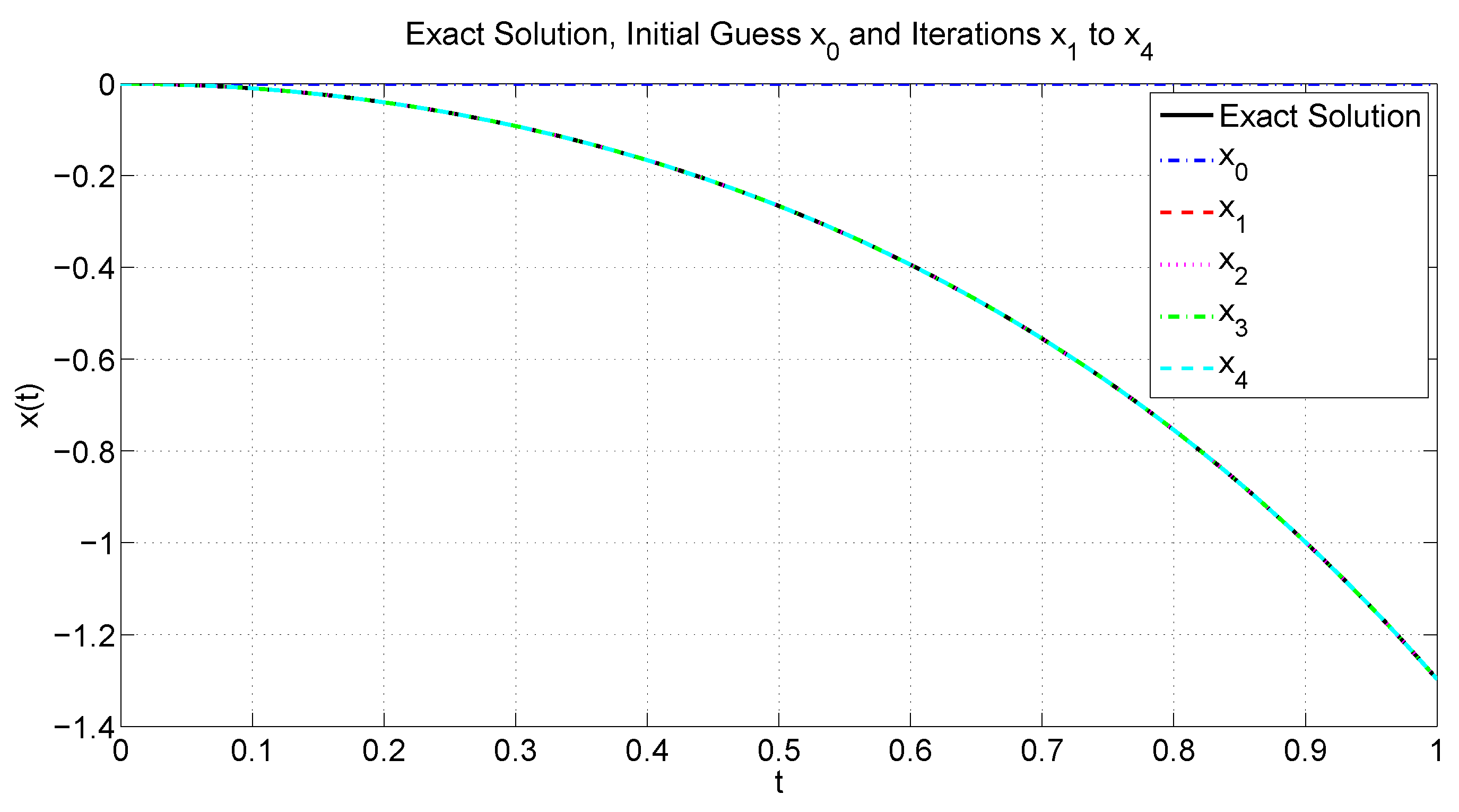
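Computations in the Poincaré half-plane need two ingredients: the hyperbolic distance and the point $(1-t)x\oplus ty$ on the geodesic from $x$ to $y$. The sketch below is not the closed-form expression of [29]. It relies instead on two standard facts: geodesics are vertical half-lines or semicircles centered on the real axis, and arc length along such a semicircle equals $\ln\tan(\theta/2)$ in the polar angle $\theta$ measured about its center. Points are represented as Python complex numbers with positive imaginary part, and the function names are illustrative assumptions.

```python
import math

def hyp_dist(z, w):
    """Poincaré half-plane distance: arcosh(1 + |z - w|^2 / (2 Im z Im w))."""
    return math.acosh(1.0 + abs(z - w) ** 2 / (2.0 * z.imag * w.imag))

def oplus(x, y, t):
    """Point (1 - t) x ⊕ t y at hyperbolic distance t * d(x, y) from x."""
    if math.isclose(x.real, y.real):
        # Vertical geodesic: arc length is the logarithm of the height.
        return complex(x.real, x.imag ** (1 - t) * y.imag ** t)
    # Semicircle through x and y with center c on the real axis and radius r.
    c = (abs(y) ** 2 - abs(x) ** 2) / (2.0 * (y.real - x.real))
    r = abs(x - c)

    def u(p):
        # Arc-length parameter ln tan(theta / 2), theta = polar angle about c.
        theta = math.atan2(p.imag, p.real - c)
        return math.log(math.tan(theta / 2.0))

    s = (1 - t) * u(x) + t * u(y)          # interpolate linearly in arc length
    theta = 2.0 * math.atan(math.exp(s))   # back to the polar angle
    return complex(c + r * math.cos(theta), r * math.sin(theta))

x, y, t = complex(0.0, 1.0), complex(2.0, 3.0), 0.25
z = oplus(x, y, t)
print(z, hyp_dist(x, z), t * hyp_dist(x, y))
```

Interpolating linearly in the arc-length parameter is what makes the two printed distances agree; an iterative scheme built from $\oplus$ would call `oplus` at every step.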

The goal here is to apply the iterative procedure (1), starting from a chosen initial estimate, to obtain the corresponding fixed point of $T$. To do this, it is first necessary to understand how $(1-t)x\oplus ty$ can be computed exactly. Given two points $x,y$ of the half-plane and a scalar $t\in[0,1]$, we seek an explicit expression for $z=(1-t)x\oplus ty$, i.e., the unique point on the geodesic segment between $x$ and $y$ such that

$$d(x,z)=t\,d(x,y)\quad\text{and}\quad d(y,z)=(1-t)\,d(x,y).$$

An explicit closed-form expression for this point in terms of the coordinates of $x$ and $y$ is established in [29].

We now incorporate this formula into the iterative scheme (1) to approximate the fixed point of the hyperbolic nonexpansive operator $T$. To illustrate this, we fix an initial guess and a permissible error, and we stop the algorithm once the error, measured in the Poincaré metric $d$, falls below the permissible value. Figure 9 illustrates the approximate sequence from the initial guess to the computed solution. It is worth noting that the entire sequence lies on a single geodesic (depicted as the green semicircle).

6. Comparison via Polynomiography

In this section, we shift our perspective and highlight an alternative application of the new iteration procedure. Consider a nonconstant polynomial $p$, defined on the closed unit disk $D=\{z\in\mathbb{C}:|z|\le1\}$. According to the Maximum Modulus Principle, the largest value of the modulus, denoted by $\|p\|_\infty=\max_{z\in D}|p(z)|$, is always attained at one or more points located on the boundary of $D$.

A significant contribution in this direction was made by Kalantari in [

35], where he proposed a novel approach to the problem of maximizing the modulus of complex polynomials. Specifically, his work introduced a reformulation of the problem, first by expressing it as a fixed point problem and subsequently by reducing it to the search for roots of a suitably defined pseudo-polynomial. This methodological shift opened the way to new computational strategies, and it is grounded in the fundamental equivalence stated below.

Theorem 11 ([

35])

. Let be a nonconstant polynomial on . A point is a local maximum of over D if and only ifwheremeaning that it finds itself among the solutions of the zero-search problemwhere Further, we extend our analysis by combining the newly introduced iteration procedure with specific elements drawn from the so-called Basic Family of Iterations, suitably adapted to the sequence of functions

. More precisely, our focus will be on the first three members of the Basic Family, together with the associated sequences of iteration functions derived within the framework of the Maximum Modulus Principle (MMP):

If

indicates any of the MMP iteration functions listed above, then the resulting New-MMP procedure is

for all

, where

,

, and

are real sequences in

.

Inspired by the fact that several recent studies suggest, based on polynomiographic applications, that the iterative process employed is more efficient (see, for instance, [

36,

37,

38]), we now proceed with a more detailed analysis from this perspective. Our aim is to examine how these iterative schemes perform in practice and what insights can be gained from their visual representation. To set the stage for this discussion, we begin by clarifying several aspects related to the interpretation of the images presented in the following section.

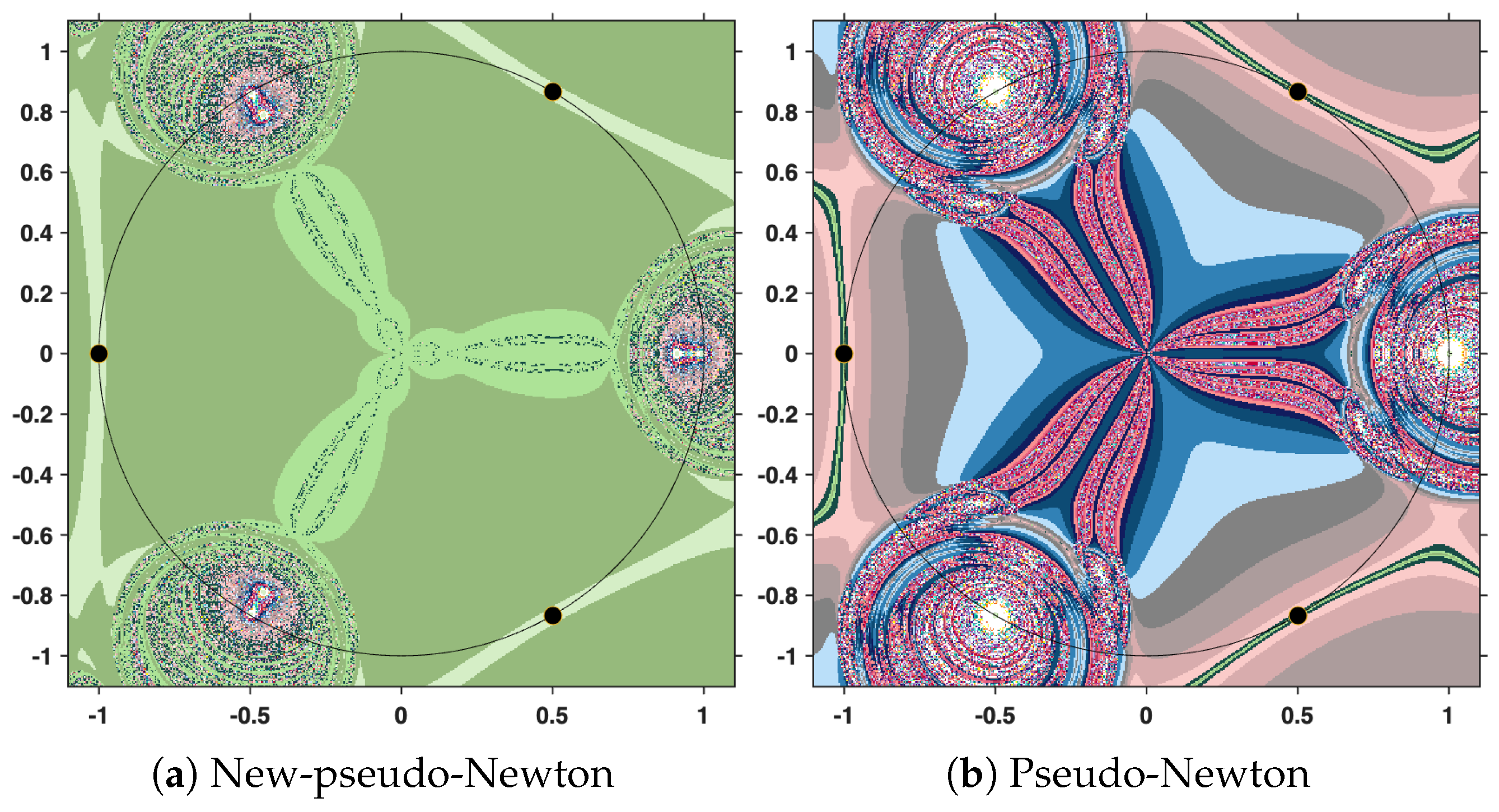

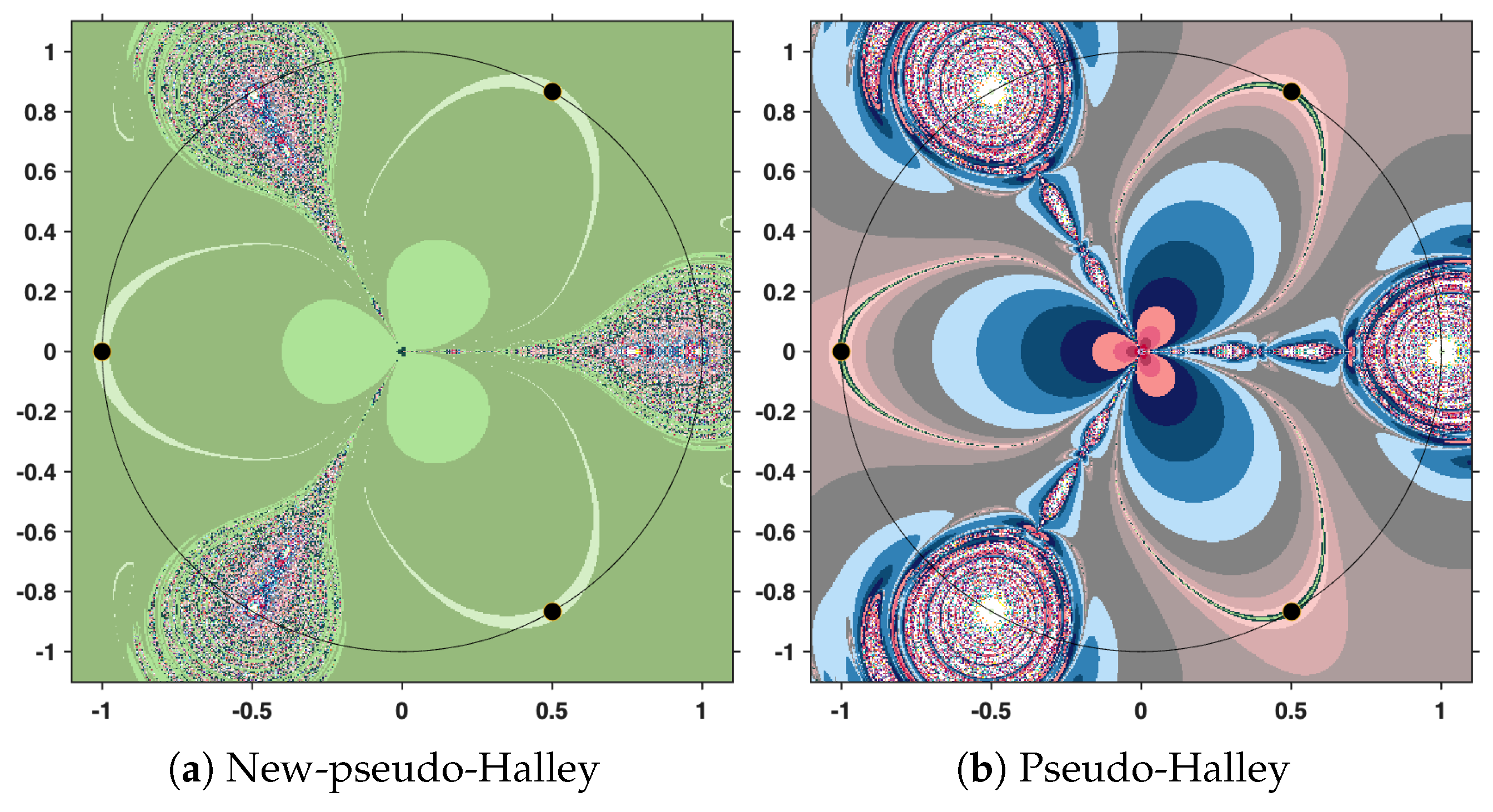

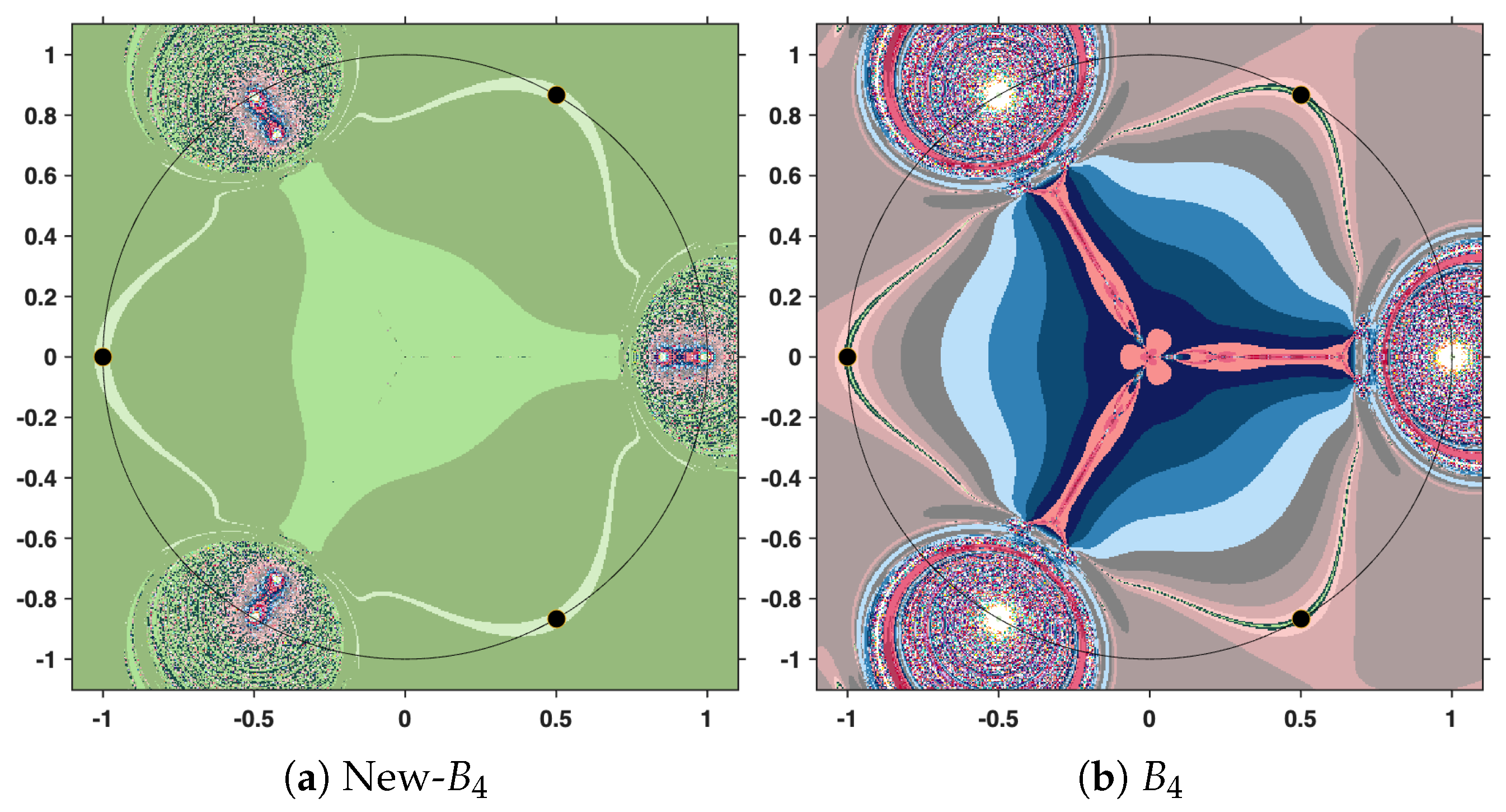

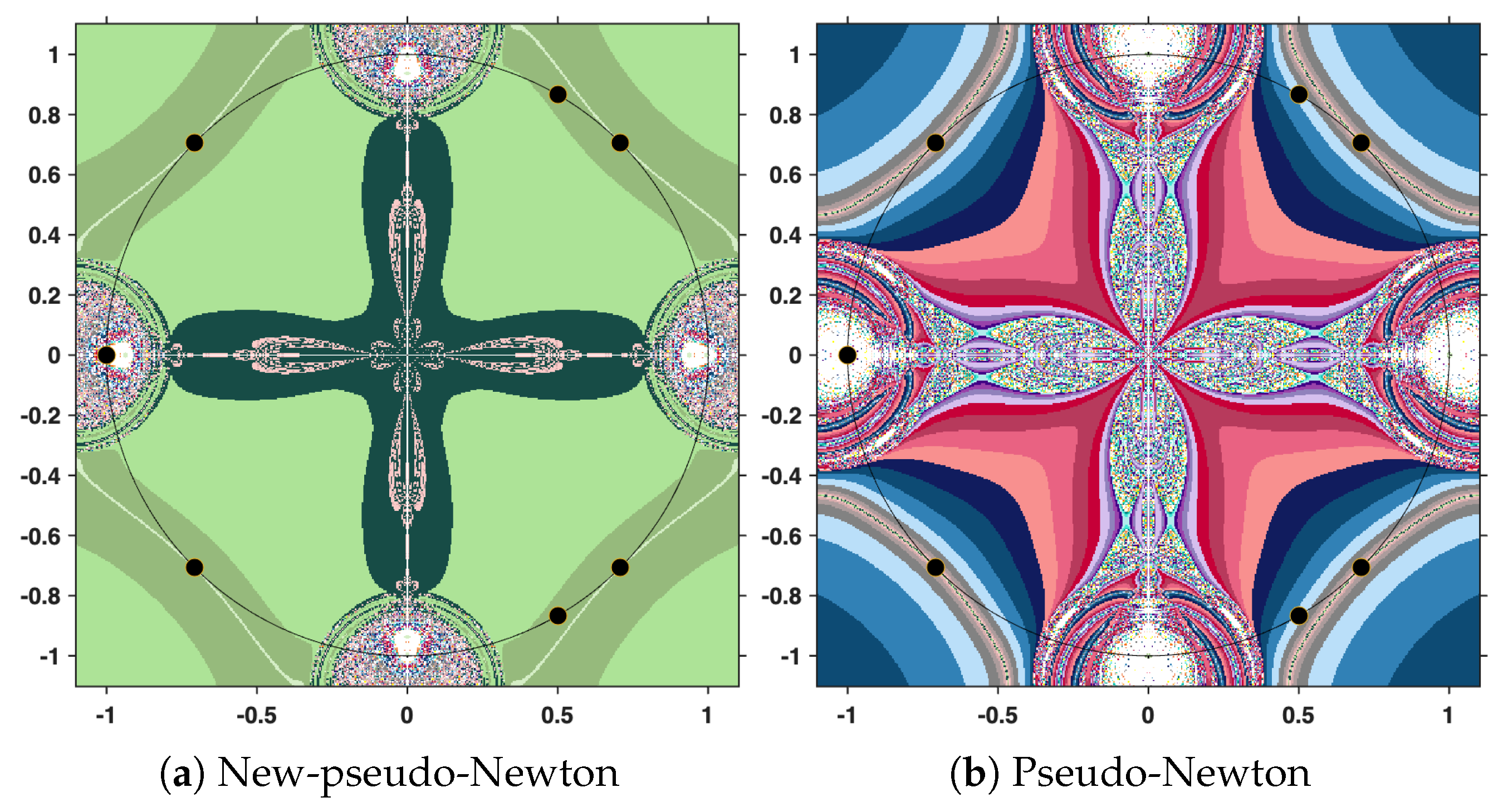

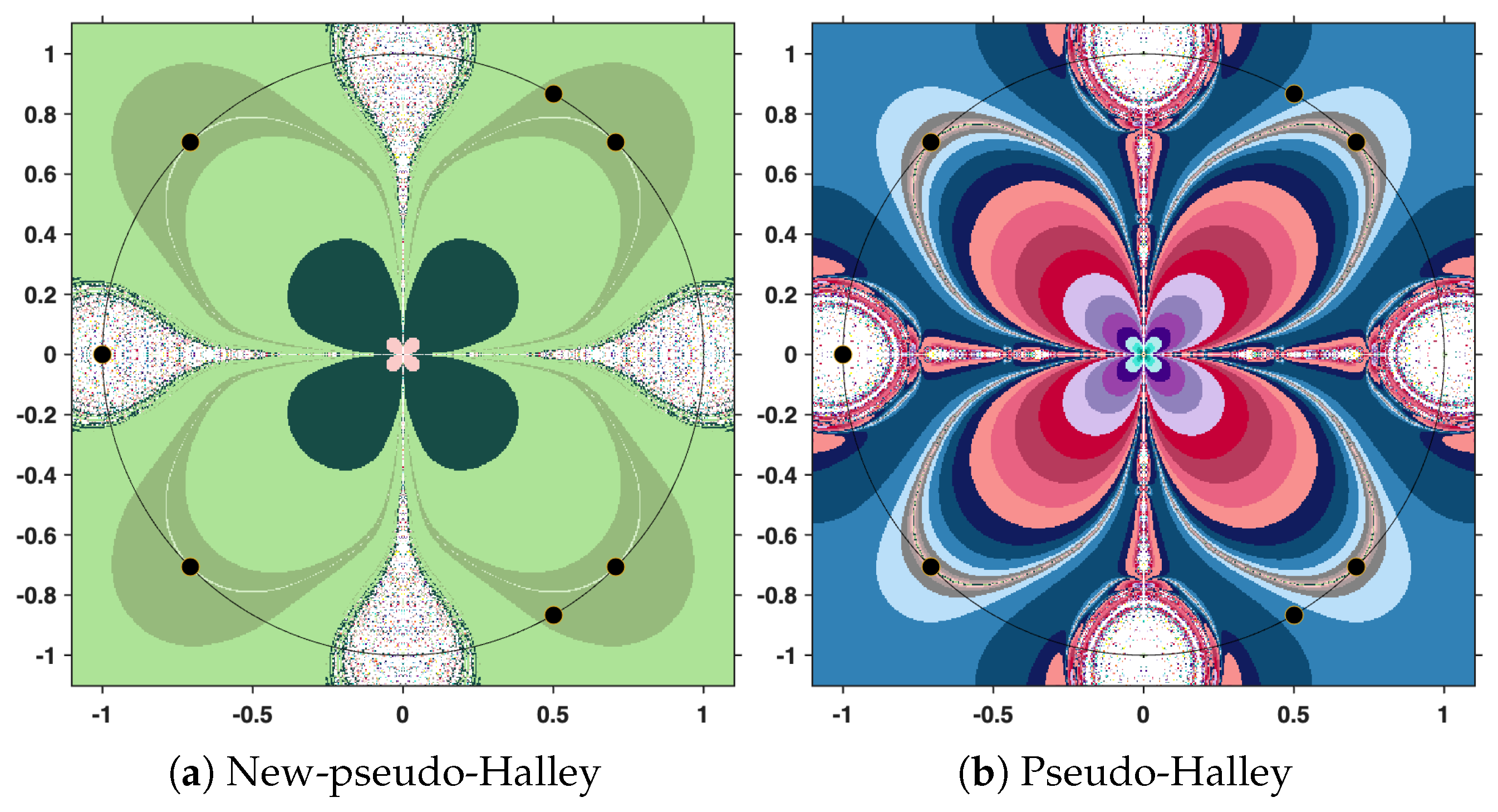

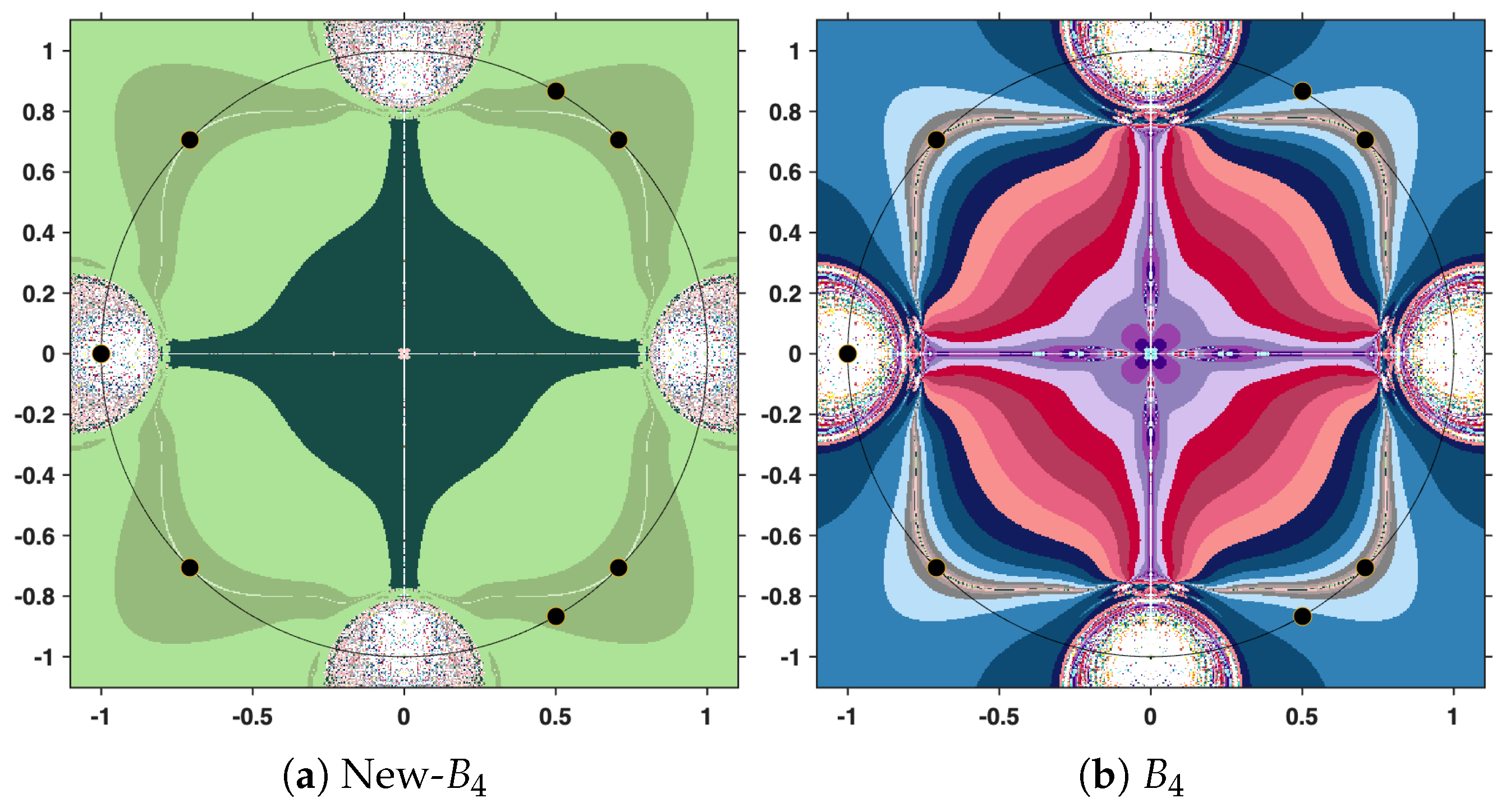

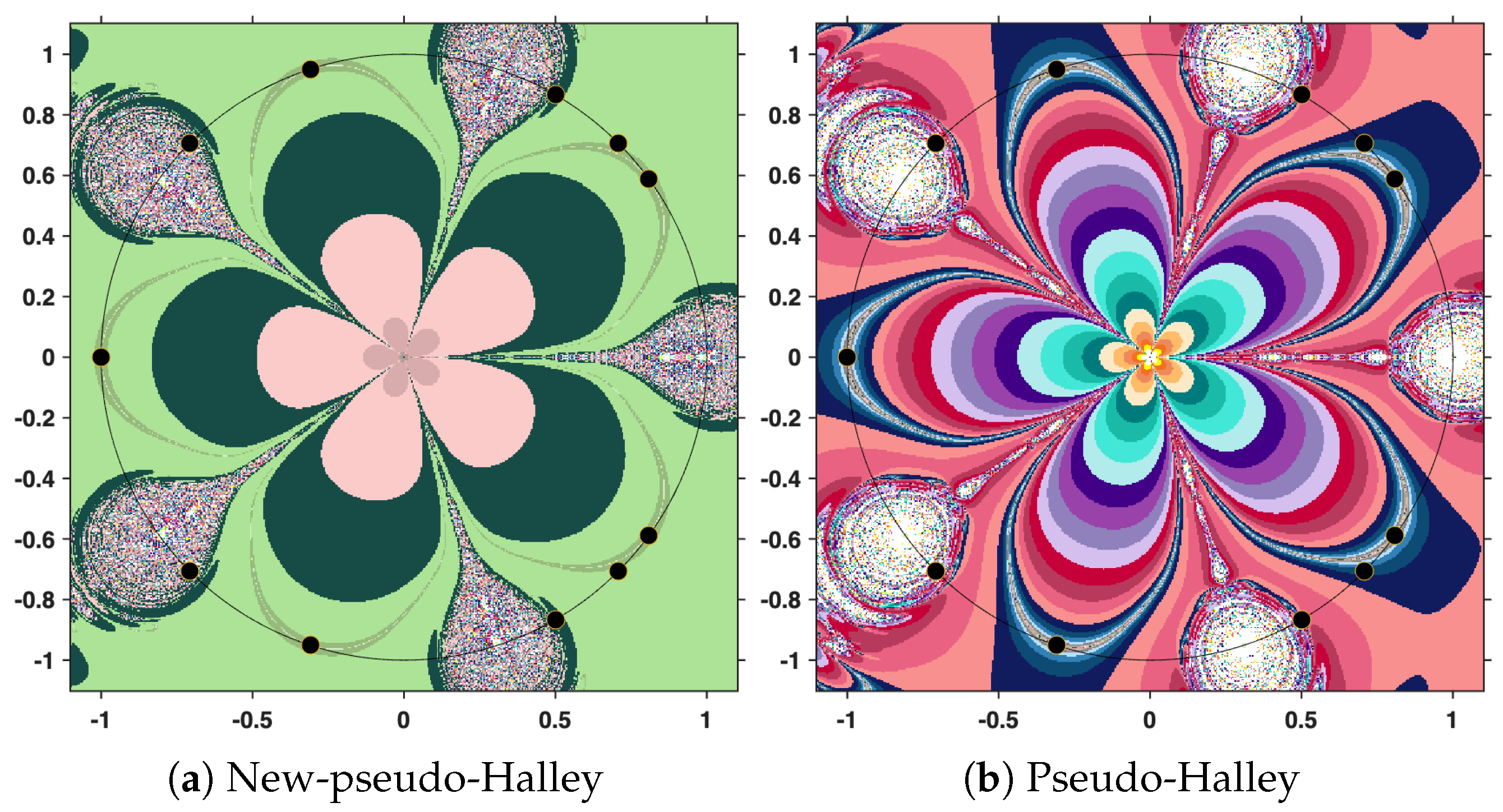

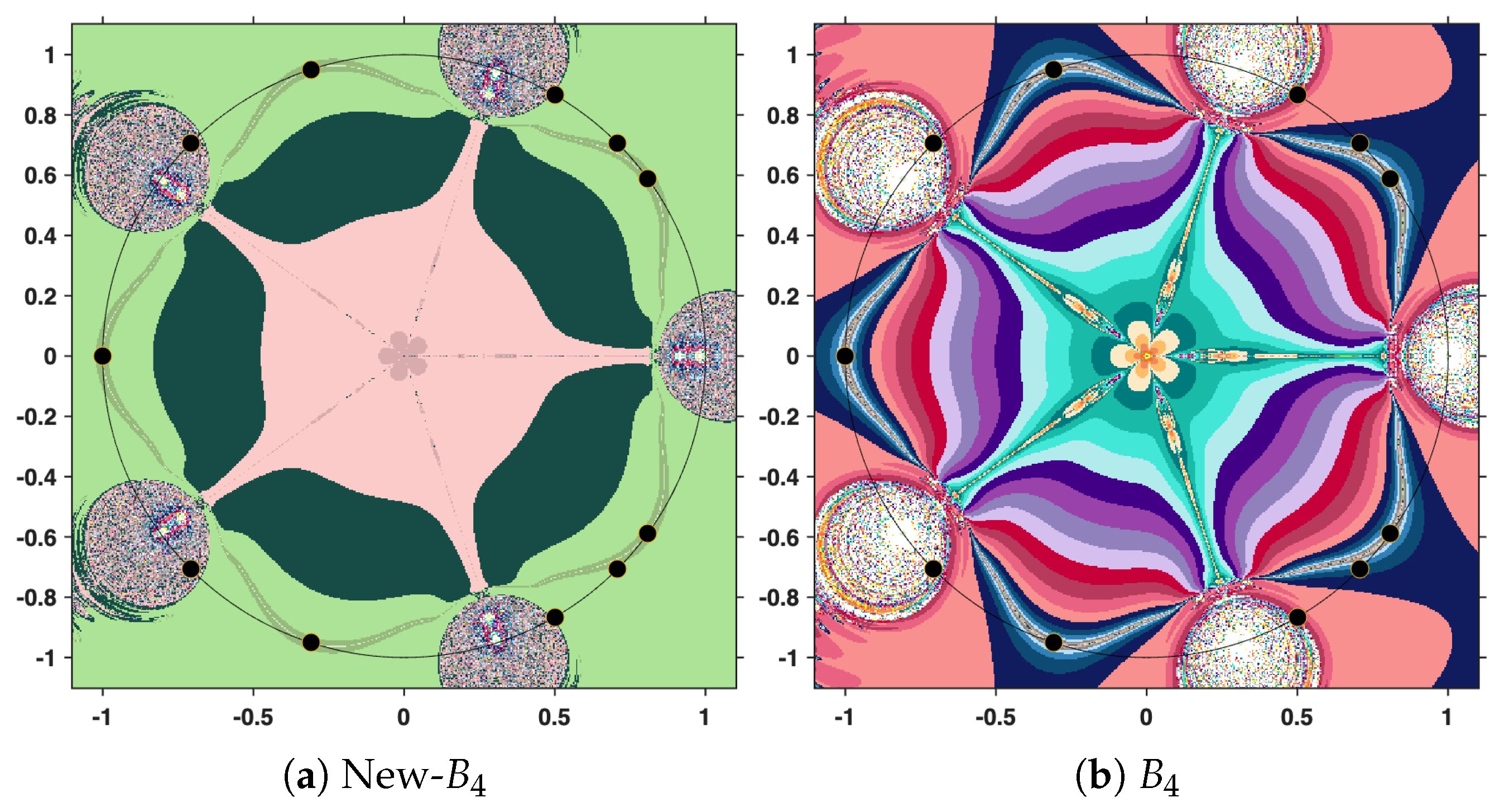

The output of the numerical algorithm is a colored picture (called a polynomiograph) that shows the behavior of orbits. The black points indicate precisely the solutions, while white points mark the inefficient initial estimates. All the other colors in the color palette encode the length of the orbit. There is an obvious difference in color intensity when comparing a procedure based on the new iteration with the corresponding standard procedure. More precisely, less intense colors indicate shorter orbits, hence a more efficient algorithm. For clarity, the legend of the colors used is shown in Figure 12.

In the subsequent analysis, we employ the New-MMP procedures in order to compute the maximum modulus of the complex polynomial . For the sake of comparison, we also implement the classical MMP procedures, which are formulated on the basis of Picard iteration. The execution of these numerical algorithms requires, as a first step, the specification of the iteration step sizes. In our case, the parameters are set to , and . It should be emphasized that this selection is purely arbitrary and does not rely on a particular theoretical justification; the purpose is simply to illustrate the performance of the methods under a fixed set of numerical values.

In addition to the step sizes, it is necessary to establish clear termination criteria for the iterative scheme. To this end, two stopping conditions are introduced. The first is a prescribed tolerance threshold for the error: the procedure terminates once two successive iterates in the orbit become sufficiently close, which controls the numerical accuracy. The second condition is a maximal number of iterations, fixed at 31. This serves as a safeguard against divergence or excessively long computations, preventing the algorithm from running indefinitely.

Therefore, the iterative construction will be terminated as soon as at least one of these conditions is satisfied: either the distance between two consecutive elements of the orbit falls below the prescribed tolerance, or the orbit has been extended to the limit of 31 iterations. In this way, the process balances both accuracy and computational efficiency, while allowing a fair comparison between the New-MMP and the classical MMP procedures. The resulting polynomiographs are pictured in Figure 13, Figure 14 and Figure 15.
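The two termination rules can be sketched as follows. The MMP iteration functions are not reproduced here, so the example uses a Newton step for $z^3-1$ purely as a stand-in; the tolerance value `1e-3` and the names `newton_step` and `orbit_length` are assumptions, while the cap of 31 iterations is the one stated above. The returned iteration count is the orbit length that a polynomiograph encodes by color.

```python
def newton_step(z):
    """Stand-in iteration function: Newton's method for z**3 - 1 (not an MMP procedure)."""
    return z - (z ** 3 - 1) / (3 * z ** 2)

def orbit_length(step, z0, tol=1e-3, max_iter=31):
    """Iterate until successive iterates are within tol or max_iter is reached.

    Returns (last iterate, number of iterations performed, converged flag).
    """
    z = z0
    for k in range(1, max_iter + 1):
        z_next = step(z)
        if abs(z_next - z) < tol:      # first stopping rule: tolerance reached
            return z_next, k, True
        z = z_next
    return z, max_iter, False          # second stopping rule: iteration cap

z, k, ok = orbit_length(newton_step, complex(2.0, 1.0))
print(z, k, ok)
```

Coloring each starting point of a grid by the returned count `k`, with a separate color for the non-converged case, yields a picture of the kind described above.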

This time, we employ the same parameters, the same stopping criterion, and the same maximum number of iterations as in the previous experiment. However, instead of the cubic polynomial, we now consider the complex polynomial

. The purpose of this change is to examine how the methods perform when applied to a polynomial of higher degree, while maintaining identical computational settings. The resulting polynomiographs are pictured in

Figure 16,

Figure 17 and

Figure 18.

Finally, under the same conditions specified above, but this time considering the complex polynomial

, we obtain the graphical results shown in

Figure 19,

Figure 20 and

Figure 21.

To strengthen the efficiency of the newly introduced iterative process, we will present an objective method of analysis in the case of using the polynomial

, by employing an indicator which we will denote as PD(10). This indicator measures the percentage of the considered domain for which the iterative sequence reaches an approximation error smaller than

within at most 10 iterations. The results are presented in

Table 13.
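An indicator of this kind can be computed by brute force over a grid of starting points. The sketch below again uses a Newton step for $z^3-1$ as a stand-in for the MMP iteration functions, with an assumed tolerance of `1e-3`, an assumed square domain $[-2,2]^2$, and the distance between successive iterates as the error estimate; none of these choices are taken from the paper. It returns the percentage of grid points whose orbit meets the tolerance within at most 10 iterations.

```python
def newton_step(z):
    """Stand-in iteration function: Newton's method for z**3 - 1."""
    return z - (z ** 3 - 1) / (3 * z ** 2)

def converges_within(step, z0, tol, max_iter):
    """True if two successive iterates get within tol in at most max_iter steps."""
    z = z0
    for _ in range(max_iter):
        try:
            z_next = step(z)
        except ZeroDivisionError:
            return False               # the step is undefined at this point
        if abs(z_next - z) < tol:
            return True
        z = z_next
    return False

def pd(step, max_iter=10, tol=1e-3, half_width=2.0, n=101):
    """Percentage of an n-by-n grid on [-h, h]^2 that converges within max_iter steps."""
    hits = 0
    for i in range(n):
        for j in range(n):
            z0 = complex(-half_width + 2 * half_width * i / (n - 1),
                         -half_width + 2 * half_width * j / (n - 1))
            hits += converges_within(step, z0, tol, max_iter)
    return 100.0 * hits / (n * n)

print(pd(newton_step))
```

Running `pd` once per iterative procedure on the same grid gives directly comparable percentages, which is the kind of comparison such a table reports.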

It should be emphasized that the better performance presented in

Table 13 is evaluated specifically for an approximation error of

within the first 10 iterations, the choice of the maximum number of iterations being arbitrary. While this comparison is limited to these parameters, the results still illustrate the method’s overall efficiency and robustness compared to classical iterative methods.