1. Introduction

Remote sensing technology provides economical, real-time data support for global resource exploration, environmental change monitoring, and disaster emergency response. It relies on the non-contact collection of surface electromagnetic radiation by sensors mounted on satellites, aircraft, and near-ground platforms. As high-resolution earth observation systems advance rapidly [1], the temporal, spatial, and spectral resolution of remote sensing data has steadily improved, establishing a robust data basis for interpreting detailed surface information [2,3]. However, the explosive growth of large-scale remote sensing data, coupled with the need for semantic understanding in complex scenes, poses substantial difficulties for traditional analysis methods. These methods rely on hand-crafted rules and shallow machine learning and struggle to meet current demands, especially in advanced visual tasks such as target recognition and feature classification, which urgently require more intelligent data processing models.

Against this background, remote sensing object detection has emerged as a critical technology. As a core technique for locating and identifying specific targets in high-resolution images, it plays a key role in domains such as national defense security, disaster risk assessment, and smart city development [4]. Even so, traditional remote sensing object detection methods encounter significant obstacles, which stem from three main issues in remote sensing images: complex background interference, wide variations in target scale (for instance, a high proportion of small targets), and occlusion among densely distributed targets [5]. Consequently, traditional methods suffer from limited feature representation capability, low computational efficiency, and insufficient generalization.

The rapid evolution of deep learning has injected new vitality into the field of remote sensing object detection. Compared with traditional methods, deep learning approaches exhibit better detection performance, which is attributed to their larger receptive fields and more precise hierarchical feature extraction capabilities [6]. Currently, remote sensing object detection algorithms based on deep learning can be categorized into two technical frameworks: two-stage detection and single-stage detection.

Two-stage detection algorithms, such as CSL [7], Mask R-CNN (Convolutional Neural Network) [8], and RoI-trans [9], adopt a region proposal and refined classification architecture to achieve high-precision detection. Among earlier studies, Zhong et al. [10] improved target localization accuracy using a balanced position-sensitive structure. Li et al. [11] developed a dual-branch feature fusion network with local-global feature coordination to reduce false alarms for ambiguous targets. Xu et al. [12] proposed a dynamic convolution module to adaptively model the geometric deformations of targets. CAD-Net [13] enhances the connection between target features and their scenes by learning the global and local contexts of regions of interest (ROIs), thereby strengthening the network’s feature representation. ReDet [14] uses group convolution to generate rotation-equivariant features and then applies rotation-invariant ROI alignment to extract rotation-invariant features from them, enabling accurate detection of rotated targets. However, although the two-stage cascade architecture enhances target localization, its complex network structure typically increases the parameter count by a factor of 3 to 5 compared with single-stage models of comparable accuracy, severely restricting large-scale remote sensing applications.

As a result, researchers have adapted single-stage object detection frameworks to remote sensing imagery. Zhang et al. [15] designed a depthwise separable convolution module for synthetic aperture radar (SAR) ship detection, reducing computational complexity in feature extraction. Ma et al. [16] optimized the YOLOv3 model to enable real-time processing in earthquake-damaged building detection. To address the problem of small target omission, Li et al. [17] integrated a multi-level feature fusion unit into the SSD framework, significantly enhancing the detection capability for small targets.

In recent years, research on remote sensing image target detection technology has centered on two key challenges: optimizing feature representation and enhancing computational efficiency, with notable breakthroughs achieved in adapting to complex scenes and improving real-time processing capabilities.

ABNet, proposed by Liu et al. [18], uses an adaptive feature pyramid network that quantifies the channel contribution of each layer via channel attention. By integrating spatial attention to pinpoint key regions, it achieves adaptive weighted fusion of multi-layer feature maps, effectively mitigating the weak features of small targets. Building on this, RAOD [19] incorporates a non-local feature enhancement module: after uniformly resampling multi-layer pyramid features to an intermediate scale, it reinforces cross-layer feature correlations by modeling long-range dependencies, thereby markedly improving the discrimination of dense targets. AFC-Net [20] developed a feature competition selection mechanism, ensuring that the detection of each target fully utilizes information from all feature layers.

At the level of feature expression, Rao et al. [21] have further overcome limitations in feature representation by proposing a specialized levy-associative CNN model. This model achieves multi-level feature fusion via object labeling and matrix signal processing, enhances multi-scale target adaptability in end-to-end training, and illustrates the potential of cross-task migration. Additionally, Xuan Pang et al. [22] developed a hybrid algorithm based on three-frame difference and hue-saturation-value (HSV) spatial segmentation. This algorithm employs motion detection and color space analysis, integrating H-component region growth and S-component adaptive threshold segmentation to accurately capture moving small targets. It offers a novel approach to combining traditional methods with deep learning for dynamic detection in low-computing-power environments.

Driven by the dual goals of feature optimization and computational efficiency, deformable convolution has gradually emerged as a key technique for addressing geometric deformation and scale adaptation. By dynamically adjusting the sampling positions of the convolution kernel, it overcomes the rigid geometric constraints of traditional convolution, offering more flexible feature modeling for image processing and target detection tasks. In the domain of image processing, the LF-DFnet network, developed by Wang et al. [23], achieves super-resolution for light field images via an angular deformable alignment module (ADAM). It fuses multi-view angular information through bidirectional feature alignment to generate detail-rich high-resolution images, validating the potential of deformable convolution in feature modeling for complex scenes.

In the area of general target detection, Kang et al. [24] integrated MobileNetv3 with deformable convolution to build an efficient detection framework, reducing model parameters while preserving robustness against target deformation. Meanwhile, Zhou et al. [25] developed a deformable convolution-based ResNet50 backbone network for urban aerial images, integrating an attention mechanism and the Soft-NMS algorithm to address target occlusion and scale disparity in aerial photography. Zha et al. [26] incorporated the dynamic sampling mechanism of deformable convolution, enhancing the bidirectional feature pyramid network through grouped deformable convolution (GD-BiFPN). This strengthens the ability to capture local details in shaded regions, showcasing the efficiency and robustness of deformable convolution in vertical domains like electric utility monitoring. Research on deformable convolution has progressed from basic feature alignment to multi-level feature fusion, with simultaneous attention to both efficiency optimization and scene adaptability.

While deformable convolution greatly boosts the ability of remote sensing target detection to adapt to geometric deformation and scale changes through its dynamic sampling mechanism, current approaches still grapple with limitations stemming from gradual scale variations. In a single scene, targets of varying sizes (e.g., different types of ships) display continuous, step-by-step changes. Such progressive scale shifts present major hurdles for traditional detection algorithms: detectors designed with fixed scales often struggle to preserve symmetrical feature representation across size dimensions when simultaneously detecting multi-scale targets. Existing multi-level structures like feature pyramids seek to tackle this via hierarchical fusion, yet they inherently introduce uneven computational burdens between scale branches—deeper pyramids drive exponential increases in complexity, while shallower ones sacrifice detection precision.

The LSKNet [5] method employs large-kernel convolutions to expand receptive fields, but its directionally skewed sampling patterns disturb the rotational symmetry needed for detecting arbitrarily oriented targets. Moreover, dilated convolutions produce geometrically irregular sampling grids that fail to retain the intrinsic shape symmetry of elongated targets such as ships or aircraft. These drawbacks arise from fundamental breaks in scale-space symmetry within feature interaction mechanisms.

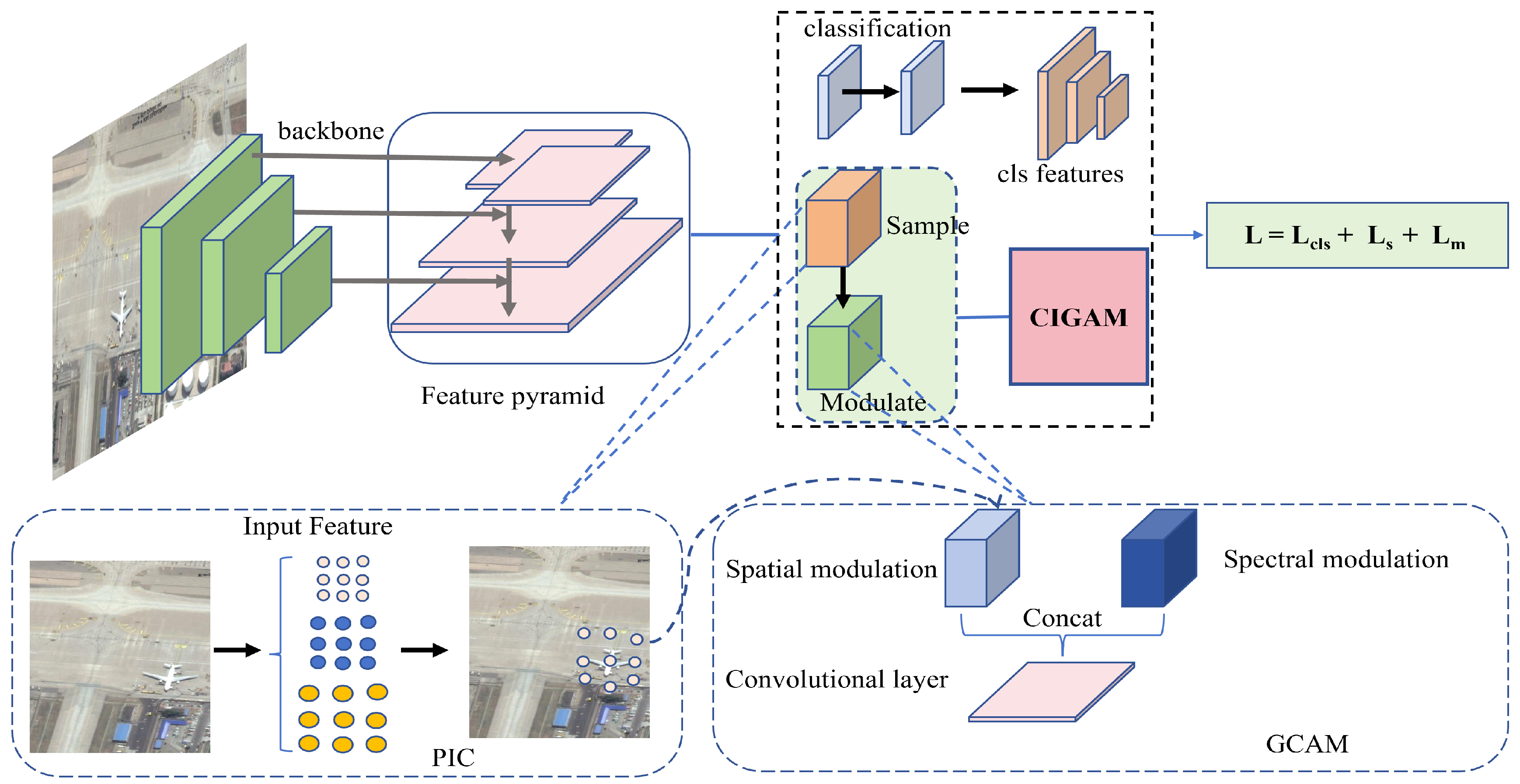

To overcome these challenges, we propose the Parallel Interleaved Convolutional Kernel Network (PICK-Net), which incorporates symmetry-aware multi-scale modeling through two core innovations. First, rather than utilizing dilated or large kernels, we employ parallel depthwise kernels arranged in mirror symmetry (Figure 1) to attain balanced receptive field coverage while minimizing background noise. Second, the Global Complementary Attention Mechanism (GCAM) utilizes bidirectional attention symmetry, optimizing channel and spatial features independently via orthogonal pathways. This addresses gradient competition while preserving rotational equivariance in feature responses. Our key contributions are as follows:

- (1) A systematic analysis uncovering the connection between progressive scale variation and the degradation of feature symmetry, specifically how limited receptive fields undermine directional completeness in large targets while introducing scale-specific noise asymmetry in small targets.

- (2) A parallel multi-scale backbone network that attains computational efficiency via symmetrical kernel grouping. Here, depthwise kernels of complementary sizes work together to capture scale-invariant features without the artifacts caused by dilation.

- (3) A collaborative framework where parallel convolutions and GCAM foster a symbiotic scale-direction balance: the former guarantees spatial symmetry through equidistant sampling, while the latter preserves channel balance via decoupled attention mechanisms.
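To make the efficiency argument behind parallel depthwise kernels more concrete, the following minimal sketch counts convolution weights for a dense large-kernel layer and for a group of parallel depthwise kernels. The channel count (64) and the kernel sizes (3, 5, 7) are illustrative assumptions, not the actual PICK-Net configuration, and the helper names are hypothetical.

```python
def dense_conv_params(channels_in, channels_out, kernel_size):
    """Weights in a standard (dense) 2D convolution, bias excluded."""
    return channels_in * channels_out * kernel_size * kernel_size


def depthwise_conv_params(channels, kernel_size):
    """Weights in a depthwise 2D convolution: one k x k filter per channel."""
    return channels * kernel_size * kernel_size


def parallel_depthwise_params(channels, kernel_sizes):
    """Total weights of several depthwise branches applied in parallel."""
    return sum(depthwise_conv_params(channels, k) for k in kernel_sizes)


# Assumed, illustrative settings (not taken from the paper).
channels = 64
kernel_sizes = (3, 5, 7)

dense_7x7 = dense_conv_params(channels, channels, 7)
parallel = parallel_depthwise_params(channels, kernel_sizes)
print(f"dense 7x7: {dense_7x7} weights, parallel depthwise: {parallel} weights")
```

Under these assumptions the three depthwise branches together need far fewer weights than a single dense 7 × 7 layer. A depthwise branch does not mix channels, so a practical block would usually add a pointwise (1 × 1) convolution, which this count leaves out.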

The rest of this paper is organized as follows: Section 2 reviews related work, Section 3 details the design of PICK-Net, Section 4 introduces the experimental settings and result analysis, and Section 5 summarizes the research findings and prospects.

4. Experiments

The experimental results are presented on two typical public datasets containing targeted objects: RSOD [36] and NWPU-VHR10 [37]. Details regarding evaluation metrics, datasets, method implementation, and experimental outcomes are discussed in the subsequent subsections.

4.1. Evaluation Metrics

In evaluating detection performance, the core challenge is to quantify detection precision and localization accuracy jointly. In this study, we adopt the average precision metric system based on Intersection over Union (IoU), which reflects the robustness of the model in complex scenarios through multi-threshold scanning and statistical modeling. We set $AP$ and $mAP$ as evaluation benchmarks. $AP$ is defined as the area enclosed by the Precision-Recall (PR) curve and the axes, and $mAP$ is the average $AP$ over all categories. $IoU_{thr}$ is the IoU threshold for determining positive and negative cases. The mathematical expressions for AP and IoU are as follows:

$$AP = \int_{0}^{1} P(R)\,dR$$

$$IoU = \frac{|B_{p} \cap B_{gt}|}{|B_{p} \cup B_{gt}|}$$

where $P(R)$ represents the PR curve, $B_{p}$ is the prediction frame, and $B_{gt}$ is the true labeling frame. During the statistical process of detection results, True Positives (TP), False Positives (FP), and False Negatives (FN) are determined as follows:

TP: the number of prediction frames that satisfy $IoU \geq IoU_{thr}$ and have the correct category, characterizing the valid targets correctly detected by the model.

FP: the number of prediction frames with $IoU < IoU_{thr}$, category-incorrect prediction frames, and duplicate detection frames, reflecting the false alarm rate.

FN: the number of true labeled frames not covered by any prediction frame, reflecting the missed-detection rate of the model.

Based on the above statistics, the precision rate ($P$) and the recall rate ($R$) are calculated as follows:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$
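The evaluation procedure described in this subsection can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than the evaluation code used in the experiments: boxes are assumed to be axis-aligned `(x1, y1, x2, y2)` tuples, matching is greedy by descending confidence for a single class, the IoU threshold defaults to 0.5, and AP is computed with all-point interpolation of the PR curve. All function names are hypothetical.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds, gts, iou_thr=0.5):
    """Greedy matching for one class.

    preds: list of (score, box); gts: list of boxes.
    Returns ([(score, is_tp), ...] sorted by descending score, FN count).
    """
    matched = set()
    flags = []
    for score, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground-truth box can be matched only once
            value = iou(box, gt)
            if value > best_iou:
                best_iou, best_j = value, j
        if best_iou >= iou_thr:
            matched.add(best_j)
            flags.append((score, True))
        else:
            flags.append((score, False))  # low IoU or duplicate detection
    return flags, len(gts) - len(matched)


def average_precision(flags, num_gt):
    """Area under the PR curve using all-point interpolation."""
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in sorted(flags, key=lambda f: f[0], reverse=True):
        tp += is_tp
        fp += not is_tp
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing as recall grows (PR envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Toy example: two ground-truth boxes, one duplicate detection.
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (1, 1, 11, 11)), (0.7, (20, 20, 30, 30))]
flags, fn = match_detections(preds, gts)
tp = sum(is_tp for _, is_tp in flags)
fp = len(flags) - tp
print("precision:", tp / (tp + fp), "recall:", tp / (tp + fn))
print("AP:", average_precision(flags, len(gts)))
```

In the toy example, the second prediction overlaps the first ground-truth box well but is counted as a false positive because that box has already been matched, which is the duplicate-detection case. mAP would then be the mean of the per-class AP values.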

4.2. Datasets

4.2.1. RSOD Dataset

The RSOD dataset is an openly accessible benchmark dataset annotated in PASCAL VOC format specifically designed for identifying typical artificial targets in RS imagery. It comprises four object categories with distinct scale and morphological variations: aircraft, playgrounds, overpasses, and storage tanks. These categories demonstrate complementary characteristics in spatial distribution, textural features, and background complexity.

Constructed through a stratified sampling strategy, the dataset features uniform image resolutions of 1024 × 1024 pixels across all categories. Annotation files include target bounding box coordinates and class labels, enabling joint research on object detection and fine-grained classification tasks. By incorporating balanced multi-scale target distributions (1–53 instances per image) and complex backgrounds, RSOD provides a standardized testbed for evaluating model generalization capabilities in RS scenarios. The category-specific image counts and instance statistics are presented in Table 1.

4.2.2. NWPU-VHR10 Dataset

The NWPU-VHR10 dataset, released by Northwestern Polytechnical University in 2014, is the first publicly available benchmark dataset for multi-class object detection in high-resolution optical remote sensing images under complex scenarios. It comprises 800 very-high-resolution (VHR) remote sensing images, including 650 target-containing images and 150 background images, with spatial resolutions of 0.5–2 m and dimensions ranging from to pixels. These images were cropped from Google Earth and the Vaihingen dataset, followed by expert manual annotation.

The dataset covers 10 categories of geospatial targets: airplane, ships, storage tanks, baseball fields, bridges, and others, totaling 3651 annotated instances stored in horizontal bounding boxes. Characterized by large-scale target variations, high background complexity, and class imbalance, NWPU-VHR10 has become a standard benchmark for evaluating deep learning models in RS object detection. It has significantly driven algorithm advancements in multi-scale feature fusion and small object detection.

This study selects RSOD and NWPU-VHR10 as experimental datasets for the following reasons. Both are widely recognized benchmarks in remote sensing object detection. Their data cover multi-source remote sensing scenarios such as aerial and satellite images, and include multi-scale targets (e.g., small vehicles, buildings, and large airport runways) and complex backgrounds (e.g., cloud occlusion and terrain interference). In addition, both provide complete annotations and official public download channels, ensuring accessibility and facilitating the reproduction and horizontal comparison of research results. Other datasets, such as DOTA (for rotated object detection) and HRRSD (for high-resolution remote sensing scenarios), have similar research value. However, because this study focuses on verifying the performance of general object detection, the target categories of RSOD and NWPU-VHR10 (e.g., aircraft, ships, vehicles) better match its core needs, and these two datasets were therefore given priority.

The selected datasets have certain limitations. RSOD contains only four target categories (aircraft, playground, overpass, storage tank), so its category coverage is relatively narrow. Although NWPU-VHR10 includes 10 target categories, the sample size of some categories (e.g., aircraft, vehicles) is significantly larger than that of others (e.g., stadium, port), leading to class imbalance. We adopted corresponding measures in the experimental design: for class imbalance, a weighted cross-entropy loss was used to balance the weights of positive/negative samples and of different categories; to address the narrow category coverage, in subsequent work we will extend the research to multi-category datasets such as DOTA and HRRSD to further verify the generalization ability of the model.

4.3. Implementation Details

To ensure fairness, all comparative and ablation experiments were conducted in the same environment: the Linux Ubuntu 16.04 operating system, the PyTorch 2.0.1 framework for training and prediction, and an NVIDIA GeForce RTX 4080Ti GPU (Santa Clara, CA, USA). Considering the number of model parameters, hardware conditions, and training speed, and after verification through multiple rounds of experiments, the final training strategy was determined as follows: the batch size was set to 8, the number of training epochs was set to 300, the Stochastic Gradient Descent (SGD) optimizer was adopted, the maximum learning rate was 0.01, the minimum learning rate was 0.001, and the cosine annealing strategy was used for learning rate decay.

The processing strategies for the RSOD and NWPU-VHR10 datasets were as follows. For image scaling, an adaptive scaling mechanism was employed: images in the RSOD dataset were resized to 512 × 512 pixels and those in the NWPU-VHR10 dataset to 640 × 640 pixels, with the original aspect ratio maintained and blank areas filled with the mean value to avoid target distortion. Data augmentation included geometric transformations (random horizontal flipping with a probability of 0.5, random vertical flipping with a probability of 0.3, random rotation within the range of −15° to 15°, and random cropping with an area ratio of 0.7–1.0), pixel adjustments (random changes in brightness of ±15% and contrast of ±20%), and noise injection (Gaussian noise with $\sigma$ = 0.01 added to 10% of the training samples).
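The learning rate schedule described above can be sketched as a single-cycle cosine annealing curve between the stated maximum (0.01) and minimum (0.001) over 300 training rounds. The per-round update and the absence of warm-up or restarts are assumptions; the function name is hypothetical.

```python
import math


def cosine_annealing_lr(epoch, total_epochs=300, lr_max=0.01, lr_min=0.001):
    """Learning rate at a 0-based `epoch` under single-cycle cosine annealing."""
    progress = min(max(epoch / total_epochs, 0.0), 1.0)  # clamp to [0, 1]
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


for epoch in (0, 75, 150, 225, 300):
    print(epoch, round(cosine_annealing_lr(epoch), 6))
```

The rate starts at the maximum, passes through the midpoint of the two bounds halfway through training, and reaches the minimum at the end, so the largest steps happen early and the final rounds fine-tune with small updates.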

For data partitioning, the official recommended proportions of the datasets were followed: the RSOD dataset was divided into training and test sets at a ratio of 8:2, and the NWPU-VHR10 dataset was divided into training and test sets at a ratio of 7:3, with consistent distribution of each category ensured in the partitioned datasets. For label processing, the bounding box annotations of the original datasets were uniformly converted to the COCO format for easy model parsing, and a small number of samples with ambiguous annotations in the NWPU-VHR10 dataset were manually corrected to ensure annotation accuracy.
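A category-consistent split such as the one described above can be sketched as follows. This is a minimal illustration that assumes each image is assigned to one dominant category, which is a simplification for images containing several classes; the function name, the fixed seed, and the toy identifiers are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(samples, train_ratio, seed=0):
    """Split (image_id, category) pairs so each category keeps `train_ratio`.

    Returns (train_ids, test_ids).
    """
    by_class = defaultdict(list)
    for image_id, category in samples:
        by_class[category].append(image_id)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train_ids, test_ids = [], []
    for category in sorted(by_class):
        ids = by_class[category]
        rng.shuffle(ids)
        cut = round(len(ids) * train_ratio)
        train_ids.extend(ids[:cut])
        test_ids.extend(ids[cut:])
    return train_ids, test_ids


# Toy example with an 8:2 ratio.
samples = [(f"aircraft_{i}", "aircraft") for i in range(10)]
samples += [(f"tank_{i}", "storage tank") for i in range(5)]
train_ids, test_ids = stratified_split(samples, train_ratio=0.8)
print(len(train_ids), "training images,", len(test_ids), "test images")
```

Splitting within each category, rather than over the pooled image list, keeps rare categories from ending up entirely in one partition.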

4.4. Ablation Studies

4.4.1. Evaluation of Different Components

We performed ablation experiments on the RSOD and NWPU-VHR10 datasets to validate the performance of the proposed PIC and GCAM.

Table 2 shows the results of the baseline model on the NWPU-VHR10 and RSOD datasets with and without the proposed PIC and GCAM. On the NWPU-VHR10 dataset, the baseline model achieves only 82.15% mAP, which we attribute to the spatial redundancy and channel coupling of traditional dense convolutional features. On the one hand, a large amount of computation is spent on background regions; on the other hand, the strong correlation among channel features submerges key texture information, which degrades the prediction accuracy of the network's regressors and classifiers. Adding the proposed PIC improves the detector by 2.14%, indicating that, in remote sensing target detection, covering receptive fields of different scales through dynamic sparse sampling improves the capture of multi-resolution features and therefore the detection performance. When GCAM is added to the network, its channel-space dual-path independent modulation mechanism eliminates the gradient competition induced by cross-dimensional feature coupling, thus enhancing the robustness of gradient optimization under convolutional kernel deformation. Using GCAM alone improves detector performance by 1.81%, which suggests that shallow feature predictions help guide the prediction of deeper feature parameters. Together, PIC and GCAM improve the performance of PICK-Net by 2.75% compared to the baseline model, achieving higher accuracy in target detection.

On the RSOD dataset, we observe consistent experimental results that align with our findings on the NWPU-VHR10 dataset. As illustrated in Table 2, PICK-Net achieves superior performance when both proposed modules are integrated compared to configurations utilizing only a single module. Specifically, the Parallel Interleaved Convolution (PIC) module enables the detection network to construct a multi-level adaptive receptive field hierarchy. This mechanism facilitates effective aggregation of cross-scale features in remote sensing target detection scenarios, thereby mitigating the issue of inaccurate parameter prediction in existing methods caused by spatial redundancy in feature representation.

Furthermore, the Global Complementary Attention Mechanism (GCAM) addresses gradient conflicts induced by cross-dimensional feature coupling through independent modulation of feature dimensions. By decoupling channel-wise importance weighting and spatial deformation confidence estimation, GCAM significantly enhances the stability of gradient propagation during deformable convolution kernel optimization. This improvement enhances the adaptability to geometric deformations of targets, effectively alleviating detection accuracy degradation caused by spatial-channel feature entanglement. Consequently, PICK-Net achieves a competitive detection performance of 92.2% mAP on the RSOD dataset, validating the synergistic effectiveness of the proposed modules.

4.4.2. Evaluation of Parameters Inside Modules

To verify the cascading optimization effect of the Complementary Interleaved Global Attention Module (CIGAM), we vary the number of CIGAM modules and conduct experiments on the NWPU-VHR10 and RSOD datasets.

Table 3 shows that, as the feature decoupling units are stacked level by level, the detection accuracy of PICK-Net first increases and then decreases, reaching its highest value of 83.9% with three units, which is 2.22% higher than that of a single-layer branch. This verifies that CIGAM, by decoupling the optimization paths of the feature dimensions, allows the high-purity features produced by earlier units to guide subsequent layers toward more accurate spatial localization. However, performance starts to decay when more than three layers are stacked, for two reasons. First, the decoupling modulation process of CIGAM is sensitive to feature noise, and because the layers are optimized independently, error signals propagate step by step along the decoupling path: once the prediction of one unit is wrong, subsequent units can hardly obtain correct results. Second, an overly deep decoupling stack increases redundancy in the decoupling parameter space, and the feature deviation generated by some units during dynamic sparse sampling is difficult for the subsequent modulation process to calibrate. Therefore, we select the three-layer CIGAM as the optimal configuration. As Table 3 also shows, the controlled experiments on the RSOD dataset lead to the same conclusion.

4.5. Comparisons with State-of-the-Art Detectors

To validate the overall efficacy of our method, seven types of cutting-edge detection models covering convolutional, Transformer, and hybrid architectures are selected as benchmarks, including the lightweight model EfficientNet, the YOLO family represented by YOLOv4 [38] and YOLOv8s [39], the Transformer architecture DETR and its improved version, and the feature fusion models SwinT and DetectoRS [4]. All models were evaluated under the same conditions on the RSOD dataset using their official preset parameters, where the input sizes of EfficientNet, DETR, SwinT, DetectoRS, and DAB-DETR were uniformly

pixels, and the rest of the models were kept at

pixels resolution.

4.5.1. Results on RSOD

The experimental results on the RSOD dataset (Table 4) show that our method offers a clear advantage in the accuracy–efficiency balance. In terms of detection accuracy, it reaches 92.2% mAP, which is 0.26% and 0.9% higher than that of DAB-DETR and YOLOv8s, respectively, confirming the strong adaptability of PICK-Net to complex scenes. In terms of computational efficiency, the number of model parameters is compressed to 12.5 M, pushing the performance boundary of existing lightweight detection models.

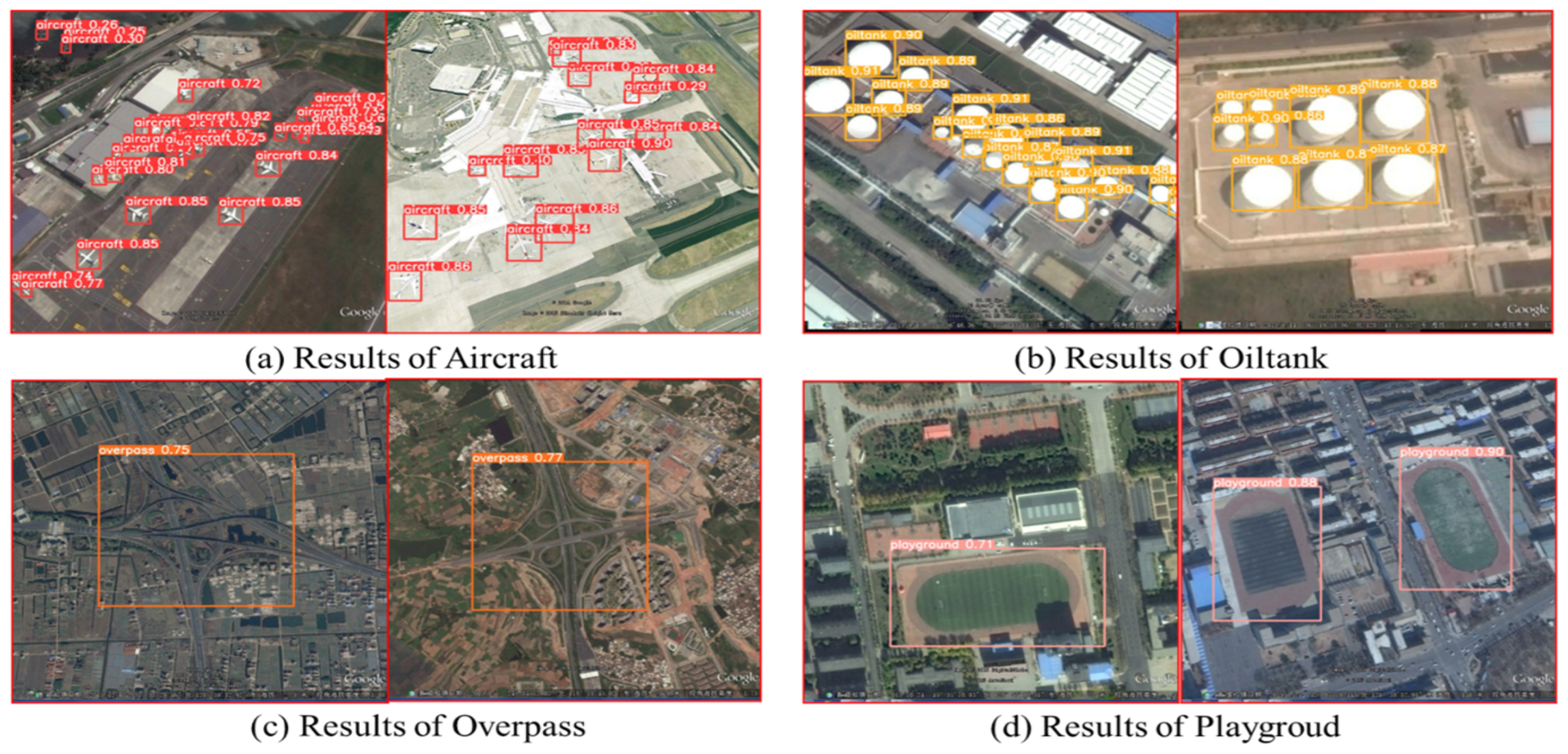

The visual detection results in Figure 2 show excellent performance on the four typical types of remote sensing targets. For example, for the aircraft detection in Figure 2a, the algorithm accurately distinguishes neighboring aircraft with very small spacing in a dense apron scene, which verifies the ability of deformable convolution to capture local features.

The oil storage tank detection verifies the adaptability of the algorithm to multi-scale targets. As shown in Figure 2b, for oil storage tanks spanning 10–50 m in diameter, the proposed multi-scale feature fusion mechanism reduces the standard deviation of the detection rate across targets of different sizes.

The overpass detection illustrates the ability to perceive structures in complex contexts. As shown in Figure 2c, the proposed algorithm accurately identifies the shape features of the overpass, effectively distinguishes the girder structure from the background road texture, and correctly extracts the overpass from the similarly colored background.

Playground detection shows strong discriminative power under the shape regularity constraint. For the 400 m standard running track, whose color features are highly similar to those of the surrounding roofs, the algorithm reduces the false alarm rate significantly.

4.5.2. Results on NWPU-VHR10

On the NWPU-VHR10 dataset, our model achieves an mAP of 84.90%, surpassing the other advanced detectors (Table 5).

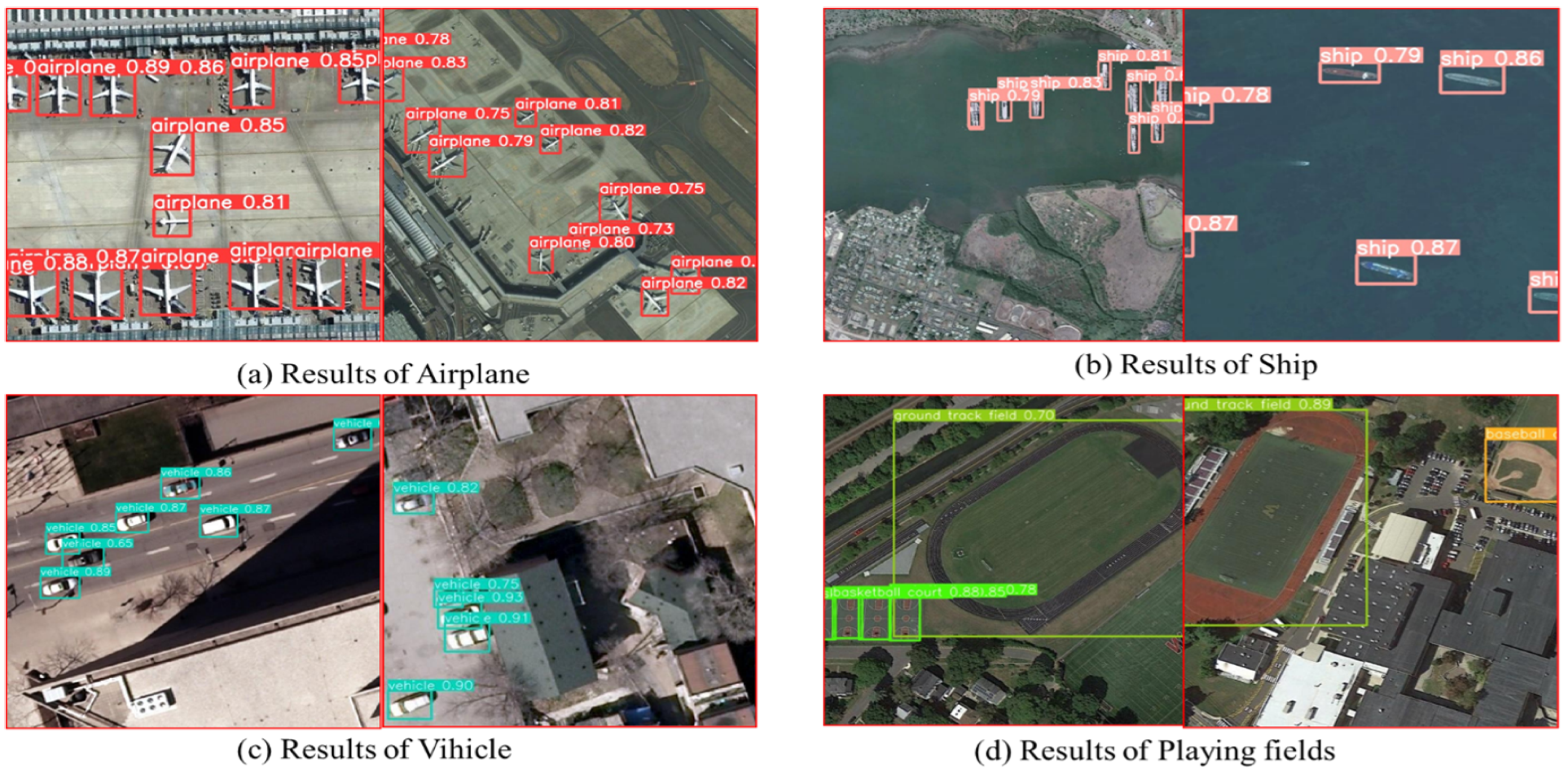

The visualization results (Figure 3) further confirm the strong performance across varying scenarios. In Figure 3a, the airplane targets are small and densely parked at the airport, yet PICK-Net still detects them accurately. In Figure 3b, for ship targets with extreme aspect ratios and different orientations, the bounding boxes generated by PICK-Net align well with the targets, containing the full target regions without covering much of the background. In Figure 3c, the car targets have color and shape features similar to the water tank in the background, but PICK-Net produces no false detections, indicating that it can identify targets from the context around them. In Figure 3d, despite the large size difference between the two types of targets, PICK-Net shows good robustness and accurately detects both the athletic field and the basketball court.

4.6. Robustness Verification

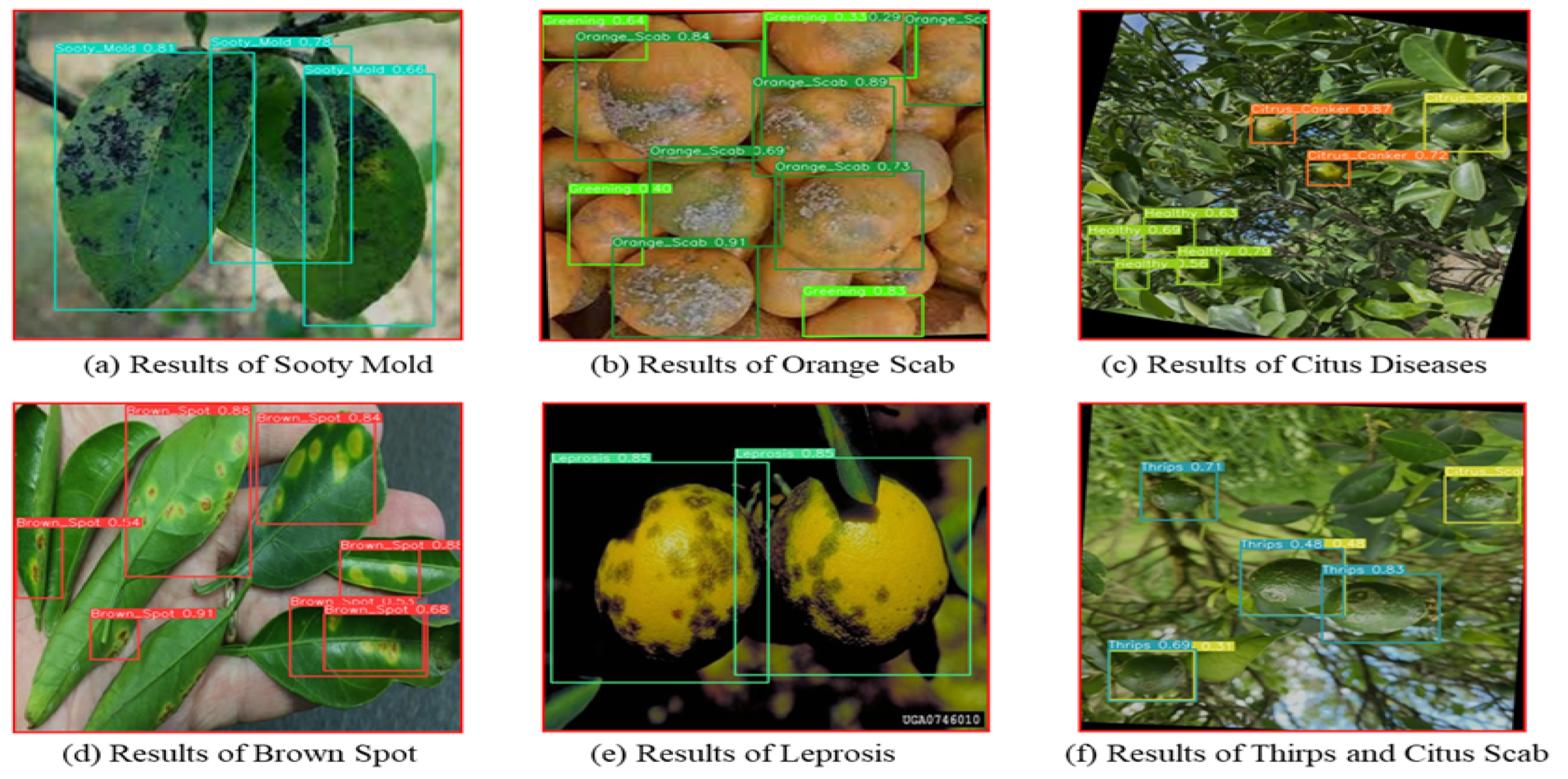

The detection of agricultural diseases and pests is an important extension of remote sensing technology in precision agriculture. Its core challenges (small targets and complex backgrounds) closely match those of remote sensing target detection. For instance, satellite remote sensing can acquire large-scale crop images, on which the proposed PICK-Net can perform fine-grained detection of diseases and pests. The proposed algorithm is also effective in orange pest detection tasks (Figure 4). Specifically, in occlusion scenarios (e.g., Figure 4a), even when leaves overlap, the algorithm can still accurately locate pest-infested areas through its feature extraction and target inference mechanisms. By integrating contextual associations and deep semantic feature analysis, it demonstrates strong robustness under complex spatial relationships.

Regarding illumination variation, the algorithm mitigates interference from intense light, highlight regions, and low-light environments during feature extraction via illumination normalization and a multi-scale feature fusion strategy. This ensures stable capture of pest features across varying light conditions, reflecting its adaptability to complex lighting environments. In Figure 4f, when handling complex background interference, the algorithm extracts fine-grained features unique to pests, such as texture and color, and effectively distinguishes targets from similar background elements. This avoids misdetections caused by redundant background information and highlights its capability for target discrimination in complex backgrounds. Overall, the proposed algorithm not only performs effectively in traditional remote sensing target detection tasks but also generalizes well to crop pest detection scenarios, exhibiting strong robustness.