Fuzzy Shadowed Support Vector Machine for Bankruptcy Prediction

Abstract

1. Introduction

1. We propose a comprehensive framework of the Fuzzy Support Vector Machine (Fuzzy SVM) using a diverse range of membership functions, including geometric, density-based, and entropy-driven approaches, to quantify the uncertainty of individual samples and enhance model robustness in imbalanced data scenarios.

2. We introduce a novel Shadowed Support Vector Machine (Shadowed SVM) approach that employs a multi-metric fusion mechanism to define shadow regions near the decision boundary. This is achieved through a combination of geometric distances and margin-based metrics, followed by a shadowed combination to control the influence of uncertain instances.

3. We empirically observe that Fuzzy SVM excels in achieving high overall accuracy, while Shadowed SVM provides superior performance in handling data imbalance. Motivated by these complementary strengths, we propose a novel hybrid model, the Fuzzy Shadowed Support Vector Machine (Fuzzy Shadowed-SVM), which combines fuzzy membership weighting with shadowed instance discounting to achieve both high accuracy and class balance.

4. To validate the robustness and statistical significance of the proposed models, we conduct non-parametric (Wilcoxon signed-rank test) and parametric (paired Student’s t-test) analyses on multiple bankruptcy datasets. The results confirm that the performance improvements achieved by the proposed Fuzzy Shadowed-SVM are consistent across datasets and statistically significant.

2. Literature Review

3. Fuzzy Shadowed Support Vector Machines: Proposed Approach

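- Gaussian Radial Basis Function (RBF) Kernel: $K(\mathbf{x}_i,\mathbf{x}_j)=\exp\left(-\gamma\|\mathbf{x}_i-\mathbf{x}_j\|^2\right)$, where $\gamma>0$ controls the kernel width;

- Polynomial Kernel: $K(\mathbf{x}_i,\mathbf{x}_j)=\left(\mathbf{x}_i^\top\mathbf{x}_j+c\right)^d$, with degree $d$ and offset $c\ge 0$.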

- Their resistance to overfitting;

- Their flexibility through kernel selection;

- Their effectiveness in high-dimensional spaces.

- Fuzzy Support Vector Machine (Fuzzy SVM);

- Shadowed Support Vector Machine (Shadowed SVM);

- Fuzzy Shadowed-SVM.

- Reducing the impact of outliers;

- Emphasizing firms on the brink of bankruptcy;

- Attenuating bias toward the majority class.

- Creates a fuzzy boundary between classes;

- Enhances the detection of critical regions;

- Reduces the influence of weakly informative examples.
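A minimal NumPy sketch of the RBF and polynomial kernels named at the start of this section, evaluated on whole data matrices at once. The parameter names `gamma`, `degree` and `coef0` mirror scikit-learn's `SVC` conventions and are assumptions; the kernel parameters used in the experiments are not given at this point.

```python
import numpy as np


def rbf_kernel(A, B, gamma=0.5):
    """Gaussian RBF kernel matrix: K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    sq_dists = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    # Clip tiny negative values caused by floating-point cancellation.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def polynomial_kernel(A, B, degree=3, coef0=1.0):
    """Polynomial kernel matrix: K[i, j] = (A[i] . B[j] + coef0) ** degree."""
    return (A @ B.T + coef0) ** degree


X = np.array([[0.0, 0.0], [1.0, 1.0]])
K_rbf = rbf_kernel(X, X)                    # diagonal entries equal 1
K_poly = polynomial_kernel(X, X, degree=2)
```

The RBF value depends only on the distance between two points, which is why it also reappears below as a distance-to-center membership function.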

3.1. Fuzzy Support Vector Machine (Fuzzy SVM)

- Reduction of the effect of outliers;

- Emphasis on borderline companies near financial distress;

- Mitigation of bias toward the majority class.

- 1.

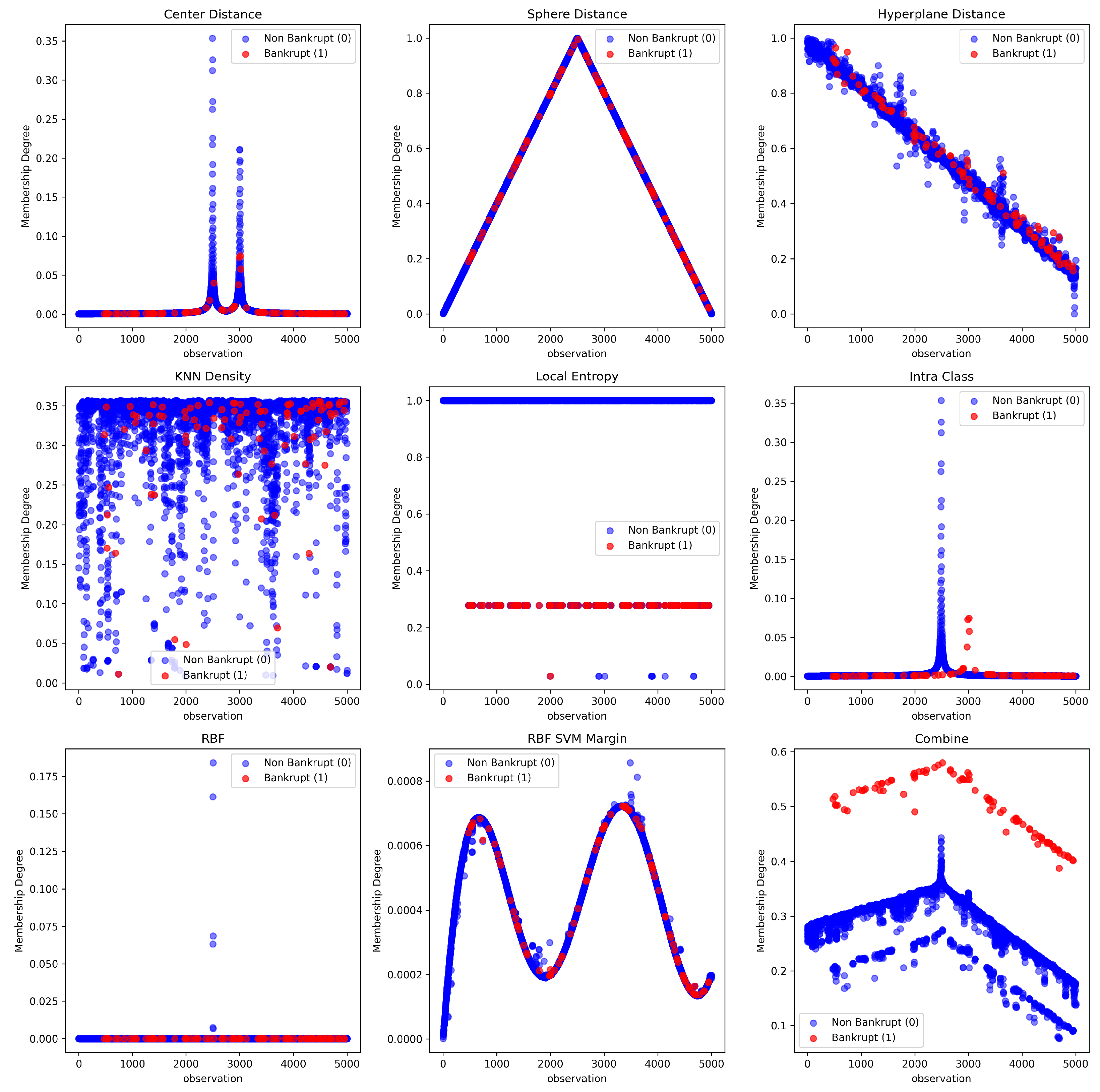

- Center Distance-Based Membership [28]This function evaluates the membership of a sample based on its Euclidean distance to the nearest class center.For minority class samples, the membership is amplified:Description:Samples closer to any class center are assigned higher membership. Minority class instances are emphasized by doubling their score.

- 2.

- Global Sphere-Based Membership [29]This function defines a membership value based on the distance to the global center of all samples.where is the global centroid and is the radius.Description:Points farther from the center receive lower membership. Minority samples get amplified membership values.

- 3.

- Hyperplane Distance Membership [30]This function calculates membership values based on the distance to the decision hyperplane of a linear SVM.Description:Samples closer to the decision boundary receive higher scores. Minority class points have doubled membership.

- 4.

- Local Density-Based Membership (kNN) [31]This method uses the average distance to k-nearest neighbors to assess local density.Description:Samples in dense regions (smaller average distances) get higher membership values.

- 5.

- Local Entropy-Based Membership [30]Using a probabilistic k-NN classifier, this function computes local class entropy.Description:Samples with high uncertainty (high entropy) receive lower membership values.

- 6.

- Intra-Class Distance Membership [32]This function measures the distance of a sample to the center of its own class.Description:Points that are closer to the center of their own class get higher membership scores.

- 7.

- RBF-Based Membership [33]This method uses a Gaussian radial basis function to assign membership based on distance to the global center.Description:Samples near the center receive values close to 1; distant ones decay exponentially.

- 8.

- RBF-SVM Margin Membership [34]This function derives membership based on the confidence margin from an RBF-kernel SVM.where is the decision function of the RBF-SVM.Description:Samples close to the RBF-SVM boundary have high membership scores, capturing uncertainty near the decision margin.

- 9.

- Combined Membership FunctionA weighted aggregation of all eight membership functions is proposed asDescription:This function enables flexible integration of various membership strategies with user-defined weights for enhanced generalization and robustness in imbalanced scenarios.
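The following NumPy sketch implements four of the membership functions above (center distance, kNN density, local entropy and the weighted combination) under stated assumptions: distances are mapped to memberships with the normalization 1 − d/(max d + δ), doubled minority scores are clipped to 1, and the combination is a normalized weighted average. These choices and all function names are illustrative, not the paper's exact formulas.

```python
import numpy as np


def _invert(d, delta=1e-6):
    """Map non-negative distances to memberships: small distance -> value near 1 (assumed form)."""
    return 1.0 - d / (d.max() + delta)


def center_distance_membership(X, y, minority_label=1):
    """Distance to the nearest class center; minority scores doubled, then clipped to 1 (assumption)."""
    centers = np.stack([X[y == c].mean(axis=0) for c in np.unique(y)])
    d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2).min(axis=1)
    mu = _invert(d)
    mu[y == minority_label] *= 2.0
    return np.clip(mu, 0.0, 1.0)


def knn_density_membership(X, k=3):
    """Mean distance to the k nearest neighbours (self excluded); dense regions score higher."""
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    knn_dist = np.sort(D, axis=1)[:, 1:k + 1]     # column 0 is the zero self-distance
    return _invert(knn_dist.mean(axis=1))


def local_entropy_membership(X, y, k=3):
    """1 - normalized class entropy among the k nearest points (self included, as when
    a k-NN classifier is queried on its own training data)."""
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    labels = y[np.argsort(D, axis=1, kind="stable")[:, :k]]
    classes = np.unique(y)
    p = np.stack([(labels == c).mean(axis=1) for c in classes], axis=1)
    plogp = np.where(p > 0, p * np.log(np.where(p > 0, p, 1.0)), 0.0)
    return 1.0 + plogp.sum(axis=1) / np.log(len(classes))


def combined_membership(memberships, weights):
    """Normalized weighted average of several membership vectors (user-defined weights)."""
    M = np.vstack(memberships)
    w = np.asarray(weights, dtype=float)
    return w @ M / w.sum()


X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [10.0, 10.0]])
y = np.array([0, 0, 0, 1, 1])
mu_center = center_distance_membership(X, y)
mu_density = knn_density_membership(X, k=3)
mu_entropy = local_entropy_membership(X, y, k=3)
mu_combined = combined_membership([mu_center, mu_density, mu_entropy], [1.0, 1.0, 2.0])
```

In this toy example the isolated point (10, 10) receives the lowest density membership, and points whose neighbourhood contains a single class receive an entropy membership of 1.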

3.2. Shadowed Support Vector Machine (Shadowed SVM)

- Establishes a fuzzy boundary between classes;

- Enhances the detection of critical zones;

- Reduces the influence of uninformative examples.

- Full membership;

- Non-membership;

- Shadowed region.

- Distance to Class Centers: This method calculates the Euclidean distance of each instance to its respective class centroid. The inverse of the distance is normalized and passed to the shadowed conversion. This ensures that points near their class center (representing prototypical examples) receive higher importance.

- Distance to Global Sphere Center: Here, we compute distances to the global mean vector and normalize them. Instances close to the global center are assumed to be more representative and are therefore favored.

- Distance to Linear SVM Hyperplane: We train a linear SVM and use the absolute value of its decision function as a proxy for confidence. These values are normalized and inverted, assigning higher weights to instances closer to the decision boundary.

- K-Nearest Neighbors Density: This approach uses the average distance to the k nearest neighbors to estimate local density. High-density points are considered more informative and are therefore promoted.

- Local Entropy of Class Distribution: By training a KNN classifier, we compute the class distribution entropy in the neighborhood of each point. Lower entropy values indicate higher confidence, which translates into higher weights.

- Intra-Class Compactness: This function assesses each instance’s distance to its own class centroid. The inverse of this distance measures intra-class compactness, helping to down-weight class outliers.

- Radial Basis Function Kernel: We define a Gaussian RBF centered on the global dataset mean. Points near the center receive higher RBF values and are treated as more central to the learning task.

- RBF-SVM Margin: An RBF-kernel SVM is trained, and its margin is used as a measure of importance. Instances near the margin are prioritized, reflecting their critical role in determining the separating surface.

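- Minority class boosting mechanism: After the initial weights are computed, an explicit adjustment is applied to enhance minority class representation:
  - If a minority instance falls in the non-membership region (zero weight), it is assigned a nonzero weight;
  - If a minority instance falls in the shadowed region, it is assigned full weight.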

This ensures that no minority class instance is completely ignored and that minority instances with ambiguous status are treated as fully informative, which is crucial in highly skewed scenarios.

- Multi-Metric Fusion via Shadowed Combination: The function shadowed_combined aggregates all eight previously described metrics using a weighted average:

$$\bar{s}_i=\frac{\sum_{j=1}^{8}\lambda_j\,s_{ij}}{\sum_{j=1}^{8}\lambda_j},$$

where $s_{ij}$ is the shadowed membership of instance $i$ under metric $j$ and $\lambda_j$ is the corresponding metric weight.

This Shadowed SVM extends classical SVMs by embedding granular soft reasoning into the training process. Key advantages include the following:

- Data integrity is preserved; no synthetic samples are generated.

- Minority class enhancement is performed selectively and contextually.

- The methodology generalizes to any learning algorithm that supports instance weighting.
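A minimal NumPy sketch of the shadowed pipeline described above: a three-way thresholding of each metric's membership vector in the spirit of Pedrycz's shadowed sets [35], the minority boosting rules, and the weighted-average fusion. The thresholds `alpha` and `beta`, the `floor` value given to minority instances that would otherwise receive zero weight, and the example inputs are assumptions, since the text does not state them; `shadowed_combined` reuses the name from the text, but its body here is only our reading of the weighted average.

```python
import numpy as np


def shadowed_transform(mu, alpha=0.2, beta=0.8):
    """Three-way split: mu <= alpha -> 0, mu >= beta -> 1, otherwise shadowed (value kept)."""
    mu = np.asarray(mu, dtype=float)
    shadow = (mu > alpha) & (mu < beta)
    s = np.where(mu <= alpha, 0.0, np.where(mu >= beta, 1.0, mu))
    return s, shadow


def boost_minority(s, shadow, y, minority_label=1, floor=0.5):
    """Minority rules: zero weight -> floor (hypothetical value), shadowed -> 1."""
    s = np.asarray(s, dtype=float).copy()
    minority = np.asarray(y) == minority_label
    s[minority & (s == 0.0)] = floor      # no minority instance is completely ignored
    s[minority & shadow] = 1.0            # ambiguous minority instances become fully informative
    return s


def shadowed_combined(S, metric_weights):
    """Weighted average: S[i, j] is the shadowed membership of instance i under metric j."""
    S = np.asarray(S, dtype=float)
    lam = np.asarray(metric_weights, dtype=float)
    return S @ lam / lam.sum()


y = np.array([0, 1, 1, 1])
# Two hypothetical metrics (columns) evaluated on four instances.
mu = np.array([[0.10, 0.90],
               [0.05, 0.50],
               [0.50, 0.95],
               [0.85, 0.15]])
S = np.column_stack([boost_minority(*shadowed_transform(mu[:, j]), y) for j in range(mu.shape[1])])
weights = shadowed_combined(S, metric_weights=[3.0, 1.0])
```

The fused vector can then be passed as per-sample weights to any learner that accepts them, for example through the `sample_weight` argument of scikit-learn's `SVC.fit`.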

3.3. Fuzzy Shadowed SVM

- Reduced overfitting on uncertain cases;

- Explicit decision-making in borderline situations.

- Positive region: membership is set to 1; these points have maximum influence on the decision boundary.

- Negative region: membership is set to 0; these points are effectively excluded from the solution (treated as noise).

- Shadowed region: membership remains uncertain in (0,1), and the influence of these points is proportional to their fuzzy membership degree.

- Enhances minority class contribution via fuzzy memberships;

- Reduces overfitting and misclassification in ambiguous zones through shadowed granulation.

1. Fuzzy Membership Calculation: Assign fuzzy memberships to each instance using distance-based, entropy-based, or density-based functions.

2. Shadowed Transformation: Map each fuzzy membership to the positive, negative, or shadowed region defined above.

3. Modified SVM Training: Use the transformed fuzzy-shadowed weights in the SVM loss function to penalize misclassifications in proportion to sample certainty.
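Assuming the FS-SVM follows the standard fuzzy SVM formulation, step 3 amounts to scaling each slack penalty by the instance's fuzzy-shadowed weight $s_i \in [0,1]$, with labels $y_i \in \{-1,+1\}$ and feature map $\phi$:

$$
\min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\ \frac{1}{2}\|\mathbf{w}\|^2 + C\sum_{i=1}^{n} s_i\,\xi_i
\quad \text{s.t.}\quad y_i\left(\mathbf{w}^\top\phi(\mathbf{x}_i)+b\right)\ge 1-\xi_i,\quad \xi_i\ge 0 .
$$

The dual then differs from the classical SVM dual only in its box constraints, $0 \le \alpha_i \le s_i C$. Instances in the negative region ($s_i = 0$) are therefore forced to $\alpha_i = 0$ and cannot act as support vectors, while positive-region instances ($s_i = 1$) keep the full penalty $C$.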

- Minority Emphasis: The fuzzy component ensures greater influence of rare class examples in decision boundary construction.

- Uncertainty Management: Shadowed sets allow safe treatment of boundary points by avoiding hard decisions for uncertain data.

- Performance Gains: Improved G-mean, Recall, and F1-score, yielding a better trade-off between sensitivity and specificity.

- Adaptability: The two shadow thresholds offer flexibility in managing granularity and uncertainty.

- Preprocessing: Normalize data and compute the imbalance ratio.

- Fuzzy Memberships: Use functions based on distance to class center or local density.

- Parameter Selection: Tune the two shadow thresholds and the regularization parameter C using cross-validation.

- Evaluation Metrics: Use G-mean, AUC-ROC, Recall, and F1-score rather than accuracy alone.

- Fuzzy Sets: Fuzzy logic assigns each training instance a degree of membership to its class, reflecting the confidence or representativeness of that instance. High membership indicates a central or prototypical instance; low membership reflects ambiguity or atypicality.

- Shadowed Sets: Introduced to model vague regions in uncertain environments, shadowed sets define a shadow region around the decision boundary where class labels are unreliable. In this model, instances in this margin are down-weighted to reduce their impact during training, recognizing their inherent ambiguity.
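The preprocessing step listed among the practical guidelines above (normalizing the data and computing the imbalance ratio) can be sketched as follows. Min-max scaling to [0, 1] is an assumption, since the text only says "normalize", and the imbalance ratio is taken as the majority-class count divided by the minority-class count, a common convention.

```python
import numpy as np


def min_max_normalize(X):
    """Scale each feature to [0, 1]; constant features are mapped to 0."""
    X = np.asarray(X, dtype=float)
    lo, hi = X.min(axis=0), X.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)   # avoid division by zero for constant columns
    return (X - lo) / span


def imbalance_ratio(y):
    """Majority-class count divided by minority-class count."""
    _, counts = np.unique(y, return_counts=True)
    return counts.max() / counts.min()


X_scaled = min_max_normalize([[1.0, 5.0], [3.0, 5.0], [2.0, 5.0]])
ir = imbalance_ratio([0, 0, 0, 0, 1])
```

In a train/test protocol, the minima and maxima should be computed on the training split only and then reused to scale the test split.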

1. Computes fuzzy membership degrees for all training samples using multiple geometric and statistical criteria;

2. Identifies shadow regions by evaluating the distance of instances from the SVM decision boundary;

3. Adjusts sample weights by combining fuzzy memberships and a shadow mask, reducing the influence of uncertain instances and enhancing minority class detection.

- Center Distance: Membership is inversely proportional to the distance to the class center.

- Sphere Distance: Membership decreases linearly with the distance to the enclosing hypersphere.

- Hyperplane Distance: Membership is proportional to the absolute distance to a preliminary SVM hyperplane.

- kNN Density and Local Entropy: Measures local structure and class purity via neighborhood statistics.

- Intra-Class Cohesion: Membership is inversely related to within-class dispersion.

- RBF Kernel and SVM Margin: Membership decays exponentially with Euclidean or SVM margin distance.

- C: SVM regularization parameter;

- RBF kernel width;

- Shadow threshold;

- Shadow weight;

- Membership method (e.g., “center_distance”, “svm_margin”).

- It models instance uncertainty on two levels: class confidence (fuzzy membership) and ambiguity near the decision boundary (shadow set).

- It provides a flexible and extensible framework with multiple interpretable membership functions.

- It introduces region-based instance discounting directly into kernel-based classifiers.

- It maintains interpretability, as the weighting mechanisms are derived from geometric or statistical properties of the data.

- It improves performance on minority class recognition, often reflected in F1-score, G-mean, and AUC-ROC.

Algorithm 1. Fuzzy Shadowed SVM Training Algorithm

Require: Dataset

Ensure: Trained FS-SVM model
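The three FS-SVM steps described in this section (fuzzy memberships, shadowed transformation, weighted SVM training) can be sketched end to end with scikit-learn's `SVC`, whose `sample_weight` argument rescales C per sample. This is a minimal illustration, not the authors' Algorithm 1: the single center-distance membership, the thresholds `alpha` and `beta`, their defaults, the synthetic data, and all function names are assumptions.

```python
import numpy as np
from sklearn.svm import SVC


def center_membership(X, y, minority_label=1, delta=1e-6):
    """Fuzzy membership from the distance to the nearest class center (assumed form)."""
    centers = np.stack([X[y == c].mean(axis=0) for c in np.unique(y)])
    d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2).min(axis=1)
    mu = 1.0 - d / (d.max() + delta)
    mu[y == minority_label] *= 2.0            # minority emphasis, clipped to 1 below
    return np.clip(mu, 0.0, 1.0)


def fuzzy_shadowed_weights(mu, alpha=0.2, beta=0.8):
    """Shadowed granulation: 0 if mu <= alpha, 1 if mu >= beta, fuzzy degree kept in between."""
    return np.where(mu <= alpha, 0.0, np.where(mu >= beta, 1.0, mu))


def train_fs_svm(X, y, C=1.0, gamma="scale", alpha=0.2, beta=0.8):
    mu = center_membership(X, y)                      # step 1: fuzzy memberships
    w = fuzzy_shadowed_weights(mu, alpha, beta)       # step 2: shadowed transformation
    model = SVC(C=C, kernel="rbf", gamma=gamma)
    model.fit(X, y, sample_weight=w)                  # step 3: effective C_i = w_i * C
    return model, w


# Hypothetical imbalanced data: 40 majority and 10 minority points.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2.0, 0.5, size=(40, 2)), rng.normal(2.0, 0.5, size=(10, 2))])
y = np.array([0] * 40 + [1] * 10)
model, w = train_fs_svm(X, y)
```

Passing weights through `sample_weight` keeps the standard SVM solver unchanged, which is consistent with the complexity analysis below: only the per-sample penalty bounds differ.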

Computational Complexity Analysis of Fuzzy Shadowed SVM

1. Fuzzy membership computation: $O(n\,d\,m)$ for $n$ samples, $d$ features, and $m$ membership functions, as each feature of every sample is evaluated against the membership functions.

2. Shadowed set assignment: $O(n)$, to allocate each sample to the shadowed, core, or boundary region.

3. SVM optimization: Using a quadratic programming solver, a classical SVM requires $O(n^3)$ operations in the worst case. The FS-SVM maintains the same order, with slightly higher constants due to the additional fuzzy-shadowed weighting.

4. Experimental Studies

- 1.

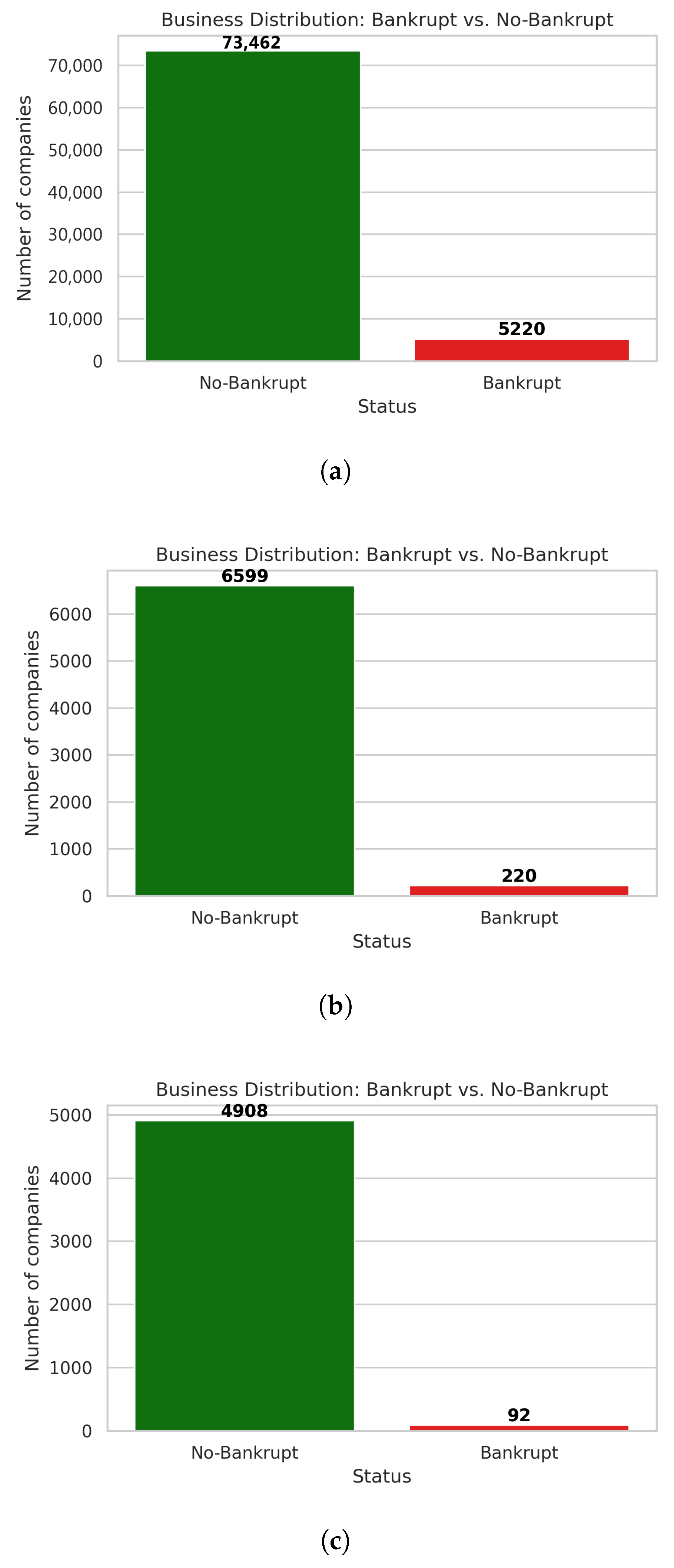

- The first dataset (data1) is the Bankruptcy Data from the Taiwan Economic Journal for the years 1999–2009, available on Kaggle: https://www.kaggle.com/datasets/fedesoriano/company-bankruptcy-prediction/data (accessed on 17 September 2025).It contains 95 features in addition to the bankruptcy class label, and the total number of instances is exactly 6819.

- 2.

- The second dataset (data2) is the US Company Bankruptcy Prediction dataset, also sourced from Kaggle: https://www.kaggle.com/datasets/utkarshx27/american-companies-bankruptcy-prediction-dataset (accessed on 17 September 2025).It consists of 78,682 instances and 21 features.

- 3.

- The third dataset (data3) is the UK Bankruptcy Data, containing 5000 instances and 70 features.

4.1. Comparison with Other Models

- Fuzzy SVM: A theoretical framework that integrates fuzzy membership values to depict the extent of confidence or reliability associated with each training instance, consequently mitigating the impact of noisy or ambiguous data.

- Shadowed SVM: Extends fuzzy SVM by introducing a shadowed region, which explicitly models the zone of uncertainty between clear membership and non-membership, enhancing robustness in decision boundaries.

- Fuzzy Shadowed-SVM: A hybrid model that combines fuzzy logic and shadowed set theory to manage uncertainty more effectively, allowing for refined decision-making under vagueness and imprecision.

- SVM with Different Error Costs (DEC): This version of Support Vector Machines (SVM) applies different penalty weights for misclassifying the majority class (0.1) versus the minority class (1.0), aiming to improve the balance between the classes.

- SVM-SMOTE: This method pairs SVM with the Synthetic Minority Over-sampling Technique (SMOTE), which creates artificial samples to boost the representation of the minority class.

- SVM-ADASYN: Building on SMOTE, Adaptive Synthetic Sampling (ADASYN) tailors the number of synthetic samples generated based on the local data distribution, focusing more on challenging areas.

- SVM with Undersampling: Here, the majority class size is reduced before training the SVM to help balance the dataset.

- Random Forest: An ensemble of decision trees known for its robustness and strong performance on imbalanced datasets.

- K-Nearest Neighbors (KNN): A simple, proximity-based classifier that can be sensitive to class imbalance, used here as a benchmark.

- Logistic Regression: A widely used linear classifier serving as a baseline for binary classification tasks.

- TP: True Positives (instances correctly predicted as positive);

- TN: True Negatives (instances correctly predicted as negative);

- FP: False Positives (negative instances incorrectly predicted as positive);

- FN: False Negatives (positive instances incorrectly predicted as negative).

- Accuracy: The overall proportion of correct predictions, $\text{Accuracy}=\frac{TP+TN}{TP+TN+FP+FN}$.

- Precision: The proportion of true positive predictions among all positive predictions [37], $\text{Precision}=\frac{TP}{TP+FP}$. It is crucial in scenarios where false positives are costly.

- Recall (Sensitivity): The proportion of true positive predictions among all actual positives [37], $\text{Recall}=\frac{TP}{TP+FN}$. It is important when missed positive instances (e.g., bankruptcies) must be minimized.

- F1-score: The harmonic mean of Precision and Recall, $F_1=\frac{2\cdot\text{Precision}\cdot\text{Recall}}{\text{Precision}+\text{Recall}}$. It is derived from the general harmonic mean of two quantities, $H(a,b)=\frac{2ab}{a+b}$, by substituting $a=\text{Precision}$ and $b=\text{Recall}$. The F1-score is particularly useful when a balance between false positives and false negatives is required.

- Specificity: The proportion of actual negatives correctly identified [39], $\text{Specificity}=\frac{TN}{TN+FP}$. It complements Recall and provides insight into the model’s performance on the majority class.

- AUC-ROC (Area Under the Receiver Operating Characteristic Curve) is a performance metric for classification problems [40,41]. It quantifies a model’s ability to discriminate between classes (for instance, bankrupt and non-bankrupt); a higher AUC value (closer to 1.0) indicates better separability. The ROC curve is constructed by plotting two metrics against each other at every possible probability threshold:
  - The True Positive Rate (TPR), also referred to as Recall or Sensitivity, on the Y-axis;
  - The False Positive Rate (FPR), $\frac{FP}{FP+TN}=1-\text{Specificity}$, on the X-axis.

  The AUC is the area under this curve.

- G-mean: Combines Recall and Specificity to evaluate the balance of performance across both classes, $\text{G-mean}=\sqrt{\text{Recall}\times\text{Specificity}}$.
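The metrics above can be computed directly from the four confusion-matrix counts. The sketch below also estimates AUC-ROC through its rank interpretation, the probability that a randomly chosen positive receives a higher score than a randomly chosen negative (ties counting one half), which equals the area under the empirical ROC curve. Function names and example counts are illustrative only.

```python
import numpy as np


def confusion_metrics(tp, tn, fp, fn):
    """Threshold-based metrics from confusion-matrix counts (positive class = bankrupt)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "specificity": specificity,
        "g_mean": np.sqrt(recall * specificity),
    }


def auc_rank(scores_pos, scores_neg):
    """AUC as P(score_pos > score_neg), counting ties as 1/2."""
    pos = np.asarray(scores_pos, dtype=float)[:, None]
    neg = np.asarray(scores_neg, dtype=float)[None, :]
    return float(np.mean((pos > neg) + 0.5 * (pos == neg)))


m = confusion_metrics(tp=8, tn=80, fp=2, fn=10)
auc = auc_rank([0.9, 0.8], [0.1, 0.85])
```

Returning 0 when a denominator vanishes (for instance, precision when no bankruptcy is predicted at all) is a common convention; it also makes G-mean collapse to 0 whenever Recall is 0.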

4.1.1. Fuzzy Support Vector Machine (Fuzzy SVM)

- Fuzzy SVM (combined) achieves the best value on every metric, with a recall three times that of the other Fuzzy SVM variants (16.67% vs. at most 5.56%) and the highest G-mean (0.4034), supporting its effectiveness for bankruptcy detection.

- Traditional models (Random Forest, KNN, Logistic Regression) fail completely (recall = 0%), confirming the need for imbalance-aware methods.

4.1.2. Shadowed Support Vector Machine (Shadowed SVM)

- Shadowed SVM-Combined achieves a strong trade-off: high precision (60%) and near-perfect specificity (99.55%), minimizing false positives while maintaining competitive recall.

- Stability across parameters: Centre/sphere variants show identical performance (F1 = 0.2807), demonstrating robustness to hyperparameter changes.

4.1.3. Fuzzy Shadowed Support Vector Machine

- Fuzzy Shadowed-combined excels in reliability: Highest G-mean (0.8290) and specificity (99.55%), making it ideal for high-stakes decisions where false positives are costly.

- The hyperplane variant favors recall (63.64%) at the expense of precision (25.69%), so the preferred variant depends on the use case.

4.2. Statistical Tests

- The paired Student’s t-test assesses whether the mean differences between two paired samples are statistically significant, assuming normality.

- The Wilcoxon signed-rank test, a non-parametric alternative, does not assume a normal distribution and is more robust when dealing with skewed or ordinal data.

4.2.1. Paired Student’s t-Test

The test statistic is

$$t=\frac{\bar{d}}{s_d/\sqrt{n}},$$

where:

- $\bar{d}$ is the mean of the paired differences;

- $s_d$ is the standard deviation of the differences $d_i$;

- $n$ is the number of paired observations.
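A short SciPy check of the statistic above: computing $t$ by hand, with $n-1$ degrees of freedom for the two-sided p-value, agrees with `scipy.stats.ttest_rel`. The paired scores are hypothetical placeholders (for example, per-fold G-means of two models), not results from this study.

```python
import numpy as np
from scipy import stats

# Hypothetical paired scores of two models evaluated on the same folds.
a = np.array([0.81, 0.79, 0.84, 0.80, 0.83, 0.82])
b = np.array([0.76, 0.78, 0.80, 0.77, 0.79, 0.75])

d = a - b
n = d.size
t_manual = d.mean() / (d.std(ddof=1) / np.sqrt(n))    # t = mean(d) / (s_d / sqrt(n))
p_manual = 2 * stats.t.sf(abs(t_manual), df=n - 1)     # two-sided p-value

res = stats.ttest_rel(a, b)
```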

4.2.2. Wilcoxon Signed-Rank Test
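The Wilcoxon signed-rank test ranks the absolute paired differences and compares the rank sums of the positive and negative differences, $W^{+}$ and $W^{-}$. The sketch below, on hypothetical scores without ties or zero differences, computes both sums by hand and checks that, for a two-sided test, the statistic returned by `scipy.stats.wilcoxon` is $\min(W^{+}, W^{-})$.

```python
import numpy as np
from scipy import stats

# Hypothetical paired scores; the differences have distinct magnitudes (no ties, no zeros).
a = np.array([0.90, 0.70, 1.60, 1.30, 2.00, 0.80])
b = np.array([0.40, 0.90, 0.50, 0.50, 0.60, 0.50])

d = a - b
ranks = stats.rankdata(np.abs(d))      # rank |d_i| from smallest to largest
w_plus = ranks[d > 0].sum()            # sum of ranks of positive differences
w_minus = ranks[d < 0].sum()           # sum of ranks of negative differences

res = stats.wilcoxon(a, b)             # two-sided by default
```

Because only ranks enter the statistic, a single very large difference cannot dominate the result, which is the robustness property mentioned above.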

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADASYN | ADAptive SYnthetic Sampling |
| ANN | Artificial Neural Network |
| AUC | Area Under the Curve |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| G-mean | Geometric mean |
| GrC | Granular computing |
| GSVM | Granular Support Vector Machine |
| FN | False Negative |
| FP | False Positive |
| FSVM | Fuzzy Support Vector Machine |
| LSTM | Long Short-Term Memory |
| KNN | K-Nearest Neighbors |
| MCC | Matthews Correlation Coefficient |
| ML | Machine Learning |
| QP | Quadratic Programming |
| RBF | Radial Basis Function |
| SMOTE | Synthetic Minority Oversampling TEchnique |
| SMOTE-ENN | Synthetic Minority Oversampling TEchnique–Edited Nearest Neighbors |
| SRM | Structural Risk Minimization |
| SVM | Support Vector Machine |
| SVR | Support Vector Regression |
| TN | True Negative |
| TP | True Positive |

References

1. Figlioli, B.; Lima, F.G. A proposed corporate distress and recovery prediction score based on financial and economic components. Expert Syst. Appl. 2022, 197, 116726.

2. Lohmann, C.; Mallenhoff, S.; Ohliger, T. Nonlinear relationships in bankruptcy prediction and their effect on the profitability of bankruptcy prediction models. J. Bus. Econ. 2022, 93, 1661–1690.

3. Gholampoor, H.; Asadi, M. Risk Analysis of Bankruptcy in the U.S. Healthcare Industries Based on Financial Ratios: A Machine Learning Analysis. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 1303–1320.

4. Stitson, M.; Weston, J.; Gammerman, A.; Vovk, V.; Vapnik, V. Theory of support vector machines. Univ. Lond. 1996, 117, 188–191.

5. Li, W.; Paraschiv, F.; Sermpinis, G. A data-driven explainable case-based reasoning approach for financial risk detection. Quant. Financ. 2022, 22, 2257–2274.

6. Liu, X.; Zhang, S.; Wang, Z.; Zhang, S. Classification and identification of medical insurance fraud: A case-based reasoning approach. Technol. Econ. Dev. Econ. 2025, 1–27.

7. Barboza, F.; Kimura, H.; Altman, E.I. Machine learning models and bankruptcy prediction. Expert Syst. Appl. 2017, 83, 405–417.

8. Wang, Z.; Jiang, C.; Zhao, H. Depicting Risk Profile over Time: A Novel Multiperiod Loan Default Prediction Approach. Manag. Inf. Syst. Q. 2023, 47, 1455–1486.

9. Chen, Z.; Chen, W.; Shi, Y. Ensemble learning with label proportions for bankruptcy prediction. Expert Syst. Appl. 2020, 146, 113155.

10. Wang, S.; Chi, G. Cost-sensitive stacking ensemble learning for company financial distress prediction. Expert Syst. Appl. 2024, 255, 124525.

11. Brenes, R.F.; Johannssen, A.; Chukhrova, N. An intelligent bankruptcy prediction model using a multilayer perceptron. Intell. Syst. Appl. 2022, 16, 200136.

12. Chen, Y.; Guo, J. LiFoL: An Efficient Framework for Financial Distress Prediction in High-Dimensional Unbalanced Scenario. IEEE Trans. Comput. Soc. Syst. 2023, 11, 2784–2795.

13. Shangguan, X.; Wei, K.; Sun, Q.; Zhang, Y.; Bai, R. Research on the standardization strategy of granular computing. Int. J. Cogn. Comput. Eng. 2023, 4, 340–348.

14. Li, J.; Zhu, Q.; Wu, Q.; Zhang, Z.; Gong, Y.; He, Z.; Zhu, F. SMOTE-NaN-DE: Addressing the noisy and borderline examples problem in imbalanced classification by natural neighbors and differential evolution. Knowl.-Based Syst. 2021, 223, 107056.

15. Radovanovic, J.; Haas, C. The evaluation of bankruptcy prediction models based on socio-economic costs. Expert Syst. Appl. 2023, 227, 120275.

16. Xia, S.; Lian, X.; Wang, G.; Gao, X.; Chen, J.; Peng, X. GBSVM: An Efficient and Robust Support Vector Machine Framework via Granular-Ball Computing. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 9253–9267.

17. Park, M.S.; Son, H.; Hyun, C.; Hwang, H.J. Explainability of Machine Learning Models for Bankruptcy Prediction. IEEE Access 2021, 9, 124887–124899.

18. Perboli, G.; Arabnezhad, E. A Machine Learning-based DSS for mid and long-term company crisis prediction. Expert Syst. Appl. 2021, 174, 114758.

19. Cho, S.H.; Shin, K.S. Feature-Weighted Counterfactual-Based Explanation for Bankruptcy Prediction. Expert Syst. Appl. 2022, 216, 119390.

20. Pedrycz, W. Granular Computing: An Emerging Paradigm; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2001; Volume 70.

21. Zhang, X.; Ma, Y.; Wang, M. An attention-based Logistic-CNN-BiLSTM hybrid neural network for credit risk prediction of listed real estate enterprises. Expert Syst. 2024, 41, e13299.

22. Yu, L.; Li, M.; Liu, X. A two-stage case-based reasoning driven classification paradigm for financial distress prediction with missing and imbalanced data. Expert Syst. Appl. 2024, 249, 123745.

23. Xia, S.; Zheng, S.; Wang, G.; Gao, X.; Wang, B. Granular ball sampling for noisy label classification or imbalanced classification. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 2144–2155.

24. Borowska, K.; Stepaniuk, J. Rough–granular approach in imbalanced bankruptcy data analysis. Procedia Comput. Sci. 2022, 207, 1832–1841.

25. Chen, Y.; Guo, J.; Huang, J.; Lin, B. A novel method for financial distress prediction based on sparse neural networks. Int. J. Mach. Learn. Cybern. 2022, 13, 2089–2103.

26. Zimmermann, H.J. Fuzzy set theory. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 317–332.

27. Sardari, S.; Eftekhari, M.; Afsari, F. Hesitant fuzzy decision tree approach for highly imbalanced data classification. Appl. Soft Comput. 2017, 61, 727–741.

28. Tang, W. Fuzzy SVM with a New Fuzzy Membership Function to Solve the Two-Class Problems. Neural Process. Lett. 2011, 34, 209–219.

29. Leski, J.M. TSK-fuzzy modeling based on ε-insensitive learning. IEEE Trans. Fuzzy Syst. 2005, 13, 181–193.

30. Cho, P.; Lee, M.; Chang, W. Instance-based entropy fuzzy support vector machine for imbalanced data. Pattern Anal. Appl. 2020, 23, 1183–1202.

31. Bian, Z.; Vong, C.M.; Wong, P.K.; Wang, S. Fuzzy KNN method with adaptive nearest neighbors. IEEE Trans. Cybern. 2020, 52, 5380–5393.

32. Çelikyılmaz, A.; Burhan Türkşen, I. Fuzzy functions with support vector machines. Inf. Sci. 2007, 177, 5163–5177.

33. Er, M.J.; Wu, S.; Lu, J.; Toh, H.L. Face recognition with radial basis function (RBF) neural networks. IEEE Trans. Neural Netw. 2002, 13, 697–710.

34. Keesman, K.; Stappers, R. Nonlinear set-membership estimation: A support vector machine approach. J. Inverse Ill-Posed Probl. 2004, 12, 27–42.

35. Pedrycz, W. Shadowed sets: Representing and processing fuzzy sets. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 1998, 28, 103–109.

36. Yao, Y.; Yang, J. Granular rough sets and granular shadowed sets: Three-way approximations in Pawlak approximation spaces. Int. J. Approx. Reason. 2022, 142, 231–247.

37. Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061.

38. Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

39. Cullerne Bown, W. Sensitivity and specificity versus precision and recall, and related dilemmas. J. Classif. 2024, 41, 402–426.

40. Brzezinski, D.; Stefanowski, J. Prequential AUC: Properties of the area under the ROC curve for data streams with concept drift. Knowl. Inf. Syst. 2017, 52, 531–562.

41. Jaskowiak, P.A.; Costa, I.G.; Campello, R.J. The area under the ROC curve as a measure of clustering quality. Data Min. Knowl. Discov. 2022, 36, 1219–1245.

42. He, H.; Garcia, E.A. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

43. Branco, P.; Torgo, L.; Ribeiro, R.P. A survey of predictive modeling on imbalanced domains. ACM Comput. Surv. (CSUR) 2016, 49, 1–50.

44. Ben Jelloun, R.; Jebari, K.; El Moujahid, A. Open Competency Optimization: A Human-Inspired Optimizer for the Dynamic Vehicle-Routing Problem. Algorithms 2024, 17, 449.

| Model | Accuracy | F1-Score | AUC-ROC | Precision | Recall | Specificity | G-Mean |
|---|---|---|---|---|---|---|---|
| Fuzzy SVM (Centre) | 0.9530 | 0.0408 | 0.7010 | 0.0323 | 0.0556 | 0.9695 | 0.2321 |
| Fuzzy SVM (Sphere) | 0.9550 | 0.0000 | 0.7043 | 0.0000 | 0.0000 | 0.9725 | 0.0000 |
| Fuzzy SVM (Hyperplane) | 0.9550 | 0.0000 | 0.7058 | 0.0000 | 0.0000 | 0.9725 | 0.0000 |
| Fuzzy SVM (knn_density) | 0.9550 | 0.0426 | 0.7025 | 0.0345 | 0.0556 | 0.9715 | 0.2323 |
| Fuzzy SVM (local_entropy) | 0.9470 | 0.0000 | 0.7282 | 0.0000 | 0.0000 | 0.9644 | 0.0000 |
| Fuzzy SVM (intra_class) | 0.9540 | 0.0417 | 0.7133 | 0.0333 | 0.0556 | 0.9705 | 0.2322 |
| Fuzzy SVM (rbf) | 0.9580 | 0.0455 | 0.7531 | 0.0385 | 0.0556 | 0.9745 | 0.2327 |
| Fuzzy SVM (rbf_svm_margin) | 0.9520 | 0.0400 | 0.7037 | 0.0312 | 0.0556 | 0.9684 | 0.2320 |
| Fuzzy SVM (combined) | 0.9620 | 0.1764 | 0.8374 | 0.3154 | 0.1667 | 0.9766 | 0.4034 |
| DEC | 0.9520 | 0.0400 | 0.7021 | 0.0312 | 0.0556 | 0.9684 | 0.2320 |
| SVM-SMOTE | 0.9000 | 0.1525 | 0.8163 | 0.0900 | 0.1001 | 0.9073 | 0.2735 |
| SVM-ADASYN | 0.8280 | 0.1134 | 0.7463 | 0.0625 | 0.0111 | 0.8320 | 0.3130 |
| SVM-Undersampling | 0.7720 | 0.1024 | 0.8280 | 0.0551 | 0.0222 | 0.7729 | 0.3471 |
| Random Forest | 0.9020 | 0.0000 | 0.6211 | 0.0000 | 0.0000 | 0.7000 | 0.0000 |
| KNN | 0.9010 | 0.0000 | 0.6068 | 0.0000 | 0.0000 | 0.7980 | 0.0000 |
| Logistic Regression | 0.9020 | 0.0000 | 0.6626 | 0.0000 | 0.0000 | 0.6001 | 0.0000 |

| Model | Accuracy | F1-Score | AUC-ROC | Precision | Recall | Specificity | G-Mean |
|---|---|---|---|---|---|---|---|
| Shadowed SVM-Centre (, ) | 0.9699 | 0.2807 | 0.8710 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Centre (, ) | 0.9699 | 0.2807 | 0.8710 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Centre (, ) | 0.9699 | 0.2807 | 0.8710 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Centre (, ) | 0.9699 | 0.2807 | 0.8709 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Centre (, ) | 0.9699 | 0.2807 | 0.8711 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Centre (, ) | 0.9699 | 0.2807 | 0.8709 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Centre (, ) | 0.9699 | 0.2807 | 0.8688 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Centre (, ) | 0.9699 | 0.2807 | 0.8688 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Centre (, ) | 0.9699 | 0.2807 | 0.8687 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Sphere | 0.9699 | 0.2807 | 0.8695 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Hyperplane | 0.9545 | 0.2619 | 0.8404 | 0.2750 | 0.2500 | 0.9780 | 0.4945 |
| Shadowed SVM-KNN-Density | 0.9699 | 0.2807 | 0.8708 | 0.6154 | 0.3818 | 0.9962 | 0.4256 |
| Shadowed SVM-Local-Entropy | 0.9699 | 0.3051 | 0.8648 | 0.6000 | 0.2045 | 0.9955 | 0.4512 |
| Shadowed SVM-Intra-Class | 0.9699 | 0.2807 | 0.8728 | 0.6154 | 0.2818 | 0.9962 | 0.4256 |
| Shadowed SVM-RBF | 0.9699 | 0.2807 | 0.8695 | 0.6154 | 0.2818 | 0.9962 | 0.4256 |
| Shadowed SVM-RBF-SVM-Margin | 0.9699 | 0.3051 | 0.8657 | 0.6000 | 0.2045 | 0.9955 | 0.4512 |
| Shadowed SVM-Combined | 0.9699 | 0.3051 | 0.8659 | 0.6000 | 0.4045 | 0.9955 | 0.4512 |
| DEC | 0.9377 | 0.3609 | 0.9185 | 0.2697 | 0.2455 | 0.9508 | 0.3201 |
| SVM-SMOTE | 0.9304 | 0.3537 | 0.9119 | 0.2524 | 0.2909 | 0.9417 | 0.3459 |
| SVM-ADASYN | 0.8915 | 0.2745 | 0.9078 | 0.1750 | 0.2364 | 0.9000 | 0.3568 |
| SVM-Undersampling | 0.8409 | 0.2644 | 0.9213 | 0.1554 | 0.2864 | 0.8394 | 0.3626 |
| Random Forest | 0.9692 | 0.2759 | 0.9368 | 0.5714 | 0.1818 | 0.8955 | 0.2254 |
| KNN | 0.9507 | 0.2857 | 0.7424 | 0.6667 | 0.1818 | 0.8970 | 0.2258 |
| Logistic Regression | 0.9633 | 0.2188 | 0.8733 | 0.3500 | 0.1591 | 0.8902 | 0.2969 |

| Model | Accuracy | F1-Score | AUC-ROC | Precision | Recall | Specificity | G-Mean |
|---|---|---|---|---|---|---|---|
| Fuzzy Shadowed-center | 0.9142 | 0.3314 | 0.9028 | 0.2214 | 0.6591 | 0.9227 | 0.7798 |
| Fuzzy Shadowed-sphere | 0.9282 | 0.3553 | 0.9168 | 0.2500 | 0.6136 | 0.9386 | 0.7589 |
| Fuzzy Shadowed-hyperplane | 0.9289 | 0.3660 | 0.9187 | 0.2569 | 0.6364 | 0.9386 | 0.7729 |
| Fuzzy Shadowed-combined | 0.9699 | 0.3051 | 0.8663 | 0.6000 | 0.2045 | 0.9955 | 0.8290 |
| DEC | 0.9377 | 0.3609 | 0.9185 | 0.2697 | 0.2455 | 0.9508 | 0.3201 |
| SVM-SMOTE | 0.9304 | 0.3537 | 0.9119 | 0.2524 | 0.2909 | 0.9417 | 0.3459 |
| SVM-ADASYN | 0.8915 | 0.2745 | 0.9078 | 0.1750 | 0.2364 | 0.9000 | 0.3568 |
| SVM-Undersampling | 0.8409 | 0.2644 | 0.9213 | 0.1554 | 0.2864 | 0.8394 | 0.3626 |
| Random Forest | 0.9692 | 0.2759 | 0.9368 | 0.5714 | 0.1818 | 0.8955 | 0.2254 |
| KNN | 0.9507 | 0.2857 | 0.7424 | 0.6667 | 0.1818 | 0.8970 | 0.2258 |
| Logistic Regression | 0.9633 | 0.2188 | 0.8733 | 0.3500 | 0.1591 | 0.8902 | 0.2969 |
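The F1-Score and G-Mean columns in these tables are functions of the other columns: F1 = 2·Precision·Recall/(Precision + Recall) and G-Mean = √(Recall × Specificity). These are the standard definitions, assumed here because they reproduce the rows checked below. The minimal sketch recomputes both from the Precision, Recall and Specificity columns of two reported rows, which is a quick way to spot a transcription error when reading or reusing the tables; agreement is expected only to within the rounding of the four-decimal inputs. The helper names `f1_score` and `g_mean` are illustrative, not taken from the paper's code.

```python
from math import sqrt


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def g_mean(recall, specificity):
    """Geometric mean of the true-positive rate (recall) and true-negative rate (specificity)."""
    return sqrt(recall * specificity)


# (precision, recall, specificity) copied from two rows of the results tables.
rows = {
    "Fuzzy Shadowed-center": (0.2214, 0.6591, 0.9227),
    "Shadowed SVM-Local-Entropy": (0.6000, 0.2045, 0.9955),
}

for name, (p, r, s) in rows.items():
    # Recomputed values should match the reported F1-Score and G-Mean up to rounding.
    print(f"{name}: F1={f1_score(p, r):.4f}, G-Mean={g_mean(r, s):.4f}")
```

Because G-Mean multiplies the two class-wise rates, a model with high specificity but low recall cannot reach a high G-Mean, which is why it is reported alongside Accuracy for these imbalanced datasets.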

| Metric | Comparison | p-Value | Significant |
|---|---|---|---|
| Accuracy | Combined vs. Fuzzy Shadowed-center | 0.00227 | Yes |
| Accuracy | Combined vs. Fuzzy Shadowed-sphere | 0.00227 | Yes |
| Accuracy | Combined vs. Fuzzy Shadowed-hyperplane | 0.00227 | Yes |
| Accuracy | Combined vs. DEC | 0.00227 | Yes |
| Accuracy | Combined vs. SVM-SMOTE | 0.00227 | Yes |
| Accuracy | Combined vs. SVM-ADASYN | 0.00227 | Yes |
| Accuracy | Combined vs. SVM-Undersampling | 0.00227 | Yes |
| Accuracy | Combined vs. Random Forest | 0.00227 | Yes |
| Accuracy | Combined vs. KNN | 0.00227 | Yes |
| Accuracy | Combined vs. Logistic Regression | 0.00227 | Yes |
| Precision | Combined vs. Fuzzy Shadowed-center | 0.00025 | Yes |
| Specificity | Combined vs. Fuzzy Shadowed-center | 0.00001 | Yes |
| Specificity | Combined vs. Fuzzy Shadowed-sphere | 0.00001 | Yes |
| Specificity | Combined vs. Fuzzy Shadowed-hyperplane | 0.00001 | Yes |
| Specificity | Combined vs. DEC | 0.00001 | Yes |
| Specificity | Combined vs. SVM-SMOTE | 0.00001 | Yes |
| Specificity | Combined vs. SVM-ADASYN | 0.00001 | Yes |
| Specificity | Combined vs. SVM-Undersampling | 0.00001 | Yes |
| Specificity | Combined vs. Random Forest | 0.00001 | Yes |
| Specificity | Combined vs. KNN | 0.00001 | Yes |
| Specificity | Combined vs. Logistic Regression | 0.00001 | Yes |
| G-Mean | Combined vs. Fuzzy Shadowed-center | 0.00025 | Yes |
| G-Mean | Combined vs. Fuzzy Shadowed-sphere | 0.00025 | Yes |
| G-Mean | Combined vs. Fuzzy Shadowed-hyperplane | 0.00025 | Yes |
| G-Mean | Combined vs. DEC | 0.00025 | Yes |
| G-Mean | Combined vs. SVM-SMOTE | 0.00025 | Yes |
| G-Mean | Combined vs. SVM-ADASYN | 0.00025 | Yes |
| G-Mean | Combined vs. SVM-Undersampling | 0.00025 | Yes |
| G-Mean | Combined vs. Random Forest | 0.00025 | Yes |
| G-Mean | Combined vs. KNN | 0.00025 | Yes |
| G-Mean | Combined vs. Logistic Regression | 0.00025 | Yes |

| Metric | Comparison | p-Value | Significant |
|---|---|---|---|
| Accuracy | Combined vs. Fuzzy Shadowed-center | 0.00098 | Yes |
| Accuracy | Combined vs. Fuzzy Shadowed-sphere | 0.00098 | Yes |
| Accuracy | Combined vs. Fuzzy Shadowed-hyperplane | 0.00098 | Yes |
| Accuracy | Combined vs. DEC | 0.00098 | Yes |
| Accuracy | Combined vs. SVM-SMOTE | 0.00098 | Yes |
| Accuracy | Combined vs. SVM-ADASYN | 0.00098 | Yes |
| Accuracy | Combined vs. SVM-Undersampling | 0.00098 | Yes |
| Accuracy | Combined vs. Random Forest | 0.00098 | Yes |
| Accuracy | Combined vs. KNN | 0.00098 | Yes |
| Accuracy | Combined vs. Logistic Regression | 0.00098 | Yes |
| Specificity | Combined vs. Fuzzy Shadowed-center | 0.00098 | Yes |
| Specificity | Combined vs. Fuzzy Shadowed-sphere | 0.00098 | Yes |
| Specificity | Combined vs. Fuzzy Shadowed-hyperplane | 0.00098 | Yes |
| Specificity | Combined vs. DEC | 0.00098 | Yes |
| Specificity | Combined vs. SVM-SMOTE | 0.00098 | Yes |
| Specificity | Combined vs. SVM-ADASYN | 0.00098 | Yes |
| Specificity | Combined vs. SVM-Undersampling | 0.00098 | Yes |
| Specificity | Combined vs. Random Forest | 0.00098 | Yes |
| Specificity | Combined vs. KNN | 0.00098 | Yes |
| Specificity | Combined vs. Logistic Regression | 0.00098 | Yes |
| G-Mean | Combined vs. Fuzzy Shadowed-center | 0.00098 | Yes |
| G-Mean | Combined vs. Fuzzy Shadowed-sphere | 0.00098 | Yes |
| G-Mean | Combined vs. Fuzzy Shadowed-hyperplane | 0.00098 | Yes |
| G-Mean | Combined vs. DEC | 0.00098 | Yes |
| G-Mean | Combined vs. SVM-SMOTE | 0.00098 | Yes |
| G-Mean | Combined vs. SVM-ADASYN | 0.00098 | Yes |
| G-Mean | Combined vs. SVM-Undersampling | 0.00098 | Yes |
| G-Mean | Combined vs. Random Forest | 0.00098 | Yes |
| G-Mean | Combined vs. KNN | 0.00098 | Yes |
| G-Mean | Combined vs. Logistic Regression | 0.00098 | Yes |
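Every comparison in this last significance table reports p = 0.00098. To five decimals, that is the smallest p-value an exact one-sided Wilcoxon signed-rank test can return with ten paired observations, 1/2^10 ≈ 0.000977, and it is reached only when the proposed model outperforms the competitor in all ten pairs. The plain-Python sketch below reproduces this arithmetic by enumerating all 2^n sign patterns under the null hypothesis. It assumes ten paired runs (for example, ten cross-validation folds) and a one-sided alternative; neither is stated in this section, the per-run scores are invented for illustration, and the function names `doubled_ranks` and `wilcoxon_exact_greater` are hypothetical.

```python
from itertools import product


def doubled_ranks(diffs):
    """Rank |d| from smallest to largest, giving ties their average rank.

    Ranks are doubled so tied (x.5) ranks stay integers and sums compare exactly.
    """
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks2 = [0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of equal absolute differences (a tie group).
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        # Sorted positions i..j hold ranks i+1..j+1; twice their average is i+j+2.
        for k in range(i, j + 1):
            ranks2[order[k]] = i + j + 2
        i = j + 1
    return ranks2


def wilcoxon_exact_greater(a, b):
    """Exact one-sided Wilcoxon signed-rank test of H1: scores in a tend to exceed b.

    Zero differences are dropped before ranking. Returns (W+, p-value).
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]
    ranks2 = doubled_ranks(diffs)
    w_plus2 = sum(r for r, d in zip(ranks2, diffs) if d > 0)
    # Under H0 every rank carries a + or - sign with probability 1/2,
    # so enumerate all 2^n sign patterns and count those at least as extreme.
    hits = sum(
        1
        for signs in product((False, True), repeat=len(diffs))
        if sum(r for r, s in zip(ranks2, signs) if s) >= w_plus2
    )
    return w_plus2 / 2, hits / 2 ** len(diffs)


# Invented per-fold scores (NOT the paper's data): the proposed model wins all 10 folds.
proposed = [0.97, 0.96, 0.98, 0.97, 0.97, 0.96, 0.98, 0.97, 0.97, 0.98]
baseline = [0.93, 0.94, 0.92, 0.95, 0.93, 0.94, 0.92, 0.93, 0.94, 0.95]
w, p = wilcoxon_exact_greater(proposed, baseline)
print(w, round(p, 5))  # 55.0 0.00098
```

Under a two-sided alternative the same all-wins outcome would give 2/2^10 ≈ 0.00195. Exhaustive enumeration is exact but grows as 2^n, so it only suits small samples; library routines such as `scipy.stats.wilcoxon` typically fall back to a normal approximation for larger samples or when ties are present.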

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tamouh, A.; Tarik, M.; Mniai, A.; Jebari, K. Fuzzy Shadowed Support Vector Machine for Bankruptcy Prediction. Symmetry 2025, 17, 1615. https://doi.org/10.3390/sym17101615

Tamouh A, Tarik M, Mniai A, Jebari K. Fuzzy Shadowed Support Vector Machine for Bankruptcy Prediction. Symmetry. 2025; 17(10):1615. https://doi.org/10.3390/sym17101615

Chicago/Turabian Style: Tamouh, Abdelhamid, Mouna Tarik, Ayoub Mniai, and Khalid Jebari. 2025. "Fuzzy Shadowed Support Vector Machine for Bankruptcy Prediction" Symmetry 17, no. 10: 1615. https://doi.org/10.3390/sym17101615

APA Style: Tamouh, A., Tarik, M., Mniai, A., & Jebari, K. (2025). Fuzzy Shadowed Support Vector Machine for Bankruptcy Prediction. Symmetry, 17(10), 1615. https://doi.org/10.3390/sym17101615