A Novel Hand Motion Intention Recognition Method That Decodes EMG Signals Based on an Improved LSTM

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Paradigm

2.2. Preprocessing and Manual Feature Extraction

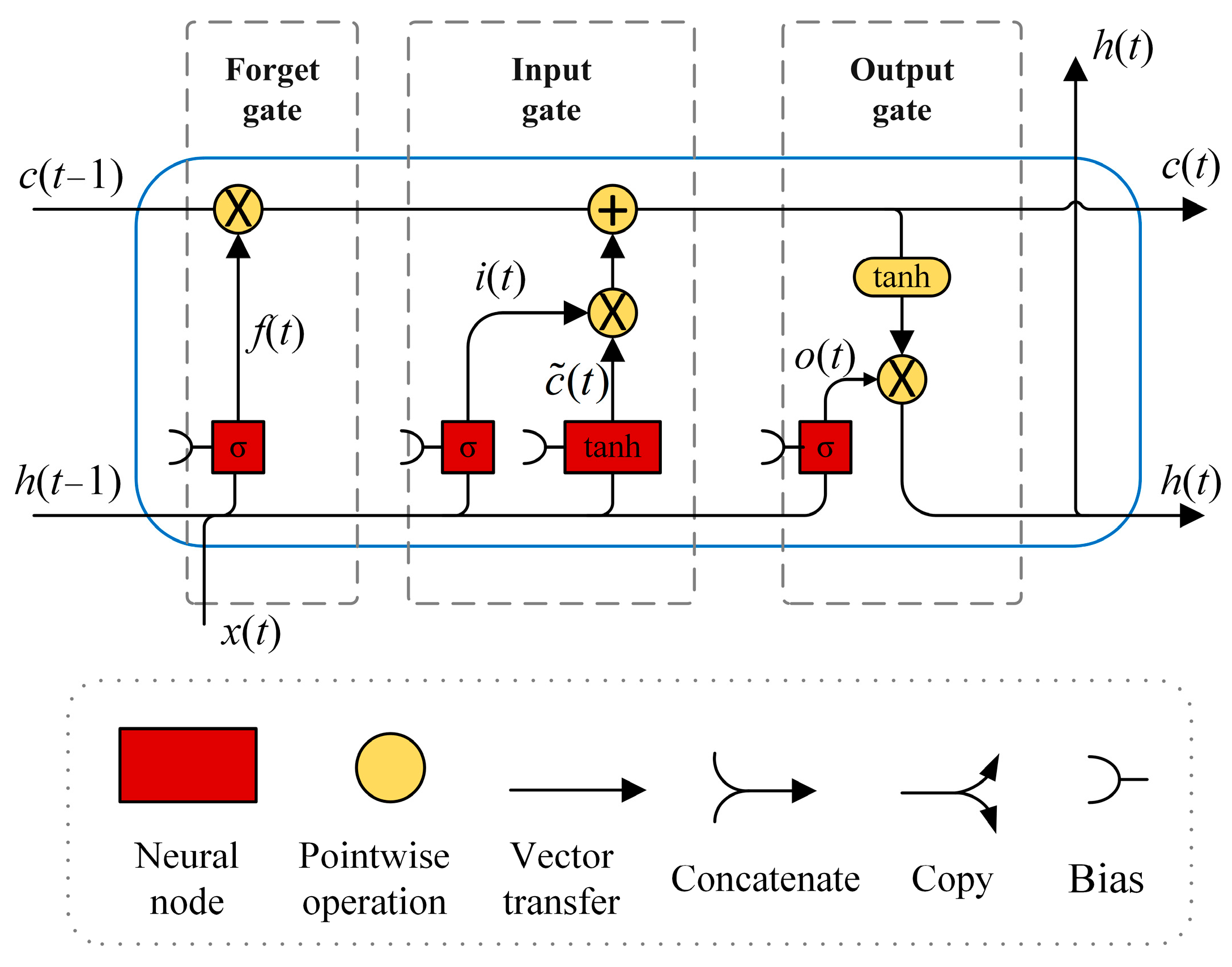

2.3. Construction of Deep Learning Model

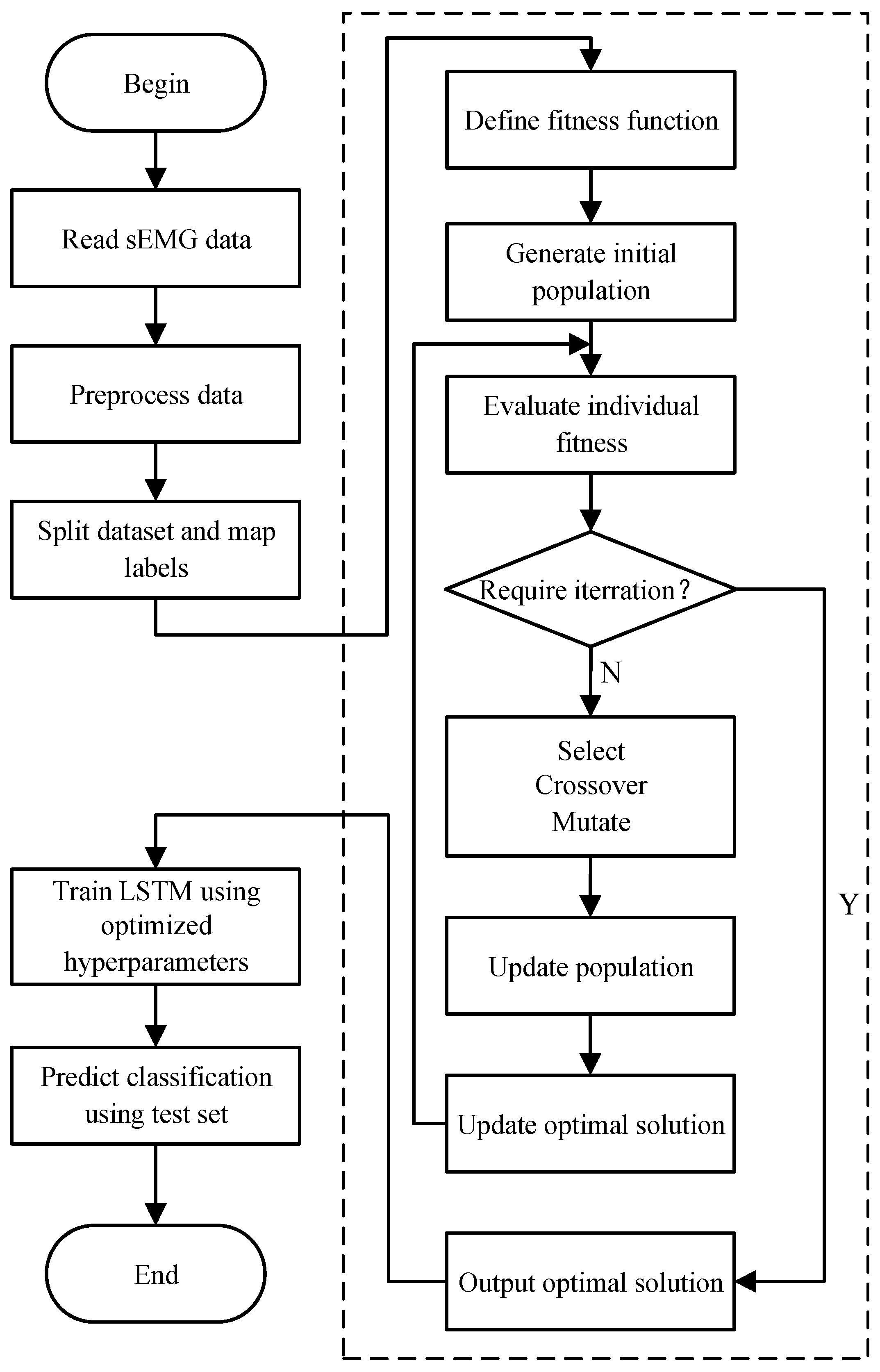

2.4. Improving LSTM Model Using GA via Optimal Key Hyperparameter Combination

- (1) Read the sEMG time series.
- (2) Preprocess the data: normalize the EMG signals and segment them using overlapping sliding windows.
- (3) Randomly generate a set of individuals to initialize the population; each individual encodes one LSTM hyperparameter configuration.
- (4) Train and validate the LSTM model defined by each individual, and compute its fitness value.
- (5) Select high-fitness individuals, and generate a new population through crossover and mutation.
- (6) Repeat the genetic operations; over successive generations, the fitness of the best individual gradually converges.
- (7) Select the individual with the highest fitness, and train the final model using its LSTM network configuration.
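The seven steps above can be sketched as a small, self-contained genetic algorithm. This is a minimal illustration under stated assumptions, not the authors' implementation: the population settings follow Appendix A.1, and the gene order follows the Appendix A.2 pseudocode (two LSTM unit counts, two dropout rates, learning rate, batch size), but the gene bounds, the tournament size, the Gaussian mutation scale, and `toy_fitness` (a hypothetical stand-in for training and validating an LSTM) are all assumptions. Unlike the appendix, which negates its score, this sketch maximizes fitness directly.

```python
import random

# Settings taken from Appendix A.1.
POPULATION_SIZE = 20
ELITE_SIZE = 3
GENERATIONS = 10
CROSSOVER_PROB = 0.8
MUTATION_PROB = 0.1

# Assumptions (not given in the source): tournament size and gene bounds.
TOURNAMENT_SIZE = 3
# Gene order: lstm_units1, lstm_units2, dropout1_rate, dropout2_rate, learning_rate, batch_size
BOUNDS = [(32, 256), (16, 128), (0.0, 0.5), (0.0, 0.5), (1e-4, 1e-2), (16, 128)]


def random_individual(rng):
    """Step (3): sample each gene uniformly within its (assumed) bounds."""
    return [rng.uniform(lo, hi) for lo, hi in BOUNDS]


def decode(ind):
    """Round integer-valued genes so the vector can configure a model."""
    u1, u2, d1, d2, lr, bs = ind
    return {"lstm_units1": round(u1), "lstm_units2": round(u2),
            "dropout1_rate": d1, "dropout2_rate": d2,
            "learning_rate": lr, "batch_size": round(bs)}


def toy_fitness(ind):
    """Hypothetical stand-in for step (4): in practice, build and train an LSTM
    from decode(ind) and return a validation score (higher is better)."""
    target = [128, 64, 0.2, 0.3, 1e-3, 64]  # arbitrary optimum for the demo
    return -sum(((g - t) / (hi - lo)) ** 2
                for g, t, (lo, hi) in zip(ind, target, BOUNDS))


def tournament(pop, fits, rng):
    """Return the fittest of TOURNAMENT_SIZE randomly chosen individuals."""
    contenders = rng.sample(range(len(pop)), TOURNAMENT_SIZE)
    return pop[max(contenders, key=lambda i: fits[i])]


def two_point_crossover(a, b, rng):
    """Swap the gene segment between two random cut points."""
    i, j = sorted(rng.sample(range(1, len(a)), 2))
    return a[:i] + b[i:j] + a[j:], b[:i] + a[i:j] + b[j:]


def gaussian_mutate(ind, rng, sigma_frac=0.1):
    """Perturb each gene with probability MUTATION_PROB, then clip to bounds."""
    out = []
    for g, (lo, hi) in zip(ind, BOUNDS):
        if rng.random() < MUTATION_PROB:
            g += rng.gauss(0.0, sigma_frac * (hi - lo))
        out.append(min(max(g, lo), hi))
    return out


def run_ga(fitness_fn, seed=0):
    rng = random.Random(seed)
    pop = [random_individual(rng) for _ in range(POPULATION_SIZE)]
    best_per_generation = []
    for _ in range(GENERATIONS):
        fits = [fitness_fn(ind) for ind in pop]                  # step (4)
        order = sorted(range(len(pop)), key=lambda i: fits[i], reverse=True)
        best_per_generation.append(fits[order[0]])
        elites = [pop[i] for i in order[:ELITE_SIZE]]            # elite retention
        offspring = []
        while len(offspring) < POPULATION_SIZE - ELITE_SIZE:     # step (5)
            p1, p2 = tournament(pop, fits, rng), tournament(pop, fits, rng)
            if rng.random() < CROSSOVER_PROB:
                c1, c2 = two_point_crossover(p1, p2, rng)
            else:
                c1, c2 = p1[:], p2[:]
            offspring += [gaussian_mutate(c1, rng), gaussian_mutate(c2, rng)]
        pop = elites + offspring[:POPULATION_SIZE - ELITE_SIZE]  # step (6)
    fits = [fitness_fn(ind) for ind in pop]
    best = max(range(len(pop)), key=lambda i: fits[i])          # step (7)
    return decode(pop[best]), fits[best], best_per_generation
```

Because the elites are copied unchanged into each new generation and the toy fitness is deterministic, the best score per generation never decreases here. Replacing `toy_fitness` with a function that trains an LSTM and returns its validation score means every fitness call is a full training run, so 20 individuals over 10 generations already implies on the order of 200 trainings.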

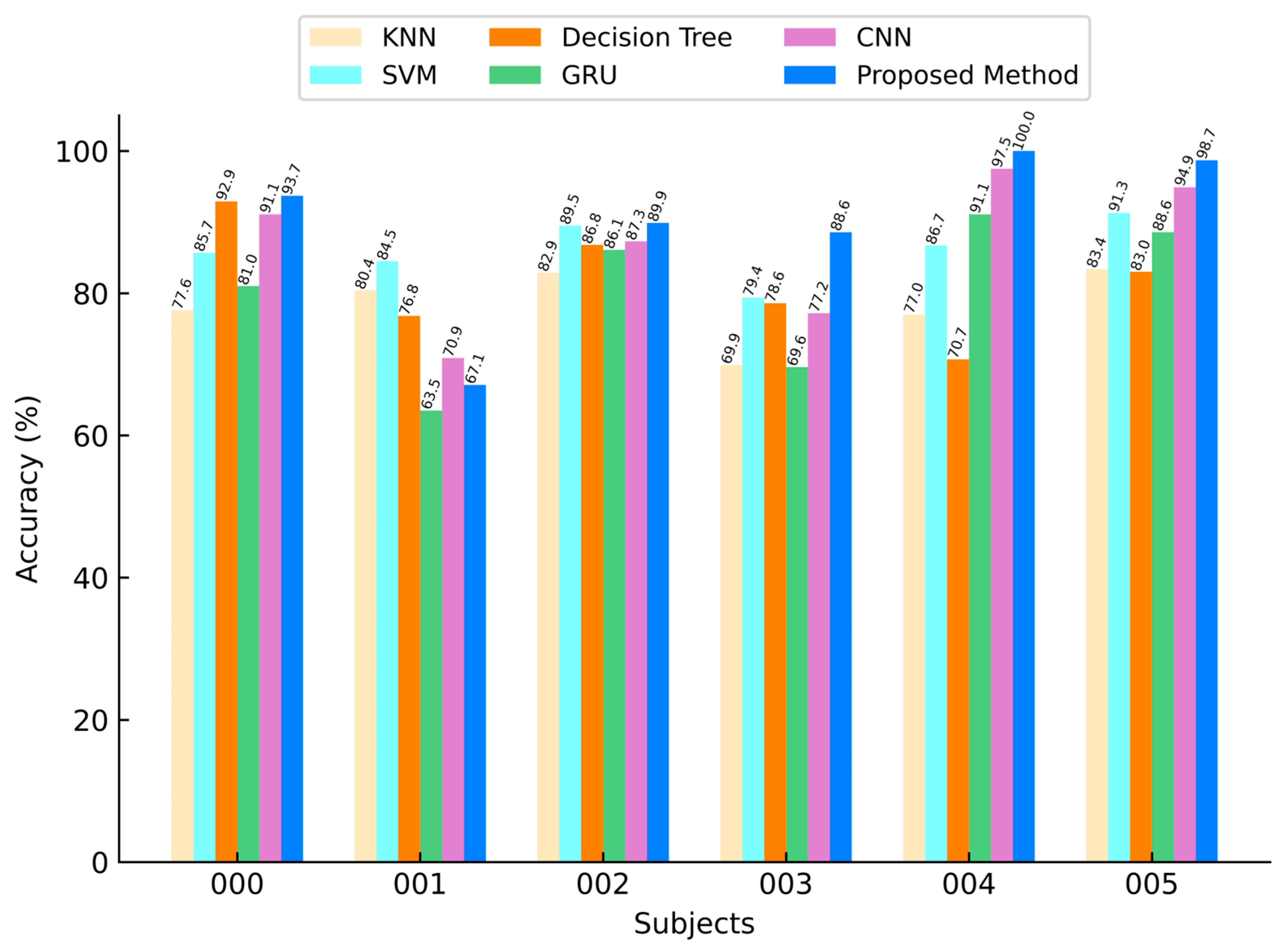

3. Results

3.1. Selection of Overlapping Sliding Window Parameters

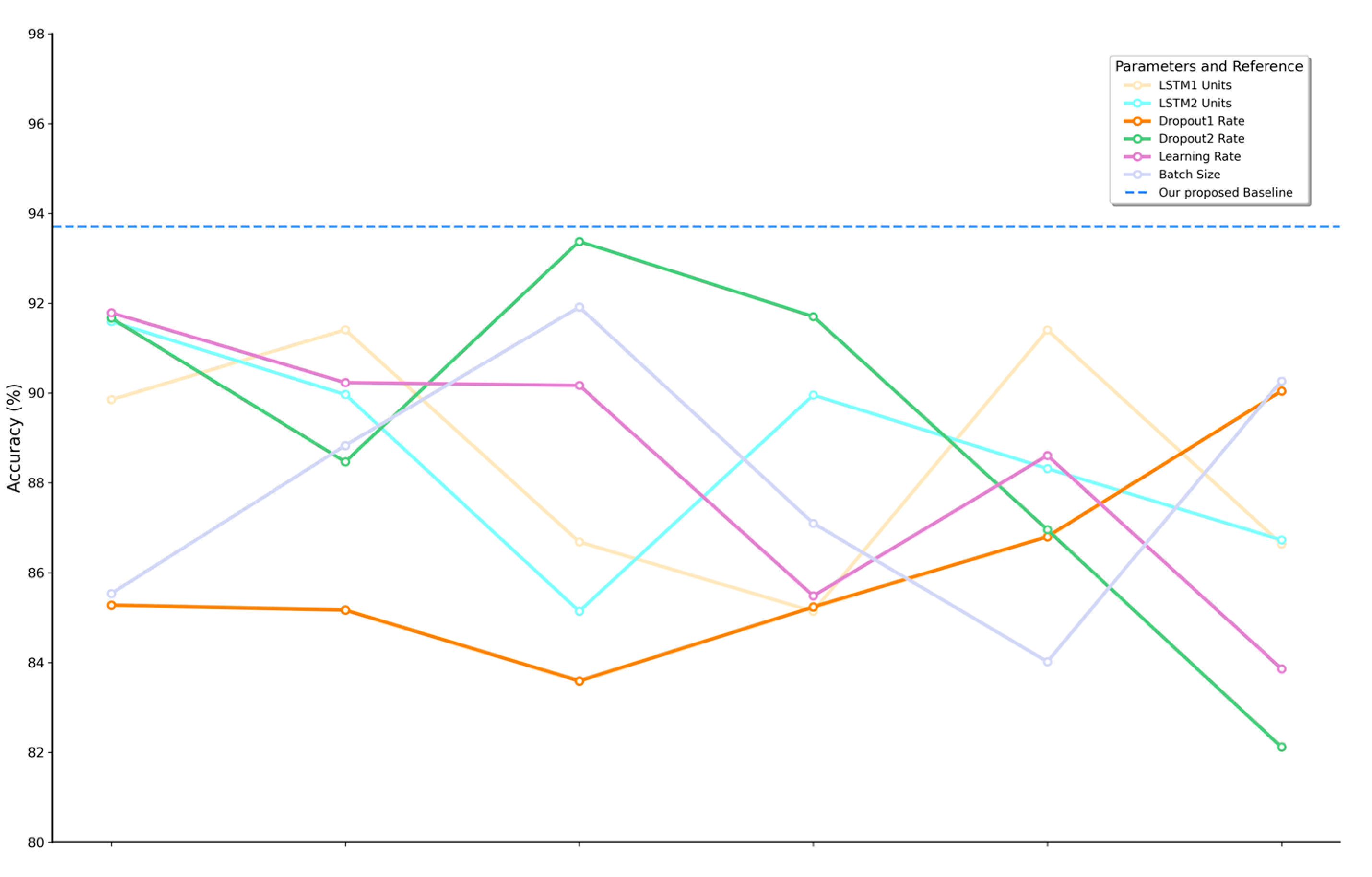

3.2. Optimal Hyperparameter Combination Using GA

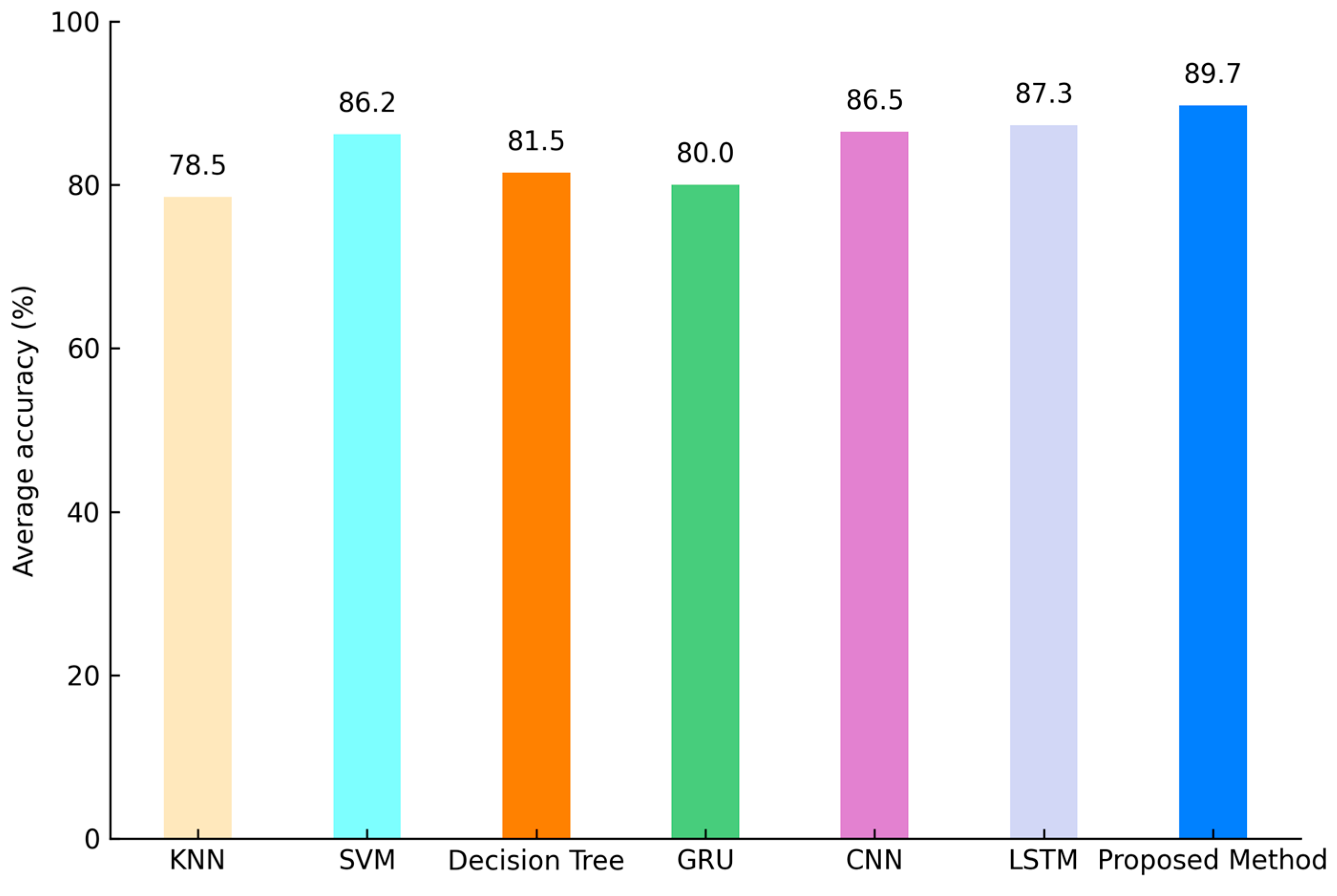

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1

| Parameter Type | Variable Name | Recommended Range |
|---|---|---|
| Population Parameters | POPULATION_SIZE | 20 |
| | ELITE_SIZE | 3 |
| Evolution Parameters | GENERATIONS | 10 |
| | CROSSOVER_PROB | 0.8 |
| | MUTATION_PROB | 0.1 |
| Training Parameters | TRAIN_EPOCHS | 8 |
| | EARLY_STOPPING_PATIENCE | 3 |

Appendix A.2

Genetic Algorithm-Optimized LSTM Pseudocode

```text
// 1. Initialize parameters
POPULATION_SIZE, GENERATIONS, CROSSOVER_PROB, MUTATION_PROB

// 2. Fitness function
FUNCTION evaluate_fitness(individual):
    lstm_units1, lstm_units2 = individual[0], individual[1]
    dropout1_rate, dropout2_rate = individual[2], individual[3]
    learning_rate, batch_size = individual[4], individual[5]
    // Train the LSTM model
    model = create_lstm_model(...)
    history = model.fit(epochs=TRAIN_EPOCHS, …)
    // Calculate fitness
    best_accuracy = max(history.val_accuracy)
    stability_penalty = std(history.val_accuracy) * STABILITY_WEIGHT
    fitness = -(best_accuracy - stability_penalty)
    RETURN fitness
END FUNCTION

// 3. Main loop
FOR generation = 1 TO GENERATIONS:
    // Assess the population
    FOR each individual IN population:
        individual.fitness = evaluate_fitness(individual)
    // Elite retention
    hall_of_fame.update(population)
    // Selection, crossover, mutation (with adaptive mutation intensity)
    parents = tournament_selection(population, size)
    offspring = two_point_crossover_and_gaussian_mutate(parents, CROSSOVER_PROB, MUTATION_PROB)
    // Update the population
    population = select_best(offspring + hall_of_fame, POPULATION_SIZE)
END FOR
RETURN get_best_hyperparameters(hall_of_fame)
```
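The fitness calculation in the pseudocode rewards the best validation accuracy while penalizing how much that accuracy fluctuates across epochs. The minimal Python sketch below assumes that `std` is the population standard deviation (NumPy's default) and that training halts, in the style of a Keras early-stopping callback, once validation accuracy has failed to improve for `EARLY_STOPPING_PATIENCE` consecutive epochs. The `STABILITY_WEIGHT` value of 0.5 is a placeholder because the appendix does not give one, and the helper names `epochs_run` and `ga_fitness` are hypothetical.

```python
import statistics

EARLY_STOPPING_PATIENCE = 3   # from Appendix A.1
STABILITY_WEIGHT = 0.5        # placeholder: not specified in the source


def epochs_run(val_accuracy, patience=EARLY_STOPPING_PATIENCE):
    """Number of epochs completed before early stopping would halt training.

    Training stops once `patience` consecutive epochs fail to beat the best
    validation accuracy seen so far.
    """
    best, wait = float("-inf"), 0
    for epoch, acc in enumerate(val_accuracy, start=1):
        if acc > best:
            best, wait = acc, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_accuracy)


def ga_fitness(val_accuracy, stability_weight=STABILITY_WEIGHT):
    """-(best accuracy - weighted std of accuracy), as in Appendix A.2.

    Negated so that a minimizing optimizer prefers high, stable accuracy.
    """
    best_accuracy = max(val_accuracy)
    stability_penalty = statistics.pstdev(val_accuracy) * stability_weight
    return -(best_accuracy - stability_penalty)


# Hypothetical validation-accuracy curve for TRAIN_EPOCHS = 8.
curve = [0.80, 0.85, 0.88, 0.87, 0.88, 0.86, 0.89, 0.90]
n = epochs_run(curve)  # 6: epochs 4-6 fail to beat the 0.88 reached at epoch 3
print(n, round(ga_fitness(curve[:n]), 4))
```

With a larger weight, a curve that oscillates is pushed further from the optimum than a flat curve with the same peak, which is the stated purpose of the stability term.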

Appendix B


| Overlap Rate | Window Length = 78 | Window Length = 156 | Window Length = 312 |
|---|---|---|---|
| 25% | / | 93.3% | 87.5% |
| 50% | 88.3% | 96.4% | 85.0% |
| 75% | / | 92.5% | 93.8% |
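Overlapping segmentation with window length L and overlap rate r advances by a hop of L × (1 - r), so N samples yield floor((N - L) / hop) + 1 complete windows. The sketch below assumes that window lengths are counted in samples and that an incomplete final segment is discarded; the source states neither, and `sliding_windows` is a hypothetical helper. Under that assumption, a 78-sample window with 25% or 75% overlap would need non-integer hops (58.5 and 19.5 samples), which the function rejects rather than rounds; the source does not say whether this is why those cells are marked "/".

```python
def sliding_windows(signal, window_len, overlap_pct):
    """Split a sequence into overlapping windows of window_len samples.

    overlap_pct is an integer percentage (e.g. 50). The hop between window
    starts is window_len * (100 - overlap_pct) / 100 and must be a positive
    integer. A trailing segment shorter than window_len is dropped.
    """
    hop, remainder = divmod(window_len * (100 - overlap_pct), 100)
    if remainder or hop <= 0:
        raise ValueError(
            f"{overlap_pct}% overlap gives a non-integer or zero hop "
            f"for a {window_len}-sample window")
    return [signal[start:start + window_len]
            for start in range(0, len(signal) - window_len + 1, hop)]


samples = list(range(1000))  # stand-in for one sEMG channel
windows = sliding_windows(samples, 156, 50)
print(len(windows), windows[1][0])  # 11 78
```

Integer percentages with `divmod` keep the hop check exact, avoiding floating-point surprises for overlaps such as 30%.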

| Subject | Flex | Extend | Spread | Fist | Point |
|---|---|---|---|---|---|
| 000 | 100.0 | 100.0 | 100.0 | 90.9 | 90.0 |
| 001 | 90.9 | 71.4 | 60.0 | 100.0 | 60.0 |
| 002 | 72.7 | 100.0 | 80.0 | 72.7 | 100.0 |
| 003 | 100.0 | 100.0 | 100.0 | 100.0 | 70.0 |
| 004 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |
| 005 | 100.0 | 100.0 | 100.0 | 90.9 | 100.0 |

| Subject | LSTM | Proposed Method |
|---|---|---|
| 000 | 96.4 | 96.4 |
| 001 | 73.2 | 76.8 |
| 002 | 83.9 | 85.7 |
| 003 | 92.9 | 94.6 |
| 004 | 100.0 | 100.0 |
| 005 | 98.2 | 98.2 |
| Mean ± Std | 90.8 ± 10.3 | 92.0 ± 8.9 |
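The Mean ± Std row can be reproduced from the six per-subject values. The check below gives 90.77 ± 10.32 and 91.95 ± 8.94, which round to the reported figures when the sample standard deviation (n - 1 in the denominator) is used; the population formula would instead give about 9.4 for the LSTM column. The helper name `mean_std` is illustrative.

```python
import statistics

# Per-subject values from the table above (subjects 000-005).
lstm = [96.4, 73.2, 83.9, 92.9, 100.0, 98.2]
proposed = [96.4, 76.8, 85.7, 94.6, 100.0, 98.2]


def mean_std(values):
    """Mean and sample standard deviation (n - 1 in the denominator)."""
    return statistics.mean(values), statistics.stdev(values)


for name, values in [("LSTM", lstm), ("Proposed Method", proposed)]:
    m, s = mean_std(values)
    print(f"{name}: {m:.2f} ± {s:.2f}")

# Per-subject gain of the proposed method over the baseline LSTM.
gains = [round(p - b, 1) for p, b in zip(proposed, lstm)]
print(gains)
```

The gains list shows that no subject scores lower with the proposed method, and that the improvement in the mean comes from subjects 001, 002 and 003.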

| Subject | LSTM | Proposed Method |
|---|---|---|
| 000 | 87.3 | 93.7 |
| 001 | 63.3 | 67.1 |
| 002 | 89.9 | 89.9 |
| 003 | 87.3 | 88.6 |
| 004 | 98.7 | 100.0 |
| 005 | 97.5 | 98.7 |
| Mean ± Std | 87.3 ± 12.8 | 89.7 ± 12.0 |

| Evaluation Index | Flex | Extend | Adduct | Abduct | Spread | Fist | Point |
|---|---|---|---|---|---|---|---|
| Sensitivity | 0.814 | 0.855 | 0.902 | 0.890 | 0.908 | 0.920 | 0.920 |
| Specificity | 0.961 | 0.977 | 0.980 | 0.649 | 0.838 | 1.000 | 0.939 |
| F1-score | 0.882 | 0.912 | 0.940 | 0.751 | 0.872 | 0.959 | 0.929 |
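Sensitivity, specificity and F1-score are per-class quantities. For a multi-class gesture problem they are commonly computed one-vs-rest from a confusion matrix; the source does not state its procedure, so this is an assumption. Note that the F1-score combines sensitivity (recall) with precision, not with specificity, so the F1 row cannot be recomputed from the two rows above it alone. The 3-class confusion matrix and the helper `one_vs_rest` below are hypothetical.

```python
def one_vs_rest(cm, k):
    """Sensitivity, specificity and F1-score for class k.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                 # class-k samples predicted as another class
    fp = sum(row[k] for row in cm) - tp  # other classes predicted as class k
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return sensitivity, specificity, f1


# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted).
cm = [[8, 1, 1],
      [0, 9, 1],
      [2, 0, 8]]
for k in range(len(cm)):
    sens, spec, f1 = one_vs_rest(cm, k)
    print(f"class {k}: sensitivity={sens:.3f} specificity={spec:.3f} F1={f1:.3f}")
```

Because each one-vs-rest split pools all other gestures into the negative class, specificity can stay high even when a class is often confused with one particular neighbour.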

| SVM_1 | RF_1 | SVM_2 | RF_2 | STF-GR | Proposed Method |
|---|---|---|---|---|---|
| 56.1 | 63.9 | 59.4 | 62.2 | 71.7 | 71.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cao, T.-A.; Zhou, H.; Chen, Z.; Dai, Y.; Fang, M.; Wu, C.; Jiang, L.; Dai, Y.; Tong, J. A Novel Hand Motion Intention Recognition Method That Decodes EMG Signals Based on an Improved LSTM. Symmetry 2025, 17, 1587. https://doi.org/10.3390/sym17101587


Chicago/Turabian Style: Cao, Tian-Ao, Hongyou Zhou, Zhengkui Chen, Yiwei Dai, Min Fang, Chengze Wu, Lurong Jiang, Yanyun Dai, and Jijun Tong. 2025. "A Novel Hand Motion Intention Recognition Method That Decodes EMG Signals Based on an Improved LSTM" Symmetry 17, no. 10: 1587. https://doi.org/10.3390/sym17101587
