1. Introduction

Ever since people realized the convenience that artificial intelligence (AI) brings, many AI-based tools and applications have been developed at an amazingly fast pace to improve the quality of our lives. As the fourth industrial revolution approaches, many new developments that depend on AI are emerging. Robots are built not only to assist in manufacturing, transportation, and healthcare but also to support modern smart homes. With all these advanced technologies, future telecommunication traffic will be heavier than ever. Traditional cellular networks cannot keep up with the required data transmission speeds, but this industrial revolution becomes a reality with Fifth-Generation (5G) communication technology. The ability to handle huge amounts of data over the internet is a major milestone, and it increases the demand for cloud storage and computing.

The benefits of using 5G infrastructure [1,2] are that it can transmit data faster because of its higher bandwidth and that it can connect more devices, making the internet of things (IoT) and machine-to-machine communication possible. Even though 5G can transmit big data, it remains important to improve data compression mechanisms to reduce the sizes of transmitted files: the smaller a file, the shorter its transmission time and the less cloud storage it requires.

Images, videos, and audio are common data formats transmitted through networks in our daily lives. Image compression can be categorized as either lossy or lossless, depending on whether the original images can be fully restored. Lossy compression is usually preferred for better web performance: although it cannot fully restore the original images, the recovered images are generally visually recognizable with only minor distortions. Vector quantization (VQ) [3,4] is a fundamental and widely studied image compression technique. To maintain high image quality, a well-trained codebook is essential. When an image contains many similar blocks, for example because of strong symmetry, the codewords in the codebook can be reused more frequently, which improves compression efficiency. The Linde–Buzo–Gray (LBG) algorithm [5], introduced in 1980, is a well-known method for training codebooks. In VQ, an image is divided into blocks, each block is treated as a vector, and each vector is replaced by the index of its closest codeword in the trained codebook, which compresses the image. Improving image compression techniques [6,7,8,9,10,11,12,13,14,15,16,17] is a key issue that many scholars have focused on, and VQ compression technology [6,7,8] has been widely used in various fields in recent years. Improvements in VQ schemes could potentially be applied to other compression techniques in the future.

VQ compression can be categorized into memory VQ and memoryless VQ. Memory VQ exploits the correlations among adjacent blocks, whereas memoryless VQ encodes each block independently and relies only on the correlations among neighboring pixels within a block. Well-known memory VQ compression algorithms include finite-state VQ (FSVQ) [9], side match VQ (SMVQ) [10], and predictive VQ (PVQ) [11,12]. In general, memory VQ schemes require more computation than memoryless VQ ones. Several popular memoryless VQ compression algorithms, such as Predictive Mean Search (PMS) [13], Search-Order Coding (SOC) [14], and Index Compression VQ (ICVQ) [17], have been proposed. Subsequently, several SOC-based algorithms for VQ index tables [16,17] were developed. In 2009, Chang et al. [16] used a state codebook to recompress the VQ index table, and in 2024, Lin et al. [17] applied the concept of side match to recompress the VQ index table. When compression is based on the correlation of neighboring indices in an index table, the index values can be treated like neighboring pixel values. Just as highly correlated adjacent pixels make an image easier to compress, an index table compresses better when adjacent indices are close in value. To this end, the codebook can be sorted so that similar codewords receive close indices, which makes neighboring indices close in value. Because more and more applications require reversibility, the original index table must be exactly recoverable after decompression so that the images retain their VQ-level visual quality. Previous schemes for recompressing the VQ index table perform poorly on texture images. To achieve a high compression rate for the index table, we propose a scheme that combines principal component analysis (PCA) [18] and Huffman coding [19]. The real challenge in compressing texture images is finding the right balance between compression efficiency and quality preservation, that is, determining how much the VQ data can be reduced without noticeable quality loss. Most importantly, any degradation in image quality should be imperceptible to the human eye. The contributions of our proposed scheme are:

We propose a recompression scheme for a VQ index table of texture images, which achieves better compression effectiveness compared to other similar schemes.

Our proposed scheme enables lossless decompression after compression, allowing the restoration of the original VQ index table.

This paper is structured as follows: Section 2 describes the fundamental concepts of the methods related to our scheme; Section 3 details the algorithms used in the proposed scheme; Section 4 presents and analyzes the experiments and results; and Section 5 concludes the paper.

2. Related Work

Our proposed scheme involves several common techniques: vector quantization (VQ), principal component analysis (PCA), and Huffman coding. Their essential concepts are described in the following subsections.

2.1. Vector Quantization Compression

Since the LBG algorithm [5] was proposed by Linde, Buzo, and Gray in 1980, block-based VQ has become a common and widely used technique in image compression, and many subsequent studies have incorporated VQ into their schemes to compress images effectively.

VQ compression involves three parts:

Part 1: Codebook Training

An image in a training set is divided into nonoverlapping blocks. Each block has $k$ pixels and is treated as a $k$-dimensional vector. By processing a reasonably sized training set of images, a well-trained codebook consisting of the most representative codewords can be obtained. The size of the codebook depends on the requirements of the application.
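To make the training step concrete, here is a minimal pure-Python sketch of the splitting form of LBG. It assumes training vectors are equal-length tuples, such as flattened pixel blocks, and that the codebook size is a power of two. The function names and the additive perturbation `eps` are our own illustrative choices. This is not the implementation used in our experiments.

```python
# A minimal LBG (splitting) sketch; function names and eps are hypothetical.


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def centroid(vectors):
    """Component-wise mean of a non-empty list of vectors."""
    return tuple(sum(col) / len(vectors) for col in zip(*vectors))


def lbg(training, size, eps=0.01, tol=1e-4, max_iter=50):
    """Train a codebook with `size` codewords (a power of two) from `training` vectors."""
    codebook = [centroid(training)]
    while len(codebook) < size:
        # Split every codeword into two slightly perturbed copies.
        codebook = [tuple(x + d for x in cw) for cw in codebook for d in (eps, -eps)]
        prev = float("inf")
        for _ in range(max_iter):
            # Partition the training vectors by their nearest codeword.
            cells = [[] for _ in codebook]
            distortion = 0.0
            for v in training:
                j = min(range(len(codebook)), key=lambda i: sq_dist(v, codebook[i]))
                cells[j].append(v)
                distortion += sq_dist(v, codebook[j])
            # Move each codeword to its cell centroid; an empty cell keeps its codeword.
            codebook = [centroid(c) if c else cw for c, cw in zip(cells, codebook)]
            # Stop when the relative drop in distortion is small.
            if prev - distortion <= tol * max(distortion, 1e-12):
                break
            prev = distortion
    return codebook
```

For example, `lbg(blocks, 256)` would return 256 codewords for a list of flattened blocks. Real LBG implementations differ in their splitting perturbation and stopping rule.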

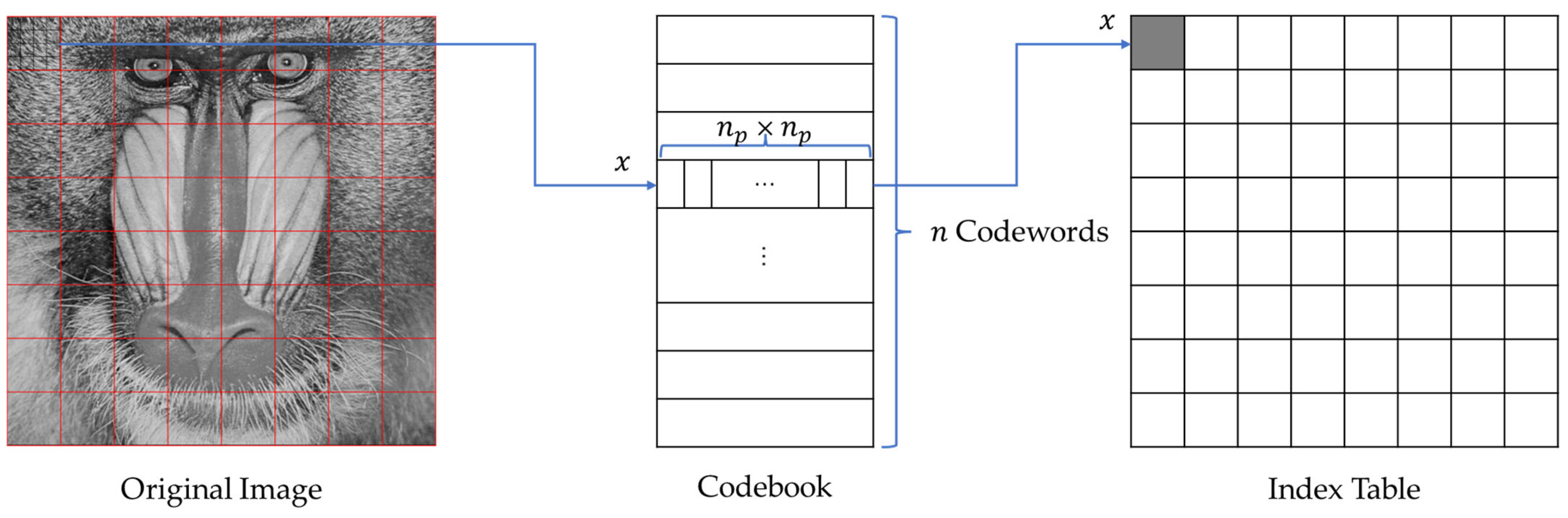

Part 2: Encoding

After obtaining a well-trained codebook, the original image

is divided into

blocks to meet the codeword dimension of the codebook. A set of vectors is represented as

and

is a codebook of size

,

. If

represents the codeword being processed, each codeword

can be represented as

, where

represents the vector being processed. The minimized Euclidean distance

between an input vector

and

is calculated for each pixel block in the image

by going through the codewords in the codebook using Equation (1). Setting

to the minimum distance limits the distortion of the input image.

where

represents the vector dimension being processed.

An index table mapping to the image

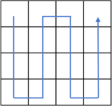

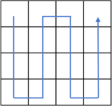

is generated by using the codeword indices in the codebook, which represent the closest distances to the image pixel blocks. A pixel block is replaced by an index of a codeword in the mapped index table. The encoding process is demonstrated in

Figure 1.
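The encoding step can be sketched in a few lines of pure Python. The sketch splits a grayscale image, given as a list of rows, into non-overlapping b×b blocks, flattens each block row by row, and keeps the index of the codeword at the minimum Euclidean distance of Equation (1). It assumes that the image height and width are multiples of the block side `b`. The names `euclidean` and `vq_encode` are hypothetical.

```python
import math

# A full-search VQ encoding sketch; function names and the block layout are hypothetical.


def euclidean(x, c):
    """Euclidean distance d(x, c) between an input vector and a codeword (Equation (1))."""
    return math.sqrt(sum((xt - ct) ** 2 for xt, ct in zip(x, c)))


def vq_encode(image, codebook, b):
    """Return the VQ index table of `image` (list of rows) for b-by-b blocks."""
    rows, cols = len(image), len(image[0])
    index_table = []
    for r in range(0, rows, b):
        index_row = []
        for c in range(0, cols, b):
            # Flatten the block row by row into a k = b*b dimensional vector.
            x = [image[r + i][c + j] for i in range(b) for j in range(b)]
            # Full search: keep the index of the closest codeword.
            best = min(range(len(codebook)), key=lambda idx: euclidean(x, codebook[idx]))
            index_row.append(best)
        index_table.append(index_row)
    return index_table
```

Every block costs one distance computation per codeword, so encoding time grows linearly with the codebook size.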

Because the index table, rather than the pixel values of the blocks, is transmitted, VQ reduces the image size drastically. A high compression rate is achieved because an index into a codebook of $N$ codewords needs only $\lceil \log_2 N \rceil$ bits, far fewer than the bits needed to store the $k$ pixel values of a $k$-dimensional block.

Part 3: Decoding

The index table generated by the encoder and the well-trained codebook are sent to the decoder, which must use the same codebook as the encoder. The image is then retrieved, with little distortion, by a simple table look-up: each index is replaced by its codeword. Decoding is therefore fast, and the image quality can be controlled by the size of the codebook. However, there is a tradeoff: a larger codebook generally improves image quality but lowers the compression rate, because each index requires more bits, and it increases the encoding time, because each block requires more Euclidean distance calculations.
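Decoding can be sketched as the inverse table look-up. The sketch assumes that each codeword stores a b×b block flattened row by row. The function name `vq_decode` is hypothetical.

```python
# A VQ decoding sketch; the function name and block layout are hypothetical.


def vq_decode(index_table, codebook, b):
    """Rebuild an image (list of rows) by pasting codewords back as b-by-b blocks."""
    image = []
    for index_row in index_table:
        block_rows = [[] for _ in range(b)]
        for idx in index_row:
            codeword = codebook[idx]  # table look-up, no distance computation
            for i in range(b):
                # Row i of the block is the i-th run of b values in the codeword.
                block_rows[i].extend(codeword[i * b:(i + 1) * b])
        image.extend(block_rows)
    return image
```

The decoder never computes distances, so its cost depends only on the number of blocks and not on the codebook size.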

2.2. Principal Component Analysis

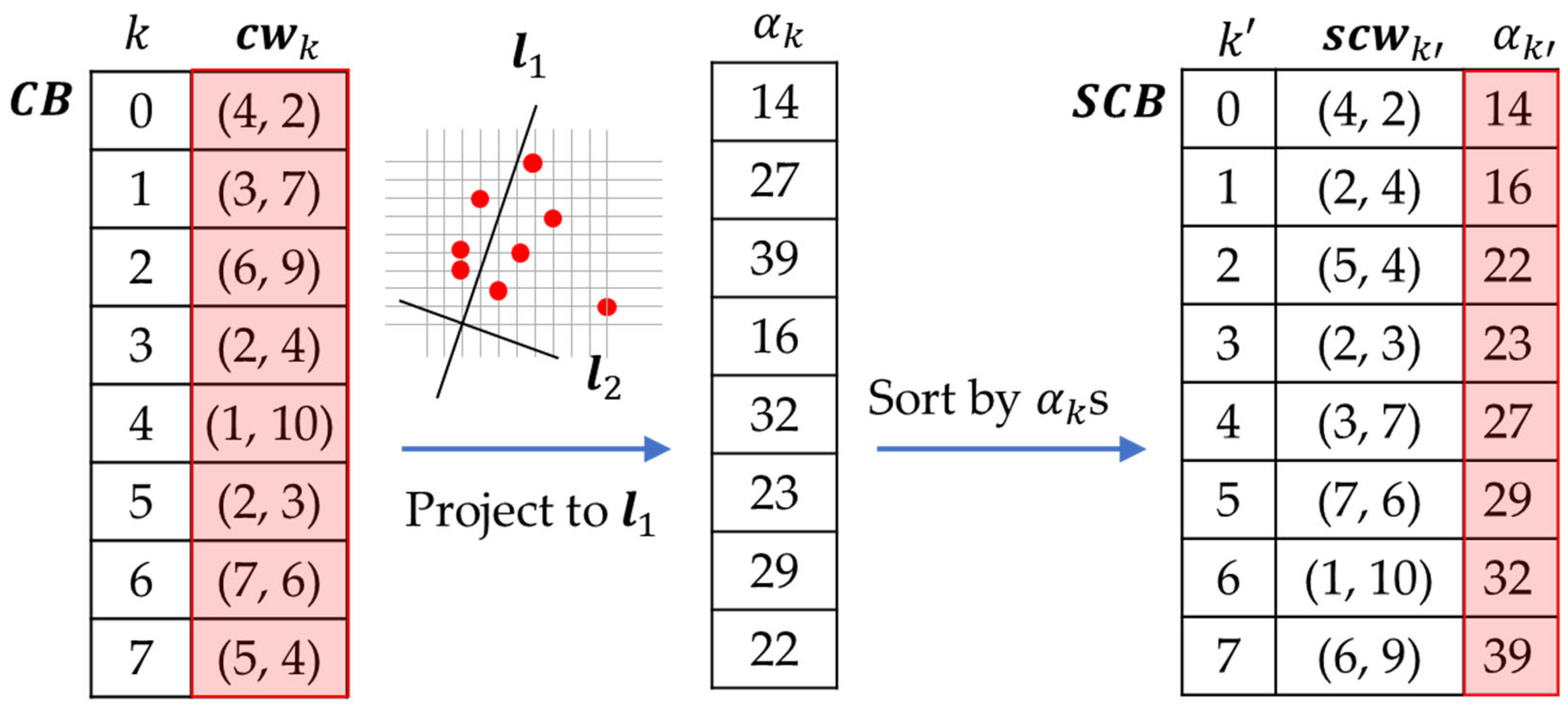

When dealing with a codebook containing $k$-dimensional codewords, sorting the codebook can enhance compression efficiency. To achieve this, we sort the codewords so that similar codewords are located near each other. Principal component analysis (PCA) helps identify an optimal axis that preserves the maximum variance among codewords. By projecting the $k$-dimensional codewords onto this single axis, PCA reduces the data to one dimension while keeping similar codewords close to each other, so the projected values can be used for sorting. The optimal axis is called the first principal component direction $\mathbf{v}_1$, which is the direction that maximizes the variance of the projected points. Let $\Sigma$ be the covariance matrix calculated from the set of codewords $\{\mathbf{c}_0, \mathbf{c}_1, \ldots, \mathbf{c}_{N-1}\}$, where $N$ is the number of codewords in the codebook. The eigenvectors $\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_k$ of $\Sigma$, where $k$ is the codeword dimension, are the principal components, and the corresponding eigenvalues $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_k$ are the variances of the codewords projected onto these directions. Because $\mathbf{v}_1$ has the largest eigenvalue, it explains the most variance in the data; therefore, $\mathbf{v}_1$ is the first principal component direction that we are looking for.

In order to obtain a sorted codebook and achieve index correlation through PCA, the steps are as follows:

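Step 1: Obtain the optimal direction $\mathbf{v}_1$ and calculate the projected value of every codeword $\mathbf{c}_j$ using Equation (2):

$$p_j = \mathbf{v}_1^{\top} \mathbf{c}_j, \quad j = 0, 1, \ldots, N-1, \tag{2}$$

where $j$ denotes the index of the codeword.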

Step 2: Sort the codewords based on their projected values to obtain the sorted codebook.

Step 3: Update the index table by finding the corresponding indices in the sorted codebook.
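Steps 1 to 3 can be sketched in pure Python as follows. As a stand-in for a full eigendecomposition, the sketch approximates $\mathbf{v}_1$ by power iteration on the codeword covariance matrix. The starting vector and the sign convention (both $\mathbf{v}_1$ and $-\mathbf{v}_1$ are valid) are our own choices. The helper names are hypothetical.

```python
# A PCA-sorting sketch; helper names are hypothetical, and power iteration
# stands in for a full eigendecomposition of the covariance matrix.


def first_principal_direction(codebook, iters=200):
    """Approximate v1, the dominant eigenvector of the codeword covariance matrix."""
    n, k = len(codebook), len(codebook[0])
    mean = [sum(cw[t] for cw in codebook) / n for t in range(k)]
    centered = [[cw[t] - mean[t] for t in range(k)] for cw in codebook]
    # Covariance matrix (k x k); the 1/n scaling does not change eigenvectors.
    cov = [[sum(row[a] * row[b] for row in centered) / n for b in range(k)]
           for a in range(k)]
    # Start from the centered codeword with the largest norm (a practical choice).
    v = max(centered, key=lambda row: sum(x * x for x in row))
    for _ in range(iters):
        w = [sum(cov[a][b] * v[b] for b in range(k)) for a in range(k)]
        norm = sum(x * x for x in w) ** 0.5
        if norm == 0:  # no variance left in this direction
            break
        v = [x / norm for x in w]
    # v1 and -v1 are equally valid; fix the sign for a reproducible order.
    return v if sum(v) >= 0 else [-x for x in v]


def pca_sort(codebook, index_table):
    """Steps 1-3: project on v1 (Equation (2)), sort, and remap the index table."""
    v1 = first_principal_direction(codebook)
    proj = [sum(a * b for a, b in zip(cw, v1)) for cw in codebook]        # Step 1
    order = sorted(range(len(codebook)), key=lambda j: proj[j])           # Step 2
    sorted_codebook = [codebook[j] for j in order]
    new_index = {old: new for new, old in enumerate(order)}
    updated_table = [[new_index[i] for i in row] for row in index_table]  # Step 3
    return sorted_codebook, updated_table
```

Step 3 does not change which codeword a block uses, only its index. The decoded image is therefore identical, since `sorted_codebook[updated_table[r][c]]` equals `codebook[index_table[r][c]]`.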

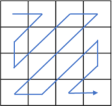

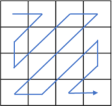

Figure 2 shows an example of PCA sorting. Let a codebook $C$ consist of eight 2D codewords. The two PCA lines $\mathbf{v}_1$ and $\mathbf{v}_2$, which pass through the center of the codewords, are shifted to the origin; $\mathbf{v}_1$ and $\mathbf{v}_2$ are perpendicular to each other. We select the PCA line $\mathbf{v}_1$, onto which the projected codewords have the maximum variance, and obtain the projected values $p_j$ of the codewords on $\mathbf{v}_1$: 14, 27, 39, 16, 32, 23, 29, and 22. We then sort the codewords according to their projected values and obtain the sorted codebook $C'$. An index table constructed with the sorted codebook better reflects the correlation between neighboring blocks.
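The sorting in Figure 2 can be checked with a few lines of Python. Here the eight codewords are referred to by their positions 0 to 7 in the unsorted codebook. This labeling is our assumption, since the figure's codeword names are not reproduced here. The projected values are the ones listed above.

```python
projected = [14, 27, 39, 16, 32, 23, 29, 22]  # p_j of the unsorted codewords 0..7

# Step 2: positions of the old codewords in the sorted codebook, by increasing p_j.
order = sorted(range(len(projected)), key=lambda j: projected[j])

# Step 3: new index of every old index, used to update the index table.
new_index = [0] * len(order)
for new, old in enumerate(order):
    new_index[old] = new

print(order)      # [0, 3, 7, 5, 1, 6, 4, 2]
print(new_index)  # [0, 4, 7, 1, 6, 3, 5, 2]
```

For instance, the codeword at old position 2 has the largest projection (39), so it moves to the last position, 7.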

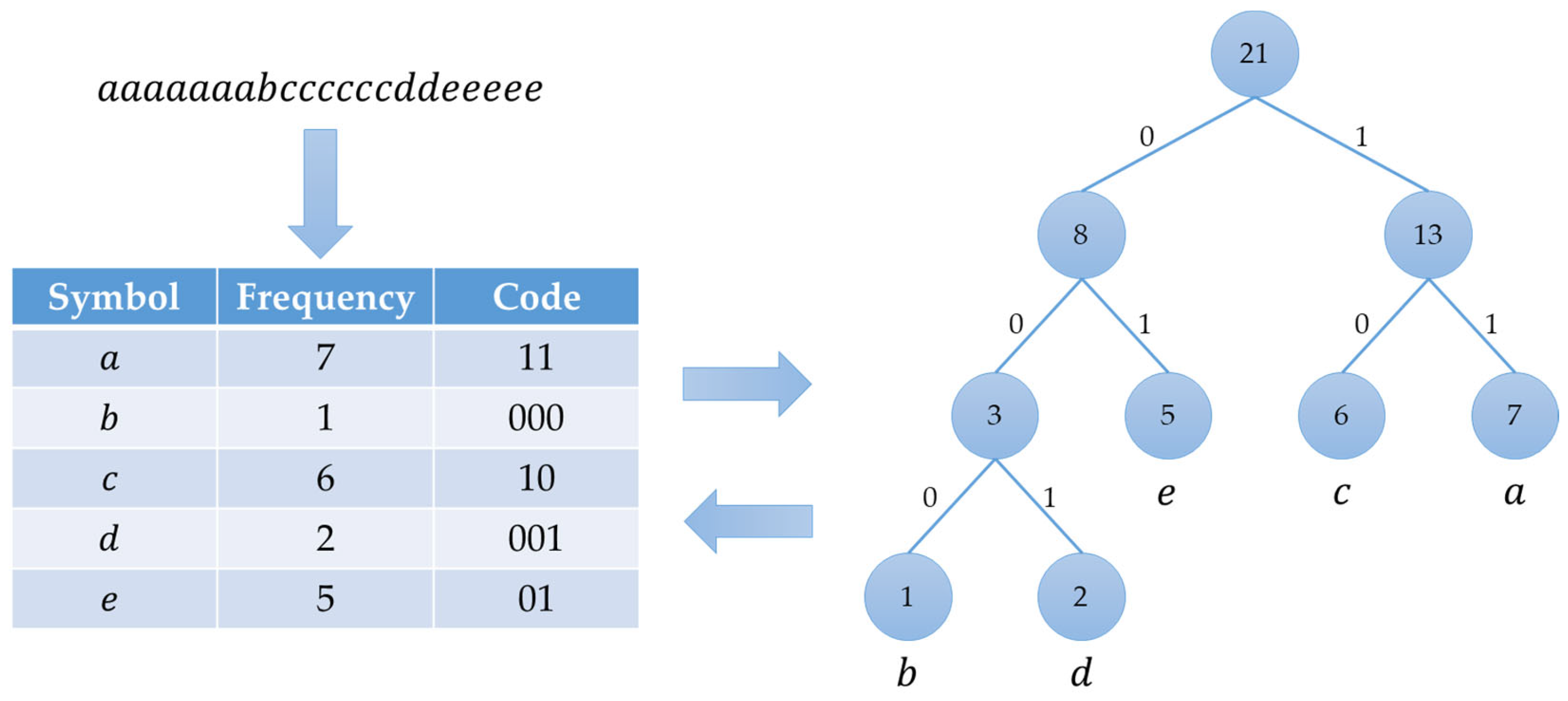

2.3. Huffman Coding

Huffman coding is a common encoding algorithm used for data compression. It was developed by David A. Huffman [19] in 1952 to represent data by variable-length codes. The key idea is to represent frequently used symbols with fewer bits. Based on this idea, a frequency-based binary tree is built from the bottom up, and the tree is eventually converted into a Huffman code table.

The inputs to Huffman coding are a symbol table $S = \{s_1, s_2, \ldots, s_n\}$ of size $n$ and a corresponding frequency table $F = \{f_1, f_2, \ldots, f_n\}$. The output of the algorithm is a Huffman code table $H$, which can be used to convert symbols into bit streams.

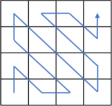

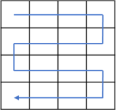

Figure 3 shows an example of building a Huffman code. Given a symbol stream, every distinct symbol becomes a leaf node of the Huffman tree, and the tree is built from the bottom up according to the frequencies of the symbols in the stream: the two nodes with the lowest frequencies are repeatedly merged into a parent node whose frequency is their sum. After the tree is built, each left branch is labeled 0 and each right branch is labeled 1. The code of a symbol is obtained by walking from the root to the leaf node of that symbol.
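The following sketch builds a Huffman code table from a frequency table using Python's `heapq` module. Ties are broken by an insertion counter. As a result, an encoder and a decoder that hold the same frequency table build the same tree. The tie-breaking rule and the function name are our assumptions. Symbols are assumed to be non-tuple values such as integers or characters.

```python
import heapq
from collections import Counter

# A minimal Huffman sketch; the function name and tie-breaking rule are hypothetical.


def huffman_code_table(freq):
    """Map every symbol in `freq` (symbol -> count) to a prefix-free bit string."""
    # Heap entries are (weight, tie_breaker, node); a node is a symbol or a (left, right) pair.
    heap = [(w, i, s) for i, (s, w) in enumerate(sorted(freq.items()))]
    heapq.heapify(heap)
    if len(heap) == 1:  # a lone symbol still needs a one-bit code
        return {heap[0][2]: "0"}
    next_id = len(heap)
    while len(heap) > 1:
        # Bottom-up: merge the two least frequent nodes into a parent node.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (w1 + w2, next_id, (left, right)))
        next_id += 1
    codes = {}

    def walk(node, prefix):
        if isinstance(node, tuple):  # internal node: left child 0, right child 1
            walk(node[0], prefix + "0")
            walk(node[1], prefix + "1")
        else:  # leaf: the path from the root is the symbol's code
            codes[node] = prefix

    walk(heap[0][2], "")
    return codes


# Example with the frequency table A: 4, B: 2, C: 1.
example_codes = huffman_code_table(Counter("AAAABBC"))
```

In the example, the most frequent symbol A receives the one-bit code `1`, while B and C receive `01` and `00`.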

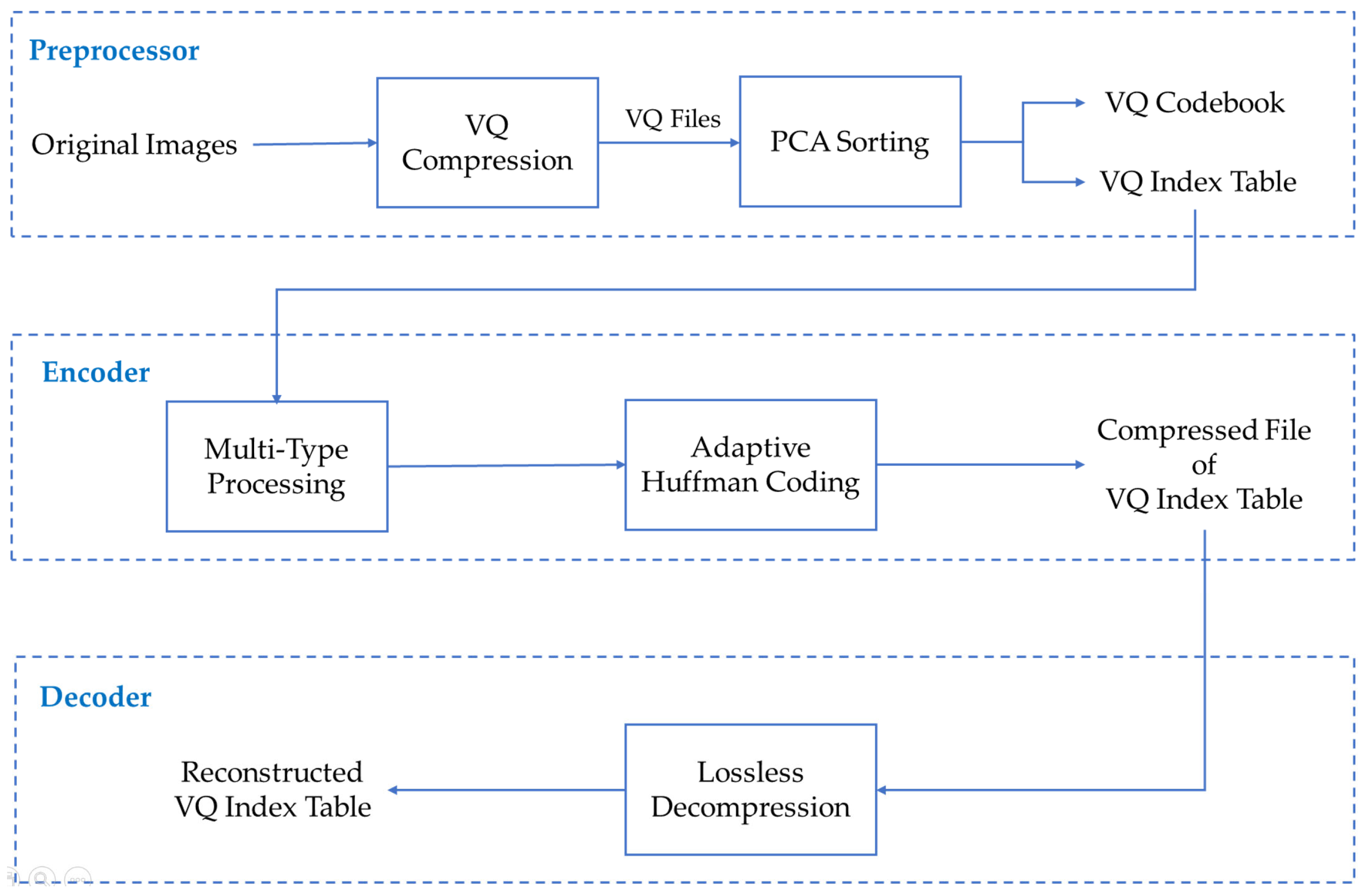

3. Proposed Scheme

In this section, we propose a novel recompression scheme based on Huffman coding of the index changes between neighboring blocks. First, we obtain a well-ordered codebook by applying the PCA algorithm to the pretrained VQ codebook. Because texture images contain many similar blocks, the codebook indices of neighboring blocks are often highly correlated after PCA sorting. Based on this characteristic, we calculate the differences or XOR values between neighboring blocks along specified paths, resulting in a processed index table. Because the processed index table contains some high-frequency symbols, it can be further compressed using Huffman coding.

Figure 4 shows the flow of our proposed scheme. In the preprocessor, we apply VQ compression to the original images, generating the VQ codebook and the VQ index table. We then sort the VQ codebook using PCA, obtaining the sorted VQ codebook and the updated VQ index table, which is the input to the encoder. Multi-type processing with different paths and methods yields several types of processed index tables. Because processing produces numerous high-frequency symbols, each processed table is compressed using adaptive Huffman coding, producing one compressed file per type. The type with the smallest file size, that is, the best compression result, is selected and recorded with an indicator. Upon receiving the compressed VQ index table file, the decoder first extracts the indicator and then performs the corresponding reverse process to losslessly reconstruct the original VQ index table.

3.1. Multi-Type Processing Phase

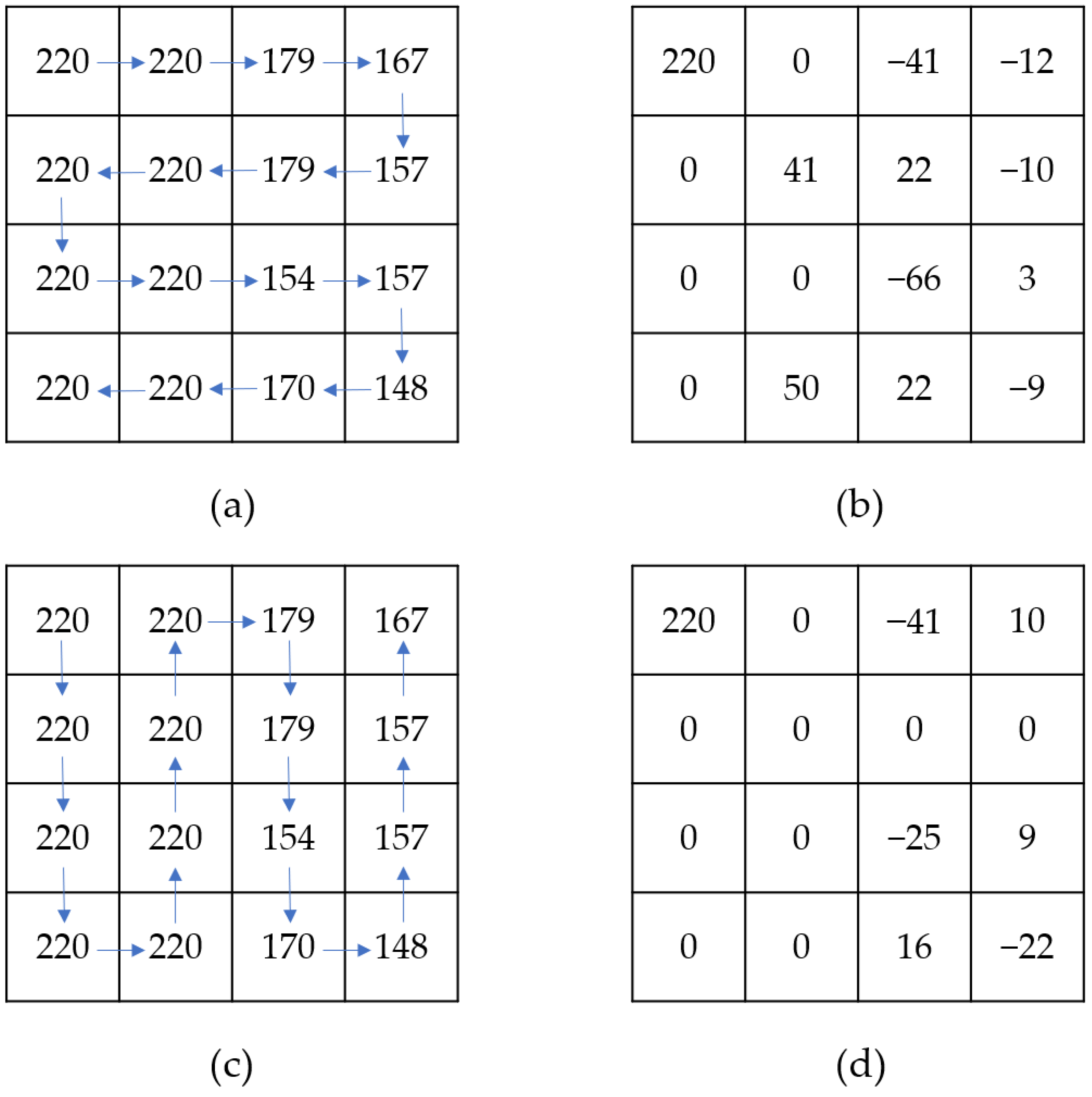

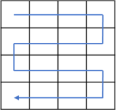

The core of our research is this multi-type processing, which aims to optimize the compression results. As shown in Table 1, there are eight processing types: four different serpentine or zigzag paths, each combined with either a difference method or an XOR method. Each processed index table is compressed using adaptive Huffman coding. To achieve the best compression result, we compare the results of all types, select the best one, and denote it with a 3-bit indicator. Note that each path in Table 1 is only a schematic representation of the scan direction: different block sizes for LBG training and different sizes of VQ-compressed images lead to index tables of different sizes, which are not necessarily the size shown under the paths in Table 1.
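Table 1 specifies the four paths only schematically. The sketch below therefore uses four plausible scan orders as stand-ins: horizontal and vertical serpentine scans, and two diagonal zigzag scans. These path definitions, their names, and the type numbering in `TYPES` are assumptions for illustration, not the exact paths of Table 1. Each function returns the (row, column) positions visited for an index table of any height `h` and width `w`.

```python
# Hypothetical stand-ins for the four paths of Table 1; not the paper's exact definitions.


def serpentine_rows(h, w):
    """Left-to-right on even rows, right-to-left on odd rows."""
    return [(r, c if r % 2 == 0 else w - 1 - c) for r in range(h) for c in range(w)]


def serpentine_cols(h, w):
    """Top-to-bottom on even columns, bottom-to-top on odd columns."""
    return [(r if c % 2 == 0 else h - 1 - r, c) for c in range(w) for r in range(h)]


def zigzag(h, w):
    """JPEG-style diagonal zigzag starting at the top-left corner."""
    path = []
    for d in range(h + w - 1):
        diag = [(r, d - r) for r in range(max(0, d - w + 1), min(h, d + 1))]
        # Alternate the direction of travel on successive anti-diagonals.
        path.extend(diag if d % 2 else diag[::-1])
    return path


def zigzag_transposed(h, w):
    """Zigzag that first moves down the first column instead of along the first row."""
    return [(r, c) for c, r in zigzag(w, h)]


PATHS = [serpentine_rows, serpentine_cols, zigzag, zigzag_transposed]
METHODS = ["difference", "xor"]
# Assumed numbering: types 1-4 use the difference method, types 5-8 use XOR.
TYPES = [(path, method) for method in METHODS for path in PATHS]
```

Because every path visits each position exactly once, any of them can drive the lossless processing of Equation (3).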

First, we retain the index value at the first step of the path so that the VQ index table can be reconstructed without any loss. Every other position is processed relative to the index value at the previous position along the selected path. The processed index values are obtained using Equation (3): the starting position keeps its original index value, while subsequent positions store either a difference or an XOR value, depending on the specified method.

$$PIT(s) = \begin{cases} IT(s), & s = 1, \\ IT(s) - IT(s-1), & s > 1 \text{ and } m = \text{difference}, \\ IT(s) \oplus IT(s-1), & s > 1 \text{ and } m = \text{XOR}, \end{cases} \tag{3}$$

Here, $PIT$ represents the processed index table, $IT$ represents the original VQ index table, $s$ represents the step in the path (so $IT(s)$ is the index at the position visited at step $s$), and $m$ represents the processing method.
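Equation (3) can be sketched in Python as follows. The sketch assumes that the index table is a list of rows and that `path` is a list of (row, column) positions visiting every position exactly once. The processed values are stored at the same positions as the original indices. The function name is hypothetical.

```python
# A sketch of Equation (3); the function name and data layout are hypothetical.


def process_index_table(index_table, path, method):
    """Keep the first index on `path`; replace every later one by its change from the previous step."""
    pit = [row[:] for row in index_table]  # processed index table, same shape
    for step in range(1, len(path)):
        r, c = path[step]
        pr, pc = path[step - 1]
        cur, prev = index_table[r][c], index_table[pr][pc]
        if method == "difference":
            pit[r][c] = cur - prev
        elif method == "xor":
            pit[r][c] = cur ^ prev
        else:
            raise ValueError(f"unknown method: {method}")
    return pit
```

For a 2×2 table `[[220, 221], [219, 219]]` scanned along `[(0, 0), (0, 1), (1, 1), (1, 0)]`, the starting index 220 is kept. The difference method yields `[[220, 1], [0, -2]]`, and the XOR method yields `[[220, 1], [0, 6]]`.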

Figure 5 shows examples for two different processing types that both use difference calculations: (a) and (c) show the original indices of the VQ-compressed image along the paths of the two types, while (b) and (d) show the corresponding processed index tables. The index value of the starting point, 220, remains unchanged, while the values at the other positions indicate the changes relative to their respective previous positions. The other types yield similar results. In other words, the VQ index table of an image corresponds to eight processed index tables, one per type.

3.2. Adaptive Huffman Coding Phase

Because adjacent indices in texture images are correlated, the processed index tables contain some high-frequency change values, and Huffman coding can exploit this characteristic. Finally, we choose the type that produces the shortest compressed code and record it with a 3-bit indicator.

Because texture features vary across images, we construct an independent Huffman coding table for each image instead of using a shared one; in this sense, our scheme adaptively constructs a Huffman code tailored to each texture image. Algorithm 1 describes the adaptive Huffman coding. First, the processed index table PIT is input to the encoder. Then, the change values in PIT are counted to obtain the unique symbols and the count of each symbol, which constitute the frequency table FT. Next, the frequencies are converted into probabilities, the Huffman tree is constructed, and PIT is encoded with this tree to obtain the Huffman code HC. In summary, the process outputs the Huffman code HC and the frequency table FT. We store the frequency table instead of the Huffman tree because it occupies less storage space, which yields better compression, and the decoder can rebuild the same tree from it.

| Algorithm 1. Adaptive Huffman Coding |

| Input | Processed index table PIT. |

| Output | Huffman codes HC, frequency table FT. |

| Step 1 | Calculate frequencies:

symbols = unique(PIT(:));

% Get the unique symbols (sorted column vector) in the processed index table.

counts = histcounts(PIT(:), [symbols; max(symbols) + 1]);

% Count the frequency of each symbol; the extra edge closes the last bin.

FT = [symbols, counts'];

% Combine symbols and counts into a two-column frequency table.

|

| Step 2 | Build Huffman tree:

prob = counts' / sum(counts);

% Normalize frequencies to probabilities (column vector, like symbols).

huffmanDict = huffmandict(symbols, prob);

% Construct the Huffman code dictionary (tree) from the symbols and probabilities (Communications Toolbox).

|

| Step 3 | Generate codes:

HC = huffmanenco(PIT(:), huffmanDict);

% Encode the symbols of PIT (in column-major order) using the Huffman dictionary.

|

| Step 4 | Output Huffman codes HC, frequency table FT. |

We perform adaptive Huffman coding on the processed index tables of all eight types, obtaining eight sets of Huffman codes HC and frequency tables FT. To obtain the best compression, we then choose the processing type whose HC and FT require the least storage space and represent it with a 3-bit indicator. For example, if type 2 achieves the best compression, “001” is recorded as the indicator. Finally, the compressed file of the VQ index table consists of the indicator, the frequency table, and the Huffman code.
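The selection step can be sketched without generating the code words themselves. The length of a Huffman-coded stream equals the sum of the weights of all internal nodes created while the tree is built. The sketch uses that fact to compare candidate processed index tables. The serialization of the frequency table is not specified here, so `FT_ENTRY_BITS`, a fixed cost per (symbol, count) entry, is a hypothetical parameter. The indicator is the 3-bit binary form of the type number minus 1, matching the “001” example above.

```python
import heapq
from collections import Counter

FT_ENTRY_BITS = 32  # hypothetical storage cost of one (symbol, count) entry in FT


def huffman_bits(symbols):
    """Total length in bits of the Huffman-coded symbol stream."""
    heap = list(Counter(symbols).values())
    if len(heap) == 1:
        return heap[0]  # one distinct symbol: one bit per occurrence
    heapq.heapify(heap)
    total = 0
    while len(heap) > 1:
        merged = heapq.heappop(heap) + heapq.heappop(heap)
        total += merged  # each merge adds one bit to every symbol below it
        heapq.heappush(heap, merged)
    return total


def select_type(processed_tables):
    """Pick the processed index table (types 1..8) with the smallest HC + FT size."""
    best_type, best_bits = None, None
    for t, pit in enumerate(processed_tables, start=1):
        flat = [v for row in pit for v in row]
        bits = huffman_bits(flat) + FT_ENTRY_BITS * len(set(flat))
        if best_bits is None or bits < best_bits:  # ties keep the lower type
            best_type, best_bits = t, bits
    indicator = format(best_type - 1, "03b")  # e.g., type 2 -> "001"
    return best_type, indicator, best_bits
```

Because this only needs the symbol counts, all eight types can be compared cheaply. Codes then need to be generated only for the winning type.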

3.3. Lossless Decompression Phase

In the lossless decompression phase, after receiving the compressed file of the VQ index table, the decoder first identifies the processing type from the indicator. It then builds a Huffman tree from the frequency table in the same way as the encoder and decodes the Huffman code to retrieve the processed index table. Since the processing type is known, the VQ index table can be reconstructed using the corresponding path and method. Equation (4) gives the formula for VQ index table reconstruction, where $PIT$ is the processed index table, $IT$ is the reconstructed VQ index table, $s$ is the step in the path, and $m$ is the processing method:

$$IT(s) = \begin{cases} PIT(s), & s = 1, \\ PIT(s) + IT(s-1), & s > 1 \text{ and } m = \text{difference}, \\ PIT(s) \oplus IT(s-1), & s > 1 \text{ and } m = \text{XOR}. \end{cases} \tag{4}$$

At the starting step of the path, the index value equals the processed index value. For every other position, if the method is difference, the processed index value of the current step is added to the already reconstructed VQ index value of the previous step to recover the original VQ index value. If the method is XOR, the processed index value of the current step is XORed with the reconstructed VQ index value of the previous step. After all steps of the path have been processed, the VQ index table is fully reconstructed.
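Equation (4) can be sketched as follows. The sketch assumes that the processed index table is a list of rows and that `path` lists the (row, column) positions of the selected type's path in order. The key point is that each step uses the already reconstructed original index of the previous step, not its processed value. The function name is hypothetical.

```python
# A sketch of Equation (4); the function name and data layout are hypothetical.


def reconstruct_index_table(pit, path, method):
    """Undo the processing along `path` step by step to recover the VQ index table."""
    it = [row[:] for row in pit]  # reconstructed VQ index table
    for step in range(1, len(path)):
        r, c = path[step]
        pr, pc = path[step - 1]
        prev = it[pr][pc]  # original index of the previous step, already reconstructed
        if method == "difference":
            it[r][c] = pit[r][c] + prev
        elif method == "xor":
            it[r][c] = pit[r][c] ^ prev
        else:
            raise ValueError(f"unknown method: {method}")
    return it
```

For example, along the path `[(0, 0), (0, 1), (1, 1), (1, 0)]`, the processed table `[[220, 1], [0, -2]]` (difference) and `[[220, 1], [0, 6]]` (XOR) both reconstruct `[[220, 221], [219, 219]]`.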

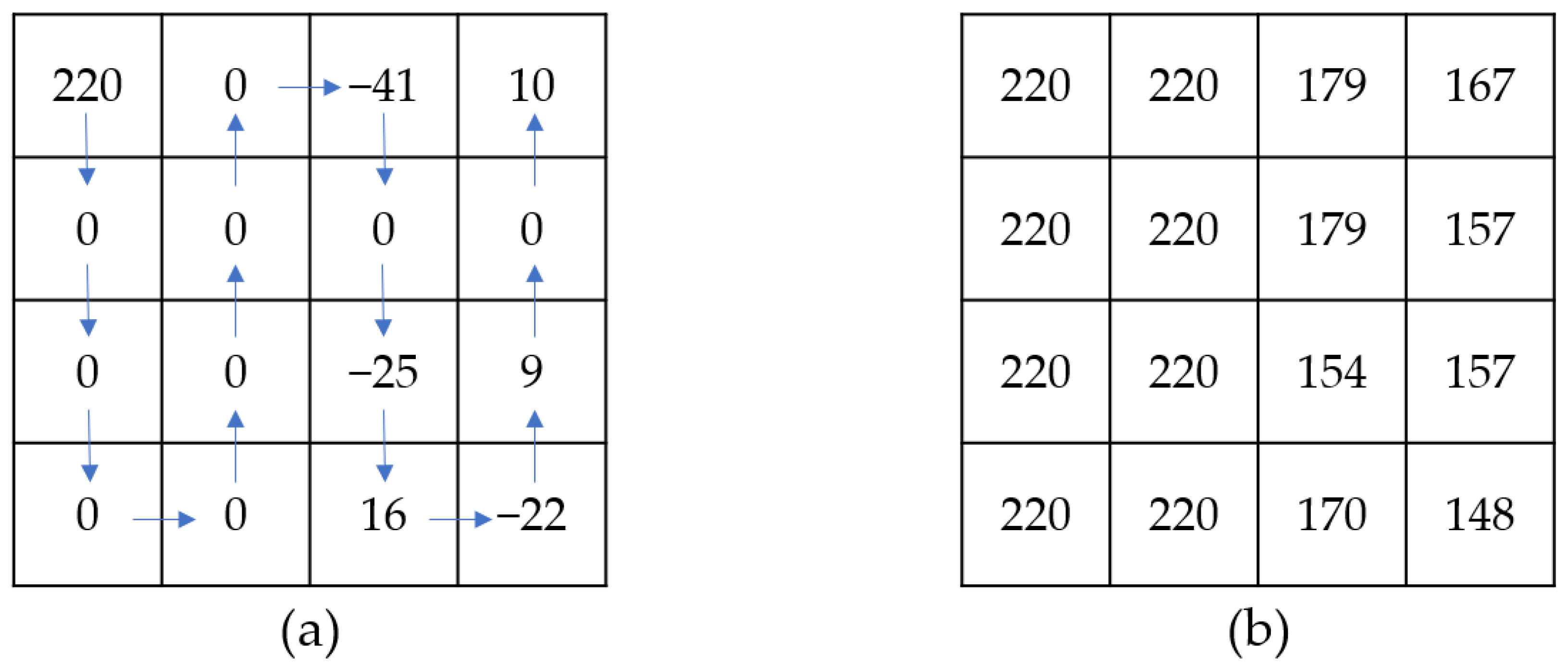

Figure 6 shows a specific example. Assume that the indicator extracted by the decoder is “001”, which corresponds to type 2. Figure 6a shows the processed index table along the path of type 2. Because the method of type 2 is “difference”, the original VQ index table reconstructed according to Equation (4) is shown in Figure 6b.

4. Experimental Results

To verify the performance of our proposed scheme on texture images, we compare it with other algorithms that also compress the VQ index table, namely the search-order coding (SOC) algorithm [14], the SOC-based state codebook (SOC+SC) algorithm [16], and the SOC-based side match (SOC+SM) algorithm [17]. Our experimental environment consists of a Windows 11 laptop with a 3.20 GHz AMD Ryzen 7 CPU and 16 GB of RAM, and the software used is MATLAB R2024a.

We use the bit rate ($BR$), i.e., bits per pixel, as the metric for comparing compression performance with the other schemes. Equation (5) shows how the bit rate is calculated:

$$BR = \frac{|CF|}{H \times W}, \tag{5}$$

where $|CF|$ represents the size, in bits, of the compressed file of a VQ index table and $H \times W$ represents the dimensions of the VQ image.
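Equation (5) is simple arithmetic, but comparing it with the cost of an unrecompressed index table helps put the reported numbers in context. The sketch below computes both. The plain-VQ baseline, which stores every index with $\lceil \log_2 N \rceil$ bits, is a reference point we add here for illustration. The function names and the 16-pixel block in the usage note are hypothetical.

```python
import math

# Hypothetical helpers for Equation (5) and a plain-VQ reference point.


def bit_rate(compressed_bits, height, width):
    """Equation (5): bits per pixel of a compressed VQ index table."""
    return compressed_bits / (height * width)


def plain_vq_bit_rate(codebook_size, block_pixels):
    """Bit rate of an uncompressed VQ index table with fixed-length indices."""
    return math.ceil(math.log2(codebook_size)) / block_pixels
```

With 16-pixel blocks, for instance, a plain VQ index table costs 0.375 bpp for a 64-codeword codebook and 0.5625 bpp for 512 codewords. Index table recompression lowers the bit rate below this level without changing the decoded image.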

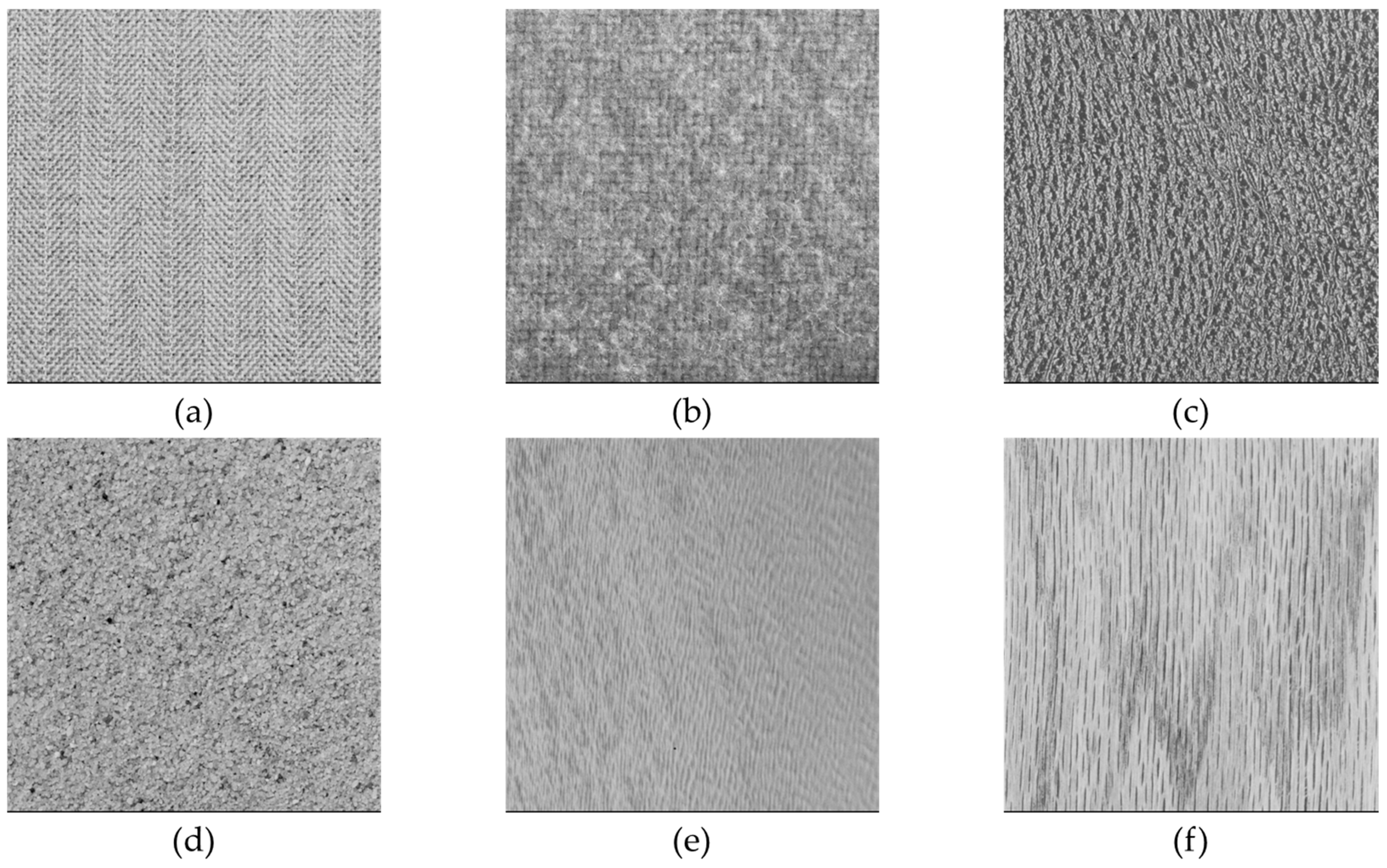

To explore potential limitations of the proposed method, we designed experiments to investigate the impact of texture images on compression efficiency. Texture characteristics affect VQ compression and, consequently, the efficiency of recompression. Therefore, we conducted experiments on different images with varying codebook sizes to obtain comprehensive results.

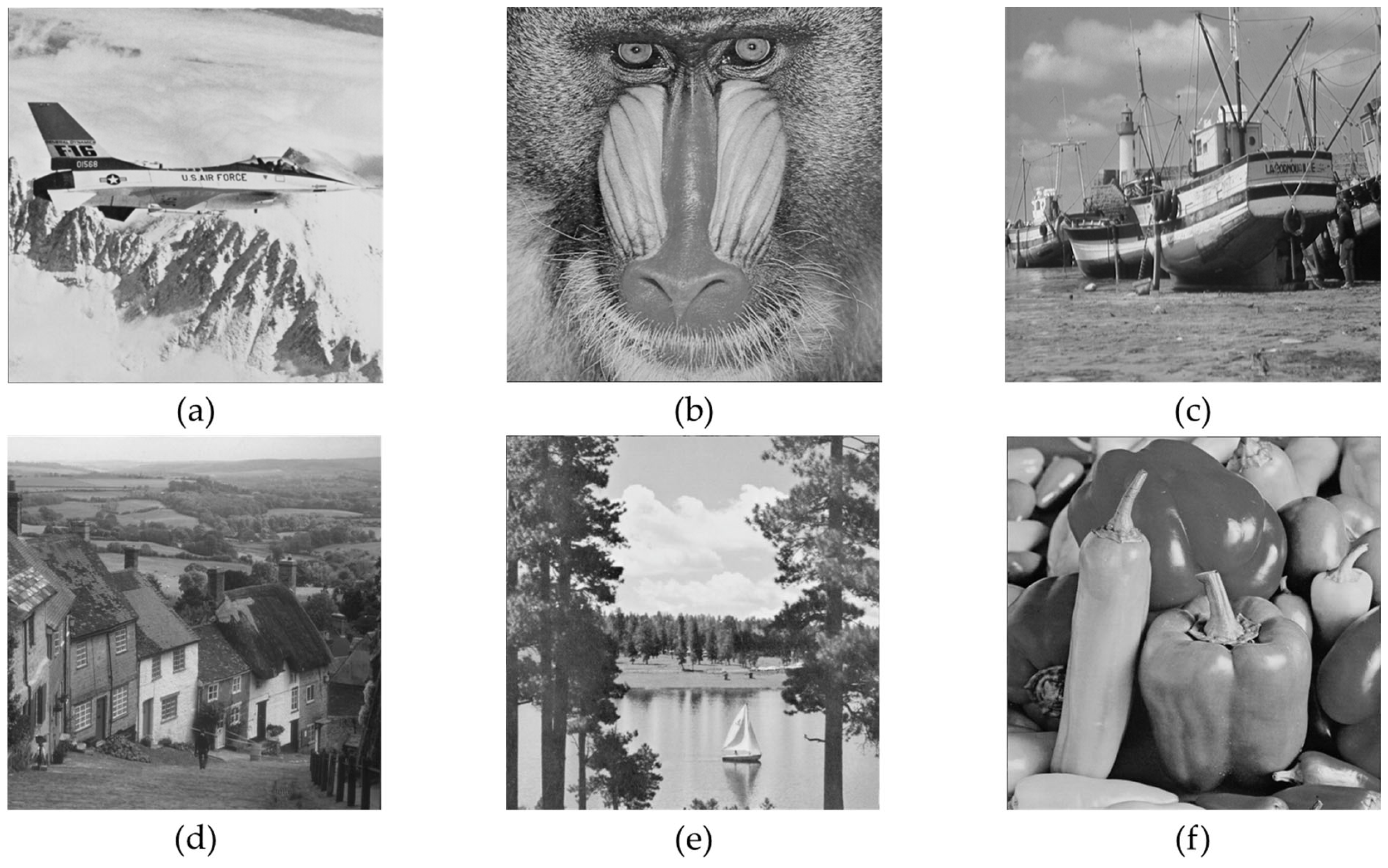

Figure 7 shows the six monochrome texture images from the USC-SIPI image database [20] that we use as test images. We divided each image into nonoverlapping blocks and then used the LBG algorithm [5] to obtain a VQ codebook and its index table.

Table 2, Table 3, Table 4 and Table 5 show the bit rates and improvement rates of our proposed scheme compared with the other index table recompression schemes on the monochrome texture images, for VQ codebook sizes of 64, 128, 256, and 512, respectively. The three schemes [14,16,17] used in the comparisons are all based on the SOC algorithm. In this experiment, the number of bits was set to the same value for all three schemes, and a fixed matching range was used for the scheme proposed by Lin et al. [17]. For all VQ images and all codebook sizes, our proposed scheme yields lower bit rates than the other schemes, demonstrating better compression performance on texture images. Notably, with 64 codewords in the VQ codebook, the bit rate of the Wood Grain image is only 0.2188, an improvement of more than 22% over the other three schemes.

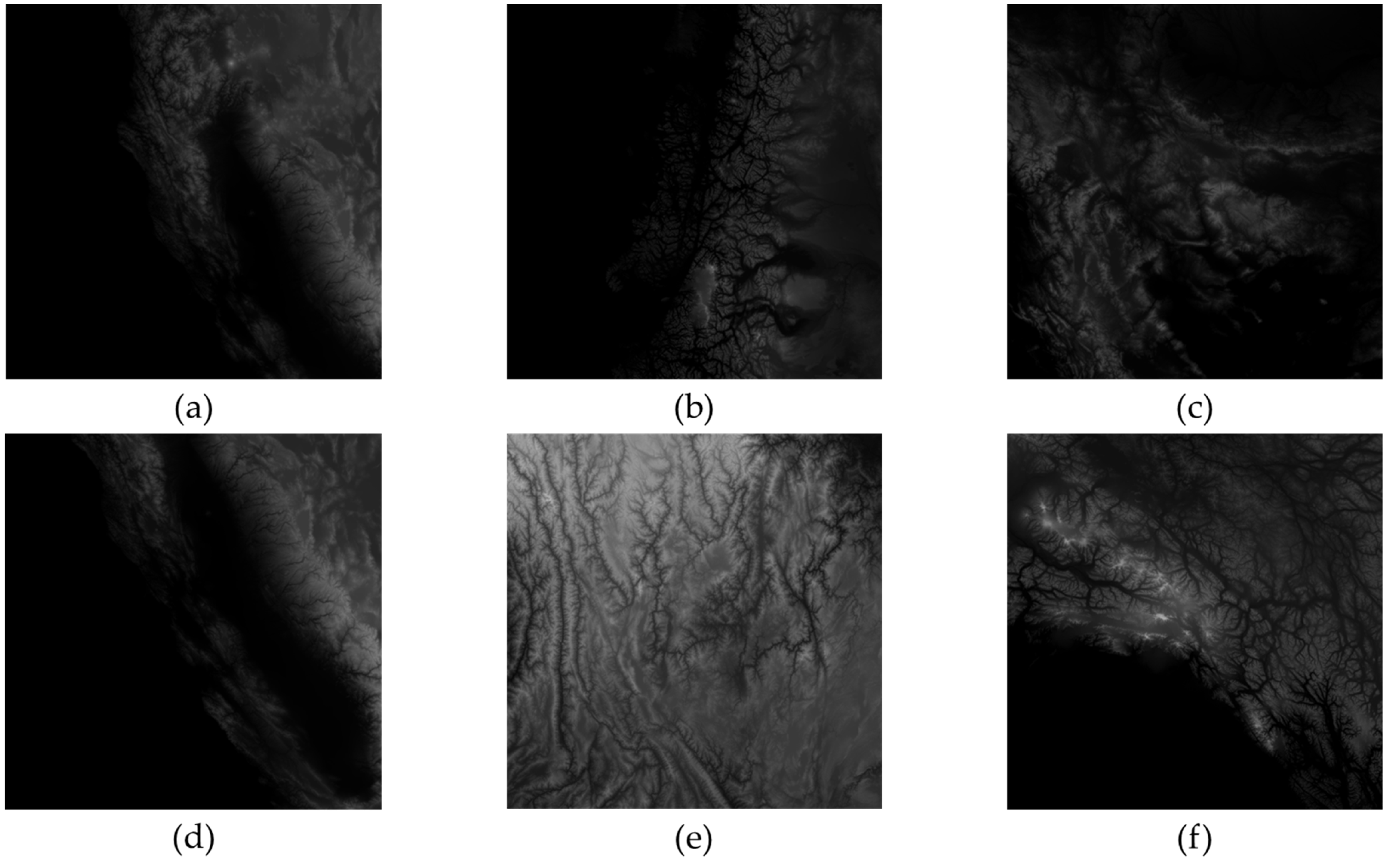

We found that earth height map images also exhibit texture features. Therefore, we also selected six height map images from the Earth Terrain, Height, and Segmentation Map Images dataset [21] as test images, shown in Figure 8.

Table 6, Table 7, Table 8 and Table 9 display the bit rates and improvement rates of our proposed scheme compared with the other schemes on the earth height map images, with VQ codebooks of sizes 64, 128, 256, and 512, respectively. Our scheme achieved better compression across all codebook sizes. In particular, as shown in Table 6, the bit rates are only 0.1556 for Height map 1 and 0.1503 for Height map 4, an improvement rate of more than 30% over the other schemes. The improvement rates were also high for the other codebook sizes. These results show that our scheme is effective on texture images, including earth height map images.

Our scheme outperforms the traditional SOC-based schemes because the blocks of texture images are very similar, which leads to many similar VQ codewords. Without a sorted codebook, adjacent blocks can look alike yet have very different index values; PCA sorting brings the indices of similar codewords close together. Moreover, our scheme does not rely on index similarity alone: adaptive Huffman coding also exploits the high frequencies of the change symbols.

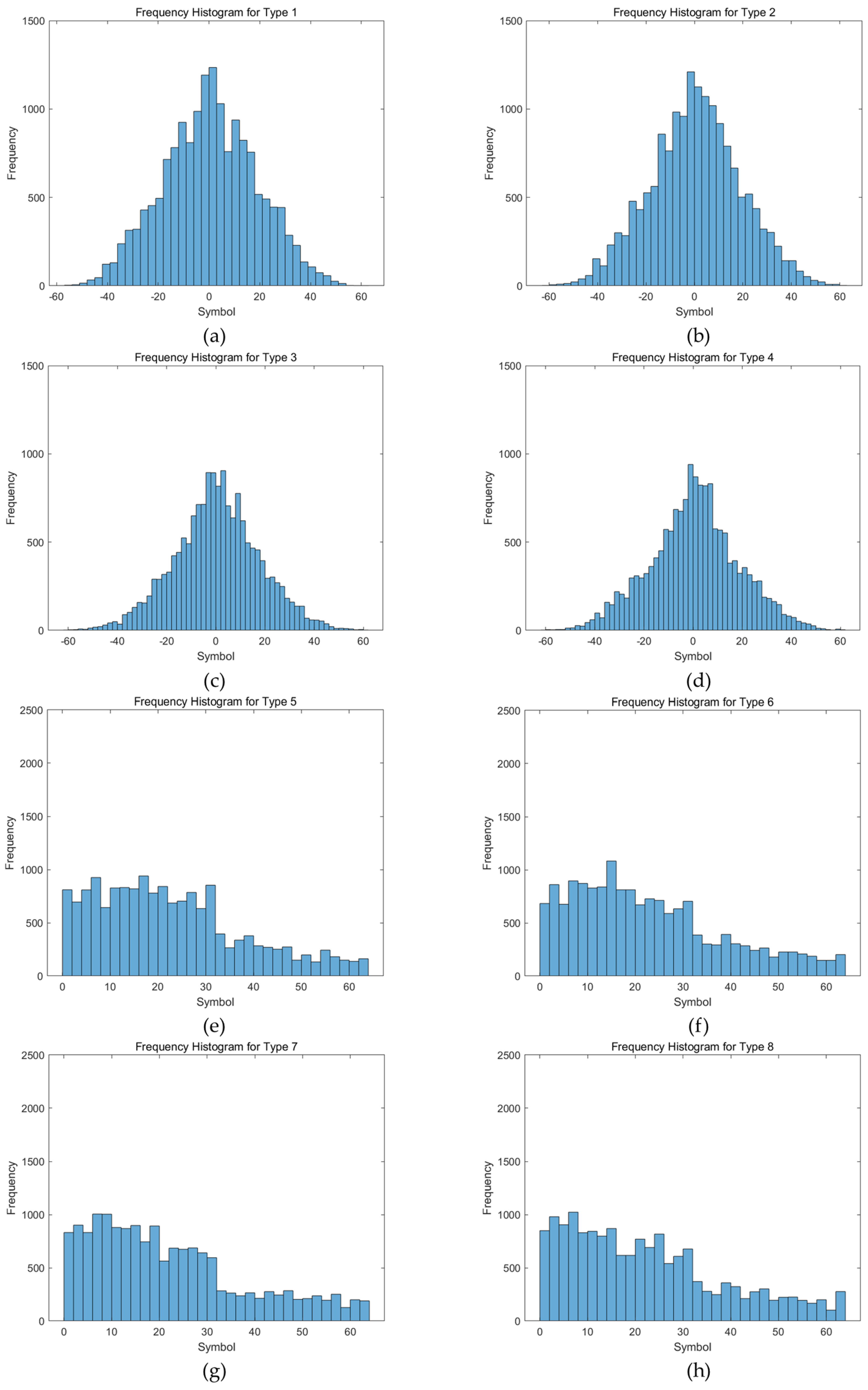

Figure 9 shows the histograms of the processed index values for the different processing types of “Herringbone Weave” using a VQ codebook containing 64 codewords. The types using the difference method have more symbols, concentrated in a high-frequency region near 0, and their frequency distribution trends are more pronounced. In contrast, the types using the XOR method have fewer symbols, and their high-frequency regions are spread out over small values. Because of these differences in the number of symbols and their frequencies, each method has its own advantages on different types of images. In summary, both kinds of frequency distributions are well suited to further compression using adaptive Huffman coding.

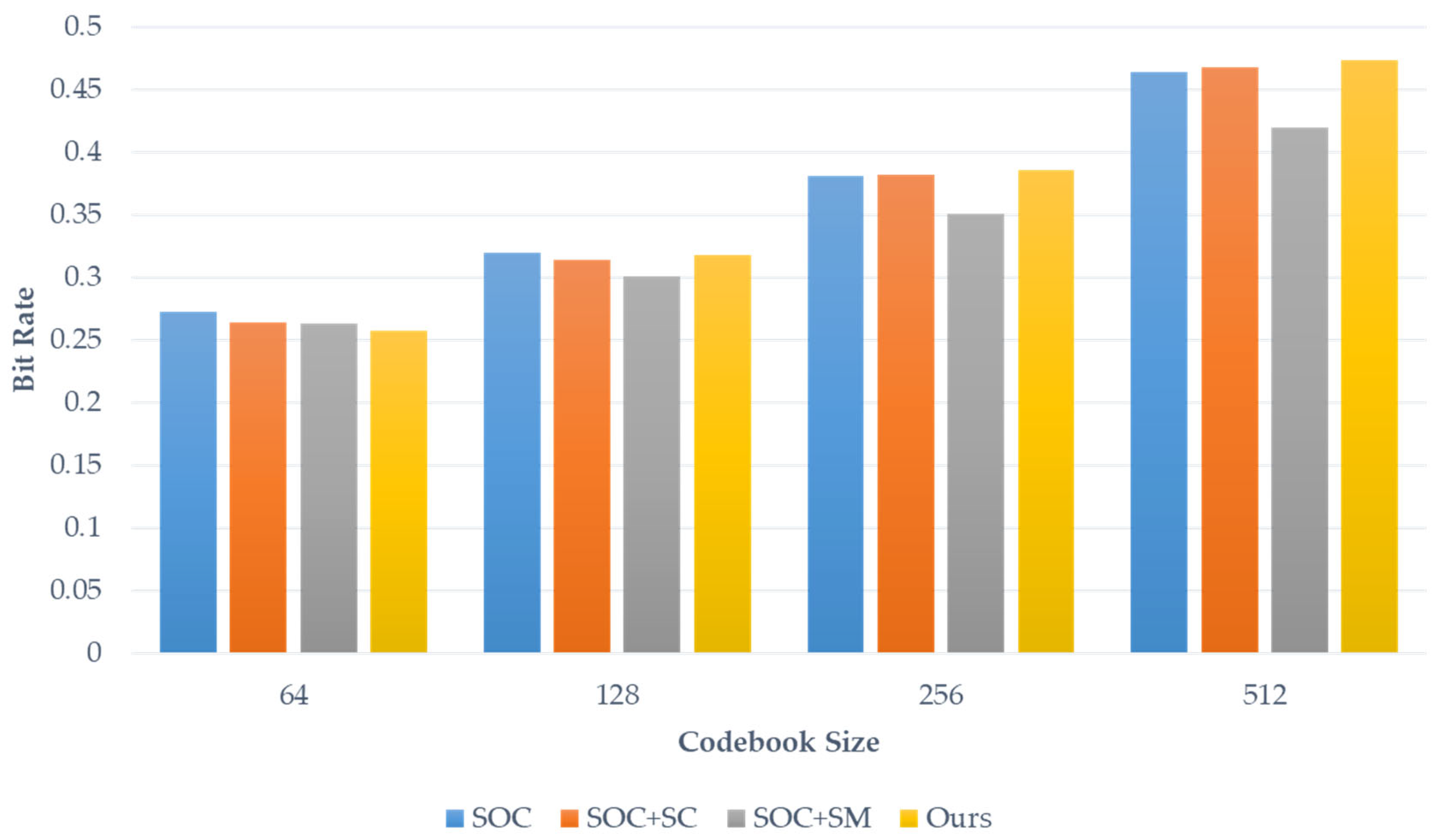

To evaluate the performance of our proposed scheme beyond texture images, we used the six common images shown in Figure 10.

Figure 11 presents the results of our scheme and the other schemes for codebook sizes of 64, 128, 256, and 512. With a codebook of 64 codewords, our method achieves better compression than the other methods. For the other codebook sizes, our scheme does not achieve the best compression performance but is only slightly behind. This indicates that our method also performs well on general images, not just texture images.

Table 10 presents the p-values comparing our proposed scheme with the other methods. A p-value is a statistical measure of significance: the smaller the p-value, the stronger the evidence against the null hypothesis that the compared methods perform equally. All p-values for the other methods compared with ours are below 0.05, indicating that the differences are statistically significant at the 5% level. This finding provides strong support for our research conclusions.

Table 11 and Table 12 present the time complexities of the encoder and the decoder of the proposed scheme, respectively. Across the codebook sizes trained on the various test images, the shortest execution time, approximately 0.1 s, occurs with a codebook size of 64, which is one of the advantages of our proposed scheme. A larger codebook results in a longer execution time because there are more distinct symbols to encode in the Huffman coding stage; the execution time therefore grows with the number of symbols.