Abstract

Unlabeled data are now abundant, yet supervised learning cannot exploit them because it relies solely on labeled data, which are costly and time-consuming to acquire. In addition, real-world data often suffer from label noise, which degrades the performance of supervised models. Semi-supervised learning addresses these issues by using both labeled and unlabeled data. This study extends the twin support vector machine with the generalized pinball loss function (GPin-TSVM) into a semi-supervised framework by incorporating graph-based methods. The underlying assumption is that connected data points should share similar labels, with mechanisms to handle noisy labels. Laplacian regularization ensures uniform information spread across the graph, promoting a balanced label assignment. By leveraging the Laplacian term, two quadratic programming problems are formulated, resulting in the LapGPin-TSVM. The proposed model reduces the impact of noise and improves classification accuracy. Experimental results on UCI benchmarks and image classification demonstrate its effectiveness. In addition to accuracy, performance is measured using the Matthews Correlation Coefficient (MCC), and the results are analyzed with statistical tests.

1. Introduction

The support vector machine (SVM) [1] is an efficient machine learning model that remains widely used today, owing to its simplicity and the ease with which its mathematical principles can be explained. It finds a globally optimal classifier by constructing a single optimal separating hyperplane. Despite its popularity, the SVM must solve one large quadratic programming problem (QPP) involving large matrices.

To improve computational efficiency, Jayadeva et al. [2] developed the twin support vector machine (TSVM), which finds two nonparallel hyperplanes, each closer to one class. This reduces problem-solving time by splitting it into two smaller QPPs. Due to its lower computational cost and better generalization than an SVM, many adaptations of the TSVM have emerged. Kumar et al. [3] introduced a least-squares TSVM (LS-TSVM) to simplify computations by replacing QPPs with linear equations. Mei et al. [4] extended TSVMs to multi-task learning with a multi-task LS-TSVM algorithm. Rastogi et al. [5] proposed a robust parametric TSVM (RP-TWSVM), which adjusts the margin to handle heteroscedastic noise. Further extensions of TSVMs are discussed in [6,7,8,9].

A TSVM assigns equal importance to all data, making it sensitive to noise, outliers, and class imbalances [10], which can lead to reduced predictive capability or overfitting. To address these issues, Rezvani et al. [11] introduced Intuitionistic Fuzzy Twin SVMs (IFTSVMs) using intuitionistic fuzzy sets. Xu et al. [12] proposed PinTSVMs for noise insensitivity, and Tanveer et al. [13] introduced the general TSVM with pinball loss (Pin-GTSVM), which reduced sensitivity to outliers. However, TSVMs and Pin-GTSVMs lose model sparsity, motivating Tanveer et al. [14] to propose a Sparse Pinball TSVM (SP-TSVM) using the $\varepsilon$-insensitive-zone pinball loss. Rastogi et al. [15] developed the generalized pinball loss, which extends the pinball and hinge loss functions. Panup et al. [16] applied this generalized pinball loss to a TSVM, resulting in the GPin-TSVM. The GPin-TSVM improves accuracy in pattern classification, handles noise and outliers effectively, and retains model sparsity, enhancing scalability. Its structure, based on solving two smaller QPPs, reduces computational complexity and increases efficiency.

The models discussed above are supervised learning methods, which rely on labeled data for training. A key challenge with this approach is that labeled data can be difficult and costly to obtain. Additionally, real-world data often suffer from label noise, where incorrect labels degrade the performance of supervised models. Unsupervised learning [17], by contrast, allows models to be built using unlabeled data alone. To combine the advantages of both labeled and unlabeled data, semi-supervised learning has emerged, enabling models to use both types of data effectively. This area has seen significant growth.

Semi-supervised learning (SSL) [18] integrates both labeled and unlabeled data to enhance the effectiveness of supervised learning. The goal is to build a more robust classifier by leveraging large volumes of unlabeled data alongside a relatively small set of labeled data. Recent advancements in deep learning, such as GACNet, CVANet, and CATNet, apply semi-supervised techniques and attention mechanisms to address data scarcity and improve feature extraction. GACNet [19] uses a semi-supervised GAN to augment hyperspectral datasets, while CVANet [20] and CATNet [21] enhance image resolution and classification with attention modules. These methods collectively demonstrate the power of combining semi-supervised learning and attention for robust image processing across various domains. In addressing noisy labels, the ECMB framework [22] introduced real-time correction with a Mixup entropy and a balance term to prevent overfitting. Then, C2MT [23] advanced this with a co-teaching strategy and the Median Balance Strategy (MBS) to maintain class balance. In 2024, BPT-PLR [24] improved accuracy and robustness by addressing class imbalance and optimization conflicts through a Gaussian mixture model and a pseudo-label relaxed contrastive loss.

While these advancements strengthened noisy label learning, a graph-based approach offers a more structured solution for combining labeled and unlabeled data. The Laplacian Twin Support Vector Machine (Lap-TSVM) [25] enhances the TSVM by incorporating Laplacian regularization to exploit the underlying data structure. This technique smooths the decision function across the data manifold, making the Lap-TSVM especially effective for data with local structures. The graph Laplacian, widely used in machine learning, spectral clustering, and semi-supervised learning, captures the structural properties of graphs to improve model performance [26]. One notable feature of the graph Laplacian is its symmetry: when the graph is undirected, the Laplacian matrix is symmetric. This symmetry is critical in many spectral graph algorithms, simplifying computations and ensuring the stability of solutions derived from the matrix. The symmetry of the Laplacian matrix and its regularizing effect make it effective for graph-based representations in machine learning, particularly in semi-supervised learning. To further reduce computational time, Chen et al. [27] developed a least-squares version of the Lap-TSVM that solves linear equations. Additionally, the Lap-PTSVM [28] was introduced, integrating the pinball loss with the Lap-TSVM and yielding promising results in classification tasks.

As discussed above, the GPin-TSVM extends the SVM to nonparallel hyperplanes, offering a resilient model that tackles challenges such as noise and sparsity, which makes it reliable for practical applications. To further improve classification performance, particularly when labeled data are limited, we extend the GPin-TSVM by integrating a Laplacian graph-based technique, resulting in the LapGPin-TSVM, a semi-supervised model. The LapGPin-TSVM leverages both labeled and unlabeled data, making it more robust in real-world scenarios where large-scale labeling is impractical.

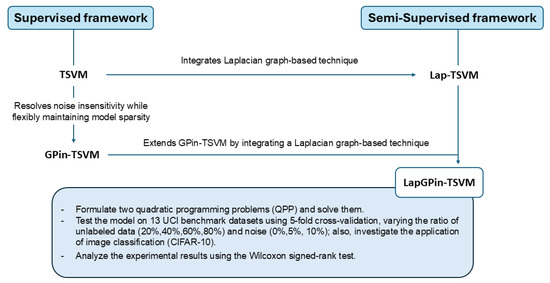

Our approach involves formulating two quadratic programming problems (QPPs) to solve for the hyperplanes. We evaluate the model on 13 UCI benchmark datasets using 5-fold cross-validation while varying the ratio of unlabeled data (20%, 40%, 60%, 80%) and noise (0%, 5%, 10%). Additionally, we investigate its performance in image classification using CIFAR-10. The results are analyzed using the Wilcoxon signed-rank test to determine statistical significance. The overall concept of this study is illustrated in Figure 1. The main contributions of this work are as follows:

Figure 1.

A conceptual diagram illustrating the development of LapGPin-TSVM by integrating a Laplacian graph-based technique into the GPin-TSVM framework, evolving from both supervised and semi-supervised methods.

- We combine the twin support vector machine based on the generalized pinball loss (GPin-TSVM) with the Laplacian technique, introducing a novel semi-supervised framework named LapGPin-TSVM. Additionally, we demonstrate noise insensitivity along with a corresponding analysis.

- We evaluate the efficacy of our model through experiments on the UCI datasets, using various ratios of unlabeled data and noise, and compare the results with three state-of-the-art models. Moreover, we investigate the application of our approach to image classification.

- To analyze the performance of the LapGPin-TSVM, we employ the win/tie/loss method, the average rank, and the Wilcoxon signed-rank test to better describe the effectiveness of our proposed method.

The rest of the paper is organized as follows: Section 2 covers the notation used in the study and describes the key models, including the TSVM, GPin-TSVM, and semi-supervised Lap-TSVM. Section 3 discusses the primal and dual problems, along with the properties of the model. Section 4 provides a model comparison, and Section 5 presents the experimental results. Section 6 offers the discussion, followed by the conclusion in Section 7.

2. Preliminaries

To understand the fundamental concepts, we define the notation and symbols used in this work as follows. For a matrix denoted as M and a vector represented by x, their transposes are denoted as $M^{\top}$ and $x^{\top}$, respectively. The inverse matrix of M is expressed as $M^{-1}$. Here, we are dealing with a binary semi-supervised classification problem in a d-dimensional real space $\mathbb{R}^{d}$. The complete dataset is denoted as , where , and for represents labeled data, while for represents unlabeled data. Assume that we have and samples belonging to the labeled data corresponding to classes +1 and −1, respectively. The positively labeled samples are represented in the matrix , and the negatively labeled samples are denoted by the matrix .

2.1. Twin Support Vector Machine (TSVM)

This method is based on the idea of identifying two nonparallel hyperplanes that classify the data points into their respective classes. It involves a pair of nonparallel planes given by:

where and . This approach requires solving two small-sized quadratic programming problems (QPPs), formulated as follows:

and

where is a slack variable, and are positive penalty parameters. and are unit vectors of the appropriate size. As the twin support vector machine demonstrates commendable performance, researchers are working to enhance it further. They are currently exploring the integration of a new type of loss called the generalized pinball loss, with a specific focus on improving its handling of classification tasks.
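For reference, the standard linear TSVM of Jayadeva et al. [2] is commonly written in the form sketched below. The notation is assumed for illustration and may differ from the symbols and equation numbering used in this paper: $X_{+}$ and $X_{-}$ stand for the matrices of positive and negative samples, $\xi$ and $\eta$ for the slack vectors, $c_1, c_2 > 0$ for the penalty parameters, and $e_1$, $e_2$ for vectors of ones of matching size.

```latex
% Two nonparallel hyperplanes (assumed notation)
f_1(x) = w_1^{\top} x + b_1 = 0, \qquad f_2(x) = w_2^{\top} x + b_2 = 0

% QPP for the positive-class hyperplane
\min_{w_1, b_1, \xi} \ \frac{1}{2}\,\lVert X_{+} w_1 + e_1 b_1 \rVert^{2} + c_1\, e_2^{\top} \xi
\quad \text{s.t.} \quad -(X_{-} w_1 + e_2 b_1) + \xi \ge e_2, \ \ \xi \ge 0

% QPP for the negative-class hyperplane
\min_{w_2, b_2, \eta} \ \frac{1}{2}\,\lVert X_{-} w_2 + e_2 b_2 \rVert^{2} + c_2\, e_1^{\top} \eta
\quad \text{s.t.} \quad (X_{+} w_2 + e_1 b_2) + \eta \ge e_1, \ \ \eta \ge 0
```

Each problem keeps one hyperplane close to its own class (the squared-norm term) while pushing the other class at least unit distance away, up to slack.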

2.2. Twin Support Vector Machine with Generalized Pinball Loss (GPin-TSVM)

Panup et al. [16] recently introduced a variant of the TSVM called the generalized pinball loss TSVM, denoted as GPin-TSVM. The definition of the generalized pinball loss function is given as follows:

where and . This improvement from the $\varepsilon$-insensitive zone [29] results in enhanced model sparsity while retaining all the inherent properties of the original. The optimization problems are outlined as follows:

and

where and are non-negative parameters. Define , . Their dual problems are as follows:

and

where , and are Lagrange multipliers. After solving the QPPs, the separating hyperplanes are obtained from

and

The GPin-TSVM improves the SVM model by allowing nonparallel hyperplanes, helping it handle various challenges while maintaining strong performance compared to the TSVM. It uses a special loss function called the generalized pinball loss, which is better at handling noise and outliers than the original TSVM. This means that noisy data have less effect on the decision boundaries, making it a reliable choice for real-world applications. Additionally, its increased sparsity makes it easier to compute, improving its scalability.
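To make the role of the loss concrete, the following is a minimal sketch of a generalized pinball loss in the form commonly attributed to Rastogi et al. [15], namely max(τ1·u − ε1, 0, −τ2·u − ε2). The parameter names `tau1`, `tau2`, `eps1`, and `eps2` are assumptions chosen for illustration and are not taken from this paper's equations.

```python
import numpy as np


def generalized_pinball_loss(u, tau1, tau2, eps1, eps2):
    """Elementwise max(tau1*u - eps1, 0, -tau2*u - eps2).

    Assumed parameterization: tau1, tau2, eps1, eps2 >= 0.
    The loss is zero on the insensitive zone between the two linear pieces.
    """
    u = np.asarray(u, dtype=float)
    upper = tau1 * u - eps1      # penalty on the positive side
    lower = -tau2 * u - eps2     # penalty on the negative side
    return np.maximum.reduce([upper, np.zeros_like(u), lower])


u = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
# Special cases under this parameterization:
hinge_like = generalized_pinball_loss(u, 1.0, 0.0, 0.0, 0.0)    # max(u, 0)
pinball_like = generalized_pinball_loss(u, 1.0, 0.5, 0.0, 0.0)  # max(u, -0.5*u)
```

With `tau2 = 0` and both `eps` values at zero the function reduces to the hinge loss, and with both `eps` values at zero it reduces to the pinball loss, which matches the statement that the generalized pinball loss extends both.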

However, as a purely supervised method, the TSVM relies on labeled data, which can be limited in practice. While effective on well-annotated datasets, the TSVM struggles in scenarios where labeling large volumes of data is costly or impractical. To address this limitation, a method called semi-supervised learning (SSL) has emerged. SSL enables the use of abundant unlabeled data to improve classifier robustness and accuracy while requiring fewer labeled examples. Next, we discuss a semi-supervised learning model based on the TSVM.

2.3. Laplacian Twin Support Vector Machine

The Lap-TSVM model [25] is formulated by integrating a semi-supervised learning framework into the TSVM. As mentioned above, the semi-supervised technique uses the Laplacian matrix, which finds applications in various domains, particularly in spectral graph theory and graph-based machine learning. Its properties and eigenvalues are related to the structure and connectivity of the underlying graph. The Lap-TSVM finds a pair of nonparallel planes as follows:

where and . The primal problems of this work can be expressed as

and

The first three terms are concepts from the TSVM. The fourth term is the Laplacian term that considers the entire dataset. Here, M is the matrix that represents both labeled and unlabeled data. L denotes the graph Laplacian, defined as , where D is the diagonal matrix of vertex degrees, and A is the adjacency matrix of the graph derived from the weight matrix defined by k-nearest neighbors, expressed as follows:

where is a parameter that controls the width of the Gaussian kernel, influencing the similarity measure between neighboring points. The decision function of this problem is derived from:

where is the perpendicular distance of point x to the two hyperplanes and .

In summary, integrating semi-supervised learning into the TSVM framework offers the benefit of utilizing information from both labeled and unlabeled data. This can enhance model performance, particularly in situations where labeled data are limited.
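The graph construction described above can be sketched as follows. This is a minimal illustration, assuming a symmetrized k-nearest-neighbor graph with Gaussian weights exp(−‖x_i − x_j‖² / (2σ²)); the function name, the `2*sigma**2` scaling, and the symmetrization rule are assumptions, not details confirmed by the paper.

```python
import numpy as np


def knn_gaussian_laplacian(X, k=5, sigma=1.0):
    """Return (L, A): graph Laplacian L = D - A and weighted adjacency A."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    # Pairwise squared Euclidean distances.
    sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    A = np.zeros((n, n))
    for i in range(n):
        order = np.argsort(sq[i])
        nbrs = [j for j in order if j != i][:k]  # k nearest, excluding i
        A[i, nbrs] = np.exp(-sq[i, nbrs] / (2.0 * sigma ** 2))
    # Keep an edge if either point is among the other's neighbors.
    A = np.maximum(A, A.T)
    D = np.diag(A.sum(axis=1))  # diagonal matrix of vertex degrees
    return D - A, A


X_demo = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [5.0, 5.0], [5.0, 6.0]])
L_demo, A_demo = knn_gaussian_laplacian(X_demo, k=2, sigma=1.0)
```

Because the adjacency is symmetrized, the resulting Laplacian is symmetric with zero row sums, which is the property the introduction relies on for undirected graphs.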

3. Proposed Work

Inspired by the concepts of GPin-TSVMs and Lap-TSVMs, we developed the LapGPin-TSVM model. This extension shifts from supervised to semi-supervised learning by integrating the Laplacian regularization term.

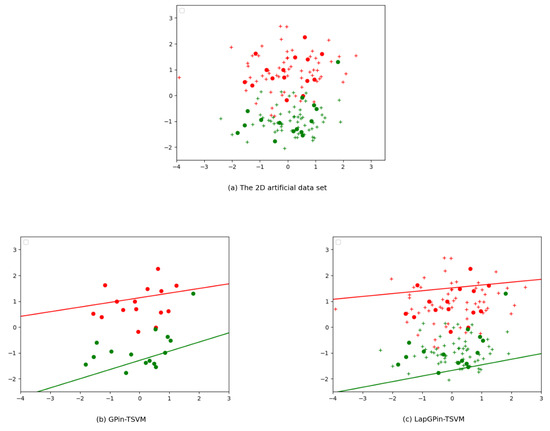

The motivation for semi-supervised learning is rooted in the challenges posed by relying solely on labeled data. When the problem involves the entire dataset, labeled data alone may not be sufficient to establish the most accurate nonparallel hyperplanes. This limitation is demonstrated in Figure 2. In Figure 2a, which illustrates the distribution of the entire dataset, the solid circles represent labeled data with two colors: red for the positive class and green for the negative class. The plus symbols, both red and green, represent unlabeled data. The original GPin-TSVM, a supervised learning model, can only be trained using labeled data, as illustrated in Figure 2b. In the new approach, LapGPin-TSVM, where the Laplacian term is incorporated into the GPin-TSVM, the model learns from both labeled and unlabeled data, as demonstrated in Figure 2c. This approach results in more reasonable nonparallel hyperplanes that better correspond to the data distribution.

Figure 2.

Visual representations of classification results on a 2D artificial dataset using the GPin-TSVM (supervised framework) and our proposed LapGPin-TSVM (semi-supervised framework), highlighting the impact of unlabeled data on the classifier. The solid circles represent labeled data, with red indicating the positive class and green indicating the negative class. The plus symbols, both red and green, represent unlabeled data.

3.1. Primal Problem

The classification task aims to define two nonparallel hyperplanes, similar to (11). We derive this by applying the frameworks of GPin-TSVM ((5) and (6)) and Lap-TSVM ((12) and (13)) to find the positive and negative hyperplanes. This leads to the following optimization problem:

and

where are non-negative parameters, and . and are unit vectors of the appropriate size. If we set and to 0 in (16) and (17), the problems reduce to a GPin-TSVM, demonstrating that our proposed model is a generalization of the previous one.

In problem (16), the first term minimizes the sum of the squared distances of labeled samples to the hyperplane, while the second term contains the slack variable that controls the loss of samples through the generalized pinball loss. The third term serves as regularization to prevent ill-conditioning. The fourth term is the Laplacian regularization term, which penalizes deviations from smoothness in the decision function across the data manifold. It encourages the model to respect the underlying geometric structure of the data, promoting a more coherent decision boundary.
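The smoothness interpretation of the Laplacian term can be checked numerically. The sketch below assumes the usual manifold-regularization form f^T L f with f = Mw + b evaluated on all labeled and unlabeled points, and uses the identity f^T L f = ½ Σ_ij A_ij (f_i − f_j)² for a symmetric adjacency A. The function names and the toy graph are hypothetical.

```python
import numpy as np


def laplacian_penalty(M, w, b, L):
    """Quadratic form f^T L f with f = M w + b (assumed form of the term)."""
    f = M @ w + b
    return float(f @ L @ f)


def pairwise_smoothness(M, w, b, A):
    """0.5 * sum_ij A_ij (f_i - f_j)^2, the same quantity written edge by edge."""
    f = M @ w + b
    diff = f[:, None] - f[None, :]
    return 0.5 * float(np.sum(A * diff ** 2))


# Toy path graph on three points: 0 - 1 - 2 (hypothetical data).
A_toy = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]])
L_toy = np.diag(A_toy.sum(axis=1)) - A_toy
M_toy = np.array([[0.0], [1.0], [3.0]])
w_toy, b_toy = np.array([1.0]), 0.5

penalty = laplacian_penalty(M_toy, w_toy, b_toy, L_toy)
smooth = pairwise_smoothness(M_toy, w_toy, b_toy, A_toy)
```

The two quantities agree, which shows that the penalty grows only when connected points receive different decision values.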

The given minimization problems can be converted into an unconstrained optimization format by expressing the constraints through the generalized pinball loss. This leads to the formulation of the new problems as follows:

and

3.2. Dual Problem

To derive the dual form and solve the problem, we focus on problem (16), as the computation for problem (17) is analogous. Here, we introduce Lagrange multipliers and obtain the following Lagrangian:

Next, we apply the KKT optimality conditions to obtain the following results:

Since , we have

which implies that

Now, we define , , , and . By combining (21) and (22) and using (27), the dual form of the problem (16) is obtained as follows:

Since the above dual form is considered a quadratic programming problem, we solve it to find the solution. Once solved, we obtain the values of and , and then we obtain:

Similarly, for the negative hyperplane (17), we obtain

where are Lagrange multipliers. The solution of this problem is

After acquiring the two hyperplanes, we categorize the new data sample using the following expression:

In this context, represents the perpendicular distance of the data from the hyperplane. The data are assigned to the class of the hyperplane to which they have the minimum distance. The procedure of the proposed method is summarized in Algorithm 1.
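The nearest-hyperplane rule described above can be sketched as follows for the linear case. The names `w1`, `b1`, `w2`, `b2` are assumed to hold the already-solved positive and negative hyperplanes, and breaking ties in favor of the positive class is an arbitrary choice made for this illustration.

```python
import numpy as np


def predict_nearest_hyperplane(X, w1, b1, w2, b2):
    """Assign +1 or -1 by the smaller perpendicular distance |w^T x + b| / ||w||."""
    X = np.atleast_2d(np.asarray(X, dtype=float))
    d_pos = np.abs(X @ w1 + b1) / np.linalg.norm(w1)
    d_neg = np.abs(X @ w2 + b2) / np.linalg.norm(w2)
    # Ties go to the positive class (assumption).
    return np.where(d_pos <= d_neg, 1, -1)


# Hypothetical hyperplanes: x = 1 for the positive class, y = -1 for the negative class.
w1_demo, b1_demo = np.array([1.0, 0.0]), -1.0
w2_demo, b2_demo = np.array([0.0, 1.0]), 1.0
labels = predict_nearest_hyperplane(
    [[1.0, 5.0], [5.0, -1.0]], w1_demo, b1_demo, w2_demo, b2_demo
)
```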

Algorithm 1.

LapGPin-TSVM

3.3. Property of the LapGPin-TSVM

Noise Insensitivity

We explore how the LapGPin-TSVM tackles the problem of sensitivity to noise. For brevity, we concentrate on the linear case and consider the problem presented in (18); the same analysis applies to the nonlinear case. Define the generalized sign function as

where , and represents the subgradient of the generalized pinball loss function. By employing the Karush–Kuhn–Tucker (KKT) optimality condition for Equation (18), we can formulate it as follows:

Here, represents the zero vector, , and is the number of negative samples. Given and , we can partition the index set of B into five distinct sets:

where . By introducing the notation , and , Equation (35) can be reformulated to assert the existence of and for which:

As indicated in Equation (34), the samples in may not contribute to the improvement of due to the fact that the generalized sign function is zero. However, directly influences the sparsity of the model. It is important to note that the quantities and regulate the number of samples in . As and approach zero, sparsity diminishes. Conversely, when and , we enhance sparsity by including a greater number of samples in .

Proposition 1.

Proof.

Consider an arbitrary negative point, denoted as , belonging to the set . Utilizing the KKT conditions represented by equations (25) and (26), we derive that . Further analysis of the KKT condition (23) leads to the conclusion that , resulting in .

Define , and consequently, . Additionally, the expression is obtained from (22).

Considering the constraints and , it follows that . Thus, the sum of over points not in is given by

Consequently, we establish that . This leads to the inequality:

and

This completes the proof of our argument. □

When analyzing the aforementioned proposition, we find that the values of affect the number of samples in the set . Decreasing the values results in fewer members in , causing the decision boundary to be more sensitive to noise. Conversely, increasing the values makes the decision boundary less sensitive to noise. Additionally, the Laplacian term from the graph-based approach means that both labeled and unlabeled data influence the construction of the classification boundary.

In considering the negative hyperplane, we define a set in a similar manner but with respect to positive samples instead. These sets are as follows:

where . For the analysis, we use the same approach. Consequently, we obtain the following proposition.

Proposition 2.

The inclusion of Laplacian regularization in LapGPin-TSVM contributes to enhanced generalization and robustness, particularly in scenarios where the data exhibit intrinsic geometric properties.

4. Comparison of the Models

We compare our model with three other models: GPin-TSVM [16], Lap-TSVM [25], and Lap-PTSVM [28].

4.1. LapGPin-TSVM vs. GPin-TSVM

Both the original GPin-TSVM and the new LapGPin-TSVM find solutions using the dual problem and obtain nonparallel hyperplanes. They use the same generalized pinball loss function to enhance model performance, and both are designed to tackle issues of sparsity and noise sensitivity. However, the GPin-TSVM operates using only labeled data, while the LapGPin-TSVM can leverage both labeled and unlabeled data. Moreover, in the LapGPin-TSVM framework, setting the coefficient of the Laplacian term to zero reduces the problem to a GPin-TSVM, making the LapGPin-TSVM a more general approach.

4.2. LapGPin-TSVM vs. Lap-TSVM

The LapGPin-TSVM and Lap-TSVM both generate two nonparallel hyperplanes and solve the dual problem. They operate within a semi-supervised framework that incorporates the Laplacian term. However, there are key differences between them. The Lap-TSVM is built on the TSVM and the hinge loss, which does not tackle the noise sensitivity problem since this loss penalizes only misclassified data. In contrast, the LapGPin-TSVM is built on the GPin-TSVM, which uses the generalized pinball loss function and penalizes all data, even if correctly classified. This makes the LapGPin-TSVM stable under resampling and imparts noise insensitivity to the model.

4.3. LapGPin-TSVM vs. Lap-PTSVM

The LapGPin-TSVM and Lap-PTSVM generate two nonparallel hyperplanes and address the dual problem within a semi-supervised framework. They differ notably in their loss functions. The Lap-PTSVM uses a pinball loss, which penalizes deviations from a specific threshold, focusing on particular quantile issues but not necessarily handling all data points uniformly. On the other hand, the LapGPin-TSVM utilizes a generalized pinball loss, which offers a more flexible and thorough approach to penalization. This type of loss considers the entire range of errors, including those from correctly classified instances, thus boosting the model’s robustness. Consequently, the LapGPin-TSVM enhances model sparsity and offers improved generalization, making it more adaptable to various datasets and resistant to noise.

5. Numerical Experiments

In this section, the GPin-TSVM [16], Lap-TSVM [25], and Lap-PTSVM [28] are compared with the LapGPin-TSVM. We selected 13 benchmark datasets to evaluate the performance of our proposed model. A comprehensive overview of these datasets is presented in Table 1. A Grid Search was employed to explore a range of hyperparameters. We fine-tuned the parameters , , and from the set , while , , , , , , , and were selected from the range . In the context of nonlinear cases, the kernel parameter was tuned from the set .

Table 1.

The detailed description of the 13 benchmark datasets.

All experiments were executed in Python 3.9.5 on a Windows 10 system, utilizing an Intel(R) Core(TM) i7-4500U CPU @ 1.80 GHz 2.40 GHz. The experimental results were obtained from a 5-fold cross-validation. Our investigation focused on the model performance, emphasizing the effects of varying ratios of unlabeled data and noise. The bold type indicates the best result.

5.1. Evaluation Metrics

In addition to standard measures such as accuracy (ACC), we employed the Matthews Correlation Coefficient (MCC) as a key metric for evaluating the overall classification performance of the models. It takes into account true positives ($TP$), true negatives ($TN$), false positives ($FP$), and false negatives ($FN$). The formula for MCC is as follows:

$$\mathrm{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$$

It ranges from $-1$ to $+1$, where 1 indicates perfect prediction, 0 indicates random prediction, and −1 indicates total disagreement between predictions and actual outcomes.
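As a small worked example, the MCC can be computed directly from the four confusion-matrix counts. The sketch below returns 0 when the denominator vanishes, which is a common convention assumed here rather than something stated in the paper.

```python
import math


def mcc(tp, tn, fp, fn):
    """Matthews Correlation Coefficient from confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0  # assumed convention for the undefined case
    return (tp * tn - fp * fn) / denom


perfect = mcc(tp=50, tn=50, fp=0, fn=0)
inverted = mcc(tp=0, tn=0, fp=50, fn=50)
chance = mcc(tp=25, tn=25, fp=25, fn=25)
```

The three calls correspond to the three reference points of the scale: perfect prediction, total disagreement, and chance-level prediction.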

5.2. Variation in Ratio of Unlabeled Data

The LapGPin-TSVM extends the GPin-TSVM by incorporating the Laplacian technique, which turns it into a semi-supervised model. To test the performance of our model, we systematically varied the proportion of unlabeled data in the dataset, considering ratios ranging from 20% to 80% of the total data. The results for both the linear and nonlinear cases (using the RBF kernel) are shown in Table 2, Table 3, Table 4 and Table 5. Each table corresponds to a different percentage of unlabeled data: Table 2 presents the results for 20% of unlabeled data, Table 3 for 40%, Table 4 for 60%, and Table 5 for 80%.
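One simple way to simulate a given unlabeled ratio is to hide the labels of a random subset of the training samples. The sketch below is an assumption about how such a split could be produced, not the paper's actual protocol; the function name, the use of 0 as the "unlabeled" marker, and the fixed seed are all illustrative.

```python
import numpy as np


def hide_labels(y, unlabeled_ratio, seed=0):
    """Return (y_semi, mask): labels with a random fraction set to 0 (unlabeled)."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y)
    n = y.shape[0]
    n_unlabeled = int(round(unlabeled_ratio * n))
    idx = rng.choice(n, size=n_unlabeled, replace=False)
    mask = np.zeros(n, dtype=bool)
    mask[idx] = True           # True marks samples treated as unlabeled
    y_semi = y.astype(int).copy()
    y_semi[mask] = 0           # 0 is used here as the unlabeled marker
    return y_semi, mask


y_demo = np.array([1, -1, 1, -1, 1, -1, 1, -1, 1, -1])
splits = {r: hide_labels(y_demo, r) for r in (0.2, 0.4, 0.6, 0.8)}
```

The labeled part then feeds the loss terms, while all samples, labeled or not, enter the graph Laplacian.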

Table 2.

The average accuracy, MCC score, and time for experiments with 20% unlabeled data on the UCI datasets.

Table 3.

The average accuracy, MCC score, and time for experiments with 40% unlabeled data on the UCI datasets.

Table 4.

The average accuracy, MCC score, and time for experiments with 60% unlabeled data on the UCI datasets.

Table 5.

The average accuracy, MCC score, and time for experiments with 80% unlabeled data on the UCI datasets.

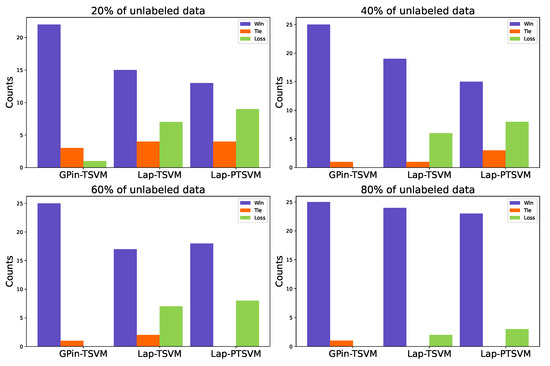

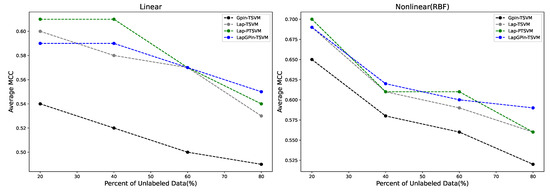

We present the win/tie/loss count for accuracy in the last column of each table. To provide a clearer view, Figure 3 illustrates the results for each percentage of unlabeled data. Each graph represents 26 cases, showing that our proposed model achieved more wins than the others. In some comparisons, with 60% and 80% unlabeled data, there were no ties or losses. The average ranks of the accuracy and MCC scores over all cases were computed and are shown in Table 6. Ranks were assigned by sorting the values in ascending order, with the smallest value receiving rank 1. Thus, a higher average rank generally indicates better performance.
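The ranking and win/tie/loss bookkeeping described above can be sketched in a few lines. The scores below are hypothetical, and giving tied values the mean of their positions is an assumption, since the tie-handling rule is not stated.

```python
def average_ranks(scores):
    """scores: one row per case, one value per method.

    Ranks are ascending (smallest value gets rank 1); ties share the mean rank.
    Returns the average rank of each method over all cases.
    """
    n_methods = len(scores[0])
    totals = [0.0] * n_methods
    for row in scores:
        order = sorted(range(n_methods), key=lambda j: row[j])
        ranks = [0.0] * n_methods
        i = 0
        while i < n_methods:
            j = i
            while j + 1 < n_methods and row[order[j + 1]] == row[order[i]]:
                j += 1
            mean_rank = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
            for k in range(i, j + 1):
                ranks[order[k]] = mean_rank
            i = j + 1
        totals = [t + r for t, r in zip(totals, ranks)]
    return [t / len(scores) for t in totals]


def win_tie_loss(ours, other):
    """Count cases where our score is higher than, equal to, or lower than the other's."""
    wins = sum(a > b for a, b in zip(ours, other))
    ties = sum(a == b for a, b in zip(ours, other))
    losses = sum(a < b for a, b in zip(ours, other))
    return wins, ties, losses


# Hypothetical accuracies for four methods on two cases.
demo_scores = [[0.80, 0.85, 0.85, 0.90], [0.70, 0.60, 0.75, 0.80]]
demo_ranks = average_ranks(demo_scores)
demo_wtl = win_tie_loss([0.9, 0.8, 0.7], [0.8, 0.8, 0.9])
```

Under this ascending scheme the method with the highest scores collects the largest ranks, which is why a higher average rank is read as better performance.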

Figure 3.

The counts of wins, ties, and losses for accuracy at each percentage of unlabeled data. Where no color appears, the count is 0.

Table 6.

Average rank of all methods when considering different ratios of unlabeled data.

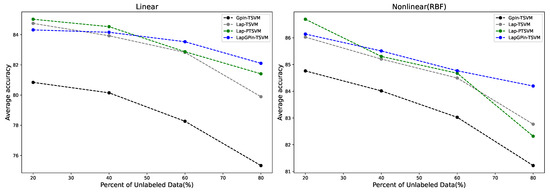

In almost every case where the percentage of unlabeled data increased, the GPin-TSVM exhibited lower accuracy than the other methods, as shown in Figure 4. Its MCC score followed the same trend, as shown in Figure 5. This is because the GPin-TSVM is a supervised learning approach that builds a classifier using only labeled data.

Figure 4.

Comparison of average accuracy in linear and nonlinear (RBF) cases across different percentages of unlabeled data.

Figure 5.

Comparison of average MCC in linear and nonlinear (RBF) cases across different percentages of unlabeled data.

Figure 4 shows, for the linear case, how the GPin-TSVM, Lap-TSVM, Lap-PTSVM, and LapGPin-TSVM behaved as the percentage of unlabeled data increased from 20% to 80%. The Lap-PTSVM performed better at the lowest percentage of unlabeled data (20%) but dropped in accuracy as the percentage increased. The LapGPin-TSVM, on the other hand, started strong and maintained relatively stable performance as the percentage increased, indicating its robustness in handling larger proportions of unlabeled data. The Lap-TSVM also showed consistent performance, though with a slight downward trend as the quantity of unlabeled data increased.

In the nonlinear case using an RBF kernel, all methods generally performed better than in the linear case. The LapGPin-TSVM maintained the highest accuracy levels, even as the percentage of unlabeled data reached 80%, demonstrating its adaptability and superior performance in nonlinear cases.

As shown in Table 2, our method outperformed the others in both the linear and nonlinear cases on the WDBC, Heart, and Specf heart data. However, on the Sonar, Bupa, and Monk-2 data, our method exhibited lower accuracy than the Lap-TSVM and Lap-PTSVM. When the proportion of unlabeled data was 40% of the total data (Table 3), our method achieved the highest accuracy on the Pima, Diabetes, and WDBC data. Similarly, in Table 4, our method achieved the highest accuracy in both cases on the Pima, Diabetes, Ionosphere, and Monk-2 data. However, on the Bupa and Specf heart data, the Lap-PTSVM achieved the highest accuracy, and on the Sonar data, the Lap-TSVM outperformed our approach in both cases. In Table 5, the LapGPin-TSVM outperformed most other methods, except on the Bupa, Sonar, Diabetes, Australian, and Specf heart data.

In addition to accuracy, we now consider the results for the MCC score. In Table 2, the maximum MCC score for the nonlinear scenario on the Monk-2 data was the same for the Lap-TSVM, Lap-PTSVM, and our method, at 0.9464, which is close to 1. Furthermore, in Table 3, on the same data, our LapGPin-TSVM outperformed the other methods in both cases, although the highest accuracy in the linear case belonged to the Lap-TSVM. As indicated in Table 4, the Lap-TSVM outperformed the other methods on the Sonar data, whereas our proposed method surpassed the others on the Ionosphere and Monk-2 data. The outcomes in Table 5 followed a similar pattern.

In conclusion, in the linear scenario, the LapGPin-TSVM showed superior performance in both accuracy and MCC, as evidenced by the values in Table 6, where it held an average rank of 3.45 for accuracy and 3.38 for MCC, while the Lap-PTSVM achieved 3.05 and 2.85, respectively. A higher average rank generally indicates better performance. Similarly, in the nonlinear case, our proposed model exhibited the best performance, with average ranks for accuracy and MCC of 3.28 and 3.38, respectively, as presented in Table 6. These results highlight the ability of the LapGPin-TSVM to use unlabeled instances, leading to enhanced generalization and adaptability of the decision boundaries.

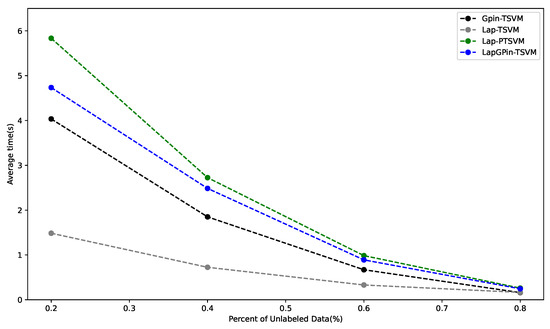

Computational Efficiency of Model

Figure 6 shows that the Lap-PTSVM had the highest average computation time across all percentages of unlabeled data, making it the most computationally demanding. This is due to its use of the pinball loss function, which reduces model sparsity and increases processing time. The LapGPin-TSVM, the second most time-consuming method, uses the generalized pinball loss function to restore sparsity. Both models also require Laplacian matrix calculations, which further increase their processing time compared to GPin-TSVM.

Figure 6.

The graph shows the average computational time for the GPin-TSVM, Lap-TSVM, Lap-PTSVM, and LapGPin-TSVM across different unlabeled data percentages.

In contrast, the GPin-TSVM and Lap-TSVM had the lowest average times. The GPin-TSVM avoids Laplacian matrix computations and benefits from the generalized pinball loss function, which restores sparsity. Despite needing Laplacian matrix calculations, the Lap-TSVM remains efficient due to its sparsity properties. As the percentage of unlabeled data increased, all models showed reduced computational time, suggesting that larger volumes of unlabeled data are less computationally demanding to process.

5.3. Ablation Study

An ablation study, often applied in the context of neural networks [30], involves systematically altering specific components to assess their impact on a model’s performance. In our investigation, we focused on the effects of ablation on the proposed model by modifying its Laplacian regularization term. This allowed us to evaluate the influence of the modification. After analyzing all test cases with unlabeled data, we identified the optimal configuration of our proposed LapGPin-TSVM model that achieved the highest accuracy.

To assess the impact of the Laplacian regularization term on model performance, we carried out ablation experiments on the Ionosphere dataset. The goal was to examine how the inclusion of the Laplacian regularization term affected the model’s overall accuracy. In this experiment, we tested problems (16) and (17) of the LapGPin-TSVM without the Laplacian regularization by setting the corresponding regularization parameter to zero. The results in Table 7 reveal that the model’s performance declined when the Laplacian term was excluded. This decrease in accuracy likely resulted from information loss, as the model could not make effective use of the unlabeled data. When the Laplacian regularization was included, accuracy on the Ionosphere dataset improved from 80.91% to 82.91%.
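To make the role of the ablated term concrete, the sketch below builds a graph Laplacian and evaluates a manifold penalty of the form weight · fᵀLf. It is a minimal illustration only: the symmetrized k-nearest-neighbor graph with binary weights, the function names, and the parameter name `weight` (standing in for the Laplacian regularization parameter) are assumptions for this example and may differ from the graph construction used in our experiments.

```python
import numpy as np

def knn_graph_laplacian(X, k=5):
    """Unnormalized Laplacian L = D - W of a symmetrized kNN graph (binary weights).

    Assumes k is smaller than the number of samples.
    """
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    # Pairwise squared Euclidean distances.
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    np.fill_diagonal(d2, np.inf)  # a point is not its own neighbor
    nearest = np.argsort(d2, axis=1)[:, :k]
    W = np.zeros((n, n))
    W[np.repeat(np.arange(n), k), nearest.ravel()] = 1.0
    W = np.maximum(W, W.T)  # connect i and j if either is a neighbor of the other
    D = np.diag(W.sum(axis=1))
    return D - W

def manifold_penalty(f, L, weight):
    """weight * f^T L f, where f holds decision values on labeled and unlabeled points."""
    f = np.asarray(f, dtype=float)
    return weight * float(f @ L @ f)
```

Since fᵀLf equals half the sum of W_ij (f_i − f_j)² over all pairs, the penalty is small when connected points receive similar decision values. Setting `weight` to zero removes the term entirely, so the unlabeled points no longer influence the solution.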

Table 7.

Effects of the Laplacian term.

5.4. Variation in Ratio of Noise

Because the LapGPin-TSVM measures errors with the generalized pinball loss, it inherits that loss’s ability to handle noise in the data. To test this property, we conducted experiments on datasets corrupted by zero-mean noise with variances of 0, 0.05, and 0.1. Here, we denote the percentage of noise in the data as r. The results are presented in Table 8 for the linear case and in Table 9 for the nonlinear case. We also computed the average rank for all cases, which is presented in Table 10.
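A noise-injection step of this kind can be sketched as follows. The sketch assumes additive Gaussian noise applied to every feature, which is one common reading of "zero mean and a given variance"; the function name `add_gaussian_noise` and the `seed` argument are hypothetical, and the exact corruption procedure in our experiments may differ.

```python
import numpy as np

def add_gaussian_noise(X, variance, seed=0):
    """Return a copy of X with zero-mean Gaussian noise of the given variance added."""
    X = np.asarray(X, dtype=float)
    if variance == 0:
        return X.copy()  # variance 0 corresponds to the noise-free setting
    rng = np.random.default_rng(seed)
    # NumPy parameterizes the normal distribution by its standard deviation.
    noise = rng.normal(loc=0.0, scale=np.sqrt(variance), size=X.shape)
    return X + noise
```

For example, `add_gaussian_noise(X_train, 0.05)` would produce the middle noise level, where `X_train` is a placeholder for a feature matrix.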

Table 8.

The mean and standard deviation of test accuracy with various amounts of noise on the UCI dataset calculated using a linear kernel.

Table 9.

The mean and standard deviation of test accuracy with various amounts of noise on the UCI dataset calculated using an RBF kernel.

Table 10.

Average rank of all methods when considering different ratios of noise.

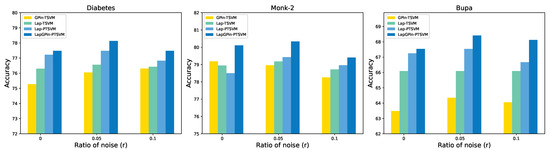

The results are categorized into linear and nonlinear cases. For the linear case presented in Table 8, our method achieved the best performance in 21 out of 30 instances (70%). On the Fertility data, the experimental results for each model were comparable. On the Pima, Sonar, and Spambase data, our proposed model outperformed the other models. Considering the case where on the Sonar data, although the Lap-TSVM had the highest accuracy, the highest MCC score was achieved by the Lap-PTSVM. The results are shown in Figure 7.

Figure 7.

The accuracy on the Diabetes, Monk-2, and Bupa datasets varies with different noise ratios in the linear cases.

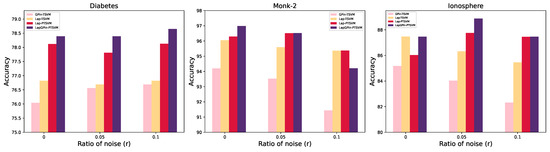

In Table 9, which displays the results of the nonlinear case, our model achieved the highest accuracy in 23 out of 30 instances (76.67%). As in the linear case, all models produced closely aligned results on the Fertility data. In the Bupa data, considering and , our model achieved the highest accuracy, but the highest MCC values belonged to the Lap-TSVM and Lap-PTSVM, respectively. Similar trends emerged on the Ionosphere and Monk-2 data when considering . Some results are shown in Figure 8.

Figure 8.

The accuracy on the Diabetes, Monk-2, and Ionosphere datasets varies with different noise ratios in the nonlinear cases.

The average rank values for accuracy and MCC in Table 10 show that our method attained the highest average rank, indicating superior performance compared to the other models; the second-ranking model was the Lap-PTSVM. We attribute this outcome to the fact that the generalized pinball loss is derived from the pinball loss, which is capable of handling noise. Therefore, in addition to being a generalization of the Lap-PTSVM, our model is also more robust to noise.
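The relationship between the two losses can be illustrated numerically. The sketch below assumes the form of the generalized pinball loss commonly given in the literature [15,16], L(u) = max{τ₁(u − ε₁/τ₁), 0, −τ₂(u + ε₂/τ₂)}; the definition stated earlier in this paper takes precedence if the notation differs. The function and parameter names are chosen for this example.

```python
import numpy as np

def generalized_pinball(u, tau1, tau2, eps1, eps2):
    """max{tau1*(u - eps1/tau1), 0, -tau2*(u + eps2/tau2)}, elementwise (assumed form)."""
    u = np.asarray(u, dtype=float)
    return np.maximum.reduce([tau1 * u - eps1, np.zeros_like(u), -tau2 * u - eps2])

def pinball(u, tau):
    """Standard pinball loss max{u, -tau*u}, elementwise."""
    u = np.asarray(u, dtype=float)
    return np.maximum(u, -tau * u)
```

Under this form, choosing tau1 = 1 and eps1 = eps2 = 0 recovers the pinball loss, while positive eps1 and eps2 create an interval around zero on which the loss vanishes, which is the source of the sparsity discussed in the computational-efficiency analysis.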

These experiments assess robustness and performance under different levels of noise. The Laplacian regularization term in the LapGPin-TSVM improves model generalization by taking the local data structure into account, which is particularly beneficial for datasets with complex intrinsic geometry.

We also conducted a statistical analysis to assess the differences between our proposed model and the other models [31]. Because our data were not normally distributed, we employed the Wilcoxon signed-rank test [32], a non-parametric test that compares two related samples to determine whether the paired observations differ significantly. We used a significance level of 0.05.

In this analysis, we employed accuracy as the metric, and the results are presented in Table 11 and Table 12. Table 11 compares our model with others, incorporating data from Table 2, Table 3, Table 4 and Table 5, which present results based on the ratio of unlabeled samples. Table 12, on the other hand, extracts data from Table 8 and Table 9. As observed in both tables, the LapGPin-TSVM exhibited significant differences compared to the GPin-TSVM, Lap-TSVM, and Lap-PTSVM.
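A pairwise comparison of this kind can be sketched with SciPy's `scipy.stats.wilcoxon`. The accuracies below are hypothetical placeholders, not values from our tables, and the variable names are chosen for this example.

```python
import numpy as np
from scipy.stats import wilcoxon

# Hypothetical paired accuracies (%) of two models on the same 8 settings.
baseline = np.array([78.0, 81.5, 69.0, 90.0, 74.5, 85.0, 66.0, 79.5])
gains = np.array([0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0])
proposed = baseline + gains

# Two-sided test on the paired differences proposed - baseline.
stat, p_value = wilcoxon(proposed, baseline)

# Reject the null hypothesis of no difference at the 0.05 level.
significant = p_value < 0.05
```

Each pair must come from the same dataset and setting, since the test ranks the absolute paired differences and compares the rank sums of the positive and negative differences.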

Table 11.

The results of the Wilcoxon signed-rank test analysis of the models when examining changes in the ratio of unlabeled data.

Table 12.

The results of the Wilcoxon signed-rank test analysis of the models when examining changes in the ratio of noise.

5.5. Experiment on an Image Dataset

We evaluated the proposed model on binary classification tasks using the CIFAR-10 dataset [33], a widely used dataset for image retrieval and classification. It comprises 60,000 samples from ten classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck, as shown in Figure 9. Each class contains 6000 samples. For the binary classification experiments, specific class pairs were chosen: airplane vs. automobile, ship vs. truck, deer vs. horse, and dog vs. cat. In each case, features were extracted with the ResNet18 architecture to enhance image representation for classification, and the percentage of unlabeled data was set at 70%.
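The construction of one binary semi-supervised task can be sketched as follows. This is a minimal illustration that covers only the class-pair selection and the hiding of 70% of the labels; the ResNet18 feature extraction is omitted. The function name, the use of 0 to mark an unlabeled sample, and the random selection of hidden labels are assumptions for this example.

```python
import numpy as np

# Standard CIFAR-10 class order (label index = position in this list).
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]

def make_binary_semi_supervised(labels, class_a, class_b, unlabeled_frac=0.7, seed=0):
    """Select two classes and hide a fraction of their labels.

    Returns (idx, y, y_observed): indices of the selected samples, their
    true labels in {+1, -1}, and the observed labels with 0 marking
    samples treated as unlabeled.
    """
    labels = np.asarray(labels)
    a = CIFAR10_CLASSES.index(class_a)
    b = CIFAR10_CLASSES.index(class_b)
    idx = np.flatnonzero((labels == a) | (labels == b))
    y = np.where(labels[idx] == a, 1, -1)
    rng = np.random.default_rng(seed)
    n_unlabeled = int(round(unlabeled_frac * idx.size))
    hidden = rng.choice(idx.size, size=n_unlabeled, replace=False)
    y_observed = y.copy()
    y_observed[hidden] = 0
    return idx, y, y_observed
```

For instance, `make_binary_semi_supervised(labels, "ship", "truck")` would select the 12,000 ship and truck samples from the full label vector and hide the labels of 8400 of them.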

Figure 9.

An illustration of the CIFAR-10 dataset.

As shown in Table 13, the LapGPin-TSVM outperformed most other models across the class pairs, including the Lap-PTSVM and Lap-TSVM. For instance, in the “deer vs. horse” classification, the LapGPin-TSVM achieved the highest accuracy of 92.85%, surpassing the Lap-PTSVM (92.46%), Lap-TSVM (91.76%), and the supervised GPin-TSVM (91.15%). Similarly, for the “ship vs. truck” pair, the LapGPin-TSVM led with an accuracy of 98.03%, exceeding both the Lap-PTSVM and Lap-TSVM.

Table 13.

Test accuracy of four models using 5-fold cross-validation. The table includes accuracy for each fold and the average accuracy for each model.

6. Discussion

In our proposed method, the addition of the Laplacian term offers significant benefits but also introduces potential challenges. One of the primary concerns is the increase in computational complexity, as calculating the Laplacian matrix requires constructing a data graph based on similarity measures. Another challenge is related to data noise. While the Laplacian regularization helps enforce smoothness in the decision boundary, it may cause the model to overfit when dealing with noisy data, thus reducing generalization performance.

However, our method addresses these concerns by leveraging the robust properties of the generalized pinball loss function, which inherently handles noise more effectively. This ensures that despite the inclusion of the Laplacian term, the model can manage noisy data and still maintain high performance.

7. Conclusions

This study investigated the LapGPin-TSVM, a novel adaptation of the twin support vector machine (TSVM) enriched with the Laplacian graph-based technique, making it well suited for semi-supervised learning tasks. The LapGPin-TSVM improved model performance by integrating unlabeled data, leading to enhanced generalization, particularly when labeled data are limited. Our proposed model established a smooth decision boundary, enhancing robustness in the presence of noise, as supported by its theoretical foundations. The solution involved solving two quadratic programming problems to determine the classification hyperplanes. Additionally, this work considered an extended scenario, encompassing previous studies. A potential direction for future research is to develop techniques for semi-supervised learning that align with advancements in Laplacian matrix methodologies.

Author Contributions

Conceptualization, V.D. and R.W.; methodology, V.D. and R.W.; software, V.D.; validation, V.D.; formal analysis, R.W. and V.D.; writing—original draft preparation, V.D.; writing—review and editing, V.D. and R.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Faculty of Science, Naresuan University (NU), grant no. R2566E041.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors are thankful to the referees for their attentive reading and valuable suggestions. This research was supported by the Science Achievement Scholarship of Thailand.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Christmann, A.; Steinwart, I. Support Vector Machines; Springer: Berlin/Heidelberg, Germany, 2008.

- Jayadeva; Khemchandani, R.; Chandra, S. Twin Support Vector Machines for Pattern Classification. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 905–910.

- Kumar, M.A.; Gopal, M. Least Squares Twin Support Vector Machines for Pattern Classification. Expert Syst. Appl. 2009, 36, 7535–7543.

- Mei, B.; Xu, Y. Multi-task Least Squares Twin Support Vector Machine for Classification. Neurocomputing 2019, 338, 26–33.

- Rastogi, R.; Sharma, S.; Chandra, S. Robust parametric twin support vector machine for pattern classification. Neural Process. Lett. 2018, 47, 293–323.

- Xie, X.; Sun, F.; Qian, J.; Guo, L.; Zhang, R.; Ye, X.; Wang, Z. Laplacian Lp Norm Least Squares Twin Support Vector Machine. Pattern Recognit. 2023, 136, 109192.

- Li, Y.; Sun, H. Safe Sample Screening for Robust Twin Support Vector Machine. Appl. Intell. 2023, 53, 20059–20075.

- Si, Q.; Yang, Z.; Ye, J. Symmetric LINEX Loss Twin Support Vector Machine for Robust Classification and Its Fast Iterative Algorithm. Neural Netw. 2023, 168, 143–160.

- Gupta, U.; Gupta, D. Least Squares Structural Twin Bounded Support Vector Machine on Class Scatter. Appl. Intell. 2023, 53, 15321–15351.

- Tanveer, M.; Rajani, T.; Rastogi, R.; Shao, Y.H.; Ganaie, M.A. Comprehensive Review on Twin Support Vector Machines. Ann. Oper. Res. 2022, 1–46.

- Rezvani, S.; Wang, X.; Pourpanah, F. Intuitionistic Fuzzy Twin Support Vector Machines. IEEE Trans. Fuzzy Syst. 2019, 27, 2140–2151.

- Xu, Y.; Yang, Z.; Pan, X. A Novel Twin Support-Vector Machine with Pinball Loss. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 359–370.

- Tanveer, M.; Sharma, A.; Suganthan, P.N. General Twin Support Vector Machine with Pinball Loss Function. Inf. Sci. 2019, 494, 311–327.

- Tanveer, M.; Tiwari, A.; Choudhary, R.; Jalan, S. Sparse Pinball Twin Support Vector Machines. Appl. Soft Comput. 2019, 78, 164–175.

- Rastogi, R.; Pal, A.; Chandra, S. Generalized Pinball Loss SVMs. Neurocomputing 2018, 322, 151–165.

- Panup, W.; Ratipapongton, W.; Wangkeeree, R. A Novel Twin Support Vector Machine with Generalized Pinball Loss Function for Pattern Classification. Symmetry 2022, 14, 289.

- Alloghani, M.; Al-Jumeily, D.; Mustafina, J.; Hussain, A.; Aljaaf, A.J. A Systematic Review on Supervised and Unsupervised Machine Learning Algorithms for Data Science. In Supervised and Unsupervised Learning for Data Science; Springer Nature: Berlin, Germany, 2020; pp. 3–21.

- Reddy, Y.C.A.P.; Viswanath, P.; Reddy, B.E. Semi-supervised Learning: A Brief Review. Int. J. Eng. Technol. 2018, 7, 81.

- Zhang, W.; Li, Z.; Li, G.; Zhuang, P.; Hou, G.; Zhang, Q.; Li, C. Gacnet: Generate adversarial-driven cross-aware network for hyperspectral wheat variety identification. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5503314.

- Zhang, W.; Zhao, W.; Li, J.; Zhuang, P.; Sun, H.; Xu, Y.; Li, C. CVANet: Cascaded visual attention network for single image super-resolution. Neural Netw. 2024, 170, 622–634.

- Zhang, W.; Chen, G.; Zhuang, P.; Zhao, W.; Zhou, L. CATNet: Cascaded attention transformer network for marine species image classification. Expert Syst. Appl. 2024, 256, 124932.

- Zhang, Q.; Lee, F.; Wang, Y.g.; Ding, D.; Yao, W.; Chen, L.; Chen, Q. An joint end-to-end framework for learning with noisy labels. Appl. Soft Comput. 2021, 108, 107426.

- Zhang, Q.; Zhu, Y.; Yang, M.; Jin, G.; Zhu, Y.; Chen, Q. Cross-to-merge training with class balance strategy for learning with noisy labels. Expert Syst. Appl. 2024, 249, 123846.

- Zhang, Q.; Jin, G.; Zhu, Y.; Wei, H.; Chen, Q. BPT-PLR: A Balanced Partitioning and Training Framework with Pseudo-Label Relaxed Contrastive Loss for Noisy Label Learning. Entropy 2024, 26, 589.

- Qi, Z.; Tian, Y.; Shi, Y. Laplacian Twin Support Vector Machine for Semi-supervised Classification. Neural Netw. 2012, 35, 46–53.

- Merris, R. Laplacian Graph Eigenvectors. Linear Algebra Its Appl. 1998, 278, 221–236.

- Chen, W.J.; Shao, Y.H.; Deng, N.Y.; Feng, Z.L. Laplacian Least Squares Twin Support Vector Machine for Semi-supervised Classification. Neurocomputing 2014, 145, 465–476.

- Damminsed, V.; Panup, W.; Wangkeeree, R. Laplacian Twin Support Vector Machine with Pinball Loss for Semi-supervised Classification. IEEE Access 2023, 11, 31399–31416.

- Huang, X.; Shi, L.; Suykens, J.A.K. Support Vector Machine Classifier with Pinball Loss. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 984–997.

- Meyes, R.; Lu, M.; de Puiseau, C.W.; Meisen, T. Ablation studies in artificial neural networks. arXiv 2019, arXiv:1901.08644.

- García, S.; Fernández, A.; Luengo, J.; Herrera, F. Advanced Nonparametric Tests for Multiple Comparisons in the Design of Experiments in Computational Intelligence and Data Mining: Experimental Analysis of Power. Inf. Sci. 2010, 180, 2044–2064.

- Demšar, J. Statistical Comparisons of Classifiers over Multiple Data Sets. J. Mach. Learn. Res. 2006, 7, 1–30.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Computer Science University of Toronto: Toronto, ON, Canada, 2009.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).