Modifying Hellwig’s Method for Multi-Criteria Decision-Making with Mahalanobis Distance for Addressing Asymmetrical Relationships

Abstract

1. Introduction

- Normalization: This step involves transforming performance ratings into a standardized unit scale.

- Weights determination: This process involves assigning weights to the criteria based on their relative importance in the decision-making process.

- Distance Measure: This step calculates the distance between the alternatives and reference points, providing a measure of their dissimilarity or similarity.

- Aggregation Formula: Aggregation involves combining the normalized values, weights, and distance measures to obtain an overall preference value for each alternative.

2. Mahalanobis Distance and Multi-Criteria Analyses

3. Mahalanobis Distance-Based Hellwig Method

3.1. The Hellwig’s Framework—A Short Literature Review

3.2. The Hellwig’s Method

- Vector normalization, which transforms performance ratings into a normalized vector as follows:

- Linear scale transformation (Max-Min method), which rescales the performance ratings linearly using the minimum and maximum values observed for each criterion across the alternatives.

- Linear scale transformation (Sum method), where the performance ratings are divided by the sum of the values observed for each criterion across the alternatives.
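The three normalization variants listed above can be sketched as follows. This is a minimal illustration, assuming benefit-type criteria only and the usual textbook forms: each column (criterion) is divided by its Euclidean norm, rescaled by its range, or divided by its sum. The function names and the small matrix `X` are illustrative and are not taken from the paper.

```python
import numpy as np

def vector_normalize(X):
    """Divide each column by its Euclidean norm."""
    X = np.asarray(X, dtype=float)
    return X / np.sqrt((X ** 2).sum(axis=0))

def minmax_normalize(X):
    """Rescale each column to [0, 1] using its minimum and maximum."""
    X = np.asarray(X, dtype=float)
    lo, hi = X.min(axis=0), X.max(axis=0)
    return (X - lo) / (hi - lo)

def sum_normalize(X):
    """Divide each column by the sum of its values."""
    X = np.asarray(X, dtype=float)
    return X / X.sum(axis=0)

# Illustrative data: 3 alternatives (rows) x 2 criteria (columns).
X = np.array([[2, 4],
              [5, 10],
              [8, 12]])

Z_vec = vector_normalize(X)
Z_mm = minmax_normalize(X)
Z_sum = sum_normalize(X)
print(Z_vec, Z_mm, Z_sum, sep="\n")
```

All three transformations are linear rescalings of each column, so they preserve the ordering of alternatives within a criterion; they differ in how much each criterion contributes to a Euclidean distance computed afterwards.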

- Euclidean distance measure [10]:
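A minimal sketch of this distance step is given below. It assumes that the weights multiply the normalized ratings before the distance is taken and that the ideal is the column-wise maximum of the weighted normalized matrix (benefit criteria). With equal weights of 0.25 and min-max normalization, this reading appears consistent with the distances tabulated for Example 4 in Section 4 (for instance 0.500 for A1 and 0.000 for A8). The function name is illustrative.

```python
import numpy as np

def weighted_euclidean_to_ideal(Z, w):
    """Euclidean distance of each alternative from the ideal.

    Z : normalized decision matrix (alternatives x criteria)
    w : criteria weights (assumption: applied multiplicatively to Z)
    """
    Z = np.asarray(Z, dtype=float)
    w = np.asarray(w, dtype=float)
    V = Z * w                      # weighted normalized ratings
    ideal = V.max(axis=0)          # assumption: benefit criteria only
    return np.sqrt(((V - ideal) ** 2).sum(axis=1))

# Decision matrix of Example 4 (alternatives A1..A10, criteria C1..C4).
X = np.array([
    [2, 4, 3, 6], [5, 10, 9, 10], [7, 8, 9, 9], [3, 6, 5, 10],
    [6, 10, 10, 13], [3, 6, 4, 6], [6, 12, 6, 14], [8, 12, 10, 16],
    [3, 6, 4, 6], [6, 5, 3, 7],
], dtype=float)

# Min-max normalization per criterion, then equal weights (assumed 0.25 each).
Z = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))
w = np.full(4, 0.25)

d = weighted_euclidean_to_ideal(Z, w)
print(np.round(d, 3))
```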

- Classical approach (H measure based on Euclidean distance):

- Extended approach (HM measure based on Mahalanobis distance):
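The two aggregation variants can be sketched as below. This is an illustration under stated assumptions rather than the paper's implementation: the threshold is taken as d0 = mean + 2 × (population) standard deviation of the distances, the covariance matrix is the sample covariance of the raw ratings, and the differences from the ideal are scaled by the square roots of the weights. These choices were made because, with equal weights of 0.25, they appear to be consistent with the d0 and dM values tabulated for Example 1 in Section 4. The function names are illustrative.

```python
import numpy as np

def hellwig_measure(d):
    """Hellwig-type synthetic measure: 1 - d / d0, with d0 = mean + 2 * std."""
    d = np.asarray(d, dtype=float)
    d0 = d.mean() + 2.0 * d.std()        # assumption: population std (ddof=0)
    return 1.0 - d / d0

def mahalanobis_to_ideal(X, w):
    """Mahalanobis distance of each alternative from the ideal (column maxima)."""
    X = np.asarray(X, dtype=float)
    w = np.asarray(w, dtype=float)
    ideal = X.max(axis=0)                # assumption: benefit criteria only
    cov_inv = np.linalg.inv(np.cov(X, rowvar=False))  # sample covariance
    diff = (X - ideal) * np.sqrt(w)      # assumption: sqrt-weight scaling
    # Quadratic form diff_i^T * cov_inv * diff_i for every alternative i.
    return np.sqrt(np.einsum("ij,jk,ik->i", diff, cov_inv, diff))

# Decision matrix of Example 1 (alternatives A1..A10, criteria C1..C4).
X = np.array([
    [1, 18, 7, 6], [3, 2, 10, 10], [5, 30, 15, 30], [3, 1, 15, 20],
    [8, 10, 20, 8], [2, 20, 10, 5], [10, 4, 6, 25], [12, 25, 10, 2],
    [5, 5, 15, 8], [6, 2, 5, 9],
], dtype=float)
w = np.full(4, 0.25)

d_m = mahalanobis_to_ideal(X, w)
hm = hellwig_measure(d_m)
print(np.round(d_m, 3))
print(np.round(hm, 3))
```

Because the Mahalanobis distance is invariant to linear rescaling of each criterion, the normalization step does not affect this variant, whereas it does affect the Euclidean one. The classical H measure is obtained by passing Euclidean distances to the same `hellwig_measure` function.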

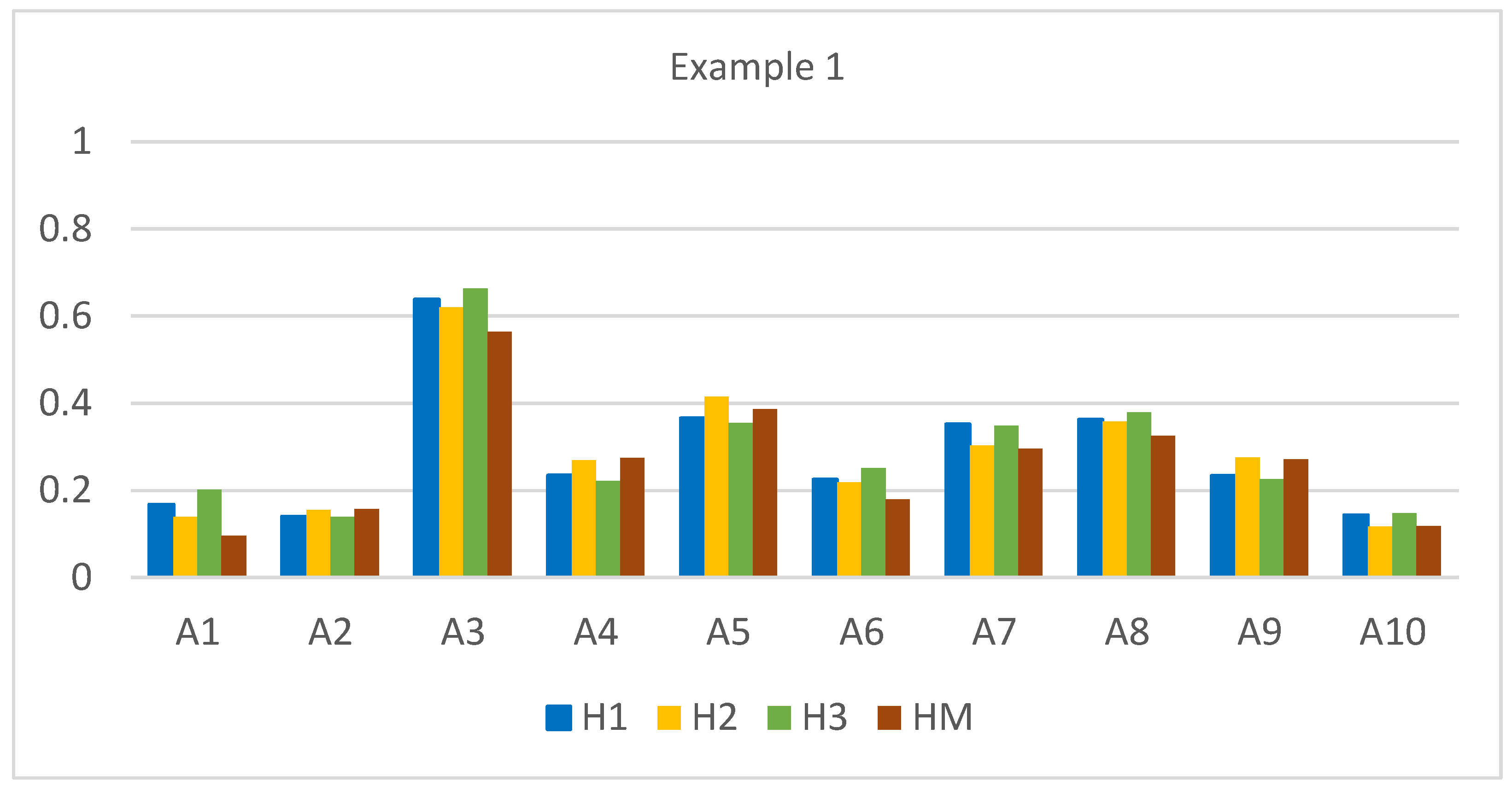

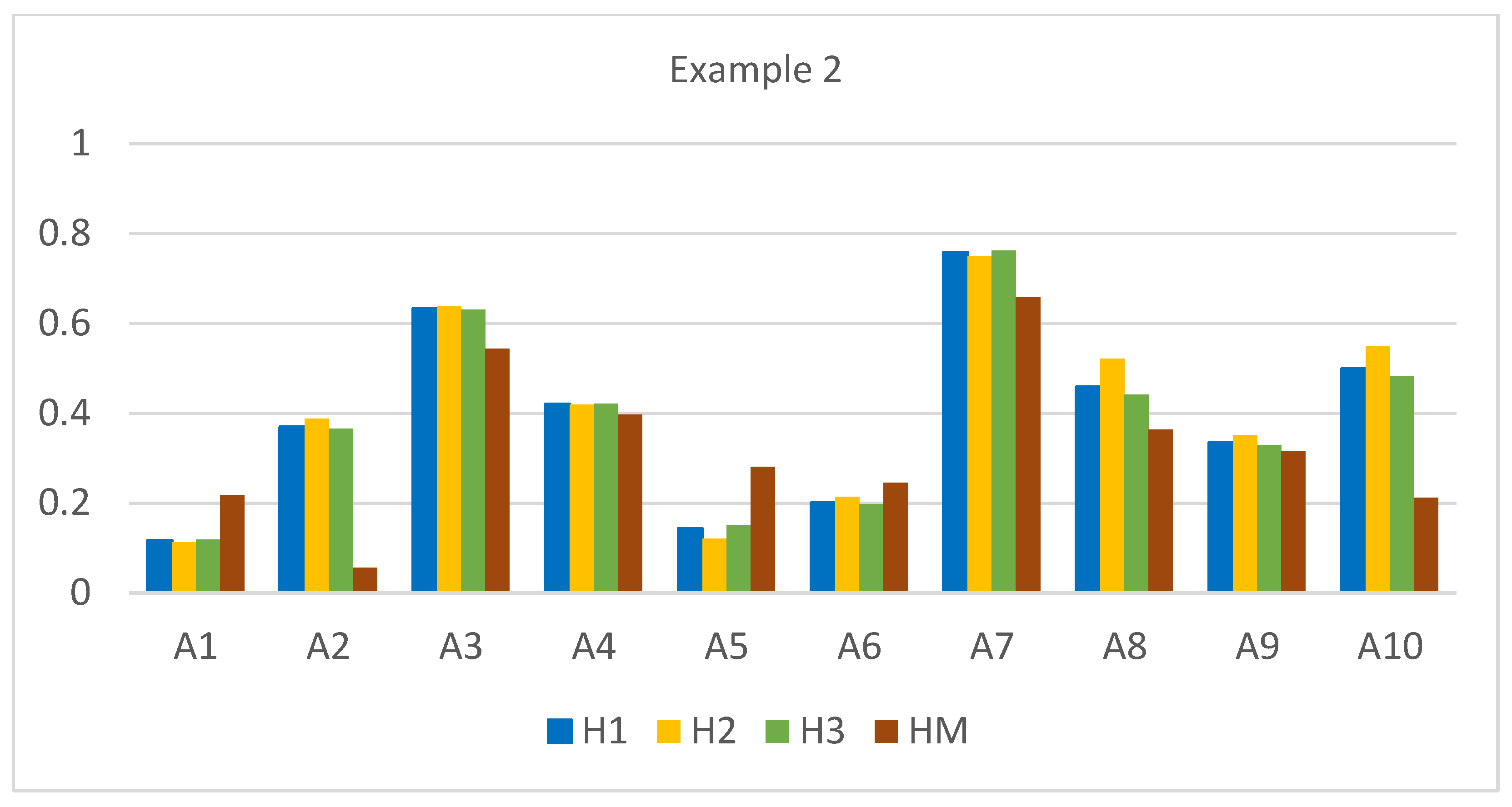

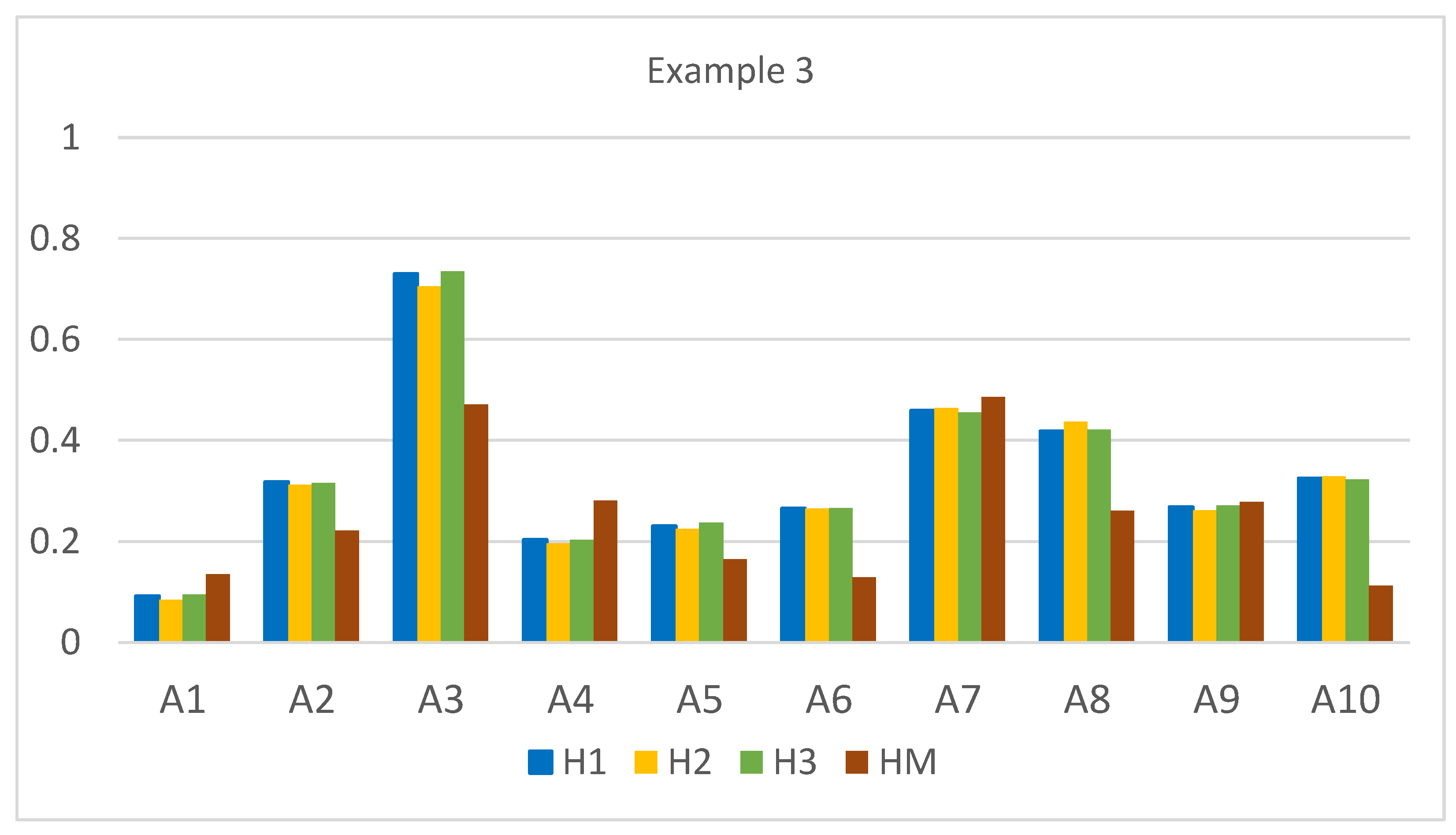

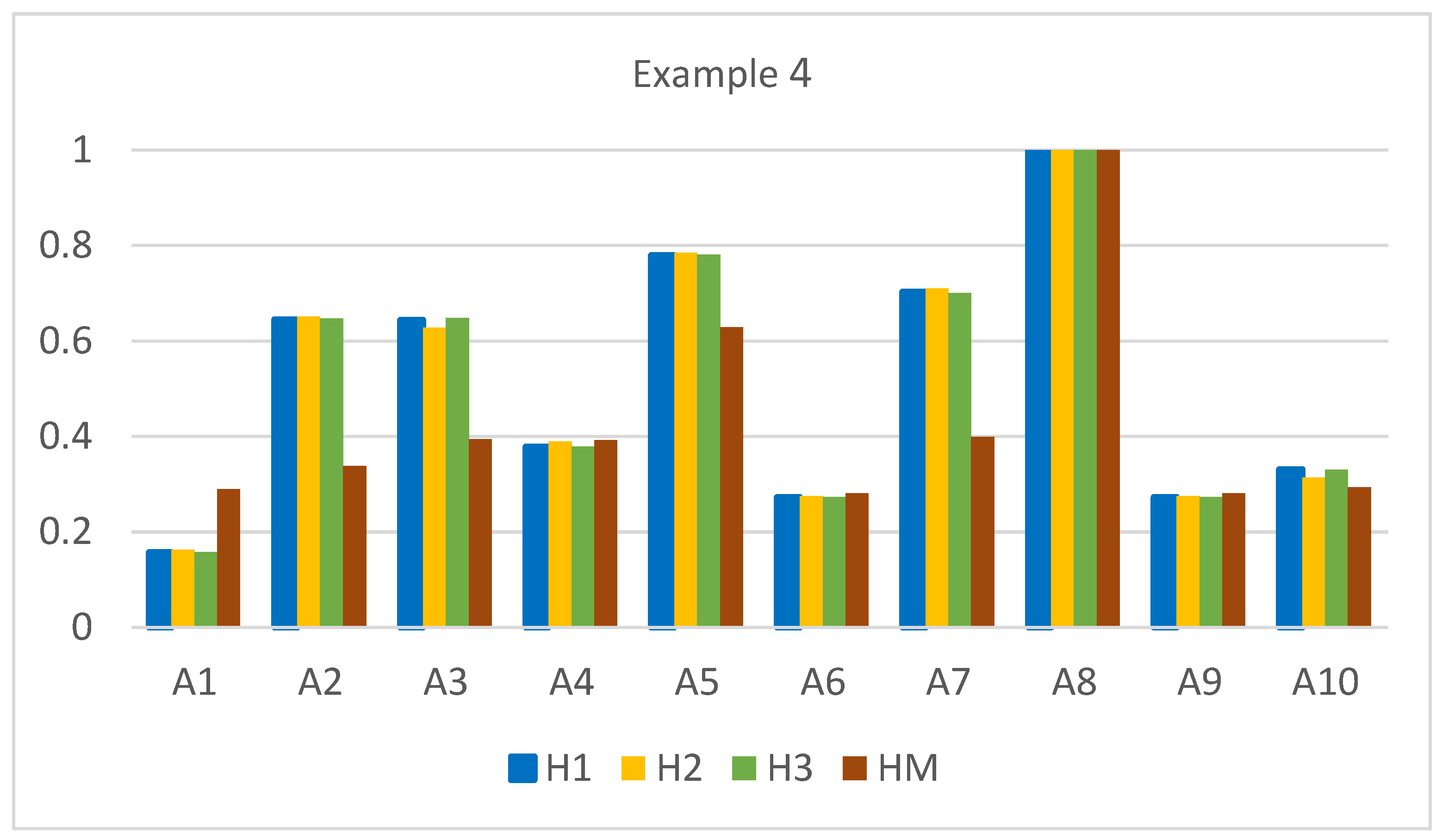

4. Numerical Examples

5. Conclusions

- Developing a modification of the Hellwig measure that uses the Mahalanobis distance, which takes the correlations between criteria into account and thereby accounts for the asymmetrical relationships between them.

- Investigating the impact of the distance measure and normalization variants of Hellwig procedures for the evaluation and rank ordering of alternatives.

- Analyzing the impact of the correlation between criteria on the consistency of results obtained using different variants of Hellwig’s method.

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| H | Hellwig’s method based on Euclidean distance |
| H_M | Hellwig’s method based on Mahalanobis distance |
| H1 | Hellwig’s method based on Euclidean distance with vector normalization |
| H2 | Hellwig’s method based on Euclidean distance with min-max normalization |
| H3 | Hellwig’s method based on Euclidean distance with sum normalization |
| TODIM | An acronym in Portuguese for Interactive and Multicriteria Decision-Making |
| MCDM | Multi-criteria decision-making |
| VIKOR | VIseKriterijumska Optimizacija I Kompromisno Resenje |
| TOPSIS | Technique for Ordering Preferences by Similarity to Ideal Solution |
| DARP | Distances to Aspiration Reference Point method |

References

- Figueira, J.; Ehrgott, M.; Greco, S. Multiple Criteria Decision Analysis: State of the Art Surveys; Springer Science + Business Media: Berlin/Heidelberg, Germany, 2005.

- Roy, B. Multicriteria Methodology for Decision Aiding; Kluwer Academic Publisher: Dordrecht, The Netherlands, 1996.

- Basílio, M.P.; Pereira, V.; Costa, H.G.; Santos, M.; Ghosh, A. A Systematic Review of the Applications of Multi-Criteria Decision Aid Methods (1977–2022). Electronics 2022, 11, 1720.

- Yalcin, A.S.; Kilic, H.S.; Delen, D. The Use of Multi-Criteria Decision-Making Methods in Business Analytics: A Comprehensive Literature Review. Technol. Forecast. Soc. Change 2022, 174, 121193.

- Štilić, A.; Puška, A. Integrating Multi-Criteria Decision-Making Methods with Sustainable Engineering: A Comprehensive Review of Current Practices. Eng 2023, 4, 1536–1549.

- Cegan, J.C.; Filion, A.M.; Keisler, J.M.; Linkov, I. Trends and Applications of Multi-Criteria Decision Analysis in Environmental Sciences: Literature Review. Environ. Syst. Decis. 2017, 37, 123–133.

- Diaz-Balteiro, L.; González-Pachón, J.; Romero, C. Measuring Systems Sustainability with Multi-Criteria Methods: A Critical Review. Eur. J. Oper. Res. 2017, 258, 607–616.

- Kaya, İ.; Çolak, M.; Terzi, F. A Comprehensive Review of Fuzzy Multi Criteria Decision Making Methodologies for Energy Policy Making. Energy Strategy Rev. 2019, 24, 207–228.

- Hwang, C.-L.; Yoon, K. (Eds.) Methods for Multiple Attribute Decision Making; Lecture Notes in Economics and Mathematical Systems; Springer: Berlin/Heidelberg, Germany, 1981; ISBN 978-3-642-48318-9.

- Hellwig, Z. Zastosowanie Metody Taksonomicznej Do Typologicznego Podziału Krajów Ze Względu Na Poziom Ich Rozwoju Oraz Zasoby i Strukturę Wykwalifikowanych Kadr [Application of the Taxonomic Method to the Typological Division of Countries According to the Level of Their Development and the Resources and Structure of Qualified Personnel]. Przegląd Statystyczny 1968, 4, 307–326.

- Opricovic, S.; Tzeng, G.-H. Compromise Solution by MCDM Methods: A Comparative Analysis of VIKOR and TOPSIS. Eur. J. Oper. Res. 2004, 156, 445–455.

- Roszkowska, E.; Filipowicz-Chomko, M.; Wachowicz, T. Using Individual and Common Reference Points to Measure the Performance of Alternatives in Multiple Criteria Evaluation. Oper. Res. Decis. 2020, 30, 77–96.

- Rezaei, J. Best-Worst Multi-Criteria Decision-Making Method. Omega 2015, 53, 49–57.

- Konarzewska-Gubała, E. Bipolar: Multiple Criteria Decision Aid Using Bipolar Reference System. LAMSADE Cahier Doc. 1989, 56.

- Jahan, A.; Edwards, K.L. A State-of-the-Art Survey on the Influence of Normalization Techniques in Ranking: Improving the Materials Selection Process in Engineering Design. Mater. Des. 2014, 65, 335–342.

- Çelen, A. Comparative Analysis of Normalization Procedures in TOPSIS Method: With an Application to Turkish Deposit Banking Market. Informatica 2014, 25, 185–208.

- Chakraborty, S.; Yeh, C.-H. A Simulation Based Comparative Study of Normalization Procedures in Multiattribute Decision Making. In Proceedings of the 6th WSEAS International Conference on Artificial Intelligence, Knowledge Engineering and Data Bases, Corfu Island, Greece, 16–19 February 2007; Citeseer: State College, PA, USA, 2007; Volume 6, pp. 102–109.

- Chakraborty, S.; Yeh, C.-H. A Simulation Comparison of Normalization Procedures for TOPSIS. In Proceedings of the 2009 International Conference on Computers and Industrial Engineering (CIE39), Troyes, France, 6–9 July 2009; IEEE, Institute of Electrical and Electronics Engineers: Piscataway, NJ, USA, 2009; pp. 1815–1820.

- Milani, A.S.; Shanian, A.; Madoliat, R.; Nemes, J.A. The Effect of Normalization Norms in Multiple Attribute Decision Making Models: A Case Study in Gear Material Selection. Struct. Multidiscip. Optim. 2005, 29, 312–318.

- Palczewski, K.; Sałabun, W. Influence of Various Normalization Methods in PROMETHEE II: An Empirical Study on the Selection of the Airport Location. Procedia Comput. Sci. 2019, 159, 2051–2060.

- Pavličić, D. Normalization Affects the Results of MADM Methods. Yugosl. J. Oper. Res. 2001, 11, 251–265.

- Vafaei, N.; Ribeiro, R.A.; Camarinha-Matos, L.M. Normalization Techniques for Multi-Criteria Decision Making: Analytical Hierarchy Process Case Study. In Technological Innovation for Cyber-Physical Systems, Proceedings of the 7th IFIP 5.5/SOCOLNET Doctoral Conference on Computing, Electrical and Industrial Systems, DoCEIS 2016, Costa de Caparica, Portugal, 11–13 April 2016; Camarinha-Matos, L.M., Falcão, A.J., Vafaei, N., Najdi, S., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 261–269.

- Zavadskas, E.K.; Zakarevicius, A.; Antucheviciene, J. Evaluation of Ranking Accuracy in Multi-Criteria Decisions. Informatica 2006, 17, 601–618.

- Mahalanobis, P.C. On the Generalised Distance in Statistics. Proc. Natl. Inst. Sci. 1936, 2, 49–55.

- Ghojogh, B.; Ghodsi, A.; Karray, F.; Crowley, M. Spectral, Probabilistic, and Deep Metric Learning: Tutorial and Survey. arXiv 2022, arXiv:2201.09267.

- Liu, D.; Qi, X.; Qiang, F.; Li, M.; Zhu, W.; Zhang, L.; Abrar Faiz, M.; Khan, M.I.; Li, T.; Cui, S. A Resilience Evaluation Method for a Combined Regional Agricultural Water and Soil Resource System Based on Weighted Mahalanobis Distance and a Gray-TOPSIS Model. J. Clean. Prod. 2019, 229, 667–679.

- Ponce, R.V.; Alcaraz, J.L.G. Evaluation of Technology Using TOPSIS in Presence of Multi-Collinearity in Attributes: Why Use the Mahalanobis Distance? Rev. Fac. Ing. Univ. Antioq. 2013, 31–42.

- Antuchevičienė, J.; Zavadskas, E.K.; Zakarevičius, A. Multiple Criteria Construction Management Decisions Considering Relations between Criteria. Technol. Econ. Dev. Econ. 2010, 16, 109–125.

- Wang, Z.-X.; Li, D.-D.; Zheng, H.-H. The External Performance Appraisal of China Energy Regulation: An Empirical Study Using a TOPSIS Method Based on Entropy Weight and Mahalanobis Distance. Int. J. Environ. Res. Public Health 2018, 15, 236.

- Chang, C.-H.; Lin, J.-J.; Lin, J.-H.; Chiang, M.-C. Domestic Open-End Equity Mutual Fund Performance Evaluation Using Extended TOPSIS Method with Different Distance Approaches. Expert Syst. Appl. 2010, 37, 4642–4649.

- Wang, Z.-X.; Wang, Y.-Y. Evaluation of the Provincial Competitiveness of the Chinese High-Tech Industry Using an Improved TOPSIS Method. Expert Syst. Appl. 2014, 41, 2824–2831.

- Ozmen, M. Logistics Competitiveness of OECD Countries Using an Improved TODIM Method. Sādhanā 2019, 44, 108.

- Wasid, M.; Ali, R. Multi-Criteria Clustering-Based Recommendation Using Mahalanobis Distance. Int. J. Reason.-Based Intell. Syst. 2020, 12, 96.

- Dong, H.; Yang, K.; Bai, G. Evaluation of TPGU Using Entropy—Improved TOPSIS—GRA Method in China. PLoS ONE 2022, 17, e0260974.

- Xiang, S.; Nie, F.; Zhang, C. Learning a Mahalanobis Distance Metric for Data Clustering and Classification. Pattern Recognit. 2008, 41, 3600–3612.

- Ghosh-Dastidar, S.; Adeli, H. Wavelet-Clustering-Neural Network Model for Freeway Incident Detection. Comput. Aided Civ. Infrastruct. Eng. 2003, 18, 325–338.

- Jefmański, B. Intuitionistic Fuzzy Synthetic Measure for Ordinal Data. In Classification and Data Analysis, Proceedings of the Conference of the Section on Classification and Data Analysis of the Polish Statistical Association, Szczecin, Poland, 18–20 September 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 53–72.

- Jefmański, B.; Roszkowska, E.; Kusterka-Jefmańska, M. Intuitionistic Fuzzy Synthetic Measure on the Basis of Survey Responses and Aggregated Ordinal Data. Entropy 2021, 23, 1636.

- Kusterka-Jefmańska, M.; Jefmański, B.; Roszkowska, E. Application of the Intuitionistic Fuzzy Synthetic Measure in the Subjective Quality of Life Measurement Based on Survey Data. In Modern Classification and Data Analysis, Proceedings of the Conference of the Section on Classification and Data Analysis of the Polish Statistical Association, Poznań, Poland, 8–10 September 2021; Springer: Berlin/Heidelberg, Germany, 2022; pp. 243–261.

- Roszkowska, E. The Intuitionistic Fuzzy Framework for Evaluation and Rank Ordering the Negotiation Offers. In Intelligent and Fuzzy Techniques for Emerging Conditions and Digital Transformation, Proceedings of the International Conference on Intelligent and Fuzzy Systems INFUS 2021, Istanbul, Turkey, 24–26 August 2021; Lecture Notes in Networks and Systems; Kahraman, C., Cebi, S., Onar, S., Oztaysi, B., Tolga, A.C., Sari, I.U., Eds.; Springer: Cham, Switzerland, 2021; Volume 308, pp. 58–65.

- Roszkowska, E.; Jefmański, B. Interval-Valued Intuitionistic Fuzzy Synthetic Measure (I-VIFSM) Based on Hellwig’s Approach in the Analysis of Survey Data. Mathematics 2021, 9, 201.

- Roszkowska, E.; Wachowicz, T.; Filipowicz-Chomko, M.; Łyczkowska-Hanćkowiak, A. The Extended Linguistic Hellwig’s Methods Based on Oriented Fuzzy Numbers and Their Application to the Evaluation of Negotiation Offers. Entropy 2022, 24, 1617.

- Mazur-Wierzbicka, E. Towards Circular Economy—A Comparative Analysis of the Countries of the European Union. Resources 2021, 10, 49.

- Balcerzak, A.P. Multiple-Criteria Evaluation of Quality of Human Capital in the European Union Countries. Econ. Sociol. 2016, 9, 11–26.

- Łuczak, A.; Wysocki, F. Rozmyta Wielokryterialna Metoda Hellwiga Porządkowania Liniowego Obiektów [Fuzzy Multi-Criteria Hellwig’s Method of Linear Ordering of Objects]. Pr. Nauk. Akad. Ekon. We Wrocławiu Taksonomia 2007, 14, 330–340.

- Golejewska, A. A Comparative Analysis of the Socio-Economic Potential of Polish Regions. Stud. Ind. Geogr. Comm. Pol. Geogr. Soc. 2016, 30, 7–22.

- Barska, A.; Jędrzejczak-Gas, J.; Wyrwa, J. Poland on the Path towards Sustainable Development—A Multidimensional Comparative Analysis of the Socio-Economic Development of Polish Regions. Sustainability 2022, 14, 10319.

- Jędrzejczak-Gas, J.; Barska, A. Assessment of the Economic Development of Polish Regions in the Context of the Implementation of the Concept of Sustainable Development—Taxonomic Analysis. Eur. J. Sustain. Dev. 2019, 8, 222.

- Iwacewicz-Orłowska, A.; Sokołowska, D. Ranking of EU Countries in Terms of the Value of Environmental Governance Indicators in 2010 and 2015. Ekon. Sr. Econ. Environ. 2018, 66, 13.

- Sompolska-Rzechuła, A. Selection of the Optimal Way of Linear Ordering of Objects: Case of Sustainable Development in EU Countries. Stat. Stat. Econ. J. 2021, 101, 24–36.

- Reiff, M.; Surmanová, K.; Balcerzak, A.P.; Pietrzak, M.B. Multiple Criteria Analysis of European Union Agriculture. J. Int. Stud. 2016, 9, 62–74.

- Gostkowski, M.; Koszela, G. Application of the Linear Ordering Methods to Analysis of the Agricultural Market in Poland. Metod. Ilościowe W Badaniach Ekon. 2019, 20, 167–177.

- Wysocki, F. Metody Taksonomiczne w Rozpoznawaniu Typów Ekonomicznych Rolnictwa i Obszarów Wiejskich [Taxonomic Methods in Recognizing Economic Types of Agriculture and Rural Areas]; Wydawnictwo Uniwersytetu Przyrodniczego w Poznaniu: Poznan, Poland, 2010; 399p.

- Krukowski, A.; Nowak, A.; Różańska-Boczula, M. Evaluation of Agriculture Development in the Member States of the European Union in the Years 2007–2015. In Proceedings of the 31st International Business Information Management Association Conference, Milan, Italy, 25–26 August 2018.

- Di Domizio, M. The Competitive Balance in the Italian Football League: A Taxonomic Approach; Department of Communication, University of Teramo: Teramo, Italy, 2008.

- Roszko-Wójtowicz, E.; Grzelak, M.M. The Use of Selected Methods of Linear Ordering to Assess the Innovation Performance of the European Union Member States. Econ. Environ. Stud. 2019, 19, 9–30.

- Gałecka, M.; Smolny, K. Evaluation of Theater Activity Using Hellwig’s Method. Optim. Econ. Stud. 2018, 38–50.

- Dmytrów, K. Comparison of Several Linear Ordering Methods for Selection of Locations in Order-Picking by Means of the Simulation Methods. Acta Univ. Lodz. Folia Oecon. 2018, 5, 81–96.

- Ahn, B.S.; Park, K.S. Comparing Methods for Multiattribute Decision Making with Ordinal Weights. Comput. Oper. Res. 2008, 35, 1660–1670.

- Ayan, B.; Abacıoğlu, S.; Basilio, M.P. A Comprehensive Review of the Novel Weighting Methods for Multi-Criteria Decision-Making. Information 2023, 14, 285.

- Choo, E.U.; Schoner, B.; Wedley, W.C. Interpretation of Criteria Weights in Multicriteria Decision Making. Comput. Ind. Eng. 1999, 37, 527–541.

- Da Silva, F.F.; Souza, C.L.M.; Silva, F.F.; Costa, H.G.; da Hora, H.R.M.; Erthal, M., Jr. Elicitation of Criteria Weights for Multicriteria Models: Bibliometrics, Typologies, Characteristics and Applications. Braz. J. Oper. Prod. Manag. 2021, 18, 1–28.

- Roszkowska, E. Rank Ordering Criteria Weighting Methods—A Comparative Overview. Optim. Econ. Stud. 2013, 5, 14–33.

- Zardari, N.H.; Ahmed, K.; Shirazi, S.M.; Yusop, Z.B. Weighting Methods and Their Effects on Multi-Criteria Decision Making Model Outcomes in Water Resources Management; Springer: Berlin/Heidelberg, Germany, 2015.

- Tzeng, G.-H.; Chen, T.-Y.; Wang, J.-C. A Weight-Assessing Method with Habitual Domains. Eur. J. Oper. Res. 1998, 110, 342–367.

| Alternative | C1 | C2 | C3 | C4 |
|---|---|---|---|---|
| A1 | 1 | 18 | 7 | 6 |
| A2 | 3 | 2 | 10 | 10 |
| A3 | 5 | 30 | 15 | 30 |
| A4 | 3 | 1 | 15 | 20 |
| A5 | 8 | 10 | 20 | 8 |
| A6 | 2 | 20 | 10 | 5 |
| A7 | 10 | 4 | 6 | 25 |
| A8 | 12 | 25 | 10 | 2 |
| A9 | 5 | 5 | 15 | 8 |
| A10 | 6 | 2 | 5 | 9 |
| Ideal solution | 12 | 30 | 20 | 30 |

Correlation matrix:

| | C1 | C2 | C3 | C4 |
|---|---|---|---|---|
| C1 | 1.000 | 0.089 | 0.003 | 0.038 |
| C2 | 0.089 | 1.000 | 0.103 | −0.003 |
| C3 | 0.003 | 0.103 | 1.000 | 0.116 |
| C4 | 0.038 | −0.003 | 0.116 | 1.000 |
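The correlation matrix reported with this decision matrix can be recomputed from the ratings. The sketch below assumes that the reported values are Pearson coefficients between the criteria columns of the raw data for A1 to A10 (the ideal row excluded); the variable names are illustrative.

```python
import numpy as np

# Decision matrix of Example 1 (alternatives A1..A10, criteria C1..C4).
X = np.array([
    [1, 18, 7, 6], [3, 2, 10, 10], [5, 30, 15, 30], [3, 1, 15, 20],
    [8, 10, 20, 8], [2, 20, 10, 5], [10, 4, 6, 25], [12, 25, 10, 2],
    [5, 5, 15, 8], [6, 2, 5, 9],
], dtype=float)

# Pearson correlations between columns (criteria); rows are observations.
R = np.corrcoef(X, rowvar=False)
print(np.round(R, 3))
```

All off-diagonal coefficients in this example are small in absolute value, so the criteria are close to uncorrelated and the Mahalanobis-based ranking is expected to stay close to the Euclidean-based ones.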

| [0,0.1) | [0.1,0.2) | [0.2,0.3) | [0.3,0.4) | [0.4,0.5) | [0.5,0.6) | [0.6,0.7) | [0.7,0.8) | [0.8,0.9) | [0.9,1.0) | 1 |

| negligible | weak | moderate | strong | very strong | ||||||

| Alternative | dE (H1) | Value (H1) | Rank (H1) | dE (H2) | Value (H2) | Rank (H2) | dE (H3) | Value (H3) | Rank (H3) | dM | Value (HM) | Rank (HM) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.211 | 0.168 | 8 | 0.408 | 0.139 | 9 | 0.080 | 0.202 | 8 | 2.333 | 0.096 | 10 |
| A2 | 0.218 | 0.141 | 10 | 0.400 | 0.155 | 8 | 0.086 | 0.140 | 10 | 2.177 | 0.157 | 8 |
| A3 | 0.092 | 0.639 | 1 | 0.180 | 0.620 | 1 | 0.034 | 0.663 | 1 | 1.123 | 0.565 | 1 |
| A4 | 0.194 | 0.235 | 5 | 0.345 | 0.270 | 6 | 0.078 | 0.222 | 7 | 1.872 | 0.275 | 5 |
| A5 | 0.161 | 0.366 | 2 | 0.277 | 0.415 | 2 | 0.064 | 0.355 | 3 | 1.584 | 0.387 | 2 |
| A6 | 0.197 | 0.225 | 7 | 0.370 | 0.219 | 7 | 0.075 | 0.252 | 5 | 2.116 | 0.180 | 7 |
| A7 | 0.165 | 0.352 | 4 | 0.330 | 0.303 | 4 | 0.065 | 0.349 | 4 | 1.819 | 0.296 | 4 |
| A8 | 0.162 | 0.363 | 3 | 0.304 | 0.358 | 3 | 0.062 | 0.380 | 2 | 1.741 | 0.326 | 3 |
| A9 | 0.195 | 0.234 | 6 | 0.342 | 0.276 | 5 | 0.077 | 0.226 | 6 | 1.880 | 0.272 | 6 |
| A10 | 0.217 | 0.144 | 9 | 0.418 | 0.117 | 10 | 0.085 | 0.148 | 9 | 2.277 | 0.118 | 9 |
| d0 | 0.254 | | | 0.473 | | | 0.100 | | | 2.582 | | |

| Alternative | C1 | C2 | C3 | C4 |
|---|---|---|---|---|
| A1 | 1 | 4 | 3 | 5 |
| A2 | 4 | 10 | 12 | 10 |
| A3 | 5 | 20 | 13 | 33 |
| A4 | 3 | 12 | 9 | 20 |
| A5 | 2 | 2 | 2 | 8 |
| A6 | 2 | 8 | 6 | 5 |
| A7 | 10 | 16 | 16 | 25 |
| A8 | 12 | 20 | 20 | 2 |
| A9 | 3 | 12 | 9 | 10 |
| A10 | 6 | 24 | 18 | 9 |
| Ideal | 12 | 20 | 20 | 25 |

Correlation matrix:

| | C1 | C2 | C3 | C4 |
|---|---|---|---|---|
| C1 | 1.000 | 0.708 | 0.881 | 0.136 |
| C2 | 0.708 | 1.000 | 0.922 | 0.350 |
| C3 | 0.881 | 0.922 | 1.000 | 0.200 |
| C4 | 0.136 | 0.350 | 0.200 | 1.000 |

| [0,0.1) | [0.1,0.2) | [0.2,0.3) | [0.3,0.4) | [0.4,0.5) | [0.5,0.6) | [0.6,0.7) | [0.7,0.8) | [0.8,0.9) | [0.9,1.0) | 1 |

| negligible | weak | moderate | strong | very strong | ||||||

| Alternative | dE (H1) | Value (H1) | Rank (H1) | dE (H2) | Value (H2) | Rank (H2) | dE (H3) | Value (H3) | Rank (H3) | dM | Value (HM) | Rank (HM) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.255 | 0.117 | 10 | 0.470 | 0.113 | 10 | 0.097 | 0.119 | 10 | 1.959 | 0.218 | 8 |
| A2 | 0.182 | 0.370 | 6 | 0.324 | 0.388 | 6 | 0.070 | 0.365 | 6 | 2.364 | 0.056 | 10 |
| A3 | 0.106 | 0.632 | 2 | 0.192 | 0.638 | 2 | 0.041 | 0.630 | 2 | 1.141 | 0.544 | 2 |
| A4 | 0.168 | 0.420 | 5 | 0.308 | 0.419 | 5 | 0.064 | 0.421 | 5 | 1.510 | 0.397 | 3 |
| A5 | 0.248 | 0.143 | 9 | 0.466 | 0.121 | 9 | 0.093 | 0.151 | 9 | 1.800 | 0.281 | 6 |
| A6 | 0.231 | 0.201 | 8 | 0.417 | 0.214 | 8 | 0.088 | 0.198 | 8 | 1.891 | 0.245 | 7 |
| A7 | 0.070 | 0.758 | 1 | 0.133 | 0.750 | 1 | 0.026 | 0.762 | 1 | 0.855 | 0.659 | 1 |
| A8 | 0.156 | 0.459 | 4 | 0.254 | 0.521 | 4 | 0.062 | 0.441 | 4 | 1.594 | 0.363 | 4 |
| A9 | 0.192 | 0.334 | 7 | 0.344 | 0.351 | 7 | 0.074 | 0.329 | 7 | 1.712 | 0.316 | 5 |
| A10 | 0.145 | 0.499 | 3 | 0.238 | 0.550 | 3 | 0.057 | 0.483 | 3 | 1.972 | 0.212 | 9 |
| d0 | 0.289 | | | 0.530 | | | 0.110 | | | 2.504 | | |

| Alternative | C1 | C2 | C3 | C4 |
|---|---|---|---|---|
| A1 | 1 | 2 | 2 | 5 |
| A2 | 4 | 6 | 10 | 10 |
| A3 | 5 | 23 | 24 | 33 |
| A4 | 3 | 1 | 6 | 10 |
| A5 | 2 | 10 | 4 | 8 |
| A6 | 4 | 7 | 8 | 6 |
| A7 | 10 | 6 | 12 | 15 |
| A8 | 12 | 20 | 8 | 6 |
| A9 | 3 | 6 | 7 | 10 |
| A10 | 6 | 8 | 10 | 6 |
| Ideal | 12 | 23 | 24 | 33 |

Correlation matrix:

| | C1 | C2 | C3 | C4 |
|---|---|---|---|---|
| C1 | 1.000 | 0.501 | 0.328 | 0.088 |
| C2 | 0.501 | 1.000 | 0.676 | 0.575 |
| C3 | 0.328 | 0.676 | 1.000 | 0.907 |
| C4 | 0.088 | 0.575 | 0.907 | 1.000 |

| [0,0.1) | [0.1,0.2) | [0.2,0.3) | [0.3,0.4) | [0.4,0.5) | [0.5,0.6) | [0.6,0.7) | [0.7,0.8) | [0.8,0.9) | [0.9,1.0) | 1 |

| negligible | weak | moderate | strong | very strong | ||||||

| Alternative | dE (H1) | Value (H1) | Rank (H1) | dE (H2) | Value (H2) | Rank (H2) | dE (H3) | Value (H3) | Rank (H3) | dM | Value (HM) | Rank (HM) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.310 | 0.092 | 10 | 0.494 | 0.084 | 10 | 0.120 | 0.095 | 10 | 2.223 | 0.135 | 8 |
| A2 | 0.233 | 0.318 | 5 | 0.371 | 0.312 | 5 | 0.090 | 0.316 | 5 | 2.001 | 0.221 | 6 |
| A3 | 0.092 | 0.730 | 1 | 0.159 | 0.705 | 1 | 0.035 | 0.735 | 1 | 1.360 | 0.471 | 2 |
| A4 | 0.272 | 0.204 | 9 | 0.434 | 0.196 | 9 | 0.105 | 0.203 | 9 | 1.848 | 0.281 | 3 |
| A5 | 0.263 | 0.231 | 8 | 0.418 | 0.225 | 8 | 0.101 | 0.237 | 8 | 2.146 | 0.165 | 7 |
| A6 | 0.251 | 0.266 | 7 | 0.397 | 0.265 | 6 | 0.097 | 0.266 | 7 | 2.239 | 0.129 | 9 |
| A7 | 0.185 | 0.460 | 2 | 0.289 | 0.464 | 2 | 0.072 | 0.455 | 2 | 1.320 | 0.486 | 1 |
| A8 | 0.199 | 0.419 | 3 | 0.304 | 0.437 | 3 | 0.076 | 0.421 | 3 | 1.900 | 0.261 | 5 |
| A9 | 0.250 | 0.269 | 6 | 0.398 | 0.262 | 7 | 0.096 | 0.271 | 6 | 1.857 | 0.278 | 4 |
| A10 | 0.231 | 0.325 | 4 | 0.362 | 0.329 | 4 | 0.089 | 0.323 | 4 | 2.282 | 0.112 | 10 |
| d0 | 0.342 | | | 0.540 | | | 0.132 | | | 2.570 | | |

| Alternative | C1 | C2 | C3 | C4 |
|---|---|---|---|---|
| A1 | 2 | 4 | 3 | 6 |
| A2 | 5 | 10 | 9 | 10 |
| A3 | 7 | 8 | 9 | 9 |
| A4 | 3 | 6 | 5 | 10 |
| A5 | 6 | 10 | 10 | 13 |
| A6 | 3 | 6 | 4 | 6 |
| A7 | 6 | 12 | 6 | 14 |
| A8 | 8 | 12 | 10 | 16 |
| A9 | 3 | 6 | 4 | 6 |
| A10 | 6 | 5 | 3 | 7 |
| Ideal | 8 | 12 | 10 | 16 |

Correlation matrix:

| | C1 | C2 | C3 | C4 |
|---|---|---|---|---|
| C1 | 1.000 | 0.730 | 0.723 | 0.723 |
| C2 | 0.730 | 1.000 | 0.801 | 0.910 |
| C3 | 0.723 | 0.801 | 1.000 | 0.748 |
| C4 | 0.723 | 0.910 | 0.748 | 1.000 |

| [0,0.1) | [0.1,0.2) | [0.2,0.3) | [0.3,0.4) | [0.4,0.5) | [0.5,0.6) | [0.6,0.7) | [0.7,0.8) | [0.8,0.9) | [0.9,1.0) | 1 |

| negligible | weak | moderate | strong | very strong | ||||||

| Alternative | dE (H1) | Value (H1) | Rank (H1) | dE (H2) | Value (H2) | Rank (H2) | dE (H3) | Value (H3) | Rank (H3) | dM | Value (HM) | Rank (HM) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.162 | 0.157 | 10 | 0.500 | 0.162 | 10 | 0.055 | 0.157 | 10 | 1.552 | 0.289 | 8 |
| A2 | 0.068 | 0.645 | 4 | 0.208 | 0.651 | 4 | 0.023 | 0.647 | 5 | 1.446 | 0.338 | 6 |
| A3 | 0.068 | 0.644 | 5 | 0.222 | 0.628 | 5 | 0.023 | 0.648 | 4 | 1.324 | 0.394 | 4 |
| A4 | 0.119 | 0.378 | 6 | 0.365 | 0.389 | 6 | 0.041 | 0.378 | 6 | 1.326 | 0.392 | 5 |
| A5 | 0.042 | 0.780 | 2 | 0.128 | 0.785 | 2 | 0.014 | 0.781 | 2 | 0.810 | 0.629 | 2 |
| A6 | 0.140 | 0.273 | 8 | 0.432 | 0.275 | 8 | 0.047 | 0.273 | 8 | 1.573 | 0.280 | 9 |
| A7 | 0.057 | 0.703 | 3 | 0.173 | 0.710 | 3 | 0.020 | 0.700 | 3 | 1.312 | 0.399 | 3 |
| A8 | 0.000 | 1.000 | 1 | 0.000 | 1.000 | 1 | 0.000 | 1.000 | 1 | 0.000 | 1.000 | 1 |
| A9 | 0.140 | 0.273 | 8 | 0.432 | 0.275 | 8 | 0.047 | 0.273 | 8 | 1.573 | 0.280 | 9 |
| A10 | 0.129 | 0.331 | 7 | 0.410 | 0.313 | 7 | 0.044 | 0.330 | 7 | 1.544 | 0.293 | 7 |
| d0 | 0.192 | | | 0.597 | | | 0.065 | | | 2.183 | | |

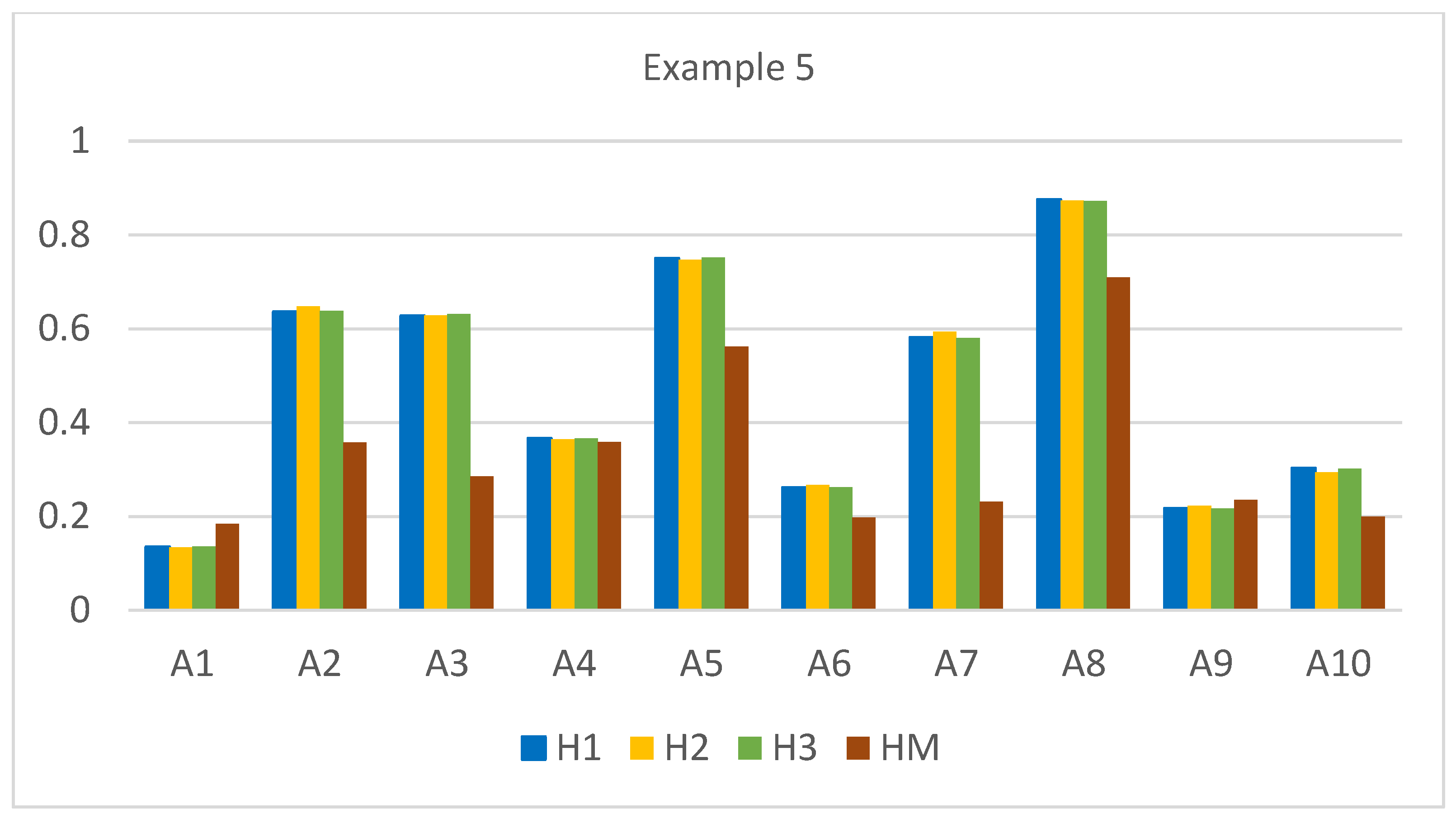

| Alternative | C1 | C2 | C3 | C4 |
|---|---|---|---|---|
| A1 | 2 | 4 | 3 | 6 |
| A2 | 5 | 10 | 9 | 10 |
| A3 | 7 | 8 | 9 | 9 |
| A4 | 3 | 6 | 5 | 10 |
| A5 | 6 | 9 | 10 | 13 |
| A6 | 4 | 6 | 4 | 5 |
| A7 | 6 | 12 | 6 | 9 |
| A8 | 8 | 12 | 8 | 16 |
| A9 | 3 | 6 | 3 | 6 |
| A10 | 6 | 5 | 3 | 7 |
| Ideal | 8 | 12 | 10 | 16 |

Correlation matrix:

| | C1 | C2 | C3 | C4 |
|---|---|---|---|---|
| C1 | 1.000 | 0.720 | 0.656 | 0.670 |
| C2 | 0.720 | 1.000 | 0.711 | 0.731 |
| C3 | 0.656 | 0.711 | 1.000 | 0.747 |
| C4 | 0.670 | 0.731 | 0.747 | 1.000 |

| [0,0.1) | [0.1,0.2) | [0.2,0.3) | [0.3,0.4) | [0.4,0.5) | [0.5,0.6) | [0.6,0.7) | [0.7,0.8) | [0.8,0.9) | [0.9,1.0) | 1 |

| negligible | weak | moderate | strong | very strong | ||||||

| Alternative | dE (H1) | Value (H1) | Rank (H1) | dE (H2) | Value (H2) | Rank (H2) | dE (H3) | Value (H3) | Rank (H3) | dM | Value (HM) | Rank (HM) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.166 | 0.135 | 10 | 0.489 | 0.134 | 10 | 0.056 | 0.136 | 10 | 1.657 | 0.184 | 10 |
| A2 | 0.070 | 0.636 | 3 | 0.198 | 0.648 | 3 | 0.024 | 0.638 | 3 | 1.303 | 0.358 | 4 |
| A3 | 0.072 | 0.628 | 4 | 0.210 | 0.629 | 4 | 0.024 | 0.631 | 4 | 1.451 | 0.285 | 5 |
| A4 | 0.122 | 0.366 | 6 | 0.359 | 0.364 | 6 | 0.041 | 0.366 | 6 | 1.301 | 0.359 | 3 |
| A5 | 0.048 | 0.750 | 2 | 0.143 | 0.747 | 2 | 0.016 | 0.752 | 2 | 0.889 | 0.562 | 2 |
| A6 | 0.142 | 0.261 | 8 | 0.414 | 0.267 | 8 | 0.048 | 0.262 | 8 | 1.628 | 0.198 | 9 |
| A7 | 0.081 | 0.581 | 5 | 0.229 | 0.594 | 5 | 0.027 | 0.580 | 5 | 1.560 | 0.231 | 7 |
| A8 | 0.024 | 0.875 | 1 | 0.071 | 0.873 | 1 | 0.008 | 0.872 | 1 | 0.588 | 0.710 | 1 |
| A9 | 0.150 | 0.217 | 9 | 0.439 | 0.223 | 9 | 0.051 | 0.217 | 9 | 1.552 | 0.235 | 6 |
| A10 | 0.134 | 0.303 | 7 | 0.399 | 0.294 | 7 | 0.045 | 0.302 | 7 | 1.623 | 0.200 | 8 |
| d0 | 0.192 | | | 0.565 | | | 0.065 | | | 2.030 | | |

| Example | Correlation between Criteria | Relationships between Hi Measures | Relationships between Hi and HM Measure |
|---|---|---|---|
| Example 1 | Negligible or weak | Spearman: very strong; Pearson: very strong | Spearman: strong or very strong; Pearson: very strong |
| Example 2 | From weak to very strong | Spearman: very strong; Pearson: very strong | Spearman: moderate; Pearson: moderate or strong |
| Example 3 | From negligible to very strong | Spearman: very strong; Pearson: very strong | Spearman: weak or moderate; Pearson: strong |
| Example 4 | Strong and very strong | Spearman: very strong; Pearson: very strong | Spearman: very strong; Pearson: strong |
| Example 5 | Moderate and strong | Spearman: very strong; Pearson: very strong | Spearman: strong; Pearson: strong |
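The rank-based comparisons summarized in this table can be illustrated with the rankings reported for Example 2. The sketch below computes Spearman's coefficient between the H1 and HM rankings using the textbook formula for rankings without ties; the helper name is illustrative, and the verbal strength labels are those of the table, not computed here.

```python
def spearman_from_ranks(r1, r2):
    """Spearman's rho for two rankings without ties: 1 - 6*sum(d^2) / (n*(n^2 - 1))."""
    if len(r1) != len(r2):
        raise ValueError("rankings must have the same length")
    n = len(r1)
    d2 = sum((a - b) ** 2 for a, b in zip(r1, r2))
    return 1.0 - 6.0 * d2 / (n * (n ** 2 - 1))

# Ranks of A1..A10 in Example 2, copied from the results table.
rank_h1 = [10, 6, 2, 5, 9, 8, 1, 4, 7, 3]
rank_hm = [8, 10, 2, 3, 6, 7, 1, 4, 5, 9]

rho = spearman_from_ranks(rank_h1, rank_hm)
print(round(rho, 3))
```

Here the squared rank differences sum to 74, so rho = 1 − 444/990 ≈ 0.55, in line with the "moderate" Spearman relationship reported for Example 2. For rankings with ties, such as those in Example 4, this shortcut formula does not apply and a tie-corrected computation is needed.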

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Roszkowska, E. Modifying Hellwig’s Method for Multi-Criteria Decision-Making with Mahalanobis Distance for Addressing Asymmetrical Relationships. Symmetry 2024, 16, 77. https://doi.org/10.3390/sym16010077
