Applications of Convolutional Neural Networks to Extracting Oracle Bone Inscriptions from Three-Dimensional Models

Abstract

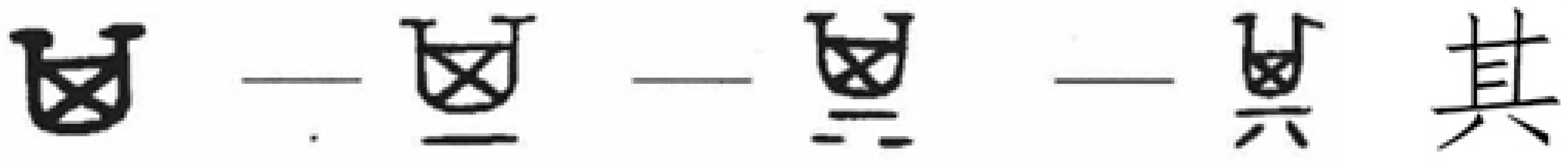

1. Introduction

2. Related Studies

2.1. Oracle Bone Information Processing

2.2. OBC Related 3D Segmentation and Object Detection

3. Material and Methods

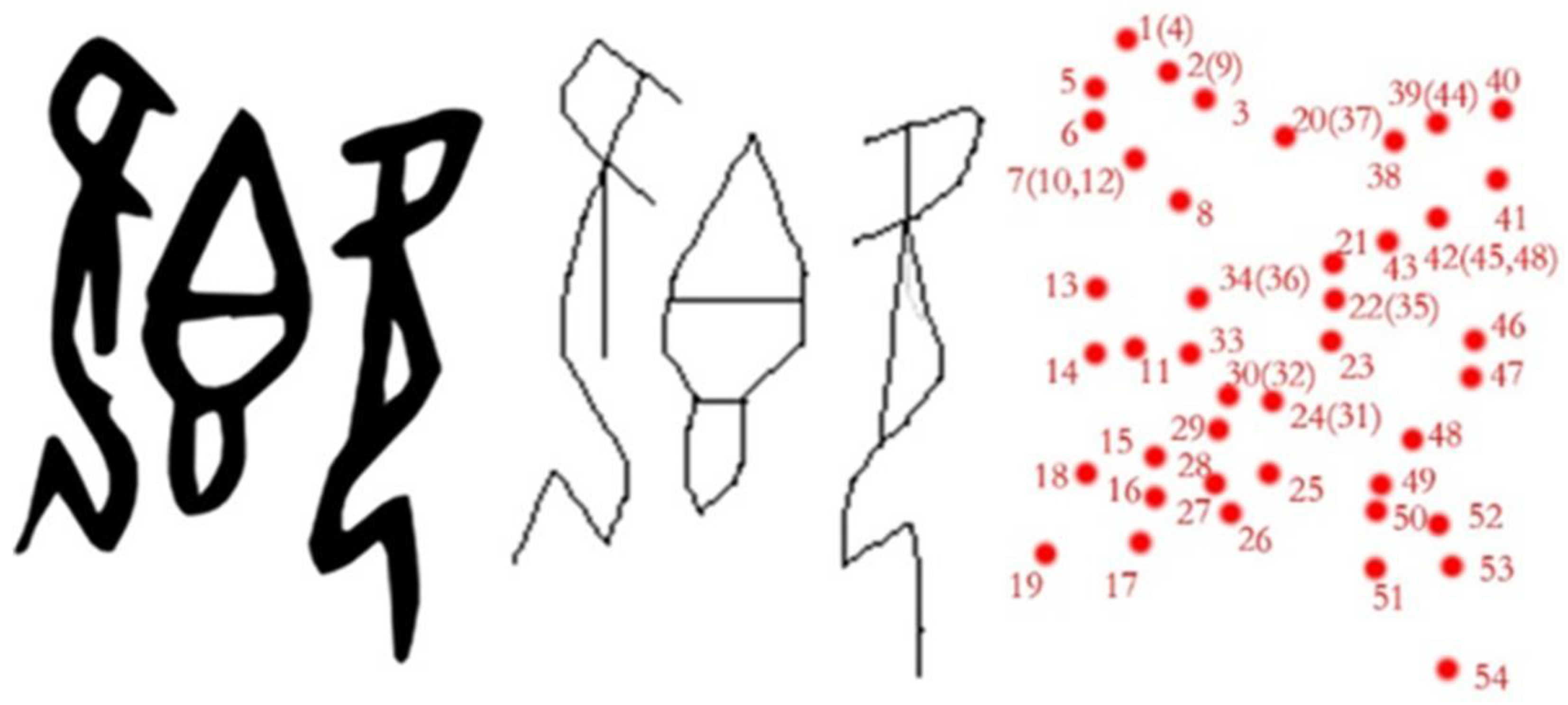

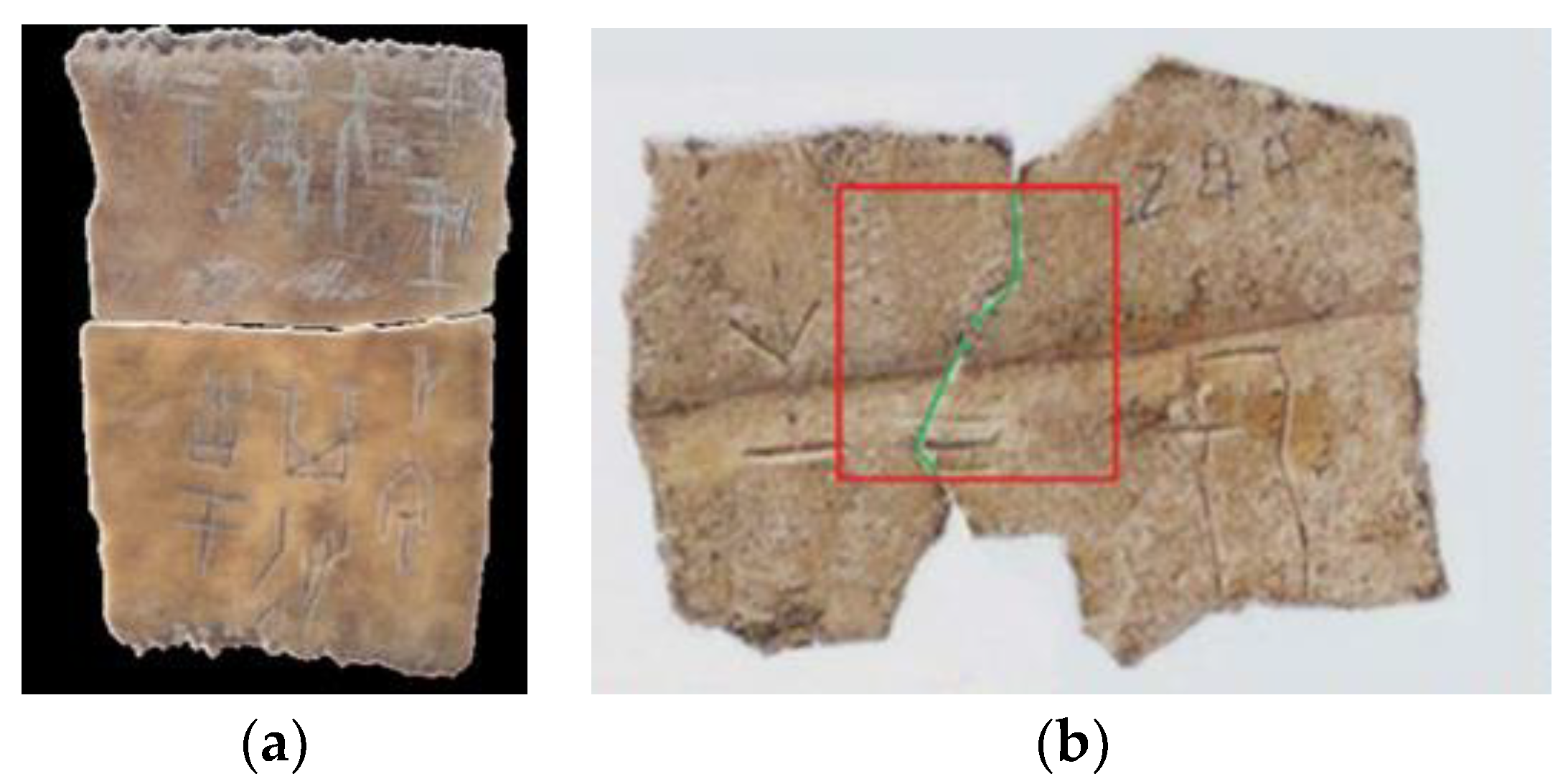

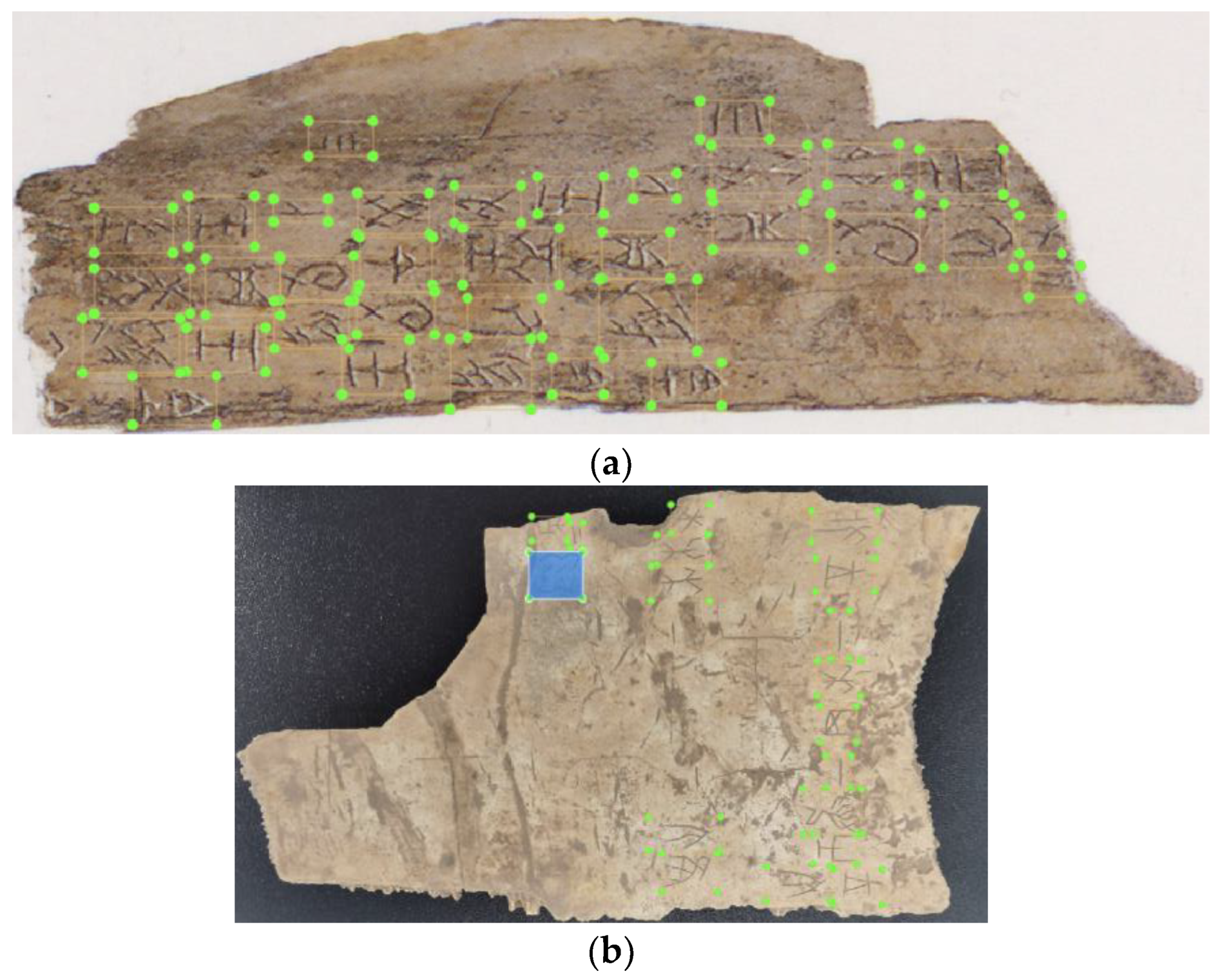

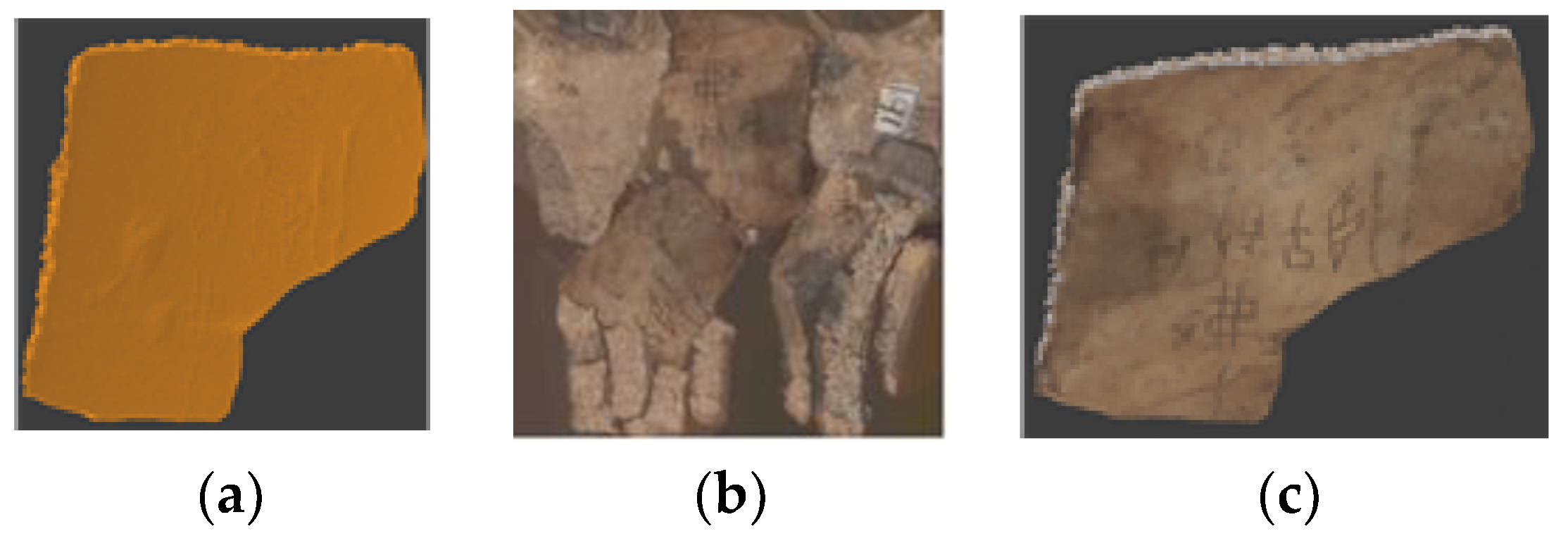

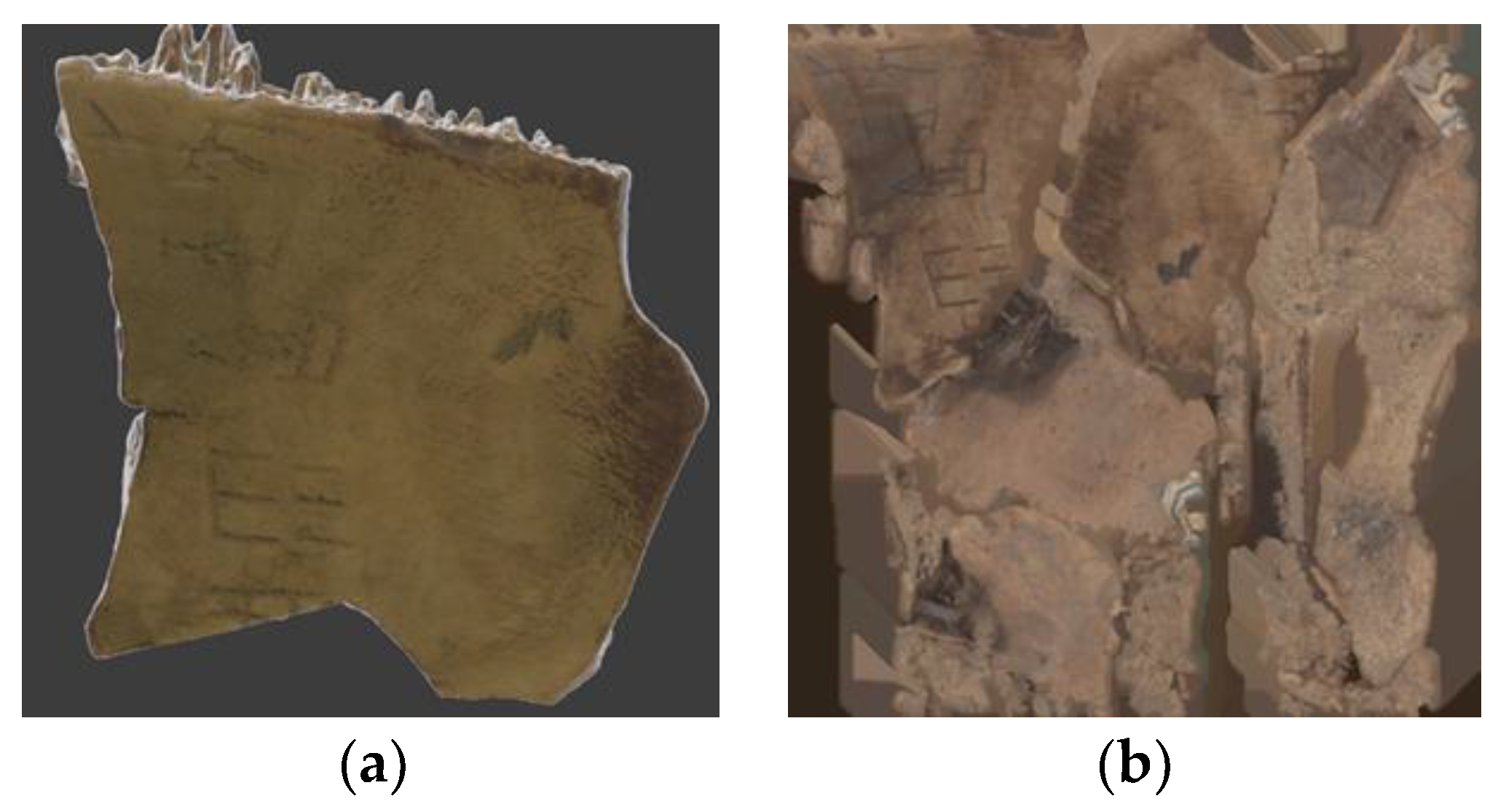

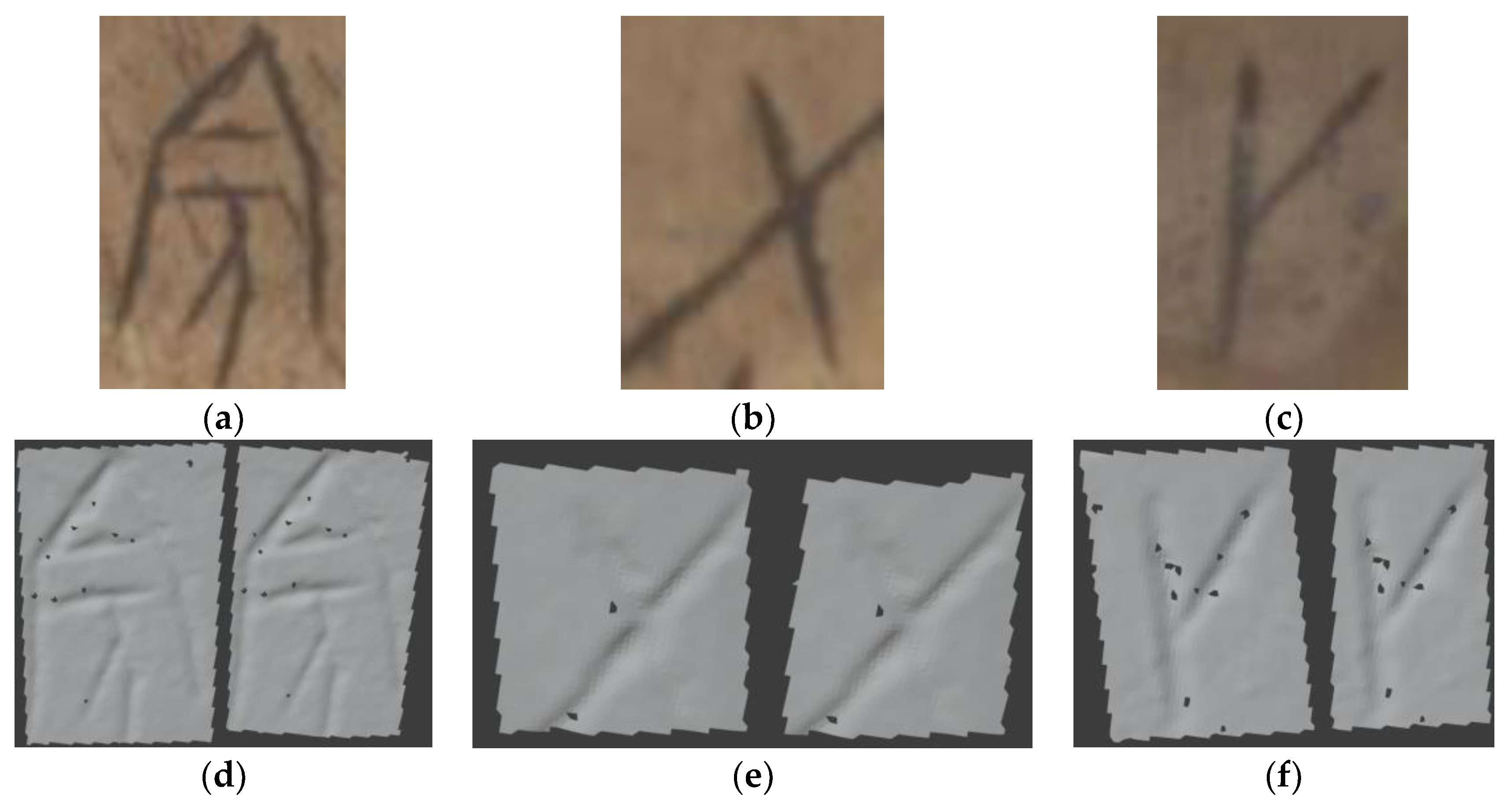

3.1. The Construction of Datasets

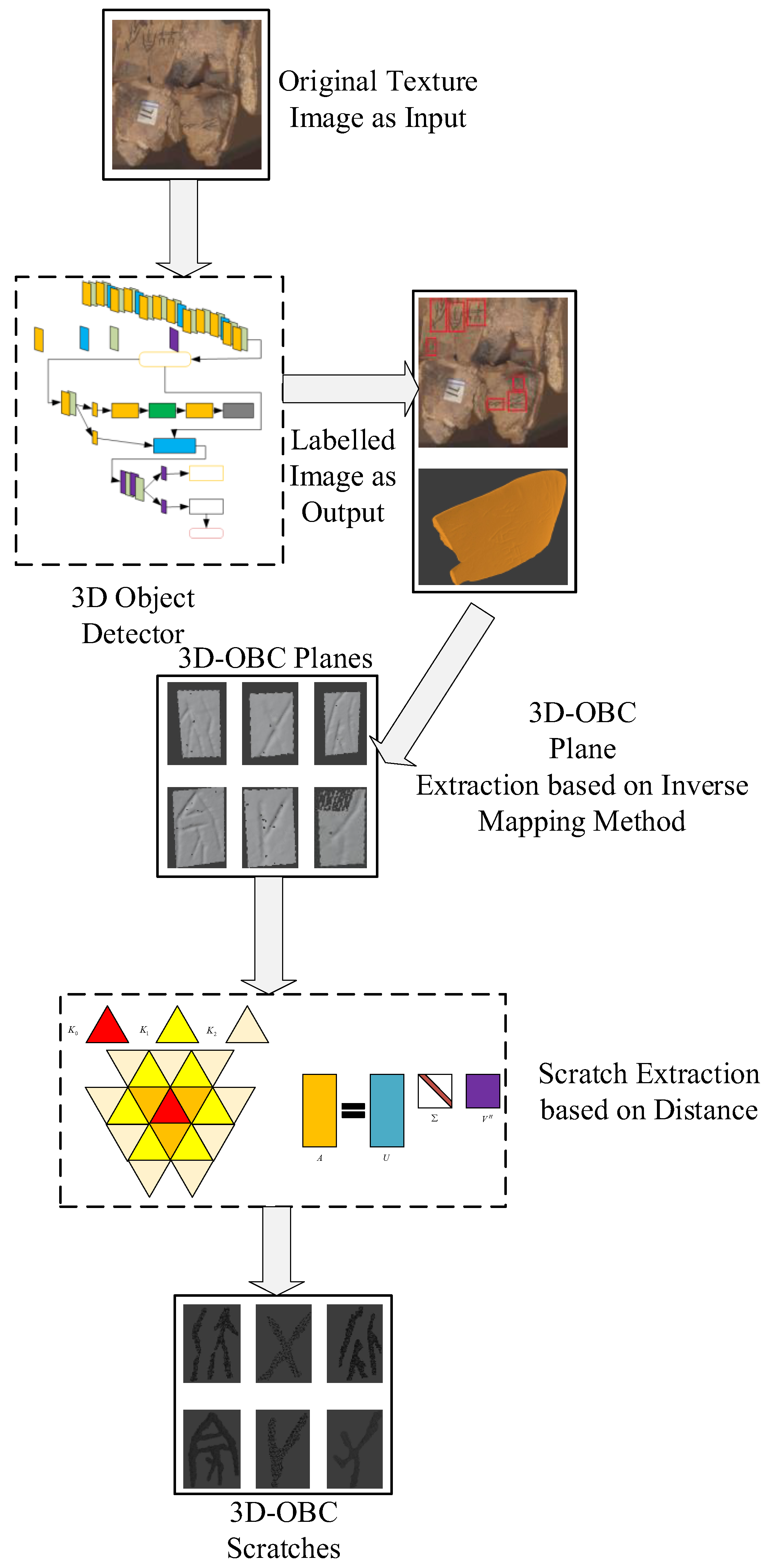

3.2. The Framework of tm-OBIE

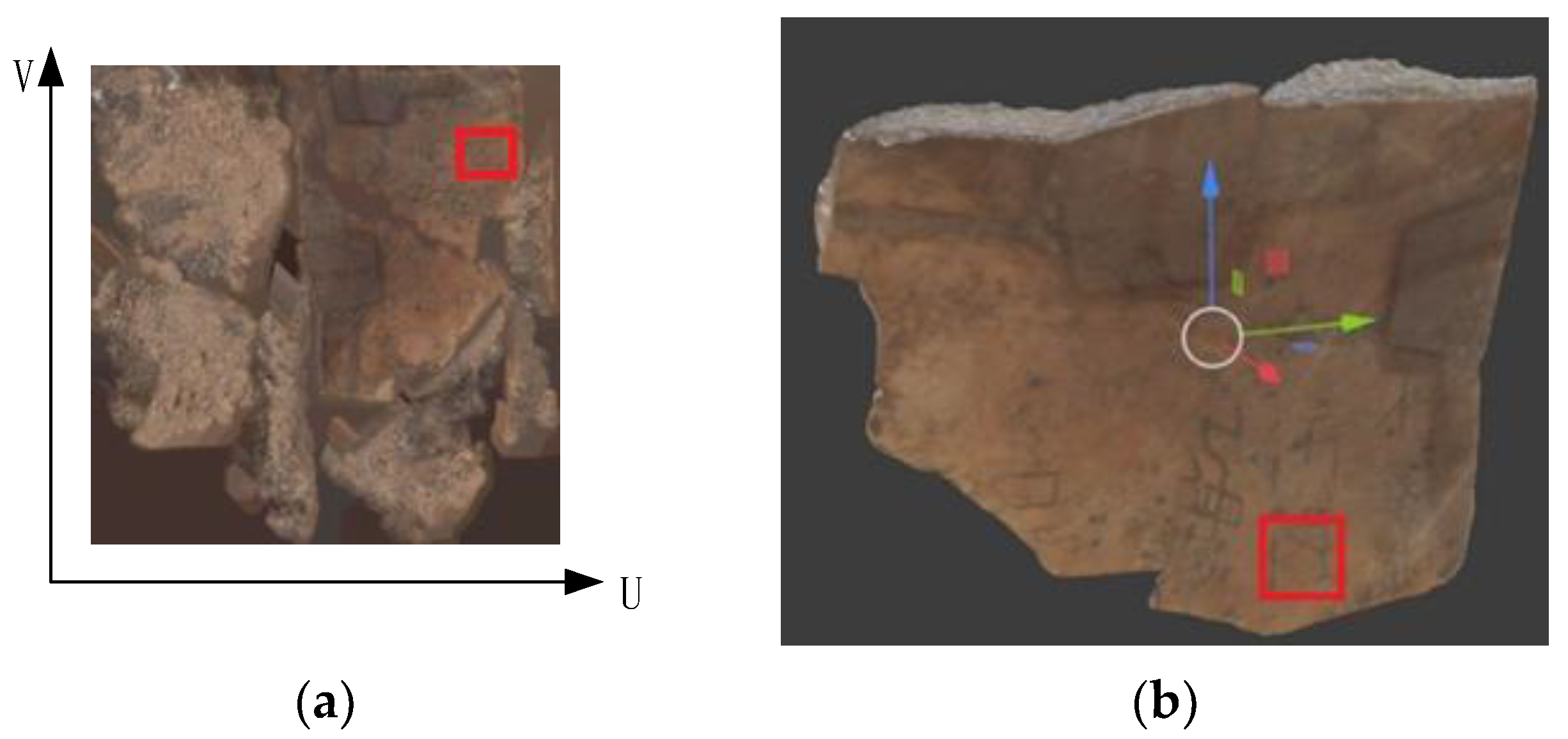

3.3. 3D-OBC Plane Extraction Based on Inverse Mapping Method

| Algorithm 1: The Inverse Mapping Algorithm |
|---|
| Input: The triangular mesh set, and the coordinates of the two diagonal vertices of the bounding box that annotates the OBC in the texture image. |
| Output: The set containing the triangular meshes of the 3D-OBC scratches with backgrounds. |
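
The input and output of Algorithm 1 suggest a selection step that maps a 2D bounding box on the texture image back to the mesh faces it covers. The following is a minimal sketch of that idea, not the authors' procedure: the `Triangle` type, the `inverse_map` function, and the rule "keep a face only if all three of its texture coordinates fall inside the box" are assumptions made for illustration, as is the convention that texture coordinates are given in the same pixel units as the box.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point3D = Tuple[float, float, float]
UV = Tuple[float, float]


@dataclass
class Triangle:
    """Hypothetical mesh face: three 3D vertices plus their texture coordinates."""
    vertices: Tuple[Point3D, Point3D, Point3D]
    uvs: Tuple[UV, UV, UV]  # assumed to be in texture-image pixel units


def inverse_map(mesh: List[Triangle], corner_a: UV, corner_b: UV) -> List[Triangle]:
    """Return the faces whose texture coordinates all lie inside the 2D box.

    corner_a and corner_b are the two diagonal corners of the bounding box;
    they may be given in either order.
    """
    lo_u, hi_u = sorted((corner_a[0], corner_b[0]))
    lo_v, hi_v = sorted((corner_a[1], corner_b[1]))

    selected = []
    for tri in mesh:
        # Assumed criterion: every vertex of the face maps inside the box.
        if all(lo_u <= u <= hi_u and lo_v <= v <= hi_v for u, v in tri.uvs):
            selected.append(tri)
    return selected
```

A looser criterion (for example, keeping a face when any one vertex falls inside the box) would retain more background around each character; which rule is appropriate depends on how the texture was unwrapped.
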

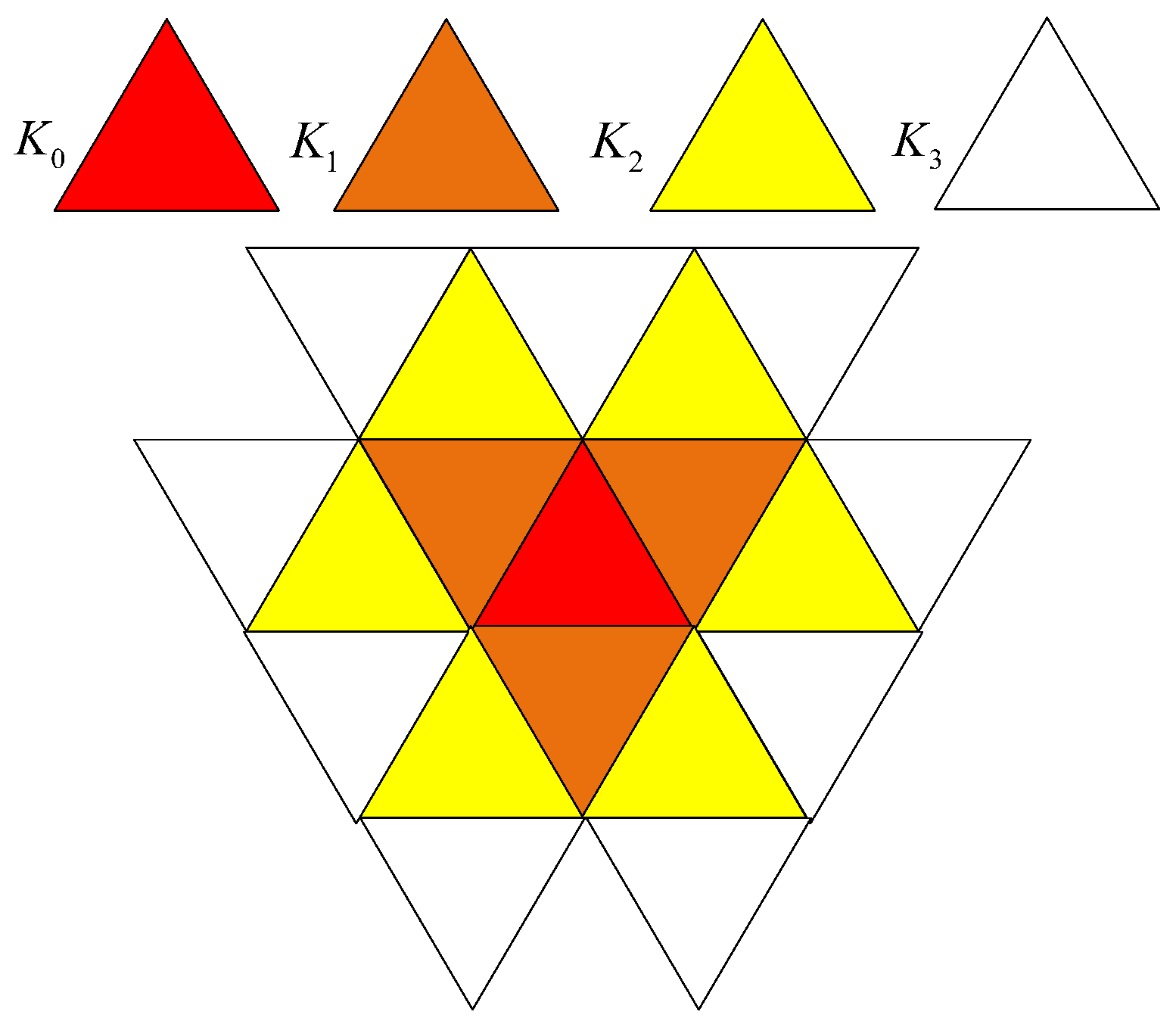

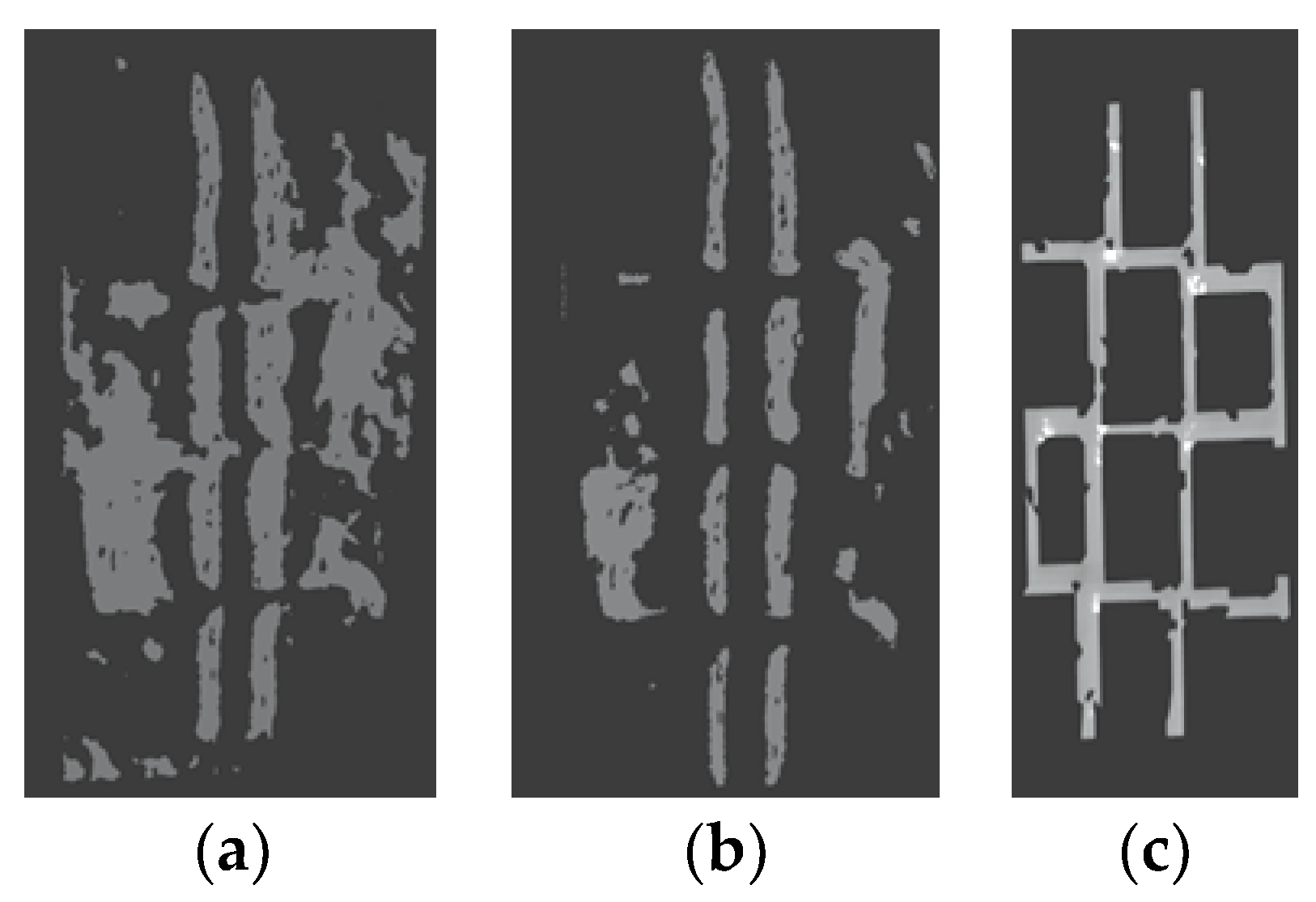

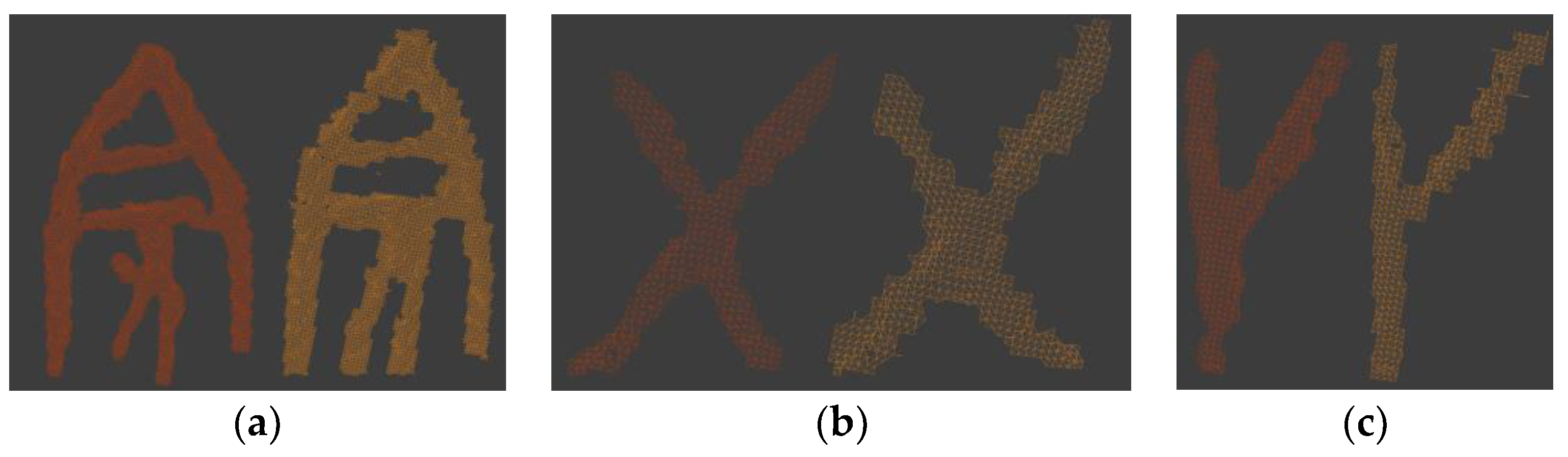

3.4. Scratch Extraction Based on Distance

| Algorithm 2: The Scratch Extraction Algorithm Based on Distance |
|---|
| Input: The hyperparameters; the set of triangular meshes corresponding to each 3D-OBC. |
| Output: The set containing the triangular meshes of the 3D-OBC scratches. |
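
To make the idea of distance-based scratch extraction concrete, here is a deliberately simplified sketch. It is not Algorithm 2 itself: the single `depth_threshold` parameter, the use of the median face height as the surface level, and the assumption that each character patch is oriented with the z-axis pointing out of the bone surface are all hypothetical choices made for illustration.

```python
from statistics import median
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]
Face = Tuple[Point3D, Point3D, Point3D]


def centroid_height(face: Face) -> float:
    """Mean z of the three vertices (z is assumed to point out of the surface)."""
    return sum(vertex[2] for vertex in face) / 3.0


def extract_scratches(
    patches: Sequence[Sequence[Face]], depth_threshold: float
) -> List[List[Face]]:
    """For each character patch, keep the faces that lie deeper than
    depth_threshold below the patch's median face height."""
    results = []
    for patch in patches:
        if not patch:
            results.append([])
            continue
        heights = [centroid_height(face) for face in patch]
        surface_level = median(heights)  # assumed proxy for the uncarved surface
        carved = [
            face
            for face, height in zip(patch, heights)
            if surface_level - height > depth_threshold
        ]
        results.append(carved)
    return results
```

The median is used here because carved grooves typically cover a minority of a character's bounding region, so it tends to sit on the uncarved surface; on a strongly curved bone fragment a fitted reference plane or local neighbourhood distance would be a more robust baseline.
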

4. Experiments and Analysis

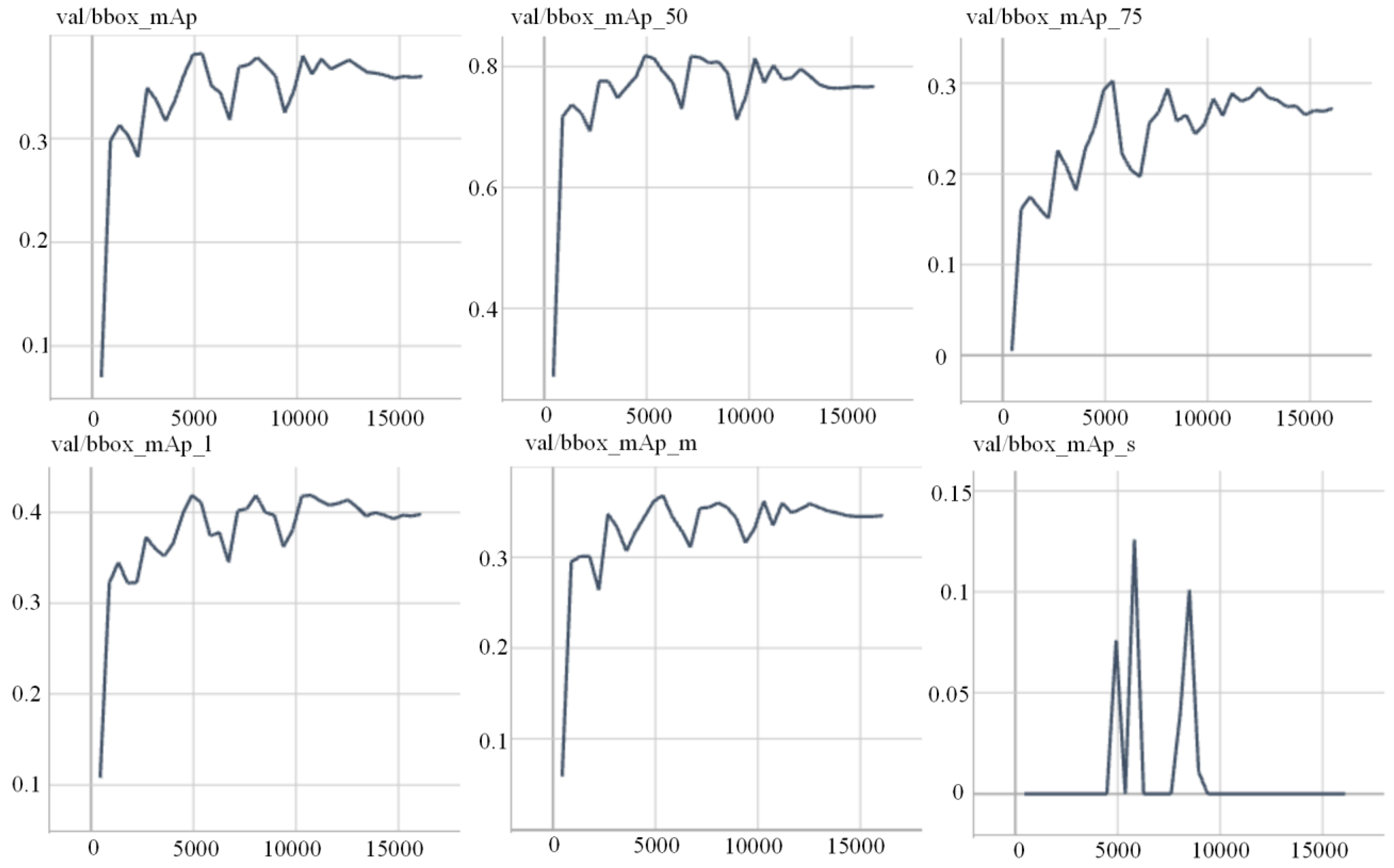

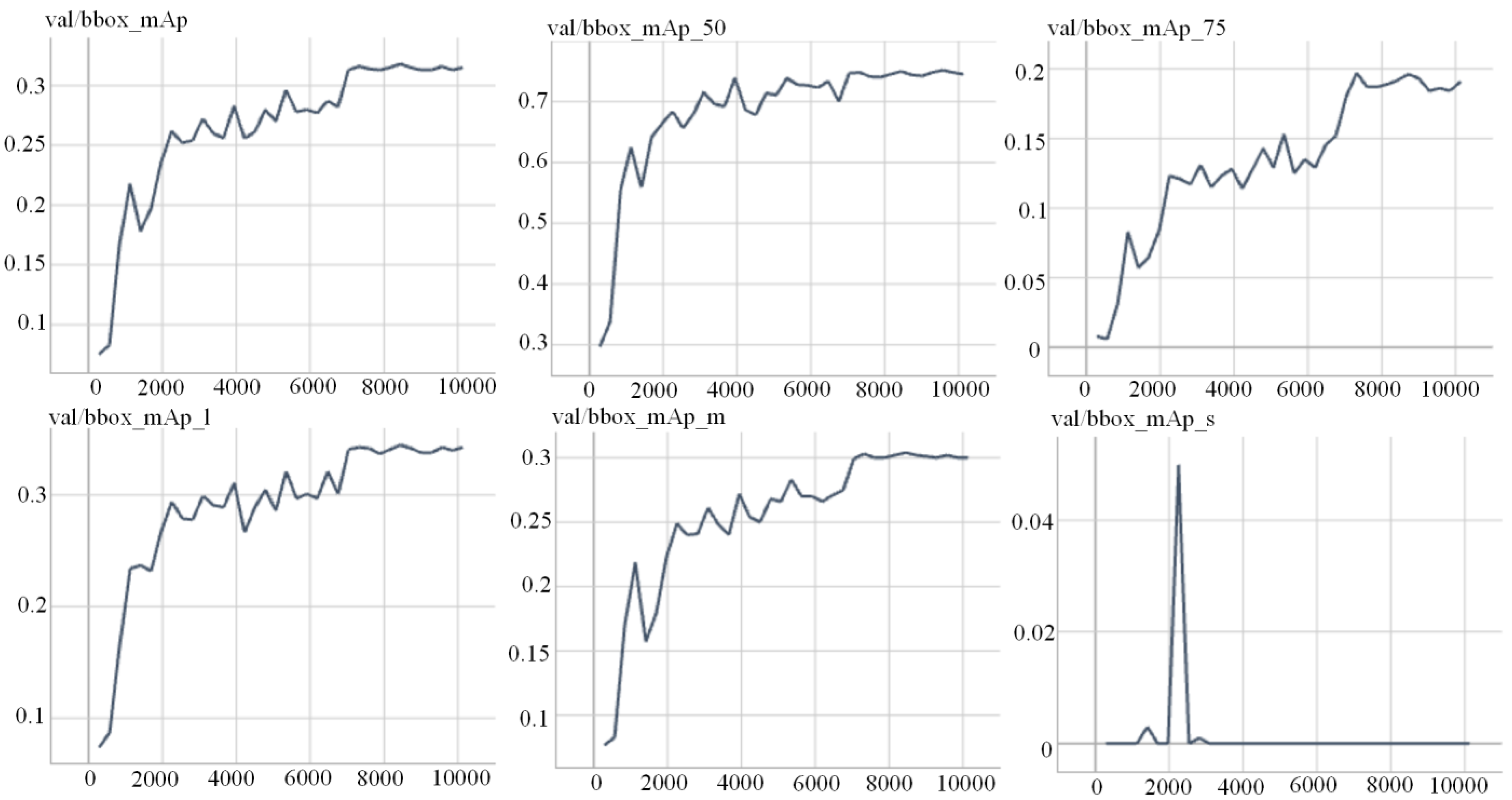

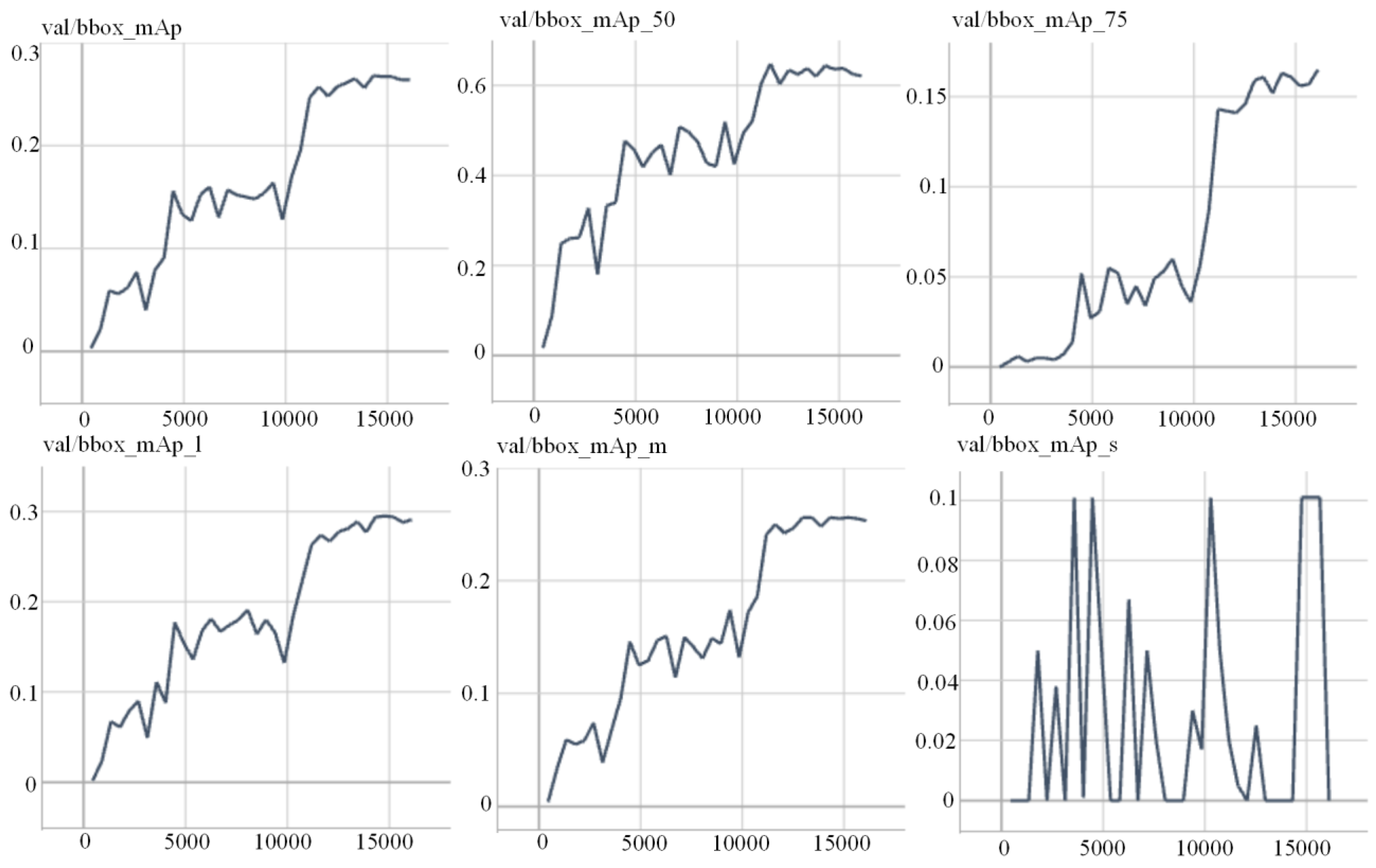

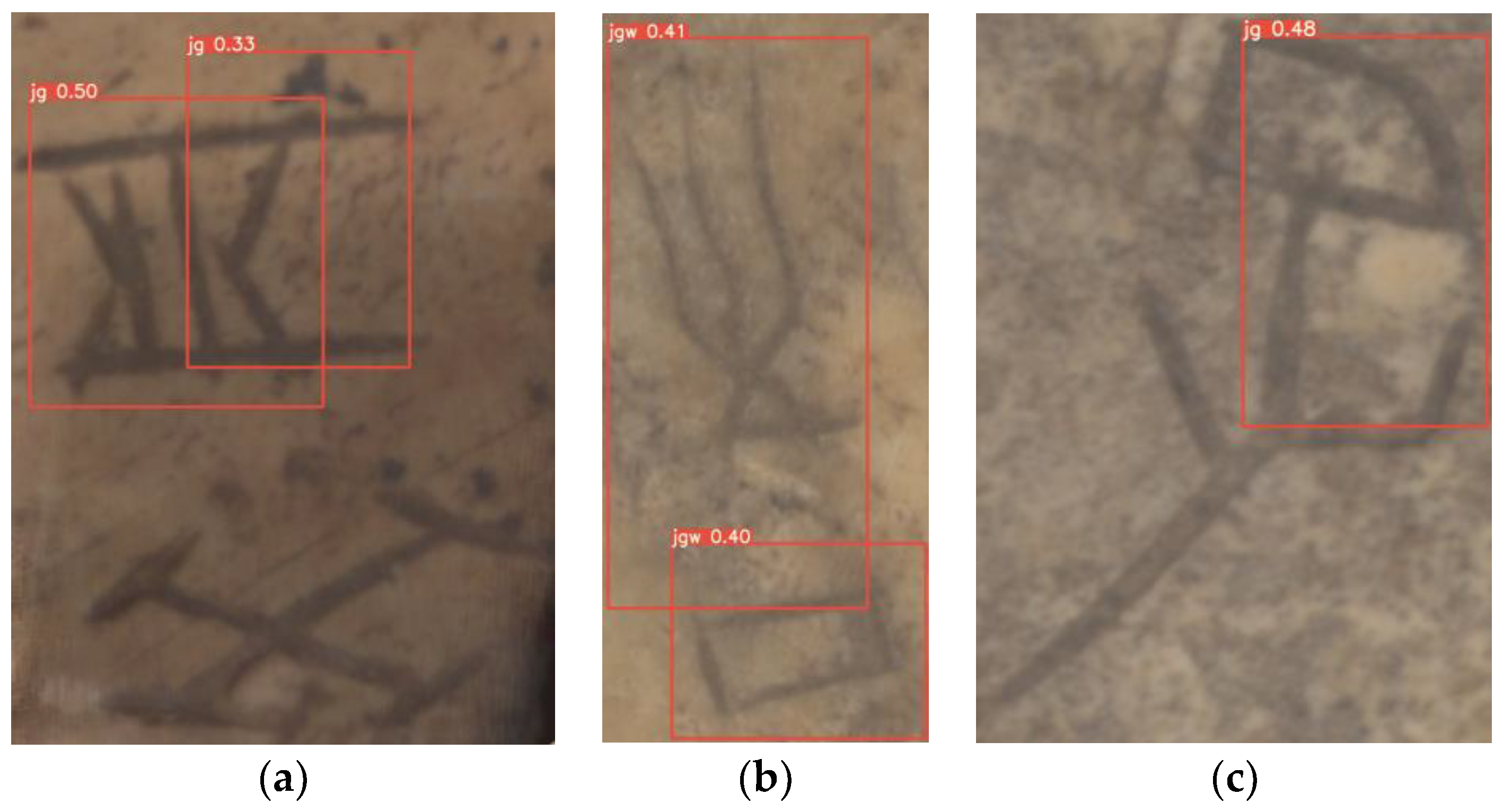

4.1. OBC Detection Experiment

4.2. Inverse Mapping Experiment

4.3. Scratch Extraction Experiment

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Software | Version |
|---|---|
| OS | Ubuntu v20.04 |
| Python IDE | PyCharm v2021.3 |
| Deep learning framework | PyTorch v1.7, Apex, CUDA 10.1, cuDNN v8.0.5 |
| CV library | OpenCV v3.7 |

| Hardware | Specification |
|---|---|
| CPU | Intel(R) Xeon(R) E5-2683 v3 @ 2.00 GHz |
| RAM | DDR4 2133 MHz × 4 (32 GB in total) |
| Graphics cards | Nvidia(R) RTX 3090 × 4 (each with 24 GB VRAM) |
| Hard disk | Intel(R) SSDSC2KW512G8 (512 GB) |

| Model | Batch Size | Initial Learning Rate | Weight Decay |
|---|---|---|---|
| Faster R-CNN | 8 | $10^{-4}$ | $5 \times 10^{-2}$ |
| SSD | 16 | $10^{-4}$ | $5 \times 10^{-2}$ |
| YOLO v7 | 8 | $10^{-4}$ | $5 \times 10^{-2}$ |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guo, A.; Zhang, Z.; Gao, F.; Du, H.; Liu, X.; Li, B. Applications of Convolutional Neural Networks to Extracting Oracle Bone Inscriptions from Three-Dimensional Models. Symmetry 2023, 15, 1575. https://doi.org/10.3390/sym15081575