NOMA Resource Allocation Method Based on Prioritized Dueling DQN-DDPG Network

Abstract

1. Introduction

2. Materials and Methods

2.1. System Model

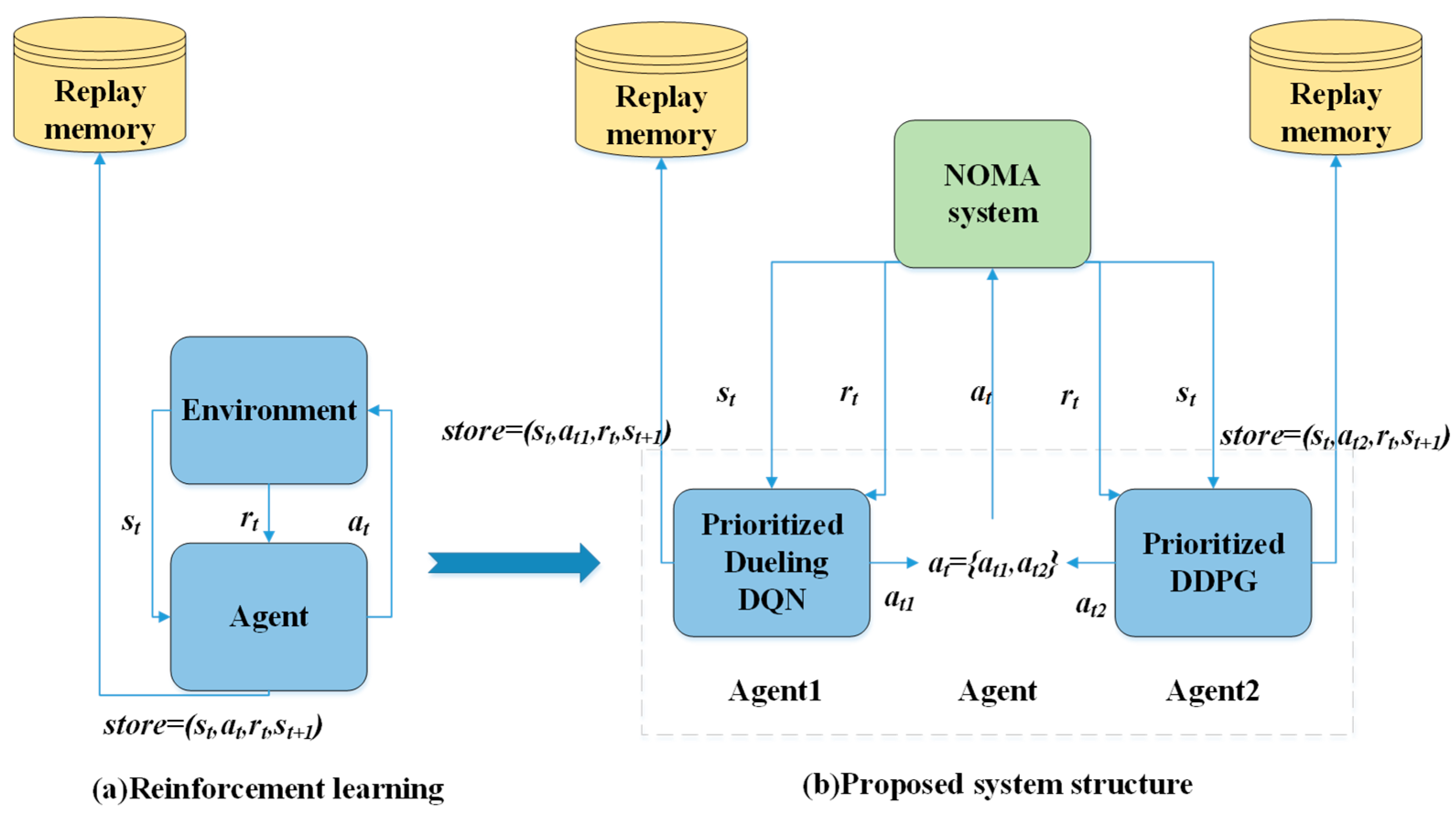

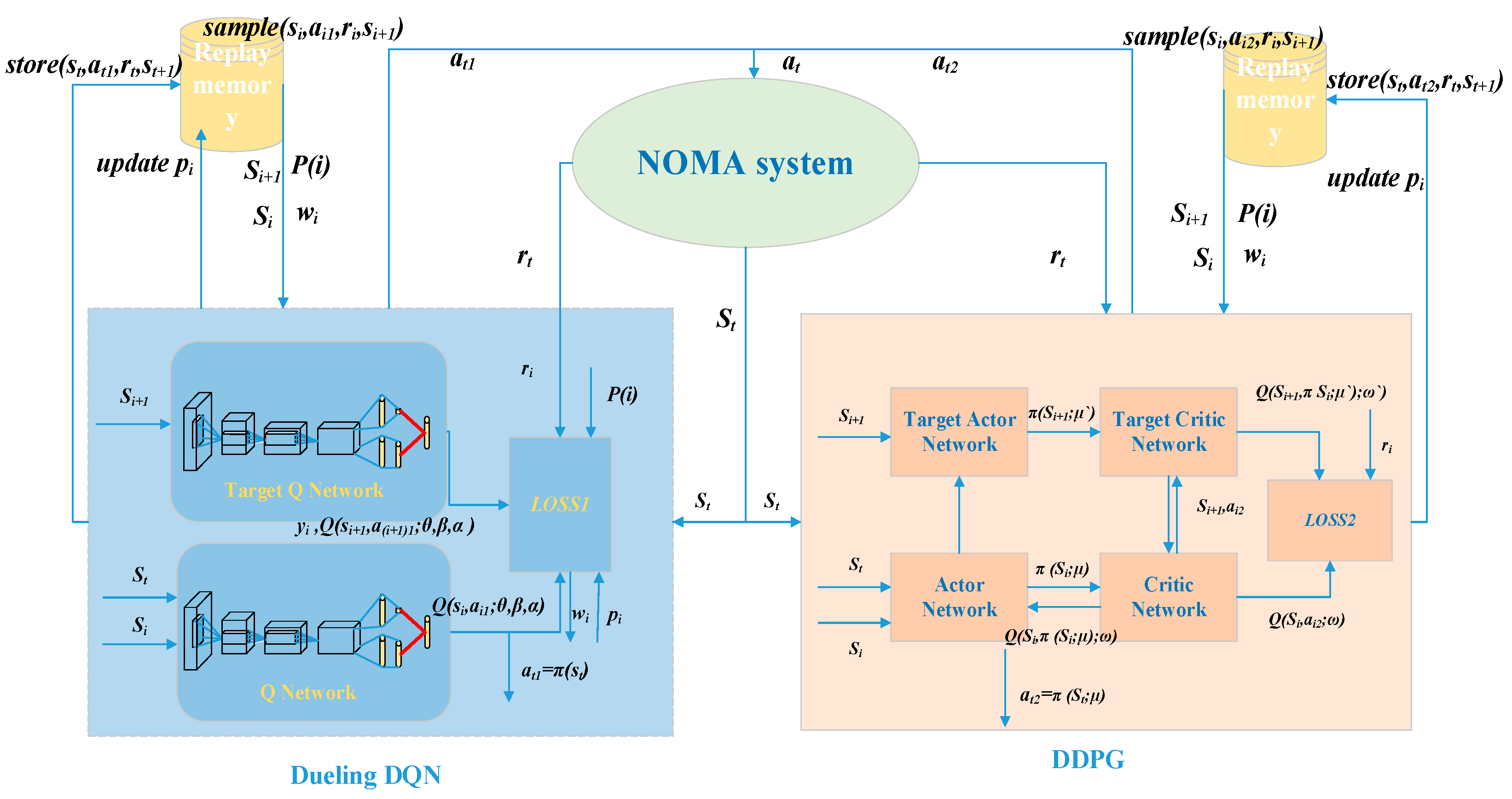

2.2. Resource Allocation Method Based on Prioritized Dueling DQN-DDPG

2.2.1. Resource Allocation Network Architecture

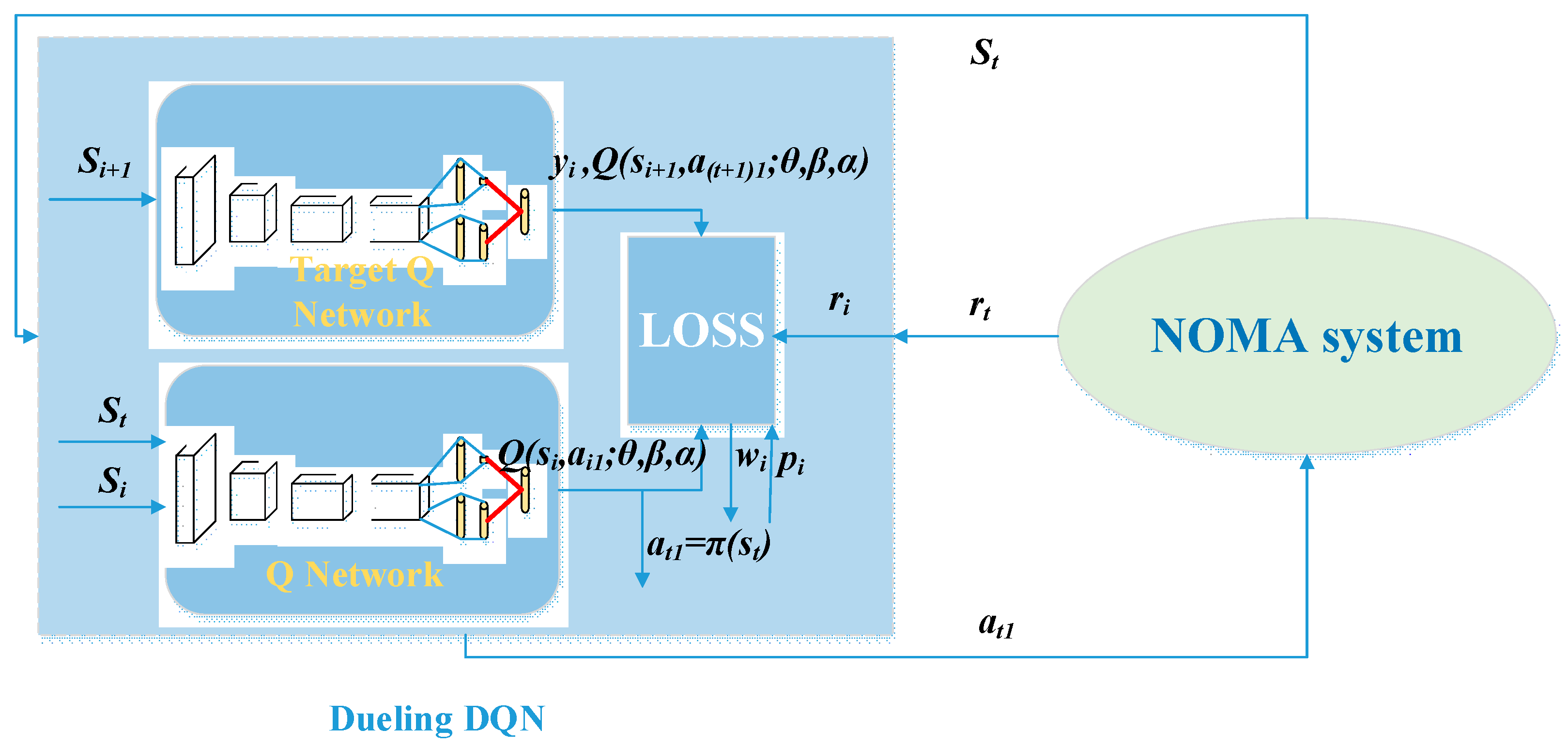

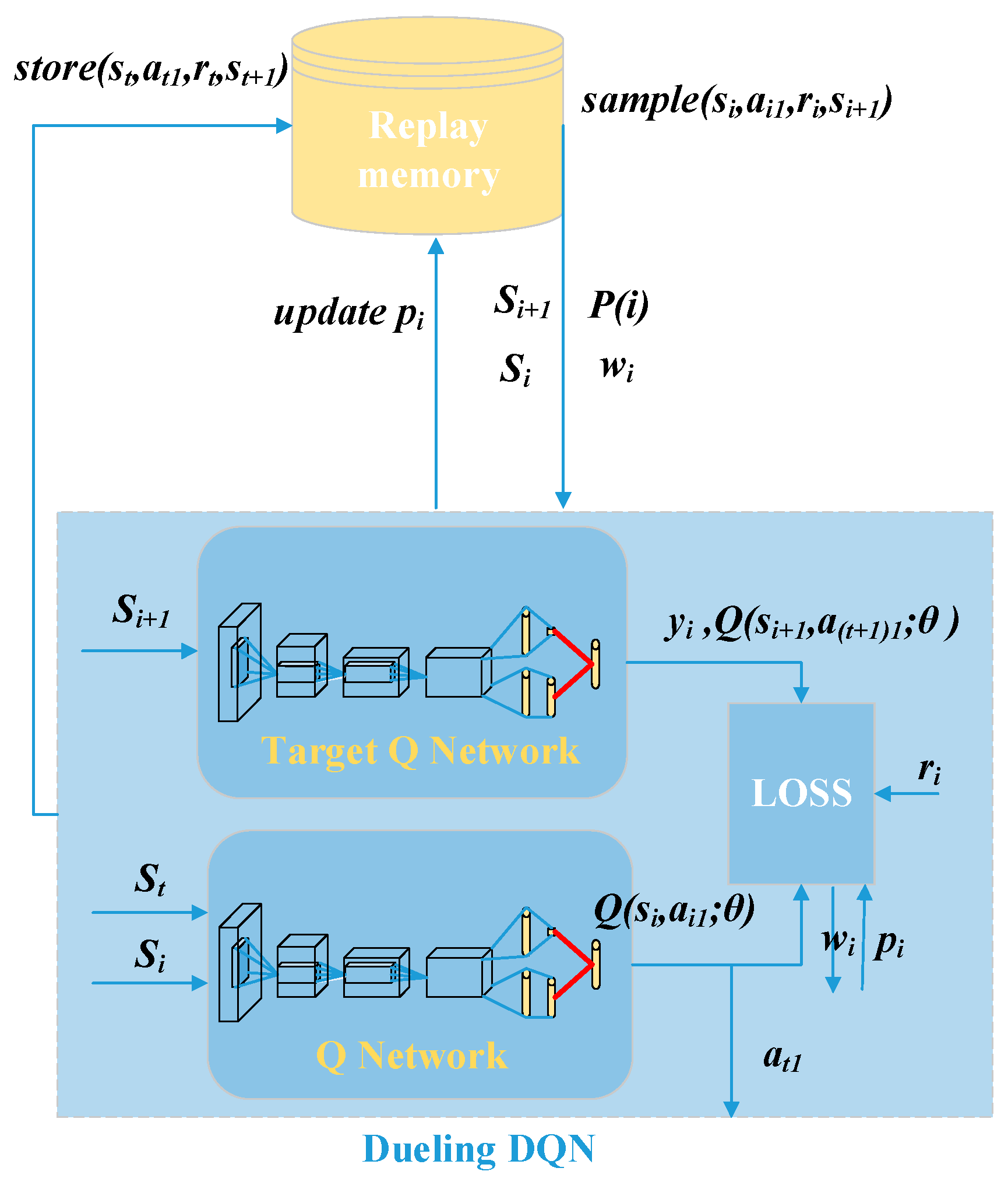

2.2.2. User Grouping Based on Dueling DQN

Dueling DQN-Based User Grouping Network

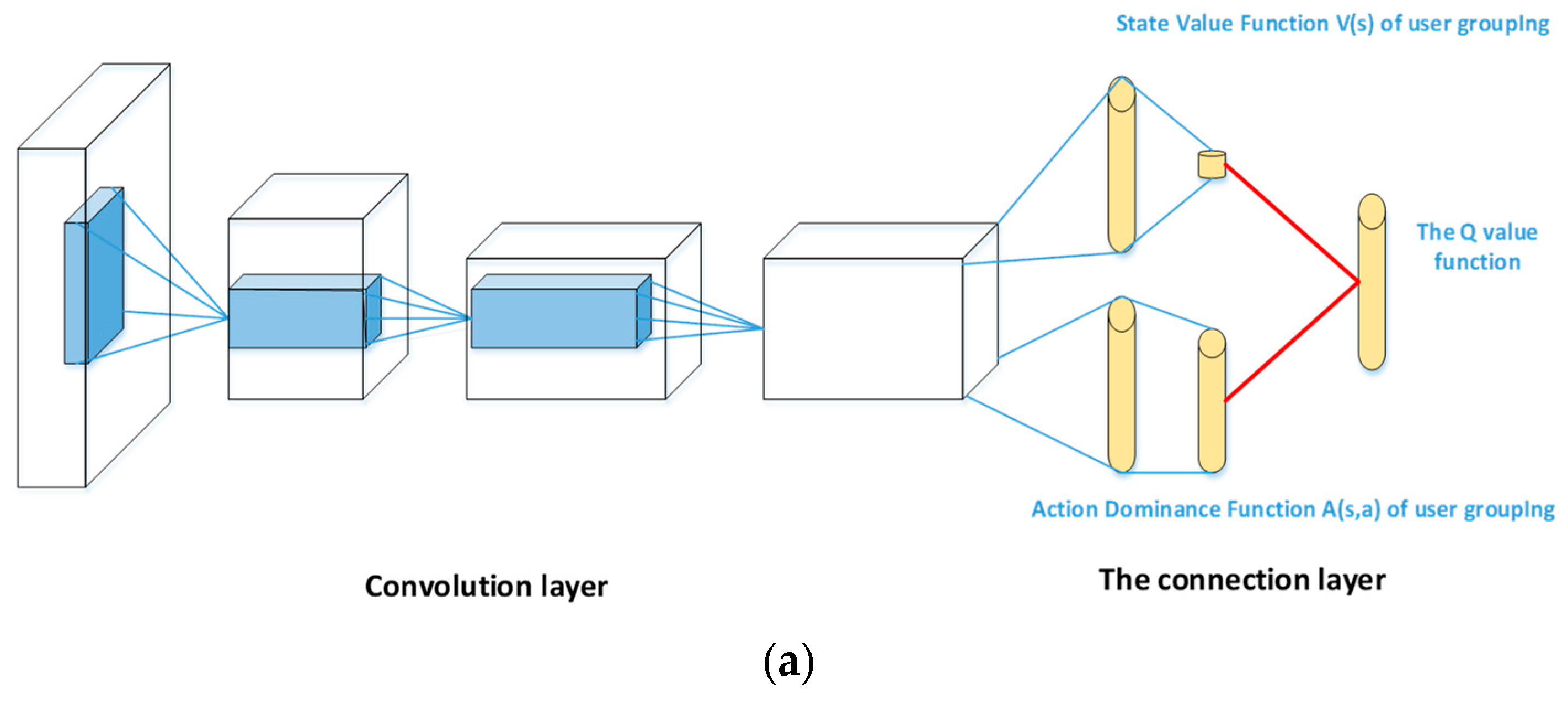

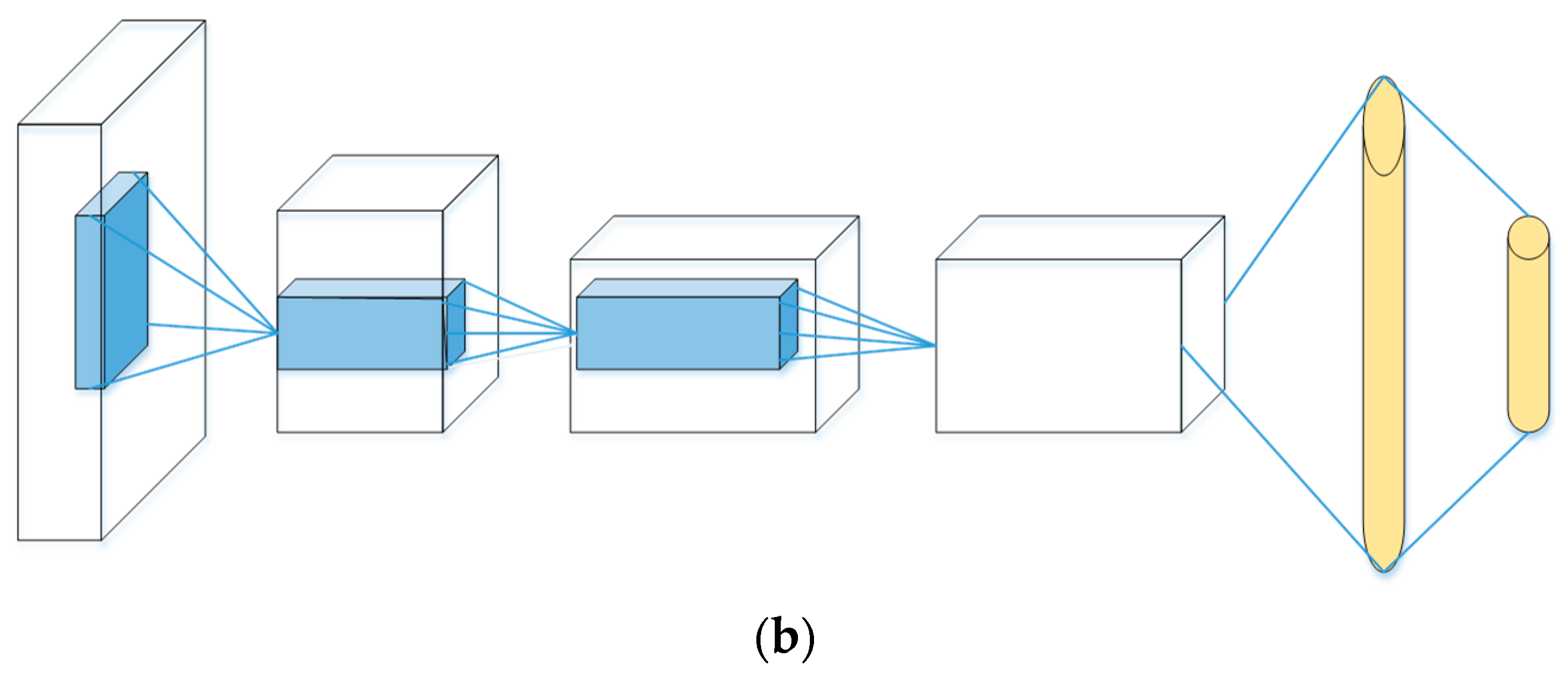

Dueling DQN Network Structure

- (1) It can generalize learning across all possible actions in the environment without changing the underlying reinforcement learning algorithm.

- (2) Because it learns which states matter most to the agent, it does not need to learn the effect of every action in every state, which lets it identify the best action quickly.

- (3) From a network training perspective, less data is required, which makes the network simpler to train.

- (4) Training the state-value and advantage functions separately makes it easier to preserve the ordering between actions. When the value function is decomposed, each component has a concrete meaning and their combination is uniquely determined, which makes network learning more precise and robust.
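The decomposition into a state-value stream and an advantage stream can be illustrated with a small sketch. It assumes the mean-centred aggregation commonly used for dueling networks, Q(s, a) = V(s) + A(s, a) − mean over a' of A(s, a'); the function names and the numbers are hypothetical and are not taken from the paper.

```python
from typing import List


def dueling_q_values(state_value: float, advantages: List[float]) -> List[float]:
    """Combine V(s) and A(s, a) into Q(s, a) using mean-centred advantages."""
    mean_advantage = sum(advantages) / len(advantages)
    return [state_value + (a - mean_advantage) for a in advantages]


def greedy_action(q_values: List[float]) -> int:
    """Index of the action with the largest Q-value."""
    return max(range(len(q_values)), key=q_values.__getitem__)


# Hypothetical outputs of the two streams for one state with three actions.
q = dueling_q_values(2.0, [0.5, -0.5, 1.5])
best = greedy_action(q)

# Adding a constant to every advantage leaves Q unchanged, which is why the
# combination of the two streams is uniquely determined.
q_shifted = dueling_q_values(2.0, [10.5, 9.5, 11.5])
```

Subtracting the mean advantage keeps the ordering of the actions intact while removing the ambiguity between the two streams.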

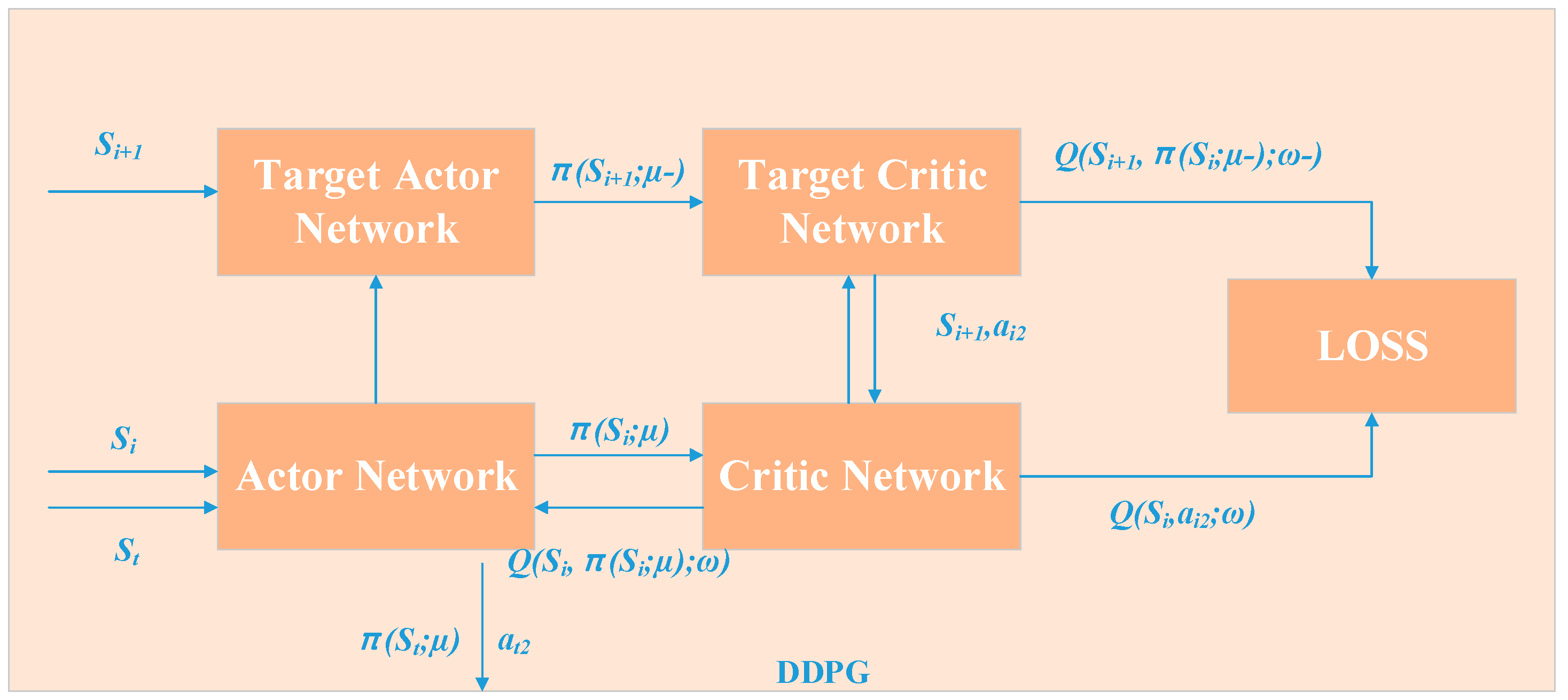

2.2.3. Power Allocation Based on DDPG Network

2.2.4. Prioritized Experience Replay Mechanism

2.2.5. User Grouping and Power Allocation of Prioritized Dueling DQN-DDPG

| Algorithm 1: User grouping and power allocation of Prioritized Dueling DQN-DDPG |
|---|
| Initialize the memory D, the maximum number N of experience samples it stores, and the weight update interval W. |
| Initialize the prediction Q-network and the target Q-network of all Dueling DQN units, together with their weights. |
| Initialize the Actor current network π and the Critic current network Q with random weights; initialize the Actor target network and the Critic target network by copying the parameters of the corresponding current networks. |
| Initialize the state, the action, and the environmental noise. |
| Repeat |
| For each time step of the experience trajectory, from t = 1 to T: |
| The Dueling DQN network chooses an action according to the ε-greedy strategy (a random action, or otherwise the action with the largest Q-value), and obtains the reward and the next state. |
| Save the experience sample (state, action, reward, next state) to the memory. |
| Sample data from the memory according to priority. |
| Calculate the target value of each state; the target network Q updates the Q value using the reward obtained after the action is performed. |
| Calculate the target value of the target Q-network in the Dueling DQN network, the TD error of the samples, and the loss function. |
| Calculate the target Q value of the Critic target network and the TD error of the samples, and obtain the loss function. |
| Train on the samples through continuous parameter updates to find the appropriate user grouping and power allocation. |
| Using the calculated loss functions, update all parameters, recalculate the TD errors, and update the priority of every sample according to its TD error. |
| Update the weights of the Dueling DQN by minimizing its loss function. |
| Update the weights of the Critic current network in DDPG by minimizing its loss function. |
| Update the policy parameters of the Actor current network in DDPG using the sampled policy gradient. |
| Every W time steps, update the weights of the target Q-network with the weights of the prediction Q-network. |
| Every W time steps, update the parameters of the Actor target network from the Actor current network, and the parameters of the Critic target network from the Critic current network. |
| End for |
| End repeat |
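The priority-based sampling and the priority update in Algorithm 1 can be sketched as follows. This is a minimal illustration that assumes the proportional variant of prioritized experience replay, with priority |TD error| + eps, sampling probability proportional to priority^alpha, and importance weights normalized by the batch maximum. The class name and the values of `alpha`, `beta`, and `eps` are hypothetical, and the paper's exact priority formula may differ.

```python
import random
from typing import Any, List, Sequence, Tuple


class PrioritizedReplay:
    """Fixed-capacity memory that samples transitions by priority (assumed form)."""

    def __init__(self, capacity: int, alpha: float = 0.6, eps: float = 1e-6):
        self.capacity = capacity   # maximum number of stored samples (N)
        self.alpha = alpha         # how strongly priorities shape sampling
        self.eps = eps             # keeps every priority strictly positive
        self.data: List[Any] = []
        self.priorities: List[float] = []
        self.pos = 0               # next slot to overwrite once full

    def add(self, transition: Any) -> None:
        # New samples get the current maximum priority so they are seen soon.
        max_priority = max(self.priorities, default=1.0)
        if len(self.data) < self.capacity:
            self.data.append(transition)
            self.priorities.append(max_priority)
        else:
            self.data[self.pos] = transition
            self.priorities[self.pos] = max_priority
        self.pos = (self.pos + 1) % self.capacity

    def probabilities(self) -> List[float]:
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        return [s / total for s in scaled]

    def sample(self, batch_size: int, beta: float = 0.4,
               rng: Any = random) -> Tuple[List[int], List[Any], List[float]]:
        probs = self.probabilities()
        indices = rng.choices(range(len(self.data)), weights=probs, k=batch_size)
        n = len(self.data)
        # Importance weights correct the bias introduced by non-uniform sampling.
        weights = [(n * probs[i]) ** (-beta) for i in indices]
        max_weight = max(weights)
        return indices, [self.data[i] for i in indices], [w / max_weight for w in weights]

    def update(self, indices: Sequence[int], td_errors: Sequence[float]) -> None:
        # After a training step, priorities follow the recalculated TD errors.
        for i, delta in zip(indices, td_errors):
            self.priorities[i] = abs(delta) + self.eps


# Usage with placeholder transitions (state, action, reward, next_state).
memory = PrioritizedReplay(capacity=4)
for t in range(4):
    memory.add((t, 0, 0.0, t + 1))
memory.update([0, 1, 2, 3], [0.1, 0.1, 0.1, 2.0])
indices, batch, weights = memory.sample(batch_size=2, rng=random.Random(0))
```

In this sketch the sample with the largest TD error is drawn most often, and its loss contribution is scaled down by the smallest importance weight.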

3. Results and Discussion

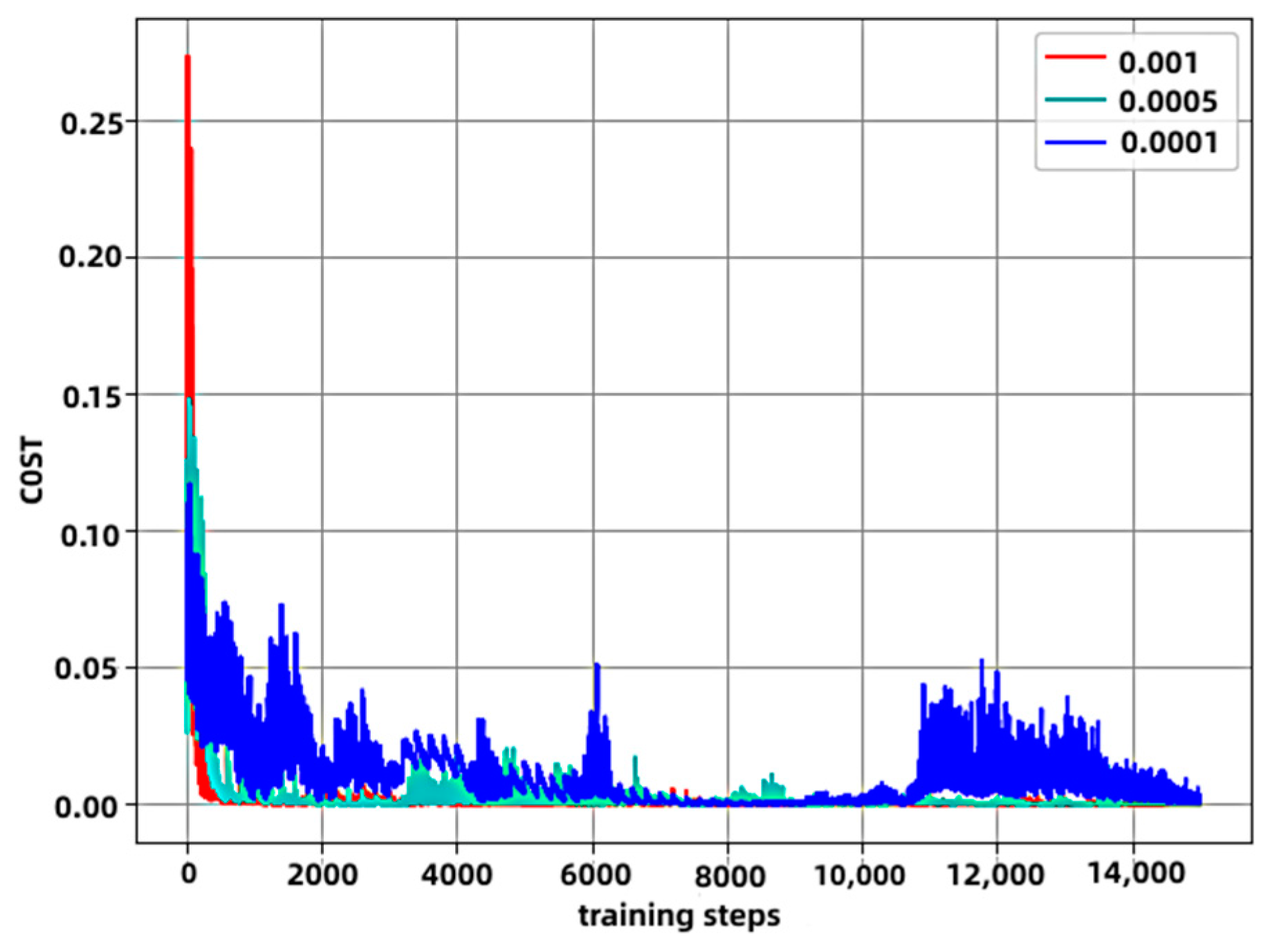

3.1. Convergence of the Proposed Algorithm

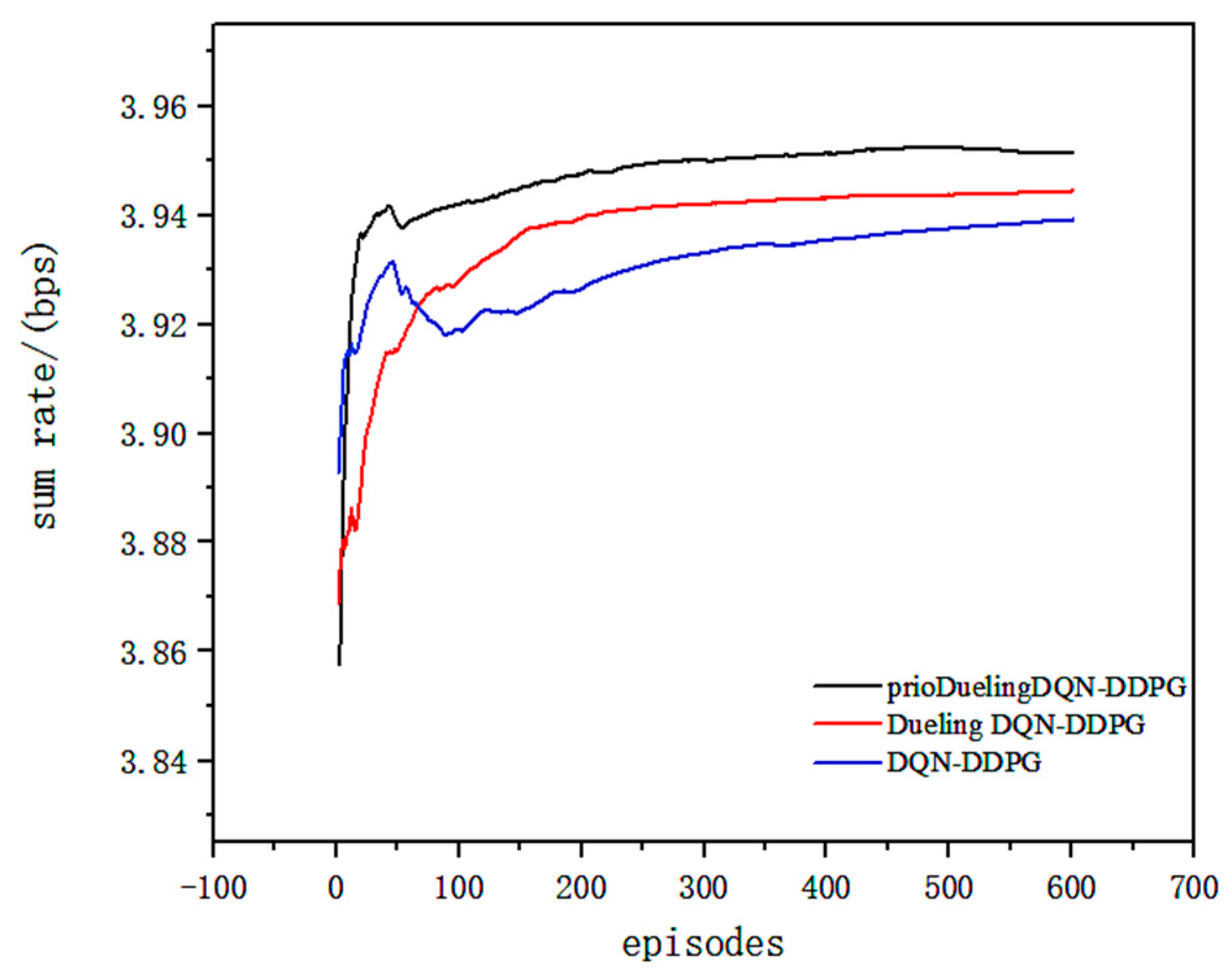

3.2. Average Sum Rate Performance of the Proposed Algorithm

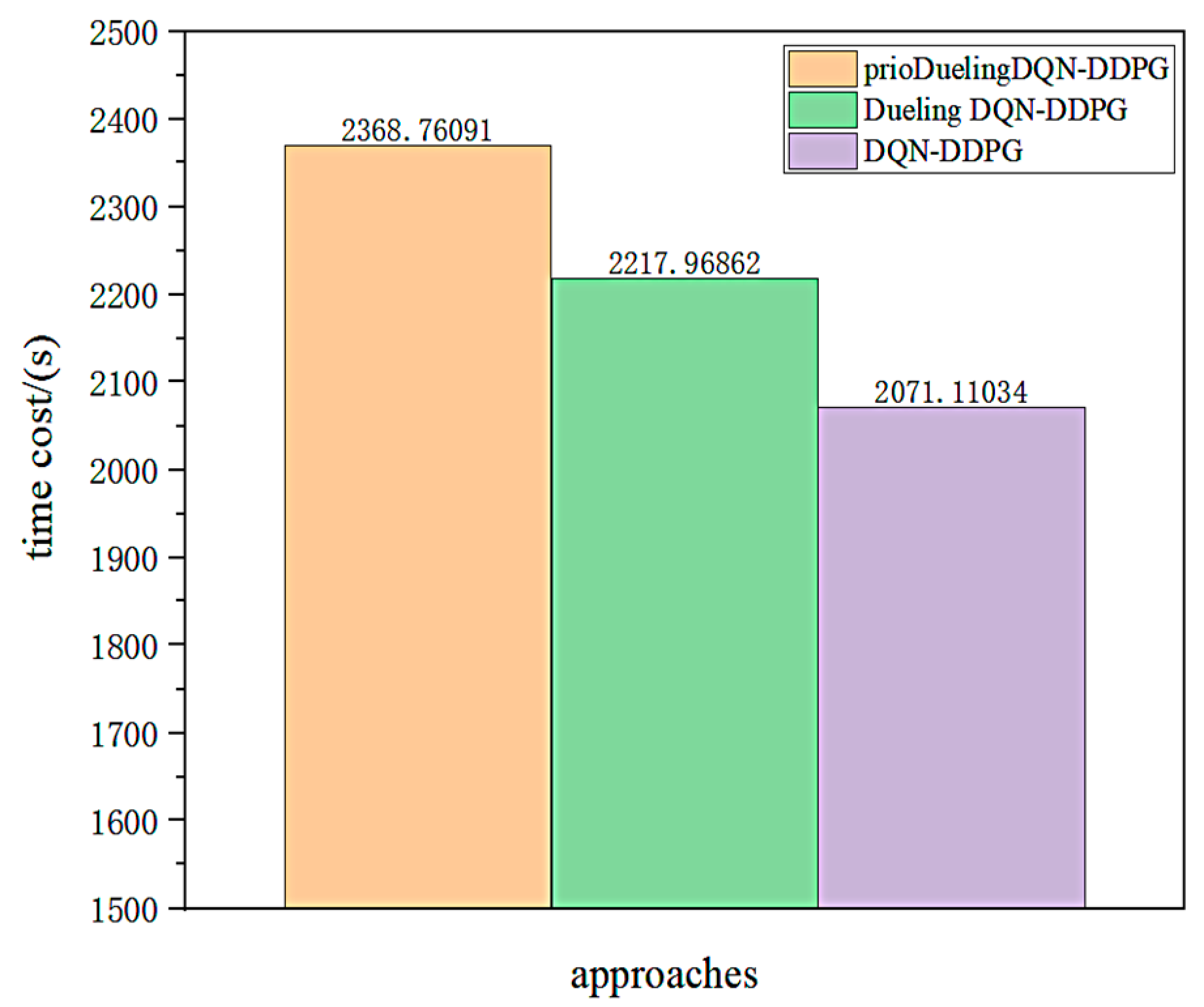

3.3. Computational Complexity Analysis

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Parameter | Value |
|---|---|
| Number of users | 4 |
| Cell radius | 500 m |
| Path loss factor | 3 |
| Number of samples | 64 |
| Noise power density | −110 dBm/Hz |
| Minimum power | 3 dBm |
| Total system bandwidth | 10 MHz |
| Discount factor γ | 0.9 |
| Greedy choice strategy probability ς | 0.9 |
| Algorithm learning rate | 0.001 |
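As a worked unit conversion for the parameters above, the sketch below turns the noise power density and the total system bandwidth into a noise power, and the minimum power into watts. It assumes the noise is integrated over the full 10 MHz; if the bandwidth is divided among subchannels, the per-subchannel noise power would be correspondingly lower. The helper names are hypothetical.

```python
import math


def noise_power_dbm(density_dbm_per_hz: float, bandwidth_hz: float) -> float:
    """Noise power in dBm for a flat noise density over the given bandwidth."""
    return density_dbm_per_hz + 10.0 * math.log10(bandwidth_hz)


def dbm_to_watt(power_dbm: float) -> float:
    """Convert a power level from dBm to watts."""
    return 10.0 ** ((power_dbm - 30.0) / 10.0)


# Values taken from the parameter table.
NOISE_DENSITY_DBM_PER_HZ = -110.0
BANDWIDTH_HZ = 10e6
MIN_POWER_DBM = 3.0

noise_dbm = noise_power_dbm(NOISE_DENSITY_DBM_PER_HZ, BANDWIDTH_HZ)
noise_watt = dbm_to_watt(noise_dbm)
min_power_watt = dbm_to_watt(MIN_POWER_DBM)
```

Under this assumption the noise power comes to about −40 dBm (roughly 10⁻⁷ W), and the 3 dBm minimum power corresponds to about 2 mW.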

| Number | DQN-DDPG | Prioritized Dueling DQN-DDPG | Percentage Increase in Time Complexity |
|---|---|---|---|
| 1 | 2355.0431316 s | 2724.8576722 s | 15.703% |
| 2 | 2074.8412441 s | 2376.3507379 s | 14.531% |
| 3 | 2021.6223315 s | 2280.1505739 s | 12.788% |
| 4 | 2042.1567555 s | 2304.2756512 s | 12.835% |
| 5 | 2006.6152422 s | 2290.4872706 s | 14.146% |
| 6 | 2020.9810086 s | 2323.9442205 s | 14.990% |
| 7 | 2031.1689703 s | 2305.3912113 s | 13.500% |
| 8 | 2011.4987920 s | 2276.6919056 s | 13.159% |
| 9 | 2103.3444909 s | 2434.7927646 s | 15.758% |
| 10 | 2043.8314553 s | 2370.6670829 s | 15.991% |
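The percentage column above appears to be the relative increase in running time of Prioritized Dueling DQN-DDPG over DQN-DDPG. A minimal sketch of that arithmetic, assuming the formula (proposed − baseline) / baseline × 100 and using the first three rows of the table, is shown below; the function name is hypothetical.

```python
from typing import List, Tuple


def percent_increase(baseline_s: float, proposed_s: float) -> float:
    """Relative increase of the proposed running time over the baseline, in percent."""
    return (proposed_s - baseline_s) / baseline_s * 100.0


# (DQN-DDPG, Prioritized Dueling DQN-DDPG) running times in seconds, rows 1 to 3.
runs: List[Tuple[float, float]] = [
    (2355.0431316, 2724.8576722),
    (2074.8412441, 2376.3507379),
    (2021.6223315, 2280.1505739),
]

increases = [percent_increase(baseline, proposed) for baseline, proposed in runs]
```

The computed values agree with the tabulated percentages to within rounding in the last digit.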

| Number | DQN-DDPG (300 TS) | Prioritized Dueling DQN-DDPG (150 TS) | (Prioritized Dueling DQN-DDPG / DQN-DDPG) × 100% |
|---|---|---|---|
| 1 | 597.822680 s | 212.271871 s | 35.51% |
| 2 | 617.336156 s | 228.685814 s | 38.25% |
| 3 | 607.584831 s | 219.122785 s | 36.06% |
| 4 | 582.458203 s | 209.875624 s | 36.03% |
| 5 | 584.686530 s | 209.240106 s | 36.01% |
| 6 | 586.673505 s | 213.803065 s | 36.44% |
| 7 | 561.049539 s | 206.073456 s | 36.73% |
| 8 | 568.325278 s | 206.477650 s | 36.33% |
| 9 | 551.649149 s | 227.379164 s | 41.22% |
| 10 | 558.230064 s | 185.991332 s | 33.32% |
| Average value | - | - | 36.58% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Y.; Li, Y.; Li, L.; He, M. NOMA Resource Allocation Method Based on Prioritized Dueling DQN-DDPG Network. Symmetry 2023, 15, 1170. https://doi.org/10.3390/sym15061170
