1. Introduction

Global terrorist activities are on the rise, and it is easy for extremist actions to proliferate. Terrorism is a serious threat to the civilized world. To deal with explosives quickly and efficiently, various explosive ordnance disposal (EOD) equipment has been developed, such as the Andros series EOD robot [1], RMI-9WT [2], and Packbot-EOD [3]. EOD robots can replace people in carrying out extremely hazardous tasks such as detecting, inspecting, grabbing, and transporting suspicious explosives in a dangerous environment. Many countries have developed different kinds of EOD robots. For example, the British Morfax corporation has invented an EOD robot called Wheelbarrow [4]. It is one of the most famous EOD robots in the world, possessing good vehicle mobility and explosive disposal performance. Meanwhile, American Remotec has developed an EOD robot called Andros F6A [5], which is used to help the police complete security tasks. It consists of a mobile carrier and a manipulator. The carrier is an articulated walking chassis that helps the robot adapt to complex terrain such as sloping, sandy, or rugged surfaces. The Chinese Shenyang Institute of Automation has invented the Lingxi-B EOD robot [6], which has three cameras; EOD personnel can accurately control the robot over long distances through wired or wireless operation.

The path planning problem has attracted many researchers’ attention, and it is currently one of the most active topics in EOD robot control. The main goal of path planning is to construct a collision-free path that allows the robot to move from the start position to the goal position in a given environment. Over the past few decades, a considerable number of path planning algorithms have been proposed, such as artificial potential fields (APF) [7], the genetic algorithm (GA) [8,9], the harmony search algorithm (HSA) [10], the A* algorithm [10,11,12], particle swarm optimization (PSO) [13,14], ant colony optimization (ACO) [15], the rapidly exploring random tree (RRT) [16,17], and neural networks [18]. Pak et al. [19] proposed a path planning algorithm for smart farms by using simultaneous localization and mapping (SLAM) technology. In the study, A*, D*, RRT, and RRT* were compared on a short-distance path planning problem with static obstacles, a longest-distance path planning problem with static obstacles, and a path planning problem with dynamic obstacles. The results showed that the A* algorithm is suitable for solving the path planning problem. Zeyu Tian et al. [20] introduced a SLAM construction based on the RRT algorithm to solve the path planning problem, which is treated as a partially observable Markov decision process (POMDP). The boundary points are extracted, and the global RRT tree is introduced with an adaptive step. The results show that, in local RRT boundary detection, the tree is reset to accelerate the detection of the boundary points around the robot, and in global RRT boundary detection, the generated RRT tree can continue to grow, so that small corners and the robot’s boundary points can also be detected in the end. An RRT-based path planning method for two-dimensional building environments was proposed by Yiqun Dong et al. [21]. To minimize the planning time, the growth of the RRT tree was biased in more focused ways; the results show that the proposed RRT algorithm is faster than other baseline planners such as RRT, RRT*, A*-RRT, Theta*-RRT, and MARRT.

Symmetry is widely used in path planning. A* is a popular algorithm based on graph search. The method searches from the current node to surrounding nodes; this search process is symmetrical. The A* algorithm [22,23] is one of the best-known heuristic path planning algorithms. It was proposed by Peter Hart et al. in 1968. It is widely used due to its simple principle, high efficiency, and modifiability. It evaluates the path by estimating the cost value of the current node’s extensible nodes in the search area and guides the search toward the goal by selecting the lowest cost among the current node’s adjacent nodes. Although the traditional A* algorithm shows good performance, it may fall into a failed search state, and its speed is slow. Consequently, many scholars have proposed improved algorithms. Ruijun Yang and Liang Cheng [24] proposed an improved A* algorithm with updated weights, which considers the degree of channel congestion in a restaurant using a gray-level model. The results show that the service robot can effectively avoid crowded channels and complete various tasks in a restaurant environment. Anh Vu Le et al. [25] devised a modified A* algorithm for efficient coverage path planning by introducing the zigzag idea into the traditional A* algorithm. The results show that the modified A* algorithm can generate waypoints to cover narrow spaces as well as efficiently maximize the coverage area. Erke Shang et al. [26] proposed a novel A* algorithm that considers evaluation standards, guidelines, and key points. The improved A* algorithm uses a new evaluation standard to measure the performance of many algorithms and selects appropriate parameters for the proposed A* algorithm. The experimental results demonstrated that the algorithm is robust and stable. Gang Tang et al. [27] proposed an improved geometric A* algorithm by introducing a filter function and the cubic B-spline curve. The improved strategy can reduce redundant nodes and effectively counter the problems associated with long distances.

Inspired by nature, PSO [28] was first introduced by Kennedy and Eberhart in 1995. This biologically inspired heuristic algorithm is widely used in path planning [29], complex optimization problems [30], inverse kinematics solutions [31], etc. In particular, the application of PSO in path planning has attracted the attention of many scholars. An improved PSO algorithm was proposed by Yong Zhang et al. [32] to find the shortest and safest path. In their paper, the path planning problem is described as a constrained multiobjective problem. A membership function that considers both the degree of risk and the distance of the path is proposed to resolve the issue. The results showed that the improved algorithm can generate high-quality Pareto optimal paths for robot path planning in an uncertain, dangerous environment. Mihir Kanta Rath and B.B.V.L. Deepak [33] established a PSO-based system architecture to solve the robot path planning problem in a dynamic environment. The main goal of the proposed algorithm is to find the shortest possible path with obstacle avoidance. The simulation showed that the objective function successfully improves the efficiency of path planning in various environments.

Before the EOD robot removes explosives, it is vital to search all the suspicious positions where terrorists may have hidden them. Most studies only consider planning a path from one initial position to one goal position. However, terrorists may hide multiple explosives in different positions, and as the number of goal positions increases, the time needed to compute the path increases. It is particularly important to remove explosives quickly, as they may detonate at any time. Previously proposed algorithms are often time-consuming and easily fall into a local optimum. Thus, the EOD robot needs to plan the shortest path to inspect multiple suspicious positions as quickly as possible. In this study, we focus on the shortest-distance path for traversing multiple goal positions while also optimizing the computation time. The contributions of this study are as follows:

(1) A bidirectional dynamic weighted A* algorithm is proposed to reduce the calculation time. By using the grid method, the environment map is established to introduce the obstacle, initial position, and goal position information. The path planning problem is divided into two subproblems: one is the shortest path between any two positions, and the other is the shortest path for traversing all goal positions. Firstly, to ensure the problem is clearly described, the mathematical model is also presented. Then, we optimize the computation time by improving the A* algorithm with a novel dynamic OpenList cost weight strategy and a bidirectional search strategy. Finally, the adjacent nodes are expanded to 16 to obtain the shortest distance between any two positions. The simulation results of the improved strategies are analyzed and discussed.

(2) A learn-memory (LM) strategy is introduced to the PSO algorithm to enhance the exploration ability. The LM strategy imitates the behaviors of human learning and memory, such as comparison, analysis, association, retention, forgetting–reinforcement, and divergent thinking, to optimize the initial solutions, maintain the diversity of the swarm, improve the quality of solutions, etc. In the proposed algorithm, we obtain new particles by optimizing the nodes within one particle or between particles and by learning existing features or patterns to generate new, better particles.

(3) A swap-sequence strategy is introduced to the PSO algorithm to maintain the diversity of the swarm. The swap-sequence strategy is introduced into the process of forgetting–reinforcement. Using this strategy helps the traditional PSO avoid falling into a local optimum. The performance of the LM-SSPSO algorithm is also compared with that of ACO, Tabu search (TB), swap sequence particle swarm optimization (SSPSO), and several other intelligent algorithms.

(4) The performance of the proposed algorithm is verified. To confirm the effectiveness of the proposed algorithm, different environment maps are also designed and analyzed. Finally, the conclusions of this paper are given.

The remainder of this paper is organized as follows: In Section 2, the environment map for path planning is constructed, and the mathematical model is presented. Then, in Section 3, an improved A* algorithm for the shortest distance path between any two goal positions and the computation time optimization are described in detail. In Section 4, an LM-SSPSO algorithm is proposed for optimizing the path of traversing all goal positions. In Section 5, the experiments are designed and compared to further verify the effectiveness of the proposed approach. Finally, the conclusion and perspectives are given in Section 6.

3. Improved A* Algorithm for the Path Planning of Any Two Points

In this section, we analyze the path planning between any two goal positions. Although the A* algorithm is widely used in the path planning area, it has many problems, such as large numbers of redundant nodes, many turn angles, and slow search speed. These issues may waste time and lengthen the path distance. To find a collision-free path with the shortest distance while reducing the computational time, we improve the algorithm by introducing two strategies: the computational time optimization strategy and the path distance optimization strategy. First, we introduce a bidirectional search and a dynamic OpenList cost weight strategy into the traditional A* algorithm to reduce the computational time. Then, based on the time-optimal strategies, we extend the adjacent nodes from 8 to 16 and calculate and compare the distance matrices of any two positions planned with 8 adjacent nodes and with 16 adjacent nodes, separately. Finally, we select the shorter path from the two distance matrices to form the new distance matrix used in the subsequent path planning.

3.1. The Traditional A* Algorithm

In the traditional A* algorithm, there are two important lists: OpenList and CloseList. After the extension nodes in the OpenList are evaluated, the node with the minimum cost is removed from the OpenList and added to the CloseList; this repeats until the end node is found. The cost function of the traditional A* algorithm [37] is $f(n) = g(n) + h(n)$, where $f(n)$ represents the total cost of a path that starts from the start node, passes through the current node $n$, and arrives at the goal node; $g(n)$ represents the actual cost incurred so far, that is, the cost from the start node to the current node; and $h(n)$ is a heuristic function (Manhattan, Euclidean, or diagonal) that represents the estimated cost from the current node to the goal node.
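The OpenList/CloseList loop described above can be sketched as follows. This is a minimal illustration, not the implementation used in this study: the grid encoding (1 marks an obstacle cell), the function name `astar`, the Euclidean heuristic, and the 8-connected move set that allows diagonal steps past obstacle corners are all assumptions made for the example.

```python
import heapq
import math

def astar(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None.

    Assumption: grid[r][c] == 1 marks an obstacle; moves are 8-connected.
    """
    def h(node):
        # Euclidean estimate of the remaining cost to the goal.
        return math.hypot(node[0] - goal[0], node[1] - goal[1])

    open_heap = [(h(start), 0.0, start)]   # OpenList entries: (f, g, node)
    g_cost = {start: 0.0}
    parent = {start: None}
    closed = set()                          # plays the role of the CloseList

    while open_heap:
        _, g, node = heapq.heappop(open_heap)   # node with minimum f = g + h
        if node in closed:
            continue                            # skip stale heap entries
        closed.add(node)
        if node == goal:
            path = []
            while node is not None:             # follow parents back to start
                path.append(node)
                node = parent[node]
            return path[::-1]
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                if dr == 0 and dc == 0:
                    continue
                nxt = (node[0] + dr, node[1] + dc)
                if not (0 <= nxt[0] < len(grid) and 0 <= nxt[1] < len(grid[0])):
                    continue
                if grid[nxt[0]][nxt[1]] == 1 or nxt in closed:
                    continue
                new_g = g + math.hypot(dr, dc)
                if new_g < g_cost.get(nxt, math.inf):
                    g_cost[nxt] = new_g
                    parent[nxt] = node
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```

Calling `astar(grid, (0, 0), (2, 0))` on a small grid returns the sequence of cells visited, or `None` when the OpenList empties before the goal is reached.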

3.2. Strategy for Computational Time Optimization between Any Two Points

To solve the long computational time problem mentioned above, in this subsection, we improve the traditional A* algorithm using the following strategies: bidirectional search and dynamic OpenList cost weight strategy.

3.2.1. Bidirectional Strategy for Computational Time Optimization

The bidirectional search algorithm is an efficient path planning algorithm. It shortens the planning time by simultaneously searching from the start node and the goal node. The search stops when the two search fronts meet in the middle. The formula of the bidirectional A* algorithm is:

$$f_1(n_1) = g_1(n_1) + h_1(n_1), \qquad f_2(n_2) = g_2(n_2) + h_2(n_2)$$

where $n_1$ and $n_2$ represent the current traversal nodes of the searches from the start position and the goal position, respectively; $f_1(n_1)$ and $f_2(n_2)$ represent the total cost of node $n_1$ and node $n_2$, respectively; $g_1(n_1)$ and $g_2(n_2)$ represent the cost from the start node to current node $n_1$ and from the goal node to current node $n_2$, respectively; $h_1(n_1)$ and $h_2(n_2)$ represent the cost from current node $n_1$ to the node $n_2$ calculated in the last loop and from current node $n_2$ to the node $n_1$ calculated in the last loop, respectively.

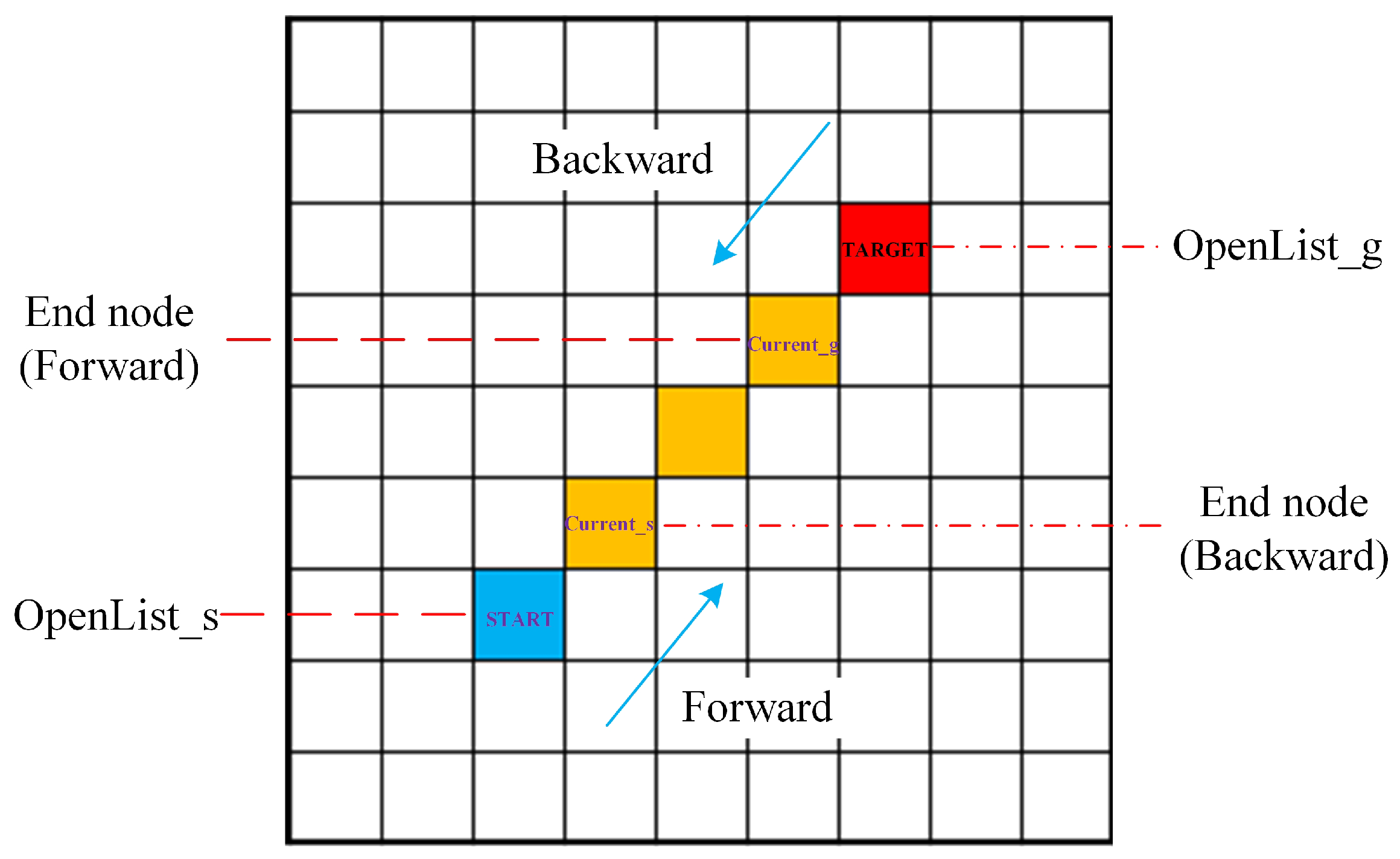

The main purpose of bidirectional A* is to exploit both the start and goal positions during the path planning process. There are two OpenLists and two CloseLists in the bidirectional A* algorithm: one pair for the forward search and one pair for the backward search. As shown in Figure 2, when initializing the parameters, the START node is placed in the forward OpenList, and the end node of the forward search is the current node of the backward search; similarly, the GOAL node is placed in the backward OpenList, and the end node of the backward search is the current node of the forward search.

3.2.2. Dynamic OpenList Cost Weight Strategy for Computational Time Optimization

In the A* algorithm formula, the heuristic function can be used to choose a preferred direction for searching. So, we adjust the heuristic part by introducing a weight coefficient to increase its influence and improve the calculation speed of the A* algorithm. The costs of the OpenList nodes change gradually during the iterative process: as the number of iterations increases, more and more nodes with a lower cost value are obtained. By considering the maximum cost, minimum cost, and mean cost of the OpenList nodes, we design a new weight coefficient, the dynamic OpenList cost weight, for the path planning calculation in this study. The proposed dynamic OpenList cost weight is represented by the following formula:

where

,

, and

are the total cost, actual cost, and estimated cost of the

node, respectively; similarly,

,

, and

are the total cost, actual cost, and estimated cost of

node, respectively;

,

are constant;

represents the node’s heuristic function weight (extended from the START node);

represents the node’s heuristic function weight (extended from the TARGET node);

is a cost list that consists of the cost value of all the nodes (extended from the start node direction) in the

;

represents the mean value of all the costs in the

,

represents the maximum value of all the costs in the

;

represents the minimum value of all the costs in the

; similarly,

is a cost list that consists of the cost value of all the nodes (extending from the target node direction) in the

;

represents the mean value of all the costs in the

;

represents the maximum value of all the costs in the

; and

represents the minimum value of all the costs in the

.

The two weight coefficients are dynamically changing parameters that describe the effect of the node costs in the forward OpenList and in the backward OpenList on the heuristic function, respectively. The coefficients include the maximum, minimum, and mean costs of the OpenList nodes. The mean cost and minimum cost change as the iterations proceed, so the heuristic part is always dynamically changing. This optimizes the computational time by efficiently adjusting the weight coefficient and guiding the search toward the goal node.

When calculating the heuristic function, the two most commonly used methods are the Manhattan distance and the Euclidean distance, as shown in Formulas (8) and (9). The Euclidean distance is one of the most common distance metrics; it measures the absolute (straight-line) distance between two positions in a multidimensional space, that is, the real distance between them. The Manhattan distance is the sum of the absolute axis-wise distances between two positions in a standard coordinate system, that is, the distance in the north–south direction plus the distance in the east–west direction. It requires only addition and subtraction, which means less computation when a large number of calculations is needed, and it avoids the approximation involved in taking a square root. To reduce the computational time, in this study, we use the Manhattan distance to calculate the heuristic function.

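$$h(n) = |x_n - x_e| + |y_n - y_e| \qquad (8)$$

$$h(n) = \sqrt{(x_n - x_e)^2 + (y_n - y_e)^2} \qquad (9)$$

where $(x_n, y_n)$ is the position of the robot at the current node n, and $(x_e, y_e)$ is the position of the end node.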
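The difference between the two heuristics can be illustrated with a short sketch. The function names are chosen for this example only; the two points are the start position (12, 12) and goal position 1 at (27, 30) used in the simulations of this section.

```python
import math

def manhattan(node, end):
    # Sum of the absolute axis-wise differences: additions and subtractions only.
    return abs(node[0] - end[0]) + abs(node[1] - end[1])

def euclidean(node, end):
    # Straight-line distance: requires a square root.
    return math.hypot(node[0] - end[0], node[1] - end[1])

start, goal_1 = (12, 12), (27, 30)
print(manhattan(start, goal_1))   # 15 + 18 = 33
print(euclidean(start, goal_1))   # sqrt(15**2 + 18**2), about 23.43
```

The Manhattan value is never smaller than the Euclidean one, so it weights the heuristic part more heavily in addition to being cheaper to evaluate.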

The process of the bidirectional dynamic OpenList cost A* algorithm can be described as follows:

Step 1: Establish OpenLists and CloseLists. First, we establish two OpenLists and two CloseLists for the improved algorithm: a forward OpenList and a forward CloseList for the search from the forward direction, and a backward OpenList and a backward CloseList for the search from the backward direction. Second, as shown in Figure 2, we add the START node to the forward OpenList and select the current node of the backward search as its end node. Similarly, we add the GOAL node to the backward OpenList and select the current node of the forward search as its end node. Both CloseLists are kept empty at first. The following operations are then carried out on the OpenLists and CloseLists.

Step 2: Parameter initialization. We define one weight coefficient for the forward search direction and one for the backward search direction, and assign both a value of 1 at first. We repeat Steps 3–6 twice and obtain the mean, maximum, and minimum cost values of the nodes in the forward and backward OpenLists; then, we compute the two weight coefficients using Formulas (6) and (7) and use them as the weight coefficients in the following calculations.

Step 3: Calculate the value of new extended nodes. We add the adjacent nodes in the forward direction to the forward OpenList and calculate the cost function value of the newly added nodes. If a node is an obstacle grid, we remove it from the forward OpenList. We find the node with the minimum evaluation value in the forward OpenList and define it as the current node of the forward search. Next, we remove this node from the forward OpenList and transfer it to the forward CloseList. We carry out the same operations on the adjacent nodes in the backward direction. Then, both search directions are updated.

Step 4: Define the parent node. We consider the adjacent nodes of the current node in the forward direction that are in neither the forward OpenList nor the forward CloseList. We use the current node in the forward direction as the parent node of these newly added nodes. We conduct the same operations on the adjacent nodes in the backward direction.

Step 5: Judge and update the newly added nodes and their cost values. First, we check whether the newly added nodes in the forward direction obtained in Step 3 already exist in the forward OpenList. If a node does not exist in the forward OpenList, we use the current node as its parent node. If the node is already in the forward OpenList, we compare the cost values. If the newly computed value is smaller, we use the current node as the parent node of that node and update its cost value. We carry out the same operations on the adjacent nodes in the backward direction and update the parent node of the newly added nodes in the backward direction.

Step 6: Judge the loop exit condition. We check whether the forward OpenList is empty. If it is empty, all operations stop; otherwise, we check whether the forward and backward searches share any nodes. If so, we calculate the cost value of all the shared nodes and select the node with the smallest value as the intersection node of the forward search and the backward search. We then obtain the path by starting from the GOAL node, moving to the intersection node, and finally reaching the START node. Otherwise, we continue to calculate the cost of the extended nodes in the forward search direction. We carry out the same operations on the backward OpenList.

As described above, in Step 1, the current nodes of the forward and backward searches are obtained from the iterative process, so we need to consider the initialization of the end node in both the forward direction and the backward direction. During initialization, we select the GOAL node as the end node of the forward search and the START node as the end node of the backward search. Furthermore, to facilitate the calculation of the weight coefficients, as described in Step 2, we first assign the two weight coefficients of the heuristic function of the bidirectional search a value of 1. Thus, we obtain the initial cost of the nodes in the two OpenLists. Finally, the dynamic OpenList cost weight coefficients are obtained; they are used to calculate the node cost values in later iterations.
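The overall flow of Steps 1–6 can be sketched as below. This is a simplified illustration under stated assumptions, not the algorithm of this study: the weight `w` is a fixed placeholder standing in for the dynamic OpenList cost weight of Formulas (6) and (7), the search stops at the first node expanded by one direction that the other direction has already closed (rather than comparing all shared nodes), and the grid encoding and all function names are hypothetical.

```python
import heapq
import math

MOVES = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]

def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def expand(grid, side, target, w):
    """Move the cheapest open node of one direction to its CloseList and
    push its free neighbours; return that node, or None if the list is empty."""
    while side["open"]:
        _, g, node = heapq.heappop(side["open"])
        if node not in side["closed"]:
            break
    else:
        return None                          # OpenList exhausted
    side["closed"].add(node)
    side["current"] = node
    for dr, dc in MOVES:
        nxt = (node[0] + dr, node[1] + dc)
        if not (0 <= nxt[0] < len(grid) and 0 <= nxt[1] < len(grid[0])):
            continue
        if grid[nxt[0]][nxt[1]] == 1 or nxt in side["closed"]:
            continue                         # obstacle or already closed
        new_g = g + math.hypot(dr, dc)
        if new_g < side["g"].get(nxt, math.inf):
            side["g"][nxt] = new_g
            side["parent"][nxt] = node       # current node becomes the parent
            f = new_g + w * manhattan(nxt, target)   # weighted heuristic part
            heapq.heappush(side["open"], (f, new_g, nxt))
    return node

def trace(parent, node):
    path = []
    while node is not None:
        path.append(node)
        node = parent[node]
    return path

def bidirectional_astar(grid, start, goal, w=1.5):
    def new_side(root):
        return {"open": [(0.0, 0.0, root)], "g": {root: 0.0},
                "parent": {root: None}, "closed": set(), "current": root}
    fwd, bwd = new_side(start), new_side(goal)
    while True:
        # Each direction aims at the other direction's current node.
        for side, other in ((fwd, bwd), (bwd, fwd)):
            node = expand(grid, side, other["current"], w)
            if node is None:
                return None                  # no path exists
            if node in other["closed"]:      # the two searches have met
                return trace(fwd["parent"], node)[::-1] + trace(bwd["parent"], node)[1:]
```

Because the search stops at the first meeting node and uses a weighted heuristic, the returned path is collision-free but not guaranteed to be the shortest one; the sketch only illustrates how the two OpenLists and CloseLists interact.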

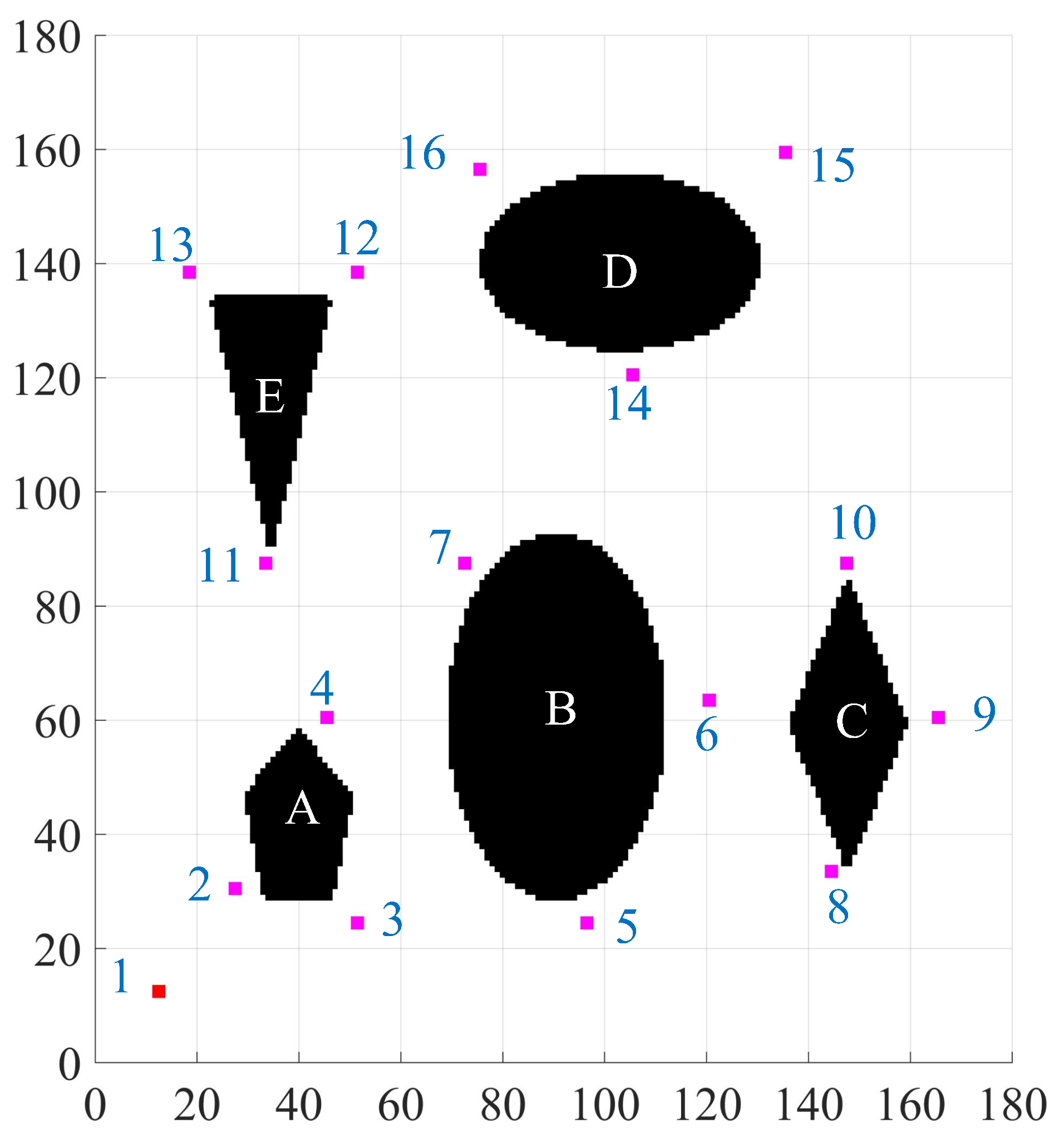

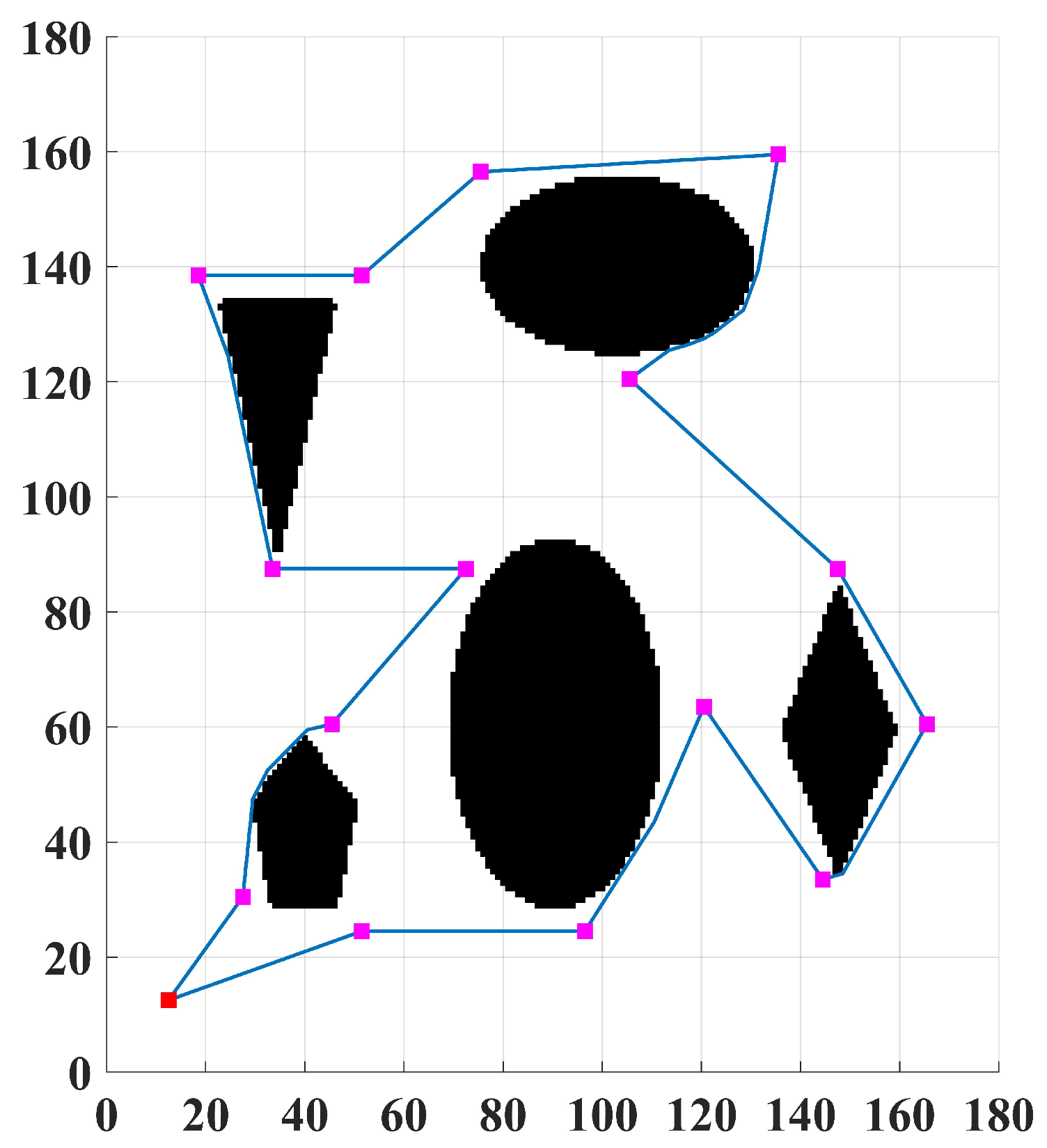

3.3. Simulation Results and Analysis of the Computational Time Optimization Strategy

In this study, an Intel(R) Core(TM) i5-5200U CPU @ 2.20 GHz with 16 GB of RAM and a 64-bit Windows 10 Education operating system was used for experimental simulation. The coordinates of the start position were (12, 12), and the coordinates of goal positions 1 to 15 in Figure 1 are (27, 30), (51, 24), (45, 60), (96, 24), (120, 63), (72, 87), (144, 33), (165, 60), (147, 87), (33, 87), (51, 138), (18, 138), (105, 120), (135, 159), and (75, 156). To verify the efficiency of the bidirectional dynamic OpenList cost weight A* algorithm, some comparative simulations were designed. We compared the weighted bidirectional improved A* algorithm with the traditional A* algorithm and discussed the effects of different weight coefficients and of the map resolution on the computation time. To ensure the accuracy of the simulation results, each simulation was repeated 30 times.

As shown in Table 1, compared with the traditional A* algorithm, the computation time of the bidirectional dynamic OpenList cost weight A* algorithm is significantly shorter. As the resolution of the environment map changes across the settings in Table 1, the calculation time of the traditional A* algorithm grows by factors of 3.16 (250.91:79.50) and 7.91 (628.53:79.50), whereas that of the bidirectional dynamic OpenList cost weight A* algorithm grows by factors of 1.90 (50.27:26.39) and 3.16 (83.36:26.39). The calculation time of the proposed algorithm therefore increases significantly less with resolution than that of the traditional A* algorithm.
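The growth factors quoted above follow directly from the reported calculation times, as the short check below shows. The variable names are chosen for this illustration only; the numbers are the ones given in the text.

```python
# Calculation times quoted in the text (baseline first, then the two other settings).
astar_times = [79.50, 250.91, 628.53]       # traditional A*
improved_times = [26.39, 50.27, 83.36]      # bidirectional dynamic OpenList cost weight A*

astar_factors = [round(t / astar_times[0], 2) for t in astar_times[1:]]
improved_factors = [round(t / improved_times[0], 2) for t in improved_times[1:]]

print(astar_factors)      # [3.16, 7.91]
print(improved_factors)   # [1.9, 3.16]
```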

To further verify the effectiveness of the bidirectional dynamic OpenList cost weight A* algorithm, we introduced constant weight

w = 1, 1.5, 2, and five other weight coefficients for comparison. The formulas of the five weight coefficient functions (

) are described in Formulas (

10)–(

14):

where

k represents the

kth iteration;

represents the weight coefficient of the

kth iteration;

,

, and c are constants;

represents the pre-estimated maximum number of iterations; and e is Euler’s number.

The parameter in the five functions are obtained by calculating the value, which evaluates from the start node to the end node. The constant c in the coefficient is 1. Constant is 1, and is 2. The e coefficient is 1. Constant is 1 and is 2.

The results of

and

w = 1, 1.5, 2 are based on a bidirectional search. As can be seen from

Table 2, the dynamic OpenList cost weight A* algorithm shows better performance than any other weight coefficient in terms of calculation time.

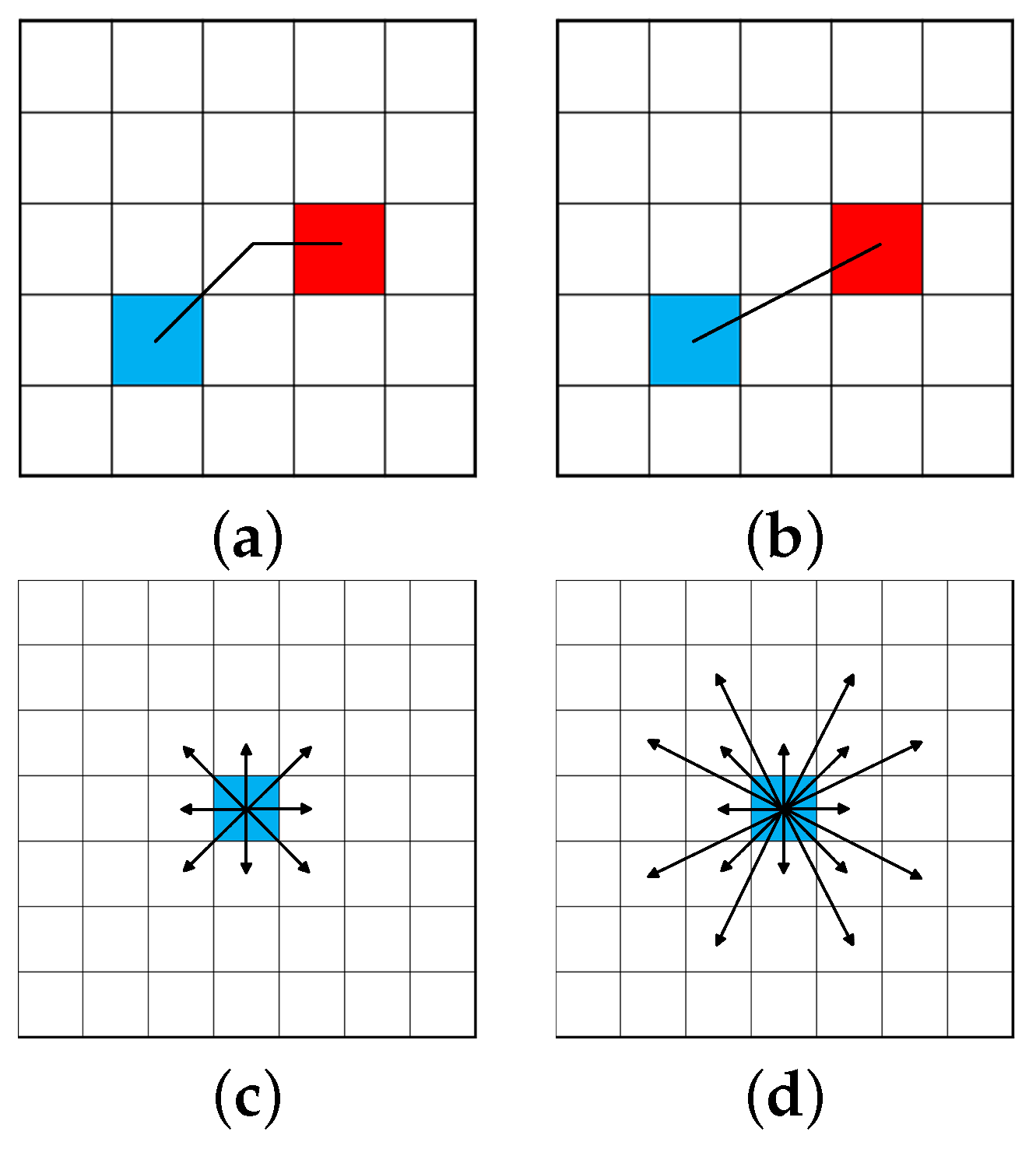

3.4. Strategies of Improved A* Algorithm for the Shortest Distance between Any Two Points

Although the collision-free path can be obtained by using the traditional eight-adjacent nodes A* algorithm, there are many inflection nodes and repeated calculations, and sometimes the path distance is long. Thus, it is necessary to reduce the inflection nodes and shorten the path distance for path planning. To solve this problem, we changed the expansion node number from 8 to 16.

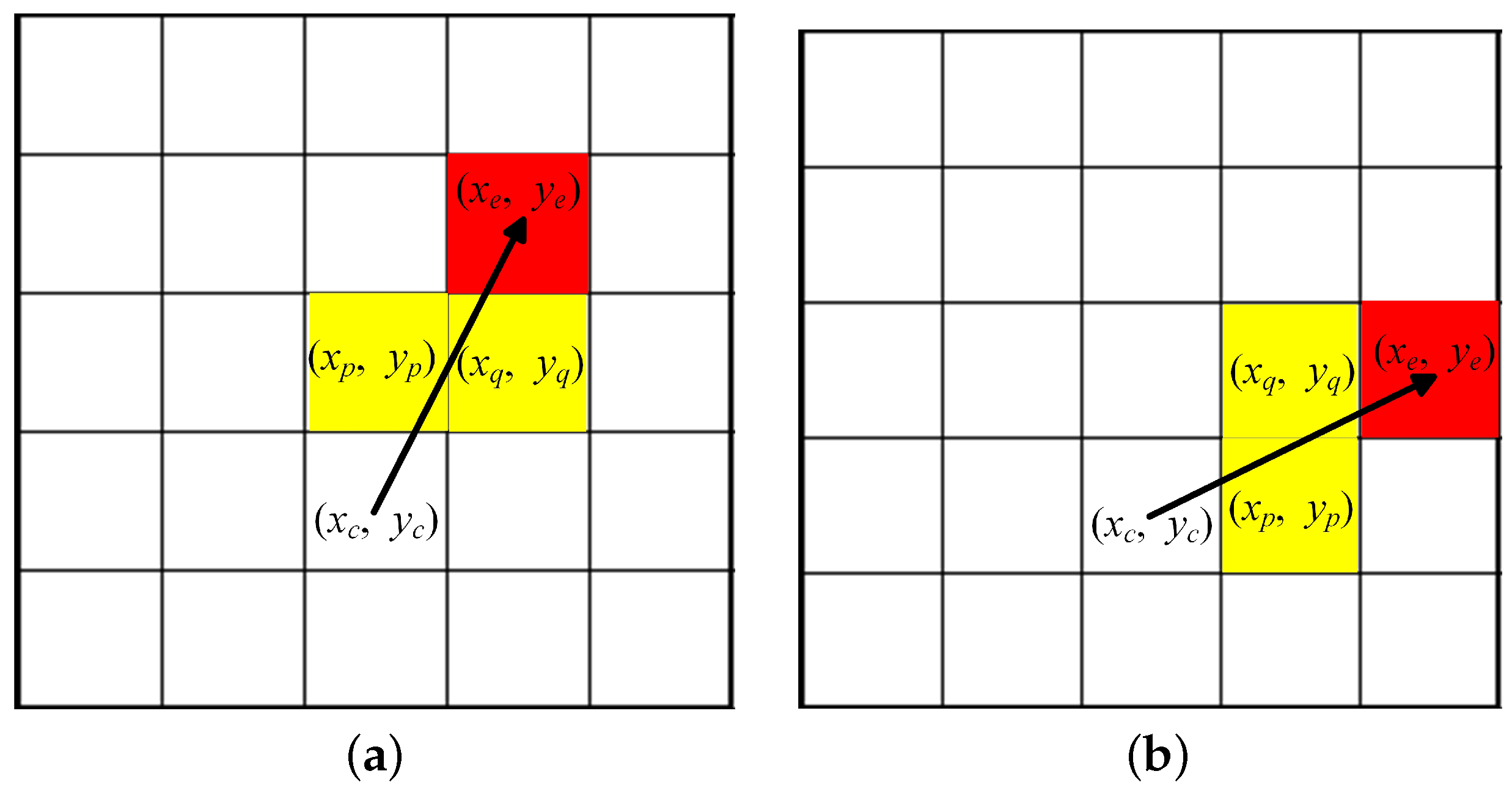

As shown in Figure 3, the 16 adjacent nodes search method can lead the search directly to the goal node (the red node in Figure 3), which significantly reduces the path length and the number of nodes. However, the 16 adjacent nodes strategy also introduces more nodes that must be checked to determine whether they are obstacles. For example, in Figure 4, node P and node Q are the waypoints, and node E is the expansion node; before adding the extended nodes to either OpenList, it is necessary to judge whether the three nodes (P, Q, and E) are obstacles. The relationship between the coordinates of the waypoints, the current node C, and the next node E is:

The obstacle judgment formula is:

When node P and node Q are above or below node C, the relationships between the extended node and the waypoints are as shown in Formula (15), where $(x_E, y_E)$, $(x_P, y_P)$, $(x_Q, y_Q)$, and $(x_C, y_C)$ are the coordinates of node E, node P, node Q, and node C in the Cartesian coordinate system, respectively. With the proposed obstacle judgment formula, we can judge whether a new expansion point is an obstacle more accurately than with many other methods, such as sampling points along the path and checking whether each sample point lies in an obstacle. When node P and node Q are to the left or to the right of node C, the relationship between the extended node and the waypoints is as given by Formula (16).
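One way to picture the 16 adjacent nodes and their waypoints is the sketch below. It rests on an assumption that is not confirmed by the text: the eight additional moves are taken to be the offsets (±1, ±2) and (±2, ±1), and the waypoints P and Q are taken to be the two cells the straight segment from C to E passes through. The function names and the grid encoding (1 marks an obstacle) are likewise hypothetical.

```python
def neighbors16(c):
    """Yield (E, [waypoints]) for each of the 16 moves out of cell c = (x, y)."""
    x, y = c
    # The 8 ordinary neighbours need no intermediate waypoint.
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            if (dx, dy) != (0, 0):
                yield (x + dx, y + dy), []
    # Assumed extra moves; each one crosses two intermediate cells P and Q.
    extra = [(1, 2), (-1, 2), (1, -2), (-1, -2), (2, 1), (2, -1), (-2, 1), (-2, -1)]
    for dx, dy in extra:
        e = (x + dx, y + dy)
        if abs(dy) == 2:                 # P and Q lie in the row above or below C
            p = (x, y + dy // 2)
            q = (x + dx, y + dy // 2)
        else:                            # P and Q lie in the column left or right of C
            p = (x + dx // 2, y)
            q = (x + dx // 2, y + dy)
        yield e, [p, q]

def move_allowed(grid, e, waypoints):
    """A move is allowed only if E and both waypoints are free, in-bounds cells."""
    return all(
        0 <= cx < len(grid) and 0 <= cy < len(grid[0]) and grid[cx][cy] == 0
        for cx, cy in [e] + waypoints
    )
```

Checking P and Q in addition to E is what prevents a long move from cutting through an obstacle cell that lies between C and E.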

3.5. Simulation Results and Analysis of the Shortest Distance of the Path Planning Improved Strategy

To optimize the path planning and compare the performance of the shortest distance path planning strategy, we compared the 8 adjacent nodes and the 16 adjacent nodes using the improved bidirectional weight A* algorithm mentioned above.

Table 3 and Table 4 are the path length matrices of the 8-neighborhood and the 16-neighborhood, respectively. In the tables, the number in row i, column j represents the shortest distance from point i to point j. For example, the shortest distance from point 3 to point 1 in Table 3 is the number in row 3, column 1; that is, 42.968. Table 5 is the path length matrix composed of the minimum lengths of the 8-neighborhood (Table 3) and the 16-neighborhood (Table 4). For example, the shortest distance from point 3 to point 1 is 42.968 in Table 3 and 38.14 in Table 4, so in Table 5 it is 38.14. Because the distance matrix is symmetric, we can obtain a complete distance matrix of any two points, as shown in Table 5.
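Combining the two matrices is an element-wise minimum, which the following sketch illustrates. The 2 × 2 matrices are a toy example built only from the single pair of values quoted above (42.968 and 38.14); the function name is an assumption.

```python
def combine_min(m8, m16):
    """Element-wise minimum of two equally sized distance matrices."""
    return [[min(a, b) for a, b in zip(row8, row16)]
            for row8, row16 in zip(m8, m16)]

# Toy matrices for one pair of points only (distances between points 3 and 1).
m8 = [[0.0, 42.968],
      [42.968, 0.0]]
m16 = [[0.0, 38.14],
       [38.14, 0.0]]

print(combine_min(m8, m16))   # [[0.0, 38.14], [38.14, 0.0]]
```

Because both inputs are symmetric, the combined matrix is symmetric as well.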

The total number of positions for path planning is 16, so we obtain a $16 \times 16$ integer distance matrix. The distance matrix is symmetrical; that is, the distance from one position to another is equal to the distance in the opposite direction. In Table 3 and Table 4, we only show part of the distances for a better comparison of the distance values between any two positions; the number of distances between all of the 16 nodes is 120. As shown in Table 6, compared with the path lengths generated by using 8 adjacent nodes, the 16 adjacent nodes A* algorithm shows better performance: all of its generated path lengths are less than or equal to those of the 8 adjacent nodes A* algorithm because the redundant points are removed.
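The count of 120 distances follows from the symmetry of the matrix: each unordered pair of the 16 positions is counted once, and the diagonal (distance from a position to itself) is excluded.

$$\binom{16}{2} = \frac{16 \times 15}{2} = 120$$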

To further verify the performance of the bidirectional dynamic weighted A* algorithm, we compared the improved A* algorithm with the goal-oriented rapidly exploring random tree (GORRT) [38] algorithm, where the step size of GORRT is eight and the threshold is five. Nodes closer than this threshold were treated as the same node. The shortest distances between any two nodes obtained by using GORRT are shown in Table 7.

By analyzing the results of the 16 adjacent nodes (Table 4) and GORRT’s path lengths (Table 7), we also obtained the comparison between the path lengths of the 16 adjacent nodes and those of GORRT. The results are shown in Table 8. The path lengths generated by using 16 adjacent nodes also showed better performance, with 199 path lengths being shorter and 1 path length being longer.

4. LM-SSPSO Algorithm for Traversing Multiple Goal Positions

As mentioned above, we obtained the shortest distance between any two positions of the 16 nodes (including the start position). The next step was to find the shortest path for traversing all suspicious positions just once. The process is similar to that of the traditional traveling salesperson problem (TSP) [39]: the distance from position P to position Q is equal to that from position Q to position P. Thus, in this section, we solve the multiple goal positions path planning problem by considering the methods of solving the TSP. To solve the TSP, many algorithms have been studied, such as the greedy algorithm, genetic algorithm (GA), particle swarm optimization (PSO), harmony search (HS), etc. Refael Hassin and Ariel Keinan [40] proposed greedy heuristics with regret as the cheapest insertion method for solving the TSP. They improved the greedy algorithm by allowing partial regret. The computational results showed that the relative error is reduced and the computational time is quite short. Urszula Boryczka and Krzysztof Szwarc [41] studied an improved HS algorithm to solve the TSP. The results showed that the proposed algorithm has a significant effect and that the summary average error can be effectively reduced. An initial population strategy (KIP, k-means initial population) was applied by Yong Deng et al. [42] to improve the GA, helping to find the optimal solution for the TSP. Fourteen TSP examples were used to test the algorithm, and the results showed that the KIP strategy can effectively decrease the best error value. Matthias Hoffmann et al. [43] presented a discrete PSO algorithm for solving the TSP, which provides insight into the convergence behavior of the swarm. The method is based on edge exchanges and combines differences by computing their centroid. The method was compared with other approaches; the evaluation showed that the proposed edge-exchange-based PSO algorithm performs better than the traditional approaches. Although many traditional algorithms have been used to solve the TSP, each of the above approaches has its limitations. For example, the diversity of PSO is easily lost during iteration, the greedy algorithm and the HS algorithm may fall into a local optimum, and the result of the GA strongly depends on the initial value. So, we introduced the LM-SSPSO algorithm to improve the efficiency of EOD robot path planning.

4.1. Mathematical Model of Multiple Goal Positions Path Planning

In this subsection, we present the mathematical model of path planning for the EOD robot with multiple goal positions. The aim of our research was to find the shortest path that traverses every goal position exactly once and then returns to the start position, so we developed the mathematical formulation with reference to the methods of solving the TSP. We define the path planning problem as an instance $I = (n, \mathit{dist})$ of $n$ goal positions, where $\mathit{dist}$ is the $n \times n$ integer distance matrix of all the goal positions. The path to be optimized can be described as a permutation $D$ of the goal positions, where $D(i)$ denotes the $i$th visited goal position. Hence, the formula of the total distance $f(D)$ is:

$$f(D) = \sum_{i=1}^{n-1} \mathit{dist}\big(D(i), D(i+1)\big) + \mathit{dist}\big(D(n), D(1)\big), \qquad D \in R_n$$

where $R_n$ is the symmetric group on $\{1, 2, \ldots, n\}$, $\mathit{dist}(D(i), D(i+1))$ denotes the distance between the $i$th goal position and the $(i+1)$th goal position, and $\mathit{dist}(D(n), D(1))$ denotes the distance between the $n$th goal position and the start position.
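As a minimal sketch of the total-distance formula, the function below sums the consecutive legs of a visiting order and adds the closing leg back to the first position. The name `tour_length` and the 4-node matrix `DIST` are illustrative assumptions, not values from the paper.

```python
from typing import Sequence


def tour_length(dist: Sequence[Sequence[float]], order: Sequence[int]) -> float:
    """Length of the closed tour that visits `order` and returns to its first node."""
    n = len(order)
    # The modulo wraps the last visited node back to the first one.
    return sum(dist[order[i]][order[(i + 1) % n]] for i in range(n))


# Hypothetical symmetric distance matrix for four positions (illustration only).
DIST = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]

print(tour_length(DIST, [0, 1, 3, 2]))  # legs 2 + 4 + 3 + 9
```

Because the tour is closed, rotating the visiting order does not change its length, which is why the start position can be fixed without loss of generality.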

4.2. Traditional PSO Algorithm

The PSO algorithm is a population-based metaheuristic algorithm that is widely used for its powerful control parameters; it is also easy to apply. It is inspired by the social behavior of bird flocks. When birds move in a group, each bird can be regarded as one particle, and all the particles form a swarm

, where

N is the population size of the swarm. Each bird has its own velocity and position. The velocity of particle

i is represented as

, where d is the dimension. The position of particle

i is represented as

. As time advances, the particles update their velocity and position with mutual coordination and information-sharing mechanisms. The particles obtain the best position by constantly updating their individual optimal positions and swarm optimal positions. The best position of an individual particle

i is represented as

. The best position of the swarm is represented as

. The positions and velocities of each particle are updated as follows:

where

Dm represents the maximum dimension of the particle;

and

represent the d-dimension element of the current velocity and new velocity of the particle, respectively;

and

represent the d-dimension element of the current position and new position of the particle, respectively;

w represents the weight coefficient;

i represents the particle number;

k and

represent the

kth and

th iteration, respectively;

and

are positive acceleration constants and scale the contribution of cognitive and social components, respectively;

and

represent uniform random variables between (0, 1);

represents the

dth element of the particle

i’s best individual position; and

represents the

dth element of the swarm’s global (swarm) best position. In the PSO algorithm, each particle is attracted by two positions: the global best position and its previous best position. During iterations, the particles adjust their positions and velocities according to Formula (

18), and the flowchart of the traditional PSO algorithm shown in

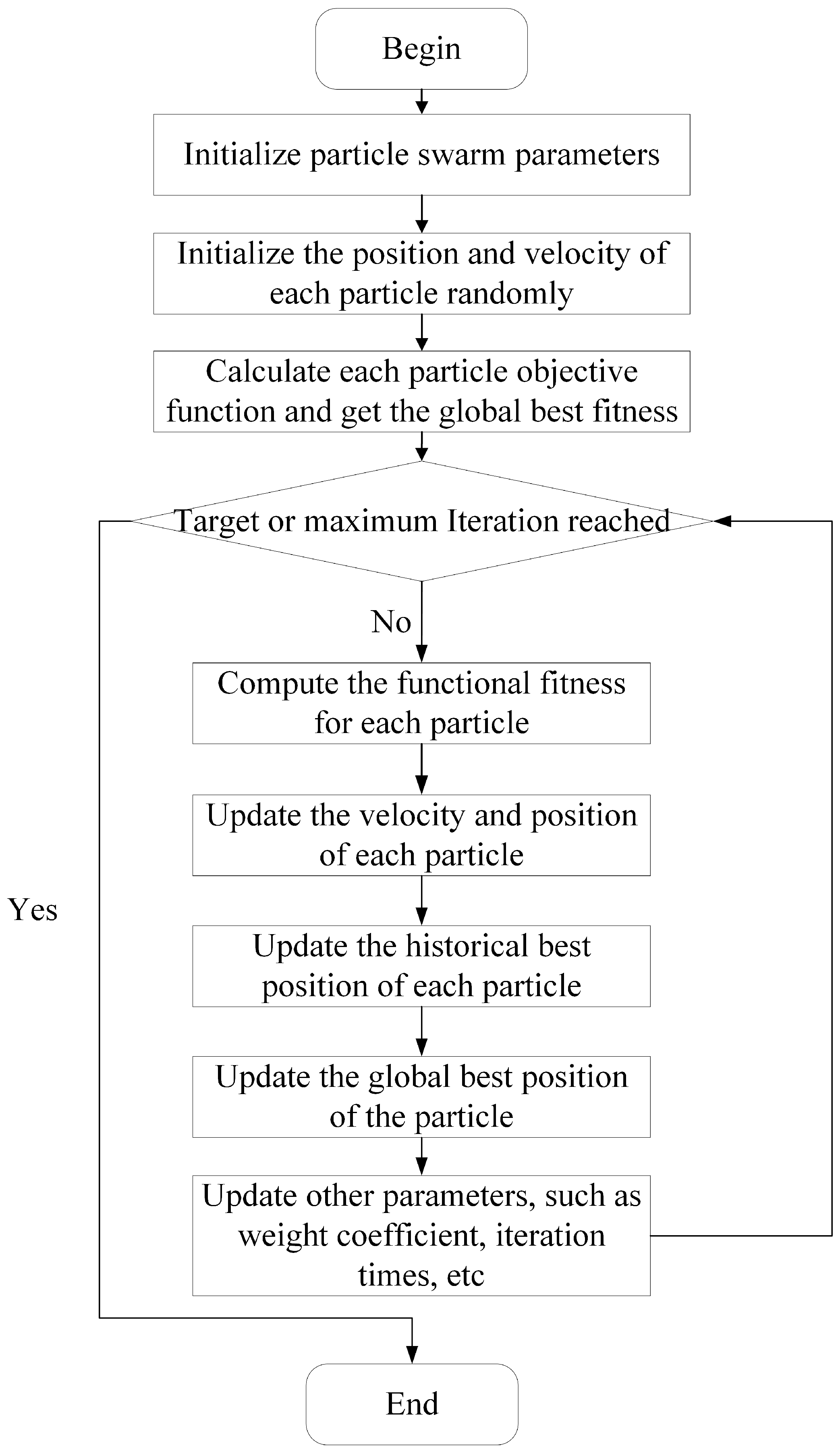

Figure 5; when the algorithm achieves the goal or reaches the maximum iteration, the search stops. The application of PSO in path planning has been explored in various studies. In the following section, we provide a hybrid algorithm that shows fast convergence and addresses diversity in solving optimization problems.
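The velocity and position update described above can be sketched for a single particle as follows. This is a generic continuous PSO step, not the authors' implementation; the function name `pso_step` and the default values of `w`, `c1`, and `c2` are assumptions chosen only for illustration.

```python
import random


def pso_step(x, v, p_best, g_best, w=0.7, c1=2.0, c2=2.0, rng=random):
    """One velocity and position update for a single particle (lists of floats)."""
    new_x, new_v = [], []
    for d in range(len(x)):
        r1, r2 = rng.random(), rng.random()  # uniform random numbers in [0, 1)
        vd = (
            w * v[d]                            # inertia term
            + c1 * r1 * (p_best[d] - x[d])      # cognitive term (individual best)
            + c2 * r2 * (g_best[d] - x[d])      # social term (swarm best)
        )
        new_v.append(vd)
        new_x.append(x[d] + vd)
    return new_x, new_v


# When the particle already sits on both best positions, only inertia moves it.
print(pso_step([1.0, 2.0], [0.5, -0.5], [1.0, 2.0], [1.0, 2.0], w=0.5))
```

The two attraction terms vanish whenever the particle coincides with the corresponding best position, which is one way to see why diversity is easily lost once the swarm has collapsed onto a single point.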

4.3. Improved Strategies for Planning the Shortest Distance of Traversing All Goal Positions

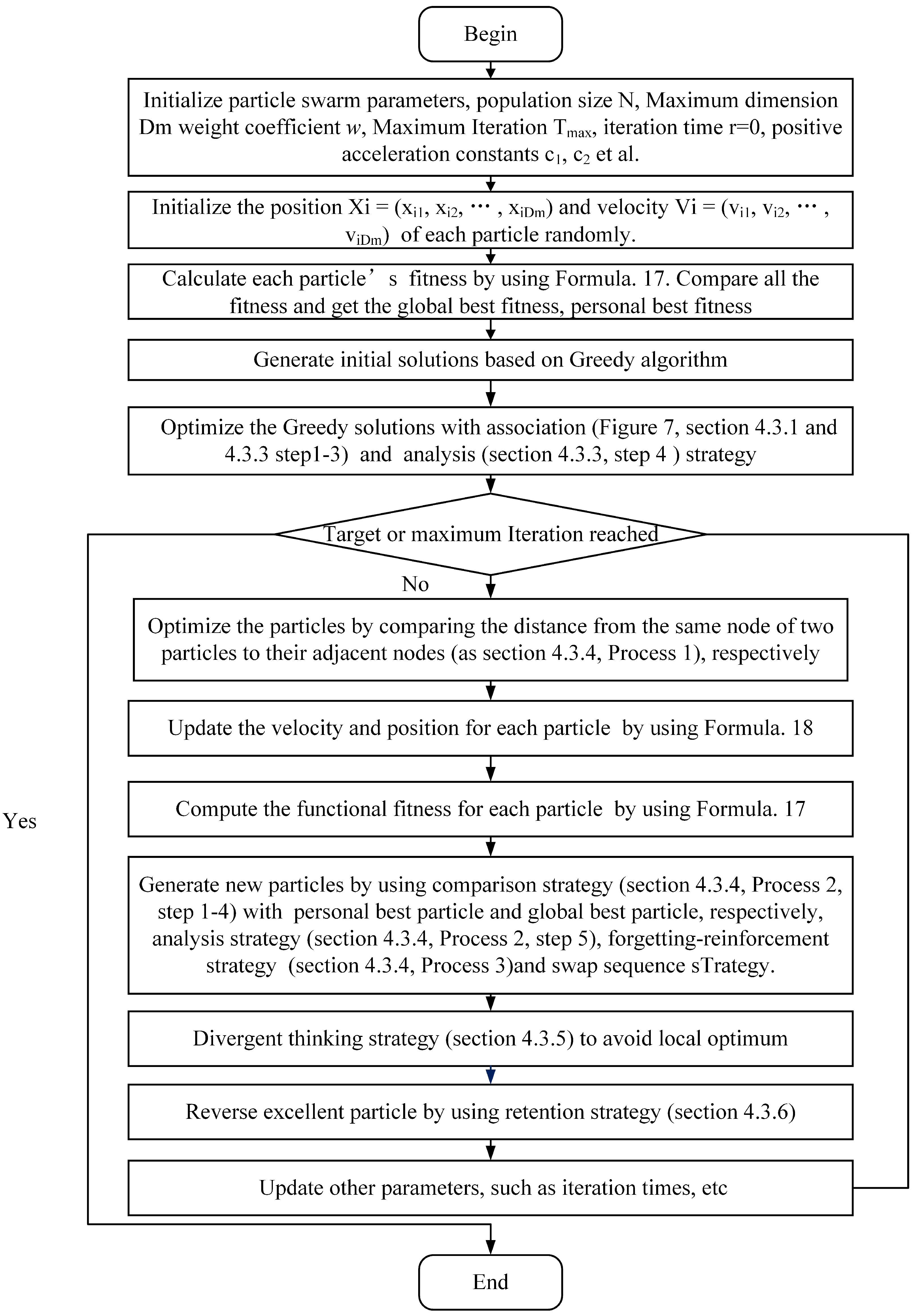

The PSO algorithm is widely used due to its simple operation, easy implementation, and fast speed. However, it still has some shortcomings, such as the results depending on the initial solution, and the lack of randomness in particle position changes, so the diversity of the particles may reduce and fall into a local optimum during iteration. To overcome the drawbacks, we improve the traditional PSO with the following strategies. First, a greedy algorithm and an association strategy are introduced to optimize initial solutions. In addition, forgetting–reinforcement, comparison, analysis strategies, and divergent thinking are proposed to maintain the diversity of the swarm, avoid the local optimum, and obtain better solutions. The forgetting–reinforcement strategy is used to update the particle in the swarm or to randomly generate a new particle under certain conditions, the comparison strategy is used to optimize the individual particle, and the analysis strategy is used to generate a new particle from two particles. Furthermore, a divergent thinking strategy is introduced to increase the randomness of the particles. Finally, the retention strategy is used to reserve a better solution in the swarm for the next iteration. The flowchart of the LM-SSPSO algorithm is presented in

Figure 6.

Compared with the traditional PSO algorithm, the LM-SSPSO algorithm provides optimization in four aspects. First, the initial solutions are optimized; by using the greedy algorithm and the analysis strategy, the quality of the initial solutions can be effectively improved. Then, two different particles are optimized with the comparison strategy by considering the distance from the same node to its adjacent nodes in each particle. Meanwhile, the personal best particle and global best particle are also used to provide path points to optimize the particle. Additionally, the particle is optimized with the analysis strategy, which swaps two different randomly chosen positions in one particle. The swap sequence strategy is also introduced to update the particle by using new swap operators, which include new position and velocity update formulas. Whether the swap sequence strategy is used depends on the forgetting–reinforcement strategy. Finally, to avoid the local optimum, the divergent thinking strategy is used to optimize the worst particle, and the retention strategy then selects the better particles. Each strategy is described in detail in the following subsections.

4.3.1. The LM-SSPSO-Based Method to Traverse All Goal Positions

Inspired by the behaviors of learning and memory, we propose a novel intelligent algorithm called LM-SSPSO. In the improved algorithm, we introduce several strategies to solve the multiple goal positions path planning problem. When people learn and memorize information, they often forget or confuse similar knowledge. To solve problems, people can compare and analyze specific features, associate the information with a similar object, and combine divergent thinking to enhance memory, etc. For example, if someone confuses the words “dessert”, “desert”, and “dissert”, they can compare the differences: “d-es-sert”, “d-e-sert”, and “d-is-sert”. The middle letters are different in the three words. The flowchart of LM-SSPSO is shown in

Figure 6. It includes the process of association, comparison, analysis, forgetting–reinforcement, divergent thinking, and retention.

Association means that one concept leads to other related concepts; the origin of an association can be a geometric figure, the behavior of animals, social behavior, etc. The result is a set of operations with similar behavior applied within the swarm. In the LM-SSPSO algorithm, association can be described as selecting a group of particles from the swarm and then referring to a feature or figure, for example, a triangle. The sequences of the corresponding nodes are swapped for each particle.

Figure 7 shows the process of association; the node on the hypotenuse is swapped with that on the right-angled side. The operations are detailed in the following section.

Comparison is the act of distinguishing or finding the differences between two or more objects and obtaining a better final result. In LM-SSPSO, a comparison strategy is usually applied to two objects, and the strategy obtains a desirable solution according to a certain rule. There are two methods of comparison. One involves comparing the distance from the same node of two particles to their adjacent nodes, and the other involves comparing the distance of a partial optimization particle with that of an unprocessed particle. Partial optimization includes selecting a segment from one particle, deleting the nodes that are the same as the segment in another particle, and adding the selected segment to the end of another particle.

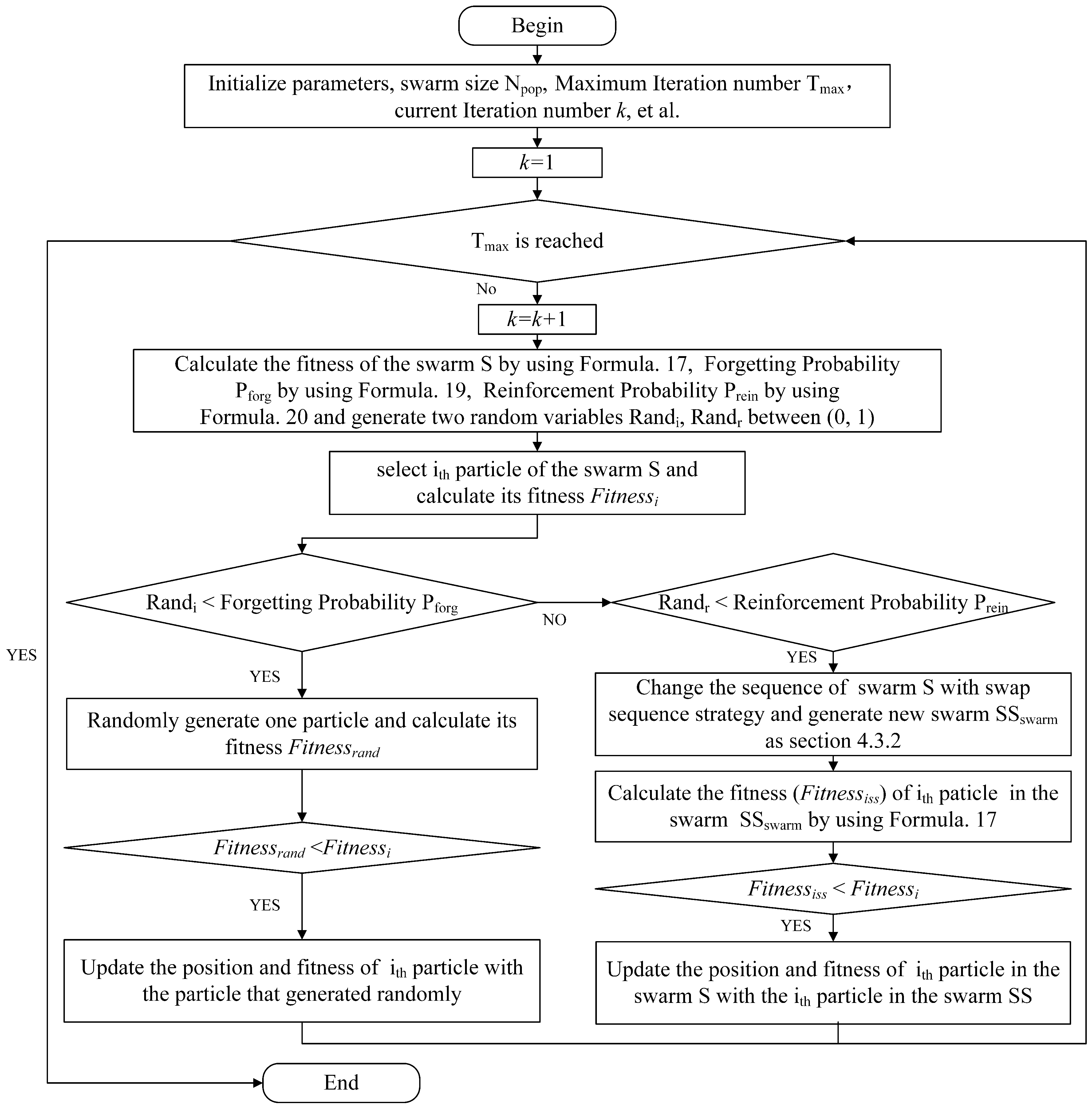

Forgetting [

44] is a special function in the human brain, which means the things that are experienced no longer remain in the memory. This behavior may involve the random loss or removal of information. Reinforcing is the opposite of forgetting; it is a way to consolidate information firmly. The proposed forgetting–reinforcement strategy imitates the behaviors of people forgetting information and strengthening their memories. This can lead to the loss of some or all of the information in one particle to avoid local optima and to generate new particles to maintain the diversity of the swarm. In the LM-SSPSO, a forget probability function and a reinforcement probability function are introduced to increase the diversity of the swarm. The flowchart of the forgetting–reinforcement strategy is shown in

Figure 8, and the forgetting probability formula and reinforcement probability formula are described in Formulas (

19) and (

20), respectively.

The forgetting–reinforcement strategy is applied after the analysis strategy of LM-SSPSO, as shown in

Figure 6. It generates a new particle in a novel way using the swap sequence idea. In the forgetting–reinforcement strategy, two probability parameters, the forgetting probability and the reinforcement probability, determine which operation is applied. If a random number is less than the forgetting probability, a random particle is generated; otherwise, another random number is generated to determine whether the swap sequence strategy is used to generate a new particle.

The forgetting probability formula is:

The reinforcement probability formula is:

The relationship between the forgetting probability and the reinforcement probability is:

where

k is the number of iterations, and

and

in

Figure 8 are random variables between zero and one. When using the reinforcement strategy to generate a new particle, the swap sequence method is used; the exact operations are detailed in the subsections “Swap Sequence-Based PSO” and “Comparison Strategy and Forgetting–Reinforcement Strategy for Swarm Optimization”.

Analysis is the process of studying the essence and the inner connection of one object. In LM-SSPSO, this means obtaining a desirable particle by swapping nodes within the node sequence of a particle.

Divergent thinking is a diffuse state of thinking. It is manifested as a broad vision of thinking, which presents multidimensional divergence. Divergent thinking obtains a new particle by randomly generating a new solution under certain conditions.

Retention is the process of maintaining optimal results. In the algorithm, retention is used to keep the better particles for the next iteration. It works on the swarm and accelerates the convergence of the algorithm.

4.3.2. Swap Sequence-Based PSO

The swap-sequence-based PSO is an effective method for solving the TSP [

45]. It follows the same theory as traditional PSO but adds new features based on the swap sequence idea. In SSPSO, the swap sequence (SS) is defined as an ordered arrangement of one or more swap operators: SS = ($SO_1$, $SO_2$, …, $SO_n$), where $SO_1$, $SO_2$, …, $SO_n$ are swap operators. In the velocity and position update formulas, the position represents a complete TSP tour, and the velocity represents the swap operators that lead from one tour to another. The position and velocity update formulas are as follows:

where the operator ⊕ means that two swap sequences are merged to form a new swap sequence. For example, for the swap operators $SO_1$ = (1,2) and $SO_2$ = (2,3), SS = $SO_1 \oplus SO_2$ = ((1,2),(2,3)). The ⊖ operator indicates the subtraction of two TSP paths. For example, for Path1 = {1,2,3,4} and Path2 = {1,2,4,3}, Path1 ⊖ Path2 = SO(3,4). In contrast with Formula (

18),

in Formula (

22) denotes the probability that all of the swap operators in the swap sequence

are used, and

denotes the probability that all the swap operators in the swap sequence

are used. An SS acts on a particle by applying all of the operators and finally producing a new particle.
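The swap-sequence operations can be sketched in a few lines. The function names (`apply_swaps`, `subtract`, `merge`, `keep_with_probability`) are hypothetical, positions are 1-based to match the examples in the text, and keeping each swap operator independently with a given probability is an assumption about how the probability coefficients act.

```python
import random


def apply_swaps(path, swaps):
    """Apply a swap sequence (pairs of 1-based positions) to a copy of `path`."""
    out = list(path)
    for i, j in swaps:
        out[i - 1], out[j - 1] = out[j - 1], out[i - 1]
    return out


def subtract(target, source):
    """Swap sequence that turns `source` into `target` (the ⊖ operator)."""
    work, swaps = list(source), []
    for i, node in enumerate(target):
        if work[i] != node:
            j = work.index(node, i + 1)  # node must lie further right
            work[i], work[j] = work[j], work[i]
            swaps.append((i + 1, j + 1))
    return swaps


def merge(*sequences):
    """Concatenate swap sequences in order (the ⊕ operator)."""
    return [so for seq in sequences for so in seq]


def keep_with_probability(swaps, prob, rng=random):
    """Keep each swap operator with probability `prob` (assumed behaviour)."""
    return [so for so in swaps if rng.random() < prob]


path1, path2 = [1, 2, 3, 4], [1, 2, 4, 3]
ss = subtract(path1, path2)
print(ss)                      # swap of positions 3 and 4
print(apply_swaps(path2, ss))  # path2 transformed into path1
print(merge([(1, 2)], [(2, 3)]))
```

In this reading, a velocity is simply a list of position pairs, and applying it to a tour always yields another valid permutation, so no repair step is needed after an update.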

Each particle updates its position and velocity by considering Formulas (

22) and (

23). The formula of weight coefficient w is:

where

represents the weight coefficient of the velocity update in the formula.

= 1,

= 0.5;

represents the current iteration count, and

represents the maximum number of iterations.

4.3.3. Association and Analysis Strategy for Initial Solution Optimization

Generally, the initial solutions play an important role in the convergence of the swarm. Before the proposed association and analysis strategies are applied, we optimize the initial solutions using the greedy idea. The greedy algorithm makes locally optimal choices. Its solutions do not always coincide with the globally optimal path, but they are generally better than a random path. Considering these characteristics, we use the greedy idea to obtain locally optimal initial particles. Then, based on the greedy solutions, the association strategy imitates the right-angled triangle swap method and swaps the corresponding nodes of the right-angled side and the hypotenuse, as shown in

Figure 7. The operations are described as follows:

Step 1: Randomly generate N (the population size of the swarm) sets of paths.

Step 2: Generate one path using the greedy algorithm. We define the START node as the initial node and plan the path using the greedy algorithm. For example, the first set in Figure 9a is a path planned by the greedy algorithm.

Step 3: Generate more sets of paths using the association strategy. First, we generate a constant number of copies (fewer than the population size) of the path generated in Step 2 and number them consecutively. Then, following the association strategy, we imitate the right-angled triangle and swap the ith node of the ith path with its first node, as shown in Figure 9b, where the number of copies is 16.

Step 4: Generate new paths using the analysis strategy. We generate several (for example, 10) copies of the path from Step 2. For each copy, we randomly select two nodes and swap them to generate a new path.
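Steps 2–4 can be sketched as three small helpers. This is an illustrative reading of the steps rather than the authors' code: the function names, the tie-breaking rule in the greedy search, and the 4-node matrix `DIST` are assumptions.

```python
import random


def greedy_path(dist, start=0):
    """Nearest-neighbour path that begins at `start` (Step 2)."""
    path, unvisited = [start], set(range(len(dist))) - {start}
    while unvisited:
        last = path[-1]
        # sorted() makes the choice deterministic when distances tie
        nxt = min(sorted(unvisited), key=lambda j: dist[last][j])
        path.append(nxt)
        unvisited.remove(nxt)
    return path


def association_variants(path, count):
    """Copy i has its ith node swapped with its first node (Step 3).

    `count` must not exceed len(path); the first copy equals the greedy path.
    """
    variants = []
    for i in range(count):
        p = list(path)
        p[0], p[i] = p[i], p[0]
        variants.append(p)
    return variants


def analysis_variants(path, count, rng=random):
    """Each copy has two randomly chosen, distinct positions swapped (Step 4)."""
    variants = []
    for _ in range(count):
        p = list(path)
        a, b = rng.sample(range(len(p)), 2)
        p[a], p[b] = p[b], p[a]
        variants.append(p)
    return variants


# Hypothetical symmetric distance matrix (illustration only).
DIST = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]
base = greedy_path(DIST)
print(base)
print(association_variants(base, 3))
```

Seeding part of the swarm with small perturbations of one greedy path keeps the initial particles close to a reasonable tour while still differing from each other.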

4.3.4. Comparison Strategy and Forgetting–Reinforcement Strategy for Swarm Optimization

In this subsection, we introduce the comparison and forgetting–reinforcement strategies into the PSO algorithm to avoid local optima and to maintain the diversity of the swarm. First, we use the comparison strategy to obtain new particles by comparing the distance between the same node of two particles and its adjacent nodes. If the fitness of the new particle is smaller, we update the particle. Second, we optimize the partial nodes of each particle with the individual best solution and the global best solution, in turn. If the fitness of the new particle is smaller, we update the particle. Finally, the forgetting–reinforcement strategy is used to maintain the diversity of the swarm; the operations are as follows:

Process 1. Optimize the particles by considering the distance from the same node of two particles to their adjacent nodes.

Step 1: Generate a group of particles for comparison. First, we generate n random variables between 0 and 1 and compare each of them with the comparison probability. If the ith number is less than the comparison probability, we select the ith particle for comparison; this yields the selected particle group.

Step 2: Generate a partial optimization particle using the comparison strategy. We select two particles from the selected group and randomly generate an integer node to serve as the first position of the new particle. Next, we find the index of this node in each of the two selected particles and compare the distance between the node and its right neighbor in each particle. We insert the right neighbor with the shorter distance into the next position to the right in the new particle.

Step 3: Generate one new particle. We take the newly added node as the node to be compared and repeat the operations of Step 2 with its right neighbors in the two selected particles. We handle all the remaining positions in this way until all the nodes have been added to the new particle.

Step 4: Generate more new particles. We take the same starting node as the first position of another new particle and repeat Steps 2–3, this time comparing the distance from the current node to its left neighbor in the two selected particles. We add the left neighbor with the shorter distance to the left of the new particle until another new particle is obtained.

Step 5: Deal with all the particles. We process all the particles in the selected group as described in Steps 2–4 until all the particles have been compared or only one particle is yet to be compared.

Step 6: Update the individual best fitness and global best fitness.

Process 2. Optimize the partial nodes of each particle with a comparison strategy.

Step 1: Select a segment for comparison. We randomly generate two different integers, Position1 and Position2 (Position1 < Position2), which are no greater than the dimension of the particle (e.g., 16), and use them as indices into the path. We select the positions from index Position1 to index Position2 as pathc. For example, if Position1 = 6 and Position2 = 8 for the historical best path, then pathc consists of the nodes at positions 6 through 8 of that path.

Step 2: Delete the comparison segment. We delete the positions in the historical best path that are the same as the positions in the comparison segment (pathc) and form a path that is made up of the remaining positions.

Step 3: Add the comparison segment. We add pathc to the end of the path generated in Step 2 and check whether the fitness of the new solution is smaller. If it is, we update the position and fitness of the new particle.

Step 4: Deal with the global best path. We carry out the same operations on the global best path as those conducted for the historical best path.

Step 5: Deal with the particles using the analysis strategy. First, we randomly generate two unequal indexes: Position3 and Position4 (Position3 < Position4). Then, we swap the node of index Position3 with the node of index Position4. Finally, we judge whether the fitness of the solution is reduced. If it is indeed reduced, we update the position and fitness of the new particle.
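A minimal sketch of the segment operation in Process 2 is given below. It follows the description of partial optimization in Section 4.3.1, where the segment is taken from one path (here called `guide`, for example the historical best path) and appended to another; that reading, the function names, and the matrix `DIST` are assumptions for illustration.

```python
def tour_length(dist, order):
    """Closed-tour length, used here as the fitness to be minimized."""
    n = len(order)
    return sum(dist[order[i]][order[(i + 1) % n]] for i in range(n))


def segment_to_end(particle, guide, pos1, pos2):
    """Move the nodes found at 1-based positions pos1..pos2 of `guide` to the end.

    The segment is read from `guide`, its nodes are deleted from `particle`,
    and the segment is appended to what remains.
    """
    segment = list(guide[pos1 - 1:pos2])
    in_segment = set(segment)
    rest = [node for node in particle if node not in in_segment]
    return rest + segment


def accept_if_shorter(dist, current, candidate):
    """Keep the candidate only when its fitness is strictly smaller."""
    if tour_length(dist, candidate) < tour_length(dist, current):
        return candidate
    return current


# Hypothetical symmetric distance matrix (illustration only).
DIST = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]
current = [0, 2, 1, 3]
candidate = segment_to_end(current, guide=[0, 1, 3, 2], pos1=3, pos2=4)
print(candidate, accept_if_shorter(DIST, current, candidate))
```

The same acceptance rule applies to the two-node swap in Step 5: a modified path replaces the particle only when the path length decreases.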

Process 3. Forgetting–reinforcement strategy for maintaining the diversity of the swarm.

As shown in Figure 8, the forgetting–reinforcement strategy proceeds as follows. First, we generate a random variable and determine whether it is smaller than the forgetting probability. If it is, we select one particle (the ith) from swarm S, randomly generate a new particle, and calculate its fitness. If the fitness of the new particle is lower than that of the selected particle, we replace the position and fitness of the ith particle with those of the randomly generated particle. If the random variable is no less than the forgetting probability, we generate another random variable and determine whether it is smaller than the reinforcement probability. If it is, we change the sequence of the swarm using the swap sequence strategy and obtain a new swarm SS. We then compare the ith particle’s fitness in swarm SS with the ith particle’s fitness in swarm S; if it is smaller, we update the particle.
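The branching logic of Process 3 can be sketched for one particle as follows. The two probabilities are passed in as plain numbers because their formulas are not reproduced here; the function name and the callables `random_particle` and `swap_update` are hypothetical placeholders for the random generator and the swap sequence update.

```python
import random


def forgetting_reinforcement(particle, fitness, p_forget, p_reinforce,
                             random_particle, swap_update, rng=random):
    """Return the particle to keep after one forgetting-reinforcement decision."""
    if rng.random() < p_forget:
        # Forgetting: try a freshly generated random particle.
        candidate = random_particle()
    elif rng.random() < p_reinforce:
        # Reinforcement: try the particle produced by the swap sequence update.
        candidate = swap_update(particle)
    else:
        return particle
    # In both branches the candidate replaces the particle only if it is better.
    return candidate if fitness(candidate) < fitness(particle) else particle


# Toy usage: fitness is the sum of the list, lower is better.
result = forgetting_reinforcement(
    [3, 3], sum, p_forget=1.0, p_reinforce=0.0,
    random_particle=lambda: [1, 1], swap_update=lambda p: p,
)
print(result)
```

Because a candidate is accepted only when its fitness improves, the strategy can inject new material into the swarm without ever making an individual particle worse.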

4.3.5. Divergent Thinking Strategy to Avoid a Local Optimum

Divergent thinking manifests as a broad, multidimensional way of thinking. Because of its strong randomness, it can explore more possibilities under minimal constraints; the operations are as follows:

Step 1: Initialize the parameters. We assign a constant between 0 and 1 to the heuristic probability; then, we randomly generate a number between 0 and 1.

Step 2: Generate a new solution vector for the LM-SSPSO algorithm. We check whether the random number is less than the heuristic probability. If it is, we select a solution from the swarm as the divergent thinking solution; otherwise, we randomly generate a solution and use it as the divergent thinking solution.

Step 3: Optimize the worst solution in the swarm with the divergent thinking solution by considering the comparison and analysis strategies. We apply the same comparison and analysis operations as in Process 2 of the subsection “Comparison Strategy and Forgetting–Reinforcement Strategy for Swarm Optimization” to the divergent thinking solution together with the worst solution in the swarm.

4.3.6. Retention Strategy for Swarm Optimization

To speed up the convergence of the algorithm, the retention strategy is used to reserve excellent individuals for the next iteration; the operations are as follows:

Step 1: Reserve the particles with the best fitness. We sort all the particles by fitness from minimum to maximum and reserve a fixed number of top particles (fewer than the population size) for the following operation.

Step 2: Generate a new swarm. We use roulette wheel selection to select particles from the initial swarm and form a new particle swarm.

Step 3: Compare and reserve the better particles. We compare the fitness of the top particles of the swarm generated in Step 2 with that of the top particles from Step 1. For each pair, we reserve the particle with the smaller fitness as the corresponding new particle.

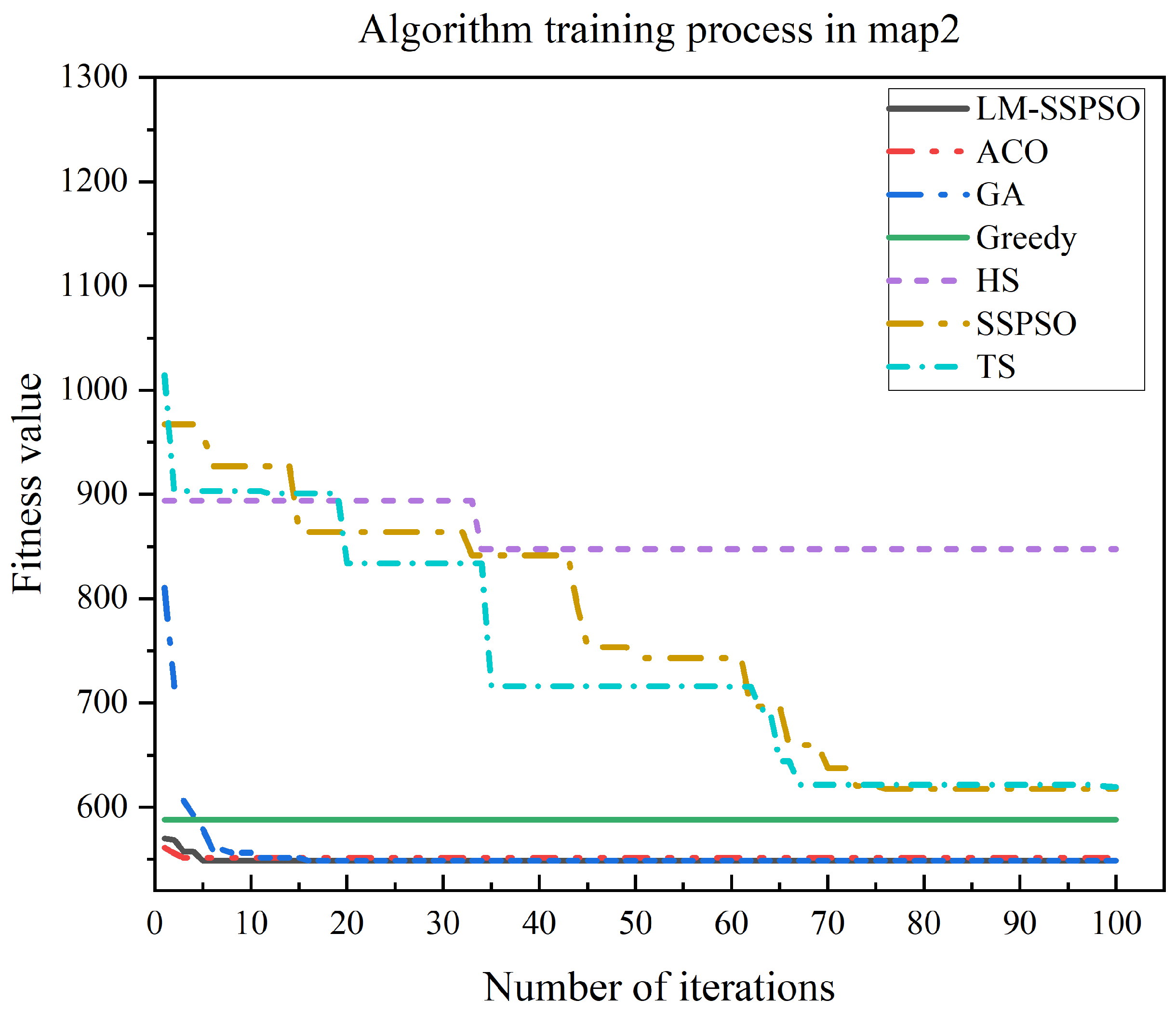

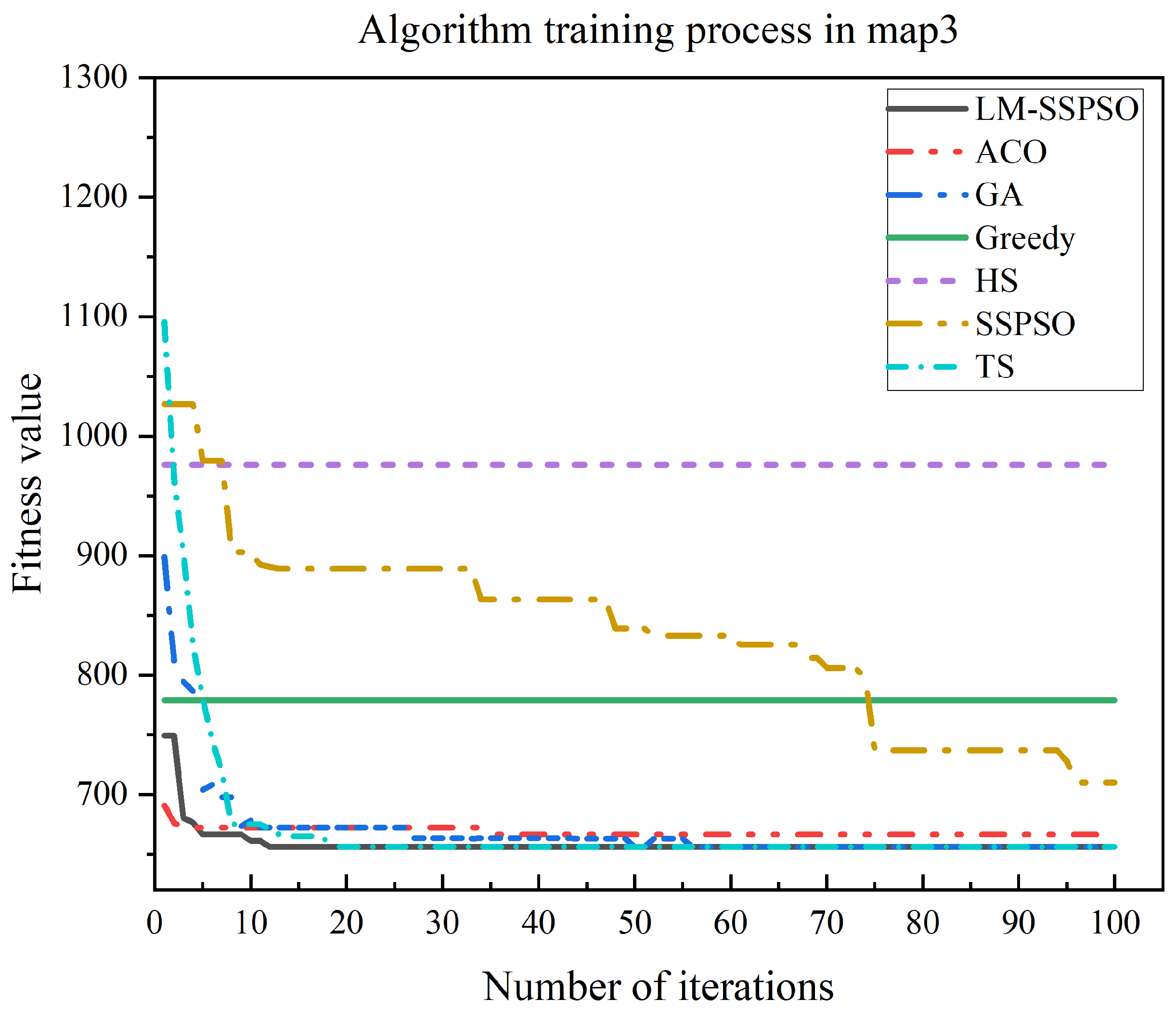

4.4. Performance Analysis of LM-SSPSO with Other Approaches for Multiple Goal Positions Path Planning

To evaluate the performance of the proposed LM-SSPSO algorithm, we compared it with several other algorithms. The resolution of the environment map used to compare the algorithms was

, and the distances between any two positions are detailed in

Table 5. To verify the performance, each of the following algorithms was run 50 times. The parameter settings are shown in

Table 9:

represents the population size of the swarm,

represents the maximum number of iterations,

represents the number of particles selected from the swarm and is used for the analysis strategy,

represents the comparison probability,

represents the heuristic probability,

represents the number of particles that are reserved, and HMCR represents the harmony memory considering rate.

represents the crossover probability;

represents the mutation probability; alpha and beta represent the relative importance of the pheromone information and heuristic information, respectively; rho represents the pheromone evaporation coefficient; Q represents the pheromone increase intensity coefficient; and TabuLength represents the Tabu length. We compared the minimum value, number of optimal solutions, maximum value, and average value over the 50 runs to verify the effectiveness of LM-SSPSO. The results are shown in

Table 10:

The minimum value is the smallest path length obtained in the 50 runs. The number of minimum values is the number of runs in which an algorithm reached the smallest value found by any of the intelligent algorithms. Each run (100 iterations, as shown in Table 9) yields one optimal solution, and the maximum value is the largest of these 50 optimal solutions. The average value is the mean over the 50 runs. The optimal path is

. As shown in

Table 10, the shortest total distance is 560.87; LM-SSPSO, GA, SSPSO, ACO, and TS can all find this shortest path for the multiple goal positions within the 50 runs. Compared with the other algorithms, LM-SSPSO shows better performance over the 50 runs, and its maximum value is also the smallest. The path planning results of the proposed algorithm and the training process are shown in

Figure 10.
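The four statistics reported for each algorithm can be computed from the list of best path lengths of its runs, as in the sketch below. The function name `summarize_runs`, the tolerance, and the sample values are illustrative assumptions; only the reference value 560.87 is taken from the text.

```python
def summarize_runs(best_lengths, reference_min, tol=1e-6):
    """Summary statistics over the best path length of each independent run."""
    return {
        "min": min(best_lengths),
        # Number of runs that reached the reference (overall shortest) length.
        "count_min": sum(abs(v - reference_min) <= tol for v in best_lengths),
        "max": max(best_lengths),
        "avg": sum(best_lengths) / len(best_lengths),
    }


# Hypothetical results of three runs (the paper uses 50 runs per algorithm).
print(summarize_runs([560.87, 560.87, 570.0], reference_min=560.87))
```

An algorithm whose minimum, maximum, and average all equal the reference value found the shortest path in every run.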

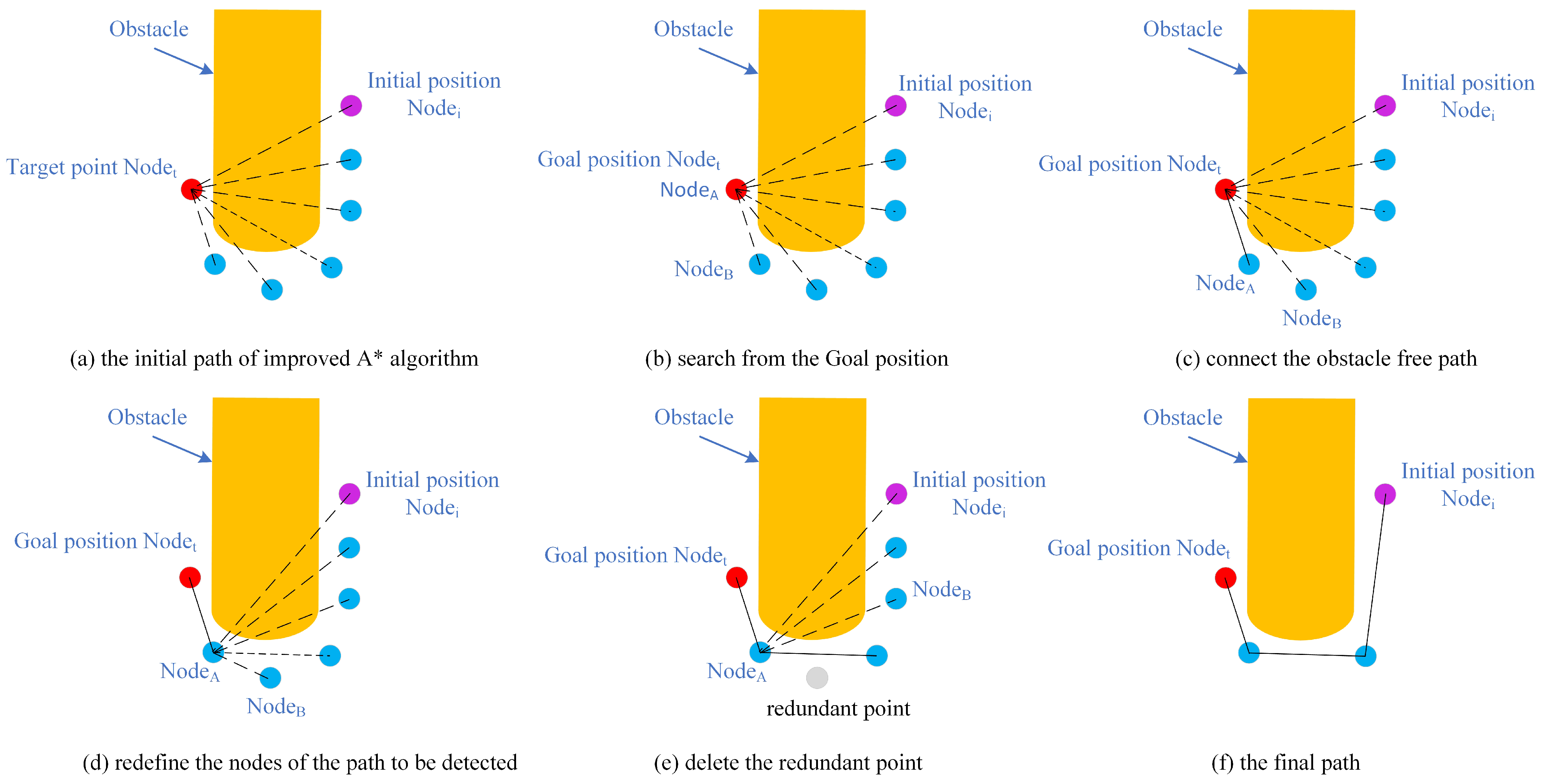

4.5. Postprocess of the Optimized Path

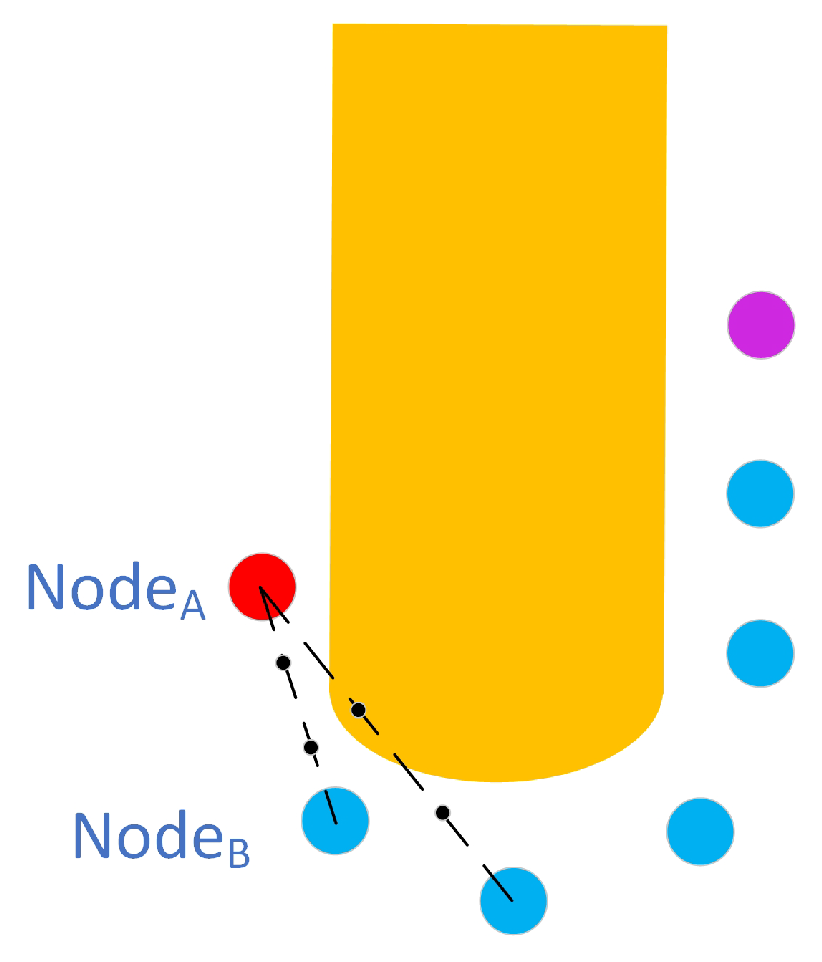

When planning the path, the search area is limited to the local adjacent nodes, so the optimal path may not be obtained. Moreover, although the bidirectional search with variable goal positions reduces the calculation time, the final path may contain new redundant positions, which can reduce the efficiency of the robot’s movement. To solve this problem, we optimize the path by introducing a postprocessing stage. In

Figure 11, the yellow area represents the obstacle, the purple dot

represents the initial node, the red dot

is the goal node, and the blue dots are the waypoints. Because the number of waypoints is significantly higher than usual, as shown in

Figure 4, the proposed judgment formulas are unsuitable for the complicated postprocess, and we use sample points to complete the obstacle avoidance task. The postprocessing is described as follows:

Step 1: Initialization. Initialize the obstacle, initial position, waypoint, and goal position.

Step 2: Delete the redundant points and optimize the path. As shown in Figure 11b, we take the GOAL node as the anchor point and its adjacent waypoint as the candidate point, and connect the two with a dotted line. We divide the dotted line into a fixed number of equal parts (for example, 3; Figure 12) and check whether any of the division points (black points) lies in the obstacle area. If none does, we select the next waypoint as the candidate point, connect it with the anchor point to form a new dotted line, and obtain the new division points. Next, we check whether the new division points lie in the obstacle; if so, as shown in Figure 11d, we connect the anchor point with the point preceding the candidate point using a solid line and delete the redundant points between them, as shown in Figure 11e.

Step 3: Optimize the remaining path and obtain the final optimized path. We take the point preceding the candidate point in Step 2 as the new anchor point. We repeat the analysis of Step 2 on the dotted lines drawn from this anchor point and delete the redundant points until the initial point has been reached. The final optimized path is shown in Figure 11f.

The postprocess results are presented in

Figure 13.
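The postprocessing steps can be sketched as a shortcut search that starts at the goal and tests sampled points on each candidate segment. This is an interpretation of the steps under stated assumptions: the function names, the rectangular obstacle, and the example path are hypothetical, and `in_obstacle` stands in for whatever obstacle test the map provides.

```python
def segment_is_free(a, b, in_obstacle, parts=3):
    """Check the interior division points of segment a-b against the obstacle test."""
    for k in range(1, parts):
        t = k / parts
        point = (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        if in_obstacle(point):
            return False
    return True


def prune_path(path, in_obstacle, parts=3):
    """Remove redundant waypoints, working from the goal back to the start."""
    pts = list(reversed(path))  # goal first
    kept, anchor = [pts[0]], 0
    while anchor < len(pts) - 1:
        nxt = anchor + 1  # the adjacent waypoint is always reachable
        # Extend the shortcut until the next candidate segment is blocked.
        while nxt + 1 < len(pts) and segment_is_free(pts[anchor], pts[nxt + 1], in_obstacle, parts):
            nxt += 1
        kept.append(pts[nxt])
        anchor = nxt
    return list(reversed(kept))


def in_box(p):
    """Hypothetical rectangular obstacle covering 1 <= x <= 3 and 0 <= y <= 3."""
    return 1 <= p[0] <= 3 and 0 <= p[1] <= 3


path = [(0, 0), (0, 2), (0, 4), (2, 4), (4, 4)]
print(prune_path(path, in_box))
```

With only a few sample points per segment, a thin obstacle can slip between samples, so the number of parts would need to be chosen relative to the map resolution.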

6. Conclusions

In this paper, we introduced a novel approach to multiple-goal-position path planning. Two problems were solved: the first involved finding the shortest distance between any two positions, and the second involved finding the shortest path that traverses all the goal positions. To solve the first problem, we introduced a bidirectional strategy and a dynamic OpenList cost weight strategy into the A* algorithm. As the resolution of the first environment map changed in two steps, the time multiples obtained were 1.90 and 3.16, respectively, which are significantly less than those of the traditional A* algorithm. When different weight coefficients were compared, the proposed algorithm required the shortest time, 50.27 s. Based on the proposed time optimization strategy, we also extended the search from 8 to 16 adjacent nodes and introduced a new obstacle judgment formula to analyze the obstacles. Compared with the distance matrix for 8 adjacent nodes, 115 path lengths with 16 adjacent nodes were shorter, and the others were equal. Compared with the GORRT algorithm, 119 path lengths with 16 adjacent nodes were shorter, and 1 was longer. To tackle the second problem, we developed the LM-SSPSO strategy to purposefully optimize all the processes of PSO. First, the initial solutions are optimized using the greedy algorithm, association strategy, and analysis strategy to obtain locally optimal solutions. Then, the comparison, forgetting–reinforcement, and divergent thinking strategies are used to maintain diversity and accelerate particle convergence. The swap sequence strategy is also used in the forgetting–reinforcement process, and a forgetting probability function and a reinforcement probability function are used to avoid a local optimum. Finally, the retention idea is introduced to obtain a better swarm. Compared with six other intelligent algorithms, LM-SSPSO showed the best performance, with the shortest minimum, maximum, and average path lengths (all 560.87) in the 50 runs. It also obtained a higher success rate in finding the optimal solution. After the multiple-goal-positions path is obtained, the redundant points are removed in postprocessing. Additionally, comparative experiments were analyzed on three new environment maps. The simulation results further verified that the proposed algorithm performs efficiently across different environment maps.

In future work, we will improve our proposed BD-A* algorithm with novel criteria to reduce searching nodes and add a guide line to accelerate the search process and optimize the calculation time. We will optimize the swarm size of LM-SSPSO and analyze the influence of different strategies on the performance of LM-SSPSO, so that we can improve the effectiveness of the LM-SSPSO algorithm. Additionally, we will also focus our research on multiobjective function optimization, which includes torque, global energy reductions, shortest distance, etc. A dynamic environment will also be considered. We may apply our path planning method with the Pareto set strategy and create a real-time application of multiple-goal positions path planning in a dynamic environment.