A Dual-Population Genetic Algorithm with Q-Learning for Multi-Objective Distributed Hybrid Flow Shop Scheduling Problem

Abstract

1. Introduction

2. Logical Scheme for DHFSP

3. Description of Distributed Hybrid Flow Shop Scheduling Problem

4. Introduction to GA and Q-Learning

5. A Dual-Population Genetic Algorithm with Q-Learning

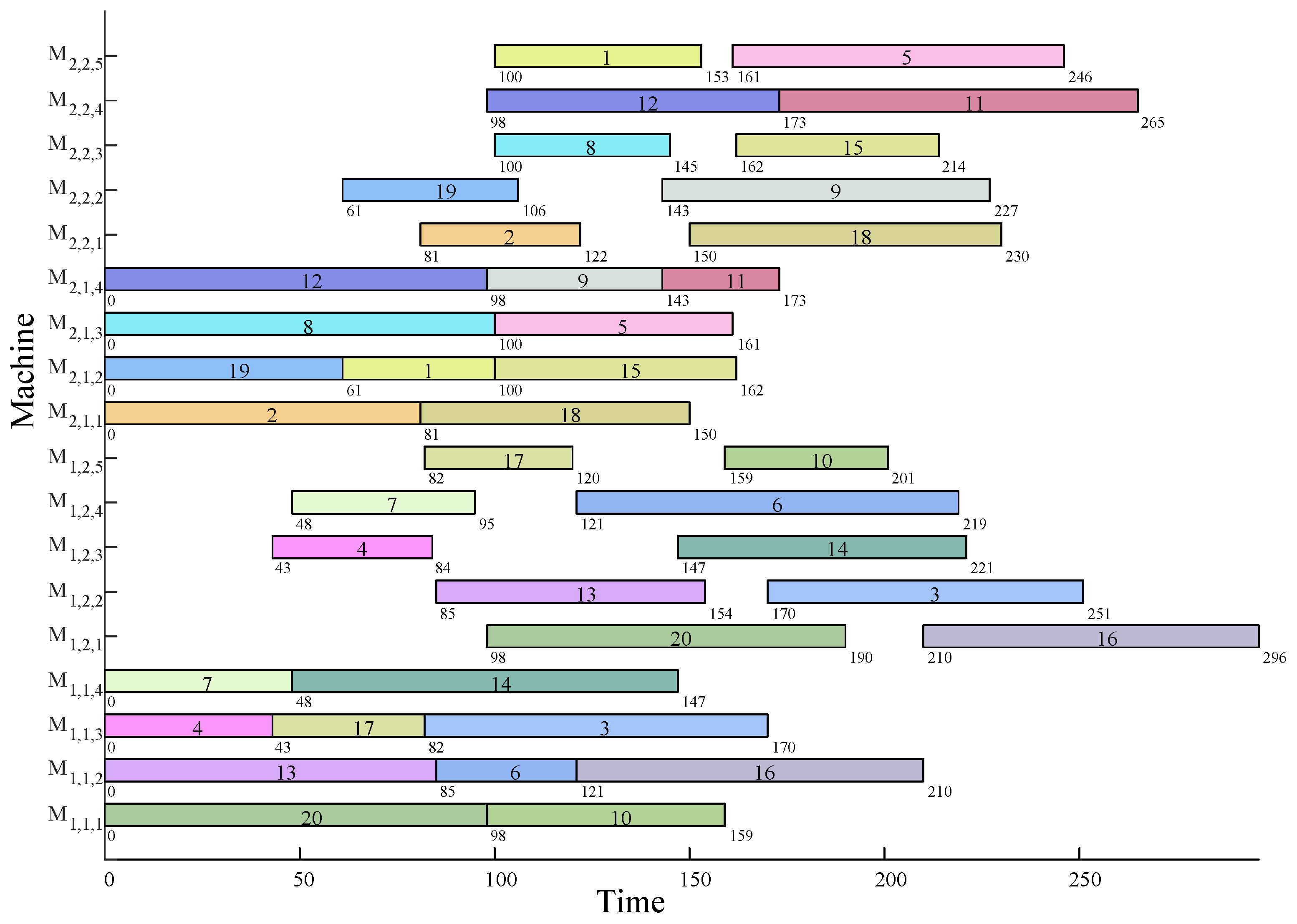

5.1. Coding and Decoding

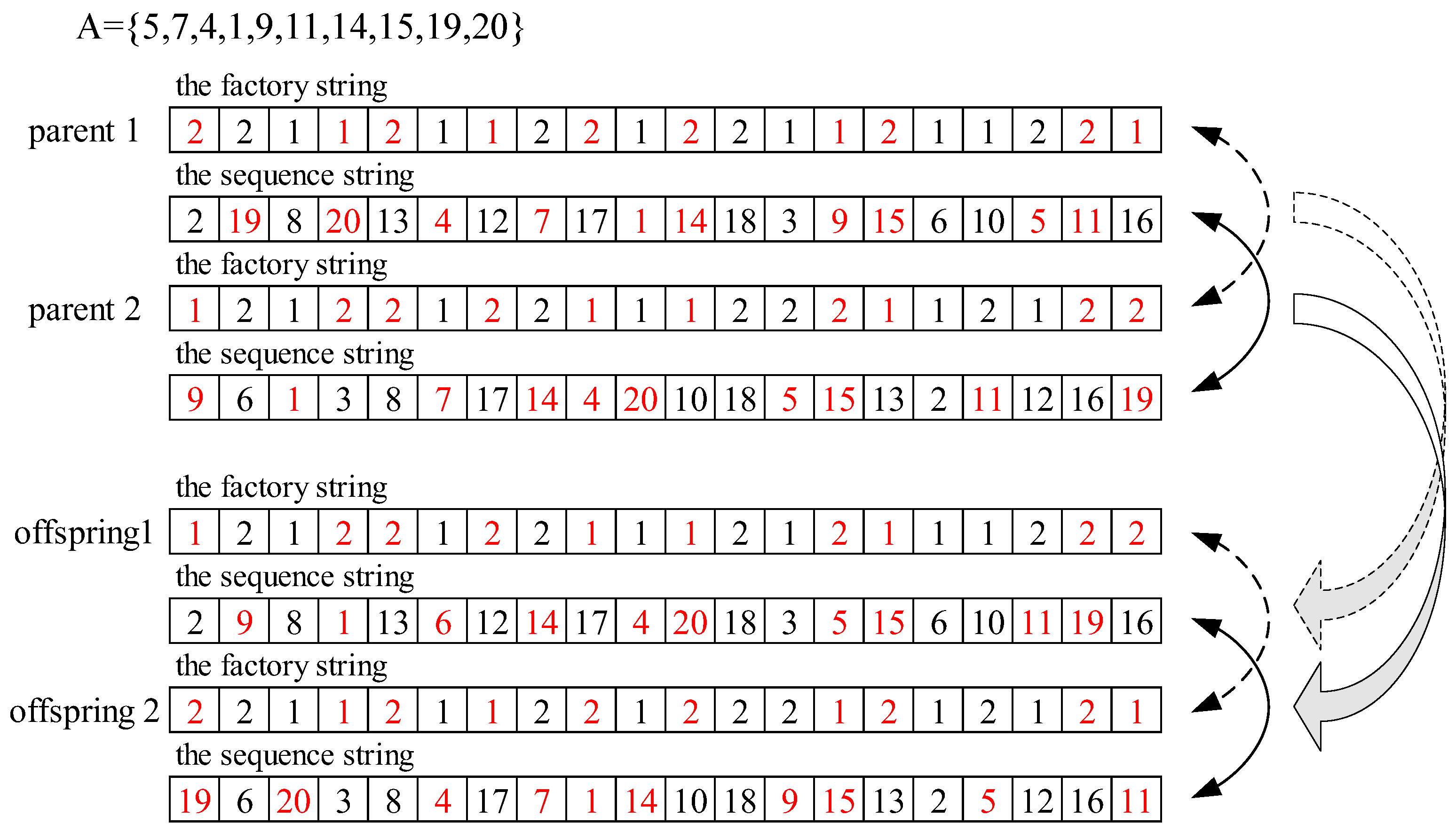

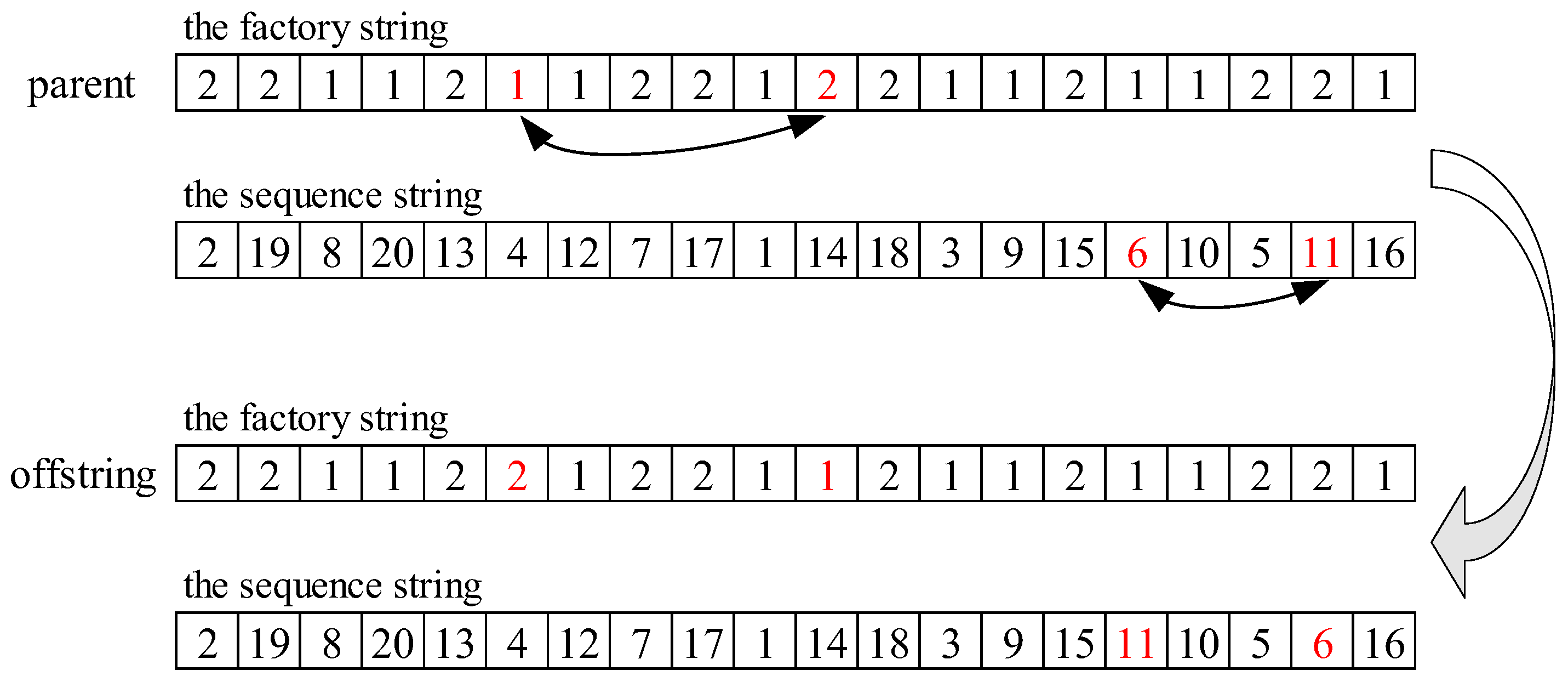

5.2. Crossover Operator

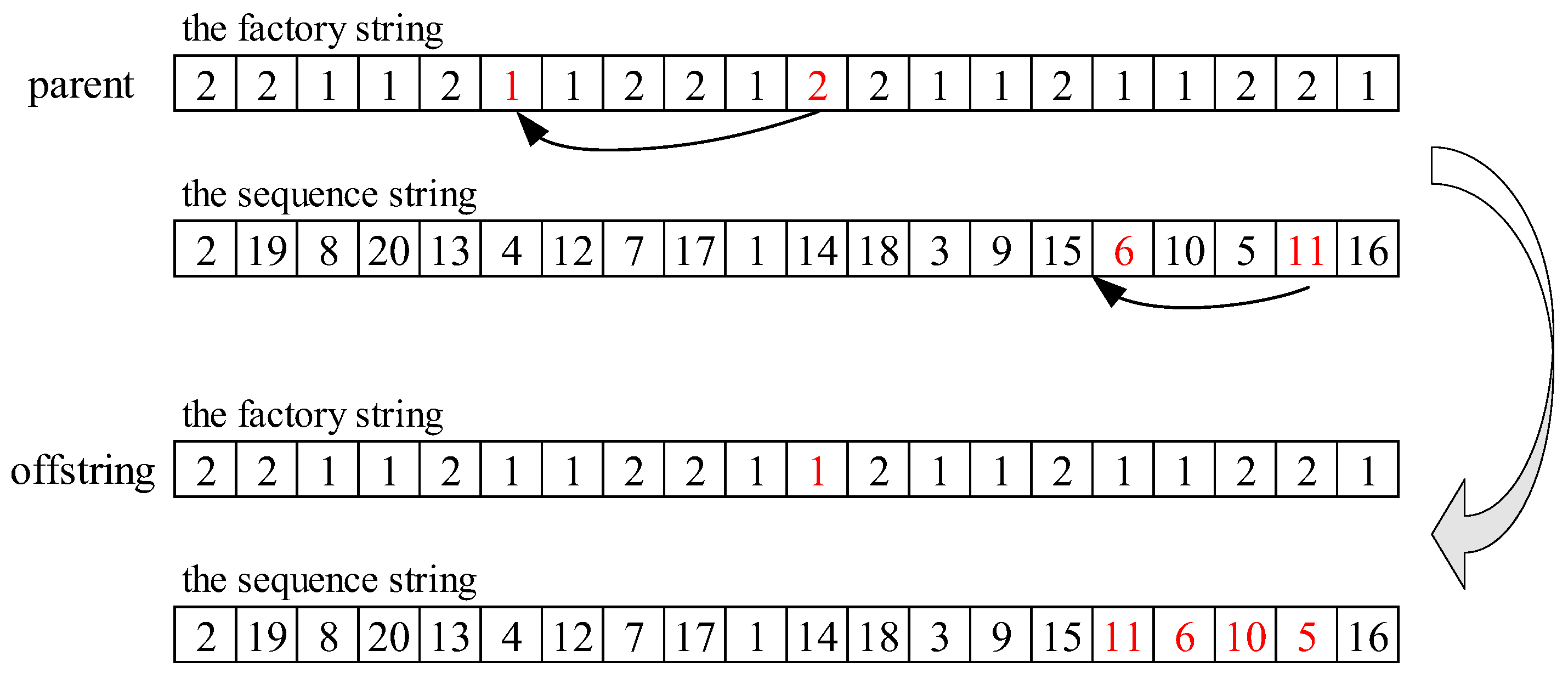

5.3. Mutation Operator

5.4. Q-Learning Process

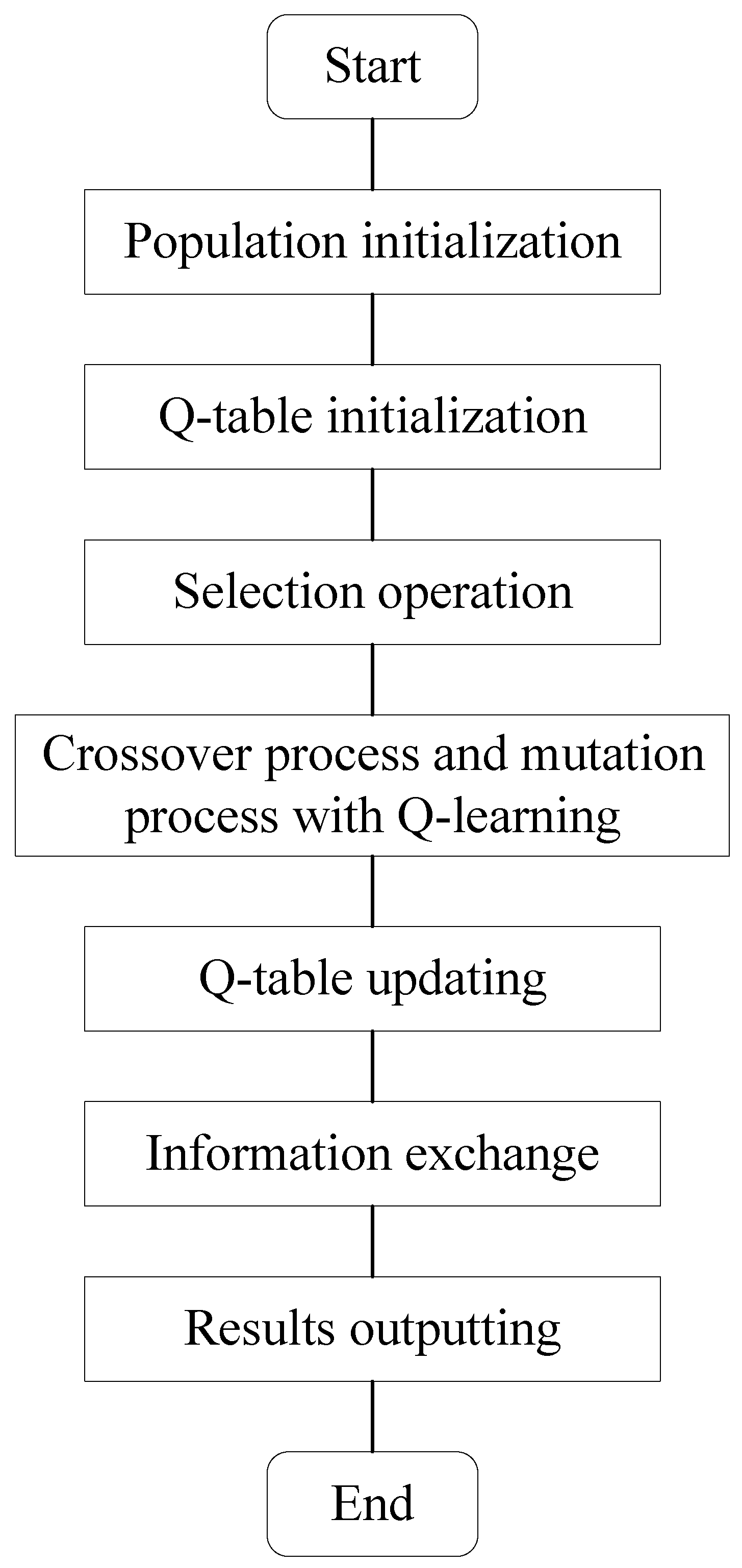

5.5. Dual-Population Genetic Algorithm with Q-Learning

6. Computational Experiments

6.1. Instances

6.2. Performance Metrics

6.3. Comparison Algorithms

6.4. Parameter Settings

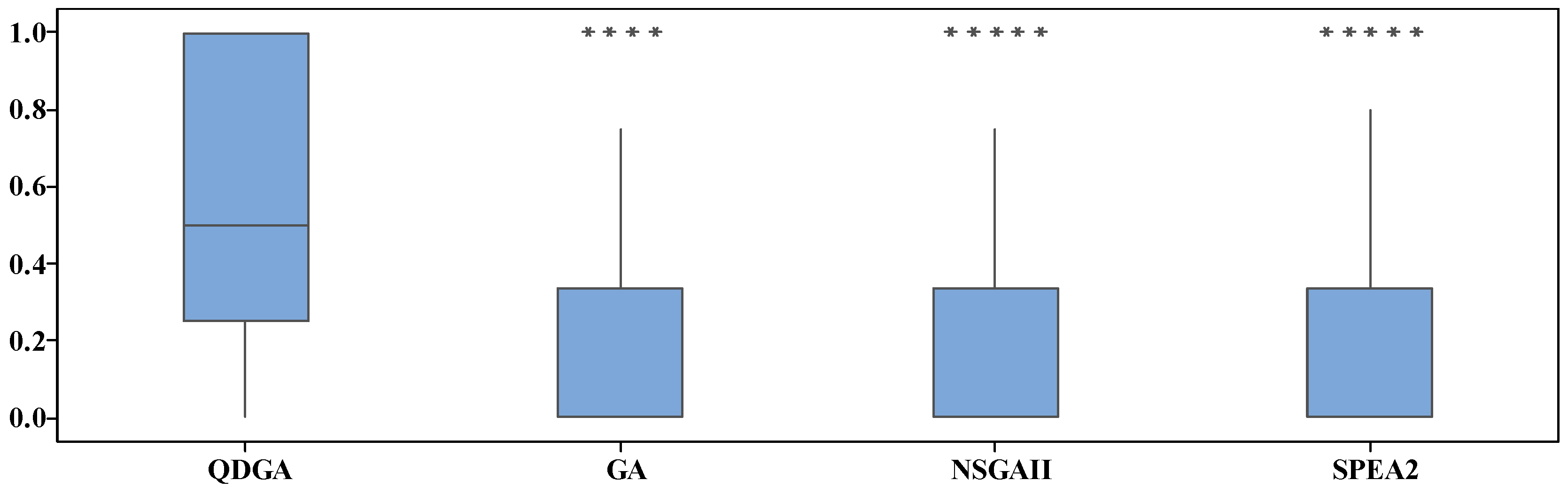

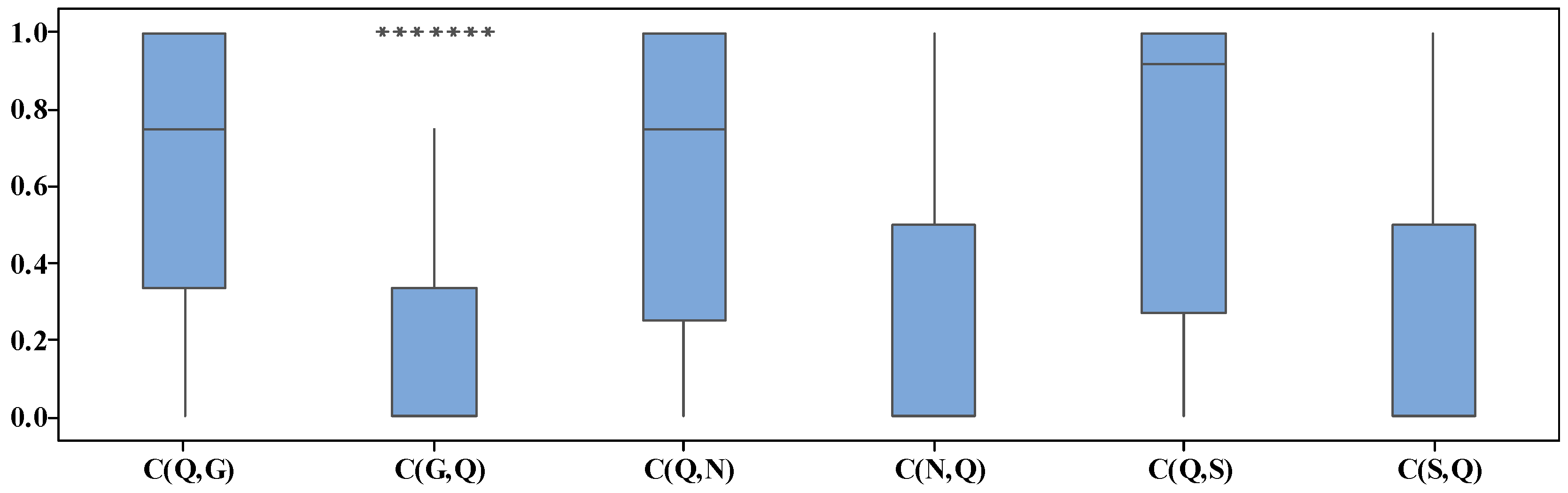

6.5. Results and Analyses

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Job/i | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 39 | 81 | 88 | 43 | 61 | 36 | 48 | 100 | 45 | 61 | 30 | 98 | 85 | 99 | 62 | 89 | 39 | 69 | 61 | 98 |
| | 53 | 41 | 81 | 41 | 85 | 98 | 47 | 45 | 84 | 42 | 92 | 75 | 69 | 74 | 52 | 86 | 38 | 80 | 45 | 92 |

| | Action 1 | Action 2 | Action 3 | Action 4 |
|---|---|---|---|---|
| State 1 | 0.029 | 0.783 | 0.069 | 0.684 |
| State 2 | 0.320 | 0.381 | 0.577 | 0.732 |
| State 3 | 0.548 | 0.486 | 0.006 | 0.316 |
| State 4 | 0.636 | 0.416 | 0.615 | 0.630 |
| State 5 | 0.451 | 0.005 | 0.735 | 0.469 |
| State 6 | 0.429 | 0.639 | 0.647 | 0.512 |
| State 7 | 0.742 | 0.209 | 0.386 | 0.442 |
| State 8 | 0.583 | 0.691 | 0.347 | 0.209 |
| State 9 | 0.674 | 0.181 | 0.164 | 0.346 |
| State 10 | 0.104 | 0.811 | 0.135 | 0.224 |

| Action | Search Method |
|---|---|
| 1 | + |
| 2 | + |
| 3 | + |
| 4 | + |
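The Q-table above has 10 states and 4 actions, and the action table maps each action to a search method. This page does not preserve how the paper defines its states, reward, learning rate, discount factor or exploration policy. The sketch below is therefore a generic one-step Q-learning routine, not the authors' procedure. The epsilon-greedy policy, `alpha = 0.1`, `gamma = 0.9`, and the function names `select_action` and `update` are hypothetical placeholders. The sketch loads the tabulated values, chooses an action greedily or at random, and applies the standard update Q(s, a) ← Q(s, a) + α[r + γ max Q(s', ·) - Q(s, a)].

```python
import random

# Q-values copied from the 10-state x 4-action table above
# (row i = State i+1, column j = Action j+1; indices are 0-based here).
Q = [
    [0.029, 0.783, 0.069, 0.684],
    [0.320, 0.381, 0.577, 0.732],
    [0.548, 0.486, 0.006, 0.316],
    [0.636, 0.416, 0.615, 0.630],
    [0.451, 0.005, 0.735, 0.469],
    [0.429, 0.639, 0.647, 0.512],
    [0.742, 0.209, 0.386, 0.442],
    [0.583, 0.691, 0.347, 0.209],
    [0.674, 0.181, 0.164, 0.346],
    [0.104, 0.811, 0.135, 0.224],
]


def select_action(q, state, epsilon, rng=random):
    """Epsilon-greedy selection (assumed policy, not taken from the paper).

    With probability epsilon a random action is explored; otherwise the
    action with the largest Q-value in the current state's row is exploited.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q[state]))
    row = q[state]
    return max(range(len(row)), key=row.__getitem__)


def update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Standard one-step Q-learning update; alpha and gamma are placeholders.

    Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))
    Mutates q in place and returns the new Q(s,a).
    """
    target = reward + gamma * max(q[next_state])
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]
```

With `epsilon = 0`, State 1 exploits Action 2 (index 1, Q = 0.783), the largest entry in its row. After the hypothetical reward `1.0` and a move to State 10, that entry rises to about 0.8777. In a GA, the selected action would decide which search method from the action table is applied in the current generation.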

| Instance | QDGA | GA | NSGAII | SPEA2 | Instance | QDGA | GA | NSGAII | SPEA2 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.833 | 0.000 | 0.167 | 0.000 | 43 | 0.800 | 0.000 | 0.200 | 0.000 |
| 2 | 0.667 | 0.000 | 0.333 | 0.000 | 44 | 0.400 | 0.000 | 0.200 | 0.400 |
| 3 | 0.500 | 0.000 | 0.500 | 0.500 | 45 | 1.000 | 0.000 | 0.000 | 0.000 |
| 4 | 0.000 | 0.500 | 0.500 | 0.000 | 46 | 1.000 | 0.000 | 0.000 | 0.000 |
| 5 | 0.667 | 0.667 | 0.333 | 0.000 | 47 | 1.000 | 0.000 | 0.000 | 0.000 |
| 6 | 0.400 | 0.400 | 0.200 | 0.000 | 48 | 0.333 | 0.667 | 0.000 | 0.000 |
| 7 | 1.000 | 1.000 | 1.000 | 1.000 | 49 | 0.333 | 0.000 | 0.333 | 0.667 |
| 8 | 0.667 | 0.333 | 0.000 | 0.000 | 50 | 0.333 | 0.000 | 0.000 | 0.667 |
| 9 | 1.000 | 1.000 | 1.000 | 1.000 | 51 | 0.667 | 0.000 | 0.333 | 0.000 |
| 10 | 1.000 | 0.000 | 0.000 | 0.000 | 52 | 0.250 | 0.000 | 0.750 | 0.000 |
| 11 | 1.000 | 0.000 | 0.000 | 0.000 | 53 | 0.500 | 0.000 | 0.500 | 0.000 |
| 12 | 0.800 | 0.200 | 0.200 | 0.000 | 54 | 1.000 | 0.000 | 0.000 | 0.000 |
| 13 | 1.000 | 0.000 | 0.000 | 0.000 | 55 | 0.000 | 0.000 | 1.000 | 0.000 |
| 14 | 1.000 | 0.000 | 0.000 | 0.000 | 56 | 0.500 | 0.000 | 0.000 | 0.500 |
| 15 | 0.500 | 0.000 | 0.000 | 0.500 | 57 | 0.667 | 0.333 | 0.000 | 0.000 |
| 16 | 0.500 | 0.250 | 0.250 | 0.000 | 58 | 1.000 | 0.000 | 0.000 | 0.000 |
| 17 | 0.667 | 0.333 | 0.000 | 0.000 | 59 | 0.667 | 0.000 | 0.000 | 0.333 |
| 18 | 0.000 | 0.333 | 0.333 | 0.333 | 60 | 1.000 | 0.000 | 0.000 | 0.000 |
| 19 | 0.000 | 0.000 | 1.000 | 0.000 | 61 | 0.000 | 0.000 | 0.000 | 1.000 |
| 20 | 0.000 | 0.333 | 0.000 | 0.667 | 62 | 0.000 | 0.000 | 0.500 | 0.500 |
| 21 | 0.167 | 0.333 | 0.500 | 0.000 | 63 | 0.250 | 0.250 | 0.000 | 0.500 |
| 22 | 0.429 | 0.429 | 0.000 | 0.143 | 64 | 0.000 | 0.000 | 0.000 | 1.000 |
| 23 | 0.250 | 0.750 | 0.000 | 0.250 | 65 | 0.333 | 0.333 | 0.333 | 0.000 |
| 24 | 1.000 | 0.000 | 0.000 | 0.000 | 66 | 0.000 | 0.000 | 1.000 | 0.000 |
| 25 | 0.500 | 0.500 | 0.000 | 0.000 | 67 | 0.000 | 1.000 | 0.000 | 0.000 |
| 26 | 1.000 | 0.000 | 0.000 | 0.000 | 68 | 0.000 | 0.000 | 0.500 | 0.500 |
| 27 | 0.500 | 0.000 | 0.000 | 0.500 | 69 | 0.000 | 0.000 | 0.200 | 0.800 |
| 28 | 0.000 | 0.000 | 0.000 | 1.000 | 70 | 0.000 | 0.250 | 0.750 | 0.000 |
| 29 | 1.000 | 0.000 | 0.000 | 0.000 | 71 | 0.667 | 0.000 | 0.000 | 0.667 |
| 30 | 0.500 | 0.500 | 0.000 | 0.000 | 72 | 0.667 | 0.000 | 0.000 | 0.333 |
| 31 | 0.333 | 0.333 | 0.333 | 0.000 | 73 | 0.667 | 0.000 | 0.000 | 0.333 |
| 32 | 1.000 | 0.000 | 0.000 | 0.000 | 74 | 0.500 | 0.500 | 0.000 | 0.000 |
| 33 | 0.000 | 0.333 | 0.333 | 0.333 | 75 | 0.667 | 0.000 | 0.000 | 0.333 |
| 34 | 1.000 | 0.000 | 0.000 | 0.000 | 76 | 0.333 | 0.000 | 0.333 | 0.333 |
| 35 | 0.667 | 0.000 | 0.333 | 0.000 | 77 | 0.500 | 0.000 | 0.000 | 0.500 |
| 36 | 0.000 | 0.333 | 0.667 | 0.000 | 78 | 0.000 | 0.333 | 0.000 | 0.667 |
| 37 | 0.500 | 0.500 | 0.000 | 0.000 | 79 | 1.000 | 0.000 | 0.000 | 0.000 |
| 38 | 0.000 | 1.000 | 0.000 | 0.000 | 80 | 0.333 | 0.167 | 0.500 | 0.000 |
| 39 | 1.000 | 0.000 | 0.000 | 0.000 | 81 | 0.000 | 0.500 | 0.500 | 0.000 |
| 40 | 1.000 | 0.000 | 0.000 | 0.000 | 82 | 1.000 | 0.000 | 0.000 | 0.000 |
| 41 | 0.500 | 0.000 | 0.500 | 0.000 | 83 | 0.667 | 0.333 | 0.000 | 0.000 |
| 42 | 0.500 | 0.500 | 0.250 | 0.000 | 84 | 1.000 | 0.000 | 0.000 | 0.000 |

| Instance | | | | | | | Instance | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1.000 | 0.000 | 0.857 | 0.000 | 0.833 | 0.000 | 43 | 1.000 | 0.000 | 0.667 | 0.000 | 1.000 | 0.000 |
| 2 | 1.000 | 0.000 | 0.750 | 0.333 | 1.000 | 0.000 | 44 | 1.000 | 0.000 | 0.750 | 0.000 | 0.500 | 0.000 |
| 3 | 1.000 | 0.000 | 0.333 | 0.333 | 0.333 | 0.333 | 45 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |
| 4 | 0.667 | 0.000 | 0.000 | 1.000 | 1.000 | 0.000 | 46 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |
| 5 | 0.333 | 0.333 | 0.667 | 0.000 | 1.000 | 0.000 | 47 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |
| 6 | 0.600 | 0.500 | 0.667 | 0.500 | 0.833 | 0.000 | 48 | 0.333 | 0.750 | 0.250 | 0.500 | 1.000 | 0.000 |
| 7 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 49 | 1.000 | 0.000 | 0.667 | 0.000 | 0.000 | 0.500 |
| 8 | 0.500 | 0.333 | 1.000 | 0.000 | 1.000 | 0.000 | 50 | 0.000 | 0.600 | 1.000 | 0.000 | 0.000 | 0.800 |
| 9 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 51 | 1.000 | 0.000 | 0.500 | 0.333 | 1.000 | 0.000 |
| 10 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 52 | 0.500 | 0.333 | 0.000 | 0.667 | 1.000 | 0.000 |
| 11 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 53 | 1.000 | 0.000 | 0.667 | 0.500 | 0.500 | 0.500 |
| 12 | 0.750 | 0.000 | 0.750 | 0.000 | 1.000 | 0.000 | 54 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |
| 13 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 55 | 0.000 | 0.333 | 0.000 | 1.000 | 0.000 | 1.000 |
| 14 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 56 | 1.000 | 0.000 | 1.000 | 0.000 | 0.000 | 0.500 |
| 15 | 1.000 | 0.000 | 0.750 | 0.200 | 0.000 | 0.600 | 57 | 0.500 | 0.333 | 1.000 | 0.000 | 1.000 | 0.000 |
| 16 | 0.500 | 0.333 | 0.667 | 0.000 | 1.000 | 0.000 | 58 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |
| 17 | 0.667 | 0.333 | 0.667 | 0.333 | 1.000 | 0.000 | 59 | 1.000 | 0.000 | 1.000 | 0.000 | 0.750 | 0.333 |
| 18 | 0.500 | 0.500 | 0.667 | 0.000 | 0.500 | 0.500 | 60 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |
| 19 | 1.000 | 0.000 | 0.000 | 1.000 | 1.000 | 0.000 | 61 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 |
| 20 | 0.667 | 0.333 | 1.000 | 0.000 | 0.000 | 1.000 | 62 | 0.500 | 0.000 | 0.000 | 1.000 | 0.000 | 1.000 |
| 21 | 0.400 | 0.333 | 0.250 | 0.667 | 1.000 | 0.000 | 63 | 0.667 | 0.000 | 0.400 | 0.333 | 0.000 | 0.667 |
| 22 | 0.500 | 0.571 | 1.000 | 0.000 | 0.750 | 0.000 | 64 | 0.333 | 0.667 | 0.000 | 1.000 | 0.000 | 1.000 |
| 23 | 0.000 | 0.750 | 1.000 | 0.000 | 0.000 | 0.500 | 65 | 0.750 | 0.000 | 0.667 | 0.750 | 1.000 | 0.000 |
| 24 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 66 | 0.500 | 0.500 | 0.000 | 1.000 | 0.667 | 0.000 |
| 25 | 0.000 | 0.500 | 1.000 | 0.000 | 0.667 | 0.000 | 67 | 0.000 | 1.000 | 0.500 | 0.500 | 1.000 | 0.000 |
| 26 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 68 | 1.000 | 0.000 | 0.000 | 0.333 | 0.000 | 1.000 |
| 27 | 0.500 | 0.200 | 0.667 | 0.200 | 0.333 | 0.600 | 69 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 |
| 28 | 1.000 | 0.000 | 1.000 | 0.000 | 0.000 | 1.000 | 70 | 0.333 | 0.500 | 0.000 | 1.000 | 1.000 | 0.000 |
| 29 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 71 | 1.000 | 0.000 | 1.000 | 0.000 | 0.333 | 0.333 |
| 30 | 0.000 | 0.750 | 1.000 | 0.000 | 0.250 | 0.625 | 72 | 1.000 | 0.000 | 1.000 | 0.000 | 0.500 | 0.500 |
| 31 | 0.500 | 0.000 | 0.500 | 0.500 | 0.750 | 0.000 | 73 | 1.000 | 0.000 | 1.000 | 0.000 | 0.667 | 0.333 |
| 32 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 74 | 0.667 | 0.667 | 1.000 | 0.000 | 0.750 | 0.667 |
| 33 | 0.500 | 0.250 | 0.000 | 0.750 | 0.000 | 0.750 | 75 | 1.000 | 0.000 | 0.500 | 0.250 | 0.500 | 0.500 |
| 34 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 76 | 1.000 | 0.000 | 0.667 | 0.000 | 0.750 | 0.000 |
| 35 | 1.000 | 0.000 | 0.667 | 0.333 | 1.000 | 0.000 | 77 | 0.000 | 0.200 | 1.000 | 0.000 | 0.000 | 0.600 |
| 36 | 0.000 | 1.000 | 0.000 | 1.000 | 0.667 | 0.333 | 78 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 |
| 37 | 0.000 | 0.500 | 1.000 | 0.000 | 1.000 | 0.000 | 79 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |
| 38 | 0.000 | 1.000 | 0.000 | 0.500 | 0.667 | 0.250 | 80 | 0.333 | 0.333 | 0.000 | 0.667 | 1.000 | 0.000 |
| 39 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 81 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 0.667 |
| 40 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 | 82 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |
| 41 | 1.000 | 0.000 | 0.000 | 0.667 | 1.000 | 0.000 | 83 | 0.500 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |
| 42 | 0.333 | 0.000 | 0.500 | 0.000 | 0.667 | 0.000 | 84 | 1.000 | 0.000 | 1.000 | 0.000 | 1.000 | 0.000 |
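The metric headers of the table above were lost in extraction, so the metric itself is not named on this page. The layout has six value columns per instance, which read as three column pairs, presumably QDGA against each of GA, NSGAII and SPEA2. That layout is consistent with a set-coverage comparison between non-dominated sets, but the reading is an assumption. As a minimal sketch under that assumption, the Python below computes Pareto dominance, with every objective minimised (also an assumption), and the coverage C(A, B). Coverage is the fraction of points in B that are dominated by at least one point in A. The names `dominates` and `coverage` are hypothetical.

```python
from typing import Sequence, Tuple

# A solution's objective vector; all objectives are assumed to be minimised.
Point = Tuple[float, ...]


def dominates(a: Point, b: Point) -> bool:
    """Strict Pareto dominance: a is no worse than b in every objective
    and strictly better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def coverage(A: Sequence[Point], B: Sequence[Point]) -> float:
    """Set coverage C(A, B): share of B dominated by some member of A.

    1.0 means every point of B is dominated by A; 0.0 means none is.
    An empty B is reported as 0.0 by convention in this sketch.
    """
    if not B:
        return 0.0
    covered = sum(1 for b in B if any(dominates(a, b) for a in A))
    return covered / len(B)
```

For example, take A = [(1, 5), (3, 2)] and B = [(2, 6), (3, 2), (4, 4)]. Then `coverage(A, B)` is 2/3, because the shared point (3, 2) is not strictly dominated, and `coverage(B, A)` is 0. This sketch uses strict dominance. Zitzler and Thiele's original coverage metric instead counts weakly dominated (covered) points, under which two identical fronts score 1 rather than 0.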

| Instance | QDGA | GA | NSGAII | SPEA2 | Instance | QDGA | GA | NSGAII | SPEA2 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.021 | 0.020 | 0.014 | 0.015 | 43 | 0.011 | 0.165 | 0.146 | 0.437 |
| 2 | 0.002 | 0.037 | 0.003 | 0.235 | 44 | 0.092 | 0.252 | 0.027 | 0.023 |
| 3 | 0.029 | 0.221 | 0.155 | 0.142 | 45 | 0.000 | 0.065 | 0.723 | 0.453 |
| 4 | 0.445 | 0.018 | 0.531 | 0.179 | 46 | 0.000 | 0.029 | 0.037 | 0.167 |
| 5 | 0.001 | 0.027 | 0.027 | 0.083 | 47 | 0.000 | 0.248 | 0.091 | 0.057 |
| 6 | 0.161 | 0.098 | 0.008 | 0.087 | 48 | 0.040 | 0.042 | 0.062 | 0.157 |
| 7 | 0.000 | 0.000 | 0.000 | 0.000 | 49 | 0.189 | 0.277 | 0.039 | 0.149 |
| 8 | 0.001 | 0.021 | 0.036 | 0.040 | 50 | 0.037 | 0.145 | 0.289 | 0.029 |
| 9 | 0.000 | 0.000 | 0.000 | 0.000 | 51 | 0.014 | 0.341 | 0.168 | 0.168 |
| 10 | 0.000 | 0.027 | 0.009 | 0.024 | 52 | 0.109 | 0.098 | 0.085 | 0.210 |
| 11 | 0.000 | 0.063 | 0.500 | 0.813 | 53 | 0.073 | 0.100 | 0.014 | 0.037 |
| 12 | 0.013 | 0.087 | 0.038 | 0.041 | 54 | 0.000 | 0.573 | 0.401 | 1.190 |
| 13 | 0.000 | 0.255 | 0.407 | 0.276 | 55 | 0.416 | 0.309 | 0.000 | 0.262 |
| 14 | 0.000 | 0.176 | 0.077 | 0.103 | 56 | 0.000 | 0.627 | 0.044 | 0.131 |
| 15 | 0.016 | 0.247 | 0.022 | 0.091 | 57 | 0.004 | 0.057 | 0.113 | 0.539 |
| 16 | 0.059 | 0.213 | 0.072 | 0.145 | 58 | 0.000 | 0.063 | 0.397 | 0.253 |
| 17 | 0.003 | 0.026 | 0.042 | 0.028 | 59 | 0.012 | 0.020 | 0.168 | 0.049 |
| 18 | 0.100 | 0.103 | 0.035 | 0.139 | 60 | 0.000 | 0.324 | 0.431 | 0.403 |
| 19 | 0.203 | 0.700 | 0.000 | 1.000 | 61 | 0.042 | 0.029 | 0.032 | 0.000 |
| 20 | 0.060 | 0.076 | 0.058 | 0.033 | 62 | 0.546 | 0.583 | 0.189 | 0.223 |
| 21 | 0.030 | 0.055 | 0.058 | 0.060 | 63 | 0.034 | 0.176 | 0.076 | 0.023 |
| 22 | 0.036 | 0.021 | 0.080 | 0.076 | 64 | 0.403 | 0.337 | 0.049 | 0.000 |
| 23 | 0.056 | 0.000 | 0.142 | 0.072 | 65 | 0.039 | 0.194 | 0.155 | 0.185 |
| 24 | 0.000 | 0.072 | 0.032 | 0.113 | 66 | 0.534 | 1.111 | 0.000 | 0.588 |
| 25 | 0.020 | 0.135 | 0.055 | 0.046 | 67 | 0.137 | 0.000 | 0.176 | 0.798 |
| 26 | 0.000 | 0.083 | 0.141 | 0.163 | 68 | 0.173 | 0.398 | 0.372 | 0.514 |
| 27 | 0.022 | 0.119 | 0.234 | 0.120 | 69 | 0.353 | 0.192 | 0.156 | 0.000 |
| 28 | 0.023 | 0.346 | 0.040 | 0.000 | 70 | 0.030 | 0.062 | 0.013 | 0.324 |
| 29 | 0.000 | 1.160 | 0.058 | 0.400 | 71 | 0.002 | 0.719 | 0.245 | 0.007 |
| 30 | 0.020 | 0.134 | 0.108 | 0.084 | 72 | 0.005 | 0.095 | 0.087 | 0.077 |
| 31 | 0.064 | 0.063 | 0.191 | 0.158 | 73 | 0.005 | 0.052 | 0.079 | 0.014 |
| 32 | 0.000 | 0.038 | 0.192 | 0.628 | 74 | 0.053 | 0.053 | 0.051 | 0.022 |
| 33 | 0.057 | 0.073 | 0.107 | 0.141 | 75 | 0.014 | 0.327 | 0.298 | 0.050 |
| 34 | 0.000 | 0.025 | 0.017 | 0.084 | 76 | 0.095 | 0.083 | 0.078 | 0.061 |
| 35 | 0.000 | 0.099 | 0.046 | 0.201 | 77 | 0.008 | 0.086 | 0.136 | 0.082 |
| 36 | 0.088 | 0.378 | 0.000 | 0.124 | 78 | 1.398 | 0.204 | 0.394 | 0.054 |
| 37 | 0.000 | 0.040 | 0.038 | 0.161 | 79 | 0.000 | 0.166 | 0.134 | 0.122 |
| 38 | 0.012 | 0.000 | 0.012 | 0.013 | 80 | 0.042 | 0.142 | 0.036 | 0.352 |
| 39 | 0.000 | 0.428 | 1.121 | 0.376 | 81 | 0.297 | 0.000 | 0.070 | 0.102 |
| 40 | 0.000 | 0.134 | 0.043 | 0.113 | 82 | 0.000 | 0.138 | 0.130 | 0.901 |
| 41 | 0.016 | 0.168 | 0.501 | 0.289 | 83 | 0.189 | 0.274 | 0.427 | 0.426 |
| 42 | 0.151 | 0.017 | 0.127 | 0.141 | 84 | 0.000 | 0.176 | 0.566 | 0.795 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, J.; Cai, J. A Dual-Population Genetic Algorithm with Q-Learning for Multi-Objective Distributed Hybrid Flow Shop Scheduling Problem. Symmetry 2023, 15, 836. https://doi.org/10.3390/sym15040836

Chicago/Turabian style: Zhang, Jidong, and Jingcao Cai. 2023. "A Dual-Population Genetic Algorithm with Q-Learning for Multi-Objective Distributed Hybrid Flow Shop Scheduling Problem" Symmetry 15, no. 4: 836. https://doi.org/10.3390/sym15040836