1. Introduction

Circle detection is a fundamental feature extraction task in pattern recognition and is crucial for computer vision and shape analysis. Driven by the demands of digitized and automated production, circle detection has been widely used in PCB hole detection [1], spacecraft [2,3], ball detection [4], remote sensing localization [5], cell analysis [6], and cannon hole detection [7].

The circle Hough transform (CHT) [8,9] is the most classical circle detection method. It maps edge points into a three-dimensional parameter space (center coordinates and radius) and accumulates votes over all edge points to determine the true circles, which is very time-consuming. To avoid traversing all points, Xu et al. [10] proposed the randomized Hough transform (RHT), which randomly samples three different points to calculate circle parameters and accumulates votes in a linked list of parameters [11]. However, random sampling generates many invalid accumulations. Chen et al. proposed the randomized circle detection (RCD) algorithm [12], which samples one more point than RHT to verify candidate circles and thereby reduces invalid accumulation. Although RCD improves computational efficiency, the probability that four randomly sampled points lie on the same circle is low. To improve sampling efficiency, Jiang et al. [13] used probabilistic sampling and defined feature points to quickly exclude a large number of false circles. However, the method assumes complete circles, and its efficiency drops rapidly once a circle is occluded. Wang et al. [14] proposed an improved sampling strategy that samples one point and obtains the other two by searching in the horizontal and vertical directions, respectively. Although this method is robust to noise, its running time rises sharply in complex environments. Jiang et al. [15] proposed a method based on difference region sampling: if a candidate circle is judged to be false and its number of points reaches a certain threshold, the next sample is drawn from its difference region. This method improves sampling efficiency but degrades rapidly when there are many non-circular contours around the false circle.
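The core step shared by RHT and RCD, computing a circle from three sampled edge points and (for RCD) checking a fourth point against it, can be sketched in Python as follows. This is a simplified illustration, not the published implementations: the function names, the `dist_tol` parameter, and the single-trial structure are assumptions, and the accumulator (RHT) and evidence-collection (RCD) stages are omitted.

```python
import math
import random


def circle_from_three_points(p1, p2, p3):
    """Return (cx, cy, r) of the circle through three points, or None if collinear."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    # Twice the signed triangle area (times 2); zero means the points are collinear.
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-12:
        return None
    s1, s2, s3 = x1 * x1 + y1 * y1, x2 * x2 + y2 * y2, x3 * x3 + y3 * y3
    # Closed-form circumcenter of the triangle (p1, p2, p3).
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    return cx, cy, math.hypot(x1 - cx, y1 - cy)


def rcd_candidate(edge_points, dist_tol=1.0, rng=random):
    """One simplified RCD-style trial: fit a circle to three points, verify with a fourth.

    dist_tol (hypothetical parameter) is the allowed distance of the fourth
    point from the candidate circumference.
    """
    if len(edge_points) < 4:
        return None
    p1, p2, p3, p4 = rng.sample(edge_points, 4)
    circle = circle_from_three_points(p1, p2, p3)
    if circle is None:
        return None
    cx, cy, r = circle
    # Keep the candidate only if the fourth point also lies near the circle.
    if abs(math.hypot(p4[0] - cx, p4[1] - cy) - r) <= dist_tol:
        return circle
    return None
```

When the four sampled points come from different curves, the fourth-point check usually fails, which is how RCD avoids accumulating many invalid candidates; it also explains why RCD's efficiency depends on the probability of drawing four points from the same circle.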

The large number of iterations and traversal calculations is the main drawback of RHT-based and RCD-based methods. Another approach to this problem exploits the geometric properties of circles. These methods generally link edge points into curves and then estimate the circle parameters from curve information, using techniques such as least-squares circle fitting, perpendicular bisectors, or inscribed triangles.
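As an illustration of the least-squares circle fitting mentioned above, the following sketch uses the algebraic (Kåsa) formulation, which is one common choice and not necessarily the variant used by the cited methods. Writing the circle as $x^2 + y^2 + Dx + Ey + F = 0$ makes the problem linear in $(D, E, F)$, so it reduces to a 3 × 3 system of normal equations. The function names are hypothetical, and the code is kept dependency-free for clarity.

```python
import math


def solve3(m, v):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda i: abs(a[i][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for i in range(col + 1, 3):
            f = a[i][col] / a[col][col]
            for j in range(col, 4):
                a[i][j] -= f * a[col][j]
    sol = [0.0] * 3
    for i in reversed(range(3)):
        sol[i] = (a[i][3] - sum(a[i][j] * sol[j] for j in range(i + 1, 3))) / a[i][i]
    return sol


def fit_circle_kasa(points):
    """Algebraic least-squares circle fit; returns (cx, cy, r)."""
    # Accumulate the normal equations (A^T A) p = A^T b for rows [x, y, 1], b = -(x^2 + y^2).
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for x, y in points:
        row = (x, y, 1.0)
        b = -(x * x + y * y)
        for i in range(3):
            atb[i] += row[i] * b
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    d, e, f = solve3(ata, atb)
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)
```

Because it uses every point on the curve, this fit works on partial arcs as well as complete circles, which is why curve-based methods cope better with occlusion than pure three-point sampling.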

Yao et al. proposed the curvature-assisted Hough transform for circle detection (CACD) algorithm [16], which adaptively estimates the radius of curvature to eliminate non-circular contours. However, curvature estimation requires highly accurate edge detection, and pseudo-edges or intersecting edge curves can lead to erroneous results. Lu et al. [17] used criteria such as area constraints and gradient polarity to determine whether a curve is a candidate circle, and then obtained the true circles by clustering. However, this method often fails to identify defective circles. Le et al. [18] clustered sets of line segments using mean shift [19] and then obtained the circle parameters by least-squares fitting. Although this method handles occluded circles effectively, redundant calculations lead to long detection times. Zhao et al. [20] proposed a circle detection algorithm that estimates the circle parameters from inscribed triangles. In addition, they replaced least-squares fitting with a linear error compensation algorithm, which significantly improves detection accuracy. Liu et al. [21] proposed a contour refinement and corner detection algorithm to increase arc segmentation accuracy, but the method depends heavily on edge extraction, and its performance is often poor for images with intersecting edge curves or interrupted edges. To address these problems, Ou et al. [22] proposed a circle detection algorithm based on information compression, which compresses the points on an arc that share the same geometric properties into a single point and fits the circle parameters to these points instead of to curves, but the method is time-consuming. We summarize the previous work in Table 1.

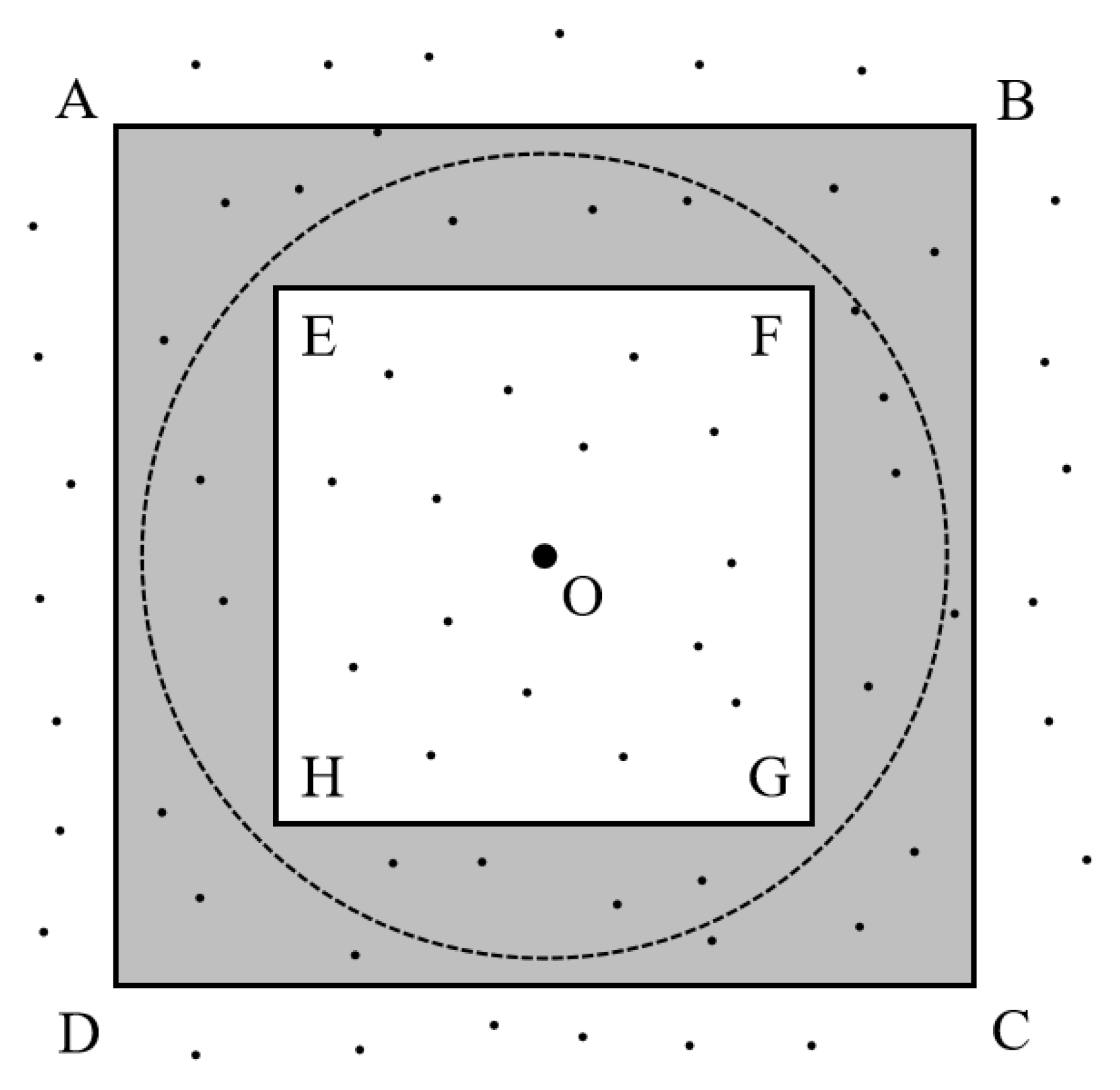
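To make the curvature idea behind CACD concrete, the sketch below estimates a discrete radius of curvature along an edge curve as the circumradius of the triangle formed by points `i - k`, `i`, and `i + k` ($R = abc / (4K)$ for side lengths $a, b, c$ and triangle area $K$). This is a generic estimator chosen for illustration, not the estimator of [16]; the step `k` and the spread threshold `rel_spread` are assumed values. A circular arc yields a nearly constant radius, while a straight segment yields an infinite one, and noisy or intersecting edges make the estimates unstable, which is the weakness noted above.

```python
import math


def curvature_radii(curve, k=5):
    """Discrete curvature radius at each interior point of an ordered edge curve."""
    radii = []
    for i in range(k, len(curve) - k):
        (x1, y1), (x2, y2), (x3, y3) = curve[i - k], curve[i], curve[i + k]
        a = math.dist((x1, y1), (x2, y2))
        b = math.dist((x2, y2), (x3, y3))
        c = math.dist((x1, y1), (x3, y3))
        # Twice the triangle area; zero means the three points are collinear.
        area2 = abs((x2 - x1) * (y3 - y1) - (y2 - y1) * (x3 - x1))
        # R = abc / (4 * area) = abc / (2 * area2)
        radii.append(math.inf if area2 < 1e-12 else a * b * c / (2.0 * area2))
    return radii


def looks_circular(curve, k=5, rel_spread=0.1):
    """Hypothetical test: the curve is circular if its curvature radius is nearly constant."""
    radii = curvature_radii(curve, k)
    if not radii or any(math.isinf(r) for r in radii):
        return False
    mean_r = sum(radii) / len(radii)
    return (max(radii) - min(radii)) <= rel_spread * mean_r
```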

To improve sampling efficiency, reduce the space consumption of verification, and perform better on defective circles, this paper proposes a fast circle detection algorithm based on circular arc feature screening. First, we improve the fuzzy inference edge detection algorithm by adding multi-directional gradient input, main contour edge screening, edge refinement, and arc-like determination to remove unnecessary contour edges. Then, we sample step-wise on two feature matrices and perform auxiliary point finding for defective circles. Finally, we build a square verification support region to validate complete and defective circles separately and obtain the true circles. The experimental results show that the algorithm is fast, accurate, and robust.

The main contributions of this paper are as follows:

An improved fuzzy inference edge detection algorithm that removes unnecessary contour edges by adding multi-directional gradient input, main contour edge screening, edge refinement, and arc-like determination;

A random sampling method reinforced by arc features, with step-wise sampling on two feature matrices and an assisted point-finding method for defective circles;

A verification method with a square verification support region and separate constraints for complete and defective circles.

The rest of the paper is organized as follows: Section 2 presents the principle of our circle detection method, Section 3 presents the experimental results and a comparative analysis, and Section 4 concludes the paper.

3. Experiments and Results Analysis

In this section, we compare the proposed algorithm with five other algorithms: the RHT algorithm proposed by Xu et al. [10]; the RCD algorithm proposed by Chen et al. [12]; the difference region sampling algorithm proposed by Jiang et al. [15]; the improved sampling algorithm proposed by Wang et al. [14]; and the CACD algorithm proposed by Yao et al. [16], for which we used the authors' open-source code [28]. For a consistent comparison, all experiments were run in MATLAB R2021a on the same desktop computer with an Intel Core i5-9400 2.9 GHz CPU and 8 GB of RAM. We used the following four metrics to evaluate the methods: precision, recall, F-measure, and time.

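Following the validation method in the literature [20,21,22], the metrics are defined as

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}, \qquad F = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}},$$

where $TP$ is the number of correct identifications, $FP$ is the number of false identifications, and $FN$ is the number of ground-truth circles missed. Time refers to the average time from inputting an image to outputting all of the detected circles in each dataset.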
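Given the counts of correct, false, and missed identifications, the three accuracy metrics can be computed as in the short sketch below. The function name is hypothetical, and returning 0.0 when a denominator is zero is an assumed convention for images with no detections or no ground truth.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f_measure) from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F-measure is the harmonic mean of precision and recall.
    f_measure = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f_measure
```

For example, `detection_metrics(8, 2, 2)` gives a precision, recall, and F-measure of 0.8 each.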

The test images in this paper come mainly from our own dataset and three public datasets available on the internet:

Interference Dataset. This complex dataset, collected from the internet, consists of 16 images of different scenes. The large amount of invalid interference, consisting of unimportant details and interfering curves, prevents general circle detection algorithms from extracting circle edges well. Moreover, compared with some common datasets, each image in the interference dataset contains more circles, which makes detection very difficult.

GH Dataset. This complex dataset is from [27] and consists of 257 grayscale images of different realistic scenes. The variety of scenes makes detection compatibility a challenge, and difficulties such as blurred edges, occlusions, and large variations in radius hinder detection.

Mini Dataset. This dataset [27] contains eight common images that have been used for years to test circle detection algorithms: ball (231 × 232), cake (231 × 231), coin (256 × 256), quintuplet (239 × 237), insulators (204 × 150), plate (400 × 390), stability ball (236 × 236), and watch (236 × 272), with all sizes in pixels. Most methods have been tested on this dataset, so performance comparisons on it are more convincing. Low resolution, blurred edges, and occlusion are the main challenges of this dataset.

Traffic Dataset. This complex dataset is from [27] and the internet. Compared with the GH dataset, the traffic dataset contains many color images with more cluttered backgrounds. The realistic scenes and the information printed inside the signs test the practicality of circle detection algorithms. Factors such as lighting, weather changes, and the angles of outdoor cameras make detection difficult.

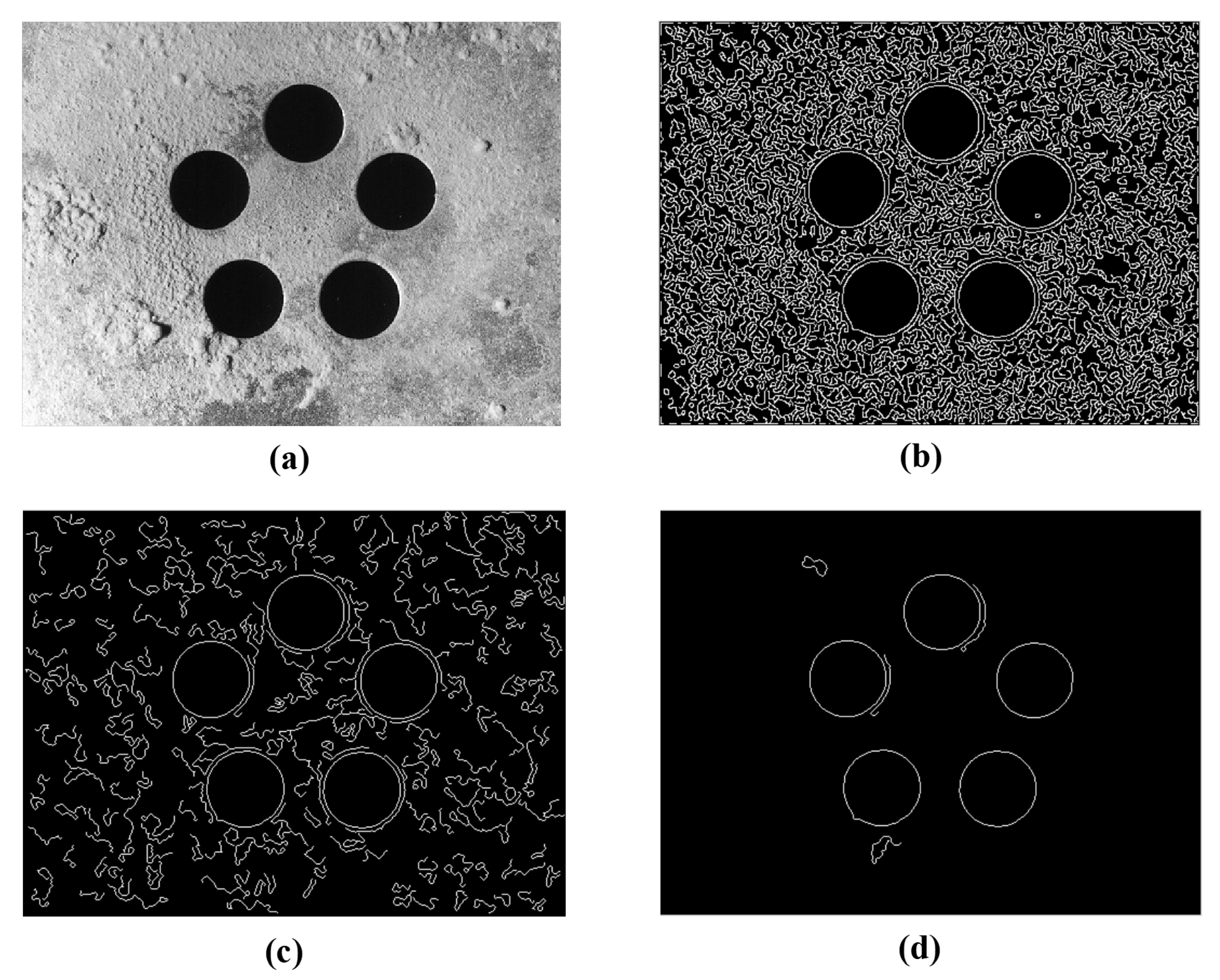

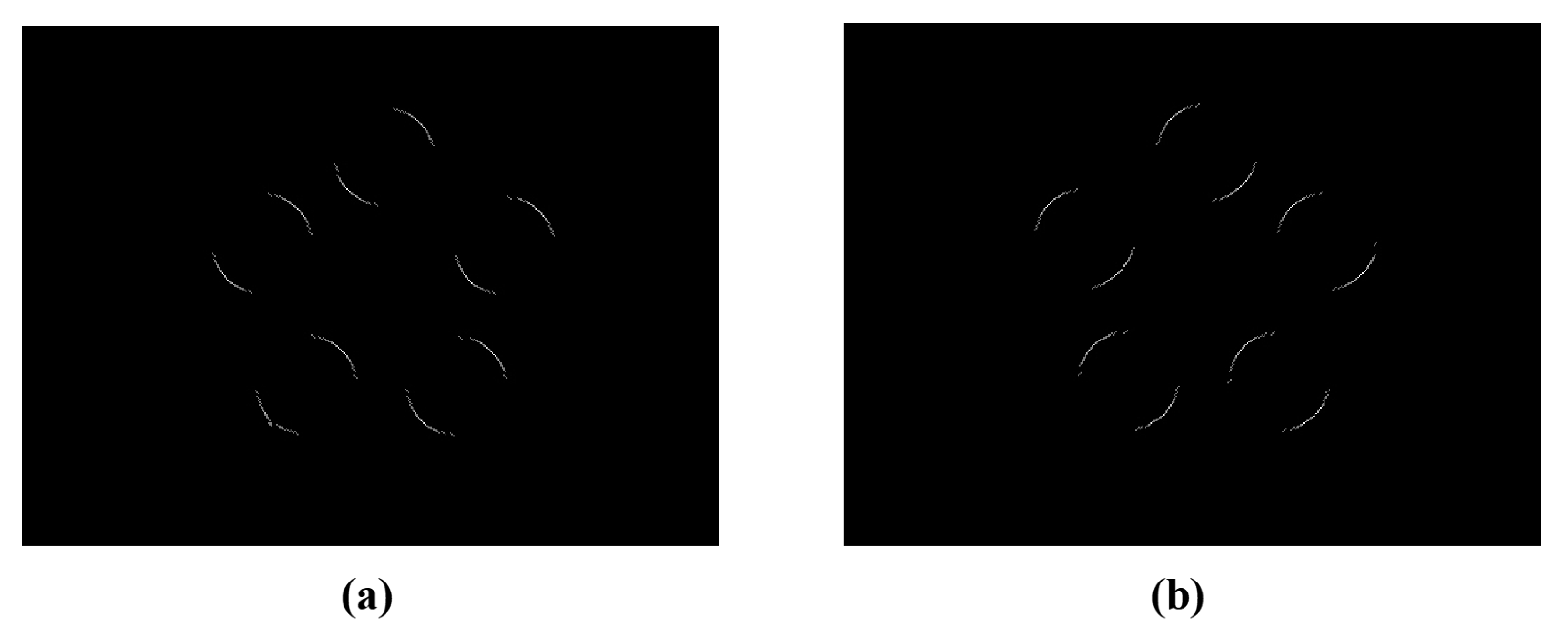

3.1. Fuzzy Test

To test the performance of our improved fuzzy inference algorithm at different fuzziness levels, we compared it with the original fuzzy inference edge detection algorithm, with code from the authors’ open source share [

29]. The size of the filter window affects the blurring degree of the picture, and the larger the size, the more obvious the blurring degree. We added Gaussian filters with 3 × 3, 5 × 5, 7 × 7, 9 × 9, and 11 × 11 window sizes to the pictures respectively to test their robustness. The experimental results are shown in

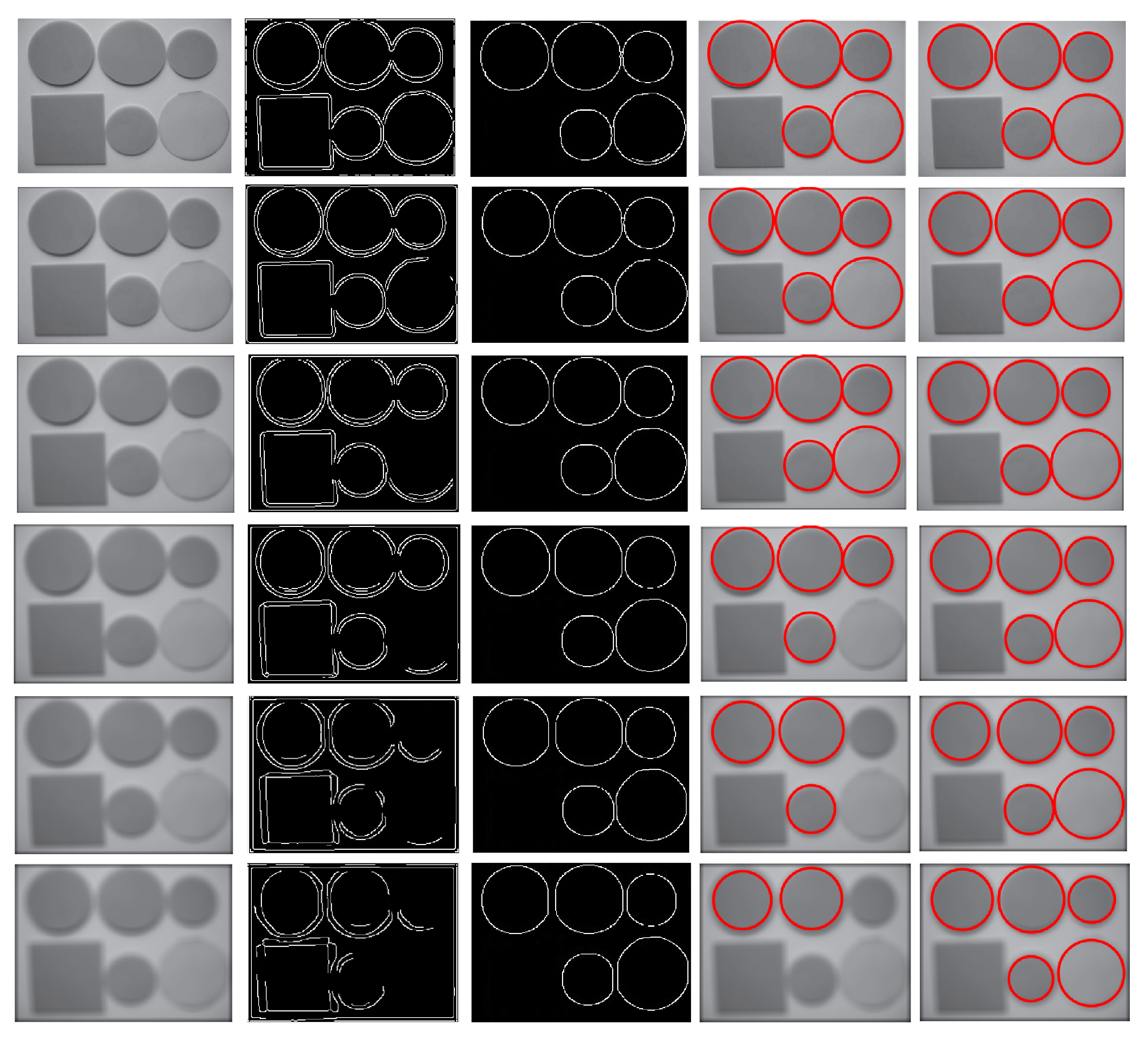

Figure 5.
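The blur test above can be reproduced in spirit with the following sketch, which builds a normalized Gaussian kernel for each odd window size and applies it separably with replicated borders. The paper does not state the standard deviation used for each window, so deriving σ from the window size with the rule used by OpenCV's `getGaussianKernel` when no σ is given, $\sigma = 0.3\,((n - 1)/2 - 1) + 0.8$, is an assumption; the function names are also hypothetical.

```python
import math


def gaussian_kernel_1d(size, sigma=None):
    """Normalized 1-D Gaussian kernel for an odd window size."""
    if size < 1 or size % 2 == 0:
        raise ValueError("window size must be a positive odd integer")
    if sigma is None:
        # Assumption: OpenCV's default rule for deriving sigma from the window size.
        sigma = 0.3 * ((size - 1) * 0.5 - 1) + 0.8
    half = size // 2
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def clamp(v, lo, hi):
    return max(lo, min(hi, v))


def gaussian_blur(image, size, sigma=None):
    """Separable Gaussian blur of a 2-D list of floats with replicated borders."""
    k = gaussian_kernel_1d(size, sigma)
    half = size // 2
    h, w = len(image), len(image[0])
    # Horizontal pass, then vertical pass on the intermediate result.
    rows = [[sum(k[j] * image[y][clamp(x + j - half, 0, w - 1)] for j in range(size))
             for x in range(w)] for y in range(h)]
    return [[sum(k[j] * rows[clamp(y + j - half, 0, h - 1)][x] for j in range(size))
             for x in range(w)] for y in range(h)]
```

With this rule, σ grows from 0.8 for the 3 × 3 window to 2.0 for the 11 × 11 window, so larger windows spread each edge over more pixels, which is the progressive degradation the test is designed to probe.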

Figure 5 shows the detection capability of our algorithm on Gaussian-filtered images. In each row, the first image is the Gaussian-filtered image for a given window size, the second is the edge map extracted by the original fuzzy inference edge detection algorithm, the third is the edge map extracted by our improved fuzzy inference algorithm, the fourth is the circle detection result based on the original algorithm's edges, and the fifth is our circle detection result.

As Figure 5 shows, the original image contains some shadow contours, and the original fuzzy inference edge detection algorithm falsely detects pseudo-circular edges, which leads to deviations in circle detection. Moreover, the blurring becomes increasingly severe as the filter window grows. From the 7 × 7 Gaussian filter onward, a large number of edges are distorted or lost, leading to missed detections.

In contrast, our improved algorithm increases edge localization accuracy through multi-directional gradient input and main contour edge screening, which reduces the influence of pseudo-arc edges and keeps the contour curves relatively complete and stable under different Gaussian filters. It then uses arc-like determination to eliminate nearly straight curves and curves with indistinct circular arc features, which effectively reduces detection errors. In addition, as the blurring deepens, our algorithm still performs well without any missed or false detections, showing strong anti-interference ability and robustness.
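One simple way to flag the "nearly straight" curves mentioned above is to compare the maximum distance of the curve from its end-to-end chord with the chord length. The sketch below illustrates that idea only; it is not the arc-like determination defined in Section 2, and the function name and the 5% threshold `max_ratio` are hypothetical.

```python
import math


def is_near_straight(curve, max_ratio=0.05):
    """Hypothetical straightness test: max deviation from the chord vs. chord length."""
    (x0, y0), (x1, y1) = curve[0], curve[-1]
    chord = math.hypot(x1 - x0, y1 - y0)
    if chord == 0.0:
        return False  # closed (or degenerate) contour: not a straight segment
    # Perpendicular distance of every curve point from the line through the endpoints.
    dev = max(abs((x1 - x0) * (y0 - y) - (x0 - x) * (y1 - y0)) / chord for x, y in curve)
    return dev / chord < max_ratio
```

A semicircle has a deviation-to-chord ratio of 0.5, whereas a noisy line segment stays close to zero, so the ratio separates the two cases without any curvature estimation.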

3.2. Performance Comparison

3.2.1. Interference Dataset and GH Dataset

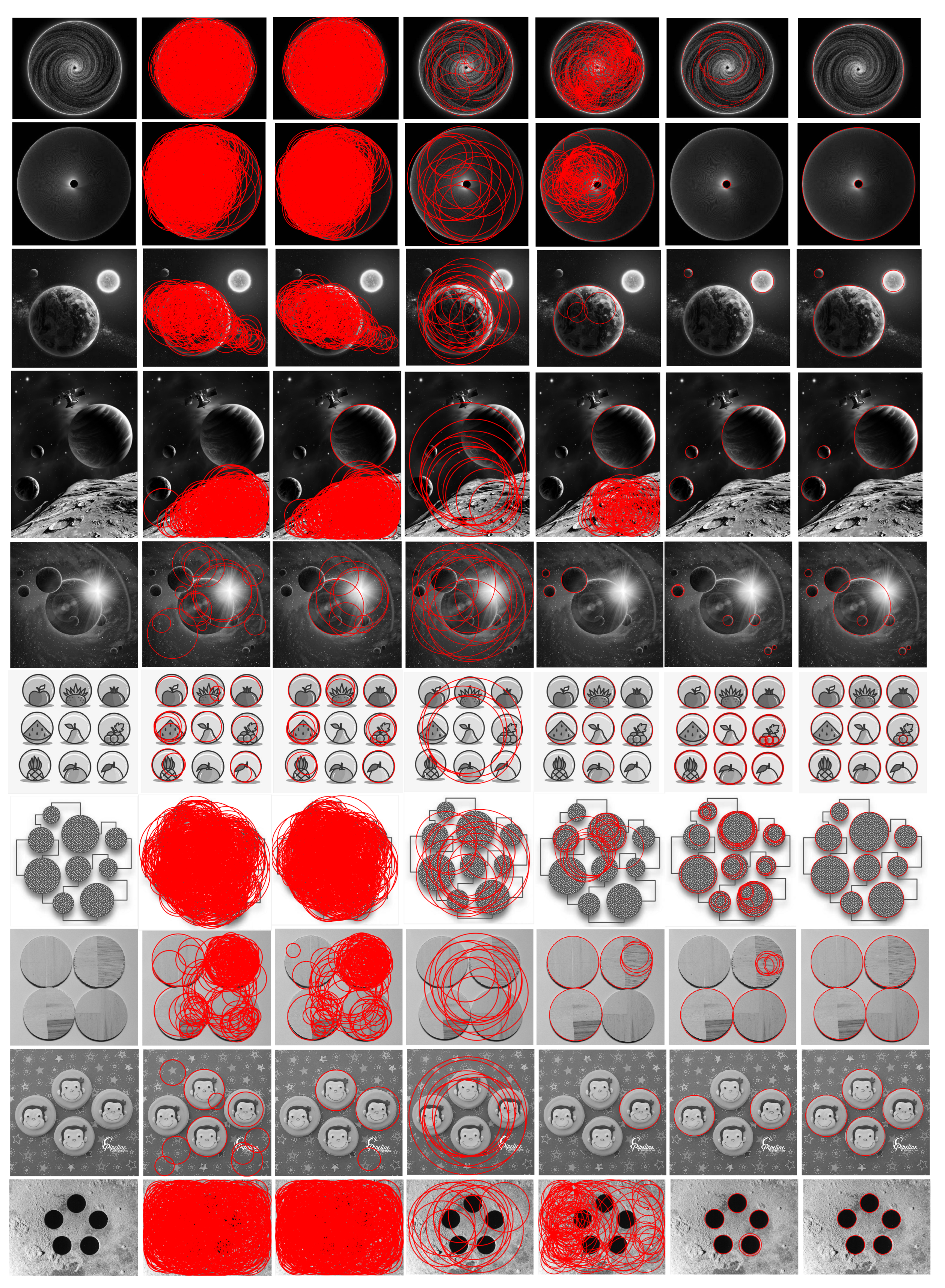

Figure 6 shows the results of the six algorithms, and Table 3 and Table 4 summarize their results on the Interference and GH datasets, respectively.

As can be seen from the figure and tables, the RHT algorithm produces many false and missed detections, while RCD achieves a higher recall on the GH dataset because it samples one more point for verification. Jiang's method performs better but still poorly on the interference dataset, mainly because the many non-circular contours around false circles cause misjudgments when sampling from the difference region. Wang's algorithm improves on Jiang's on both datasets, but false detections still occur in images with complex textures. The CACD algorithm improves substantially on both datasets, but its running time grows for larger images, and missed and wrong detections remain (e.g., the first, seventh, and eighth images in Figure 6) because interfering curves make circle fitting and curvature radius estimation difficult. Our algorithm removes many cluttered texture edges and retains more complete arc edges before detection, and it builds a verification support region that excludes a large number of edge points not belonging to candidate circles. This improves precision and recall, shortens the running time, and yields better performance on both the interference and GH datasets.
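The benefit of a square verification support region can be illustrated with the following sketch: for a candidate circle, only the edge-map window of half-side $r + \delta$ around the center is scanned, and edge pixels within $\delta$ of the circumference are counted relative to the circumference $2\pi r$. The details of the paper's verification (Section 2) are not reproduced here; the function name, the tolerance `delta`, and the normalization by $2\pi r$ are assumptions made for illustration.

```python
import math


def square_support(edge_map, cx, cy, r, delta=1.5):
    """Hypothetical support ratio of a candidate circle, scanning only a square window.

    edge_map is a 2-D list of 0/1 values indexed as edge_map[y][x].
    """
    h, w = len(edge_map), len(edge_map[0])
    half = r + delta
    # Axis-aligned square that encloses the candidate circle, clipped to the image.
    x0, x1 = max(0, math.floor(cx - half)), min(w - 1, math.ceil(cx + half))
    y0, y1 = max(0, math.floor(cy - half)), min(h - 1, math.ceil(cy + half))
    hits = 0
    for y in range(y0, y1 + 1):
        row = edge_map[y]
        for x in range(x0, x1 + 1):
            if row[x] and abs(math.hypot(x - cx, y - cy) - r) <= delta:
                hits += 1
    # Digital circles can have slightly more or fewer pixels than 2*pi*r; cap at 1.
    return min(1.0, hits / (2.0 * math.pi * r))
```

Because edge points outside the square are never visited, the cost of verifying a candidate depends on its size rather than on the total number of edge points in the image.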

3.2.2. Mini Dataset

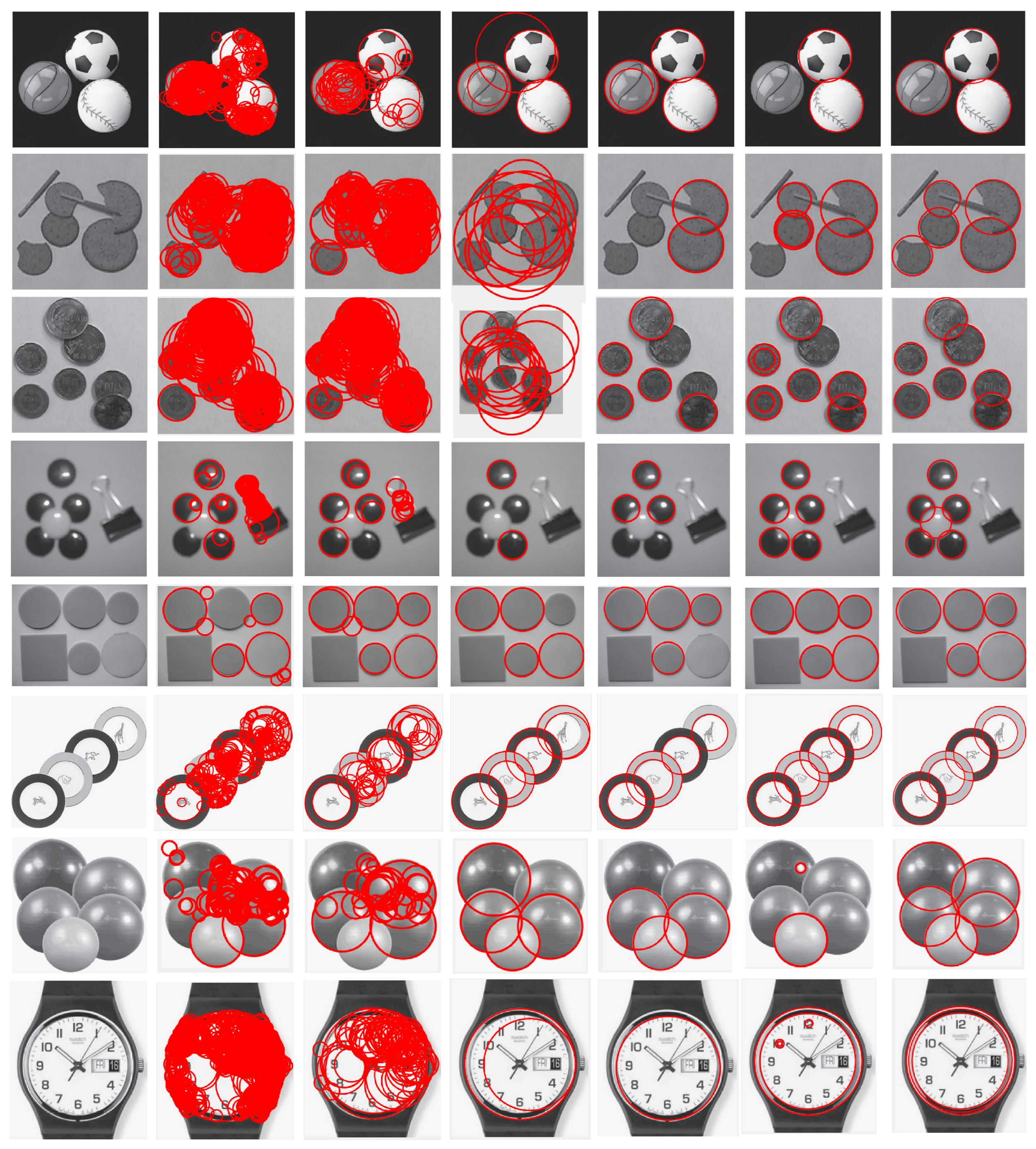

From Figure 7 and Table 5, it can be seen that RHT shows low precision and high recall on the mini dataset. RCD behaves similarly to RHT but with a shorter running time. However, the iterative nature of RHT and RCD makes their overall performance on the four metrics poor. Jiang's method achieves significantly higher precision than RCD, but its running time increases, mainly because complex textures require many iterations of difference region sampling. Wang's method achieves higher precision but performs poorly on defective and concentric circles (e.g., the second, fourth, and seventh images in Figure 7), because it searches for sampling points only in the horizontal and vertical directions. CACD performs better, but curvature estimation is difficult in images with blurred edges, which leads to missed detections (e.g., the second and seventh images in Figure 7). Our algorithm improves edge localization accuracy through fuzzy inference before detection and performs a separate verification for defective circles, which not only increases detection speed but also reduces the influence of image blur and defective circles on the results.

3.2.3. Traffic Dataset

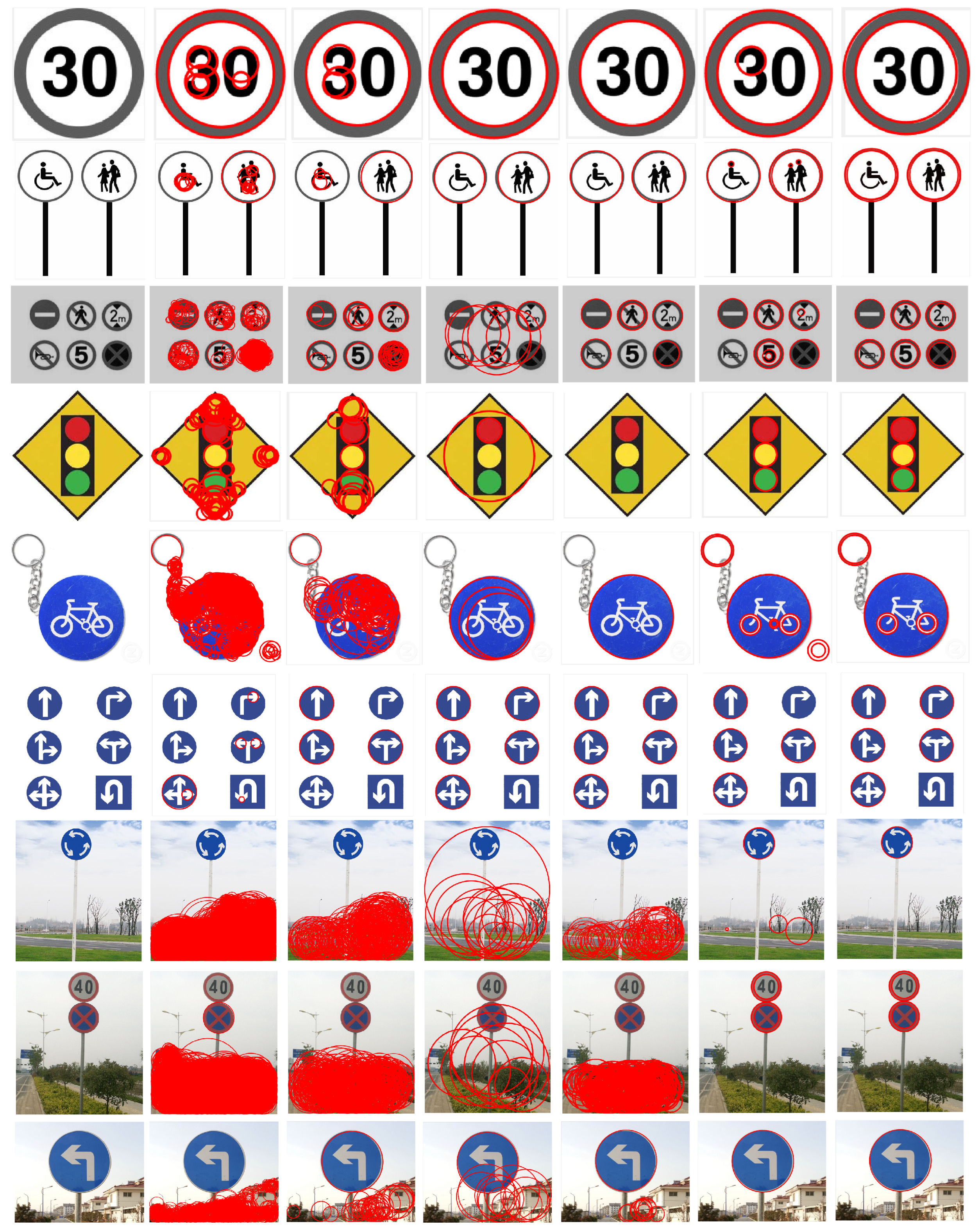

The data in Figure 8 and Table 6 show that RHT has a high false detection rate, whereas RCD improves both precision and recall. Jiang's algorithm further improves precision and recall, especially for images with simple backgrounds, but the sharp increase in points in the difference evidence collection region drastically increases the running time on real and multi-circle images and causes a high false detection rate. Wang's algorithm runs significantly faster than Jiang's but still performs poorly on complex-background images taken in real life, because in complex images with many dense interference points it easily detects a large number of false circles that cannot be eliminated. CACD has a high recall, but it incorrectly identifies irrelevant features as circles. Moreover, its running time increases substantially with image size, which reflects a disadvantage of HT-based circle detection methods: only circles within a small range of radii can be detected in a limited time. Our algorithm removes a large number of interfering non-circular contour curves and samples using arc features, which improves detection speed and precision. However, it partially misses small circles (e.g., the second and fifth images in Figure 8), because the edge points of these circles are dense and our algorithm skips points that are too close to the first sampled point during sampling.
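The small-circle misses follow directly from the minimum-distance rule in the sampling phase. In the sketch below, after the first point is drawn, only points at least `d_min` away from it are eligible as the second and third points; when a circle's diameter is smaller than `d_min`, no eligible points exist and the circle can never be sampled. The value of `d_min` and the function name are hypothetical, since the actual threshold is defined in Section 2.

```python
import math
import random


def sample_with_min_distance(edge_points, d_min=5.0, rng=random):
    """Draw a first point, then two more points at least d_min away from it."""
    p1 = rng.choice(edge_points)
    # Points too close to the first point are skipped.
    far = [p for p in edge_points if math.dist(p, p1) >= d_min]
    if len(far) < 2:
        return None  # e.g. every point lies on a circle whose diameter is below d_min
    p2, p3 = rng.sample(far, 2)
    return p1, p2, p3
```

For example, with `d_min = 7.0`, a circle of radius 3 (diameter 6) never yields a valid triple, while a circle of radius 20 does.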

3.3. Discussion

As can be seen from Table 3, Table 4, Table 5, and Table 6, our proposed method has several advantages over the other methods. It removes many cluttered texture edges before detection, which increases detection speed. Specifically, the circular contour screening stage removes up to 89.03% of invalid edge points, which effectively reduces the computational effort of sampling. Compared with CACD, our method does not iterate over a large number of radius layers, so it is at least four times faster and its detection time remains stable on large images. Our method improves on Wang's method by about 40% in accuracy and recall, because Wang's method, which samples only in the horizontal and vertical directions, performs poorly on images containing defective and concentric circles, and its performance degrades rapidly in complex images with cluttered textures. In addition, the performance of our algorithm differs across the four datasets. The recall on the Interference and GH datasets is lower than on the Mini and Traffic datasets, because the Interference and GH datasets contain many complex scenes with a large amount of interfering, invalid information, which makes screening circular features difficult. The running time on the Mini dataset is the shortest of the four datasets because its images contain the fewest edge points.

4. Conclusions

In this paper, we propose a fast circle detection algorithm based on circular arc feature screening and analyze its performance. First, we improve the original fuzzy inference edge detection algorithm by adding multi-directional gradient input, main contour edge screening, edge refinement, and arc-like determination, which removes unnecessary contour edges and reduces the computational workload. Then, we sample step by step using arc features on two feature matrices and set auxiliary points to find defective circles, which greatly reduces the number of invalid edge points sampled and improves sampling efficiency. Finally, we build a square verification support region to verify complete and defective circles separately and identify the true circles.

In the experiments, we compared our edge detection with the original fuzzy inference edge detection algorithm under different degrees of blur, and the results show that our algorithm maintains good performance under different Gaussian filters. In addition, we compared our method with five other methods on four datasets. The results show that our algorithm achieves only average recall because of the circular contour screening phase: to speed up the subsequent process, we directly removed contour curves shorter than 30 pixels and skipped points that were too close to the first sampled point during sampling, so circles with smaller radii were sometimes not fully detected. The circular contour screening phase removes a large number of interfering non-circular contour curves, and sampling reinforced by arc features also effectively improves detection speed, making our method the best in precision, F-measure, and time. In general, our method is more suitable for applications with less stringent accuracy requirements and somewhat larger circle radii. In the future, we will continue to improve the circular contour screening phase and the arc feature-based sampling phase to better detect small circles.
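The effect of the 30-pixel contour threshold on small circles can be estimated with simple arithmetic. If a visible arc covering a fraction $\phi$ of a circle of radius $r$ contributes roughly $2\pi r \phi$ contour pixels, it survives screening only when $r \ge 30 / (2\pi\phi)$: about 4.8 pixels for a complete circle, but about 19.1 pixels for a quarter arc. The sketch below encodes this estimate together with the length filter; approximating pixel count by Euclidean arc length is an assumption (8-connected digital contours contain somewhat fewer pixels, which raises the threshold slightly), and the function names are hypothetical.

```python
import math

MIN_CONTOUR_LENGTH = 30  # contour length threshold in pixels, as stated in the text


def drop_short_contours(contours, min_len=MIN_CONTOUR_LENGTH):
    """Keep only contours (lists of pixel coordinates) with at least min_len pixels."""
    return [c for c in contours if len(c) >= min_len]


def min_radius_kept(arc_fraction, min_len=MIN_CONTOUR_LENGTH):
    """Smallest radius whose visible arc survives the length filter.

    Assumes an arc covering arc_fraction of the circle has about
    arc_fraction * 2 * pi * r pixels.
    """
    return min_len / (2 * math.pi * arc_fraction)
```

This makes the trade-off explicit: the filter is cheap and removes many short clutter curves, but occluded small circles, whose visible arcs are short, are the first to be discarded.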