An Improved Three-Way K-Means Algorithm by Optimizing Cluster Centers

Abstract

1. Introduction

- The clustering results of k-means depend on the random selection of the initial cluster centers, and the algorithm readily converges to a local optimum.

- Traditional k-means algorithms assume that a cluster is represented by a single set with a sharp boundary, so only two relationships between an object and a cluster are considered: the object either belongs to the cluster or does not. A sharp boundary makes the clustering result easy to analyze, but it may not adequately capture the uncertainty information in the dataset.
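To illustrate the first point, the following minimal Python sketch runs Lloyd-style k-means [19] from two different sets of initial centers on the same four points. The function `kmeans` and the toy data are hypothetical illustrations, not code from this paper; explicit initial centers stand in for random selection so that the outcome is reproducible.

```python
import math


def kmeans(X, init_centers, max_iter=100):
    """Plain k-means (Lloyd iterations) started from the given centers.

    Returns (labels, centers, sse), where sse is the sum of squared
    Euclidean distances from each point to its assigned center.
    """
    centers = [list(c) for c in init_centers]

    def assign(cs):
        # Index of the nearest center for every point
        return [min(range(len(cs)), key=lambda j: math.dist(x, cs[j])) for x in X]

    for _ in range(max_iter):
        labels = assign(centers)
        new_centers = []
        for j in range(len(centers)):
            members = [x for x, lab in zip(X, labels) if lab == j]
            # Mean of the members; keep the old center if the cluster is empty
            new_centers.append(
                [sum(col) / len(members) for col in zip(*members)] if members else centers[j]
            )
        if new_centers == centers:  # converged: the centers no longer move
            break
        centers = new_centers

    labels = assign(centers)
    sse = sum(math.dist(x, centers[lab]) ** 2 for x, lab in zip(X, labels))
    return labels, centers, sse


X = [(0, 0), (0, 1), (4, 0), (4, 1)]
_, _, sse_good = kmeans(X, [(0, 0), (4, 0)])
_, _, sse_bad = kmeans(X, [(0, 0), (0, 1)])
print(sse_good, sse_bad)  # 1.0 16.0
```

Starting from the centers `(0, 0)` and `(4, 0)`, the iterations find the left/right split with an SSE of 1.0. Starting from `(0, 0)` and `(0, 1)`, they stop at the bottom/top split with an SSE of 16.0: a fixed point of the update rule, and therefore a local optimum that plain k-means cannot leave.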

2. Related Work

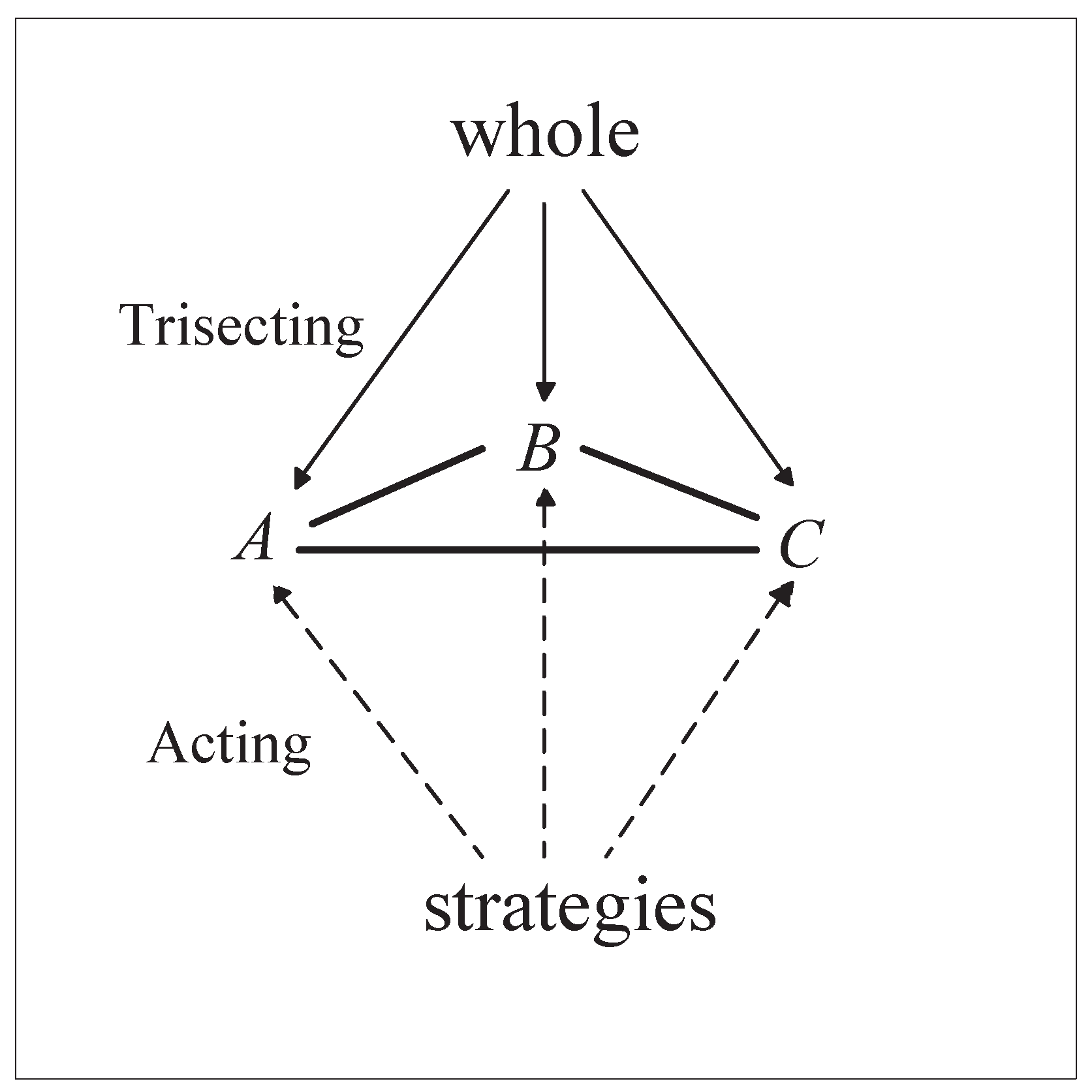

2.1. Three-Way Clustering

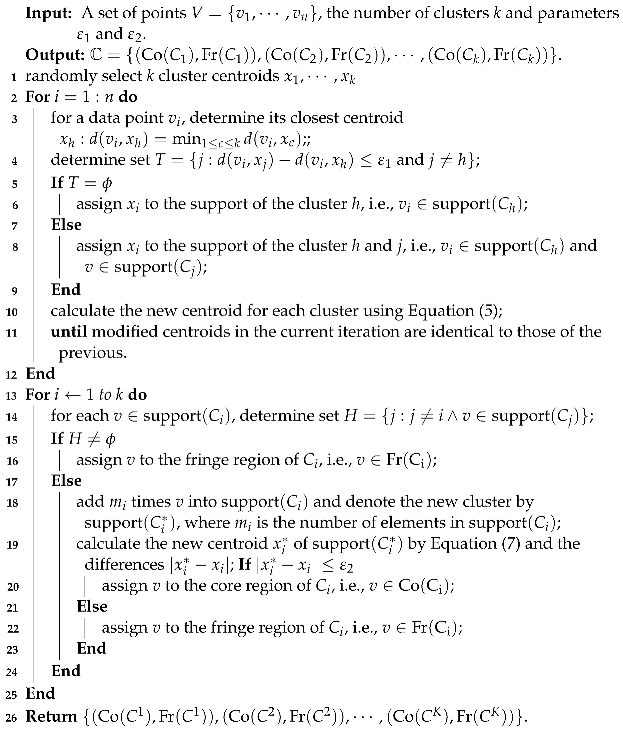

2.2. Three-Way k-Means

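Let $x_1, \ldots, x_k$ be the current cluster centers, let $d(v, x_j)$ be the distance between an object $v$ and center $x_j$, and let $x_i$ be the center nearest to $v$. For a given threshold $\varepsilon$, let $T = \{ j : d(v, x_j) - d(v, x_i) \le \varepsilon,\ j \neq i \}$. With $\mathrm{Co}(C_i)$ and $\mathrm{Fr}(C_i)$ denoting the core region and the fringe region of cluster $C_i$, each object $v$ is assigned by the following rules:

- (1) If $T \neq \emptyset$, then $v \in \mathrm{Fr}(C_i)$ and $v \in \mathrm{Fr}(C_j)$ for every $j \in T$.

- (2) If $T = \emptyset$, then $v \in \mathrm{Co}(C_i)$.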
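The two assignment rules can be sketched in Python as follows. The function name `three_way_assign`, the use of Euclidean distance, and the return convention are illustrative assumptions; ties for the nearest center are broken by the lowest index.

```python
import math


def three_way_assign(v, centers, eps):
    """Apply the three-way assignment rules to a single object v.

    Returns (core, fringe): core is the index i of the cluster whose core
    region receives v (or None), and fringe is the set of cluster indices
    whose fringe regions receive v (empty when v goes to a core region).
    """
    dists = [math.dist(v, c) for c in centers]
    i = min(range(len(centers)), key=lambda j: dists[j])  # nearest center x_i
    # T = { j : d(v, x_j) - d(v, x_i) <= eps, j != i }
    T = {j for j, d in enumerate(dists) if j != i and d - dists[i] <= eps}
    if T:  # rule (1): v lies in the fringe of C_i and of every C_j with j in T
        return None, {i} | T
    return i, set()  # rule (2): v lies in the core of C_i


centers = [(0.0, 0.0), (10.0, 0.0)]
print(three_way_assign((1.0, 0.0), centers, eps=1.0))  # (0, set())
print(three_way_assign((5.2, 0.0), centers, eps=1.0))  # (None, {0, 1})
```

An object near the midpoint of two centers lands in both fringe regions instead of being forced into one cluster, which is how the three-way representation records the uncertainty that a sharp boundary hides.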

Algorithm 1: Three-way k-means [21]

3. The Improved Three-Way k-Means

3.1. Random Probability Selection Strategy

3.2. The Improved Three-Way k-Means Algorithm

Algorithm 2: The improved three-way k-means based on the ant colony algorithm
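As general background on the ant colony algorithm [24] underlying Algorithm 2, the classic random-proportional rule lets an ant choose option $j$ with probability $p_j = \tau_j^{\alpha} \eta_j^{\beta} / \sum_l \tau_l^{\alpha} \eta_l^{\beta}$, where $\tau_j$ is the pheromone level, $\eta_j$ is a heuristic desirability, and $\alpha, \beta$ weight the two. The Python sketch below implements that rule with roulette-wheel sampling. It is a generic illustration, not the exact random probability selection strategy of Section 3.1; the function names and the default values of `alpha` and `beta` are hypothetical.

```python
import random


def selection_probabilities(pheromone, heuristic, alpha=1.0, beta=2.0):
    """Random-proportional rule: p_j proportional to tau_j**alpha * eta_j**beta."""
    weights = [t ** alpha * h ** beta for t, h in zip(pheromone, heuristic)]
    total = sum(weights)
    return [w / total for w in weights]


def roulette_select(probs, rng):
    """Draw an index j with probability probs[j] (roulette-wheel sampling)."""
    r = rng.random()
    cumulative = 0.0
    for j, p in enumerate(probs):
        cumulative += p
        if r < cumulative:
            return j
    return len(probs) - 1  # guard against floating-point round-off


rng = random.Random(0)
probs = selection_probabilities([1.0, 1.0, 2.0], [1.0, 1.0, 1.0], alpha=1.0, beta=1.0)
print(probs)  # [0.25, 0.25, 0.5]
picks = [roulette_select(probs, rng) for _ in range(10000)]
print(picks.count(2) / len(picks))  # close to 0.5
```

Options with more pheromone or a higher heuristic value are chosen more often, yet every option with a nonzero weight keeps some chance of being selected, which is what lets this kind of stochastic search move away from a poor initial choice.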

4. Experimental Analysis

4.1. Evaluation Indices

- Accuracy (ACC) [47]: $\mathrm{ACC} = \frac{1}{n}\sum_{i=1}^{k} n_i$, where $n$ is the total number of samples in the dataset, $n_i$ is the number of samples correctly assigned to cluster $i$, and $k$ is the number of clusters. ACC is the ratio of the number of correctly partitioned samples to the total number of samples. A greater value implies a better clustering result; when ACC = 1, the clustering result is consistent with the ground truth.

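- Davies–Bouldin index (DBI) [47]: $\mathrm{DBI} = \frac{1}{k}\sum_{i=1}^{k}\max_{j \neq i}\frac{S_i + S_j}{M_{ij}}$, where $S_i$ and $M_{ij}$ are the intra-cluster distance of cluster $C_i$ and the inter-cluster separation between clusters $C_i$ and $C_j$, respectively. $S_i$ is defined as $S_i = \frac{1}{|C_i|}\sum_{x \in C_i}\|x - c_i\|$, where $c_i$ is the center of $C_i$, and $M_{ij} = \|c_i - c_j\|$. Because DBI is a function of the ratio of within-cluster scatter to between-cluster separation, a lower value indicates a better clustering.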

- Average silhouette index (AS) [47]: $\mathrm{AS} = \frac{1}{n}\sum_{i=1}^{n} s_i$, where $n$ is the total number of objects in the set and $s_i$ is the silhouette of object $x_i$, defined as $s_i = \frac{b_i - a_i}{\max\{a_i, b_i\}}$. Here $a_i$ is the average distance between $x_i$ and all other objects in its own cluster, and $b_i$ is the minimum, over all other clusters, of the average distance between $x_i$ and the objects in that cluster. The average silhouette index ranges over $[-1, 1]$; a larger value means a better clustering result.
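The three indices can be computed with the following pure-Python sketch. The text does not state how predicted clusters are matched to true classes for ACC, so this sketch assumes the common choice of the one-to-one mapping that maximizes the number of correctly assigned samples, found by brute force (practical only for a small number of clusters). Euclidean distance, the function names, and the convention $s_i = 0$ for singleton clusters are also assumptions.

```python
import math
from collections import Counter, defaultdict
from itertools import permutations


def accuracy(y_true, y_pred):
    """ACC under the best one-to-one cluster-to-class mapping (brute force)."""
    classes = sorted(set(y_true))
    clusters = sorted(set(y_pred))
    counts = Counter(zip(y_pred, y_true))  # (cluster, class) -> co-occurrences
    # Pad with None so every cluster can be mapped even if there are more clusters than classes
    slots = classes + [None] * max(0, len(clusters) - len(classes))
    best = max(
        sum(counts[(c, t)] for c, t in zip(clusters, mapping))
        for mapping in permutations(slots, len(clusters))
    )
    return best / len(y_true)


def _groups(X, labels):
    groups = defaultdict(list)
    for x, lab in zip(X, labels):
        groups[lab].append(x)
    return groups


def davies_bouldin(X, labels):
    """DBI = mean over clusters i of max_{j != i} (S_i + S_j) / M_ij."""
    groups = _groups(X, labels)
    cent = {c: [sum(col) / len(pts) for col in zip(*pts)] for c, pts in groups.items()}
    scatter = {c: sum(math.dist(x, cent[c]) for x in pts) / len(pts) for c, pts in groups.items()}
    total = sum(
        max((scatter[i] + scatter[j]) / math.dist(cent[i], cent[j]) for j in groups if j != i)
        for i in groups
    )
    return total / len(groups)


def average_silhouette(X, labels):
    """AS = mean of s_i = (b_i - a_i) / max(a_i, b_i) over all objects."""
    groups = _groups(X, labels)
    total = 0.0
    for x, lab in zip(X, labels):
        own = groups[lab]
        if len(own) == 1:
            continue  # singleton cluster: s_i taken as 0
        # The self-distance is 0, so dividing by len(own) - 1 averages over the other members
        a = sum(math.dist(x, y) for y in own) / (len(own) - 1)
        b = min(sum(math.dist(x, y) for y in pts) / len(pts) for c, pts in groups.items() if c != lab)
        total += (b - a) / max(a, b) if max(a, b) > 0 else 0.0
    return total / len(X)


X = [(0, 0), (0, 1), (10, 0), (10, 1)]
labels = [0, 0, 1, 1]
print(accuracy([1, 1, 0, 0], labels))  # 1.0
print(davies_bouldin(X, labels))       # 0.1
print(average_silhouette(X, labels))
```

For the toy data the two clusters are compact and far apart, which gives ACC = 1, a DBI of 0.1, and an average silhouette of about 0.9. For larger datasets, scikit-learn's `davies_bouldin_score` and `silhouette_score` compute the same two indices; `davies_bouldin_score` also measures intra-cluster distance as the mean distance to the centroid.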

4.2. Performances of Proposed Algorithm

4.3. Experimental Results Analysis

- Like the k-means algorithm, the proposed method achieves good results on convex datasets; on non-convex datasets, it fails to give good results.

- The time complexity of the proposed algorithm is higher than that of k-means and three-way k-means, so it is not suitable for big data.

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Fujita, H.; Li, T.R.; Yao, Y.Y. Advances in three-way decisions and granular computing. Knowl.-Based Syst. 2016, 91, 1–3.

- Yao, Y.Y. Integrative Levels of Granularity, Human-Centric Information Processing through Granular Modelling; Springer: Berlin/Heidelberg, Germany, 2009; pp. 31–47.

- Fujita, H.; Gaeta, A.; Loia, V.; Orciuoli, F. Resilience analysis of critical infrastructures: A cognitive approach based on granular computing. IEEE Trans. Cybern. 2018, 49, 1835–1848.

- Pedrycz, W. Granular Computing Analysis and Design of Intelligent Systems; CRC Press: Boca Raton, FL, USA, 2013.

- Xu, R.; Wunsch, D.C. Survey of clustering algorithms. IEEE Trans. Neural Netw. Learn. Syst. 2005, 16, 645–678.

- Yang, X.B.; Qi, Y.S.; Song, X.N.; Yang, J.Y. Test cost sensitive multigranulation rough set: Model and minimal cost selection. Inf. Sci. 2013, 250, 184–199.

- Xu, W.H.; Guo, Y.T. Generalized multigranulation double-quantitative decision-theoretic rough set. Knowl.-Based Syst. 2016, 105, 190–205.

- Li, W.T.; Xu, W.H.; Zhang, X.Y.; Zhang, J. Updating approximations with dynamic objects based on local multigranulation rough sets in ordered information systems. Artif. Intell. Rev. 2022, 55, 1821–1855.

- Xu, W.H.; Yu, J.H. A novel approach to information fusion in multi-source datasets: A granular computing viewpoint. Inf. Sci. 2017, 378, 410–423.

- Chen, X.W.; Xu, W.H. Double-quantitative multigranulation rough fuzzy set based on logical operations in multi-source decision systems. Int. J. Mach. Learn. Cybern. 2022, 13, 1021–1048.

- Xu, W.H.; Yuan, K.H.; Li, W.T. Dynamic updating approximations of local generalized multigranulation neighborhood rough set. Appl. Intell. 2022, 52, 9148–9173.

- Yang, X.B.; Yao, Y.Y. Ensemble selector for attribute reduction. Appl. Soft Comput. 2018, 70, 1–11.

- Jiang, Z.H.; Yang, X.B.; Yu, H.L.; Liu, D.; Wang, P.X.; Qian, Y.H. Accelerator for multi-granularity attribute reduction. Knowl.-Based Syst. 2019, 177, 145–158.

- Li, J.Z.; Yang, X.B.; Song, X.N.; Li, J.H.; Wang, P.X.; Yu, D.-J. Neighborhood attribute reduction: A multi-criterion approach. Int. J. Mach. Learn. Cybern. 2019, 10, 731–742.

- Liu, K.Y.; Yang, X.B.; Yu, H.L.; Fujita, H.; Chen, X.J.; Liu, D. Supervised information granulation strategy for attribute reduction. Int. J. Mach. Learn. Cybern. 2020, 11, 2149–2163.

- Xu, S.P.; Yang, X.B.; Yu, H.L.; Yu, D.-J.; Yang, J.; Tsang, E.C.C. Multi-label learning with label-specific feature reduction. Knowl.-Based Syst. 2016, 104, 52–61.

- Liu, K.Y.; Yang, X.B.; Fujita, H. An efficient selector for multi-granularity attribute reduction. Inf. Sci. 2019, 505, 457–472.

- Liu, K.Y.; Yang, X.B.; Yu, H.L.; Mi, J.S.; Wang, P.X.; Chen, X.J. Rough set based semi-supervised feature selection via ensemble selector. Knowl.-Based Syst. 2020, 165, 282–296.

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Statistics; University of California Press: Berkeley, CA, USA, 1967; pp. 281–297.

- Zhang, G.; Zhang, C.C.; Zhang, H.Y. Improved k-means algorithm based on density canopy. Knowl.-Based Syst. 2018, 145, 289–297.

- Wang, P.X.; Shi, H.; Yang, X.B.; Mi, J.S. Three-way k-means: Integrating k-means and three-way decision. Int. J. Mach. Learn. Cybern. 2019, 10, 2767–2777.

- Yao, Y.Y. Three-way decisions with probabilistic rough sets. Inf. Sci. 2010, 180, 341–353.

- Yao, Y.Y. The superiority of three-way decisions in probabilistic rough set models. Inf. Sci. 2011, 181, 1080–1096.

- Colorni, A.; Dorigo, M.; Maniezzo, V. Distributed optimization by ant colonies. In Proceedings of the European Conference on Artificial Life, Paris, France, 11–13 December 1991.

- Yao, Y.Y. Three-way decision and granular computing. Int. J. Approx. Reason. 2018, 103, 107–123.

- Luo, J.F.; Hu, M.J.; Qin, K.Y. Three-way decision with incomplete information based on similarity and satisfiability. Int. J. Approx. Reason. 2020, 120, 151–183.

- Xu, J.F.; Zhang, Y.J.; Miao, D.Q. Three-way confusion matrix for classification: A measure driven view. Inf. Sci. 2020, 507, 772–794.

- Qi, J.J.; Qian, T.; Wei, L. The connections between three-way and classical concept lattices. Knowl.-Based Syst. 2016, 91, 143–151.

- Li, J.H.; Huang, C.C.; Qi, J.J.; Qian, Y.H.; Liu, W.Q. Three-way cognitive concept learning via multi-granularity. Inf. Sci. 2017, 378, 244–263.

- Yuan, K.H.; Xu, W.H.; Li, W.T.; Ding, W.P. An incremental learning mechanism for object classification based on progressive fuzzy three-way concept. Inf. Sci. 2022, 584, 127–147.

- Li, X.N. Three-way fuzzy matroids and granular computing. Int. J. Approx. Reason. 2019, 114, 44–50.

- Fujita, H.; Gaeta, A.; Loia, V.; Orciuoli, F. Improving awareness in early stages of security analysis: A zone partition method based on GrC. Appl. Intell. 2019, 49, 1063–1077.

- Yang, X.; Li, T.R.; Liu, D.; Fujita, H. A temporal-spatial composite sequential approach of three-way granular computing. Inf. Sci. 2019, 486, 171–189.

- Hu, C.X.; Zhang, L. Incremental updating probabilistic neighborhood three-way regions with time-evolving attributes. Int. J. Approx. Reason. 2020, 120, 1–23.

- Yu, H. A framework of three-way cluster analysis. In Proceedings of the International Joint Conference on Rough Sets, Olsztyn, Poland, 3–7 July 2017; Volume 425, pp. 300–312.

- Shah, A.; Azam, N.; Ali, B.; Khan, M.T.; Yao, J.T. A three-way clustering approach for novelty detection. Inf. Sci. 2021, 569, 650–668.

- Wang, P.X.; Yao, Y.Y. CE3: A three-way clustering method based on mathematical morphology. Knowl.-Based Syst. 2018, 155, 54–65.

- Jia, X.Y.; Rao, Y.; Li, W.W.; Yang, S.C.; Yu, H. An automatic three-way clustering method based on sample similarity. Int. J. Mach. Learn. Cybern. 2021, 12, 1545–1556.

- Fan, J.C.; Wang, P.X.; Jiang, C.M.; Yang, X.B.; Song, J.J. Ensemble learning using three-way density-sensitive spectral clustering. Int. J. Approx. Reason. 2022, 149, 70–84.

- Shah, A.; Azam, N.; Alanazi, E.; Yao, J.T. Image blurring and sharpening inspired three-way clustering approach. Appl. Intell. 2022.

- Afridi, M.K.; Azam, N.; Yao, J.T. A three-way clustering approach for handling missing data using gtrs. Int. J. Approx. Reason. 2018, 98, 11–24.

- Wang, P.X.; Chen, X.J. Three-way ensemble clustering for incomplete data. IEEE Access 2020, 8, 91855–91864.

- Wang, P.X.; Yang, X.B. Three-way clustering method based on stability theory. IEEE Access 2021, 9, 33944–33953.

- Yu, H.; Chen, L.Y.; Yao, J.T. A three-way density peak clustering method based on evidence theory. Knowl.-Based Syst. 2021, 211, 106532.

- Fan, J.C.; Wang, X.X.; Wu, T.F.; Zhu, J.; Wang, P.X. Three-way ensemble clustering based on sample’s perturbation theory. Mathematics 2022, 10, 2598.

- Wu, T.F.; Fan, J.C.; Wang, P.X. An improved three-way clustering based on ensemble strategy. Mathematics 2022, 10, 1457.

- Vinh, N.X.; Epps, J.; Bailey, J. Information theoretic measures for clusterings comparison: Variants, properties, normalization and correction for chance. J. Mach. Learn. Res. 2010, 11, 2837–2854.

- Bezdek, J.C. Pattern recognition with fuzzy objective function algorithms. Adv. Appl. Pattern Recognit. 1981, 22, 203–239.

| ID | Dataset | Samples | Attributes | Classes |
|---|---|---|---|---|
| 1 | Wine | 178 | 13 | 3 |
| 2 | Glass | 214 | 9 | 6 |
| 3 | Ecoli | 366 | 7 | 8 |
| 4 | Forest | 523 | 27 | 4 |
| 5 | Bank | 1372 | 4 | 2 |
| 6 | Iris | 150 | 4 | 3 |
| 7 | Contraceptive | 1473 | 9 | 3 |
| 8 | Molecular Biology | 106 | 52 | 2 |
| 9 | Libras | 360 | 90 | 15 |
| 10 | Caffeine Consumption | 1885 | 12 | 7 |


| ID | Dataset | k-Means | FCM | Three-Way k-Means | Proposed Algorithm |
|---|---|---|---|---|---|
| 1 | Wine | 0.6573 | 0.6692 | 0.6831 | 0.6911 |
| 2 | Glass | 0.5981 | 0.6007 | 0.6112 | 0.6366 |
| 3 | Ecoli | 0.6339 | 0.6335 | 0.6652 | 0.6773 |
| 4 | Forest | 0.7795 | 0.7540 | 0.7807 | 0.8294 |
| 5 | Bank | 0.5758 | 0.5969 | 0.6123 | 0.6131 |
| 6 | Iris | 0.8866 | 0.8933 | 0.9040 | 0.9040 |
| 7 | Contraceptive | 0.2145 | 0.2179 | 0.2822 | 0.2826 |
| 8 | Molecular Biology | 0.6037 | 0.6226 | 0.6547 | 0.6659 |
| 9 | Libras | 0.8611 | 0.9162 | 0.9256 | 0.9240 |
| 10 | Caffeine Consumption | 0.2005 | 0.1960 | 0.2411 | 0.2422 |


| ID | Dataset | k-Means | FCM | Three-Way k-Means | Proposed Algorithm |
|---|---|---|---|---|---|
| 1 | Wine | 1.7835 | 1.6922 | 1.5542 | 1.5431 |
| 2 | Glass | 1.0475 | 1.2233 | 0.7855 | 0.7596 |
| 3 | Ecoli | 1.1504 | 1.0273 | 0.9667 | 0.9425 |
| 4 | Forest | 1.2774 | 1.2253 | 1.200 | 1.1879 |
| 5 | Bank | 1.1913 | 1.1952 | 1.1332 | 1.1267 |
| 6 | Iris | 0.7609 | 0.7507 | 0.7236 | 0.7355 |
| 7 | Contraceptive | 1.2716 | 1.2539 | 1.2323 | 1.2220 |
| 8 | Molecular Biology | 4.9588 | 4.8236 | 4.6783 | 4.6689 |
| 9 | Libras | 1.9240 | 1.9126 | 1.9033 | 1.9023 |
| 10 | Caffeine Consumption | 1.9116 | 1.8072 | 1.6655 | 1.6048 |


| ID | Dataset | k-Means | FCM | Three-Way k-Means | Proposed Algorithm |
|---|---|---|---|---|---|
| 1 | Wine | 0.3383 | 0.2337 | 0.3347 | 0.3574 |
| 2 | Glass | 0.5309 | 0.5543 | 0.5887 | 0.6038 |
| 3 | Ecoli | 0.4419 | 0.4326 | 0.4433 | 0.4524 |
| 4 | Forest | 0.4029 | 0.4302 | 0.4559 | 0.4669 |
| 5 | Bank | 0.5000 | 0.4954 | 0.5111 | 0.5280 |
| 6 | Iris | 0.6959 | 0.7091 | 0.7114 | 0.7188 |
| 7 | Contraceptive | 0.4236 | 0.4309 | 0.4597 | 0.4672 |
| 8 | Molecular Biology | 0.0553 | 0.0538 | 0.0558 | 0.0585 |
| 9 | Libras | 0.3519 | 0.3000 | 0.3533 | 0.3556 |
| 10 | Caffeine Consumption | 0.3150 | 0.3491 | 0.3517 | 0.3563 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guo, Q.; Yin, Z.; Wang, P. An Improved Three-Way K-Means Algorithm by Optimizing Cluster Centers. Symmetry 2022, 14, 1821. https://doi.org/10.3390/sym14091821