3.2. Enhanced Image

The proposed method corrects the color imbalance of sandstorm images, and the balanced images appear natural. However, the balanced images remain hazy, so a dehazing procedure is needed to enhance them. Dehazing algorithms are frequently used for this purpose. He et al. enhanced hazy images using the dark channel prior and a transmission map [1]. Meng et al. improved hazy images using a boundary-refined transmission map [11]. Ren et al. enhanced hazy images using a convolutional neural network (CNN) [17]. Gao et al. improved sandstorm images using the autoreversing blue channel compensation method and an adaptive transmission map [13]. Shi et al. enhanced sandstorm images using the mean shift of color components and an adjustable transmission map [12]. Al Ameen [9] improved sandstorm images using the gamma correction method. Shi et al. enhanced sandstorm images using the mean shift of color components and adaptive CLAHE [10]. Lee improved degraded sandstorm images using a normalized eigenvalue and adaptive DCP [16]. Dhara et al. improved hazy images using adaptive airlight refinement and nonlinear color balancing [15]. Because sandstorm images resemble dusty or hazy images, existing dehazing methods were included in the comparison of the enhanced images.
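Several of the compared methods rely on a dark channel and a transmission map. As a rough illustration of that idea only, the sketch below computes a dark channel and the commonly used estimate t = 1 - ω · dark(I / A) in plain Python. It is not the implementation of any compared method or of the proposed method; the function names, the patch size, the value of `omega`, and the airlight input are all assumptions made for this example.

```python
def dark_channel(image, patch=3):
    """image: list of rows, each row a list of (r, g, b) floats in [0, 1]."""
    height, width = len(image), len(image[0])
    # Per-pixel minimum over the three color channels.
    min_rgb = [[min(pixel) for pixel in row] for row in image]
    radius = patch // 2
    dark = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Minimum over the square neighborhood, clipped at the borders.
            dark[y][x] = min(
                min_rgb[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            )
    return dark


def estimate_transmission(image, airlight, omega=0.95, patch=3):
    """Estimate t = 1 - omega * dark_channel(image / airlight).

    airlight is an (r, g, b) tuple with nonzero entries (assumed known here).
    """
    normalized = [
        [tuple(c / a for c, a in zip(pixel, airlight)) for pixel in row]
        for row in image
    ]
    dark = dark_channel(normalized, patch)
    return [[1.0 - omega * d for d in row] for row in dark]
```

Because the minimum is taken over a square patch, the patch size controls how blocky the estimated transmission map is before any refinement step.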

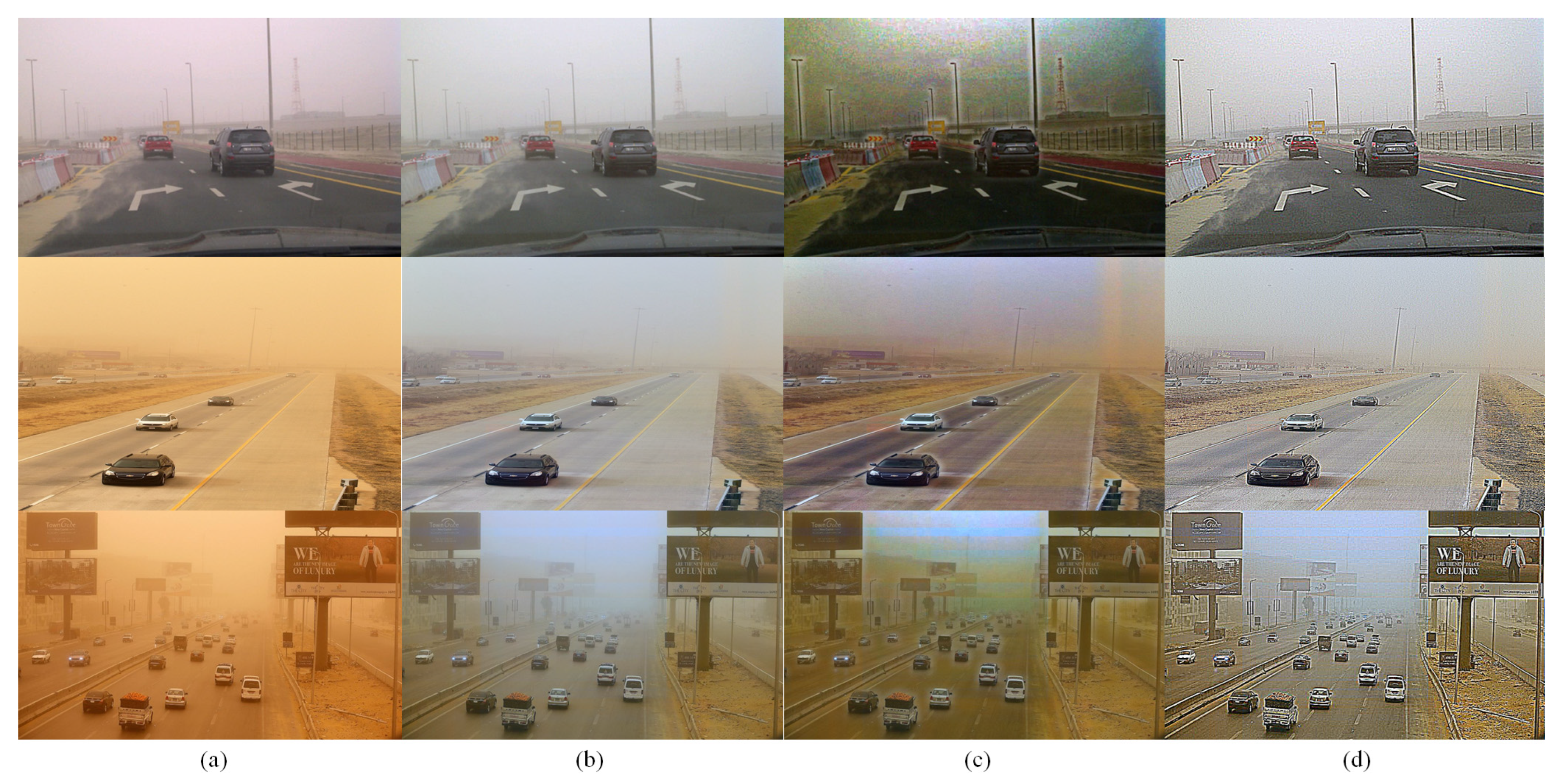

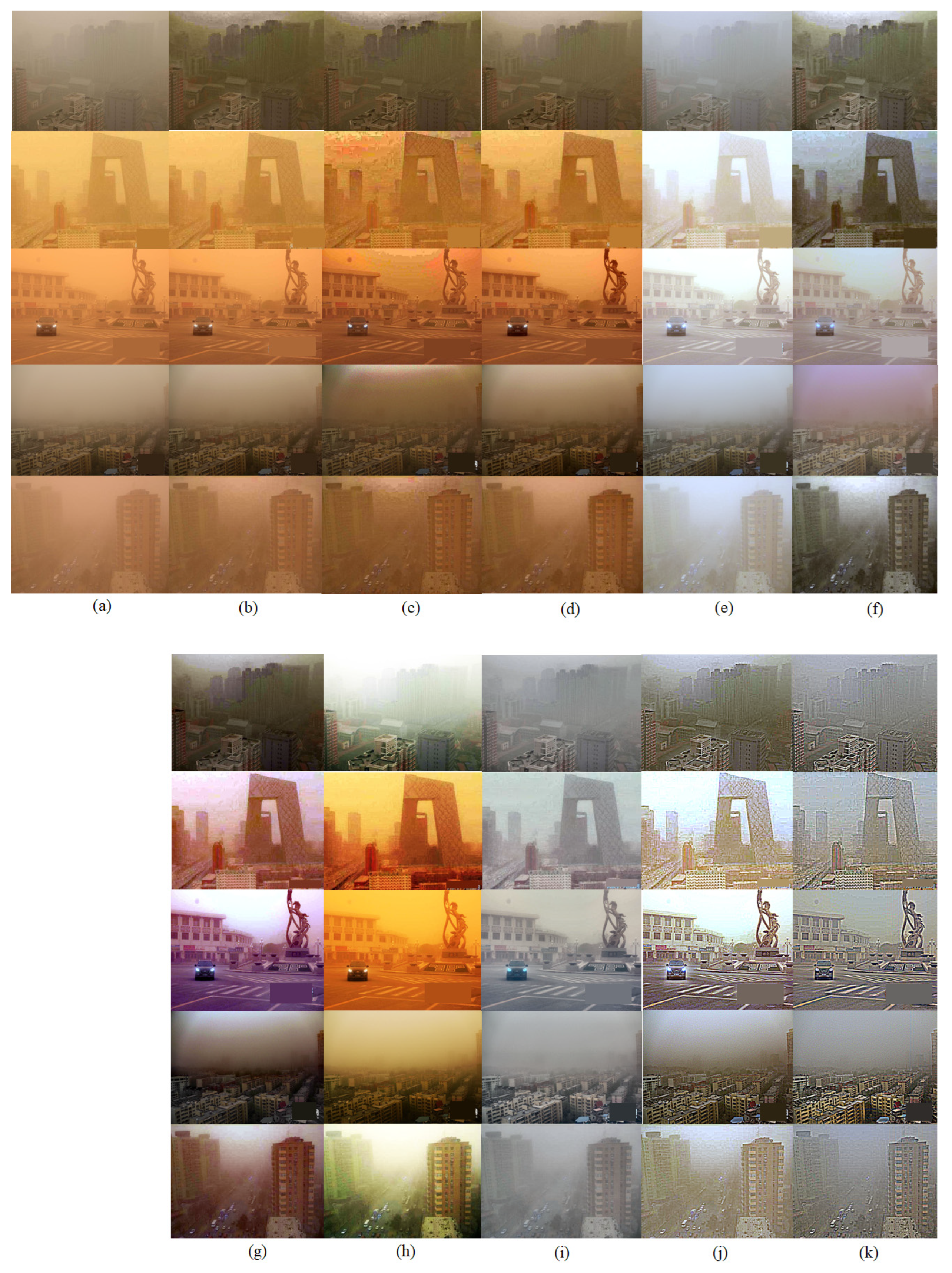

Figures 8 and 9 show lightly and greatly degraded sandstorm images, together with the enhanced images produced using the proposed method and state-of-the-art methods. He et al.’s method [1] enhances only the lightly degraded sandstorm images; because it has no color balancing procedure, an artificial color shift occurs in the enhanced images. Meng et al.’s method [11] improves the sandstorm images in the lightly distorted case. However, for the most degraded sandstorm images, its enhanced images have an artificial color because the method has no color correction procedure. Ren et al.’s method [17] enhances the lightly degraded sandstorm images because they resemble dusty images. However, for the most degraded sandstorm images, its enhanced images exhibit a new color shift. Gao et al.’s method [13] enhances the sandstorm images without a color shift, and the improved images appear natural. Shi et al.’s method [12] enhances the sandstorm images, but the enhanced images have a color shift and a ringing effect caused by the transmission map, which is estimated using a kernel of a certain size. Al Ameen’s method [9] enhances the sandstorm images in the lightly degraded case. However, for the greatly degraded sandstorm images, the enhanced images have an artificial color shift: because this method applies a constant value regardless of the image’s features, the enhanced images contain distorted regions. The enhanced images produced using Dhara et al.’s method [15] have an artificial color in some cases; although this method has a color balancing procedure, it does not sufficiently reflect the features of sandstorm images. Lee’s method [16] improves the sandstorm images; however, a bright region appears because the red channel is abundant. Shi et al.’s method [10] improves the sandstorm images; however, a bluish color appears in some of the images due to the mean shift of color components. Meanwhile, the enhanced images produced using the proposed method have no color shift or ringing effect in either the lightly or the greatly degraded sandstorm images.
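The remark that a constant correction value cannot suit every image can be illustrated with a fixed gamma exponent. The sketch below is not Al Ameen’s implementation [9]; it assumes the constant is a gamma exponent, and the function name, the exponent, and the sample intensities are hypothetical values chosen only for illustration.

```python
def gamma_correct(values, gamma):
    """Apply out = 255 * (in / 255) ** gamma to 8-bit intensities."""
    return [round(255 * (v / 255) ** gamma) for v in values]


# Hypothetical red-channel samples from a lightly and a greatly degraded image.
light = [120, 140, 160]
heavy = [200, 220, 240]

# The same exponent is applied to both, whatever the image statistics are.
fixed_gamma = 2.0  # assumed value, for illustration only
print(gamma_correct(light, fixed_gamma))
print(gamma_correct(heavy, fixed_gamma))
```

An image-adaptive scheme would instead derive the correction strength from each image, for example from its channel statistics.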

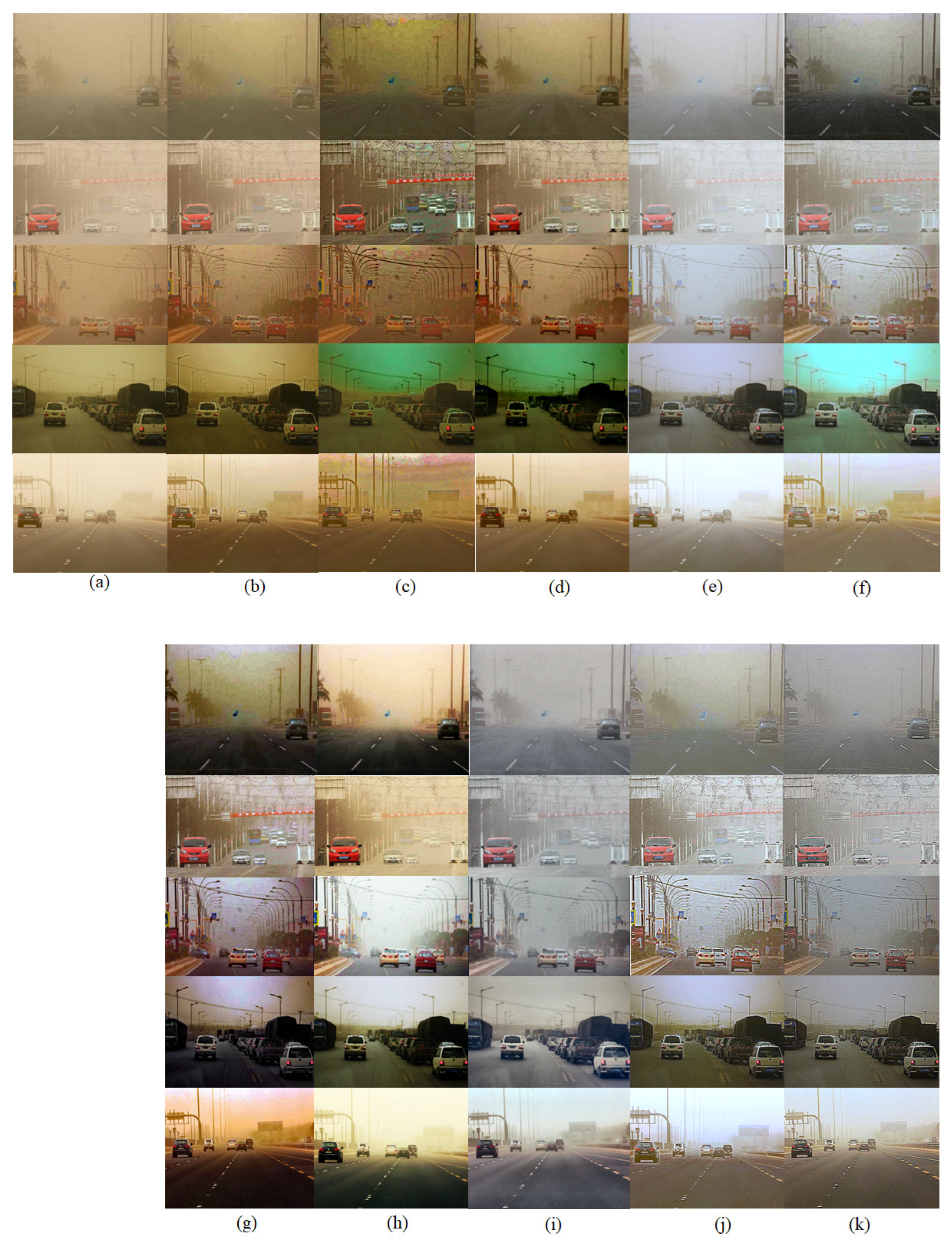

Figures 10 and 11 show lightly and greatly degraded sandstorm images, together with the improved images produced using the proposed method and state-of-the-art methods. He et al.’s method [1] improves the lightly degraded sandstorm images; however, for the greatly degraded sandstorm images, the enhanced images exhibit a color shift and an artificial effect. The enhanced images produced using Meng et al.’s method [11] have an artificial effect as well as a color shift and a ringing effect. Ren et al.’s method [17] improves the sandstorm images in the case of light degradation. However, for most of the degraded sandstorm images, the enhanced images have a greenish color shift and an artificial effect. Gao et al.’s method [13] enhances both the lightly and greatly degraded sandstorm images. Shi et al.’s method [12] enhances the sandstorm images; however, the enhanced images have a color shift and an artificial region due to the dehazing procedure and the transmission map. Al Ameen’s method [9] enhances the lightly degraded sandstorm images. However, for the greatly degraded sandstorm images, the enhanced images have a color shift because this method uses a constant value to correct the color, which creates new color-distorted regions. The enhanced images produced using Dhara et al.’s method [15] have an artificial color in some images because this method does not sufficiently reflect the features of sandstorm images. The enhanced images produced using Lee’s method [16] have a bright area in some cases because the red channel is abundant and its eigenvalue is also more abundant than the others. The enhanced images produced using Shi et al.’s method [10] have a bluish color in some images due to the mean shift of color components. Meanwhile, the enhanced images produced using the proposed method have no color shift or artificial regions.

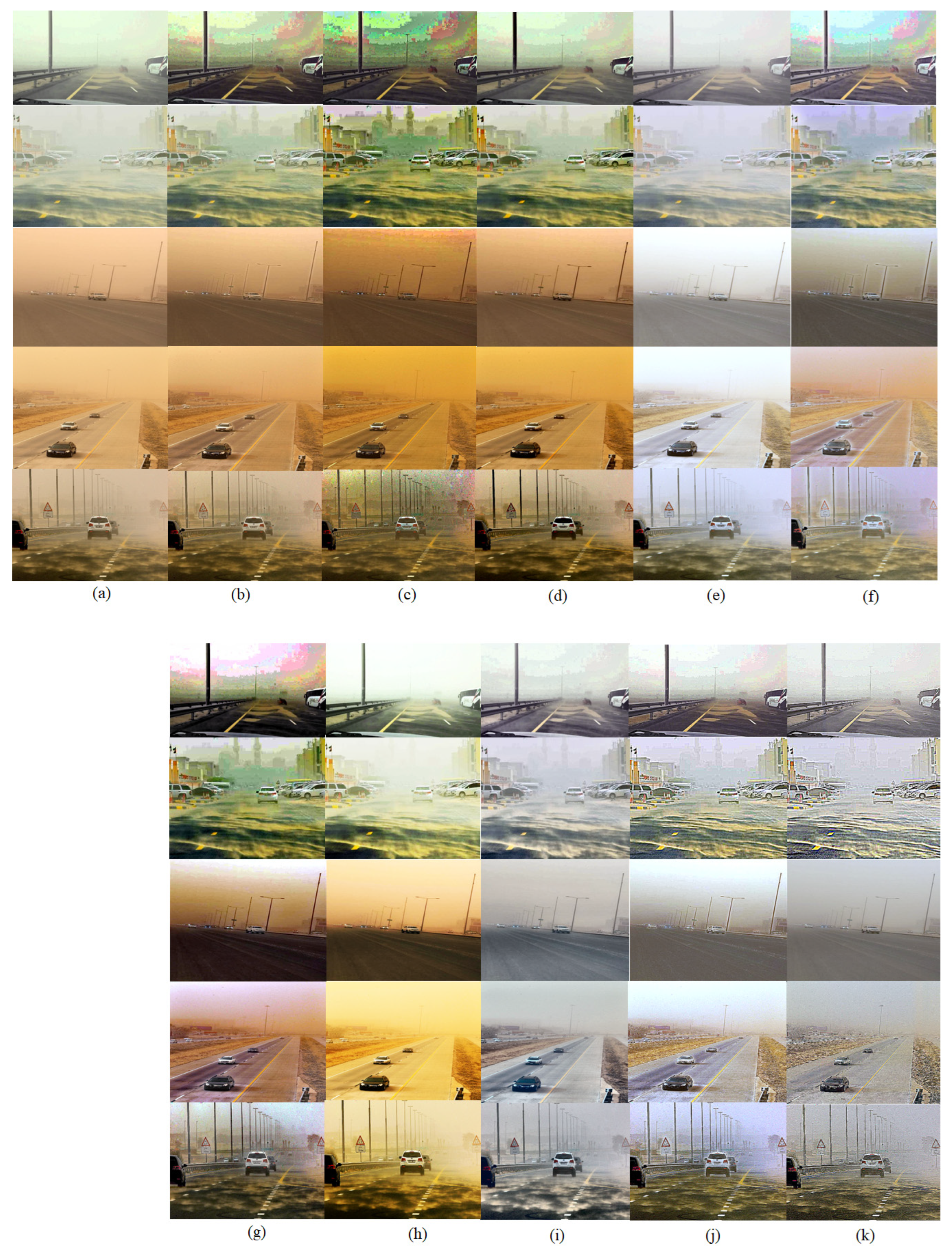

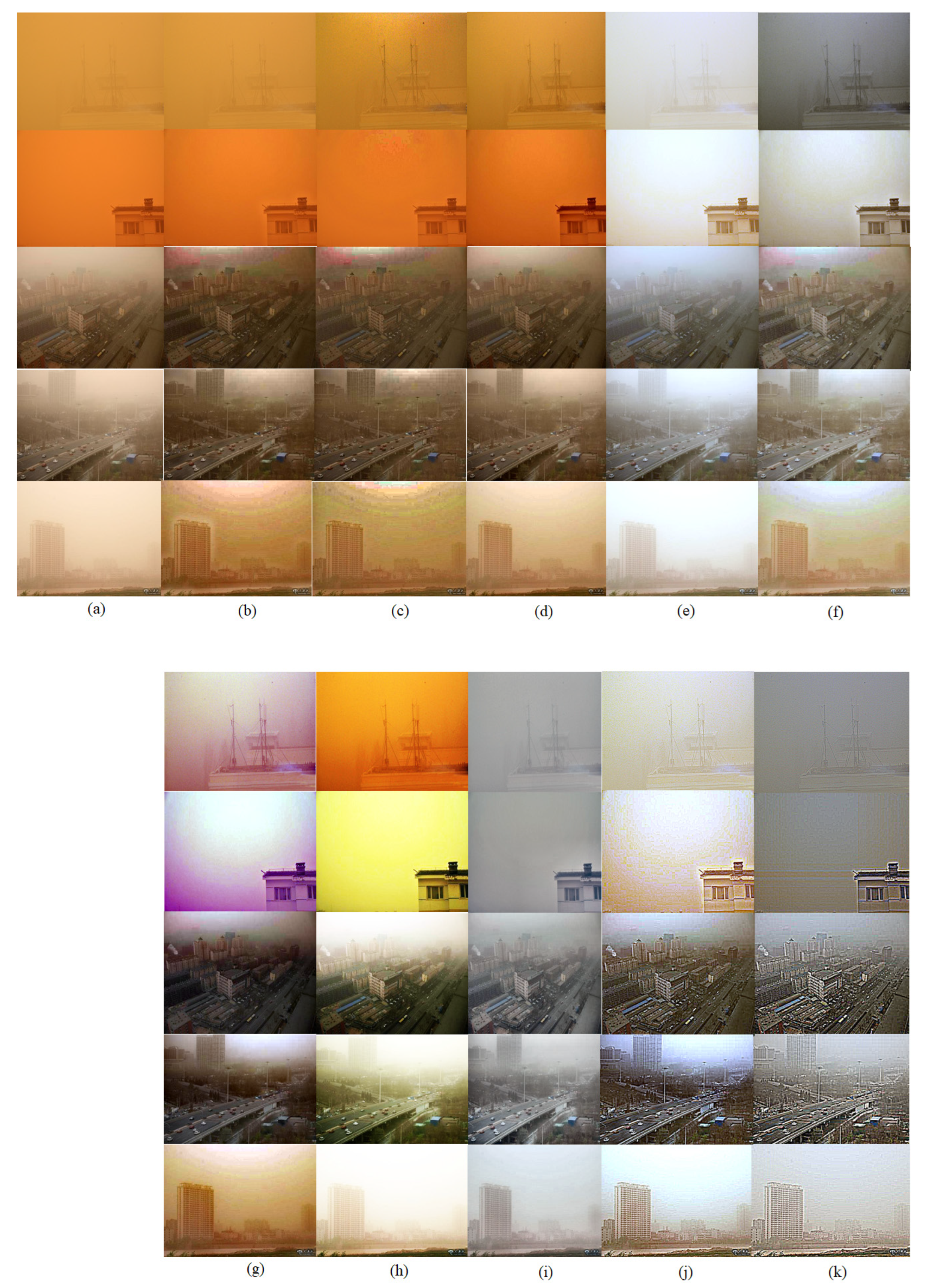

Figures 12 and 13 show degraded sandstorm images and the enhanced images produced using the proposed method and state-of-the-art methods. The enhanced images produced using He et al.’s method [1] have a color shift and a ringing effect, and the degraded color is still present because this method has no color balancing procedure. Meng et al.’s method [11] enhances the lightly degraded sandstorm images. For the greatly distorted sandstorm images, however, its enhanced images have an artificial color shift and a ringing effect because the method lacks a color correction procedure. The enhanced images produced using Ren et al.’s method [17] have a color shift and an artificial ringing effect, and color degradation remains because this method has no color balancing procedure. Gao et al.’s method [13] enhances sandstorm images naturally without a color shift; however, the enhanced images display bright regions. Shi et al.’s method [12] enhances the distorted sandstorm images; however, a ringing effect and distorted areas occur due to the dehazing procedure and the transmission map. Al Ameen’s method [9] enhances only the lightly degraded sandstorm images because it has no image-adaptive color correction procedure and uses a constant value to correct the image’s color. Shi et al.’s method [10] improves sandstorm images naturally; however, a bluish artificial color is present in some images. Lee’s method [16] enhances the degraded sandstorm images naturally; however, a bright region is present that looks unnatural. Dhara et al.’s method [15] enhances the sandstorm images; however, degraded color remains in some images because this method does not reflect the features of sandstorm images.

As shown in Figures 8–13, enhancing degraded sandstorm images naturally requires both an image-adaptive color correction procedure and a dehazing procedure. The proposed method enhances both lightly and greatly degraded sandstorm images naturally, without any color shift or artificial regions. Therefore, the subjective performance of the proposed method in sandstorm image enhancement is superior to that of the state-of-the-art methods.

3.3. Objective Comparison

The enhanced sandstorm images are compared in

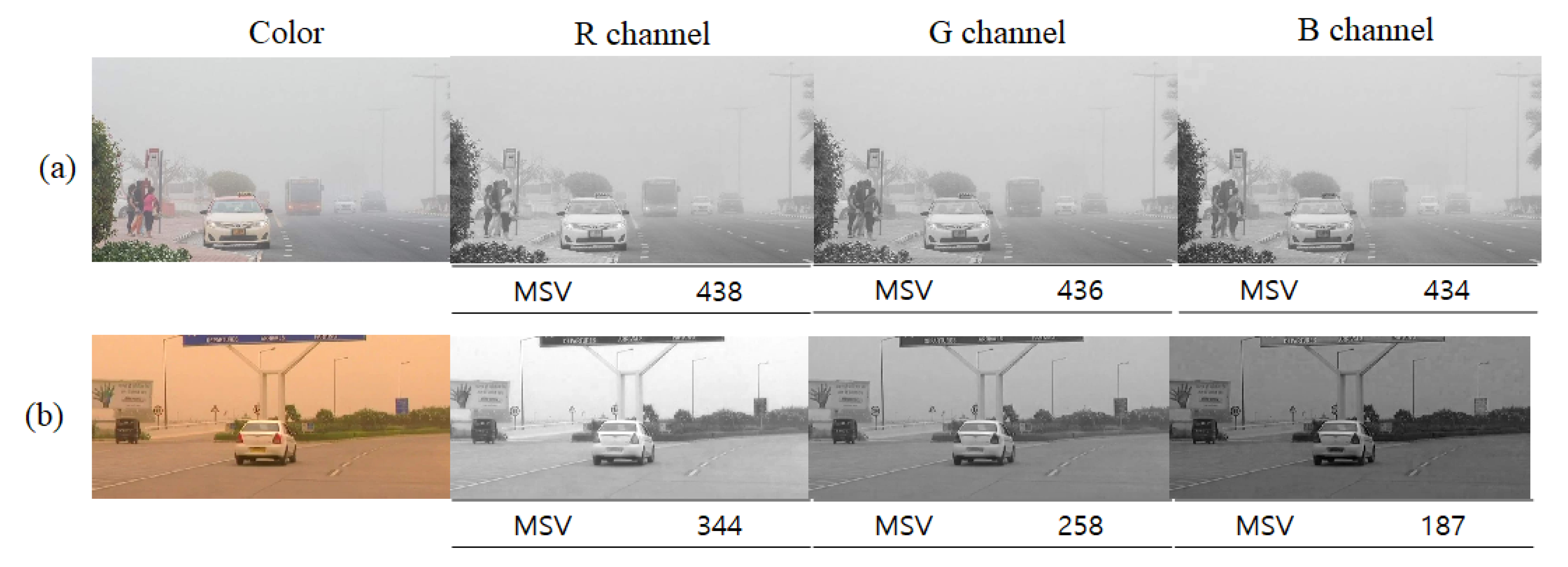

Figure 5,

Figure 6,

Figure 7,

Figure 8,

Figure 9,

Figure 10,

Figure 11,

Figure 12 and

Figure 13. As shown in

Figure 5,

Figure 6,

Figure 7,

Figure 8,

Figure 9,

Figure 10,

Figure 11,

Figure 12 and

Figure 13, the proposed method has good subjective performance in the sandstorm image enhancement field. This section presents the objective comparison of the enhanced images produced using the proposed method and state-of-the-art methods. To assess the performance, this paper use three metrics, namely UIQM [

27], NIQE [

28], and FADE [

29]. The UIQM [

27] measure is used in the enhanced underwater image assessment area. Sandstorm and underwater images have similar features, such as color distortion. Underwater images have a blueish or greenish color distortion due to light attenuation. Additionally, sandstorm images have reddish or yellowish color degradation owing to color channel attenuation because of light being scattered by sand particles. Because underwater images have similar features to sandstorm images, for this reason, this paper assessed the enhanced sandstorm images using the UIQM measure [

27]. The UIQM [

27] measure indicates the image’s sharpness, contrast, and colorfulness. If the score is high, then the enhanced image has high quality. The NIQE [

28] measure indicates how natural an image is using the image’s statistical features such as the Gaussian total variation. If the enhanced image has good quality, then the NIQE [

28] has a low score. The FADE [

29] score reflects how hazy the enhanced image is. If the score is lower, then the enhanced image is less hazy, and vice versa.
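Because the three metrics point in different directions, comparing methods requires keeping track of which direction is better. The following sketch encodes the directions stated above and ranks methods accordingly; the function name, the dictionary, and the scores are placeholders for hypothetical methods and are not values taken from the tables.

```python
# Direction of each metric as described in the text:
# UIQM is better when higher; NIQE and FADE are better when lower.
HIGHER_IS_BETTER = {"UIQM": True, "NIQE": False, "FADE": False}


def rank_methods(scores, metric):
    """Return method names ordered from best to worst for the given metric."""
    return sorted(scores, key=scores.get, reverse=HIGHER_IS_BETTER[metric])


# Placeholder scores for hypothetical methods, not values from the tables.
example = {"method_a": 3.2, "method_b": 4.1, "method_c": 2.7}
print(rank_methods(example, "NIQE"))
print(rank_methods(example, "UIQM"))
```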

Tables 1–3 show the NIQE [28] scores for Figures 8–13.

Table 1 shows the NIQE [28] scores for Figures 8 and 9. He et al.’s method [1] has a lower NIQE score than Gao et al.’s method [13]: although its enhanced images have a color shift and a ringing effect, they are less hazy than those produced using Gao et al.’s method [13]. Gao et al.’s method [13] has a higher NIQE score than Al Ameen’s method [9]: although its enhanced images have no color shift, they are hazier than those produced using Al Ameen’s method [9]. Meng et al.’s method [11] has a lower NIQE score than Shi et al.’s method [10]; although the enhanced images produced using Meng et al.’s method [11] have a distorted color, they are less hazy than those produced using Shi et al.’s method [12]. Shi et al.’s method [12] has a higher NIQE score than Ren et al.’s method [17], although the enhanced images produced using Ren et al.’s method [17] have a color shift. Lee’s method [16] has a lower NIQE score than Shi et al.’s method [10]: although its enhanced images have a bright region, they have no artificial color cast. Dhara et al.’s method [15] has a lower NIQE score than Gao et al.’s method [13] because its enhanced images are less hazy. Shi et al.’s method [10] has a higher NIQE score than Meng et al.’s method [11]: although its enhanced images have less color cast, those produced using Meng et al.’s method [11] are less hazy. Meanwhile, the enhanced images produced using the proposed method have a lower NIQE score than those produced using the existing methods because they have no color shift or ringing effect.

Table 2 shows the NIQE [28] scores for Figures 10 and 11. He et al.’s method [1] has a lower NIQE score than Gao et al.’s method [13]: although its enhanced images have a color shift and a ringing effect, they are less hazy than those produced using Gao et al.’s method [13]. Gao et al.’s method [13] has a higher NIQE score than Al Ameen’s method [9]: although the enhanced images produced using Al Ameen’s method [9] have a color shift, they are less hazy than those produced using Gao et al.’s method [13], and this is reflected in the NIQE score. Meng et al.’s method [11] has a lower NIQE score than Shi et al.’s method [12]: although its enhanced images have a color shift, they are less hazy than those produced using Shi et al.’s method [12]. Shi et al.’s method [12] has a lower NIQE score than Ren et al.’s method [17] in some images. Ren et al.’s method [17] has a lower NIQE score than Gao et al.’s method [13] in some images: although its enhanced images have a color shift, they are less hazy. Lee’s method [16] has a lower NIQE score than Gao et al.’s method [13] because its enhanced images are less hazy. Shi et al.’s method [10] has a higher NIQE score than Meng et al.’s method [11] in some images: although its enhanced images have a less artificial color cast, they are hazier than those produced using Meng et al.’s method [11]. Meanwhile, the enhanced images produced using the proposed method have a lower NIQE score than those produced using the other methods because they have no color shift or ringing effect.

Table 3 shows the NIQE [28] scores for Figures 12 and 13. He et al.’s method [1] has a lower NIQE score than Gao et al.’s method [13] because its enhanced images are less hazy. Al Ameen’s method [9] has a higher NIQE score than Meng et al.’s method [11] in some images, although the enhanced images produced using Meng et al.’s method [11] have a color shift. Meng et al.’s method [11] has a lower NIQE score than Shi et al.’s method [12] in some images: although its enhanced images have a color shift, they are less hazy than those produced using Shi et al.’s method [12]. Ren et al.’s method [17] has a lower NIQE score than Gao et al.’s method [13] because the enhanced images produced using Gao et al.’s method [13] are hazier. Lee’s method [16] has a lower NIQE score than Shi et al.’s method [10]. Shi et al.’s method [10] has a lower NIQE score than Gao et al.’s method [13] because its enhanced images are less hazy. Dhara et al.’s method [15] has a lower NIQE score than Al Ameen’s method [9] because its enhanced images have less color cast. Meanwhile, the enhanced images produced using the proposed method have a lower NIQE score than those of the other methods because they have no color shift or ringing effect.

As shown in Tables 1–3, enhancing sandstorm images naturally requires considering both the color balance and the hazy components of the image.

Table 4 shows the average NIQE [28] scores for Figures 8–13, the DAWN dataset [25], and WEAPD [26]. As shown in Table 4, although the existing dehazing methods cause a color shift, their enhanced images are less hazy than those produced using the existing sandstorm image enhancement methods, so the dehazing methods have lower NIQE scores.
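An average score per method can be obtained by pooling the per-image scores of all image sets. The sketch below shows one way to do this; whether the averages in the paper are pooled over images or averaged per set is not stated, so the pooling, the function name, and the data layout are assumptions.

```python
from statistics import mean


def average_scores(per_set_scores):
    """per_set_scores: {method: {image_set: [scores]}} -> {method: pooled mean}."""
    return {
        method: mean(score for scores in sets.values() for score in scores)
        for method, sets in per_set_scores.items()
    }
```

With pooling, an image set that contains more images contributes proportionally more to the average than a small one.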

Table 5 shows the UIQM [27] scores for Figures 8 and 9. He et al.’s method [1] has a higher UIQM score than Gao et al.’s method [13]: although its enhanced images have a color shift, they are less hazy than those produced using Gao et al.’s method [13]. Al Ameen’s method [9] has a higher UIQM score than Gao et al.’s method [13]: although its enhanced images have color distortion, the hazy effect is less pronounced than in those produced using Gao et al.’s method [13]. Meng et al.’s method [11] has a higher UIQM score than Al Ameen’s method [9] in some images: although its enhanced images have a color shift, the hazy effect is less pronounced than in those produced using Al Ameen’s method [9]. Shi et al.’s method [12] has a lower UIQM score than Ren et al.’s method [17] in some images: although the enhanced images produced using Ren et al.’s method [17] have a color shift, their hazy effect is less pronounced than in those produced using Shi et al.’s method [12]. Lee’s method [16] has a higher UIQM score than Gao et al.’s method [13] because its enhanced images are less hazy. Shi et al.’s method [10] has a lower UIQM score than Ren et al.’s method [17] in some images: although the enhanced images produced using Ren et al.’s method [17] have an artificial color, their hazy effect is less pronounced than in those produced using Shi et al.’s method [10]. Dhara et al.’s method [15] has a higher UIQM score than Meng et al.’s method [11] because its enhanced images have a less pronounced color cast. Meanwhile, the enhanced images produced using the proposed method have a higher UIQM score than those produced using the other methods because they have no color shift or ringing effect.

Table 6 shows the UIQM [27] scores for Figures 10 and 11. He et al.’s method [1] has a higher UIQM score than Gao et al.’s method [13]: although its enhanced images have color distortion, the hazy effect is less pronounced than in those produced using Gao et al.’s method [13]. Al Ameen’s method [9] has a higher UIQM score than Meng et al.’s method [11] in some images because the UIQM score reflects the image’s colorfulness, contrast, and sharpness. Meng et al.’s method [11] has a lower UIQM score than Al Ameen’s method [9] in some images because its enhanced images have a color shift. Shi et al.’s method [12] has a higher UIQM score than Meng et al.’s method [11] in some images because its enhanced images have less color shift. Ren et al.’s method [17] has a lower UIQM score than Shi et al.’s method [12] in some images because the UIQM score reflects the image’s colorfulness and the enhanced images produced using Ren et al.’s method [17] exhibit a color shift. Lee’s method [16] has a higher UIQM score than Dhara et al.’s method [15] because its enhanced images have less color cast. Dhara et al.’s method [15] has a higher UIQM score than Meng et al.’s method [11] because its enhanced images have less color cast. Shi et al.’s method [10] has a higher UIQM score than Gao et al.’s method [13] because its enhanced images are less hazy. Meanwhile, the enhanced images produced using the proposed method have a higher UIQM score than those produced using the other methods because they have no color distortion or ringing effect.

Table 7 shows the UIQM [27] scores for Figures 12 and 13. He et al.’s method [1] has a higher UIQM score than Gao et al.’s method [13] because the hazy effect in its enhanced images is less pronounced. Al Ameen’s method [9] has a higher UIQM score than both He et al.’s [1] and Gao et al.’s [13] methods: although its enhanced images have color distortion, the hazy effect is less pronounced than in those produced using Gao et al.’s method [13], and the color distortion is smaller than in those produced using He et al.’s method [1]. Meng et al.’s method [11] has a higher UIQM score than Gao et al.’s method [13]: although its enhanced images have color degradation, they are less hazy. Shi et al.’s method [12] has a higher UIQM score than Meng et al.’s method [11] in some images because its enhanced images have less color shift. Ren et al.’s method [17] has a higher UIQM score than Gao et al.’s method [13]: although its enhanced images have color distortion, they are less hazy. Lee’s method [16] has a higher UIQM score than Gao et al.’s method [13] because its enhanced images are less hazy. Shi et al.’s method [10] has a lower UIQM score than Meng et al.’s method [11]: although its enhanced images have less color cast, they are hazier. Dhara et al.’s method [15] has a higher UIQM score than Meng et al.’s method [11] because its enhanced images have less color cast. Meanwhile, the enhanced images produced using the proposed method have a higher UIQM score than those of the other methods because they have no color shift or ringing effect and appear natural.

As shown in Tables 5–7, to enhance a sandstorm image naturally, the image’s colorfulness should be considered.

Table 8 shows the average UIQM [27] scores for Figures 8–13, the DAWN dataset [25], and WEAPD [26]. Gao et al.’s method [13] has a lower UIQM score than the dehazing methods, although its enhanced images have no color distortion. Meanwhile, the proposed method has a higher UIQM score than the other methods because its enhanced images have no color distortion or ringing effect.

As shown in Table 8, to enhance a degraded sandstorm image naturally, the image’s colorfulness, sharpness, and contrast should be considered.

Table 9 shows the FADE [29] scores for Figures 8 and 9. He et al.’s method [1] has a higher FADE score than Meng et al.’s method [11] because Meng et al.’s method [11] has a more suitable refined transmission map. For the same reason, Meng et al.’s method [11] has a lower FADE score than the other existing dehazing methods. Ren et al.’s method [17] has a higher FADE score than Meng et al.’s method [11] because its enhanced images are hazier. Gao et al.’s method [13] has a higher FADE score than the other methods because its enhanced images are too hazy. Shi et al.’s method [12] has a lower FADE score than Gao et al.’s method [13] because its enhanced images are less hazy. Lee’s method [16] has a lower FADE score than Shi et al.’s method [12] because its enhanced images are less hazy. Dhara et al.’s method [15] has a lower FADE score than Gao et al.’s method [13] because the enhanced images produced using Gao et al.’s method [13] are hazier. Shi et al.’s method [10] has a higher FADE score than Shi et al.’s method [12] because its enhanced images are hazier. Al Ameen’s method [9] has a lower FADE score than Gao et al.’s method [13]: although its enhanced images have a color cast, the hazy effect is less pronounced than in those produced using Gao et al.’s method [13]. Meanwhile, the proposed method has a lower FADE score than the other methods because its enhanced images have no color cast and are less hazy.

Table 10 shows the FADE [29] scores for Figures 10 and 11. He et al.’s method [1] has a lower FADE score than Gao et al.’s method [13]: although its enhanced images have a color cast, they are less hazy than those produced using Gao et al.’s method [13]. Meng et al.’s method [11] has a lower FADE score than He et al.’s method [1] because its enhanced images are less hazy. Ren et al.’s method [17] has a lower FADE score than Gao et al.’s method [13]: although its enhanced images have a color cast, the hazy effect is less pronounced. Gao et al.’s method [13] has a high FADE score: although its enhanced images have no color cast, the hazy effect is more pronounced. Lee’s method [16] has a lower FADE score than Gao et al.’s method [13] because its enhanced images are less hazy. Shi et al.’s method [12] has a lower FADE score than Gao et al.’s method [13] because its enhanced images are less hazy. Shi et al.’s method [10] has a higher FADE score than the other dehazing methods: although its enhanced images have no color cast, they are hazier. Dhara et al.’s method [15] has a higher FADE score than Meng et al.’s method [11] because its enhanced images are hazier. In contrast, the enhanced images produced using the proposed method have a lower FADE score than those of the other methods because they have no color cast and are less hazy.

Table 11 shows the FADE [29] scores for Figures 12 and 13. He et al.’s method [1] has a higher FADE score than Meng et al.’s method [11] because Meng et al.’s method [11] has a refined transmission map, which yields a less hazy image. Meng et al.’s method [11] has a lower FADE score than Ren et al.’s method [17] because its enhanced images are less hazy. Ren et al.’s method [17] has a lower FADE score than He et al.’s method [1] because its enhanced images are less hazy. Gao et al.’s method [13] has a higher FADE score than the other methods: although its enhanced images have no color cast, they have a hazy effect. Al Ameen’s method [9] has a lower FADE score than Gao et al.’s method [13]: although its enhanced images have a color cast, the hazy effect is less pronounced. Lee’s method [16] has a lower FADE score than Gao et al.’s method [13] because its enhanced images are less hazy. Shi et al.’s method [12] has a higher FADE score than Al Ameen’s method [9]: although its enhanced images have no color cast, the hazy effect is more pronounced. Shi et al.’s method [10] has a higher FADE score than Meng et al.’s method [11]: although its enhanced images have no color cast, the hazy effect is more pronounced. Dhara et al.’s method [15] has a lower FADE score than Gao et al.’s method [13]: although its enhanced images have a color cast, they are less hazy. Meanwhile, the enhanced images produced using the proposed method have a lower FADE score than those of the other methods because they have no color cast and are less hazy.

Table 12 shows the average FADE [29] scores for Figures 8–13, the DAWN dataset [25], and WEAPD [26]. The existing dehazing methods have lower FADE scores than the sandstorm image enhancement methods, although their enhanced images have a color cast. Meanwhile, the enhanced images produced using the proposed method have a lower FADE score than those of the other methods because they have no color cast and are less hazy.

As shown in Figures 8–13 and Tables 1–12, the performance of the proposed method in sandstorm image enhancement is superior to that of the other existing methods.

The experimental setup consisted of an Intel® Core™ i7-8700 CPU @ 3.20 GHz, 32 GB of RAM, and a GeForce GTX 1650 GPU with 4 GB of memory.