Abstract

This paper deals with spatial data that can be modelled by partially linear varying coefficient spatial autoregressive models with Bayesian P-splines quantile regression. We evaluate the linear and nonlinear effects of covariates on the response and use quantile regression to present comprehensive information at different quantiles. We propose an empirical Bayesian approach to quantile regression that uses the asymmetric Laplace error distribution and employs P-splines to approximate the nonparametric components, and we develop an efficient Markov chain Monte Carlo technique to explore the joint posterior distributions of the unknown parameters. Monte Carlo simulations show that our estimators are robust to different spatial weight matrices and perform better than the quantile regression and instrumental variable quantile regression estimators in finite samples at different quantiles. Finally, the proposed method is applied to a set of Sydney real estate data to illustrate its performance.

1. Introduction

Spatial econometric models can deal with the spatial correlation and heterogeneity of variables in cross-sectional data and panel data, which expands the application scope of traditional econometric models. Among the many spatial econometric models, a large body of literature focuses on the spatial autoregressive (SAR) model [1]. For instance, Lee [2] studied the asymptotic properties of the quasi-maximum likelihood estimator of the spatial autoregressive model. Lee [3] proposed the generalized method of moments and the classical two-stage least-squares method to estimate mixed regressive and spatial autoregressive models. Kakamu and Wago [4] compared the Bayesian method and the maximum likelihood method to study small-sample properties of the panel spatial autoregressive model. Xu and Lee [5], among others, considered instrumental variable and maximum likelihood estimation for a spatial autoregressive model with a nonlinear transformation of the dependent variable.

However, these studies mainly focused on parametric models, which cannot adequately explain complex economic phenomena. In fact, the relationship among many economic variables is nonlinear [6,7,8,9]. To improve the flexibility and applicability of spatial econometric models, research on semiparametric spatial econometric models has been gradually increasing. For example, Sun et al. [10] proposed semiparametric varying coefficient spatial autoregressive models. Chen et al. [11] developed a two-stage Bayesian estimation method for semiparametric spatial autoregressive models. Dai et al. [12] applied the quantile regression approach to partially linear varying coefficient spatial autoregressive models. Cai and Xu [13] constructed varying coefficient quantile regression models for time series data.

Although nonparametric spatial autoregressive models can improve on the performance of the parametric spatial autoregressive model, they unavoidably suffer from the “curse of dimensionality” problem [14]. To solve this problem, several dimension-reduction approaches have been developed in the literature, including the additive model [15], the single-index model [16] and the varying coefficient model [17], to name a few. Among the different semiparametric models, the partially linear varying coefficient model is perhaps the most widely used. It not only retains the advantages of a linear model but also keeps the robustness of nonparametric regression, which allows it to overcome the “curse of dimensionality” problem well. Many scholars have enriched the estimation methods of varying coefficient models both theoretically and empirically, with approaches such as the splines method [18,19,20], the kernel method [21], local polynomial estimation [22,23] and basis function approximation [24,25].

According to the regression object of the model, semiparametric spatial econometric models are usually divided into mean regression models and quantile regression models. Most of the spatial econometric models in the existing literature belong to the former [26,27,28,29]. The mean regression model can only reflect the location information of the conditional distribution of the dependent variable and cannot describe its scale and shape. In contrast, the quantile regression model [30] can describe the location, scale and shape of the conditional distribution of the dependent variable and, in particular, capture the tail characteristics of the distribution. While linear regression needs to assume that the random error term obeys the normal distribution or the generalized Gauss–Laplace distribution [31], the quantile regression approach is strongly robust and makes no distributional assumption for the random error terms. Many early studies have been summarized in Koenker and Bassett [30], Koenker and Machado [32], Zerom [33], Chernozhukov and Hansen [34], Su and Yang [35], which considered quantile regression from both a frequentist and a Bayesian point of view. From the frequentist perspective, the estimation method relies on minimizing the asymmetric absolute loss function [36]. Concerning the Bayesian approach, Yu and Moyeed [37] introduced the asymmetric Laplace distribution [38] as a working likelihood to perform the inference. Bayesian quantile regression has since been implemented in a wide range of applications, including models for longitudinal studies [39], Lasso regression [40] and spatial analysis [41], among others. Motivated by these developments, we develop Bayesian quantile regression for a partially linear varying coefficient spatial autoregressive model.

The estimation methods for semiparametric spatial autoregressive models include the quasi-maximum likelihood estimation method [42], the instrumental variable method [43], the generalized method of moments [44] and Bayesian estimation methods [45]. Compared with the frequentist solutions, Bayesian estimation methods can infer the posterior distributions of parameters by utilizing prior information and allow parameter uncertainty to be taken into account. To the best of our knowledge, Bayesian inference for quantile regression has been applied by few authors, such as [37,46,47]. In addition, P-splines [48] have been a popular way of estimating nonlinearities in semiparametric models. They have attractive properties, including that each piecewise polynomial only forms a local basis with unit integrals and overlaps with a limited number of other polynomials [49]. As they are composed of piecewise polynomials, the upper range of the basis functions is limited, and the differentials of the splines are readily available [50]. These characteristics make P-splines tractable both numerically and analytically, so they can approximate the nonparametric components in the semiparametric spatial autoregressive model. Hence, we apply a Bayesian P-splines method that approximates the nonparametric functions using penalized splines with a fixed number and location of knots, with inference carried out through Markov chain Monte Carlo (MCMC). The Gibbs sampler is a common sampling method within the MCMC framework, which can generate random samples from various distributions, including the uniform distribution [51].

In this paper, we develop partially linear varying coefficient spatial autoregressive (PLVCSAR) models with Bayesian quantile regression using the asymmetric Laplace error distribution for spatial data. The approach allows for different degrees of spatial dependence at different quantile points of the response distribution. The PLVCSAR model strikes a good balance between flexibility and parsimony, and it can simultaneously capture the linear effects, the nonlinear effects and the spatial correlation of exogenous variables on a response variable. We employ a Bayesian P-splines method to estimate the unknown parameters and approximate the varying coefficient functions, and we also design a Gibbs sampler to explore the joint posterior distributions using the MCMC technique. At each iteration, the sampler draws parameters from the full conditional posterior distributions of the unknown quantities, obtained through an appropriate selection of prior information, which makes Bayesian inference efficient and useful even in complicated situations. The proposed model combines a spatial autoregressive model with a semiparametric framework in an adaptive way, and the resulting estimators may offer an improvement over existing methods in this area.

The rest of the paper is organized as follows. In Section 2, we introduce Bayesian quantile regression of PLVCSAR models for spatially dependent responses and discuss the identifiability conditions, and then we obtain the likelihood function by approximating the varying coefficient functions with the P-splines method. In Section 3, we give the prior distributions and infer the full conditional posteriors of latent variables and unknown parameters. We also describe the detailed Gibbs sampler procedure. Simulation studies for assessing the finite sample performance of the proposed method are reported, and an empirical example is illustrated in Section 4. We summarize the article in Section 5.

2. Methodology

2.1. Model

Consider the following partially linear varying coefficient spatial autoregressive model with quantile regression

where is the dependent variable, , and are the associated explanatory variables, is the th element of an exogenously given spatial weight matrix with known constants, consists of a q-dimensional vector of unknown smooth functions, denotes the th quantile spatial regression parameters and is restricted to the condition , is p-dimensional unknown parameters, is the smoothing variable, and is the random error with the th quantile on , which equals zero for .

2.2. Likelihood

We assume that are mutually independent and identically distributed random variables from an asymmetric Laplace distribution with the density

where is the location parameter, is the scale parameter, and is called the check function. Then, the conditional distribution of y is in the form of

Quantile regression is typically based on the check loss function to solve a minimization problem. With model (1), the specific problem is estimating , and by minimizing the following objective function

and , , , , giving (3) a likelihood-based interpretation. By introducing the location-scale mixture representation of the asymmetric Laplace distribution [52], model (1) can be equivalently written as

where with mean and is independent of , and . In the following expressions, we omit for ease of notation.
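The location-scale mixture representation can be checked numerically. The following is a minimal sketch, assuming the standard exponential-normal mixture form of the asymmetric Laplace distribution with unit scale and zero location; the function names and the symbols `theta` and `psi` are illustrative and are not notation taken from this paper. It draws errors from the mixture and records the share that falls below zero, which should be close to the quantile level if the quantile of the error at that level is zero.

```python
import numpy as np

def check_loss(u, tau):
    """Check function rho_tau(u) = u * (tau - 1{u < 0})."""
    u = np.asarray(u, dtype=float)
    return u * (tau - (u < 0))

def ald_mixture_draws(tau, size, rng):
    """Draw unit-scale asymmetric Laplace errors through the mixture form.

    Assumed standard representation: eps = theta * e + psi * sqrt(e) * z,
    with e ~ Exp(1) and z ~ N(0, 1) independent.
    """
    theta = (1 - 2 * tau) / (tau * (1 - tau))
    psi = np.sqrt(2 / (tau * (1 - tau)))
    e = rng.exponential(1.0, size)      # latent exponential variable
    z = rng.standard_normal(size)       # independent standard normal
    return theta * e + psi * np.sqrt(e) * z

rng = np.random.default_rng(0)
tau = 0.25
eps = ald_mixture_draws(tau, 200_000, rng)
share_below_zero = np.mean(eps < 0)     # should be close to tau
```

Conditional on the latent exponential variable the error is normal, which is what makes the conditional posteriors in a Gibbs sampler tractable.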

Considering the advantages of the Bayesian P-splines method, we intend to approximate varying coefficient function in (1) with P-splines. For , the unknown function is a polynomial spline of degree with order interior knots with , i.e.

where , is a vector of spline basis, which is determined by the knots, is a vector of spline coefficients, and boundary knots are

It follows from (5) that model (4) can be written as

where and . We view as latent variables for and define . The matrix form of the model (7) is

where , , is vector with all elements being 1, is an specified constant spatial weight matrix, and is an matrix with as its ith row. Denote , where is an matrix.
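To make the spline approximation concrete, the following sketch evaluates a B-spline basis by the Cox-de Boor recursion. It is only an illustration under stated assumptions: the interval [0, 1), the five equally spaced interior knots and the function name `bspline_basis` are hypothetical choices, not values from this paper.

```python
import numpy as np

def bspline_basis(u, interior_knots, degree, lower=0.0, upper=1.0):
    """Evaluate a B-spline basis at points u in [lower, upper).

    Returns a (len(u), K) matrix with K = len(interior_knots) + degree + 1.
    """
    u = np.atleast_1d(np.asarray(u, dtype=float))
    # Clamped knot vector: each boundary knot is repeated degree + 1 times.
    t = np.concatenate([np.full(degree + 1, lower),
                        np.asarray(interior_knots, dtype=float),
                        np.full(degree + 1, upper)])
    # Degree 0: indicator of each half-open knot interval.
    B = np.array([(u >= t[j]) & (u < t[j + 1])
                  for j in range(len(t) - 1)], dtype=float).T
    # Cox-de Boor recursion, raising the degree one step at a time.
    for d in range(1, degree + 1):
        B_next = np.zeros((len(u), len(t) - d - 1))
        for j in range(len(t) - d - 1):
            left = t[j + d] - t[j]
            right = t[j + d + 1] - t[j + 1]
            if left > 0:
                B_next[:, j] += (u - t[j]) / left * B[:, j]
            if right > 0:
                B_next[:, j] += (t[j + d + 1] - u) / right * B[:, j + 1]
        B = B_next
    return B

u_grid = np.linspace(0.0, 1.0, 50, endpoint=False)
knots = np.linspace(0.0, 1.0, 7)[1:-1]        # 5 interior knots (assumed)
basis = bspline_basis(u_grid, knots, degree=2)  # quadratic basis, 8 columns
```

Each row of `basis` has at most three nonzero entries for a quadratic spline, which reflects the local support property mentioned in the Introduction.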

The likelihood function corresponding to (8) is as follows:

where , is a vector of the regression coefficient, is an matrix, is an identity matrix of order n, , and ,

3. Bayesian Estimation

In this section, we construct a Bayesian P-splines method with a Gibbs sampler to analyse the proposed model. First of all, we specify the prior distributions of the unknown parameters, and then we infer the full conditional posteriors and describe the detailed Gibbs sampler procedure.

3.1. Priors

According to the Bayesian P-splines method, we need to provide appropriate prior distributions for all the unknown parameters, including spatial autocorrelation coefficient , regression coefficient vector , spline coefficient vector and the location and scale parameters and .

Firstly, we choose a hierarchical prior for , which consists of conjugate normal prior

and an inverse-gamma prior

where and are pre-specified hyper-parameters. Secondly, we choose the random walk prior for

where d is the order of the random walk, is the penalty matrix that equals for d-order random walk prior, is a matrix with the form

For , the prior of hyper-parameters is given by

where and are pre-specified hyper-parameters. In addition, we give no prior information for the location parameter and a conjugate normal inverse-gamma prior for the scale parameter

where and are also pre-specified hyper-parameters. We select to obtain a Cauchy distribution of and use and to obtain a highly dispersed inverse gamma prior for each hyper-parameter of for . Lastly, the spatial autocorrelation coefficient is set a uniform prior for , where and are the minimum and maximum eigenvalues of the standardized spatial weight matrix W
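The penalty matrix of a d-order random walk prior is commonly built as the cross-product of a d-order difference matrix. The sketch below assumes that standard construction; the function name `random_walk_penalty` and the choice of eight spline coefficients are illustrative only.

```python
import numpy as np

def random_walk_penalty(n_coef, order):
    """Penalty matrix D' D, where D is the order-th difference matrix."""
    # Differencing the rows of the identity gives the (n_coef - order) x n_coef
    # matrix D that maps a coefficient vector to its order-th differences.
    D = np.diff(np.eye(n_coef), n=order, axis=0)
    return D.T @ D

K2 = random_walk_penalty(8, 2)   # second-order penalty for 8 coefficients
```

For a second-order penalty the matrix has rank `n_coef - 2`, so constant and linear coefficient sequences are left unpenalized; this rank deficiency is why the random walk prior is improper without further constraints.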

The joint prior distribution of all the unknown quantities is given by

where is a parameter vector that contains all the unknown hyper-parameters for computational convenience.

3.2. The Full Conditional Posterior Distributions of the Latent Variables

According to the likelihood function (9) together with a standard exponential density, we can derive the full conditional posterior distributions of latent variables for under the condition of observation data and the remaining unknown quantities, as follows

where and . Since (11) is the kernel of a generalized inverse Gaussian distribution, we infer

where the probability density function of is

and is a modified Bessel function of the third kind [53]. There exist efficient algorithms to simulate from a generalized inverse Gaussian distribution [54] so that our Gibbs sampler can be easily applied to quantile regressive estimation.

3.3. The Full Conditional Posterior Distributions of the Parameters

In this section, because the joint posterior of the parameters is complicated and it is not easy to draw samples from it directly, we propose a hybrid Gibbs sampler [55], derive the full conditional posteriors of all parameters and describe the detailed sampling procedure.

We can obtain the conditional posterior distribution of the spatial autocorrelation coefficient from the likelihood function (9), which is proportional to

However, (12) does not have the form of any standard density function, so it is difficult to simulate from it directly. We apply the Metropolis–Hastings algorithm [56,57] to overcome this difficulty: generate a candidate from a truncated Cauchy distribution with location and scale on interval , where acts as a tuning parameter; and calculate the acceptance probability

about , where

From the likelihood function (9), the full conditional posterior of the location parameter is derived by

where , , follows a normal distribution as , and the full conditional posterior of the scale parameter is as follows

where . Since (14) is the kernel of an inverse-gamma distribution, we infer

where and . Consequently, the introduction of scale parameter does not cause any difficulties in our Gibbs sampler algorithm.
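The Metropolis–Hastings update with a truncated Cauchy proposal described in this section can be sketched as follows. This is a toy illustration rather than the sampler used in the paper: the target `log_post` is a hypothetical stand-in for the conditional posterior of the spatial coefficient, and the interval (-1, 1), the scale 0.1 and all function names are assumptions. Because the Cauchy kernel is symmetric, the proposal correction reduces to the ratio of the two truncation masses.

```python
import math
import random

def truncated_cauchy_mass(loc, scale, lo, hi):
    """Probability that a Cauchy(loc, scale) variable falls in (lo, hi)."""
    return (math.atan((hi - loc) / scale) - math.atan((lo - loc) / scale)) / math.pi

def sample_truncated_cauchy(loc, scale, lo, hi, rng):
    """Inverse-CDF draw from a Cauchy(loc, scale) truncated to (lo, hi)."""
    f_lo = 0.5 + math.atan((lo - loc) / scale) / math.pi
    f_hi = 0.5 + math.atan((hi - loc) / scale) / math.pi
    u = rng.uniform(f_lo, f_hi)
    return loc + scale * math.tan(math.pi * (u - 0.5))

def mh_step(rho, log_post, scale, lo, hi, rng):
    """One Metropolis-Hastings update; returns (new value, accepted flag)."""
    cand = sample_truncated_cauchy(rho, scale, lo, hi, rng)
    # Target ratio times the ratio of truncation normalising constants.
    log_ratio = (log_post(cand) - log_post(rho)
                 + math.log(truncated_cauchy_mass(rho, scale, lo, hi))
                 - math.log(truncated_cauchy_mass(cand, scale, lo, hi)))
    if rng.random() < math.exp(min(0.0, log_ratio)):
        return cand, True
    return rho, False

rng = random.Random(1)
log_post = lambda r: -0.5 * ((r - 0.3) / 0.1) ** 2   # toy target, an assumption
rho, draws, n_accept = 0.0, [], 0
for _ in range(6000):
    rho, accepted = mh_step(rho, log_post, 0.1, -1.0, 1.0, rng)
    draws.append(rho)
    n_accept += accepted
kept = draws[1000:]                                   # discard burn-in draws
accept_rate = n_accept / len(draws)
```

In practice the scale would be tuned up or down until `accept_rate` settles near the desired level.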

3.4. Sampling

We will obtain the Bayesian estimation of by drawing from the full conditional posterior distribution of all parameters and running some MCMC tools, including the Gibbs sampler and the Metropolis–Hastings algorithm [56,57]. The detailed procedure of MCMC algorithm (Algorithm 1) in our method is presented as follows:

| Algorithm 1: The pseudocode of the MCMC sampling scheme |

4. Numerical Illustration

In this section, Monte Carlo simulations are implemented to demonstrate the finite sample performance of the proposed model and estimation method. We also apply the method to analyse a real dataset. To assess robustness and applicability, two kinds of matrices are chosen to investigate the influence of the spatial weight matrix W on the estimation results. One is the Rook weight matrix as in [35], which is generated according to Rook contiguity: the n spatial units are allocated on a lattice of () squares, the neighbours of each unit are identified, and the matrix is row-normalized. The other is the Case weight matrix as in [59]: we consider a spatial scenario with r districts and m members in each district, in which each neighbour of a member in a district is given equal weight.
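The two weight matrices can be constructed in a few lines. The sketch below assumes a square lattice for the Rook matrix and a block-diagonal structure for the Case matrix, in which the other members of the same district are the neighbours; the function names and the small sizes in the example are illustrative.

```python
import numpy as np

def rook_weight_matrix(side):
    """Row-normalised Rook contiguity matrix on a side x side lattice."""
    n = side * side
    W = np.zeros((n, n))
    for r in range(side):
        for c in range(side):
            i = r * side + c
            # Rook neighbours share an edge: up, down, left, right.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < side and 0 <= cc < side:
                    W[i, rr * side + cc] = 1.0
    return W / W.sum(axis=1, keepdims=True)

def case_weight_matrix(r, m):
    """Case-type matrix: r districts, m members, equal weight 1/(m-1)."""
    block = (np.ones((m, m)) - np.eye(m)) / (m - 1)
    return np.kron(np.eye(r), block)

W_rook = rook_weight_matrix(4)      # 16 units on a 4 x 4 lattice
W_case = case_weight_matrix(3, 4)   # 3 districts with 4 members each
```

Both matrices have zero diagonals and unit row sums, so the spatial lag of a unit is a weighted average of its neighbours' responses.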

4.1. Simulation

The samples are generated from the following model:

where the covariate vectors follow a bivariate normal distribution with mean vector and covariance matrix

and are bivariate, and for , and the error term , where F is the common cumulative distribution function of . By subtracting the th quantile, the error term is equal to zero at the th quantile. The varying coefficient functions with and . Furthermore, we chose three different values of spatial parameters at three different quantile points , two kinds of the Rook and Case weight matrix as the spatial weight matrix W, respectively. The sample sizes are for the Rook weight matrix, districts and members are for the Case weight matrix.

We conducted each simulation with 1000 replications. For , we use quadratic P-splines in which the knots are placed at equally spaced intervals of the predictor variables and design hyper-parameters in our computation. Second-order random walk penalties are used for the Bayesian P-splines to approximate the unknown smooth functions. The unknown parameters are drawn from their respective prior distributions. The tuning parameter is increased or decreased incrementally so that the resulting acceptance rate for parameter is around 25%.

We generated 6000 sampled values following the proposed Gibbs sampler and discarded the first 3000 values as a burn-in period in each replication, so that the Markov chains reach a steady state. Based on the last 3000 values, we calculate the averages across the 1000 replications of the posterior mean (Mean), the standard error (SE) and the 2.5th and 97.5th percentiles of the parameters. These percentiles give the 95% posterior credible intervals (95% CI), which are defined so that the posterior probability of the parameters falling into the intervals is 95% based on the highest posterior density.

We also computed the standard deviations (SD) of the estimated posterior means to compare them with the means of the estimated posterior SE. From the model (19), LeSage and Pace [60] suggested scalar summary measures for the marginal effects, which are given by for . The direct effects are defined as the average of the diagonal elements. The average of either the row sums or the column sums of the non-diagonal elements is used as the indirect effects, and the total effects are the sum of the direct and indirect effects.
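The scalar summaries of the marginal effects can be computed directly from the spatial multiplier matrix. The sketch below assumes the usual LeSage and Pace form for a linear covariate, in which the marginal effect matrix is the inverse of the identity minus the spatial coefficient times W, scaled by the regression coefficient; the ring-shaped weight matrix and the values 0.5 and 2.0 are hypothetical.

```python
import numpy as np

def sar_effects(rho, beta_k, W):
    """Direct, indirect and total effects of one linear covariate."""
    n = W.shape[0]
    # Assumed marginal effect matrix: (I - rho W)^{-1} * beta_k.
    S = np.linalg.inv(np.eye(n) - rho * W) * beta_k
    direct = np.trace(S) / n          # average of the diagonal elements
    total = S.sum() / n               # average of the row sums
    indirect = total - direct         # average off-diagonal row sum
    return direct, indirect, total

# Hypothetical weight matrix: 5 units on a ring, two neighbours each.
n_units = 5
W_ring = np.zeros((n_units, n_units))
for i in range(n_units):
    W_ring[i, (i - 1) % n_units] = 0.5
    W_ring[i, (i + 1) % n_units] = 0.5

direct, indirect, total = sar_effects(0.5, 2.0, W_ring)
```

For a row-normalised weight matrix the total effect equals the regression coefficient divided by one minus the spatial coefficient, which is 4.0 in this example and gives a quick sanity check.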

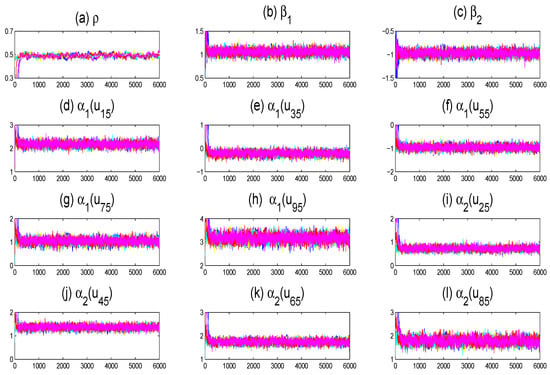

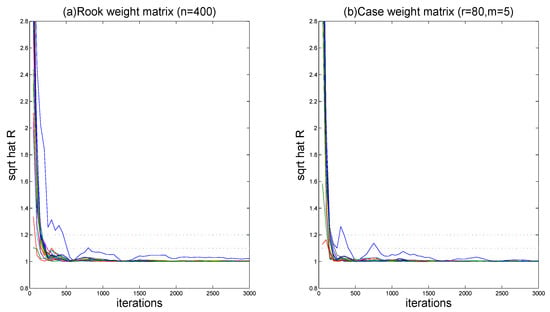

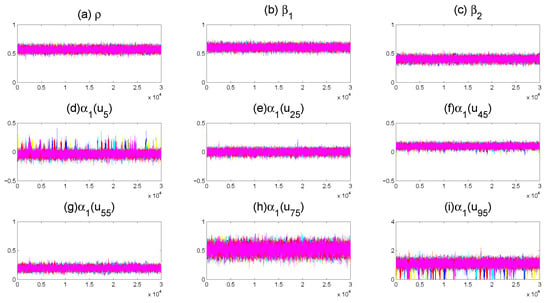

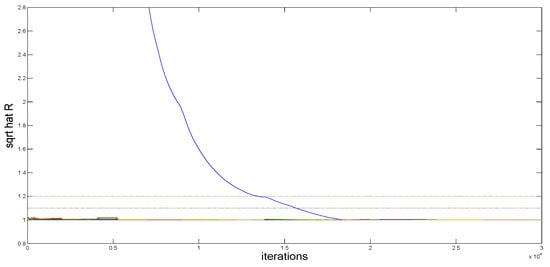

To check the convergence of the MCMC algorithm, five different Markov chains corresponding to different starting values were run through the Gibbs sampler in each replication. Figure 1 displays the sampled traces of some of the unknown quantities, including model parameters and fitted functions on grid points. It is clear that the five parallel sequences mix reasonably well. We further calculate the “potential scale reduction factor” for all unknown parameters and varying coefficient functions on 10 selected grid points based on the five parallel sequences. Figure 2 shows the values of after iterating 3000 times. We observe that all the values of are less than 1.2 after 3000 burn-in iterations, which, following the suggestion of Gelman and Rubin [61], indicates convergence.
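The potential scale reduction factor compares the variance between parallel chains with the variance within them. The following sketch uses the classical Gelman and Rubin formula on simulated chains; the simulated draws are placeholders and not output from the sampler in this paper.

```python
import numpy as np

def potential_scale_reduction(chains):
    """Gelman-Rubin factor for an array of shape (m chains, n draws)."""
    chains = np.asarray(chains, dtype=float)
    m, n = chains.shape
    within = chains.var(axis=1, ddof=1).mean()       # W: mean within-chain variance
    between = n * chains.mean(axis=1).var(ddof=1)    # B: scaled variance of chain means
    pooled = (n - 1) / n * within + between / n      # pooled variance estimate
    return np.sqrt(pooled / within)

rng = np.random.default_rng(0)
mixed = rng.standard_normal((5, 3000))               # five chains, same target
shifted = mixed + np.arange(5.0)[:, None]            # chains stuck at different levels
r_mixed = potential_scale_reduction(mixed)
r_shifted = potential_scale_reduction(shifted)
```

Well-mixed chains give a value close to 1, while chains centred at different levels give a value well above the 1.2 threshold.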

Figure 1.

Trace plots of five parallel sequences corresponding to different starting values for parts of the unknown quantities, where (a–c) are trace plots of parameters , (d–h) are trace plots of the varying coefficient function , and (i–l) are trace plots of the varying coefficient function (only a replication with , and is displayed).

Figure 2.

The “potential scale reduction factor” for simulation results (the case of spatial parameter is , ).

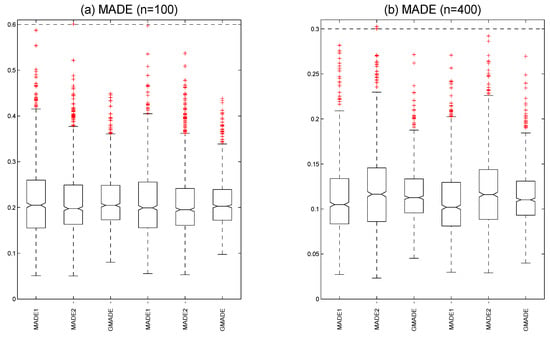

In order to investigate the finite sample performance of varying coefficient functions, the variability measures of the mean absolute deviation errors (MADE) and global mean absolute deviation errors (GMADE) are used to measure the estimation performance. MADE and GMADE are defined as

at 100 fixed grid points that are equally spaced on the interval . Figure 3a displays the boxplots of the MADE and GMADE values with sample size and at quantile point. Based on the Rook weight matrix on the left three panels, the medians are , and . Based on the Case weight matrix on the right three panels, the medians are , and . Figure 3b shows the boxplots of the MADE and GMADE values with sample size and at quantile point. Based on the Rook weight matrix, the medians are , and on the left three boxplots. Based on the Case weight matrix, the medians are , and on the right three boxplots. We can see that the MADE and GMADE values decrease as n increases and are smaller under the Case weight matrix than under the Rook weight matrix, meaning that the estimated varying coefficient functions become more accurate as the sample size increases and when the Case weight matrix is used. This shows that the proposed model and estimation method achieve reasonable estimates and good finite-sample performance with both the Rook and the Case weight matrices.
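The two accuracy measures are simple averages of absolute errors over the grid. The sketch below assumes that MADE is the mean absolute error of one estimated function over the grid points and that GMADE is the average of the MADE values over all functions, since the defining formula is not reproduced in the text; the sine and cosine curves are hypothetical stand-ins for the true functions.

```python
import numpy as np

def made_and_gmade(alpha_hat, alpha_true):
    """MADE per function and GMADE for arrays of shape (grid points, functions)."""
    err = np.abs(np.asarray(alpha_hat, dtype=float) - np.asarray(alpha_true, dtype=float))
    made = err.mean(axis=0)      # one value per varying coefficient function
    return made, made.mean()     # assumed GMADE: average over the functions

grid = np.linspace(0.0, 1.0, 100)                     # 100 equally spaced grid points
alpha_true = np.column_stack([np.sin(2 * np.pi * grid),
                              np.cos(2 * np.pi * grid)])
alpha_hat = alpha_true + np.array([0.1, -0.2])        # constant errors for illustration
made, gmade = made_and_gmade(alpha_hat, alpha_true)
```

With constant errors of 0.1 and 0.2 the MADE values are 0.1 and 0.2, and the GMADE is their average.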

Figure 3.

Boxplots of the mean absolute deviation errors (a) with sample size and (b) with sample size (the three panels on the left are based on the Rook weight matrix and the three panels on the right are based on the Case weight matrix).

Table 1 and Table 2 summarize the estimation results. The parameter estimates are quite different at the three quantiles of the response distributions. Under the same spatial weight matrix, the accuracy of the results improves as the sample size increases. We can see that the means of the estimates are close to the respective true values, and the average values of the SE are close to the corresponding SD, indicating that both the parameter estimates and the standard errors are accurate. For the parameter under the same sample sizes, we find that the SE and SD of parameter with the Case weight matrix are slightly better than those with the Rook weight matrix. In addition, the general pattern from the estimates reported in Table 1 and Table 2 is that all estimators show relatively larger bias in the total effect estimates when there is strong positive spatial dependence for similar sample sizes. When we repeat the aforementioned experiments with different starting values, the estimation results are similar, all of which indicates that the proposed Gibbs sampler performs quite well.

Table 1.

Simulation results of the parameter estimation for .

Table 2.

Simulation results of the parameter estimation for .

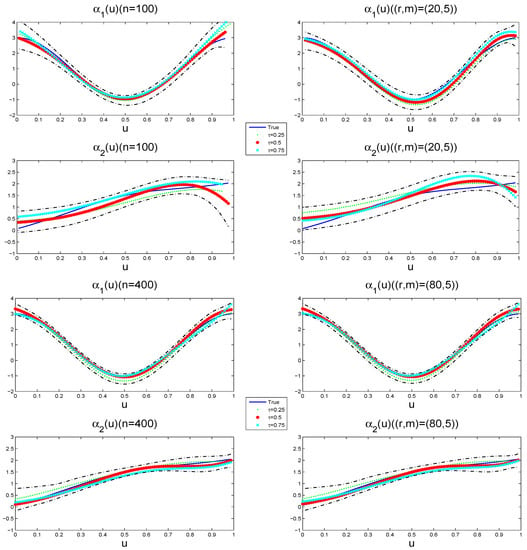

Figure 4 compares the estimated varying coefficient functions at different quantiles, along with the 95% pointwise posterior credible intervals of and from a typical sample under and , respectively. The typical sample is selected in such a way that its MADE value is equal to the median in the 1000 replications. We can see that the three fitted curves are fairly close to the solid curve, and the corresponding credible bands are narrow. As the sample size increases, the gaps between the fitted curves and the true curves narrow. There are also visible differences at different quantiles of the response distributions. This illustrates that the varying coefficient function estimation procedure works well for small samples.

Figure 4.

The estimated varying coefficient functions (dotted line at quantile, star line at quantile and forked line at quantile) and their 95% pointwise posterior credible intervals (dot-dashed lines) for a typical sample (the left panels are based on the Rook weight matrix and the right panels are based on the Case weight matrix with ). The solid lines denote the true varying coefficient functions.

We compare the performance of the Bayesian quantile regression (BQR) estimator in this paper to the instrumental variable quantile regression (IVQR) estimator in Dai et al. [12] with two examples.

Example 1.

The model is given as follows

where , , and , , F is the common cumulative distribution function of , and the th quantile of random error is centred to zero. and are generated from and , are bivariate. and are generated independently from and . Table 3 summarizes the comparison results of QR, IVQR and BQR estimators with a homoscedastic error term.

Table 3.

Simulation results of the parameter estimation.

Example 2.

The model is given as follows

where , , and , , F is the common cumulative distribution function of , and the th quantile of random error is equal to zero. and are generated from , and , are bivariate. and are generated independently from and . Table 4 summarizes the comparison results of QR, IVQR and BQR estimators with a heteroscedastic error term.

Table 4.

Simulation results of parameter estimation.

The spatial weight matrix is generated based on the mechanism that for , and then a standardized transformation is applied so that the matrix W has unit row sums [12]. After repeating the estimation procedure 1000 times for each case, we calculate the Bias and RMSE of the parameter estimates relative to the true values, and the MADE to measure the estimation accuracy of the varying coefficient functions.

Table 3 and Table 4 report the results of QR, IVQR and BQR corresponding to Example 1 and Example 2. It can be seen that the influence of the explanatory variables on the response is quite different at different quantiles of the response distributions. As the sample size increases, the bias, RMSE and MADE of all the estimators decrease significantly. Comparing the three methods QR, IVQR and BQR, the BQR estimator obtains more robust results under the same conditions, with smaller bias, RMSE and MADE. We conclude that the BQR algorithm is superior to QR and IVQR in these settings, although the latter two can also achieve reasonable estimates.

4.2. Application

As an application of the proposed model and methods to a real data example, we use the well-known Sydney real estate data, which are described in detail in [62]. The data set contains 37,676 properties sold in the Sydney Statistical Division (an official geographical region including Sydney) in the calendar year of 2001 and is available from the HRW package in R. To avoid temporal effects, we focus only on the last week of February, which includes 538 properties.

In this application, the house price (Price) is explained by four variables: the distance from the house to the nearest coastline location in kilometres (DC), the distance from the house to the nearest main road in kilometres (DR), the inflation rate measured as a percentage (IR) and the average weekly income (Income). DC and DR have linear effects on the response Price, while IR and Income have nonlinear effects on the response Price. Moreover, we apply a logarithmic transformation to Price and DC to avoid the trouble caused by big gaps in the domain. In addition, Income is transformed so that the marginal distribution is approximately . Therefore, the following partially linear varying coefficient spatial autoregressive model will be developed:

where the response variable , , , , . For the choice of the weight matrix, following Sun et al. [10], we use the Euclidean distance between any two houses to calculate the spatial weight matrix W. The location of each house is represented by its longitude and latitude, denoted as . The spatial weight is

For this dataset, we adopt quadratic P-splines and hyper-parameters for . The tuning parameter is used to keep the acceptance rate for updating at around 25%.

We run the proposed Gibbs sampler five times with different starting values and generate 10,000 sampled values following a burn-in of 20,000 iterations in each run. Traces of some of the unknown quantities are plotted in Figure 5, and the five parallel sequences mix very well. Based on the five parallel sequences, we further calculate the “potential scale reduction factor” , which is plotted in Figure 6. It is clear that all the values of are less than 1.2 after 20,000 burn-in iterations. The proposed sampler therefore converges well when applied to the real data.

Figure 5.

Trace plots of five parallel sequences corresponding to different starting values for parts of the unknown quantities, where (a–c) are trace plots of parameters , and (d–i) are trace plots of the varying coefficient function .

Figure 6.

The “potential scale reduction factor” for Sydney real estate data.

Table 5 lists the estimated parameters together with their standard errors and 95% posterior credible intervals. It shows that the estimate of the spatial coefficient is 0.57 with the standard deviation SE at quantile, which means that there exist positive and significant spatial spillover effects for the housing prices of Sydney real estate. However, the spatial coefficient decreases as the quantile increases: when house prices are lower, the spatial effects and the interaction between different regions become stronger. The coefficients of the two covariates and are and at quantile. They also have positive effects on housing prices at the other two quantiles, and they play an increasingly important positive role as house prices rise, because the two parameters present an increasing trend at higher quantiles.

Table 5.

Parameter estimation in the model (20) for Sydney real estate data.

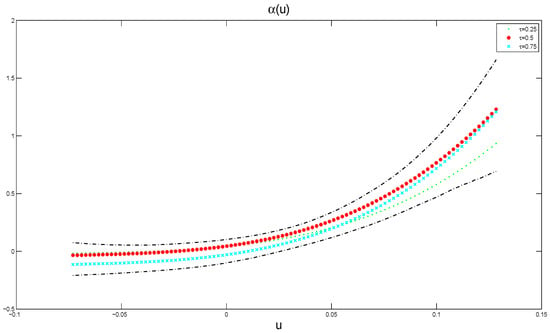

Figure 7 presents the estimated varying coefficient functions together with their 95% pointwise posterior credible intervals for the three quantiles and , shown by a dotted line, star line and forked line, respectively. The curves show an overall upward trend and rise more steeply as u becomes larger. This shows that the effect of covariate Income on the response has a U-shaped nonlinear relationship. More specifically, at the quantile , the varying coefficient function is greater than the other two, meaning that Income has a significant promoting influence in areas with higher housing prices. The empirical result supports the robustness and practicability of the Bayesian P-splines method.

Figure 7.

The estimated function (dot line) and its 95% pointwise posterior credible intervals (dot-dashed lines) in the model (20) for Sydney real estate data.

5. Summary

This article focused on Bayesian estimation and inference in quantile regression of partially linear varying coefficient spatial autoregressive models with P-splines. The model can analyse the linear and nonlinear effects of the covariates on the response for spatial data, reduce the high risk of misspecification of the traditional SAR models and avoid certain serious drawbacks of fully nonparametric models.

We developed Bayesian quantile regression of PLVCSAR models using the asymmetric Laplace error distribution, which can capture comprehensive features at different quantile points without strict restrictions. Moreover, we considered a fully Bayesian P-splines approach to analyse the PLVCSAR models and designed a Gibbs sampler to explore the full conditional posterior distributions. Compared with the QR and IVQR estimators under the same conditions, our methodology obtained more robust and precise results in the simulations. Finally, the proposed model and method were applied to a real dataset.

In this article, we considered spatial data with homoscedastic or heteroscedastic error terms, without requiring any specification of the error distribution. Although we used a partially linear varying coefficient SAR model, other models, such as a partially linear single-index SAR model and a partially linear additive SAR model, can also be considered. In addition, variable selection and model selection in large samples remain to be studied.

Author Contributions

Supervision, Z.C. and F.J.; software, Z.C. and M.C.; methodology, Z.C.; writing—original draft preparation, Z.C.; writing—review and editing, Z.C., M.C. and F.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of China (12001105), the Postdoctoral Science Foundation of China (2019M660156), the Natural Science Foundation of Fujian Province (2021J01662) and the Humanities and Social Sciences Youth Foundation of Ministry of Education of China (19YJC790051).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in Reference [62].

Acknowledgments

The authors are deeply grateful to the editors and anonymous referees for their careful reading and insightful comments as they helped to significantly improve this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cliff, A.D.; Ord, J.K. Spatial Autocorrelation; Pion Ltd.: London, UK, 1973.

- Lee, L.F. Asymptotic Distribution of Quasi-Maximum Likelihood Estimators for Spatial Autoregressive Models. Econometrica 2004, 72, 1899–1925.

- Lee, L.F. GMM and 2SLS Estimation of Mixed Regressive Spatial Autoregressive Models. J. Econom. 2007, 137, 489–514.

- Kakamu, K.; Wago, H. Small-sample properties of panel spatial autoregressive models: Comparison of the Bayesian and maximum likelihood methods. Spat. Econ. Anal. 2008, 3, 305–319.

- Xu, X.B.; Lee, L.F. A spatial autoregressive model with a nonlinear transformation of the dependent variable. J. Econom. 2015, 186, 1–18.

- Basile, R. Regional economic growth in Europe: A semiparametric spatial dependence approach. Pap. Reg. Sci. 2008, 87, 527–544.

- Basile, R. Productivity polarization across regions in Europe: The role of nonlinearities and spatial dependence. Int. Reg. Sci. Rev. 2008, 32, 92–115.

- Basile, R.; Gress, B. Semi-parametric spatial auto-covariance models of regional growth behaviour in Europe. Reg. Dev. 2005, 21, 93–118.

- Paelinck, J.H.P.; Klaassen, L.H. Spatial Econometrics; Gower Press: Aldershot, UK, 1979.

- Sun, Y.; Yan, H.J.; Zhang, W.Y.; Lu, Z. A Semiparametric spatial dynamic model. Ann. Stat. 2014, 42, 700–727.

- Chen, J.Q.; Wang, R.F.; Huang, Y.X. Semiparametric spatial autoregressive model: A two-step Bayesian approach. Ann. Public Health Res. 2015, 2, 1012.

- Dai, X.; Li, S.; Tian, M. Quantile regression for partially linear varying coefficient spatial autoregressive models. arXiv 2016, arXiv:1608.01739.

- Cai, Z.; Xu, X. Nonparametric quantiles estimations for dynamic smooth coefficients models. J. Am. Stat. Assoc. 2008, 103, 1595–1608.

- Bellman, R.E. Adaptive Control Processes; Princeton University Press: Princeton, NJ, USA, 1961.

- Hastie, T.J.; Tibshirani, R.J. Generalized Additive Models; Chapman and Hall: New York, NY, USA, 1990.

- Friedman, J.H.; Stuetzle, W. Projection Pursuit Regression. J. Am. Stat. Assoc. 1981, 376, 817–823.

- Hastie, T.J.; Tibshirani, R.J. Varying-coefficient models. J. R. Stat. Soc. 1993, 55, 757–796.

- Chiang, C.; Rice, J.; Wu, C. Smoothing Spline Estimation for Varying Coefficient Models with Repeatedly Measured Dependent Variables. J. Am. Stat. Assoc. 2001, 96, 605–619.

- Eubank, R.L.; Huang, C.F.; Buchanan, R.J. Smoothing Spline Estimation in Varying-coefficient Models. J. R. Stat. Soc. 2004, 66, 653–667.

- Lu, Y.Q.; Zhang, R.Q.; Zhu, L.P. Penalized Spline Estimation for Varying-Coefficient Models. Commun. Stat. Theory Methods 2008, 37, 2249–2261.

- Wu, C.O.; Chiang, C.; Hoover, D.R. Asymptotic confidence regions for kernel smoothing of a varying-coefficient model with longitudinal data. J. Am. Stat. Assoc. 1998, 93, 1388–1403.

- Cai, Z.W.; Fan, J.Q.; Li, R.Z. Efficient Estimation and Inferences for Varying Coefficient Models. J. Am. Stat. Assoc. 2000, 451, 888–902.

- Cai, Z.W. Two-Step Likelihood Estimation Procedure for Varying Coefficient Models. J. Multivar. Anal. 2002, 1, 18–209.

- Huang, J.Z.; Wu, C.O.; Zhou, L. Varying-coefficient models and basis functions approximations for the analysis of repeated measurements. Biometrika 2002, 89, 111–128.

- Lu, Y.Q.; Mao, S.S. Local asymptotics for B-spline estimators of the varying-coefficient model. Commun. Stat. 2004, 33, 1119–1138.

- Elhorst, J. Unconditional Maximum Likelihood Estimation of Linear and Log-Linear Dynamic Models for Spatial Panels. Geogr. Anal. 2005, 37, 85–106.

- Yu, J.H.; De Jong, R.; Lee, L.F. Quasi-maximum likelihood estimators for spatial dynamic panel data with fixed effects when both n and t are large. J. Econom. 2008, 146, 118–134.

- Lee, L.F.; Yu, J.H. Estimation of spatial autoregressive panel data models with fixed effects. J. Econom. 2010, 154, 165–185.

- Chen, Z.Y.; Chen, J.B. Bayesian analysis of partially linear additive spatial autoregressive models with free-knot splines. Symmetry 2021, 13, 1635.

- Koenker, R.; Bassett, G. Regression Quantiles. Econometrica 1978, 46, 33–50.

- Jäntschi, L.; Bálint, D.; Bolboacǎ, S.D. Multiple linear regressions by maximizing the likelihood under assumption of generalized Gauss–Laplace distribution of the error. Comput. Math. Methods Med. 2016, 2016, 8578156.

- Koenker, R.; Machado, J. Goodness of fit and related inference processes for quantile regression. J. Am. Stat. Assoc. 1999, 94, 1296–1309.

- Zerom, G.D. On additive conditional quantiles with high-dimensional covariates. J. Am. Stat. Assoc. 2003, 98, 135–146.

- Chernozhukov, V.; Hansen, C. Instrumental variable quantile regression: A robust inference approach. J. Econom. 2008, 142, 379–398.

- Su, L.J.; Yang, Z.L. Instrumental Variable Quantile Estimation of Spatial Autoregressive Models; Working Papers; Singapore Management University: Singapore, 2009.

- Koenker, R. Quantile Regression; Cambridge University Press: Cambridge, UK, 2005.

- Yu, K.M.; Moyeed, R.A. Bayesian quantile regression. Stat. Probab. Lett. 2001, 54, 437–447.

- Kozubowski, T.J.; Podgórski, K. A multivariate and asymmetric generalization of Laplace distribution. Comput. Stat. 2000, 15, 531–540.

- Yuan, Y.; Yin, G.S. Bayesian quantile regression for longitudinal studies with nonignorable missing data. Biometrics 2010, 66, 105–114.

- Li, Q.; Xi, R.B.; Lin, N. Bayesian regularized quantile regression. Bayesian Anal. 2010, 5, 533–556.

- Lum, K.; Gelfand, A.E. Spatial quantile multiple regression using the asymmetric Laplace process. Bayesian Anal. 2012, 7, 235–258.

- Anselin, L. Spatial Econometrics: Methods and Models; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1988.

- Anselin, L. Estimation Methods for Spatial Autoregressive Structures. Reg. Sci. Diss. Monogr. Ser. 1980, 8, 263–273.

- Conley, T.G. GMM Estimation with Cross Sectional Dependence. J. Econom. 1999, 92, 1–45.

- LeSage, J. Bayesian Estimation of Spatial Autoregressive Models. Int. Reg. Sci. Rev. 1997, 20, 113–129.

- Dunson, D.B.; Taylor, J.A. Approximate Bayesian inference for quantiles. J. Nonparametr. Stat. 2005, 17, 385–400.

- Thompson, P.; Cai, Y.; Moyeed, R.; Reeve, D.; Stander, J. Bayesian nonparametric quantile regression using splines. Comput. Stat. Data Anal. 2010, 54, 1138–1150.

- De Boor, C. A Practical Guide to Splines; Springer: New York, NY, USA, 1978.

- Krisztin, T. Semi-parametric spatial autoregressive models in freight generation modeling. Transp. Res. Part E Logist. Transp. Rev. 2018, 114, 121–143.

- Eilers, P.H.C.; Marx, B.D. Flexible smoothing with B-splines and penalties. Stat. Sci. 1996, 11, 89–121.

- Jäntschi, L. A test detecting the outliers for continuous distributions based on the cumulative distribution function of the data being tested. Symmetry 2019, 11, 835.

- Kozumi, H.; Kobayashi, G. Gibbs sampling methods for Bayesian quantile regression. J. Stat. Comput. Simul. 2011, 81, 1565–1578.

- Barndorff-Nielsen, O.E.; Shephard, N. Non-Gaussian Ornstein–Uhlenbeck-based models and some of their uses in financial economics. J. R. Stat. Soc. 2001, 63, 167–241.

- Dagpunar, J.S. An easily implemented generalized inverse Gaussian generator. Commun. Stat. Simul. Comput. 1989, 18, 703–710.

- Tierney, L. Markov chains for exploring posterior distributions. Ann. Stat. 1994, 22, 1701–1728.

- Hastings, W.K. Monte Carlo sampling methods using Markov Chains and their applications. Biometrika 1970, 57, 97–109.

- Metropolis, N.; Rosenbluth, A.W.; Rosenbluth, M.N.; Teller, A.H.; Teller, E. Equation of state calculations by fast computing machines. J. Chem. Phys. 1953, 21, 1087–1091.

- Tanner, M.A. Tools for Statistical Inference: Methods for the Exploration of Posterior Distributions and Likelihood Functions, 2nd ed.; Springer: New York, NY, USA, 1993.

- Case, A.C. Spatial patterns in household demand. Econometrica 1991, 59, 953–965.

- LeSage, J.; Pace, R.K. Introduction to Spatial Econometrics; Chapman and Hall/CRC: New York, NY, USA, 2009.

- Gelman, A.; Rubin, D.B. Inference from iterative simulation using multiple sequences. Stat. Sci. 1992, 7, 457–511.

- Harezlak, J.; Ruppert, D.; Wand, M.P. Semiparametric Regression with R; Springer: New York, NY, USA, 2018.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).