5.1. Properties of the Proposed Solutions

Each proposed solution is (anti)symmetrical, not relative to the observed number of successes but relative to its complement $m - x$ (a reflection about $m/2$). Specifically, if $[x_L, x_U]$ is the CI of $x$ successes from $m$ draws, then $[m - x_U, m - x_L]$ is the CI of $m - x$ successes from $m$ draws. This property holds for the normal approximation interval as well (Equation (5)). It can be easily checked that if $x_L(x)$ and $x_U(x)$ are the bounds for $x$ successes (see Equation (5)), then substituting $x$ with $m - x$ gives $x_L(m - x) = m - x_U(x)$ and $x_U(m - x) = m - x_L(x)$, that is, $x_L(x) + x_U(m - x) = m = x_U(x) + x_L(m - x)$, and the actual coverage probability is the same (see Figure 1, Figure 2, Figure 3 and Figure 4; all are symmetrical). When considering the proposed solutions (Algorithms 1–3), the (anti)symmetry is kept due to the presence of the If(r[i-1]=r[j+1])…EndIf (Algorithms 1 and 3) and If(r[i]=r[j])…EndIf (Algorithm 2) instruction blocks. Additionally, an important remark can be made about the normal approximation interval (Equation (5)). As defined by Equation (5), this interval is even more symmetrical, being centered on the observed number of successes ($x$). However, if one considers the confidence intervals given by Equation (5) for a very small (such as $x = 1, 2, \ldots$) or very large (such as $x = m - 1, m - 2, \ldots$) number of successes (see Table 5), it will be seen that a bound greater than $m$ or smaller than 0 is not logical; therefore, it must be immediately patched (Equation (8)).
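The (anti)symmetry and the need for the patch can be illustrated numerically. The sketch below assumes that Equation (5) is the usual normal approximation expressed in counts, x ± z·sqrt(x(m − x)/m), and that the patch of Equation (8) simply clips the bounds to [0, m]; both forms, as well as the names `normal_ci` and `patched_ci`, are assumptions made for illustration and are not taken from the equations themselves.

```python
import math

Z_95 = 1.959963984540054  # standard normal quantile for a two-sided 95% interval

def normal_ci(x, m, z=Z_95):
    """Unpatched normal-approximation interval (assumed form), in counts."""
    half_width = z * math.sqrt(x * (m - x) / m)
    return x - half_width, x + half_width

def patched_ci(x, m, z=Z_95):
    """Same interval clipped to the logical range [0, m] (assumed patch)."""
    low, high = normal_ci(x, m, z)
    return max(0.0, low), min(float(m), high)

m = 25
for x in range(m + 1):
    low, high = patched_ci(x, m)
    c_low, c_high = patched_ci(m - x, m)
    # (anti)symmetry: lower(x) + upper(m - x) = m = upper(x) + lower(m - x)
    assert math.isclose(low + c_high, m) and math.isclose(high + c_low, m)

print(normal_ci(1, m))   # the lower bound falls below 0
print(patched_ci(1, m))  # the lower bound is clipped to 0
```

Under these assumptions, clipping preserves the (anti)symmetry, because it acts on the lower bound for x and on the upper bound for m − x in mirrored fashion.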

All integer-boundary formulas (see the corresponding column in Table 5) can be easily obtained from Equation (8) by means of Equation (9), where ⌈·⌉ is the ceil function and ⌊·⌋ is the floor function, and they possess exactly the same (anti)symmetrical properties as the proposed ones (Algorithms 1–3).

A consequence derives from this property: it is sufficient to know one bound for the whole range of successes in order to know the other bound as well. For convenience, three series of lower boundaries for the proposed CI alternatives are listed in Appendix A (Table A1, Table A2 and Table A3). In each case, if $N_0, N_1, \ldots, N_m$ is the series given, then the confidence interval of $x$ is $[N_x, m - N_{m-x}]$. Each table presents the run of one algorithm, that is, the solution proposed by that algorithm (Table A1 for Algorithm 1, Table A2 for Algorithm 2, and Table A3 for Algorithm 3). The algorithms were implemented in PHP and the outputs are given. There is no serious constraint in the computation, other than the memory limits of the computer; see

Section 5.3 for details. An example is suitable here. It would be useful to know the smallest sample size for which the proposed algorithms provide different solutions. That is

. The CI calculated from the

numbers are given in

Table 6.

Please note that when

m is very small the whole probability space is small (Equation (

3) defines a

matrix), so any method (optimized or not) has to choose from a very small set of possible choices. This peculiar behavior is visible in the

Appendix A Table A1,

Table A2,

Table A3 and

Table A4 as well: the entries for

and

are identical and until

there is, in one way or another, an overlap between the proposed solutions.

5.2. Smoothing of the Proposed Solutions

Smoothing a data set is typically done to create an approximating function that captures important patterns in the data, leaving out noise or other fine-scale variations [

30]. In this instance, smoothing is instead proposed as a way to introduce noise, or a fine-scale variation, in order to make the confidence interval more similar to the normal approximation (Equation (5); to all intents and purposes, like its patch, Equation (8)). Ergo, someone may say that

is the anti-smoothed version of the patched normal approximation confidence interval (

).

Consider the requirement that two adjacent intervals that natively do not intersect must still not intersect after smoothing. This means that smoothing should not enlarge them by more than half of 1/m. Smoothing should also balance the transition from one number of successes (x) to the adjacent numbers of successes (x − 1 and x + 1). A common-sense choice is to place each limit between two integers such that the ratio of the probabilities of their extraction is also the ratio in which the limit divides the distance allotted between them (0.5; Equation (10)).

Smoothing with Equation (

10) does not change the coverage probability (the coverage probabilities of

and

are equal) because the change is too small, smaller than the increment between the success events.

5.3. General Discussion

What if one follows one of the (numerous) sets of rules, such as requiring the boundaries of the confidence interval to be monotonic functions? It may be checked that the solutions proposed by Algorithms 1 and 2 are both monotonic (see all the data given in Table A1 and Table A2). A screening conducted for m from 21 to 100 also supported the monotonicity. So, in actuality, two of the proposed solutions do follow some rules (another rule implemented in the algorithms is that the confidence interval is constructed from redrawn successes taken in descending order of their probabilities, most likely first).

The series of numbers giving one boundary may be inspected in more detail. Consider the series for

m = 25 and

provided by Algorithm 1: N = {0, 0, 0, 0, 1, 2, 2, 3, 4, 5, 6, 7, 8, 8, 9, 10, 11, 12, 14, 15, 16, 18, 19, 21, 22, 25}. Independently of the algorithm, sample size, and imposed significance level, the first ($N_0$) and last ($N_m$) terms are known ($N_0 = 0$, $N_m = m$), as well as the actual non-coverage probabilities associated with a draw of 0 and of $m$ successes (0%; the $[0, 0]$ and $[m, m]$ intervals cover all possible cases, see Equation (3)). Since the CI of $x$ is $[N_x, m - N_{m-x}]$ (see Section 5.1), bigger numbers in the series mean narrower confidence intervals (the bounds are closer together), while smaller numbers in the series mean wider confidence intervals (the bounds are farther apart). This is an important property which allows us to simplify the reasoning.

Nevertheless, someone may argue that this is not enough (see [17], patched in [18]), so it should be analyzed what happens when arbitrary (but with a sort of common sense) rules are imposed (see Equations 1.3 and 1.4 in [17]). Moving from the CI for

m = 25 to the CI for

m = 26 (the same applies to any two successive sample sizes), one may argue that the lower bound of the CI for x successes from m + 1 draws should not be greater than the lower bound of the CI for x successes from m draws (the general trend in the columns of Table A1 and Table A2 should be considered). As a result, to construct a series N for m + 1 from the series N for m, all that is needed is to append the new sample size (m + 1) at the end, thus getting a series of bounds which (the first ones) follow the defined rule and may contain numbers generally higher than the desired ones. Such a series should be labeled

(

series for

m = 26 and

is

= {0, 0, 0, 0, 1, 2, 2, 3, 4, 5, 6, 7, 8, 8, 9, 10, 11, 12, 14, 15, 16, 18, 19, 21, 22, 25, 26}). By exactly mirrored reasoning, adding a 0 at the beginning yields a series that gives too-wide, but still rule-complying, intervals. Such a series may contain numbers generally smaller than the desired ones and should be labeled

(

series for

m = 26 and

is

= {0, 0, 0, 0, 0, 1, 2, 2, 3, 4, 5, 6, 7, 8, 8, 9, 10, 11, 12, 14, 15, 16, 18, 19, 21, 22, 25}).
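The construction of the two bracketing series can be sketched as follows. The names `bracket_series` and `within_bracket` are hypothetical, and the element-wise check is only an assumed reading of the rule that a rule-complying series for m + 1 must lie between the two extremes.

```python
# Lower-bound series quoted in the text (Algorithm 1, m = 25).
N25 = [0, 0, 0, 0, 1, 2, 2, 3, 4, 5, 6, 7, 8, 8, 9, 10, 11, 12,
       14, 15, 16, 18, 19, 21, 22, 25]

def bracket_series(series):
    """Build the two extreme series for m + 1 from the series for m."""
    m = len(series) - 1
    upper = series + [m + 1]   # append the new sample size: numbers too high
    lower = [0] + series       # prepend a zero: numbers too low
    return lower, upper

def within_bracket(candidate, lower, upper):
    """Check (assumed rule) that a candidate lies between the two extremes."""
    return (len(candidate) == len(lower) == len(upper)
            and all(l <= c <= u for l, c, u in zip(lower, candidate, upper)))

lower26, upper26 = bracket_series(N25)
print(upper26)
print(lower26)
```

Any candidate series for m = 26 that respects the rule would have to pass `within_bracket(candidate, lower26, upper26)`, which makes the size of the search space explicit.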

The

and

series define a trap for any confidence interval following an extended monotonicity rule (if

is the best option then

for

). Why not find the best one? It is a matter of extensive search and the algorithm is of no interest, since its result is a failure.

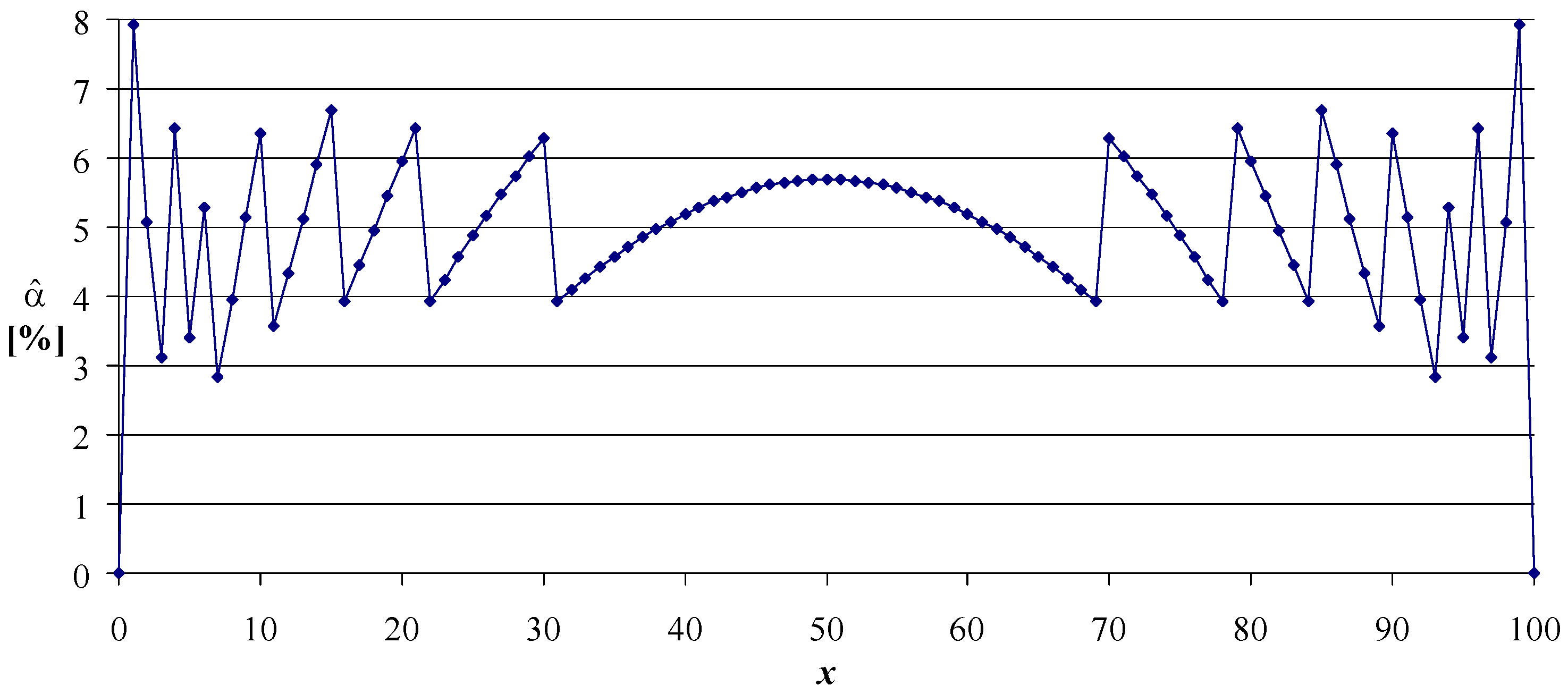

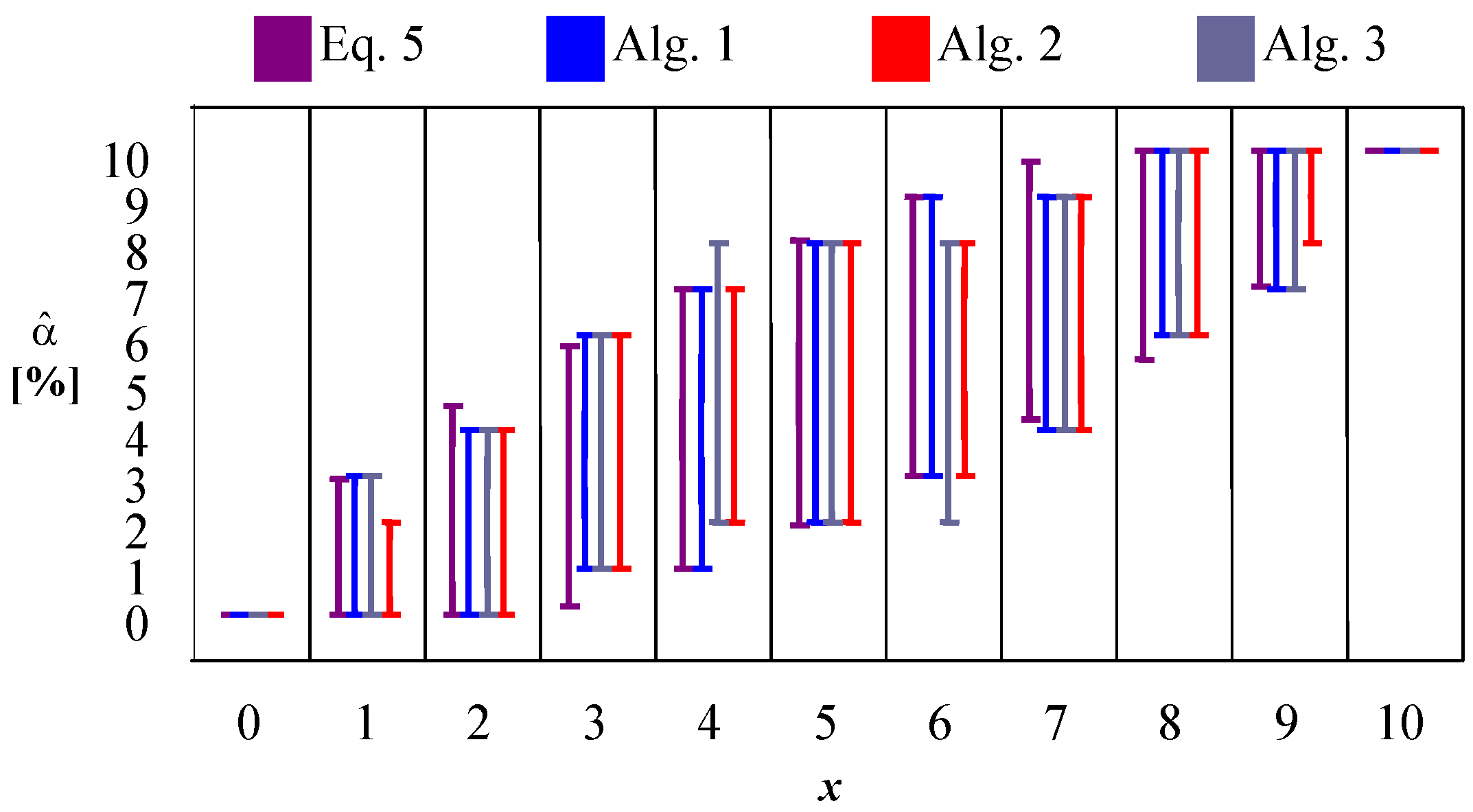

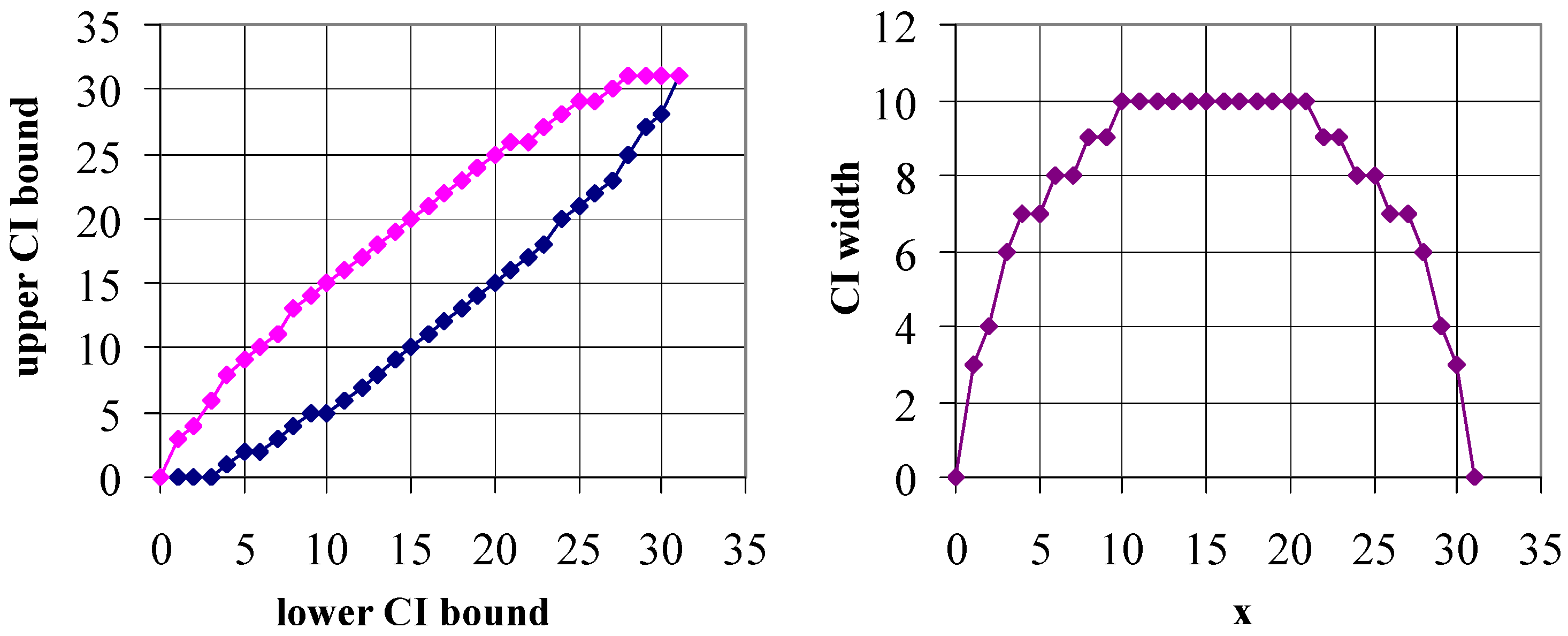

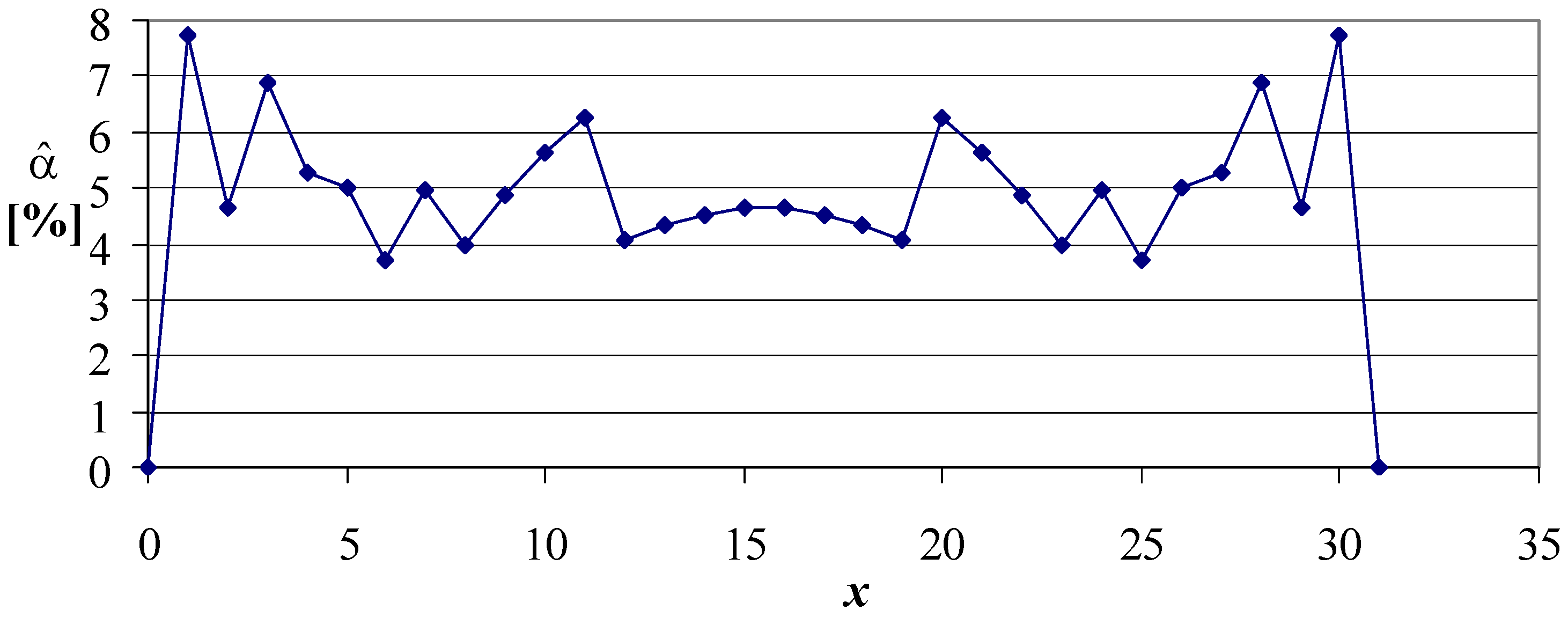

Figure 6 depicts the “optimal” solution for

m = 31,

α = 0.05, providing the smallest departure between the imposed and the actual non-coverage probability, when the intervals were constructed iteratively starting from the series of N for

m = 1 (

); raw data in

Table A4. For comparison,

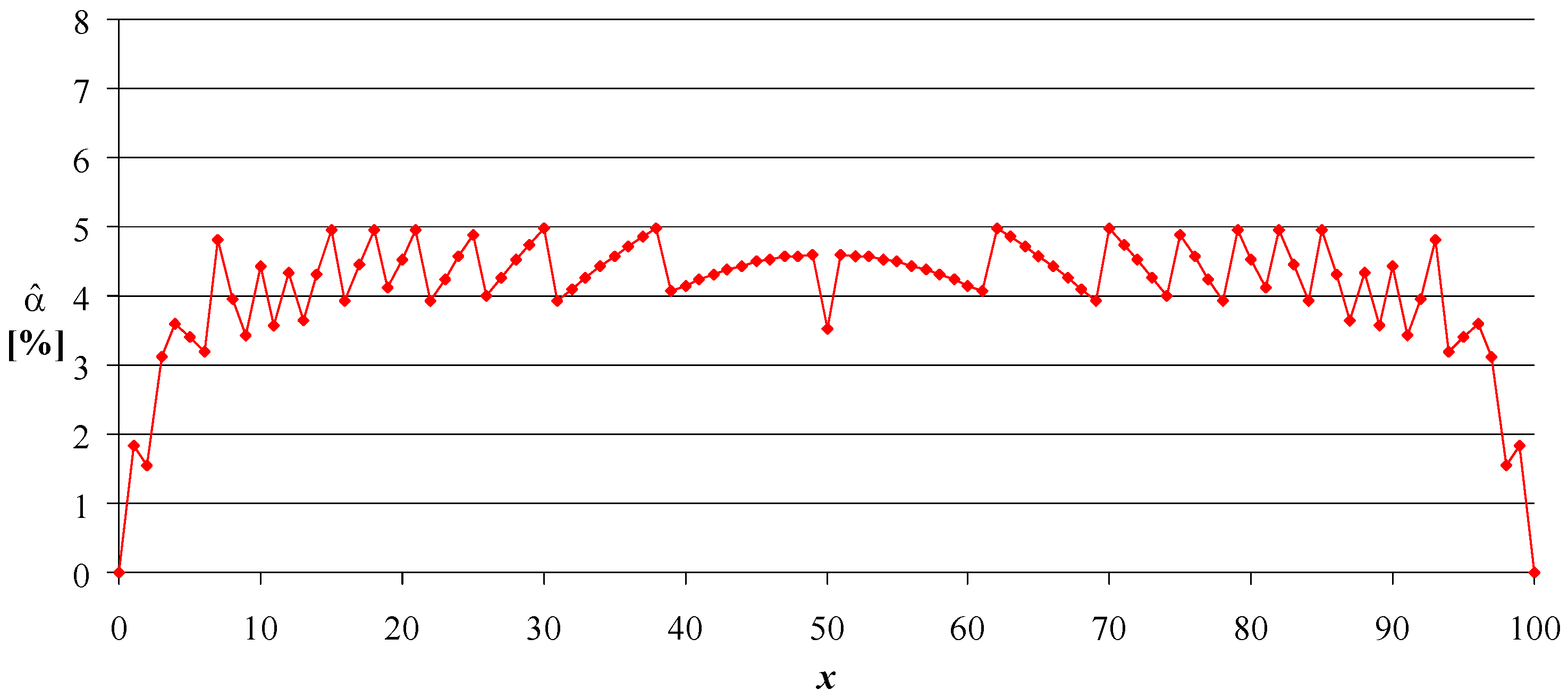

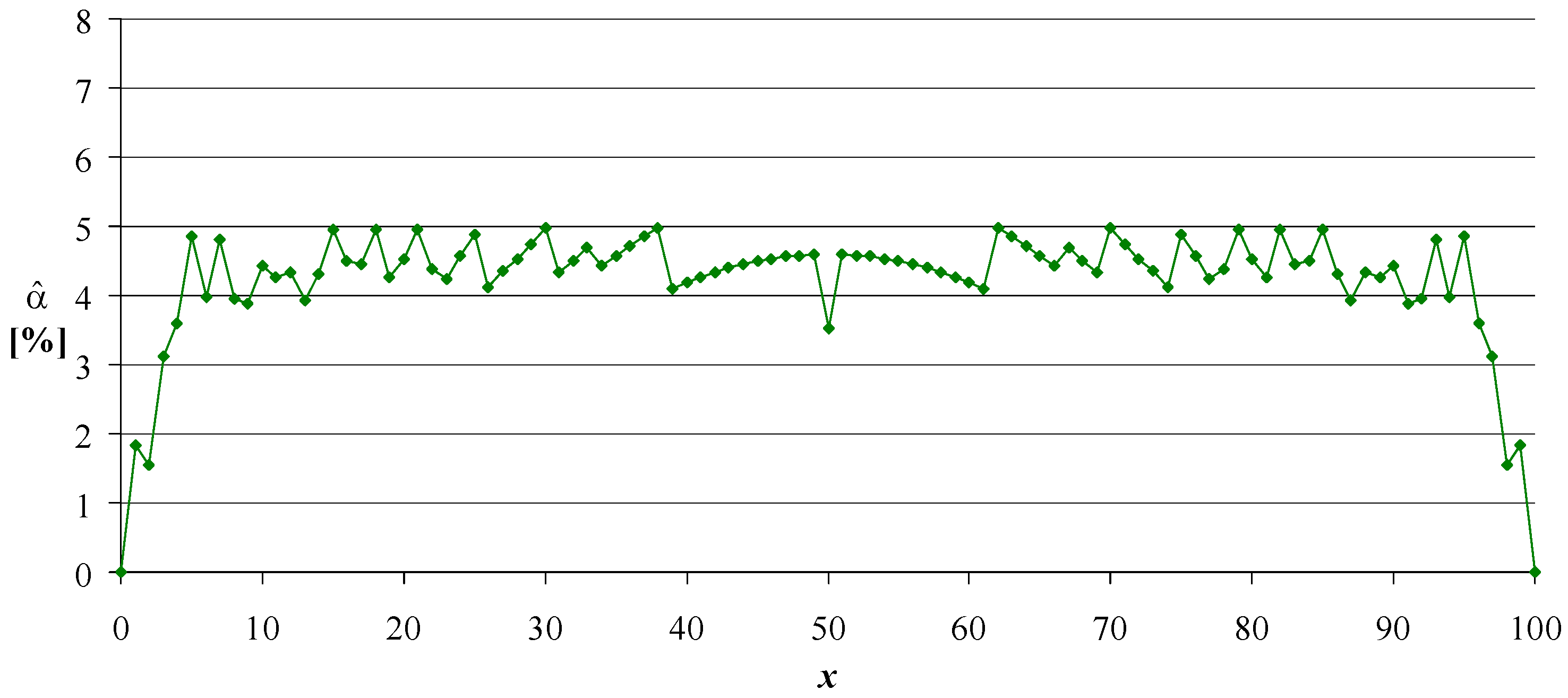

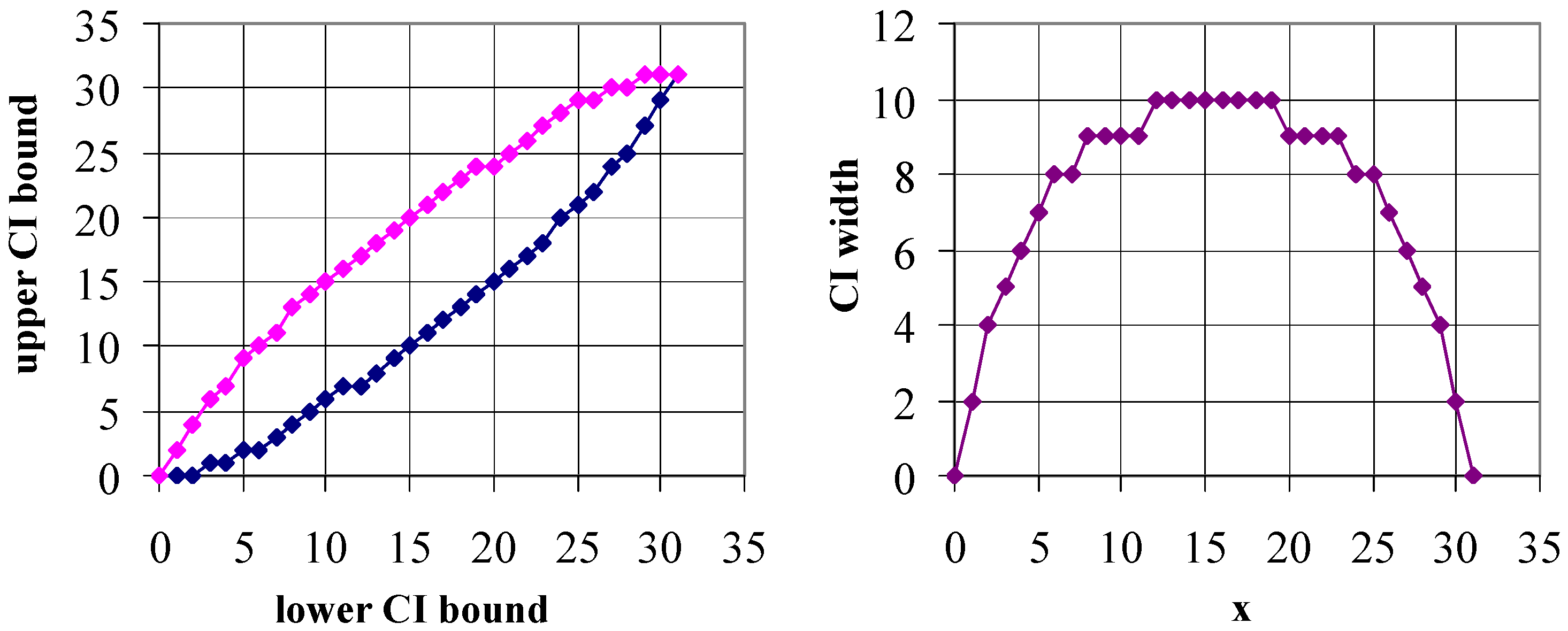

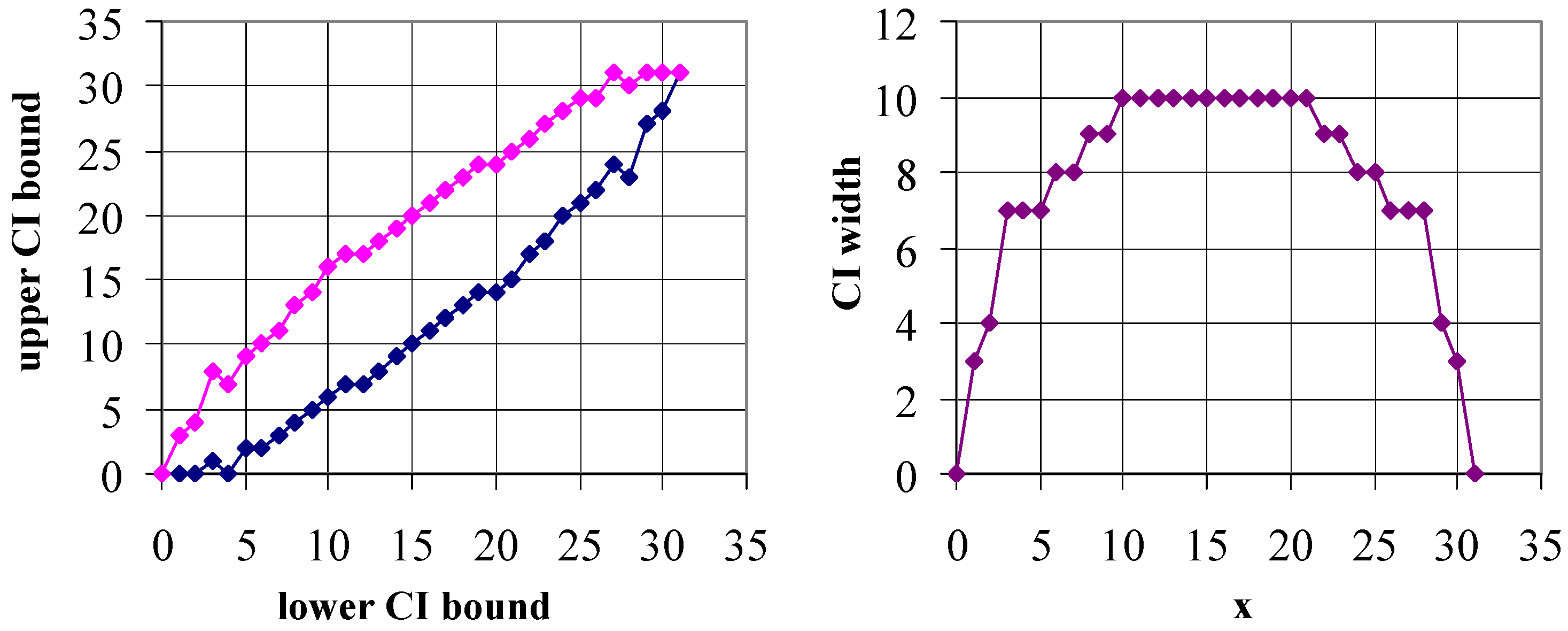

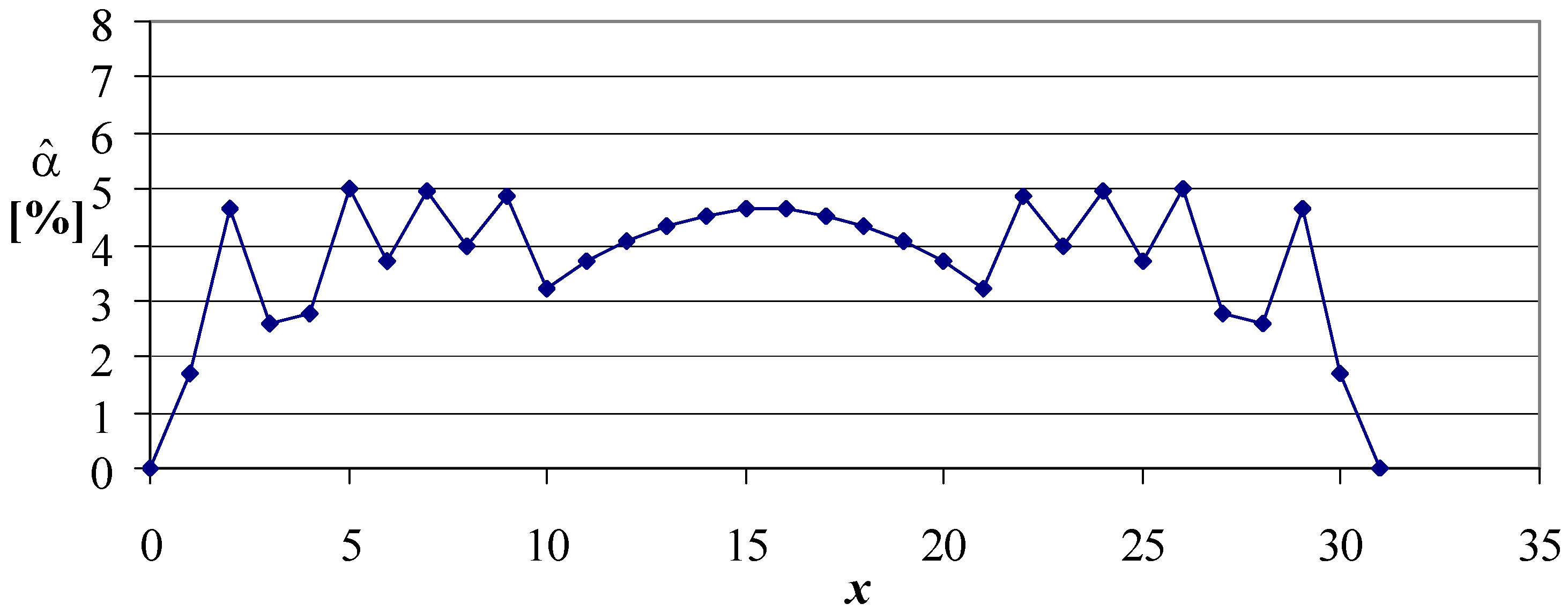

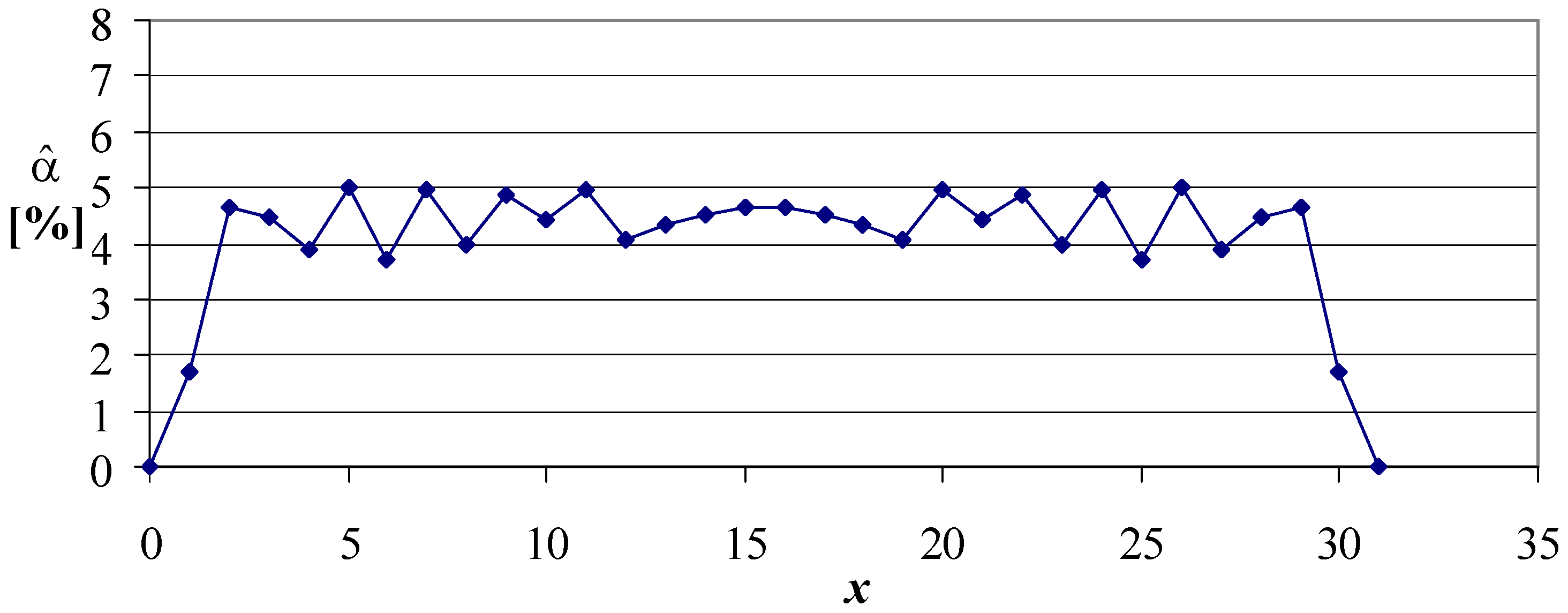

Figure 7,

Figure 8 and

Figure 9 show the confidence intervals obtained from the proposed strategies followed by the illustration of actual non-coverage probabilities (

Figure 10,

Figure 11,

Figure 12 and

Figure 13).

As can be observed from the above figures, the confidence interval boundaries of Algorithms 1 and 2 are monotonic, while those of Algorithm 3 are not (see

Figure 9 left). Nevertheless, the confidence interval itself is monotonic and symmetric from the middle (see

Figure 9 right). In contrast, the (supposed) optimum confidence interval built on monotonies (see

Figure 6 right) is symmetric but not monotonic relative to the center.

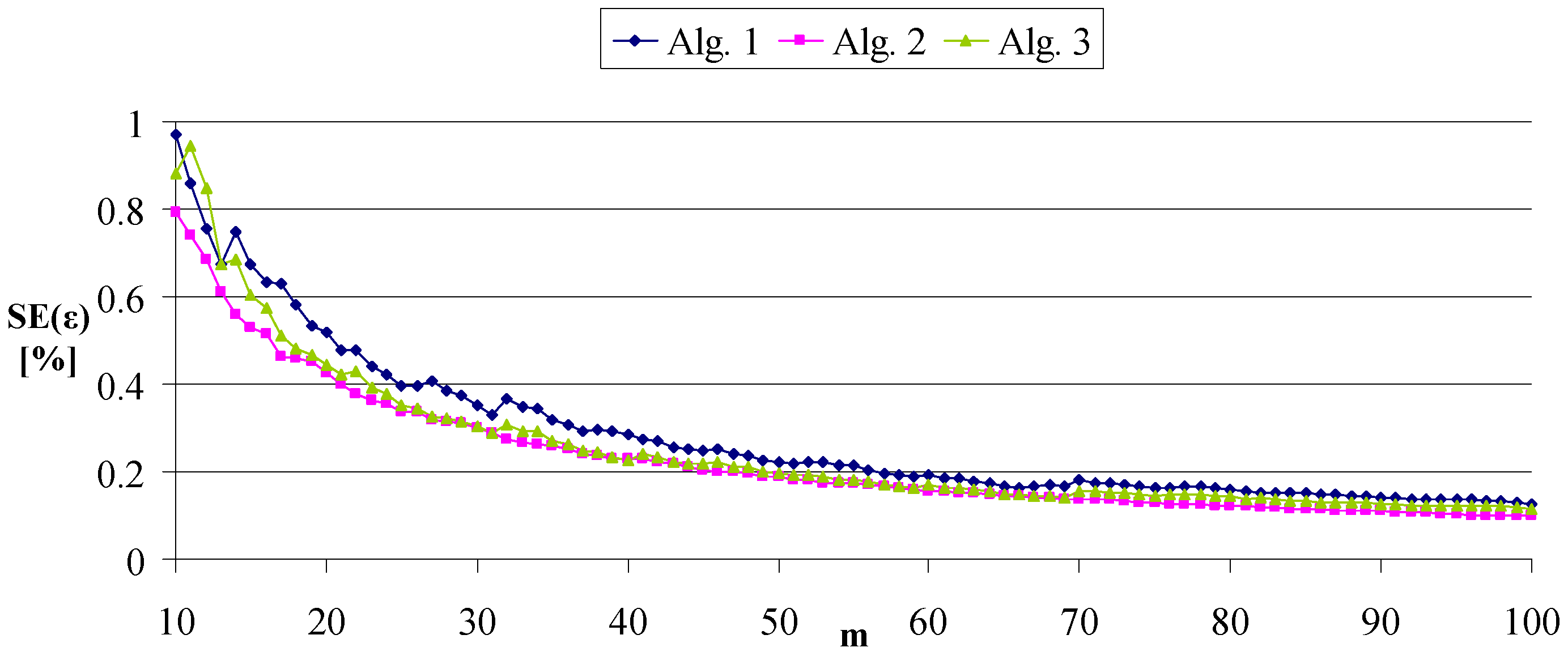

The standard error of the estimate (SE) as function of the sample size for samples from 10 to 100 shows the expected behavior of increasing precision with sample size (

Figure 14).

In retrospect, the improvement proposed by Algorithm 3 vs. Algorithms 1 and 2 seems insignificant (see SE in Figure 12 vs. Figure 13) in relation to its effects: the intervals, even if their width is monotonic relative to the middle (Figure 8 right and Figure 9 right), are still in a zig-zag form (see Figure 8 left and Figure 9 left).

Once we have the confidence interval for a binomially distributed variable (such as the ones proposed by Algorithms 1 to 3), we can derive the confidence interval for the proportion (Equation (11)).
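As a minimal sketch of this step, the interval for the proportion can be obtained by dividing the count bounds by the sample size. This division is an assumption about the form of Equation (11), and `proportion_ci` is a hypothetical name; the series is the one quoted in Section 5.3 for m = 25 (Algorithm 1).

```python
# Lower-bound series quoted in Section 5.3 (Algorithm 1, m = 25).
N = [0, 0, 0, 0, 1, 2, 2, 3, 4, 5, 6, 7, 8, 8, 9, 10, 11, 12,
     14, 15, 16, 18, 19, 21, 22, 25]

def proportion_ci(series, x):
    """Interval for the proportion x / m, assuming count bounds are divided by m."""
    m = len(series) - 1
    low, high = series[x], m - series[m - x]  # count-scale bounds
    return low / m, high / m

print(proportion_ci(N, 1))   # bounds for the proportion 1/25
print(proportion_ci(N, 12))  # bounds for the proportion 12/25
```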

Roughly the same calculations are involved when the binomial distribution (Equation (

3)) is replaced with the multinomial distribution (Equation (12)), thus permitting the usual factorial analysis [

31] to be enriched with statistical significance.

The multinomial distribution proportion is a generalization from the binomial distribution proportion (Equation (

12); when

Equation (

12) becomes Equation (

3)). Further research is required to adapt the procedures for calculating confidence intervals of binomially distributed samples to multinomially distributed variables and proportions.
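The reduction from the multinomial to the binomial case can be written out in standard textbook notation, which may differ from the symbols used in Equations (3) and (12); here $k$ denotes the number of categories, $x_i$ the observed counts and $p_i$ the category probabilities, all of which are assumed names.

$$
P(x_1, \ldots, x_k) = \frac{m!}{x_1! \cdots x_k!} \prod_{i=1}^{k} p_i^{x_i}, \qquad \sum_{i=1}^{k} x_i = m, \quad \sum_{i=1}^{k} p_i = 1 .
$$

For $k = 2$, setting $x_1 = x$, $x_2 = m - x$, $p_1 = p$ and $p_2 = 1 - p$ gives

$$
P(x, m - x) = \frac{m!}{x!\,(m - x)!}\, p^{x} (1 - p)^{m - x} = \binom{m}{x} p^{x} (1 - p)^{m - x},
$$

which is the binomial probability mass function.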

Since the Poisson distribution can be applied to systems with a large number of possible events, each of which is rare, adapting the approach to it is left for future work.