3.1. Additive Bias Field

In a traditional multiplicative model, the fitting intensity of a local region must be obtained by solving the Euler-Lagrange equation of the energy function, and the multiplications inside the convolutions add to the algorithm's complexity. By introducing Retinex theory into the multiplicative model, the reflectance and the bias field can be separated, because the logarithm turns their product into a sum. In the Euler-Lagrange equation, the resulting additive model requires fewer calculations than the multiplicative model.
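To make the multiplicative-to-additive step concrete, the minimal sketch below (an illustration, not the paper's implementation) multiplies a hypothetical bias sequence by a hypothetical reflectance sequence and checks that, after taking logarithms, the product becomes a term-by-term sum. The helper `to_log_domain` and its offset `eps` are assumptions introduced here.

```python
import math


def to_log_domain(pixels, eps=1e-6):
    """Map non-negative intensities to the logarithmic domain.

    eps is an assumed small offset that keeps log() defined for zero-valued pixels.
    """
    return [math.log(p + eps) for p in pixels]


# Multiplicative model (noise omitted): observed = bias * reflectance.
bias = [0.5, 0.8, 1.2, 1.6]          # slowly varying illumination
reflectance = [0.2, 0.2, 0.9, 0.9]   # piecewise-constant background / target
observed = [b * r for b, r in zip(bias, reflectance)]

# In the log domain the product turns into a sum of the two components.
log_observed = to_log_domain(observed, eps=0.0)
log_sum = [math.log(b) + math.log(r) for b, r in zip(bias, reflectance)]

for lhs, rhs in zip(log_observed, log_sum):
    assert math.isclose(lhs, rhs, abs_tol=1e-12)
```

Once the two factors are additive, a fitting step can subtract one from the other instead of multiplying inside convolutions, which is where the computational saving comes from.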

As mentioned in Section 2.2, the image domain is divided into two parts. First, the input image is transformed into the logarithmic domain. The

denotes the input image in the logarithmic domain,

is its high-frequency component, and

is its low-frequency component.
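The split of the log-domain image into a low-frequency part and a high-frequency part can be sketched as below. This passage does not say which filter yields the low-frequency component, so a 1D box filter is used purely as an assumed stand-in; the high-frequency part is defined as the remainder, so the two parts always add back to the input. Function names are hypothetical.

```python
def moving_average(signal, radius):
    """Box-filter low-pass of a 1D signal; windows are clipped at the borders."""
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = signal[lo:hi]
        out.append(sum(window) / len(window))
    return out


def split_frequencies(log_signal, radius=2):
    """Return (low, high) such that low[i] + high[i] == log_signal[i]."""
    low = moving_average(log_signal, radius)
    high = [v - l for v, l in zip(log_signal, low)]
    return low, high


log_image_row = [0.0, 0.1, 0.2, 1.3, 1.4, 1.5, 1.6]  # toy log-domain scanline with a step
low, high = split_frequencies(log_image_row, radius=1)
```

The step between the third and fourth samples shows up as the largest high-frequency values, while the smooth ramp on either side stays in the low-frequency part.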

Formula (13) can be alternatively presented as follows:

To handle images with inhomogeneous intensity effectively, the following two hypotheses are made on the basis of Formula (17):

Hypothesis 1. This paper divides the image domain into n disjoint local regions (here, n = 2); each region is described as and the image domain is . We further assume that the bias field B varies smoothly within each local region of inhomogeneous intensity, and each disjoint local region is assigned a fitting function .

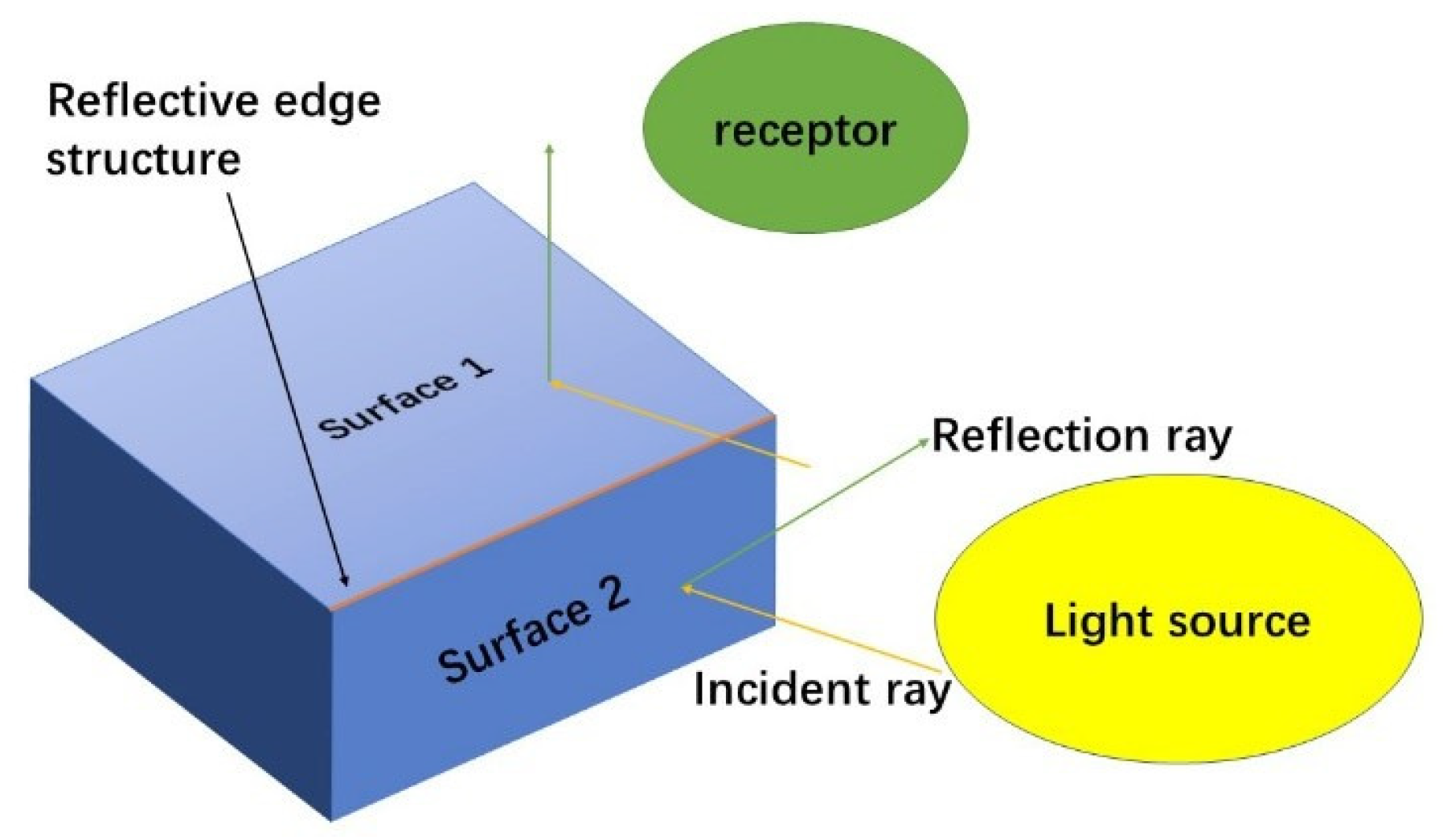

Hypothesis 2. Since the reflectance behaves like a derivative in the image domain, the image can be described with Retinex theory. Here, represents the inhomogeneous intensity (bias component), is the reflectance, which has derivative-like characteristics, is the whole image in the logarithmic domain, and is zero-mean Gaussian noise. The image is modeled as follows:

3.2. The Second Derivative of the Image—Reflectance

This paper first computes the reflectance

. Using the Laplace operator to calculate the second derivative over the image domain, the reflection edge structure of the image is obtained as

The following paragraphs explain why the reflection edge structure can be obtained by second-order differentiation of the image domain.
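For reference, the Laplace operator on a pixel grid is commonly discretised with the 5-point stencil. The sketch below assumes that stencil and replicated borders (neither is specified in the text); on a vertical step it produces a positive value on one side of the edge and a negative value on the other, the zero-crossing behaviour discussed for Figure 2.

```python
def laplacian(img):
    """5-point discrete Laplacian of a 2D list of numbers.

    Border pixels are handled by replicating the nearest edge value (an assumption).
    """
    h, w = len(img), len(img[0])

    def px(i, j):
        # Clamp the indices so out-of-range neighbours reuse the border pixel.
        return img[min(max(i, 0), h - 1)][min(max(j, 0), w - 1)]

    return [[px(i - 1, j) + px(i + 1, j) + px(i, j - 1) + px(i, j + 1) - 4 * px(i, j)
             for j in range(w)]
            for i in range(h)]


step = [[0, 0, 1, 1] for _ in range(3)]   # dark background | bright target
edges = laplacian(step)
# edges[1] == [0, 1, -1, 0]: the sign flips across the boundary
```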

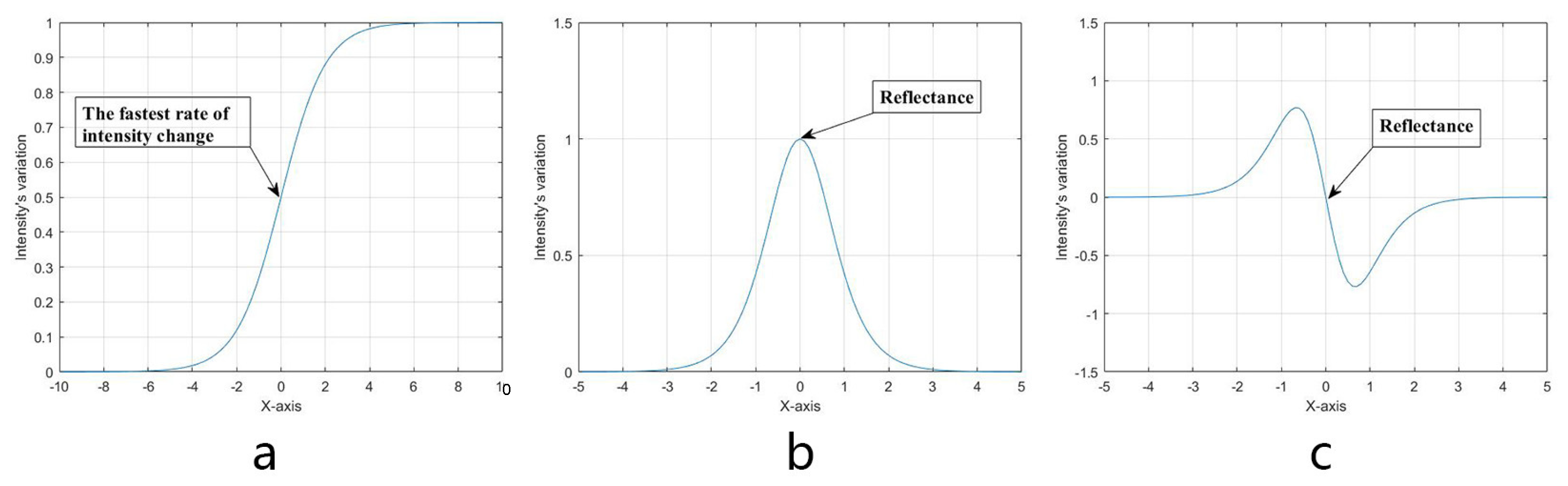

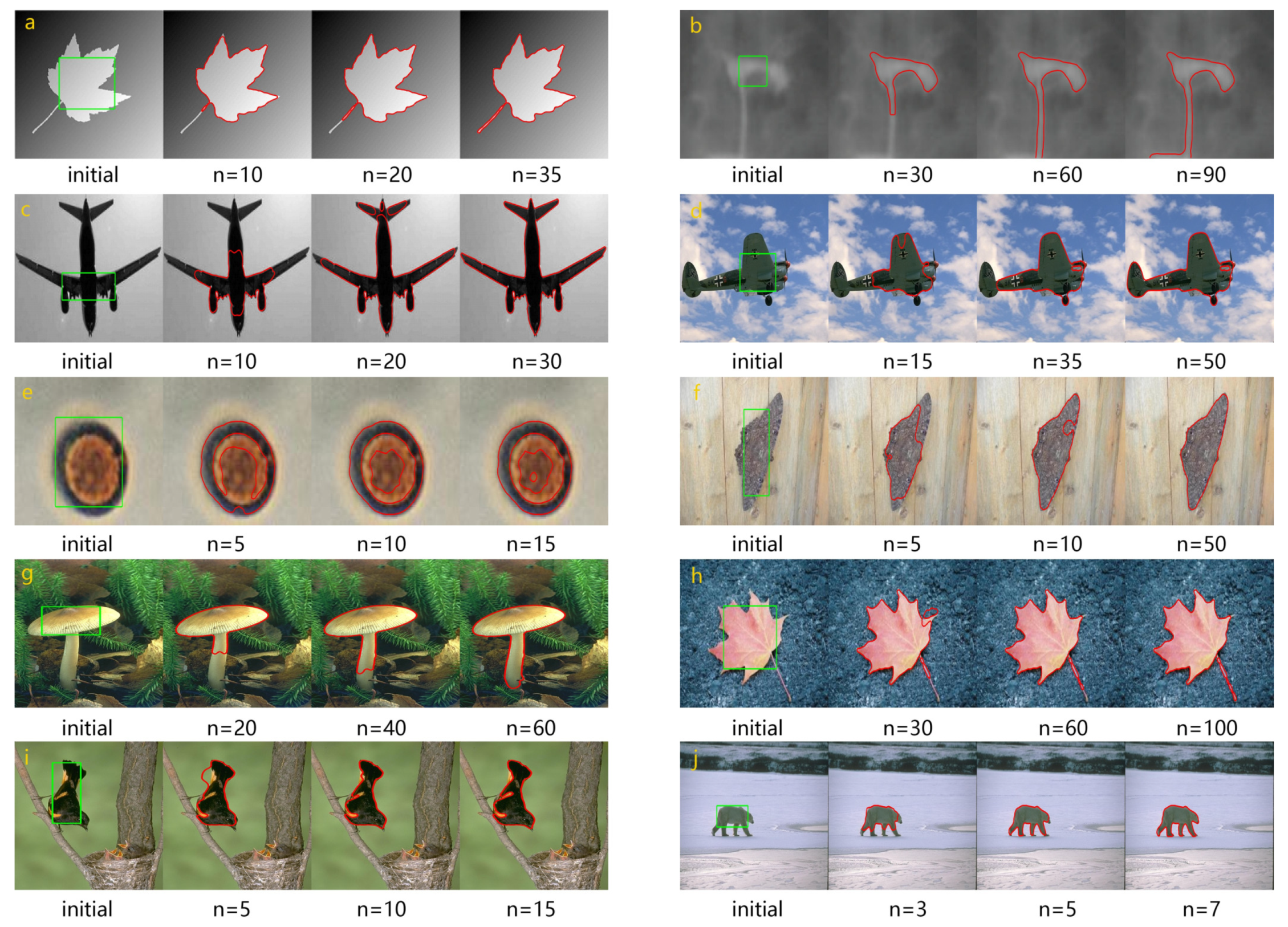

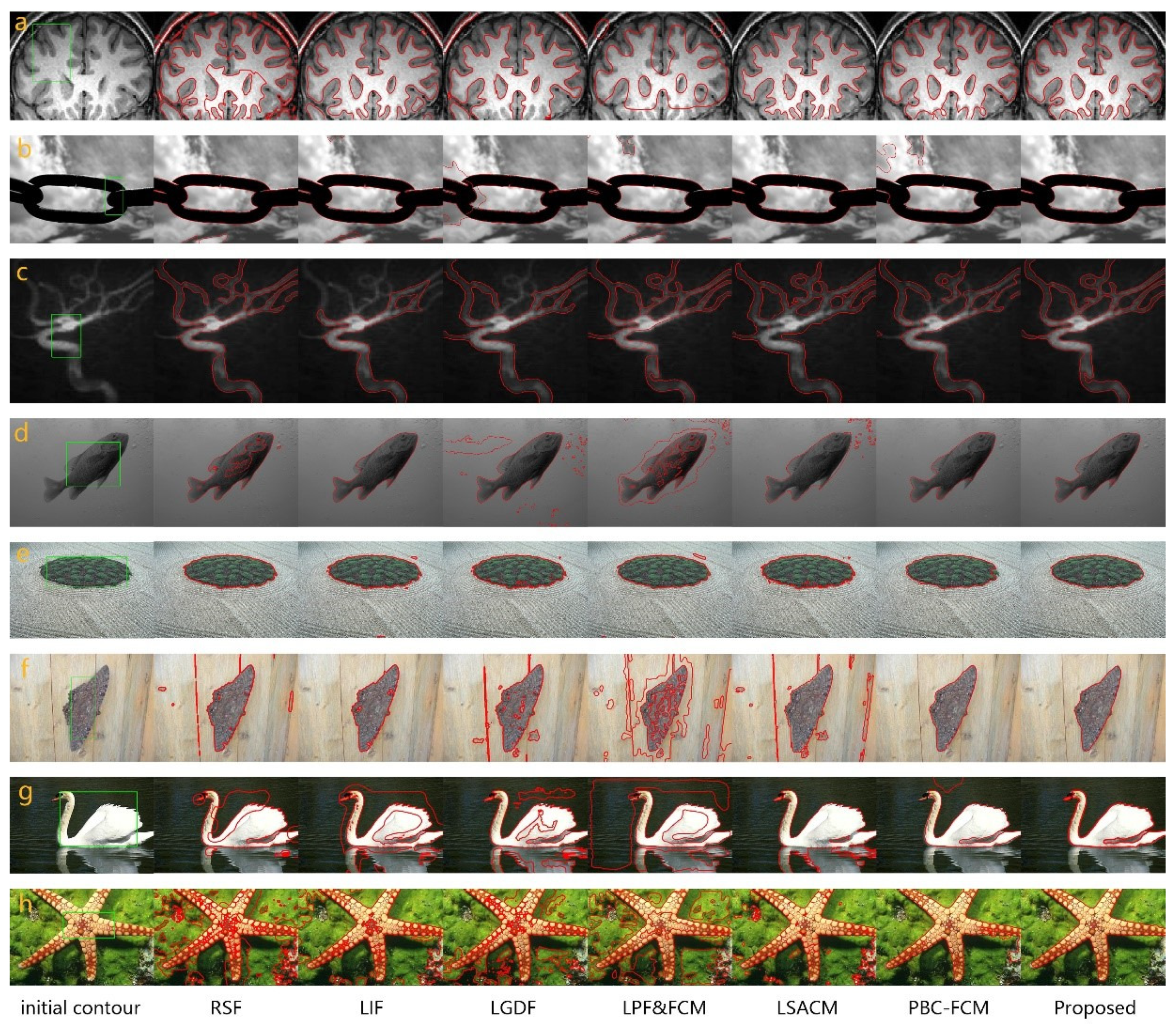

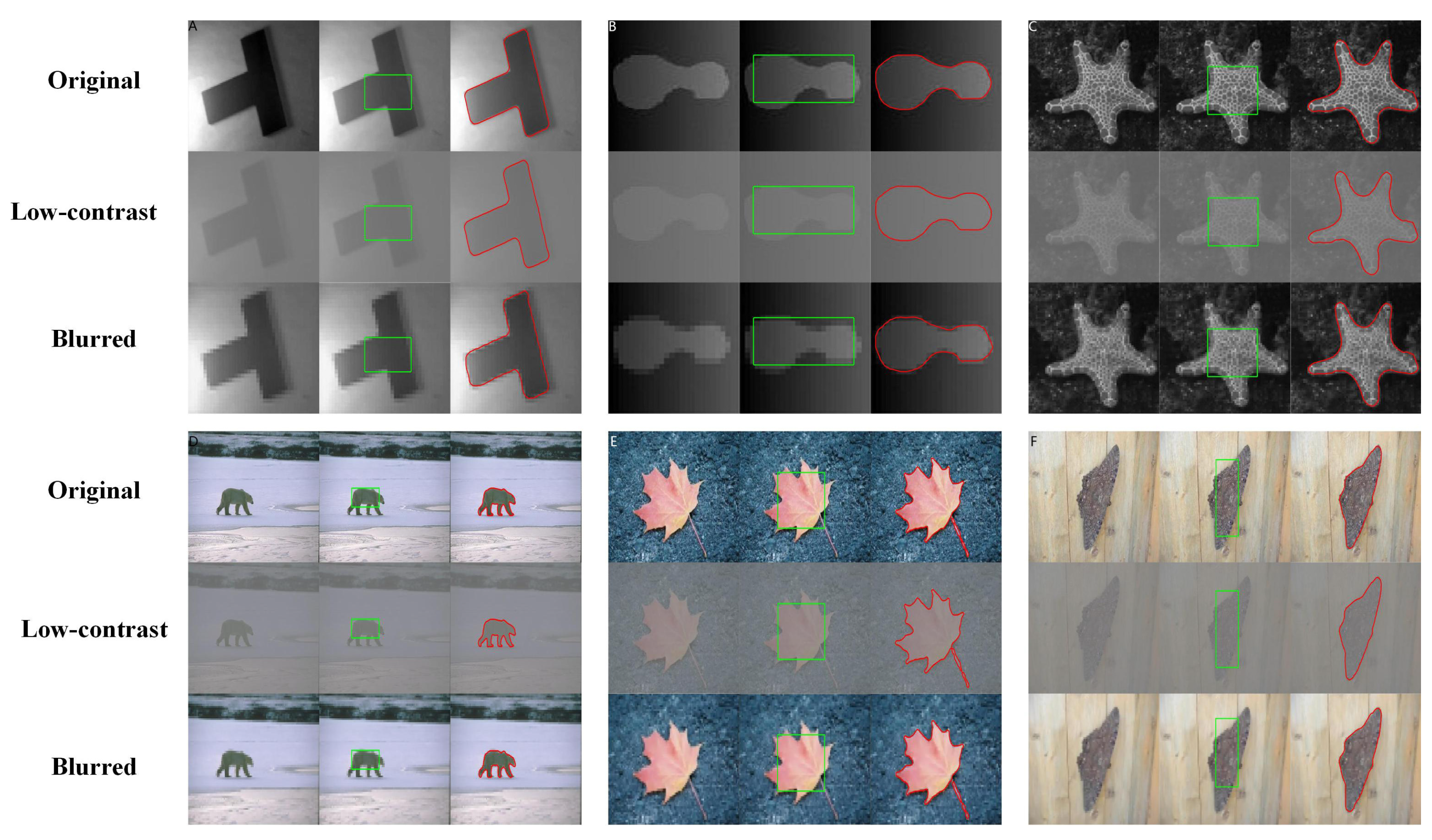

In most images, the intensity changes sharply at the boundary between the target and the background. In graph a of Figure 2, the curve represents the intensity profile across the image domain. In the transition from the background to the target, the intensity rises from low to high. The red circle marks the most abrupt change, which is the target boundary to be segmented.

To make this phenomenon more evident, the first-order derivative of the intensity profile is computed with a gradient operator, and the result is plotted in graph b of Figure 2. The boundary between background and foreground now appears as the highest point. Compared with graph a, this peak clearly reflects both the edge structure and the trend of the intensity change.

Following the same logic, the second derivative is taken with the Laplace operator, yielding graph c of Figure 2. The point where the curve crosses zero between the peak and the trough is the target boundary to be segmented.
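The behaviour described for Figure 2 can be reproduced on a synthetic 1D profile. In the sketch below, a logistic curve stands in for the background-to-target transition; the curve, its width and the difference formulas are assumptions, not the data behind Figure 2. The first difference peaks at the boundary, and the second difference crosses zero at the same index, between its positive peak and its negative trough.

```python
import math


def profile(n=21, center=10.0, width=1.5):
    """Smooth background-to-target intensity transition (logistic curve)."""
    return [1.0 / (1.0 + math.exp(-(x - center) / width)) for x in range(n)]


def first_diff(f):
    """Central difference (f[i+1] - f[i-1]) / 2; the two end samples are set to 0."""
    return [0.0] + [(f[i + 1] - f[i - 1]) / 2.0 for i in range(1, len(f) - 1)] + [0.0]


def second_diff(f):
    """Discrete 1D Laplacian f[i-1] - 2 f[i] + f[i+1]; the two end samples are set to 0."""
    return [0.0] + [f[i - 1] - 2.0 * f[i] + f[i + 1] for i in range(1, len(f) - 1)] + [0.0]


f = profile()
d1, d2 = first_diff(f), second_diff(f)

peak = max(range(len(d1)), key=lambda i: d1[i])        # graph b: highest point
top = max(range(len(d2)), key=lambda i: d2[i])         # graph c: positive peak
trough = min(range(len(d2)), key=lambda i: d2[i])      # graph c: negative trough
zero_cross = min(range(top, trough + 1), key=lambda i: abs(d2[i]))
print(peak, zero_cross)  # 10 10
```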

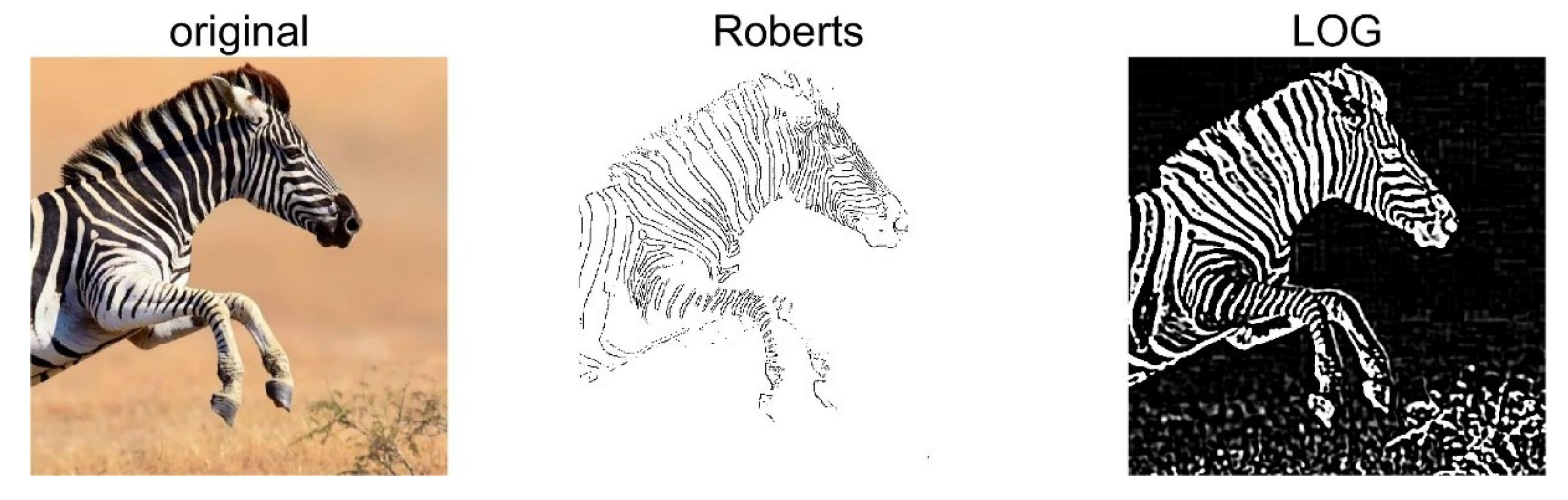

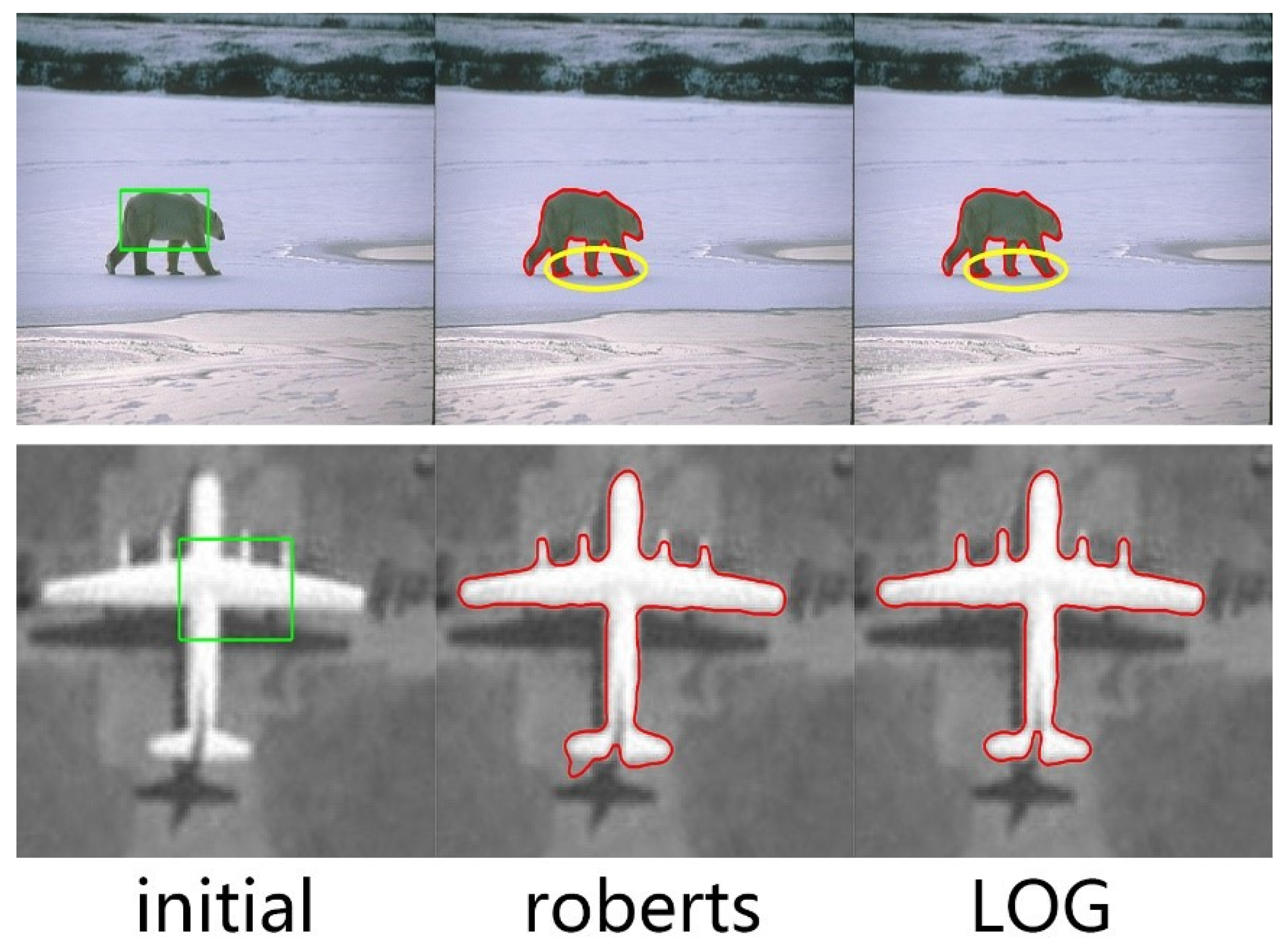

Figure 3 uses an image of a zebra to illustrate the difference between a first-order and a second-order differential operator, comparing the boundaries extracted by the Roberts operator (first order) and the Laplacian of Gaussian (LoG, second order). The result shows that the LoG operator extracts boundaries better than the Roberts operator.
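The two operators compared in Figure 3 can be sketched on a synthetic vertical step rather than the zebra image. The Roberts cross responds with a single ridge at the edge, while the LoG response changes sign across it. The kernel width `sigma = 1`, the 7 x 7 kernel size, the zero-sum adjustment and the replicated borders are all assumptions made for this illustration.

```python
import math


def roberts(img):
    """Roberts cross magnitude: |diagonal difference| + |anti-diagonal difference|."""
    h, w = len(img), len(img[0])
    return [[abs(img[i][j] - img[i + 1][j + 1]) + abs(img[i + 1][j] - img[i][j + 1])
             for j in range(w - 1)]
            for i in range(h - 1)]


def log_kernel(sigma=1.0, radius=3):
    """Sampled Laplacian-of-Gaussian kernel, shifted so that its entries sum to zero."""
    k = []
    for y in range(-radius, radius + 1):
        row = []
        for x in range(-radius, radius + 1):
            r2 = (x * x + y * y) / (2.0 * sigma ** 2)
            row.append(-(1.0 - r2) * math.exp(-r2) / (math.pi * sigma ** 4))
        k.append(row)
    mean = sum(map(sum, k)) / (2 * radius + 1) ** 2
    return [[v - mean for v in row] for row in k]


def convolve(img, kernel):
    """Same-size 2D filtering with edge replication (symmetric kernel, so no flip needed)."""
    h, w, r = len(img), len(img[0]), len(kernel) // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            s = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    s += kernel[di + r][dj + r] * img[ii][jj]
            row.append(s)
        out.append(row)
    return out


step = [[0, 0, 0, 0, 1, 1, 1, 1] for _ in range(8)]   # vertical edge between columns 3 and 4
rob = roberts(step)                                   # rob[0] == [0, 0, 0, 2, 0, 0, 0]
resp = convolve(step, log_kernel(sigma=1.0, radius=3))
# resp[4][3] > 0 > resp[4][4]: a zero crossing located at the edge
```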

The preprocessed edge structure

requires only a single second-derivative operation over the image domain, which removes the need to take the partial derivative of the edge structure

when the energy function is minimized. This avoids the repeated convolutions otherwise required to compute the edge structure

. Accordingly, our additive bias field formula is rewritten as
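A minimal sketch of this preprocessing idea follows. It assumes, for illustration only, that the edge structure is the discrete second derivative of the log image and that it is removed by subtraction once, before any iteration starts; the function names are hypothetical.

```python
def second_derivative(signal):
    """1D discrete Laplacian f[i-1] - 2 f[i] + f[i+1] with replicated end samples."""
    n = len(signal)
    return [signal[max(i - 1, 0)] - 2 * signal[i] + signal[min(i + 1, n - 1)]
            for i in range(n)]


def precompute_edge_free_input(log_signal):
    """Compute the edge (reflectance) structure once and remove it from the log image.

    The residual is what every later bias / level-set iteration would read, so the
    second derivative never has to be recomputed inside the iteration loop.
    """
    edge = second_derivative(log_signal)
    residual = [v - e for v, e in zip(log_signal, edge)]
    return edge, residual


edge, residual = precompute_edge_free_input([0, 1, 2, 3, 4])
# edge == [1, 0, 0, 0, -1]; residual == [-1, 1, 2, 3, 5]
```

The nonzero end values come only from the replicated borders; in the interior of a linear ramp the second derivative vanishes.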

3.3. Criterion Function

According to the two hypotheses and the image model proposed in Section 3.2, Formula (18) is further improved. First, an initial contour line c is given as the dividing line between the two regions

, and the image domain

is divided into two parts. Since the

function can only use data from a very narrow band around the boundary line c, a Gaussian truncation function

is introduced on the contour line c. Define a circle with a radius of

whose center is a point

on the initial contour line c:

. The Gaussian truncation function is nonzero only within this neighborhood, and

outside this neighborhood.
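A discrete version of the truncated Gaussian function can be sketched as follows. It uses the form common in local-intensity-clustering level set models: a Gaussian kept only inside a disc of radius `rho` and normalised so that its entries sum to 1. The names `sigma` and `rho` and the normalisation are assumptions, since the exact definition is not reproduced in this extracted text.

```python
import math


def truncated_gaussian(sigma, rho):
    """Discrete truncated Gaussian kernel on the integer grid.

    Entries are proportional to exp(-|u|^2 / (2 sigma^2)) inside the disc |u| <= rho
    and exactly 0 outside it; they are normalised to sum to 1.
    """
    r = int(math.floor(rho))
    k = [[math.exp(-(x * x + y * y) / (2.0 * sigma ** 2)) if x * x + y * y <= rho * rho else 0.0
          for x in range(-r, r + 1)]
         for y in range(-r, r + 1)]
    a = sum(map(sum, k))  # normalising constant
    return [[v / a for v in row] for row in k]


K = truncated_gaussian(sigma=1.5, rho=3.0)   # 7 x 7 grid, corners fall outside the disc
```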

Hypothesis 1 states that the image domain is divided into n disjoint neighborhoods ; with n = 2, these are the regions inside and outside the initial contour. These regions induce a partition of into , as shown above . The slowly varying bias field is then incorporated into each resulting partition as . Since the partition of has been induced on each subregion of the image domain, the local field is expressed as .

3.4. Energy Function

Based on the additive bias field, a level set image segmentation model is proposed. To remove the influence of the reflection edge structure on the image, the

is obtained as

. Following the K-means clustering criterion and using a binary membership function, the following energy function is proposed:

where

is the membership function, which follows the rule

Because the

proposed in this paper represents the intensity fit of a local region, the fitted local intensity in the formula, i.e., the clustering center, is approximated as

. The formula is therefore rewritten as
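The clustering criterion can be illustrated with a plain two-class K-means in which every sample has a binary membership. This sketch is deliberately simpler than the criterion in the text: it is global rather than local and ignores the bias field, and the function names are hypothetical.

```python
def two_means(values, c1, c2, iters=20):
    """Hard two-class K-means with a binary membership function.

    u1[k] = 1 assigns sample k to class 1 and u1[k] = 0 assigns it to class 2,
    so the two memberships are disjoint and together cover every sample.
    """
    u1 = []
    for _ in range(iters):
        # Membership rule: each sample joins the nearer clustering center.
        u1 = [1 if (v - c1) ** 2 <= (v - c2) ** 2 else 0 for v in values]
        n1 = sum(u1)
        n2 = len(values) - n1
        # Center update: mean of the samples currently assigned to each class.
        if n1:
            c1 = sum(v for v, m in zip(values, u1) if m) / n1
        if n2:
            c2 = sum(v for v, m in zip(values, u1) if not m) / n2
    return c1, c2, u1


def clustering_energy(values, c1, c2, u1):
    """Sum of squared distances of every sample to the center of its own class."""
    return sum(m * (v - c1) ** 2 + (1 - m) * (v - c2) ** 2 for v, m in zip(values, u1))


samples = [0.1, 0.2, 0.15, 0.9, 1.0, 0.95]
c1, c2, u1 = two_means(samples, c1=0.0, c2=0.5)   # centers settle near 0.15 and 0.95
```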

By introducing the Gaussian truncation function, the clustering regions

can be integrated over the area

, so that the energy of every point x in the local region other than the center point can be calculated. The redefined criterion is given by the following formula:

This formula expresses only the energy of a point

in a local image domain, namely the local region centered at point

. The next step is to integrate it over all local region centers

in the whole image domain

, so that the energy to be minimized

over the image domain

is obtained. The energy formula is as follows:

To incorporate the

integration regions into the energy formula, a Lipschitz function (the level set function) is introduced to represent the two partitions of

. In this paper, the Lipschitz function is expressed as follows:

where

is the Heaviside function, the membership value of region

is

, and that of region

is

. The new energy function is obtained by exchanging the order of integration:
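In level set implementations the Heaviside function is usually replaced by a smooth approximation. The sketch below uses the regularised Heaviside and Dirac functions of the Chan-Vese model together with the two membership values M1 = H(phi) and M2 = 1 - H(phi). The choice of this particular regularisation, the width `eps`, and the convention that phi > 0 inside the contour are assumptions.

```python
import math


def heaviside(z, eps=1.0):
    """Regularised Heaviside function (Chan-Vese form)."""
    return 0.5 * (1.0 + (2.0 / math.pi) * math.atan(z / eps))


def dirac(z, eps=1.0):
    """Derivative of heaviside() with respect to z."""
    return (eps / math.pi) / (eps * eps + z * z)


def memberships(phi, eps=1.0):
    """Soft memberships: M1 = H(phi) for the inside region, M2 = 1 - H(phi) for the outside."""
    m1 = [heaviside(p, eps) for p in phi]
    return m1, [1.0 - m for m in m1]


m1, m2 = memberships([-5.0, 0.0, 5.0])
```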

According to Equation (20) proposed in this paper, the formula can be written as

To find the optimal

that minimizes the energy, treat

as fixed, take the partial derivative of the energy

with respect to

, and set it equal to 0. Solving this equation for

and using the Lipschitz function defined in this paper, the clustering center values of the

and

regions can be expressed, respectively, as
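The exact center formulas are not reproduced in this extracted text. Assuming an additive local fit b(y) + c_i, consistent with the additive model of Section 3.1 (an assumption, not the paper's printed formula), setting the derivative with respect to c_i to zero gives a weighted average of I(x) - b(y), with weights K(y - x) M_i(x). The 1D sketch below computes that closed form and checks numerically that moving the center in either direction raises the energy; all names are hypothetical.

```python
def local_energy(I, b, M, K, c):
    """Sum over y and x of K(y - x) * (I[x] - b[y] - c)**2 * M[x] on a 1D grid."""
    r = len(K) // 2
    total = 0.0
    for y in range(len(I)):
        for d in range(-r, r + 1):
            x = y + d
            if 0 <= x < len(I):
                total += K[d + r] * (I[x] - b[y] - c) ** 2 * M[x]
    return total


def optimal_center(I, b, M, K):
    """Closed-form minimiser of local_energy over c, from dE/dc = 0."""
    r = len(K) // 2
    num = den = 0.0
    for y in range(len(I)):
        for d in range(-r, r + 1):
            x = y + d
            if 0 <= x < len(I):
                w = K[d + r] * M[x]          # weight K(y - x) M(x); K is symmetric
                num += w * (I[x] - b[y])
                den += w
    return num / den


I = [1.0, 1.2, 0.9, 3.0, 3.1, 2.9]   # toy log-domain intensities
b = [0.1, 0.1, 0.2, 0.2, 0.3, 0.3]   # slowly varying bias
M = [1, 1, 1, 0, 0, 0]               # membership of region 1
K = [0.25, 0.5, 0.25]                # small symmetric kernel
c = optimal_center(I, b, M, K)
```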

A new local energy term

is defined, and the regional energy term in the formula is denoted by

; the energy function of the local regions is then expressed as

The squared absolute-value term is then expanded and the integral products are written as convolutions; here,

equals 1 inside the region of the Gaussian truncation function and 0 outside it. The energy term can thus be rewritten as
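The expansion can be checked numerically. Assuming again an additive local fit b(y) + c_i (an assumption, not the paper's printed formula), the local energy at x, the sum over y of K(y - x)(I(x) - b(y) - c_i)^2, expands into (I(x) - c_i)^2 (K conv 1)(x) - 2 (I(x) - c_i)(K conv b)(x) + (K conv b^2)(x). The three convolutions do not depend on c_i or the level set, so they can be reused. The sketch compares the direct sum with the convolution form on a 1D signal; names are hypothetical.

```python
def conv(f, K):
    """(K conv f)(x) = sum over d of K[d] f[x + d], with zero outside the domain."""
    r = len(K) // 2
    n = len(f)
    return [sum(K[d + r] * f[x + d] for d in range(-r, r + 1) if 0 <= x + d < n)
            for x in range(n)]


def local_energy_direct(I, b, K, c):
    """Direct double sum: e(x) = sum over y = x + d of K(d) * (I[x] - b[y] - c)**2."""
    r, n = len(K) // 2, len(I)
    return [sum(K[d + r] * (I[x] - b[x + d] - c) ** 2
                for d in range(-r, r + 1) if 0 <= x + d < n)
            for x in range(n)]


def local_energy_conv(I, b, K, c):
    """Same quantity from three precomputable convolutions."""
    k1 = conv([1.0] * len(I), K)          # K conv 1: equals 1 away from the borders
    kb = conv(b, K)
    kb2 = conv([v * v for v in b], K)
    return [(I[x] - c) ** 2 * k1[x] - 2 * (I[x] - c) * kb[x] + kb2[x] for x in range(len(I))]


I = [1.0, 1.2, 0.9, 3.0, 3.1, 2.9]
b = [0.1, 0.1, 0.2, 0.2, 0.3, 0.3]
K = [0.25, 0.5, 0.25]
direct = local_energy_direct(I, b, K, c=1.05)
fast = local_energy_conv(I, b, K, c=1.05)
```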

Next, the energy

is minimized with respect to

by gradient descent flow to find the appropriate

that minimizes the energy term E. Taking b as fixed and taking the partial derivative of E with respect to

, the energy driving term is obtained as

where

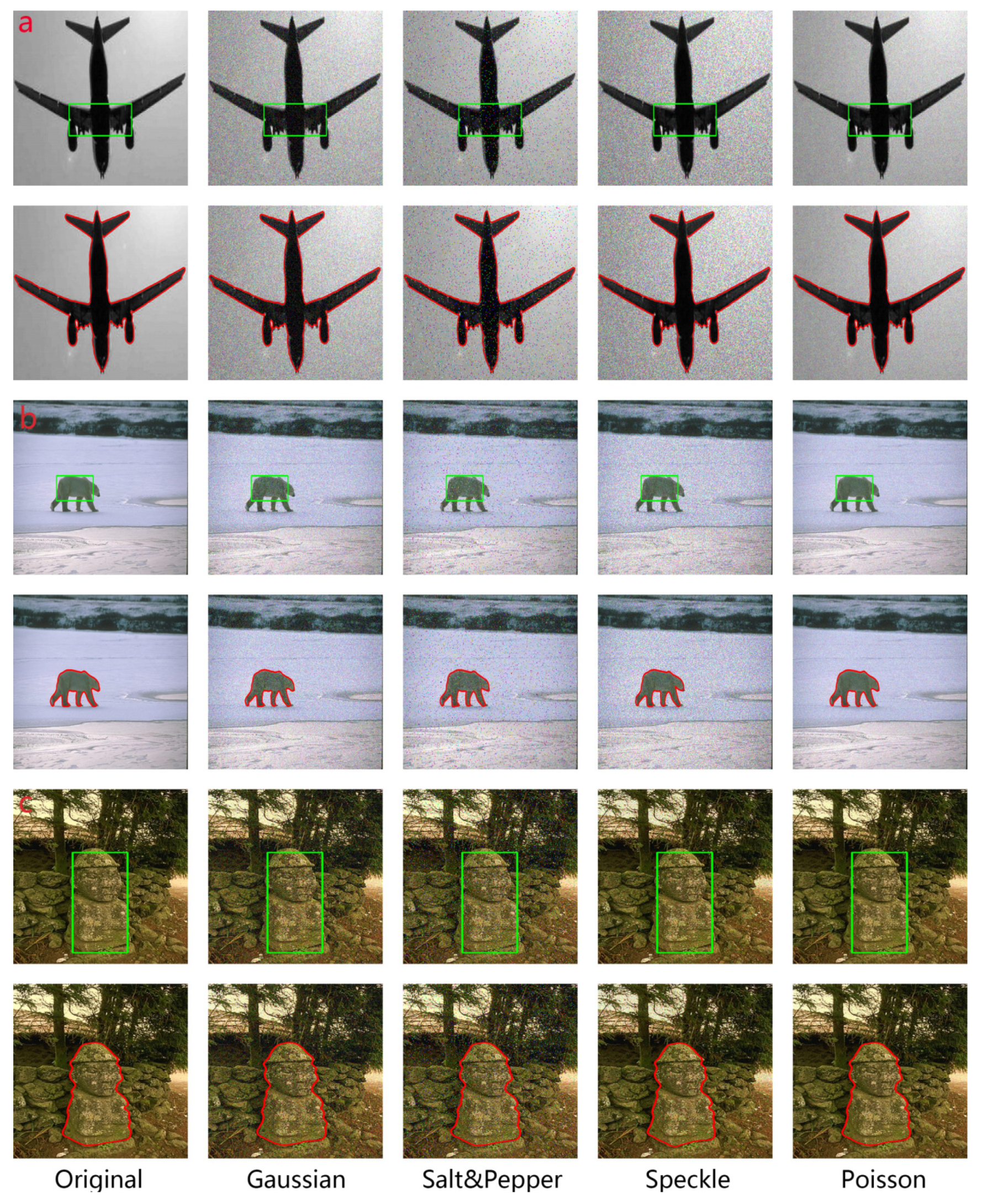

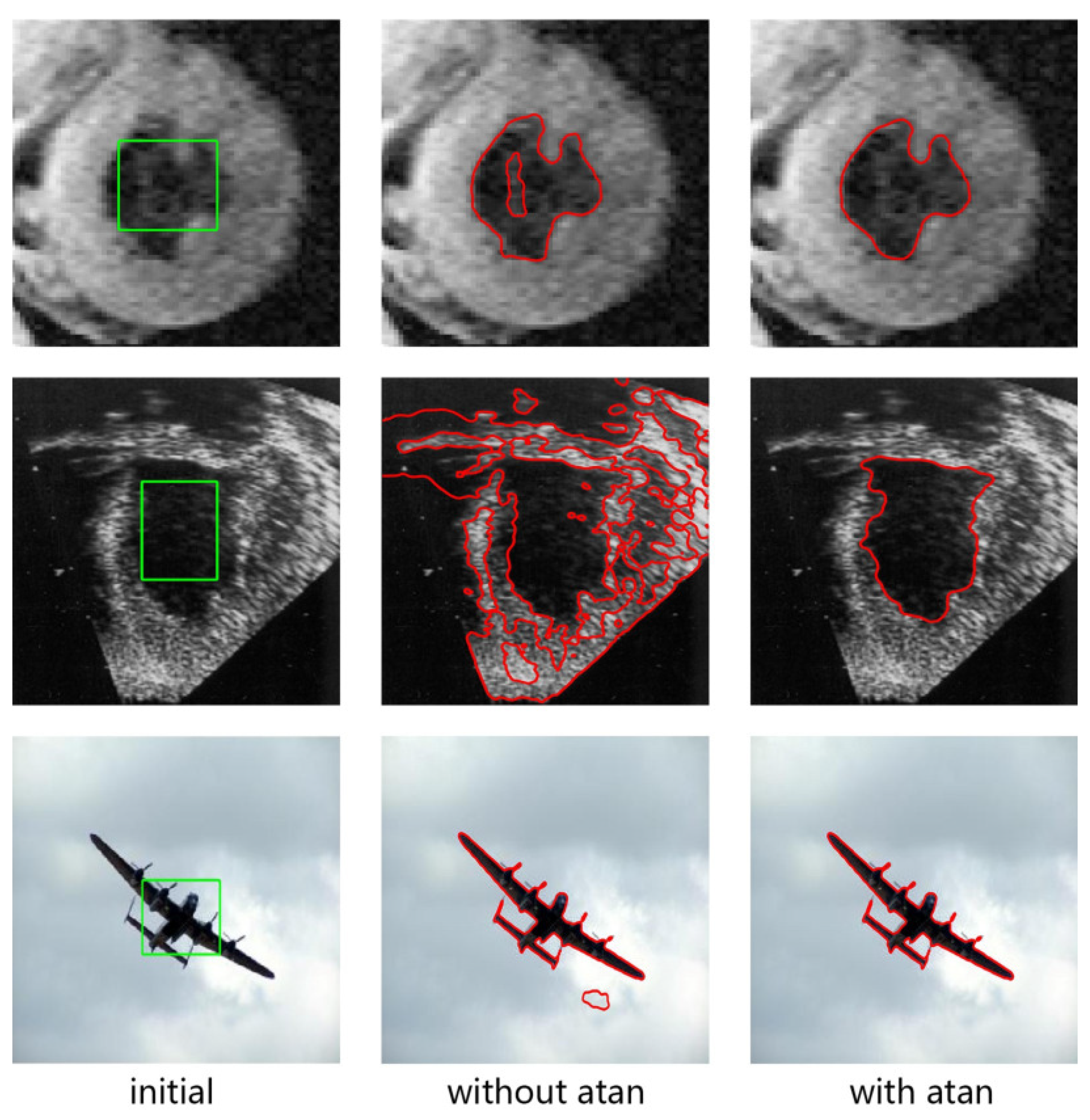

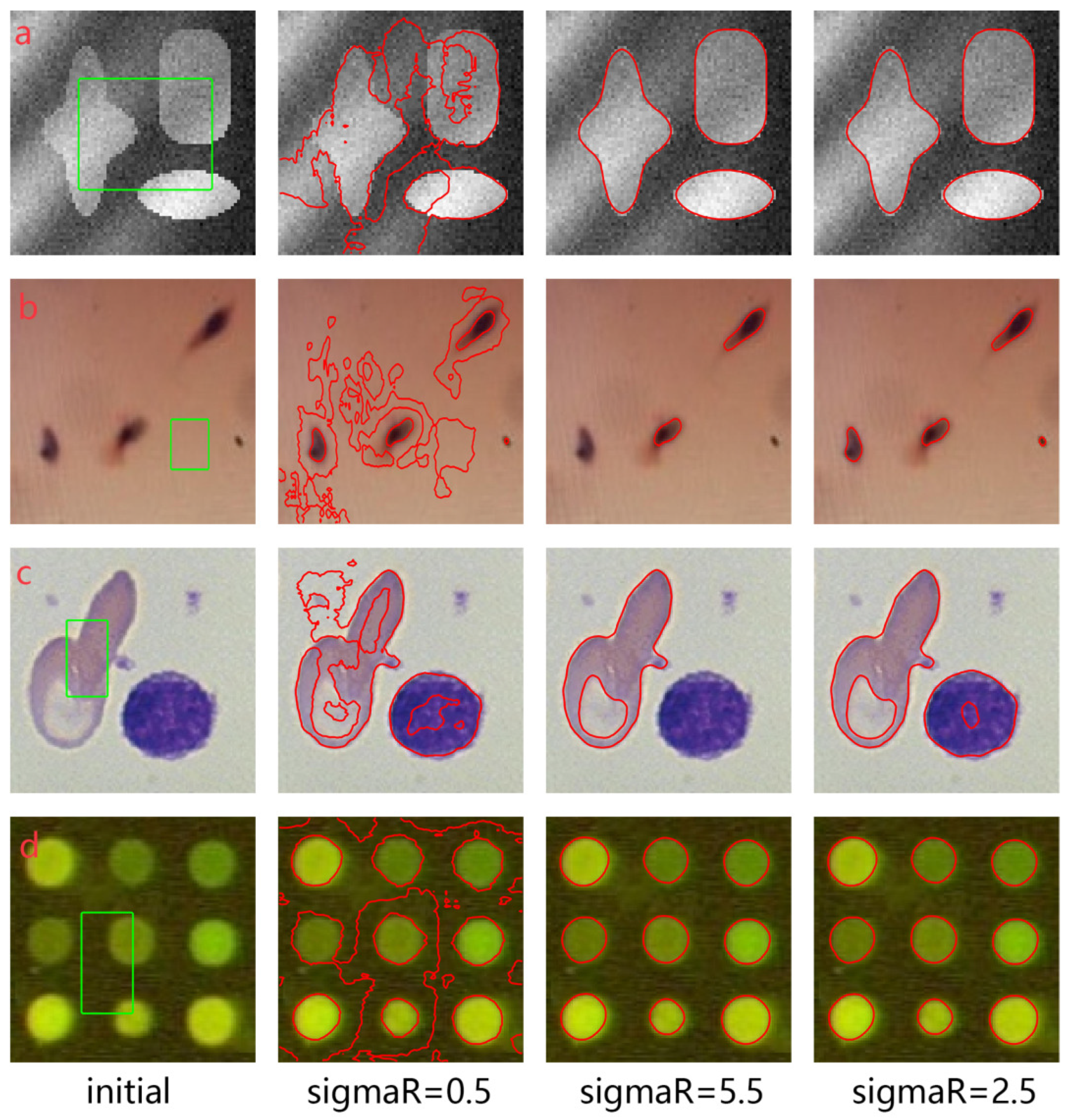

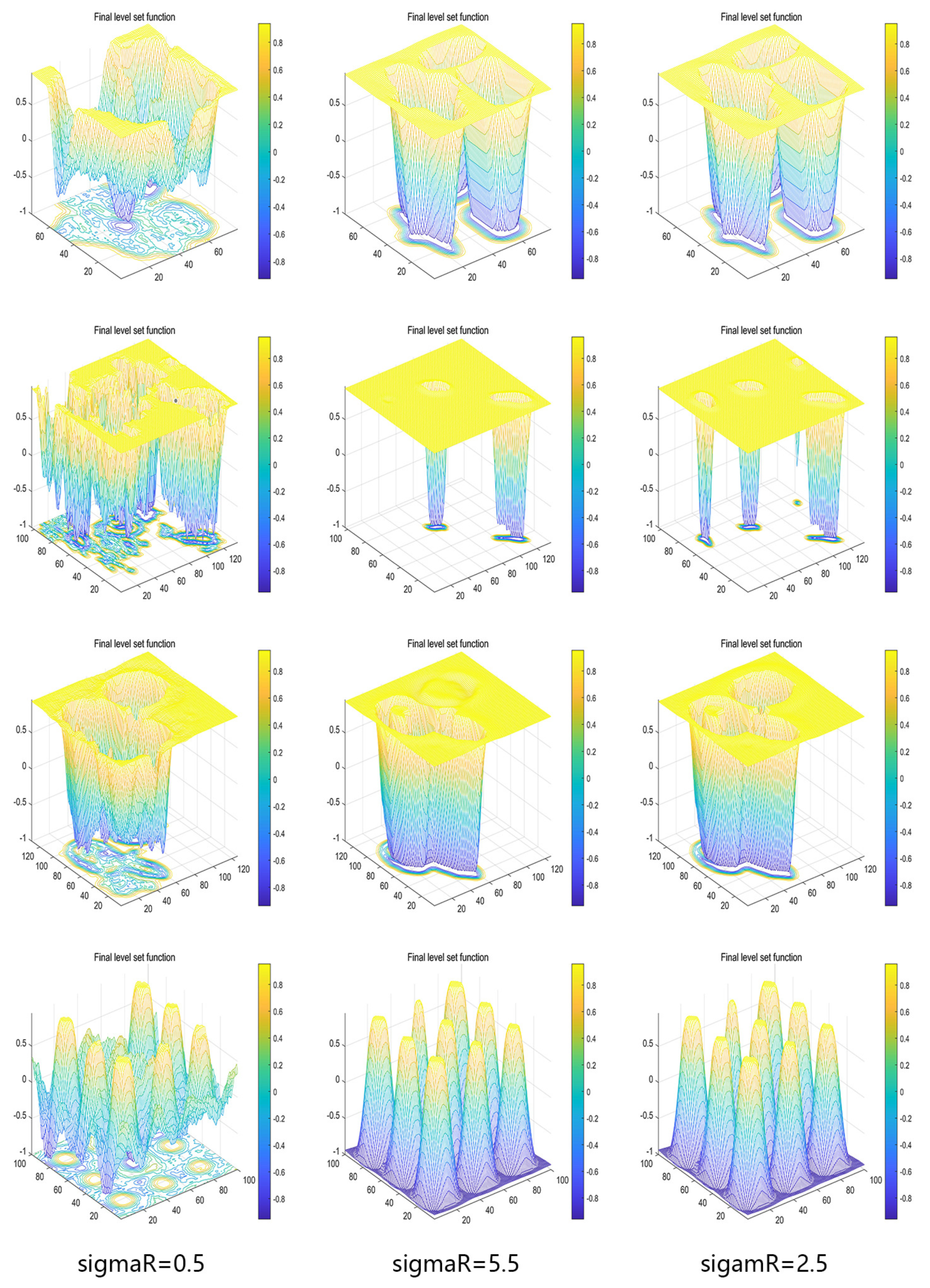

is the derivative of the Heaviside function. Building on the CV model, this formula accounts for intensity inhomogeneity, and compared with the BC model, it reduces the time spent on convolution operations. In practice, however, images are diverse, and intensity differences between images lead to large differences in the results. As a result, robustness across images is poor, and the computation time varies unpredictably from image to image. Hence, an activation function is applied to the data-driven term

.

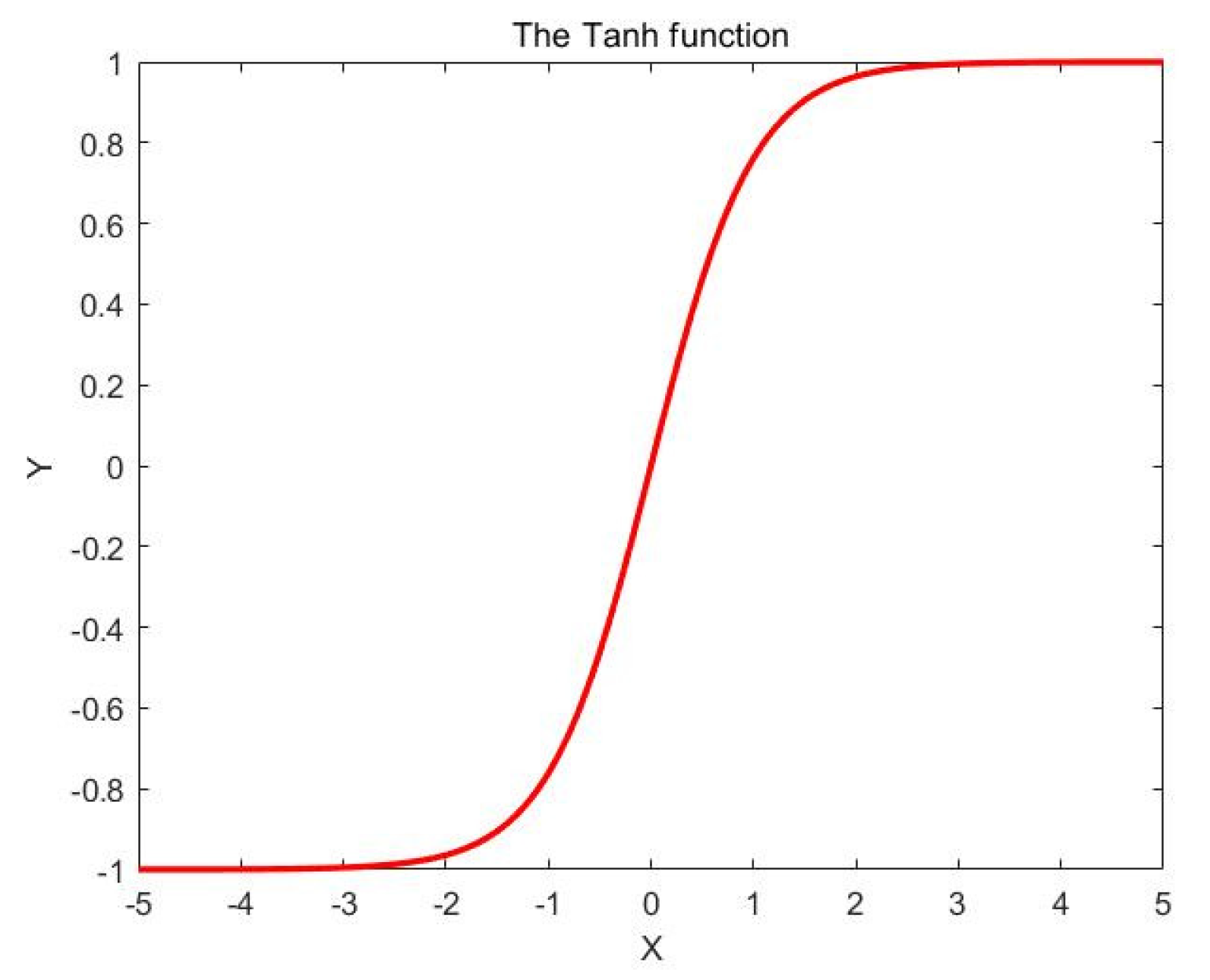

Note that

is an odd function that passes through zero and changes rapidly near zero, which increases sensitivity to small values and makes the boundary easier to determine. The range of the activation function is (−

, so the data-driven terms are bounded within (−

. This solves the problem that the data-driven terms of different images differ greatly. An adjustable

parameter lets the data-driven term adapt to different images, where

is the standard deviation, which increases the robustness of the activation function. When

is large, increasing the value of

makes it approach zero faster and helps determine the target boundary. When

is small, decreasing the value of

achieves the same effect. Finally, an adjustable energy proportionality factor

is added, and the original energy driving term can be rewritten as follows:
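Because the activation function itself is not reproduced here, the sketch below assumes a hyperbolic tangent scaled by the standard deviation of the data-term values and an adjustable parameter `k`, followed by a proportionality factor `lam`. These names and the tanh choice are assumptions; the point is only to show how an odd, bounded activation makes data terms of very different magnitudes comparable.

```python
import math
import statistics


def squash(values, k=1.0):
    """Odd, bounded activation applied to data-driven term values.

    Hypothetical form tanh(v / (k * sigma)), where sigma is the population standard
    deviation of the values; outputs lie strictly between -1 and 1.
    """
    sigma = statistics.pstdev(values) or 1.0   # fall back to 1 for constant input
    return [math.tanh(v / (k * sigma)) for v in values]


def driving_term(values, k=1.0, lam=1.0):
    """Scale the squashed data term by an adjustable proportionality factor lam."""
    return [lam * s for s in squash(values, k)]


small = squash([1.0, -2.0, 3.0])
large = squash([1000.0, -2000.0, 3000.0])   # same shape, 1000x the magnitude
```

Dividing by the standard deviation makes the output independent of the overall scale of the data term, which is the robustness property the paragraph describes.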

Once the gradient descent equation has been defined, the next step is to evolve the level set function:

Here, is the time step, and has been defined in Equation (33).
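One explicit (forward Euler) step of the level set evolution can be sketched as phi <- phi + dt * F, where F is the driving term. In this minimal version F is the plain data term -delta(phi)(e1 - e2) with the Chan-Vese regularised Dirac function; the activation function, the proportionality factor and any regularisation terms are omitted, and e1 and e2 are held fixed, whereas a real implementation would recompute them at every iteration. All names are hypothetical.

```python
import math


def dirac(z, eps=1.0):
    """Regularised Dirac function (derivative of the Chan-Vese regularised Heaviside)."""
    return (eps / math.pi) / (eps * eps + z * z)


def evolve(phi, e1, e2, dt=0.1, eps=1.0, steps=1):
    """Forward Euler steps of d(phi)/dt = -delta_eps(phi) * (e1 - e2), pointwise."""
    for _ in range(steps):
        phi = [p - dt * dirac(p, eps) * (a - b) for p, a, b in zip(phi, e1, e2)]
    return phi


# Pixel 0 fits region 1 better (e1 < e2), pixel 1 fits region 2 better.
phi_1 = evolve([0.0, 0.0], e1=[0.1, 0.9], e2=[0.9, 0.1])
phi_5 = evolve([0.0, 0.0], e1=[0.1, 0.9], e2=[0.9, 0.1], steps=5)
```

With phi > 0 taken as the inside of the contour, pixels that fit the inside region better are pushed to positive values and the others to negative values.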

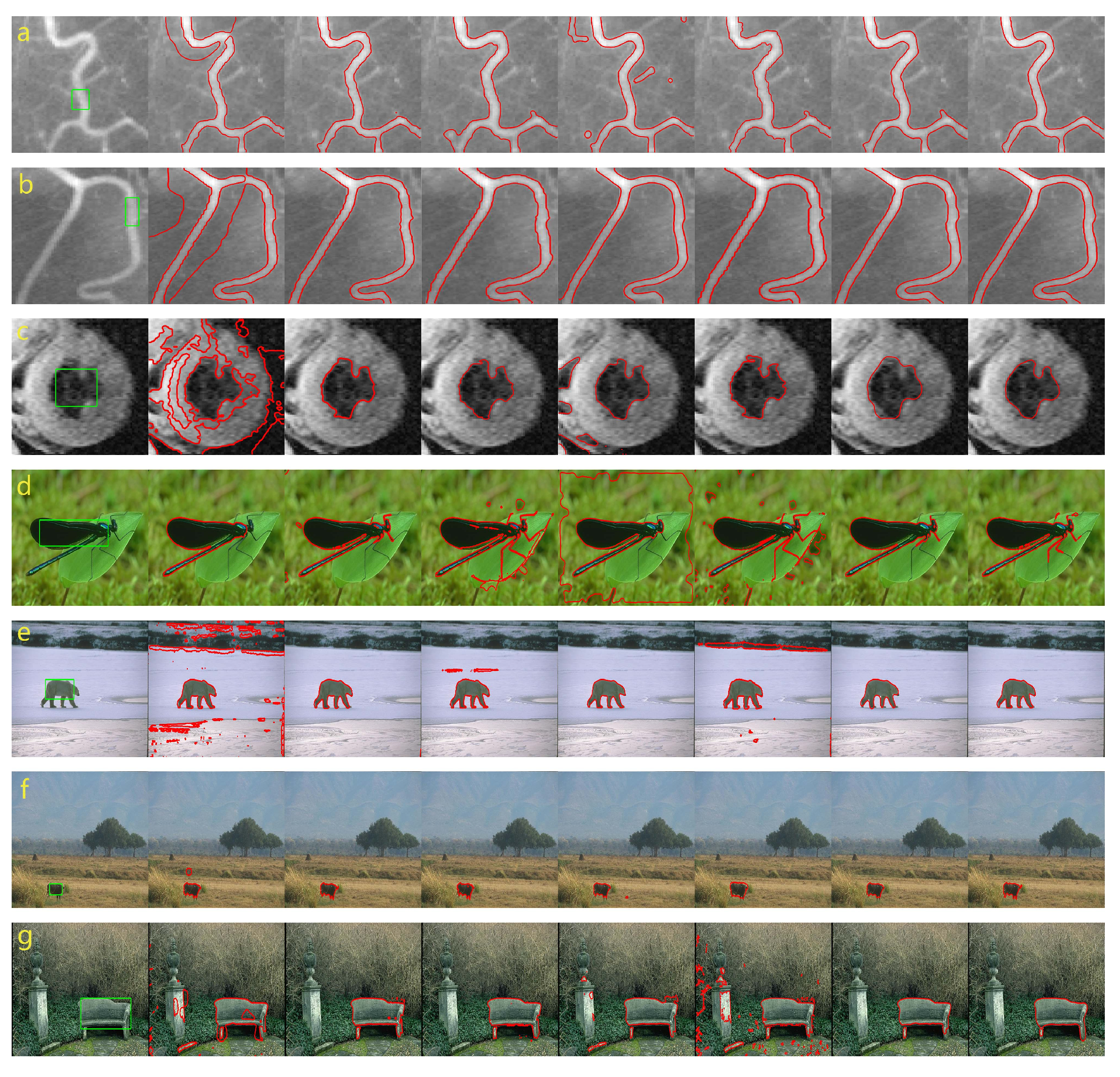

3.6. Position of Initial Contour

Although our model is robust to the initial contour, two placements of the initial contour pose a serious challenge for segmentation:

The first case: the initial contour does not intersect the segmentation target. The segmentation result then contains many meaningless regions, and the contour becomes stuck at a false boundary caused by background information.

The second case: this most often happens when segmenting large targets that split the original image into left and right (or upper and lower) regions. The initial contour is set outside the target but intersects one side of the target's boundary. The level set then cannot evolve to the other side of the target's boundary and becomes trapped in a local optimum.

In conclusion, the initial contour is usually placed inside the target or chosen to enclose it. If the initial contour is placed outside the target, it should be ensured that its intersection with the target covers a large proportion of the initial contour.
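The placement advice can be turned into a simple check on synthetic data. The sketch below builds a binary-step initial level set (positive inside a rectangle and negative outside, an assumed convention) and measures what fraction of the pixels enclosed by the initial contour belong to a known target mask; a value near 1 corresponds to a contour placed inside the target. The rectangle parameterisation and the `overlap_fraction` helper are illustrative assumptions, and a target mask is only available in such a synthetic test.

```python
def init_phi(height, width, box, c0=2.0):
    """Binary-step initial level set: +c0 inside the rectangle, -c0 elsewhere.

    box = (top, left, bottom, right) with bottom and right exclusive.
    """
    top, left, bottom, right = box
    return [[c0 if top <= i < bottom and left <= j < right else -c0
             for j in range(width)]
            for i in range(height)]


def overlap_fraction(phi, target_mask):
    """Fraction of pixels enclosed by the initial contour (phi > 0) that lie in the target."""
    inside = [(i, j) for i, row in enumerate(phi) for j, v in enumerate(row) if v > 0]
    if not inside:
        return 0.0
    return sum(1 for i, j in inside if target_mask[i][j]) / len(inside)


target = [[1 <= i <= 4 and 1 <= j <= 4 for j in range(6)] for i in range(6)]
good = overlap_fraction(init_phi(6, 6, (2, 2, 4, 4)), target)   # contour inside the target
poor = overlap_fraction(init_phi(6, 6, (0, 0, 2, 2)), target)   # mostly background
```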