Energy-Efficient Task Scheduling and Resource Allocation for Improving the Performance of a Cloud–Fog Environment

Abstract

1. Introduction

2. Literature Survey

2.1. Drawbacks in the Existing Algorithms

- In [7], the authors did not consider network bandwidth, co-location, or parallelization, which are major concerns when data centers at various locations serve user requests under differing network conditions and delays.

- In [8], the computational time complexity was high. Moreover, the authors did not consider minimizing the workload completion time when solving the task scheduling (TS) problem in cloud–fog networks.

- In [9], memory and execution time were not included in the objective function.

- In [10], long queuing delays led to a high computation time.

- The CMaS scheme [11] was not suitable for real-world applications.

- In [12], the authors did not provide more suitable services to users.

- In [13], the costs of the service providers and the power usage in fog servers were not reduced.

- MRBEA [14] was inefficient because it required a large number of VM migrations.

- In [15], the authors did not consider the power–delay tradeoff in heterogeneous fog networks while constructing the preference profile.

- The MEETS scheme [16] did not consider the offloading workloads in heterogeneous fog systems.

- The computational complexity of the DA-DMS [17] was high.

- The DEBTS scheme [18] was not effective for complex heterogeneous fog networks.

- In [19], the optimum locations of FNs were not determined, and caching efficiency was not enhanced.

- In [20], the authors did not consider dependent workloads or the execution order of the workloads, both of which could further enhance performance.

- The ECORA method [21] had high complexity.

- In ITSA [22], workloads were not handled immediately in the few situations where the workloads were random.

- In [23], the service failure probability was high because each workload was executed on a single VM, which restricted the available CPU resources. Moreover, workloads were not dispatched properly when several workloads were present in the network.

- In [24], the decisions were contradictory in real-time scenarios because the framework supported only a single objective function.

- OppoCWOA [25] did not consider the scenario in which a workload fails to execute on a fog node because of a CPU failure.

- In [26], the authors did not include execution time and memory in the objective function.

- In [27], the running time of IGS increased when the population was large.

2.2. Contribution of the Study

- The proposed scheme first determines whether each workload is decided or not.

- An energy-cost–makespan-aware scheduling algorithm is then applied, in which the decided workloads are represented as a directed acyclic graph (DAG).

- Once TS is completed, the ECBTSA-IRA algorithm applies different reinforcement learning methods, namely Q-learning (QL), SARSA, expected SARSA (E-SARSA), and Monte Carlo (MC), to allocate the resources efficiently.
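The DAG representation mentioned in the second contribution can be illustrated with a small sketch. It is not the authors' implementation: the workload names, the dictionary layout, and the helper `topological_order` are assumptions made for illustration. The sketch only shows how dependent workloads stored as a DAG yield an execution order that respects every precedence constraint.

```python
from collections import deque


def topological_order(successors):
    """Return one valid execution order for a DAG given as {task: [successor, ...]}."""
    # Count incoming edges so that tasks without predecessors can start first.
    indegree = {task: 0 for task in successors}
    for children in successors.values():
        for child in children:
            indegree[child] = indegree.get(child, 0) + 1

    ready = deque(sorted(t for t, d in indegree.items() if d == 0))
    order = []
    while ready:
        task = ready.popleft()
        order.append(task)
        # Finishing a task may release its successors.
        for child in successors.get(task, []):
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)

    if len(order) != len(indegree):
        raise ValueError("graph contains a cycle, so it is not a DAG")
    return order


# Hypothetical application: w1 feeds w2 and w3, which both feed w4.
example_dag = {"w1": ["w2", "w3"], "w2": ["w4"], "w3": ["w4"], "w4": []}
print(topological_order(example_dag))
```

Any order produced this way is only a feasibility baseline; a scheduler would additionally rank the workloads by priority and choose a processing node for each.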

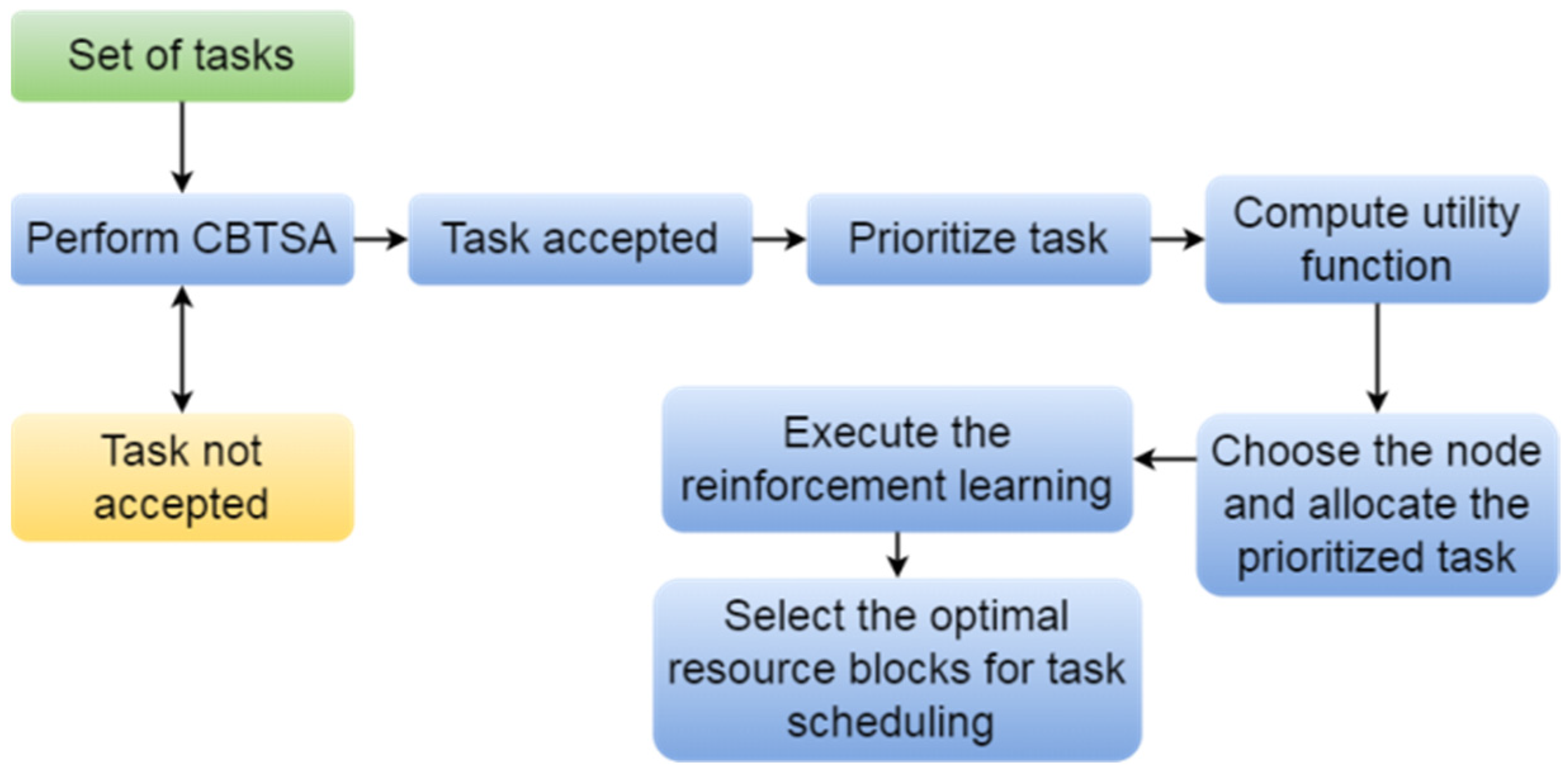

3. Proposed Methodology

3.1. Energy-Efficient, Cost- and Performance-Effective Container-Based Task Scheduling Algorithm

3.1.1. Workload Prioritizing Step

3.1.2. Node Selection Step

| Algorithm 1 |

| Input: Decided workload set |

| Output: A workload schedule |

| Initialize |

| Determine the priority range of every ; |

| Rank all decided workloads into a list according to their priority levels; |

| Compute and ; |

| Compute ; |

| Allocate to that increases of; |

| End |
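The symbols of Algorithm 1 were lost, so the following is only a minimal sketch of the general pattern its steps describe: rank the decided workloads by priority, then assign each one to the node that maximizes a utility. Everything specific here is an assumption: the priority is a HEFT-style upward rank, the utility is a weighted tradeoff between earliest finish time and monetary cost (weight `alpha`), communication delays and energy are ignored, and all names and numbers are hypothetical.

```python
def upward_rank(task, succ, work, memo):
    """Priority of a task: its own work plus the longest path of work below it."""
    if task not in memo:
        memo[task] = work[task] + max(
            (upward_rank(c, succ, work, memo) for c in succ[task]), default=0.0
        )
    return memo[task]


def schedule(succ, work, nodes, alpha=0.5):
    """Greedy list scheduling over a DAG of workloads.

    succ  : {task: [successor, ...]}
    work  : {task: size in million instructions}, assumed positive
    nodes : {node: (MIPS, price per second)}, both assumed positive
    alpha : assumed weight of finish time versus cost in the utility
    """
    memo = {}
    # With positive work, a predecessor always outranks its successors.
    ranked = sorted(succ, key=lambda t: -upward_rank(t, succ, work, memo))
    preds = {t: [p for p in succ if t in succ[p]] for t in succ}
    node_free = {n: 0.0 for n in nodes}
    finish, placement = {}, {}

    for task in ranked:
        ready = max((finish[p] for p in preds[task]), default=0.0)
        candidates = []
        for node, (mips, price) in nodes.items():
            runtime = work[task] / mips
            eft = max(ready, node_free[node]) + runtime  # earliest finish time
            candidates.append((node, eft, runtime * price))
        min_eft = min(c[1] for c in candidates)
        min_cost = min(c[2] for c in candidates)

        def utility(c):
            # Equals 1.0 for a node that is both the fastest and the cheapest.
            return alpha * min_eft / c[1] + (1 - alpha) * min_cost / c[2]

        node, eft, _ = max(candidates, key=utility)
        placement[task], finish[task], node_free[node] = node, eft, eft
    return placement, finish


# Hypothetical workload sizes and node characteristics.
example_succ = {"w1": ["w2", "w3"], "w2": ["w4"], "w3": ["w4"], "w4": []}
example_work = {"w1": 2000.0, "w2": 4000.0, "w3": 1000.0, "w4": 2000.0}
example_nodes = {"fog": (1000.0, 0.1), "cloud": (4000.0, 0.5)}
placement, finish = schedule(example_succ, example_work, example_nodes, alpha=1.0)
print(placement, max(finish.values()))
```

Setting `alpha` close to 1 favors a short schedule length, while values close to 0 favor a low monetary cost, which mirrors the cost–makespan tradeoff evaluated in Section 4.3.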

3.2. ECBTSA with Intelligent Resource Allocation

3.2.1. MDP Problem Formulation

3.2.2. Optimum Strategies

| Algorithm 2. Learning the Best Strategy using MC |

| Choose: and maximum iteration |

| Input: //Number of RBs; = 100; |

| Initialize //an array to store the state’s profits in all iterations |

| Set ; |

| Obtain resources using Equation (24) until the end; |

| Total of offered incentives from till end state for every state; |

| Add to; |

| Break |

| ; |
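The steps of Algorithm 2 (run an episode to the end, total the incentives collected from each state onward, store them, and average) follow the usual Monte Carlo pattern. The sketch below shows every-visit Monte Carlo value estimation on a toy problem. The environment is hypothetical and does not reproduce Equation (24): requests for one to three resource blocks (RBs) arrive, a random policy accepts or rejects them, and the incentive equals the number of RBs granted. The discount factor, episode count, and RB count are arbitrary choices.

```python
import random
from collections import defaultdict


def run_episode(total_rbs, max_steps, rng):
    """Hypothetical toy environment: return a list of (state, reward) pairs."""
    remaining, trajectory = total_rbs, []
    for _ in range(max_steps):
        if remaining == 0:
            break
        demand = rng.randint(1, 3)  # RBs requested (assumed range)
        accept = rng.random() < 0.5 and demand <= remaining  # random policy
        reward = demand if accept else 0  # incentive earned at this step
        trajectory.append((remaining, reward))
        if accept:
            remaining -= demand
    return trajectory


def mc_state_values(total_rbs=10, max_steps=20, episodes=2000, gamma=0.9, seed=0):
    """Every-visit Monte Carlo estimate of the value of each state."""
    rng = random.Random(seed)
    returns = defaultdict(list)  # state -> returns observed over all episodes
    for _ in range(episodes):
        g = 0.0
        # Walk the episode backwards so g is the discounted return from each state.
        for state, reward in reversed(run_episode(total_rbs, max_steps, rng)):
            g = reward + gamma * g
            returns[state].append(g)
    return {s: sum(v) / len(v) for s, v in returns.items()}


values = mc_state_values()
print(sorted(values.items()))
```

Because the estimate is an average over complete episodes, it needs no model of the environment, but it can only be updated once an episode has finished.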

| Algorithm 3. Learning the Best Strategy using QL, E-SARSA, and SARSA |

| Choose: and number of time steps |

| Input: //Number of RBs; |

| Initialize arbitrarily in ; Initialize ; |

| Obtain based on and accumulate and ; |

| ; |

| //QL: |

| ; |

| //E-SARSA: |

| ; |

| //SARSA: |

| ; |

| ; |

| Modify with ; |

| ; |
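Since the update formulas of Algorithm 3 did not survive, the sketch below gives the standard textbook forms of the three temporal-difference updates it names, not necessarily the exact expressions used by the authors. The three methods differ only in how the value of the next state is bootstrapped: QL takes the maximum, SARSA uses the action actually chosen next, and E-SARSA takes the expectation under the behavior policy (assumed here to be epsilon-greedy). The table layout, function names, and parameter values are illustrative assumptions.

```python
import random


def epsilon_greedy(q_row, epsilon, rng):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    return max(range(len(q_row)), key=q_row.__getitem__)


def td_target(method, q_next, next_action, reward, gamma, epsilon):
    """Bootstrapped target for one transition under the chosen method."""
    if method == "QL":
        bootstrap = max(q_next)  # off-policy: best next action
    elif method == "SARSA":
        bootstrap = q_next[next_action]  # on-policy: action actually taken next
    elif method == "E-SARSA":
        n = len(q_next)
        greedy = max(range(n), key=q_next.__getitem__)
        # Action probabilities of an epsilon-greedy policy.
        probs = [epsilon / n + (1.0 - epsilon if a == greedy else 0.0) for a in range(n)]
        bootstrap = sum(p * q for p, q in zip(probs, q_next))
    else:
        raise ValueError(f"unknown method: {method}")
    return reward + gamma * bootstrap


def update(q, state, action, reward, next_state, next_action, method,
           alpha=0.1, gamma=0.9, epsilon=0.1, terminal=False):
    """Apply one tabular update in place; q maps state -> list of action values."""
    q_next = [0.0] * len(q[state]) if terminal else q[next_state]
    target = td_target(method, q_next, next_action, reward, gamma, epsilon)
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]


# Hypothetical two-state, two-action table.
q_table = {0: [0.0, 0.0], 1: [1.0, 3.0]}
rng = random.Random(0)
a_next = epsilon_greedy(q_table[1], epsilon=0.1, rng=rng)
print(update(q_table, 0, 0, reward=1.0, next_state=1, next_action=a_next, method="QL"))
```

In a full training loop, the agent would select an action with `epsilon_greedy`, observe the reward and next state, call `update`, and then move to the next state until the time-step budget is exhausted.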

4. Simulation Results

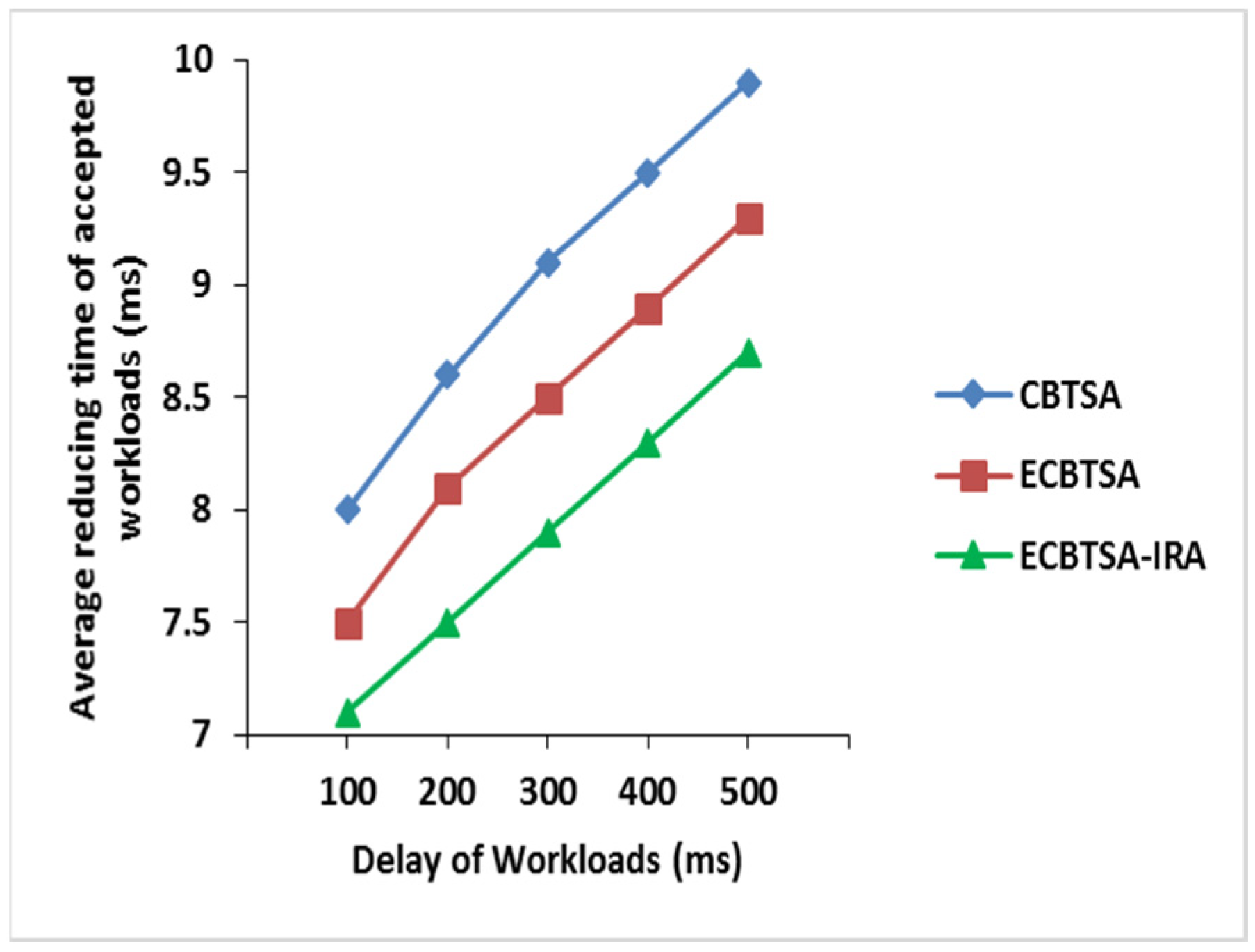

4.1. Mean Interval of Received Workloads

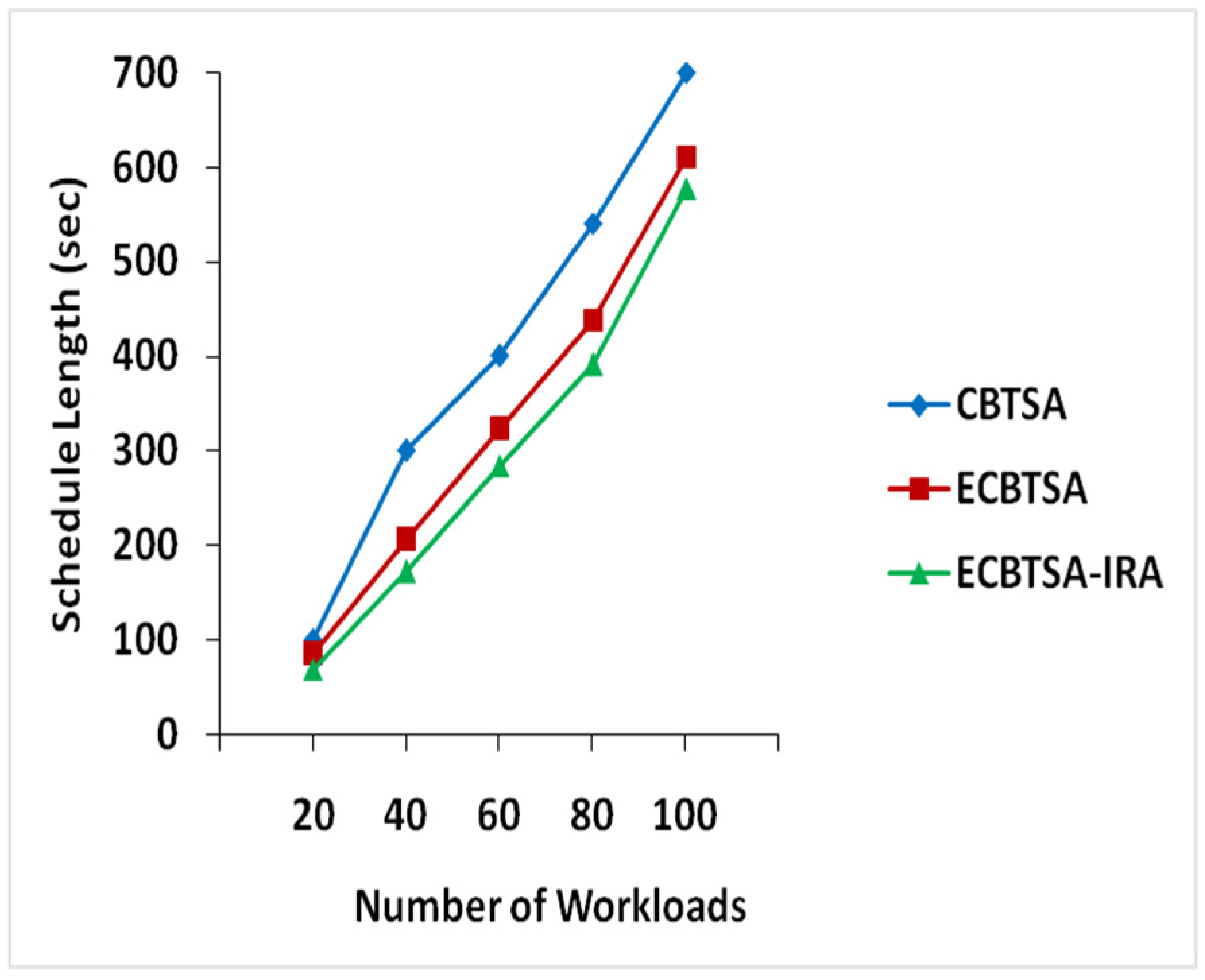

4.2. Schedule Length

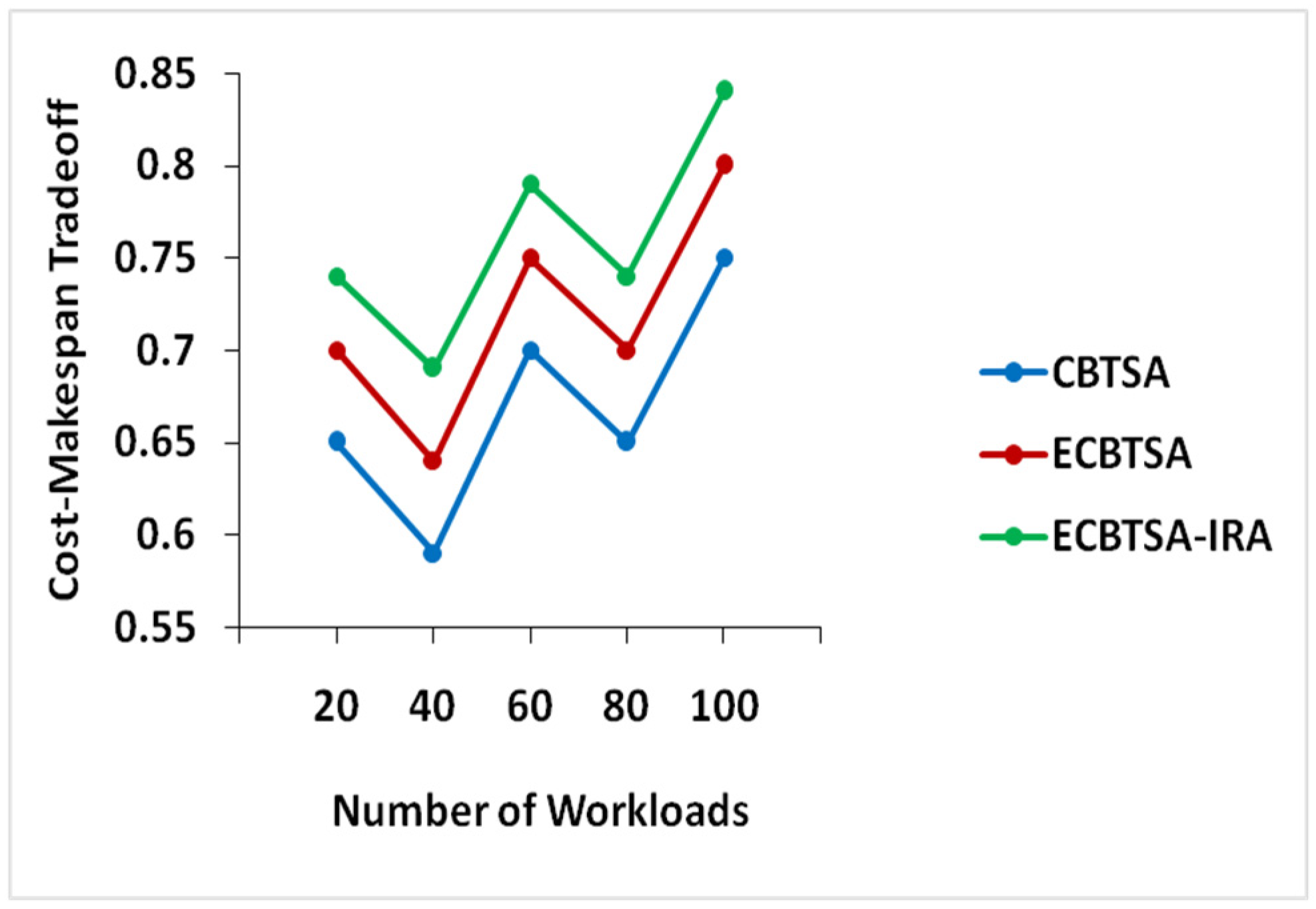

4.3. Cost–Makespan Tradeoff

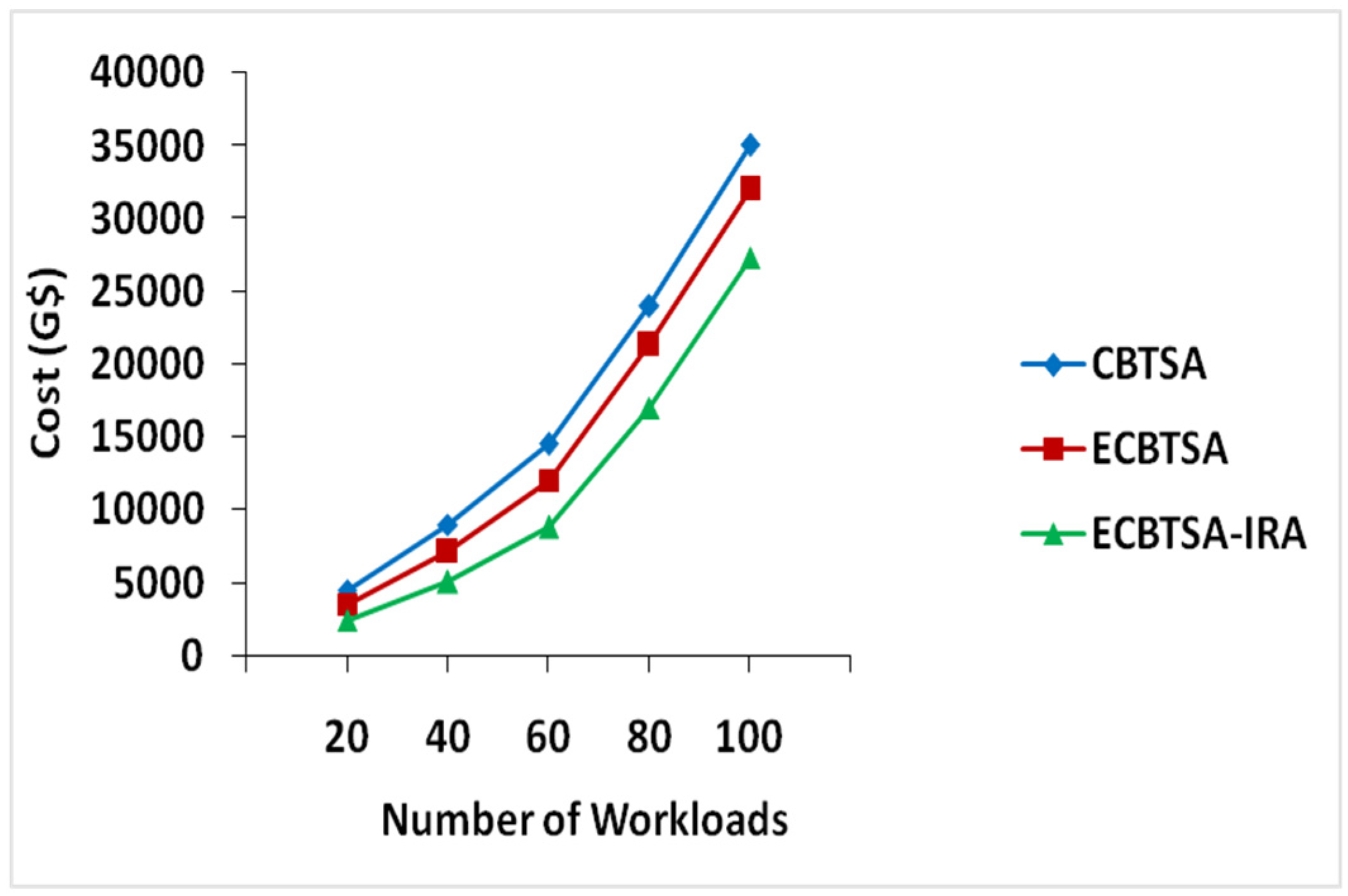

4.4. Economic Cost

5. Discussion and Limitations

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Kunal, S.; Saha, A.; Amin, R. An overview of cloud-fog computing: Architectures, applications with security challenges. Secur. Priv. 2019, 2, e72.

- Naha, R.K.; Garg, S.; Georgakopoulos, D.; Jayaraman, P.P.; Gao, L.; Xiang, Y.; Ranjan, R. Fog computing: Survey of trends, architectures, requirements, and research directions. IEEE Access 2018, 6, 47980–48009.

- Kimovski, D.; Ijaz, H.; Saurabh, N.; Prodan, R. Adaptive nature-inspired fog architecture. In Proceedings of the IEEE 2nd International Conference on Fog and Edge Computing (ICFEC), Washington, DC, USA, 1–3 May 2018; pp. 1–8.

- Gorski, T.; Woźniak, A.P. Optimization of business process execution in services architecture: A systematic literature review. IEEE Access 2016, 4, 1–20.

- Liu, L.; Qi, D.; Zhou, N.; Wu, Y. A task scheduling algorithm based on classification mining in fog computing environment. Wirel. Commun. Mob. Comput. 2018, 2018, 2102348.

- Yin, L.; Luo, J.; Luo, H. Tasks scheduling and resource allocation in fog computing based on containers for smart manufacturing. IEEE Trans. Ind. Inform. 2018, 14, 4712–4721.

- Hasan, S.; Huh, E.N. Heuristic based energy-aware resource allocation by dynamic consolidation of virtual machines in cloud data center. KSII Trans. Internet Inf. Syst. 2013, 7, 1825–1842.

- Alsaffar, A.A.; Pham, H.P.; Hong, C.S.; Huh, E.N.; Aazam, M. An architecture of IoT service delegation and resource allocation based on collaboration between fog and cloud computing. Mob. Inf. Syst. 2016, 2016, 6123234.

- Yu, L.; Jiang, T.; Zou, Y. Fog-assisted operational cost reduction for cloud data centers. IEEE Access 2017, 5, 13578–13586.

- Hoang, D.; Dang, T.D. FBRC: Optimization of task scheduling in fog-based region and cloud. In Proceedings of the IEEE Trustcom/BigDataSE/ICESS, Sydney, NSW, Australia, 1–4 August 2017; pp. 1109–1114.

- Pham, X.Q.; Man, N.D.; Tri, N.D.T.; Thai, N.Q.; Huh, E.N. A cost- and performance-effective approach for task scheduling based on collaboration between cloud and fog computing. Int. J. Distrib. Sens. Netw. 2017, 13, 1–16.

- Ni, L.; Zhang, J.; Jiang, C.; Yan, C.; Yu, K. Resource allocation strategy in fog computing based on priced timed petri nets. IEEE Internet Things J. 2017, 4, 1216–1228.

- Sun, Y.; Zhang, N. A resource-sharing model based on a repeated game in fog computing. Saudi J. Biol. Sci. 2017, 24, 687–694.

- Nie, J.; Luo, J.; Yin, L. Energy-aware multi-dimensional resource allocation algorithm in cloud data center. KSII Trans. Internet Inf. Syst. 2017, 11, 4320–4333.

- Liu, Z.; Yang, X.; Yang, Y.; Wang, K.; Mao, G. DATS: Dispersive stable task scheduling in heterogeneous fog networks. IEEE Internet Things J. 2018, 6, 3423–3436.

- Yang, Y.; Wang, K.; Zhang, G.; Chen, X.; Luo, X.; Zhou, M.T. MEETS: Maximal energy efficient task scheduling in homogeneous fog networks. IEEE Internet Things J. 2018, 5, 4076–4087.

- Jia, B.; Hu, H.; Zeng, Y.; Xu, T.; Yang, Y. Double-matching resource allocation strategy in fog computing networks based on cost efficiency. J. Commun. Netw. 2018, 20, 237–246.

- Yang, Y.; Zhao, S.; Zhang, W.; Chen, Y.; Luo, X.; Wang, J. DEBTS: Delay energy balanced task scheduling in homogeneous fog networks. IEEE Internet Things J. 2018, 5, 2094–2106.

- Balevi, E.; Gitlin, R.D. Optimizing the number of fog nodes for cloud-fog-thing networks. IEEE Access 2018, 6, 11173–11183.

- Wang, T.; Wei, X.; Liang, T.; Fan, J. Dynamic tasks scheduling based on weighted bi-graph in mobile cloud computing. Sustain. Comput. Inform. Syst. 2018, 19, 214–222.

- Li, Q.; Zhao, J.; Gong, Y.; Zhang, Q. Energy-efficient computation offloading and resource allocation in fog computing for internet of everything. China Commun. 2019, 16, 32–41.

- Zhou, Z.; Wang, H.; Shao, H.; Dong, L.; Yu, J. A high-performance scheduling algorithm using greedy strategy toward quality of service in the cloud environments. Peer-to-Peer Netw. Appl. 2020, 13, 2214–2223.

- Ren, Z.; Lu, T.; Wang, X.; Guo, W.; Liu, G.; Chang, S. Resource scheduling for delay-sensitive application in three-layer fog-to-cloud architecture. Peer-to-Peer Netw. Appl. 2020, 13, 1474–1485.

- Guevara, J.C.; da Fonseca, N.L. Task scheduling in cloud-fog computing systems. Peer-to-Peer Netw. Appl. 2021, 14, 962–977.

- Movahedi, Z.; Defude, B. An efficient population-based multi-objective task scheduling approach in fog computing systems. J. Cloud Comput. 2021, 10, 53.

- Yin, Z.; Xu, F.; Li, Y.; Fan, C.; Zhang, F.; Han, G.; Bi, Y. A multi-objective task scheduling strategy for intelligent production line based on cloud-fog computing. Sensors 2022, 22, 1555.

- Guo, W.; Kong, L.; Lu, X.; Cui, L. An intelligent genetic scheme for multi-objective collaboration services scheduling. Symmetry 2022, 14, 2037.

| Environment | Parameter | Range |
|---|---|---|
| Fog | Number of processors | 20 |
| | Number of containers/VMs | 10 |
| | Processing rate | MIPS |
| | Bandwidth | 1024 Mbps |
| Cloud | Number of processors | 30 |
| | Number of containers/VMs | 15 |
| | Processing rate | MIPS |
| | Bandwidth | 10, 100, 512 and 1024 Mbps |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


V, S.; M, P.; P, M.K. Energy-Efficient Task Scheduling and Resource Allocation for Improving the Performance of a Cloud–Fog Environment. Symmetry 2022, 14, 2340. https://doi.org/10.3390/sym14112340
