Abstract

The study of temporal behavior of local characteristics in complex growing networks makes it possible to more accurately understand the processes caused by the development of interconnections and links between parts of the complex system that occur as a result of its growth. The spatial position of an element of the system, determined on the basis of connections with its other elements, is constantly changing as the result of these dynamic processes. In this paper, we examine two non-stationary Markov stochastic processes related to the evolution of Barabási–Albert networks: the first describes the dynamics of the degree of a fixed node in the network, and the second is related to the dynamics of the total degree of its neighbors. We evaluate the temporal behavior of some characteristics of the distributions of these two random variables, which are associated with higher-order moments, including their variation, skewness, and kurtosis. The analysis shows that both distributions have a variation coefficient close to 1, positive skewness, and a kurtosis greater than 3. This means that both distributions have huge standard deviations that are of the same order of magnitude as the expected values. Moreover, they are asymmetric with fat right-hand tails.

1. Introduction

Many technological, biological, and social systems can be represented by underlying complex networks. Such networks consist of numerous nodes, and if an interaction between a pair of elements in the system takes place, then it is assumed that the corresponding pair of nodes is connected by a link.

An important example of complex systems of this kind is an economic system, whose elements (or nodes) are firms or companies, and whose links reflect their economic, informational, or financial interactions. The well-documented first-mover advantage is that companies that appear earlier than others (the “first-moving” significant elements of the system) usually gain a serious advantage in their development and a larger market segment than firms that enter the market later [1]. The same effect is often observed in innovation propagation, in the production and distribution of patents and technologies, in information interaction, and in social networks. However, numerous examples of new successful technology companies (or new popular social network accounts) show that the temporal behavior of network elements is very diverse: elements that appeared much later can take a more dominant position in the complex system than elements that appeared in the early stages of the system’s development.

In this paper, we study complex systems whose growth is based on the preferential attachment mechanism and show that, while the “first-moving” effect holds on average for the node degree (i.e., the number of its links), the temporal behavior of this quantity has an important feature: its coefficient of variation is close to 1. This means that the standard deviation of the random variable (the node degree) is comparable to its mean, which can explain the appearance of large and important nodes in such complex systems at later stages of their growth. In addition, the paper examines other local node characteristics associated with higher-order moments, which makes it possible to find the skewness and kurtosis of the distributions of these random variables.

Many networks, such as social, economic, citation, or WWW networks, evolve over time by adding new nodes, which at the moment of their appearance join the already existing ones. Numerous studies of real networks have shown that degree distributions in such complex networks follow a power law [2,3,4,5,6,7,8]. Modeling the growth of real complex networks is an important problem, and one of the first successful attempts was the Barabási–Albert model [9]. Using the mechanisms of growth and preferential attachment, the model made it possible to describe the evolution of networks with a power-law degree distribution. In recent years, researchers have proposed many extensions of this model to approximate the properties of real systems [10,11,12,13,14,15,16,17,18,19,20]. Nevertheless, studying the peculiarities of networks generated by the pioneering Barabási–Albert model is of interest, as it sheds light on the properties of extended models [21].

The dynamics of the degree of an individual node, while the Barabási–Albert network is evolving, is a stochastic process. On the one hand, it is a Markov process, since at each iteration the newborn node selects the vertices to which it will attach based only on their current degrees, i.e., the choice does not depend on their degrees at previous iterations. On the other hand, this stochastic process is not stationary, since the local characteristics change with the growth of the network.

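It is known [22] that the expected value of the degree of a node at moment t follows a power law. For a node that appears at iteration i with m links, the mean-field estimate is

$$\mathbb{E}\big(d_i(t)\big) = m \left(\frac{t}{i}\right)^{1/2}.$$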

The degree of a vertex at a particular moment in time is a random variable. However, to characterize a random variable, knowing its mathematical expectation alone is not enough. The higher-order moment-related quantities, such as variation, the coefficient of asymmetry (skewness), and kurtosis, allow one to understand the dynamic behavior of the degree of a vertex more clearly and to characterize the underlying stochastic process more definitely.

Another local characteristic of a node (in addition to its degree), which is of interest, is the sum of the degrees of all its neighbors. The knowledge of its dynamics makes it possible for one to answer many questions related to the local neighborhood of a given node:

- How much faster does the total degree of neighbors grow than the degree of the node itself?

- Are the variation of node degree and the variation of the total degree of its neighbors comparable?

- Do the nodes’ asymmetry coefficients differ or not?

- Do the nodes’ kurtoses differ or not?

In this paper, we answer these questions and find the values of these characteristics for the distributions of both the degree of a node and the total degree of its neighbors. While different methods can be employed to estimate these local characteristics [23], in this paper we use the mean-field approach as a method for assessing these quantities [22,24,25].

The recent paper [26] studies the limit behavior of the degree of an individual node in the Barabási–Albert model and shows that, after some scaling procedures, this stochastic process converges in distribution to a Yule process. Based on these findings, the paper examines why the limit degree distribution of a node picked uniformly at random (as the network grows to infinity) matches the limit distribution of the number of species chosen randomly in a Yule model (as time goes to infinity).

In contrast with paper [26], our paper focuses on the time dynamics of the distribution characteristics, rather than on their limit behavior. In addition, we extend the study with an analysis of the total degree of the neighbors of a node.

2. Barabási–Albert Model

2.1. Notations and Definitions

Let be a graph, where is the set of vertices and is the set of edges. Let denote the degree of node of graph . Let be a fixed integer.

According to the Barabási–Albert model, graph is obtained from graph (at each discrete time moment ) in the following way:

- In the initial time , is a graph with and ;

- One vertex is attached to the graph, i.e., ;

- edges that connect vertex with existing vertices are added; each of these edges appears as the result of the realization of the discrete random variable that takes the value i with probability . If , then edge is added to the graph. We conduct m such independent repetitions. If the random variable takes the same value i in two or more repetitions at the iteration, then only one edge is added (there are no multiple edges in the graph).

Denote by the (cumulative) random variable that takes i if takes i at least in one of m repetitions at iteration .
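The growth rule above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the simulation code used for the experiments: the initial graph is taken to be a complete graph on m + 1 nodes (the paper's own initial graph may differ), and the names `simulate_ba`, `tracked`, and `n_nodes` are hypothetical. The function returns the degree of one tracked node and the total degree of its neighbors once the graph has `n_nodes` vertices.

```python
import random

def simulate_ba(m, n_nodes, tracked, seed=None):
    """Grow a BA-style graph; return (degree, neighbor degree sum) of `tracked`."""
    rng = random.Random(seed)
    # Assumed initial graph: complete graph on m + 1 nodes (labels 0..m).
    n0 = m + 1
    neighbors = [set(range(n0)) - {v} for v in range(n0)]
    # Each node appears in `endpoints` once per incident edge, so a uniform
    # draw from this list is a degree-proportional (preferential) draw.
    endpoints = [v for v in range(n0) for _ in range(m)]
    for new in range(n0, n_nodes):
        # m independent preferential draws; repeated targets collapse into
        # a single edge, so the graph has no multiple edges.
        targets = {rng.choice(endpoints) for _ in range(m)}
        neighbors.append(set(targets))
        for v in targets:
            neighbors[v].add(new)
            endpoints.extend((v, new))
    degree = len(neighbors[tracked])
    neighbor_sum = sum(len(neighbors[u]) for u in neighbors[tracked])
    return degree, neighbor_sum
```

Calling `simulate_ba(3, 5000, 10, seed=k)` for many different seeds `k` yields a sample of both random variables for the same node, which is the kind of data a histogram over independent runs would be built from.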

Remark 1.

We are interested in the evolution of the graph for sufficiently large t. In this case, the probability that the random variable will take the value of i exactly k times in a series of m independent repetitions is proportional to , which is an order of magnitude less than the probability . Therefore, without a loss of generality, we will assume that for all . Then the probability that an edge from new vertex that appears at iteration is linked to vertex is

Let be the degree of node of graph , and let be the total sum of degrees of all neighbors in graph .

Note that trajectories of these quantities over time t are described by non-stationary Markov processes, since their values at each moment t are random variables that depend only on the state of the system at the previous moment. In the papers [27,28], asymptotic estimates of the expected values of these quantities at iteration t are found:

where C is a constant.

The aim of this work is to further analyze the behavior of these stochastic processes in time. In this article, we focus on estimating their moments, variances, asymmetry coefficients, and kurtosis.

2.2. Temporal Behavior in Simulated Networks

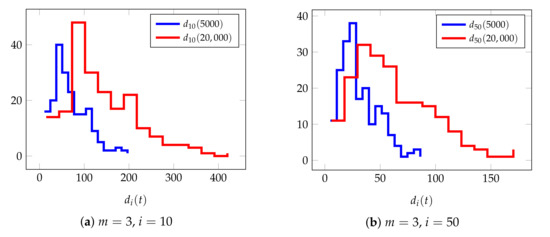

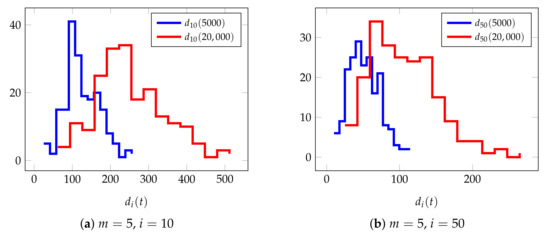

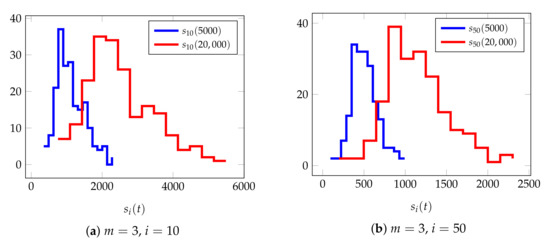

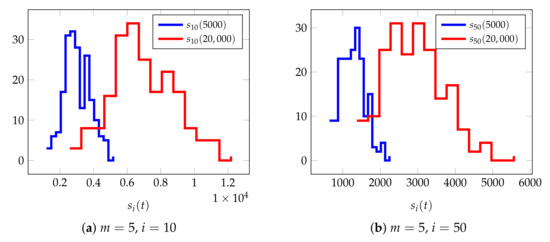

The stationarity of stochastic processes means that the distribution parameters of a random variable remain unchanged over time. Obviously, the processes under consideration are not stationary. This can clearly be seen in Figure 1, Figure 2, Figure 3 and Figure 4, which show empirical histograms of distributions of random variables and based on different realizations of their trajectories. The histograms were obtained as follows: we simulated the evolution of the BA graphs 200 times and obtained 200 corresponding values of random variables and for two nodes and at iterations 5000 and 20,000. To construct the histograms, we used 15 bins. Figure 1 and Figure 2 represent histograms of and were obtained for and , respectively. Figure 3 and Figure 4 show histograms of and were obtained for and , respectively. The empirical values of the characteristics of the distributions of random variables are presented in Table 1, Table 2, Table 3 and Table 4.

Figure 1.

The histograms of the empirical values of obtained by simulating 200 different networks with at iterations 5000 (blue line) and 20,000 (red line), (a) for node , (b) for node .

Figure 2.

The histograms of the empirical values of obtained by simulating 200 different networks with at iterations 5000 (blue line) and 20,000 (red line), (a) for node , (b) for node .

Figure 3.

The histograms of the empirical values of obtained by simulating 200 different networks with at iterations 5000 (blue line) and 20,000 (red line), (a) for node , (b) for node .

Figure 4.

The histograms of the empirical values of obtained by simulating 200 different networks with at iterations 5000 (blue line) and 20,000 (red line), (a) for node , (b) for node .

Table 1.

Empirical characteristics of the -distribution obtained by simulating 200 different Barabási–Albert networks with , for nodes and at iterations 5000 and 20,000.

Table 2.

Empirical characteristics of the -distribution obtained by simulating 200 different Barabási–Albert networks with , for nodes and at iterations 5000 and 20,000.

Table 3.

Empirical characteristics of the -distribution obtained by simulating 200 different Barabási–Albert networks with , for nodes and at iterations 5000 and 20,000.

Table 4.

Empirical characteristics of the -distribution obtained by simulating 200 different Barabási–Albert networks with , for nodes and at iterations 5000 and 20,000.

Experimental results show that both distributions have mean values that increase over time. In addition, their standard deviations grow in proportion to their means. The values of the skewness coefficient are positive in all cases, which indicates the asymmetry of the distributions. Kurtosis is greater than 3, which means that their tails are thicker than the tail of the normal distribution.
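The empirical characteristics discussed above can be computed from a list of simulated values as in the following sketch. It assumes population-style estimators (dividing by the sample size n); whether the reported tables used these or bias-corrected estimators is not stated, and the function name `empirical_characteristics` is hypothetical.

```python
import math

def empirical_characteristics(samples):
    """Mean, standard deviation, coefficient of variation, skewness, kurtosis."""
    n = len(samples)
    mean = sum(samples) / n
    # Central moments of order 2, 3, 4 (population form: divide by n).
    mu2, mu3, mu4 = (sum((x - mean) ** k for x in samples) / n for k in (2, 3, 4))
    std = math.sqrt(mu2)
    return {
        "mean": mean,
        "std": std,
        "cv": std / mean,                # coefficient of variation
        "skewness": mu3 / mu2 ** 1.5,    # positive for a heavier right tail
        "kurtosis": mu4 / mu2 ** 2,      # non-excess; 3 for a normal distribution
    }
```

Note that the kurtosis here is the non-excess version, so the comparison value is 3, in line with the text.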

2.3. The Evolution of the Barabási–Albert Networks

It follows from the definition of the Barabási–Albert network that

- If , then and , as the result of joining node with newborn node of degree m.

- If and , i.e., new node joins a neighbor of node , then and .

Let if node links to node at iteration , and otherwise; i.e.,

Let if node links to one of the neighbors of node at iteration , and otherwise; i.e.,

Then the conditional expectations of and at moment are equal to

Let denote n-th central moment of a random variable defined by

Due to the linearity of the mathematical expectation, the following formula holds for finding the n-th central moment of a random variable:

where is i-th moment defined by

Indeed, it follows from Equation (4) that we have

The variance is the second central moment of the random variable, i.e., .
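As an illustration of how central moments follow from raw moments by linearity of expectation, the sketch below uses the standard binomial expansion of E[(X − E X)^n]. It is a generic identity and may differ in form from the formula used in this section; the function name `central_moment` and the list layout `raw[k] = E[X**k]` are assumptions made for the example.

```python
from math import comb

def central_moment(raw, n):
    """n-th central moment from raw moments, where raw[k] = E[X**k], raw[0] == 1."""
    mean = raw[1]
    # E[(X - mean)^n] = sum_k C(n, k) * E[X^k] * (-mean)^(n - k)
    return sum(comb(n, k) * raw[k] * (-mean) ** (n - k) for k in range(n + 1))

# Example: raw moments k! of the unit exponential distribution.
raw_exponential = [1, 1, 2, 6, 24]
variance = central_moment(raw_exponential, 2)
skewness = central_moment(raw_exponential, 3) / variance ** 1.5
kurtosis = central_moment(raw_exponential, 4) / variance ** 2
```

The same function can be applied to analytic estimates of the raw moments to obtain the variance, the asymmetry coefficient, and the kurtosis.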

3. Node Degree Dynamics: The Evolution of Its Variation and High-Order Moments in Time

3.1. The Variation of

Lemma 1.

The second moment of follows

Proof.

We have

It follows from (3) that the conditional expectation of at iteration is

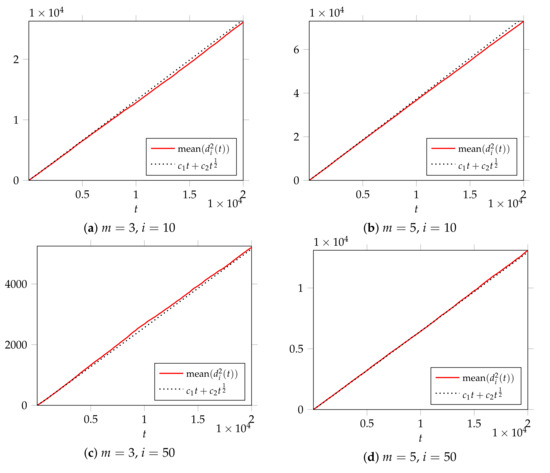

To illustrate the result, we carried out independent repetitions in which BA graph evolution was simulated 200 times, each time for 20,000 iterations, for different values and . Then we obtained the mean of the empirical values of . The results are presented in Figure 5.

Figure 5.

Dynamics of empirical values for in networks based on BA model for selected nodes as t iterates up to 20,000. Network in (a) is modeled with , , (b) with , , (c) with , , (d) with , .

Theorem 1.

The variation of at iteration t is

Proof.

The definition of variation implies

Then Theorem 1 follows from Lemma 1 and the estimate (see Equation (2)). □

The standard deviation of , defined as , is the same order of magnitude as :

i.e., the coefficient of variation for tends to as t tends to ∞. Thus, the -distribution is high-variance.
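The behavior of the coefficient of variation can also be explored numerically by iterating the first two moments of the degree step by step. The sketch below is an illustration under an explicit assumption: at iteration k the tracked node gains exactly one link with probability d/(2k), where d is its current degree, and otherwise its degree is unchanged. The function name `degree_moments` and the chosen parameter values are hypothetical.

```python
import math

def degree_moments(m, i, t):
    """Iterate E[d] and E[d^2] for a node born at iteration i, up to iteration t.

    Assumption: the node gains one link at iteration k with probability
    d / (2 * k), where d is its current degree.
    """
    mean, second = float(m), float(m * m)   # the node is born with degree m
    for k in range(i, t):
        # E[(d + xi)^2 | d] = d^2 + (2 d + 1) * d / (2 k), because xi^2 == xi.
        second = second * (1 + 1 / k) + mean / (2 * k)
        # E[d + xi | d] = d * (1 + 1 / (2 k)).
        mean = mean * (1 + 1 / (2 * k))
    return mean, second

mean, second = degree_moments(m=3, i=50, t=20000)
cv = math.sqrt(second - mean ** 2) / mean   # coefficient of variation at t
print(mean, cv)
```

Because the recurrence is discrete, its output agrees with continuous mean-field estimates only approximately, with the gap shrinking as i grows.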

3.2. The High-Order Moments of

Theorem 2.

where depends on n and m only.

Proof.

We have

Then it follows from (3) that

Assuming that are obtained for all , the expectation of can be found with the use of linearity of expectation. Taking the expectation of both sides and denoting and by and , respectively, we get the following differential equation

We get its solution in the following form:

and we obtain the recurrent formula for finding :

where constant C can be found from the initial condition .

Let us show by induction that

Indeed, if , we get the well-known estimate .

Suppose that (11) is true for all . We will show that (11) is also fulfilled for . We have

by the induction hypothesis. The sum can be presented as

Then the whole integral will be the sum of integrals of the form , where , each of which is equal to . Therefore, we get

□

3.3. The Skewness of

The asymmetry coefficient of a random variable is defined by

where and are the third and the second central moments of the -distribution, respectively.

Lemma 2.

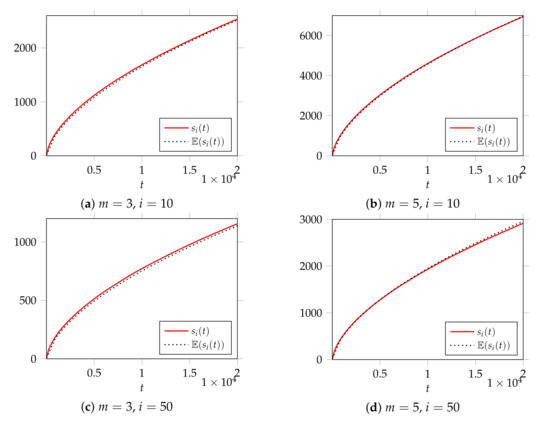

The third moment of follows

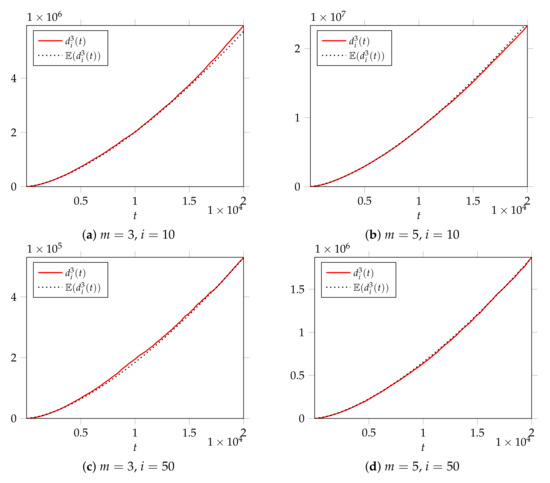

To exhibit the result, we carried out independent repetitions; in each of them, the BA graph was simulated for 20,000 iterations, for different values and . Then the empirical values of were obtained. The results are presented in Figure 6.

Figure 6.

Dynamics of empirical values for in networks based on BA model for selected nodes as t iterates up to 20,000. Network in (a) is modeled with , , (b) with , , (c) with , , (d) with , .

Theorem 3.

The asymmetry coefficient for the distribution of follows

Proof.

Using Equation (5) we can find the third central moments as follows

Therefore, using Theorem 1 we have

□

Remark 2.

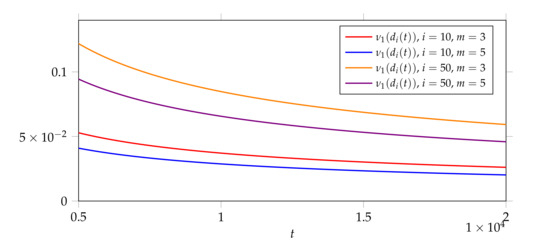

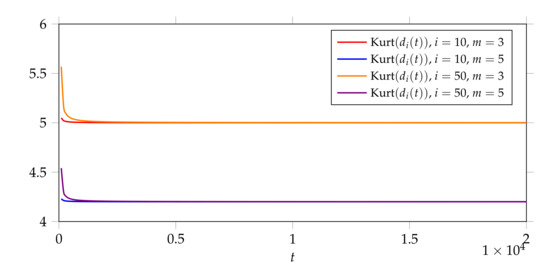

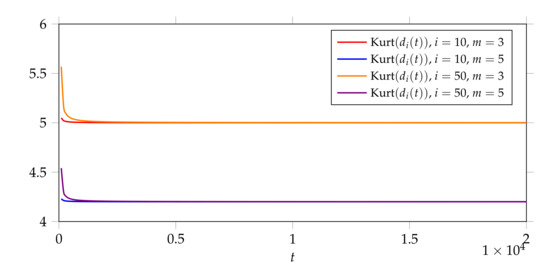

It follows from Theorem 3 that for all , therefore, the distribution of is asymmetric, and its right tail is thicker than the left tail. The initial value of the asymmetry coefficient is 4 . However, 2 as . Therefore, its value decreases with the network growth (see Figure 7).

Figure 7.

Evolution of asymmetry coefficient in BA networks for selected nodes and as t iterates up to 20,000.

3.4. The Kurtosis of

Using Equation (10), we can find :

which in turn can be used to find the kurtosis of .

Theorem 4.

The kurtosis of follows

Proof.

By definition of kurtosis, we have

where

Thus, we get (14). □

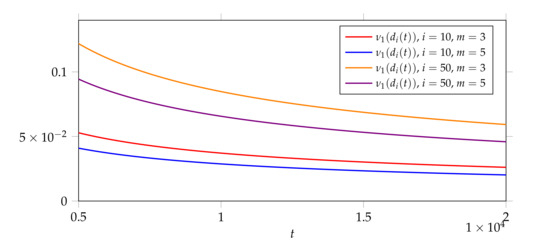

Remark 3.

Equation (14) implies for all t. Moreover, gradually decreases to as t tends to infinity (see Figure 8). This means that the distribution of is heavy-tailed for small t and is close to the normal distribution for large t and large m.

Figure 8.

Evolution of kurtosis in BA networks for selected nodes and as t iterates up to 20,000.


4. The Dynamics of : Its Variation, Asymmetry Coefficient and Kurtosis

In this section, we consider the random variable , which is defined as the sum of the degrees of the neighbors of vertex at time t. The mathematical expectation of this random variable was found in [27] (for ) and [28] (for arbitrary m):

where C is a constant.

In this section, the dynamics of the stochastic process of is investigated in more depth: we find the dynamics of its second, third, and fourth moments, i.e., , , which allow us to estimate the variation, the asymmetry coefficient, and the kurtosis of .

4.1. The Second Moment and the Variation of

We first find the exact value of constant C from Equation (16).

Let denote the probability that vertex is connected to the vertex at the moment of its appearance at time i, i.e., . We get

Since

we can continue equality as follows:

Therefore,

and we finally get

This result will be useful to us later.

To exhibit the result, we carried out independent repetitions; in each of them the BA graph was simulated for 20,000 iterations, for different values and . Then the empirical values of were obtained. The results are presented in Figure 9.

Figure 9.

Dynamics of empirical values for in networks based on BA model for selected nodes as t iterates up to 20,000. The network in (a) is modeled with , , (b) with , , (c) with , , (d) with , .

Lemma 3.

The second moment of is

Proof.

Let us consider how the values and are related:

- If new vertex joins the vertex at the time , then increases by m, since the vertex i obtains a new neighbor whose degree is m;

- If new vertex joins one of the neighbors of vertex i, then increases by 1, since in this case the contribution of one neighboring vertex to the increase of is 1;

- If none of these events occurs, then does not change.
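The three cases above can be illustrated with a reduced two-variable chain that tracks only the node degree d and the total degree of its neighbors s. This is a sketch under explicit assumptions, not the derivation used in the proof: the two linking events are treated as mutually exclusive, with probabilities d/(2t) and s/(2t) at iteration t, and the starting value `s0` of the neighbor degree sum must be supplied by the caller. The names `step`, `trajectory`, and `s0` are hypothetical.

```python
import random

def step(d, s, m, t, rng):
    """One update of (d, s) at iteration t under the three cases."""
    u = rng.random()
    p_node = d / (2 * t)        # assumed: new vertex links to the tracked node
    p_neighbor = s / (2 * t)    # assumed: new vertex links to one of its neighbors
    if u < p_node:
        return d + 1, s + m     # a new neighbor of degree m appears
    if u < p_node + p_neighbor:
        return d, s + 1         # one existing neighbor gains a link
    return d, s                 # neither event occurs

def trajectory(m, i, t_max, s0, seed=None):
    """Run the chain from iteration i to t_max, starting at (m, s0)."""
    rng = random.Random(seed)
    d, s = m, s0
    for t in range(i, t_max):
        d, s = step(d, s, m, t, rng)
    return d, s
```

For m greater than 1 a new vertex can link to the node and to one of its neighbors in the same iteration, so exclusivity is only an approximation there; simulating the full graph avoids this simplification.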

Now we can obtain the stochastic difference equation for the random variable at the moment of time t. We have

Since

we get

We cannot assert that and are independent; therefore, we may expect that . Lemma A1 finds (see Appendix A).

Using Lemma A1, Equations (2) and (19), passing to the mathematical expectation of both sides, and making the substitution for convenience, we get the approximate differential equation:

Its solution has the form

where C is a constant. We have

On the other hand, since , we have

Thus, we obtain Lemma 3. □

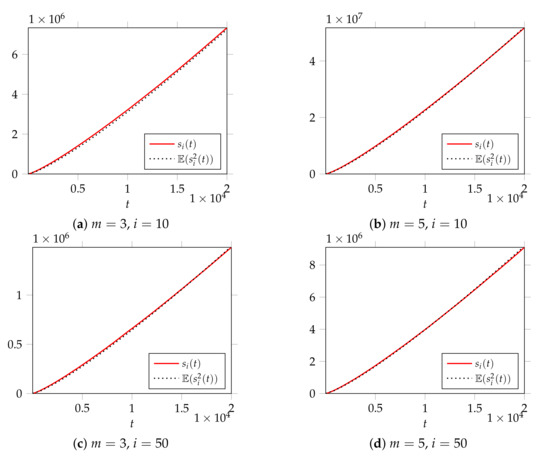

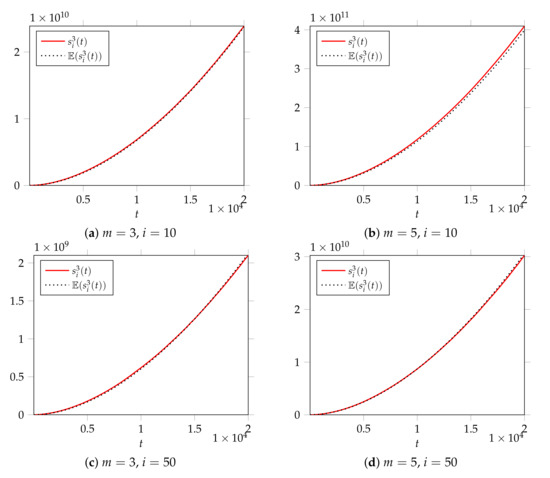

To confirm the result, we carried out independent repetitions; in each of them the BA graph was simulated for 20,000 iterations, for different values and . Then, the empirical values of were obtained. The results are presented in Figure 10.

Figure 10.

Dynamics of empirical values for in networks based on BA model for selected nodes as t iterates up to 20,000. Network in (a) is modeled with , , (b) with , , (c) with , , (d) with , .

Theorem 5.

The variation of follows

Proof.

Since , the statement is the consequence of Equation (19) and Lemma 3. □

4.2. The Third Moment and the Asymmetry Coefficient of

Lemma 4.

The third moment of is

Proof.

Let us obtain the difference stochastic equation describing the dynamics of random variable at moment t. We have

It follows from (21) that

Note that and may not be independent, and therefore, it is possible that .

After using Lemma A3, Lemma A1, Lemma 3, and Equations (19) and (2), taking the expectation of both parts, making the substitution , we get

Its solution is

□

To confirm the result, we carried out independent repetitions, and in each of them, the BA graph was simulated for 20,000 iterations, for different values and . Then the empirical values of were obtained. The results are presented in Figure 11.

Figure 11.

Dynamics of empirical values for in networks based on BA model for selected nodes as t iterates up to 20,000. Network in (a) is modeled with , , (b) with , , (c) with , , (d) with , .

Theorem 6.

The asymmetry coefficient of follows

for sufficiently large t.

Proof.

The asymmetry coefficient is defined by

where and are the third and the second central moments of the -distribution, respectively. It follows from Lemma 4, Lemma 3, and Equation (19) that

From Lemma 3, we have

Remark 4.

The positivity of implies the asymmetry of the distribution.

4.3. The Kurtosis of

Lemma 5.

The fourth moment of at moment t follows

Proof.

The change in the value of from t to occurs as follows:

Equation (21) implies that

Denote . Using Equations (2) and (19), Lemmas 3, 4, A1, A3, A4, and A6, we get the following differential equation

the solution of which has the form

□

Theorem 7.

The kurtosis of at iteration t follows

for sufficiently large t.

Proof.

By definition, we have

It follows from Theorem 5 that

Therefore,

for large t. □

5. Conclusions

In this article, we studied two Markov non-stationary random processes related to the evolution of BA networks: the first of them describes the dynamics of the degree of one fixed network node, the second is related to the dynamics of the total degree of the neighbors of one node. We evaluated the dynamic behavior of some characteristics of the distributions of these two random variables, which are associated with higher-order moments, including their variation, skewness, and kurtosis. The analysis showed that both distributions have the following properties:

- The coefficient of variation, defined as the ratio of the standard deviation to the mathematical expectation, is close to the value of at each moment of time. Moreover, as the number of iterations increases, the coefficient of variation converges to .

- The skewness coefficient is positive for both distributions at any moment of the network evolution, which indicates that both distributions are asymmetric (their right tails are heavier than their left ones).

- The kurtosis is greater than 3 for all subsequent iterations. This means that the right tails of both distributions are thicker than the tail of the normal distribution.

- It is also interesting to note that if the number of added edges m increases, then the coefficient of variation approaches 1, the coefficient of asymmetry tends to 0, and kurtosis converges to 3. This means that the characteristics of the random variables are close to those of the normal distribution.

It should also be noted that although the characteristics of both distributions are close to each other, the mathematical expectation of the total degree of the neighbors of a node grows times faster than the expected degree of the same node.

Author Contributions

Conceptualization, S.S. and S.M.; methodology, S.S.; software, S.M. and D.K.; validation, S.S., N.A., and S.M.; formal analysis, S.S., S.M., and D.K.; writing—original draft preparation, S.S. and S.M.; writing—review and editing, S.M.; visualization, D.K.; supervision, S.S. All authors have read and agreed to the published version of the manuscript.

Funding

The work was supported by the Russian Science Foundation, Project 19-18-00199.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Lemma A1.

Proof.

Let us consider the stochastic difference equation:

It follows from (21) that

We pass to the unconditional expectation of both parts taken at the moment t, make the substitution , and using the previously obtained relations (Equations (2) and (6))

we obtain the following approximate differential equation:

Its solution is

where C is a constant, which we would like to find.

Finally, we get Lemma A1. □

Lemma A2.

Proof.

The stochastic equation is

It follows from (21) that

Let us pass to the unconditional expectation of both parts at the moment t and make the replacement and, using the previously obtained relations (2), (6), (12), and Lemma A1, we get the following approximate differential equation:

Its solution has the form

where C is a constant of integration, which we find from the initial condition. To find at moment , we note that is equal to constant , while . Then we get

and consequently,

and we obtain Equation (A3). □

Lemma A3.

Proof.

The dynamics of can be described by

It follows from (21) that

Lemma A4.

Proof.

The evolution of can be described by the stochastic difference equation as follows:

Then it follows from (21) that

Denote . Then it follows from Equations (2), (6), (12), and (13), Lemmas A1 and A2 that

for some , , , . The solution of this differential equation is

□

Lemma A5.

Proof.

The value of evolves according to the following equation:

Then it follows from (21) that

Denote . Then it follows from Equations (2), (6) and (12), Lemmas A1–A4 that

for some , , , , . The solution of Equation (A6) has the form

□

Lemma A6.

References

- Lieberman, M.B.; Montgomery, D.B. First-mover advantages. Strateg. Manag. J. 1988, 9, 41–58.

- Faloutsos, M.; Faloutsos, P.; Faloutsos, C. On Power-Law Relationships of the Internet Topology. SIGCOMM Comput. Commun. Rev. 1999, 29, 251–262.

- Kleinberg, J.M.; Kumar, R.; Raghavan, P.; Rajagopalan, S.; Tomkins, A.S. The Web as a Graph: Measurements, Models, and Methods. In International Computing and Combinatorics Conference; Asano, T., Imai, H., Lee, D.T., Nakano, S.I., Tokuyama, T., Eds.; Springer: Berlin/Heidelberg, Germany, 1999; pp. 1–17.

- Clauset, A.; Shalizi, C.R.; Newman, M.E.J. Power-Law Distributions in Empirical Data. SIAM Rev. 2009, 51, 661–703.

- De Solla Price, D. Networks of scientific papers. Science 1976, 149, 292–306.

- Klaus, A.; Yu, S.; Plenz, D. Statistical Analyses Support Power Law Distributions Found in Neuronal Avalanches. PLoS ONE 2011, 6, e19779.

- Newman, M.E.J. The Structure and Function of Complex Networks. SIAM Rev. 2003, 45, 167–256.

- Albert, R.; Barabási, A.L. Statistical mechanics of complex networks. Rev. Mod. Phys. 2002, 74, 47–97.

- Barabási, A.L.; Albert, R. Emergence of Scaling in Random Networks. Science 1999, 286, 509–512.

- Dorogovtsev, S.N.; Mendes, J.F.F.; Samukhin, A.N. Structure of growing networks with preferential linking. Phys. Rev. Lett. 2000, 85, 4633–4636.

- Krapivsky, P.L.; Redner, S. Organization of growing random networks. Phys. Rev. E 2001, 63, 066123.

- Krapivsky, P.L.; Redner, S.; Leyvraz, F. Connectivity of growing random networks. Phys. Rev. Lett. 2000, 85, 4629–4632.

- Sidorov, S.; Mironov, S. Growth network models with random number of attached links. Phys. A Stat. Mech. Its Appl. 2021, 576, 126041.

- Tsiotas, D. Detecting differences in the topology of scale-free networks grown under time-dynamic topological fitness. Sci. Rep. 2020, 10, 10630.

- Pal, S.; Makowski, A.M. Asymptotic Degree Distributions in Large (Homogeneous) Random Networks: A Little Theory and a Counterexample. IEEE Trans. Netw. Sci. Eng. 2020, 7, 1531–1544.

- Rak, R.; Rak, E. The fractional preferential attachment scale-free network model. Entropy 2020, 22, 509.

- Cinardi, N.; Rapisarda, A.; Tsallis, C. A generalised model for asymptotically-scale-free geographical networks. J. Stat. Mech. Theory Exp. 2020, 2020, 043404.

- Shang, K.K.; Yang, B.; Moore, J.M.; Ji, Q.; Small, M. Growing networks with communities: A distributive link model. Chaos 2020, 30, 041101.

- Bertotti, M.L.; Modanese, G. The configuration model for Barabasi-Albert networks. Appl. Netw. Sci. 2019, 4, 1–13.

- Pachon, A.; Sacerdote, L.; Yang, S. Scale-free behavior of networks with the copresence of preferential and uniform attachment rules. Phys. D Nonlinear Phenom. 2018, 371, 1–12.

- Van Der Hofstad, R. Random Graphs and Complex Networks; Cambridge University Press: Cambridge, UK, 2016; Volume 1.

- Barabási, A.; Albert, R.; Jeong, H. Mean-field theory for scale-free random networks. Phys. A Stat. Mech. Its Appl. 1999, 272, 173–187.

- Krapivsky, P.L.; Redner, S. Finiteness and fluctuations in growing networks. J. Phys. A Math. Gen. 2002, 35, 9517–9534.

- Kadanoff, L. More is the Same; Phase Transitions and Mean Field Theories. J. Stat. Phys. 2009, 137, 777–797.

- Parr, T.; Sajid, N.; Friston, K.J. Modules or Mean-Fields? Entropy 2020, 22, 552.

- Pachon, A.; Polito, F.; Sacerdote, L. On the continuous-time limit of the Barabási-Albert random graph. Appl. Math. Comput. 2020, 378, 125177.

- Sidorov, S.; Mironov, S.; Malinskii, I.; Kadomtsev, D. Local Degree Asymmetry for Preferential Attachment Model. Stud. Comput. Intell. 2021, 944, 450–461.

- Sidorov, S.P.; Mironov, S.V.; Grigoriev, A.A. Friendship paradox in growth networks: Analytical and empirical analysis. Appl. Netw. Sci. 2021, 6, 35.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).