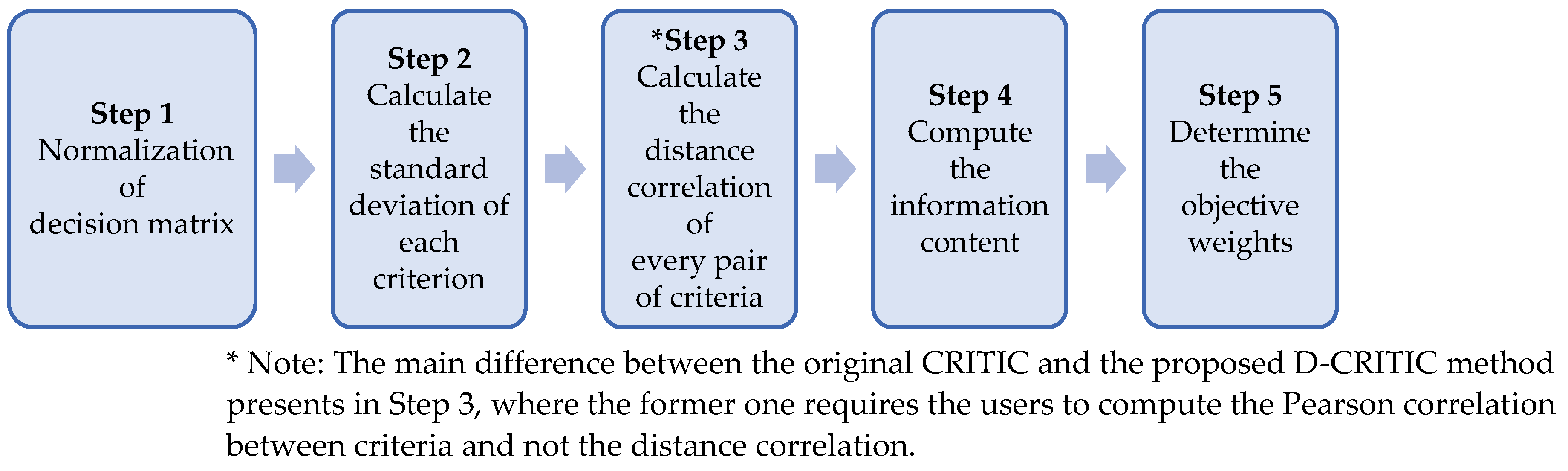

1. Introduction

The primary purpose of any standard multi-criteria decision-making (MCDM) analysis is to evaluate and rank the available alternatives based on a predetermined set of decision criteria [1]. There are four fundamental stages in executing an MCDM analysis. In the first stage, the decision-makers identify all the relevant criteria that can be used to evaluate the alternatives. These criteria can be identified by reviewing the literature, by drawing on the decision-makers’ knowledge, or by seeking advice from experts [2]. The decision-makers should invest ample time in this stage because omitting any salient criterion can render the entire analysis futile.

In the second stage, the decision-makers collect each alternative’s data, or local score, with respect to all the criteria identified in the first stage to form the decision matrix. Assume an MCDM problem where $A = \{A_1, A_2, \ldots, A_m\}$ denotes the set of alternatives under investigation and $C = \{C_1, C_2, \ldots, C_n\}$ represents the set of evaluation criteria. The general form of the decision matrix can then be expressed as in Equation (1), where $x_{ij}$ denotes the score of alternative $A_i$ with respect to criterion $C_j$ [3].
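For reference, assuming $m$ alternatives $A_1, \ldots, A_m$ and $n$ criteria $C_1, \ldots, C_n$, with $x_{ij}$ the score of alternative $A_i$ on criterion $C_j$, such a decision matrix is conventionally laid out as the $m \times n$ array below (rows are alternatives, columns are criteria). This is the standard layout, sketched here as an illustration rather than copied from the authors' Equation (1).

$$
X = [x_{ij}]_{m \times n} =
\begin{bmatrix}
x_{11} & x_{12} & \cdots & x_{1n} \\
x_{21} & x_{22} & \cdots & x_{2n} \\
\vdots & \vdots & \ddots & \vdots \\
x_{m1} & x_{m2} & \cdots & x_{mn}
\end{bmatrix}
$$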

In the third stage, the weight of each criterion is determined. Treating all the criteria equally rarely makes sense because, in reality, they may carry different degrees of importance in a decision system [4]. In the final stage, these criteria weights and the local scores of each alternative are aggregated into a global score. Based on these global scores, the alternatives can then be ordered from the most to the least preferred [5].
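To make the final aggregation stage concrete, the sketch below uses a simple additive weighting (weighted sum) rule, which is only one of many possible aggregation methods and is not necessarily the one used in this paper. It assumes the local scores are already normalized so that larger is better; the function name and the three-alternative data are hypothetical.

```python
import numpy as np

def weighted_sum_ranking(local_scores, weights):
    """Aggregate normalized local scores into global scores with a simple
    weighted sum, then rank the alternatives from best to worst.

    local_scores: (m, n) array, rows = alternatives, columns = criteria,
                  assumed normalized so that larger values are better.
    weights:      length-n array of criteria weights that sum to 1.
    """
    local_scores = np.asarray(local_scores, dtype=float)
    weights = np.asarray(weights, dtype=float)
    if not np.isclose(weights.sum(), 1.0):
        raise ValueError("weights must sum to 1")
    global_scores = local_scores @ weights
    # Sorting the negated scores orders alternatives from most to least preferred
    ranking = np.argsort(-global_scores)
    return global_scores, ranking

# Hypothetical example: 3 alternatives evaluated on 2 criteria
scores, order = weighted_sum_ranking([[0.9, 0.2], [0.5, 0.6], [0.1, 1.0]], [0.7, 0.3])
print(scores, order)
```

Changing the weight vector alone can reorder the alternatives, which is why the weight determination stage deserves the attention given to it below.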

The focus of this paper is on the third stage, the weight determination stage. Imprecise weights produce misleading global scores, which may lead to the choice of an inappropriate alternative or solution for the decision problem. One therefore needs to be extremely cautious in determining the weights. Unfortunately, this process can quickly become complex, especially when the decision problem involves many criteria. Hence, various methods have been proposed to determine the criteria weights systematically.

The remainder of this paper is organized as follows. In Section 1.1, the motivation of the study is elucidated by reviewing the previous literature. The contributions of the study are explicated in Section 1.2. Section 2 introduces the proposed modified CRITIC method. The usage of the modified method is illustrated in Section 3 through a smartphone criteria evaluation problem. The validity of the method is tested in Section 4. In Section 5, important findings from Section 3 and Section 4 are discussed. Section 6 describes the research limitations and potential future studies.

1.1. Literature and Motivation

The existing literature classifies weighting methods into two distinct groups, namely subjective and objective methods [6,7]. Subjective methods require some initial information from the decision-makers prior to weight determination, and this information is usually based on the decision-makers’ knowledge or experience [8]. Some popular subjective weighting methods are pairwise-comparison-based methods [9,10], SWARA [11], KEMIRA [12], SIMOS [13], P-SWING [14], PIPRECIA [15], FUCOM [16], and DEMATEL [17], to name a few. Although subjective methods have the advantage of integrating information from experienced decision-makers, such information may sometimes favour a specific criterion because of the decision-makers’ prior beliefs, thus leading to biased results [18]. Moreover, decision-makers who do not have complete knowledge of the decision problem under consideration may be unable to furnish the required initial information [19]. In addition, the process of providing such information may become complex when the MCDM problem involves many criteria.

Unlike subjective methods, objective methods do not require any initial information or judgment from the decision-makers [20]; they merely assess the structure of the data in the decision matrix to determine the weights [21,22,23]. These methods are known for eliminating possible bias associated with subjective evaluation, thus increasing objectivity [24]. Examples of objective methods mentioned in the literature include entropy-based methods [25,26], CRiteria Importance Through Inter-criteria Correlation (CRITIC) [27], and the recent CILOS and IDOCRIW methods [28].

Our review of the literature suggests that entropy-based methods and CRITIC are the most widely applied objective methods for weighting criteria. However, CRITIC has an additional merit: it considers both the contrast intensity of each decision criterion and its conflicting relationships with the other criteria [29,30], whereas the Shannon entropy method addresses only the contrast intensity [31]. Below, we describe these two aspects in more detail.
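As a concrete reference point for these two aspects, the sketch below implements the original CRITIC weighting with NumPy, following its commonly cited formulation: each criterion is min-max normalized towards its best value, contrast intensity is the standard deviation $\sigma_j$ of the normalized criterion, conflict is $\sum_k (1 - r_{jk})$ over the Pearson correlations $r_{jk}$, and the weights are the normalized information contents $\sigma_j \sum_k (1 - r_{jk})$. The function name, the use of the sample standard deviation (`ddof=1`), and the example data are assumptions for illustration only.

```python
import numpy as np

def critic_weights(X, beneficial):
    """Sketch of the original CRITIC weighting.

    X:          (m, n) decision matrix, rows = alternatives, columns = criteria.
    beneficial: length-n booleans; True if larger values are better.
    Every criterion must have at least two distinct values.
    """
    X = np.asarray(X, dtype=float)
    beneficial = np.asarray(beneficial, dtype=bool)
    col_max, col_min = X.max(axis=0), X.min(axis=0)
    best = np.where(beneficial, col_max, col_min)
    worst = np.where(beneficial, col_min, col_max)
    # Min-max normalization towards the best value: best -> 1, worst -> 0
    R = (X - worst) / (best - worst)
    # Contrast intensity: standard deviation of each normalized criterion
    sigma = R.std(axis=0, ddof=1)  # sample SD is an assumption here
    # Conflict: Pearson correlation between every pair of criteria (columns)
    r = np.corrcoef(R, rowvar=False)
    conflict = (1.0 - r).sum(axis=0)
    info = sigma * conflict  # information content of each criterion
    return info / info.sum()

# Hypothetical data: 4 alternatives, 3 criteria (the second is non-beneficial)
X = [[10, 5, 3], [20, 3, 4], [15, 8, 1], [12, 6, 5]]
w = critic_weights(X, beneficial=[True, False, True])
print(np.round(w, 4))
```

A criterion gains weight either by varying more across alternatives (larger $\sigma_j$) or by correlating less with the others (larger conflict term), which is exactly the combination summarized in the next paragraph.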

Overall, the CRITIC method assigns a higher weight to a criterion that has a higher contrast intensity and a higher degree of conflict with the other criteria [37]. Because of this property, CRITIC has been used in many real applications. Previous studies also show that CRITIC has been used jointly with other objective or subjective methods for weight determination. For instance, Yerlikaya et al. [38] used a combination of a pairwise comparison method and CRITIC to evaluate the weights of logistics location selection criteria. Marković et al. [39] used the fuzzy PIPRECIA method and the CRITIC method to measure the weights of bank performance criteria. Piasecki and Kostyrko [40] applied a combination of an entropy method and CRITIC to determine the weights of indoor air quality criteria.

Surprisingly, few modified versions of CRITIC are available in the literature. Only two modified methods have recently been introduced [41,42], each using a different data normalization technique. Both methods were claimed to model the contrast intensity of each criterion better; however, no additional statistical tests were performed to validate their reliability or accuracy.

The limited number of studies on modified CRITIC methods implies that researchers may not have detected any serious issues with CRITIC’s fundamental components, suggesting that major modifications may not be needed. However, in the present study, we found that the original CRITIC method has a shortcoming in properly capturing the conflicting relationships between criteria, since it relies solely on the Pearson correlation for this purpose. Studies indicate that this correlation does not always reflect the actual relationships between criteria [43]. For instance, two criteria with a zero Pearson correlation coefficient are not necessarily independent [44]. This undesirable situation arises because the Pearson correlation detects only linear relationships between two criteria, not nonlinear ones [45,46]. Thus, the validity of the weights computed by the original CRITIC method can be disputed.
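The zero-correlation pitfall can be reproduced in a few lines. The sketch below computes the sample distance correlation of Székely, Rizzo and Bakirov from double-centred pairwise distance matrices (a from-scratch NumPy version; the function name is ours, not a library API) and compares it with the Pearson coefficient for $y = x^2$ on a symmetric grid, where $y$ is fully determined by $x$.

```python
import numpy as np

def distance_correlation(x, y):
    """Sample distance correlation between two 1-D samples of equal length."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Pairwise absolute distances within each variable
    a = np.abs(x[:, None] - x[None, :])
    b = np.abs(y[:, None] - y[None, :])
    # Double-centre: subtract row and column means, add back the grand mean
    A = a - a.mean(axis=0, keepdims=True) - a.mean(axis=1, keepdims=True) + a.mean()
    B = b - b.mean(axis=0, keepdims=True) - b.mean(axis=1, keepdims=True) + b.mean()
    dcov2 = max((A * B).mean(), 0.0)  # guard against tiny negative round-off
    denom = np.sqrt((A * A).mean() * (B * B).mean())
    return 0.0 if denom == 0 else float(np.sqrt(dcov2 / denom))

x = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
y = x ** 2  # perfectly dependent on x, but not linearly
pearson = np.corrcoef(x, y)[0, 1]
dcor = distance_correlation(x, y)
print(f"Pearson r = {pearson:.4f}, distance correlation = {dcor:.4f}")
```

Pearson's $r$ is zero here, whereas the distance correlation is about 0.52, flagging the nonlinear dependence that a Pearson-based conflict measure would miss.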

Therefore, this research was motivated by the need for a modified CRITIC method that does not misrepresent the conflicting relationships between decision criteria. Proving that such a modified method can perform better than the original CRITIC method was another challenge that was addressed in this research.

1.2. Statement on Contributions

The key contribution of this research is twofold. First, in the context of the MCDM literature, we have introduced an improved version of the CRITIC method, namely D-CRITIC. D-CRITIC was developed by incorporating the idea of distance correlation into the original CRITIC method. Such an extension has not been reported in any of the studies relating to criteria weighting methods. The proposed D-CRITIC method has the merit of modelling the conflicting relationships between criteria more reliably with the aid of distance correlation. More importantly, this research has proven that D-CRITIC can produce a more valid set of criteria weights and ranks than the original CRITIC method. The introduction of D-CRITIC can also be regarded as an attempt to diversify the current literature, which is concentrated more on subjective weighting methods than on objective methods [47].

The second contribution of our research is linked to one of the tests conducted to validate the performance of D-CRITIC. Overall, we conducted three different tests to compare the performance of the method. The purpose of the first test was to compare the degree of agreement between the criteria weights derived by D-CRITIC and those derived by four other weighting methods, including the original CRITIC method. Usually, the Pearson correlation test is conducted for this purpose [48,49]. However, we found that this test could deliver misleading results, since the Pearson correlation is unable to capture a nonlinear association between two sets of weights [50]. Therefore, we used the distance correlation test as an alternative approach to comparing the degree of agreement between different sets of criteria weights. This study is the first to offer a distance correlation test for measuring the performance of different weighting methods.

In short, we employed the idea of distance correlation not only to develop a modified version of the CRITIC method but also to validate the performance of the modified method, that is, the D-CRITIC method.

3. Application of D-CRITIC to a Decision Problem

Many popular gadget websites provide a list of crucial criteria for smartphone selection, and some even rank these criteria from the most to the least important. As the smartphone market becomes increasingly competitive, such information can help manufacturers develop the right product strategies to remain competitive in the marketplace.

Several MCDM-based studies have been conducted to determine the weights and ranks of smartphone criteria scientifically. For instance, Peaw and Mustafa [52] evaluated various smartphone criteria, including dimensions and screen resolution, using a combination of AHP and Data Envelopment Analysis. Meanwhile, Ho et al. [53] used a modified AHP to analyze responses collected from a sample of customers and evaluate eight selected criteria, including display quality, camera, and price. In another study, Okfalisa et al. [54] applied both the fuzzy AHP and the fuzzy analytic network process for a similar smartphone criteria evaluation purpose.

Surprisingly, most previous studies on the evaluation of smartphone criteria have focused on subjective weighting methods, e.g., AHP. In this section, we aim to establish that objective weighting methods, particularly the D-CRITIC method, can serve as a potential alternative tool for determining the weights and ranks of smartphone criteria.

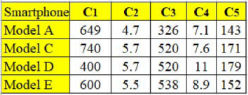

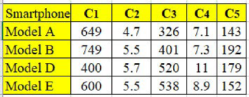

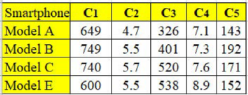

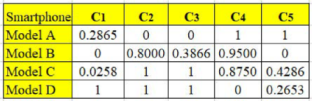

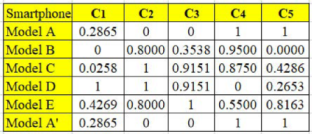

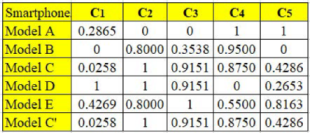

Table 1 shows the decision matrix of five smartphone models that were compared according to five criteria, namely the base price measured in dollars ($C_1$), the screen size measured in inches ($C_2$), the pixel density measured in pixels per centimetre ($C_3$), the thickness measured in millimetres ($C_4$), and the mass measured in grams ($C_5$). The smartphone models were renamed Models A, B, C, D, and E for confidentiality.

Note that the actual smartphone comparison data obtained from https://www.trendhunter.com/trends/smartphone-guide (accessed on 2 November 2020) consist of ten criteria. However, five of these criteria had to be dropped from further analysis. The operating system, processor, and special feature criteria were removed because they are qualitative in nature, which makes them incompatible as input criteria for D-CRITIC. The storage criterion was excluded for a similar reason: its data mix quantitative and qualitative values, and there is no single, fixed value for each smartphone. The battery criterion was removed because its data were incomplete. In short, after considering the suitability of the criteria data for the proposed method, only five criteria were retained for the analysis.

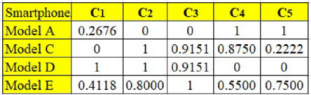

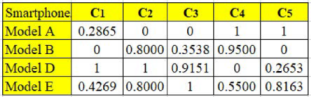

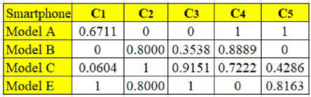

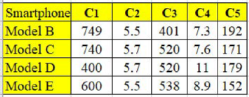

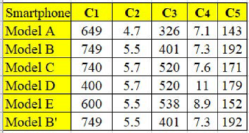

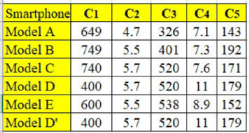

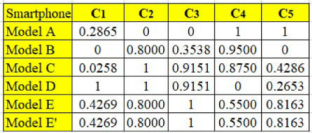

Table 2 is the normalized decision matrix derived from Equation (1). The worst and best values of each criterion were carefully identified before applying Equation (1). Note that the base price, thickness, and mass are nonbeneficial criteria, whereas the remaining criteria are beneficial. Therefore, for a nonbeneficial criterion, the lowest value is considered the most preferred or best value, whereas, as usual, the highest value is considered the best for a beneficial criterion. For instance, the best and worst values for the base price are $400 and $749, respectively, while for screen size, 5.7 in. and 4.7 in. are considered the best and worst values, respectively. The standard deviation of each criterion, computed using Equation (2), is also presented in the same table. These values suggest that the data of pixel density have the highest contrast, followed by thickness, screen size, mass, and base price. However, at this stage, it is too early to identify pixel density as the essential criterion, for the following two reasons:

The standard deviation values, which are relatively close to each other, do not show a clear distinction in terms of their contrast intensity, so we are unable to make a concrete decision about the importance of the criteria.

The relationships held by the criteria are yet to be considered.
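The best/worst normalization described above can be sketched as follows. We assume the common CRITIC-style min-max form $r_{ij} = (x_{ij} - x_j^{\text{worst}})/(x_j^{\text{best}} - x_j^{\text{worst}})$, which maps the best value to 1 and the worst to 0 for both beneficial and nonbeneficial criteria; the authors' exact equation is not reproduced here, and the function name and sample inputs other than the stated best/worst values are hypothetical.

```python
def normalize(value, best, worst):
    """Min-max normalization towards the best value (best -> 1, worst -> 0).

    Works for beneficial criteria (best > worst) and non-beneficial
    criteria (best < worst) alike, since only the interval direction changes.
    """
    if best == worst:
        raise ValueError("best and worst values must differ")
    return (value - worst) / (best - worst)

# Base price (non-beneficial): best = $400, worst = $749
print(normalize(400, best=400, worst=749))  # 1.0
print(normalize(574.5, best=400, worst=749))  # midpoint of the range
# Screen size (beneficial): best = 5.7 in., worst = 4.7 in.
print(normalize(5.2, best=5.7, worst=4.7))
```

Because the direction is handled by the choice of `best` and `worst`, a single formula covers both criterion types, and all normalized scores fall in $[0, 1]$ before the standard deviations are compared.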

The analysis, therefore, proceeded by computing the distance correlation measures of the criteria.

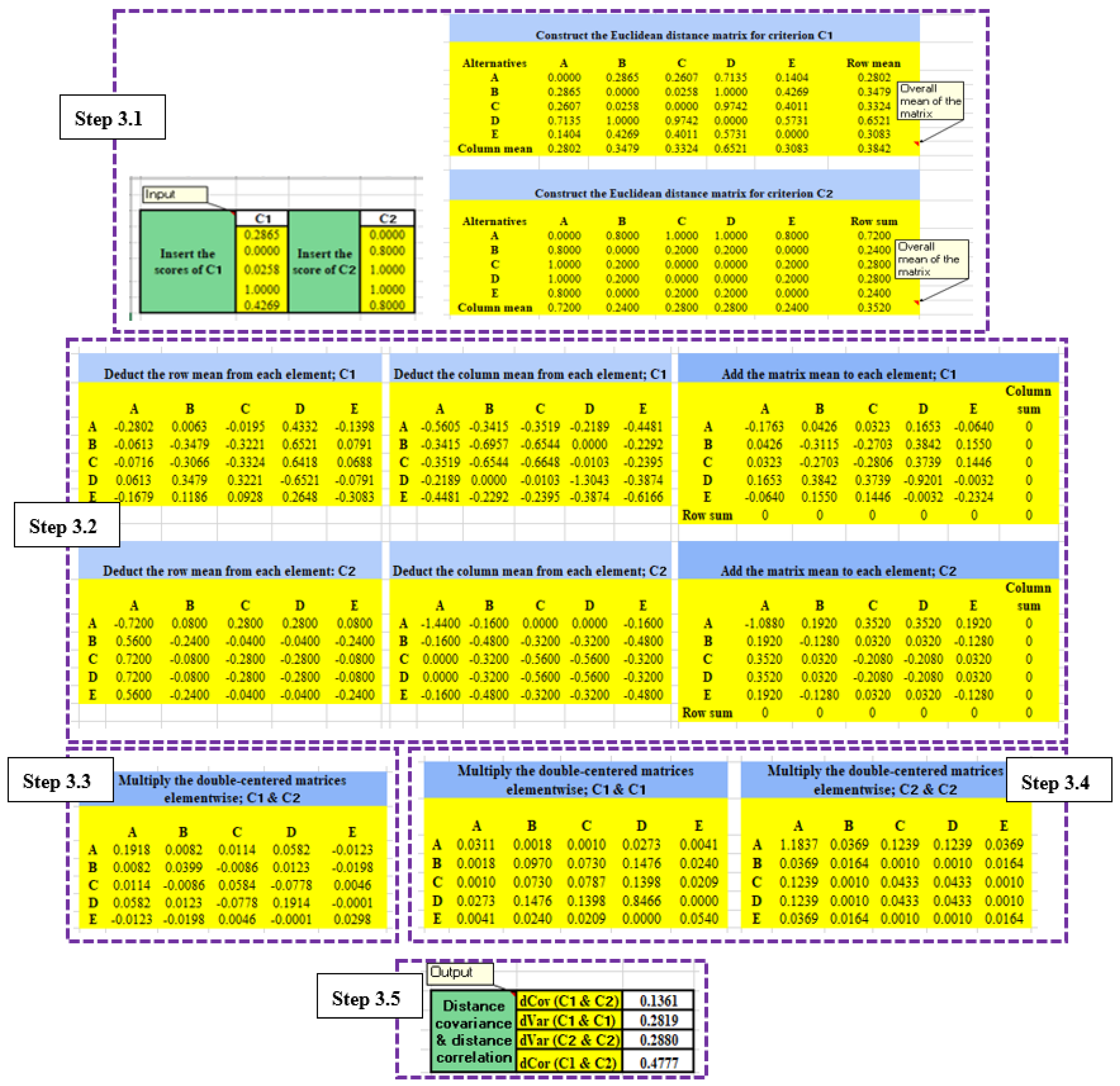

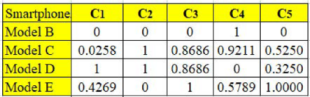

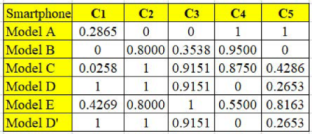

Table 3 depicts the distance correlation matrix of the criteria. To facilitate a better understanding, Appendix A presents a worked example of calculating the distance correlation between a pair of these criteria. Based on Table 3, the highest distance correlation is observed between the base price and the thickness (0.9437), indicating strong redundancy between these two criteria. The mass criterion, in contrast, does not overlap strongly with any other criterion, since none of its distance correlation measures exceed 0.8. This suggests that mass could emerge as the most important criterion by the end of the analysis.

Table 4 shows the information content and the weight of each criterion, determined using Equations (5) and (6), respectively. As expected, D-CRITIC identifies mass ($C_5$) as the most important criterion with a weight of 0.2118, followed by pixel density ($C_3$), base price ($C_1$), screen size ($C_2$), and thickness ($C_4$).
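The full D-CRITIC pipeline can be sketched end to end. This version assumes, as the text describes, that D-CRITIC follows the CRITIC formula with the Pearson coefficient replaced by the distance correlation, i.e., information content $\sigma_j \sum_k (1 - \mathrm{dCor}_{jk})$ and weights obtained by normalizing these contents. The sample standard deviation, the function names, and the 5 × 5 matrix are assumptions; the matrix is hypothetical and is not the paper's Table 1, so its output will not reproduce Table 4.

```python
import numpy as np

def distance_correlation(x, y):
    """Sample distance correlation between two 1-D arrays."""
    a = np.abs(x[:, None] - x[None, :])
    b = np.abs(y[:, None] - y[None, :])
    A = a - a.mean(axis=0) - a.mean(axis=1)[:, None] + a.mean()
    B = b - b.mean(axis=0) - b.mean(axis=1)[:, None] + b.mean()
    dcov2 = max((A * B).mean(), 0.0)
    denom = np.sqrt((A * A).mean() * (B * B).mean())
    return 0.0 if denom == 0 else float(np.sqrt(dcov2 / denom))

def d_critic_weights(X, beneficial):
    """Sketch of D-CRITIC: CRITIC with distance correlation as the conflict measure."""
    X = np.asarray(X, dtype=float)
    beneficial = np.asarray(beneficial, dtype=bool)
    best = np.where(beneficial, X.max(axis=0), X.min(axis=0))
    worst = np.where(beneficial, X.min(axis=0), X.max(axis=0))
    R = (X - worst) / (best - worst)   # normalized matrix, best -> 1
    sigma = R.std(axis=0, ddof=1)      # contrast intensity (sample SD assumed)
    n = R.shape[1]
    D = np.eye(n)                      # dCor of a criterion with itself is 1
    for j in range(n):
        for k in range(j + 1, n):
            D[j, k] = D[k, j] = distance_correlation(R[:, j], R[:, k])
    info = sigma * (1.0 - D).sum(axis=1)  # information content per criterion
    return info / info.sum()              # criteria weights

# Hypothetical matrix; columns: price, screen size, pixel density, thickness, mass
X = [[499, 5.0, 160, 7.5, 150],
     [749, 5.7, 180, 7.9, 180],
     [400, 4.7, 130, 8.3, 140],
     [599, 5.4, 170, 7.1, 165],
     [649, 5.5, 150, 7.7, 170]]
w = d_critic_weights(X, beneficial=[False, True, True, False, False])
print(np.round(w, 4))
```

A criterion that is highly redundant with the others (distance correlations near 1) contributes little conflict, which is why a pair such as base price and thickness, with a distance correlation of 0.9437, is penalized relative to mass.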

5. Sensitivity Analysis

In this section, we extend our investigation to examine the robustness of the proposed D-CRITIC method, that is, the stability of the results it produces. In the MCDM literature, sensitivity analysis has long been a popular tool for examining the robustness of various MCDM methods, e.g., [63,64]. Generally, a sensitivity analysis explores how small changes in the input parameters affect the output of a method.

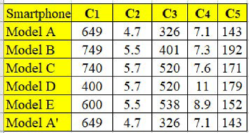

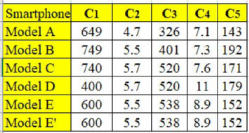

Therefore, in this study, the sensitivity analysis was conducted to understand how variations in the size of the decision matrix affect the criteria weights and ranks estimated by D-CRITIC. Since the weights estimated by D-CRITIC depend on the data structure of the decision matrix, it is reasonable to analyze the effect of different decision matrix dimensions on the criteria weights and ranks.

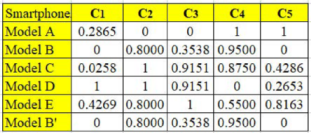

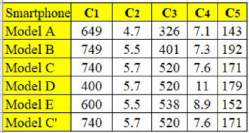

We began the analysis by generating ten different scenarios. These scenarios were created by amending the existing decision matrix, which comprises the data of five alternatives, i.e., smartphones ($m = 5$). The amendments yielded decision matrices slightly smaller ($m = 4$) and slightly larger ($m = 6$) than the actual one. For the first five scenarios, we eliminated one alternative while retaining the other four. For the next five scenarios, we duplicated the data of one selected alternative so that each scenario had a decision matrix with $m = 6$. More details about these ten scenarios are summarized in Table 10.
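The scenario construction can be sketched as below. We assume that each of the five alternatives is removed once (Sc1–Sc5) and duplicated once (Sc6–Sc10); Table 10 gives the authors' actual assignment, which may differ. The function name and the placeholder matrix are hypothetical.

```python
import numpy as np

def make_scenarios(X):
    """Build perturbed decision matrices: one per removed alternative,
    then one per duplicated alternative (assumed assignment)."""
    X = np.asarray(X, dtype=float)
    m = X.shape[0]
    # Sc1..Scm: drop alternative i, keeping the other m - 1 rows
    removed = [np.delete(X, i, axis=0) for i in range(m)]
    # Sc(m+1)..Sc(2m): append a copy of alternative i, giving m + 1 rows
    duplicated = [np.vstack([X, X[i:i + 1]]) for i in range(m)]
    return removed + duplicated

X = np.arange(25, dtype=float).reshape(5, 5)  # placeholder data, not Table 1
scenarios = make_scenarios(X)
print([s.shape for s in scenarios])
```

Each matrix in `scenarios` can then be passed to the same weighting routine used for the actual decision matrix, so that only the data structure changes between runs.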

The D-CRITIC method was then applied to each scenario. Table 11 summarizes the criteria weights resulting from each scenario.

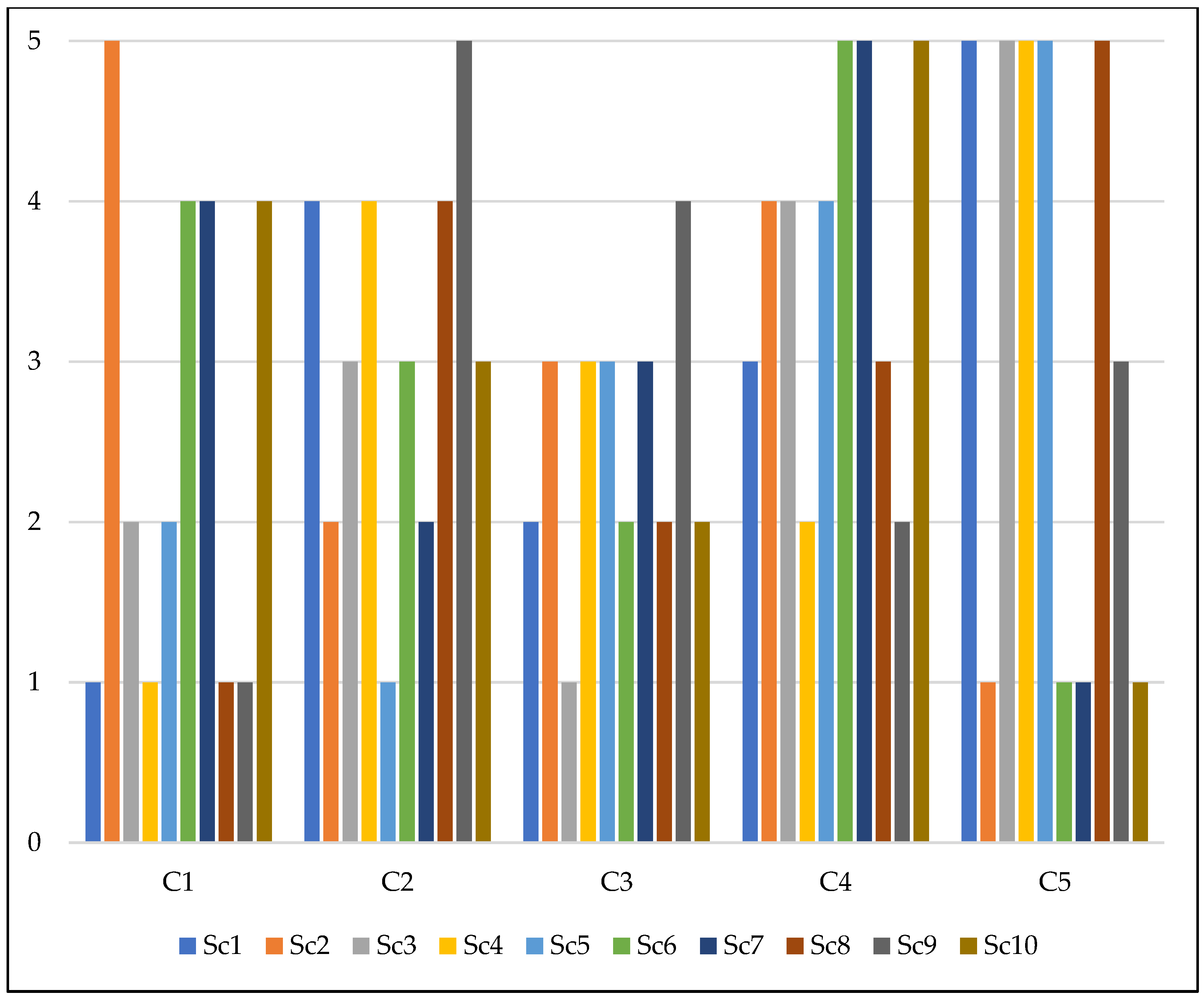

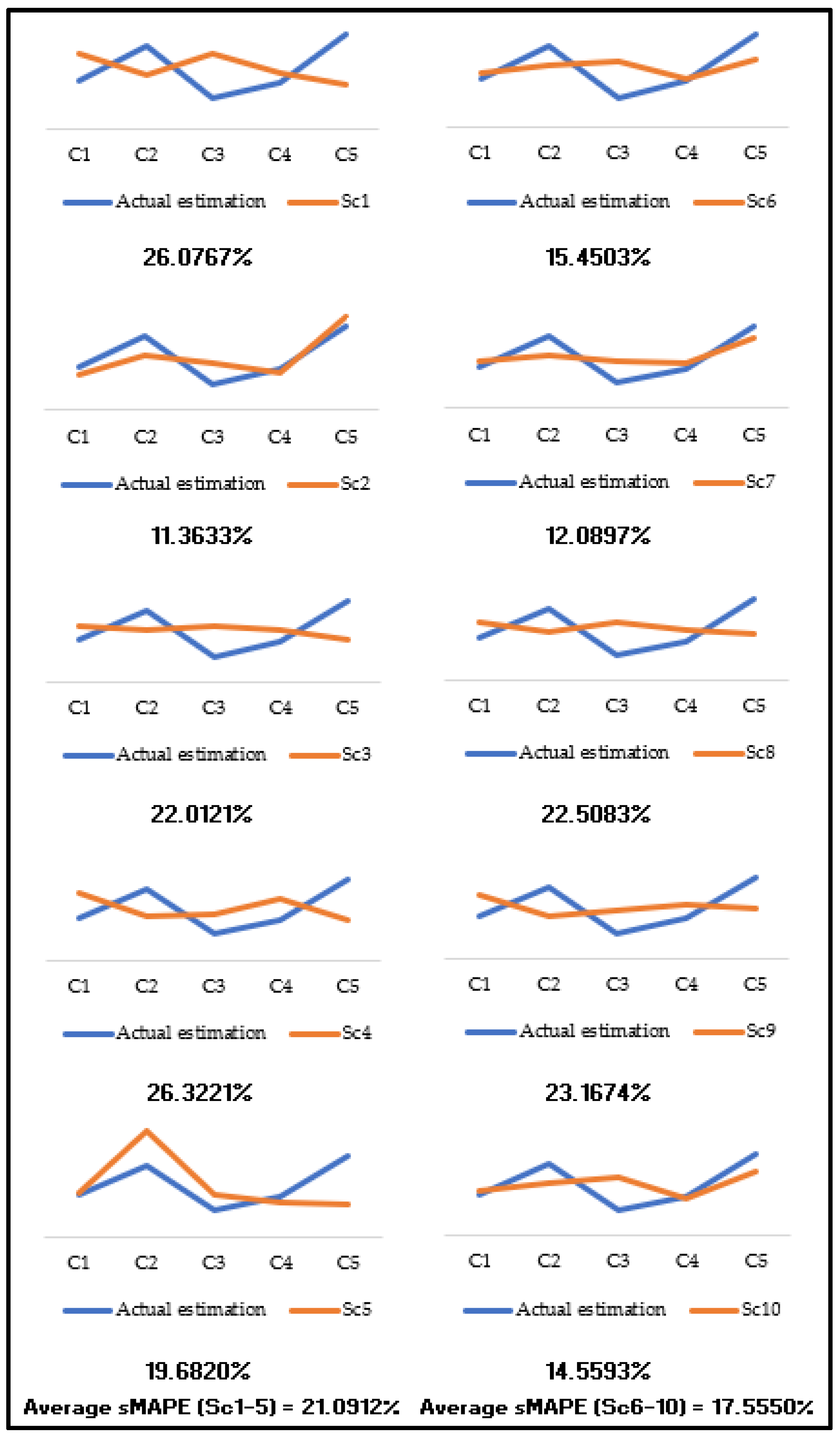

Figure 4 displays the variations observed in the ranking of each criterion across the ten scenarios. Meanwhile, Figure 5 compares the criteria weights of each scenario against the weights estimated from the actual decision matrix, together with the sMAPE of each scenario and the average sMAPE for Sc1–Sc5 and Sc6–Sc10. Finally, Table 12 compares the criteria ranks from all ten scenarios against the actual estimation. A green highlight in Table 12 indicates that the rank of the criterion under that specific scenario is unchanged from the actual rank.
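For readers who want to reproduce the comparison, the sketch below computes the symmetric mean absolute percentage error in its common form, $\mathrm{sMAPE} = \frac{100\%}{n}\sum_j \frac{|F_j - A_j|}{(|A_j| + |F_j|)/2}$. Several sMAPE variants exist, and which one the authors used is an assumption here. The actual weights are the D-CRITIC weights reported in the text (ordered $C_1$ to $C_5$); the scenario weights are hypothetical.

```python
import numpy as np

def smape(actual, estimate):
    """Symmetric mean absolute percentage error, in percent."""
    a = np.asarray(actual, dtype=float)
    f = np.asarray(estimate, dtype=float)
    denom = (np.abs(a) + np.abs(f)) / 2.0
    return float(100.0 * np.mean(np.abs(f - a) / denom))

# D-CRITIC weights for C1..C5 from the actual decision matrix (as reported)
actual = [0.2021, 0.1956, 0.2031, 0.1874, 0.2118]
# Hypothetical weights from one perturbed scenario (illustrative only)
scenario = [0.1950, 0.2010, 0.2080, 0.1800, 0.2160]
print(f"sMAPE = {smape(actual, scenario):.4f}%")
```

Lower values mean the scenario weights stay closer to the actual estimation; averaging the per-scenario values over Sc1–Sc5 and Sc6–Sc10 gives the two group averages discussed later.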

6. Discussion and Conclusions

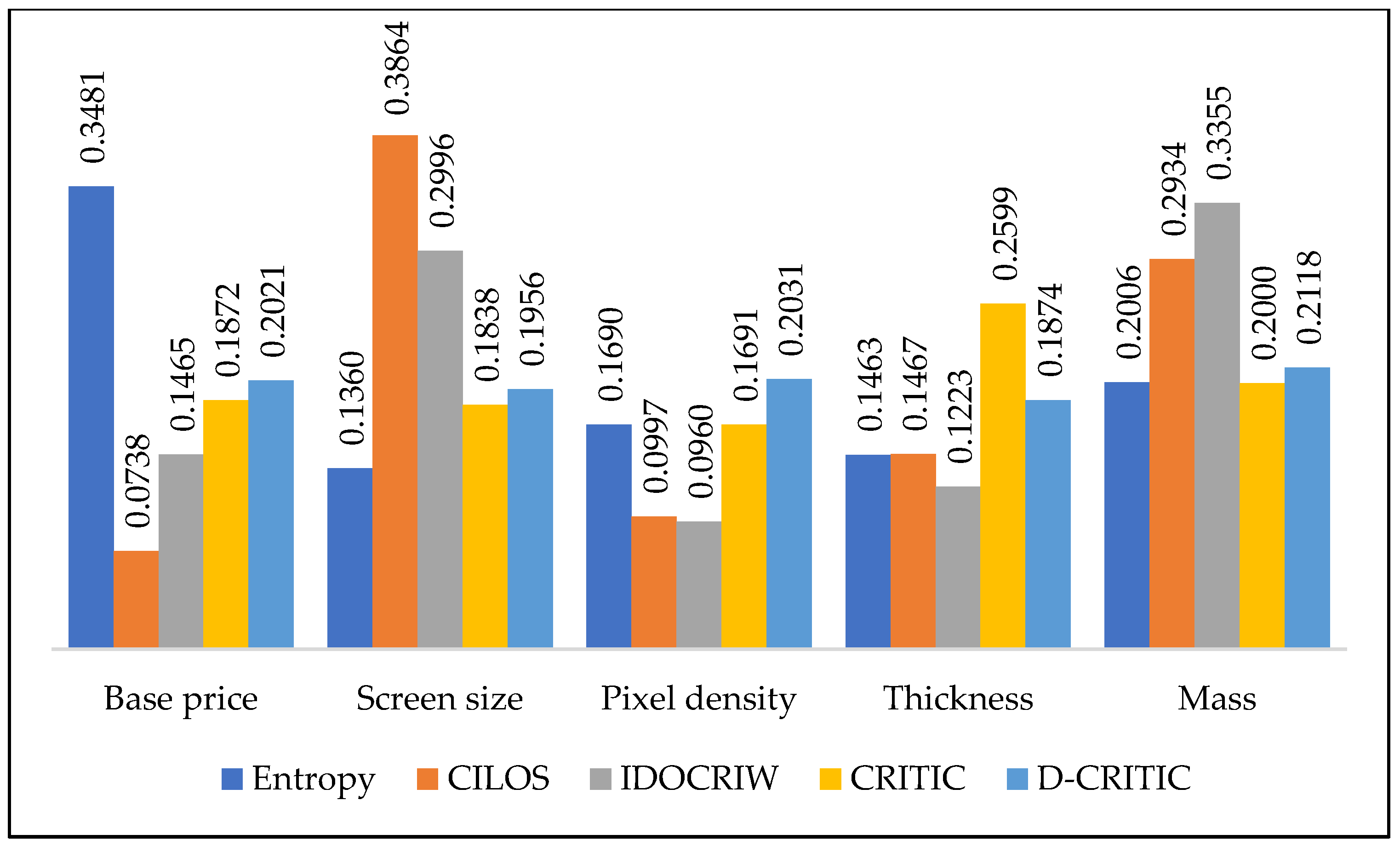

The proposed D-CRITIC method and four other objective weighting methods were applied to a smartphone criteria evaluation problem to demonstrate the workability of D-CRITIC. D-CRITIC identified mass as the most salient criterion with a weight of 0.2118 (see Table 5), followed by pixel density (0.2031), base price (0.2021), screen size (0.1956), and thickness (0.1874). Interestingly, two other methods, namely CILOS and IDOCRIW, also identified mass as the most critical smartphone criterion. The results of D-CRITIC are consistent with the findings reported in several past studies. Yildiz and Ergul [65] applied a subjective weighting method, i.e., ANP, to evaluate a long list of smartphone selection criteria and found that the mass of a smartphone is more important than its thickness. Lee et al. [66] argued that mass is an essential criterion for an ergonomic smartphone since it helps provide the expected one-handed grip comfort to users. In another study, Mishra et al. [67] reported, similar to the results of D-CRITIC, that pixel density is more crucial than screen size. Moreover, Zhu et al. [68] stated that camera specifications and the quality of the photos taken are becoming dominant criteria for customers purchasing smartphones. Many smartphone manufacturers also tend to promote their smartphones by emphasizing the strength of their camera specifications, including the pixel density.

It may seem surprising that D-CRITIC did not identify price as the most important smartphone selection criterion. Similar to our findings, Bhalla et al. [69] recently reported that price has less effect on a customer’s buying decision than other physical features of a smartphone. In a similar vein, Osman et al. [70] claimed that customers nowadays care more about the physical features of a smartphone and are willing to pay more in exchange for better features. More importantly, from the MCDM perspective, the price data of the five smartphone models considered in this study vary little (they have the lowest standard deviation), indicating that this criterion has the lowest contrast intensity and carries the least information. This data pattern further explains why D-CRITIC did not identify price as the most crucial criterion.

On the other hand, the comparatively flat line for D-CRITIC relative to the lines of the other methods (see Figure 1) indicates that D-CRITIC assigns weights that are relatively close to each other. In other words, D-CRITIC appears to conclude that all five smartphone criteria hold a similar degree of importance, with only marginal differences. Although the weights estimated by D-CRITIC are relatively close, they are still distinct enough to produce a clear ranking of the criteria. Relatively close weights are acceptable since, in some situations, they may reflect the actual case. For instance, Suh et al. [71], who used an integrated weighting method to evaluate eight mobile service criteria, found that the computed weights varied little, ranging only from 0.0870 to 0.1780.

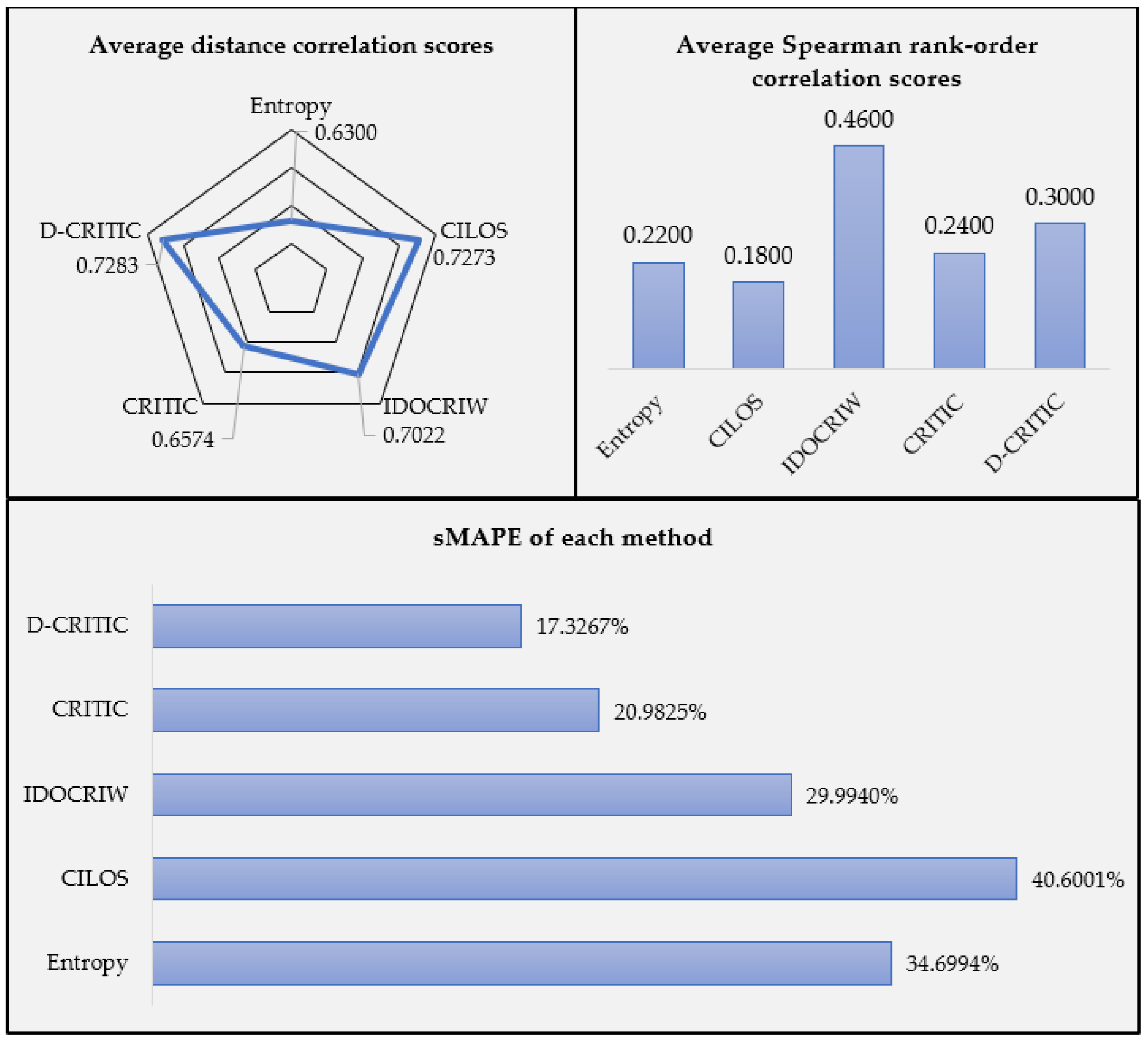

More importantly, further analyses (the distance correlation test, the Spearman rank-order correlation test, the sMAPE test, and the sensitivity analysis) provide clear evidence that the weights estimated by D-CRITIC are more acceptable than those of the other methods.

The distance correlation test reveals that the set of weights derived by the D-CRITIC method is strongly consistent with the sets of weights produced by the other methods, since all the coefficient values are above 0.6. The highest agreement is with the original CRITIC method, with a coefficient of 0.8186. This is most likely due to the similarity between the two methods: unlike the other methods, both capture the contrast intensity and the conflicting nature of the criteria when determining the weights. Overall, however, the set of weights produced by D-CRITIC has the highest degree of consistency with the weights produced by the other four methods; that is, D-CRITIC yielded the largest average distance correlation score (0.7283). The second most consistent weights were derived by CILOS (0.7273), followed by IDOCRIW (0.7022), CRITIC (0.6574), and the entropy-based method (0.6300).

On the other hand, the Spearman rank-order correlation test reveals that the IDOCRIW method produced the most consistent criteria ranks, that is, the ranks that agree best with the other four sets of criteria ranks. Interestingly, as in the case of the criteria weights, the set of ranks derived by D-CRITIC appears more consistent than that derived by CRITIC, since the former has a higher average Spearman rank-order correlation score (0.3000).
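The rank-agreement check can be sketched with the tie-free Spearman formula $\rho = 1 - \frac{6\sum_j d_j^2}{n(n^2 - 1)}$, where $d_j$ is the rank difference for criterion $j$. The D-CRITIC ranks below are taken from the text (mass 1, pixel density 2, base price 3, screen size 4, thickness 5, listed in $C_1$ to $C_5$ order); the second ranking is hypothetical, and the function name is ours.

```python
import numpy as np

def spearman_rho(rank_a, rank_b):
    """Spearman rank-order correlation for two rankings without ties."""
    a = np.asarray(rank_a, dtype=float)
    b = np.asarray(rank_b, dtype=float)
    n = a.size
    d = a - b
    return 1.0 - 6.0 * np.sum(d ** 2) / (n * (n ** 2 - 1))

# D-CRITIC ranks for C1..C5 (price, screen, pixel density, thickness, mass)
d_critic = [3, 4, 2, 5, 1]
other = [1, 4, 2, 5, 3]  # hypothetical ranks from another method
print(spearman_rho(d_critic, other))  # 0.6
```

Averaging a method's $\rho$ values against the other four methods yields the kind of average rank-consistency score reported above.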

In addition, the sMAPE test shows that D-CRITIC estimated the most accurate criteria weights, since it obtained the lowest sMAPE value (17.3267%) relative to the aggregated weights. The original CRITIC method is the second most accurate, with an sMAPE value of 20.9825%. The test also indicates that the entropy method produced the second-least accurate estimates, likely because, unlike D-CRITIC and CRITIC, it considers only the contrast intensity of the criteria.

To sum up, based on Figure 3, D-CRITIC performs better than the original CRITIC method and produces a more valid set of criteria weights and ranks. The results support the argument made in Section 1.1 that the weights determined by the CRITIC method could be flawed because it misrepresents the conflicting relationships between criteria. This shortcoming is minimized in the D-CRITIC method, mainly with the aid of distance correlation.

On the other hand, the results in Table 11 and Figure 4 show that small modifications to the size of the decision matrix, or to its data structure, made through ten different scenarios, changed the criteria weights or ranks estimated from the actual decision matrix. These changes show that the D-CRITIC results are sensitive to variations in the size of the decision matrix. This sensitivity can be attributed to the integrated distance correlation measures. Unlike the Pearson correlation, distance correlation is more responsive to changes in the amount of data and, at the same time, more sensitive to the presence of both linear and nonlinear associations between data vectors.

However, D-CRITIC appears to produce more stable criteria weights with a larger decision matrix. This is evident in Figure 5, where the weights generated under Sc6, Sc7, Sc8, Sc9, and Sc10 are more consistent with the actual estimation than those under Sc1, Sc2, Sc3, Sc4, and Sc5. Furthermore, the lower average sMAPE value for Sc6–Sc10 (17.5550%) suggests that weights estimated from a larger decision matrix are generally closer to the actual estimation. It should be reiterated that the decision matrices of Sc1–Sc5 comprise only four alternatives, whereas those of Sc6–Sc10 comprise six alternatives.

In terms of the criteria ranks, only some scenarios generated ranks that tally with the actual estimation. For instance, mass ($C_5$), which was identified as the most important criterion, was also ranked first in Sc2, Sc6, Sc7, and Sc10. Likewise, thickness ($C_4$), which was reported as the least important criterion, remained fifth in Sc6, Sc7, and Sc10. However, based on the number of green boxes in Sc1–Sc5 versus Sc6–Sc10, the ranks derived from the larger decision matrices are more consistent with the actual estimation. All in all, the sensitivity analysis reveals that D-CRITIC tends to deliver more stable criteria weights and ranks with a larger decision matrix, in other words, when the decision problem involves a larger set of alternatives.