Abstract

This paper addresses the projection pursuit problem assuming that the distribution of the input vector belongs to the flexible and wide family of multivariate scale mixtures of skew normal distributions. Under this assumption, skewness-based projection pursuit is set out as an eigenvector problem described in terms of the third order cumulant matrix, and also as an eigenvector problem that involves the simultaneous diagonalization of the scatter matrices of the model. Both approaches lead to dominant eigenvectors proportional to the shape parametric vector, which accounts for the multivariate asymmetry of the model; they also shed light on the parametric interpretability of the invariant coordinate selection method and point to some alternatives for estimating the projection pursuit direction. The theoretical findings are further investigated through a simulation study whose results provide insights into the usefulness of skewness model-based projection pursuit in statistical practice.

1. Introduction

The key idea behind the term “projection pursuit” (PP) is the search for “interesting” low-dimensional representations of multivariate data, an idea dating back to Kruskal’s early work [1], which later inspired its use as an exploratory data analysis tool for uncovering hidden patterns and structure in data, as described in several of the foundational works [2,3,4,5]. In order to assess the interestingness of a projection, a gamut of indices has been proposed [3,4,6,7,8,9,10,11,12], together with computational developments that address the need for efficient optimization routines to implement the search for the PP solution [5,11,12,13,14,15,16,17]. Although PP was born as an exploratory data analysis technique, the need to make progress towards the inferential arena has motivated the use of skewness and kurtosis, based on third and fourth order moments, as projection indices in the context of parametric models, either explicitly [11,12,18,19,20] or implicitly [21,22,23].

This paper revisits the PP problem when the third moment skewness measure is taken as projection index, so that PP reduces to finding the direction that yields the maximal skewness projection, an idea already studied in the past [24]. We assume that the underlying multivariate distribution belongs to the flexible family of scale mixtures of skew normal (SMSN) distributions, so the issue is confined to the skewness model-based PP framework. Under this assumption, it is shown that skewness PP can be described in terms of two eigenvector problems: the first stems from the third cumulant matrix of the SMSN distribution and the second from the simultaneous diagonalization of the covariance and scale scatter matrices of the SMSN model; both approaches have an appealing interpretation in terms of the shape vector that regulates the asymmetry of the model. The theoretical findings identify alternative ways to approach the PP problem, with important computational implications when estimating the direction yielding the maximal skewness projection.

The rest of the paper is organized as follows: Section 2 gives an overview of the SMSN family. Section 3 addresses the eigenvector formulation of PP by exploring the theoretical ins and outs of the problem when the input vector follows an SMSN distribution; under this assumption, two eigenvector standpoints, based on third cumulants and on the scatter matrices of the SMSN model, are used to tackle it. Our findings contribute to the invariant coordinate selection (ICS) literature by shedding light on the parametric interpretability of this method under the SMSN model; they also give rise to several theoretically grounded proposals for estimating the PP direction from data, which are discussed in Section 3.3. The theoretical results and the proposals herein are further investigated in Section 4 through a simulation study that shows their usefulness when applied to calculate the PP direction; the performance of the estimators is compared by assessing their mean squared errors in well-defined population scenarios. Finally, Section 5 provides some concluding remarks.

2. Background

2.1. The Skew Normal and the Scale Mixtures of Skew Normal Distributions

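The multivariate skew normal (SN) distribution has become a widely used model for regulating asymmetry departures from normality. Its study has originated fruitful research [25,26,27,28,29,30] with incremental works pursuing its enrichment and extension to wider classes of distributions [31,32,33,34,35,36,37,38,39]. This paper adopts the formulation from previous work [25,40] to define the density function of a p-dimensional SN vector with location vector $\boldsymbol{\xi}$ and scale matrix $\Omega$ as follows:

$$f(\boldsymbol{x};\boldsymbol{\xi},\Omega,\boldsymbol{\alpha}) = 2\,\phi_p(\boldsymbol{x}-\boldsymbol{\xi};\Omega)\,\Phi\!\left(\boldsymbol{\alpha}^{\top}\omega^{-1}(\boldsymbol{x}-\boldsymbol{\xi})\right), \quad \boldsymbol{x}\in\mathbb{R}^p, \tag{1}$$

where $\phi_p(\cdot\,;\Omega)$ is the p-dimensional normal probability density function (pdf) with zero mean vector and covariance matrix $\Omega$, $\Phi$ is the distribution function of a $N(0,1)$ variable, $\omega$ is a diagonal matrix with non-negative entries such that $\bar{\Omega}=\omega^{-1}\Omega\,\omega^{-1}$ is a correlation matrix and $\boldsymbol{\alpha}$ or $\boldsymbol{\eta}=\omega^{-1}\boldsymbol{\alpha}$ is the shape vector that regulates the asymmetry of the model. We put $\boldsymbol{X}\sim SN_p(\boldsymbol{\xi},\Omega,\boldsymbol{\alpha})$ to denote that $\boldsymbol{X}$ follows a SN distribution with pdf (1); note that when $\boldsymbol{\alpha}=\boldsymbol{0}$ the normal pdf is recovered.

The SN vector can be represented through the stochastic formulation: $\boldsymbol{X}=\boldsymbol{\xi}+\omega\boldsymbol{Z}$, where $\boldsymbol{Z}\sim SN_p(\boldsymbol{0},\bar{\Omega},\boldsymbol{\alpha})$ is a normalized multivariate skew normal variable with pdf given by

$$f(\boldsymbol{z};\bar{\Omega},\boldsymbol{\alpha}) = 2\,\phi_p(\boldsymbol{z};\bar{\Omega})\,\Phi(\boldsymbol{\alpha}^{\top}\boldsymbol{z}), \quad \boldsymbol{z}\in\mathbb{R}^p. \tag{2}$$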

The previous stochastic representation highlights the natural extension of the SN to the SMSN family, which accounts for asymmetry and tail weight simultaneously [23,41]; owing to its analytical tractability, this family has gained increasing interest both in theory [21,23,42,43,44,45] and in applications, with a few works showing its usefulness on real data [20,22,46]. Here, we use the formulation in [23] to define the SMSN as follows:

Definition 1.

Let be a random vector such that , with pdf as in (2), and let S be a non-negative scalar variable, independent of . The vector , where ω is a diagonal matrix with non-negative entries, is said to follow a multivariate SMSN distribution.

In this case, we write , with H the distribution function of the mixing variable S, to indicate that follows a p-dimensional SMSN distribution. When , the vector follows a scale mixture of normal distributions. On the other hand, if H is degenerate at then the input vector has a distribution.

2.2. Examples of SMSN Distributions

There are some well-known multivariate distributions that belong to the SMSN family. Here, we focus on a few representative instances.

2.2.1. The Multivariate SN Distribution

We have just mentioned that the SN distribution is obtained when S is degenerate at . Hence, the only source of non-normality is regulated by the shape vector .

2.2.2. The Multivariate Skew-t Distribution

The multivariate skew-t (ST) distribution arises when the mixing variable in Definition 1 is with . Its pdf [46] is given by

with the quantity given by , the pdf of a p-dimensional ST variate with degrees of freedom and the distribution function of a scalar ST variable with degrees of freedom. We put to indicate that follows a ST distribution with pdf as in (3). Note that when the ST becomes a p-dimensional SN distribution, i.e., .

2.2.3. The Multivariate Skew-Slash Distribution

Another model that simultaneously tackles asymmetry and tail weight behavior is the multivariate skew-slash (SSL) distribution [47]. It appears when the mixing variable is , with and q a tail weight parameter such that . We write to indicate that follows a p-dimensional SSL distribution with location , scale matrix , shape vector and tail parameter . When , the SSL becomes a SN variable, i.e., .

3. Skewness Projection Pursuit

Skewness PP has already been studied for specific models [18,19,48]. Here, we assume that ; its scaled version is , where and are the covariance matrix and mean vector of , respectively. PP is aimed at finding the direction for which the variable attains the maximum skewness, which implies solving the following problem:

with the standard skewness measure: , and the set of all non-null p-dimensional vectors.

In order to ensure a unique solution, taking into account the scale invariance of , the problem can be stated by the following equivalent formulations:

where and with . The maximal skewness , with the superscript D standing for directional skewness, is a well-known index of asymmetry [24].

The vectors yielding the maximal skewness projection for and its scaled version are denoted by

and satisfy that .
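To make the projection index concrete, the following Python sketch evaluates the sample skewness of a projected data set. It assumes the squared standardized third moment as the skewness measure, a common convention for directional skewness; the function name `projection_skewness` and the toy data are illustrative and not part of the original study.

```python
import numpy as np

def projection_skewness(X, c):
    """Squared standardized third moment of the projected sample X @ c."""
    y = X @ np.asarray(c, dtype=float)
    y = y - y.mean()                 # center the projection
    m2 = np.mean(y ** 2)             # second central moment
    m3 = np.mean(y ** 3)             # third central moment
    return m3 ** 2 / m2 ** 3

rng = np.random.default_rng(0)
# Toy data (assumption): a skewed exponential coordinate and a symmetric normal one.
X = np.column_stack([rng.exponential(size=5000), rng.normal(size=5000)])
c = np.array([1.0, 0.0])

skew_c = projection_skewness(X, c)
skew_scaled = projection_skewness(X, 3.0 * c)   # same direction, different length
skew_other = projection_skewness(X, np.array([0.0, 1.0]))
```

Because the index depends only on the direction of `c`, `skew_c` and `skew_scaled` coincide, which is why the maximization can be restricted to a normalized set of vectors.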

When a simple condition on the moments of the mixing variable is fulfilled, the PP problem can be brought to the parametric arena [22]. Now, we examine the eigenvector formulation of the problem; some instrumental results about moments and cumulants of SMSN vectors are needed in advance [21,49].

Proposition 1.

Let be a vector such that . Provided that the first three moments, , of the mixing variable exist, the moments of up to the third order are given by

- 1.

- .

- 2.

- .

- 3.

where the symbols vec and ⊗ denote the vectorization and Kronecker operators and γ is a shape asymmetry vector given by.

The next proposition establishes the relationship between cumulants and moments of multivariate SMSN distributions.

Proposition 2.

Let be a vector such that . Provided that the first three moments, , of the mixing variable exist, the cumulants of up to the third order are given by

- 1.

- .

- 2.

- .

- 3.

- .

3.1. Projection Pursuit from the Third Cumulant Matrix

Now, skewness PP is revisited using an eigenvector approach from the third cumulant matrix of the scaled input vector . The approach is motivated by the formulation of directional skewness as the maximum of a homogeneous third-order polynomial obtained from the third cumulant matrix as follows [11]:

This formulation inspires the eigenvector approach, based on the third-cumulant tensor, and the use of the higher-order power method (HOPM) to implement the computation [14]. The problem admits a simplification for skew-t vectors, which in turn provides the theoretical grounds and interpretation for the choice of the initial direction in the HOPM algorithm ([11], Proposition 2). Theorem 1 below enriches the interpretation by extending the result to the family of SMSN distributions.
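As an illustration of the iteration, the sketch below implements a simplified symmetric variant of the higher-order power method on a third cumulant matrix stored as a p² × p array, started from its dominant right singular vector. This is a minimal sketch under stated assumptions: the update rule c ← K₃ᵀ(c ⊗ c), the stopping rule and the function names are illustrative choices, convergence is not guaranteed in general, and the code is not the implementation of [14] or [11].

```python
import numpy as np

def right_dominant_vector(K3):
    """Dominant right singular vector of the p^2 x p matrix K3."""
    _, _, vt = np.linalg.svd(K3, full_matrices=False)
    return vt[0]

def symmetric_hopm(K3, c0, n_iter=100, tol=1e-10):
    """Power-type iteration c <- K3^T (c kron c), normalized at each step."""
    c = c0 / np.linalg.norm(c0)
    for _ in range(n_iter):
        c_new = K3.T @ np.kron(c, c)
        norm = np.linalg.norm(c_new)
        if norm == 0.0:              # degenerate case: keep the current vector
            break
        c_new = c_new / norm
        converged = np.linalg.norm(c_new - c) < tol
        c = c_new
        if converged:
            break
    return c

# Toy check (assumption): a rank-one cumulant matrix lam * (v kron v) v^T.
v = np.array([3.0, 4.0]) / 5.0
lam = 2.0
K3 = lam * np.outer(np.kron(v, v), v)

c_hat = symmetric_hopm(K3, right_dominant_vector(K3))
```

In the rank-one toy case the starting vector already lies on the direction of `v`, so the iteration stabilizes after one or two steps; this mirrors the role of the right dominant eigenvector as a starting direction.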

Firstly, we recall the definitions of left and right singular vectors of the third cumulant matrix of a vector : We say that the vector is a right singular vector of the third cumulant matrix if is proportional to . On the other hand, the vec operator can be used to define a left singular vector of as the vectorized matrix, with a matrix, such that gives a vector proportional to .

Theorem 1.

Let be a vector such that , with mixing variable having finite second order moment satisfying the condition , and let be the scaled version of the input vector having third cumulant matrix . Then, it holds that the right dominant eigenvector of is given by .

Proof.

Putting together the results from Propositions 1 and 2, we can write:

Taking into account the expression for the covariance matrix provided in the second item of Proposition 2, we obtain that

When these quantities are inserted into the previous expression for , we obtain

where and . Now, taking into account that [50], an argument analogous to the one used in [11] leads to

On the other hand, using the standard properties of the Kronecker product, the following relationships can be derived after simple calculations:

from which we obtain

and as a result: , where . This finding implies that is an eigenvector of with its associated eigenvalue. Hence, is a right singular vector of .

From the well-known moment inequality we conclude that . Thus, taking into account the condition for the moments of the mixing variable of the statement, we can assert that

which implies that .

It can also be shown that is the right dominant eigenvector of using the following simple argument: If is another right eigenvector orthogonal to then with . Therefore, is the right dominant eigenvector of . □

Theorem 1 gives rise to the following remarks:

First of all, we know that the direction attaining the maximal skewness projection for the scaled vector is proportional to [22]; hence, the vector driving the maximal skewness projection for is which in turn lies on the direction of , being also an eigenvector of ([22], Lemma 1). This fact enhances the role of as a shape vector that regulates the asymmetry of the multivariate model in a directional fashion. Furthermore, the previous discussion also provides a theoretical basis for the choice of the right dominant eigenvector of to set the initial direction of the HOPM algorithm, a choice advocated by De Lathauwer et al. [14] without theoretical justification.

Secondly, we can also note that the expression

from which the right dominant singular vector of is derived, reduces to the one found by Loperfido ([11], Proposition 2) for the case of the multivariate skew-t distribution when the moments of the skew-t are inserted into the quantities r and s above. Actually, our result is an extension of Loperfido ([11], Theorem 2) to the SMSN family.

Thirdly, coming back to Equation (6), recall that a unit length vector satisfies if and only if with the largest eigenvalue [11,51]. Thus, we can put , with from Theorem 1, to show that

Therefore, which, taking into account that with , reduces to the expression derived by [22] using another approach. Moreover, for the case of a SN vector we know that S is degenerate at ; so , and , which gives as previously stated by [18].

Finally, it is worth noting that the moment condition given in the statement of Theorem 1 is fulfilled by the most popular subfamilies of SMSN distributions, such as those described in Section 2.2.

3.2. Projection Pursuit from Scatter Matrices

In the previous section, projection pursuit under SMSN multivariate distributions was formulated as an eigenvector problem stemming from the third order cumulant matrix. In this section, the approach is formulated through an eigenvector problem concerned with the simultaneous diagonalization of the scatter matrices and ; in fact, such a formulation is related to the canonical transformation of an SMSN vector [23]. The next theorem provides the theoretical foundation for the approach.

Theorem 2.

Let be a vector such that with mixing variable having finite first and second order moments. Then, η is the dominant eigenvector of .

Proof.

The proof follows previous work [22,23], so we only sketch it. From Arevalillo and Navarro ([22], Lemma 1), we know that the shape vector is an eigenvector of ; it is straightforward to show that it has eigenvalue

Now, let us consider another eigenvector of . Then, it holds that

since the vector is orthogonal to [23]. As a result, has associated eigenvalue equal to which satisfies that ; hence, we conclude that is the dominant eigenvector of . □

Theorem 2 establishes that the dominant eigenvector of lies on the direction of , which in turn gives the direction at which the maximal skewness projection is attained [22]; this finding points out the parametric interpretation of the dominant eigenvector of under SMSN distributions as a shape parameter accounting for the directional asymmetry of the model. On the other hand, it is well known that the canonical transformation of an SMSN vector can be derived by the simultaneous diagonalization of the pair of scatter matrices , with the only skewed canonical variate being obtained by the projection onto the direction of the shape vector [23]; therefore, the computation of the skewness-based PP under the SMSN family would only require the estimation of both scatter matrices. An alternative way to derive the canonical transformation relies on the pair of scatter matrices , where is the scatter kurtosis matrix given by [52]; this alternative has appealing properties for SMSN distributions [23]. Hence, we can work with either or , in order to derive the canonical form of an SMSN vector, and compute the corresponding dominant eigenvectors in their simultaneous diagonalizations to get the vector driving the projection pursuit direction.

It is worth noting that the ideas behind the canonical form of SMSN vectors are inspired by the ICS method for representing multivariate data through the comparison of two scatter matrices [53]. Although the choice of the scatter pair is of key relevance, has become a standard choice which works well in statistical practice. The idea behind the ICS method is the search for a new coordinate system for representing the data by sequential maximization of the quotient . For SMSN distributions, a natural alternative is the scatter pair , given by the covariance and scale matrices of the model; therefore, the projection index for maximization would be . The comparison of projection pursuit and the ICS method has been previously discussed in the literature, addressing the question of which method is better suited to uncover structure in multivariate data [54,55,56]; the SMSN family is a flexible and wide class of distributions that allows the question to be framed within a parametric setting, so that it can be investigated by means of a simulation study at controlled parametric scenarios.
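The eigenvector property can be checked numerically in the SN special case. The sketch below is hedged in several ways: it takes the matrix of Theorem 2 to be Σ⁻¹Ω (an assumption of this example), it uses the standard SN covariance formula Σ = Ω − (2/π) ω δ δᵀ ω with δ = Ω̄α / (1 + αᵀΩ̄α)^{1/2}, and all parameter values are arbitrary illustrative choices.

```python
import numpy as np

def inv_sqrt_spd(S):
    """Inverse symmetric square root of a symmetric positive definite matrix."""
    w, V = np.linalg.eigh(S)
    return (V / np.sqrt(w)) @ V.T

# Illustrative SN parameters (assumptions, not taken from the paper).
omega = np.diag([2.0, 1.0, 3.0])                       # diagonal scale factors
Omega_bar = np.array([[1.0, 0.5, 0.25],
                      [0.5, 1.0, 0.5],
                      [0.25, 0.5, 1.0]])               # correlation matrix
alpha = np.array([2.0, -1.0, 0.5])                     # shape vector

Omega = omega @ Omega_bar @ omega                      # scale matrix
delta = Omega_bar @ alpha / np.sqrt(1.0 + alpha @ Omega_bar @ alpha)
m = np.sqrt(2.0 / np.pi) * (omega @ delta)             # mean shift of the SN vector
Sigma = Omega - np.outer(m, m)                         # SN covariance matrix
eta = np.linalg.solve(omega, alpha)                    # eta = omega^{-1} alpha

# Dominant eigenvector of Sigma^{-1} Omega via a symmetric whitened problem.
W = inv_sqrt_spd(Sigma)
vals, vecs = np.linalg.eigh(W @ Omega @ W)             # eigenvalues in ascending order
v = W @ vecs[:, -1]                                    # eigenvector of Sigma^{-1} Omega

cosine = abs(v @ eta) / (np.linalg.norm(v) * np.linalg.norm(eta))
```

Under these assumptions `cosine` equals one up to rounding error, and the remaining eigenvalues of Σ⁻¹Ω are all equal to one, so the shape direction is the only one separated by the scatter pair.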

The next section tackles several issues concerned with the estimation of the dominant eigenvector from the formulations we have studied so far: on the one hand, the PP from the third cumulant matrix and, on the other hand, the projection pursuit from scatter matrices with the indices and .

3.3. Estimation and Computational Issues

The preceding theoretical results have important practical implications, as they point to different estimation methods for computing the skewness PP direction for SMSN vectors. In this section, we discuss the computational side of the issue.

Let us denote by the data matrix and let be the regular covariance matrix. Furthermore, let be the scaled data matrix, where is a vector, is the sample mean vector and the symmetric positive definite square root of . Then, the third cumulant matrix can be estimated by , where is the i-th row of .

Theorem 1 gives theoretical support for the empirical conjecture that proposes the right dominant eigenvector of the cumulant matrix as the starting direction of the HOPM iterative method [14], an issue also addressed by Loperfido ([11], Proposition 1) under the rank-one assumption for the left dominant singular vector of the third cumulant matrix, as well as in the context of the multivariate skew-t distribution, with reference to Arevalillo and Navarro [19]. When the input vector follows an SMSN distribution, we know that the vector , with the right dominant eigenvector of , is proportional to the shape vector that yields the maximal skewness projection [22]. It therefore stands to reason that the skewness PP direction can be estimated by , where is the right dominant eigenvector of ; hence, the first method we propose to obtain the maximal skewness direction for SMSN vectors is non-parametric in nature and relies on the computation of the right dominant eigenvector of the sample third cumulant matrix.

Method 1

(M1). Estimate the skewness PP direction by .
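A possible implementation of M1 can be sketched as follows. The sketch assumes the moment estimator (1/n) Σᵢ zᵢ ⊗ zᵢᵀ ⊗ zᵢ for the third cumulant matrix, a covariance matrix with divisor n, and the back-transformation of the right dominant singular vector by the inverse symmetric square root of the covariance matrix; the function names and the toy data are illustrative, not the authors' code.

```python
import numpy as np

def inv_sqrt_spd(S):
    """Inverse symmetric square root of a symmetric positive definite matrix."""
    w, V = np.linalg.eigh(S)
    return (V / np.sqrt(w)) @ V.T

def m1_direction(X):
    """Unit-norm estimate of the skewness PP direction from the third cumulants."""
    n, p = X.shape
    S = np.cov(X, rowvar=False, bias=True)        # covariance with divisor n (assumption)
    S_inv_half = inv_sqrt_spd(S)
    Z = (X - X.mean(axis=0)) @ S_inv_half         # scaled data: zero mean, identity covariance
    # Third cumulant matrix (p^2 x p): entry (j*p + l, k) averages z_j * z_l * z_k.
    K3 = np.einsum("ij,il,ik->jlk", Z, Z, Z).reshape(p * p, p) / n
    _, _, vt = np.linalg.svd(K3, full_matrices=False)
    d = S_inv_half @ vt[0]                        # map back to the original coordinates
    return d / np.linalg.norm(d)

rng = np.random.default_rng(2)
n = 20000
# Toy data (assumption): only the first coordinate is skewed.
X = np.column_stack([rng.exponential(size=n),
                     2.0 * rng.normal(size=n),
                     3.0 * rng.normal(size=n)])
d1 = m1_direction(X)
```

The sign of `d1` is arbitrary, as for any singular vector; in this toy example the estimate concentrates on the first coordinate axis, which carries all the asymmetry.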

Another approach to compute the PP direction arises from the scatter matrices and , which can also be estimated from data. In this case the PP direction can be derived by computing the dominant eigenvector of the matrix , where is a sample estimate of the population kurtosis matrix . The ICS R package [57] provides functions for estimating and hence for computing the dominant eigenvector. The second approach can therefore be stated as follows.

Method 2

(M2). Estimate the skewness PP direction by the dominant eigenvector, , of .
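The computation behind M2 can be sketched in NumPy as shown below. This is a hedged illustration rather than a port of the ICS package: it assumes the fourth-moment scatter (1/(n(p+2))) Σᵢ rᵢ² (xᵢ − x̄)(xᵢ − x̄)ᵀ, with rᵢ² the squared Mahalanobis distance, as the kurtosis matrix estimate, and it takes the target matrix to be S⁻¹K̂; the normalizing constant does not affect the eigenvectors.

```python
import numpy as np

def inv_sqrt_spd(S):
    """Inverse symmetric square root of a symmetric positive definite matrix."""
    w, V = np.linalg.eigh(S)
    return (V / np.sqrt(w)) @ V.T

def m2_direction(X):
    """Unit-norm dominant eigenvector of S^{-1} K, with K a fourth-moment scatter."""
    n, p = X.shape
    Xc = X - X.mean(axis=0)
    S = Xc.T @ Xc / n                              # covariance with divisor n (assumption)
    S_inv_half = inv_sqrt_spd(S)
    Z = Xc @ S_inv_half                            # whitened observations
    r2 = np.sum(Z ** 2, axis=1)                    # squared Mahalanobis distances
    # Whitened kurtosis scatter, equal to S^{-1/2} K S^{-1/2}.
    K_white = (Z * r2[:, None]).T @ Z / (n * (p + 2))
    _, vecs = np.linalg.eigh(K_white)              # eigenvalues in ascending order
    d = S_inv_half @ vecs[:, -1]                   # eigenvector of S^{-1} K
    return d / np.linalg.norm(d)

rng = np.random.default_rng(3)
n = 20000
# Toy data (assumption): only the first coordinate departs from normality.
X = np.column_stack([rng.exponential(size=n),
                     2.0 * rng.normal(size=n),
                     3.0 * rng.normal(size=n)])
d2 = m2_direction(X)
```

Working with the whitened symmetric matrix keeps the eigenproblem real and well conditioned; the final multiplication by the inverse square root recovers the eigenvector of the non-symmetric product.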

Theorem 2 suggests an alternative approach to the problem, which brings it to the parametric arena: let us denote by and the maximum likelihood estimators of and ; then the dominant eigenvector of is the estimator of the shape vector derived from the maximum likelihood (ML) method. The ML approach has obvious practical limitations, as it requires knowing in advance the multivariate distribution of the underlying model that generated the data. In order to overcome this limitation, we propose an approach aimed at balancing the accuracy and the computational cost of the estimation procedure; a varied set of simulation trials (not reported here for the sake of space) suggests, as a good tradeoff, estimating the dominant eigenvector of by the dominant eigenvector of , where is the regular covariance matrix and is the estimate of the scale matrix , calculated by the ML method under the multivariate SN model. The proposal can be stated as follows:

Method 3

(M3). Estimate the skewness PP direction by which is the dominant eigenvector of .

In the next section, a simulation study with artificial data is carried out in order to investigate the performance of the proposed methods.

4. Simulation Experiment

Here, we consider the multivariate ST and SSL models; for the sake of simplicity, we take for the location vector. The assays of the simulation study are carried out by drawing 1000 samples from a multivariate ST or SSL distribution under the experimental sampling conditions specified as follows. The dimension of the input vector is set at the values ; the parameters of the underlying model are determined by the following settings: the correlation matrix is defined by a Toeplitz matrix , where , with , and the entries of the diagonal matrix are generated at random from the set of integer values between 5 and 25; the shape vector is given by with a unit-norm vector chosen at random and the magnitude, which is set at the values ; the tail weight parameters are set at the values for the ST model and for the SSL model. Finally, the sample size is taken in accordance with the dimension of the input vector at the values . Under these experimental settings, the accuracy of the proposed estimation methods is assessed using the mean square error (MSE), which is computed through the comparison of the unit-norm vectors corresponding to the directions estimated by each method with the exact theoretical direction of the multivariate model, using the square of the Euclidean distance.
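The error criterion described above can be sketched as follows. The function averages the squared Euclidean distance between unit-norm estimated directions and the unit-norm theoretical direction. The sign alignment step is an assumption of this sketch, motivated by the fact that an eigenvector is only determined up to sign; set `align_sign=False` to compare the vectors as given. The function name and the toy inputs are hypothetical.

```python
import numpy as np

def direction_mse(estimates, true_direction, align_sign=True):
    """Mean squared Euclidean distance between unit-norm directions."""
    t = np.asarray(true_direction, dtype=float)
    t = t / np.linalg.norm(t)
    E = np.asarray(estimates, dtype=float)
    E = E / np.linalg.norm(E, axis=1, keepdims=True)   # one estimate per row
    if align_sign:
        signs = np.sign(E @ t)
        signs[signs == 0] = 1.0                        # leave orthogonal estimates unchanged
        E = E * signs[:, None]
    return float(np.mean(np.sum((E - t) ** 2, axis=1)))

# Toy input (assumption): one exact estimate and one orthogonal estimate.
est = np.array([[1.0, 0.0],
                [0.0, 1.0]])
mse = direction_mse(est, np.array([1.0, 0.0]))
mse_flipped = direction_mse(np.array([[-2.0, 0.0]]), np.array([1.0, 0.0]))
```

With sign alignment the squared distance for a single estimate equals 2 − 2|cos θ|, where θ is the angle between the estimated and the theoretical directions, so it ranges from 0 (same direction) to 2 (orthogonal directions).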

The next section gives the results of the simulations for the bidimensional case, illustrated by plots of directions; Section 4.2 shows the results when the dimension is .

4.1. Simulation Study for Bidimensional SMSN Distributions

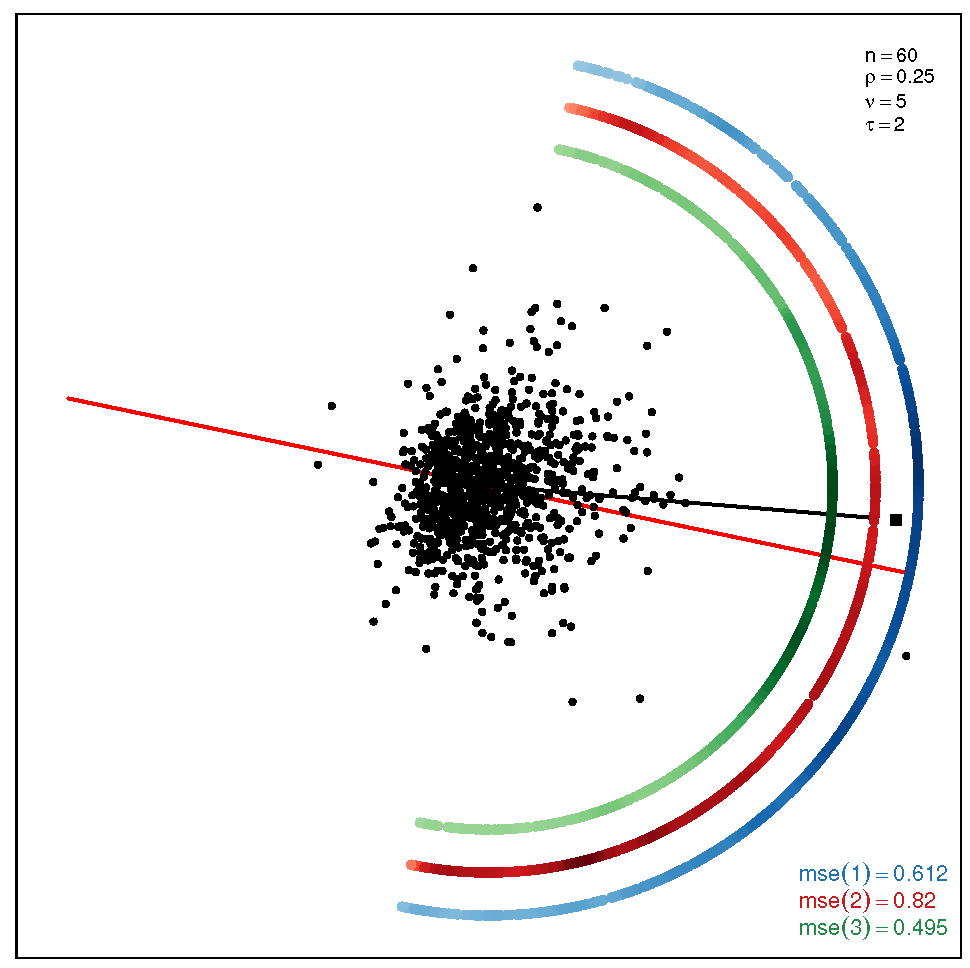

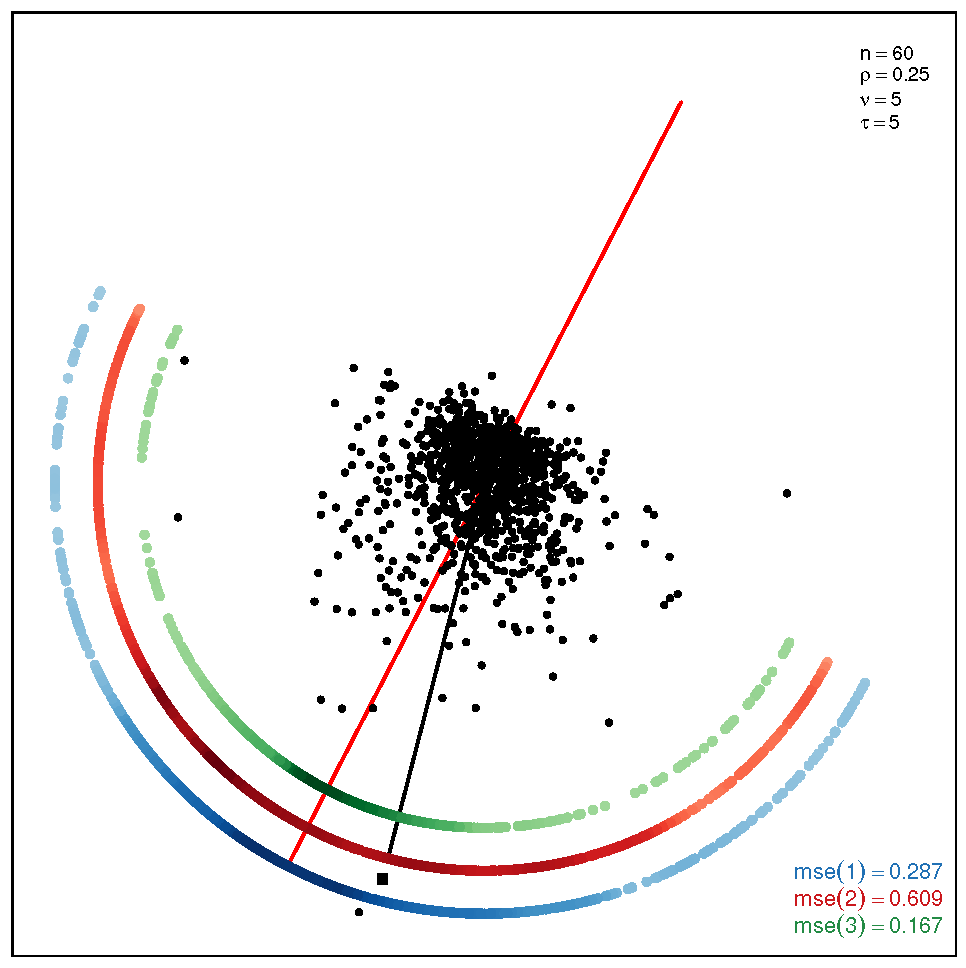

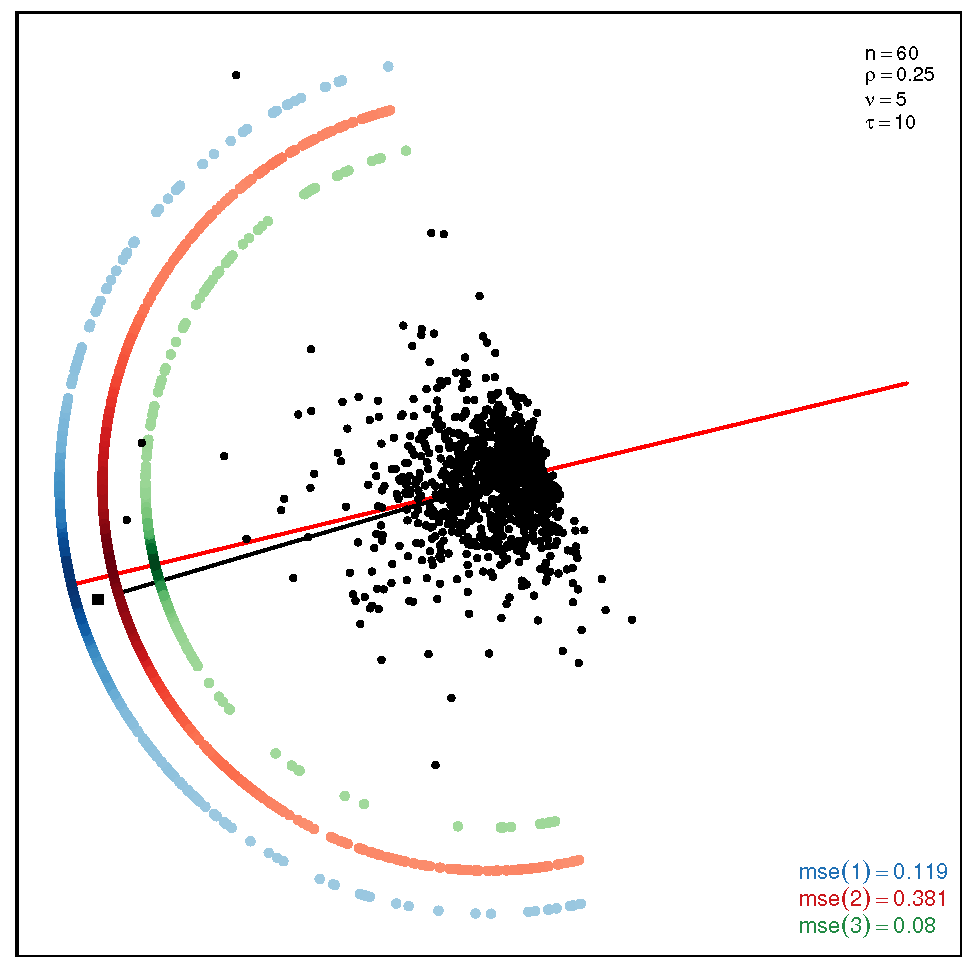

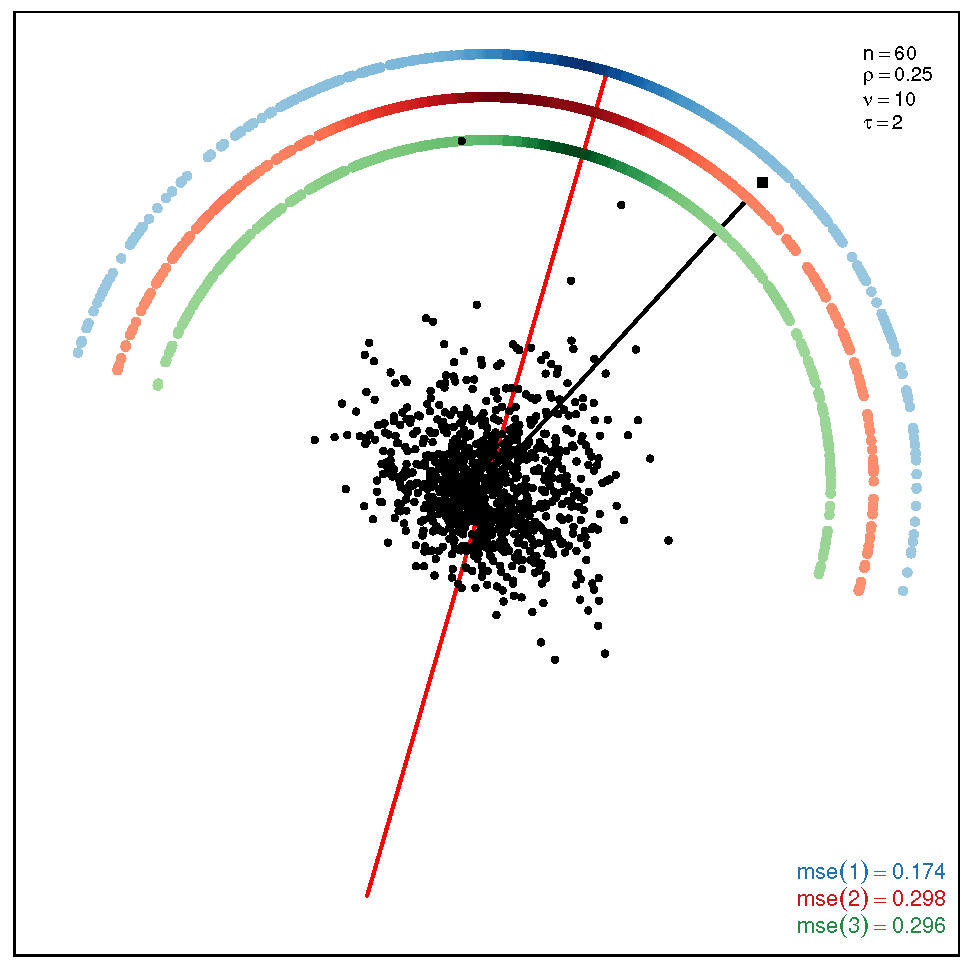

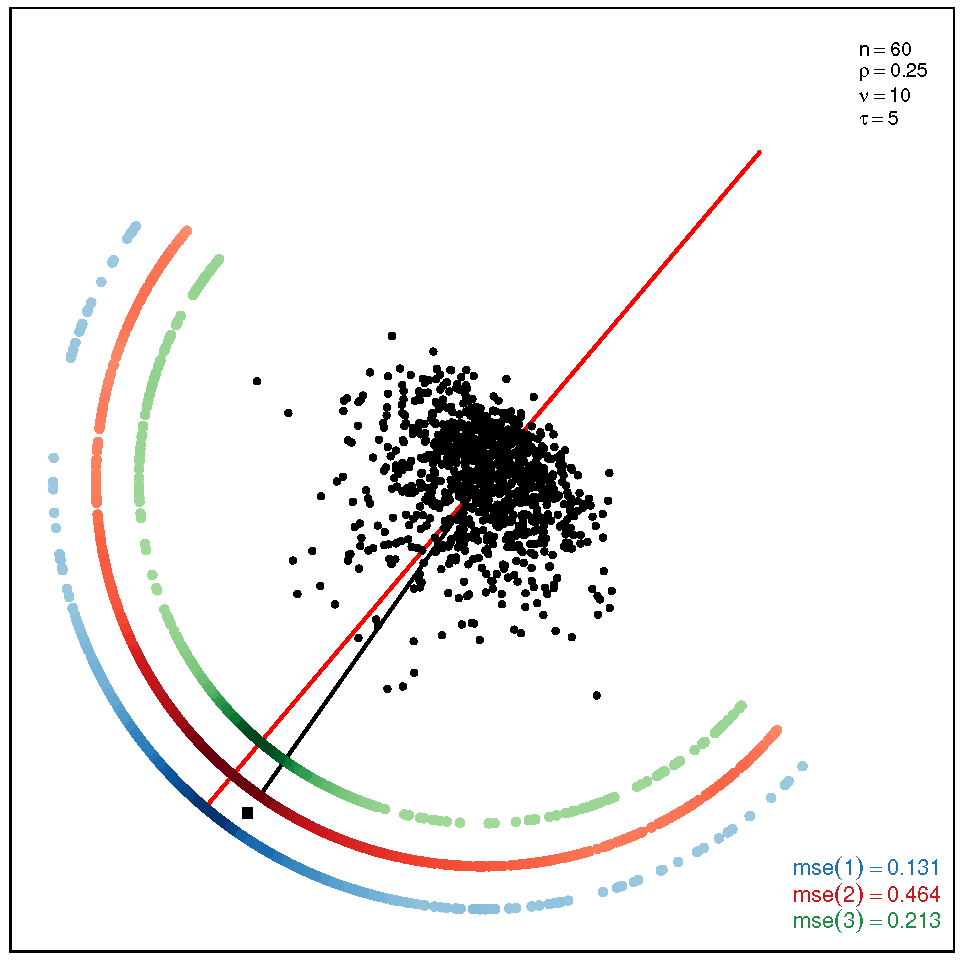

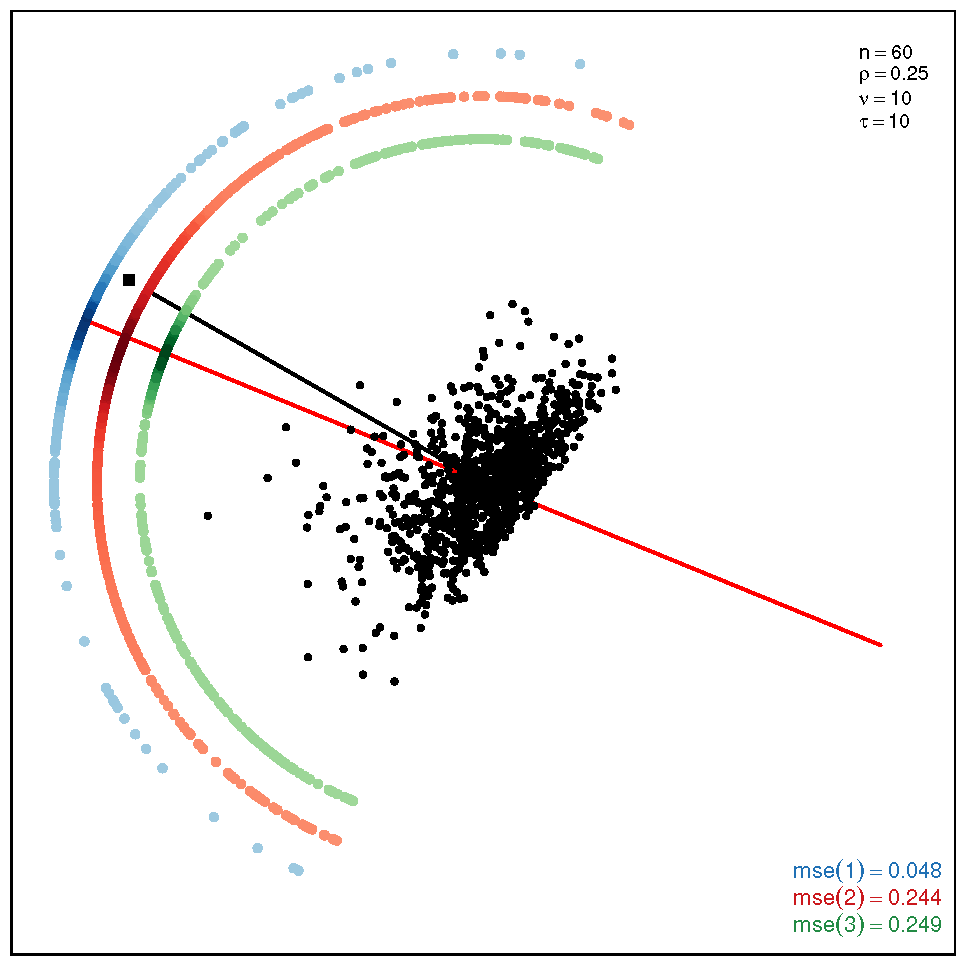

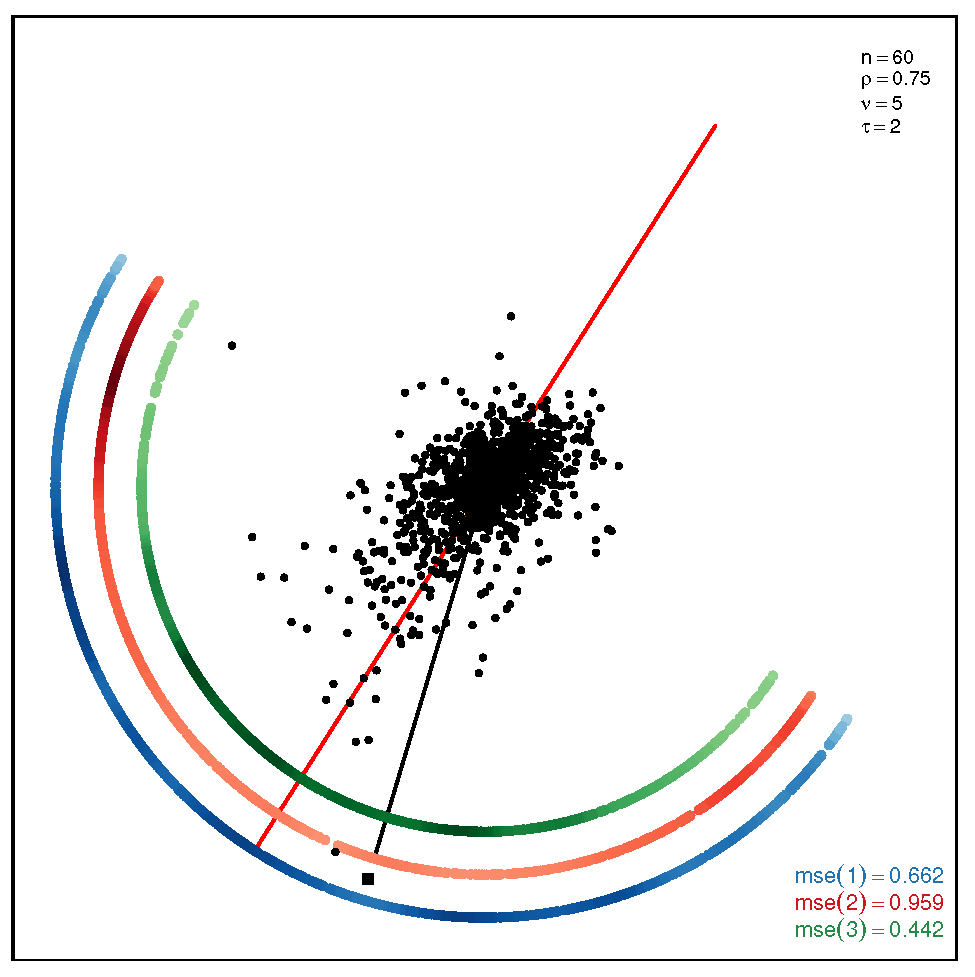

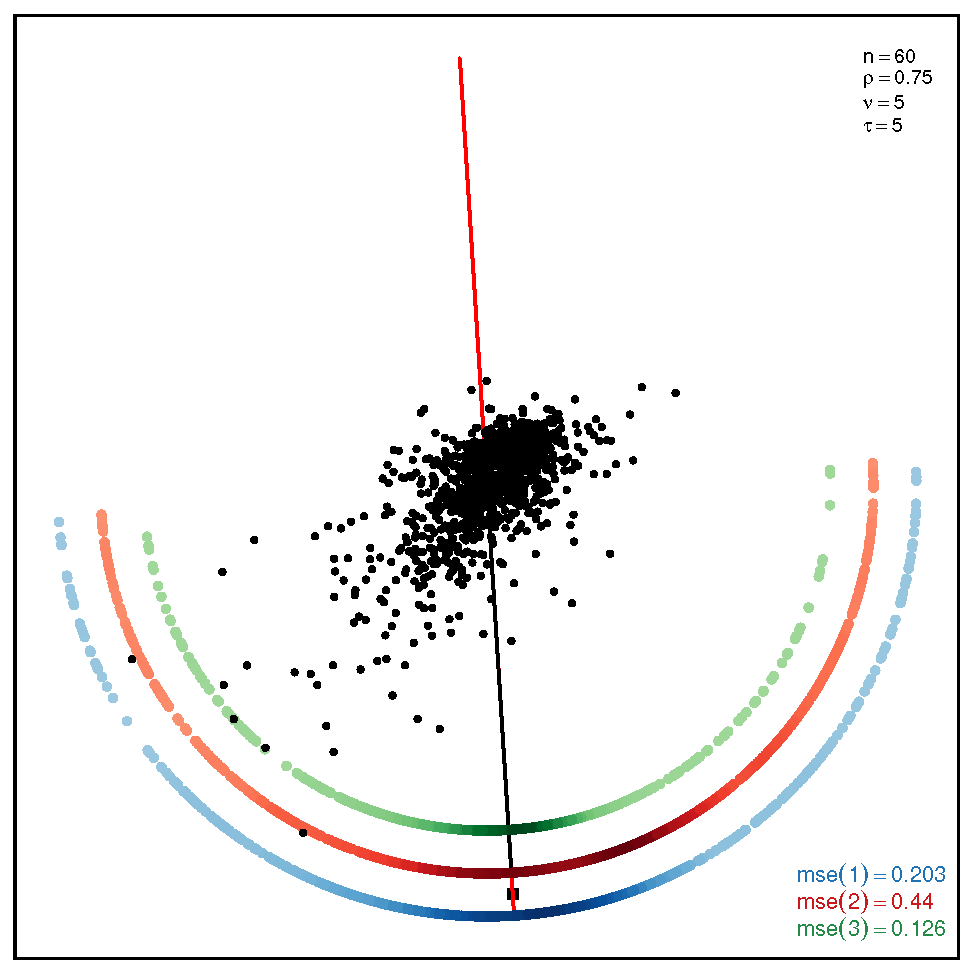

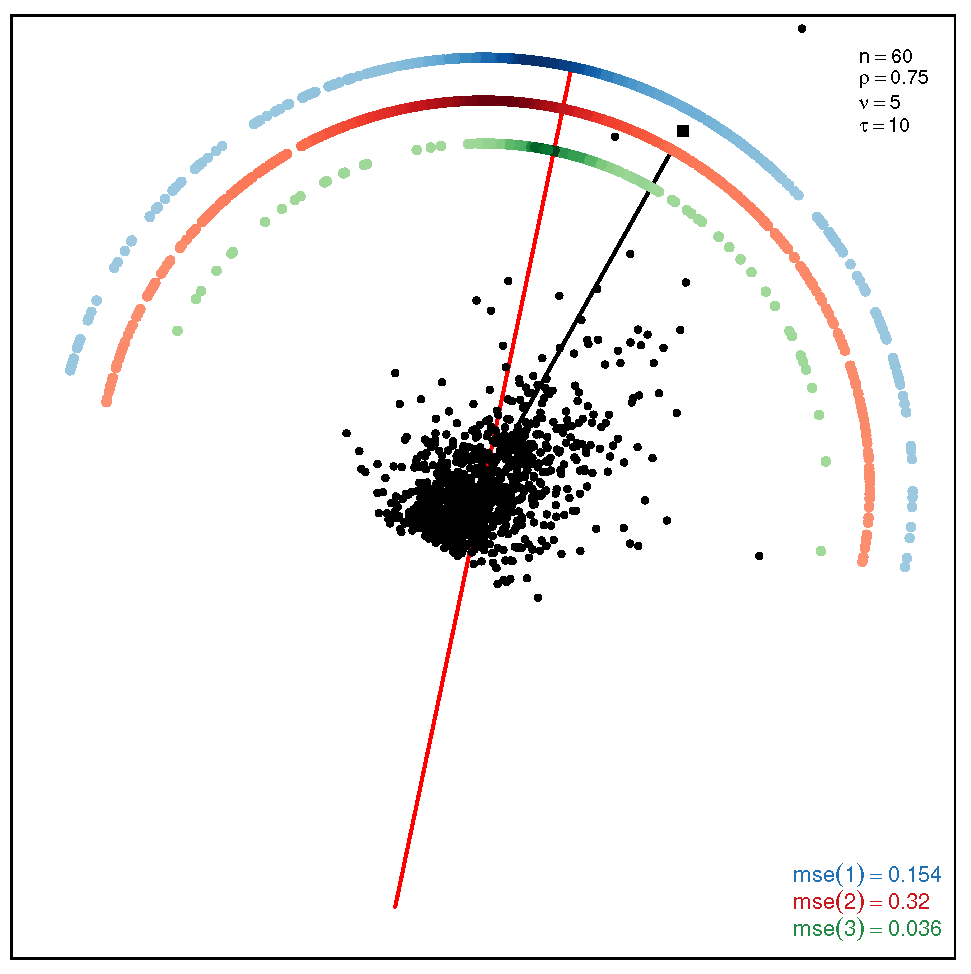

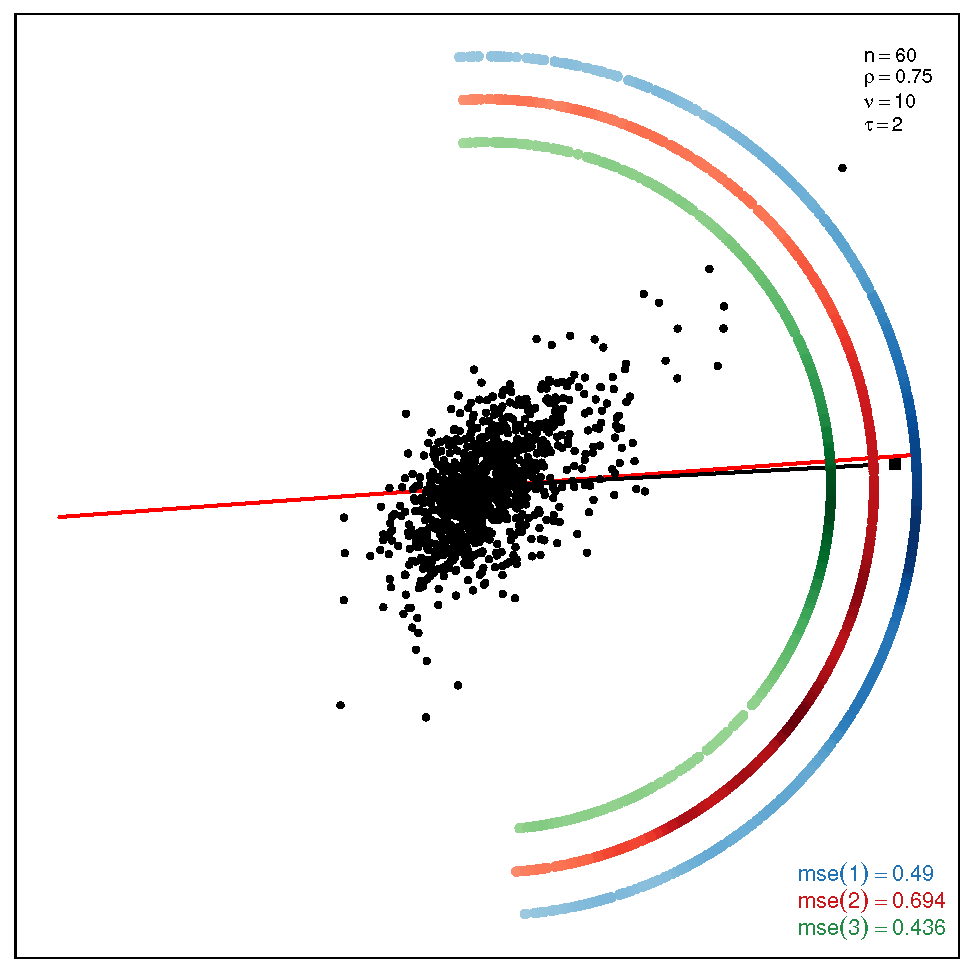

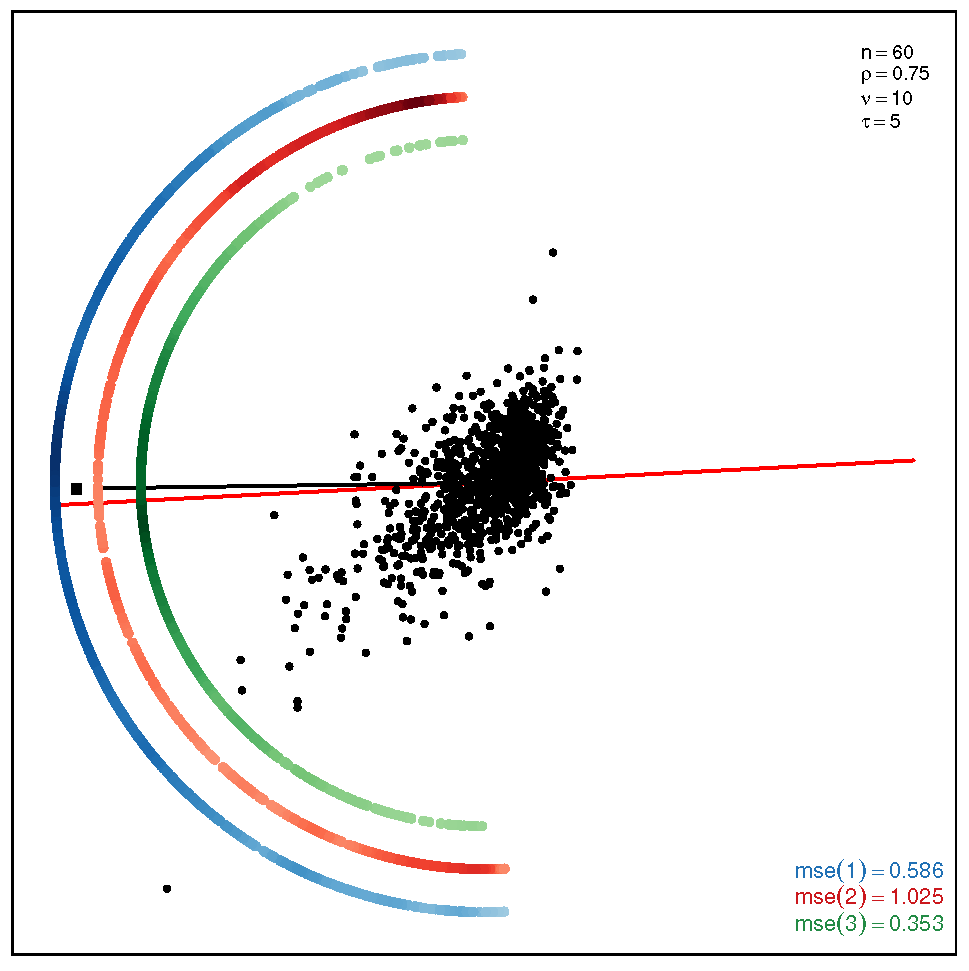

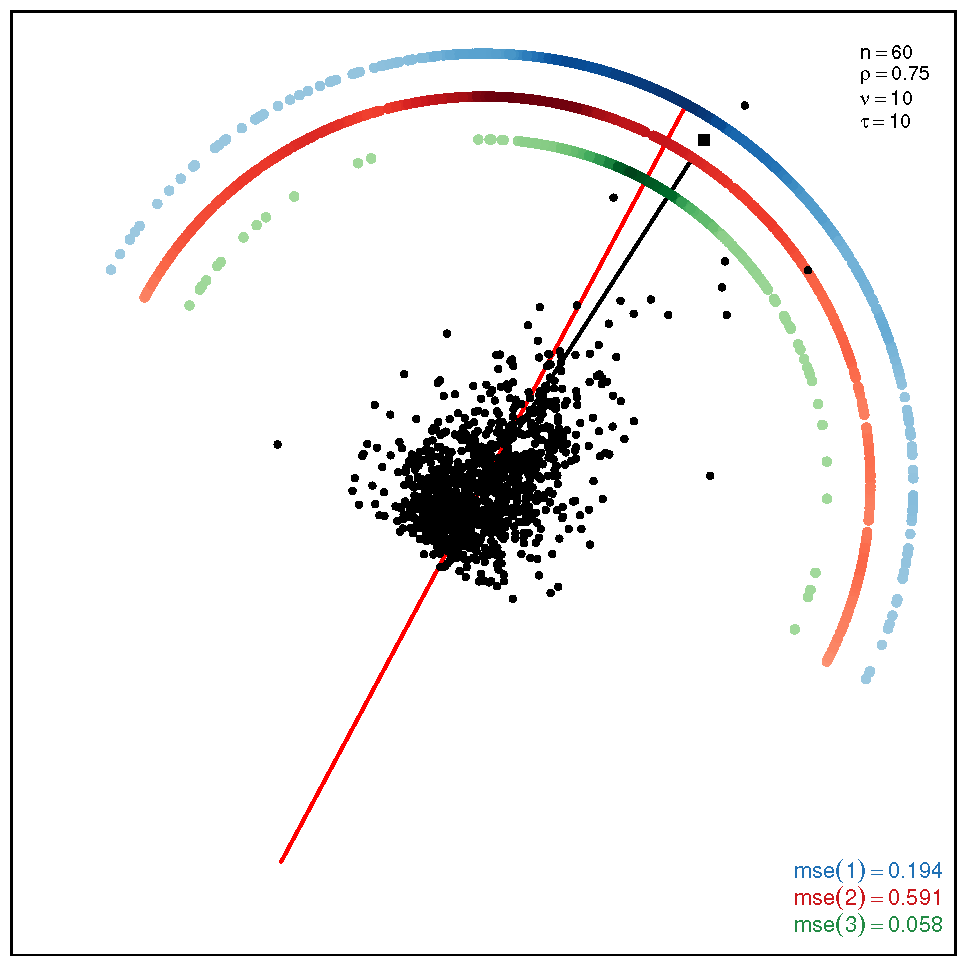

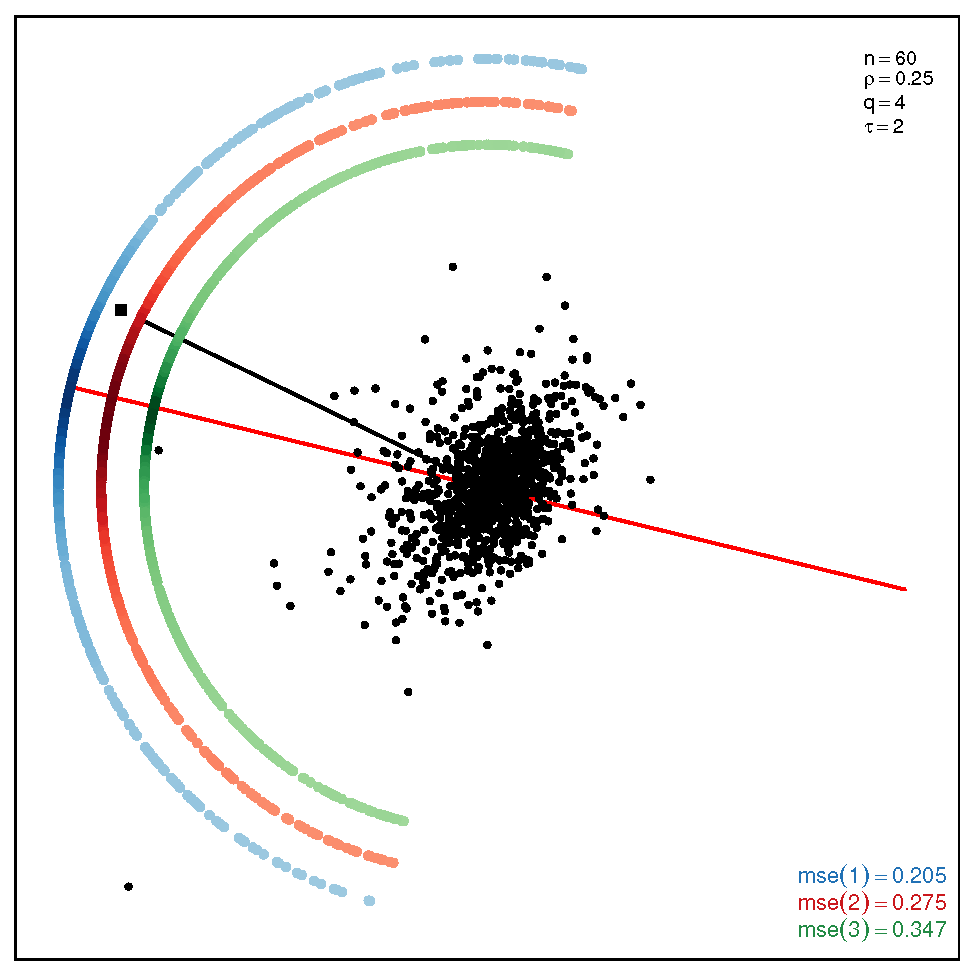

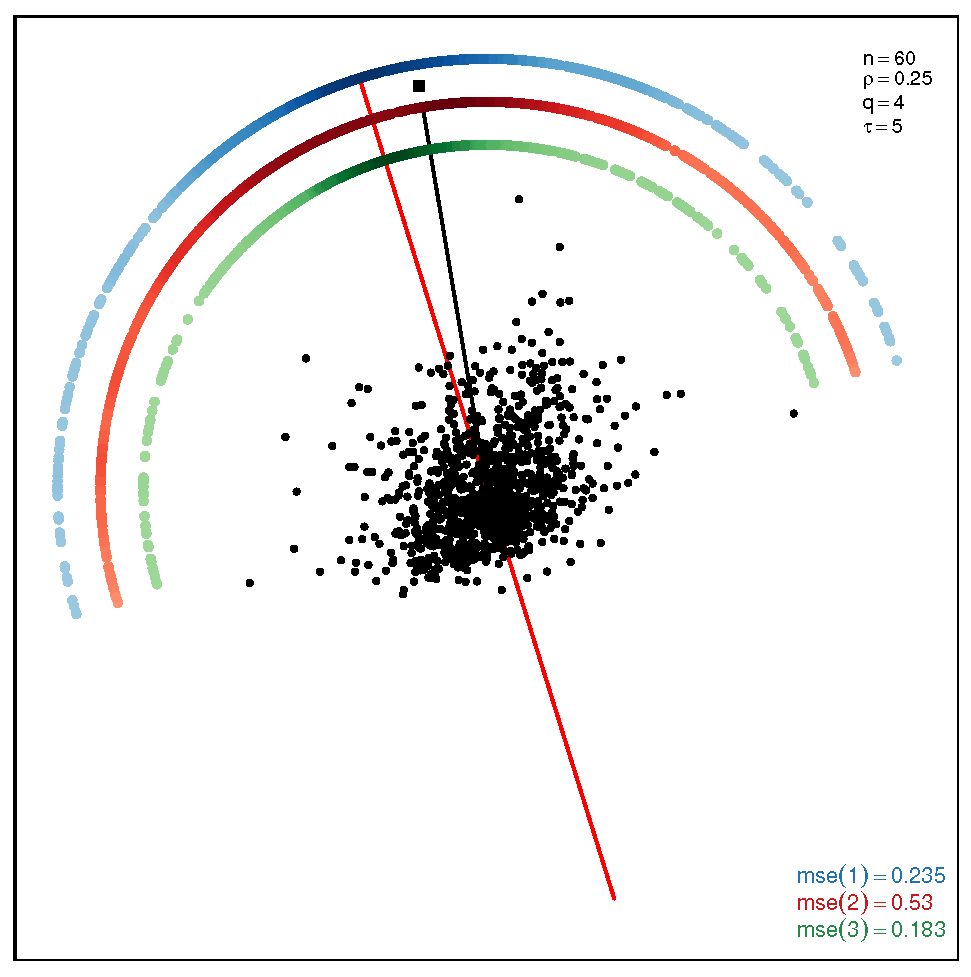

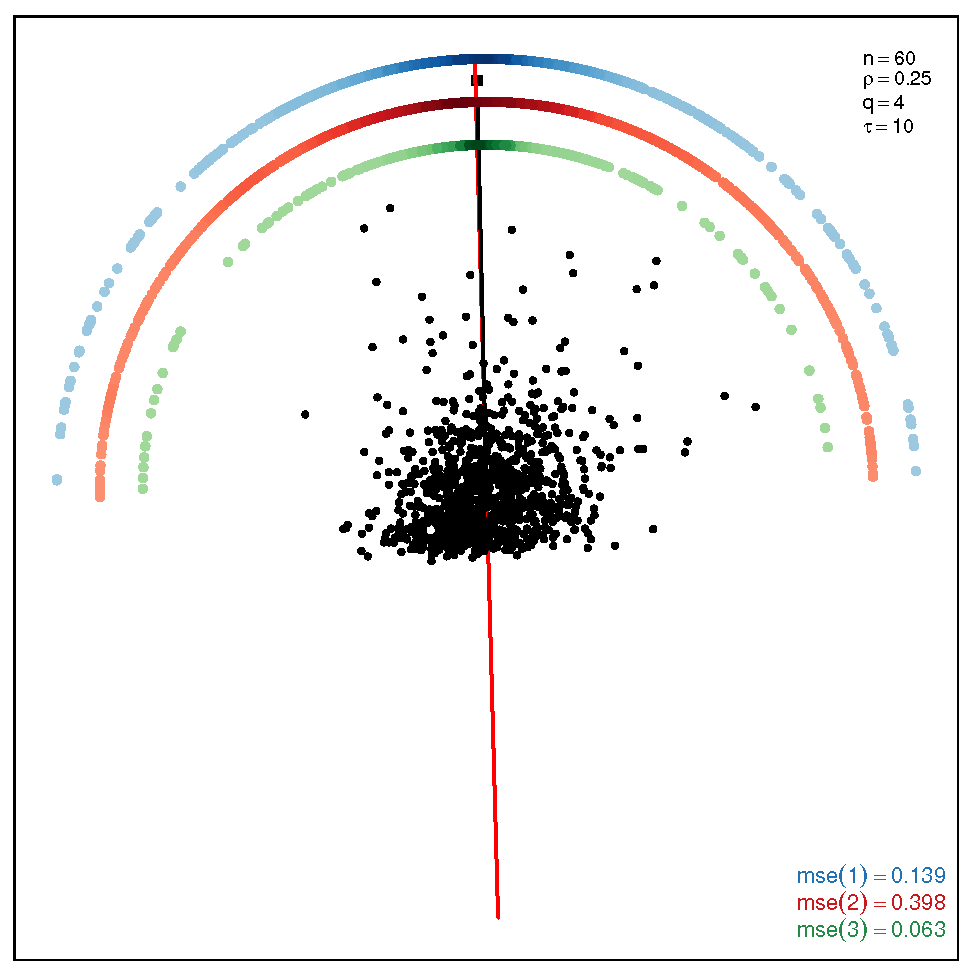

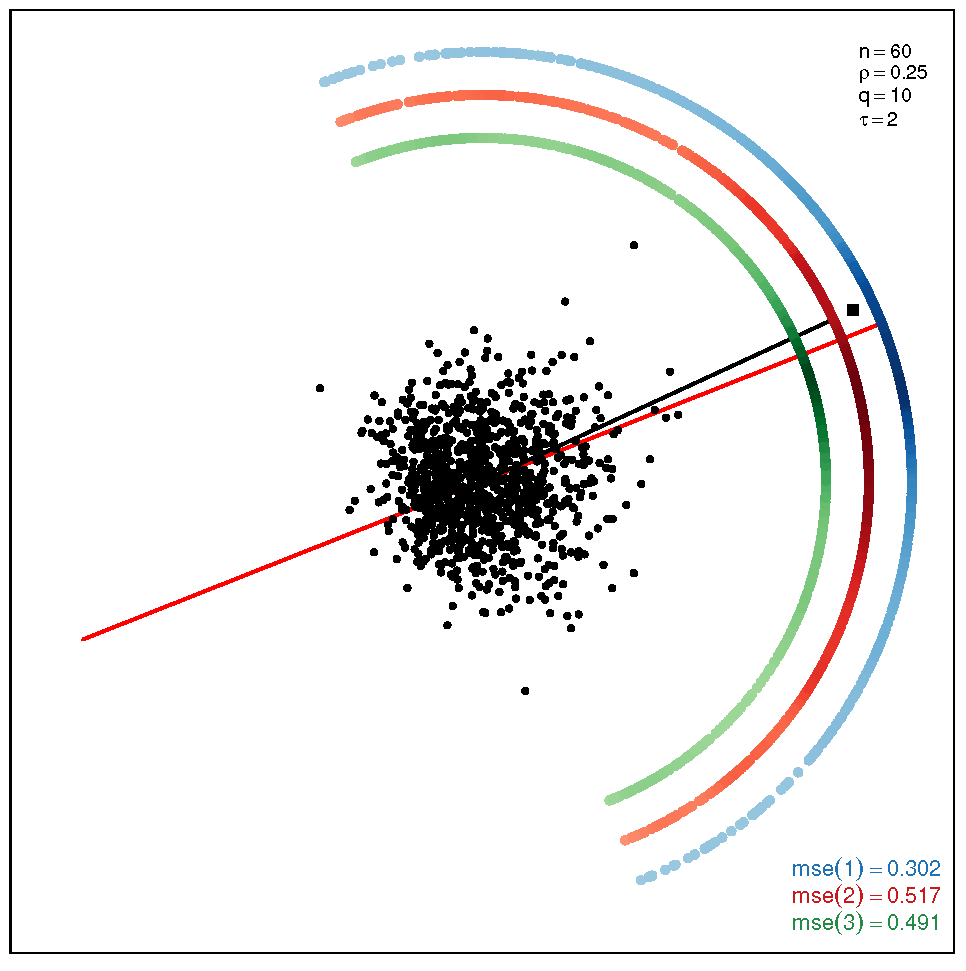

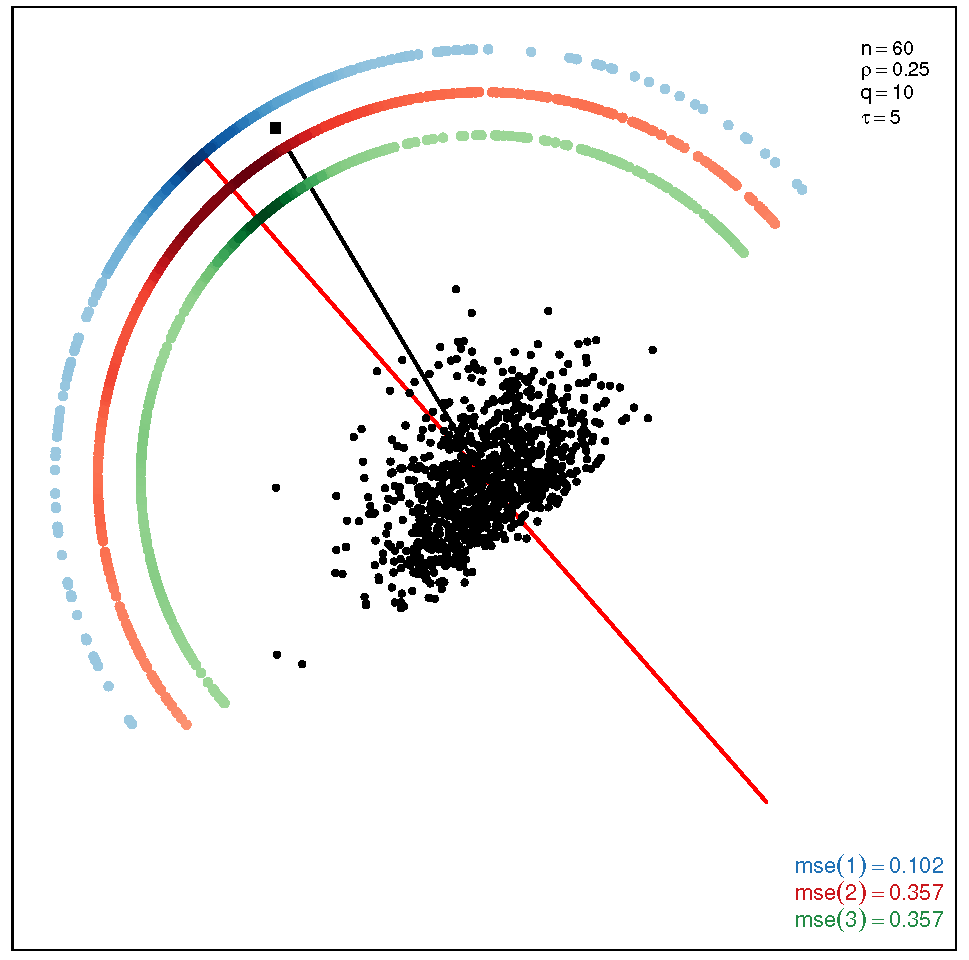

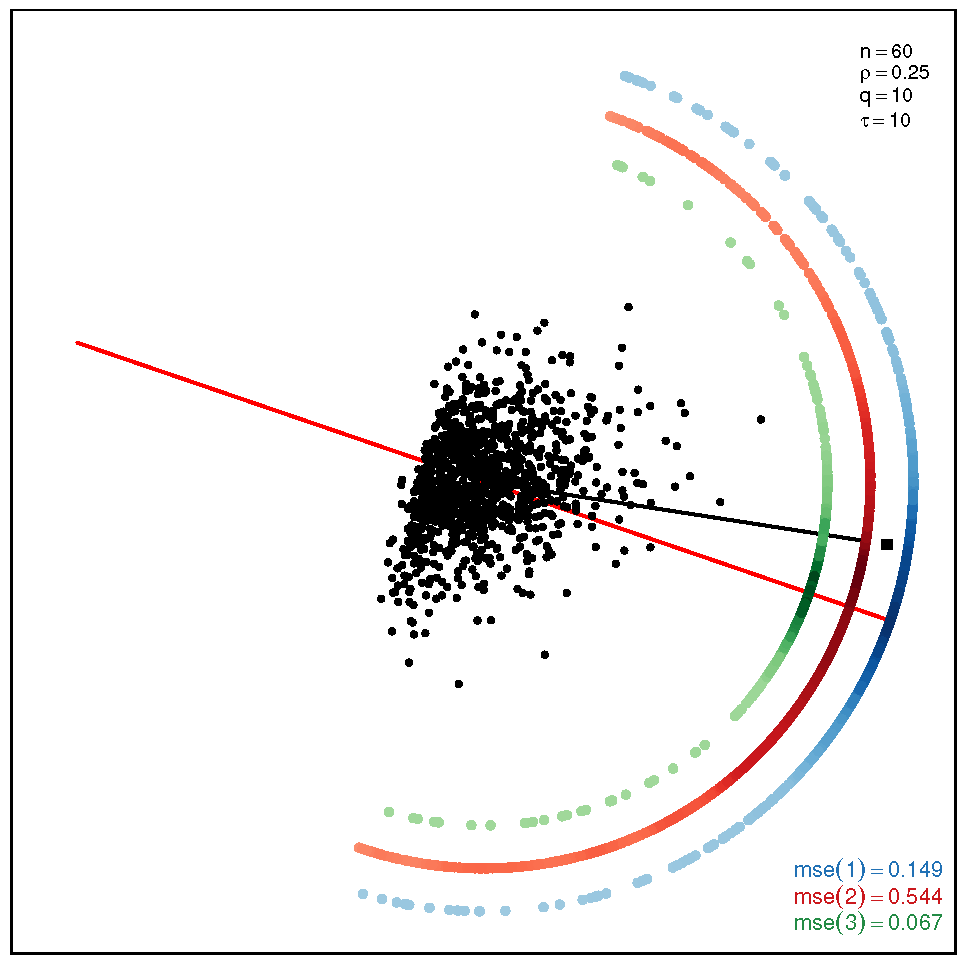

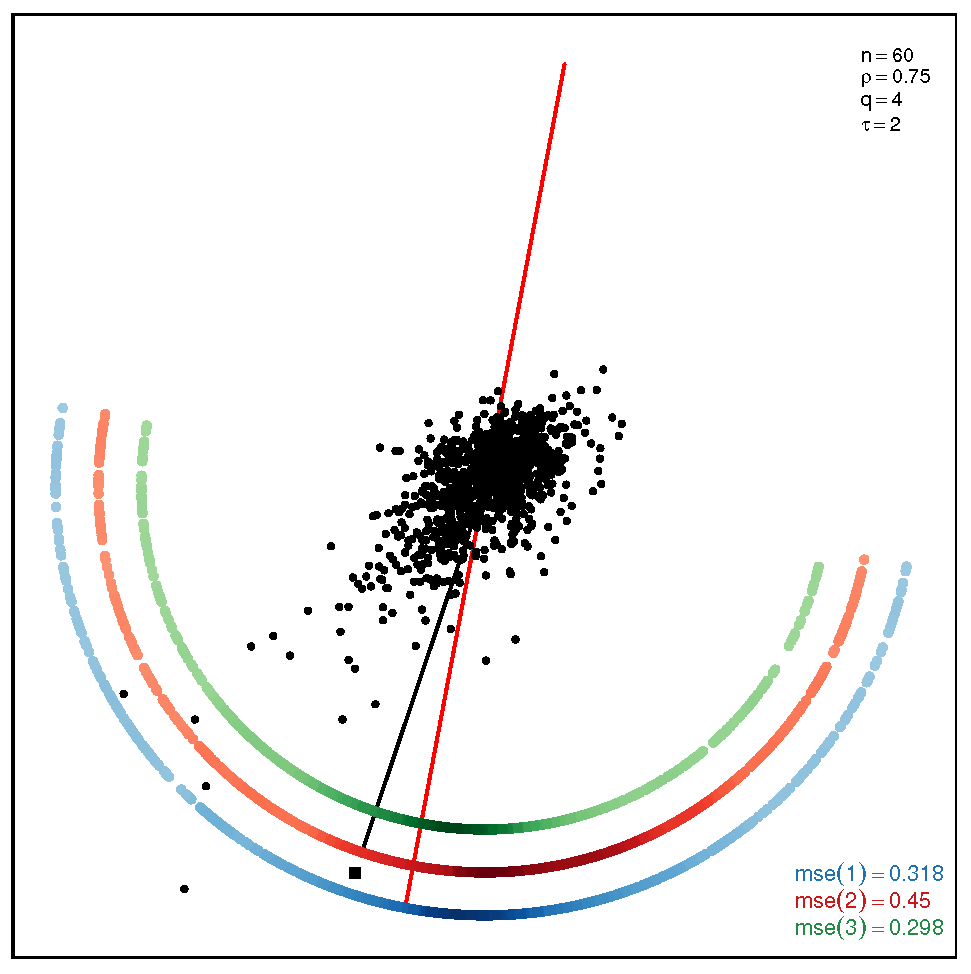

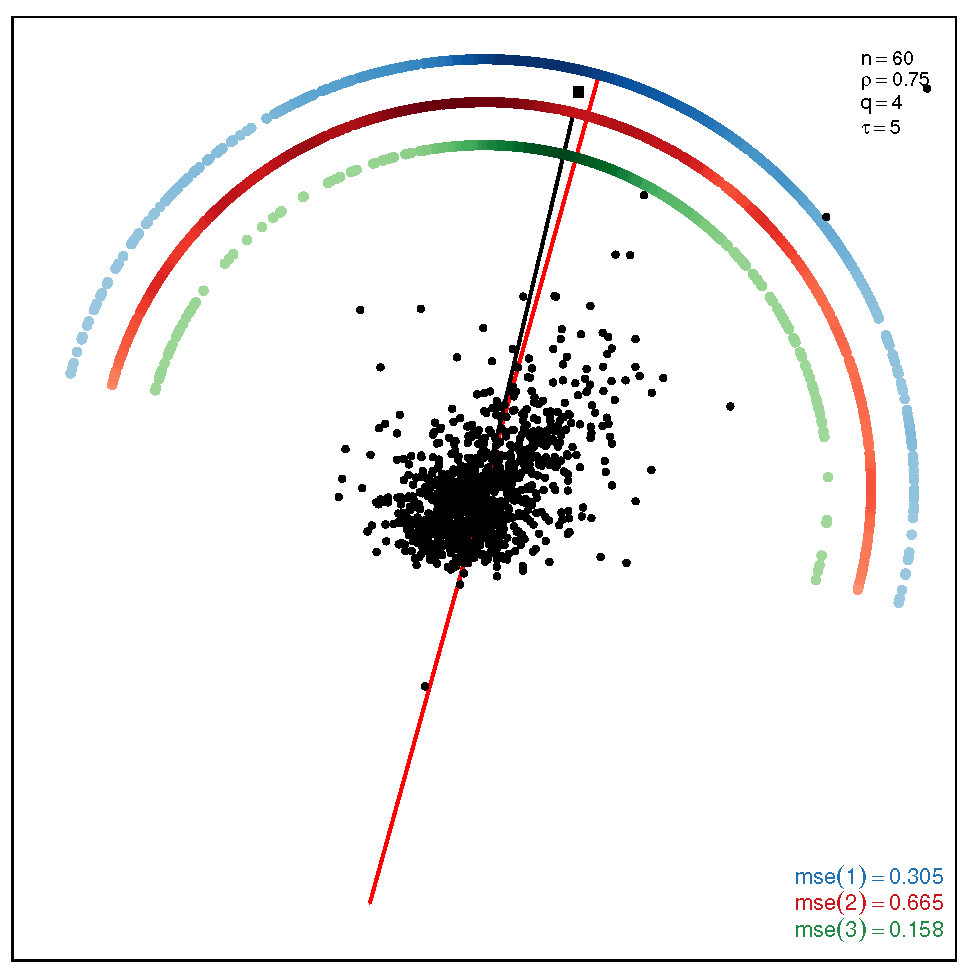

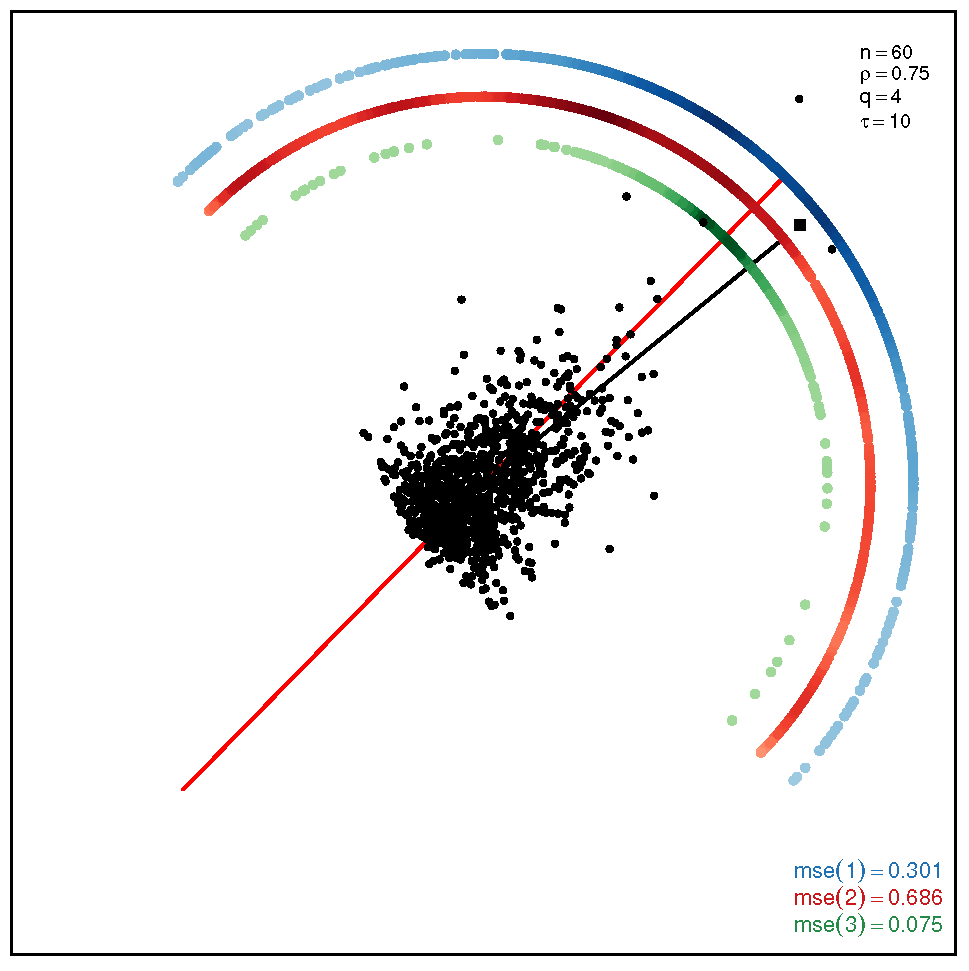

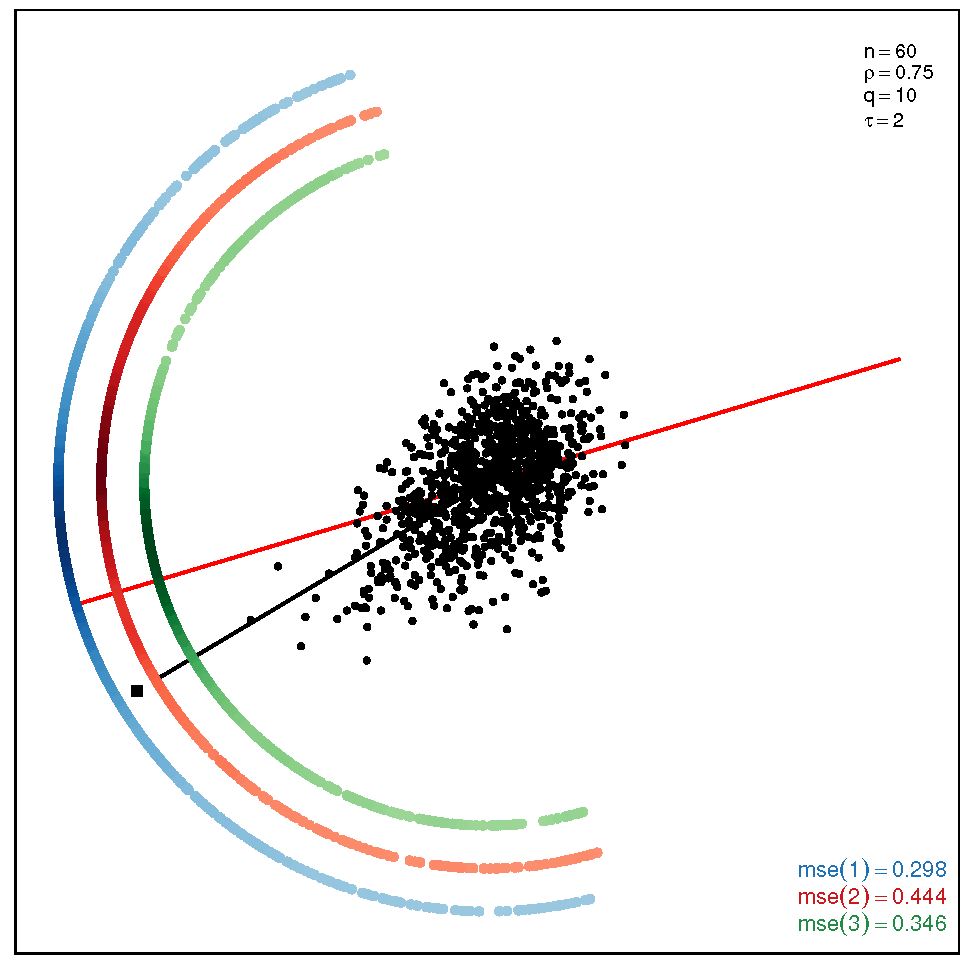

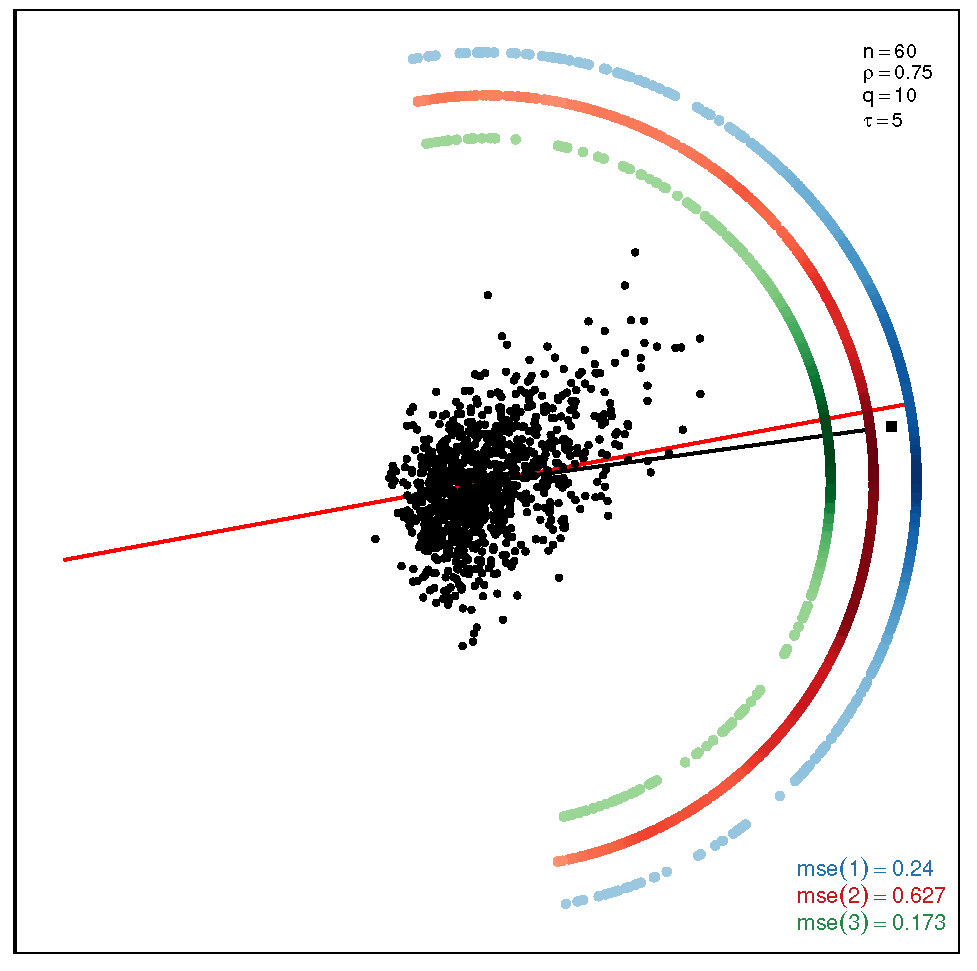

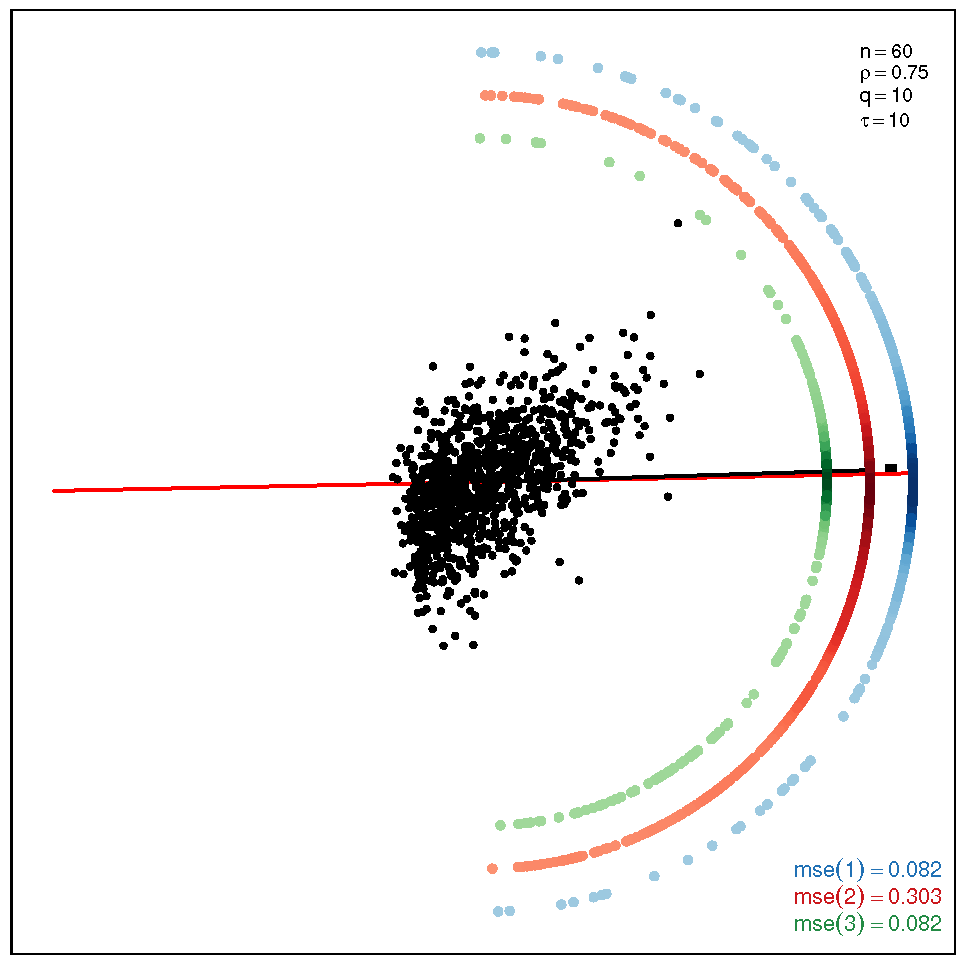

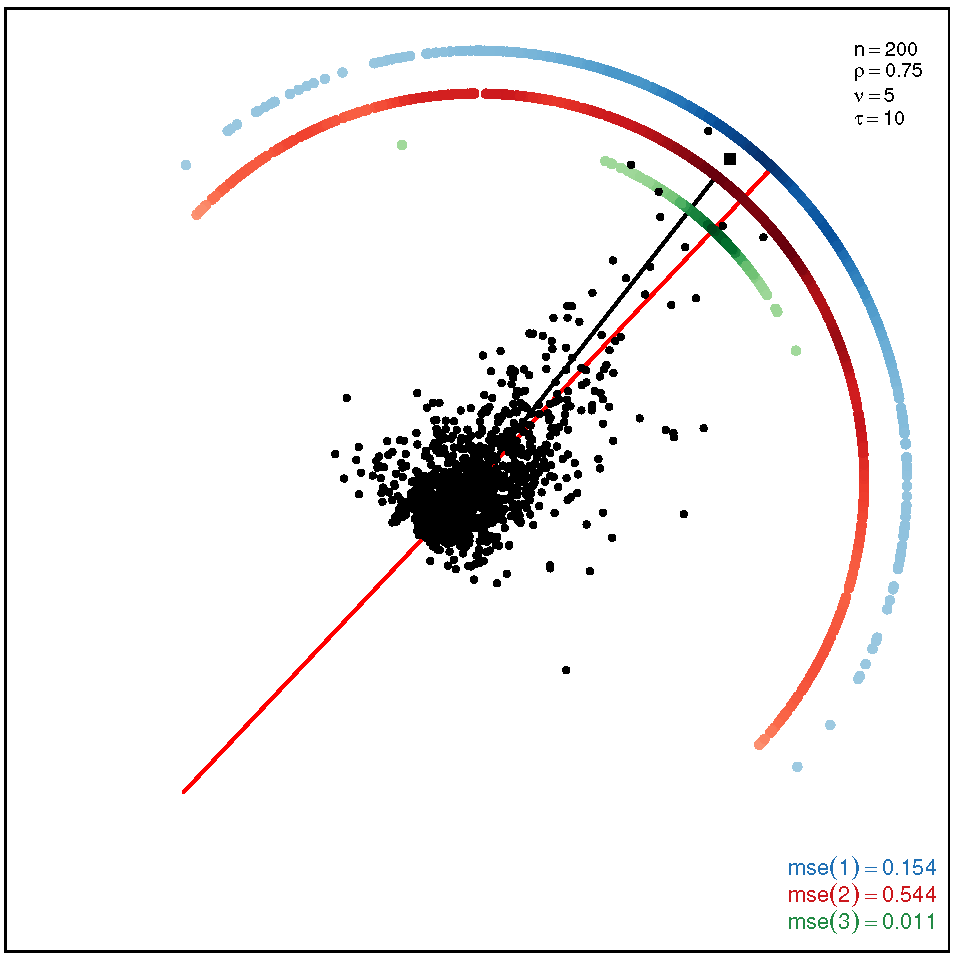

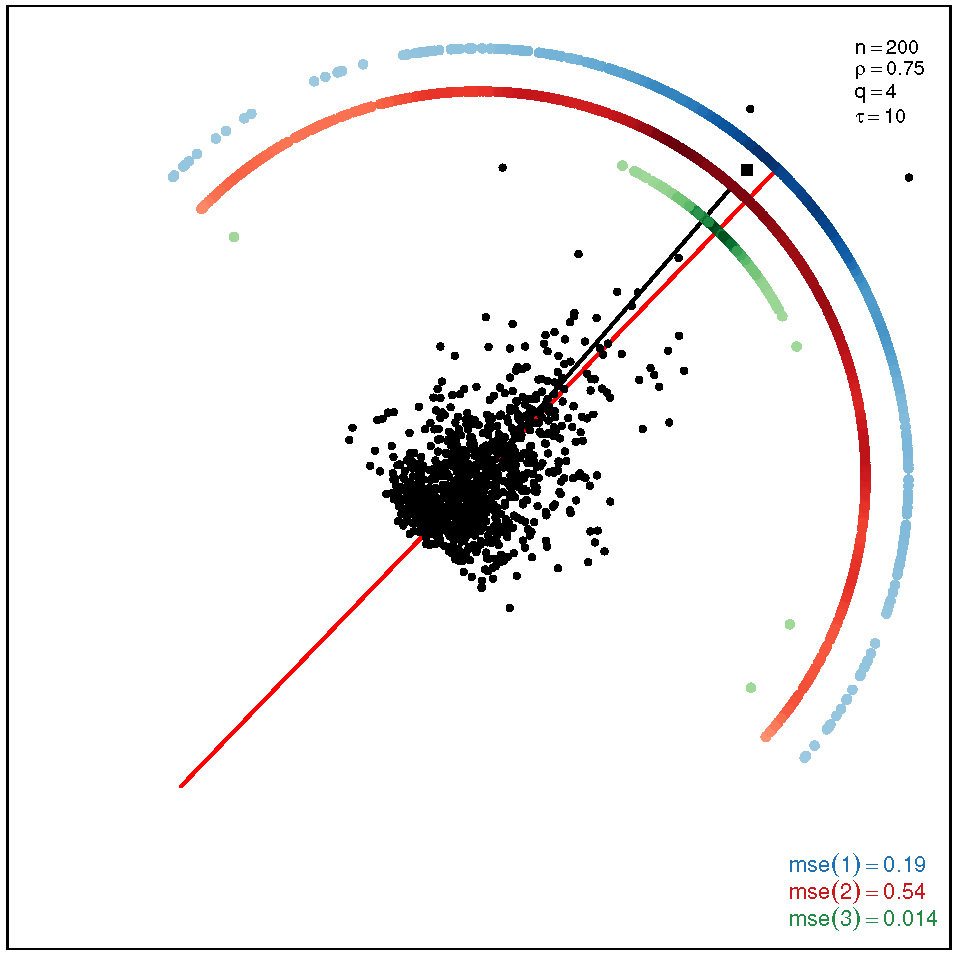

This section addresses the bidimensional case; the outputs of the simulations are summarized by means of plots of directions arranged in tables of figures that display the following information: the red and black lines represent the directions of the theoretical shape vectors and , respectively. The points in the coloured rings correspond to the directions estimated by the proposed methods, with the blue ring showing the estimates provided by M1, the red ring those by M2 and the green ring those by M3; their MSE values are displayed in the bottom right legend of each figure. Finally, the clouds of points for the simulated observations are also depicted for completeness.

In order to assess the performance of the estimation methods for the bidimensional case, the simulation assays are carried out taking a sample size which may be a representative choice of the small data sets arising in real studies. The plots depicted in Table 1 show the MSEs obtained for samples coming from a ST distribution when . Overall, we can observe that methods M1 and M3 are more accurate than M2. The outputs show that the errors are quite similar for all the methods in the scenario closer to the normal model, provided by the pair ; starting from this scenario, we can see that, as we inject asymmetry into the multivariate model, M1 outperforms methods M2 and M3 but its accuracy gets worse when we move to the heavy tail scenarios for ; in such a case, M3 provides the most accurate results (). On the other hand, the results displayed by the plots in Table 2 for the coefficient show that M3 is the most accurate method in all the situations, with its best performance () in the scenario which is furthest away from the normal distribution.

Table 1.

Plots with the theoretical and estimated directions under the bidimensional ST distribution when .

Table 2.

Plots with the theoretical and estimated directions under the bidimensional ST distribution when .

The results of the simulations under the SSL distribution are given by the plots displayed in Table 3 and Table 4. In this case, the accuracy of the estimation methods shows patterns similar to those observed under the ST model, with the exceptions of the non-normality pair at the bottom right corner, where M3 outperforms M1 and M2, and the pair , at the top right corner, where M2 and M3 are outperformed by M1. When the coefficient is equal to (see Table 4), we can observe once again that M3 gives more accurate results than M1 and M2.

Table 3.

Plots with the theoretical and estimated directions under the bidimensional SSL distribution when .

Table 4.

Plots with the theoretical and estimated directions under the bidimensional SSL distribution when .

We have run additional experiments for other sample sizes, not reported here for the sake of space. In order to illustrate their results, we provide a representative case when for the scenario with the highest asymmetry and tail weight under both multivariate models ST and SSL. The results are displayed by the plots appearing in the figures of Table 5; they show the remarkable superiority of method M3.

Table 5.

Plots with the theoretical and estimated directions under the bidimensional ST (left) and SSL (right) distributions for the highest asymmetry and tail weight levels when .

4.2. Simulation Study for SMSN Distributions when

We have carried out a wide range of experimental trials for dimensions greater than two. Here, we just provide the results obtained from a few simulation assays—for dimensions and sample sizes —which summarize and illustrate our main findings (see Table 6 and Table 7). As could be expected, we observe that, as the dimension increases, the accuracy of all the estimation methods deteriorates, with M3 being the most resistant method to the curse of dimensionality.

Table 6.

Results under the ST distribution. For each non-normality pair , dimensions of the data frame and coefficient , the cells contain the MSEs given by method 1 (first row), method 2 (second row) and method 3 (third row).

Table 7.

Results under the SSL distribution. For each non-normality pair , dimensions of the data frame and coefficient , the cells contain the MSEs given by method 1 (first row), method 2 (second row) and method 3 (third row).

Under the ST distribution, the results of Table 6 report the following findings: when and , the estimations given by methods M1 and M3 show similar competitive accuracies provided that , whereas the superiority of M3 can be observed for the sample size , especially in the case of a heavier tail weight . When we take , the superiority of M3 becomes more remarkable, as M1 tends to get worse while M3 gains in accuracy, with the lowest MSE appearing when in the furthest scenario from the normal model . When we move to the higher dimension , the errors increase to values greater than 1 in many cases, specifically in the scenarios closer to normality; we can also see that, as the asymmetry and tail weight increase, the accuracy of M1 and M3 improves, with the latter giving acceptable errors () at the sample size .

For the SSL distribution, the general patterns reported for the case of the ST model come up once again: all the estimation methods give similar accuracies in the scenarios close to normality, M1 and M3 have a good performance as the asymmetry increases, with a better behavior of M3 for the heavier tail weight; note that this pattern becomes more remarkable for the larger coefficient . Nevertheless, in this case, we can observe the negative impact of the dimension on the performance of M1, and particularly M2, methods which in most cases yield very large MSEs. Unlike them, M3 gives very good estimations with the minimum errors ranging in the interval .

Finally, we would like to stress that the curse of dimensionality has a smaller impact on the performance of method M3, as some other simulation experiments, not reported here for the sake of space, have shown. Here, we provide an illustrative example: when we consider a ST vector with dimension and parametric settings given by and , the values of the MSE for the sample size are (M1), (M2) and (M3).

5. Summary and Concluding Remarks

This paper has addressed the skewness-based PP problem when the underlying multivariate distribution of the input vector belongs to the family of SMSN distributions. We have presented skewness PP as an eigenvector problem based on either the third order cumulant matrix or well-known scatter matrices of the model; the connection between the resulting dominant eigenvectors and the shape vector that regulates the asymmetry of the multivariate model has been established, and this connection also sheds light on the interpretability of PP and the ICS method when approached from the parametric framework. The theoretical results point to three methods for estimating the skewness PP direction; the application to artificial data has allowed us to evaluate their performance and has also provided useful insights for comparing them.

This paper has also examined the role played by the shape vector of the model in assessing the multivariate asymmetry in a directional way. However, it is not clear to us how the tail weight and shape vector of the SMSN distribution act together to account for the multivariate non-normality. We conjecture that the issue should be addressed by examining the role of both parameters as indicators of non-normality in accordance with well-defined multivariate stochastic orderings along the lines of previous work [58,59,60]. To the best of the authors’ knowledge, the issue is still unexplored and may deserve future theoretical research to achieve a deeper understanding of their role as non-normality indicators in the SMSN family.

Author Contributions

Conceptualization, J.M.A. and H.N.; methodology, J.M.A. and H.N.; validation, J.M.A. and H.N.; formal analysis, J.M.A. and H.N.; investigation, J.M.A. and H.N.; writing—original draft preparation, J.M.A. and H.N.; writing—review and editing, J.M.A. and H.N.; visualization, J.M.A. and H.N.; funding acquisition, J.M.A. and H.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Universidad Nacional de Educación a Distancia (UNED), Spain.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors wish to thank the reviewers for their helpful comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Kruskal, J.B. Toward a practical method which helps uncover the structure of a set of multivariate observations by finding the linear transformation which optimizes a new “index of condensation”. In Statistical Computation; Elsevier: Amsterdam, The Netherlands, 1969; pp. 427–440.

2. Friedman, J.H.; Tukey, J.W. A Projection Pursuit Algorithm for Exploratory Data Analysis. IEEE Trans. Comput. 1974, 23, 881–890.

3. Huber, P.J. Projection Pursuit. Ann. Stat. 1985, 13, 435–475.

4. Jones, M.C.; Sibson, R. What is Projection Pursuit? J. R. Stat. Soc. Ser. A 1987, 150, 1–37.

5. Friedman, J.H. Exploratory Projection Pursuit. J. Am. Stat. Assoc. 1987, 82, 249–266.

6. Hall, P. On Polynomial-Based Projection Indices for Exploratory Projection Pursuit. Ann. Stat. 1989, 17, 589–605.

7. Cook, D.; Buja, A.; Cabrera, J. Projection Pursuit Indexes Based on Orthonormal Function Expansions. J. Comput. Graph. Stat. 1993, 2, 225–250.

8. Peña, D.; Prieto, F.J. Cluster Identification Using Projections. J. Am. Stat. Assoc. 2001, 96, 1433–1445.

9. Rodriguez Martinez, E.; Goulermas, J.; Mu, T.; Ralph, J. Automatic Induction of Projection Pursuit Indices. IEEE Trans. Neural Netw. 2010, 21, 1281–1295.

10. Hou, S.; Wentzell, P. Re-centered kurtosis as a projection pursuit index for multivariate data analysis. J. Chemom. 2014, 28, 370–384.

11. Loperfido, N. Skewness-Based Projection Pursuit: A Computational Approach. Comput. Stat. Data Anal. 2018, 120, 42–57.

12. Loperfido, N. Kurtosis-based projection pursuit for outlier detection in financial time series. Eur. J. Financ. 2020, 26, 142–164.

13. Posse, C. Projection pursuit exploratory data analysis. Comput. Stat. Data Anal. 1995, 20, 669–687.

14. De Lathauwer, L.; De Moor, B.; Vandewalle, J. On the best rank-1 and rank-(R1, R2, …, RN) approximation of higher-order tensors. SIAM J. Matrix Anal. Appl. 2000, 21, 1324–1342.

15. Kofidis, E.; Regalia, P.A. On the Best Rank-1 Approximation of Higher-Order Supersymmetric Tensors. SIAM J. Matrix Anal. Appl. 2002, 23, 863–884.

16. Loperfido, N. A new kurtosis matrix, with statistical applications. Linear Algebra Its Appl. 2017, 512, 1–17.

17. Franceschini, C.; Loperfido, N. An Algorithm for Finding Projections with Extreme Kurtosis. In Studies in Theoretical and Applied Statistics; Perna, C., Pratesi, M., Ruiz-Gazen, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; pp. 61–70.

18. Loperfido, N. Canonical transformations of skew-normal variates. TEST 2010, 19, 146–165.

19. Arevalillo, J.M.; Navarro, H. A note on the direction maximizing skewness in multivariate skew-t vectors. Stat. Probab. Lett. 2015, 96, 328–332.

20. Arevalillo, J.M.; Navarro, H. Skewness-Kurtosis Model-Based Projection Pursuit with Application to Summarizing Gene Expression Data. Mathematics 2021, 9, 954.

21. Kim, H.M.; Kim, C. Moments of scale mixtures of skew-normal distributions and their quadratic forms. Commun. Stat. Theory Methods 2017, 46, 1117–1126.

22. Arevalillo, J.M.; Navarro, H. Data projections by skewness maximization under scale mixtures of skew-normal vectors. Adv. Data Anal. Classif. 2020, 14, 435–461.

23. Capitanio, A. On the canonical form of scale mixtures of skew-normal distributions. Statistica 2020, 80, 145–160.

24. Malkovich, J.F.; Afifi, A.A. On Tests for Multivariate Normality. J. Am. Stat. Assoc. 1973, 68, 176–179.

25. Azzalini, A.; Capitanio, A. Statistical applications of the multivariate skew normal distribution. J. R. Stat. Soc. Ser. B 1999, 61, 579–602.

26. Azzalini, A. The Skew-normal Distribution and Related Multivariate Families. Scand. J. Stat. 2005, 32, 159–188.

27. Contreras-Reyes, J.E.; Arellano-Valle, R.B. Kullback–Leibler Divergence Measure for Multivariate Skew-Normal Distributions. Entropy 2012, 14, 1606–1626.

28. Balakrishnan, N.; Scarpa, B. Multivariate measures of skewness for the skew-normal distribution. J. Multivar. Anal. 2012, 104, 73–87.

29. Balakrishnan, N.; Capitanio, A.; Scarpa, B. A test for multivariate skew-normality based on its canonical form. J. Multivar. Anal. 2014, 128, 19–32.

30. Azzalini, A.; Capitanio, A. The Skew-Normal and Related Families; IMS Monographs; Cambridge University Press: Cambridge, UK, 2014.

31. González-Farías, G.; Domínguez-Molina, A.; Gupta, A.K. Additive properties of skew normal random vectors. J. Stat. Plan. Inference 2004, 126, 521–534.

32. González-Farías, G.; Domínguez-Molina, A.; Gupta, A.K. The closed skew-normal distribution. In Skew-Elliptical Distributions and Their Applications: A Journey Beyond Normality; Genton, M.G., Ed.; Chapman & Hall/CRC: Boca Raton, FL, USA, 2004; Chapter 2; pp. 25–42.

33. Loperfido, N. Generalized skew-normal distributions. In Skew-Elliptical Distributions and Their Applications: A Journey Beyond Normality; Genton, M.G., Ed.; Chapman & Hall/CRC: Boca Raton, FL, USA, 2004; Chapter 4; pp. 65–80.

34. Lachos, V.H.; Labra, F.V.; Bolfarine, H.; Ghosh, P. Multivariate measurement error models based on scale mixtures of the skew–normal distribution. Statistics 2010, 44, 541–556.

35. Vilca, F.; Balakrishnan, N.; Zeller, C.B. Multivariate Skew-Normal Generalized Hyperbolic distribution and its properties. J. Multivar. Anal. 2014, 128, 73–85.

36. Kahrari, F.; Rezaei, M.; Yousefzadeh, F.; Arellano-Valle, R. On the multivariate skew-normal-Cauchy distribution. Stat. Probab. Lett. 2016, 117, 80–88.

37. Kahrari, F.; Arellano-Valle, R.; Rezaei, M.; Yousefzadeh, F. Scale mixtures of skew-normal-Cauchy distributions. Stat. Probab. Lett. 2017, 126, 1–6.

38. Arellano-Valle, R.B.; Ferreira, C.S.; Genton, M.G. Scale and shape mixtures of multivariate skew-normal distributions. J. Multivar. Anal. 2018, 166, 98–110.

39. Ogasawara, H. A non-recursive formula for various moments of the multivariate normal distribution with sectional truncation. J. Multivar. Anal. 2021, 183, 104729.

40. Azzalini, A.; Dalla Valle, A. The multivariate skew-normal distribution. Biometrika 1996, 83, 715–726.

41. Branco, M.D.; Dey, D.K. A General Class of Multivariate Skew-Elliptical Distributions. J. Multivar. Anal. 2001, 79, 99–113.

42. Kim, H.M. A note on scale mixtures of skew normal distribution. Stat. Probab. Lett. 2008, 78, 1694–1701.

43. Basso, R.M.; Lachos, V.H.; Cabral, C.R.B.; Ghosh, P. Robust mixture modeling based on scale mixtures of skew-normal distributions. Comput. Stat. Data Anal. 2010, 54, 2926–2941.

44. Zeller, C.B.; Cabral, C.R.B.; Lachos, V.H. Robust mixture regression modeling based on scale mixtures of skew-normal distributions. TEST 2016, 25, 375–396.

45. Lachos, V.H.; Garay, A.M.; Cabral, C.R.B. Moments of truncated scale mixtures of skew-normal distributions. Braz. J. Probab. Stat. 2020, 34, 478–494.

46. Azzalini, A.; Capitanio, A. Distributions generated by perturbation of symmetry with emphasis on a multivariate skew t-distribution. J. R. Stat. Soc. Ser. B 2003, 65, 367–389.

47. Wang, J.; Genton, M.G. The multivariate skew-slash distribution. J. Stat. Plan. Inference 2006, 136, 209–220.

48. Loperfido, N. Skewness and the linear discriminant function. Stat. Probab. Lett. 2013, 83, 93–99.

49. Kollo, T.; von Rosen, D. Advanced Multivariate Statistics with Matrices; Mathematics and Its Applications; Springer: Dordrecht, The Netherlands, 2005.

50. Christiansen, M.C.; Loperfido, N. Improved Approximation of the Sum of Random Vectors by the Skew Normal Distribution. J. Appl. Probab. 2014, 51, 466–482.

51. Qi, L. Rank and eigenvalues of a supersymmetric tensor, the multivariate homogeneous polynomial and the algebraic hypersurface it defines. J. Symb. Comput. 2006, 41, 1309–1327.

52. Cardoso, J.F. Source separation using higher order moments. In Proceedings of the International Conference on Acoustics, Speech, and Signal Processing, Glasgow, UK, 23–26 May 1989; pp. 2109–2112.

53. Tyler, D.E.; Critchley, F.; Dümbgen, L.; Oja, H. Invariant co-ordinate selection. J. R. Stat. Soc. Ser. B 2009, 71, 549–592.

54. Kent, J.T. Discussion on the paper by Tyler, Critchley, Dumbgen & Oja: Invariant co-ordinate selection. J. R. Stat. Soc. Ser. B 2009, 71, 575–576.

55. Peña, D.; Prieto, F.J.; Viladomat, J. Eigenvectors of a kurtosis matrix as interesting directions to reveal cluster structure. J. Multivar. Anal. 2010, 101, 1995–2007.

56. Alashwali, F.; Kent, J.T. The use of a common location measure in the invariant coordinate selection and projection pursuit. J. Multivar. Anal. 2016, 152, 145–161.

57. Nordhausen, K.; Oja, H.; Tyler, D.E. Tools for Exploring Multivariate Data: The Package ICS. J. Stat. Softw. 2008, 28, 1–31.

58. Wang, J. A family of kurtosis orderings for multivariate distributions. J. Multivar. Anal. 2009, 100, 509–517.

59. Arevalillo, J.M.; Navarro, H. A study of the effect of kurtosis on discriminant analysis under elliptical populations. J. Multivar. Anal. 2012, 107, 53–63.

60. Arevalillo, J.M.; Navarro, H. A stochastic ordering based on the canonical transformation of skew-normal vectors. TEST 2019, 28, 475–498.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).