Abstract

Imbalanced data and feature redundancies are common problems in many fields, especially in software defect prediction, data mining, machine learning, and industrial big data applications. To resolve these problems, we propose an intelligent fusion algorithm, SMPSO-HS-AdaBoost, which combines particle swarm optimization based on subgroup migration with adaptive boosting based on hybrid-sampling. In this paper, we apply the proposed algorithm to software defect prediction to improve prediction efficiency and accuracy by solving the issues caused by imbalanced data and feature redundancies. The results show that the proposed algorithm resolves the coexisting problems of imbalanced data and feature redundancies and ensures the efficiency and accuracy of software defect prediction.

1. Introduction

In machine learning applications, the class imbalance problem refers to a large difference in the number of samples belonging to different classes of a data set; that is, one class has many more samples than the other. Classic classification algorithms such as decision trees, Bayesian algorithms, Support Vector Machines (SVM), and neural networks are widely used in software defect prediction. Traditional algorithms assume that a data set is balanced and focus on achieving the best overall classification accuracy. However, in an imbalanced data set, the number of samples in one class can be significantly larger than that in the other classes. When a traditional classification algorithm is applied to an imbalanced data set, it can misclassify a large number of minority class samples as the majority class. As a result, the classification results favor the majority class, reducing the classification accuracy for the minority classes [1]. Because the classification accuracy for high-risk modules is more critical than the overall classification accuracy, the minority class can be more important than the majority class.

With the development of information technology, high-dimensional data has become pervasive in machine learning, data processing, and pattern recognition. Such data contain a large number of irrelevant and redundant features, which can greatly reduce classification performance and cause the “curse of dimensionality”. The number of features describing a software module has increased significantly as software complexity has grown. However, these features differ in importance. Some of them are irrelevant and can obscure the essential classification features, degrading the performance of the learning algorithm and reducing the effectiveness of prediction models [2]. Research shows that the number of training samples required increases exponentially as the number of redundant feature attributes increases [3]. Hence, deleting such features can reduce computation, improve classification accuracy, and optimize the classification model.

Software defect prediction, a typical imbalanced classification problem, is an essential tool for software quality assurance. The efficiency and accuracy of the prediction algorithm can significantly affect the quality of a software system. The key to software defect prediction is to identify the high-risk modules, which are far fewer than the low-risk modules, and to identify the defects in them.

In this paper, we propose an intelligent fusion algorithm, SMPSO-HS-AdaBoost, which combines sampling, feature selection, and classification methods. The proposed algorithm (1) solves the imbalance problem using AdaBoost classification based on hybrid-sampling, and (2) solves the feature redundancy problem using feature selection based on particle swarm optimization with subgroup migration.

2. Related Work

2.1. Research on Imbalanced Data

Software defect prediction models take the code metrics of modular structures (such as lines of code and class inheritance depth) as input and output whether each module is high-risk. Software defect prediction performance is affected by the methods used at each stage of prediction, including data preprocessing, model building, optimization, and evaluation [4]. In addition, the distribution characteristics of the data set affect the performance of software defect prediction models. Research on imbalanced data therefore follows two directions: improving algorithms to increase classification accuracy, and balancing the data set.

2.1.1. Sampling Techniques

In a software system, the ratio of high-risk modules is relatively small and the data sets are typically imbalanced, causing the prediction accuracy of traditional software defect prediction models to be relatively low. Data sampling techniques were introduced to balance the data set, improving the accuracy of the prediction [5].

Data sampling techniques include under-sampling and over-sampling. Under-sampling algorithms reduce the number of negative (majority) class samples by deleting them, and hence they are likely to lose important information in the process. Over-sampling algorithms increase the number of positive (minority) class samples, but they can introduce overfitting. Sampling algorithms that simply copy or delete samples can cause classifier overfitting and produce poor classification results.

Wang et al. [6] proposed an entropy and confidence-based under-sampling boosting framework to solve imbalanced problems. Hou et al. [7] first used the synthetic minority over-sampling technique to balance a training set before generating a candidate classifier pool. Chawla et al. [8] proposed the Synthetic Minority Over-sampling Technique (SMOTE). Their experiments show that SMOTE can ease over-fitting, improve the classification accuracy of the minority class, and maintain overall accuracy. Verbiest et al. [9] used SMOTE enhanced with fuzzy rough prototype selection to process noisy imbalanced data sets and achieved good results. In practical applications, combining data sampling with ensemble learning algorithms to process imbalanced data can generate good results. Chawla et al. [10] proposed SMOTEBoost, which uses SMOTE to generate new minority class samples in each boosting iteration, and the results show that SMOTEBoost can improve the classification accuracy of the minority class. Liu et al. [11] proposed a software defect prediction method based on integrated sampling and ensemble learning. These algorithms combine data sampling with ensemble approaches and achieve relatively good classification accuracy. However, software defect prediction requires both high classification accuracy and high efficiency.

2.1.2. Ensemble Methods

Ensemble learning algorithms are effective methods for addressing the class imbalance problem [12,13,14,15]. General classification algorithms assume that misclassifying samples of different classes leads to the same loss. However, in imbalanced data sets, misclassifying different classes can incur different costs. Ensemble algorithms such as boosting make the classifier focus more on misclassified samples by changing the weights of the training samples, which can improve classification performance. In recent years, ensemble algorithms have been increasingly used in software defect prediction. Wang et al. [16] proposed a multiple kernel ensemble learning (MKEL) approach for software defect classification and prediction, which integrates the advantages of ensemble learning and multiple kernel learning. Rathore et al. [17] proposed ensemble methods to predict the number of faults in software modules, demonstrating the effectiveness of software fault prediction based on ensemble methods. Malhotra et al. [18] proposed four ensemble learning strategies to predict change-prone classes of software. As a boosting algorithm, AdaBoost is widely applied to imbalanced data.

2.2. Feature Selection

Software modules face not only the problem of imbalanced data but also the problem of redundant features. Feature selection has been widely used in pattern recognition and machine learning in recent decades to reduce redundant features while the retained features keep their original meaning. A subset of useful features is selected, and irrelevant and redundant features are removed [19,20]. There are two types of feature selection methods: wrapper and filter. Wrapper methods require a pre-determined classifier to evaluate the performance of the chosen features, whereas filter methods are independent of any classifier in the feature subset evaluation process [21]. Although filter methods run quickly, their results can deviate significantly from what subsequent operations need, and they can easily delete feature combinations that should be retained. Wrapper methods run slowly, but they can select the optimal feature subset according to evaluation criteria such as classification accuracy and the number of features. For a data set with n features there are 2^n candidate feature subsets, and selecting the optimal one is an NP-hard problem [22]. Therefore, an exhaustive search for the optimal feature subset is both resource-demanding and time-consuming and is not suitable for feature selection in high-dimensional data sets.
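To make the filter side of this contrast concrete, the following minimal sketch scores each feature with the one-way ANOVA F statistic, the criterion behind the ANOVA filter baseline used in Section 4.3. The paper does not describe its exact ANOVA configuration, so this is an assumed, generic version, and the function names `anova_f_score` and `rank_features` are hypothetical. Each feature is scored without training any classifier, which is why filters are fast; a wrapper would instead train a classifier on every candidate subset.

```python
from statistics import mean


def anova_f_score(feature, labels):
    """One-way ANOVA F statistic of one feature across class labels.

    Assumes at least two classes and more samples than classes.
    """
    groups = {}
    for value, label in zip(feature, labels):
        groups.setdefault(label, []).append(value)
    grand_mean = mean(feature)
    k = len(groups)        # number of classes
    n = len(feature)       # number of samples
    # Between-group sum of squares: spread of class means around the grand mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups.values())
    # Within-group sum of squares: spread of samples around their own class mean
    ss_within = sum(sum((v - mean(g)) ** 2 for v in g) for g in groups.values())
    if ss_within == 0:
        return float("inf")
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def rank_features(X, y):
    """Return feature indices sorted from most to least discriminative, plus scores."""
    n_features = len(X[0])
    scores = [anova_f_score([row[j] for row in X], y) for j in range(n_features)]
    order = sorted(range(n_features), key=lambda j: scores[j], reverse=True)
    return order, scores
```

A filter would then keep the top-ranked features. For reference, scikit-learn's `f_classif` computes the same per-feature F statistic.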

In contrast, heuristic search algorithms can obtain a set of solutions in one run, allowing them to find near-optimal solutions with less time and fewer resources. In recent years, many feature selection methods based on heuristic algorithms, such as the genetic algorithm (GA), ant colony optimization (ACO), artificial bee colony (ABC), and particle swarm optimization (PSO), have been proposed and have achieved good results. Among these, PSO has attracted many researchers’ attention due to its low complexity, fast convergence, and small number of parameters. Lu et al. [23] proposed improved PSO models based on both functional constriction factors and functional inertia weights for text feature selection. The experimental results show that the proposed model is both effective and stable. Gu et al. [24] used the competitive swarm optimizer (CSO), a recent PSO variant, to solve high-dimensional feature selection problems. Tran et al. [25] proposed a variable-length PSO in which particles have different and shorter lengths, which defines a smaller search space. This method can obtain smaller feature subsets in a shorter time and significantly improve classification performance.

3. SMPSO-HS-AdaBoost Algorithm

3.1. Hybrid-Sampling (HS)

Classifiers commonly assume that the classes of data samples are balanced in number. In practice, however, data classes are typically imbalanced, and classifiers can be overwhelmed by the majority class and ignore the minority class. Data imbalance can lead to the loss of key attributes of high-risk modules during software attribute reduction and reduce classification accuracy. When processing imbalanced data, traditional learning algorithms tend to achieve high prediction accuracy for the majority class but poor prediction accuracy for the minority class, which is of greater interest to researchers. The reason is that traditional classifiers are designed for balanced data sets and optimize predictions over the whole data set. They are not suitable for learning tasks on imbalanced data sets because they tend to assign all instances to the majority class, which is usually the less important one. Because misclassifying minority class data is costly [26], in this paper we propose a hybrid-sampling (HS) method to handle imbalanced data.

SMOTE is an over-sampling technique that generates synthetic samples of the minority class using the information available in the data. It is a typical data synthesis algorithm whose main goal is to synthesize new samples by interpolation rather than by randomly copying samples from the original data. First, for each sample x in the minority class, SMOTE finds its k nearest neighbors among the minority class samples. Then, artificial minority instances are inserted between each minority instance and its k nearest neighbors until the data set is balanced. The new synthetic sample S is defined as:

$$S = x + u \cdot (y - x)$$

where x is the original minority sample, u is a random number in (0, 1), and y is one of the k nearest neighbors of x.
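As a worked illustration with made-up values (not taken from the experiments), apply the interpolation $S = x + u \cdot (y - x)$ to a two-dimensional minority sample $x = (1, 2)$, a neighbor $y = (3, 6)$, and $u = 0.25$:

$$S = (1, 2) + 0.25 \cdot \big((3, 6) - (1, 2)\big) = (1, 2) + (0.5, 1) = (1.5, 3)$$

Because $0 < u < 1$, every synthetic sample lies on the line segment joining x and y, so SMOTE places new minority points between two existing minority samples instead of duplicating one of them.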

Random under-sampling reduces the number of samples in the majority class by randomly removing majority samples. Because samples are discarded, it is likely to lose significant information about the majority class.

Definition 1.

Hybrid-sampling (HS). If S is the training sample data set, M is the over-sampling rate, and N is the under-sampling rate, then the hybrid-sampling can be defined as:

$$\text{Dataset}_{\text{new}} = \text{under-sampling}\big(\text{SMOTE}(\text{Dataset}_{\text{old}}, M),\ N\big)$$

First, SMOTE over-sampling is used to add the instances of the minority class with an over-sampling rate of M, and then random under-sampling is used to process the over-sampled data set with an under-sampling rate of N.
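The two-stage procedure of Definition 1 can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: the function names `smote` and `hybrid_sample` are hypothetical, SMOTE here picks its base samples at random (a simplification of the original per-sample scheme), and the interpretations of the rates are assumptions: `over_rate` (M) is the number of synthetic samples added per original minority sample, and `under_rate` (N) is the fraction of majority samples kept.

```python
import math
import random


def smote(minority, n_new, k=5, rng=None):
    """Create n_new synthetic minority samples via S = x + u * (y - x)."""
    rng = rng or random.Random(0)
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)
        # k nearest minority neighbours of x, excluding x itself
        neighbours = sorted((p for p in minority if p is not x),
                            key=lambda p: math.dist(p, x))[:k]
        y = rng.choice(neighbours)
        u = rng.random()  # u drawn from [0, 1)
        synthetic.append([xi + u * (yi - xi) for xi, yi in zip(x, y)])
    return synthetic


def hybrid_sample(X, y, over_rate, under_rate, k=5, seed=0):
    """Hypothetical HS sketch: SMOTE the minority class, then randomly
    under-sample the majority class. Assumes a binary label list y."""
    rng = random.Random(seed)
    minority_label = min(set(y), key=y.count)
    minority = [x for x, label in zip(X, y) if label == minority_label]
    majority = [(x, label) for x, label in zip(X, y) if label != minority_label]
    # Step 1: SMOTE over-sampling of the minority class (rate M)
    minority = minority + smote(minority, int(over_rate * len(minority)), k, rng)
    # Step 2: random under-sampling of the majority class (rate N)
    kept = rng.sample(majority, int(under_rate * len(majority)))
    X_new = minority + [x for x, _ in kept]
    y_new = [minority_label] * len(minority) + [label for _, label in kept]
    return X_new, y_new
```

For example, with 10 minority and 40 majority samples, `over_rate=1.0` and `under_rate=0.5` yield 20 samples of each class. Doing the over-sampling first means the under-sampling only has to remove part of the majority class, which limits the information loss discussed above.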

3.2. Feature Selection Method Based on SMPSO

3.2.1. Standard Particle Swarm Optimization

PSO is a global stochastic search algorithm based on swarm intelligence that simulates the migration and clustering behavior of birds during foraging [27]. PSO treats each particle in the population as a candidate solution and assigns each particle a fitness value representing the quality of that solution. Each particle has two attributes: position and velocity. The position vector represents the solution of the corresponding particle, and the velocity vector adjusts the particle’s next flight to update its position and search for new solutions. Based on the global optimal solution and the optimal solution found by the particle itself, each particle adjusts its moving direction and speed and gradually approaches the optimal particle.

The basic principles of PSO are summarized below [28].

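Assuming that m particles search for the optimal solution in an N-dimensional target space, the vectors $X_i = (x_{i1}, x_{i2}, \ldots, x_{iN})$ and $V_i = (v_{i1}, v_{i2}, \ldots, v_{iN})$ represent the position and flight velocity of particle i (i = 1, 2, …, m), respectively.

$P_i = (p_{i1}, p_{i2}, \ldots, p_{iN})$ represents the optimal location discovered by particle i.

$P_g = (p_{g1}, p_{g2}, \ldots, p_{gN})$ represents the optimal location discovered by all particles.

The position and velocity of particle i are updated as follows.

$$v_{in}^{k+1} = w\,v_{in}^{k} + c_1 r_1 \left(p_{in} - x_{in}^{k}\right) + c_2 r_2 \left(p_{gn} - x_{in}^{k}\right)$$

$$x_{in}^{k+1} = x_{in}^{k} + v_{in}^{k+1}$$

where w is the inertia weight; c1 and c2 are two positive constants representing the acceleration factors; r1 and r2 are random numbers uniformly distributed in [0, 1]; $v_{in}^{k+1}$ represents the nth-dimensional velocity component of the ith particle generated in the (k+1)th iteration; and $x_{in}^{k+1}$ represents the nth-dimensional position component of the ith particle generated in the (k+1)th iteration.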
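The update rules above can be sketched as a standard global-best PSO loop in plain Python. This is a minimal illustration, not the paper's code: `pso_minimize` is a hypothetical name, it minimizes a cost function (a fitness to be maximized can simply be negated), and the defaults w = 0.729 and c1 = c2 = 1.49445 are commonly used constriction-style values rather than settings reported in this paper.

```python
import random


def pso_minimize(f, dim, n_particles=30, iters=200, w=0.729, c1=1.49445,
                 c2=1.49445, bounds=(-5.0, 5.0), seed=0):
    """Standard global-best PSO; returns (best position P_g, best cost)."""
    rng = random.Random(seed)
    lo, hi = bounds
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    V = [[0.0] * dim for _ in range(n_particles)]
    P = [x[:] for x in X]                    # personal best positions P_i
    p_val = [f(x) for x in X]
    g = min(range(n_particles), key=lambda i: p_val[i])
    G, g_val = P[g][:], p_val[g]            # global best position P_g
    for _ in range(iters):
        for i in range(n_particles):
            for n in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity update: inertia + cognitive term + social term
                V[i][n] = (w * V[i][n]
                           + c1 * r1 * (P[i][n] - X[i][n])
                           + c2 * r2 * (G[n] - X[i][n]))
                # Position update: x^{k+1} = x^k + v^{k+1}
                X[i][n] += V[i][n]
            val = f(X[i])
            if val < p_val[i]:
                P[i], p_val[i] = X[i][:], val
                if val < g_val:             # P_g refreshed as soon as it improves
                    G, g_val = X[i][:], val
    return G, g_val
```

For example, `pso_minimize(lambda x: sum(v * v for v in x), dim=2)` drives the sphere function close to its minimum at the origin. Refreshing P_g as soon as a particle improves (an asynchronous update) is a common variant; updating it once per iteration is equally valid.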

3.2.2. SMPSO

PSO offers fast convergence and simple implementation, but it easily falls into local optima. To solve this issue, we propose a particle swarm optimization algorithm based on subgroup migration (SMPSO), which improves the evolutionary strategy of PSO. SMPSO uses the knowledge of other particles in the population and expands the search space as much as possible to guide particles away from local optima.

During the evolutionary process, the population is divided into three subgroups, A, B, and C, based on fitness values. Subgroup A consists of particles with large fitness values; their degree of convergence is high, so they easily fall into a local optimum. Each of these particles therefore adjusts its speed and direction based on the position of its nearest particle and performs a more detailed search near the extreme point to find a position with better fitness than its previous one. Equations (9) and (10) show how a particle in subgroup A updates its velocity and position. This updating strategy enhances local search using the particle’s inertia velocity, its own best position, and the location of its nearest particle. Subgroup B consists of particles with moderate fitness values, whose velocities and positions are updated using standard PSO. Subgroup C consists of particles with poor fitness values, whose velocities and positions are updated based on Equations (11) and (12). This updating strategy covers the solution space more widely and enhances global search ability using the particle’s inertia velocity, its own best position, and the positions of randomly selected particles. Subgroups A, B, and C evolve separately according to their updating strategies. After every iteration, particles migrate to the corresponding subgroup according to their new fitness values, until the termination condition is satisfied.

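$$v_{in}^{k+1} = w\,v_{in}^{k} + c_1 r_1 \left(p_{in} - x_{in}^{k}\right) + c_2 r_2 \left(x_{jn}^{k} - x_{in}^{k}\right) \tag{9}$$

$$x_{in}^{k+1} = x_{in}^{k} + v_{in}^{k+1} \tag{10}$$

$$v_{in}^{k+1} = w\,v_{in}^{k} + c_1 r_1 \left(p_{in} - x_{in}^{k}\right) + c_2 r_2 \left(x_{rn} - x_{in}^{k}\right) \tag{11}$$

$$x_{in}^{k+1} = x_{in}^{k} + v_{in}^{k+1} \tag{12}$$

where $v_{in}^{k+1}$ represents the nth-dimensional velocity component of the ith particle generated in the (k+1)th iteration, $x_{in}^{k+1}$ represents the nth-dimensional position component of the ith particle generated in the (k+1)th iteration, $x_{jn}^{k}$ represents the nth-dimensional position component of particle j, the nearest particle to particle i, in the kth iteration, and $x_{rn}$ represents the nth-dimensional position component of a randomly selected particle r, with r ∈ {1, 2, …, m}.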
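The subgroup strategy can be sketched as one update step in plain Python. This is an illustration under stated assumptions, not the authors' implementation: it assumes larger fitness is better, that A, B, and C are equal thirds by fitness rank, and that the A and C updates keep the standard PSO form but replace the global-best term with the position of the nearest particle (A) or of a randomly chosen particle (C). The names `assign_subgroups` and `smpso_update` and the parameter defaults are hypothetical.

```python
import math
import random


def assign_subgroups(fitness):
    """Split particle indices into subgroups A, B, C by fitness rank.

    Assumption: equal thirds, larger fitness treated as better.
    """
    m = len(fitness)
    order = sorted(range(m), key=lambda i: fitness[i], reverse=True)
    third = m // 3
    return set(order[:third]), set(order[third:m - third]), set(order[m - third:])


def smpso_update(X, V, P, G, fitness, w=0.729, c1=1.49445, c2=1.49445, rng=None):
    """One SMPSO-style velocity/position update, applied in place.

    X, V, P: positions, velocities, personal-best positions (lists of lists);
    G: global-best position; fitness: current fitness of each particle.
    """
    rng = rng or random.Random(0)
    group_a, _, group_c = assign_subgroups(fitness)
    old = [x[:] for x in X]  # guides use the positions from iteration k
    m = len(X)
    for i in range(m):
        others = [j for j in range(m) if j != i]
        if i in group_a:
            # Subgroup A: follow the nearest other particle (local refinement)
            guide = old[min(others, key=lambda j: math.dist(old[j], old[i]))]
        elif i in group_c:
            # Subgroup C: follow a randomly chosen other particle (exploration)
            guide = old[rng.choice(others)]
        else:
            # Subgroup B: standard PSO, follow the global best
            guide = G
        for n in range(len(X[i])):
            r1, r2 = rng.random(), rng.random()
            V[i][n] = (w * V[i][n]
                       + c1 * r1 * (P[i][n] - old[i][n])
                       + c2 * r2 * (guide[n] - old[i][n]))
            X[i][n] = old[i][n] + V[i][n]
    return group_a, group_c
```

Because the subgroups are recomputed from the fitness values passed in on each call, a particle whose fitness changes migrates to a different subgroup in the next iteration, mirroring the migration step described above.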

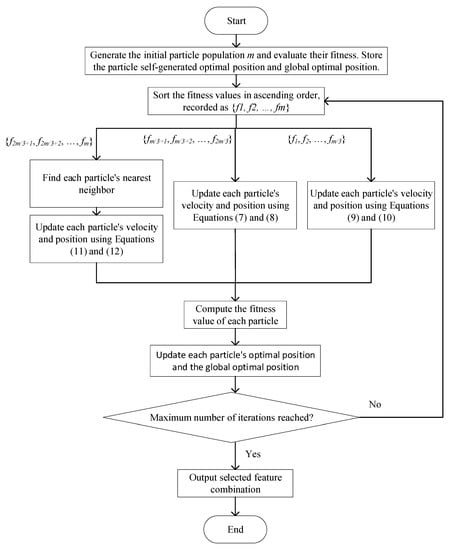

3.2.3. SMPSO for Feature Selection

In continuous SMPSO, each particle can move to any point in the search space. When SMPSO is used for feature selection, each dimension of a particle’s position takes the value 0 or 1, where 0 means that the corresponding feature is not selected and 1 means that it is selected. Equations (13) and (14) summarize SMPSO’s updating strategy for feature selection, and Figure 1 shows the SMPSO workflow.
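One common way to turn continuous velocities into 0/1 positions is the sigmoid transfer function of binary PSO, where each bit is set to 1 with probability sigmoid(v). The sketch below illustrates that technique only; it is an assumption and may differ from the exact form of Equations (13) and (14). The names `sigmoid`, `binary_position`, and `apply_mask` are hypothetical, and the clipping bound `v_max` is chosen purely to keep `math.exp` from overflowing.

```python
import math
import random


def sigmoid(v, v_max=50.0):
    """Logistic transfer function; v is clipped to avoid overflow in math.exp."""
    v = max(-v_max, min(v_max, v))
    return 1.0 / (1.0 + math.exp(-v))


def binary_position(velocity, rng):
    """Bit n is 1 (feature n selected) with probability sigmoid(v_n)."""
    return [1 if rng.random() < sigmoid(v) else 0 for v in velocity]


def apply_mask(X, mask):
    """Keep only the feature columns whose bit is 1."""
    return [[value for value, bit in zip(row, mask) if bit] for row in X]
```

A strongly positive velocity component almost always selects its feature and a strongly negative one almost never does, while a velocity near 0 leaves the choice close to a coin flip. The resulting mask is applied to the data set before the classifier evaluates the particle's fitness.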

Figure 1.

SMPSO Workflow.

3.3. SMPSO-HS-AdaBoost

Adaptive boosting (AdaBoost) is a typical ensemble algorithm that improves classification performance by combining multiple weak classifiers into one strong classifier. First, AdaBoost assigns the same weight to every training sample and trains a learner on the samples. Second, it evaluates the learning results and increases the weights of samples that were poorly learned. Such samples are more likely to appear in the next training set, so subsequent learners concentrate on them and the learning results improve iteratively. By repeating this process, a strong learner is eventually built as a weighted combination of the weak learners. The training procedure of AdaBoost is shown in Algorithm 1.

Algorithm 1. The AdaBoost algorithm.

Input:

Sample distribution D;

Base learning algorithm C;

Number of learning iterations T.

Process:

1. $D_1 = D$. % Initialize distribution

2. for t = 1, …, T:

3. $h_t = C(D_t)$; % Train a weak learner from distribution $D_t$

4. $\epsilon_t = P_{x \sim D_t}(h_t(x) \neq f(x))$; % Evaluate the error of $h_t$ (f is the ground-truth labeling)

5. $D_{t+1} = \text{Adjust\_Distribution}(D_t, \epsilon_t)$

6. end

Output:

$H(x) = \text{Combine\_Outputs}(\{h_t(x)\})$
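Algorithm 1 leaves `Adjust_Distribution` and `Combine_Outputs` abstract. The sketch below fills them in with the standard discrete AdaBoost choices for labels in {−1, +1}: weight coefficient α_t = ½ ln((1 − ε_t)/ε_t), multiplicative re-weighting, and a weighted vote. Decision stumps stand in for the base learner C. This is a plain-Python illustration under those assumptions; the names `train_stump`, `adaboost`, and `predict` are hypothetical.

```python
import math


def train_stump(X, y, w):
    """Base learner C: best (error, feature, threshold, polarity) under weights w."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            for polarity in (1, -1):
                pred = [polarity if row[j] >= thr else -polarity for row in X]
                err = sum(wi for wi, p, t in zip(w, pred, y) if p != t)
                if best is None or err < best[0]:
                    best = (err, j, thr, polarity)
    return best


def stump_predict(stump, row):
    _, j, thr, polarity = stump
    return polarity if row[j] >= thr else -polarity


def adaboost(X, y, T=10):
    """Discrete AdaBoost following Algorithm 1 (labels must be -1 or +1)."""
    n = len(X)
    D = [1.0 / n] * n                       # D_1: uniform distribution
    ensemble = []
    for _ in range(T):
        stump = train_stump(X, y, D)        # h_t = C(D_t)
        eps = max(stump[0], 1e-10)          # epsilon_t, guarded against 0
        if eps >= 0.5:                      # no better than chance: stop early
            break
        alpha = 0.5 * math.log((1 - eps) / eps)
        ensemble.append((alpha, stump))
        # Adjust_Distribution: up-weight misclassified samples, then normalise
        D = [d * math.exp(-alpha * t * stump_predict(stump, row))
             for d, t, row in zip(D, y, X)]
        z = sum(D)
        D = [d / z for d in D]
    return ensemble


def predict(ensemble, row):
    """Combine_Outputs: H(x) = sign(sum_t alpha_t * h_t(x))."""
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1
```

On the one-dimensional labels `[1, 1, -1, -1, 1, 1]` at x = 1, …, 6, no single stump is correct everywhere, but three boosting rounds combine into a classifier that labels all six points correctly, illustrating how re-weighting steers later learners toward earlier mistakes.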

Although AdaBoost reduces the variance and the bias in the final ensemble, it may not be effective for data sets with skewed class distributions. The standard boosting procedure gives equal weights to all misclassified examples. Because the boosting algorithm samples from a pool that predominantly consists of the majority class samples, subsequent training set samplings may still be skewed towards the majority class.

This paper proposes SMPSO-HS-AdaBoost, an algorithm that combines a data sampling method (HS), a feature selection method (SMPSO), and a classification algorithm (AdaBoost). First, it processes the data using HS and SMPSO. Second, it uses the processed data to train the weak classifiers and updates the sample weights based on the classification results. After a finite number of iterations, it generates the final output by weighted voting of the weak classifiers. The training procedure of SMPSO-HS-AdaBoost is shown in Algorithm 2.

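Algorithm 2. The proposed SMPSO-HS-AdaBoost algorithm.

Training set $S = \{(x_i, y_i)\}$;

Each feature vector $x_i$ is n-dimensional;

Class tag $y_i \in \{1, -1\}$, where 1 is the positive class and −1 is the negative class.

Input:

Training set S;

Base classifiers $h_t$;

Over-sampling rate M;

Under-sampling rate N;

Process:

(1) Compute $h_t$ based on the processed training set, the weight distribution $D_t$, and the weak classifier.

(2) Compute the classification loss of $h_t$: $\varepsilon_t = \sum_{i} D_t(i)\, \mathbb{1}[h_t(x_i) \neq y_i]$.

(3) Calculate the coefficient of $h_t$: $\alpha_t = \frac{1}{2} \ln \frac{1 - \varepsilon_t}{\varepsilon_t}$.

(4) Update the weight distribution: $D_{t+1}(i) = D_t(i) \exp\left(-\alpha_t y_i h_t(x_i)\right)$.

(5) Normalize the results: $D_{t+1}(i) \leftarrow D_{t+1}(i) / Z_t$, where $Z_t = \sum_i D_{t+1}(i)$.

Step 4: Generate the classification model.

Output:

Classification model $H(x) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(x)\right)$.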

4. Results and Analysis

4.1. Performance Metric

Evaluation criteria play an important role in assessing classification performance. Criteria such as the ROC curve, AUC, and confusion-matrix-based measures, including recall, precision, F-measure, and G-mean, are commonly used to evaluate classification performance on imbalanced data. As shown in Table 1, the classification results can be represented by a confusion matrix with two rows and two columns reporting true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN). In binary classification, the minority class, whose correct recognition matters most, is treated as the positive class, and the majority class is treated as the negative class.

Table 1.

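Confusion Matrix.

| | Predicted positive | Predicted negative |
|---|---|---|
| Actual positive | TP | FN |
| Actual negative | FP | TN |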

Based on the confusion matrix, precision and recall are defined as:

$$\text{Precision} = \frac{TP}{TP + FP} \tag{15}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{16}$$

Precision and recall are essential measures to evaluate the classification performance of the positive class. In this paper, F-value [29], G-mean [30], and AUC [31] are used to evaluate the performance of the classifier.

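Equation (17) shows how the F-value is obtained.

$$F = \frac{(1 + \beta^2) \cdot \text{Precision} \cdot \text{Recall}}{\beta^2 \cdot \text{Precision} + \text{Recall}} \tag{17}$$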

β indicates the relative importance of recall versus precision. Usually β = 1, in which case the F-value is written as F1. Recall equals the number of true positives divided by the sum of true positives and false negatives. Precision equals the number of true positives divided by the sum of true positives and false positives.

Recall, precision, and F-value are used as evaluation criteria for the positive (minority) class. With β = 1, the F-value is the harmonic mean of recall and precision, and it is typically used to evaluate classification performance on imbalanced data. Because its value is close to the smaller of recall and precision, a large F-value indicates that both recall and precision are large.

G-mean is typically used to evaluate the overall classification performance on imbalanced data. Equation (18) shows how G-mean is obtained.

$$\text{G-mean} = \sqrt{\frac{TP}{TP + FN} \times \frac{TN}{TN + FP}} \tag{18}$$
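The confusion-matrix measures can be computed directly from the four counts. The sketch below is a plain-Python illustration; `imbalance_metrics` is a hypothetical name, G-mean is taken as the square root of the true positive rate times the true negative rate, and returning 0.0 for an empty denominator is an assumed convention.

```python
import math


def imbalance_metrics(tp, fp, fn, tn, beta=1.0):
    """Precision, recall, F-value and G-mean from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0          # true positive rate
    specificity = tn / (tn + fp) if tn + fp else 0.0     # true negative rate
    b2 = beta ** 2
    denom = b2 * precision + recall
    f_value = (1 + b2) * precision * recall / denom if denom else 0.0
    g_mean = math.sqrt(recall * specificity)
    return {"precision": precision, "recall": recall,
            "f_value": f_value, "g_mean": g_mean}
```

As an illustration with made-up counts TP = 8, FP = 2, FN = 4, TN = 86, accuracy is 94%, yet recall is only 2/3 and F1 is 8/11 ≈ 0.727. This gap is why minority-focused measures are preferred over accuracy on imbalanced data.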

The ROC curve [32] describes the performance of a classifier at different discrimination thresholds; in practice, however, the AUC value (the area under the ROC curve), rather than the curve itself, is used to evaluate classifier performance.
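AUC can be computed without drawing the ROC curve, because it equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one (the Mann-Whitney statistic), with ties counted as one half. The plain-Python sketch below uses that equivalence; `auc_score` is a hypothetical name, and it assumes positives are labeled 1.

```python
def auc_score(labels, scores):
    """AUC via pairwise ranking: P(score of positive > score of negative)."""
    pos = [s for s, label in zip(scores, labels) if label == 1]
    neg = [s for s, label in zip(scores, labels) if label != 1]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5   # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))
```

The pairwise loop costs O(P·N) and is meant for clarity; sorting-based rank formulas give the same value faster on large data sets.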

4.2. Experimental Results

To evaluate SMPSO-HS-AdaBoost, seven data sets from aerospace software systems, CM1, KC1, KC3, PC1, PC3, PC4, and PC5 (publicly available NASA MDP software defect data sets), were selected. The class label of these data sets is {Y, N}, where Y (high-risk module) is the minority class, and N (low-risk module) is the majority class. The basic information of the data sets is summarized in Table 2.

Table 2.

Data sets used in evaluation.

We evaluated the performance of SMPSO and SMPSO-HS-AdaBoost using PyCharm. The neighborhood size k in SMOTE was set to 5. After SMOTE over-sampling and random under-sampling, the ratio of minority class instances to majority class instances was 1:2.

To make the evaluation more reliable, all experimental results were obtained using five-fold cross-validation (CV). The samples were divided into five groups. In each trial, four groups were used to train the model and the remaining group was used to test it, so that each group served as the test set once. Five trials were performed, and the cross-validation results were obtained by averaging the results of the five trials.
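The five-fold protocol can be sketched as follows. `k_fold_indices`, `cross_validate`, and the `evaluate(train_idx, test_idx)` callback are hypothetical names; the callback would train a model (for example, HS, then SMPSO, then AdaBoost) on the training indices and return a score such as G-mean on the test indices. The paper does not state whether resampling was applied before or after splitting; applying it inside the callback to the training folds only is a common precaution so that synthetic samples never leak into a test fold.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]


def cross_validate(n_samples, evaluate, k=5, seed=0):
    """Average evaluate(train_idx, test_idx) over k folds; each fold is tested once."""
    folds = k_fold_indices(n_samples, k, seed)
    results = []
    for f in range(k):
        test_idx = folds[f]
        train_idx = [i for g in range(k) if g != f for i in folds[g]]
        results.append(evaluate(train_idx, test_idx))
    return sum(results) / k
```

For heavily imbalanced sets such as CM1 or PC1, a stratified split, which preserves the class ratio in every fold, is a common refinement of this plain version.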

4.3. Results and Analysis of SMPSO

The classical KNN single classifier and the classical AdaBoost ensemble classifier were used as benchmark classifiers to evaluate the performance of SMPSO in comparison with PSO (a wrapper method), ANOVA (a filter method), and no feature selection, labeled NFS. Again, five-fold CV was used, and the evaluation results, including accuracy, F1, AUC, and G-mean values, are summarized in Table 3. The results show that, regardless of whether AdaBoost or KNN is used as the base classifier, SMPSO achieves the best accuracy, F1, AUC, and G-mean among the compared approaches. Thus, SMPSO can effectively improve the performance of software defect prediction.

Table 3.

SMPSO performance comparison.

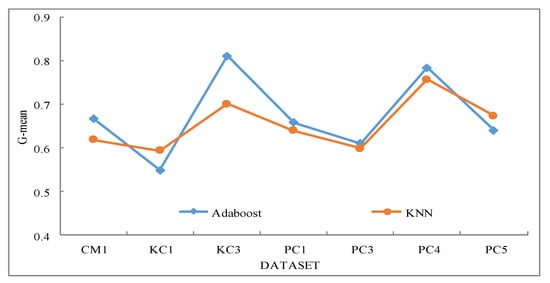

G-mean is a robust evaluation index for imbalanced data classification that reflects the comprehensive performance of a learning algorithm; thus, we compare the G-mean values of SMPSO+AdaBoost with those of SMPSO+KNN. Figure 2 shows that the G-mean value of SMPSO+AdaBoost is significantly higher than that of SMPSO+KNN on the five highly imbalanced data sets CM1, PC1, PC3, PC4, and KC3. The comparison results show that SMPSO produces better feature selection results when combined with AdaBoost, especially for data sets with a high degree of imbalance. Therefore, SMPSO-HS-AdaBoost was adopted as the final classification algorithm in our approach.

Figure 2.

Comparison of G-mean values generated by AdaBoost and KNN.

4.4. Results and Analysis of SMPSO-HS-AdaBoost

In this section, we evaluate the performance of SMPSO-HS-AdaBoost in terms of accuracy, precision, recall, F1, AUC, and G-mean, in comparison with PSO-HS-AdaBoost, SMPSO-AdaBoost, HS-AdaBoost, SMOTE-AdaBoost, and Random-AdaBoost. Seven data sets were used, and the results are summarized in Table 4. The results show that SMPSO-AdaBoost and Random-AdaBoost yield poor F1, AUC, and G-mean values on imbalanced data, indicating that classification performance suffers if only under-sampling is used or if the data set is not balanced. HS-AdaBoost performs better than SMOTE-AdaBoost on all data sets except CM1, which indicates that hybrid-sampling performs better than over-sampling alone. The proposed SMPSO-HS-AdaBoost combines hybrid-sampling with improved feature selection and AdaBoost classification, and the results show that it achieves the best accuracy, precision, recall, F1, AUC, and G-mean on all data sets.

Table 4.

SMPSO-HS-AdaBoost performance comparison.

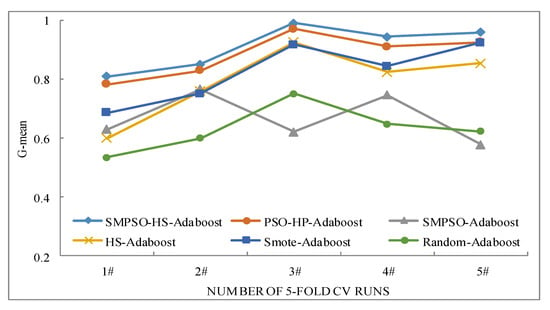

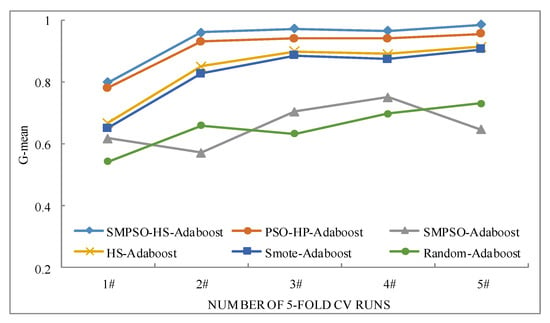

Next, we evaluate the effectiveness and stability of SMPSO-HS-AdaBoost on two data sets whose minority class ratio is below 10%: CM1 and PC1. Again, five-fold CV was performed, and the G-mean values of each trial are shown in Figure 3 and Figure 4. Figure 3 shows that SMPSO-HS-AdaBoost achieves the best G-mean value in every trial, while SMPSO-AdaBoost and Random-AdaBoost have the worst overall G-mean values. Figure 4 shows the same pattern: SMPSO-HS-AdaBoost is again the best in every trial, and SMPSO-AdaBoost and Random-AdaBoost are again the worst overall. These results show that SMPSO-HS-AdaBoost outperforms the other methods in each trial, demonstrating its performance and stability.

Figure 3.

G-mean value comparison on CM1.

Figure 4.

G-mean value comparison on PC1.

5. Conclusions

It is critical to identify the high-risk modules in a software system to assure its quality. Software defect prediction faces two key problems: imbalanced data and feature redundancies. In this paper, we propose an intelligent fusion algorithm, SMPSO-HS-AdaBoost, which can be used to build software defect prediction models. SMPSO-HS-AdaBoost addresses both problems to ensure the efficiency and accuracy of software defect prediction. First, it uses SMOTE and random under-sampling to balance the data set. Then, it selects the significant features using the proposed SMPSO. Finally, AdaBoost performs classification on the new data set. Experimental results show that the proposed SMPSO-HS-AdaBoost improves the efficiency and accuracy of the software defect prediction model by resolving the coexisting problems of imbalanced data and feature redundancies.

Author Contributions

Writing—review and editing, additional experiments, T.L.; Writing—original draft, early experiments, L.Y.; Conceptualization, Methodology, K.L.; Investigation, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 61673396.

Data Availability Statement

Not applicable.

Acknowledgments

The authors are very indebted to the anonymous referees for their critical comments and suggestions for the improvement of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhou, Z.H.; Liu, X.Y. Training cost-sensitive neural networks with methods addressing the class imbalance problem. IEEE Trans. Knowl. Data Eng. 2006, 18, 63–77.

- Gao, K.; Khoshgoftaar, T.M.; Seliya, N. Predicting high-risk program modules by selecting the right software measurements. Softw. Qual. J. 2012, 20, 3–42.

- Seena, K.; Sundaravardhan, R. Application of nyaya inference method for feature selection and ranking in classification algorithms. In Proceedings of the International Conference on Advances in Computing, Communications and Informatics, Karnataka, India, 13–16 September 2017; pp. 1085–1091.

- Khoshgoftaar, T.M.; Gao, K.; Seliya, N. Attribute Selection and Imbalanced Data: Problems in Software Defect Prediction. In Proceedings of the 22nd International Conference on Tools with Artificial Intelligence, Arras, France, 27–29 October 2010; pp. 137–144.

- Kotsiantis, S.; Kanellopoulos, D.; Pintelas, P. Handling imbalanced datasets: A review. GESTS Int. Trans. Comput. Sci. Eng. 2006, 30, 25–36.

- Wang, Z.; Cao, C.; Zhu, Y. Entropy and Confidence-Based Undersampling Boosting Random Forests for Imbalanced Problems. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 5178–5191.

- Hou, W.H.; Wang, X.K.; Zhang, H.Y.; Wang, J.Q.; Li, L. A novel dynamic ensemble selection classifier for an imbalanced data set: An application for credit risk assessment. Knowl. Based Syst. 2020, 208, 1–14.

- Chawla, N.V.; Bowyer, K.W. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 341–378.

- Verbiest, N.; Ramentol, E.; Cornelis, C.; Herrera, F. Preprocessing noisy imbalanced datasets using SMOTE enhanced with fuzzy rough prototype selection. Appl. Soft Comput. 2014, 22, 511–517.

- Chawla, N.V.; Lazarevic, A.; Hall, L.O.; Bowyer, K.W. Smoteboost: Improving Prediction of the Minority Class in Boosting. In Proceedings of the 7th European Conference on Principles and Practice of Knowledge Discovery in Databases, Dubrovnik, Croatia, 22–26 September 2003; pp. 107–119.

- Xiang, D.; Yu-Guang, M. Research on Cross-company Software Defect Prediction Based on Integrated Sampling and Ensemble Learning. J. Chin. Comput. Syst. 2015, 37, 930–936.

- Li, K.; Zhou, G.; Zhai, J.; Li, F.; Shao, M. Improved PSO_AdaBoost Ensemble Algorithm for Imbalanced Data. Sensors 2019, 19, 1476.

- Chen, D.; Wang, X.; Zhou, C.; Wang, B. The Distance-Based Balancing Ensemble Method for Data with a High Imbalance Ratio. IEEE Access 2019, 7, 68940–68956.

- Gong, J.; Kim, H. RHSBoost: Improving classification performance in imbalance data. Comput. Stat. Data Anal. 2017, 111, 1–13.

- Sun, J.; Lang, J.; Fujita, H.; Li, H. Imbalanced enterprise credit evaluation with DTE-SBD: Decision tree ensemble based on SMOTE and bagging with differentiated sampling rates. Inf. Sci. 2018, 425, 76–91.

- Wang, T.; Zhang, Z.; Jing, X.; Zhang, L. Multiple kernel ensemble learning for software defect prediction. Autom. Softw. Eng. 2016, 23, 569–590.

- Rathore, S.S.; Kumar, S. Linear and non-linear heterogeneous ensemble methods to predict the number of faults in software systems. Knowl. Based Syst. 2017, 119, 232–256.

- Malhotra, R.; Khanna, M. Particle swarm optimization-based ensemble learning for software change prediction. Inf. Softw. Technol. 2018, 102, 65–84.

- Deng, Z.; Wang, S.; Chung, F. A minimax probabilistic approach to feature transformation for multi-class data. Appl. Soft Comput. 2013, 13, 116–127.

- Imani, M.; Ghassemian, H. Feature space discriminant analysis for hyperspectral data feature reduction. ISPRS J. Photogramm. Remote Sens. 2015, 102, 1–13.

- Zeng, Z.; Zhang, H.; Zhang, R.; Yin, C. A novel feature selection method considering feature interaction. Pattern Recognit. 2015, 48, 2656–2666.

- Gheyas, I.A.; Smith, L.S. Feature subset selection in large dimensionality domains. Pattern Recognit. 2010, 43, 5–13.

- Lu, Y.; Liang, M.; Ye, Z.; Cao, L. Improved particle swarm optimization algorithm and its application in text feature selection. Appl. Soft Comput. 2015, 35, 629–636.

- Gu, S.; Cheng, R.; Jin, Y. Feature selection for high-dimensional classification using a competitive swarm optimizer. Soft Comput. 2018, 22, 811–822.

- Tran, B.; Xue, B.; Zhang, M. Variable-Length Particle Swarm Optimization for Feature Selection on High-Dimensional Classification. IEEE Trans. Evol. Comput. 2018, 23, 473–787.

- Wang, X.; Zhang, H. Handling Class Imbalance Problems via Weighted BP Algorithm. In Proceedings of the Advanced Data Mining and Applications, Beijing, China, 17–19 August 2009; pp. 713–720.

- Bratton, D.; Kennedy, J. Defining a Standard for Particle Swarm Optimization. In Proceedings of the Swarm Intelligence Symposium, Honolulu, HI, USA, 1–5 April 2007; pp. 120–127.

- Yang, X.; Yuan, J. A modified particle swarm optimizer with dynamic adaptation. Appl. Math. Comput. 2007, 189, 1205–1213.

- Liu, M.; Xu, C.; Luo, Y.; Xu, C.; Wen, Y.; Tao, D. Cost-Sensitive Feature Selection by Optimizing F-measures. IEEE Trans. Image Process. 2018, 27, 1323–1335.

- Yan, J.; Han, S. Classifying Imbalanced Data Sets by a Novel RE-Sample and Cost-Sensitive Stacked Generalization Method. Math. Probl. Eng. 2018, 1790–2021.

- Chao, X.; Peng, Y. A Cost-sensitive Multi-criteria Quadratic Programming Model. Procedia Comput. Sci. 2015, 55, 1302–1307.

- Zhang, J.; Cui, X.; Li, J.; Wang, R. Imbalanced classification of mental workload using a cost-sensitive majority weighted minority oversampling strategy. Cogn. Technol. Work 2017, 19, 1–21.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).