1. Introduction

The quantitative dominance of color images over gray-level images among acquired images has driven the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods. These methods play an important role in a wide spectrum of image processing operations such as image filtering, image compression and image enhancement. They allow an objective assessment of the perceptual quality of a distorted image. Distorted images do not necessarily originate from the image acquisition process but can be created by various types of image processing. Among IQA methods, the most developed are those that compare the processed (distorted) images to the original ones; they are called Full-Reference IQA (FR-IQA) methods. In this way, they assess the image quality after image processing operations have been performed. The assessments obtained for each IQA measure can be compared with the subjective Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS), i.e., average ratings from human observers. However, determining subjective MOS values is time-consuming and expensive. In many practical applications, the reference image is unavailable, and therefore “blind” IQA techniques are more appropriate. When FR-IQA methods are evaluated, the result of such a comparison is a set of correlation coefficients: the higher the coefficient, the better the measure. In conclusion, the aim of research in the field of IQA is to find measures that objectively and quantitatively assess image quality in agreement with the subjective judgment of the Human Visual System (HVS). There is a tradition in the IQA field of improving and refining existing quality measures. Similarly, attempts are made to combine elements of one measure with elements of another to increase the correlation of the index with the MOS.

A low-complexity measure named the Mean Squared Error (MSE) long dominated among the IQA measures used in color image processing due to its simplicity. Other signal-theory-based measures such as the Mean Absolute Error (MAE) and the Peak Signal-to-Noise Ratio (PSNR) were similarly popular. Over time, it has been noted that these measures are only weakly linked to human visual perception. The work [1] shows the drawback of the PSNR measure using the example of the Lighthouse image. Noise was added to this image and to its copy, located in the lower part of one and in the upper part of the other. In the first case the noise was invisible to the observer; in the second case it reduced the perceived image quality. Meanwhile, the PSNR values for both images were the same. Comparing images on a pixel-to-pixel basis does not account for the influence of a pixel’s neighborhood on the perceived color. This is a disadvantage of all the measures mentioned above.
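The insensitivity of pixel-wise measures to the location of a distortion can be illustrated with a minimal sketch. The tiny 4 × 4 image and the perturbation values below are hypothetical and serve only to show that MSE and PSNR are identical wherever the same noise is placed.

```python
import math

def mse(ref, dist):
    """Mean squared error between two equally sized gray-level images (lists of rows)."""
    total = 0.0
    count = 0
    for row_r, row_d in zip(ref, dist):
        for r, d in zip(row_r, row_d):
            total += (r - d) ** 2
            count += 1
    return total / count

def psnr(ref, dist, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(ref, dist)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / err)

# Hypothetical flat reference image (not the Lighthouse image from [1]).
ref = [[100] * 4 for _ in range(4)]
noise = [10, -10, 10, -10]

# The same perturbation is added to the top row of one copy
# and to the bottom row of the other copy.
top = [row[:] for row in ref]
bottom = [row[:] for row in ref]
top[0] = [v + n for v, n in zip(top[0], noise)]
bottom[3] = [v + n for v, n in zip(bottom[3], noise)]

psnr_top = psnr(ref, top)
psnr_bottom = psnr(ref, bottom)
print(psnr_top == psnr_bottom)  # the location of the noise does not matter
```

Because both measures only sum per-pixel differences, any rearrangement of the same errors over the image leaves them unchanged, regardless of how visible the errors are to an observer.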

Since 2004, the Structural Similarity Index Measure (SSIM) [2] has been used as an IQA method that reflects perceptual changes in information about the structure of objects in a scene. This measure exploits the inter-dependencies of pixels that are adjacent to each other in image space. The SSIM value for the compared images is formed as a combination of three similarities: luminance, contrast and structure. It is calculated by averaging the results obtained in separate local windows (e.g., 8 × 8 or 11 × 11 pixels). In 2011, the Gradient SIMilarity index measure (GSIM) [3] was proposed, which considers the similarity of image gradients as an expression of their edges. These ideas have thus become a starting point for new perceptual measures of image quality, and many such IQA measures have been proposed during the last decade. In [4], a quality index named the Feature SIMilarity index (FSIM) was proposed. Here the local quality of an evaluated image is described by two low-level feature maps based on phase congruency (PC) and gradient magnitude (GM). FSIMc, a color version of FSIM, is obtained by incorporating the I and Q chrominance components. Another example of a gradient approach is a simple index called the Gradient Magnitude Similarity Deviation (GMSD) [5]. The gradient magnitude similarity map expresses the local image quality; the standard deviation of this map is then calculated and used as the final quality index GMSD.
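The deviation-pooling idea behind GMSD can be sketched as follows. This is a simplified illustration, not the reference implementation from [5]: it assumes Prewitt kernels scaled by 1/3 and a stabilizing constant c = 170 for 8-bit data, skips the border pixels, and omits the downsampling step used in practice. The test images are hypothetical.

```python
import math

# Prewitt kernels scaled by 1/3 (an assumption of this sketch).
PREWITT_X = [[1 / 3, 0.0, -1 / 3]] * 3
PREWITT_Y = [[1 / 3] * 3, [0.0] * 3, [-1 / 3] * 3]

def gradient_magnitude(img):
    """Gradient magnitude on the interior pixels (the 1-pixel border is skipped)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            gx = gy = 0.0
            for j in range(3):
                for i in range(3):
                    v = img[y + j - 1][x + i - 1]
                    gx += PREWITT_X[j][i] * v
                    gy += PREWITT_Y[j][i] * v
            row.append(math.hypot(gx, gy))
        out.append(row)
    return out

def gmsd(ref, dist, c=170.0):
    """Standard deviation of the gradient magnitude similarity (GMS) map."""
    m_r = gradient_magnitude(ref)
    m_d = gradient_magnitude(dist)
    gms = [
        (2.0 * a * b + c) / (a * a + b * b + c)
        for row_r, row_d in zip(m_r, m_d)
        for a, b in zip(row_r, row_d)
    ]
    mean = sum(gms) / len(gms)
    return math.sqrt(sum((g - mean) ** 2 for g in gms) / len(gms))

# Hypothetical 6x6 ramp image and a copy with one disturbed pixel.
ref_img = [[10 * x + 5 * y for x in range(6)] for y in range(6)]
dist_img = [row[:] for row in ref_img]
dist_img[2][3] += 40

score_same = gmsd(ref_img, ref_img)   # identical images give zero deviation
score_dist = gmsd(ref_img, dist_img)  # a local distortion gives a positive score
```

Unlike most similarity indices, a lower value here indicates better quality, since the score measures how unevenly the local similarity is spread over the image.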

Zhang L. et al. [6] described a measure named the Visual Saliency-based Index (VSI), which uses a local quality map of the distorted image based on saliency changes. In addition, visual saliency serves as a weighting function conveying the importance of particular regions in the image.

Recently, Nafchi et al. [7] proposed a new IQA model named the Mean Deviation Similarity Index (MDSI), which uses a new gradient similarity for the evaluation of local distortions and a chromaticity similarity for color distortions. The final computation of the MDSI score requires a pooling method for both similarity maps; here a specific deviation pooling strategy was used. A Perceptual SIMilarity (PSIM) index was presented in [8], which computes micro- and macrostructural similarities, described as usual by gradient magnitude maps. Additionally, this index uses color similarity and applies perceptual-based pooling. Recently developed IQA models are complex and include an increasing number of properties of the human visual system. Such a model was developed by Shi C. and Lin Y. and named Visual saliency with Color appearance and Gradient Similarity (VCGS) [9]. This index is based on the fusion of data from three similarity maps defined by visual saliency with color appearance, gradient and chrominance. IQA models increasingly take into account the fact that humans pay more attention to the overall structure of an image than to local information about each pixel.

Many IQA methods first transform the RGB components of image pixels into other color spaces that are more closely related to color perception. These are generally spaces belonging to the “luminance–chrominance” category, such as YUV, YIQ, YCbCr, CIELAB, etc. Such measures, e.g., MDSI, have been developed recently. They achieve higher correlation coefficients with MOS in tests on publicly available image databases.

The similarity assessment idea presented above was used by Sun et al. to propose another IQA index named the SuperPixel-based SIMilarity index (SPSIM) [10]. The idea of a superpixel dates back to 2003 [11]. A group of pixels with similar characteristics (intensity, color, etc.) can be replaced by a single superpixel. Superpixels provide a convenient and compact representation of images that is useful for computationally complex tasks. An image contains far fewer superpixels than pixels, so algorithms operating on superpixel images can be faster. Superpixels preserve most of the image edges. Many different methods exist for decomposing images into superpixels, of which the fast Simple Linear Iterative Clustering (SLIC) method has gained the most popularity. Typically, image quality measures compare features extracted from pixels (e.g., MSE, PSNR) or rectangular patches (e.g., SSIM). Such patches usually have no visual meaning, whereas superpixels, unlike artificially generated patches, have a visual meaning and are matched to the image content.
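The compact representation offered by superpixels can be sketched as follows. The label map below is written by hand and is purely hypothetical; in practice it would be produced by a segmentation method such as SLIC. Each superpixel is then represented by the mean intensity of its pixels.

```python
def superpixel_means(image, labels):
    """Mean intensity of each superpixel, keyed by its label."""
    sums, counts = {}, {}
    for row_v, row_l in zip(image, labels):
        for value, label in zip(row_v, row_l):
            sums[label] = sums.get(label, 0.0) + value
            counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def render(labels, means):
    """Image in which every pixel is replaced by the mean of its superpixel."""
    return [[means[label] for label in row] for row in labels]

# Hypothetical 4x4 image with a dark region and a bright region
# separated by a diagonal edge.
image = [[10, 12, 11, 200],
         [9, 13, 198, 202],
         [11, 201, 199, 200],
         [200, 197, 203, 200]]

# Hand-made label map that follows the edge (stand-in for a SLIC result).
labels = [[0, 0, 0, 1],
          [0, 0, 1, 1],
          [0, 1, 1, 1],
          [1, 1, 1, 1]]

means = superpixel_means(image, labels)
simplified = render(labels, means)
print(means)  # 16 pixels are summarized by 2 superpixel values
```

Note that the two regions are not rectangular: the label map follows the image content, which is the property that distinguishes superpixels from fixed patches.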

In this paper, we consider new modifications of the SPSIM measure that improve its correlation with MOS. The paper is organized as follows. After the introduction, Section 2 presents a relatively new image quality measure named SPSIM. Section 3 introduces two modifications to the SPSIM measure. In Section 4 and Section 5 the results of experimental tests on different IQA databases are presented. Finally, Section 6 concludes the paper.

3. The Proposed Modifications of SPSIM

In 2013 [15], a modification of the SSIM index was proposed that includes an analysis of color loss based on a color space representing color information better than the classical RGB space. A study was conducted to determine which color space best represents chromatic changes in an image. Using images from the LIVE database, versions of the index based on the YCbCr, HSI, YIQ, YUV and CIELab color spaces were compared. Of the analyzed color spaces, the quality measure based on the YCbCr space showed the best agreement with subjective assessment. YCbCr is a color space proposed by the International Telecommunication Union, which was designed for processing JPEG format digital images [16] and MPEG format video sequences. Conversion of RGB to YCbCr is expressed by the formulae:

The Y component represents image luminance, while the Cb and Cr components represent the chrominance. The close agreement of the color version of the SSIM index using the YCbCr color space with subjective assessment suggests that using this color space in other IQA indices should also improve their prediction performance. Therefore, the SPSIM index algorithm can be changed by replacing the YUV space used in it with the YCbCr space, while keeping the other steps of the algorithm unchanged.
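A minimal sketch of such a color space replacement is given below. It assumes the full-range (JFIF) form of the RGB to YCbCr transform for 8-bit data; these coefficients are an assumption of the sketch and may differ from the variant used in [15] or in the SPSIM modification itself. The function name is hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to full-range YCbCr (JFIF coefficients, assumed)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

# Achromatic pixels carry no chrominance: Cb and Cr stay at the offset 128.
white = rgb_to_ycbcr(255, 255, 255)
gray = rgb_to_ycbcr(128, 128, 128)

# A saturated red pixel moves Cr far above 128 and Cb below it.
red = rgb_to_ycbcr(255, 0, 0)
print(white, gray, red)
```

In a modification of this kind only the conversion step changes; the luminance and chrominance planes are then passed to the remaining steps of the index as before.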

Another proposal to improve image quality assessment using the SPSIM index is to exploit the advantages of the previously described MDSI quality measure. Both the SPSIM and MDSI measures use the local similarity of chrominance and of image structure to determine their values, but these two components are computed differently in each index. In the case of the SPSIM index, the chrominance similarity is calculated separately for each of the two channels, whereas for the MDSI index, the chrominance similarity is calculated for both channels simultaneously. Similarly, for structural similarity, the MDSI index additionally considers a map of the gradient values for the combined luminance channels, whereas the map of local structural similarities in SPSIM is calculated for each image separately. Therefore, the second proposal for the modification of the SPSIM index consists of determining the local chrominance and image structure similarity maps based on the approaches known from the MDSI index. This approach employs a combination of two methods: SPSIM and MDSI.
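The difference between the two ways of computing chrominance similarity can be sketched for a single pixel (or superpixel) as follows. This is an illustration under stated assumptions, not the code of either index: the per-channel similarities are assumed to be combined by multiplication, the joint form follows the structure described for MDSI in [7], and the stabilizing constant c = 100 is a hypothetical placeholder.

```python
def similarity(a, b, c):
    """Generic similarity of two values with a stabilizing constant c."""
    return (2.0 * a * b + c) / (a * a + b * b + c)

def chroma_sim_separate(c1_ref, c2_ref, c1_dist, c2_dist, c=100.0):
    """Each chrominance channel is compared on its own; results are multiplied (assumed)."""
    return similarity(c1_ref, c1_dist, c) * similarity(c2_ref, c2_dist, c)

def chroma_sim_joint(c1_ref, c2_ref, c1_dist, c2_dist, c=100.0):
    """Both chrominance channels enter a single similarity term."""
    num = 2.0 * (c1_ref * c1_dist + c2_ref * c2_dist) + c
    den = c1_ref ** 2 + c1_dist ** 2 + c2_ref ** 2 + c2_dist ** 2 + c
    return num / den

# Hypothetical chrominance values of a reference and a distorted pixel.
ref_chroma = (20.0, -10.0)
dist_chroma = (30.0, -5.0)

separate = chroma_sim_separate(*ref_chroma, *dist_chroma)
joint = chroma_sim_joint(*ref_chroma, *dist_chroma)
print(separate, joint)
```

Both variants return 1 for identical chrominance and smaller values as the color difference grows, but they weight the two channels differently, which is why swapping one map for the other changes the final index.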

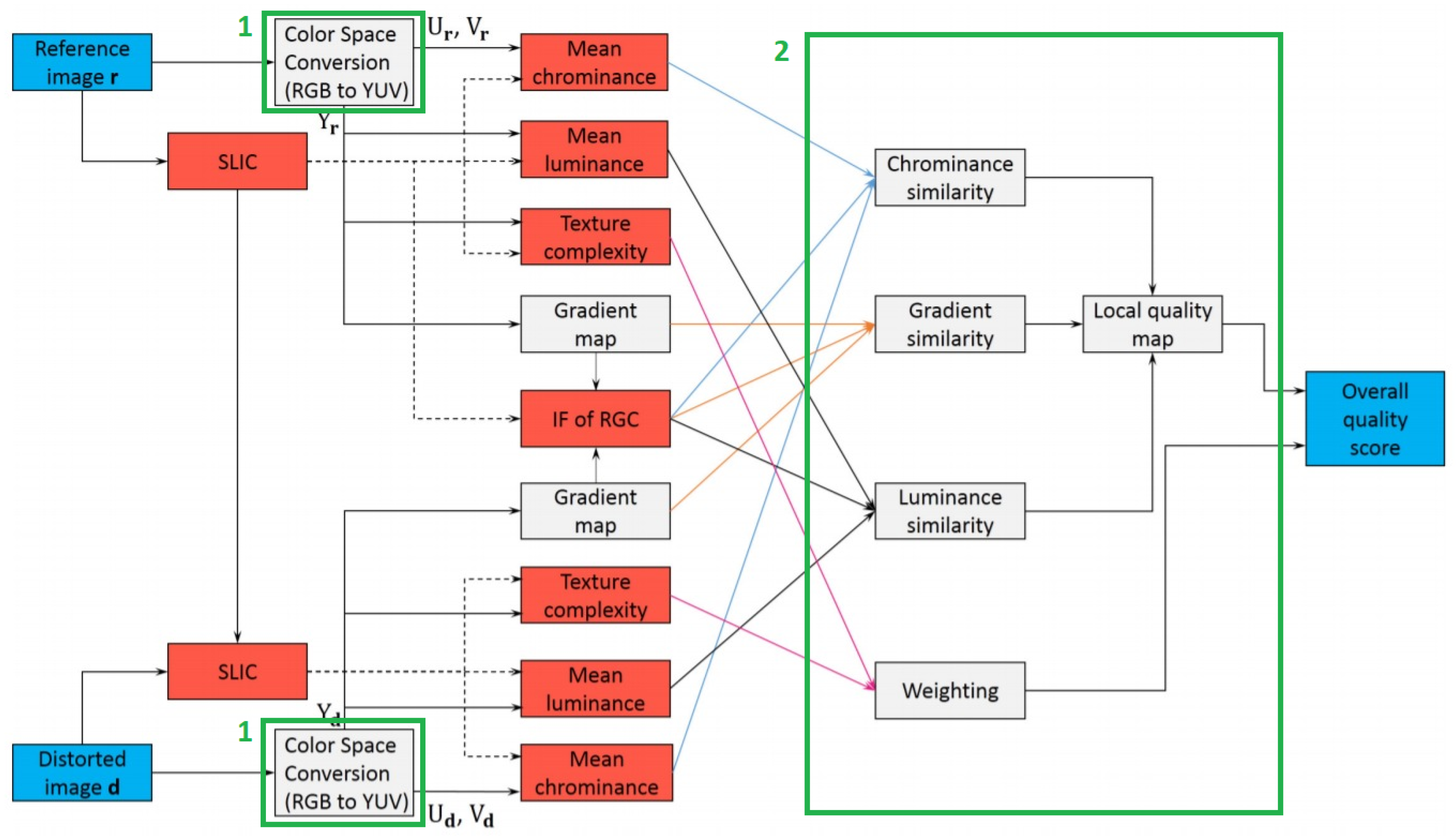

Both modifications to the SPSIM algorithm proposed above were implemented separately and compared with other quality measures. The implementation of the new color space was particularly straightforward (two boxes labeled 1 in Figure 4), coming down to simply replacing the formulae that transform RGB to the proposed color space.

The implementation of the second modification required the use of elements of the MDSI code describing the construction of chrominance and structural similarity maps (box 2 in Figure 4). The implementation details can be found in the paper about MDSI [7]. Furthermore, a solution combining both proposed modifications to SPSIM has also been implemented. In summary, we consider three modifications of SPSIM: the first one limited to a change of color space and further denoted as SPSIM(YCbCr), the second one operating in YUV color space but using elements of MDSI and denoted as SPSIM(MDSI), and the third one combining the two previous modifications of SPSIM and denoted as SPSIM(YCbCr_MDSI). The effectiveness of the resulting modifications to SPSIM is presented in the next section.

4. Experimental Tests

Four benchmark databases (LIVE (2006) [17], TID2008 [18], CSIQ (2010) [19] and TID2013 [20]), characterized by many reference images, various distortions and different distortion levels, were selected for the initial experiment.

The LIVE image dataset contains 29 raw images subjected to five types of distortion: JPEG compression, JPEG2000 compression, white noise, Gaussian blur and bit errors occurring during transmission of the compressed bit stream. Each type of distortion was assessed by an average of 23 participants. Most images have a resolution of 768 × 512 pixels.

The TID2008 image database contains 1700 distorted images, which were generated using 17 types of distortions, with 4 levels per distortion superimposed on 25 reference images. Subjective ratings were obtained based on 256,428 comparisons made by 838 observers. All images have a resolution of 512 × 384 pixels.

The CSIQ database contains 30 reference images and 866 distorted images produced with six types of distortion. The distortions used include JPEG compression, JPEG2000 compression, Gaussian noise, contrast reduction and Gaussian blur. Each original image has been subjected to four or five levels of distortion. The average subjective ratings were calculated on the basis of 5000 ratings obtained from 35 subjects. The resolution of all images is 512 × 512 pixels.

The TID2013 image database is an extended version of the earlier image collection TID2008. For the same reference images, the number of distortion types has been increased to 24, and the number of distortion levels to 5. The database contains 3000 distorted digital images. The group of observers on whose ratings the average subjective scores are based was also enlarged.

Below is a summary table of the most relevant information about the selected IQA benchmark databases (Table 1). The Konstanz Artificially Distorted Image quality Database (KADID-10k) is discussed further in Section 5.

The values of the individual IQA measures are usually compared with the values of the subjective ratings for the individual images. Four criteria are used to assess the linearity, monotonicity, and accuracy of such predictions: the Pearson linear correlation coefficient (PLCC), the Spearman rank order correlation coefficient (SROCC), the Kendall rank order correlation coefficient (KROCC) and the root mean squared error (RMSE). The formulae for such comparisons are presented below:

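$$\mathrm{PLCC} = \frac{\sum_{i=1}^{N} (p_i - \bar{p})(s_i - \bar{s})}{\sqrt{\sum_{i=1}^{N} (p_i - \bar{p})^2 \sum_{i=1}^{N} (s_i - \bar{s})^2}}$$

where $s_i$ and $p_i$ are raw values of subjective and objective measures, $\bar{s}$ and $\bar{p}$ are mean values.

$$\mathrm{SROCC} = 1 - \frac{6 \sum_{i=1}^{N} d_i^2}{N (N^2 - 1)}$$

where $d_i$ means the difference between the ranks of both measures for observation $i$ and $N$ is the number of observations.

$$\mathrm{KROCC} = \frac{N_c - N_d}{\frac{1}{2} N (N - 1)}$$

where $N_c$ and $N_d$ are the numbers of concordant and discordant pairs, respectively.

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} (p_i - s_i)^2}$$

where $s_i$ and $p_i$ are as above.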
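The four criteria can be computed directly from their standard definitions, as in the following sketch. The function names and the sample scores are hypothetical; the rank-based formula for SROCC used here assumes that there are no tied values, and the objective scores are compared with the subjective ones without any prior regression step.

```python
import math

def plcc(s, p):
    """Pearson linear correlation coefficient."""
    n = len(s)
    ms, mp = sum(s) / n, sum(p) / n
    num = sum((a - ms) * (b - mp) for a, b in zip(s, p))
    den = math.sqrt(sum((a - ms) ** 2 for a in s) * sum((b - mp) ** 2 for b in p))
    return num / den

def ranks(values):
    """Ranks 1..N in ascending order; assumes no tied values."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = rank
    return result

def srocc(s, p):
    """Spearman rank order correlation from rank differences (no ties)."""
    n = len(s)
    d2 = sum((a - b) ** 2 for a, b in zip(ranks(s), ranks(p)))
    return 1.0 - 6.0 * d2 / (n * (n * n - 1))

def krocc(s, p):
    """Kendall rank order correlation from concordant and discordant pairs."""
    n = len(s)
    concordant = discordant = 0
    for i in range(n):
        for j in range(i + 1, n):
            sign = (s[i] - s[j]) * (p[i] - p[j])
            if sign > 0:
                concordant += 1
            elif sign < 0:
                discordant += 1
    return (concordant - discordant) / (0.5 * n * (n - 1))

def rmse(s, p):
    """Root mean squared error between subjective and objective scores."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(s, p)) / len(s))

# Hypothetical subjective scores and objective predictions for five images.
mos = [1.0, 2.0, 3.0, 4.0, 5.0]
pred = [1.5, 1.0, 3.5, 4.0, 6.0]
print(plcc(mos, pred), srocc(mos, pred), krocc(mos, pred), rmse(mos, pred))
```

In this example only the first two images are ranked in the wrong order, which lowers SROCC to 0.9 and KROCC to 0.8, while PLCC and RMSE also reflect the size of the prediction errors.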

Table 2, Table 3, Table 4 and Table 5 contain the values of the Pearson, Spearman and Kendall correlation coefficients, and the RMSE errors, for the tested IQA measures. In each column of a table, the top three results are shown in bold. The two right-hand columns contain the arithmetic average and the average weighted by the number of images in each database. Considering the weighted averages of the calculated correlation coefficients and the RMSE errors, we can see that the modifications of the SPSIM index give very good results. A superpixel number of 400 was assumed for the SPSIM index and its three modifications.
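The difference between the two averages can be sketched as follows. The database sizes are the numbers of distorted images quoted above for TID2008, CSIQ and TID2013 (LIVE is left out because its image count is not stated in the text), while the correlation values are hypothetical and do not come from the tables.

```python
def weighted_average(values, weights):
    """Average of values weighted by the given non-negative weights."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

# Numbers of distorted images per database, as quoted in the text.
sizes = {"TID2008": 1700, "CSIQ": 866, "TID2013": 3000}

# Hypothetical SROCC values of one IQA measure on each database.
scores = {"TID2008": 0.90, "CSIQ": 0.95, "TID2013": 0.88}

names = list(sizes)
arithmetic = sum(scores[n] for n in names) / len(names)
weighted = weighted_average([scores[n] for n in names], [sizes[n] for n in names])
print(arithmetic, weighted)
```

With these illustrative numbers the weighted average is lower than the arithmetic one, because the largest database (TID2013) has the lowest score and contributes more than half of the total weight.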

The good results obtained for SPSIM and its modifications mainly relate to images from the TID2008 and TID2013 databases. The correlation coefficients computed for the CSIQ and LIVE databases are less conclusive. Because the TID databases contain a large number of images, these good results carry over to the weighted averages.

The computational performance of the analyzed quality measures was measured on a PC with an Intel Core i5-7200U 2.5 GHz processor and 12 GB of RAM. The computational scripts were run in Matlab R2019b. For each image from the TID2013 set, the computation time of each quality index was measured. The performance of an index was taken as the average time needed to evaluate the quality of one image.

Table 6 shows the average computation times for the chosen IQA measures. The SPSIM modifications increased the computation time by only a few percent compared to the original SPSIM.
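The timing procedure can be sketched as follows. The experiments described here were run in Matlab; the Python code below is only a stand-in that shows the averaging scheme, and both the placeholder index and the data are hypothetical.

```python
import time

def average_time(index_fn, image_pairs):
    """Average wall-clock time (in seconds) needed to evaluate one image pair."""
    start = time.perf_counter()
    for ref, dist in image_pairs:
        index_fn(ref, dist)
    return (time.perf_counter() - start) / len(image_pairs)

def mean_abs_diff(ref, dist):
    """Placeholder quality index on flattened images (stand-in for a real IQA measure)."""
    return sum(abs(a - b) for a, b in zip(ref, dist)) / len(ref)

# Hypothetical data: ten pairs of flattened 64x64 images.
pairs = [([i % 256] * 4096, [(i + 3) % 256] * 4096) for i in range(10)]
avg_seconds = average_time(mean_abs_diff, pairs)
print(avg_seconds)
```

Relative differences between indices measured this way are more informative than absolute times, which depend on the hardware and on the implementation.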

5. Additional Tests on Large-Scale IQA Database

The IQA databases used so far contained a limited number of images, the largest being TID2013 with 3000 images. The use of online crowdsourcing for image assessment has made it possible to build larger databases. In recent years, a large-scale image database called KADID-10k (Konstanz Artificially Distorted Image quality Database) [21], which contains more than 10,000 images with subjective quality scores (MOS), was created and published. This database contains a limited number of reference images (81), a limited number of artificial distortion types (25) and a limited number of levels for each type of distortion (5), resulting in 81 × 25 × 5 = 10,125 distorted images. Recently, the KADID-10k database has frequently been used for training, validation and testing of deep learning networks used in image quality assessment [22]. Further use of pretrained convolutional neural networks (CNNs) in IQA algorithms seems promising.

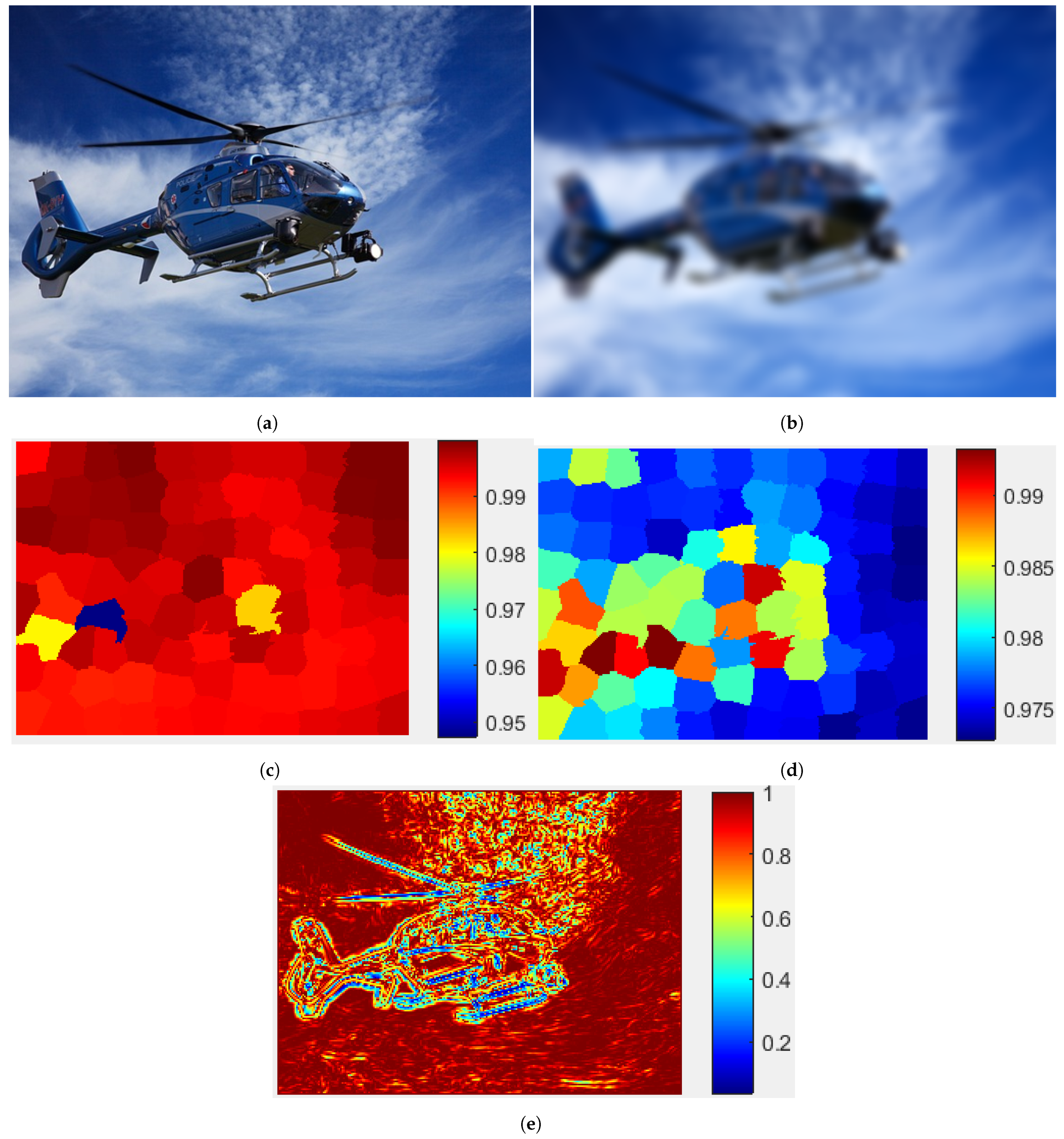

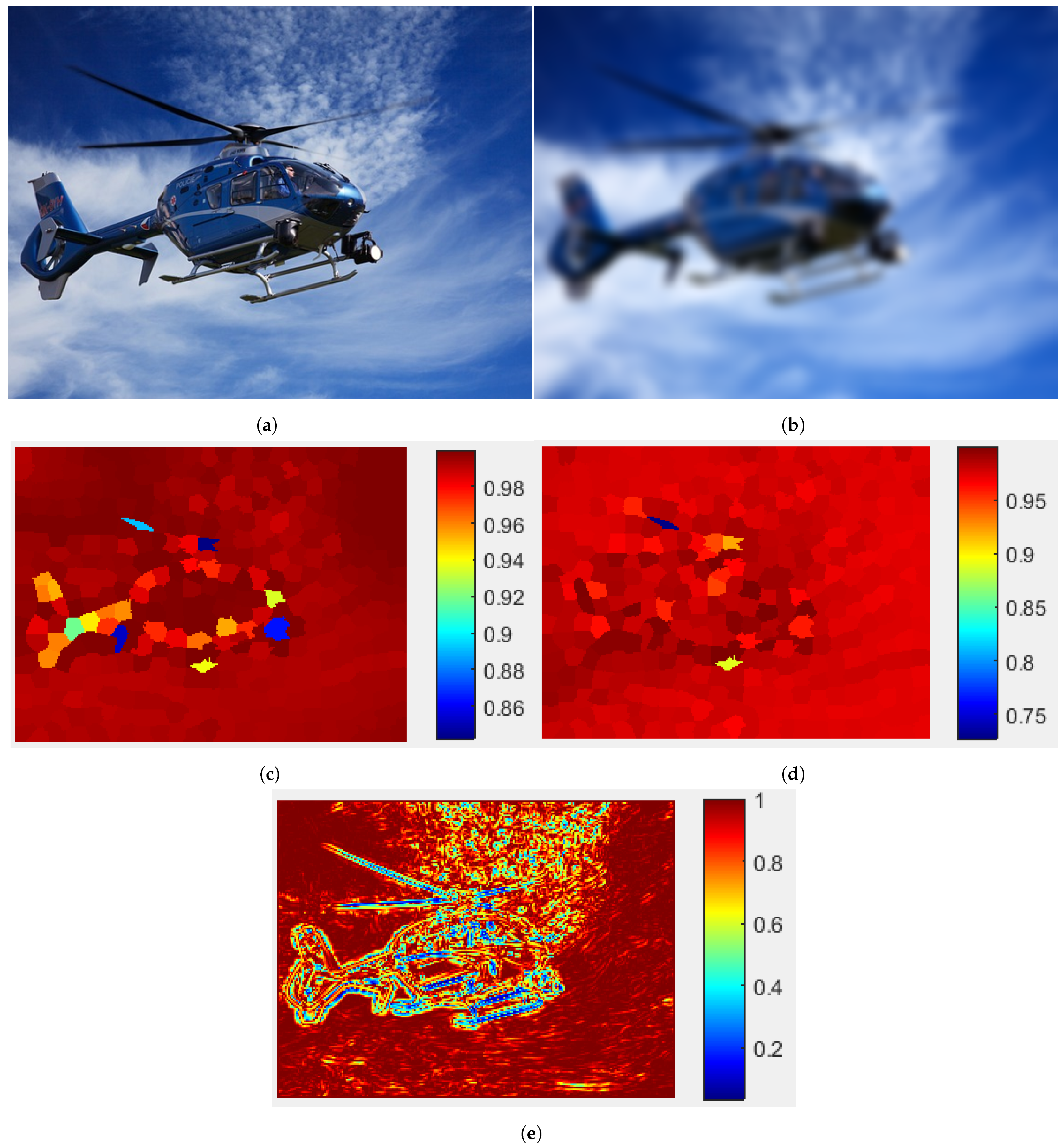

High perceptual quality images from a public website were rescaled to a resolution of 512 × 384 pixels (Figure 5). The artificial distortions used during the creation of the KADID-10k database include blurs, noise, spatial distortions, etc. A novelty was the use of crowdsourcing for subjective IQA in this database. Details of the crowdsourcing experiments are described in [21].

We tested the SPSIM index and its modifications on the large-scale IQA database KADID-10k, with the number of superpixels varied between 100 and 4000. The results obtained are presented in Table 7. The best results for a given number of superpixels are highlighted in bold. In each case, the best results were obtained for the SPSIM modification that combines the change of color space to YCbCr with the similarity maps used in MDSI.

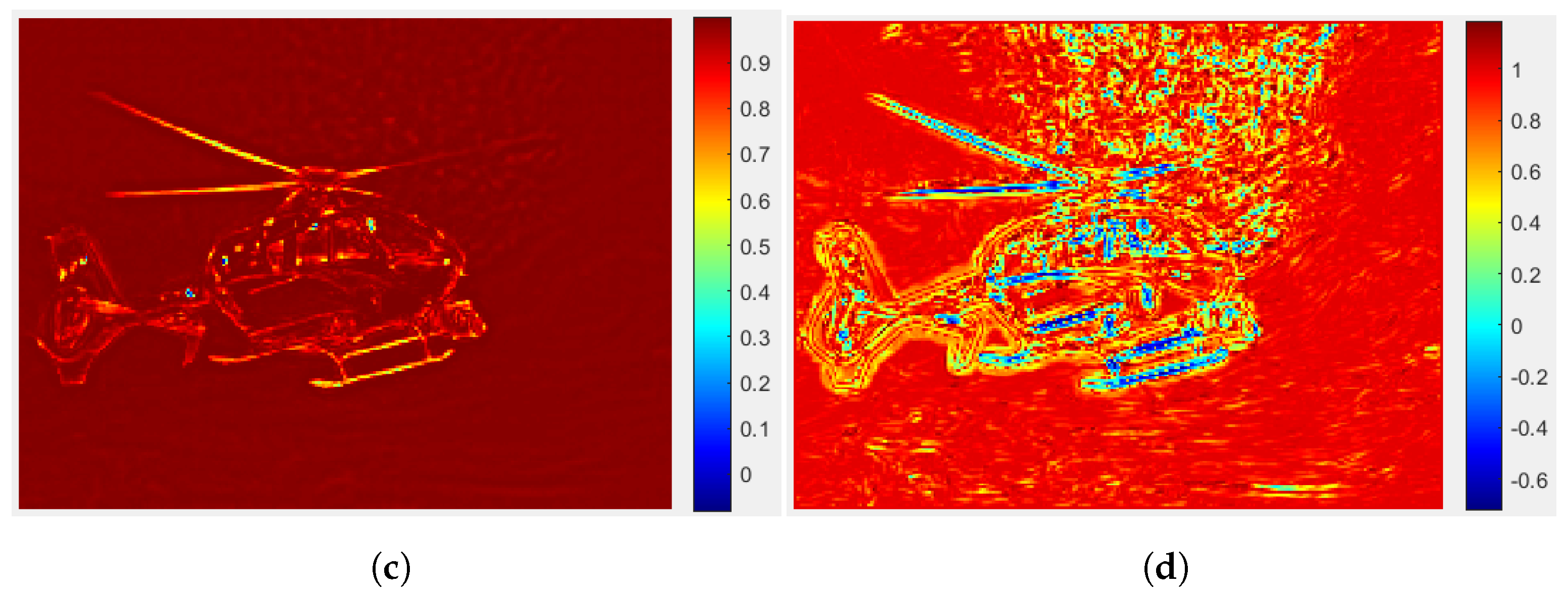

Increasing the number of superpixels improves the values of all four indices. In addition, the effect of the number of superpixels on the computing time for the SPSIM(YCbCr_MDSI) index was checked for the pair of images presented in Figure 1. Changing the number of superpixels from 100 to 4000 increased the computation time significantly (by a factor of 6.14). The choice of the number of superpixels must therefore be a compromise between the computation time and the index values expressing the level of correlation with the MOS scores.