1. Introduction

Cloning of program fragments, in any form, has been identified as both a boon and a bane, depending on the purpose for which it is used [1,2,3,4,5,6,7]. Writing code from scratch often hinders code understandability and evolvability. In such situations, reuse achieved through existing libraries, design patterns, frameworks, and templates helps structure code well. On the other hand, code clones formed as a result of the programmer’s laziness can turn out to be harmful in the long term. These clones adversely affect software maintenance, as even the smallest change made in a code fragment may have to be propagated to all the clones of that fragment; failing to do so may lead to inconsistent code, which may result in software bugs [8,9,10,11]. Clones can also arise out of language limitations or external business forces.

Code clones can be classified by the extent of similarity between them. Exact or type-1 clones are the result of the copy-paste activity of programmers who find a piece of code that provides an exact solution to their problem. To suit their needs, programmers may further modify the pasted code, leading to type-2 or parameterized clones (modification of identifier names) and type-3 or near-miss clones (modification of control structures, deletion or addition of statements). Clones can also occur when the developer re-invents the wheel. Such clones generally occur when the developer is unaware of an existing solution to the problem or wants to develop a more efficient algorithm. They can be termed wide-miss clones. A wide-miss clone solves the same or a similar problem while being structurally dissimilar. From the experience of Marcus and Maletic [12], these types of clones often manifest themselves as higher-level abstractions in the problem or solution domain, such as an Abstract Data Type (ADT) list. They can be termed “concept clones”. Further, on the basis of the chosen granularity of code fragments (forming the basic units of clone detection), clones can be simple (fine-grained) or high-level (coarse-grained). Simple clones are generally composed of a few statements, while high-level clones have the granularity of a block, method, class, or file. High-level clones are said to be composed of multiple simple clones. High-level clones can further be classified into behavior clones (clones having the same set of input and output variables and similar functionality), concept clones (clones implementing a similar higher-level abstraction), structural clones (clones having similar structures), and domain-model clones (clones having similar domain models, such as a class diagram) [13]. This study aims at the detection of “high-level concept clones having granularity as Java methods” in a software system.

High-level concept clones are those code fragments that implement a similar underlying concept. Their detection depends on the user’s understanding of the system. Using the code’s internal documentation and the program’s semantics could be a viable mechanism for their detection [13]. Detecting such clones is useful in selecting an efficient implementation of a higher-level abstraction and in propagating changes to all clones whenever the underlying concept changes.

Different algorithms have been proposed in prior published studies to detect clones in source code. The algorithms can be text-based (using the source code implementation or associated text, such as comments and identifiers, directly or after conversion to some intermediate form) [12,14,15,16,17,18,19], token-based (using source code converted to meaningful tokens) [20,21,22,23,24,25,26,27,28,29], tree-based (using parse trees) [30,31,32,33,34,35], metric-based (using metrics derived from source code) [36,37,38,39,40,41], or graph-based (using source code converted to a suitable graph) [42,43,44,45,46].

As the use of the code’s internal documentation and program semantics is recommended for detection of concept clones, several studies use text-based Information Retrieval (IR) techniques. IR techniques enable us to extract relevant information from a collection of resources [47]. There are several techniques for information retrieval, such as Latent Semantic Indexing (LSI), Vector Space Modeling, and Latent Dirichlet Allocation (LDA). Researchers have used these techniques for clone detection by extracting source code text from software programs. Marcus and Maletic [12] conducted one of the earlier studies that took source code text (comments and identifiers) to identify similar high-level concept clones. They applied LSI on the text corpus formed from extracted comments and identifiers to identify such clones. Later, the authors extended this technique to locate a given concept in the source code [48] and then to determine conceptual cohesion between classes in object-oriented programs [49]. Bauer et al. [50] also compared the effectiveness of LSI with the TF-IDF approach and regarded the former as the better alternative.

One of the drawbacks of LSI is the production of a large number of false positives [51], making it difficult for developers to identify actual concept clones. Poshyvanyk et al. [52] combined LSI with Formal Concept Analysis (FCA) to reduce this difficulty. FCA was used for presenting the generated ranked results with a labeled concept lattice, thereby reducing the effort required of programmers to locate similar concepts during software maintenance. In some recent studies, researchers have highlighted the potential use of information retrieval with learning-based approaches to detect type-4 clones. Xie et al. [53] used the TF-IDF approach with a Siamese neural network, while Fu et al. [54] combined a Continuous Bag of Words (CBOW)/Skip-Gram (SG) model with ensemble learning and reported improved accuracy measures as compared to other solo approaches. Some researchers have used LSI to further examine clones that have already been detected by clone detection tools. The findings suggest that clone detection and LSI complement each other and give better quality results [18,55].

Table 1 summarizes some of the relevant studies related to the use of information retrieval techniques in clone detection.

Kuhn et al. [56] also illustrated the use of LSI for topic modeling of source code vocabulary. Later, Maskeri et al. [64] used a more efficient approach called Latent Dirichlet Allocation (LDA) to identify similar topics. Ghosh and Kuttal applied LDA on a text corpus formed from a combination of comments and associated source code to identify semantic clones [58]. It gave better precision and recall values as compared to PDG-based approaches. In their recent study, they emphasized the usage of source code comments to detect clones. They performed clone detection by applying a PDG-based approach to source code and LDA to source code comments (excluding task and copyright comments) for finding file-level clones [63]. Their study empirically shows the relevance of good-quality comments for scaling such IR-based techniques to larger projects and for detecting inter-project clones. Reddivari and Khan also developed CloneTM, which uses LDA to identify code clones (up to type-3) using source code (with identifier splitting, stop-word removal, and stemming). CloneTM gave better results when compared to CCFinder [60] and CloneDr [61]. Abid [62] developed the Feature-driven API usage-based Code Examples Recommender (FACER) to detect and report similar functions based on the user’s query. The tool uses comments, keywords from the function name, API class names, and API method names used in functions to find similar functions using an IR-based engine called Lucene. At the data level, data clones (replicas) can help recover lost data at runtime for quality assurance of data analysis tasks in big data analysis [65].

The literature also provides other similar techniques for clone detection. Grant and Cordy [57] replaced LSI with a feature extraction technique, namely, Independent Component Analysis (ICA), and used tokens in the program to build a vector space model instead of terms in the comments.

Several previously published studies [12,48,49,58,63] apply IR-based techniques using source code comments (along with other programming constructs) to identify clones, which results in a bulky text corpus. This study, however, omits all unnecessary comments and uses only the comments present in the method’s documentation. Blending suitable NLP techniques with descriptive documentation only (excluding notes comments, explanatory comments, contextual comments, evolutionary comments, and conditional comments) makes the proposed mechanism lightweight and efficient.

In this study, high-level concept clones at method-level granularity are detected by extracting the descriptive documentation of each method to form the text corpus. The descriptive part of the documentation generally describes the semantics of the code, thus fulfilling the criteria for detection of concept clones as stated above [13]. Latent Semantic Indexing (LSI), coupled with a Part-of-Speech (POS) tagging strategy and lemmatization, is then applied to the formed text corpus to identify high-level concept clones. After obtaining similar descriptions, the methods corresponding to these descriptions are regarded as concept clones.

The following points summarize the procedural flow and the factors contributing to the efficacy of the proposed approach:

The text corpus is formed only from the description of methods (other documentation details are removed). This is because the semantics of a particular method are mostly contained in its description. All other comments augmented with methods are also not used. Furthermore, no additional text element of the source code is used for text corpus preparation. All this makes the comparison process efficient with the reduced size of the text corpus.

A POS tagging strategy has been employed to assign higher weights to certain parts of speech (generally nouns and verbs) and to remove stop words by filtering out all terms not tagged as nouns, adverbs, verbs, or adjectives. The use of POS tagging also reduces the size of the text corpus and increases efficiency by combining two tasks in one step. Other works carry out stop-word removal as a separate task.

Different combinations of weight and similarity measures have been examined for clone detection. These combinations are compared based on several parameters to enable the end user to choose a suitable variant. In Section 6.3, each combination is ranked based on its efficiency in finding contextual clones.

The proposed mechanism is able to detect methods implementing similar high-level concepts with good accuracy values. The mechanism is empirically validated using three open-source Java projects (JGrapht, Collections, and JHotDraw) as subject systems.

The rest of the paper is organized as follows. Section 2 explains the relevant terminology used in this study. Section 3 lays down the research questions relevant to this study. Section 4 lists the key features of documentation in Java projects. Section 5 delineates the clone detection process. Section 6 outlines the empirical validation process used and addresses the listed research questions. Section 7 outlines threats to the validity of the results derived in this work. The conclusion is presented in Section 8.

2. Terminology

This section briefly outlines the relevant terms used in this study.

2.1. High-Level Concept Clones

High-level concept clones [12,13] are defined as implementations of a similar higher-level abstraction, like an ADT list. They arise when developers try to re-invent the wheel, i.e., implement the same or a similar concept using different algorithms. These clones address the same or a similar problem while having very dissimilar structures. Their detection depends on the user’s understanding of the system. Using the code’s internal documentation and program semantics can greatly improve the detection accuracy for this type of clone.

In this research work, we have used the descriptive documentation associated with methods (as it contributes to the method’s semantics) to detect high-level concept clones having granularity as Java methods.

2.2. Latent Semantic Indexing (LSI)

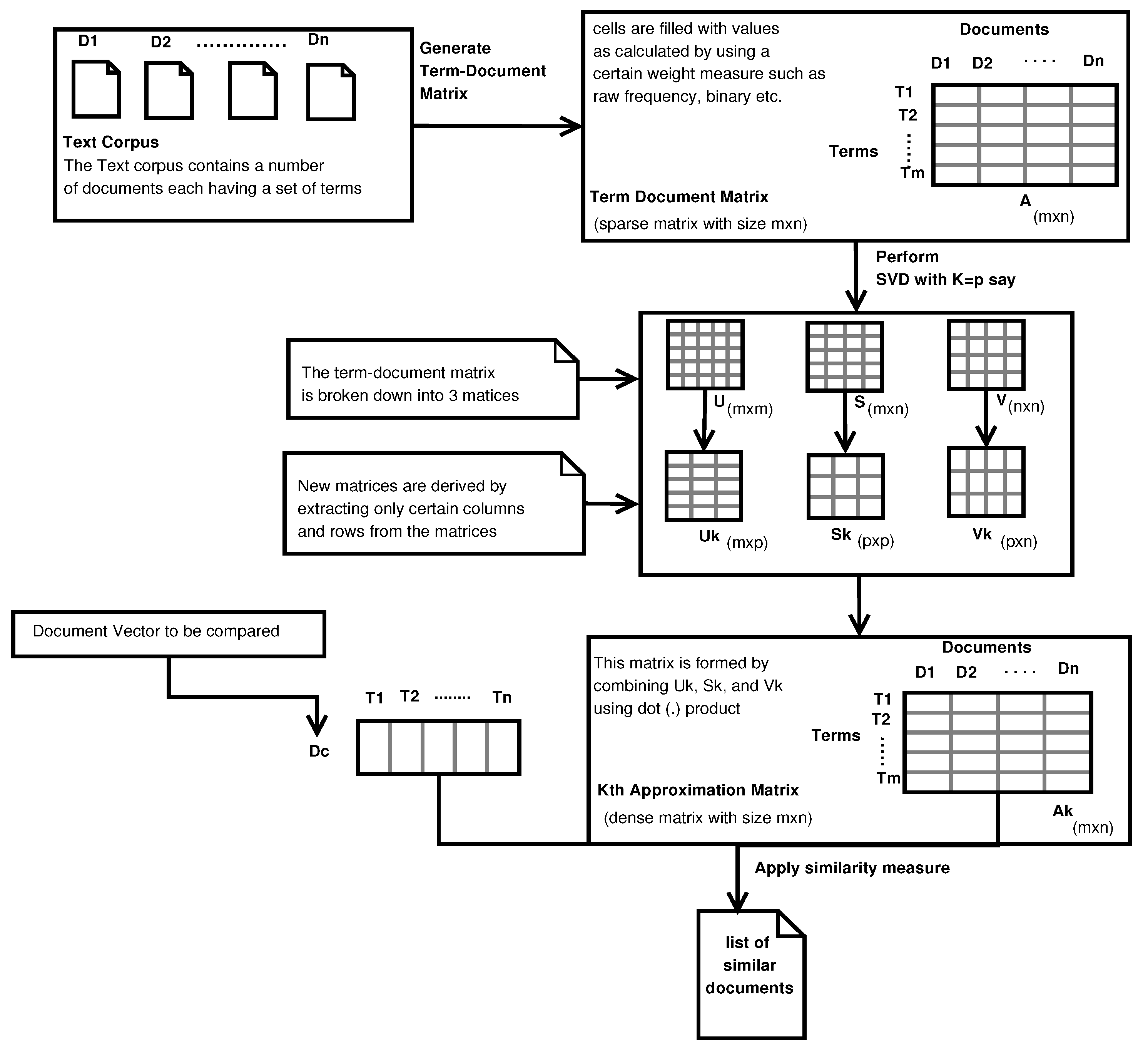

LSI [51] is an information retrieval technique used for automating the process of semantic understanding of sentences written in a particular language. It uses Singular Value Decomposition (SVD) of the term-document matrix developed using the Vector Space Model (VSM) to find the relationship among documents.

To perform LSI on a given set of documents, a term-document matrix is built by extracting terms from these documents. Every entry of the matrix indicates the weight assigned to a particular term in the corresponding document. This term-document matrix is decomposed using SVD, which factors the matrix (say $A$, with dimension $(m,n)$) into three matrices as follows:

$$A = U S V^{T}$$

where the dimension of $U$ is $(m,m)$, $S$ (a diagonal matrix) is $(m,n)$, and $V$ is $(n,n)$. A value $K$ is chosen to form the matrix $A_K$ (the $K$th approximation of matrix $A$). $A_K$ is derived as

$$A_K = U_K S_K V_K^{T}$$

where $U_K$ and $V_K$ keep the first $K$ columns of $U$ and $V$, and $S_K$ keeps the $K$ largest singular values. After $A_K$ is successfully computed, given a document, its similarity with other documents is calculated. The measure of similarity can be cosine, Jaccard, Dice, etc. The result of applying LSI is a list of similar documents. A schematic representation of LSI is given in Figure 1.
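The decomposition described above can be sketched in a few lines of Python. This is a minimal illustration that assumes NumPy is available; the toy matrix, the term labels, and the helper names `lsi_document_vectors` and `cosine` are hypothetical and are not taken from the study.

```python
import numpy as np

def lsi_document_vectors(term_doc, k):
    """Return one k-dimensional vector per document from a rank-k truncated SVD."""
    # Compact SVD: term_doc = u @ diag(s) @ vt, singular values sorted descending.
    u, s, vt = np.linalg.svd(term_doc, full_matrices=False)
    # Keep the k largest singular values; column j of diag(s_k) @ vt_k is
    # document j expressed in the reduced space.
    return (np.diag(s[:k]) @ vt[:k, :]).T

def cosine(a, b):
    """Cosine similarity of two vectors (0.0 if either vector is all zeros)."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom else 0.0

# Toy term-document matrix (rows: terms, columns: documents), raw counts.
A = np.array([
    [1, 1, 0],   # "vertex"
    [1, 1, 0],   # "add"
    [0, 1, 0],   # "graph"
    [0, 0, 2],   # "color"
    [0, 0, 1],   # "draw"
], dtype=float)

docs = lsi_document_vectors(A, k=2)
sim_01 = cosine(docs[0], docs[1])   # documents 0 and 1 share terms
sim_02 = cosine(docs[0], docs[2])   # documents 0 and 2 share none
```

In this toy corpus, documents 0 and 1 end up close together in the reduced space, while document 2 stays apart from both.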

LSI usually finds its usage in applications like search engines to extract the most relevant documents for a given query. It can also be used for document clustering in text analysis, recommendation systems, building user profiles, etc.

2.3. Part-of-Speech (POS) Tagging

POS tagging [66] is defined as the process of mapping words in a text to their corresponding Part-of-Speech tag. The tag is assigned based on both the word’s definition and its context (relationship with other words in the sentence). For example, consider two sentences, say “S1 = Sameera likes to eat ice cream” and “S2 = There are many varieties of ice cream like butterscotch, Kesar-Pista, etc.”. Look at the word “like” in both sentences. The tag assigned to “like” changes according to the sentence: in S1, “like” is a verb, while in S2, “like” is a preposition.

POS tagging uses a predefined tag-set, such as the Penn Treebank tagset; transition probabilities; and word distribution. The tag-set contains a list of tags to be assigned to different words in the corpus. Transition probabilities define the probabilities of transition from one tag to another in a sentence. The word distribution denotes the probability that a particular word may be assigned to a specific tag.

Applications of POS tagging are named entity recognition (recognition of the longest chain of proper nouns), sentiment analysis, word sense disambiguation, etc.

While comparing two sentences, it is sometimes required that different parts of speech be weighted according to the semantic information they convey in a particular context, which can be done using POS tagging. In this paper, we have used POS tagging to give extra weight to nouns and verbs.

In prior studies, D. Falessi et al. [67] used this technique to find equivalent requirements. By comparing different combinations of Natural Language Processing (NLP) techniques required for building the algebraic model, doing term extraction, assigning weights to each term in documents, and measuring similarity among documents, they concluded that the best outcome is observed for a combination of VSM, raw weights, extraction of relevant terms (verbs and nouns only), and the cosine similarity measure. They also did an empirical validation of their results using a case study [68]. Johan [69,70] used similar NLP techniques to find related or duplicate requirements coming from different stakeholders to avoid reworking on a similar requirement.

2.4. Lemmatization

Lemmatization refers to the process of reducing different forms of a word to its root form [71]. For example, “builds”, “building”, or “built” gets reduced to the lemma “build”.

The purpose of lemmatization is to gain homogeneity among different documents, though another mechanism, stemming, can also be used. Stemming works by stripping suffixes from different forms of a word; e.g., a Porter-style stemmer reduces both “studies” and “studying” to “studi”, whereas lemmatization maps both to “study”. As is evident from this example, lemmatization reduces words to a word present in the dictionary, while stemming removes suffixes without caring whether the reduced form is available in the dictionary or not.

Stemming/lemmatization strategies have several applications in natural language processing. In the software engineering field, these are generally used to understand and analyze textual artifacts of the software development process, such as documentation provided at each level, e.g., requirement document, design document, code documentation, etc. Falessi et al. [67,68] employed stemming along with POS tagging as a word extraction strategy to find equivalent requirements.

2.5. Term Weighting

While generating a term-document matrix, each entry carries some weight according to its occurrence in the documents. These weights can be assigned in several ways as discussed below.

Raw frequency: Weights are assigned according to the number of times they appear in a particular document.

Binary: Weight value is one if a term occurs in the document; otherwise, its value would be zero.

Term Frequency (TF): Raw frequency divided by the length of the document. This helps prevent giving more preference to longer text because longer text tends to contain a particular term at a higher frequency than the shorter ones.

Inverse Document Frequency (IDF): IDF is computed from the inverse of the number of documents in which a term occurs (commonly as the logarithm of the total number of documents divided by that count). The IDF score gives less importance to terms that occur in most documents, as such terms are semantically less useful for the document under review.

TF-IDF: Weight for a particular term in a document is computed by combining its TF and IDF values. This mechanism leverages the benefits of both TF and IDF scores.

Word Embeddings: Word embeddings are a way to represent words in the text corpus using distributed representations of words instead of a one-hot encoding vector. Using these vector representations, one can explore the semantic and syntactic similarity of words, the context of words, etc. in the document. Various word embedding techniques exist:

- (i) Word2Vec: Word2Vec is a feedforward neural network with two variants. It either accepts two or more context words as input and returns the target word as output, or vice versa. The former is called the Continuous Bag of Words (CBOW) model and the latter is the Skip-Gram (SG) model.

- (ii) GloVe: GloVe works by generating a high-dimensional vector representation of each word. The training is based on the surrounding words. This high-dimensional matrix (context matrix) is then reduced to a lower-dimension matrix such that maximum variance is preserved. GloVe uses pretrained models that fail for text containing new words. The documentation of methods may contain many new words (such as method names); therefore, GloVe was found unsuitable for this study.

- (iii) fastText: fastText is similar to GloVe, with a feature that makes it suitable for words that are not in the pretrained model. It breaks each word into n-grams and then generates the required context matrix. For example, given the word “fruits” and n = 3, the n-grams generated are <fru, rui, uit, its>. Each row in the matrix represents a generated n-gram instead of a whole word.
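The frequency-based weights listed above (raw frequency, binary, TF, IDF, and TF-IDF) can be sketched as follows. This is an illustrative sketch only: the three toy documents are hypothetical, and the IDF variant shown, log(N / document frequency), is one common choice that is assumed here rather than taken from the study.

```python
import math
from collections import Counter

# Hypothetical corpus: each document is a list of already-extracted terms.
docs = [
    ["add", "vertex", "graph", "vertex"],
    ["remove", "vertex", "graph"],
    ["draw", "figure"],
]

def raw(doc):
    """Raw frequency: number of occurrences of each term in the document."""
    return dict(Counter(doc))

def binary(doc):
    """Binary weight: 1 for every term that occurs in the document."""
    return {term: 1 for term in set(doc)}

def tf(doc):
    """Term frequency: raw frequency divided by document length."""
    return {term: count / len(doc) for term, count in Counter(doc).items()}

def idf(corpus):
    """Assumed IDF variant: log(N / number of documents containing the term)."""
    n = len(corpus)
    doc_freq = Counter(term for doc in corpus for term in set(doc))
    return {term: math.log(n / df) for term, df in doc_freq.items()}

def tf_idf(doc, idf_scores):
    """TF-IDF: product of the TF and IDF scores of each term."""
    return {term: weight * idf_scores[term] for term, weight in tf(doc).items()}

idf_scores = idf(docs)
first_doc_weights = tf_idf(docs[0], idf_scores)
```

A term such as "vertex", which appears in two of the three documents, receives a lower IDF score than "draw", which appears in only one.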

2.6. Similarity Measures

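Two text documents are compared for similarity using established similarity metrics. As this study uses the term-document matrix, we are only interested in vector similarity measures. There are three commonly used vector similarity metrics: cosine, Jaccard, and Dice. For two vectors, say $X$ and $Y$, the three metrics, in their standard forms, are calculated as follows:

$$\text{cosine}(X,Y) = \frac{X \cdot Y}{\lVert X \rVert \, \lVert Y \rVert}$$

$$\text{dice}(X,Y) = \frac{2\,|X \cap Y|}{|X| + |Y|} \tag{3}$$

$$\text{jaccard}(X,Y) = \frac{|X \cap Y|}{|X \cup Y|} \tag{4}$$

where, for the set-based measures, $|X|$ is the number of terms present in $X$, $|X \cap Y|$ is the number of terms present in both documents, and $|X \cup Y|$ is the number of terms present in at least one of them.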
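The three measures can be sketched for binary term vectors as shown here. The code assumes the standard textbook definitions (dot product over norms for cosine, intersection over union for Jaccard, twice the intersection over the sum of sizes for Dice); the example vectors are hypothetical.

```python
import math

def cosine(x, y):
    """Dot product divided by the product of the Euclidean norms."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm else 0.0

def jaccard(x, y):
    """Terms present in both vectors over terms present in at least one."""
    both = sum(1 for a, b in zip(x, y) if a and b)
    either = sum(1 for a, b in zip(x, y) if a or b)
    return both / either if either else 0.0

def dice(x, y):
    """Twice the shared terms over the total number of terms in each vector."""
    both = sum(1 for a, b in zip(x, y) if a and b)
    total = sum(1 for a in x if a) + sum(1 for b in y if b)
    return 2 * both / total if total else 0.0

# Hypothetical binary vectors over a five-term vocabulary.
x = [1, 1, 0, 1, 0]
y = [1, 0, 0, 1, 1]

scores = {"cosine": cosine(x, y), "jaccard": jaccard(x, y), "dice": dice(x, y)}
```

For these vectors, two terms are shared out of four that occur in either document, so the Jaccard score is 0.5, while Dice and cosine both come out at 2/3.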

2.7. Thresholds Used

Certain thresholds are used for the proper execution of the proposed mechanism:

- (i) #Method_Tokens: This threshold is used to filter out small methods. Only methods with a token count greater than this threshold are considered in the comparison process. This helps in getting more relevant results.

- (ii) #Simterms: #Simterms defines the count of similar terms obtained by textual comparison of each pair of documentation, to keep track of the ordering of terms. This threshold allows only those documents to be compared which have a similar ordering of terms greater than the threshold value.

- (iii) Simmeasure: Simmeasure defines the similarity value obtained by using the similarity measures given in Section 2.6 (i.e., cosine, Jaccard, or Dice similarity) between the pair of documentations. This threshold ensures that the resulting clone pairs have similarity greater than the threshold value.

- (iv) K: The value used for arriving at the Kth approximation of the term-document matrix. It is used when performing LSI to ensure that maximum variance is preserved when reducing the high-dimensional matrix to a lower dimension.

2.8. Precision and Recall

In this study, recall refers to the ratio of the number of items that are known to be true clones and are detected successfully (the number of true positives, say $x$) to the total number of true clones, say $y$:

$$\text{recall} = \frac{x}{y}$$

Here, precision refers to the ratio of the number of true positives in the detected clones set, say $p$, to the total number of clones in the detected clones set, say $q$:

$$\text{precision} = \frac{p}{q}$$
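The two ratios can be sketched over sets of clone pairs as follows. The method names and the three sets are hypothetical; each pair is stored as a `frozenset` so that the order of the two methods does not matter.

```python
def pair(a, b):
    """Order-independent clone pair."""
    return frozenset((a, b))

def recall(detected, known_true):
    """x / y: known true clones that were detected, over all known true clones."""
    return len(detected & known_true) / len(known_true) if known_true else 0.0

def precision(detected, validated_true):
    """p / q: detected pairs confirmed as true clones, over all detected pairs."""
    return len(detected & validated_true) / len(detected) if detected else 0.0

# Hypothetical data: pairs reported by a detector, pairs listed in a benchmark,
# and pairs confirmed by manual inspection of the reported results.
detected = {
    pair("addVertex", "insertVertex"),
    pair("removeEdge", "deleteEdge"),
    pair("draw", "size"),
}
benchmark = {pair("addVertex", "insertVertex"), pair("clear", "reset")}
validated = {pair("addVertex", "insertVertex"), pair("removeEdge", "deleteEdge")}

r = recall(detected, benchmark)
p = precision(detected, validated)
```

Here one of the two benchmark pairs is detected, and two of the three detected pairs are confirmed.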

5. Clone Detection Process

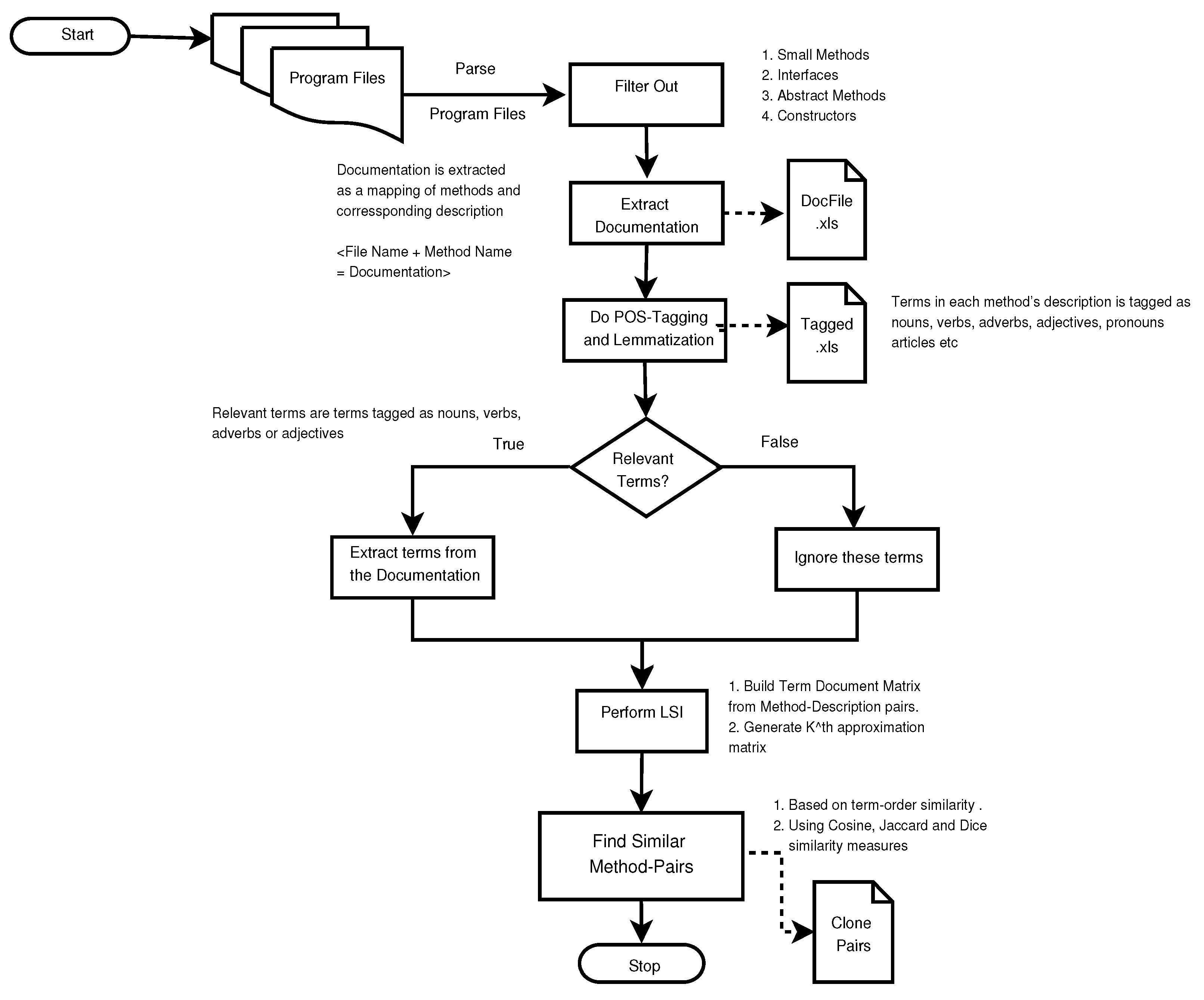

This section briefly describes the clone detection process used in this paper. We have taken three open-source software systems written in Java to validate our findings empirically. The steps carried out in the process are as follows.

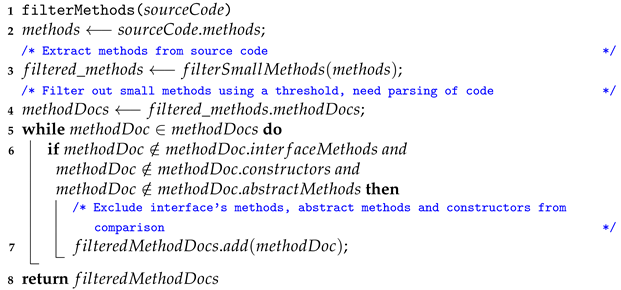

Step 1: Filtering out Interfaces, Abstract Methods, Small Methods, and Constructors: Programs written in java contain a large number of interfaces and methods that need to be filtered out because they do not contribute to relevant clones. This helps in increasing the performance of the proposed mechanism by reducing the number of spurious clones and makes the overall analysis of resulting clone pairs less time-consuming (see Algorithms 1 and 2). The following programming constructs shall be filtered out:

- (i) Interfaces: An interface contains only the names of methods without any implementation. Classes that implement the interface need to implement the required functionality for each of the methods. As interfaces do not contain any implementation, they are filtered out because they do not contribute much to the detection of relevant clones.

- (ii) Abstract Methods: Abstract methods are similar to the methods in interfaces and do not contain any implementation. Thus, they are also filtered out.

- (iii) Small Methods: Methods that are smaller than a particular threshold, i.e., a specific number of tokens, are also filtered out. This threshold value is a hyperparameter. This filtering helps to remove various setter and getter methods and methods that only call other methods to perform the required functionality. These are generally single-line methods whose detection as clones serves little purpose.

- (iv) Constructors: In this study, constructors are not taken into account for clone detection; thus, they are also filtered out.
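The four filters above can be sketched as a single predicate. This is not a reproduction of Algorithms 1 and 2: the `MethodInfo` record and its fields are hypothetical, and the default of 50 tokens mirrors the threshold reported later for JGrapht. Whether the boundary value itself is kept is an assumption.

```python
from dataclasses import dataclass

@dataclass
class MethodInfo:
    """Hypothetical record describing one parsed Java method."""
    name: str
    token_count: int
    in_interface: bool = False
    is_abstract: bool = False
    is_constructor: bool = False

def keep_for_clone_detection(method, min_tokens=50):
    """Return True if the method survives all four filters of Step 1."""
    if method.in_interface or method.is_abstract or method.is_constructor:
        return False
    # Assumption: strictly greater than the threshold, following Section 2.7.
    return method.token_count > min_tokens

methods = [
    MethodInfo("getName", 12),                       # small method
    MethodInfo("shortestPath", 140),                 # kept
    MethodInfo("size", 80, in_interface=True),       # interface method
    MethodInfo("Graph", 90, is_constructor=True),    # constructor
    MethodInfo("visit", 75, is_abstract=True),       # abstract method
]

kept = [m.name for m in methods if keep_for_clone_detection(m)]
```

Only `shortestPath` survives in this example, since every other entry trips one of the filters.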

Algorithm 1: Filter out small methods.

| Algorithm 2: Filter out methods that least contribute to clones in software systems. |

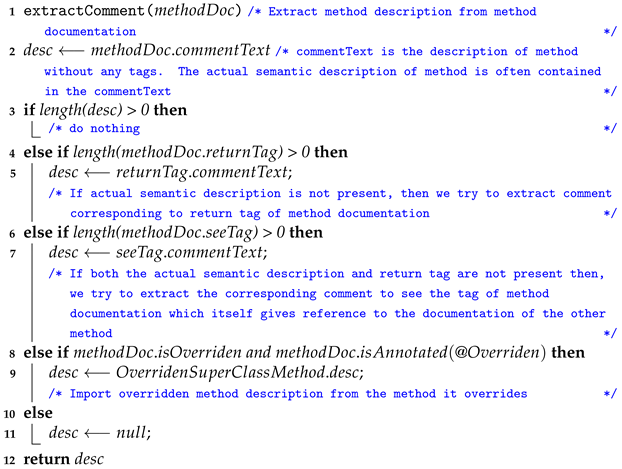

![Symmetry 13 00447 i002 Symmetry 13 00447 i002]() |

Step 2: Extraction of Documentation: In this work, only documentation related to methods is used, as described in Section 4. Furthermore, we need to extract only the description associated with methods (see Algorithm 3). If the description is not present, the proposed method uses the descriptive tags @return and @see to extract a suitable description for the method.

- (i) The @return tag describes what is returned from the function. The contents of the @return tag are used when the description is absent.

- (ii) The @see tag is used when a lookup to another method/class is required (no description for the method and no @return tag is present).
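The fallback order described above (description first, then @return, then @see) can be sketched on a raw Javadoc comment string. This is a simplified illustration and not Algorithm 3: the study extracts documentation through a doclet, whereas the hypothetical `extract_description` helper below uses regular expressions, and it merely returns the @see target, leaving the lookup of the referenced method to the caller.

```python
import re

def extract_description(javadoc):
    """Return the description, else the @return text, else the @see target."""
    # Strip the /** and */ delimiters, then the leading '*' of each line.
    body = re.sub(r"^\s*/\*\*|\*/\s*$", "", javadoc.strip())
    lines = [re.sub(r"^\s*\*\s?", "", ln).rstrip() for ln in body.splitlines()]

    description, tags, current = [], {}, None
    for ln in lines:
        match = re.match(r"@(\w+)\s*(.*)", ln)
        if match:                       # a new block tag starts here
            current = match.group(1)
            tags.setdefault(current, []).append(match.group(2))
        elif current is None:           # still inside the main description
            description.append(ln)
        else:                           # continuation line of the last tag
            tags[current][-1] += " " + ln.strip()

    text = " ".join(part.strip() for part in description if part.strip())
    if text:
        return text
    for tag in ("return", "see"):       # fallback order from Step 2
        if tag in tags and tags[tag][0].strip():
            return tags[tag][0].strip()
    return ""

with_description = "/**\n * Adds a vertex.\n * @param v the vertex\n * @return true if added\n */"
only_return = "/**\n * @return the number of edges\n */"
only_see = "/**\n * @see Graph#addEdge\n */"
```

Calling `extract_description(only_return)` yields the text of the @return tag, because the comment has no description of its own.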

Algorithm 3: Extract comments from program’s source code.

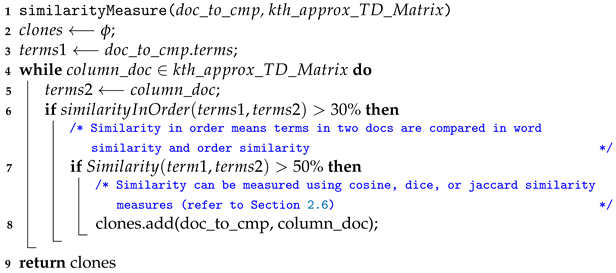

Algorithm 4: Find similarity between documents.

Overridden methods are usually annotated with the @Override annotation. If documentation is not available for these methods, it is extracted from the method being overridden.

Further, to extract Java documentation in a processable file format, we used the Doclet API that ships with the JDK (by default, Javadoc output is generated as HTML pages). Steps 1 and 2 collectively describe the key features our generated Javadoc should have.

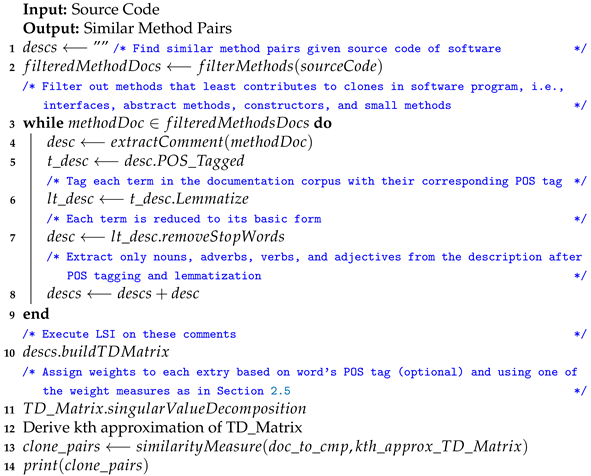

Algorithm 5: Using POS tagging and LSI to find similar methods.

Step 3: Part-of-Speech (POS) Tagging of Documentation and Lemmatization: For each method’s extracted documentation, POS tagging is performed (see Algorithm 5 (Step 5)). The purpose of this step is twofold:

- (i) To give more weight to nouns and verbs in a sentence.

- (ii) To remove stop words by extracting only words tagged as verbs, adjectives, nouns, and adverbs (see Algorithm 5 (Step 7)).

Lemmatization is done to reduce each term in documentation to its base form, to curb differences among documentation arising out of different forms of words (see Algorithm 5 (Step 6)).
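The effect of Step 3 can be sketched on a sentence that has already been tagged. To stay self-contained, the sketch takes (word, tag) pairs with Penn Treebank tags as input instead of calling a tagger, and uses a tiny hand-written lemma table. The boost factor of 2.0 for nouns and verbs is a hypothetical value; the study does not state the weight it uses.

```python
from collections import Counter

# Penn Treebank tag prefixes for nouns, verbs, adjectives, and adverbs.
CONTENT_PREFIXES = ("NN", "VB", "JJ", "RB")
BOOSTED_PREFIXES = ("NN", "VB")   # nouns and verbs get extra weight

def weighted_terms(tagged, lemmas, boost=2.0):
    """Drop non-content words, lemmatize the rest, and weight nouns and verbs."""
    weights = Counter()
    for word, tag in tagged:
        if not tag.startswith(CONTENT_PREFIXES):
            continue   # acts as stop-word removal (determiners, prepositions, ...)
        lemma = lemmas.get(word.lower(), word.lower())
        weights[lemma] += boost if tag.startswith(BOOSTED_PREFIXES) else 1.0
    return dict(weights)

# Hypothetical pre-tagged method description and lemma table.
tagged = [
    ("Returns", "VBZ"), ("the", "DT"), ("shortest", "JJS"), ("path", "NN"),
    ("between", "IN"), ("two", "CD"), ("vertices", "NNS"),
]
lemmas = {"returns": "return", "vertices": "vertex"}

terms = weighted_terms(tagged, lemmas)
```

The determiner, preposition, and cardinal number are dropped in one pass, which is the sense in which POS tagging combines term weighting and stop-word removal.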

Step 4: Latent Semantic Indexing (LSI): LSI (see Algorithm 5 (steps 10–15)) is performed as follows:

- (i) Extract those terms tagged as verbs, nouns, adjectives, or adverbs.

- (ii) Perform LSI on these extracted terms. The VSM built contains weights (matrix entries), as described in Section 2.5. Weights can also be assigned by giving extra weight to certain parts of speech (nouns and verbs).

- (iii) For each pair of method descriptions, use the similarity measures described in Section 2.6, also taking into account similarity based on term ordering, to find clone pairs in a software project (see Algorithm 4).
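The pair selection in item (iii) can be sketched as follows, assuming each method already has a reduced document vector from LSI and an ordered list of terms. This is not Algorithm 4: the use of `difflib.SequenceMatcher` as the term-ordering measure is an assumption, and the method names and vectors are hypothetical. The default thresholds mirror the Simmeasure and #Simterms values reported for JGrapht.

```python
import math
from difflib import SequenceMatcher
from itertools import combinations

def cosine(x, y):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm else 0.0

def ordering_similarity(terms_a, terms_b):
    """Assumed ordering measure: ratio of terms matched in the same order."""
    return SequenceMatcher(None, terms_a, terms_b).ratio()

def clone_pairs(methods, sim_threshold=0.5, order_threshold=0.3):
    """methods maps a method name to (ordered terms, reduced document vector)."""
    pairs = []
    for (a, (terms_a, vec_a)), (b, (terms_b, vec_b)) in combinations(methods.items(), 2):
        if ordering_similarity(terms_a, terms_b) < order_threshold:
            continue   # term ordering too different, pair is not compared
        score = cosine(vec_a, vec_b)
        if score >= sim_threshold:
            pairs.append((a, b, round(score, 3)))
    return pairs

# Hypothetical input: terms after Step 3 and vectors after LSI.
methods = {
    "addVertex": (["add", "vertex", "graph"], [0.9, 0.1]),
    "insertVertex": (["insert", "vertex", "graph"], [0.8, 0.2]),
    "drawFigure": (["draw", "figure", "canvas"], [0.0, 1.0]),
}

pairs = clone_pairs(methods)
```

Checking the ordering threshold first keeps the more expensive vector comparison for pairs whose descriptions already overlap in sequence.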

For the complete algorithm, refer to Algorithms 1 to 5. The flowchart representation of this algorithm is given in Figure 2.

6. Empirical Validation

To empirically validate the proposed mechanism, it has been applied to three open-source Java projects, namely, JGrapht [73], Collections [74], and JHotDraw [75]. To present the efficacy of the proposed approach, the results obtained were validated against the benchmark Qualitas Corpus (QCCC) [76], which was provided by Ewan Tempero [77]. The researcher prepared a corpus of clones for 111 open-source Java projects. However, the corpus only presents type-1, type-2, and type-3 clones, while our mechanism reports high-level concept clones. The validation is justified because type-1, type-2, and type-3 clones are very similar in structure and mostly implement similar concepts. The recall values obtained on validation also indicate that concept clones generally cover most clone pairs falling in these three categories. Nevertheless, manual validation of the resulting clone pairs is necessary for accuracy calculations.

Table 2 gives details of projects used for the empirical validation. The details include #Files analyzed, #Methods (excluding constructors, abstract methods, methods of interfaces, and small methods), Estimated Lines of Code (ELOC; excluding constructors, abstract methods, methods of interfaces, and small methods), and % of Documented Methods (i.e., % of methods documented before manual modification (excluding constructors, abstract methods, and methods of interfaces)), which shows that with increase in size, the number of documented methods decreases.

After applying POS tagging, lemmatization, and stop-word removal to the descriptions of methods, different weighting measures were used to generate the term-document matrix. Thereafter, to obtain a list of clone pairs, different similarity measures (to measure similarity between term-document vectors) were applied after performing LSI. Table 3 summarizes all the combinations of weight and similarity measures used in this study. Furthermore, with each combination, POS tagging is used in two ways, i.e., with or without selective weight assignment (giving priority to certain parts of speech). Selective weight assignment cannot be used with the binary weight measure because a binary weight can only hold the values 1 and 0.

Owing to the small size (ELOC is approx 6K), the JGrapht project has been used to illustrate the underlying working of the proposed mechanism. The recall is measured by a comparison of results obtained using our mechanism with the one provided in QCCC. Precision calculations are made for individual methods through augmenting manual validation of resulting clone pairs.

6.1. Relevance of Jaccard and Dice Similarity Measures with LSI

The reason behind using only binary weight measures with the Jaccard and Dice similarity measures can be explained with the help of a counterexample.

The Jaccard similarity measure is calculated as given in Equation (4). For two vectors with raw frequency weights, say x = {1,2,1,0,1,2} and y = {1,2,2,1,0,1}, Jaccard(x,y) = 2/5 = 0.4. The measure treats (1,2) and (0,1) as different numbers and considers these documents as not similar w.r.t. these terms. In reality, however, methods with vector representations x and y are highly similar, as these numbers indicate the frequency of occurrence of terms in the method descriptions being compared. A similar example can be quoted for the TF weight measure and the non-applicability of LSI. Furthermore, the Dice similarity measure, as given in Equation (3), can be used only with the binary weight measure and cannot be combined with LSI, based on a similar explanation.
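As a point of comparison, cosine similarity on the same two raw-frequency vectors can be worked out directly. The sketch below is illustrative only: it applies the standard cosine formula and does not attempt to reproduce how the Jaccard value above was obtained.

```python
import math

# The two raw-frequency vectors from the counterexample.
x = [1, 2, 1, 0, 1, 2]
y = [1, 2, 2, 1, 0, 1]

dot = sum(a * b for a, b in zip(x, y))        # 1 + 4 + 2 + 0 + 0 + 2 = 9
norm_x = math.sqrt(sum(a * a for a in x))     # sqrt(11)
norm_y = math.sqrt(sum(b * b for b in y))     # sqrt(11)

cosine_xy = dot / (norm_x * norm_y)           # 9 / 11, about 0.82
```

The cosine score of about 0.82 is consistent with the observation that the two descriptions are highly similar, which a measure that only checks for exact agreement of frequencies does not capture.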

6.2. Results and Discussion

The results of empirical validation of each of the case studies are presented here.

6.2.1. Empirical Validation Using JGrapht

JGraphT is a free Java class library that provides mathematical graph-theory objects and algorithms. It runs on java 2 platform (requires JDK 1.8 or later starting with JGraphT 1.0.0).

This subsection provides execution details of the proposed mechanism on JGrapht. The thresholds (see Section 2.7) used for arriving at the results are as follows:

- (i) #Method_Tokens: Small methods are filtered out using a minimum threshold of 50 on the number of tokens in a method (Evans used the same threshold on the number of Abstract Syntax Tree (AST) nodes in a method [77]).

- (ii) K: For LSI, the value of K is taken as half of the total number of terms in the text corpus.

- (iii) Simmeasure is taken as 0.5, i.e., documentation of resulting method-level clone pairs should be 50% similar.

- (iv) #Simterms is set to 30%, i.e., documentation of resulting method-level clone pairs should be at least 30% similar in term ordering.

Table 4 shows the results obtained after applying each of the 12 combinations of weight and similarity measures to JGraphT. Column 1 gives the type of combination applied (see Table 3). Column 2 lists the number of clone pairs observed for each applied combination. Column 3 gives the intersection of clone pairs present in both the benchmark QCCC (provided by Evans [77]) and the results of the proposed mechanism. The number of clone pairs reported for JGraphT in QCCC was 85 methods with 6 constructors (constructors have already been filtered out in this study because of their limited relevance). Column 4 gives recall, which is measured by comparing the obtained results against the benchmark. Finally, Column 5 gives precision values, which are obtained by manual analysis of the resulting clone pairs.
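As a rough illustration of how the recall and precision columns could be derived, the sketch below uses the standard definitions of both metrics. This section does not state the formulas explicitly, so that choice is an assumption. The function names and method identifiers are hypothetical, and clone pairs are treated as unordered.

```python
def _as_unordered(pairs):
    """Treat (a, b) and (b, a) as the same clone pair."""
    return {frozenset(pair) for pair in pairs}


def recall(reported_pairs, benchmark_pairs):
    """Share of benchmark clone pairs that the tool also reported."""
    benchmark = _as_unordered(benchmark_pairs)
    if not benchmark:
        return 0.0
    return len(_as_unordered(reported_pairs) & benchmark) / len(benchmark)


def precision(num_true_positives, num_reported):
    """Share of reported clone pairs judged correct by manual analysis."""
    if num_reported == 0:
        return 0.0
    return num_true_positives / num_reported


# Hypothetical method identifiers, for illustration only.
reported = [("A.add", "B.insert"), ("C.size", "D.count"), ("E.sort", "F.order")]
benchmark = [("B.insert", "A.add"), ("E.sort", "F.order"), ("G.peek", "H.top")]

print(recall(reported, benchmark))  # 2 of the 3 benchmark pairs were found
print(precision(2, len(reported)))  # 2 of the 3 reported pairs judged correct
```

In this sketch, the size of the intersection corresponds to Column 3, while the number of true positives passed to `precision` stands in for the outcome of the manual analysis.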

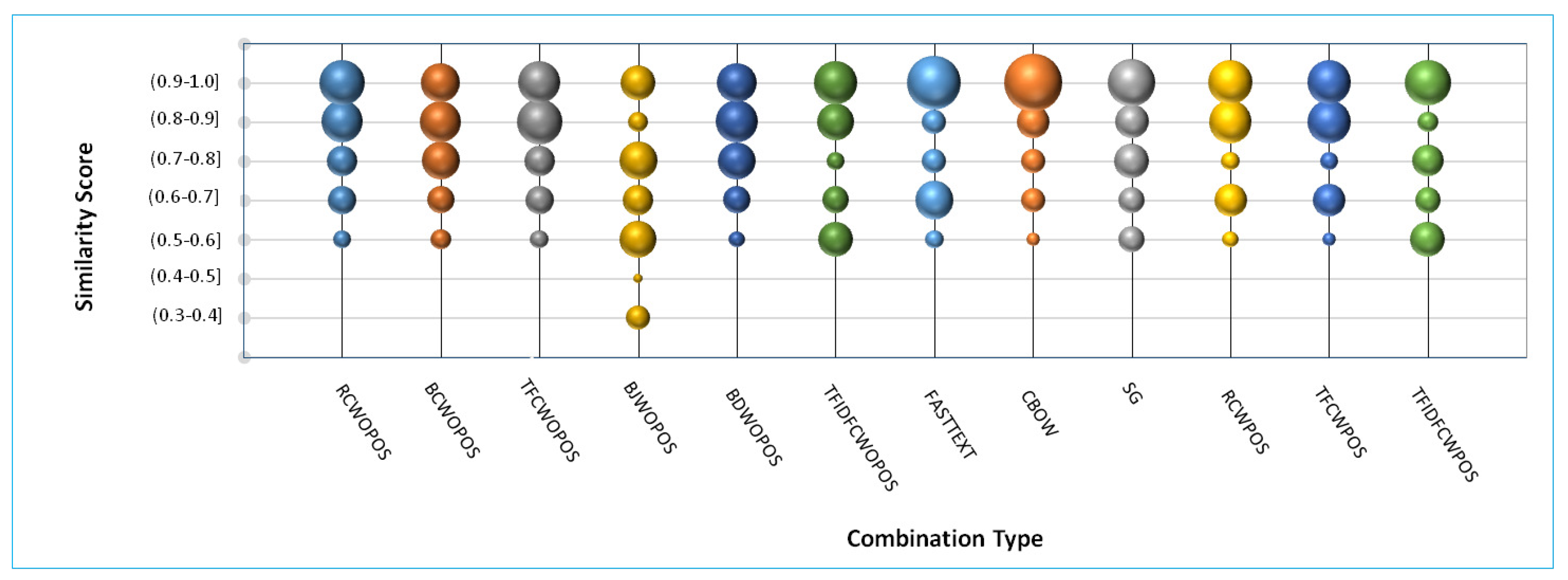

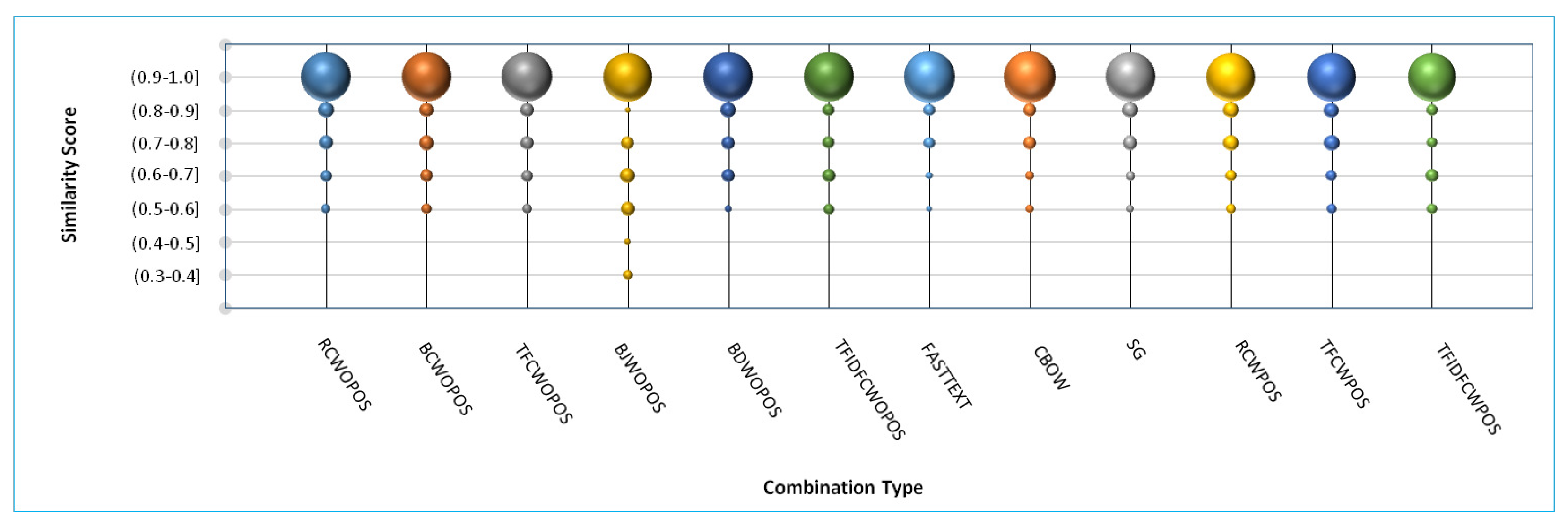

The results are then compared (see Figure 3) to analyze how the number of true positive clone pairs varies with the similarity score. The similarity score can be Cosine, Dice, or Jaccard. An ideal plot should exhibit a large proportion of clone pairs at higher similarity values (say, for similarity score > 0.7). The following observations can be made from the clone pairs identified for JGraphT.

- (i) The large number of clone pairs observed for each combination is attributed to the basic nature of LSI. LSI finds clone pairs by measuring the similarity between terms. Two sentences can contain similar terms while differing in overall meaning; e.g., when a query is run on a search engine, a large number of results are obtained, each with its own ranking. This is also the reason for the low precision values in the result set (see Column 5 of Table 4).

- (ii) Maximum recall is achieved using combination number 4 (binary weight measure and Jaccard similarity measure), and maximum precision is achieved using combination number 12 (TF-IDF weight measure and Cosine similarity measure). Both combinations gave comparatively good values of the precision and recall metrics.

- (iii) While comparing “Similarity Score” with the count of true positive clone pairs in the result set, we observed that combinations 1(RCWOPOS), 2(BCWOPOS), 5(BDWOPOS), 3(TFCWOPOS), 8(CBOW), and 9(SG) have the ideal property, as a large proportion of true positive clone pairs is encountered at higher similarity values. The rest of the combinations show a somewhat higher count of clone pairs at low values of the similarity score. Combination 4(BJWOPOS) shows the worst results, as a high number of true positives is observed for similarity scores as low as 0.3.

- (iv) The graphs and tables suggest only a marginal advantage of using POS selective weightage: it increased the precision values, but with a decline in the recall values.

- (v) Of all the combinations, combination 1(RCWOPOS) is preferred, as it gave good values of precision and recall; it also exhibits the defined ideal graph property.
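The “ideal property” used in the observations above can be checked numerically as the share of true positive clone pairs whose similarity score exceeds a cut-off. The sketch below uses the cut-off of 0.7 suggested in the text; the minimum share of 0.5 used to decide what counts as a “large proportion” is an assumption made here, as are the function names and the sample scores.

```python
def high_similarity_share(scores, cutoff=0.7):
    """Fraction of true positive pairs with similarity above the cut-off."""
    if not scores:
        return 0.0
    return sum(1 for s in scores if s > cutoff) / len(scores)


def has_ideal_property(scores, cutoff=0.7, min_share=0.5):
    """Assumption: 'large proportion' means at least min_share of the pairs."""
    return high_similarity_share(scores, cutoff) >= min_share


# Hypothetical similarity scores of true positive pairs for two combinations.
concentrated_high = [0.92, 0.85, 0.78, 0.74, 0.66, 0.41]
concentrated_low = [0.31, 0.35, 0.42, 0.55, 0.72, 0.90]

print(has_ideal_property(concentrated_high))  # True  (4 of 6 above 0.7)
print(has_ideal_property(concentrated_low))   # False (2 of 6 above 0.7)
```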

6.2.2. Empirical Validation Using Collections and JHotDraw

This section gives complete details of the execution of the proposed mechanism on the Collections and JHotDraw open-source software. Each of the 12 combinations of weight and similarity measures is applied to each open-source Java project. Small methods are filtered out using a minimum threshold of 50 on the number of tokens in a method for both projects.

The results of the application of each of the 12 combinations on the two open source projects are given in Table 5 (for Collections case study) and Table 6 (for JHotDraw case study).

Only recall is measured for the results obtained for Collections and JHotDraw, due to time constraints. Because calculating precision is a manual and time-intensive process for such large result sets of clone pairs, it was performed only for JGraphT, to show the relevance of the proposed mechanism. The number of clone pairs reported in the benchmark for Collections is 1037 methods and 3 constructors, and for JHotDraw is 2550 methods and 199 constructors.

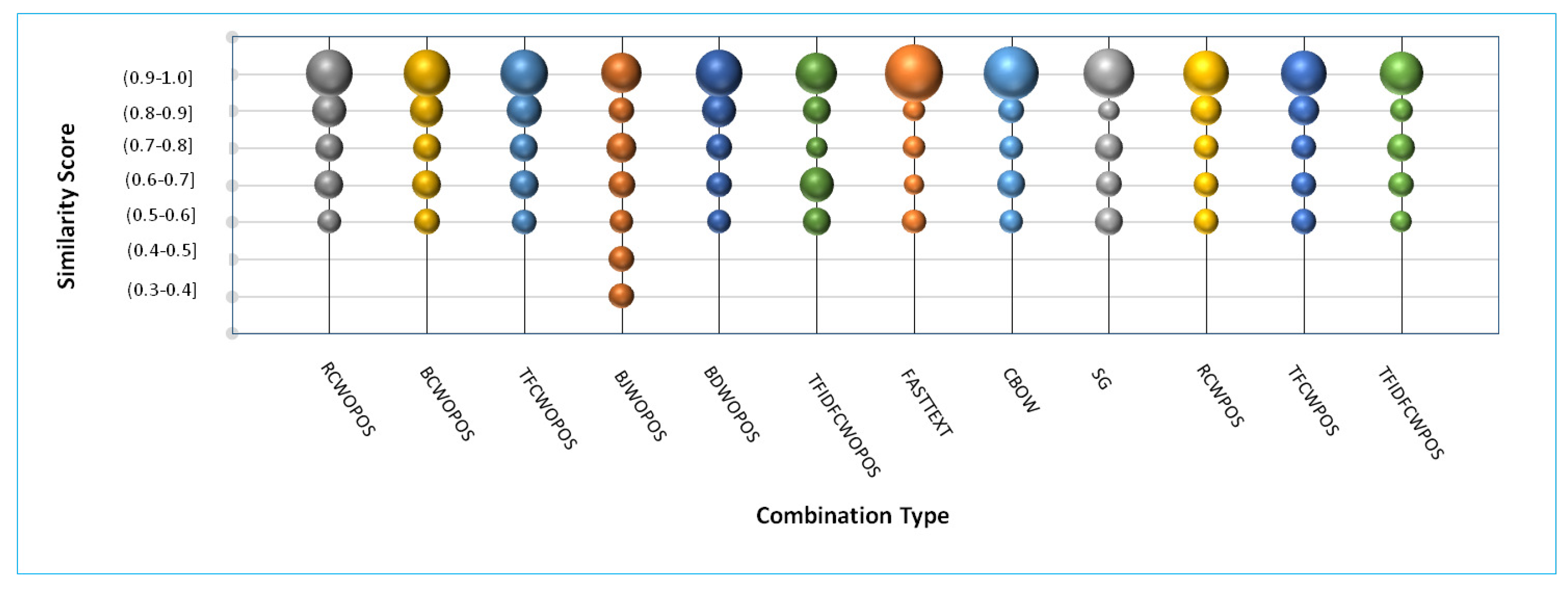

A comparison similar to that for JGraphT (between similarity scores and the count of true positive clone pairs) is also made for the Collections and JHotDraw case studies (see Figure 4 and Figure 5). The following observations can be made from these graphs and tables.

- (i) For the Collections case study, combinations 1(RCWOPOS), 2(BCWOPOS), 3(TFCWOPOS), 5(BDWOPOS), 7(FASTTEXT), 8(CBOW), 10(RCWPOS), and 11(TFCWPOS) show the ideal curve property. Here too, as with JGraphT, combination 4(BJWOPOS) shows the worst results, with a significant number of true positives observed at lower similarity scores. Combinations 1(RCWOPOS), 2(BCWOPOS), and 3(TFCWOPOS) also show good results in terms of both recall and concentration of clone pairs.

- (ii) For the JHotDraw case study, all the combinations showed similar behavior: all of them satisfy the defined ideal property (except combination 4). Here, combination 4(BJWOPOS) again gave the worst results, for the reasons mentioned above. The highest recall values are shown by combinations 2, 4, and 8. Combinations 1, 3, and 9 also have good recall values, with only a 1% decrease in recall.

We used a Windows 10 machine with an Intel(R) Core(TM) i5-6200U CPU running at 2.7 GHz for the three case studies. The execution time increases exponentially with the number of documents (each document contains a method linked with its documentation).

6.3. Exploring the Research Questions

RQ1: How is documentation present along with source code in a software system useful for finding concept clones?

The results of the three case studies show good recall values for the proposed mechanism, thereby demonstrating the effectiveness of considering a method’s documentation for clone detection purposes. This shows the strength of the LSI mechanism in linking semantically related documents together. Therefore, using the documentation present along with source code is beneficial for high-level concept clone detection.

Java projects follow a certain structure and style while building the documentation, as given in Section 4. We saw a similar structure in the case studies used. Table 7 gives examples of each documentation characteristic seen in the case studies, along with the manual modifications that can be applied in each situation. Looking at the availability of method documentation in these software projects, we observed that not all methods are documented. Table 2 shows the percentage of documented methods in JGraphT (80%), Collections (70%), and JHotDraw (53%), which makes the manual modifications described in Section 4 necessary.

For this technique to work credibly for Java-based projects without the requirement of manual checking, the following points need to be taken care of:

- The description part of the documentation must contain only comments related to the semantics of the method.

- If the description part is absent, the documentation should contain a description associated with the @return tag or a reference to similar method documentation using the @see tag (so that the referenced documentation can be used instead).

- Only overridden methods (methods overriding some other method present in the project) with @Override annotations are allowed to be undocumented.

- All the methods in a project should follow a uniform style of documentation.

For projects in other languages, the documentation should follow a uniform style and should contain only descriptions related to the semantics of methods. These two requirements are necessary for the proposed technique to give useful results.

Most importantly, methods should not be left undocumented.
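The fallback order described in the points above (description first, then the @return text, then an @see reference) could be sketched as follows. This is a simplified illustration under stated assumptions, not the extraction step used in the study: the function name `documentation_text`, its return convention, and the regular expressions are all introduced here, and inline tags such as {@link ...} are not handled.

```python
import re


def documentation_text(javadoc):
    """Return (source, text) for a Javadoc comment, or None if it is empty.

    source is "description", "return", or "see" (hypothetical labels).
    """
    # Strip the /** and */ delimiters, then the leading asterisk of each line.
    body = re.sub(r"^\s*/\*\*|\*/\s*$", "", javadoc.strip())
    lines = [re.sub(r"^\s*\*\s?", "", line) for line in body.splitlines()]
    text = "\n".join(lines).strip()

    # Everything before the first block tag is the description part.
    description = " ".join(
        re.split(r"^\s*@", text, maxsplit=1, flags=re.M)[0].split()
    )
    if description:
        return ("description", description)

    # Fallback 1: the text attached to the @return tag.
    match = re.search(r"^\s*@return\s+(.*?)(?=^\s*@|\Z)", text, flags=re.M | re.S)
    if match and match.group(1).strip():
        return ("return", " ".join(match.group(1).split()))

    # Fallback 2: the target of an @see tag, to be looked up by the caller.
    match = re.search(r"^\s*@see\s+(\S+)", text, flags=re.M)
    if match:
        return ("see", match.group(1))
    return None


doc = """/**
 * @param g the graph
 * @return the edge count
 */"""
print(documentation_text(doc))  # ('return', 'the edge count')
```

A method for which the function returns None would fall under the last requirement above, i.e., it is effectively undocumented.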

RQ2: What are the implications of applying different combinations of weight and similarity measures for performing LSI on the extracted method’s documentation?

We examined the results obtained using all the combinations listed in Table 3. We observed that all of them gave similar results, with minor variation in recall values. In all the case studies, combination 3 (TF weights and Cosine similarity without POS selective weightage) outperforms the others, while combination 4 (binary weights and Jaccard similarity measure) performs the worst. The ranking of the 12 combinations is given in Table 8. This ranking is based on the recall values and on whether the combination exhibits the ideal property (a large proportion of clone pairs concentrated at higher similarity values). It can be seen that while combination 4 gave good recall values, it is ranked lowest because it shows a large proportion of clone pairs for similarity values as low as 0.3.

RQ3: Is the technique scalable, i.e., applicable to large-sized projects?

In the case studies, it can be observed that as project size increases, the proportion of documented methods decreases. The style of documentation also becomes nonuniform, because larger projects involve more developers, who follow different styles of documentation. Section 7 explains how the documentation of methods becomes nonuniform. However, if proper documentation structures are followed, the proposed mechanism should scale well even to large projects.

8. Conclusions and Future Work

The documentation of a software system’s methods is highly expressive in describing “what the method does”. In this study, applying information retrieval techniques to this documentation to extract functionally similar or nearly functionally similar methods gave impressive results. Recall values were found to be between 68% and 89% for the three case studies, i.e., JGraphT, JHotDraw, and Collections. However, the number of clone pairs identified is large, which is attributed to LSI’s capability to match contextually similar terms in different documents. The proposed technique considers not only similar terms in the documentation but also their ordering, e.g., the two strings “Are you there?” and “there you are” are processed differently. For certain combinations, we used a selective POS weight assignment strategy, which was not found to improve the results significantly over the counterparts that do not use it. Different weight measures (Raw, Binary, TF, and TF-IDF) and models (SG, CBOW, and FASTTEXT) were used in the study. The study shows that the combination of TF weights and Cosine similarity, without POS selective weightage, gives better results in all three case studies for predicting similar methods from their documentation. The relatively low values of the “Precision” metric could be justified by the simplicity and lower implementation cost of the proposed mechanism.

Future work may consider tag-based comparison, i.e., the content of each tag (@param, @see, @return, etc.) may be compared separately, especially for Java-based projects. Precision can also be greatly improved if, along with the documentation of the code, the source code itself takes part in the clone detection process. Various researchers also recommend combining IR techniques with other clone detection tools to improve their results. Advanced variations of latent semantic indexing can also be used in further experiments to analyze and compare their ability to give improved clone detection results.