Recommendation Model Based on a Heterogeneous Personalized Spacey Embedding Method

Abstract

:1. Introduction

2. Related Work

3. Proposed Model and Baseline Algorithms

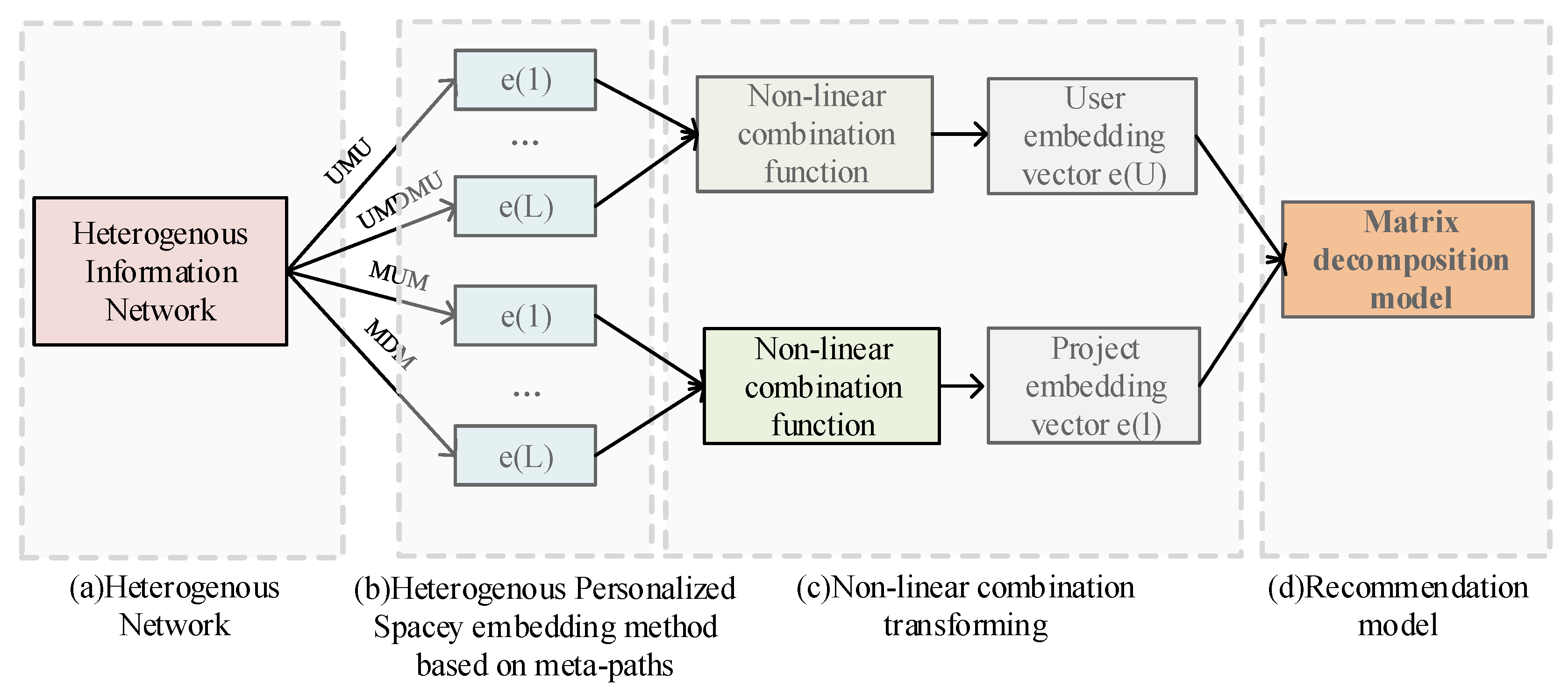

- A meta-path-based heterogeneous personalized spacey random walk (MPHPSRW) strategy and a heterogeneous Skip-Gram algorithm are applied to network representation learning in order to learn the embedding vectors of users and items according to different meta-paths.

- The learned embedding vectors of the different meta-path nodes conduct a nonlinear fusion transformation to generate the final user and item vectors.

- The final user and item vectors are used to construct the objective function. The objective function generates a loss function in the Matrix Factorization framework and updates the parameters by optimizing the loss function. Finally, the specific form of the objective function is obtained, and the rating score is predicted based on the obtained objective function.

3.1. Meta-Path-Based Heterogeneous Personalized Spacey Random Walk

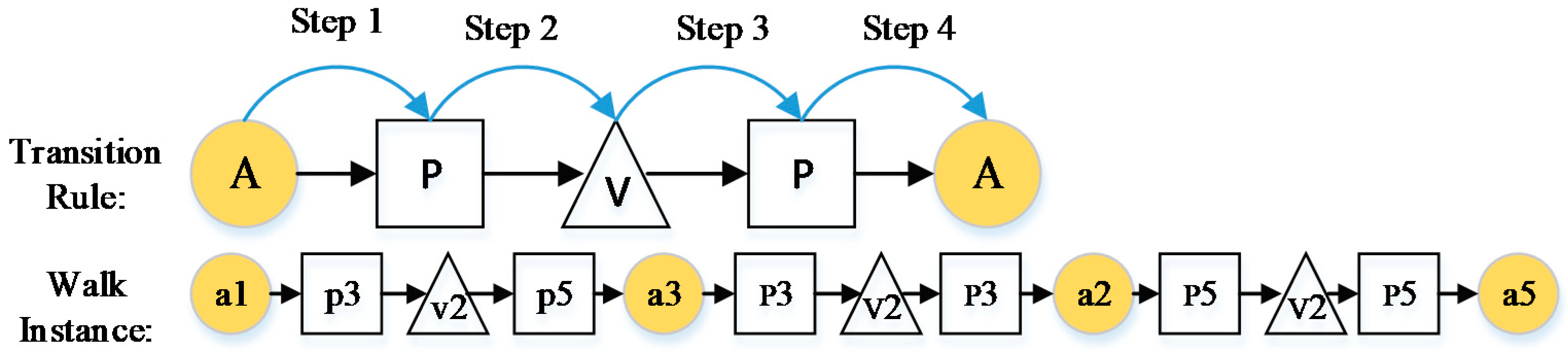

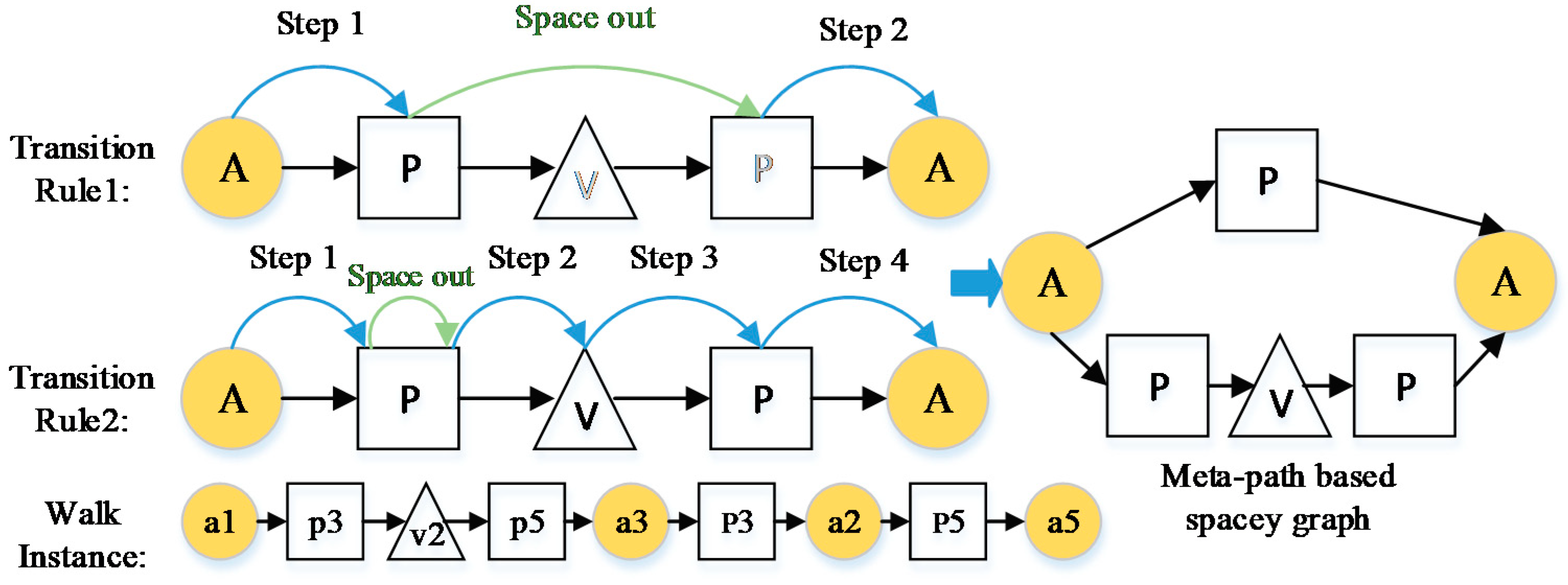

- Algorithm idea: The standard meta-path based random walk is essentially a higher-order Markov chain. Node transfers in a random walk are equivalent to the transfer probabilities of higher-order Markov chains, whose SDs take up a lot of memory, and for large datasets consume a lot of memory for storage. The memory is optimized in the spacey random walk, providing a spatially efficient alternative approximation and mathematically guaranteeing the SD with convergence to the same limit, saving memory usage to some extent. In addition, the spacey random walk also ignores unimportant intermediate states to get a more efficient node sequence. For personalization, α acts as a hyperparameter to control the user’s personalization behavior, and once the spacey random walk visits X(n) at step n it skips and forgets the penultimate state X(n − 1) by probability α.

- Spacey random walk strategy: In heterogeneous networks, the random walk strategy guided by meta-paths was first proposed in the Path Ranking Algorithm [31], which computes the similarity between nodes. Equation (1) is the key transfer probability formula in the Path Ranking Algorithm. It is applied in part (b) of Figure 1 to compute the importance of all meta-paths.

3.2. Heterogeneous Skip-Gram Algorithm

3.3. Personalized Nonlinear Fusion Function

3.4. Recommendation Model

- Modeling rating prediction: First of all, we need to establish the rating prediction expression, input the user and item embedding vectors obtained in the previous step into the recommendation model based on the Matrix Factorization model [35], and put the fusion function into the Matrix Factorization framework to learn the parameters of the model. The final rating prediction expression is shown in Equation (15).

- 2.

- Establishing the loss function: After building the model, the parameters in the model need to be learned and solved. The loss function is shown in Equation (16).

- 3.

- Parameter learning: The stochastic gradient descent algorithm is used to optimize the loss function. The original implicit layer factors and are updated in the same way as the original Matrix Factorization. The other parameters in the model can be updated by the following Equations (17)–(20):

| Algorithm 1 Recommendation Model Based on a Heterogeneous Personalized Spacey Embedding Method |

| Input: Rating Matrix R, Dataset S, walk node time w, walk length l, embedding vector dimension d, neighbor domain k. Output: Model of rating prediction (named by MPHSRec) 1. Create heterogeneous information network HN from S with Networkx tool. 2. Compute Path(U), Path(I) for user meta sets and item meta sets. 3. For each path of Path(U), Path(I): 4. Ulist = GainNodeSequence(Path(U)), Ilist = GainNodeSequence(Path(I)) 5. by Equation (1) to Equation (6). 6. GetEmbeddi_ 7. . 8. For each vector of ,: 9.=Fusion (), =Fusion () by Equation (11) 10. to Equation (13). 11. UIMX=Fusion () into Matrix Factorization model from [35]. 12. Model=CreateMPHSRec(UIMX) by Equation (15). 13. Create loss function for Model by Equation (16). 14. For each of iterations N: 15. Optimize loss function by Equation (17) to Equation (21). 16. Save Model. |

3.5. Statement of Baseline Algorithm

- DeepWalk is a homogeneous network embedding model that uses the traditional random walk algorithm to obtain contextual information to learn low-dimensional node vectors.

- LINE is a method based on the neighborhood similarity assumption. It uses different definitions of similarity between vertices in the graph, including first-order and second-order similarity.

- Metapath2vec is a heterogeneous information network-based embedding method that generates heterogeneous neighborhoods by an ordinary random walk based on meta-paths and learns node embedding vectors by the heterogeneous Skip-Gram algorithm.

- PMF is a classical probabilistic Matrix Factorization model, where the score of Matrix Factorization is reduced to a low-dimensional user matrix and a product matrix.

- SemRec is a collaborative filtering method based on a weighted heterogeneous information network constructed by connecting users and items with the same rank. It flexibly integrates heterogeneous information for recommendation using weighted meta-path and weight fusion methods.

- Variation of HERec: HERec is a recommendation algorithm based on a heterogeneous information network that uses the heterogeneous embedding method to learn the low-dimensional vectors of users and items in the heterogeneous network, and then incorporates the representation of users and items into the recommendation algorithm. The DeepWalk, LINE, and Metapath2vec algorithms were used to replace the heterogeneous embedding method module.

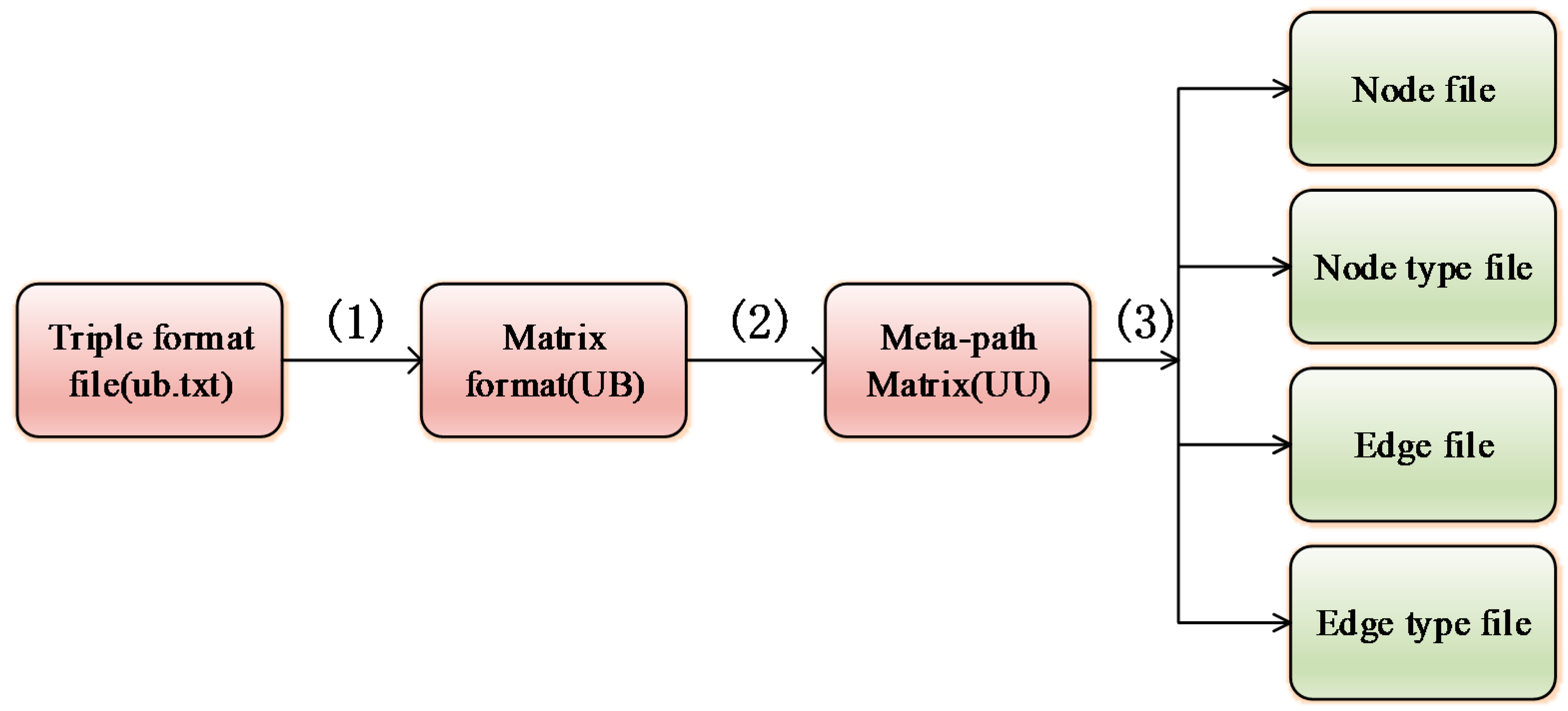

3.6. Heterogeneous Information Network Generation

- According to different meta-paths, the dataset file will be processed accordingly. If the meta-path is UBU (User-Business-User, UBU), process the dataset file ub.txt. First, use the information in ub.txt to generate the interaction matrix UB (User-Business, UB). This matrix dimension is the number of users × the number of items.

- Use the following matrix multiplication equation to obtain the matrix UU (User-User, UU) of UBU.

- The meta-path matrix UU is obtained in the previous step. The first sets of different typeIDs of nodes, nodes, and their type settings for the Yelp and Douban Movie datasets are shown in Table 1 and Table 2. Then, according to this mapping relationship, extract node files, edge files, node type files, and edge type files from UU in turn, including UBU.nodes, UBU.nodes_types, UBU.edges, and UBU.edges_types. The following describes the file format in detail. The format of the node file is nodeID node_type. Each line represents the node and its node type. The format of the node type file is node_typeID. Each line has only one value, which represents the typeID of the node, where all the different node types are listed. The format of the edge file is start_nodeID and target_nodeID, which means that there are edges from node start_node to node target_node. The format of the edge type file is start_node_typeID and target_node_typeID, where the node correspondence is changed to the typeID of the node compared to the edge file.

4. Experiment

4.1. Datasets

- The Douban Movie dataset [36] (Douban Movie) includes 13,367 users, 12,677 movies, and 1,068,278 ratings, ranging from 1 to 5. The data also include user and movie attribute information, such as Group, Actor, Director, and Type.

- The Yelp dataset [37] includes 16,239 users, 14,284 merchants, 47 cities, and 511 categories. The city information is the city where the merchant is located, and the category information is the category of the merchant.

4.2. Memory Effectiveness Verification of Spacey Random Walk Based on Meta-Paths

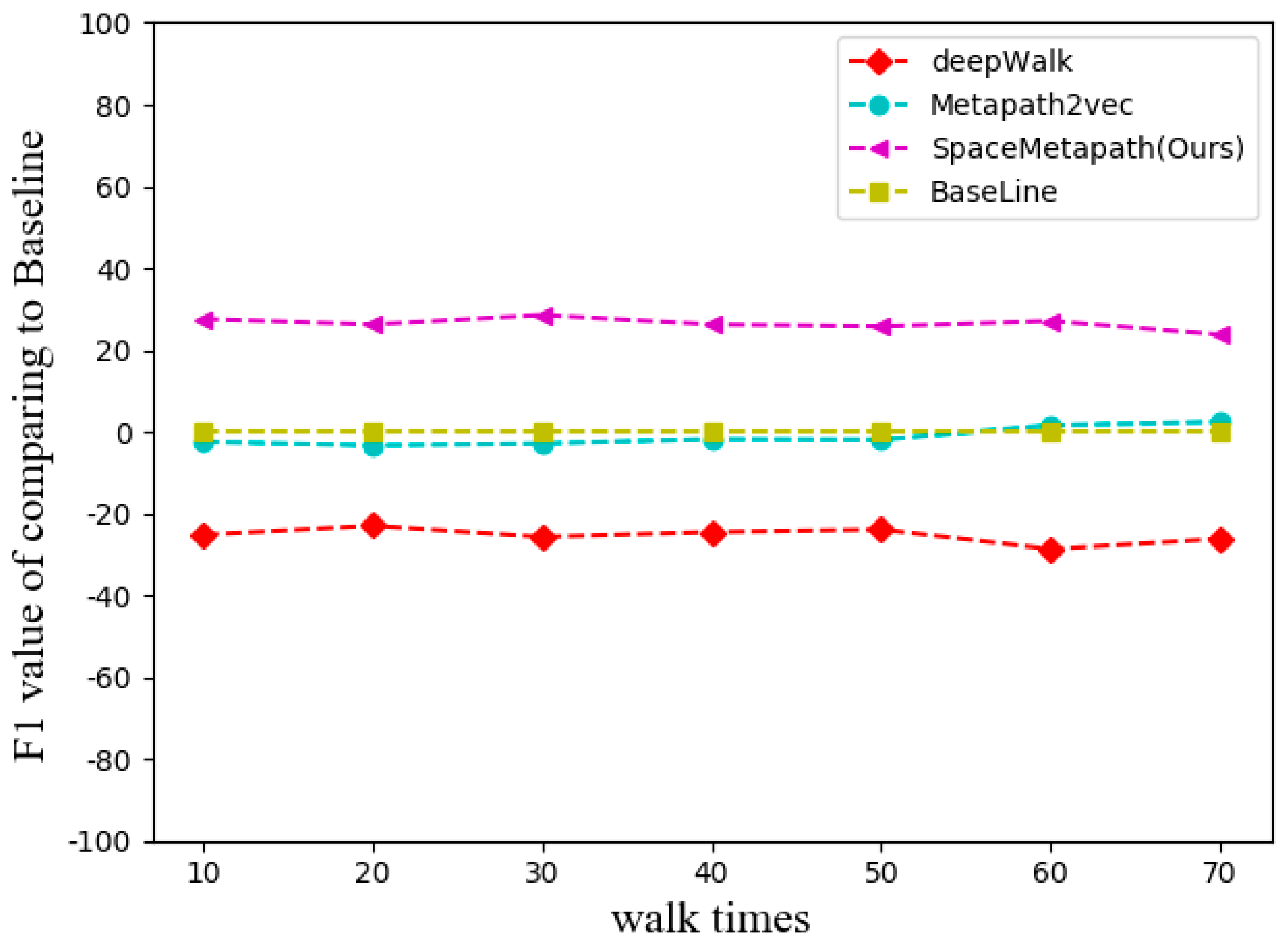

4.3. Effect of Different Parameters on the Random Walk Algorithm

- The times of a random walk. For the times of a random walk, the default value is 10, using values of 10 to 70 for each experiment with an interval of 10. In order to better show the contrast effect, we set up a baseline. The baseline in the figure is the average value of F1 of each algorithm, and the comparison effect of the F1 value of the three algorithms with the baseline is shown in Figure 6.

- 2.

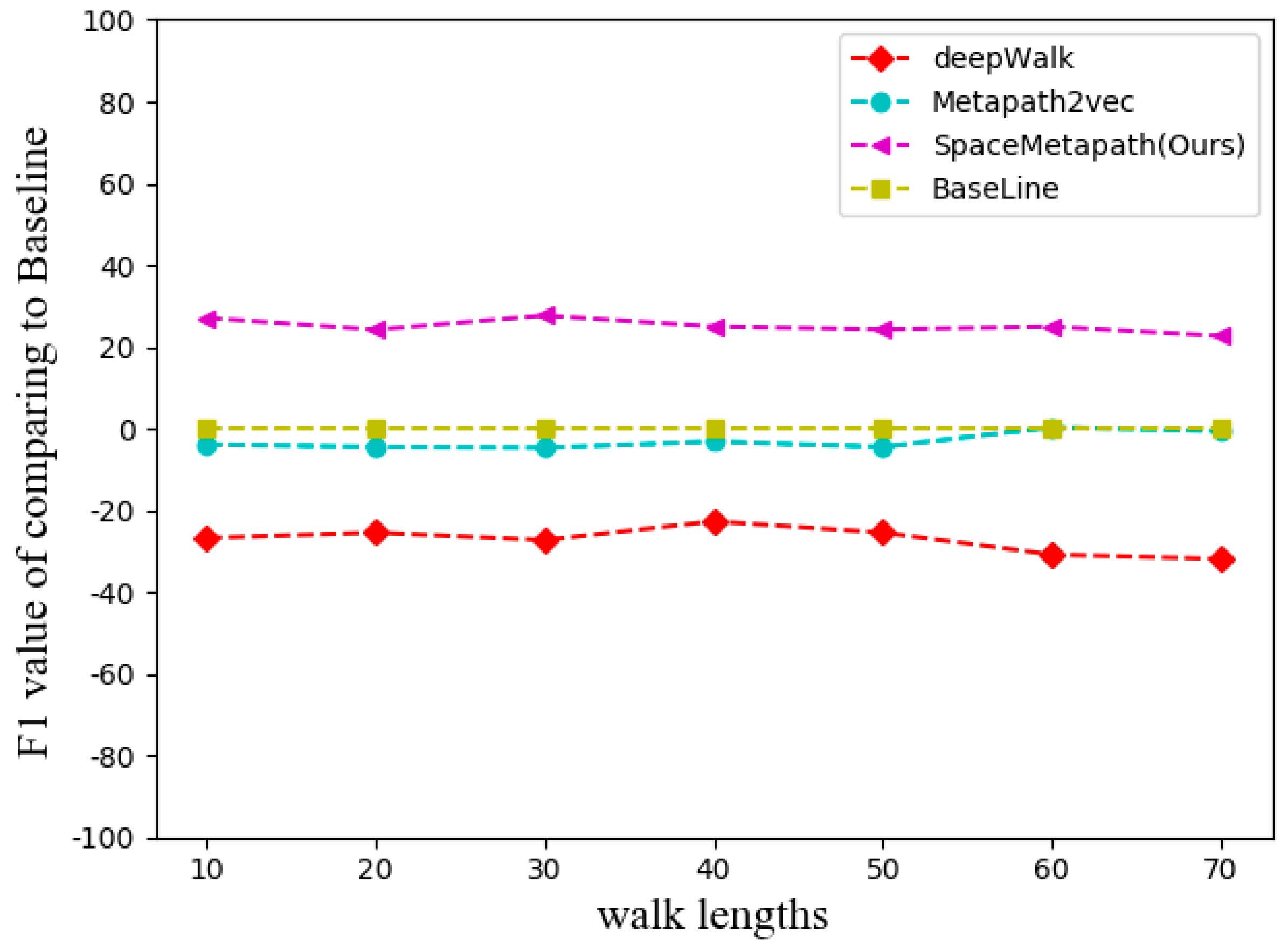

- Random walk path length. For a random walk path length, the default value is 10, and the experiments were conducted from 10 to 70 times with an interval of 10 times.

- 3.

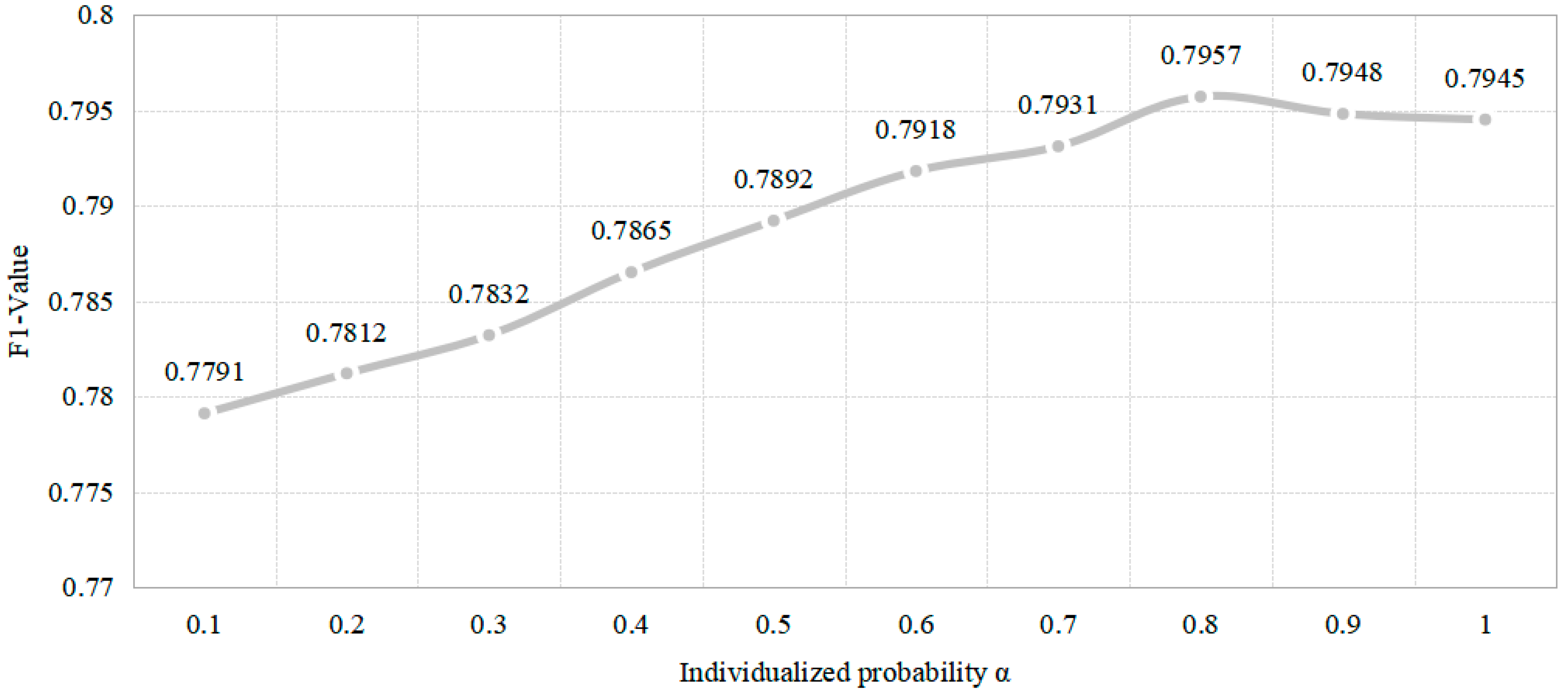

- Personalized probability. Here, we used SpaceyMetapath with a parameter range of 0.1–1.0 and an interval of 0.1. Figure 8 shows the F1 result with different personalization probabilities from the Douban Movie dataset. The horizontal coordinate is the probability of different personalized probabilities, and the vertical coordinate is the result of SpaceyMetapath’s classification for different personalized probabilities.

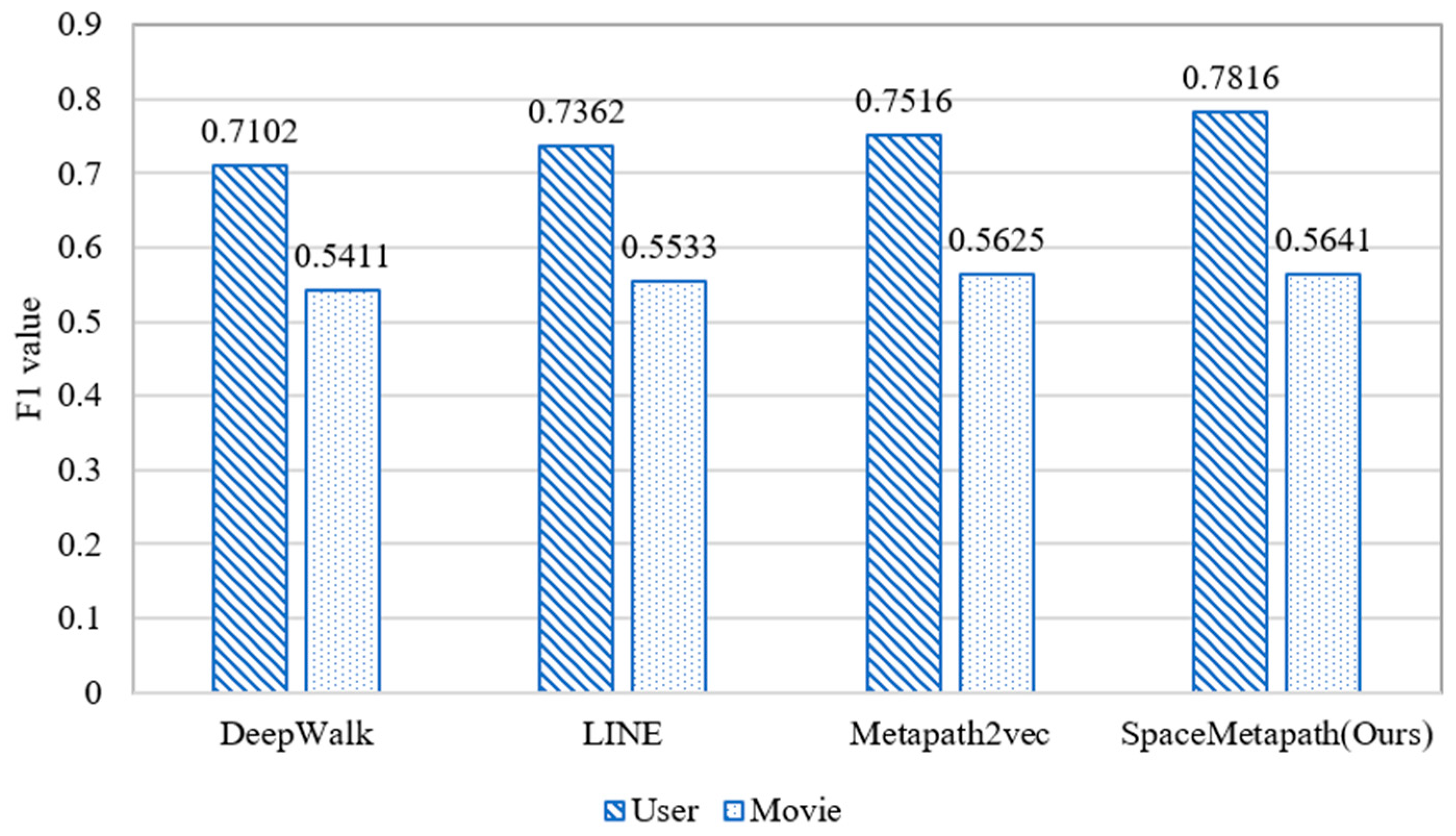

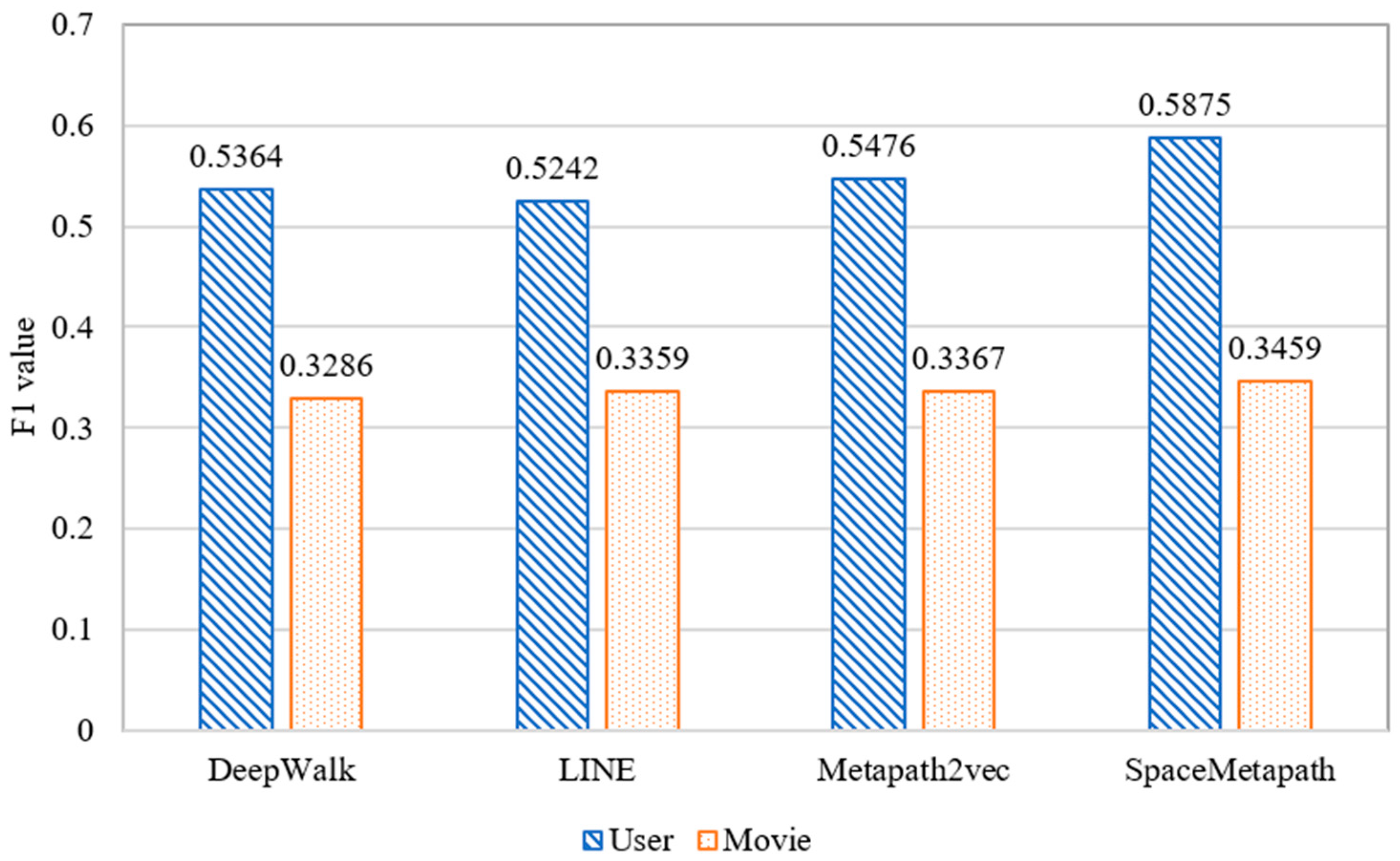

4.4. Validation of the Effectiveness of the Meta-Path-Based Heterogeneous Personalized Spacey Embedding Method

4.5. Validation of MPHSRec

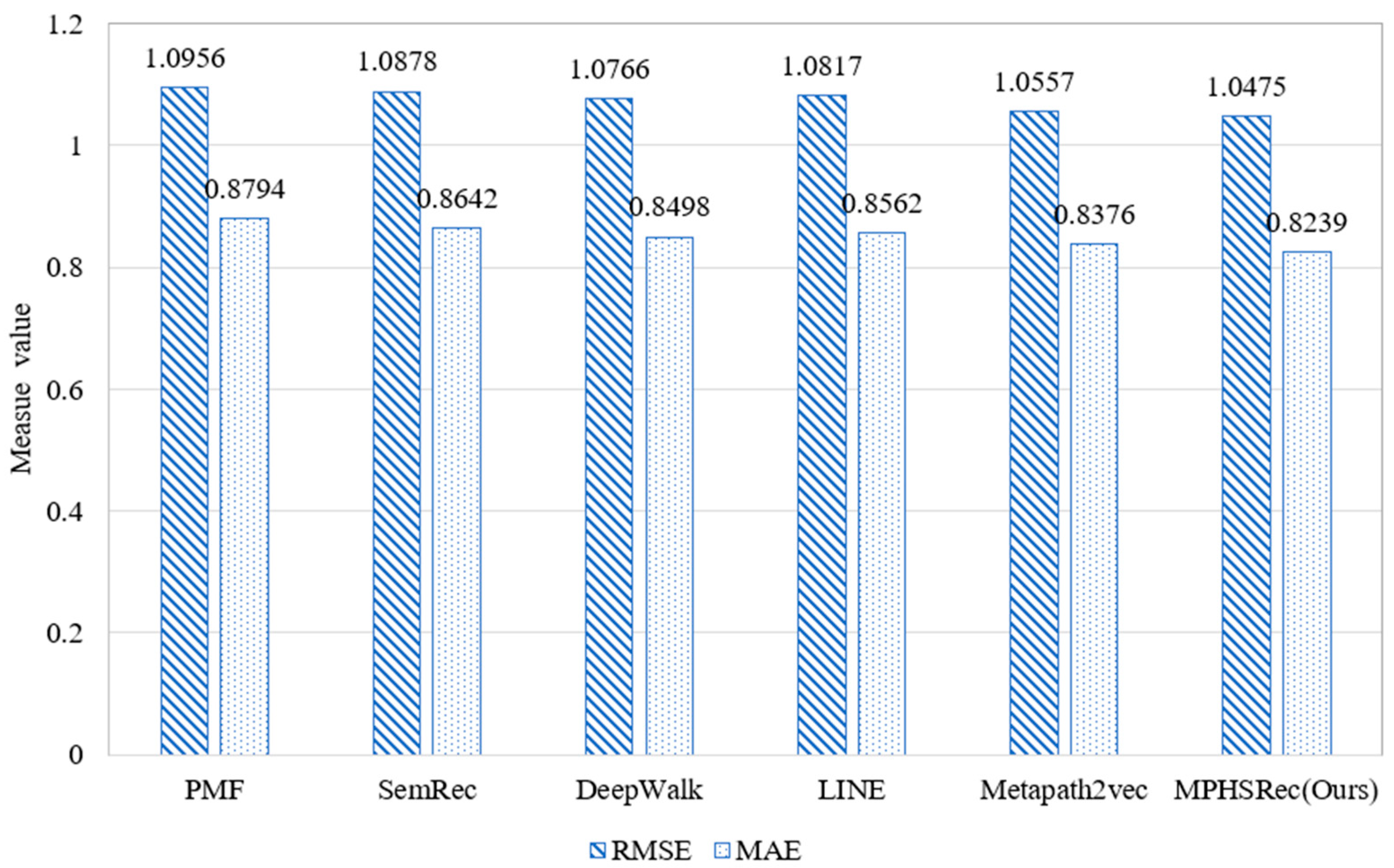

- From the perspective of heterogeneous information networks, PMF is a traditional recommendation algorithm based on Matrix Factorization, while SemRec, HERec and its variants, and MPHSRec are recommendation algorithms that introduce heterogeneous information networks. Because heterogeneous networks contain a large number of semantic relations, the MAE and RMSE of the latter algorithms have all declined significantly in Figure 7. The recommendation performance can be improved by the heterogeneous embedding method. The dataset contains a lot of attribute information, such as the director of the movie, the actor, the subject matter, and the city and category of the merchant, which is useful for the recommendation model and can improve the recommendation performance of the model. Compared with the heterogeneous information network embedding method, PMF does not introduce this additional attribute information, which is why the heterogeneous network embedding method is advantageous.

- From the perspective of heterogeneous embedding methods, since the algorithms with traditional heterogeneous embedding, namely DeepWalk, LINE, Metapath2vec, and the algorithm proposed in this paper adopt the personalized spatial embedding method, their MAE and RMSE will be decreased, that is, they will have a better recommendation performance. This embedding method can obtain a more efficient node vector representation than the traditional heterogeneous embedding method, which results in a better recommendation performance. Table 5 shows the MAE and RMSE results with different training ratios between the different models on the Yelp dataset. Similar to the Douban Movie dataset, an increase in the number of training samples leads to a gradual decrease in the MAE and RMSE from Table 6. Since the heterogeneous information network contains a large amount of semantic information, and the semantic information can be mined through meta-paths and used for recommendations, the MAE and RMSE of the recommendation algorithm with the heterogeneous embedding method are smaller than those of the PMF of the traditional Matrix Factorization method. In Table 5 and Table 6, ↓ represents the minimum in comparison values.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Sun, Y.Z.; Han, J.W.; Yan, X.F.; Yu, P.; Wu, T.Y. PathSim: Meta Path-Based Top-K Similarity Search in Heterogeneous Information Networks. In Proceedings of the 37th International Conference on Very Large Data Bases (VLDB), Seattle, WA, USA, 11–14 August 2011; pp. 992–1003. [Google Scholar]

- Yu, X.; Ren, X.; Gu, Q.; Sun, Y. Collaborative Filtering with Entity Similarity Regularization in Heterogeneous Information Networks. In Proceedings of the 23th International Joint Conference on Artificial Intelligence (IJCAI), Beijing, China, 3–9 August 2013; pp. 542–549. [Google Scholar]

- Feng, W.; Wang, J.Y. Incorporating Heterogeneous Information for Personalized Tag Recommendation in Social Tagging Systems. In Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), Beijing, China, 12–16 August 2012; pp. 1276–1284. [Google Scholar]

- He, Y.; Song, Y.Q.; Li, C.; Peng, J.; Peng, H. Hetespaceywalk: A Heterogeneous Spacey Random Walk for Heterogeneous Information Network Embedding. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management (CIKM), Beijing, China, 3 November 2019; pp. 639–648. [Google Scholar]

- Goldberg, D.; Nichols, D.; Oki, B.M.; Terry, D. Using collaborative filtering to weave an information TAPESTRY. Commun. ACM 1992, 35, 61–70. [Google Scholar] [CrossRef]

- Sarwar, B.; Karypis, G.; Konstan, J. Item-Based Collaborative Filtering Recommendation Algorithms. In Proceedings of the 10th International Conference on World Wide Web (WWW), Hong Kong, China, 1–5 May 2001; pp. 285–295. [Google Scholar]

- Koren, Y. Factorization Meets the Neighborhood: A Multifaceted Collaborative Filtering Model. In Proceedings of the 14th ACMKDD International Conference on Knowledge Discovery and Data Mining, Las Vegas, NV, USA, 24–27 August 2008; pp. 426–434. [Google Scholar]

- Shi, C.; Kong, X.N.; Huang, Y.; Wu, B. Hetesim: A general framework for relevance measure in heterogeneous networks. IEEE Trans. Knowl. Data Eng. 2013, 26, 2479–2492. [Google Scholar] [CrossRef] [Green Version]

- Shi, C.; Zhang, Z.; Luo, P.; Yu, P.S. CIKM’15-. In Proceedings of the 24th ACM International Conference on Information and Knowledge Management (CIKM), Melbourne, Australia, 19–23 October 2015; pp. 453–462. [Google Scholar]

- Zhao, H.; Yao, Q.M.; Li, J.D.; Song, Y.Q.; Lee, D.L. Meta-Graph Based Recommendation Fusion over Heterogeneous Information Networks. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), Halifax, NS, Canada, 13 August 2017; pp. 635–644. [Google Scholar]

- Li, L.N.; Wang, L.J.; Jiang, X.Q.; Han, H.Q.; Zhai, Y. A New Algorithm for Literature Recommendation Based on a Bibliographic Heterogeneous Information Network. Chin. J. Electron. 2018, 27, 761–767. [Google Scholar] [CrossRef]

- Ji, Z.Y.; Yang, C.; Wang, H. BRScS: A Hybrid Recommendation Model Fusing Multi-source Heterogeneous data. EURASIP J. Wirel. Commun. 2020, 2020, 1256–1263. [Google Scholar]

- Cheng, S.L.; Zhang, B.F.; Zou, G.B. Friend recommendation in social networks based on multi-source information fusion. Int. J. Mach. Learn. Cybern. 2019, 10, 1003–1024. [Google Scholar] [CrossRef]

- Langer, M.; He, Z.; Rahayu, W. Distributed Training of Deep Learning Models: A Taxonomic Perspective. IEEE Trans. Parallel Distrib. Syst. 2020, 31, 2802–2818. [Google Scholar] [CrossRef]

- Fan, S.H.; Zhu, J.X.; Han, X.T.; Shi, C. Metapath-guided Heterogeneous Graph Neural Network for Intent Recommendation. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (KDD), Anchorage, AK, USA, 4–8 August 2019; pp. 2478–2486. [Google Scholar]

- Song, W.P.; Xiao, Z.P.; Wang, Y.F. Session-Based Social Recommendation via Dynamic Graph Attention Networks. In Proceedings of the 12th ACM International Conference on Web Search and Data Mining (WSDM), Melbourne, Australia, 30 January 2019; pp. 555–563. [Google Scholar]

- Fan, W.; Ma, Y.; Li, Q.; He, Y.; Zhao, E.; Tang, J.; Yin, D. Graph Neural Networks for Social Recommendation. In Proceedings of the World Wide Web Conference (WWW), San Francisco, CA, USA, 4–6 May 2019; pp. 1458–1462. [Google Scholar]

- Ji, M.; Han, J.W.; Marina, D. Ranking-Based Classification of Heterogeneous Information Networks. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), San Diego, CA, USA, 23–27 August 2011; pp. 1298–1306. [Google Scholar]

- Sun, Y.Z.; Brandon, N.; Han, J.W.; Yan, X.F.; Yu, P.S. Integrating Meta-Path Selection with User-Guided Object Clustering in Heterogeneous Information Networks. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012; pp. 1348–1356. [Google Scholar]

- Sun, Y.Z.; Yu, Y.T.; Han, J.W. Ranking-based Clustering of Heterogeneous Information Networks with Star Network Schema. In Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Paris, France, 28 June 2009; pp. 797–806. [Google Scholar]

- Hu, B.B.; Shi, C.; Zhao, W. Leveraging Meta-Path Based Context for Top-N Recommendation with A Neural Co-Attention Model. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Anchorage, AK, USA, 19–23 August 2018; pp. 1531–1540. [Google Scholar]

- Yu, X.; Ren, X.; Sun, Y.Z.; Gu, Q.Q.; Sturt, B.; Khandelwal, U. Personalized Entity Recommendation: A Heterogeneous Information Network Approach. In Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 28 February 2014; pp. 283–292. [Google Scholar]

- Meng, Z.Q.; Shen, H. Fast top-k similarity search in large dynamic attributed networks. Inf. Process. Manag. 2019, 56, 1445–1454. [Google Scholar] [CrossRef]

- Wold, S. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 27–52. [Google Scholar] [CrossRef]

- Kruskal, J.B.; Wish, M.; Uslaner, E.M. Multidimensional Scaling; Sage: Beverly Hills, CA, USA, 1978; pp. 201–205. [Google Scholar]

- Perozzi, B.; Al-Rfou, R.; Skiena, S. DeepWalk: Online Learning of Social Representations. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24 August 2014; pp. 701–710. [Google Scholar]

- Mikolov, T.; Chen, K.; Corrado, G. Efficient Estimation of Word Representations in Vector Space. Comput. Sci. 2013, 28, 562–569. [Google Scholar]

- Tang, J.; Qu, M.; Wang, M. LINE: Large-Scale Information Network Embedding. In Proceedings of the 24th International Conference on World Wide Web (WWW), Florence, Italy, 18 May 2015; pp. 1067–1077. [Google Scholar]

- Dong, Y.X.; Chawla, N.V.; Swami, A. Metapath2vec: Scalable Representation Learning for Heterogeneous Networks. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13 August 2017; pp. 135–144. [Google Scholar]

- Zhang, D.; Yin, J.; Zhu, X. Metagraph2vec: Complex Semantic Path Augmented Heterogeneous Network Embedding. In Advances in Knowledge Discovery and Data Mining; Springer: Cham, Switzerland, 2018; pp. 35–39. [Google Scholar]

- Laok, N.; Cohen, W.W. Relational Retrieval Using a Combination of Path-constrained Random Walk. Mach. Learn. 2010, 81, 53–67. [Google Scholar]

- Shi, C.; Li, Y.T.; Yu, P.S. Constrained-meta-path-based ranking in heterogeneous information network. Knowl. Inf. Syst. 2016, 49, 719–747. [Google Scholar] [CrossRef]

- Han, J.; Moraga, C. The Influence of the Sigmoid Function Parameters on the Speed of Backpropagation Learning. In Proceedings of the International Workshop on Artificial Neural Networks, Torremolinos, Spain, 9 June 1995; pp. 195–201. [Google Scholar]

- Chen, B.; Ding, Y.; Xin, X. AIRec: Attentive intersection model for tag-aware recommendation. Neurocomputing 2021, 421, 105–114. [Google Scholar] [CrossRef]

- Salakhutdinov, R.; Mnih, A. Probabilistic Matrix Factorization. In Proceedings of the 20th International Conference on Neural Information Processing System, Vancouver, BC, Canada, 3 December 2007; pp. 1257–1264.

- Douban. Available online: https://book.douban.com (accessed on 12 December 2019).

- Yelp. Available online: https://www.Yelp.com/dataset-challenge (accessed on 20 December 2019).

- Shi, C.; Hu, B.; Zhao, W.X. Heterogeneous Information Network Embedding for Recommendation. IEEE Trans. Knowl. Data Eng. 2019, 31, 357–370. [Google Scholar] [CrossRef] [Green Version]

| Node Name | Type ID |

|---|---|

| user | 0 |

| business | 1 |

| Compliment | 2 |

| category | 3 |

| city | 4 |

| Node Name | Type ID |

|---|---|

| user | 0 |

| Movie | 1 |

| Group | 2 |

| Actor | 3 |

| Director | 4 |

| Type | 5 |

| Data Set | Entity Name | Number of Entities | Relationship Name | Number of Relationships | Meta-Path |

| Douban Movie | User | 13,367 | User–Movie | 1,068,278 | UMU UMAMU UMDMU UMTMU MUM MAM MDM MTM |

| Movie | 12,677 | User–Group | 570,047 | ||

| Group | 2753 | User–User | 4085 | ||

| Actor | 6311 | Movie–Actor | 33,587 | ||

| Director | 2449 | Movie–Director | 11,276 | ||

| Type | 38 | Movie–Type | 27,668 | ||

| Yelp | User | 16,239 | User–Business | 198,397 | UBU UBCiBU UBCaBU BUB BCiB BCaB |

| Business | 14,284 | User–User | 158,590 | ||

| Compliment | 11 | User–Compliment | 76,875 | ||

| Category | 511 | Business–City | 14,267 | ||

| City | 47 | Business–Category | 40,009 |

| Meta-Path | DeepWalk | MPHPSRW |

|---|---|---|

| UBU | 237.85 MB | 39.45 MB |

| UBCiBU | 53.67 MB | 32.23 MB |

| UBCaBU | 70.53 MB | 41.97 MB |

| BUB | 104.99 MB | 73.42 MB |

| BCiB | 25.34 MB | 12.24 MB |

| BCaB | 34.43 MB | 23.05 MB |

| MUM | 523.32 MB | 159.16 MB |

| MAM | 32.25 MB | 21.03 MB |

| MDM | 21.97 MB | 9.31 MB |

| MTM | 28.85 MB | 15.36 MB |

| Model | Evaluation Indicators | Training Ratio (20%) | Training Ratio (40%) | Training Ratio (60%) | Training Ratio (80%) |

|---|---|---|---|---|---|

| PMF | MAE | 1.5472 | 1.0215 | 0.9128 | 0.8794 |

| RMSE | 1.7215 | 1.2256 | 1.1782 | 1.0956 | |

| SemRec | MAE | 1.4664 | 0.9863 | 0.8821 | 0.8642 |

| RMSE | 1.6742 | 1.2176 | 1.1423 | 1.0878 | |

| DeepWalk | MAE | 1.4268 | 0.9664 | 0.8506 | 0.8498 |

| RMSE | 1.6641 | 1.2106 | 1.1021 | 1.0766 | |

| LINE | MAE | 1.4329 | 0.9782 | 0.8612 | 0.8562 |

| RMSE | 1.6721 | 1.2272 | 1.0845 | 1.0817 | |

| Metapath2vec | MAE | 1.4054 | 0.9547 | 0.8424 | 0.8376 |

| RMSE | 1.6598 | 1.2012 | 1.0618 | 1.0557 | |

| MPHSRec (Ours) | MAE | 1.3921↓ | 0.9421↓ | 0.8319↓ | 0.8239↓ |

| RMSE | 1.6476↓ | 1.1982↓ | 1.0539↓ | 1.0475↓ |

| Model | Evaluation Indicators | Training Ratio (60%) | Training Ratio (70%) | Training Ratio (80%) | Training Ratio (90%) |

|---|---|---|---|---|---|

| PMF | MAE | 1.3412 | 1.2413 | 1.1923 | 1.0738 |

| RMSE | 1.8215 | 1.6574 | 1.4763 | 1.4384 | |

| SemRec | MAE | 1.2664 | 1.1874 | 1.1545 | 1.0423 |

| RMSE | 1.7742 | 1.3106 | 1.2542 | 1.2438 | |

| DeepWalk | MAE | 1.2068↓ | 1.1624 | 1.0932 | 1.0872 |

| RMSE | 1.6641 | 1.2106 | 1.1221 | 1.1066 | |

| LINE | MAE | 1.4329 | 0.9782 | 0.8612 | 0.8562 |

| RMSE | 1.6721 | 1.2272 | 1.1845 | 1.1817 | |

| Metapath2vec | MAE | 1.4054 | 0.9547 | 0.9124 | 0.9076 |

| RMSE | 1.3598 | 1.2012 | 1.1618 | 1.1457 | |

| MPHSRec (Ours) | MAE | 1.3821 | 0.9415↓ | 0.8465↓ | 0.8429↓ |

| RMSE | 1.3356↓ | 1.1954↓ | 1.1056↓ | 1.0438↓ |

| Model | Douban Movie | Yelp |

|---|---|---|

| PMF | 0.7502 | 0.7238 |

| SemRec | 0.7946 | 0.7663 |

| DeepWalk | 0.9034 | 0.8824 |

| LINE | 0.8465 | 0.8272 |

| Metapath2vec | 0.9162 | 0.8892 |

| MPHSRec (Ours) | 0.9301 | 0.9038 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ruan, Q.; Zhang, Y.; Zheng, Y.; Wang, Y.; Wu, Q.; Ma, T.; Liu, X. Recommendation Model Based on a Heterogeneous Personalized Spacey Embedding Method. Symmetry 2021, 13, 290. https://doi.org/10.3390/sym13020290

Ruan Q, Zhang Y, Zheng Y, Wang Y, Wu Q, Ma T, Liu X. Recommendation Model Based on a Heterogeneous Personalized Spacey Embedding Method. Symmetry. 2021; 13(2):290. https://doi.org/10.3390/sym13020290

Chicago/Turabian StyleRuan, Qunsheng, Yiru Zhang, Yuhui Zheng, Yingdong Wang, Qingfeng Wu, Tianqi Ma, and Xiling Liu. 2021. "Recommendation Model Based on a Heterogeneous Personalized Spacey Embedding Method" Symmetry 13, no. 2: 290. https://doi.org/10.3390/sym13020290

APA StyleRuan, Q., Zhang, Y., Zheng, Y., Wang, Y., Wu, Q., Ma, T., & Liu, X. (2021). Recommendation Model Based on a Heterogeneous Personalized Spacey Embedding Method. Symmetry, 13(2), 290. https://doi.org/10.3390/sym13020290