1. Introduction

In industrial scenes, robots to perceive the 3D environment and operate on targets are the key to achieving intelligent manufacturing [

1,

2]. The realization of rapid self-calibration and rapid spatial positioning of targets in complex environments is important technologies, and they have always been hotspots in robotics and computer vision [

3,

4].

In order to recognize, locate and manipulate objects in 3D environment, robots must locate their poses and then operate them accordingly through robot actuators. The establishment of a vision-based intelligent robot system usually requires calibration of the spatial posture relationship between vision sensor and robot actuator (hand-eye calibration). The point cloud model is widely used in industrial 3D vision for its flexibility, matrix representation and ease of calculation. The robot perceives the environment and targets through 3D vision and needs to reconstruct the spatial environment through point clouds. The target object is segmented from the environment to achieve specific operations on the object, such as machine assembly, industrial stacked parts sorting and 3D reconstruction of large components.

The calibration of the vision system is divided into camera calibration and hand-eye calibration. Due to the physical properties of the lens in the vision sensor, the image will be distorted. Camera calibration calculates the aberration coefficients and the physical parameters of lens, and then establishes the conversion relationships of image pixel coordinates to physical coordinate systems. Zhang proposed a classical camera calibration method by using a checkerboard as a calibration plate to calibrate cameras. This method is widely used in camera calibration for its high calibration accuracy [

5].

In terms of point cloud reconstruction after calibration, point cloud registration have to be carried out. Traditional hand-eye calibration methods generally require professional operators to operate cameras and manipulators to shoot multiple calibration images. For example, Arne Peters et al. proposed a calibration method for a six-axis robot based on 2D laser rangefinder, and the robot can be calibrated by hand-eye without standard calibration parts [

6]. Mingyang Li et al. carried out hand-eye calibration of the robot from the perspective of 3D reconstruction and proposed a reconstruction-based calibration accuracy evaluation method [

7]. Although these methods can calibrate the internal and external parameters of the camera and the relationship between the end- effector of the manipulator and the camera, the process is generally too complicated. Operators are required to have a professional level, especially in environments that operators cannot reach. The rapid, automatic and accurate calibration of the robot hand-eye system cannot be achieved, and the application occasions and production efficiency of the robot are limited.

Spatial point cloud registration matches the points with the same meaning obtained from the point cloud in the same scene, aligning the two clouds in the same coordinate system through spatial transformation so as to reconstruct the expression of the three-dimensional model of the target in the manipulator coordinate system. This is a prerequisite for spatial positioning [

7,

8]. Boce Xue et al. realized the three-dimensional reconstruction of the welding joint based on Kalman filter and more, and guided the robot to complete the welding of the joint [

9]. Peters Arne et al. used a two-dimensional LiDAR to generate a 3D scan of the surrounding area through a moving sensor, and ran an iterative closest point (ICP) variant on it to estimate the missing part of the motion chain to reconstruct a three-dimensional model of the environment [

6]. These registration algorithms based on point pair relationship generally have the disadvantages of time-consuming, insufficient accuracy and noise sensitivity, which seriously affect the real-time and accuracy of the manipulator operation. QY Zhou presented an algorithm for fast global registration of partially overlapping 3D surfaces. The algorithm operates on candidate matches that covers the surfaces [

10]. However, it requires a complete point cloud to register the target point cloud, so it is not suitable for point cloud registration of unknown objects. Yang, H et al. proposed the first fast and certifiable algorithm for the registration of two sets of point cloud in the presence of large amounts of outlier correspondence. Additionally, it is more accurate than ICP while being orders of magnitude faster, and with the continuous improvement of 3D datasets in recent years, deep learning has started to be applied in 3D feature matching algorithms [

10,

11]. Zeng et al. proposed a deep learning model based on 3DMatch for local feature learning [

12], which is a data-driven model that learns a local volumetric patch descriptor for establishing correspondence between partial 3D data. Using 3DMatch for self-supervised feature learning to obtain local feature descriptors.

In the registered point cloud space, segmenting the object of interest from the background and other objects for spatial positioning is a key step for the manipulator to achieve target positioning. Zhao et al. proposed an improved pattern matching segmentation algorithm based on the maximum likelihood estimation sampling consensus (MLESAC) algorithm to refine and segment indoor objects [

13]. Awwad proposed an improved plane segmentation method based on Seq-NV-RANSAC for unstructured 3D point clouds [

14]. These methods can segment space objects. However, because they all directly operate on massive point cloud data, and they require extensive computational resources. Moreover, due to the interference of random noise, these methods have poor segmentation effects on randomly stacked objects. Recently, deep learning theory and practice have been continuously applied in many topics. Maturana et al. proposed a Voxnet structure, which uses 3D CNN to process the voxels of the grid, rasterize the 3D shape and sample the distance function on dense voxels, and apply 3D CNN on the whole voxels to classify 3D data quickly and accurately [

15]. However, this algorithm uses the voxel space as the definition domain of calculation. It needs large computation, is uneasy to store, and depends on the accuracy of voxel segmentation.

In this paper, algorithms are designed based on three necessary stages (Hand-eye calibration, point cloud reconstruction, point cloud segmentation) of an intelligent robot system with 3D vision. A RGB camera and a depth camera are used to form the RGB-D camera, which are mounted near the end-effector. Aiming at the problem of cumbersome hand-eye calibration steps and relying on manual operation to a large extent, a self-calibration method of eye-in-hand manipulator system is proposed. It automatically plans image acquisition path planning based on quaternion spherical linear interpolation method to calculate spatially symmetric path trajectory of the calibrator, and collects multiple sets of photos of the calibration board. Combining the RGB camera, the depth camera and in the end pose of the manipulator, the internal parameter matrix, external parameter matrix and hand-eye conversion matrix of the RGB-D camera (RGB camera and depth camera) can be solved without human intervention. With regard to the poor real-time and low accuracy of traditional point cloud registration, a rapid point cloud registration method based on the shooting pose space coordinate conversion of iterative manipulator is proposed. The missing part of the point cloud is extracted and the symmetric shooting pose of the complementary image is planned, to fill the point cloud holes caused by the shooting blind area and improve the registration accuracy. Complete point cloud reconstruction of the environment and stationary objects. Due to the poor real-time performance of traditional point cloud target segmentation method and poor adaptability to stacked targets, an improved double pyramid feature fusion depth image is proposed to segment the RGB image through Mask R-CNN [

16] and map it to the point cloud space to realize the rapid instance segmentation of point cloud targets.

The main contributions of this paper are as follows: (1) a self-calibration method of eye-in-hand manipulator system is proposed; (2) a millisecond fast and accurate point cloud hole completion registration method is proposed, which can quickly reconstruct the outer surface model of the static target object; (3) a 2D to 3D end-to-end 3D segmentation method is proposed, and finally an independent point cloud of stacked objects is obtained to achieve fast and accurate point cloud segmentation.

2. Related Works

The operation of the robot on the target object involves hand-eye calibration [

6], point cloud reconstruction [

17] and segmentation [

13] technology, and these technologies have also been research hotspots.

- (1)

Vision system calibration

The calibration of the vision system is divided into camera internal parameter calibration and hand-eye calibration. For RGB-D camera, if use it to generate point cloud image with texture information, it is necessary to calibrate RGB camera and depth camera to obtain the relationship between RGB image and depth image.

Currently, the calibration method of camera internal parameters mainly uses Zhang Zhengyou calibration method [

5]. However, in view of the problem that the depth camera cannot find the corner points of the checkerboard, SMISEK J et al. [

18] proposed to block the infrared transmitter of the RGB-D camera and use a halogen lamp to generate infrared images, and infrared images instead of depth images are used to calibrate the position between the two cameras. Zhang et al. [

19] proposed the maximum likelihood solution for the joint calibration of two cameras, according to the criterion that the points on the depth image calibration plate should be coplanar and the corresponding points of depth image and color image can be specially specified.

The hand-eye calibration of RGB-D camera is the same as that of RGB camera. Tsai et al. [

20] proposed a new analytical algorithm for hand-eye calibration and gave the principle of selecting the data set for hand-eye calibration under this method for the first time. Fassi I et al. [

21] proposed an algorithm to analyze the hand-eye calibration matrix formula from a geometric perspective, and studied the excessive restriction and singularity in the algorithm.

However, the above methods all need to be carried out independently. The operation of camera calibration needs to be completed first to obtain the calibration result, and then the operation of hand-eye calibration is carried. Moreover, most of the calibration of RGB-D cameras requires additional hardware equipment overhead. Whether it is camera internal parameter and distortion coefficient calibration or hand-eye calibration, it is necessary to move the camera and calibration parts multiple times to collect multi-calibration parts pictures to obtain landmark points to solve the overdetermined linear equations and obtain the results. The process requires professional manual intervention, which brings trouble to actual operation. Combined with the advantages of controllable pose of the manipulator, this paper uses the calibration parts that can be recognized by both RGB camera and depth camera to complete the internal and external parameter calibration of the camera and hand-eye calibration and reduce manual intervention as much as possible.

- (2)

Point cloud registration

In terms of point cloud reconstruction after calibration, it is necessary to take a global shot of the object during the reconstruction process to obtain complete three-dimensional information, as the point cloud data collected from different viewpoints are based on the coordinate system of the vision sensor. The point clouds are all unified in the same world coordinate system to complete the point cloud completion and reconstruction, which requires the point cloud registration [

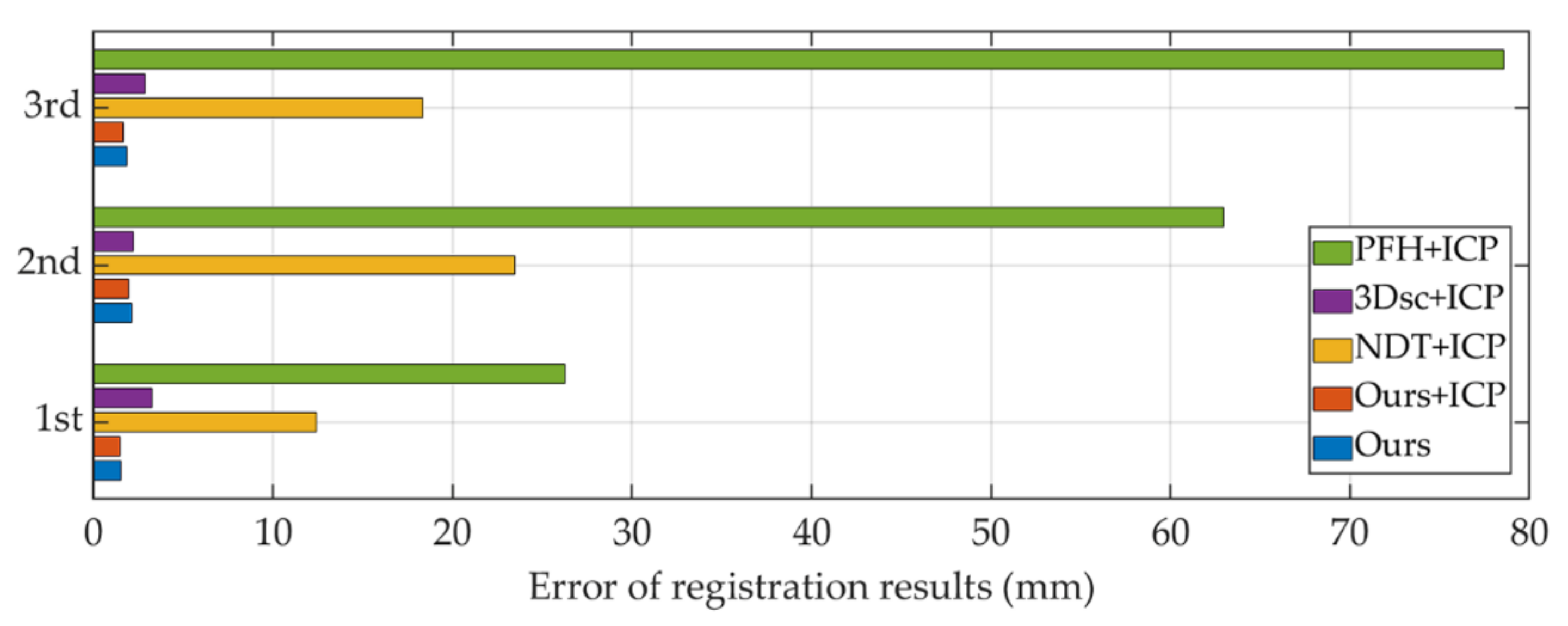

22]. Coarse registration provides a good initial value for fine registration, minimizing the spatial position difference between point clouds to achieve three-dimensional reconstruction. Currently, the more common point cloud coarse registration algorithms are PFH (point feature histogram) [

23], FPFH (fast point feature histogram) [

24], 3Dsc (3D shape context) [

25]. The more common point cloud precision registration algorithms are NDT (normal distribution transformation) [

26], ICP (iterative closest point) [

27] and their variants [

28,

29].

In addition to the classic algorithm mentioned above, Thirion J et al. [

30] proposed to establish an extremal mesh based on the special principal curvature in the point cloud model, and to use its rigid body transformation invariance to carry out coarse registration. However, the mesh established by this method is often vulnerable to noise interference and is only suitable for ideal models. Aiger et al. [

31] believe that when the noise of the point cloud is in a certain threshold, the coarse registration can be achieved only based on the congruence relationship of the quadrilateral formed by four coplanar points. Nevertheless, it adopts the idea of exhaustive, which computationally expensive. Mellado et al. [

32] proposed a coarse registration method based on index technology to realize the linear time complexity in view of the low global comparison efficiency of [

31] proposed algorithm. Fast Global Registration [

10] is widely used for its fast performance. However, in order to increase the registration accuracy and speed, lots of parameters need to be set. Sukhyun Lim et al. proposed a method to help Fast Global Registration set parameters [

33]. Through analysis and experiment, the parameters that work effectively in actual registration are proposed. This result will help determine the direction when a Fast Global Registration method is needed. Li et al. proposed a point cloud registration method based on convolutional neural networks with improved Harris-SIFT (scale-invariant feature transform) [

34]. They improved Harris-SIFT to extract the stable key points of point cloud models in 3D space. Meanwhile, the weighted adjacency matrix of the key points is used as the input feature map of the convolutional neural network to achieve the prediction matching of key points of the source and target point clouds. However, in order to extract key points accurately, this algorithm must target point clouds with a large number of repetitive parts.

However, the above rough registration algorithm of point cloud usually has the characteristics of large calculation and time-consuming, which cannot ensure the accurate registration of two point clouds under noise or strong convergence. In this paper, combined with the controllable nature of the hand-eye system, the missing point cloud is automatically detected, and the missing parts are registered in a targeted manner to repair the missing point cloud and improve the real-time registration.

- (3)

Point cloud instance segmentation

After obtaining a more complete environmental point cloud model, only when the target object is extracted from the environment can the manipulator carry out corresponding operations on the target [

29]. Traditional point cloud segmentation mainly uses the position relationship between points in point cloud data to segment it, mainly including clustering and region growth. Wang et al. proposed a point cloud segmentation algorithm that uses the core ideas of local sampling and statistical inference. The algorithm uses anomalous sample datasets to determine the planes and surfaces in the point cloud data to obtain valid samples, using local evaluation and constraints to obtain segmentation results [

35]. However, the traditional point cloud segmentation methods cannot meet the requirements of real-time semantic segmentation of large-scale point cloud in artificial intelligence, and cannot accurately segment continuous contact objects, such as stacking in most cases.

Kalogerakis et al. [

36] designed a projection convolutional network applied to 3D shape segmentation, and proposed a deep network architecture for 3D shape segmentation and inferred recognition of individual parts. The algorithm combines FCNs (fully convolutional networks) and CRFs (conditional random field) by using a pre-trained model for image recognition of end-to-end training. FCN projects multi-view images to different parts of the corresponding 3D objects, and then uses surface feature-based CRF to segment the 3D shapes by combining the features on the surfaces of different parts of the projected 3D objects. This method processes the input data efficiently with an image-based CNN, but cannot determine the size of the number of views covering the 3D model, which may lead to the loss of 3D information.

The development of two-dimensional image processing by RGB-D cameras and artificial intelligence provides a way to segment three-dimensional point clouds based on two-dimensional images for point cloud segmentation. The multi-view convolutional neural network (MVCNN) proposed by Su et al. [

37] firstly simulates the camera shooting three-dimensional objects from multiple angles to obtain projected two-dimensional images. Then it uses the pre-trained network under ImageNet to extract features. Finally, the features from each perspective are aggregated by pooling, and input into the classification network for classification. Feng Y et al. [

38] proposed GVCNN base on MVCNN. They grouped the feature descriptors extracted by CNN and used the group feature descriptors for classification to further improve the classification accuracy. The advantage of this method is that it can directly use the existing two-dimensional neural network for training. Experiments prove that this method has better results in point cloud classification tasks. Nevertheless, the disadvantage is that the parameter setting in the process of rendering the 3D point cloud into a 2D image relies on manual experience heavily. The extraction of two-dimensional feature images will inevitably lead to information loss, and the internal structure information of three-dimensional objects cannot be obtained. Therefore, the effect is not satisfactory in high-resolution point cloud segmentation.

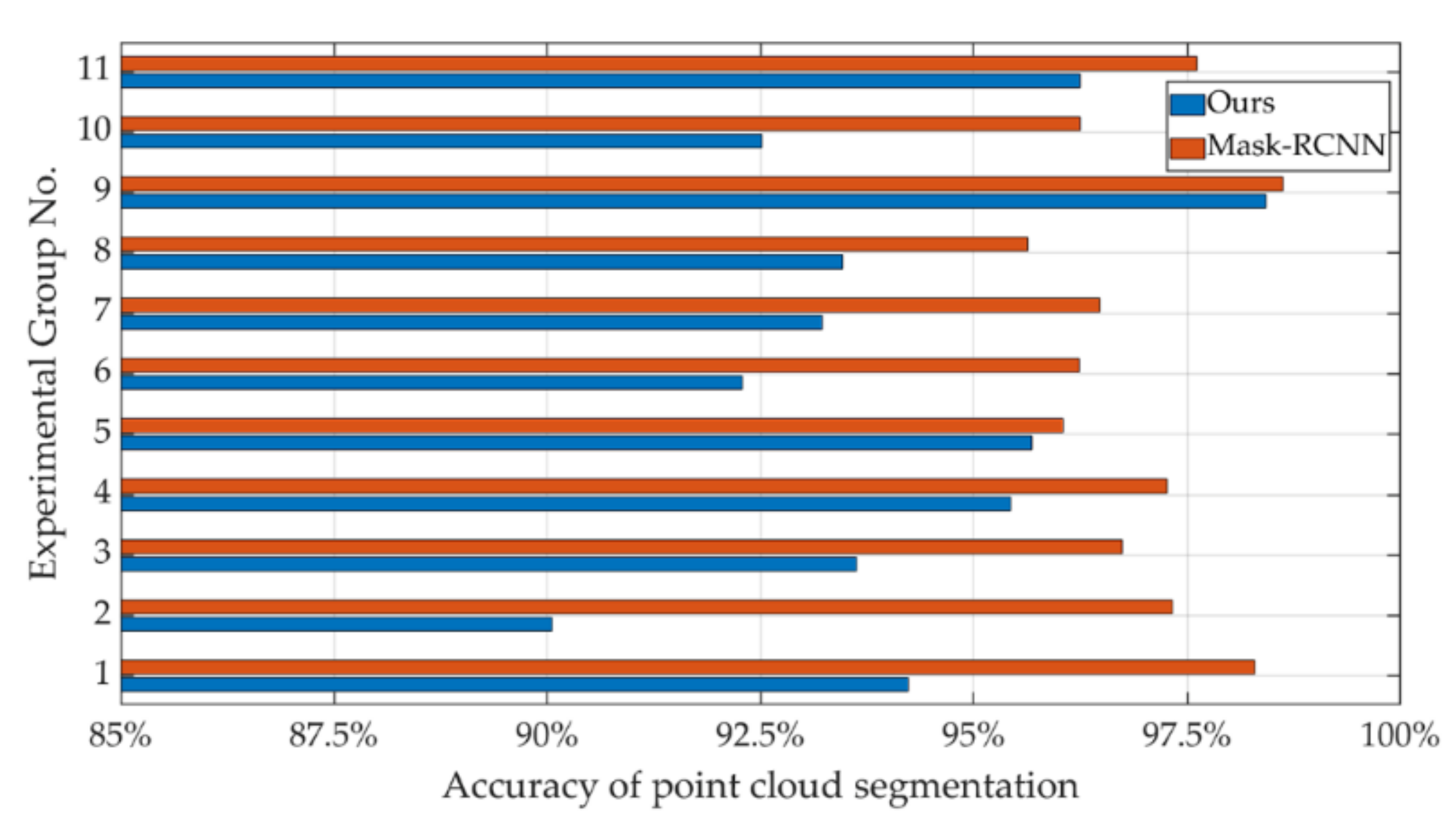

According to the advanced ideas provided by the above-mentioned similar studies, a double pyramid feature fusion model based on Mask R-CNN is designed. This model superimposes the depth image on the original three-channel RGB image as a new channel, and uses the convolutional neural network for learning to realize the point cloud segmentation.

3. Principle and Method

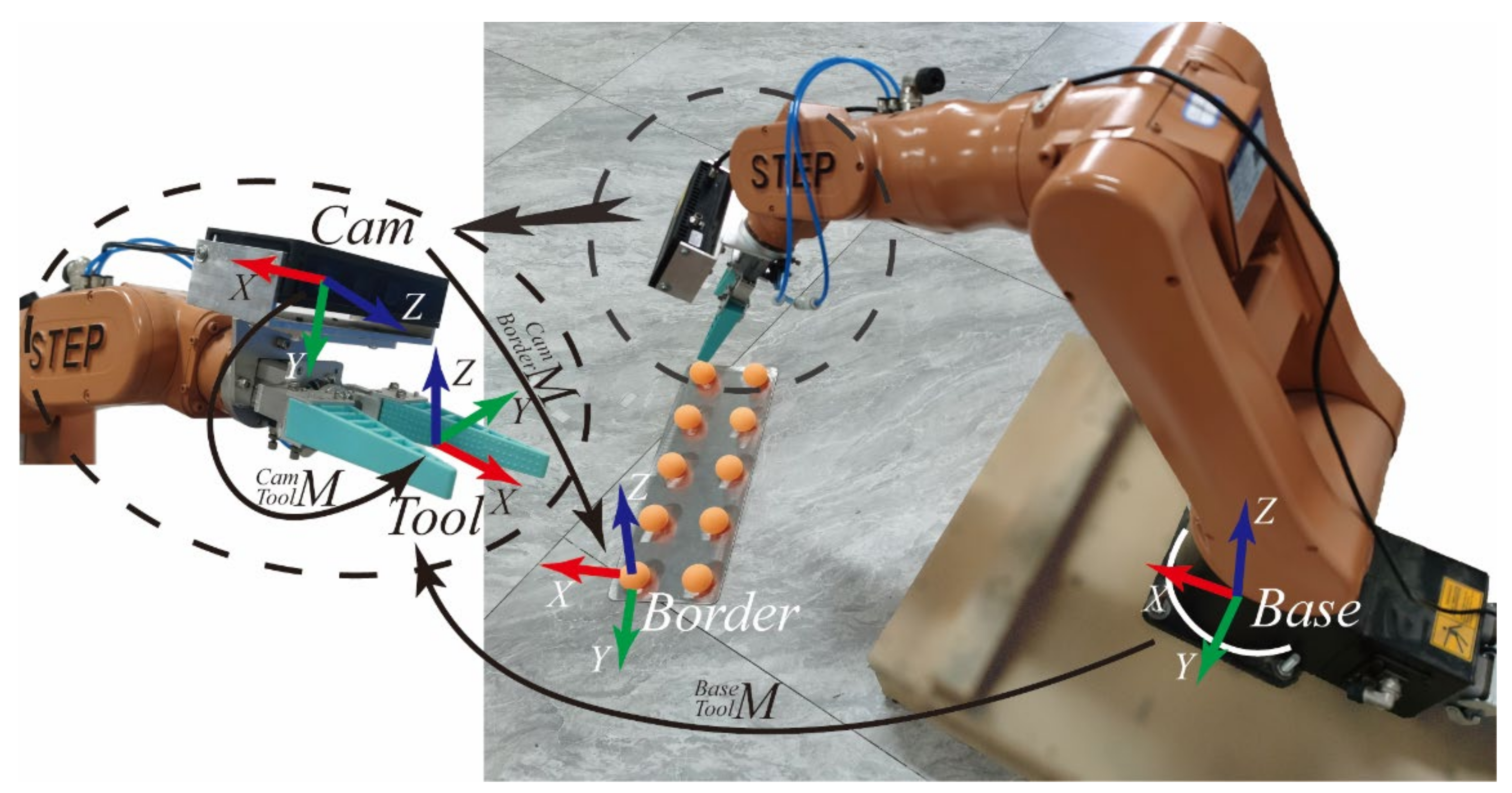

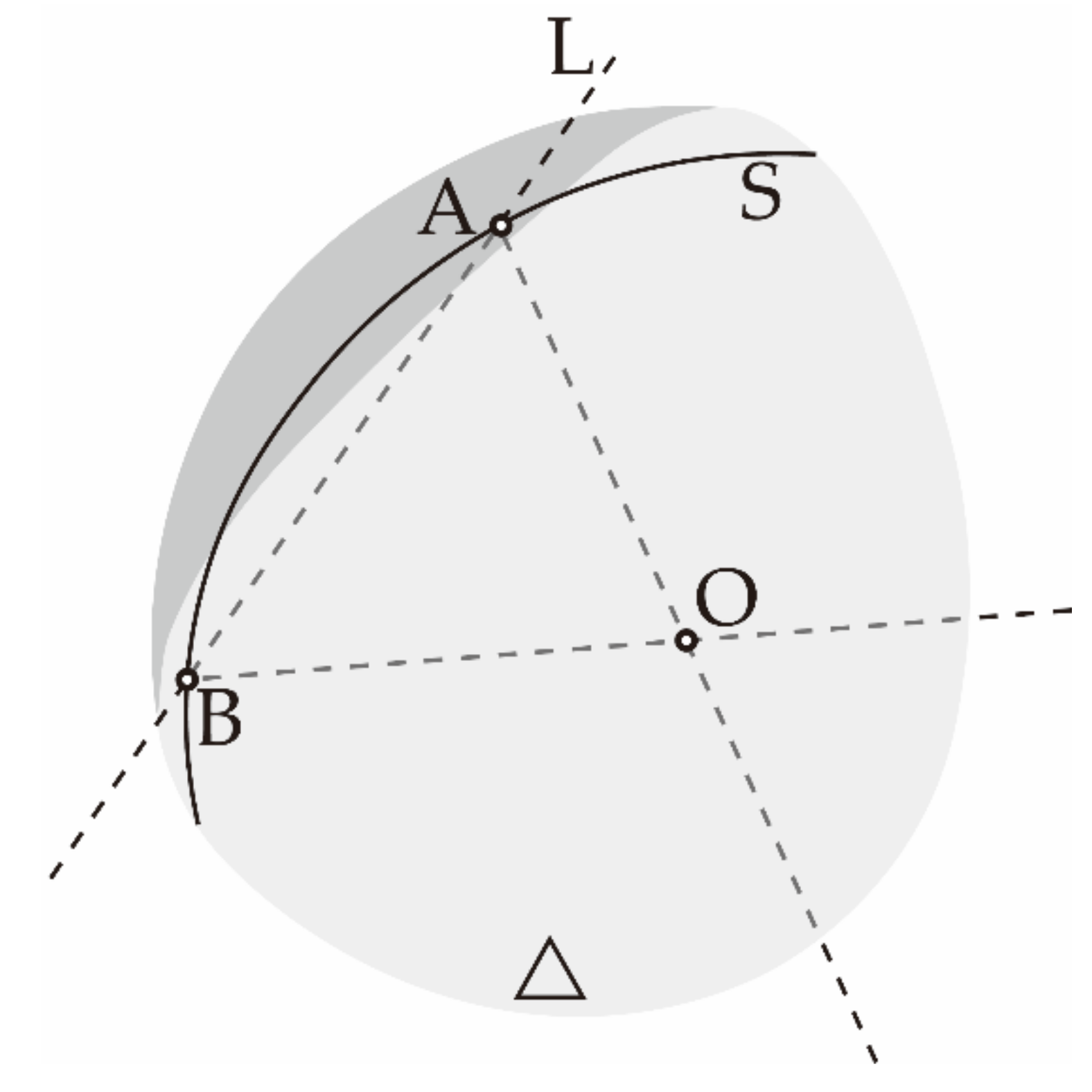

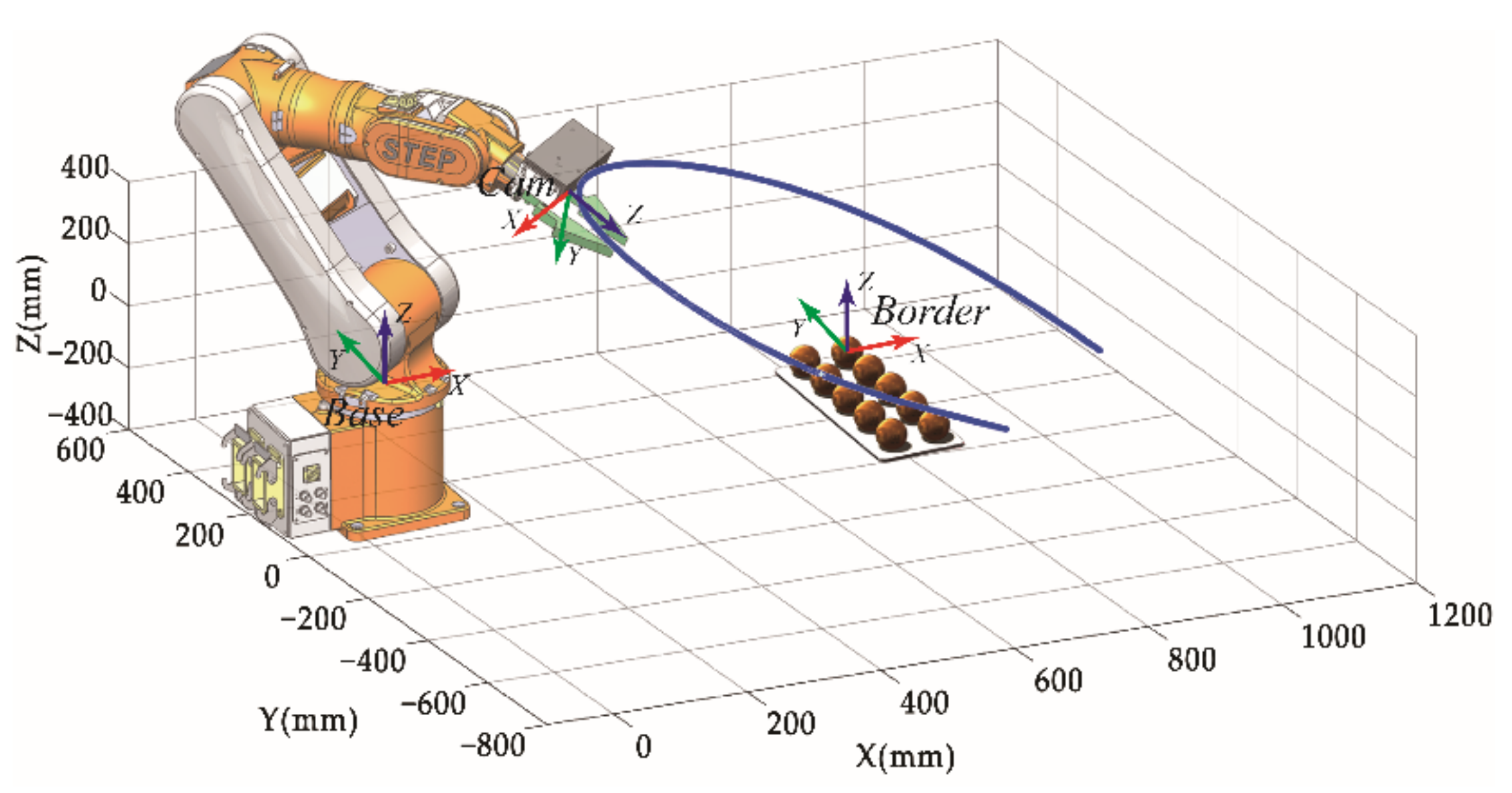

In order to achieve rapid self-calibration of the vision system and rapid target positioning, this paper builds a robotic arm system shown as

Figure 1. The system mainly includes the manipulator, end-effector, RGB-D camera and table tennis calibration board. The depth image acquisition device we use consists of two symmetric infrared (IR) cameras. The depth image is computed from the parallax of the dual IR cameras and combined with the RGB camera to generate a point cloud image with texture information.

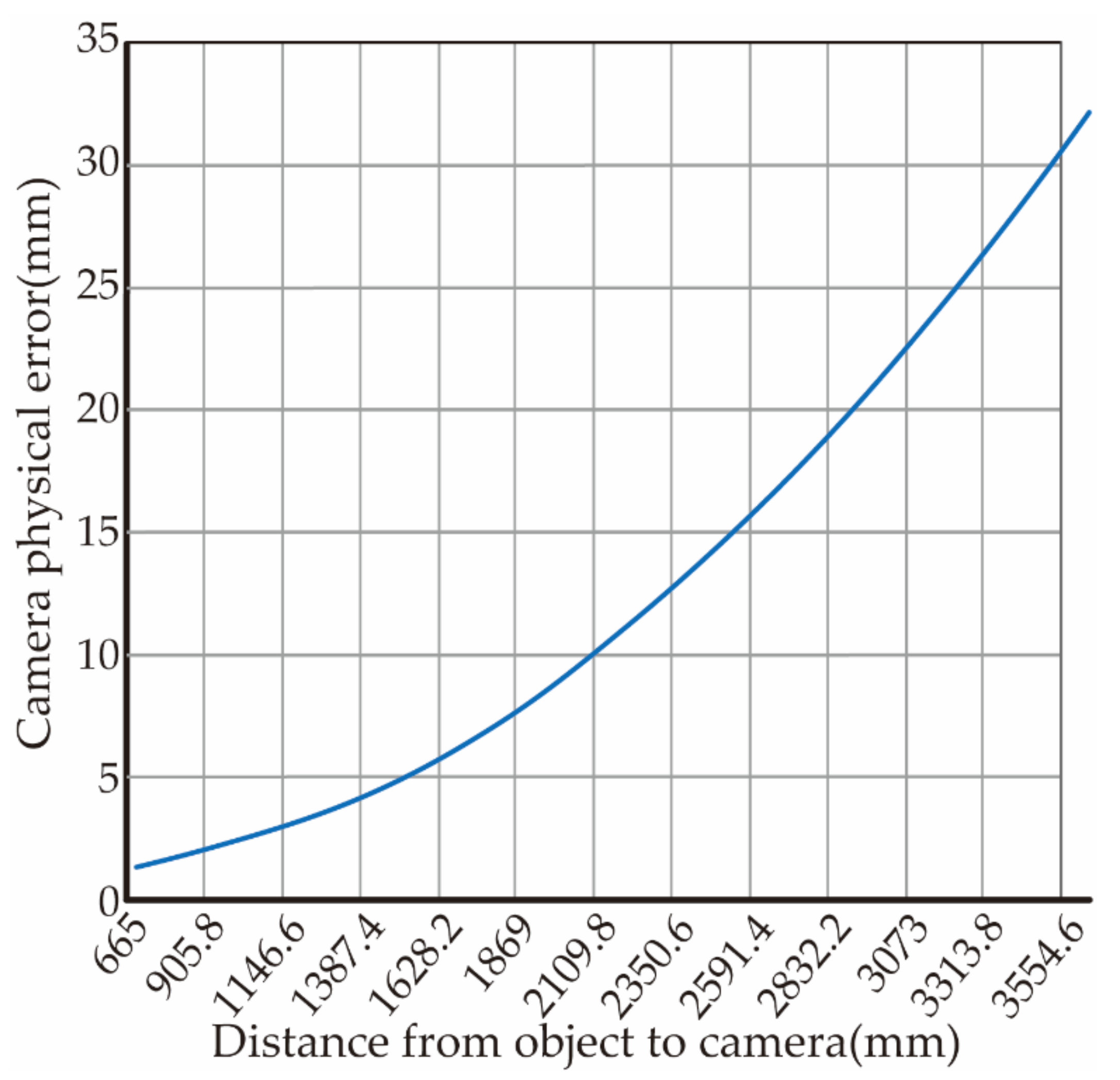

The experimental platform uses a STEP-SD500E six-axis robotic arm (repeatable positioning accuracy ±2 mm) with a pneumatic end-effector to form a robotic system. The camera uses the Percipio-FM830 RGB-D camera, which consists of an infrared (IR) projector, two IR cameras and an RGB camera. The IR projector with IR camera forms a binocular camera to acquire Depth images, and each pixel represents the distance from the camera to each point in the scene. The monocular RGB camera acquires RGB images. The acquisition error of the RGB-D camera is 0.2%~1% of the shooting distance. The image resolution is 1280 × 960.

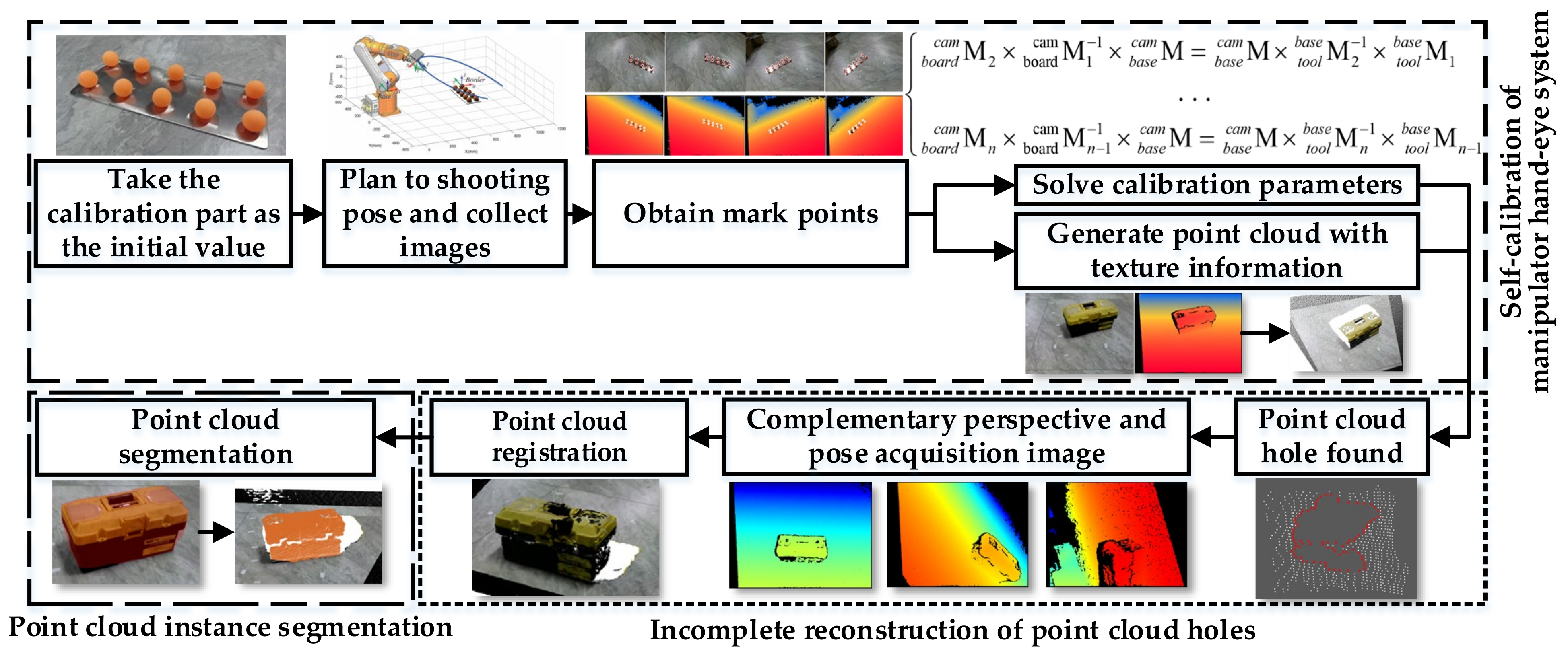

As shown in

Figure 2, the system process can be divided into three stages: (1) in the self-calibration stage of the manipulator hand-eye system, the image acquisition path planning is automatically planned based on the quaternion spherical linear interpolation method and the image of the calibration board is collected to solve the calibration parameters; (2) the point cloud hole completion stage is based on the point cloud rapid registration method of the iterative manipulator shooting pose space coordinate conversion to complete the point cloud reconstruction of the outer surface of the object; (3) the point cloud instance segmentation stage uses the improved double pyramid feature fusion depth image to segment the RGB image through Mask R-CNN, and maps it to the point cloud space to achieve point cloud instance segmentation.

3.1. Hand-Eye System Self-Calibration

The RGB-D camera used in this paper consists of an RGB camera and a depth camera, which can obtain depth images and RGB images, respectively. As the RGB camera and the depth camera are not in the same position in space, camera calibration is necessary to unify the reference systems of the two images and obtain the conversion relationship between the image coordinate system and physical coordinate system. The RGB camera is used as a reference for hand-eye calibration and completes calibration of the hand-eye system.

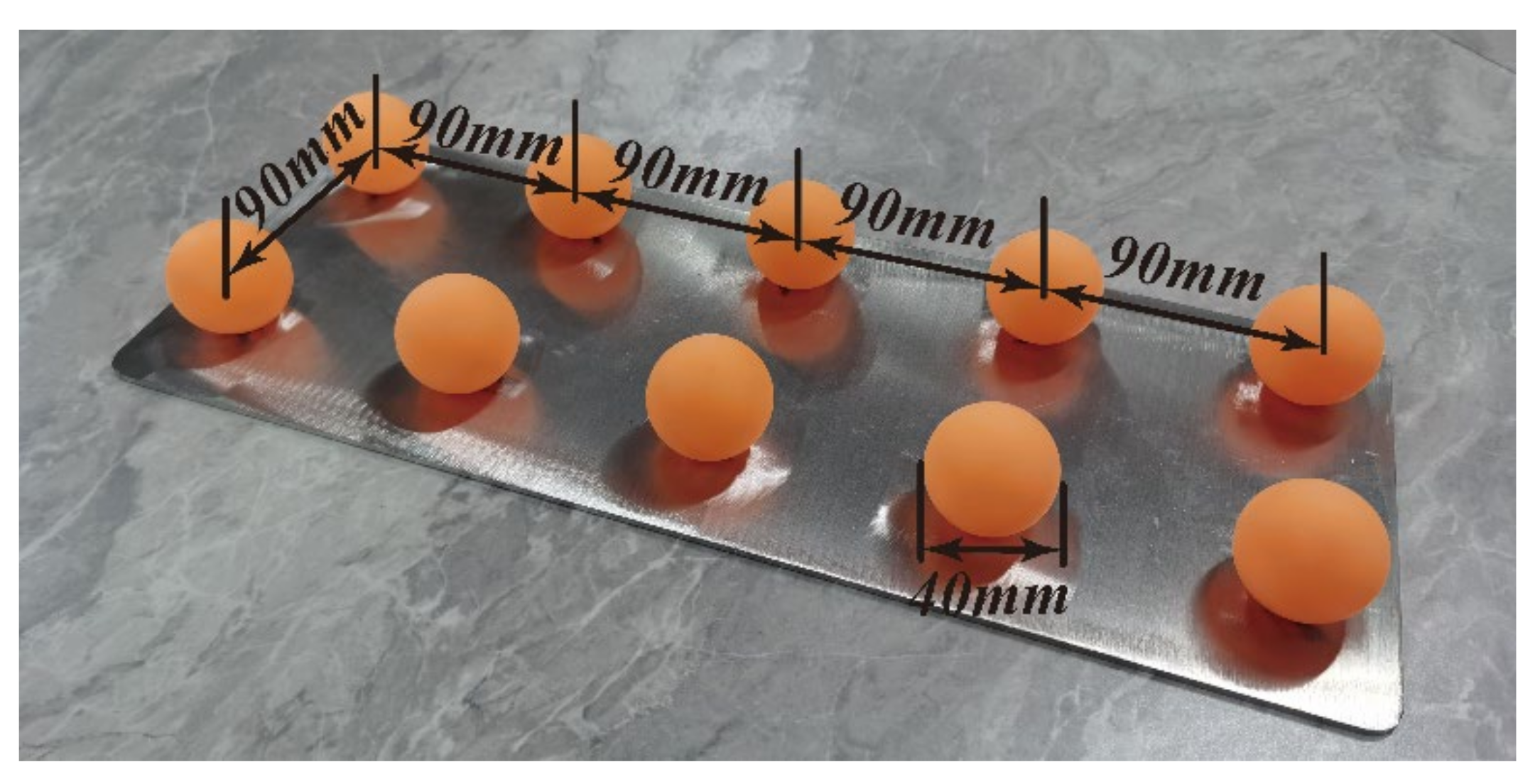

As both the depth map and the RGB map are more sensitive to the contour of the three-dimensional object. A three-dimensional calibration object needs to be made, because ping pong balls are very easy to obtain, and the size is appropriate. The balls need to be spaced in a certain viewing angle to ensure that they are not blocked from each other. This paper uses the self-made table tennis array as shown in

Figure 3 as the three-dimensional calibration object. The diameter of each ping pong ball is 40 mm and the spacing is 90 mm.

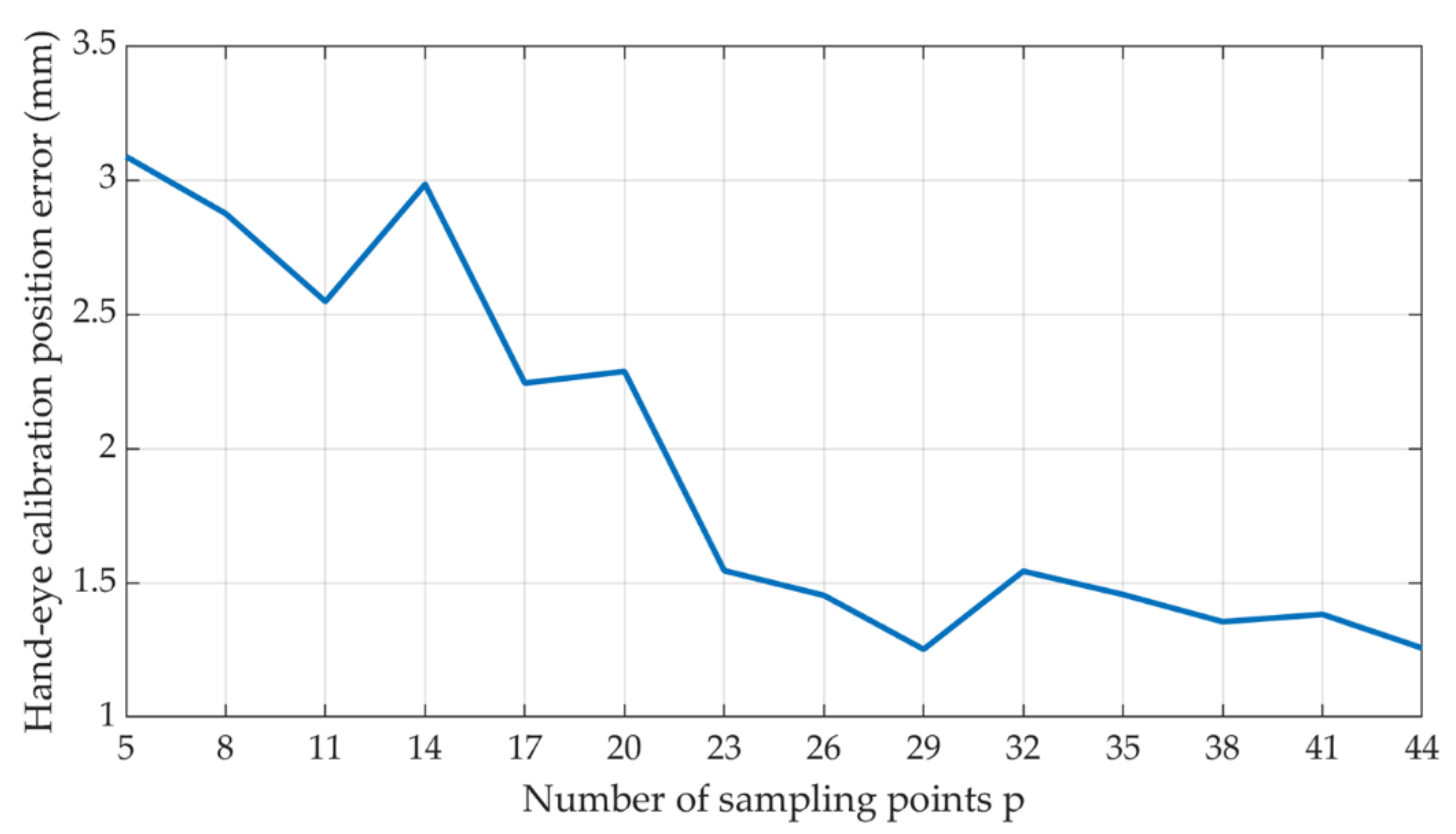

The path is automatically planned for the camera to shoot the calibration parts, and multiple sets of calibration pictures are collected. The center coordinates of the ping-pong ball under RGB and depth images are detected by Hough transform, and the two sets of linear overdetermined equations are solved to obtain the internal and external parameters and hand-eye calibration matrix of the camera.

3.1.1. Automatic Acquisition of Calibration Images Based on Spatial Spherical Interpolation

This paper proposes an automatic path planning algorithm based on quaternion Slerp space spherical linear interpolation. Given two initial shooting poses, the continuous shooting path S can be automatically planned, and multiple pictures of the calibration parts shot at different angles can be collected.

As shown in

Figure 4, the two manipulator postures

and

are manually set as initial values through the teach pendant, so that the calibration parts

and

are in the middle of the field of view of the RGB image and the Depth image.

It is also possible to use an external global camera to obtain the position of the calibrator to automatically calculate the initial value, but this increases the hardware cost and complexity of the operation.

As shown in

Figure 5, after the initial value poses A and B are located at the space point

, the intersection of the direction pointed by the manipulator end is set as the center of the circle

to make the spherical surface

. Let the intersection of L and the range of movement

of the manipulator end-effector is

. Connect

to get a straight line

, project it on

, and achieve a space curve

. Then the two ends of the record pointing to the center

of the sphere are the poses

and

, and interpolate the image acquisition pose

of

pointing to the center of the sphere

, and they are each spatially symmetric about the calibrator.

Make the transformation of posture

to posture

, the coordinates (

) of the center of posture

is under

. The direction of the

x-axis,

y-axis and

z-axis of posture

can be expressed by vectors, which are the mutually orthogonal unit vectors (

) in

. Define the rotation matrix R as:

The end pose M of the robot is composed of translational freedom T and rotation freedom R. Because they are two different units of quantities, the normalization method cannot be used for simultaneous planning. Therefore, the position and posture need to be planned separately, and then synchronized. The augmented matrix synthesized by T and R is easy to send singular solutions in the special pose of the manipulator, so a quaternion is introduced to represent the robot posture to avoid singularity [

39].

Convert Equation (1) to a unit quaternion

through Equation (2):

The unit quaternion and the quaternion in the same direction have the same rotation function, thereby the quaternion can be used to plan the direction of the path, and the position matrix T can be used to plan the position.

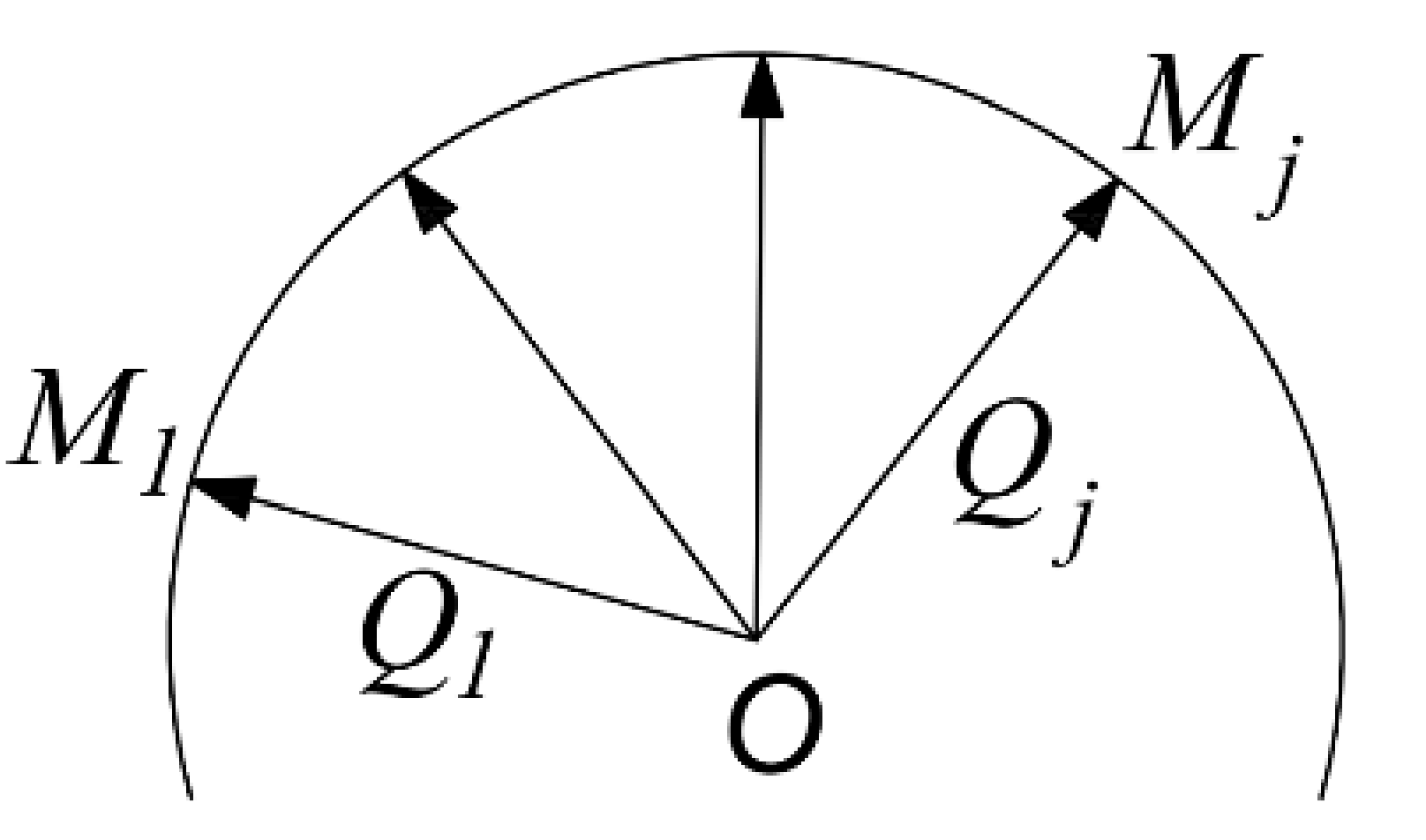

As shown in

Figure 6, the directions of the end-point poses

and

of the feasible region are represented by quaternion as

and

, and the path is planned by Slerp interpolation [

40] along the space curve S.

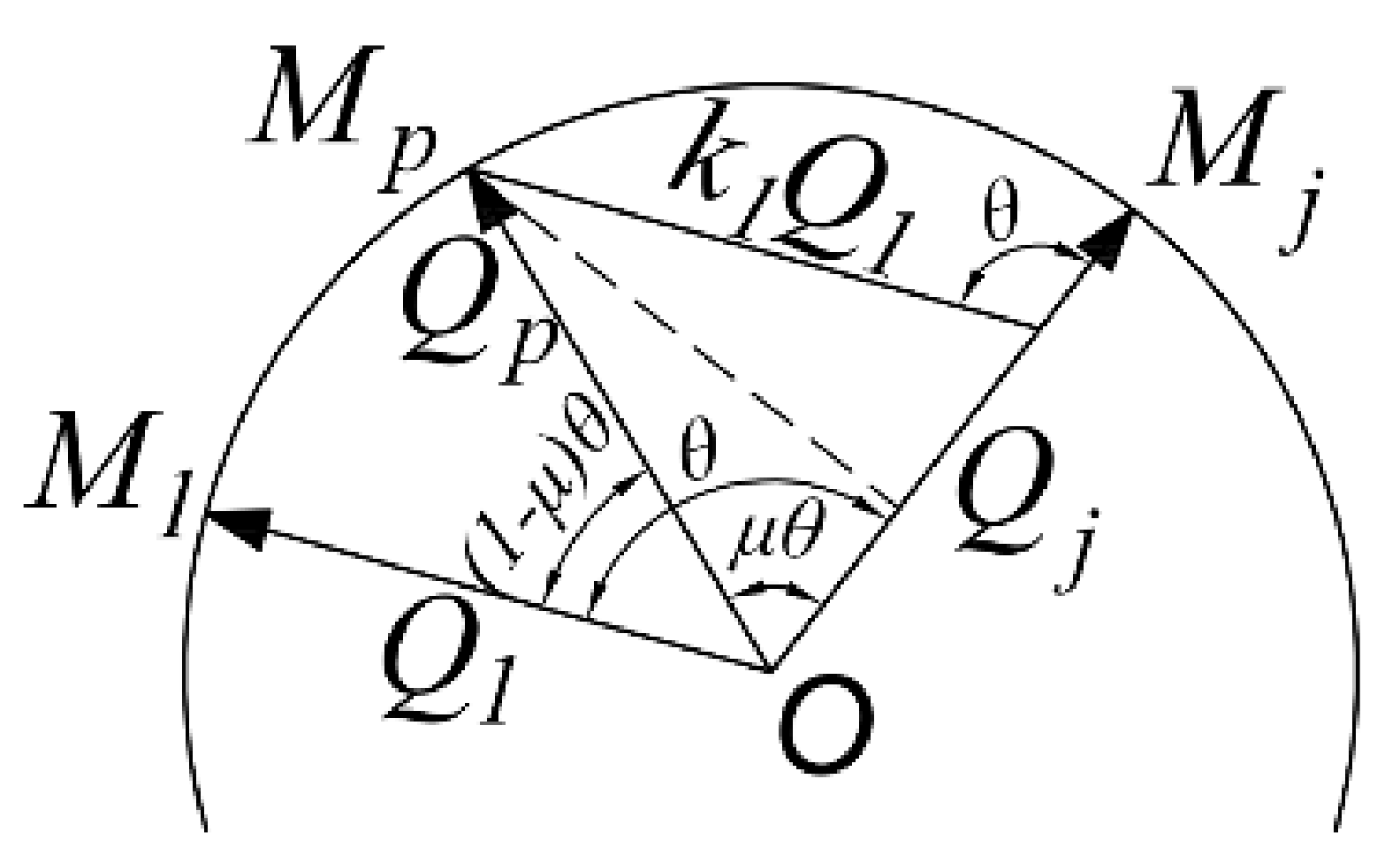

The Slerp function of the quaternion interpolation of the

-th point unit can be obtained:

In the process of real-time interpolation and image acquisition, each frame taken continuously shall be consistent with the mechanical pose of the frame as far as possible. Thereby, it is necessary to avoid time-consuming calculations such as power, inverse and multiplication of quaternion. Equation (4) can be obtained from several relationship in

Figure 7, and

is the angle between quaternions

and

.

Similarly,

can be solved. The

-th pose pointing to the center of the sphere can express the scale coefficient

as:

Slerp interpolation formula (3) is rewritten as:

In Equation (6), there are only number multiplication and addition with small calculation amount, and it can maintain high real-time performance with the camera for continuous shooting.

3.1.2. Calibration Data Processing Method

On the movement curve S,

image acquisition poses

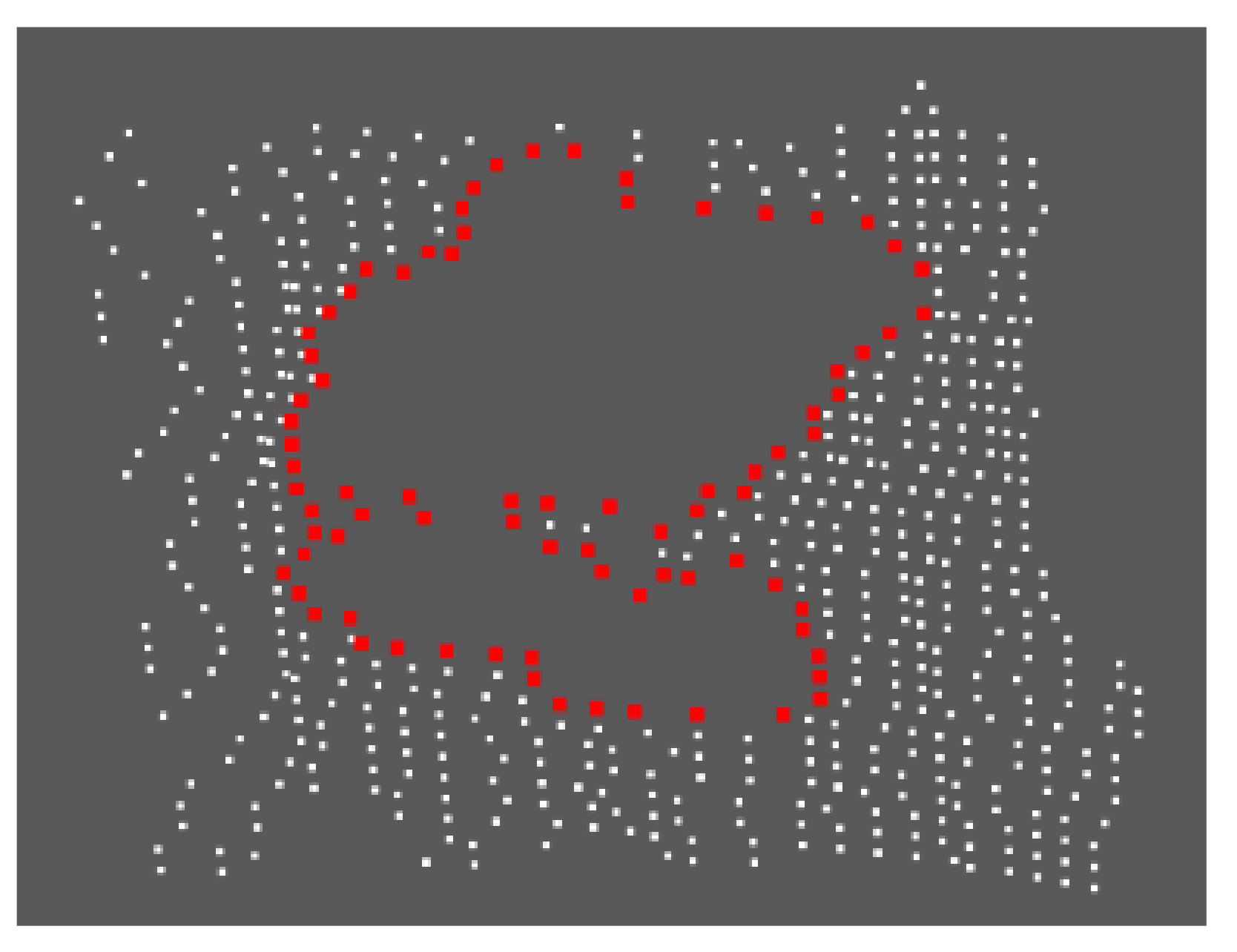

are selected to obtain the key frames of RGB and Depth images. The coordinates of the center of the sphere are extracted through the Hough circle detection algorithm, as shown in

Figure 8.

Using Zhang Zhengyou calibration method, the internal parameter matrix

and distortion coefficient of RGB camera, the internal parameter matrix

and distortion coefficient of depth camera are calculated respectively. After the distortion coefficient is used to repair the image distortion, the RGB image is fused with the depth image through Equation (7), so that each pixel of the RGB image corresponds to a depth value.

where,

is the space point converted from the world coordinate system to the color coordinate system;

is the external parameter matrix from the world coordinate system to the color camera coordinate system;

is the external parameter matrix from the depth camera coordinate system to the color camera coordinate system;

is the point from the depth map to the world coordinate system;

is the external parameter matrix converted from the world coordinate system to the depth camera coordinate system, and

is the three-dimensional point coordinate of a point in the depth map of the depth camera coordinate system.

After fusing the depth values, 4 channels of data are obtained, which are three color components and one depth component, and then a textured point cloud image can be constructed.

Taking the RGB camera as the reference, the pose sequence

of the calibration part under

camera coordinate systems by locating the center point of the calibration plate is obtained. Corresponding to the

pose sequence

of the manipulator end-effector during shooting, the hand-eye calibration matrix is calculated by Equation (8).

where, as the calibration part is fixed relative to the coordinate system

, and

is constant;

is obtained by the image algorithm through each shot of the calibration part, and

can be obtained by reading the manipulator teach pendant.

can be obtained by solving Equation (8), and the hand-eye calibration matrix

can be obtained through Equation (9).

Generally, given only two initial shooting values, the calibration parameters of RGB-D camera can be automatically calculated by this algorithm, and the textured point cloud can be generated by integrating RGB and depth data, to finally realize the hand-eye calibration of manipulator and RGB-D camera.

3.2. Fast Point Cloud Registration

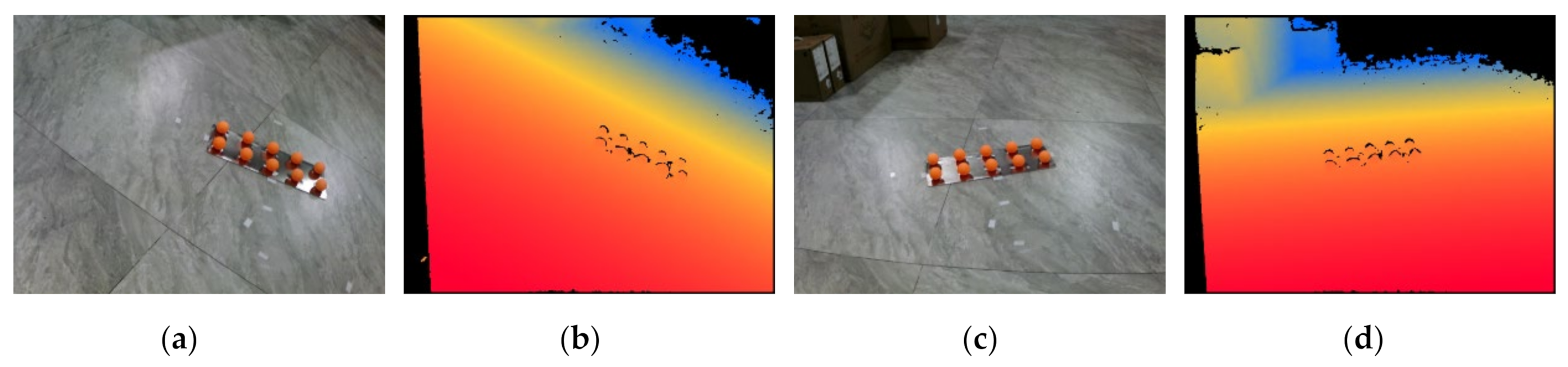

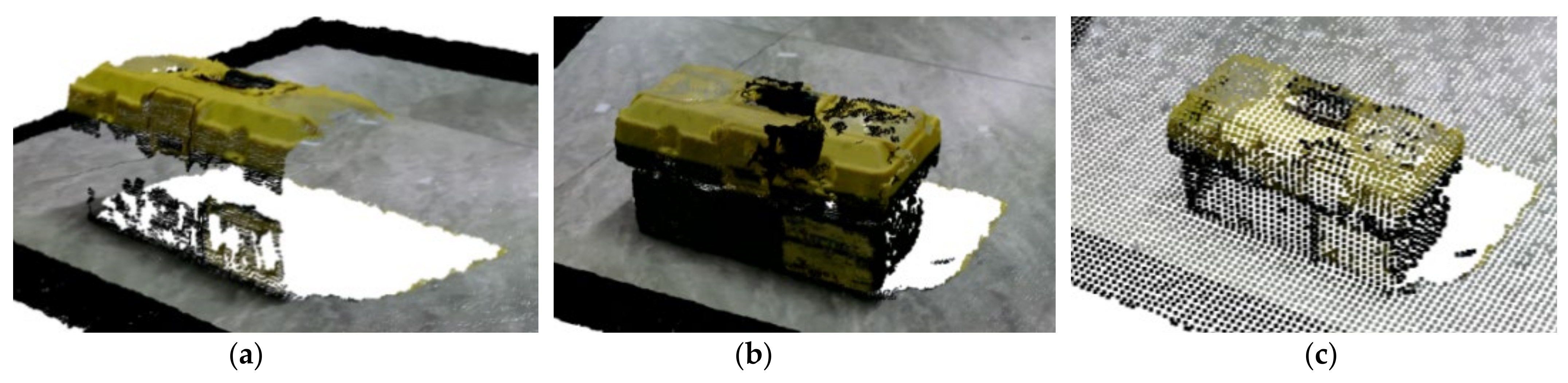

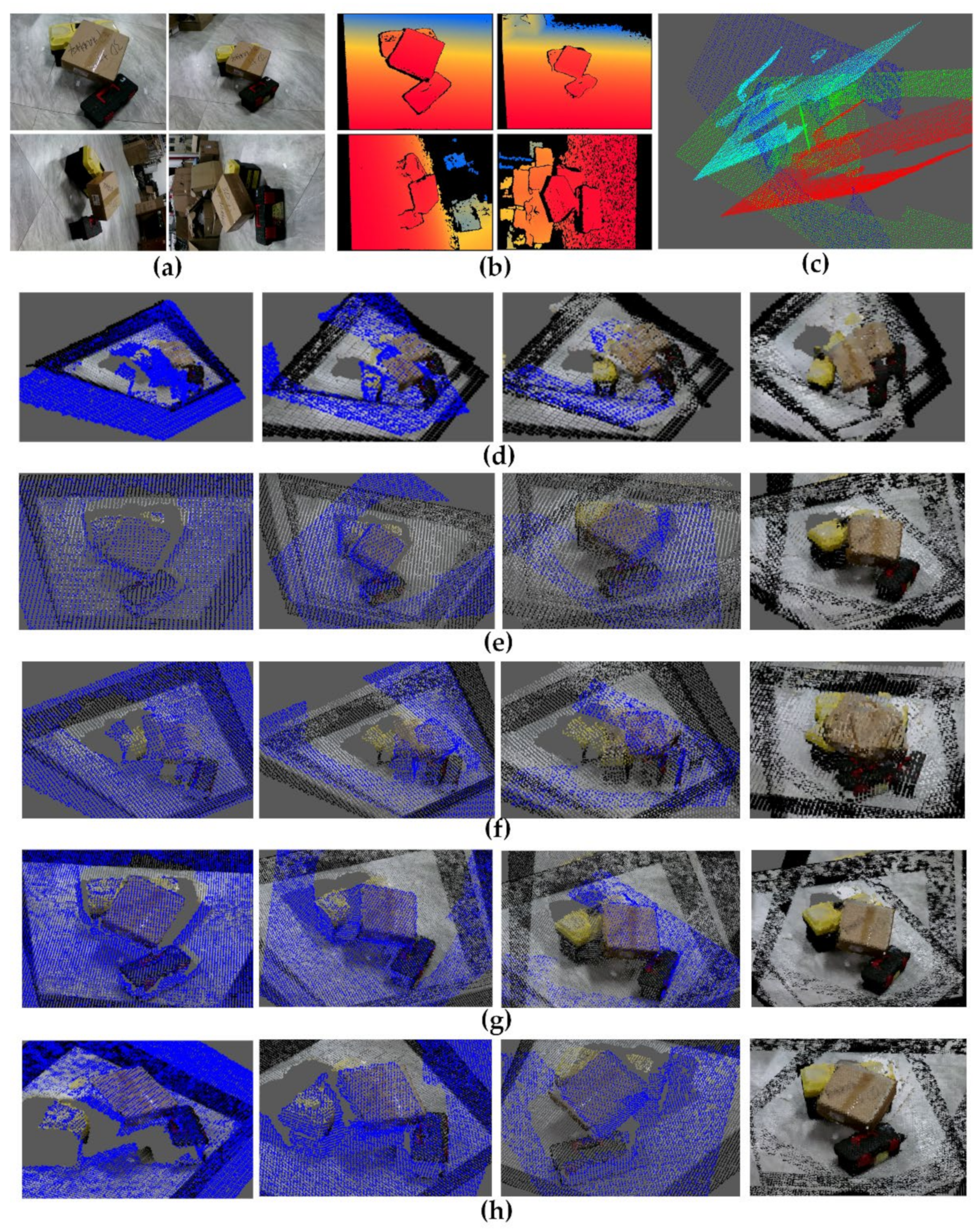

The point cloud image obtained by photographing the target in the scene with RGB-D camera usually forms the point cloud hole in the blind area of field of vision as shown in

Figure 9. This results in reconstruction failure and is unable to provide accurate pose information of the target for subsequent grasping, dispensing and assembly. Thus a point cloud hole filling shooting method is proposed, and fast point cloud registration is conducted.

3.2.1. Pose Calculation of Point Cloud Hole Completion Shooting

- 1

Hole discrimination:

KD tree with strong adaptability, low memory consumption and not affected by point cloud density is used to establish the topological relationship of point cloud. The Euclidean distance from the sampling point to its adjacent points is calculated. The point whose distance is less than the given hole repair threshold is determined as the boundary point of the hole.

Firstly calculate the average density

of the point cloud.

points are randomly selected from the sample as feature points to obtain the feature point set

, and

. KD tree is used to traverse each point in the point cloud to find the k points with the smallest distance from it. Calculate the distance

between K adjacent points and

, and average all

to obtain the point cloud density

. The hole threshold

is obtained from the point cloud density

by specifying the threshold coefficient

.

Then K neighborhood search is used to traverse all points of the point cloud, find the points closest to them, and calculate the distance between this point and points, . Once a distance in sequence is greater than the hole threshold , the point is considered as a boundary point and saved in the boundary point set .

After the boundary point set

is obtained, the boundary

is further extracted. Using the K neighborhood search method, K adjacent points

of

is found to obtain the boundary

and calculate the pose

of each hole. Where n is less than or equal to the number of holes, and the local hole boundary detection effect is shown in

Figure 10.

- 2

Complementary image shooting pose calculation:

After knowing the pose of the hole compared with the object , it is necessary to automatically plan the manipulator to move to the appropriate pose to supplement the blind area of the field of view collected for the first time. Take the coordinate of a point on the object as , the first shooting pose as , as the center of the circle and as the radius to make the spherical surface . Note that the intersection of and the activity range of the manipulator end-effector is . The normal plane composed of the missing hole contour of the point cloud is moved to the spatial sphere through the translation matrix .

Convert it to the manipulator shooting pose through the following formula:

Repeat the above process, and stop if one of the following conditions is met: (1) the missing hole has no intersection with the feasible region of the manipulator after moving through the translation matrix, i.e., the manipulator cannot move to the supplementary image shooting pose due to its mechanical structure; (2) no holes that meet the requirements, i.e., the holes in the working part of the model are supplemented and meet the requirements; (3) when the set upper limit of image acquisition is reached, image acquisition is stopped.

3.2.2. Registration of Complementary Point Clouds

According to the position relationship of the manipulator under different shooting angles, the point cloud coarse registration is carried out for the collected multi-frame data, and then the ICP algorithm is used for the fine registration of coarse registration results. After multiple sets of point cloud data are registered, the point cloud is too dense and redundant, and downsampling algorithm is needed to remove redundant points.

- (1)

Coarse registration of point cloud based on manipulator pose: Record the pose

of the manipulator when collecting images, and obtain the pose

of the camera through the hand-eye calibration matrix

.

The initial point cloud data collected each time is moved to the

coordinate system through rigid body transformation, so that the point cloud coordinate system coincides with the base system and satisfies,

where,

and

are the coordinates in the coordinate system before and after the point cloud conversion.

- (2)

The steps of point cloud fine registration based on ICP algorithm are as follows:

Step 1: Convert the point cloud collected for the first time to the coordinate system through Equation (13) to obtain ;

Step 2: Convert the point cloud collected for the time to the through Equation (13) to obtain . Then ICP algorithm is used to register to based on and fuse into ;

Step 3: Repeat Step 2 until all complementary point clouds are registered to obtain a composed of multiple complementary point clouds.

- (3)

Downsampling of point clouds

After multiple sets of point cloud data registration, although some holes are filled, it also causes the redundancy of point clouds in overlapping areas. It is not conducive to the segmentation of point clouds. In this paper, a method based on geometric sampling is proposed to the downsampling of the registered point cloud to obtain point cloud data with uniform density. This not only reduces the amount of invalid data, but also retains the details of the model.

Firstly, the k-nearest neighbor point cloud around each point cloud is approximately in a local plane. The implicit surface equation obtained by least square fitting is

, and then the Gaussian curvature K at point

is calculated as follows:

where,

is the gradient corresponding to the implicit equation at

;

is the Hessian matrix describing the local curvature of the function, and

is the adjoint matrix of

.

The given point cloud model

is represented by

, and the point set of iterative farthest point sampling can be expressed as

. Assuming that the current sampling is point j, the next sampling point should be point m

that farthest from point j, and m satisfies:

where,

is the distance between two points. It can be seen from Equation (15) that the fusion of Euclidean distance and curvature can sample more model details, and

where,

is the curvature of m, and

is the weight set to balance Euclidean distance and curvature.

Figure 11a is a point cloud image with multiple holes generated by the viewing angle blind area acquired for the first time;

Figure 11b is the point cloud model after complementary point cloud registration, which completes the point cloud model within the activity range of the robotic arm.

Figure 11c is a sparse point cloud model that can accurately express the shape of an object after geometric sampling, and it also can save computational resources for subsequent operations.

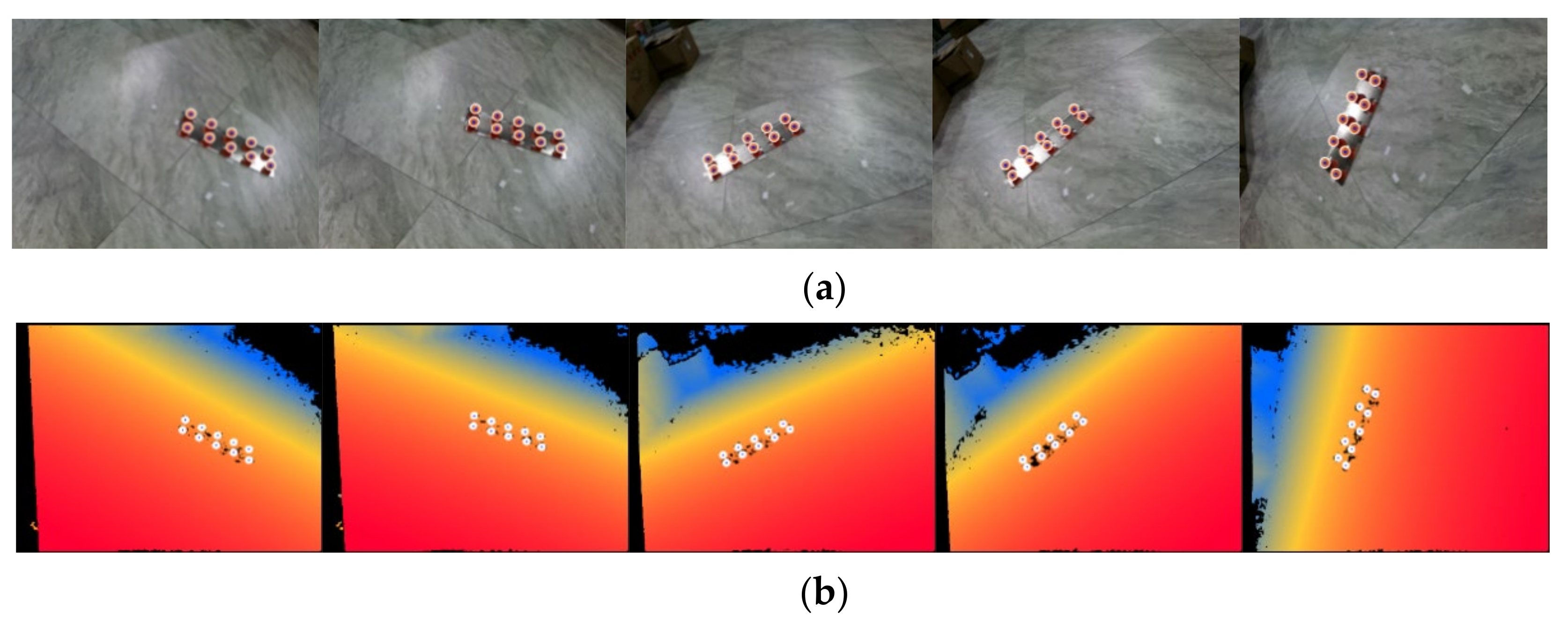

3.3. Point Cloud Instance Segmentation

Presently, the traditional point cloud segmentation methods cannot segment the point cloud in multiple scenes. As multi-dimensional information, the huge data structure and chaotic distribution of point cloud also bring great difficulties to the data annotation of deep learning. Thus RGB-D image acquisition equipment provides us with new ideas. The RGB image is used to fuse the depth image for two-dimensional input, and the Mask-RCNN is improved by using their sensitive characteristics to the object boundary. It not only facilitates the annotation training, but also increases the accuracy of segmentation. The RGB segmentation results of the fusion segmentation are obtained and mapped to the point cloud space to achieve point cloud segmentation.

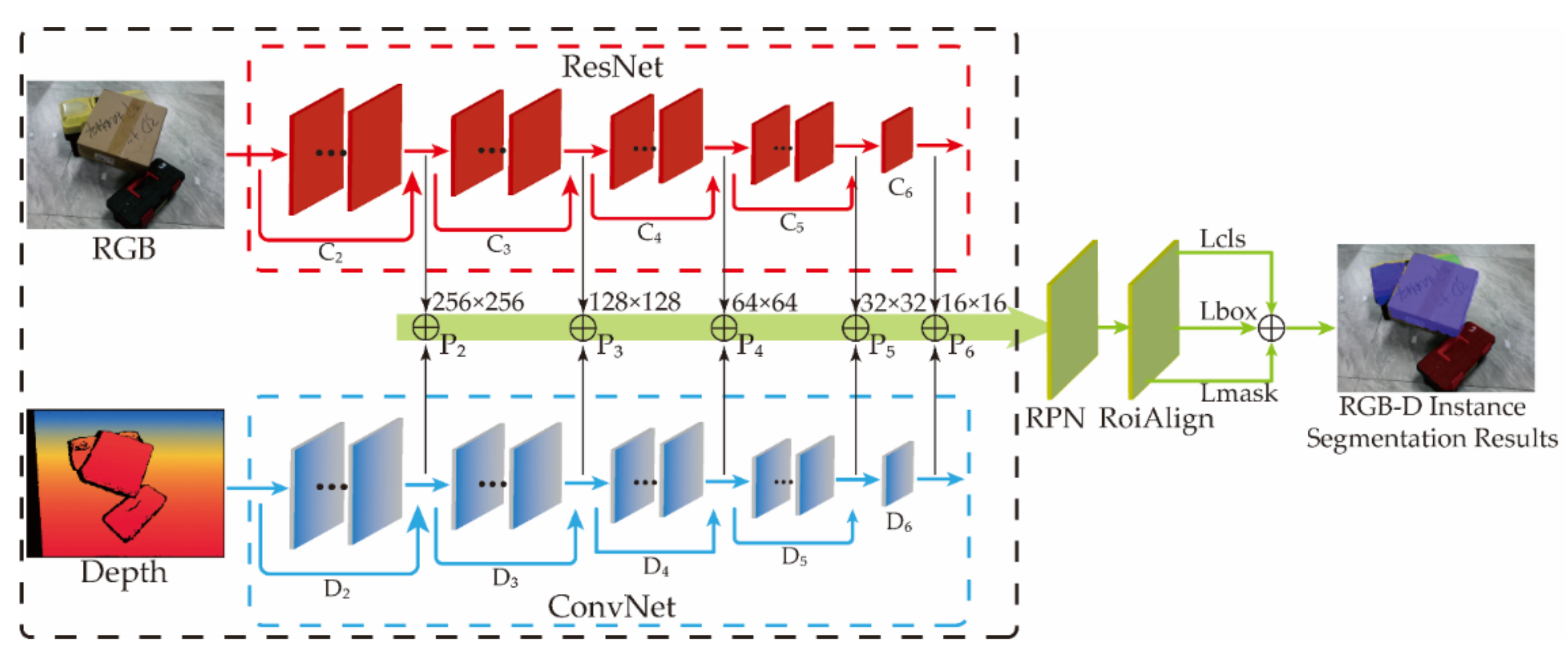

In order to better combine the complementary advantages of RGB and depth images, this paper proposes a double pyramid feature fusion network, which uses convolutional networks of different complexity to extract RGB and depth features, respectively.

The residual network with a deeper network layer is used to extract the features of RGB images, and the network branch with a smaller number of layers is used to extract the features of depth images. As shown in

Figure 12, the image pyramid is used in the feature extraction stage to save RGB and depth features of different resolutions. RGB features with the same resolution are added with depth features, and then input them the into RPN (region proposal network) layer. The header structure network segment of Mask-RCNN forms a double pyramid feature fusion network to realize multi-class instance segmentation of RGB-D images.

Studies have shown that the feature maps at the top of the image pyramid will contain richer semantic information, but the corresponding location information is less accurate. Although low-level feature maps contain less semantic information, the location information is more accurate [

41]. In this paper, the application more focuses on the accurate extraction of location information. Depth information is more effective in position detection tasks, and the results of semantic detection are inaccurate in most cases. Therefore, the use of RGB images and depth images at the same time can achieve the enhancement effect of two features. The high-level RGB features contain rich semantic information, and the low-level depth features contain accurate location information. This requirement can be met by fusing the relatively low-level depth feature with the RGB feature of the same resolution and inputting it into the shared feature map of RPN.

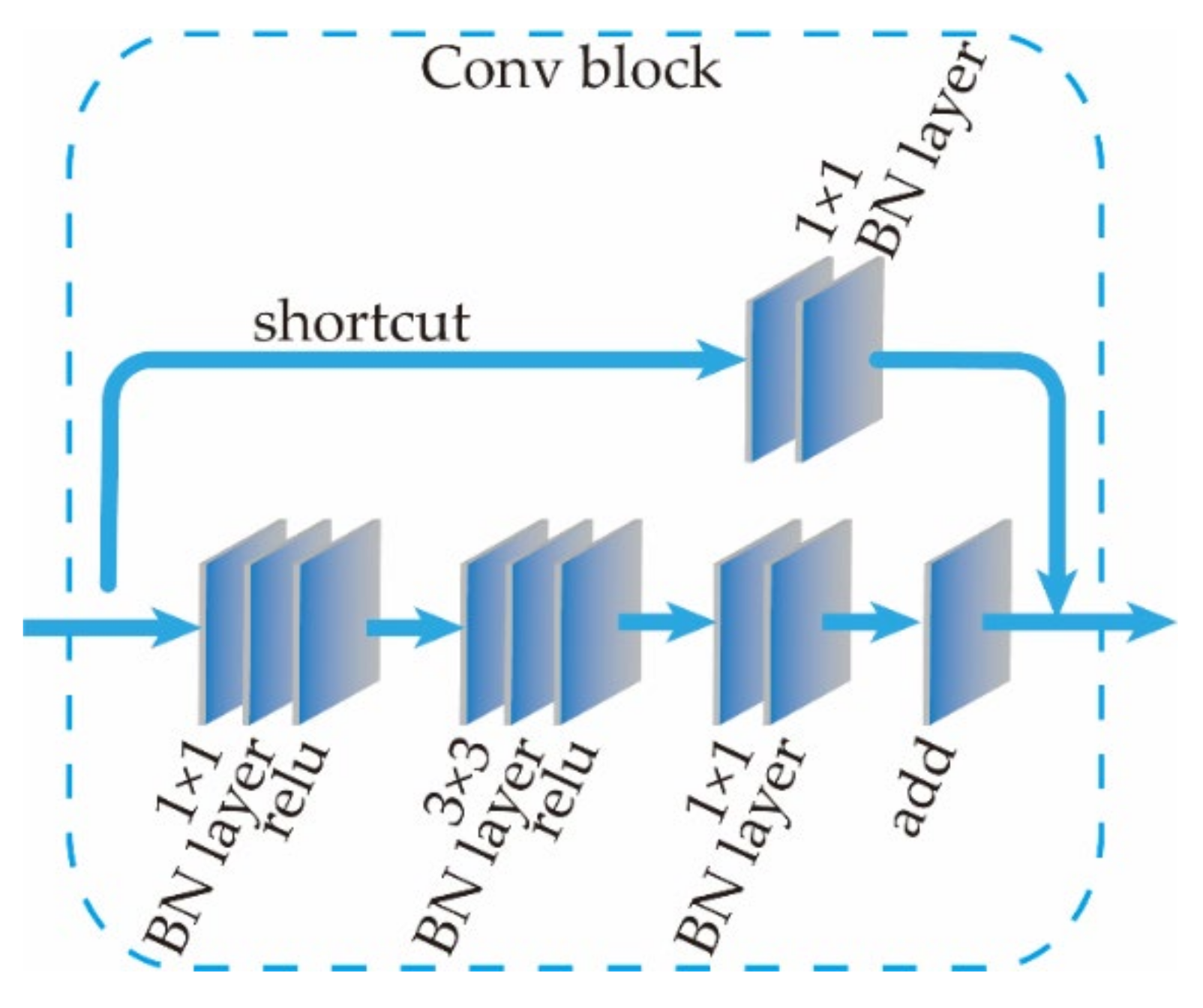

Based on the above inference, in order to achieve the effect of making full use of depth information, the same residual network as the RGB branch cannot be used. Referring to the structural design of residual network convolution module [

42], the depth feature maps of different resolutions are extracted through multiple convolution blocks, and the structure of one convolution block is shown in

Figure 13.

The main structure of the convolution block contains three convolution layers, and the shortcut connection network path contains a single convolutional layer and a single BN layer. The size of the convolution kernel is 1 × 1, and the step size is 2. The input depth feature map passes through a convolution block model, and its size is one-fourth of the original. A structure similar to this module in the residual network structure of RGB features is extracted, but it contains many deep residual block models. Hence, for the feature map with the same resolution, the Depth image undergoes fewer convolution operations, and the extracted features are lower than the RGB features. This conforms to the RGB feature-guided semantic segmentation, and the depth feature perfects the target of position detection.

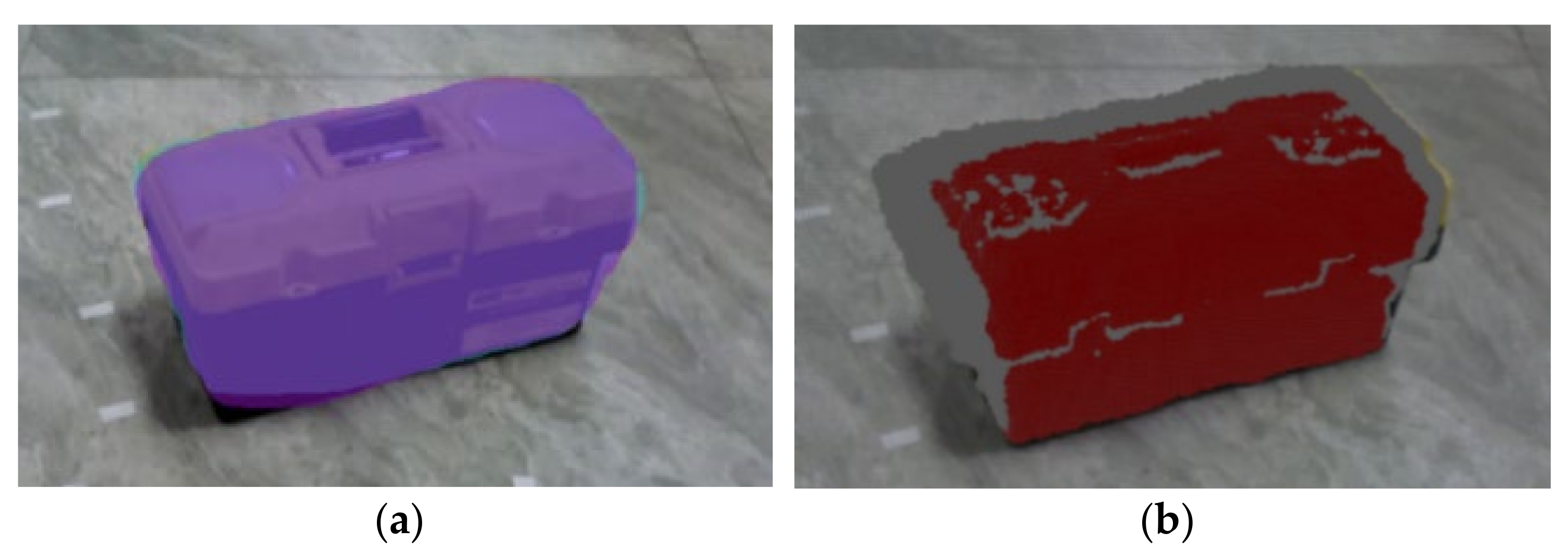

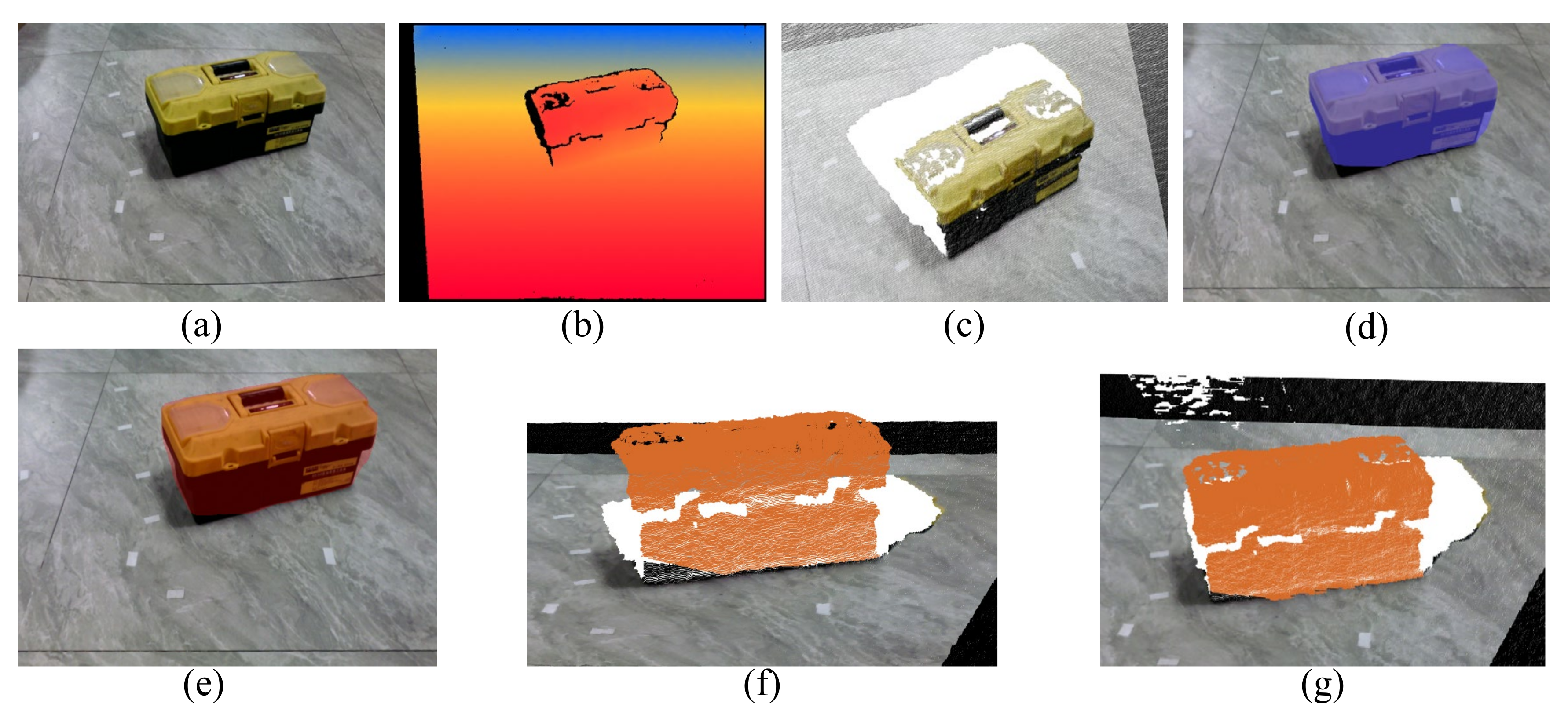

The instance segmentation result under RGB image obtained by the algorithm finally is shown in

Figure 14a, which indicates that the toolbox is more accurately marked in the image. The marked ROI (region of interest) is mapped to the point cloud space, and the point cloud of the toolbox is segmented from the point cloud image, as shown in

Figure 14b with a red point. The segmentation results meet the expectations of the algorithm design.