Mapping Directional Mid-Air Unistroke Gestures to Interaction Commands: A User Elicitation and Evaluation Study

Abstract

1. Introduction

2. Related Work

2.1. Command-to-Gesture Mappings for Mid-Air Unistroke Gestures

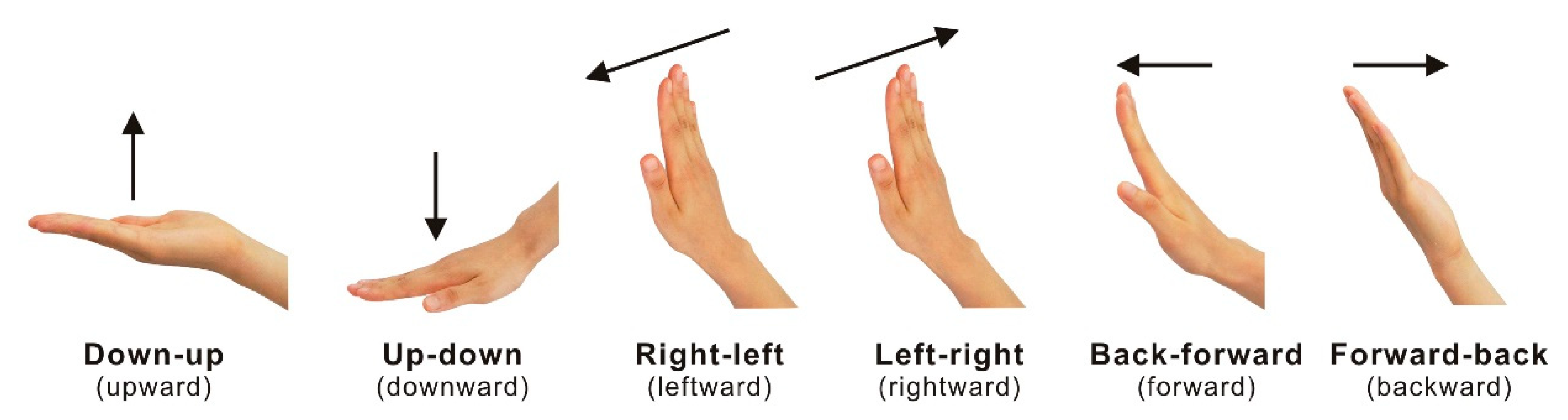

2.2. Theories of Semantically Mapping a Command to the Direction

3. Experiment 1: User Elicitation Study

3.1. Interaction Scenarios and Commands

3.2. Experimental Design

3.3. Participants

3.4. Apparatus

3.5. Experimental Materials

3.6. Procedure

3.7. Results

3.8. Discussion of Experiment 1

4. Experiment 2: Evaluation Study

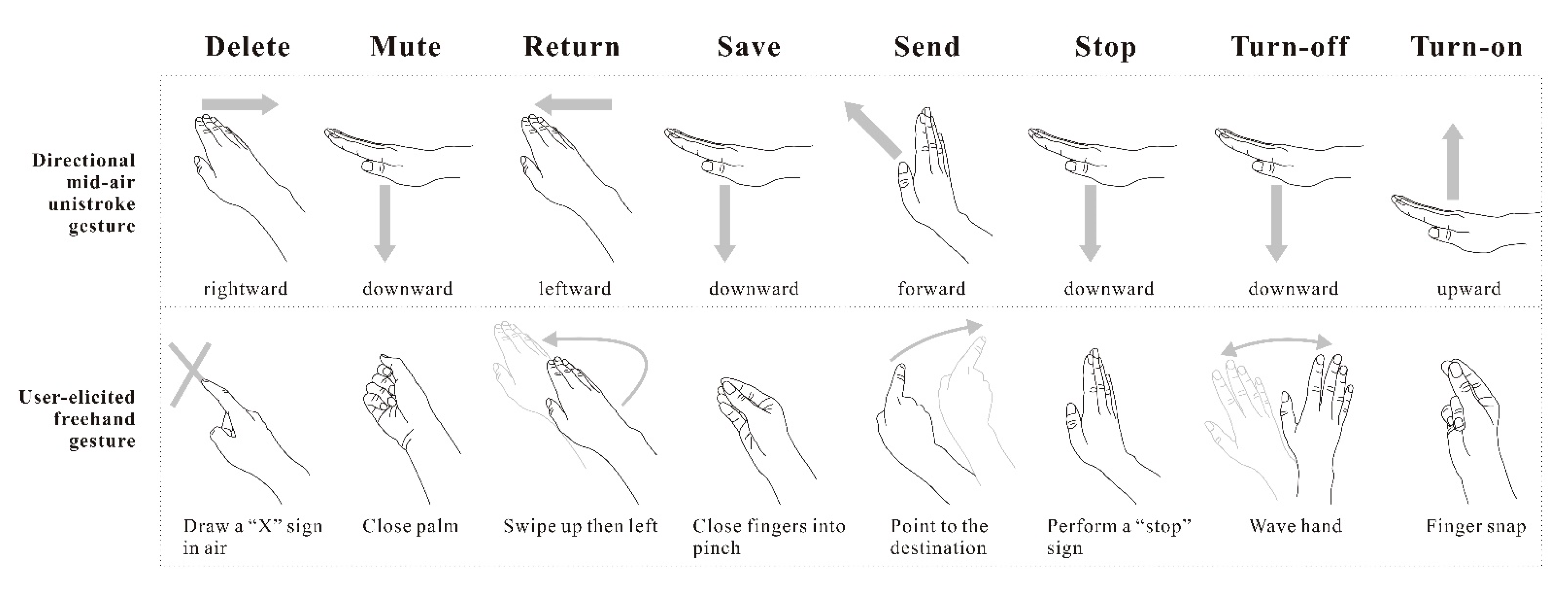

4.1. Defining the Directional Unistroke Gestures and User-Elicited Gestures

4.2. Experimental Design

4.3. Participants

4.4. Apparatus

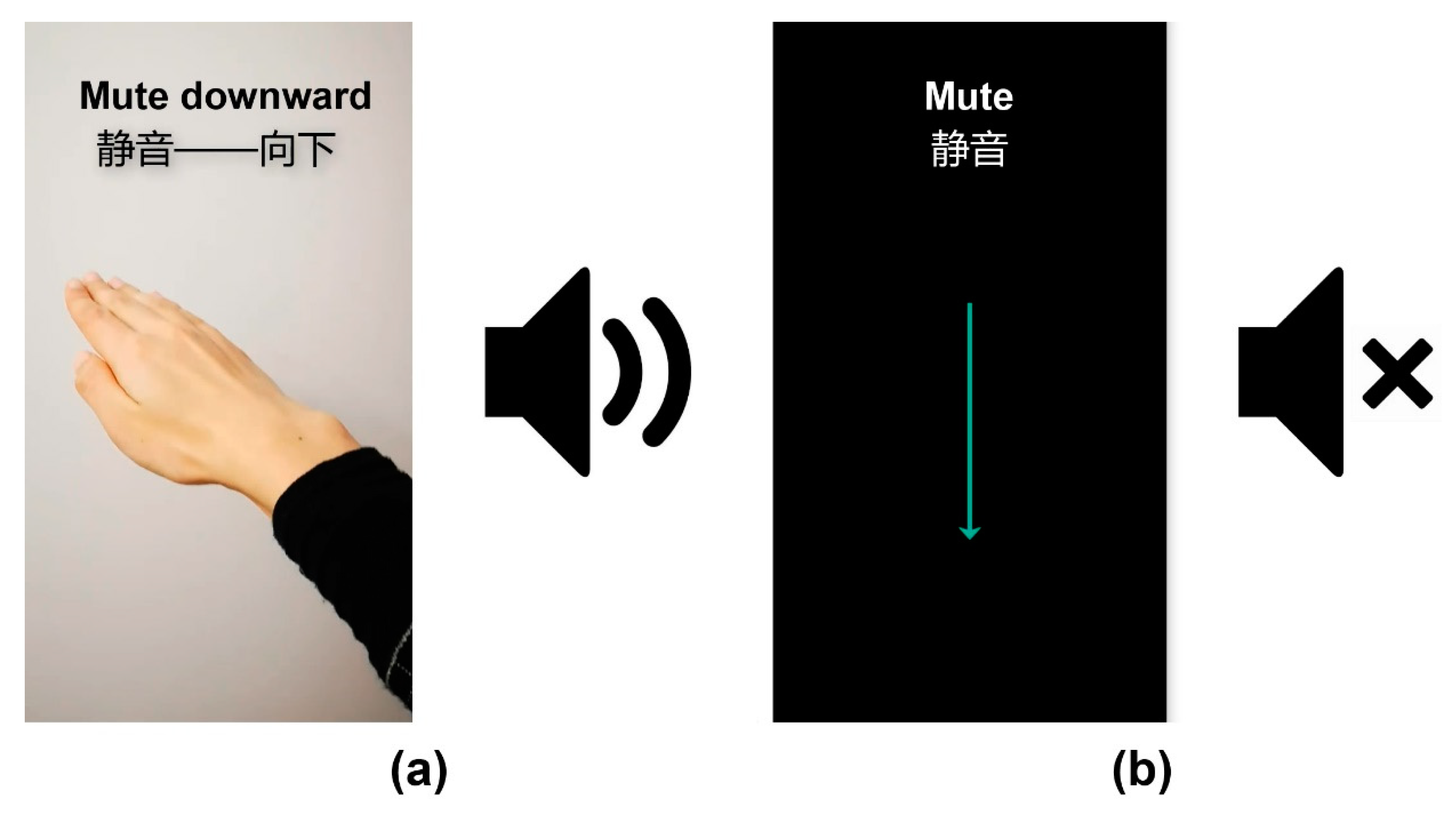

4.5. Experimental Materials

4.6. Procedure

4.7. Data Analysis

4.8. Results

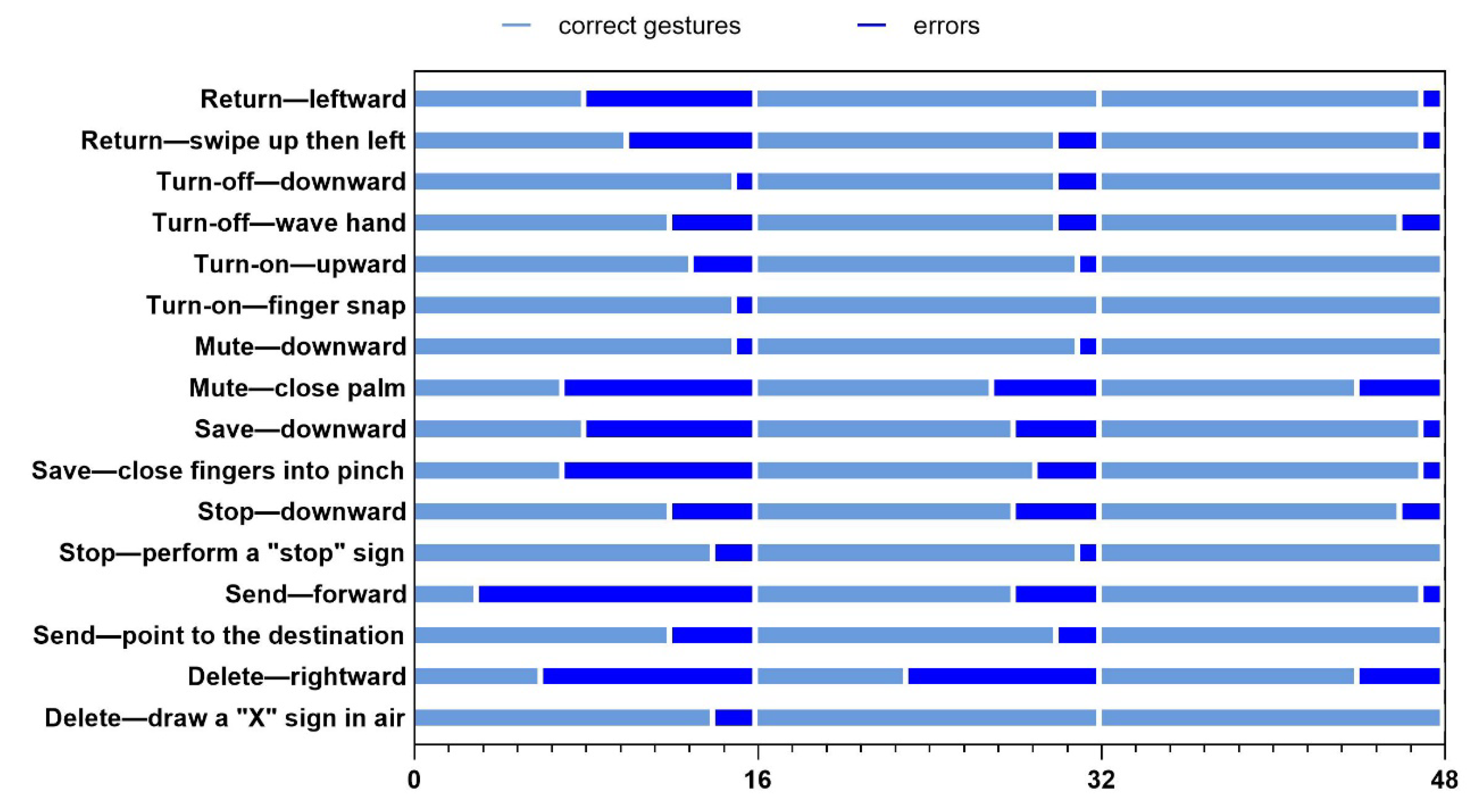

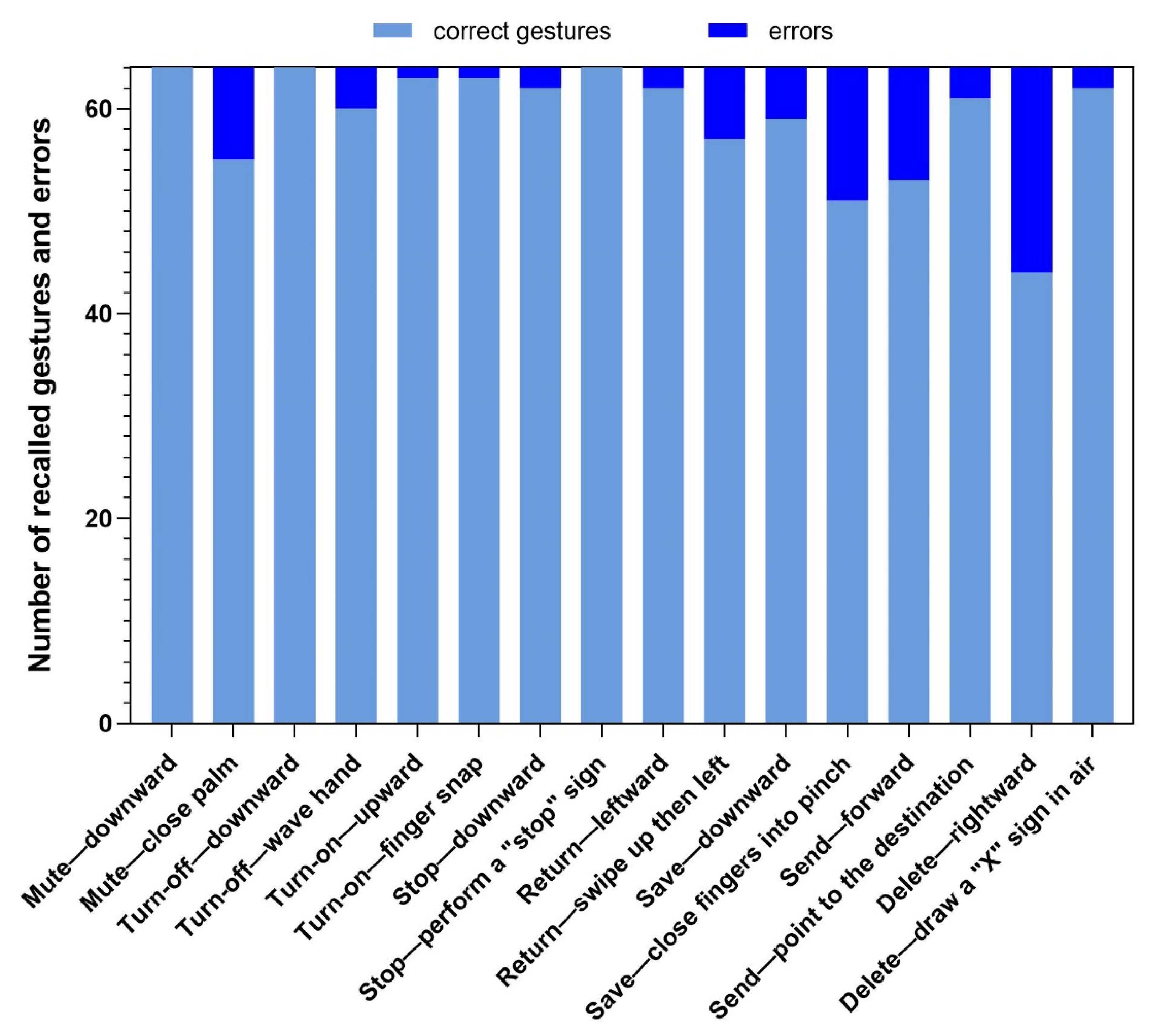

4.8.1. Recall Rate in the Learning Phase

4.8.2. Recall Accuracy in the Test Phase

4.8.3. Response Time

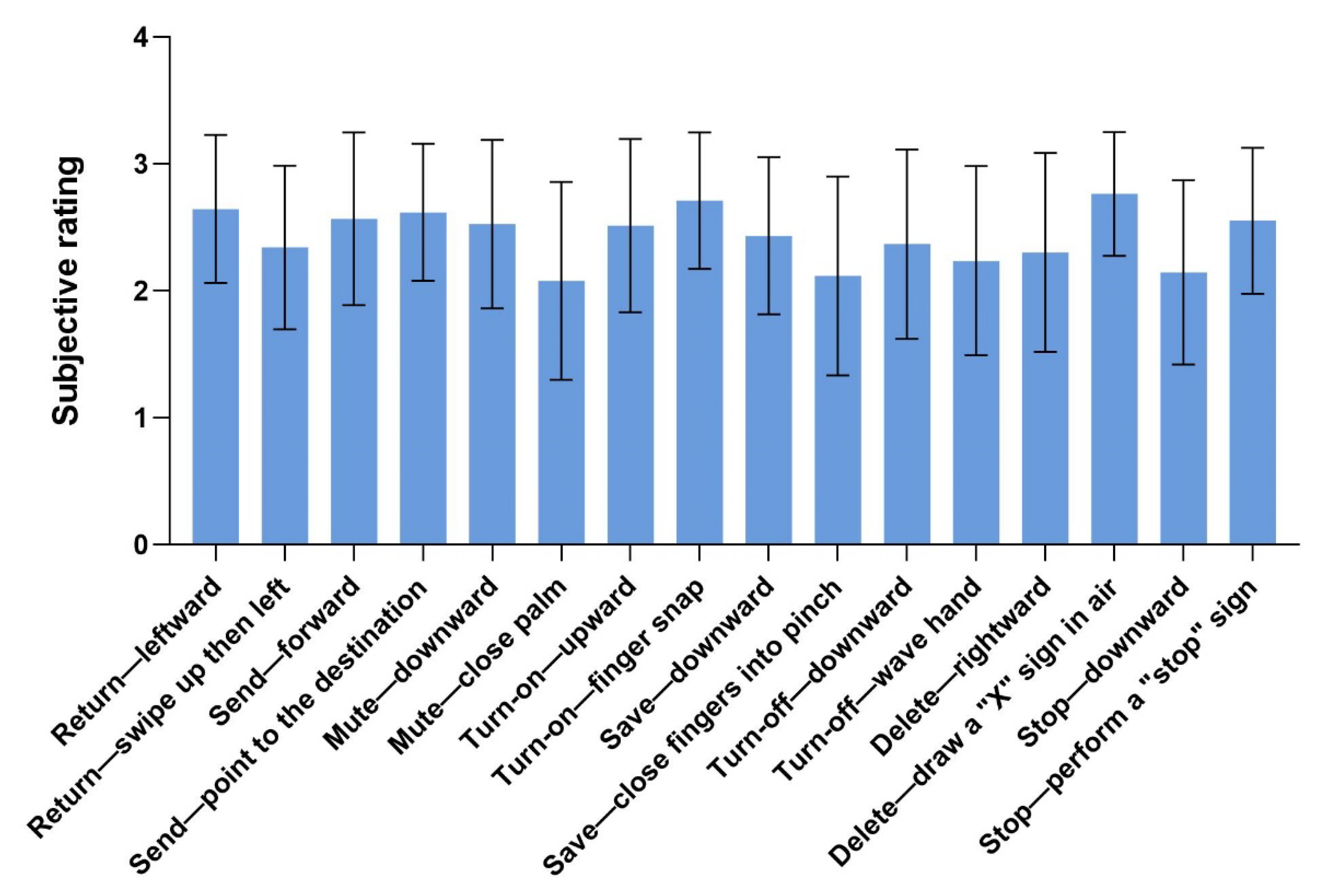

4.8.4. Subjective Rating

4.9. Discussion of Experiment 2

5. General Discussion

5.1. Suitable Commands for the Mapping to Direction of Unistroke Gesture

5.2. Ambiguity of Directional Mid-Air Unistroke Gestures

5.3. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Commands/Referents | Descriptions | Scenarios |
|---|---|---|
| Display | To awaken a device and images show on the screen. | Controlling a multi-media program or a device |
| Turn-on | To turn on a device or a machine to make it work. | |
| Turn-off | To turn off a device or a machine. | |
| Mute | To make the sound of a device quiet. | |
| Screen Capture | To capture an image displayed on a screen. | |
| Start | To turn on a device, an instrument or open an application. | |
| Stop | To turn off a device, an instrument or close an application. | |
| Zoom in | To show a close-up picture of items. | |
| Accept | To accept a request from system or other users. | Browsing web pages, using Apps or doing remote interactions |
| Delete | To discard a file or remove something. | |
| Hide | To make a program not show on the current page. | |
| Pop up | To make a hidden program or a window show on the current page. | |
| Reject | To reject a request from system or other users. | |
| Return | To go back to a previous stage of the program. | |
| Save | To save a file, picture or text. | |
| Send | To send out a digital file or signal. | |

| Commands/Referents | Upward | Downward | Leftward | Rightward | Backward | Forward |
|---|---|---|---|---|---|---|
| Accept | 3 | 3 | 2 | 11 | 6 | 5 |
| Delete | 2 | 8 | 5 | 13 | 2 | 0 |
| Display | 8 | 2 | 0 | 3 | 2 | 12 |
| Hide | 2 | 14 | 0 | 0 | 12 | 2 |
| Mute | 0 | 16 | 4 | 0 | 7 | 1 |
| Pop up | 12 | 9 | 0 | 3 | 5 | 1 |
| Reject | 1 | 4 | 8 | 5 | 9 | 3 |
| Return | 3 | 4 | 14 | 2 | 6 | 1 |
| Save | 3 | 13 | 2 | 2 | 5 | 2 |
| Screen-capture | 3 | 11 | 4 | 7 | 1 | 4 |
| Send | 5 | 1 | 0 | 6 | 0 | 16 |
| Start | 13 | 2 | 0 | 5 | 0 | 10 |
| Stop | 2 | 12 | 0 | 1 | 2 | 0 |
| Turn-off | 0 | 17 | 1 | 7 | 0 | 5 |
| Turn-on | 20 | 0 | 0 | 4 | 0 | 6 |
| Zoom-in | 12 | 1 | 0 | 1 | 3 | 13 |
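The direction counts above can be summarized per command. The following is a minimal sketch, not the authors' analysis: it reports the most frequently chosen direction and, as an assumption about which measure is appropriate, the agreement rate AR as formalized by Vatavu and Wobbrock (CHI 2015), computed as the sum of n_i(n_i − 1) over directions divided by n(n − 1). The names `COUNTS`, `modal_direction` and `agreement_rate` are hypothetical. Each row is normalized by its own total, because the row totals in the table as extracted are not all equal.

```python
# Direction order follows the table header.
DIRECTIONS = ["upward", "downward", "leftward", "rightward", "backward", "forward"]

# Counts copied from the table above (one list per command).
COUNTS = {
    "Accept": [3, 3, 2, 11, 6, 5],
    "Delete": [2, 8, 5, 13, 2, 0],
    "Display": [8, 2, 0, 3, 2, 12],
    "Hide": [2, 14, 0, 0, 12, 2],
    "Mute": [0, 16, 4, 0, 7, 1],
    "Pop up": [12, 9, 0, 3, 5, 1],
    "Reject": [1, 4, 8, 5, 9, 3],
    "Return": [3, 4, 14, 2, 6, 1],
    "Save": [3, 13, 2, 2, 5, 2],
    "Screen-capture": [3, 11, 4, 7, 1, 4],
    "Send": [5, 1, 0, 6, 0, 16],
    "Start": [13, 2, 0, 5, 0, 10],
    "Stop": [2, 12, 0, 1, 2, 0],
    "Turn-off": [0, 17, 1, 7, 0, 5],
    "Turn-on": [20, 0, 0, 4, 0, 6],
    "Zoom-in": [12, 1, 0, 1, 3, 13],
}


def modal_direction(counts):
    """Return the direction with the highest count (first one wins on ties)."""
    best = max(range(len(counts)), key=counts.__getitem__)
    return DIRECTIONS[best]


def agreement_rate(counts):
    """Agreement rate: sum of n_i * (n_i - 1) divided by n * (n - 1)."""
    n = sum(counts)
    if n < 2:
        return 0.0  # undefined for fewer than two proposals; report 0 by convention
    return sum(c * (c - 1) for c in counts) / (n * (n - 1))


if __name__ == "__main__":
    for command, counts in COUNTS.items():
        print(f"{command:15s} {modal_direction(counts):10s} AR = {agreement_rate(counts):.3f}")
```

A higher AR means that participants converged on fewer directions for that command; commands whose counts are spread over several directions yield values close to zero.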

| Commands | Highest Mean Score (Direction) | Second-Highest Mean Score (Direction) | Z | p-Value | r |
|---|---|---|---|---|---|
| Accept | 1.900 (rightward) | 1.733 (forward) | 0.936 | 0.349 | 0.101 |
| Delete | 2.367 (rightward) | 1.867 (downward) | 2.368 | 0.018 | 0.317 |
| Display | 2.200 (forward) | 2.000 (upward) | 0.953 | 0.340 | 0.119 |
| Hide | 2.300 (backward) | 2.167 (downward) | 0.744 | 0.457 | 0.076 |
| Mute | 2.400 (downward) | 1.833 (backward) | 2.635 | 0.008 | 0.352 |
| Pop up | 2.267 (upward) | 1.967 (downward) | 1.455 | 0.146 | 0.168 |
| Reject | 1.967 (leftward) | 1.900 (backward) | 0.371 | 0.710 | 0.038 |
| Return | 2.467 (leftward) | 2.033 (backward) | 2.168 | 0.030 | 0.268 |
| Save | 2.267 (downward) | 1.600 (backward) | 2.880 | 0.004 | 0.405 |
| Screen-capture | 2.167 (downward) | 1.900 (rightward) | 1.291 | 0.197 | 0.167 |
| Send | 2.533 (forward) | 2.067 (rightward) | 2.004 | 0.045 | 0.296 |
| Start | 2.433 (upward) | 2.233 (forward) | 1.166 | 0.243 | 0.132 |
| Stop | 2.167 (downward) | 1.567 (backward) | 2.830 | 0.005 | 0.393 |
| Turn-off | 2.467 (downward) | 1.933 (rightward) | 2.537 | 0.011 | 0.280 |
| Turn-on | 2.600 (upward) | 2.133 (forward) | 2.311 | 0.021 | 0.317 |
| Zoom-in | 2.567 (upward) | 2.500 (forward) | 0.440 | 0.660 | 0.054 |

| Participant Number | Order of Trials | Participant Number | Order of Trials |
|---|---|---|---|
| 1 | A B C D E F G H I J K L M N O P | 9 | I J K L P O M N B A D C F E H G |
| 2 | E F G H A B C D J I L K N M P O | 10 | J I L K O P N M F E H G B A D C |
| 3 | B A D C F E H G M N O P I J K L | 11 | M N O P L K I J C D A B H G E F |
| 4 | F E H G B A D C N M P O J I L K | 12 | N M P O K L J I H G E F C D A B |
| 5 | C D A B I J K L E F G H P O M N | 13 | L K I J C D A B O P N M G H F E |
| 6 | D C B A J I L K P O M N E F G H | 14 | K L J I D C B A G H F E O P N M |
| 7 | H G E F M N O P L K I J A B C D | 15 | P O M N H G E F K L J I D C B A |
| 8 | G H F E N M P O A B C D L K I J | 16 | O P N M G F H E D C B A K L J I |
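The trial orders above can be checked mechanically for counterbalancing. The sketch below is an illustration under assumptions, not part of the original procedure: it treats the letters A to P as 16 distinct trial labels (their meaning is not stated in the table), verifies that every participant's order is a permutation of those labels, and counts how many distinct labels occur at a given serial position. The names `ORDERS`, `is_permutation` and `labels_at_position` are hypothetical.

```python
import string

LABELS = list(string.ascii_uppercase[:16])  # "A" .. "P"

# Orders copied from the table above, keyed by participant number.
ORDERS = {
    1: "A B C D E F G H I J K L M N O P",
    2: "E F G H A B C D J I L K N M P O",
    3: "B A D C F E H G M N O P I J K L",
    4: "F E H G B A D C N M P O J I L K",
    5: "C D A B I J K L E F G H P O M N",
    6: "D C B A J I L K P O M N E F G H",
    7: "H G E F M N O P L K I J A B C D",
    8: "G H F E N M P O A B C D L K I J",
    9: "I J K L P O M N B A D C F E H G",
    10: "J I L K O P N M F E H G B A D C",
    11: "M N O P L K I J C D A B H G E F",
    12: "N M P O K L J I H G E F C D A B",
    13: "L K I J C D A B O P N M G H F E",
    14: "K L J I D C B A G H F E O P N M",
    15: "P O M N H G E F K L J I D C B A",
    16: "O P N M G F H E D C B A K L J I",
}


def is_permutation(order):
    """True if the order contains each of the 16 labels exactly once."""
    return sorted(order.split()) == LABELS


def labels_at_position(position):
    """Set of labels that appear at a 0-based serial position across participants."""
    return {order.split()[position] for order in ORDERS.values()}


if __name__ == "__main__":
    for participant, order in ORDERS.items():
        print(participant, "permutation" if is_permutation(order) else "NOT a permutation")
    for position in range(16):
        print(f"position {position + 1}: {len(labels_at_position(position))} distinct labels")
```

If every serial position shows 16 distinct labels, each trial occupies each position exactly once across participants, which is the defining property of a Latin square.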

| Commands | Z | Sig. |
|---|---|---|
| Turn-on | −1.262 | 0.207 |
| Turn-off | −4.150 | 0.000 |
| Mute | −2.999 | 0.003 |
| Stop | −1.383 | 0.167 |
| Return | −3.026 | 0.002 |
| Save | −3.033 | 0.002 |
| Send | −2.057 | 0.040 |
| Delete | −3.294 | 0.001 |

| Commands | Z | Sig. |
|---|---|---|
| Turn-on | −2.072 | 0.038 |
| Turn-off | −1.167 | 0.243 |
| Mute | −3.513 | 0.000 |
| Stop | −3.475 | 0.001 |
| Return | −2.891 | 0.004 |
| Save | −2.699 | 0.007 |
| Send | −0.546 | 0.585 |
| Delete | −3.900 | 0.000 |
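The Sig. columns in the two tables above are numerically consistent with a two-sided p-value obtained from a standard normal Z statistic, p = erfc(|Z| / √2). The sketch below checks this relationship; that reading of the Sig. column is an assumption, since the tables do not name the test, and the names `two_sided_p`, `FIRST_TABLE` and `SECOND_TABLE` are hypothetical.

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a standard normal statistic z."""
    return math.erfc(abs(z) / math.sqrt(2.0))


# (Z, reported Sig.) pairs copied from the two tables above.
FIRST_TABLE = {
    "Turn-on": (-1.262, 0.207),
    "Turn-off": (-4.150, 0.000),
    "Mute": (-2.999, 0.003),
    "Stop": (-1.383, 0.167),
    "Return": (-3.026, 0.002),
    "Save": (-3.033, 0.002),
    "Send": (-2.057, 0.040),
    "Delete": (-3.294, 0.001),
}
SECOND_TABLE = {
    "Turn-on": (-2.072, 0.038),
    "Turn-off": (-1.167, 0.243),
    "Mute": (-3.513, 0.000),
    "Stop": (-3.475, 0.001),
    "Return": (-2.891, 0.004),
    "Save": (-2.699, 0.007),
    "Send": (-0.546, 0.585),
    "Delete": (-3.900, 0.000),
}

if __name__ == "__main__":
    for name, table in (("first", FIRST_TABLE), ("second", SECOND_TABLE)):
        for command, (z, reported) in table.items():
            print(f"{name:6s} {command:9s} Z = {z:+.3f}  p = {two_sided_p(z):.4f}  reported = {reported:.3f}")
```

Under this reading, a reported value of 0.000 is a p-value that rounds to zero at three decimals, that is, p < 0.001.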

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xiao, Y.; Miao, K.; Jiang, C. Mapping Directional Mid-Air Unistroke Gestures to Interaction Commands: A User Elicitation and Evaluation Study. Symmetry 2021, 13, 1926. https://doi.org/10.3390/sym13101926