A Novel Identification Method for Apple (Malus domestica Borkh.) Cultivars Based on a Deep Convolutional Neural Network with Leaf Image Input

Abstract

1. Introduction

2. Related Works

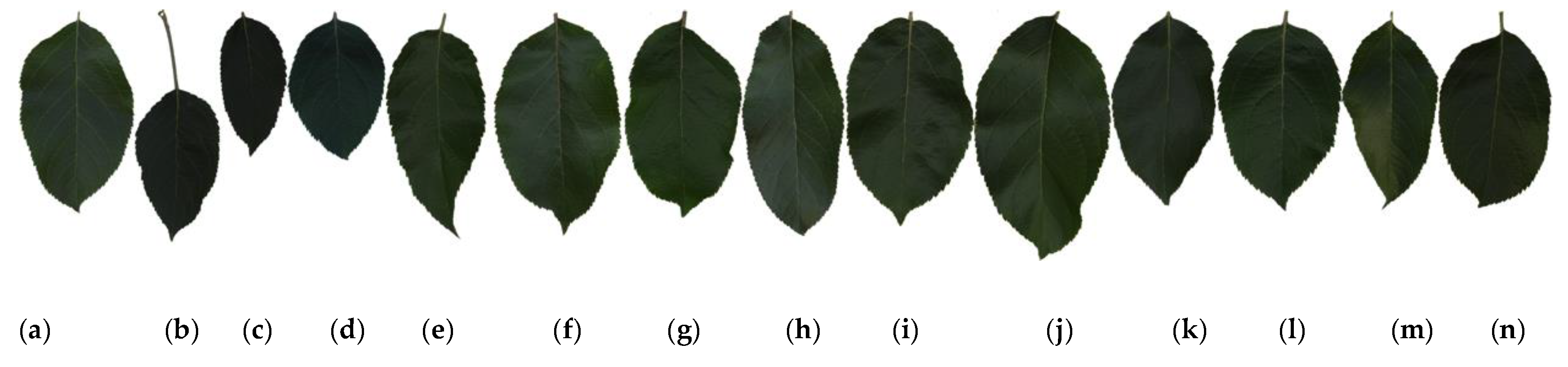

- To obtain sufficient apple leaf samples, we selected 14 apple cultivars, most of which grow in Jingning County, Gansu Province, the second-largest apple production area in the Loess Plateau of Northwest China. We photographed apple leaves in the orchard under natural sunlight, at a resolution of 3264 × 2448 and/or 1600 × 1200, from multiple angles in automatic shooting mode, to obtain diverse leaf images for training the DCNN-based model. To further increase image diversity across weather conditions, leaf images were captured over 37 days, from 15 July 2019 to 20 August 2019, a period that included sunny, cloudy, rainy (light rain, moderate rain, rain, and heavy rain), and foggy days. In total, 12,435 leaf images of 14 apple cultivars were obtained. The size and variety of this dataset are intended to make the DCNN-based model more robust during training and to improve its generalization capability.

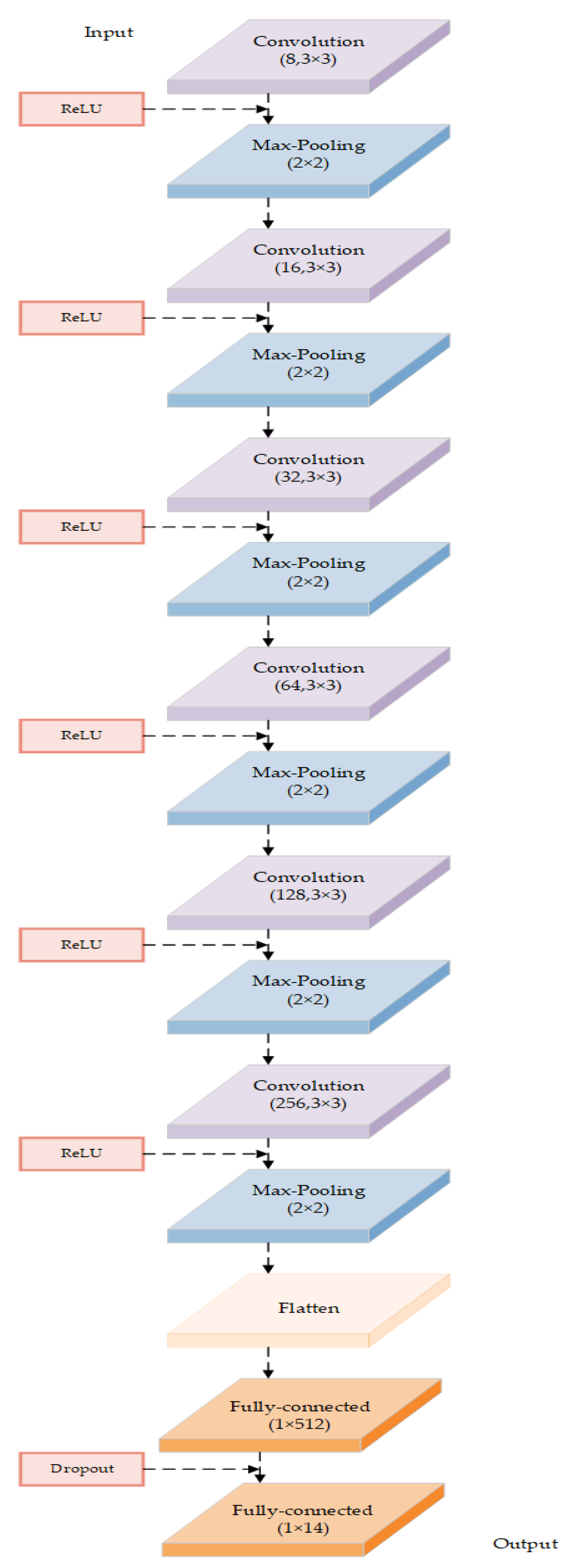

- A novel deep convolutional neural network (DCNN) model with leaf image input was proposed for identifying apple cultivars, based on an analysis of the characteristics of apple cultivar leaves. The size and number of convolution kernels were adjusted, a max-pooling operation was placed after each convolution layer, and dropout was applied after the dense layer to mitigate over-fitting.
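The two operations named above, max pooling after each convolution and dropout after the dense layer, can be illustrated with a minimal NumPy sketch. It assumes 2 × 2 pooling windows with stride 2 and a dropout rate of 0.5; neither value is stated here, and the helper names `max_pool_2x2` and `inverted_dropout` are hypothetical, not the authors' code.

```python
import numpy as np


def max_pool_2x2(feature_map: np.ndarray) -> np.ndarray:
    """2x2 max pooling with stride 2 over an (H, W, C) feature map.

    Rows/columns that do not fill a complete 2x2 window are discarded
    ("valid" pooling), so the output shape is (H // 2, W // 2, C).
    """
    h, w, c = feature_map.shape
    h2, w2 = h // 2, w // 2
    cropped = feature_map[: h2 * 2, : w2 * 2, :]
    # Put each 2x2 window on its own pair of axes (1 and 3), then take the max.
    windows = cropped.reshape(h2, 2, w2, 2, c)
    return windows.max(axis=(1, 3))


def inverted_dropout(x: np.ndarray, rate: float, rng: np.random.Generator,
                     training: bool = True) -> np.ndarray:
    """Zero roughly `rate` of the units during training and rescale the survivors.

    Scaling by 1 / keep_prob keeps the expected activation unchanged, so the
    operation can simply be skipped at inference time.
    """
    if not training or rate == 0.0:
        return x
    keep_prob = 1.0 - rate
    mask = rng.random(x.shape) < keep_prob
    return x * mask / keep_prob


rng = np.random.default_rng(0)
fmap = np.arange(16, dtype=float).reshape(4, 4, 1)   # toy 4x4 single-channel map
pooled = max_pool_2x2(fmap)                            # each 2x2 block -> its maximum
dense = rng.random(512)                                # 512 units, as in the dense layer
dropped = inverted_dropout(dense, rate=0.5, rng=rng)   # rate 0.5 is an assumption
print(pooled[:, :, 0])
print(np.count_nonzero(dropped), "of 512 units kept")
```

Pooling halves each spatial dimension while keeping the strongest response in each window; dropout only acts during training, which is why the `training` flag short-circuits it.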

3. Acquisition of Sufficient Apple Cultivar Leaf Images

3.1. Plant Materials and Method

3.2. Acquisition of Sufficient Apple Cultivar Leaf Images

3.3. Software and Computing Environment

4. Generation of the Deep Convolutional Neural Network-Based (DCNN-Based) Model for Apple Cultivar Classification

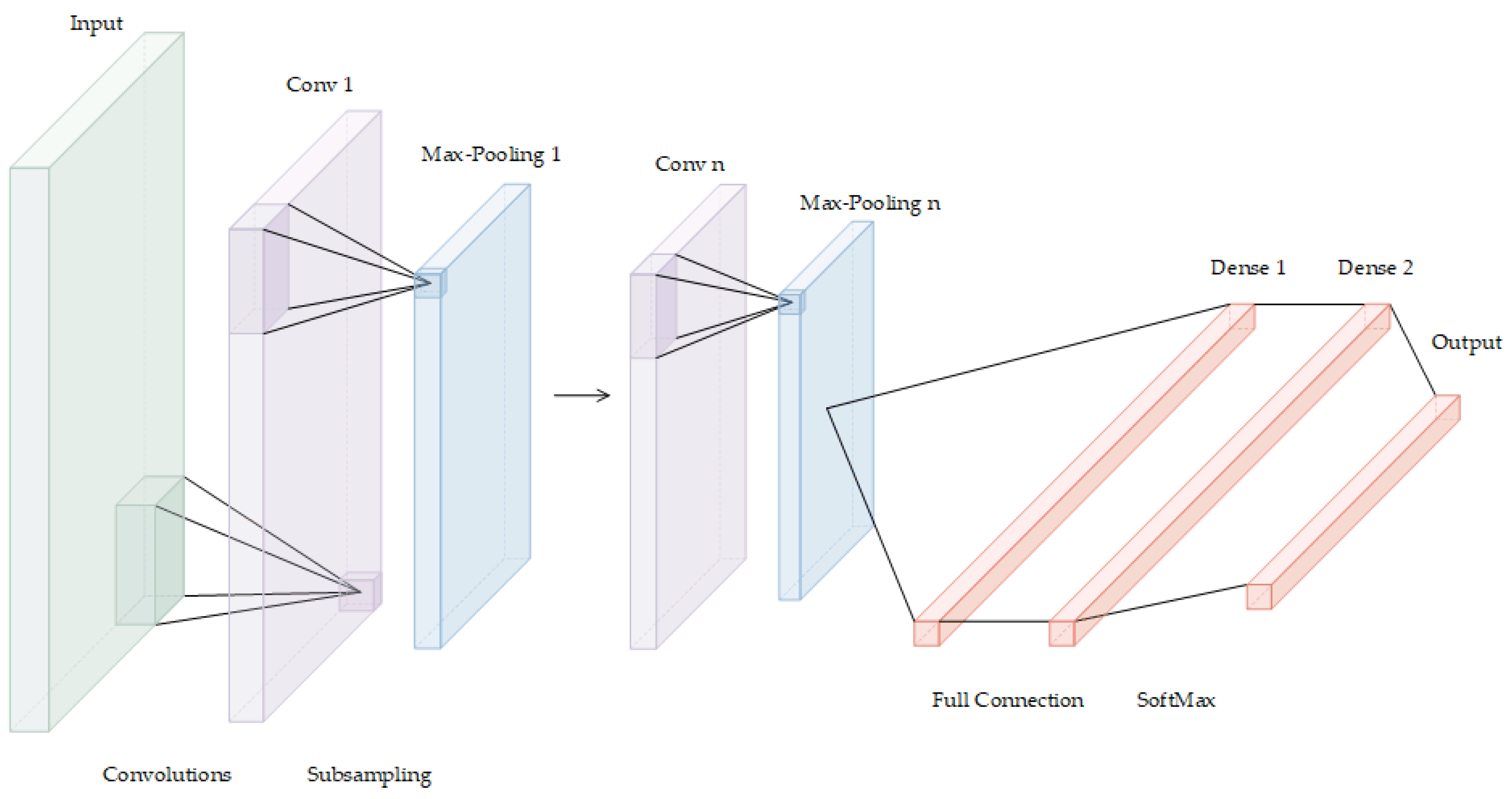

4.1. Construction of the Deep Convolutional Neural Network-Based (DCNN-Based) Model for Apple Cultivar Identification with Leaf Image Input

4.2. Specific Parameters of the DCNN-Based Model in This Study

4.3. Ten-Fold Cross-Validation

5. Experimental Results and Analysis

5.1. Evaluation of the Accuracy of the DCNN-Based Model

5.1.1. Accuracy and Loss

5.1.2. Accuracy of the Proposed Model Compared with the Accuracy of Other Classical Machine Learning Algorithms

5.2. Evaluation of the Generalization Performance on an Independent and Identically Distributed Testing Set

5.2.1. Accuracy on an Independent and Identically Distributed Testing Dataset

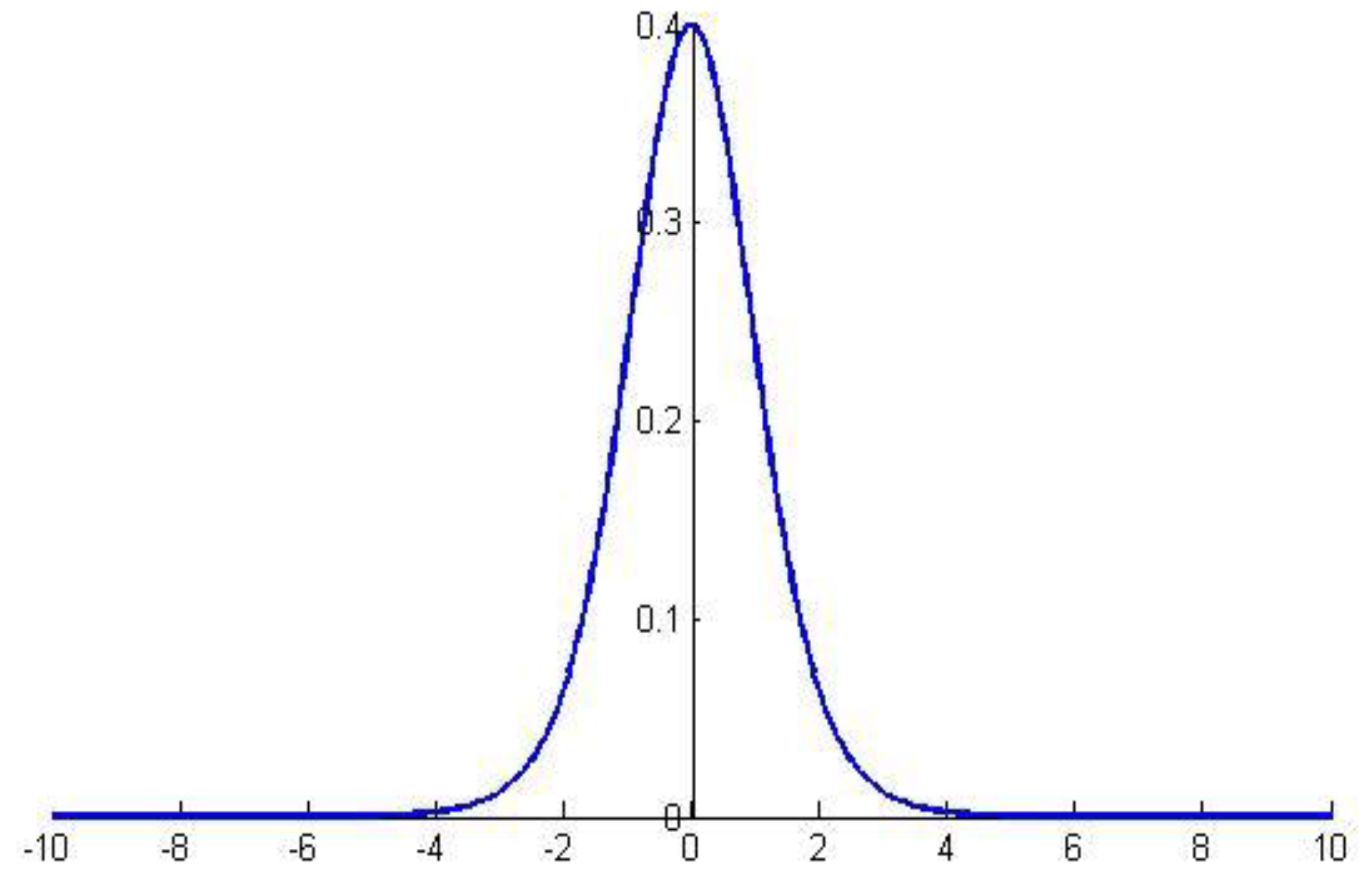

5.2.2. Test of the Generalization Error of the DCNN-Based Model on an Unknown Testing Set

5.2.3. Evaluation of Classification Performance for Each Cultivar

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class ID | Abbreviation | Apple Cultivar | Leaves | Leaf Images |
|---|---|---|---|---|
| 1 | 2001 | 2001 | 206 | 946 |
| 2 | ACE | Ace | 195 | 938 |
| 3 | ADR | Ada Red | 186 | 1029 |
| 4 | FJMM | Fujimeiman | 210 | 982 |
| 5 | HANF | Hanfu | 185 | 777 |
| 6 | HRYX | Hongrouyouxi | 196 | 779 |
| 7 | JN1 | Jingning 1 | 176 | 817 |
| 8 | JAZ | Jazz | 206 | 758 |
| 9 | HOC | Honey Crisp | 186 | 899 |
| 10 | PIN | Pinova | 207 | 879 |
| 11 | JONG | Jonagold | 184 | 887 |
| 12 | SHF3 | Shoufu 3 | 174 | 1017 |
| 13 | GOS | Gold Spur | 204 | 756 |
| 14 | YANF3 | Yanfu 3 | 196 | 971 |
| Total | | | 2711 | 12,435 |
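The totals in this table can be checked with a few lines of Python, which also give the average number of images per leaf (each leaf was photographed from several angles) and the ratio between the largest and smallest class. The list `CULTIVARS` simply restates the table; the variable names are illustrative.

```python
# (abbreviation, number of leaves, number of leaf images), restated from the table above
CULTIVARS = [
    ("2001", 206, 946), ("ACE", 195, 938), ("ADR", 186, 1029), ("FJMM", 210, 982),
    ("HANF", 185, 777), ("HRYX", 196, 779), ("JN1", 176, 817), ("JAZ", 206, 758),
    ("HOC", 186, 899), ("PIN", 207, 879), ("JONG", 184, 887), ("SHF3", 174, 1017),
    ("GOS", 204, 756), ("YANF3", 196, 971),
]

total_leaves = sum(leaves for _, leaves, _ in CULTIVARS)
total_images = sum(images for _, _, images in CULTIVARS)
images_per_leaf = total_images / total_leaves

# Class balance: compare the largest and the smallest class by image count.
largest = max(CULTIVARS, key=lambda row: row[2])
smallest = min(CULTIVARS, key=lambda row: row[2])

print(f"{len(CULTIVARS)} cultivars, {total_leaves} leaves, {total_images} images")
print(f"{images_per_leaf:.2f} images per leaf on average")
print(f"largest/smallest class: {largest[0]}/{smallest[0]} = {largest[2] / smallest[2]:.2f}")
```

This reproduces the totals of 2711 leaves and 12,435 images, about 4.59 images per leaf, and a largest-to-smallest class ratio of about 1.36 (ADR vs. GOS), so the classes are reasonably balanced.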

| Configuration Item | Value |
|---|---|
| Type and Specification | Lenovo 30BYS33G00 |
| CPU | Intel® Xeon® W-2123 CPU @ 3.60 GHz (8 CPUs) |
| GPU | NVIDIA GeForce GTX 1080 8 GB |
| Memory | 16 GB |
| Operating System | Windows 10 Professional (64-bit) |
| Integrated Development Environment | PyCharm Community 2019.2.3 |
| Programming Language | Python 3.7 |
| Experimental Results Analysis Software | MATLAB R2010a |

| Type | Patch Size/Stride | Output Size |
|---|---|---|
| Convolution | | |
| Pool/Max | | |
| Convolution | | |
| Pool/Max | | |
| Convolution | | |
| Pool/Max | | |
| Convolution | | |
| Pool/Max | | |
| Convolution | | |
| Pool/Max | | |
| Convolution | | |
| Pool/Max | | |
| Fully Connected | - | 512 |
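Because the patch sizes and output sizes are not recoverable from the table above, the sketch below only shows how such an output-size column is computed for six stacked convolution + max-pooling blocks followed by the 512-unit fully connected layer. The 256 × 256 input, 3 × 3 kernels with stride 1 and "same" padding, 2 × 2 pooling with stride 2, and the filter counts in `HYPOTHETICAL_FILTERS` are all assumptions, not the paper's settings.

```python
def window_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length along one axis for a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(input_size: int, filters: list, conv_kernel: int = 3,
                 pool_kernel: int = 2, pool_stride: int = 2) -> list:
    """List (layer type, height, width, channels) for stacked conv + max-pool blocks.

    Convolutions use stride 1 and 'same' padding (kernel // 2 for odd kernels),
    so only the max-pooling layers reduce the spatial size.
    """
    h = w = input_size
    rows = []
    for n_filters in filters:
        pad = conv_kernel // 2
        h = window_output_size(h, conv_kernel, 1, pad)
        w = window_output_size(w, conv_kernel, 1, pad)
        rows.append(("Convolution", h, w, n_filters))
        h = window_output_size(h, pool_kernel, pool_stride, 0)
        w = window_output_size(w, pool_kernel, pool_stride, 0)
        rows.append(("Pool/Max", h, w, n_filters))
    return rows


HYPOTHETICAL_INPUT = 256                           # assumed square input size
HYPOTHETICAL_FILTERS = [32, 32, 64, 64, 128, 128]  # assumed kernels per conv layer

layers = trace_shapes(HYPOTHETICAL_INPUT, HYPOTHETICAL_FILTERS)
for name, h, w, c in layers:
    print(f"{name:12s} {h} x {w} x {c}")
_, h, w, c = layers[-1]
print(f"Flatten      {h * w * c} -> Fully Connected 512")
```

Under these assumptions, six halvings reduce 256 × 256 to 4 × 4, so the dense layer receives 4 × 4 × 128 = 2048 features; a different input size or filter count changes only these numbers, not the procedure.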

| No. | Feature | Expression |
|---|---|---|
| 1 | Mean Gray | |
| 2 | Gray Variance | |
| 3 | Skewness | |
| 4 | Contrast | |
| 5 | Correlation | |
| 6 | ASM (Angular Second Moment) | |
| 7 | Homogeneity | |
| 8 | Dissimilarity | |
| 9 | Entropy | |
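The expressions for these nine features are not recoverable from the table above. As an illustration only, the sketch below computes commonly used textbook forms: first-order histogram statistics for features 1 to 3 and grey-level co-occurrence matrix (GLCM) features for features 4 to 9. The 8 quantization levels, the single horizontal one-pixel offset, the symmetric GLCM, base-2 entropy, and the 1/(1 + (i - j)^2) form of homogeneity are assumptions; the authors' exact definitions may differ.

```python
import numpy as np


def histogram_features(gray: np.ndarray) -> dict:
    """First-order statistics of a grayscale image: mean, variance, skewness."""
    g = gray.astype(float).ravel()
    mean = g.mean()
    var = g.var()  # population variance
    std = np.sqrt(var)
    skew = ((g - mean) ** 3).mean() / std ** 3 if std > 0 else 0.0
    return {"mean_gray": mean, "gray_variance": var, "skewness": skew}


def glcm(gray: np.ndarray, levels: int = 8, dx: int = 1, dy: int = 0) -> np.ndarray:
    """Symmetric, normalized grey-level co-occurrence matrix for a non-negative offset (dy, dx)."""
    q = (gray.astype(int) * levels) // 256  # quantize 8-bit values 0..255 into `levels` bins
    h, w = q.shape
    ref = q[: h - dy, : w - dx]  # reference pixels
    nbr = q[dy:, dx:]            # neighbours at the given offset
    counts = np.zeros((levels, levels))
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1)
    counts = counts + counts.T   # count each pixel pair in both directions
    return counts / counts.sum()


def glcm_features(p: np.ndarray) -> dict:
    """Haralick-style texture features of a normalized co-occurrence matrix p[i, j]."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    sd_i = np.sqrt(((i - mu_i) ** 2 * p).sum())
    sd_j = np.sqrt(((j - mu_j) ** 2 * p).sum())
    nz = p[p > 0]  # skip empty cells so log2 is defined
    return {
        "contrast": ((i - j) ** 2 * p).sum(),
        # Correlation is undefined for a constant image; report 1.0 by convention.
        "correlation": ((i - mu_i) * (j - mu_j) * p).sum() / (sd_i * sd_j) if sd_i * sd_j > 0 else 1.0,
        "asm": (p ** 2).sum(),
        "homogeneity": (p / (1.0 + (i - j) ** 2)).sum(),
        "dissimilarity": (np.abs(i - j) * p).sum(),
        "entropy": -(nz * np.log2(nz)).sum(),
    }


# Toy stand-in for a leaf image: vertical stripes alternating between 0 and 255.
stripes = np.tile(np.array([0, 255], dtype=np.uint8), (4, 4))
features = {**histogram_features(stripes), **glcm_features(glcm(stripes))}
for name, value in features.items():
    print(f"{name:14s} {value:.4f}")
```

On the striped test image every horizontal neighbour pair differs by the full range, so contrast is high (49), correlation is -1, and ASM and entropy reflect the two equally likely pairs (0.5 and 1 bit); a real leaf image would instead be converted to grayscale first.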

| Model | Maximum Accuracy | Minimum Accuracy | Mean Accuracy | Variance |
|---|---|---|---|---|
| KNN | 0.5852 | 0.3838 | 0.4636 | 3.6164e+1 |
| SVM | 0.4743 | 0.4594 | 0.4287 | 3.6814e-1 |
| Decision Tree | 0.5954 | 0.5763 | 0.5836 | 4.5833e-1 |
| Naive Bayes | 0.3930 | 0.3702 | 0.3837 | 5.5842e-1 |
| DCNN-based model (proposed in this paper) | 0.9932 | 0.9607 | 0.9711 | 1.1937e-2 |

| Class | Testing Set | Training Set | Validation Set |
|---|---|---|---|
| 2001 | 47 | 809 | 90 |
| ACE | 47 | 802 | 89 |
| ADR | 50 | 881 | 98 |
| FJMM | 49 | 840 | 93 |
| HANF | 39 | 664 | 74 |
| HRYX | 39 | 666 | 74 |
| JN1 | 41 | 698 | 78 |
| JAZ | 38 | 648 | 72 |
| HOC | 45 | 769 | 85 |
| PIN | 44 | 752 | 83 |
| JONG | 44 | 759 | 84 |
| SHF3 | 50 | 870 | 97 |
| GOS | 38 | 646 | 72 |
| YANF3 | 49 | 830 | 92 |
| Total | 620 | 10,634 | 1181 |
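The split in this table corresponds to roughly 5% of each class held out for testing, with the remaining images divided about 90:10 between training and validation. A minimal per-class (stratified) split under those assumed fractions is sketched below. `split_indices` is a hypothetical helper, and because the rounding rule is also an assumption, a few classes (for example ADR) come out one image different from the table, while others such as 2001 and ACE are reproduced exactly.

```python
import random


def split_indices(n_images: int, test_frac: float = 0.05, val_frac: float = 0.10,
                  seed: int = 0) -> dict:
    """Randomly split image indices 0..n_images-1 into test, validation and training parts.

    The test share is drawn first; `val_frac` is then applied to the remainder.
    """
    idx = list(range(n_images))
    random.Random(seed).shuffle(idx)
    n_test = round(n_images * test_frac)
    rest = idx[n_test:]
    n_val = round(len(rest) * val_frac)
    return {"test": idx[:n_test], "validation": rest[:n_val], "train": rest[n_val:]}


# Splitting each class separately keeps class proportions similar in every subset.
CLASS_SIZES = {"2001": 946, "ACE": 938, "ADR": 1029, "FJMM": 982, "HANF": 777,
               "HRYX": 779, "JN1": 817, "JAZ": 758, "HOC": 899, "PIN": 879,
               "JONG": 887, "SHF3": 1017, "GOS": 756, "YANF3": 971}
splits = {name: split_indices(n, seed=k) for k, (name, n) in enumerate(CLASS_SIZES.items())}
for name, parts in splits.items():
    print(name, {part: len(ids) for part, ids in parts.items()})
```

Drawing the test share first keeps it fixed and untouched while the training/validation division can be varied.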

| Class (test images) | 1st | 2nd | 3rd | 4th | 5th | 6th | 7th | 8th | 9th | 10th |
|---|---|---|---|---|---|---|---|---|---|---|
| 2001 (47) | 45 | 46 | 47 | 47 | 46 | 46 | 41 | 47 | 44 | 46 |
| ACE (47) | 45 | 47 | 47 | 47 | 47 | 47 | 47 | 47 | 46 | 47 |
| ADR (50) | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 49 |
| FJMM (49) | 48 | 49 | 45 | 46 | 48 | 47 | 49 | 47 | 47 | 45 |
| HANF (39) | 38 | 38 | 29 | 38 | 39 | 37 | 35 | 39 | 36 | 37 |
| HRYX (39) | 37 | 39 | 39 | 37 | 39 | 39 | 38 | 39 | 39 | 39 |
| JN1 (41) | 40 | 37 | 41 | 41 | 40 | 39 | 34 | 38 | 35 | 36 |
| JAZ (38) | 38 | 38 | 38 | 38 | 38 | 38 | 38 | 38 | 38 | 38 |
| HOC (45) | 44 | 44 | 42 | 45 | 45 | 42 | 44 | 45 | 43 | 45 |
| PIN (44) | 41 | 44 | 41 | 43 | 44 | 42 | 41 | 41 | 44 | 39 |
| JONG (44) | 44 | 41 | 44 | 44 | 42 | 44 | 43 | 44 | 44 | 43 |
| SHF3 (50) | 48 | 47 | 48 | 47 | 47 | 47 | 49 | 47 | 47 | 47 |
| GOS (38) | 38 | 38 | 29 | 38 | 38 | 37 | 37 | 38 | 38 | 37 |
| YANF3 (49) | 48 | 49 | 42 | 46 | 48 | 45 | 49 | 46 | 48 | 46 |
| Accuracy | 0.9742 | 0.9790 | 0.9387 | 0.9790 | 0.9855 | 0.9677 | 0.9597 | 0.9774 | 0.9661 | 0.9581 |
| Error rate | 0.0258 | 0.0210 | 0.0613 | 0.0210 | 0.0145 | 0.0323 | 0.0403 | 0.0226 | 0.0339 | 0.0419 |
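Each accuracy in the last two rows of the table is the number of correctly classified test images, summed over the 14 classes, divided by the 620 images in the testing set, and the error rate is its complement. The sketch below recomputes both rows from the per-class counts; for example, the 10th fold has 594 correct predictions, giving 594/620 ≈ 0.9581 and an error rate of 26/620 ≈ 0.0419. The dictionary `CORRECT` restates the table.

```python
# Correctly classified test images for each class in folds 1-10,
# copied from the cross-validation table (620 test images per fold).
CORRECT = {
    "2001":  [45, 46, 47, 47, 46, 46, 41, 47, 44, 46],
    "ACE":   [45, 47, 47, 47, 47, 47, 47, 47, 46, 47],
    "ADR":   [50, 50, 50, 50, 50, 50, 50, 50, 50, 49],
    "FJMM":  [48, 49, 45, 46, 48, 47, 49, 47, 47, 45],
    "HANF":  [38, 38, 29, 38, 39, 37, 35, 39, 36, 37],
    "HRYX":  [37, 39, 39, 37, 39, 39, 38, 39, 39, 39],
    "JN1":   [40, 37, 41, 41, 40, 39, 34, 38, 35, 36],
    "JAZ":   [38, 38, 38, 38, 38, 38, 38, 38, 38, 38],
    "HOC":   [44, 44, 42, 45, 45, 42, 44, 45, 43, 45],
    "PIN":   [41, 44, 41, 43, 44, 42, 41, 41, 44, 39],
    "JONG":  [44, 41, 44, 44, 42, 44, 43, 44, 44, 43],
    "SHF3":  [48, 47, 48, 47, 47, 47, 49, 47, 47, 47],
    "GOS":   [38, 38, 29, 38, 38, 37, 37, 38, 38, 37],
    "YANF3": [48, 49, 42, 46, 48, 45, 49, 46, 48, 46],
}
N_TEST = 620

accuracies = []
for fold in range(10):
    correct = sum(counts[fold] for counts in CORRECT.values())
    accuracy = correct / N_TEST
    accuracies.append(accuracy)
    # The error rate is the complement of accuracy on the same 620 images.
    print(f"fold {fold + 1:2d}: {correct}/{N_TEST} correct, "
          f"accuracy {accuracy:.4f}, error rate {1 - accuracy:.4f}")
```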

| Actual \ Predicted | 2001 | ACE | ADR | FJMM | HANF | HRYX | JN1 | JAZ | HOC | PIN | JONG | SHF3 | GOS | YANF3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2001 | 46 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| ACE | 0 | 47 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| ADR | 1 | 0 | 49 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| FJMM | 1 | 0 | 0 | 45 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 |
| HANF | 0 | 0 | 0 | 0 | 37 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 |
| HRYX | 0 | 0 | 0 | 0 | 0 | 39 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| JN1 | 0 | 0 | 0 | 0 | 1 | 0 | 36 | 0 | 0 | 0 | 0 | 4 | 0 | 0 |
| JAZ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 38 | 0 | 0 | 0 | 0 | 0 | 0 |
| HOC | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 45 | 0 | 0 | 0 | 0 | 0 |
| PIN | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 39 | 2 | 0 | 0 | 0 |
| JONG | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 43 | 0 | 0 | 0 |
| SHF3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 47 | 0 | 3 |
| GOS | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 37 | 0 |
| YANF3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 46 |

| Class | TP | FP | FN | TN | Sensitivity (TPR) | Specificity (TNR) | FPR | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 2001 | 46 | 4 | 1 | 569 | 0.9787 | 0.9930 | 0.0070 | 0.9919 | 0.9200 | 0.9787 | 0.9485 |
| ACE | 47 | 0 | 0 | 573 | 1.0000 | 1.0000 | 0.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| ADR | 49 | 0 | 1 | 570 | 0.9800 | 1.0000 | 0.0000 | 0.9984 | 1.0000 | 0.9800 | 0.9899 |
| FJMM | 45 | 1 | 4 | 570 | 0.9184 | 0.9982 | 0.0018 | 0.9919 | 0.9783 | 0.9184 | 0.9474 |
| HANF | 37 | 2 | 2 | 579 | 0.9487 | 0.9966 | 0.0034 | 0.9935 | 0.9487 | 0.9487 | 0.9487 |
| HRYX | 39 | 0 | 0 | 581 | 1.0000 | 1.0000 | 0.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| JN1 | 36 | 0 | 5 | 579 | 0.8780 | 1.0000 | 0.0000 | 0.9919 | 1.0000 | 0.8780 | 0.9351 |
| JAZ | 38 | 0 | 0 | 582 | 1.0000 | 1.0000 | 0.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| HOC | 45 | 3 | 0 | 572 | 1.0000 | 0.9948 | 0.0052 | 0.9952 | 0.9375 | 1.0000 | 0.9677 |
| PIN | 39 | 0 | 5 | 576 | 0.8864 | 1.0000 | 0.0000 | 0.9919 | 1.0000 | 0.8864 | 0.9398 |
| JONG | 43 | 2 | 1 | 574 | 0.9773 | 0.9965 | 0.0035 | 0.9952 | 0.9556 | 0.9773 | 0.9663 |
| SHF3 | 47 | 8 | 3 | 562 | 0.9400 | 0.9860 | 0.0140 | 0.9823 | 0.8545 | 0.9400 | 0.8952 |
| GOS | 37 | 0 | 1 | 582 | 0.9737 | 1.0000 | 0.0000 | 0.9984 | 1.0000 | 0.9737 | 0.9867 |
| YANF3 | 46 | 6 | 3 | 565 | 0.9388 | 0.9895 | 0.0105 | 0.9855 | 0.8846 | 0.9388 | 0.9109 |
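The per-class figures in this table follow from the 14 × 14 confusion matrix by treating each cultivar one-vs-rest: TP is the diagonal entry, FP the rest of its column, FN the rest of its row, and TN everything else. The NumPy sketch below derives all columns from the confusion matrix (rows are actual classes, columns are predicted classes); `per_class_metrics` is a hypothetical helper written for illustration.

```python
import numpy as np

LABELS = ["2001", "ACE", "ADR", "FJMM", "HANF", "HRYX", "JN1",
          "JAZ", "HOC", "PIN", "JONG", "SHF3", "GOS", "YANF3"]

# Confusion matrix: rows = actual class, columns = predicted class.
cm = np.diag([46, 47, 49, 45, 37, 39, 36, 38, 45, 39, 43, 47, 37, 46])
OFF_DIAGONAL = {  # (actual, predicted): number of misclassified images
    ("2001", "FJMM"): 1, ("ADR", "2001"): 1,
    ("FJMM", "2001"): 1, ("FJMM", "HANF"): 1, ("FJMM", "SHF3"): 1, ("FJMM", "YANF3"): 1,
    ("HANF", "YANF3"): 2, ("JN1", "HANF"): 1, ("JN1", "SHF3"): 4,
    ("PIN", "HOC"): 3, ("PIN", "JONG"): 2, ("JONG", "2001"): 1,
    ("SHF3", "YANF3"): 3, ("GOS", "2001"): 1, ("YANF3", "SHF3"): 3,
}
for (actual, predicted), count in OFF_DIAGONAL.items():
    cm[LABELS.index(actual), LABELS.index(predicted)] = count


def per_class_metrics(cm: np.ndarray) -> dict:
    """One-vs-rest counts and rates for every class of a square confusion matrix.

    Assumes every class has at least one actual and one predicted sample,
    which holds for this matrix (otherwise some ratios divide by zero).
    """
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as the class, but actually another class
    fn = cm.sum(axis=1) - tp  # actually the class, but predicted as another class
    tn = total - tp - fp - fn
    sensitivity = tp / (tp + fn)  # also called recall or TPR
    precision = tp / (tp + fp)
    return {
        "TP": tp, "FP": fp, "FN": fn, "TN": tn,
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "FPR": fp / (fp + tn),
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "F1": 2 * precision * sensitivity / (precision + sensitivity),
    }


metrics = per_class_metrics(cm)
for k, name in enumerate(LABELS):
    print(f"{name:6s} TP={metrics['TP'][k]:2d} FP={metrics['FP'][k]} "
          f"FN={metrics['FN'][k]} TN={metrics['TN'][k]} "
          f"precision={metrics['precision'][k]:.4f} F1={metrics['F1'][k]:.4f}")
```

For example, SHF3 collects 8 false positives (4 from JN1, 3 from YANF3, 1 from FJMM), which is why its precision (0.8545) is the lowest in the table even though its recall is 0.94.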

| Class | Platform No. | Resource No. | Origin | Type |
|---|---|---|---|---|
| SHF3 | 1111C0003883000057 | MAPUM6208260057 | Xiaocaogou Horticultural Farm of Laizhou, Yantai City, Shandong Province | Breeding variety |
| YANF3 | 1111C0003883000006 | MAPUM6208260006 | Fruit and Tea Extension Station of Yantai, Yantai City, Shandong Province | Breeding variety |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, C.; Han, J.; Chen, B.; Mao, J.; Xue, Z.; Li, S. A Novel Identification Method for Apple (Malus domestica Borkh.) Cultivars Based on a Deep Convolutional Neural Network with Leaf Image Input. Symmetry 2020, 12, 217. https://doi.org/10.3390/sym12020217
