1. Introduction

Nature is replete with intelligent and disciplined phenomena whose impressive capabilities continue to be discovered. The behavior of animals and insects has been observed for centuries; however, the observed behaviors, which are described in terms of physics and particle dynamics, represent only a small portion of the intelligent processes that exist in nature. The intelligent behavior found in nature has inspired many intelligent search algorithms, such as genetic algorithms (GAs) [1], particle swarm optimization (PSO) [2,3], ant colony optimization [4,5], the artificial fish swarm algorithm [6], the artificial immune system [7], the bacterial foraging optimization algorithm [8,9], the bat-inspired algorithm [10,11], the imperialist competitive algorithm [12,13,14], and the gravitational attraction search [15]. Although each of these algorithms has its own strengths and can solve many types of optimization problems, they share some issues when solving large-scale problems, especially those with search spaces of very high dimensionality. Among these issues, the local optima problem [16,17,18] and the absence of inborn exploitation operations [19,20,21] have proven especially hard to overcome. Thus, many researchers are seeking new methods to tackle these issues. Hybrid heuristic search and memetic algorithms [19,22,23] have been proposed for years to address these problems, and innovative combinations of the bee colony algorithm with other heuristics are also showing promising results in properly exploring search spaces [24,25].

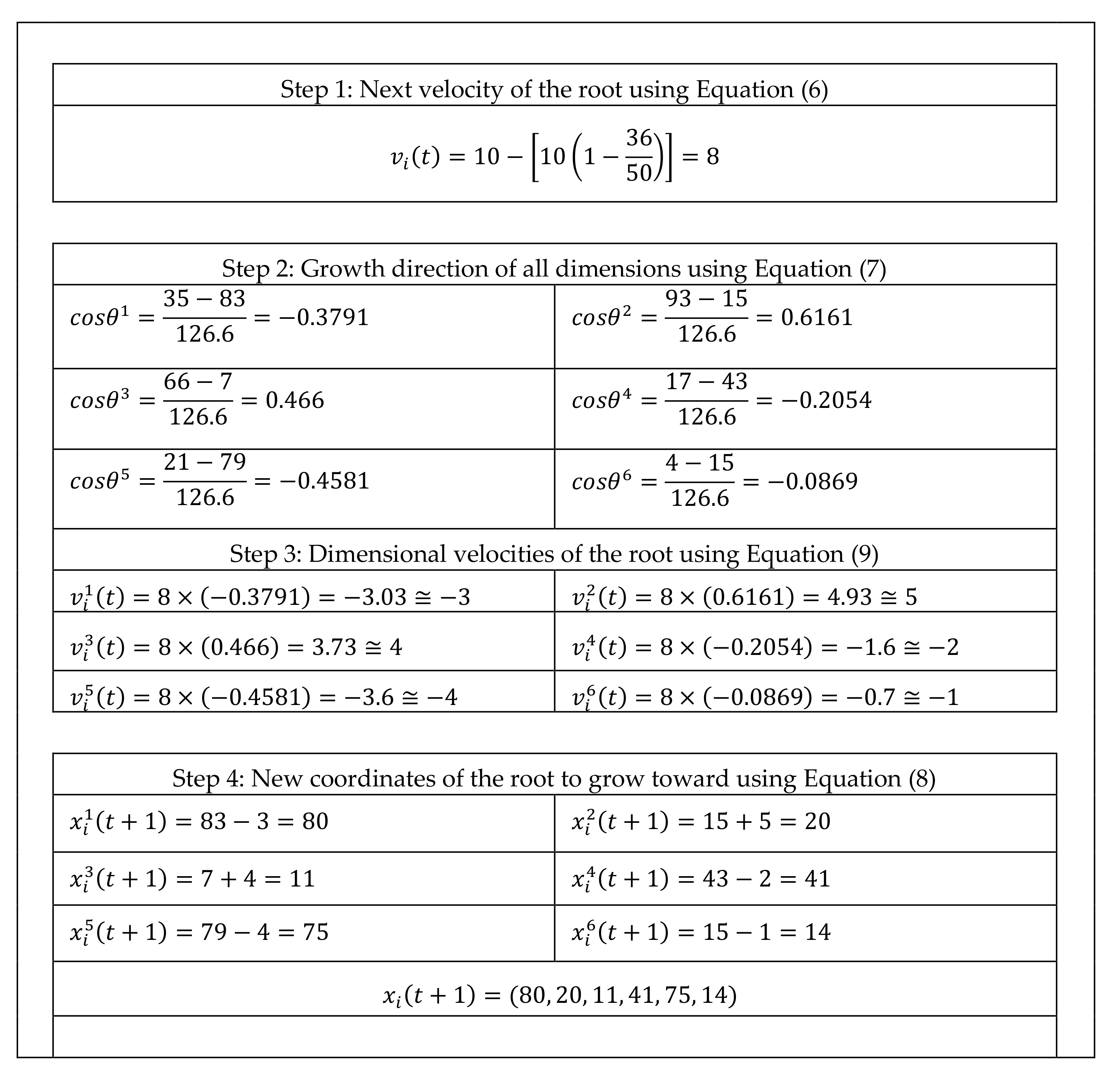

In searching the soil for nutrients, such as minerals and water, plant roots demonstrate highly intelligent behavior [26]. This intelligence must be creative to ensure the survival of the plant in environments with low levels of water and insufficient nutrients. In these resource-limited environments, roots, as explained in Section 1.1.1, often adapt and search a broad area before the plant dies from a lack of nutrients or water. Therefore, the intelligent behavior of roots could be the basis for a new, fast, and effective search algorithm able to search large spaces efficiently.

A few attempts have been made to imitate plant root behavior to develop new search algorithms. These studies resulted in four algorithms: the root growth model (RGM) [27], root mass optimization (RMO) [28], the artificial root foraging model (ARFO) [29,30], and artificial root mass (ARM) [31]. Despite the researchers' efforts, these algorithms have not exploited plant intelligence in a way that overcomes the weaknesses shared by other heuristic search algorithms (see Section 1.1.2 for details).

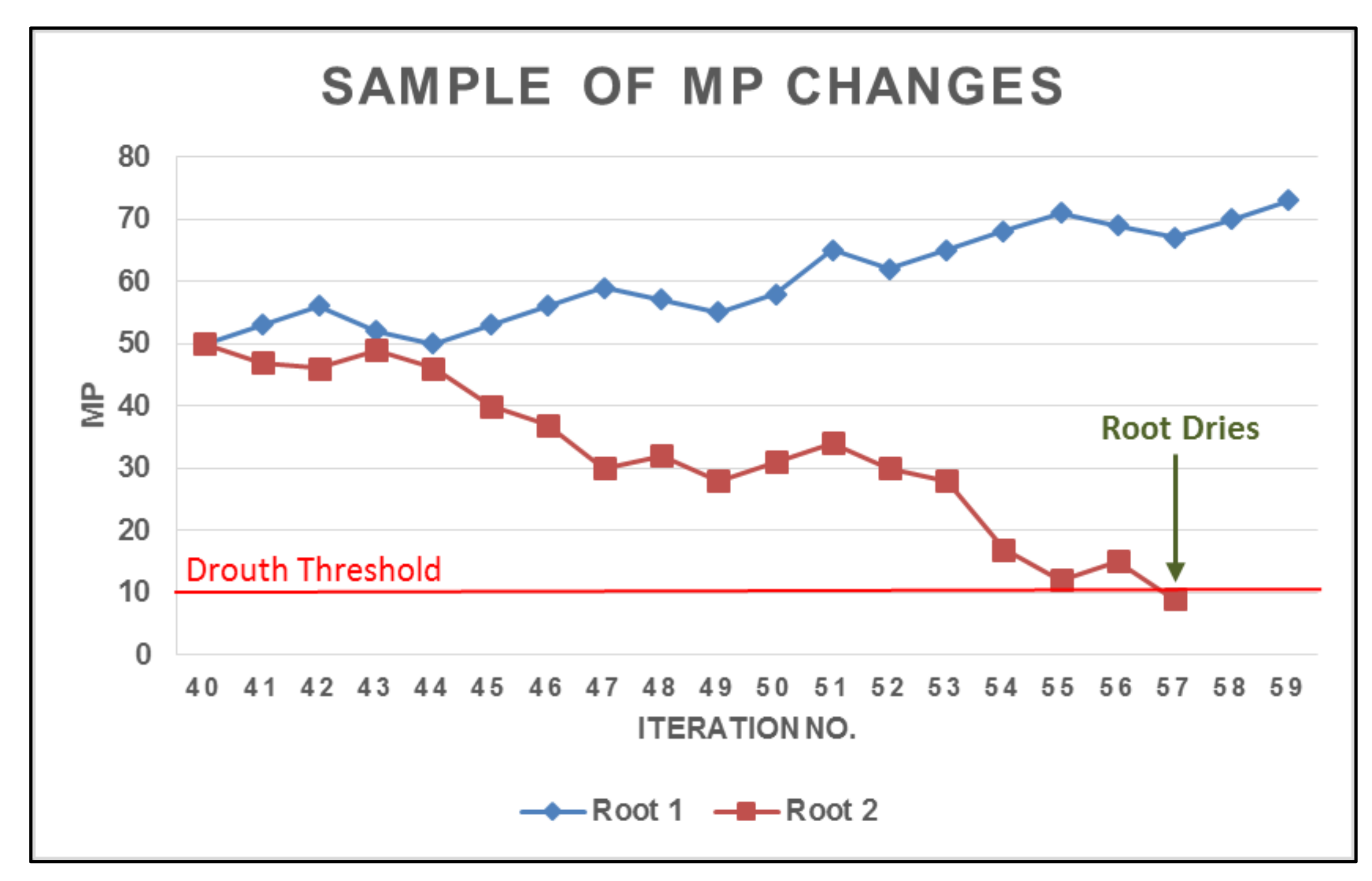

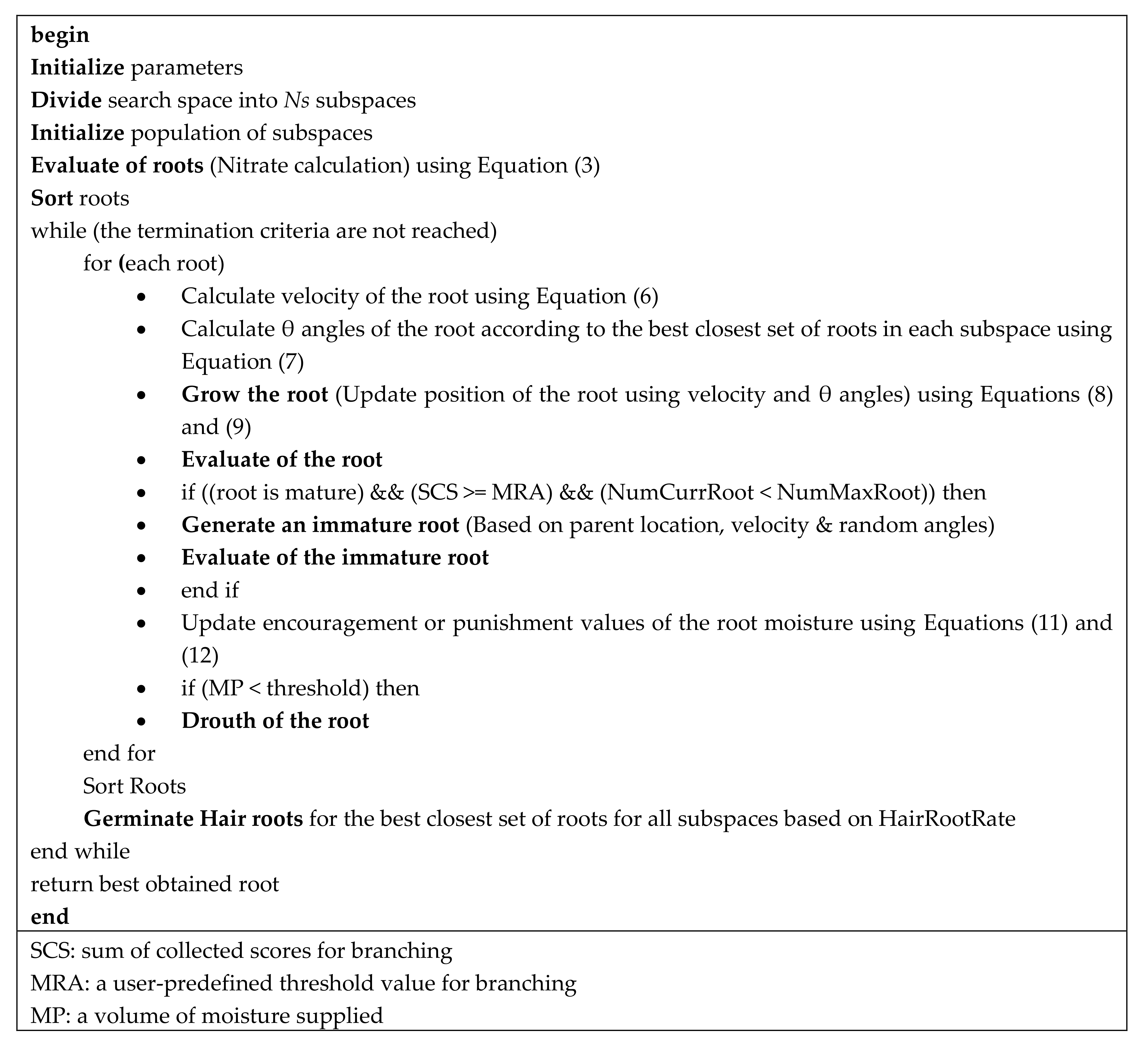

Hence, to achieve high efficiency and overcome problems, a new, independent, growth-inspired Smart Root Search (SRS) algorithm was proposed by the authors of this paper in [

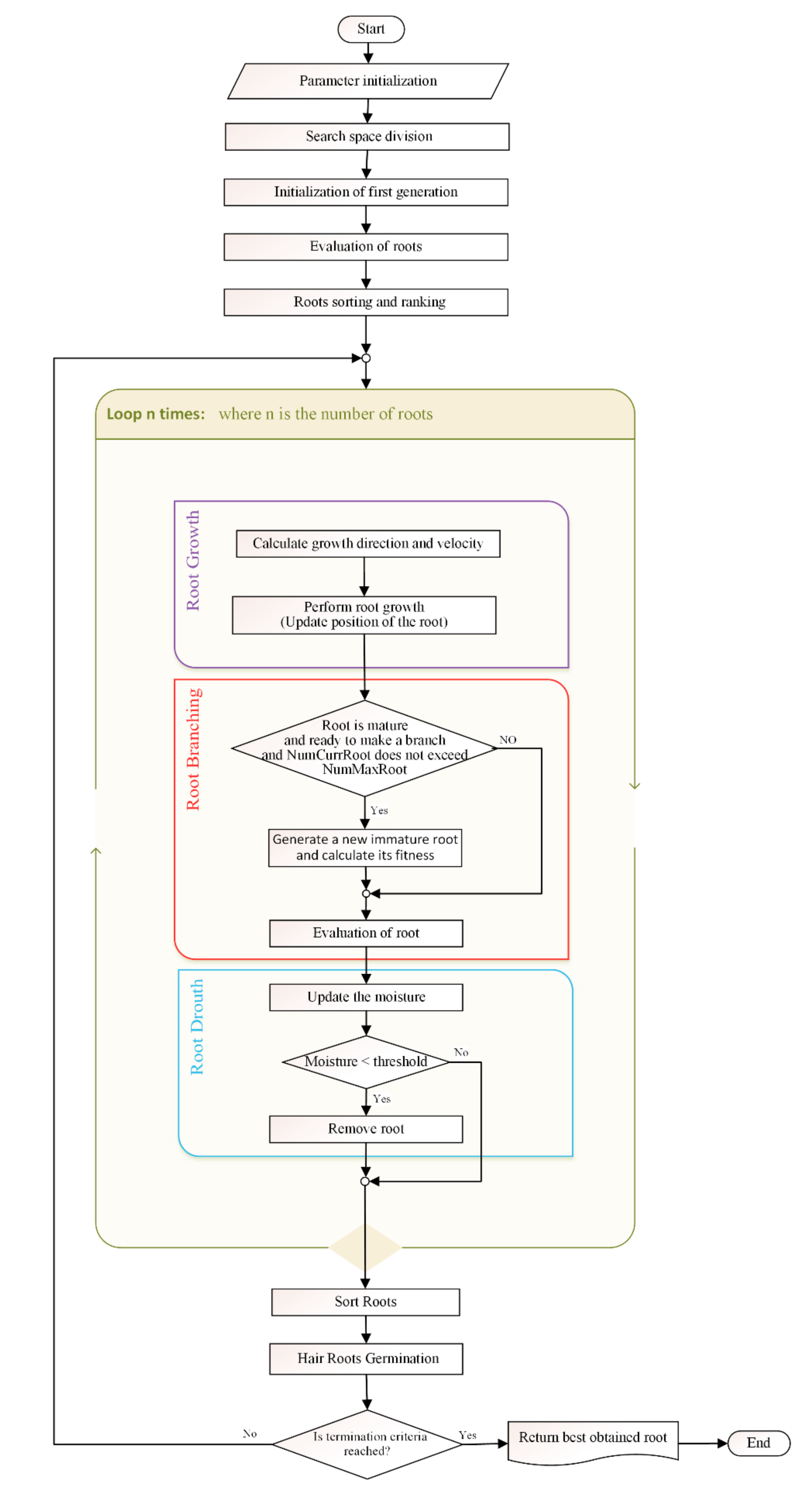

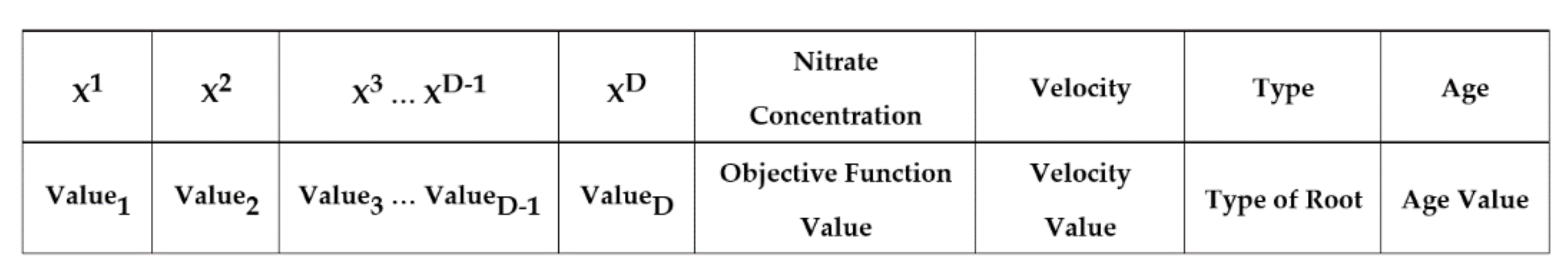

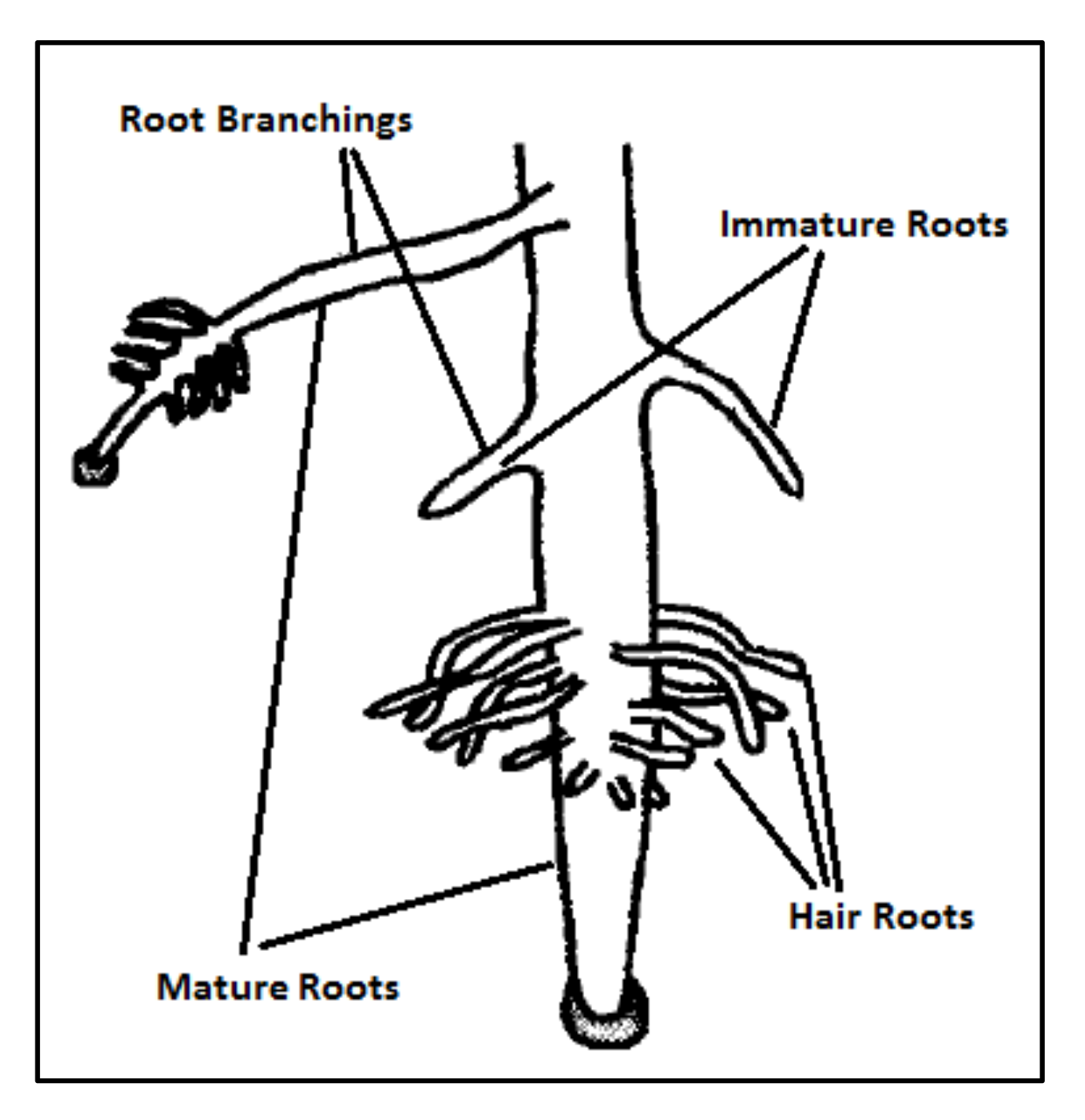

32]. The SRS is equipped with unique features and well-defined operators that are extracted precisely from intelligence of plant, and, unlike previous plant root-based search algorithms, is in absolute alignment with optimization principles. The novelty of SRS can be summarized in three items including (1) dividing the search space into a number of subspaces helping the algorithm to quickly find the more potential areas of the search space, and control local convergence of the algorithm in those areas; (2) defining three different types of roots, immature, mature and hair roots, that use different exploration approaches together with a mechanism to convert immature to mature, in order to search more in promising areas and provide embedded local search mechanism; and (3) proposing root drouth operator to control local and global convergence of the algorithm simultaneously.

As the proposed SRS was a brief preliminary model, it required additional components, revision, implementation, testing, and comparison. To this end, this paper proposes the final version of the model, explains it in detail, and presents it as a flowchart and pseudocode. Supported by graphical examples, a new root structure is employed to improve performance, and the root drought equation is improved to protect promising roots more accurately. In addition, a clear parameter initialization is added to the algorithm, and a precise explanation is provided for the Immature-to-Mature mechanism of the roots. Most importantly, a complete experimental test has been designed and applied to evaluate the performance of the algorithm and compare it with the selected comparative algorithms, followed by a detailed statistical test.

The remainder of the paper is structured as follows. The literature on the intelligent behavior of roots is reviewed in Section 1.1. A detailed explanation of the SRS algorithm is given in Section 2, followed by the experimental test and results in Section 3. Finally, conclusions and suggestions for future work are provided in Section 4, followed by the references.

1.1. Literature Review

From a general perspective, living things can be divided into two main groups: animals and plants. Animals sense their environment through senses such as sight and touch. Similarly, plants perceive their surrounding environment through a series of senses and reactions. To introduce plant physiology and these senses and reactions, this section describes how plant roots sense their environment and the consequences of their reactions.

1.1.1. Plant Senses and Reactions

Plants use the same five senses as humans: hearing [33], touch, taste, sight, and smell. Furthermore, plants have evolved to use more senses than humans and, in fact, have approximately twenty distinct senses. Indeed, plants are able to detect moisture, gravity, minerals, humidity, light, wind, soil composition and structure, snow melt, pressure, temperature, and infection. Plants can decide how to react to environmental stimuli based on the information obtained through these senses. Thus, plants are considered prototypical intelligent creatures [26,34] and exhibit their intelligence via shoot and root growth.

A thorough review of root system architecture and of the pathways and networks that shape root behavior was provided in [35]. Those authors showed that root growth is a response to soil nutrients that depends on several changing factors. Hydrotropism, nutrient tropism, cell memory, and electrical impulses are some of the most interesting behaviors demonstrating root intelligence. These behaviors are summarized below.

Hydrotropism: Plant survival depends on the ability of roots to find water in soil. Hence, roots bend their growth along the moisture gradient toward regions of higher water potential, a response called hydrotropism [36,37].

In addition, when roots encounter moisture in the soil, they absorb and store water to support all plant activities. The plant loses stored water through growth and evapotranspiration. Roots can also transfer water to dry parts of the soil and release it there to promote root survival [38,39,40]. This release occurs when the absorbed water is not sufficient for root survival, because roots that cannot survive will dry out.

Nutrient tropism: Nitrates and phosphates are considered the most important elements for plant growth [41]. Important developmental processes, such as lateral root (LR) and hair root (HR) formation and primary root (PR) elongation, are highly sensitive to changes in nutrient concentration.

Strong evidence shows that nitrate concentration affects LR formation: LR development is hindered by high nitrate concentrations and stimulated by low ones [35,42]. The inhibitory effect of high nitrate concentrations on PR elongation is discussed in [43]. The availability and distribution of phosphate and nitrate have been shown to have contrasting effects on PR elongation and LR density but comparable effects on LR elongation [44]. PR elongation is known to decrease with increasing nitrate availability but to increase as the phosphate supply increases. LR density remains constant across varying nitrate concentrations but decreases as the phosphate supply increases. In contrast, LR elongation is suppressed by high concentrations of both nitrate and phosphate.

In this regard, Ref. [45] demonstrated that phosphate starvation enhances HR elongation and density. Furthermore, research conducted at the Pennsylvania State University shows that Arabidopsis thaliana roots grow denser and longer in response to lower phosphate availability [46].

Memory: Although plants do not have a neural network, many studies show that they can recall certain conditions, which suggests that plants exhibit memory. Ref. [47] addressed traumatic plant memories, related facts, and potential mechanisms. Stress factors can make a plant less susceptible to subsequent exposures, which indicates that plants have a memory capacity. In addition, plants also possess “stress memory” and “drought memory”. Surprisingly, the proportion of live biomass after a late drought is higher in plants that were exposed to drought earlier in their growing season than in single-stressed plants [48].

Electrical Impulse: Plants also use a message-passing system [49]. Research on plants has shown that electrical communication plays a significant role in root-to-shoot signaling in plants under water stress. Furthermore, Ref. [50] showed that when one organ of a seedling is stimulated (e.g., the root region), a characteristic electrical response is produced that can be recorded upward, in another organ distant from the stimulated area.

1.1.2. Plant-Imitating Methods

A comprehensive analysis of the plant root literature shows that only a few studies have used the inherent intelligence of plants as the basis for a search algorithm. These studies have been led by Zhu Yunlong and his research teams. In this section, the few proposed plant root algorithms and their main ideas are assessed and discussed.

RGM is an algorithm for numerical function optimization that simulates the interactions between HR growth and the soil [27,51]. In each growth iteration of RGM, high-performing roots, which have higher Morphactin (fitness function) values, are selected to branch into areas away from the selected roots. The new branches are called HRs. HRs follow random growth directions, and their growth length depends on their growth direction. The HRs are added to the set of roots, and then the non-selected branching roots are removed. Accordingly, RGM can be regarded as a local search algorithm that does not take advantage of well-extracted root intelligence and is not suitable for searching large spaces. Furthermore, RGM fails when it becomes trapped in local optima.
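The RGM loop summarized above can be illustrated with a minimal Python sketch that minimizes a test function by treating the negated objective as the Morphactin value. This is an illustration under our own assumptions (a sphere test function, fixed numbers of selected roots and hair roots per root, and a uniformly drawn growth length in place of RGM's direction-dependent length rule); the names and parameters are hypothetical and it is not the implementation from [27,51].

```python
import numpy as np


def sphere(x):
    """Sphere test function (minimum 0 at the origin)."""
    return float(np.sum(x ** 2))


def rgm_sketch(f, dim=2, n_roots=20, n_selected=5, n_hairs=3,
               max_len=0.5, iters=100, bounds=(-5.0, 5.0), seed=0):
    """Illustrative RGM-style loop; all parameters are hypothetical."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    roots = rng.uniform(lo, hi, size=(n_roots, dim))
    for _ in range(iters):
        # Higher "Morphactin" is better, so use the negated objective.
        morphactin = np.array([-f(r) for r in roots])
        selected = roots[np.argsort(morphactin)[::-1][:n_selected]]
        hairs = []
        for root in selected:
            for _ in range(n_hairs):
                # Random unit growth direction for each new hair root.
                direction = rng.normal(size=dim)
                direction /= np.linalg.norm(direction)
                # Assumption: uniform length instead of a direction-dependent rule.
                length = rng.uniform(0.0, max_len)
                hairs.append(np.clip(root + length * direction, lo, hi))
        # Keep the selected roots and their hair roots; drop the non-selected ones.
        roots = np.vstack([selected, np.array(hairs)])
    best = min(roots, key=f)
    return best, f(best)
```

Because the selected roots survive into the next iteration, the best value found never worsens. The sketch also shows the local character noted above: every new root stays within `max_len` of an already selected root.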

In 2013, the RMO algorithm, a root growth model for numerical optimization, was proposed [28]. RMO is the primary inspiration for two other algorithms: ARFO and ARM.

ARFO [29,30] was proposed for image segmentation problems and then generalized to address other optimization problems. This algorithm uses the auxin hormone levels of roots as the objective function and employs branching, regrowing, hydrotropism, and gravitropism operations. The ARFO root system consists of three groups of roots: main roots and lateral roots (with large and small elongation length units, respectively) and dead roots. ARFO has two main shortcomings: first, the absence of precise extraction and accurate modeling of plant intelligence in terms of root growth; and second, an optimization-averse behavior inherent in the algorithm. A list of the shortcomings of ARFO is presented in Table 1.

ARM optimization was proposed to solve the data clustering problem. ARM is based on a harmony-like search algorithm [52] that simulates plant root growth strategies, such as proliferation and decision making, that depend on the growth direction [31]. ARM generates a set of roots randomly. Some of the roots with better fitness values continue to grow, while the rest stop growing. For every root, one neighbor is selected randomly. If the fitness of the neighbor is better than that of the root, a new root is generated between them in the search space; otherwise, a new root is generated randomly. The new root is added to the set of roots if it has a better fitness value than its parent. Therefore, no intelligent root behaviors are applied in ARM.
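The ARM steps described above can be sketched in Python as follows, using a sphere function as a stand-in objective (lower cost meaning better fitness). The fraction of roots that keep growing, the rule for placing the new root on the segment between a root and its better neighbor, and the trimming of the population back to a fixed size are our assumptions, not details taken from [31]; all names and parameters are hypothetical.

```python
import numpy as np


def sphere(x):
    """Sphere test function (minimum 0 at the origin)."""
    return float(np.sum(x ** 2))


def arm_sketch(f, dim=2, n_roots=20, grow_frac=0.5, iters=50,
               bounds=(-5.0, 5.0), seed=1):
    """Illustrative ARM-style loop; all parameters are hypothetical."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    roots = rng.uniform(lo, hi, size=(n_roots, dim))
    for _ in range(iters):
        cost = np.array([f(r) for r in roots])  # lower cost = better fitness
        n_grow = max(1, int(grow_frac * len(roots)))
        growing = np.argsort(cost)[:n_grow]      # the remaining roots stop growing
        children = []
        for i in growing:
            j = rng.integers(len(roots))         # one randomly chosen neighbor
            if cost[j] < cost[i]:
                # Better neighbor: place the new root on the segment between them.
                t = rng.uniform()
                child = roots[i] + t * (roots[j] - roots[i])
            else:
                # Otherwise generate the new root at random in the search space.
                child = rng.uniform(lo, hi, size=dim)
            if f(child) < cost[i]:               # keep it only if it beats its parent
                children.append(child)
        if children:
            roots = np.vstack([roots, np.array(children)])
        # Assumption: trim back to n_roots by keeping the best roots.
        cost = np.array([f(r) for r in roots])
        roots = roots[np.argsort(cost)[:n_roots]]
    best = min(roots, key=f)
    return best, f(best)
```

Written this way, the loop is a plain interpolate-or-resample rule with greedy acceptance, which makes concrete the observation that no specifically root-like behavior is involved.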

4. Conclusions and Future Work

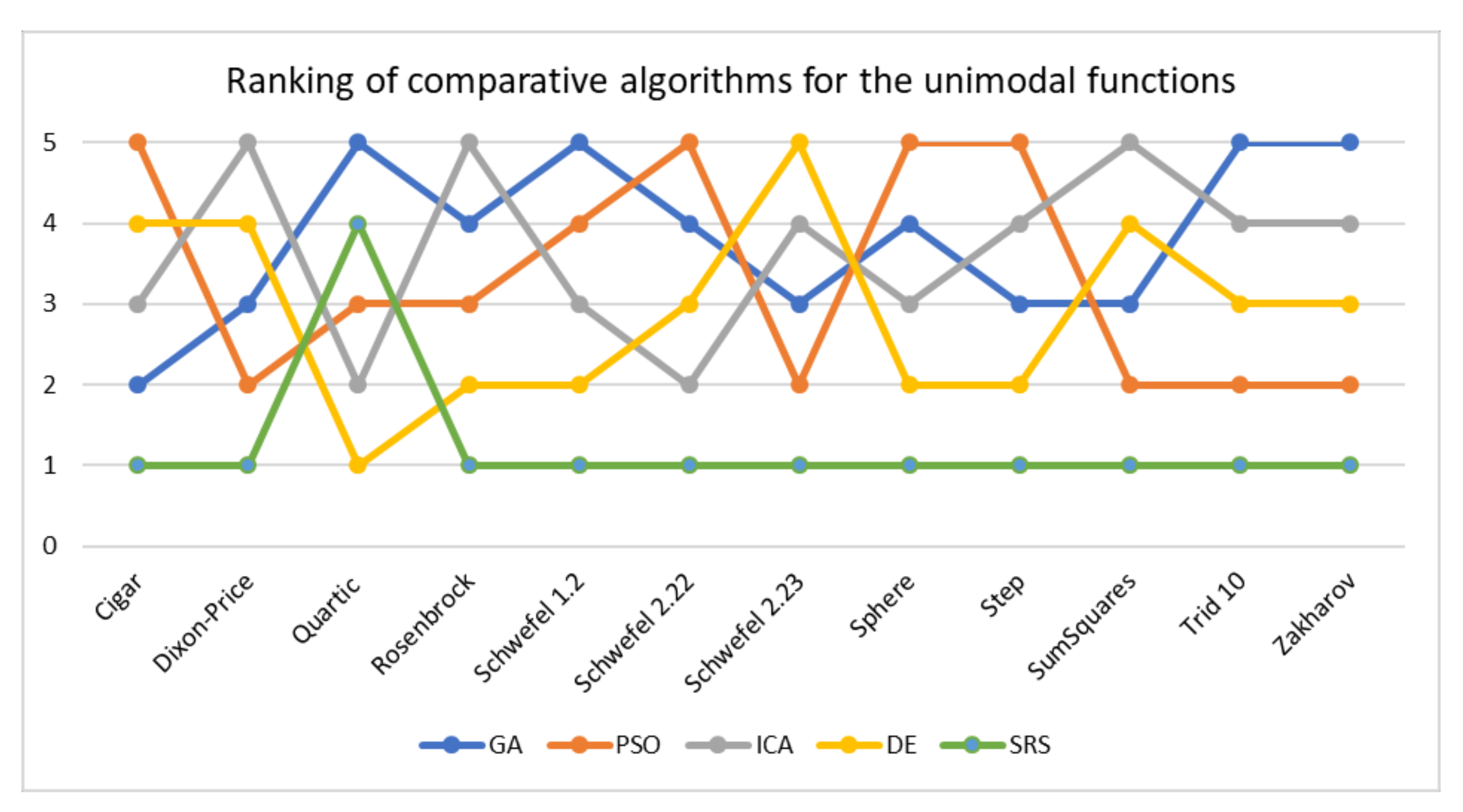

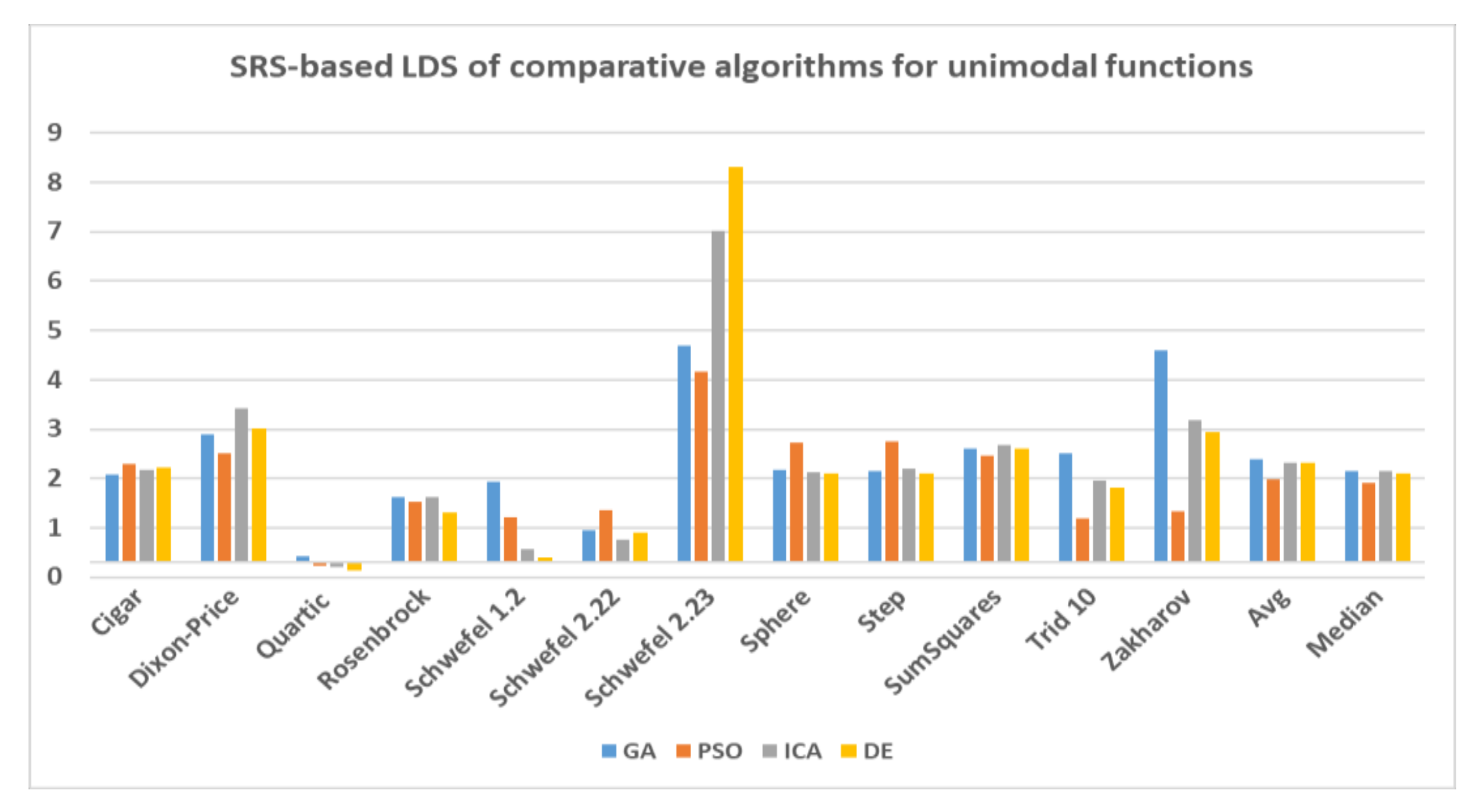

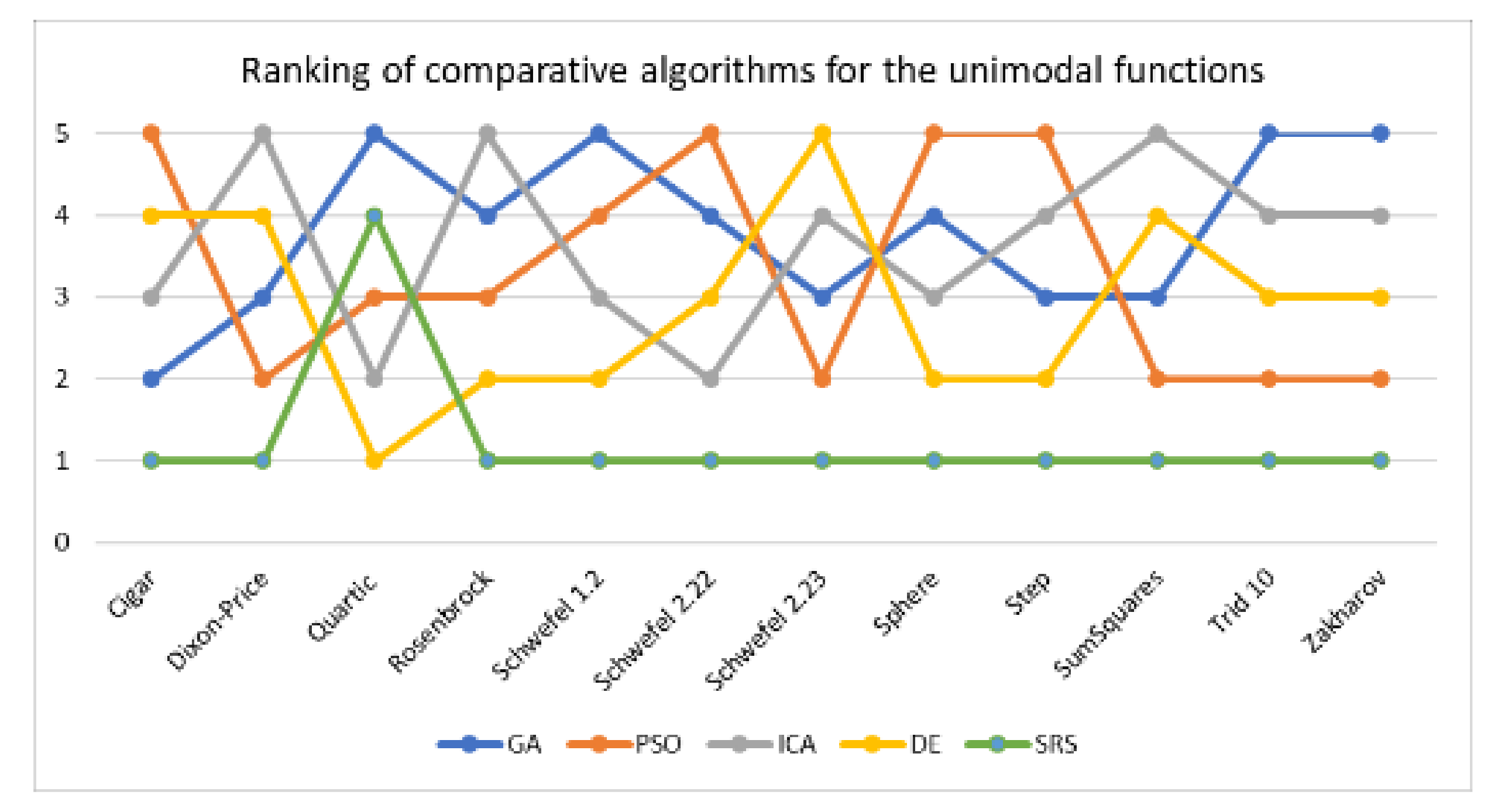

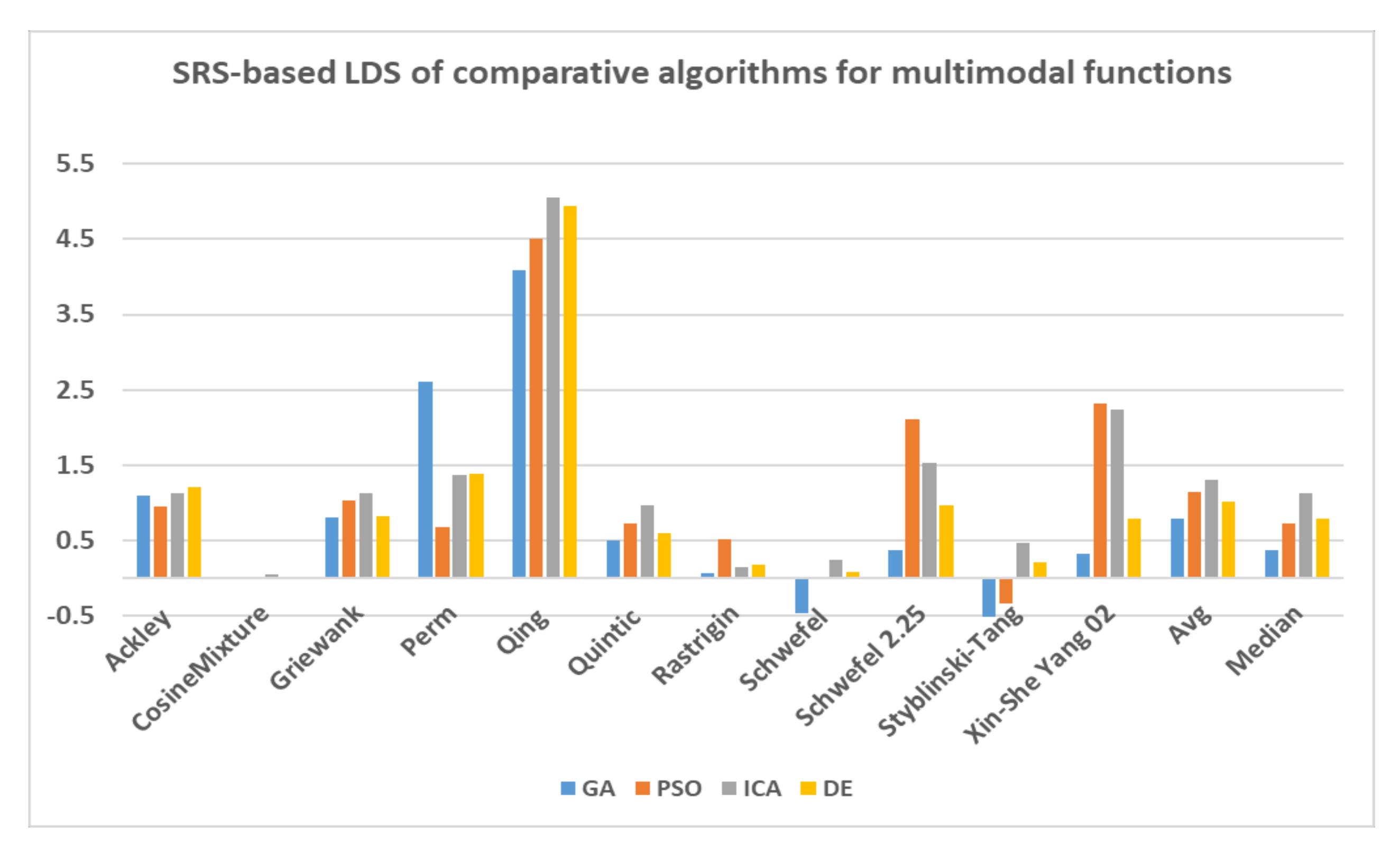

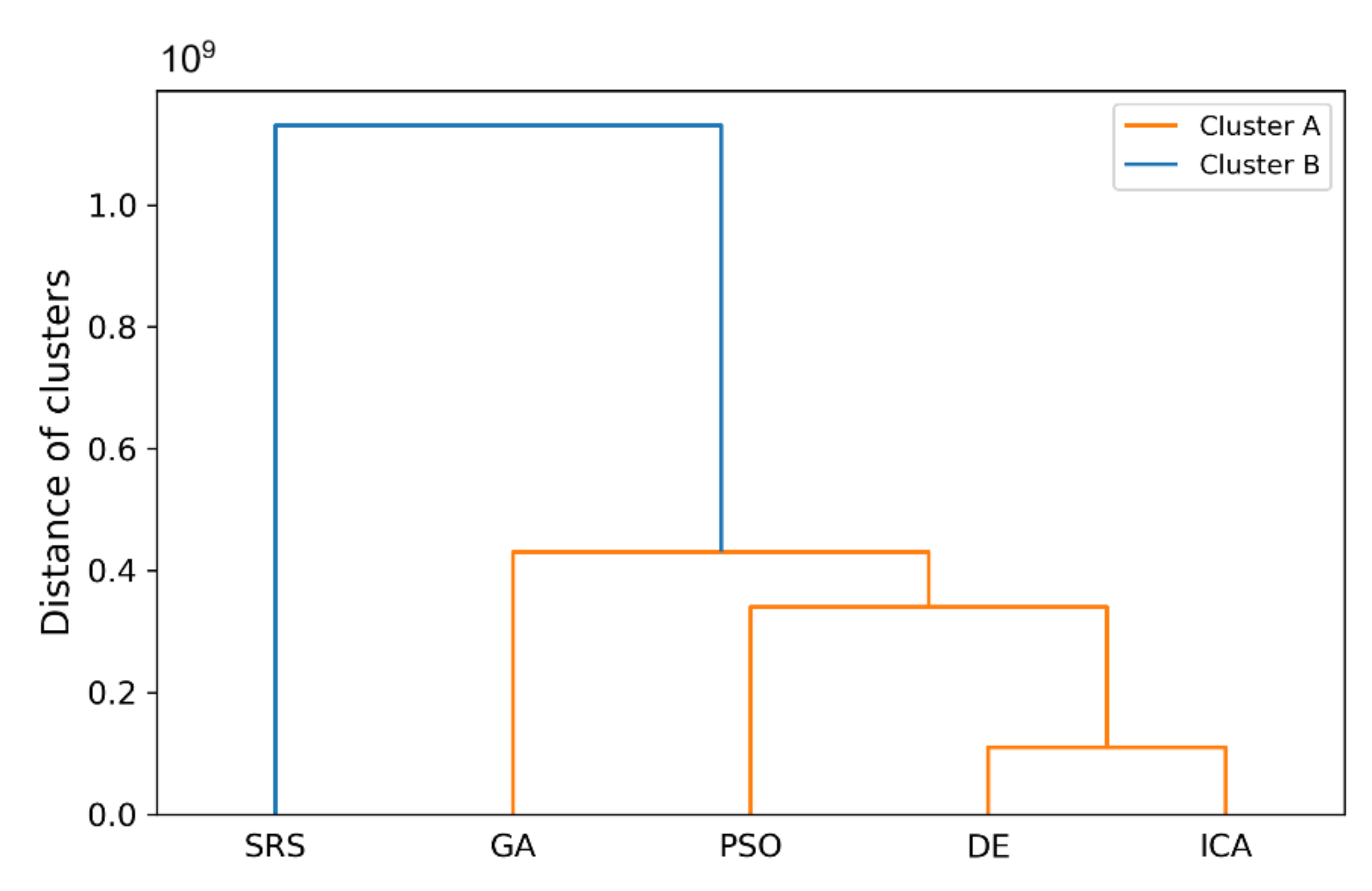

In this paper, a high-performance combinatorial search algorithm called SRS is introduced. SRS is inspired by plant root growth in soil, which occurs in search of higher densities of nutrients and water. By mapping solutions to roots and utilizing three types of roots with different search characteristics, the algorithm shows high efficiency in both exploration and exploitation. Mature roots are responsible for exploration, while immature roots help the SRS escape from local optima traps and non-promising points. Meanwhile, hair roots, as very short-lived search elements, search around the best-found solutions to find better points. To evaluate the performance of the SRS and compare its efficiency with that of other algorithms, a complete experimental test was conducted. Twenty-four unimodal and multimodal test functions were employed, and GA, PSO, ICA, and DE were applied as comparative algorithms to find the optimal values of the test functions. To ensure that the collected results were reliable and accurate, every algorithm was executed 40 times per test function, and the average of the results was used in the comparisons.

For the unimodal test functions, the results demonstrate that the SRS performed significantly better than the comparative algorithms on all functions except the quartic function; the SRS therefore won on 91.67% of these functions. For the multimodal test functions, the SRS prevailed 81.81% of the time. Overall, the aggregated results for the unimodal and multimodal test functions indicate that SRS is superior to the comparative algorithms on 86.96% of the test functions used. The higher efficiency in addressing unimodal and multimodal functions demonstrates that the SRS coordinates exploitation and exploration well enough that it is not easily trapped in local optima. Briefly, based on the experimental results and discussion provided here, it can be concluded that the SRS is a formidable competitor to currently well-known combinatorial search algorithms in solving different types of optimization search problems. Well-defined structures and carefully tuned operators are the strengths of the SRS in solving NP-hard optimization problems.
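The evaluation protocol above (40 independent runs per algorithm and test function, results averaged, and the share of functions on which a candidate has the best mean reported as a percentage) can be sketched in Python as follows. The two test functions, the dimension, the bounds, the budget, and the placeholder `random_search` optimizer are hypothetical choices for illustration; they are not the SRS, GA, PSO, ICA, or DE implementations or the settings used in the experiments, and ranking by the lowest mean is only one simple way to count wins.

```python
import numpy as np


def sphere(x):
    """Unimodal test function (minimum 0 at the origin)."""
    return float(np.sum(x ** 2))


def rastrigin(x):
    """Multimodal test function (minimum 0 at the origin)."""
    return float(10 * len(x) + np.sum(x ** 2 - 10 * np.cos(2 * np.pi * x)))


def random_search(f, dim, iters, bounds, rng):
    """Hypothetical placeholder optimizer: best of `iters` uniform samples."""
    lo, hi = bounds
    samples = rng.uniform(lo, hi, size=(iters, dim))
    return min(f(x) for x in samples)


def benchmark(optimizers, functions, runs=40, dim=10, iters=1000,
              bounds=(-5.12, 5.12)):
    """Mean best value of each optimizer on each function over `runs` seeded runs."""
    table = {}
    for fname, f in functions.items():
        for oname, opt in optimizers.items():
            bests = [opt(f, dim, iters, bounds, np.random.default_rng(seed))
                     for seed in range(runs)]
            table[(fname, oname)] = float(np.mean(bests))
    return table


def win_rate(table, candidate, functions, optimizers):
    """Share of functions on which `candidate` has the lowest mean (ties go to the first listed)."""
    wins = 0
    for fname in functions:
        means = {oname: table[(fname, oname)] for oname in optimizers}
        if min(means, key=means.get) == candidate:
            wins += 1
    return wins / len(functions)
```

For example, passing `{"random-1000": random_search, "random-10": lambda f, d, it, b, r: random_search(f, d, 10, b, r)}` as the optimizers and `{"sphere": sphere, "rastrigin": rastrigin}` as the functions compares two budgets of the same placeholder; multiplying the returned win rate by 100 gives a percentage of the kind reported above.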

Despite the discussed capabilities of the SRS, some aspects of the algorithm can still be improved. In terms of functional settings, the SRS offers many parameters to customize the behavior of the algorithm. Different values for these parameters may have different effects when solving various types of optimization problems. Therefore, future efforts should focus on finding effective values for these parameters. Furthermore, these parameters interact in their effects on performance and on the final solutions achieved. Additional research is needed to characterize the relations among these parameters so that the algorithm can be run effectively with fewer parameters to set. In addition, SRS must be applied to more benchmarks as well as real-world optimization problems to determine its strengths and weaknesses. Moreover, the HRG operation was not used in the experimental test, in order to provide a more suitable environment for examining the core exploration and exploitation behaviors of the mature and immature roots. In future work, the performance of the SRS will be evaluated with a well-organized HRG operation applied to NP-hard problems.
