Better Understanding: Stylized Image Captioning with Style Attention and Adversarial Training

Abstract

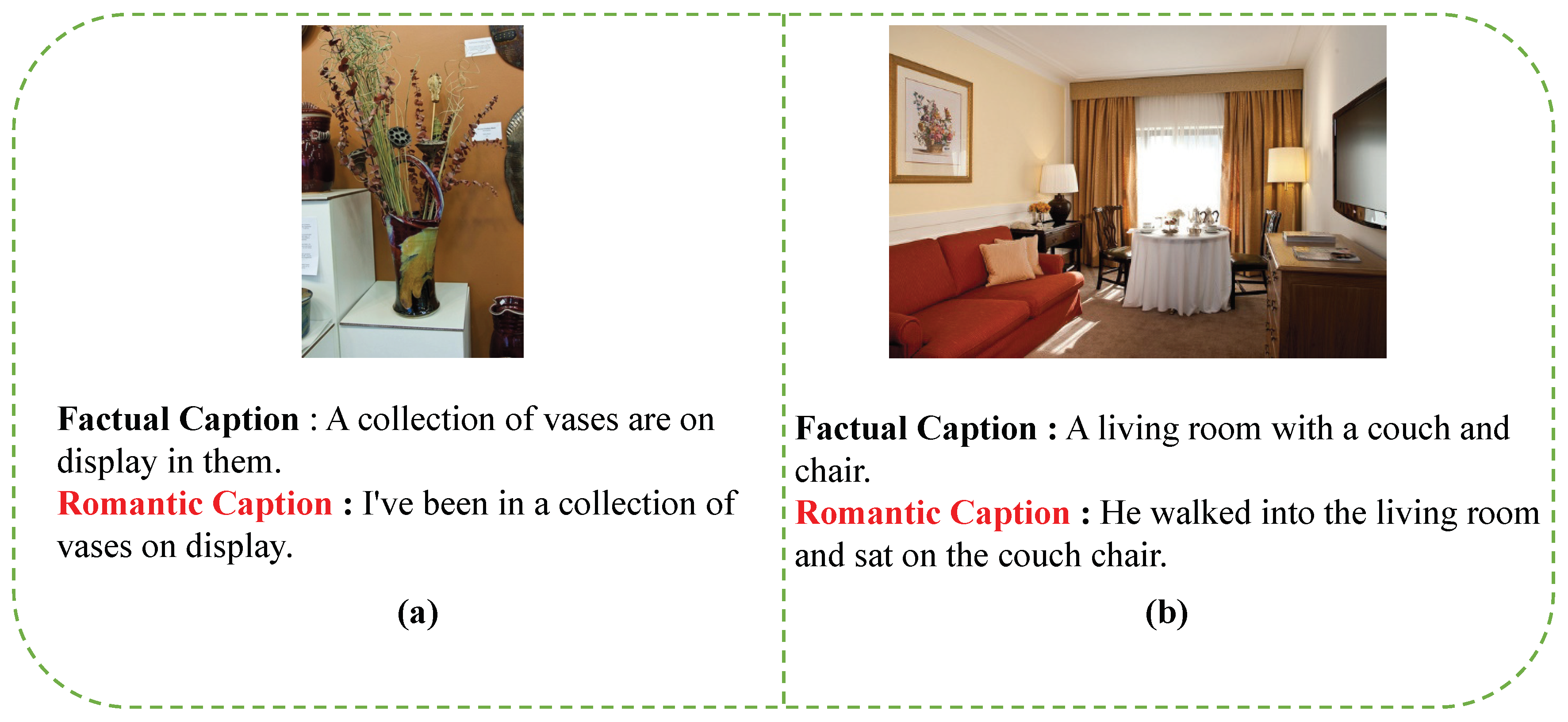

1. Introduction

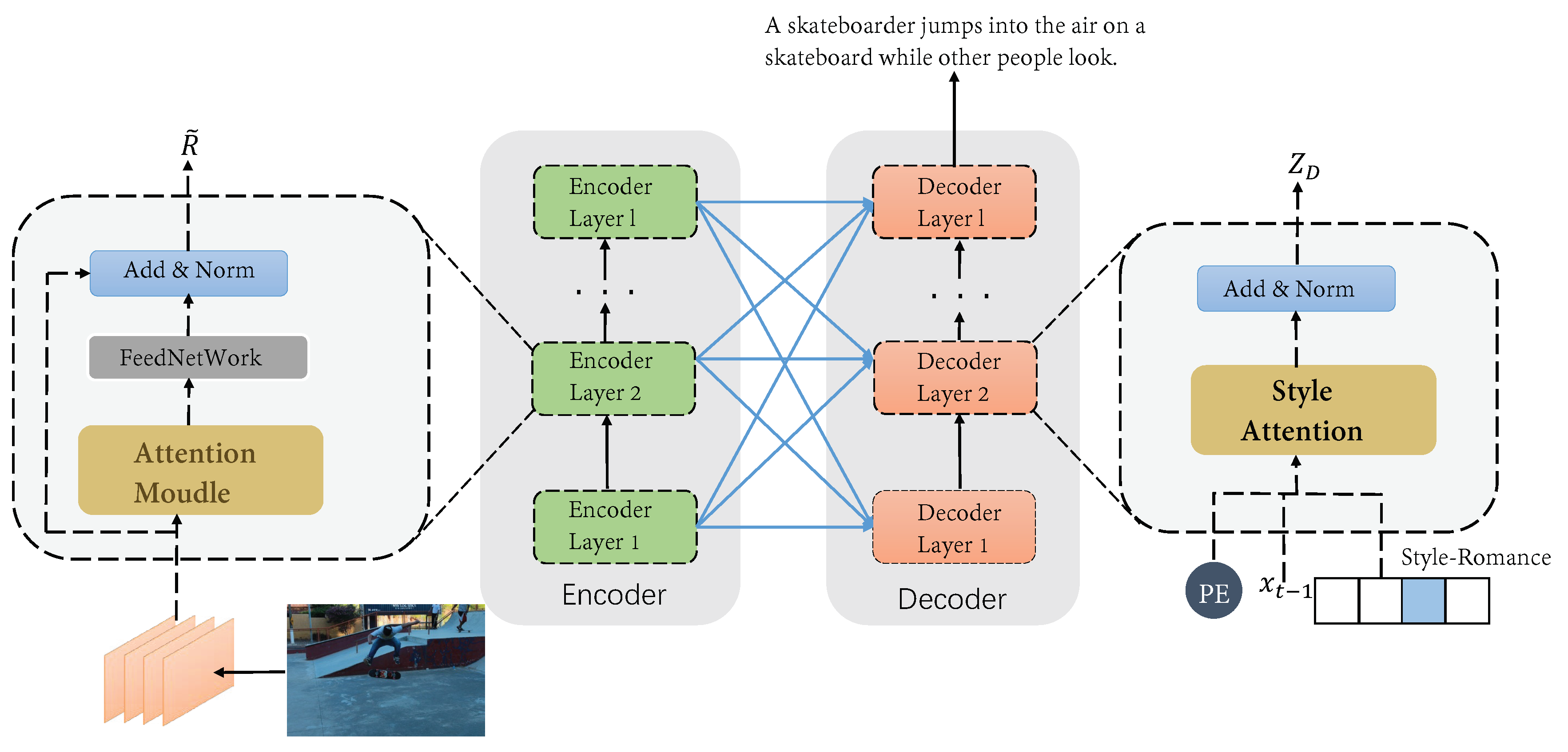

- We propose a stylized Transformer structure. It efficiently matches style information through a multi-head style attention mechanism and generates stylized image captions rather than purely factual ones.

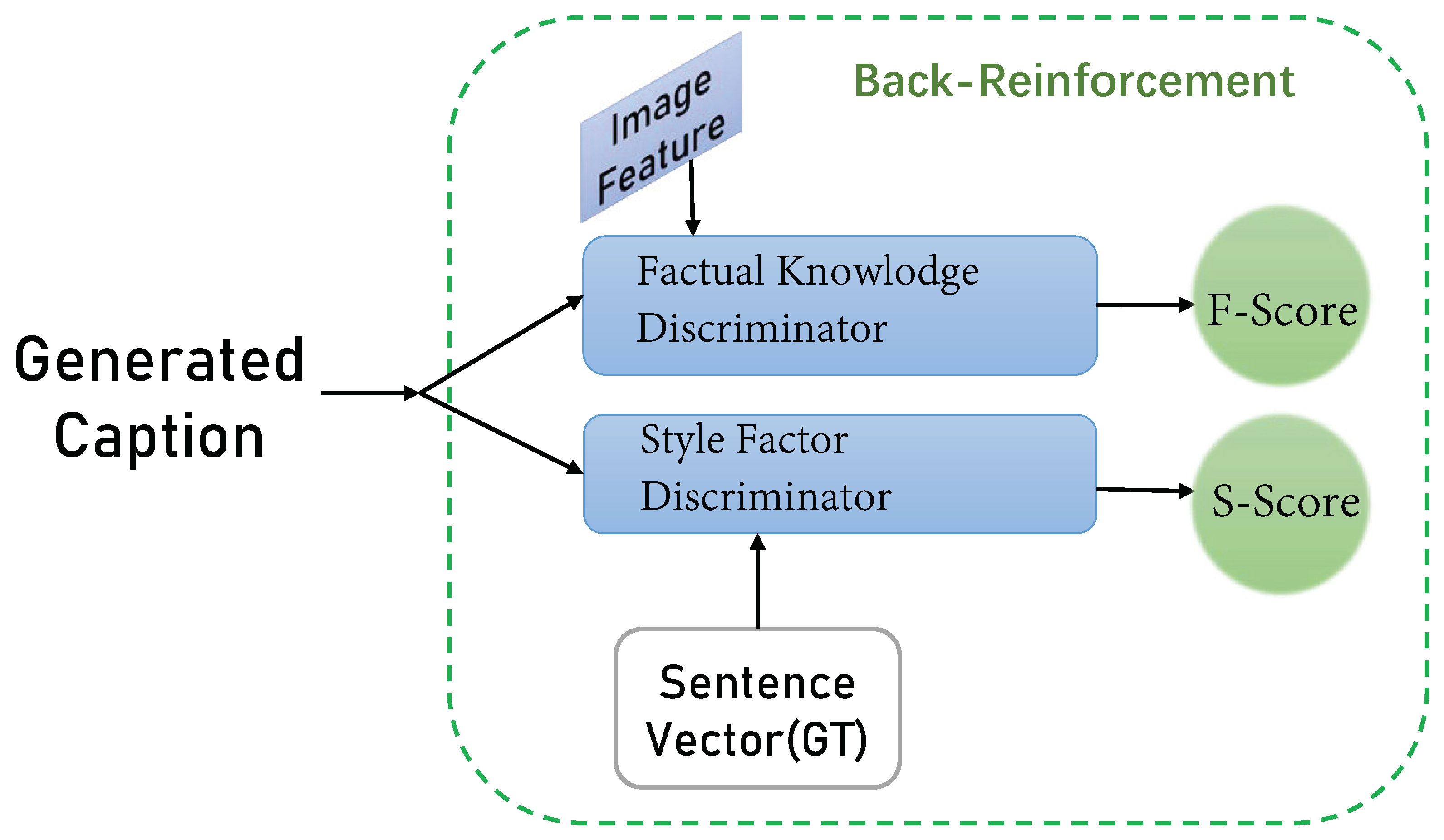

- We propose a back-reinforcement module that evaluates how well the generated captions match both the image content and the target style.

- Building on these two components, we design a stylized image captioning model based on adversarial learning. The adversarial game enables the model to generate accurate stylized captions while learning the factual content of the image.

- We evaluate our model on public datasets, and the results demonstrate the effectiveness of the proposed method.
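The multi-head style attention in the first contribution can be pictured as Transformer-style cross-attention [10] in which the partial caption attends to style information. The NumPy sketch below is illustrative only: it assumes the target style is represented by a small bank of K vectors (`style_mem`, a hypothetical name), uses random projection weights, and is not the authors' STrans implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_style_attention(h, style_mem, w_q, w_k, w_v, w_o, num_heads):
    """Cross-attention from caption states (queries) to style vectors (keys/values).

    h:         (T, d) hidden states of the partial caption
    style_mem: (K, d) hypothetical style memory, e.g. K learned style vectors
    w_*:       (d, d) projection matrices
    Returns the style context (T, d) and attention weights (heads, T, K).
    """
    t, d = h.shape
    k = style_mem.shape[0]
    assert d % num_heads == 0, "model width must split evenly across heads"
    d_head = d // num_heads

    # Project, then split the feature dimension into heads: (heads, length, d_head).
    q = (h @ w_q).reshape(t, num_heads, d_head).transpose(1, 0, 2)
    key = (style_mem @ w_k).reshape(k, num_heads, d_head).transpose(1, 0, 2)
    v = (style_mem @ w_v).reshape(k, num_heads, d_head).transpose(1, 0, 2)

    # Scaled dot-product attention per head: (heads, T, K).
    weights = softmax(q @ key.transpose(0, 2, 1) / np.sqrt(d_head), axis=-1)

    # Weighted sum of style values, then merge the heads back to (T, d).
    ctx = (weights @ v).transpose(1, 0, 2).reshape(t, d)
    return ctx @ w_o, weights

# Toy usage with random inputs and weights.
rng = np.random.default_rng(0)
d, T, K, H = 16, 5, 3, 4
h = rng.normal(size=(T, d))
style = rng.normal(size=(K, d))
ws = [rng.normal(scale=d ** -0.5, size=(d, d)) for _ in range(4)]
ctx, attn = multi_head_style_attention(h, style, *ws, num_heads=H)
fused = h + ctx  # residual connection, as in a standard Transformer sublayer
print(ctx.shape, attn.shape)  # (5, 16) (4, 5, 3)
```

Each head can attend to a different subset of the style vectors, which is one plausible reading of how multiple heads help "match" style information to each word position.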

2. Related Work

2.1. Traditional Image Captioning

2.2. Stylized Image Captioning

3. Method

3.1. Motivation

3.2. Overview

3.3. STrans: A Better Framework to Understand Images

3.3.1. Encode Images: Effectively Utilize the Information of Images

3.3.2. Style Attention Module: Closely Integrate Style Factors

3.4. Back-Reinforcement: Further Optimize the Caption Generation Module

3.4.1. Factual Knowledge Discriminator (FKD): Let the Model Make More Use of Image Information

3.4.2. Style Factor Discriminator (SFD): Make the Model Closer to a Specific Style

3.5. Training Strategy

4. Experiment

4.1. Preparatory Work

4.1.1. Datasets

4.1.2. Evaluation Metrics

- For sentence fluency, we use the SRILM language modeling toolkit [30]. Following [9], SRILM computes the perplexity (ppl.) of the captions generated for each style, using a trigram language model trained on the corresponding corpus. A lower perplexity indicates a more fluent sentence and therefore better model performance.

- Style classification accuracy (cls.) is the proportion of generated captions that correctly express the target style. We measure it with a style classifier pre-trained on the FlickrStyle10K and MSCOCO 2014 datasets.
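Both metrics reduce to simple computations. The sketch below trains a trigram model on a toy corpus and computes per-token perplexity, then computes style accuracy from an arbitrary classifier. SRILM uses more elaborate discounting and backoff; add-k smoothing (`k`) is an assumption made here only to keep the example short, and the function names are hypothetical.

```python
import math
from collections import Counter

BOS, EOS = "<s>", "</s>"

def train_trigram(corpus):
    """Count trigrams and their bigram contexts over tokenized sentences."""
    tri, ctx, vocab = Counter(), Counter(), set()
    for sent in corpus:
        vocab.update(sent + [EOS])  # tokens the model can predict
        toks = [BOS, BOS] + sent + [EOS]
        for i in range(2, len(toks)):
            tri[tuple(toks[i - 2:i + 1])] += 1
            ctx[tuple(toks[i - 2:i])] += 1
    return tri, ctx, vocab

def perplexity(sent, model, k=0.1):
    """Per-token perplexity of one sentence under an add-k smoothed trigram LM."""
    tri, ctx, vocab = model
    toks = [BOS, BOS] + sent + [EOS]
    v = len(vocab)
    log_prob = 0.0
    for i in range(2, len(toks)):
        num = tri[tuple(toks[i - 2:i + 1])] + k
        den = ctx[tuple(toks[i - 2:i])] + k * v
        log_prob += math.log(num / den)
    n = len(toks) - 2  # predicted tokens: the words plus </s>
    return math.exp(-log_prob / n)

def style_accuracy(captions, target_style, classify):
    """Percentage of captions that `classify` assigns to the target style."""
    hits = sum(1 for c in captions if classify(c) == target_style)
    return 100.0 * hits / len(captions)

corpus = [s.split() for s in [
    "a man is riding a horse on the beach",
    "a dog is running on the beach",
    "a man is riding a bike in the city",
]]
lm = train_trigram(corpus)
fluent = perplexity("a man is riding a horse".split(), lm)
shuffled = perplexity("horse a riding is man a".split(), lm)
print(fluent < shuffled)  # True: word order the LM has seen scores as more fluent
```

In practice the `classify` argument would be the pre-trained style classifier; any callable mapping a caption to a style label fits this sketch.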

4.1.3. Compared Models

- NIC-TF: The NIC model [11] fine-tuned separately on the data of each style.

- StyleNet [6]: An end-to-end learning framework that automatically distills style factors from monolingual text.

- SF-LSTM [19]: A variant of LSTM designed to capture both factual and style knowledge. In addition, during training the authors use the output of a reference factual model as guidance. It is a supervised method.

- MSCap [9]: A model that learns to generate captions in multiple styles from unpaired stylized text.

4.2. Implementation Details

4.3. Result Analysis

4.3.1. Quantitative Analysis

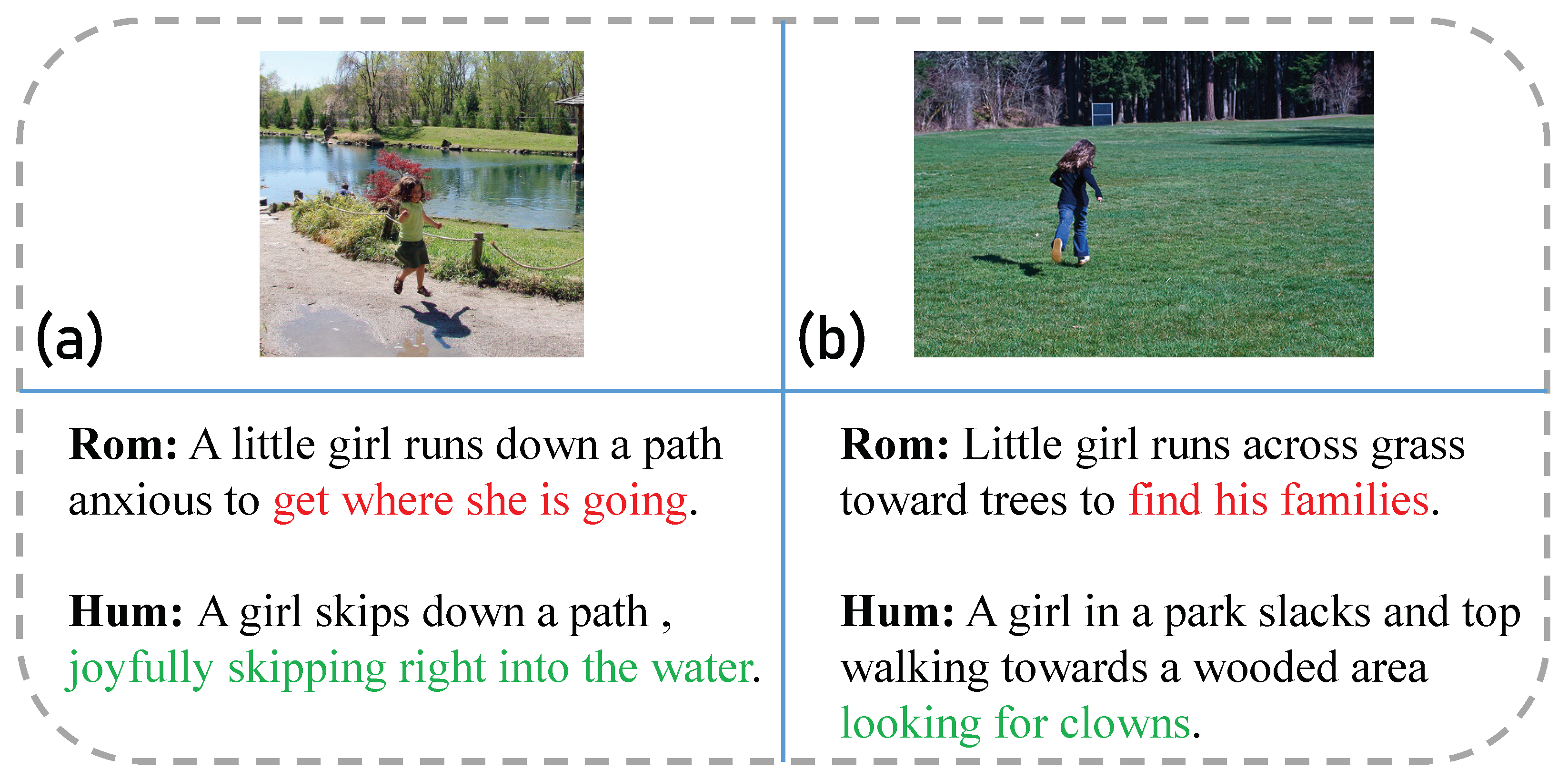

4.3.2. Qualitative Analysis

4.3.3. Ablation Studies

- STrans: To verify the function of the multi-discriminator module, we remove the back-reinforcement module and use only the caption generator module.

- ST-FKD: We remove the SFD, keeping only the FKD, to verify the guiding role of the style factor discriminator on style.

- ST-SFD: To verify the effect of the factual knowledge discriminator, we keep only the style factor discriminator in the back-reinforcement module.
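The four variants differ only in which discriminator scores reach the caption generator. The sketch below makes that concrete under explicit assumptions: each discriminator outputs a probability in [0, 1], the two scores are mixed with a hypothetical weight `lam`, and the generator is updated with a generic REINFORCE objective of the kind used in sequence GANs such as SeqGAN [23]. It does not reproduce the paper's actual reward or training strategy (Section 3.5); all names are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Variant:
    """Which discriminators contribute to the reward (hypothetical flags)."""
    use_fkd: bool
    use_sfd: bool

VARIANTS = {
    "STrans": Variant(False, False),  # caption generator only, no back-reinforcement
    "ST-FKD": Variant(True, False),   # factual knowledge discriminator only
    "ST-SFD": Variant(False, True),   # style factor discriminator only
    "ST-BR": Variant(True, True),     # both discriminators
}

def reward(fkd_score, sfd_score, variant, lam=0.5):
    """Combine discriminator probabilities into a scalar reward.

    `lam` is an assumed mixing weight. Returns None when no discriminator
    is active, i.e. the generator is trained without an adversarial reward.
    """
    if variant.use_fkd and variant.use_sfd:
        return lam * fkd_score + (1.0 - lam) * sfd_score
    if variant.use_fkd:
        return fkd_score
    if variant.use_sfd:
        return sfd_score
    return None

def reinforce_loss(token_log_probs, r, baseline):
    """REINFORCE surrogate loss for one sampled caption: -(r - b) * log p(caption)."""
    return -(r - baseline) * sum(token_log_probs)

# Toy usage: one sampled two-token caption scored by both discriminators.
log_probs = [math.log(0.5), math.log(0.25)]
for name, v in VARIANTS.items():
    r = reward(fkd_score=0.8, sfd_score=0.4, variant=v)
    if r is None:
        print(name, "no adversarial reward (maximum likelihood only)")
    else:
        print(name, round(reinforce_loss(log_probs, r, baseline=0.5), 4))
```

Subtracting a baseline keeps the policy-gradient estimate unbiased while reducing its variance; captions scored above the baseline have their log-probability pushed up, and those below are pushed down.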

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Chen, S.; Jin, Q.; Wang, P.; Wu, Q. Say As You Wish: Fine-Grained Control of Image Caption Generation With Abstract Scene Graphs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 9962–9971.

2. Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-up and top-down attention for image captioning and visual question answering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 6077–6086.

3. Chen, L.; Zhang, H.; Xiao, J.; Nie, L.; Shao, J.; Liu, W.; Chua, T.-S. SCA-CNN: Spatial and channel-wise attention in convolutional networks for image captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 26 July 2017; pp. 5659–5667.

4. Pan, Y.; Yao, T.; Li, Y.; Mei, T. X-Linear Attention Networks for Image Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 10971–10980.

5. Jing, B.; Xie, P.; Xing, E.P. On the Automatic Generation of Medical Imaging Reports. arXiv 2018, arXiv:1711.08195.

6. Gan, C.; Gan, Z.; He, X.; Gao, J.; Deng, L. StyleNet: Generating Attractive Visual Captions With Styles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3137–3146.

7. Mathews, A.; Xie, L.; He, X. SemStyle: Learning to Generate Stylised Image Captions Using Unaligned Text. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8591–8600.

8. Zhang, W.; Ying, Y.; Lu, P.; Zha, H. Learning Long- and Short-Term User Literal-Preference with Multimodal Hierarchical Transformer Network for Personalized Image Caption. In Proceedings of the AAAI, New York, NY, USA, 7–12 February 2020.

9. Guo, L.; Liu, J.; Yao, P.; Li, J.; Lu, H. MSCap: Multi-Style Image Captioning With Unpaired Stylized Text. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–21 June 2019; pp. 4204–4213.

10. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS), Long Beach, CA, USA, 4–10 December 2017; pp. 5998–6008.

11. Karpathy, A.; Li, F.-F. Deep visual-semantic alignments for generating image descriptions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; Volume 2015, pp. 3128–3137.

12. Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and tell: A neural image caption generator. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3156–3164.

13. Shetty, R.; Rohrbach, M.; Hendricks, L.A.; Fritz, M.; Schiele, B. Speaking the Same Language: Matching Machine to Human Captions by Adversarial Training. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

14. Dai, B.; Fidler, S.; Urtasun, R.; Lin, D. Towards diverse and natural image descriptions via a conditional GAN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2989–2998.

15. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In IEEE Transactions on Pattern Analysis and Machine Intelligence; IEEE Computer Society: Los Alamitos, CA, USA, 2017; Volume 39, pp. 1137–1149.

16. Yao, T.; Pan, Y.; Li, Y.; Mei, T. Exploring visual relationship for image captioning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 684–699.

17. Li, G.; Zhu, L.; Liu, P.; Yang, Y. Entangled Transformer for Image Captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 8928–8937.

18. Cornia, M.; Stefanini, M.; Baraldi, L.; Cucchiara, R. Meshed-Memory Transformer for Image Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 10578–10587.

19. Chen, T.; Zhang, Z.; You, Q.; Fang, C.; Wang, Z.; Jin, H.; Luo, J. “Factual” or “Emotional”: Stylized Image Captioning with Adaptive Learning and Attention. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 519–535.

20. Nezami, O.M.; Dras, M.; Wan, S.; Paris, C.; Hamey, L. Towards Generating Stylized Image Captions via Adversarial Training. In Pacific Rim International Conference on Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2019; pp. 270–284.

21. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

22. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

23. Yu, L.; Zhang, W.; Wang, J.; Yu, Y. SeqGAN: Sequence generative adversarial nets with policy gradient. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17), San Francisco, CA, USA, 4–9 February 2017; pp. 2852–2858.

24. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS), Montreal, QC, Canada, 13 December 2014; pp. 2672–2680.

25. Rennie, S.J.; Marcheret, E.; Mroueh, Y.; Ross, J.; Goel, V. Self-Critical Sequence Training for Image Captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7008–7024.

26. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

27. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.-J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.

28. Vedantam, R.; Zitnick, C.L.; Parikh, D. CIDEr: Consensus-based image description evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.

29. Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; pp. 65–72.

30. Stolcke, A. SRILM: An extensible language modeling toolkit. In Proceedings of the Seventh International Conference on Spoken Language Processing, Denver, CO, USA, 16–20 September 2002; Available online: https://isca-speech.org/archive/archive_papers/icslp_2002/i02_0901.pdf (accessed on 30 November 2020).

31. Kingma, D.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

32. Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; Volume 14, pp. 1532–1543.

| Model | Style | BLEU-1 | BLEU-3 | METEOR | CIDEr | ppl ↓ | cls (%) |
|---|---|---|---|---|---|---|---|
| NIC-TF | Rom | 26.9 | 7.5 | 11.0 | 35.4 | 27.7 | 82.6 |
| | Hum | 26.3 | 7.4 | 10.2 | 35.1 | 31.8 | 80.1 |
| StyleNet | Rom | 13.3 | 1.5 | 4.5 | 7.2 | 52.9 | 37.8 |
| | Hum | 13.4 | 0.9 | 4.3 | 11.3 | 48.1 | 41.9 |
| SF-LSTM | Rom | 27.8 | 8.2 | 11.2 | 37.5 | - | - |
| | Hum | 27.4 | 8.5 | 11.0 | 39.5 | - | - |
| ST-BR | Rom | 22.5 | 3.7 | 6.6 | 21.7 | 17.3 | 95.2 |
| | Hum | 20.4 | 4.3 | 7.5 | 18.2 | 16.1 | 96.1 |

| Model | Style | BLEU-1 | BLEU-3 | METEOR | CIDEr | ppl ↓ | cls (%) |
|---|---|---|---|---|---|---|---|
| MSCap | Rom | 17.0 | 2.0 | 5.4 | 10.1 | 20.4 | 88.7 |
| | Hum | 16.3 | 1.9 | 5.3 | 15.2 | 22.7 | 91.3 |
| ST-BR | Rom | 18.1 | 2.3 | 5.1 | 11.5 | 18.8 | 90.1 |
| | Hum | 17.6 | 2.1 | 5.5 | 16.7 | 18.1 | 92.5 |

| Method | Style | BLEU-1 | BLEU-3 | METEOR | CIDEr | ppl ↓ | cls (%) |
|---|---|---|---|---|---|---|---|
| STrans | Rom | 12.2 | 1.3 | 4.7 | 8.0 | 54.3 | 35.6 |
| | Hum | 13.0 | 0.7 | 4.3 | 13.4 | 46.2 | 43.5 |
| ST-FKD | Rom | 18.9 | 2.5 | 5.5 | 16.7 | 59.3 | 30.4 |
| | Hum | 17.0 | 1.6 | 6.0 | 15.9 | 55.2 | 29.9 |
| ST-SFD | Rom | 10.0 | 0.8 | 3.2 | 5.7 | 33.2 | 76.9 |
| | Hum | 9.8 | 0.5 | 3.0 | 6.0 | 30.1 | 88.0 |
| ST-BR | Rom | 22.5 | 3.7 | 6.6 | 21.7 | 17.3 | 95.2 |
| | Hum | 20.4 | 4.3 | 7.5 | 18.2 | 16.1 | 96.1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, Z.; Liu, Q.; Liu, G. Better Understanding: Stylized Image Captioning with Style Attention and Adversarial Training. Symmetry 2020, 12, 1978. https://doi.org/10.3390/sym12121978
