Abstract

The use of auxiliary information in survey sampling to enhance the efficiency of estimators of population parameters is common practice. Generally, ratio and regression estimators are developed by using known information on conventional parameters of the auxiliary variable, such as the variance, coefficient of variation, coefficient of skewness, coefficient of kurtosis, or correlation between the study and auxiliary variables. The efficiency of these estimators is doubtful in the presence of outliers in the data or when the population is nonsymmetrical. This study presents improved variance estimators under simple random sampling without replacement, assuming that information on some nonconventional dispersion measures of the auxiliary variable is readily available. These measures include the inter-decile range, sample inter-quartile range, probability-weighted moment estimator, Gini mean difference estimator, Downton’s estimator, median absolute deviation from the median, and so forth. The algebraic expressions for the bias and mean square error of the proposed estimators are obtained, and efficiency conditions are derived for comparison with the existing estimators. Percentage relative efficiencies computed on real datasets are used to compare the proposed estimators numerically with the existing ones, and they indicate the superiority of the suggested estimators.

1. Introduction

Suppose a finite population $U = \{U_1, U_2, \ldots, U_N\}$ consists of $N$ different and identifiable units. Let $Y$ be a measurable variable of interest with values $Y_i$ being ascertained on $U_i$, $i = 1, 2, \ldots, N$, resulting in a set of observations $\{Y_1, Y_2, \ldots, Y_N\}$. The purpose of the measurement process is to estimate the population variance $S_y^2$ by drawing a random sample from the population. Suppose that information on an auxiliary variable $X$, which is correlated with the study variable $Y$, is also available for every unit of the population. The use of auxiliary information to improve the efficiency of the estimators of population parameters is very popular, especially when information on the auxiliary variable is readily available and is highly correlated with the variable of interest. For instance, see [1,2,3] and the references cited therein. The ratio, product, and regression methods of estimation are frequently used to enhance the efficiency of the estimators, depending upon the nature of the relationship between the study and the auxiliary variables. Along with the estimation of the population mean, the estimation of the population variance is of importance in many real-life situations. Generally, the population variance is estimated by incorporating conventional parameters of the auxiliary variable through a ratio or regression method of estimation to achieve greater efficiency. Mostly, the coefficient of skewness, coefficient of kurtosis, coefficient of variation, and coefficient of correlation are used in linear combination with some other conventional parameters of the auxiliary variable to estimate the variance. Readers can refer to [4,5,6,7,8,9,10,11,12,13,14,15] and the references therein. The auxiliary measures used in most of the existing ratio-type estimators of variance are not resistant to outliers or to nonsymmetrical populations. Therefore, there is a need to develop ratio- or regression-type estimators which are somewhat outlier resistant and more stable in the case of asymmetrical populations.

The present study focuses on the estimation of the population variance by incorporating information on nonconventional dispersion parameters (detailed in Section 3) of the auxiliary variable. These measures are resistant to outliers and are used in a linear combination with other conventional measures to improve the efficiency of the variance estimator under simple random sampling without replacement (SRSWOR) in the presence of outliers in the target population. The rest of the manuscript is structured as follows. The nomenclature used in the manuscript and the background of the existing estimators of population variance are described in Section 2, whereas Section 3 presents the proposed improved families of estimators of population variance. In Section 4 the performance of the proposed families of estimators is evaluated and compared with the existing estimators of population variance. Finally, some concluding remarks are given in Section 5.

2. Background of the Ratio-Type Estimators of Variance

This section deals with some of the existing estimators of population variance under simple random sampling (SRS), which utilize known information on conventional parameters of the auxiliary variable to enhance the efficiency of the variance estimators. Before going into the details of the existing estimators of population variance, we list the notation used in this manuscript.

$N$: Population size; $n$: Sample size

$f = n/N$: Sampling fraction

$Y$: Study variable; $X$: Auxiliary variable

$\bar{Y}, \bar{X}$: Population means; $\bar{y}, \bar{x}$: Sample means

$S_y, S_x$: Population standard deviations; $s_y, s_x$: Sample standard deviations

$C_y, C_x$: Population coefficients of variation; $\rho$: Population correlation coefficient

$\beta_{2(y)}$: Population coefficient of kurtosis of the study variable

$\beta_{2(x)}$: Population coefficient of kurtosis of the auxiliary variable

$\beta_{1(x)}$: Population coefficient of skewness of the auxiliary variable

$M_d$: Population median of the auxiliary variable

$Q_1$: Population lower quartile of the auxiliary variable

$Q_3$: Population upper quartile of the auxiliary variable

$Q_r = Q_3 - Q_1$: Population inter-quartile range of the auxiliary variable

$Q_a = (Q_3 + Q_1)/2$: Population inter-quartile average of the auxiliary variable

$Q_d = (Q_3 - Q_1)/2$: Population semi inter-quartile range of the auxiliary variable

$D_i$: $i$th population decile of the auxiliary variable

$B(\cdot)$: Bias of the estimator; $MSE(\cdot)$: Mean square error of the estimator

Traditional ratio estimator; Existing ratio estimator

The class of estimators introduced by [16]

Proposed class-I estimators; Proposed class-II estimators

The traditional ratio-type estimator of Isaki [4] utilizes information on the known variance $S_x^2$ of the auxiliary variable to estimate the population variance $S_y^2$. The estimator, along with its approximate bias and MSE, is given as
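For orientation, the Isaki ratio-type estimator and its first-order bias and MSE are commonly written as below. The symbol names ($\hat{S}_R^2$, $\gamma$, $\lambda_{22}$, $\mu_{rs}$) and the finite-population factor $\gamma = (1-f)/n$ are assumptions based on the usual conventions in this literature, not necessarily the authors' exact notation.

$$\hat{S}_R^2 = s_y^2\,\frac{S_x^2}{s_x^2}$$

$$B(\hat{S}_R^2) \approx \gamma S_y^2\left[(\beta_{2(x)} - 1) - (\lambda_{22} - 1)\right]$$

$$MSE(\hat{S}_R^2) \approx \gamma S_y^4\left[(\beta_{2(y)} - 1) + (\beta_{2(x)} - 1) - 2(\lambda_{22} - 1)\right]$$

Here $\beta_{2(y)} = \mu_{40}/\mu_{20}^2$, $\beta_{2(x)} = \mu_{04}/\mu_{02}^2$, $\lambda_{22} = \mu_{22}/(\mu_{20}\mu_{02})$, and $\mu_{rs} = \frac{1}{N}\sum_{i=1}^{N}(Y_i - \bar{Y})^r (X_i - \bar{X})^s$.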

Several modifications and improved estimators of variance which have been proposed in the literature make use of different conventional characteristics of the auxiliary variable. All these estimators exhibit superior efficiency as compared to the traditional ratio estimator under certain theoretical conditions. Some of the existing estimators, which utilize information on the variance of the auxiliary variable linearly integrated with other conventional parameters of the auxiliary variable, are summarized in Table 1 with their respective bias, MSEs, and constants.

Table 1. Some existing estimators of variance with their bias and mean squared error.

Recently Subramani and Kumarapandiyan [16] proposed a new class of modified ratio-type estimators of population variance by modifying the estimator proposed by Upadhyaya and Singh [17]. They showed that their new class of estimators outperforms the estimators suggested in [5,11,12,13,14,17,18] under certain conditions.

The general structure of the Subramani and Kumarapandiyan [16] class of estimators is given as

where , are different choices of parameters of the auxiliary variable which can be found in Appendix A.

All these estimators exhibit superior efficiency as compared to the traditional ratio-type estimators suggested by Isaki [4] under certain conditions, but most of these estimators are based on conventional parameters of the auxiliary variable. Although many other estimators of variance are available in the existing literature, they are more complex and involve laborious computational details. Therefore, the above detailed estimators were chosen for comparison purposes due to the simplicity of their structure and relatively lower computational complexities for practitioners.
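The traditional ratio-type estimator discussed in this section can be sketched in a few lines. The snippet below is only an illustration under stated assumptions: the function names `isaki_ratio_variance` and `empirical_mse` are hypothetical, the population variance uses the divisor $N-1$, and the Monte Carlo loop simply draws repeated SRSWOR samples to approximate the MSE of the plain sample variance and of the ratio-type estimator.

```python
import numpy as np


def isaki_ratio_variance(y_sample, x_sample, pop_var_x):
    """Ratio-type variance estimate s_y^2 * S_x^2 / s_x^2 (hypothetical helper)."""
    s_y2 = np.var(y_sample, ddof=1)  # sample variance of the study variable
    s_x2 = np.var(x_sample, ddof=1)  # sample variance of the auxiliary variable
    return s_y2 * pop_var_x / s_x2


def empirical_mse(y_pop, x_pop, n, reps=2000, seed=0):
    """Approximate the MSE of s_y^2 and of the ratio-type estimator by
    repeated simple random sampling without replacement (SRSWOR)."""
    rng = np.random.default_rng(seed)
    y_pop = np.asarray(y_pop, dtype=float)
    x_pop = np.asarray(x_pop, dtype=float)
    N = y_pop.size
    S_y2 = np.var(y_pop, ddof=1)  # target: population variance of Y
    S_x2 = np.var(x_pop, ddof=1)  # assumed known auxiliary information
    err_plain = np.empty(reps)
    err_ratio = np.empty(reps)
    for r in range(reps):
        idx = rng.choice(N, size=n, replace=False)  # one SRSWOR sample
        err_plain[r] = np.var(y_pop[idx], ddof=1) - S_y2
        err_ratio[r] = isaki_ratio_variance(y_pop[idx], x_pop[idx], S_x2) - S_y2
    return np.mean(err_plain**2), np.mean(err_ratio**2)
```

When the study variable is exactly proportional to the auxiliary variable, the ratio-type estimate reproduces $S_y^2$ in every sample, which is a convenient sanity check before applying the sketch to real data.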

3. Proposed Estimators of Variance

This section presents two different families of ratio estimators of population variance for the case where information on some nonconventional measures of dispersion of the auxiliary variable is readily available. The nonconventional measures used to develop the new ratio estimators of variance include the following.

- Inter-decile Range: The inter-decile range is the difference between the largest decile $D_9$ and the smallest decile $D_1$. Symbolically it is given as $IDR = D_9 - D_1$.

- Sample Inter-quartile Range: The sample inter-quartile range is based on the difference between the upper and lower quartiles, as discussed by Riaz [19] and Nazir et al. [20]. It is computed as $IQR = Q_3 - Q_1$.

- Probability Weighted Moment Estimator: The probability weighted moment estimator of dispersion suggested by Downton [21] is based on the ordered sample statistics and is defined as $S_{pw} = \frac{\sqrt{\pi}}{n^2}\sum_{i=1}^{n}(2i - n - 1)X_{(i)}$, where $X_{(i)}$ denotes the $i$th order sample statistic.

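- Downton’s Estimator: Another estimator of dispersion, similar to the probability weighted moment estimator, was proposed by Downton [21] and defined as $D = \frac{2\sqrt{\pi}}{n(n-1)}\sum_{i=1}^{n}\left(i - \frac{n+1}{2}\right)X_{(i)}$.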

- Gini Mean Difference Estimator: Gini [22] introduced a dispersion estimator which is also based on the sample order statistics. It is given as $G = \frac{4}{n-1}\sum_{i=1}^{n}\left(\frac{2i - n - 1}{2n}\right)X_{(i)}$.

- Median Absolute Deviation from Median: Hampel [23] suggested an estimator of dispersion based on absolute deviation from the median. It is defined as $MAD = 1.4826\,\mathrm{median}\,|X_i - M_d|$, where $M_d$ is the median.

- The Median of Pairwise Distances: Shamos [24] (p. 260) and Bickel and Lehmann [25] (p. 38) suggested an estimator of dispersion which is based on the median of pairwise distances as $\mathrm{median}\{|X_i - X_j|;\ i < j\}$. Rousseeuw and Croux [26] suggested pre-multiplying it by 1.0483 to achieve consistency under the Gaussian distribution, and the resultant estimator can be defined as $1.0483\,\mathrm{median}\{|X_i - X_j|;\ i < j\}$.

- Median Absolute Deviation from Mean: Wu et al. [27] defined another estimator which is also based on absolute deviation from the mean. It is given as

- Mean Absolute Deviation from Mean: Wu et al. [27] suggested an estimator of dispersion which is based on absolute deviation from the mean. It is given as

- Average Absolute Deviation from Median: Wu et al. [27] suggested an estimator of dispersion which is based on the average of absolute deviation from the median. It is given as

- The Ordered Statistic of Subranges: A robust estimator of dispersion based on the order of subranges was introduced by Croux and Rousseeuw [28], defined as, where the symbol [·] represents the integer part of a fraction.

- Trimmed Mean of Median of Pairwise Distances: Croux and Rousseeuw [28] defined another robust estimator of dispersion which is based on the trimmed mean of the median of pairwise distances. It is given as $T_n = 1.3800\,\frac{1}{h}\sum_{k=1}^{h}\{\mathrm{median}_{j \neq i}|X_i - X_j|\}_{(k)}$, where for each $i$ we compute the median of $|X_i - X_j|$, $j \neq i$, which yields $n$ values, and the average of the first $h$ order statistics gives the final estimate $T_n$, where $h = [n/2] + 1$, which is roughly half of the number of observations.

- The 0.25-quantile of Pairwise Distances: Another estimator incorporated in this study as a nonconventional dispersion measure is due to Rousseeuw and Croux [26] and is defined as $Q_n = d\,\{|X_i - X_j|;\ i < j\}_{(g)}$, where $d$ is the constant factor and its default value is 2.2219 to make it a consistent estimator under normality, while $h = [n/2] + 1$ and $g = \binom{h}{2} = h(h-1)/2$. Thus, the $g$th order statistic of the $\binom{n}{2}$ interpoint distances yields the desired estimator.

- The Median of the Median of Distances: This study also includes a robust estimator of dispersion defined in Rousseeuw and Croux [26]. It is given as $S_n = c\,\mathrm{median}_i\{\mathrm{median}_j\,|X_i - X_j|\}$, where $c$ is a constant used for consistency and under a normal population its value is usually set to 1.1926.
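Several of the measures listed above can be computed directly from a data vector. The following is a minimal sketch, assuming the commonly cited textbook forms and consistency constants (1.4826, 1.0483, 2.2219, 1.1926); all function names are hypothetical, ordinary medians are used instead of the low/high medians of Rousseeuw and Croux [26], and no finite-sample correction factors are applied.

```python
import numpy as np


def _ordered(x):
    """Return the order statistics, their ranks 1..n, and n."""
    xs = np.sort(np.asarray(x, dtype=float))
    n = xs.size
    return xs, np.arange(1, n + 1), n


def inter_decile_range(x):
    d1, d9 = np.percentile(x, [10, 90])
    return d9 - d1


def inter_quartile_range(x):
    q1, q3 = np.percentile(x, [25, 75])
    return q3 - q1


def probability_weighted_moment(x):
    xs, i, n = _ordered(x)
    return np.sqrt(np.pi) / n**2 * np.sum((2 * i - n - 1) * xs)


def downton(x):
    xs, i, n = _ordered(x)
    return 2 * np.sqrt(np.pi) / (n * (n - 1)) * np.sum((i - (n + 1) / 2) * xs)


def gini_mean_difference(x):
    xs, i, n = _ordered(x)
    return 4 / (n - 1) * np.sum((2 * i - n - 1) / (2 * n) * xs)


def mad_about_median(x, c=1.4826):
    x = np.asarray(x, dtype=float)
    return c * np.median(np.abs(x - np.median(x)))


def pairwise_distances(x):
    """All |x_i - x_j| with i < j."""
    x = np.asarray(x, dtype=float)
    i, j = np.triu_indices(x.size, k=1)
    return np.abs(x[i] - x[j])


def shamos(x, c=1.0483):
    return c * np.median(pairwise_distances(x))


def qn(x, d=2.2219):
    n = len(x)
    h = n // 2 + 1
    g = h * (h - 1) // 2  # g = C(h, 2)
    return d * np.sort(pairwise_distances(x))[g - 1]  # g-th order statistic


def sn(x, c=1.1926):
    x = np.asarray(x, dtype=float)
    dist = np.abs(x[:, None] - x[None, :])  # full n x n distance matrix
    return c * np.median(np.median(dist, axis=1))
```

Replacing the largest value of a small sample by an extreme one leaves `mad_about_median` unchanged while the standard deviation grows sharply, which illustrates the kind of outlier resistance that motivates these measures.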

The abovementioned nonconventional measures are used in conjunction with other conventional measures such as the coefficient of skewness, the coefficient of variation, and the coefficient of correlation in the context of ratio and regression methods of estimation under SRSWOR to propose new estimators of population variance. The detailed properties of the above nonconventional measures can be found in the relevant cited references.

3.1. The Suggested Estimators of Class-I

Motivated by Abid et al. [29], we propose a new class of ratio estimators of variance under SRS by using the power transformation and the Searls [30] technique as follows:

where is the Searls [30] constant, is the sample variance of the study variable, and and are the population and sample mean of the auxiliary variable, respectively. It is worth mentioning that , , and can either be known real numbers or known conventional parameters of the auxiliary variable , whereas are the known nonconventional dispersion parameters of the auxiliary variable .

To obtain the bias and MSE of , in terms of relative errors, we can express and such that , and , , , .
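The relative-error terms used in derivations of this kind are usually defined as follows. This is a sketch of the standard first-order setup, assuming the symbols $e_0$, $e_1$, $\gamma = (1-f)/n$, and the standardized mixed moment $\lambda_{22} = \mu_{22}/(\mu_{20}\mu_{02})$; the authors' exact notation may differ.

$$s_y^2 = S_y^2(1 + e_0), \qquad s_x^2 = S_x^2(1 + e_1)$$

$$E(e_0) = E(e_1) = 0, \quad E(e_0^2) \approx \gamma(\beta_{2(y)} - 1), \quad E(e_1^2) \approx \gamma(\beta_{2(x)} - 1), \quad E(e_0 e_1) \approx \gamma(\lambda_{22} - 1)$$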

After putting the values of and into Equation (4), we get

where and .

Assuming so that is expandable, expanding the right-hand side of Equation (5) and neglecting the terms of ’s having power greater than two, we have

Subtracting from both sides and simplifying, we get

By taking the expectation on both sides of Equation (6), we get the bias of up to the first degree of approximation as

The mean square error of is defined as

So, squaring both sides of Equation (6), keeping terms of ’s only up to the second order and applying expectation, the MSE of up to the first degree of approximation is represented as

Differentiating Equation (8) with respect to , equating it to zero, and after simplification, we get the optimum value of as

where and .

Substituting the above result into Equation (8) and simplifying, the minimum MSE of is
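The minimization step above follows a generic pattern that can be written out independently of the specific estimator. As a sketch, assume the first-order MSE is quadratic in the Searls-type constant, written here as $\kappa$, with $A$ and $B$ standing for the two population-dependent quantities (these three names are placeholders, not necessarily the authors' symbols):

$$MSE(\kappa) \approx S_y^4\left[1 + \kappa^2 A - 2\kappa B\right]$$

$$\frac{\partial\, MSE(\kappa)}{\partial \kappa} = S_y^4\left[2\kappa A - 2B\right] = 0 \;\;\Longrightarrow\;\; \kappa_{opt} = \frac{B}{A}$$

$$MSE_{\min} \approx S_y^4\left[1 - \frac{B^2}{A}\right]$$

Because $A > 0$, the stationary point is a minimum, and the reduction in MSE relative to $S_y^4$ is governed entirely by $B^2/A$.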

The exact values of and can easily be obtained by substituting the known results for , , and into their respective expressions, which are given as

The proposed class-I encompasses different kinds of existing estimators by specifying the values of the constants. For example, if we set and , then the estimator suggested by Upadhyaya and Singh [17] is a member of the proposed class of estimators. Similarly, if we set , , and then the Subramani and Kumarapandiyan [16] class of estimators becomes a member of the proposed class. Some new members of the proposed class-I estimators which are based on integration of conventional parameters and nonconventional dispersion parameters of the auxiliary variable are given in Table 2. It is worth mentioning that many other estimators can be generated from the proposed class of estimators, but to conserve space only a few are given.

Table 2. Some new members of proposed class-I estimators.

Efficiency Conditions for Class-I Estimators

- The estimators of class-I perform better than the traditional estimator of Isaki [4] for estimating the population variance if or

- The estimators defined in class-I will achieve greater efficiency as compared to the estimators defined in Section 2, i.e., if or

- The suggested class-I estimators will outperform the Upadhyaya and Singh [17] modified ratio-type estimator of population variance in terms of efficiency if or

- The estimators envisaged in the proposed class will exhibit superior performance as compared to the Subramani and Kumarapandiyan [16] modified class of estimators if or

3.2. The Suggested Estimators of Class-II

In this section, we present a new class of regression-type estimators of population variance. The proposed class of estimators is defined as

where is the Searls [30] constant, and can be real numbers or functions of known conventional parameters of the auxiliary variable , and are the known functions of the nonconventional dispersion parameters of the auxiliary variable , and is the regression coefficient between the study and auxiliary variables.

The minimized bias and minimized MSE of the class-II estimators are obtained by adapting the procedure given in Section 3.1:

where , , , , and .

The estimators envisaged in class-II incorporate many existing estimators of population variance. For instance, if we set and , then the estimator suggested by Upadhyaya and Singh [17] is a member of the class of estimators. Similarly, if we set , , and then the Subramani and Kumarapandiyan [16] class of estimators becomes a member of the proposed class of estimators. Moreover, if the regression coefficient , the class of estimators defined in Section 3.1 is also a member of the proposed class . Table 3 contains some new members of the proposed class-II to estimate the population variance based on auxiliary information.

Table 3. Some new members of proposed class-II estimators.

Efficiency Conditions for Class-II Estimators

- The estimators in class-II will perform better than the Isaki [4] traditional ratio estimator of population variance if or

- The estimators defined in class-II will be superior in terms of efficiency as compared to the estimators defined in Section 2, i.e., if or

- The suggested class-II estimators will outperform the Upadhyaya and Singh [17] modified ratio-type estimator of population variance in terms of efficiency if or

- The estimators envisaged in the proposed class-II will exhibit superior performance as compared to the Subramani and Kumarapandiyan [16] modified class of estimators if or

4. Empirical Study

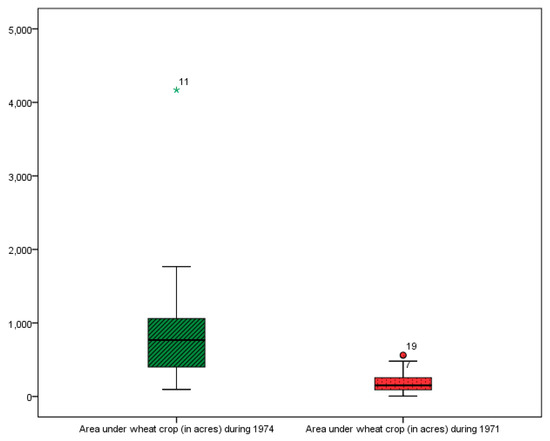

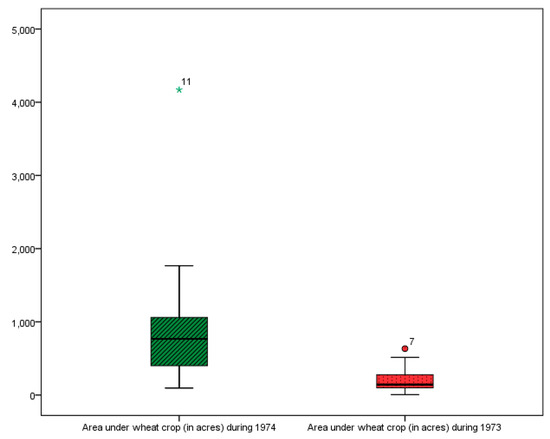

To assess the performance of the proposed classes of estimators in comparison to their competing estimators of variance, two real populations were taken from Singh and Chaudhary [31] (p. 177). These are the same datasets considered by Subramani and Kumarapandiyan [16]. In population-I, $Y$ denotes the area under wheat crop (in acres) during 1974 in 34 villages and $X$ denotes the area under wheat crop (in acres) during 1971 in the same villages; in population-II, $Y$ is the same as in population-I and $X$ is the area under wheat crop (in acres) during 1973. As mentioned earlier, the measures used in this study are nonconventional and somewhat robust dispersion measures, which perform more efficiently in the presence of outliers in the data than conventional measures. It is therefore expected that the proposed classes of estimators will exhibit superior efficiency as compared to the existing and the traditional ratio estimators. The data of both populations contain outliers, as can be seen from the boxplots shown in Figure 1 and Figure 2.

Figure 1. Boxplot of population-I.

Figure 2. Boxplot of population-II.
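The boxplot check for outliers can be reproduced numerically. The sketch below assumes the conventional Tukey rule, which flags points lying more than 1.5 inter-quartile ranges beyond the quartiles; the function name `boxplot_outliers` and the multiplier `k` are illustrative choices, and the original datasets are not reproduced here.

```python
import numpy as np


def boxplot_outliers(values, k=1.5):
    """Return the observations outside [Q1 - k*IQR, Q3 + k*IQR]."""
    v = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(v, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return v[(v < lower) | (v > upper)]
```

Applying this to each study and auxiliary variable gives the same points a standard boxplot would draw beyond its whiskers, provided the plotting software uses the same quartile definition.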

The comparison between the proposed classes of estimators and the existing estimators was made based on their percentage relative efficiencies (PREs) with respect to the traditional ratio estimator of variance suggested by Isaki [4]. The PRE of a proposed estimator relative to the traditional estimator is defined as

$$PRE = \frac{MSE(\text{traditional estimator})}{MSE(\text{proposed estimator})} \times 100,$$

where $PRE$ denotes the percentage relative efficiency of the proposed estimator in comparison with the traditional estimator, the numerator is the mean square error of the traditional estimator, and the denominator is the mean square error of the proposed classes of estimator. It is worth mentioning that, to keep the study to a manageable length, from the class of estimators proposed by Subramani and Kumarapandiyan [16] we took only its most efficient estimator, which is based on the for comparison purposes. The population characteristics are summarized in Table 4.
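The PRE computation is a single ratio, sketched below. The function name and the numbers in the usage note are hypothetical and are not taken from the tables of this study.

```python
def percentage_relative_efficiency(mse_traditional, mse_proposed):
    """PRE of a competing estimator relative to the traditional estimator.

    Values above 100 mean the competing estimator has the smaller MSE.
    """
    if mse_traditional <= 0 or mse_proposed <= 0:
        raise ValueError("mean square errors must be positive")
    return 100.0 * mse_traditional / mse_proposed
```

For example, `percentage_relative_efficiency(200.0, 100.0)` gives 200.0, meaning the competing estimator is twice as efficient; likewise, a PRE of 138 would correspond to an estimator that is 38% more efficient than the traditional one.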

Table 4. Population characteristics.

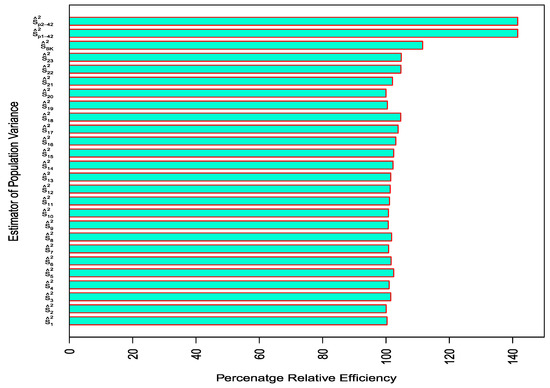

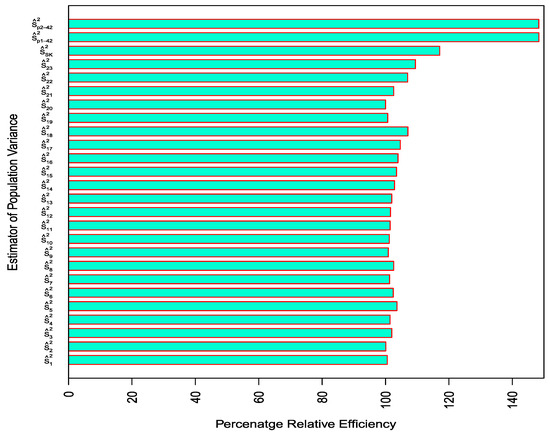

The PREs of the existing estimators relative to the traditional ratio estimator of Isaki [4] are shown in Table 5 and Table 6 for population-I and population-II, respectively, while the PREs of the proposed estimators relative to the same traditional estimator are given in Table 7 and Table 8, respectively. For ease of comparison, Figure 3 and Figure 4 display the PREs of the existing estimators alongside the best estimator from each of the proposed classes for population-I and population-II, respectively.

Table 5. PREs of the existing estimators as compared to the traditional ratio estimator for population-I.

Table 6. PREs of the existing estimators as compared to the traditional ratio estimator for population-II.

Table 7. PREs of the proposed classes of estimators as compared to the traditional ratio estimator for population-I.

Table 8. PREs of the proposed classes of estimators as compared to the traditional ratio estimator for population-II.

Figure 3. Comparison of PREs of existing and proposed estimators of variance for population-I.

Figure 4. Comparison of PREs of existing and proposed estimators of variance for population-II.

From the results reported in Table 5, Table 6, Table 7 and Table 8, the findings are summarized as follows:

- The estimators proposed in class-I and class-II have higher PREs than the existing estimators for both populations considered in this study, which reveals the superiority of the proposed classes of estimators in the presence of outliers in the data (cf. Table 5, Table 6, Table 7 and Table 8 and Figure 3 and Figure 4). For instance, the suggested estimators of class-I and class-II are at least 38% more efficient than the traditional ratio estimator for population-I. For population-II, the efficiency gain of the suggested estimators exceeds 44%. By contrast, the existing estimators are at most 11% and 17% more efficient than the traditional ratio estimator for population-I and population-II, respectively.

- The estimator which is based on inter-decile range and the correlation coefficient between the study and auxiliary variables turned out to be the most efficient estimator.

- It was also observed that the performance of existing estimators in comparison with the traditional ratio estimators is not much superior in the presence of outliers in the data (cf. Table 5 and Table 6), whereas the suggested estimators perform quite well as compared to the existing and the traditional ratio estimators (cf. Table 7 and Table 8). These findings highlight the significance of using nonconventional measures in estimating the population variance in the presence of outliers.

5. Conclusions

This study introduced two new classes of ratio- and regression-type estimators of population variance under simple random sampling without replacement by integrating information on nonconventional and somewhat robust dispersion measures of an auxiliary variable. The expressions for bias and mean square error were obtained, and the efficiency conditions under which the proposed estimators perform better than the existing estimators were also derived. In support of the theoretical findings, an empirical study was conducted based on two real populations which revealed that the suggested classes of estimators outperform the existing estimators considered in this study in terms of PREs in the presence of outliers. Based on the findings, it is strongly recommended that the proposed classes of estimators be used instead of the existing estimators to estimate the population variance in the case of outliers in the dataset. The present study can be further extended by estimating the population variance in the case of two auxiliary variables; moreover, under different sampling schemes, nonconventional measures can be employed to enhance the efficiency of the variance estimators.

Author Contributions

F.N. Conceptualization, Data curation, Investigation, Methodology, Writing—Original draft. T.N. Formal analysis, Software, Validation, Writing—Original draft. T.P. Project administration, Supervision, Visualization, Writing—Review and editing. M.A. Data curation, Methodology, Resources, Writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

There was no funding for this paper.

Acknowledgments

F.N. and T.N. express gratitude to the China Scholarship Council (CSC), China, for providing financial support and excellent research facilities to study in China.

Conflicts of Interest

There is no potential conflict of interest to be reported by the authors.

Appendix A

Following are the various choices of , , parameters of the auxiliary variable X, used by Subramani and Kumarapandiyan [16] to propose a generalized modified class of estimators of population variance.

,, , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , and .

References

- Cochran, W.G. Sampling Techniques, 3rd ed.; John Wiley and Sons: New York, NY, USA, 1977.

- Singh, H.P. Estimation of normal parent parameters with known coefficient of variation. Gujarat Stat. Rev. 1986, 13, 57–62.

- Wolter, K.M. Introduction to Variance Estimation, 2nd ed.; Springer: New York, NY, USA, 1985.

- Isaki, C.T. Variance estimation using auxiliary information. J. Am. Stat. Assoc. 1983, 78, 117–123.

- Kadilar, C.; Cingi, H. Ratio estimators for population variance in simple and stratified sampling. Appl. Math. Comput. 2006, 173, 1047–1058.

- Khan, M.; Shabbir, J. A ratio type estimator for the estimation of population variance using quartiles of an auxiliary variable. J. Stat. Appl. Probab. 2013, 2, 319–325.

- Maqbool, S.; Javaid, S. Variance estimation using linear combination of tri-mean and quartile average. Am. J. Biol. Environ. Stat. 2017, 3, 5–9.

- Muneer, S.; Khalil, A.; Shabbir, J.; Narjis, G. A new improved ratio-product type exponential estimator of finite population variance using auxiliary information. J. Stat. Comput. Simul. 2018, 88, 3179–3192.

- Naz, F.; Abid, M.; Nawaz, T.; Pang, T. Enhancing the efficiency of the ratio-type estimators of population variance with a blend of information on robust location measures. Sci. Iran. 2019.

- Solanki, R.S.; Singh, H.P.; Pal, S.K. Improved ratio-type estimators of finite population variance using quartiles. Hacet. J. Math. Stat. 2015, 44, 747–754.

- Subramani, J.; Kumarapandiyan, G. Variance estimation using median of the auxiliary variable. Int. J. Probab. Stat. 2012, 1, 36–40.

- Subramani, J.; Kumarapandiyan, G. Estimation of variance using deciles of an auxiliary variable. Available online: https://www.researchgate.net/publication/274008488_7_Subramani_J_and_Kumarapandiyan_G_2012c_Estimation_of_variance_using_deciles_of_an_auxiliary_variable_Proceedings_of_International_Conference_on_Frontiers_of_Statistics_and_Its_Applications_Bonfring_ (accessed on 10 November 2019).

- Subramani, J.; Kumarapandiyan, G. Variance estimation using quartiles and their functions of an auxiliary variable. Int. J. Stat. Appl. 2012, 2, 67–72.

- Upadhyaya, L.N.; Singh, H.P. An estimator for population variance that utilizes the kurtosis of an auxiliary variable in sample surveys. Vikram Math. J. 1999, 19, 14–17.

- Yaqub, M.; Shabbir, J. An improved class of estimators for finite population variance. Hacet. J. Math. Stat. 2016, 45, 1641–1660.

- Subramani, J.; Kumarapandiyan, G. A class of modified ratio estimators for estimation of population variance. J. Appl. Math. Stat. Inform. 2015, 11, 91–114.

- Subramani, J.; Kumarapandiyan, G. Estimation of variance using known coefficient of variation and median of an auxiliary variable. J. Mod. Appl. Stat. Methods 2013, 12, 58–64.

- Upadhyaya, L.N.; Singh, H.P. Estimation of population standard deviation using auxiliary information. Am. J. Math. Manag. Sci. 2001, 21, 345–358.

- Riaz, M. A dispersion control chart. Commun. Stat.—Simul. Comput. 2008, 37, 1239–1261.

- Nazir, H.Z.; Riaz, M.; Does, R.J.M.M. Robust CUSUM control charting for process dispersion. Qual. Reliab. Eng. Int. 2015, 31, 369–379.

- Downton, F. Linear estimates with polynomial coefficients. Biometrika 1966, 53, 129–141.

- Gini, C. Variabilità e mutabilità. In Memorie di Metodologica Statistica; Pizetti, E., Salvemini, T., Eds.; Libreria Eredi Virgilio Veschi: Rome, Italy, 1912.

- Hampel, F.R. The influence curve and its role in robust estimation. J. Am. Stat. Assoc. 1974, 69, 383–393.

- Shamos, M.I. Geometry and statistics: problems at the interface. In New Directions and Recent Results in Algorithms and Complexity; Traub, J.F., Ed.; Academic Press: New York, NY, USA, 1976; pp. 251–280.

- Bickel, P.J.; Lehmann, E.L. Descriptive statistics for nonparametric models IV. spread. In Contributions to Statistics, Hajek Memorial Volume; Jureckova, J., Ed.; Academia: Prague, Czechia, 1979; pp. 33–40.

- Rousseeuw, P.J.; Croux, C. Alternatives to the median absolute deviation. J. Am. Stat. Assoc. 1993, 88, 1273–1283.

- Wu, C.; Zhao, Y.; Wang, Z. The median absolute deviations and their applications to Shewhart control charts. Commun. Stat.—Simul. Comput. 2002, 31, 425–442.

- Croux, C.; Rousseeuw, P.J. A class of high-breakdown scale estimators based on subranges. Commun. Stat.—Theory Methods 1992, 21, 1935–1951.

- Abid, M.; Abbas, N.; Nazir, H.Z.; Lin, Z. Enhancing the mean ratio estimators for estimating population mean using non-conventional location parameters. Rev. Colomb. Estadística 2016, 39, 63–79.

- Searls, D.T. Utilization of known coefficient of kurtosis in the estimation procedure of variance. J. Am. Stat. Assoc. 1964, 59, 1225–1226.

- Singh, D.; Chaudhary, F.S. Theory and Analysis of Sample Survey Designs; New Age International Publisher: New Delhi, India, 1986.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).