Abstract

This paper proposes an improved bat algorithm based on Lévy flights and adjustment factors (LAFBA). A dynamically decreasing inertia weight is added to the velocity update, which effectively balances the global and local search of the algorithm; the Lévy flight search strategy is added to the position update, so that the algorithm maintains good population diversity and its global search ability is improved; and a speed adjustment factor is added, which effectively improves the speed and accuracy of the algorithm. The proposed algorithm was then tested on 10 benchmark functions and 2 classical engineering design problems. The simulation results show that LAFBA has stronger optimization performance and higher optimization efficiency than the basic bat algorithm and other bio-inspired algorithms. Furthermore, the results on the real-world engineering problems demonstrate the superiority of LAFBA in solving challenging problems with constrained and unknown search spaces.

1. Introduction

Many problems in management can be treated as global optimization problems, and the need to solve large-scale optimization problems efficiently has prompted the development of bio-inspired intelligent optimization algorithms. For example, Holland proposed the genetic algorithm (GA) based on the idea of evolution [1]; Kennedy and Eberhart proposed particle swarm optimization (PSO) [2,3], inspired by swarm foraging behavior in nature; and Dorigo et al., inspired by ant foraging behavior, proposed ant colony optimization (ACO) [4]. In recent years, a variety of novel swarm intelligence optimization algorithms have been developed, such as the bee colony algorithm [5], krill herd algorithm [6], cuckoo search algorithm [7,8], and moth flame optimization algorithm [9]. Although meta-heuristic algorithms have simple calculation steps and are easy to understand and implement, they are also prone to falling into local extrema and to low solution accuracy. Sergeyev et al. compared several widely used metaheuristic global optimization methods with Lipschitz deterministic methods by using operational zones, and their simulation results show that, in some runs, the metaheuristic methods got stuck in local solutions [10]. Therefore, it is of great significance to develop swarm intelligence optimization algorithms with better performance, both to enrich this family of algorithms and to expand their fields of application.

The bat algorithm (BA) is a metaheuristic optimization algorithm proposed by Yang [11]. The algorithm uses the bat's echolocation capability to design an optimization strategy that iterates through frequency updates. The bat algorithm has few parameters to set, is easy to understand and implement, and converges quickly. However, it has difficulty balancing global and local search, easily falls into local optima, and its solution accuracy is not high. To overcome these shortcomings, many scholars have made improvements to the bat algorithm. For instance, Ramli et al., in order to improve the global search ability of the bat algorithm, put forward an enhanced bat algorithm (MBA) based on dimensional and inertia weight factors to enhance convergence [12]. Banati and Chaudhary proposed a multimodal bat algorithm (MMBAIS) with an improved search mechanism, which effectively alleviates premature convergence and improves the convergence speed of the algorithm in the later phase [13]. Al-Betar et al. studied alternative selection mechanisms in the bat algorithm for global optimization [14]. Li et al. added a mutation switch function to the standard bat algorithm and proposed a bat optimization algorithm (UGBA) that combines uniform variation and Gaussian variation [15]. Chakri et al. proposed a directional bat algorithm (dBA) that introduces directional echolocation into the standard bat algorithm to enhance its exploration and exploitation capabilities [16]. Al-Betar and Awadallah proposed an island bat algorithm (iBA) that applies the island model strategy to the bat algorithm to enhance population diversity and avoid premature convergence [17].

In addition, many scholars are committed to expanding the application fields of the bat algorithm. For example, Laudis et al. proposed a multi-objective bat algorithm (MOBA) to solve a combinatorial optimization problem in Very Large-Scale Integration (VLSI) design [18]. Tawhid and Dsouza proposed a hybrid binary bat enhanced particle swarm optimization algorithm (HBBEPSO) to solve feature selection problems [19]. Osaba et al. proposed an improved discrete bat algorithm (IBA) for solving symmetric and asymmetric traveling salesman problems [20]. Mohamed and Moftah proposed a novel multi-objective binary bat algorithm for simultaneous ranking and selection of keystroke dynamics features [21]. Hamidzadeh used the ergodicity of chaotic algorithms and the automatic switching between global and local search in the bat algorithm to construct a weighted SVDD method based on a chaotic bat algorithm (WSVDD–CBA) for effective data description [22]. Qi et al. proposed a discretized bat algorithm for solving vehicle routing problems with time windows [23]. Bekdaş et al. used the bat algorithm to tune mass dampers and proposed an effective method to solve the damper optimization problem [24]. Ameur and Sakly proposed a new field-programmable gate array (FPGA) hardware implementation of the bat algorithm [25]. Chaib et al. used the bat algorithm to optimally design and tune a novel fractional-order PID power system stabilizer [26]. Mohammad et al. used the bat algorithm to successfully solve the complex engineering problem of dam–reservoir operation [27]. In order to better solve the problem of mobile robot path planning, Liu et al. proposed a bat algorithm with reverse learning and a tangent random exploration mechanism [28].

In order to improve the performance of the bat algorithm, this paper proposes an improved bat algorithm based on Lévy flights and adjustment factors (LAFBA). The search mechanism of Lévy flight is introduced to update the position of the bat algorithm, which can effectively help the algorithm maintain the diversity of the population and improve the global search ability. In addition, the introduction of the dynamic decreasing inertia weight and speed adjustment factor enables the algorithm to balance global exploration and local exploitation, improve the accuracy of the algorithm, and accelerate the later convergence speed.

The efficiency of the LAFBA is tested by solving 10 classical optimization functions and 2 structural optimization problems. The results obtained show that LAFBA is competitive in comparison with other state-of-the-art optimization methods.

2. Enhanced Bat Algorithm

2.1. Bat Algorithm

The bat algorithm is a swarm intelligent optimization algorithm that simulates the behavior of bats using the echolocation ability to prey. It realizes velocity and position update through the change of frequency f. The implementation of the algorithm is based on the following three idealized rules [11]:

Rule 1: Bats use echolocation to sense distance and can distinguish between prey and obstacles.

Rule 2: Bats fly randomly with velocity $v_i$ at position $x_i$ with a varying frequency (from a minimum frequency $f_{\min}$ to a maximum frequency $f_{\max}$) or a varying wavelength $\lambda$ and sound loudness $A_0$ to search for prey. The wavelength (or frequency) of the emitted pulse can be automatically adjusted according to the proximity of the target, and the rate of pulse emission, $r \in [0, 1]$, is also adjusted.

Rule 3: Assume that the loudness varies from the largest positive value $A_0$ to the minimum constant value $A_{\min}$.

Based on the above rules, the position vector of a bat represents a solution in the search space. Since the global optimal position vector in the search space is not known a priori, the algorithm randomly initializes the bats. The initialization equation is as follows:

$$x_{ij} = x_{\min,j} + \varphi\,(x_{\max,j} - x_{\min,j}) \tag{1}$$

for bat $i$, where $i = 1, 2, \ldots, n$; $j = 1, 2, \ldots, d$; $n$ represents the population size, and $d$ represents the dimension of the search space. $x_{\min,j}$ and $x_{\max,j}$ are the lower and upper bounds for dimension $j$, and $\varphi$ is a random number in [0, 1]. In the d-dimensional search space, each bat updates its frequency, velocity vector, and position vector according to the following equations:

$$f_i = f_{\min} + (f_{\max} - f_{\min})\,\beta \tag{2}$$

$$v_i^{t} = v_i^{t-1} + (x_i^{t-1} - x_*)\,f_i \tag{3}$$

$$x_i^{t} = x_i^{t-1} + v_i^{t} \tag{4}$$

where $\beta$ is a random number in [0, 1], $x_*$ represents the current global best solution, and the bat updates its velocity and position according to the change of frequency $f_i$.

When the bat performs a local search, the position update equation is as follows:

$$x_{new} = x_{old} + \varepsilon\,\bar{A}^{t} \tag{5}$$

where $\varepsilon$ is set as 0.001 in this paper, and $\bar{A}^{t}$ represents the average loudness of all bats in the current iteration.

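As the bat approaches the prey, the loudness continues to decrease, while the rate of pulse emission increases. The loudness $A$ is a vector of values for all bats that assists in updating the bat locations, and the rate of pulse emission $r$ is a vector for all bats that controls the diversification of the bat algorithm. The update equations are as follows:

$$A_i^{t+1} = \alpha A_i^{t} \tag{6}$$

$$r_i^{t+1} = r_i^{0}\,[1 - \exp(-\gamma t)] \tag{7}$$

Here, $\alpha$ is the loudness attenuation coefficient and $\gamma$ is the pulse emission rate enhancement coefficient. When $0 < \alpha < 1$ and $\gamma > 0$, $A_i^{t} \to 0$ and $r_i^{t} \to r_i^{0}$ as $t \to \infty$.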
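The steps of the standard bat algorithm described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the authors' MATLAB implementation: the frequency range `f_min`/`f_max`, the coefficients `alpha` and `gamma`, the Gaussian direction used in the local walk, the zero initial pulse rate, and the bound clipping are all choices made here for illustration.

```python
import math
import random


def sphere(x):
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


def bat_algorithm(obj, dim, lower, upper, n=20, n_gen=500,
                  f_min=0.0, f_max=2.0, alpha=0.9, gamma=0.9,
                  loud0=0.25, rate0=0.5, eps=0.001, seed=0):
    """Minimal standard bat algorithm (minimization). Parameter defaults
    other than n, n_gen, loud0, rate0 and eps are assumptions."""
    rng = random.Random(seed)
    # Random initialization inside the bounds (Equation (1)).
    x = [[lower + rng.random() * (upper - lower) for _ in range(dim)]
         for _ in range(n)]
    v = [[0.0] * dim for _ in range(n)]
    fit = [obj(xi) for xi in x]
    loud = [loud0] * n   # loudness A_i
    rate = [0.0] * n     # pulse rate r_i, assumed to start at 0 and grow toward rate0
    b = min(range(n), key=fit.__getitem__)
    best, best_fit = x[b][:], fit[b]

    for t in range(1, n_gen + 1):
        mean_loud = sum(loud) / n
        for i in range(n):
            # Frequency, velocity, and position updates (Equations (2)-(4)).
            freq = f_min + (f_max - f_min) * rng.random()
            v[i] = [v[i][j] + (x[i][j] - best[j]) * freq for j in range(dim)]
            cand = [x[i][j] + v[i][j] for j in range(dim)]
            if rng.random() > rate[i]:
                # Local walk around the best solution (Equation (5));
                # the Gaussian direction is an assumption of this sketch.
                cand = [best[j] + eps * mean_loud * rng.gauss(0.0, 1.0)
                        for j in range(dim)]
            cand = [min(max(c, lower), upper) for c in cand]  # clip to bounds
            cand_fit = obj(cand)
            if rng.random() < loud[i] and cand_fit < fit[i]:
                x[i], fit[i] = cand, cand_fit
                loud[i] *= alpha                                # Equation (6)
                rate[i] = rate0 * (1.0 - math.exp(-gamma * t))  # Equation (7)
            if cand_fit < best_fit:
                best, best_fit = cand[:], cand_fit
    return best, best_fit
```

Calling `bat_algorithm(sphere, dim=5, lower=-5.12, upper=5.12)` returns the best position found and its objective value; the best value never increases over the iterations because it is only replaced by a strictly better candidate.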

2.2. Dynamically Decreasing Inertia Weight

In the velocity update equation of the bat algorithm, the coefficient of the previous-generation velocity is the constant 1. A fixed coefficient is not conducive to exploring the global search space and reduces the flexibility of the individual bats, making it easy for the algorithm to fall into a local optimum. The particle swarm optimization algorithm balances global and local search by adjusting an inertia weight [29]. A larger inertia weight makes the individual's range of change larger, which is conducive to global exploration. A smaller inertia weight makes the individual's range of change smaller, which is advantageous for local search on the optimization function. Inspired by this, this paper introduces a dynamically decreasing inertia weight $w$ and changes the velocity update equation to

$$v_i^{t} = w\,v_i^{t-1} + (x_i^{t-1} - x_*)\,f_i \tag{8}$$

The inertia weight $w$ is computed from an arctangent function of the iteration count (Equation (9)), where $w_{\max}$ and $w_{\min}$ respectively represent the maximum and minimum weight, $t$ represents the current number of iterations, and N_gen represents the maximum number of iterations. In this paper, $w_{\max}$ takes a value of 0.9 and $w_{\min}$ takes a value of 0.42. The inverse tangent function is monotonically increasing [30], so $w$ is monotonically decreasing. The inertia is large in the early period and small in the late period, which balances the global and local search of the algorithm well. In this paper, the domain of the arctangent function is [0, 4]. Over this domain the arctangent function changes more and more slowly, making the decrease of $w$ rapid in the early stage but slow in the late stage. The algorithm thus moves from a fast global search to a slow local search, which can effectively improve its speed and accuracy.
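A small sketch may help make the schedule concrete. The exact expression used in the paper is not reproduced here, so the function below is an assumed form that merely matches the description: it uses the arctangent on the domain [0, 4], starts at 0.9, ends at 0.42, and falls quickly at first and slowly later. The normalization by `atan(span)` and the names `inertia_weight`, `update_velocity`, and `span` are hypothetical.

```python
import math


def inertia_weight(t, n_gen, w_max=0.9, w_min=0.42, span=4.0):
    """Arctangent-shaped decreasing inertia weight (assumed form).

    The argument of atan runs over [0, span] as t goes from 0 to n_gen.
    Dividing by atan(span) is an assumption made so that the weight
    ends exactly at w_min.
    """
    progress = math.atan(span * t / n_gen) / math.atan(span)  # 0 -> 1
    return w_max - (w_max - w_min) * progress


def update_velocity(v, x, best, freq, w):
    """Velocity update with inertia weight w replacing the fixed coefficient 1."""
    return [w * vj + (xj - bj) * freq for vj, xj, bj in zip(v, x, best)]
```

With `n_gen = 500`, the weight drops by far more over the first 100 iterations than over the last 100, which is the early-fast, late-slow behavior the text describes.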

2.3. Lévy Flights

In 1926, the French mathematician Paul Lévy proposed Lévy flights. The Lévy flight phenomenon is very common in nature. The foraging activities of creatures such as wasps, jackals, and monkeys, as well as human hunting behavior, are all consistent with the random motion model of Lévy flights [31]. For example, some herbivores move around randomly in a given area to find a source of grass, but if they cannot find one, they quickly move to another area and then resume the previous way of walking. This effectively avoids wasting time in a place with insufficient resources. As the iterations proceed, the individuals in the bat algorithm tend to cluster, so the population diversity is reduced, the global search ability is undermined, and the algorithm easily converges prematurely to a local optimum. In order to solve this problem, this paper proposes to add the Lévy flight search mechanism to the bat algorithm and to modify the bat position vector update equation as below:

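Here, $t$ is the current number of iterations, and $d$ is the dimension of the search space. The Lévy flight is calculated as follows [32]:

$$\mathrm{Levy}(x) = 0.01 \times \frac{r_1 \times \sigma}{|r_2|^{1/\beta}} \tag{11}$$

where $r_1$, $r_2$ are two random numbers in [0, 1], $\beta$ is a constant 1.5, and $\sigma$ is calculated as follows:

$$\sigma = \left(\frac{\Gamma(1+\beta)\,\sin\!\left(\frac{\pi\beta}{2}\right)}{\Gamma\!\left(\frac{1+\beta}{2}\right)\beta\,2^{(\beta-1)/2}}\right)^{1/\beta} \tag{12}$$

where $\Gamma(x) = (x-1)!$. Fifty step sizes have been drawn to form 50 consecutive steps of Lévy flights, as shown in Figure 1 [32].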

Figure 1.

A series of 50 consecutive steps of Lévy flights.

Figure 1 intuitively shows Lévy’s ability to suddenly move a long distance after several short distance movements. In this paper, the Lévy flight mechanism is introduced to the bat position update. On the one hand, it can effectively avoid the overreliance of the bat position change on the previous generation position information and ensure the diversity of the population. On the other hand, the random walk mode of Lévy flight that changes large steps suddenly after a series of small steps gives the bat individual the ability to jump suddenly, which helps the algorithm to jump out of the local optimum, avoid the premature convergence of the algorithm, and improve the global search ability.
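The Lévy step generation can be sketched as follows. This is a hedged illustration rather than the authors' code: it assumes the commonly used form in which the step is a small scale factor times a uniform random number times σ, divided by a second uniform random number raised to 1/β, with β = 1.5. The scale factor 0.01 and the function names `levy_sigma` and `levy_step` are assumptions.

```python
import math
import random


def levy_sigma(beta=1.5):
    """Scale term sigma of the Mantegna-style Levy step for exponent beta."""
    num = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    den = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (num / den) ** (1.0 / beta)


def levy_step(dim, beta=1.5, scale=0.01, rng=random):
    """Draw one heavy-tailed step per dimension (assumed form)."""
    sigma = levy_sigma(beta)
    steps = []
    for _ in range(dim):
        r1 = rng.random()        # uniform in [0, 1)
        r2 = 1.0 - rng.random()  # uniform in (0, 1], avoids division by zero
        steps.append(scale * r1 * sigma / (r2 ** (1.0 / beta)))
    return steps
```

Most draws are small, but when `r2` is close to zero the step becomes very large, which produces the occasional long jump seen after a run of short moves. Note that Mantegna's original algorithm uses normally distributed draws, which yields steps of both signs; the uniform draws used here follow the description in the text and give non-negative steps.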

2.4. Speed Adjustment Factor

In order to ensure the efficiency of the algorithm, this paper designs a speed adjustment factor based on the dimension of the optimization function to be solved and the number of iterations of the algorithm, and applies it to the bat position update. As the iterations progress, the speed adjustment factor changes from large to small, and so does the step size of the position movement. This satisfies the requirement for global search in the early iterations and local search in the later iterations, so that the bats can search for the optimum effectively in every generation. The position vector update equation in this article is as below:

Here, the tunable parameter of the factor can be any value in [0, 1]; it is set as 0.01 in this paper. d represents the dimension of the search space. For a given dimension, the speed adjustment factor gradually decreases as the number of iterations increases, so that the position update changes from large-scale movement in the early stage to small-scale movement in the late stage, which accelerates the convergence of the algorithm. When an algorithm solves a function, the search difficulty increases with the dimension, and problems of low solution accuracy and reduced solution speed may occur. As the dimension increases, the speed adjustment factor proposed in this paper is kept at a relatively low level, which can speed up the algorithm and improve the accuracy of the solution when solving high-dimensional functions.

2.5. The Pseudocode of the LAFBA

The dynamic declining inertia weight added in the velocity update can effectively balance the global and local search of the algorithm; the Lévy flight search mechanism introduced to the location update can ensure the diversity of the population and improve the global search ability of the algorithm, and the construction of the speed adjustment factor can effectively improve the solution accuracy and speed of the algorithm. The pseudocode of the LAFBA is shown as follows:

| 1. Define objective function $f(x)$, $x = (x_1, \ldots, x_d)^T$ |
| 2. Set the initial values of the population size $n$ and the remaining control parameters |
| 3. Initialize pulse rates $r_i$ and loudness $A_i$ |
| 4. Initialize the bat population $x_i$ (Equation (1)) |
| 5. Evaluate $f(x_i)$ and find $x_*$ |
| 6. while t ≤ N_gen |
| 7.   for $i$ = 1 to n |
| 8.     Adjust frequency (Equation (2)) |
| 9.     Update inertia weight (Equation (9)) and the Lévy flight step (Equation (11)) |
| 10.     Update the velocity (Equation (8)) and position vector (Equation (13)) of the bat |
| 11.     if (rand > $r_i$) |
| 12.       Select a solution among the best solutions |
| 13.       Generate a local solution around selected best (Equation (5)) |
| 14.     end if |
| 15.     Evaluate objective function |
| 16.     if (rand < $A_i$ & $f(x_{new}) < f(x_i)$) |
| 17.       $x_i = x_{new}$ |
| 18.       $f(x_i) = f(x_{new})$ |
| 19.       Increase $r_i$ (Equation (7)) |
| 20.       Reduce $A_i$ (Equation (6)) |
| 21.     end if |
| 22.     if ($f(x_{new}) < f(x_*)$) |
| 23.       Update the best solution |
| 24.     end if |
| 25.   end for |
| 26.   Rank the bats and find the current best $x_*$ |
| 27.   t = t + 1 |
| 28. end while |
| 29. Return $x_*$, postprocess results and visualization |

3. Numerical Simulation and Analysis

In order to test the performance of the improved algorithm proposed in this paper, ten standard optimization functions are selected [33], and the algorithm is compared with the standard bat algorithm (BA) [11], particle swarm optimization (PSO) algorithm [2], moth flame optimization (MFO) algorithm [9], sine cosine algorithm (SCA) [34], and butterfly optimization algorithm (BOA) [35].

3.1. Parameters Setting

We tried different population sizes from n = 10 to 100 and found that, for most problems, n = 20 is sufficient. Therefore, we use a fixed population size n = 20 for all simulations. In order to guarantee the comparability and fairness of the simulation experiments, the same settings are used for all six algorithms: the population size n = 20 and the number of iterations N_gen = 500. For BA and LAFBA, the sound loudness is 0.25 and the rate of pulse emission is 0.5. For PSO, we used the standard version with learning parameters c1 = c2 = 2. For BOA, the sensory modality c is 0.01, the power exponent a is increased from 0.1 to 0.3, and the switch probability p = 0.8.

The simulation environment is MATLAB 2014a running on Windows 10 Home (Chinese version), with 4.00 GB of RAM and an Intel(R) Core(TM) i5-6200U CPU @ 2.30 GHz 2.40 GHz.

3.2. Standard Optimization Functions

Optimization functions are as below:

(1) Griewank Function

The search domain is [−600, 600] and the theoretical optimal value is 0. This function is continuous, differentiable, non-separable, scalable, and multimodal, with many local optima. The higher the dimension, the greater the number of local optima. It is extremely difficult to optimize and is thus often used to test the exploration and exploitation capabilities of an algorithm.

(2) Quartic Function

The search domain is [−1.28, 1.28], and the theoretical optimal value is 0. This function is a continuous, differentiable, separable, scalable, high-dimensional unimodal function. Unimodal functions are often used to test the convergence speed of an algorithm.

(3) Ackley Function

The search domain is [−30, 30] and the theoretical optimal value is 0. This function is a continuous, differentiable, non-separable, scalable, complex nonlinear multimodal function formed by superimposing a moderately amplified cosine wave on an exponential function. The undulation of the function surface makes the search more complicated, and there are a large number of local optima.

(4) Rastrigin Function

The search domain is [−5.12, 5.12], and the theoretical optimal value is 0. This function is a high-dimensional multimodal function. There are about 10d local minima in the solution space. The function surface fluctuates sharply, making the global search rather difficult.

(5) Schaffer Function

The search domain is [−10, 10] and the theoretical optimal value is 0. This function is a continuous, differentiable, non-separable, scalable, typical multimodal function. It fluctuates strongly, and the global optimal value is difficult to find. The function graph has a "four-corner hat" shape; the global optimum lies in the center of the brim, and the region around it is very small relative to the search domain.

(6) Sphere Function

The search domain is [−5.12, 5.12], and the theoretical optimal value is 0. This function is a continuous, differentiable, separable, scalable, classic high-dimensional unimodal function.

(7) Axis Parallel Function

The search domain is [−5.12, 5.12], and the theoretical optimal value is 0. This function is a unimodal function.

(8) Zakharov Function

The search domain is [−10, 10], and the theoretical optimal value is 0. This function is a continuous, differentiable, non-separable, scalable, multimodal function.

(9) Schwefel 2.21 Function

The search domain is [−10, 10] and the theoretical optimal value is 0. This function is a continuous, non-differentiable, separable, scalable, unimodal function.

(10) Schwefel 1.2 Function

The search domain is [−5.12, 5.12], and the theoretical optimal value is 0. This function is a continuous, differentiable, non-separable, scalable, unimodal function.
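For reference, several of the benchmark functions listed above can be written compactly in Python. These are the widely used textbook definitions and are given only as a sketch; the exact variants used in the experiments (for example scaling constants or added noise) may differ, and the function names are chosen here for illustration.

```python
import math


def sphere(x):
    """Sum of squares; unimodal, minimum 0 at the origin."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Highly multimodal; minimum 0 at the origin."""
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)


def ackley(x):
    """Cosine wave superimposed on an exponential; minimum 0 at the origin."""
    d = len(x)
    s1 = sum(v * v for v in x) / d
    s2 = sum(math.cos(2.0 * math.pi * v) for v in x) / d
    return -20.0 * math.exp(-0.2 * math.sqrt(s1)) - math.exp(s2) + 20.0 + math.e


def griewank(x):
    """Quadratic bowl minus a product of cosines; minimum 0 at the origin."""
    s = sum(v * v for v in x) / 4000.0
    p = 1.0
    for i, v in enumerate(x, start=1):
        p *= math.cos(v / math.sqrt(i))
    return s - p + 1.0


def schwefel_2_21(x):
    """Largest absolute coordinate; unimodal and non-differentiable."""
    return max(abs(v) for v in x)


def schwefel_1_2(x):
    """Sum of squared prefix sums; unimodal and non-separable."""
    total, running = 0.0, 0.0
    for v in x:
        running += v
        total += running * running
    return total
```

Each function takes a list of coordinates of any length, so the same definitions can be evaluated at D = 10, 30, or 100.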

3.3. Simulation Result Comparison and Analysis

The six algorithms were each run 30 times independently on the above 10 optimization functions in dimensions 10, 30, and 100, and the function value obtained in each run was recorded. From the results in the different dimensions, four criteria were collected and analyzed: best, worst, average, and standard deviation (SD). The data are shown in Table 1, Table 2 and Table 3.
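The four criteria can be computed from the recorded values of the independent runs as in the short sketch below. It assumes minimization (so "best" is the smallest value) and uses the sample standard deviation, which is also the default of MATLAB's `std`; whether the paper used the sample or population form is not stated. The name `summarize` is hypothetical.

```python
import statistics


def summarize(values):
    """Best, worst, mean and sample SD of final objective values (minimization)."""
    return {
        "best": min(values),
        "worst": max(values),
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),  # sample SD (n - 1 in the denominator)
    }
```

For example, `summarize([1.0, 2.0, 3.0])` gives a best of 1.0, a worst of 3.0, a mean of 2.0, and an SD of 1.0.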

Table 1.

Comparison results of the improved bat algorithm based on Lévy flights and adjustment factors (LAFBA) and five other algorithms for benchmark functions with D = 10. BA, bat algorithm; PSO, particle swarm optimization; MFO, moth flame optimization; SCA, sine cosine algorithm; BOA, butterfly optimization algorithm.

Table 2.

Comparison results of LAFBA and other five algorithms for benchmark functions with D = 30.

Table 3.

Comparison results of LAFBA and other five algorithms for benchmark functions with D = 100.

A statistical test makes it possible to check that the results were not generated by chance [36]. The Wilcoxon rank-sum test [37,38] was conducted in this experiment; p_value < 0.05 and h = 1 indicate that the difference between the two sets of results is significant. The statistical comparison results between LAFBA and the other 5 algorithms are shown in Table 4.

Table 4.

Results of Wilcoxon rank-sum test for LAFBA and other algorithms on 10 test functions with D = 30.

With the increase of the solution dimension, the difficulty faced by the algorithm to achieve the best result increases, and the problems of low solution accuracy, slowing down of the solving speed, or even failure to achieve the best result may occur. Therefore, this paper sets the three dimensions of 10D, 30D, and 100D to observe the change in the performance of the six algorithms in different dimensions.

It should be noted that the best result obtained is highlighted in bold font. From the results presented in Table 1, Table 2 and Table 3, under the different dimensions, LAFBA obtains the best values on the 10 test functions in comparison with BA, PSO, MFO, and SCA. When d = 10, compared to BOA, LAFBA obtained better results on 9 benchmark functions, the exception being F3. When d = 30 and d = 100, BOA obtained a better mean value than LAFBA on F9. As the dimension increases, the difficulty of solving the function increases. The improved LAFBA proposed in this paper can still solve each optimization function effectively with the smallest standard deviation, indicating good stability of the algorithm; that is, its robustness is good.

As shown in Table 4, p_value < 0.05 and h = 1 indicate the rejection of the null hypothesis of equal medians at the default 5% significance level. This means that the superiority of LAFBA is statistically significant. Entries with p_value > 0.05 and h = 0 have been underlined, and LAFBA is significantly different from BOA with the exception of F3.
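The rank-sum comparison of two sets of 30 run results can be sketched in plain Python as below. This is an illustration under assumptions, not the routine used to produce Table 4: it uses the large-sample normal approximation with a tie correction and no continuity correction, so p-values may differ slightly from those of a statistics package. The function name `rank_sum_test` is hypothetical; `h` follows the convention in the text (1 means the null hypothesis is rejected).

```python
import math


def rank_sum_test(a, b, alpha=0.05):
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Returns (p_value, h), where h = 1 if p_value < alpha.
    """
    n1, n2 = len(a), len(b)
    pooled = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)
    values = [v for v, _ in pooled]
    ranks = [0.0] * len(values)
    tie_term = 0.0
    i = 0
    while i < len(values):
        j = i
        while j + 1 < len(values) and values[j + 1] == values[i]:
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        m = j - i + 1
        tie_term += m ** 3 - m
        i = j + 1
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)  # rank sum of sample a
    n = n1 + n2
    mean_w = n1 * (n + 1) / 2.0
    var_w = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w == 0.0:
        return 1.0, 0  # all values identical: no evidence of a difference
    z = (w - mean_w) / math.sqrt(var_w)
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return p_value, int(p_value < alpha)
```

Passing the 30 final values of LAFBA and of a competitor on one function gives one `(p_value, h)` pair, that is, one cell of a table such as Table 4.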

In summary, the comparison with the other algorithms shows the superiority of LAFBA in several benchmarks. Among the six algorithms, LAFBA had the best performance.

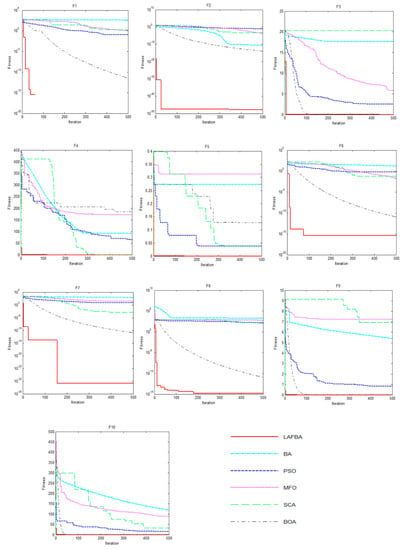

3.4. Convergence Curve Analysis

The convergence curve is an important indicator for the performance of the algorithm, through which we can see the convergence speed and the ability of the algorithm to jump out of the local optimum. For further illustration, the convergence curves of the LAFBA and other 5 algorithms with D = 30 on 10 benchmark functions are plotted in Figure 2.

Figure 2.

Convergence curve of F1–F10 function.

The different trends of the six curves in Figure 2 show the difference in the performance of the six algorithms. Functions 2, 6, 7, 9, and 10 are unimodal functions and are often used to compare the convergence speed and execution ability of algorithms. The convergence curves of these functions show that the LAFBA proposed in this paper obtains the optimal solutions with a faster convergence rate, and the quality of its solutions is much higher than that of BA, PSO, MFO, SCA, and BOA. Functions 1, 3, 4, 5, and 8 are multimodal functions with a large number of local optima, which are extremely difficult to optimize. They are commonly used to test the global optimization ability of an algorithm. In the convergence curves of these functions, the inflection points show that LAFBA successfully jumps out of local optima and continues to optimize, while the other algorithms converge to a local optimum too early, so their curves lie above that of LAFBA. In summary, LAFBA shows stronger optimization performance and higher optimization efficiency.

4. LAFBA for Classical Engineering Problems

This section further verifies the performance and efficiency of the LAFBA by solving two constrained real engineering design problems: tension/compression spring design, and welded beam design. These problems were widely discussed in the literature and have been solved to better clarify the effectiveness of the algorithms. In the LAFBA, the population size n = 20, and the number of iterations N_gen = 500.

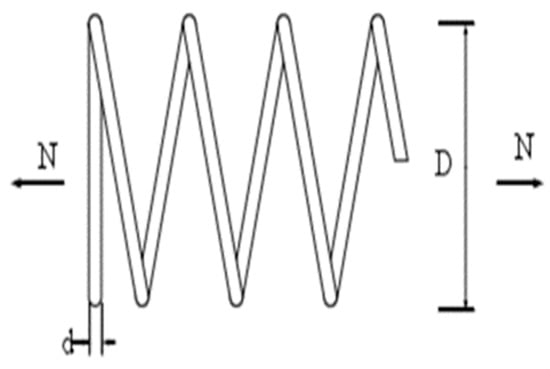

4.1. Tension/Compression Spring Design

The objective of this test problem is to minimize the weight of the tension/compression spring. Figure 3 shows the spring and its parameters [39,40]. The optimum design must satisfy constraints on shear stress, surge frequency, and deflection. This problem contains three design variables: the mean coil diameter (D), number of active coils (N), and wire diameter (d). The mathematical expression of the tension/compression spring design problem is as follows:

Figure 3.

Tension/compression spring design problem.

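| Consider | $\vec{x} = [x_1\ x_2\ x_3] = [d\ D\ N]$ |

| Minimize | $f(\vec{x}) = (x_3 + 2)\,x_2\,x_1^2$ |

| Subject to | $g_1(\vec{x}) = 1 - \dfrac{x_2^3 x_3}{71785\,x_1^4} \le 0$, $g_2(\vec{x}) = \dfrac{4x_2^2 - x_1 x_2}{12566\,(x_2 x_1^3 - x_1^4)} + \dfrac{1}{5108\,x_1^2} - 1 \le 0$, $g_3(\vec{x}) = 1 - \dfrac{140.45\,x_1}{x_2^2 x_3} \le 0$, $g_4(\vec{x}) = \dfrac{x_1 + x_2}{1.5} - 1 \le 0$ |

| Variable range | $0.05 \le x_1 \le 2.00$, $0.25 \le x_2 \le 1.30$, $2.00 \le x_3 \le 15.0$. |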
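The tension/compression spring problem is commonly stated with the weight (N + 2)·D·d² as objective and four inequality constraints; the sketch below encodes that widely used formulation so that a candidate design can be evaluated. The constraint coefficients are those of the standard benchmark and are assumed to match the problem used here. The static quadratic penalty and the names `spring_weight`, `spring_constraints`, and `penalized_weight` are illustrative assumptions, since the constraint-handling method is not described in the text.

```python
def spring_weight(x):
    """Spring weight for x = (d, D, N): wire diameter, coil diameter, active coils."""
    d, D, N = x
    return (N + 2.0) * D * d ** 2


def spring_constraints(x):
    """Constraint values g_k(x); the design is feasible when every g_k <= 0."""
    d, D, N = x
    g1 = 1.0 - (D ** 3 * N) / (71785.0 * d ** 4)               # deflection
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)                     # shear stress
    g3 = 1.0 - 140.45 * d / (D ** 2 * N)                       # surge frequency
    g4 = (d + D) / 1.5 - 1.0                                   # outer diameter
    return [g1, g2, g3, g4]


def penalized_weight(x, penalty=1e6):
    """Weight plus a static quadratic penalty for violated constraints (assumed scheme)."""
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(x))
    return spring_weight(x) + penalty * violation
```

An optimizer can minimize `penalized_weight` directly over the box 0.05 ≤ d ≤ 2.00, 0.25 ≤ D ≤ 1.30, 2.00 ≤ N ≤ 15.0. At a design close to the best values reported in the literature, (0.051690, 0.356750, 11.287126), the weight evaluates to about 0.012665, with the first two constraints nearly active.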

There are several solutions for this problem found in the literature. This test case was solved using either mathematical techniques (constraints correction at constant cost [41] and penalty functions [40]) or meta-heuristics, such as GSA [42], PSO [43], evolution strategy (ES) [44], GA [45], and improved harmony search (HS) [46]. The best results of LAFBA are compared with 10 other optimization algorithms that were previously reported, as shown in Table 5.

Table 5.

Comparison results for tension/compression spring design problem.

Table 5 shows that, compared with GSA, PSO, ES, GA, and WOA, LAFBA yielded better results for the tension/compression spring design problem. It can be seen that LAFBA outperforms all other algorithms except MFO, and the design found by LAFBA has the third-lowest cost.

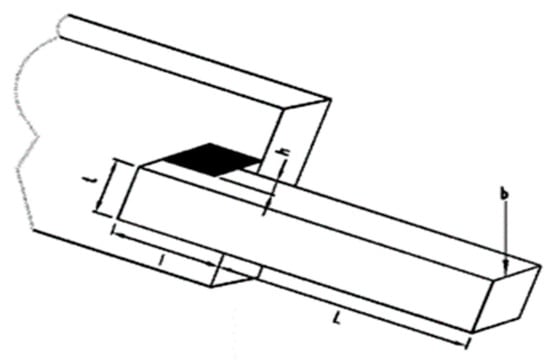

4.2. Welded Beam Design

The objective of this test problem is to minimize the fabrication cost of the welded beam, and Figure 4 shows the welded beam and the parameters involved in the design [45]. The optimum design must satisfy constraints on shear stress (τ) and bending stress in the beam (θ), buckling load ($P_c$), and end deflection of the beam (δ). This problem contains four design variables: thickness of weld (h), length of the clamped bar (l), height of the bar (t), and thickness of the bar (b). The mathematical expression of the welded beam design problem is as follows:

Figure 4.

Schematic view of welded beam design problem.

| Consider | |

| Minimize | |

| Subject to | |

| Variable range | |

| where | |

| E = 30 × 10^6 psi, G = 12 × 10^6 psi, | |

This problem was solved by GWO [49], GSA [42], Richardson’s random method, simplex method, Davidon–Fletcher–Powell, and Griffith and Stewart’s successive linear approximation [45]. The optimization results obtained by the proposed LAFBA for this problem were evaluated by comparing it with 15 other optimization algorithms that were previously reported, as shown in Table 6. Table 6 shows the best obtained results.

Table 6.

Comparison results for welded beam design problem.

Table 6 shows that the LAFBA algorithm is able to find a similar optimal design compared to those of GWO, MVO, and CPSO. This shows that this algorithm is also able to provide very competitive results in solving this problem.

5. Conclusions

In this study, an improved bat algorithm based on Lévy flights and adjustment factors (LAFBA) is proposed. Three modifications have been embedded into the BA to increase its global and local search abilities, and they have significantly enhanced its performance. In order to evaluate the effectiveness of the LAFBA, 10 benchmark functions and 2 real-world engineering problems are used. The results of the simulation experiments on the 10 benchmark functions and the Wilcoxon rank-sum test show that the proposed LAFBA is a great improvement in terms of exploration and exploitation abilities, solution accuracy, and convergence speed compared with the bat algorithm and four other bio-inspired algorithms. In addition, the results of the LAFBA in solving classical engineering design problems were compared with several state-of-the-art algorithms and found to be comparable. As the LAFBA shows stable performance, it can also be applied to other, more challenging real-world optimization problems.

Author Contributions

Methodology, J.L.; Resources, X.R.; Writing – original draft, X.L.; Writing – review & editing, Y.L.

Acknowledgments

This study is supported by the National Natural Science Foundation of China (No. 71601071), the Science & Technology Program of Henan Province, China (No. 182102310886 and 162102110109), and an MOE Youth Foundation Project of Humanities and Social Sciences (No. 15YJC630079). We are particularly grateful for the suggestions of the editor and the anonymous reviewers, which greatly improved the quality of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Holland, J.H. Erratum: Genetic Algorithms and the Optimal Allocation of Trials. Siam J. Comput. 1974, 3, 326. [Google Scholar] [CrossRef]

- Kennedy, J.; Eberhart, R. Particle swarm optimization. IEEE Int. Conf. Neural Netw. 1995, 2002, 1942–1948. [Google Scholar]

- Eberhart, R.; Kennedy, J. A new optimizer using particle swarm theory. In MHS’95. Proceedings of the Sixth International Symposium on Micro Machine and Human Science; IEEE Press: Piscataway, NJ, USA, 1995; pp. 39–43. [Google Scholar]

- Dorigo, M.; Colorni, V.A. Ant system: Optimization by a colony of cooperating agents. IEEE Trans. Syst. Man Cyber. Part B Cyber. 1996, 26, 29–41. [Google Scholar] [CrossRef] [PubMed]

- Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization. TR-06; Erciyes University: Kayseri, Turkey, 2005.

- Gandomi, A.H.; Alavi, A.H. Krill herd: A new bio-inspired optimization algorithm. Commun. Nonlinear Sci. Numer. Simul. 2012, 17, 4831–4845.

- Yang, X.S.; Deb, S. Cuckoo Search via Lévy flights. In Proceedings of the 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), Coimbatore, India, 9–11 December 2009; pp. 210–214.

- Yang, X.S.; Deb, S. Engineering Optimisation by Cuckoo Search. Int. J. Math. Modell. Numer. Optim. 2010, 1, 330–343.

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Sergeyev, Y.D.; Kvasov, D.E.; Mukhametzhanov, M.S. Operational zones for comparing metaheuristic and deterministic one-dimensional global optimization algorithms. Math. Comput. Simul. 2017, 141, 96–109.

- Yang, X.S. A New Metaheuristic Bat-Inspired Algorithm. Comput. Knowl. Technol. 2010, 284, 65–74.

- Ramli, M.R.; Abas, Z.A.; Desa, M.I.; Abidin, Z.Z.; Alazzam, M.B. Enhanced Convergence of Bat Algorithm Based on Dimensional and Inertia Weight Factor. J. King Saud Univ.-Comput. Inf. Sci. 2018.

- Banati, H.; Chaudhary, R. Multi-Modal Bat Algorithm with Improved Search (MMBAIS). J. Comput. Sci. 2017, 23, 130–144.

- Al-Betar, M.A.; Awadallah, M.A.; Faris, H.; Yang, X.S.; Khader, A.T.; Alomari, O.A. Bat-inspired Algorithms with Natural Selection mechanisms for Global optimization. Neurocomputing 2018, 273, 448–465.

- Li, Y.; Pei, Y.H.; Liu, J.S. Bat optimization algorithm combining uniform variation and Gaussian variation. Control Decis. 2017, 32, 1775–1781.

- Chakri, A.; Khelif, R.; Benouaret, M.; Yang, X.S. New directional bat algorithm for continuous optimization problems. Expert Syst. Appl. 2017, 69, 159–175.

- Al-Betar, M.A.; Awadallah, M.A. Island Bat Algorithm for Optimization. Expert Syst. Appl. 2018, 107, 126–145.

- Laudis, L.L.; Shyam, S.; Jemila, C.; Suresh, V. MOBA: Multi Objective Bat Algorithm for Combinatorial Optimization in VLSI. Proc. Comput. Sci. 2018, 125, 840–846.

- Tawhid, M.A.; Dsouza, K.B. Hybrid Binary Bat Enhanced Particle Swarm Optimization Algorithm for solving feature selection problems. Appl. Comput. Inf. 2018.

- Osaba, E.; Yang, X.S.; Diaz, F.; Lopez-Garcia, P.; Carballedo, R. An improved discrete bat algorithm for symmetric and asymmetric Traveling Salesman Problems. Eng. Appl. Artif. Intell. 2016, 48, 59–71.

- Mohamed, T.M.; Moftah, H.M. Simultaneous Ranking and Selection of Keystroke Dynamics Features Through A Novel Multi-Objective Binary Bat Algorithm. Future Comput. Inf. J. 2018, 3, 29–40.

- Hamidzadeh, J.; Sadeghi, R.; Namaei, N. Weighted Support Vector Data Description based on Chaotic Bat Algorithm. Appl. Soft Comput. 2017, 60, 540–551.

- Qi, Y.H.; Cai, Y.G.; Cai, H. Discrete Bat Algorithm for Vehicle Routing Problem with Time Window. Chin. J. Electron. 2018, 46, 672–679.

- Bekdaş, G.; Nigdeli, S.M.; Yang, X.S. A novel bat algorithm based optimum tuning of mass dampers for improving the seismic safety of structures. Eng. Struct. 2018, 159, 89–98.

- Ameur, M.S.B.; Sakly, A. FPGA based hardware implementation of Bat Algorithm. Appl. Soft Comput. 2017, 58, 378–387.

- Chaib, L.; Choucha, A.; Arif, S. Optimal design and tuning of novel fractional order PID power system stabilizer using a new metaheuristic Bat algorithm. Ain Shams Eng. J. 2017, 8, 113–125.

- Mohammad, E.; Sayed-Farhad, M.; Hojat, K. Bat algorithm for dam–reservoir operation. Environ. Earth Sci. 2018, 77, 510.

- Liu, J.S.; Ji, H.Y.; Li, Y. Robot Path Planning Based on Improved Bat Algorithm and Cubic Spline Interpolation. Acta Autom. Sin. 2019.

- Shi, Y.; Eberhart, R. Modified particle swarm optimizer. Proc. IEEE ICEC Conf. Anchorage 1999, 69–73.

- Du, Y.H. Advanced Mathematics; Beijing Jiaotong University Press: Beijing, China, 2014.

- Ball, F.; Bao, Y.N. Predict Society; Contemporary China Publishing House: Beijing, China, 2007.

- Yang, X.S.; Karamanoglu, M.; He, X. Flower pollination algorithm: A novel approach for multiobjective optimization. Eng. Optim. 2014, 46, 1222–1237.

- Jamil, M.; Yang, X.S. A Literature Survey of Benchmark Functions for Global Optimization Problems. Mathematics 2013, 4, 150–194.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Arora, S.; Singh, S. Butterfly optimization algorithm: A novel approach for global optimization. Soft Comput. 2019, 23, 715–734.

- Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evolut. Comput. 2011, 1, 3–18.

- Wilcoxon, F. Individual Comparisons by Ranking Methods. Biom. Bull. 1945, 1, 80–83.

- García, S.; Molina, D.; Lozano, M.; Herrera, F. A study on the use of non-parametric tests for analyzing the evolutionary algorithms’ behaviour: A case study on the CEC’2005 Special Session on Real Parameter Optimization. J. Heuristics 2009, 15, 617–644.

- Zhao, Z.Y. Introduction to optimum design. Probabilistic Eng. Mech. 1990, 5, 100.

- Belegundu, A.D.; Arora, J.S. A study of mathematical programming methods for structural optimization. Part I: Theory. Int. J. Numer. Methods Eng. 2010, 21, 1601–1623.

- Kaveh, A.; Talatahari, S. An improved ant colony optimization for constrained engineering design problems. Eng. Comput. 2010, 27, 155–182.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A Gravitational Search Algorithm. Inf. Sci. 2009, 179, 2232–2248.

- He, Q.; Wang, L. An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng. Appl. Artif. Intell. 2007, 20, 89–99.

- Mezura-Montes, E.; Coello, C.A.C. An empirical study about the usefulness of evolution strategies to solve constrained optimization problems. Int. J. Gen. Syst. 2008, 37, 443–473.

- Coello Coello, C.A. Use of a Self-Adaptive Penalty Approach for Engineering Optimization Problems. Comput. Ind. 2000, 41, 113–127.

- Mahdavi, M.; Fesanghary, M.; Damangir, E. An improved harmony search algorithm for solving optimization problems. Appl. Math. Comput. 2007, 188, 1567–1579.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Li, L.J.; Huang, Z.B.; Liu, F.; Wu, Q.H. A heuristic particle swarm optimizer for optimization of pin connected structures. Comput. Struct. 2007, 85, 340–349.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Krohling, R.A.; Coelho, L.D.S. Coevolutionary Particle Swarm Optimization Using Gaussian Distribution for Solving Constrained Optimization Problems. IEEE Trans. Cyber. 2007, 36, 1407–1416.

- Coello Coello, C.A. Constraint-handling using an evolutionary multiobjective optimization technique. Civ. Eng. Environ. Syst. 2000, 17, 319–346.

- Deb, K. Optimal design of a welded beam via genetic algorithms. AIAA J. 1991, 29, 2013–2015.

- Deb, K. An efficient constraint handling method for genetic algorithms. Comput. Methods Appl. Mech. Eng. 2000, 186, 311–338.

- Lee, K.S.; Geem, Z.W. A new meta-heuristic algorithm for continuous engineering optimization: Harmony search theory and practice. Comput. Methods Appl. Mech. Eng. 2005, 194, 3902–3933.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-Verse Optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.

- Askarzadeh, A. A novel metaheuristic method for solving constrained engineering optimization problems: Crow search algorithm. Comput. Struct. 2016, 169, 1–12.

- Ragsdell, K.M.; Phillips, D.T. Optimal Design of a Class of Welded Structures Using Geometric Programming. J. Eng. Ind. 1976, 98, 1021–1025.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).