A Framework for Automatic Building Detection from Low-Contrast Satellite Images

Abstract

1. Introduction

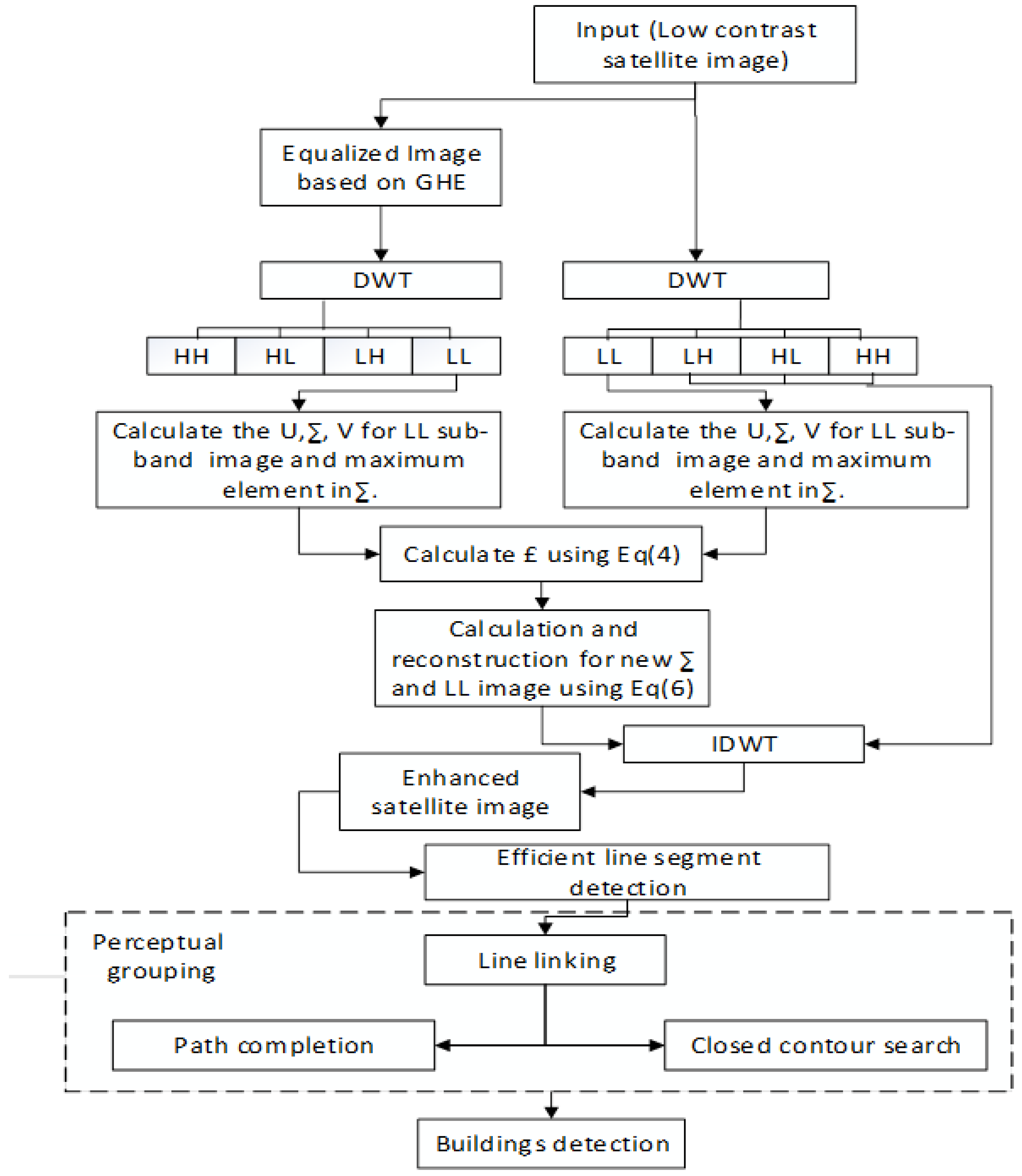

- Contrast enhancement of low-contrast satellite images using the discrete wavelet transform (DWT) combined with singular value decomposition (SVD);

- Building detection from the contrast-enhanced images;

- Comparison of the proposed technique with traditional building-detection approaches.

2. Theoretical Background

3. Proposed Methodology

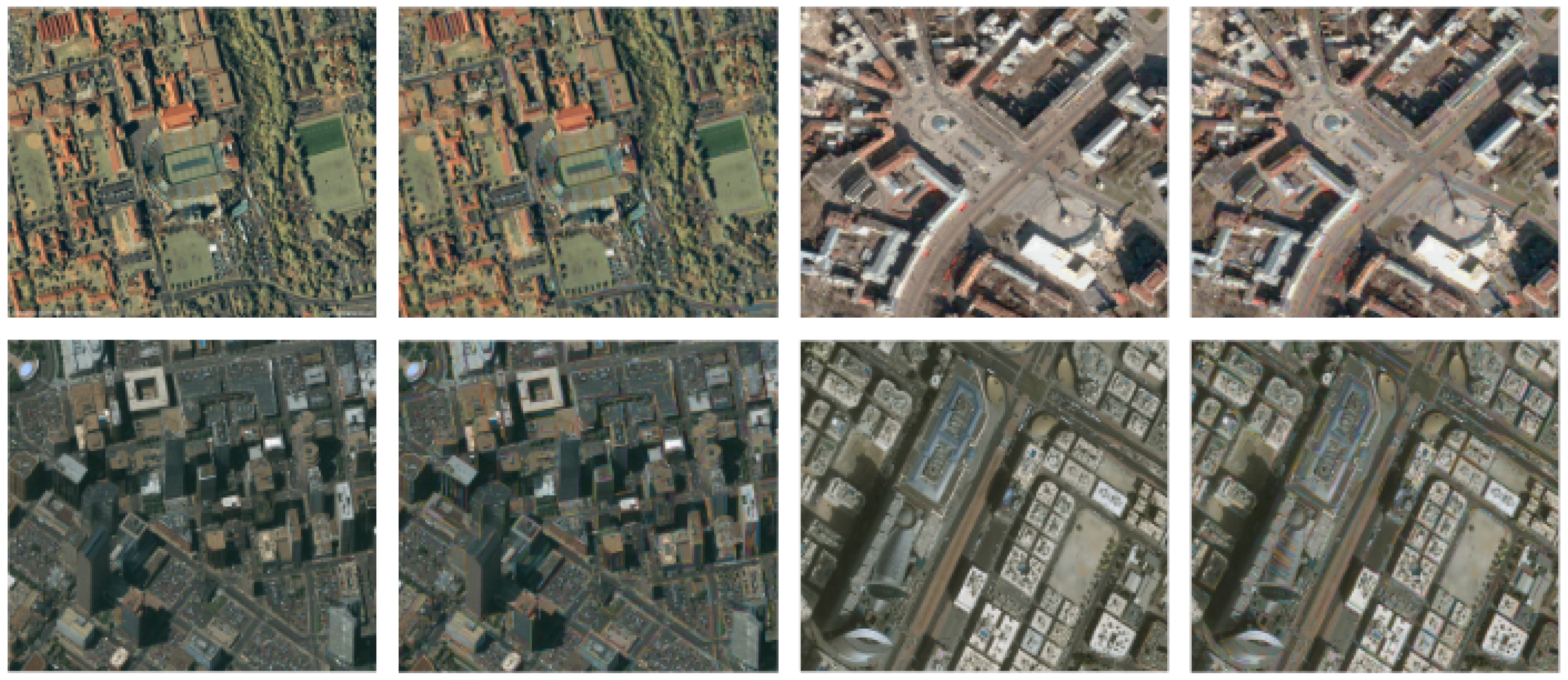

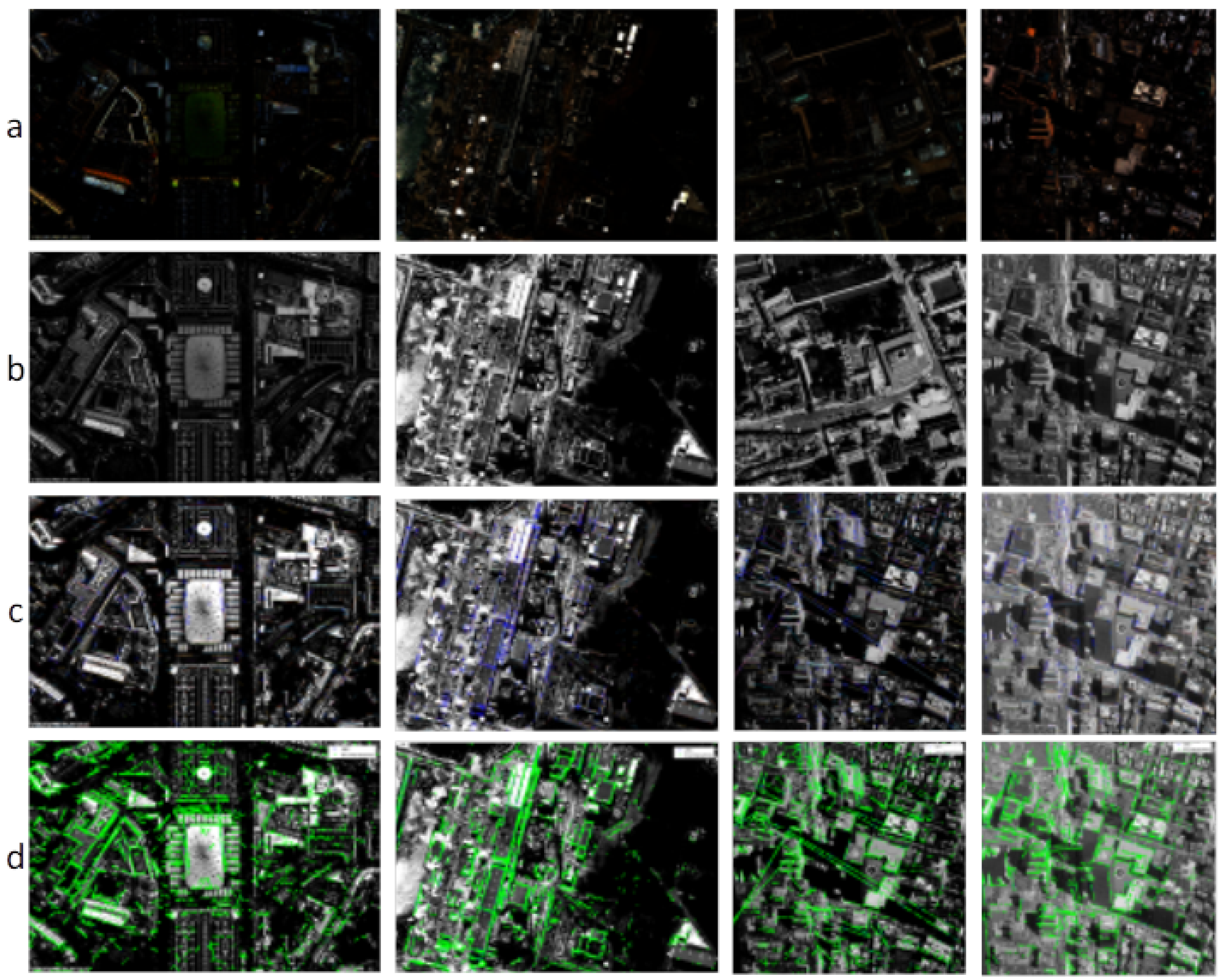

3.1. Contrast Enhancement

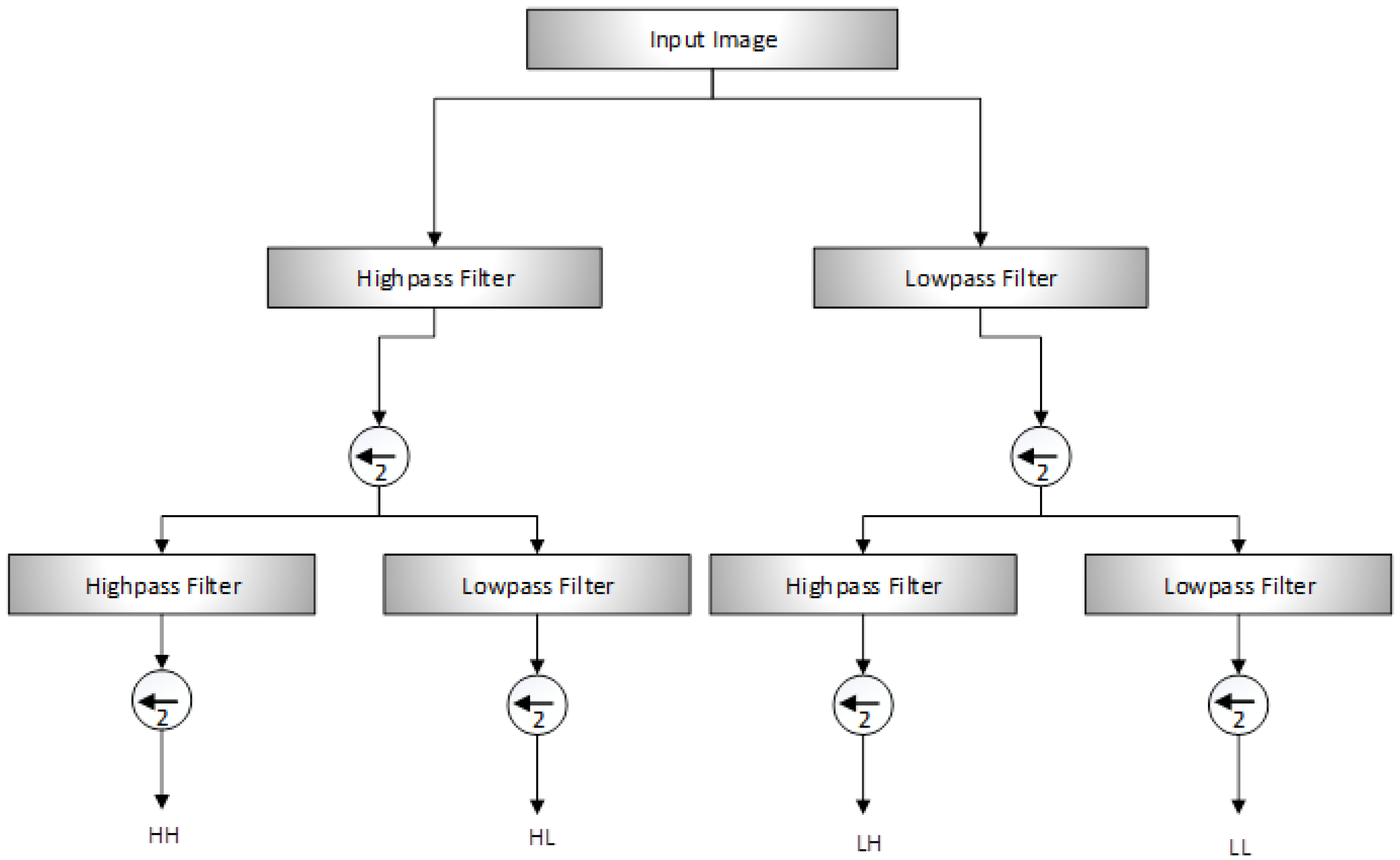

DWT–SVD
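The DWT–SVD contrast-enhancement step can be sketched as follows, assuming the general scheme of Demirel et al. [52]. The image is first globally histogram-equalized. A one-level DWT is then applied to both the original and the equalized image. The singular values of the original LL subband are rescaled by ξ = max(Σ of equalized LL) / max(Σ of original LL), and the image is rebuilt by the inverse DWT using the new LL and the original high-frequency subbands. The Haar wavelet, the NumPy-only implementation and the function names (`equalize_hist`, `haar_dwt2`, `haar_idwt2`, `dwt_svd_enhance`) are illustrative assumptions, and the framework may use a different wavelet or parameters.

```python
import numpy as np


def equalize_hist(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    denom = max(int(cdf[-1] - cdf_min), 1)  # avoid division by zero on flat images
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255.0), 0, 255).astype(np.uint8)
    return lut[img]


def haar_dwt2(x: np.ndarray):
    """Single-level orthonormal 2-D Haar DWT; returns LL and (LH, HL, HH)."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    ll = (a + b + c + d) / 2.0
    lh = (a - b + c - d) / 2.0
    hl = (a + b - c - d) / 2.0
    hh = (a - b - c + d) / 2.0
    return ll, (lh, hl, hh)


def haar_idwt2(ll: np.ndarray, details) -> np.ndarray:
    """Exact inverse of haar_dwt2."""
    lh, hl, hh = details
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    out[0::2, 1::2] = (ll - lh + hl - hh) / 2.0
    out[1::2, 0::2] = (ll + lh - hl - hh) / 2.0
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return out


def dwt_svd_enhance(img: np.ndarray) -> np.ndarray:
    """Hypothetical DWT-SVD contrast enhancement of a grayscale uint8 image."""
    img = np.asarray(img, dtype=np.uint8)
    if img.ndim != 2 or img.shape[0] % 2 or img.shape[1] % 2:
        raise ValueError("expects a 2-D grayscale image with even height and width")
    ll, details = haar_dwt2(img.astype(np.float64))
    ll_eq, _ = haar_dwt2(equalize_hist(img).astype(np.float64))
    u, s, vt = np.linalg.svd(ll, full_matrices=False)
    if s.max() == 0:  # all-black image: nothing to rescale
        return img.copy()
    s_eq = np.linalg.svd(ll_eq, compute_uv=False)
    xi = s_eq.max() / s.max()              # correction coefficient
    ll_new = (u * (xi * s)) @ vt           # LL rebuilt from rescaled singular values
    out = haar_idwt2(ll_new, details)      # original LH, HL, HH keep the edge detail
    return np.clip(np.round(out), 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
dark = rng.integers(40, 61, size=(64, 64), dtype=np.uint8)  # synthetic low-contrast tile
enhanced = dwt_svd_enhance(dark)
```

Because every singular value is multiplied by the same ξ, the rebuilt LL subband equals ξ·LL; the SVD form is kept only to mirror the method's description. The high-frequency subbands, which carry the edges later used for line detection, pass through unchanged.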

3.2. Building Extraction

3.2.1. Efficient Line Segment Detection

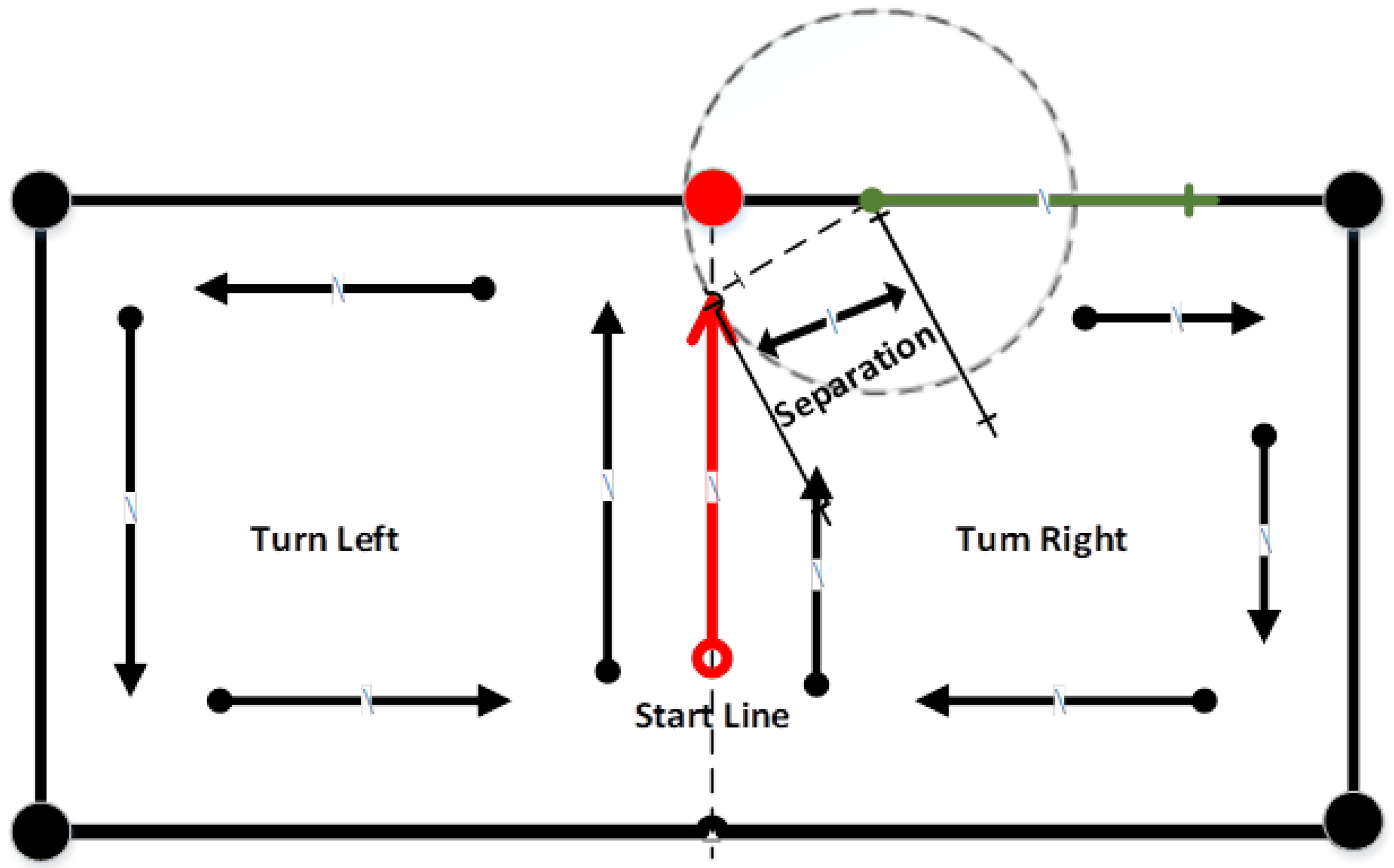

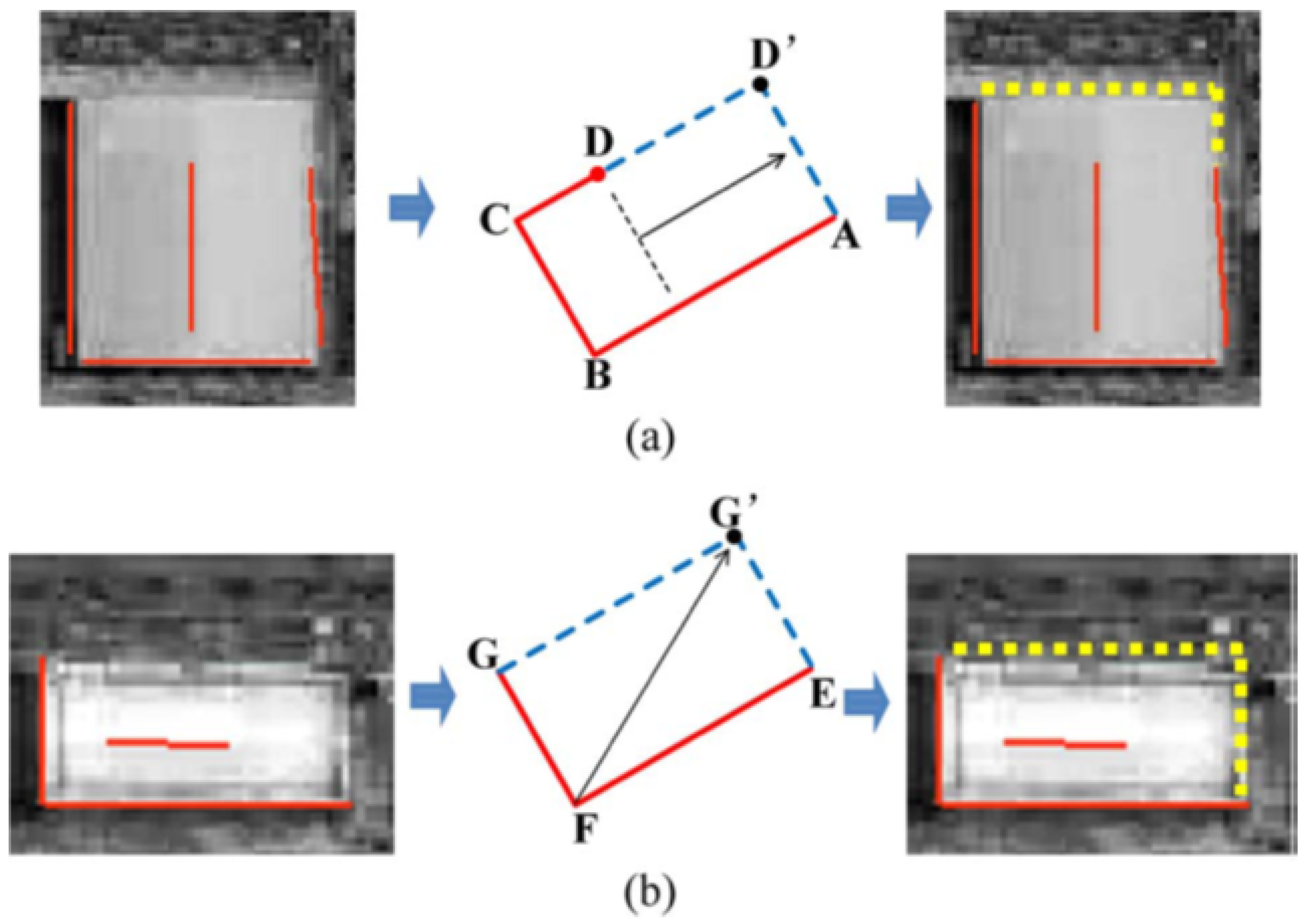

3.2.2. Perceptual Grouping

- The ratio of the overlap to the line length is less than 15% for lines I and II;

- The lateral distance between lines I and II is less than 5 pixels (3 m);

- The separation between lines I and III is less than 10 pixels (6 m);

- The angle between lines I and IV satisfies $|\mathrm{Angle}_1 - \mathrm{Angle}_2| \le \pi/10$.
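The four criteria can be read as a test for linking two line segments into one building edge. The sketch below is one possible reading under stated assumptions: all four conditions must hold for a pair to be linked, overlap is measured relative to the shorter segment, lateral distance is the offset of the second segment's midpoint from the first segment's line, and separation is the end-to-end gap along that line. These geometric definitions and the names `pair_measures` and `can_link` are hypothetical, since the exact roles of lines I to IV are defined in the accompanying figure. The pixel thresholds correspond to 0.6 m per pixel (5 px = 3 m, 10 px = 6 m).

```python
import math
from typing import Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]

# Thresholds from Section 3.2.2, in pixels (0.6 m per pixel) and radians
MAX_OVERLAP_RATIO = 0.15
MAX_LATERAL_PX = 5.0
MAX_SEPARATION_PX = 10.0
MAX_ANGLE_RAD = math.pi / 10


def _frame(seg: Segment):
    """Origin, unit direction and length of a segment."""
    (x1, y1), (x2, y2) = seg
    length = math.hypot(x2 - x1, y2 - y1)
    if length == 0:
        raise ValueError("zero-length segment")
    return (x1, y1), ((x2 - x1) / length, (y2 - y1) / length), length


def pair_measures(s: Segment, t: Segment):
    """Overlap ratio, lateral distance, separation and angle of t relative to s."""
    (ox, oy), (ux, uy), len_s = _frame(s)
    _, (vx, vy), len_t = _frame(t)
    # Coordinates of t's endpoints along s's direction and perpendicular to it
    along = [(px - ox) * ux + (py - oy) * uy for px, py in t]
    across = [(py - oy) * ux - (px - ox) * uy for px, py in t]
    lo, hi = min(along), max(along)
    overlap = max(0.0, min(len_s, hi) - max(0.0, lo))
    overlap_ratio = overlap / min(len_s, len_t)
    separation = max(0.0, lo - len_s, -hi)   # gap between the projected intervals
    lateral = abs(sum(across) / 2.0)         # offset of t's midpoint from s's line
    # Undirected angle between the segments, in [0, pi/2]
    dtheta = math.acos(min(1.0, abs(ux * vx + uy * vy)))
    return overlap_ratio, lateral, separation, dtheta


def can_link(s: Segment, t: Segment) -> bool:
    """True if t satisfies all four grouping criteria with respect to s."""
    overlap_ratio, lateral, separation, dtheta = pair_measures(s, t)
    return (overlap_ratio < MAX_OVERLAP_RATIO
            and lateral < MAX_LATERAL_PX
            and separation < MAX_SEPARATION_PX
            and dtheta <= MAX_ANGLE_RAD)


wall = ((0.0, 0.0), (50.0, 0.0))
print(can_link(wall, ((56.0, 2.0), (100.0, 3.0))))  # True: 6 px gap, 2.5 px offset
print(can_link(wall, ((70.0, 0.0), (120.0, 0.0))))  # False: 20 px gap
print(can_link(wall, ((10.0, 8.0), (40.0, 8.0))))   # False: 8 px offset, full overlap
```

Working in the first segment's own coordinate frame reduces every criterion to a one-dimensional comparison, and taking the absolute dot product makes the angle test independent of which end each segment starts from.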

4. Evaluation and Results

5. Discussion

6. Implications and Future Research Gaps

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Ansari, M.A.; Zai, S.; Moon, Y.S. Automatic segmentation of coronary arteries from computed tomography angiography data cloud using optimal thresholding. Opt. Eng. 2017, 56, 013106.

- Chen, F.; Ren, R.; Van de Voorde, T.; Xu, W.; Zhou, G.; Zhou, Y. Fast Automatic Airport Detection in Remote Sensing Images Using Convolutional Neural Networks. Remote Sens. 2018, 10, 443.

- Memon, S.; Bhatti, S.; Thebo, L.A.; Muhammad, M.; Talpur, B.; Memon, M.A. A Video based Vehicle Detection, Counting and Classification System. Image Graph. Signal Process. 2018, 9, 34–41.

- Yao, Y.; Shi, Y.; Weng, S.; Guan, B. Deep Learning for Detection of Object-Based Forgery in Advanced Video. Symmetry 2017, 10, 3.

- Aamir, M.; Pu, Y.-F.; Abro, W.A.; Naeem, H.; Rahman, Z. A Hybrid Approach for Object Proposal Generation; Springer: Cham, Switzerland, 2019; pp. 251–259.

- Ayoub, N.; Gao, Z.; Chen, B.; Jian, M. A Synthetic Fusion Rule for Salient Region Detection under the Framework of DS-Evidence Theory. Symmetry 2018, 10, 183.

- Rahman, Z.; Pu, Y.-F.; Aamir, M.; Ullah, F. A framework for fast automatic image cropping based on deep saliency map detection and Gaussian filter. Int. J. Comput. Appl. 2018.

- Naeem, H.; Bing, G.; Naeem, M.R.; Aamir, M.; Javed, M.S. A new approach for image detection based on refined Bag of Words algorithm. Optik 2017, 140, 823–832.

- Bhargava, A.K.; Vagen, T.; Gassner, A. Breaking Ground: Unearthing the Potential of High-resolution, Remote-sensing Soil Data in Understanding Agricultural Profits and Technology Use in Sub-Saharan Africa. World Dev. 2018, 105, 352–366.

- Näsi, R.; Honkavaara, E.; Blomqvist, M.; Lyytikäinen-Saarenmaa, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Holopainen, M. Remote sensing of bark beetle damage in urban forests at individual tree level using a novel hyperspectral camera from UAV and aircraft. Urban For. Urban Green. 2018, 30, 72–83.

- Michel, S.; Avouac, J.-P.; Ayoub, F.; Ewing, R.C.; Vriend, N.; Heggy, E. Comparing dune migration measured from remote sensing with sand flux prediction based on weather data and model, a test case in Qatar. Earth Planet. Sci. Lett. 2018, 497, 12–21.

- Klein, M.; Pitstick, K.; Morris, E.; Hansen, J.; Vazquez-Trejo, J. Applying video summarization to aerial surveillance. In Ground/Air Multisensor Interoperability, Integration, and Networking for Persistent ISR IX; Pham, T., Kolodny, M.A., Wiegmann, D.M., Eds.; SPIE Defense + Security: Orlando, FL, USA, 2018; Volume 10635, p. 12.

- He, Z.; He, H. Unsupervised Multi-Object Detection for Video Surveillance Using Memory-Based Recurrent Attention Networks. Symmetry 2018, 10, 375.

- Rocchini, D.; Luque, S.; Pettorelli, N.; Bastin, L.; Doktor, D.; Faedi, N.; Feilhauer, H.; Féret, J.-B.; Foody, G.M.; Gavish, Y.; et al. Measuring β-diversity by remote sensing: A challenge for biodiversity monitoring. Methods Ecol. Evol. 2018, 9, 1787–1798.

- Baughman, C.A.; Jones, B.M.; Bodony, K.L.; Mann, D.H.; Larsen, C.F.; Himelstoss, E.; Smith, J. Remotely Sensing the Morphometrics and Dynamics of a Cold Region Dune Field Using Historical Aerial Photography and Airborne LiDAR Data. Remote Sens. 2018, 10, 792.

- Shi, T.; Guo, L.; Chen, Y.; Wang, W.; Shi, Z.; Li, Q.; Wu, G. Proximal and remote sensing techniques for mapping of soil contamination with heavy metals. Appl. Spectrosc. Rev. 2018, 53, 783–805.

- Dahiya, S.; Garg, P.K.; Jat, M.K.; Garg, P.K. Building Extraction from High Resolution Satellite Images Using Matlab Software. In Proceedings of the International Multidisciplinary Scientific GeoConference & EXPO (SGEM), Albena, Bulgaria, 17–26 June 2014.

- Liu, W.; Prinet, V. Building Detection from High-resolution Satellite Image Using Probability Model. In Proceedings of the 2005 IEEE International Geoscience and Remote Sensing Symposium (IGARSS ’05), Seoul, Korea, 29 July 2005.

- Cui, S.Y.; Yan, Q.; Liu, Z.J.; Li, M. Building detection and recognition from high resolution remotely sensed imagery. In Proceedings of the XXIst ISPRS Congress, Beijing, China, 3–11 July 2008.

- Koc San, D.; Turker, M. Building extraction from high resolution satellite images using Hough transform. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38, 1063–1068.

- Zhang, A.; Liu, X.; Gros, A.; Tiecke, T. Building Detection from Satellite Images on a Global Scale. arXiv, 2017; arXiv:1707.08952.

- Theng, L.B. Automatic Building Extraction from Satellite Imagery. Eng. Lett. 2006, 13, 3.

- Xu, Y.; Wu, L.; Xie, Z.; Chen, Z. Building Extraction in Very High Resolution Remote Sensing Imagery Using Deep Learning and Guided Filters. Remote Sens. 2018, 10, 144.

- Peng, J.; Liu, Y.C. Model and context-driven building extraction in dense urban aerial images. Int. J. Remote Sens. 2005, 26, 1289–1307.

- Peng, J.; Zhang, D.; Liu, Y. An improved snake model for building detection from urban aerial images. Pattern Recognit. Lett. 2005, 26, 587–595.

- Ahmadi, S.; Zoej, M.V.; Ebadi, H.; Moghaddam, H.A.; Mohammadzadeh, A. Automatic urban building boundary extraction from high resolution aerial images using an innovative model of active contours. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, 150–157.

- Akcay, H.G.; Aksoy, S. Building detection using directional spatial constraints. In Proceedings of the 2010 IEEE International Geoscience and Remote Sensing Symposium, Honolulu, HI, USA, 25–30 July 2010; pp. 1932–1935.

- Aytekın, Ö.; Erener, A.; Ulusoy, İ.; Düzgün, Ş. Unsupervised building detection in complex urban environments from multispectral satellite imagery. Int. J. Remote Sens. 2012, 33, 2152–2177.

- Benedek, C.; Descombes, X.; Zerubia, J. Building Development Monitoring in Multitemporal Remotely Sensed Image Pairs with Stochastic Birth-Death Dynamics. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 33–50.

- Durieux, L.; Lagabrielle, E.; Nelson, A. A method for monitoring building construction in urban sprawl areas using object-based analysis of Spot 5 images and existing GIS data. ISPRS J. Photogramm. Remote Sens. 2008, 63, 399–408.

- Karantzalos, K.; Paragios, N. Recognition-Driven 2D Competing Priors Towards Automatic And Accurate Building Detection. IEEE Trans. Geosci. Remote Sens. 2009, 47, 133–144.

- Lefevre, S.; Weber, J.; Sheeren, D. Automatic Building Extraction in VHR Images Using Advanced Morphological Operators. In Proceedings of the 2007 Urban Remote Sensing Joint Event, Paris, France, 11–13 April 2007; pp. 1–5.

- Lhomme, S.; He, D.; Weber, C.; Morin, D. A new approach to building identification from very-high-spatial-resolution images. Int. J. Remote Sens. 2009, 30, 1341–1354.

- Noronha, S.; Nevatia, R. Detection and modeling of buildings from multiple aerial images. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 501–518.

- Izadi, M.; Saeedi, P. Three-Dimensional Polygonal Building Model Estimation From Single Satellite Images. IEEE Trans. Geosci. Remote Sens. 2012, 50, 2254–2272.

- Ok, A.O. Automated detection of buildings from single VHR multispectral images using shadow information and graph cuts. ISPRS J. Photogramm. Remote Sens. 2013, 86, 21–40.

- Cote, M.; Saeedi, P. Automatic Rooftop Extraction in Nadir Aerial Imagery of Suburban Regions Using Corners and Variational Level Set Evolution. IEEE Trans. Geosci. Remote Sens. 2013, 51, 313–328.

- Mayunga, S.D.; Coleman, D.J.; Zhang, Y. A semi-automated approach for extracting buildings from QuickBird imagery applied to informal settlement mapping. Int. J. Remote Sens. 2007, 28, 2343–2357.

- Wang, J.; Yang, X.; Qin, X.; Ye, X.; Qin, Q. An Efficient Approach for Automatic Rectangular Building Extraction From Very High Resolution Optical Satellite Imagery. IEEE Geosci. Remote Sens. Lett. 2015, 12, 487–491.

- Ok, A.O.; Senaras, C.; Yuksel, B. Automated Detection of Arbitrarily Shaped Buildings in Complex Environments From Monocular VHR Optical Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1701–1717.

- Senaras, C.; Ozay, M.; Yarman Vural, F.T. Building Detection With Decision Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1295–1304.

- Shufelt, J.A. Performance evaluation and analysis of monocular building extraction from aerial imagery. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 311–326.

- Sirmacek, B.; Unsalan, C. Urban-Area and Building Detection Using SIFT Keypoints and Graph Theory. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1156–1167.

- Sirmacek, B.; Unsalan, C. A Probabilistic Framework to Detect Buildings in Aerial and Satellite Images. IEEE Trans. Geosci. Remote Sens. 2011, 49, 211–221.

- Stankov, K.; He, D.-C. Detection of Buildings in Multispectral Very High Spatial Resolution Images Using the Percentage Occupancy Hit-or-Miss Transform. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4069–4080.

- Wegner, J.D.; Soergel, U.; Rosenhahn, B. Segment-based building detection with conditional random fields. In Proceedings of the 2011 Joint Urban Remote Sensing Event, Munich, Germany, 11–13 April 2011; pp. 205–208.

- Lee, J.; Pant, S.R.; Lee, H.-S. An Adaptive Histogram Equalization Based Local Technique for Contrast Preserving Image Enhancement. Int. J. Fuzzy Log. Intell. Syst. 2015, 15, 35–44.

- Demirel, H.; Anbarjafari, G.; Jahromi, M.N.S. Image equalization based on singular value decomposition. In Proceedings of the 2008 23rd International Symposium on Computer and Information Sciences, Istanbul, Turkey, 27–29 October 2008; pp. 1–5.

- Yu, N.; Qiu, T.; Bi, F.; Wang, A. Image Features Extraction and Fusion Based on Joint Sparse Representation. IEEE J. Sel. Top. Signal Process. 2011, 5, 1074–1082.

- Liu, C.-C.; Dai, D.-Q.; Yan, H. Local Discriminant Wavelet Packet Coordinates for Face Recognition. J. Mach. Learn. Res. 2007, 8, 1165–1195.

- Starck, J.L.; Candès, E.J.; Donoho, D.L. The curvelet transform for image denoising. IEEE Trans. Image Process. 2002, 11, 670–684.

- Demirel, H.; Ozcinar, C.; Anbarjafari, G. Satellite Image Contrast Enhancement Using Discrete Wavelet Transform and Singular Value Decomposition. IEEE Geosci. Remote Sens. Lett. 2010, 7, 333–337.

- Lamard, M.; Daccache, W.; Cazuguel, G.; Roux, C.; Cochener, B. Use of a JPEG-2000 Wavelet Compression Scheme for Content-Based Ophtalmologic Retinal Images Retrieval. In Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, Shanghai, China, 17–18 January 2005; pp. 4010–4013.

- Bhandari, A.K.; Soni, V.; Kumar, A.; Singh, G.K. Cuckoo search algorithm based satellite image contrast and brightness enhancement using DWT–SVD. ISA Trans. 2014, 53, 1286–1296.

- Das, S.; Mirnalinee, T.T.; Varghese, K. Use of Salient Features for the Design of a Multistage Framework to Extract Roads From High-Resolution Multispectral Satellite Images. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3906–3931.

- Abujarad, F.; Nadim, G.; Omar, A. Clutter reduction and detection of landmine objects in ground penetrating radar data using singular value decomposition (SVD). In Proceedings of the 3rd International Workshop on Advanced Ground Penetrating Radar (IWAGPR 2005), Delft, The Netherlands, 2–3 May 2005; pp. 37–42.

- Demirel, H.; Anbarjafari, G. Satellite Image Resolution Enhancement Using Complex Wavelet Transform. IEEE Geosci. Remote Sens. Lett. 2010, 7, 123–126.

- Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.

- Akinlar, C.; Topal, C. EDLines: A real-time line segment detector with a false detection control. Pattern Recognit. Lett. 2011, 32, 1633–1642.

- Burns, J.B.; Hanson, A.R.; Riseman, E.M. Extracting Straight Lines. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 425–455.

- Topal, C.; Akinlar, C.; Genc, Y. Edge Drawing: A Heuristic Approach to Robust Real-Time Edge Detection. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2424–2427.

- Cheng, P. QuickBird-A Milestone for High Resolution Mapping. Earth Obs. Mag. 2002, 11, 14–18.

- Gao, X.; Wang, M.; Yang, Y.; Li, G. Building Extraction From RGB VHR Images Using Shifted Shadow Algorithm. IEEE Access 2018, 6, 22034–22045.

- Chandra, N.; Ghosh, J.K. A Cognitive Viewpoint on Building Detection from Remotely Sensed Multispectral Images. IETE J. Res. 2018, 64, 165–175.

- Gavankar, N.L.; Ghosh, S.K. Automatic building footprint extraction from high-resolution satellite image using mathematical morphology. Eur. J. Remote Sens. 2018, 51, 182–193.

- Attarzadeh, R.; Momeni, M. Object-Based Rule Sets and Its Transferability for Building Extraction from High Resolution Satellite Imagery. J. Indian Soc. Remote Sens. 2018, 46, 169–178.

- Huang, X.; Yuan, W.; Li, J.; Zhang, L. A New Building Extraction Postprocessing Framework for High-Spatial-Resolution Remote-Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 654–668.

- Li, W.; He, C.; Fang, J.; Fu, H. Semantic Segmentation based Building Extraction Method using Multi-Source GIS Map Datasets and Satellite Imagery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 238–241.

- Li, S.; Tang, H.; Huang, X.; Mao, T.; Niu, X. Automated Detection of Buildings from Heterogeneous VHR Satellite Images for Rapid Response to Natural Disasters. Remote Sens. 2017, 9, 1177.

- Chen, R.; Li, X.; Li, J. Object-Based Features for House Detection from RGB High-Resolution Images. Remote Sens. 2018, 10, 451.

| Total No. of Buildings | No. of TP | No. of FP | No. of FN | RoD | FNR | Accuracy |
|---|---|---|---|---|---|---|
| 168 | 146 | 18 | 10 | 89.02% | 6.4% | 83.90% |
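The table does not state the formulas behind its scores. Assuming RoD = TP/(TP + FP), FNR = FN/(TP + FN) and accuracy = TP/(TP + FP + FN), the counts above reproduce the reported RoD (89.02%) and FNR (6.4%). They also give an accuracy of 83.91% and a recall of 93.59%, within 0.01 percentage points of the reported 83.90% and 93.58%. The function name `detection_metrics` is hypothetical.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Building-level detection scores under the assumed definitions."""
    if tp + fp == 0 or tp + fn == 0:
        raise ValueError("need at least one detection and one reference building")
    return {
        "RoD": tp / (tp + fp),             # share of detections that are real buildings
        "FNR": fn / (tp + fn),             # share of reference buildings that were missed
        "Recall": tp / (tp + fn),          # complement of FNR
        "Accuracy": tp / (tp + fp + fn),   # penalizes both false alarms and misses
    }


scores = detection_metrics(tp=146, fp=18, fn=10)
for name, value in scores.items():
    print(f"{name}: {100 * value:.2f}%")
# RoD: 89.02%
# FNR: 6.41%
# Recall: 93.59%
# Accuracy: 83.91%
```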

| Image ID | EDLines Time (s) | Number of Lines | Number of Lines after Linking | Total Time (s) |
|---|---|---|---|---|
| (a) | 0.06 | 332 | 288 | 12 |
| (b) | 0.07 | 682 | 598 | 11 |
| (c) | 0.02 | 444 | 412 | 9 |
| (d) | 0.06 | 1063 | 962 | 13 |

| Methods | Image Size | TNB | TBD | RoD | Time (s) |
|---|---|---|---|---|---|
| Noronha et al. [34] | 800 × 400 | 145 | 134 | 92.4% | 228 |
| Izadi et al. [35] | 400 × 400 | 70 | 62 | 94.3% | 1390 |
| Ok et al. [36] | 641 × 863 | 1145 | 995 | 81.4% | 188 |
| Cote et al. [37] | 400 × 400 | 233 | 197 | 90.2% | 121 |
| Mayunga et al. [38] | 600 × 600 | 45 | 34 | 89.9% | 60 |
| Wang et al. [39] | 512 × 512 | 165 | 144 | 87.3% | 16 |
| Authors | 512 × 512 | 168 | 146 | 89.02% | 13 |

| Methods | Datasets | Image Type | F-Score (%) | Precision (%) | Recall (%) | Overall Accuracy (%) | Time (s) |
|---|---|---|---|---|---|---|---|
| [63] | Dataset NS | RGB | 76.80 | 74.70 | 81.30 | 79.80 | 47 |
| [64] | CTA/MPP | RGB | 62.50 | 68.45 | 59.80 | 70.10 | Time ∝ IS |
| [65] | Dataset NS | Grayscale | 89.90 | 89.00 | 91.00 | 82.01 | HCC |
| [66] | QuickBird | RGB | 86.02 | 83.69 | 88.50 | 84.66 | HCC |
| [67] | QuickBird | RGB | 90.45 | 91.49 | 89.40 | 82.53 | 174.1 |
| [68] | OpenStreetMap | RGB | 74.90 | 87.92 | 65.22 | 59.85 | HCC |
| [69] | QuickBird | RGB | 80.65 | 75.62 | 86.39 | 82.34 | HCC |
| [70] | Google Earth | RGB | 83.80 | 85.20 | 82.50 | 89.80 | HCC |
| Authors | QuickBird | RGB & grayscale | 91.33 | 89.02 | 93.58 | 83.90 | 13 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Aamir, M.; Pu, Y.-F.; Rahman, Z.; Tahir, M.; Naeem, H.; Dai, Q. A Framework for Automatic Building Detection from Low-Contrast Satellite Images. Symmetry 2019, 11, 3. https://doi.org/10.3390/sym11010003
