1. Introduction

Plastic waste poses significant threats to marine ecosystems. Of particular concern is the widespread presence of microplastics, which disrupt ecological balance, threaten aquatic organisms, and may affect human health. The accumulation of plastic debris in oceans has been linked to ecosystem degradation and the loss of biodiversity and ecosystem services. Rivers are estimated to contribute up to 80% of plastics entering the oceans, making riverine systems a key pathway for marine pollution and a critical target for mitigation [1,2,3].

Urbanization and human activity in river basins have accelerated the transport of waste into rivers and, ultimately, the oceans [4]. Once in rivers, plastics undergo physical, chemical, and biological fragmentation, generating microplastics that are even more difficult to manage. Detecting and quantifying river plastic waste is therefore essential for understanding river conditions and their downstream impacts on marine ecosystems [5].

In Thailand, the problem is particularly severe. According to the World Bank [6], about 428,000 tons of plastic waste are mismanaged annually, much of which enters the ocean through rivers. In the Chao Phraya River, the estimated microplastic outflow from Samut Prakan Province reached about 183,000 particles per day in September 2021 and 160,000 particles per day in March 2022. Similarly, a study of the Nan River found an average of 23.67 microplastic particles per liter in surface water samples [6,7]. These findings underscore the severity of river plastic pollution in Thailand and the need for effective monitoring systems.

To address this challenge, methods that can identify and quantify different waste types are required. Accurate classification of plastics, metals, glass, and other anthropogenic debris supports targeted waste management strategies and enables automated monitoring systems that can reduce costs and labor [8,9,10].

Recent advances in computer vision have enabled automated, image-based monitoring systems to replace traditional manual observation. Deep learning techniques, particularly object detection and tracking, have significantly improved monitoring capabilities for dynamic environments. For instance, YOLO-based detection integrated with DeepSORT tracking has shown promising performance for continuous monitoring of floating waste under varying water and lighting conditions [11,12,13,14].

Numerous studies have investigated river waste modeling and monitoring to understand spatial distribution and accumulation dynamics. Lebreton et al. [1] identified major source rivers such as the Yangtze, Ganges, and Niger through a global emission model. Gasperi et al. [9] and González-Fernández et al. [10] applied image-based approaches along the Seine and Rhine, while Kataoka et al. [15] demonstrated image-based quantification under flood conditions in Japan. Recent studies in Malaysia and China achieved 85–90% accuracy using YOLO-based detection [16,17], and van Lieshout et al. [18] reported 68.7% precision in Jakarta’s rivers, with performance declining under local variability. A YOLO + DeepSORT system previously trained on 5711 flume images achieved over 81% mAP in laboratory settings but lower accuracy in natural environments due to reflections, lighting variation, and occlusion [19,20]. Overall, river waste monitoring has advanced globally, yet site-specific adaptation and environmental variability remain major challenges, emphasizing the need for transferable and efficient monitoring frameworks.

This study aims to address these limitations by developing an automated waste quantification framework for real river environments. The applicability of a deep learning-based system for floating waste in the urban canals of the Chao Phraya River is evaluated using a three-step preprocessing pipeline—skew correction, background removal, and object region extraction—to enhance detection accuracy and robustness. Unlike previous studies that focused on model retraining or architectural modification, this research adopts a data-centric approach emphasizing preprocessing to improve adaptability to diverse field conditions. The proposed framework enables a pretrained YOLO-based detection and tracking model to achieve high accuracy without additional retraining, representing a practical and scalable solution for river waste monitoring, particularly in data-limited or resource-constrained regions. The novelty of this study lies in demonstrating, through a real-world case study of the Chao Phraya urban canals, that reliable and transferable detection performance can be achieved under real-world conditions.

2. Materials and Methods

2.1. Implementation of the Proposed Method

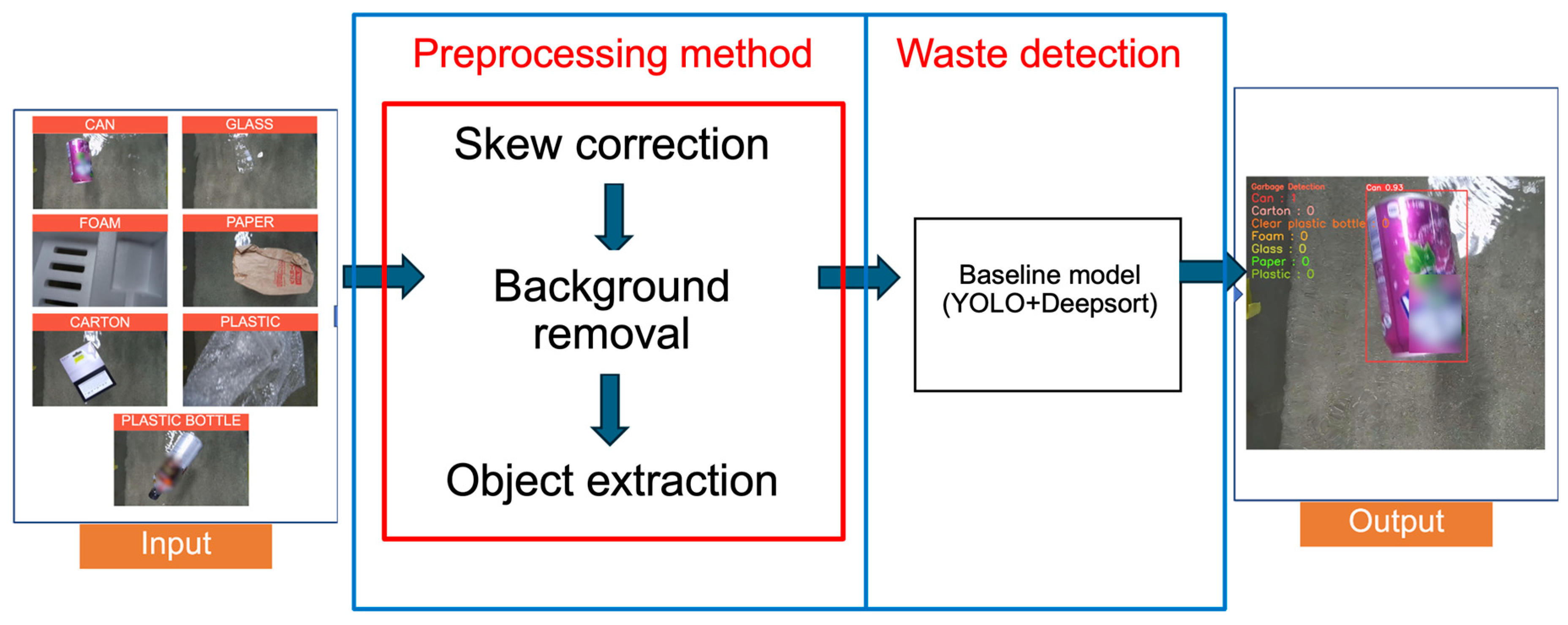

The proposed method consists of two main modules, a preprocessing module and an automated waste measurement module, as shown in Figure 1. The preprocessing module applies three techniques: skew correction, background removal, and object region extraction using SSD (Single Shot MultiBox Detector) implemented in Python 3.10 with TensorFlow 2.12 [21]. The automated measurement module integrates YOLOv5 for object detection [22] with DeepSORT for multi-object tracking, enabling efficient monitoring and quantification [23] of riverine waste in video frames.

2.2. Study Area and Sampling Sites

This study was conducted in an urban canal connected to the Chao Phraya River in Bangkok, Thailand. The Chao Phraya River is the principal watercourse of central Thailand, encompassing a drainage basin of approximately 20,523 km². It originates in Nakhon Sawan Province at the confluence of the Ping, Wang, Yom, and Nan Rivers, and flows about 379 km southward before discharging into the Gulf of Thailand. The river traverses the central plains, a region characterized by intensive agricultural, aquacultural, industrial, and residential development.

For this research, the target area was a canal section monitored by the Bangna CCTV station in Bangkok, as shown in Figure 2. The monitoring system, installed along the Bangna Canal (a tributary of the Chao Phraya River), continuously recorded video at a resolution of 640 × 480 pixels and a frame rate of 30 frames per second (fps). The camera operated 24 h per day throughout the observation period.

To reduce the effects of low illumination, glare, and image noise, only daytime footage (approximately 08:00–17:00 local time) was extracted and analyzed. A total of 30 consecutive days of video recordings were archived, from which 2000 JPEG images were systematically sampled at regular intervals for experimental evaluation. Each extracted frame was visually inspected to ensure scene consistency and to exclude frames with significant occlusion, rainfall, or poor visibility.
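As a rough check on the sampling density, the arithmetic below estimates the spacing between sampled frames. It is only a sketch: it assumes exactly nine hours of usable daytime footage per day and perfectly even spacing, neither of which is stated in the text, and the function name `sampling_interval` is illustrative.

```python
def sampling_interval(days, hours_per_day, fps, n_samples):
    """Return (total_frames, frames_between_samples, seconds_between_samples)."""
    total_frames = days * hours_per_day * 3600 * fps
    step_frames = total_frames // n_samples   # keep one frame every step_frames
    step_seconds = step_frames / fps
    return total_frames, step_frames, step_seconds


# Assumed values: 30 days, 9 h of daytime footage per day, 30 fps, 2000 samples.
total, step, seconds = sampling_interval(days=30, hours_per_day=9, fps=30, n_samples=2000)
print(total, step, seconds)
```

Under these assumptions, one frame is kept roughly every eight minutes of daytime footage.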

The fixed camera installation provided a stable viewpoint geometry, minimizing positional variation and enabling a direct comparison between laboratory-trained and real-canal imagery.

2.3. Preprocessing Section

The preprocessing module adjusts actual canal images so that they resemble the laboratory images used to train the automated waste measurement model, as shown in Figure 3. Various preprocessing methods are available; this study proposes three: skew correction, background removal, and object detection using SSD. Skew correction transforms the tilted images captured by CCTV into top-down images like those taken in a laboratory setting. Background removal addresses the difficulty of distinguishing objects from the background in real-environment images by eliminating the background (water surface), making objects easier to differentiate. In the SSD-based object detection step, the model uses an input size of 300 × 300 pixels, ensuring consistency with the configuration used during training.
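One common way to turn an oblique camera view into a top-down view is a projective (homography) transform estimated from four reference points. The sketch below illustrates that idea in plain Python; it is not the study's implementation, and the corner coordinates, the 300 × 300 target square, and all function names are assumptions chosen for illustration.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def homography(src, dst):
    """Estimate the 3x3 projective transform mapping four src points to four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]  # the last matrix entry is fixed to 1
    return [h[0:3], h[3:6], h[6:9]]


def warp_point(h, x, y):
    """Apply the homography to one pixel coordinate."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical corners of the water surface in a 640 x 480 frame (a trapezoid)
# and the square they should map to in the corrected image.
src = [(200, 150), (440, 150), (600, 470), (40, 470)]
dst = [(0, 0), (300, 0), (300, 300), (0, 300)]
H = homography(src, dst)
for point in src:
    print(point, "->", tuple(round(c, 3) for c in warp_point(H, *point)))
```

In practice the same matrix would be applied to every pixel, usually through an image-processing library, to resample the whole frame.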

2.4. Automated Waste Measurement

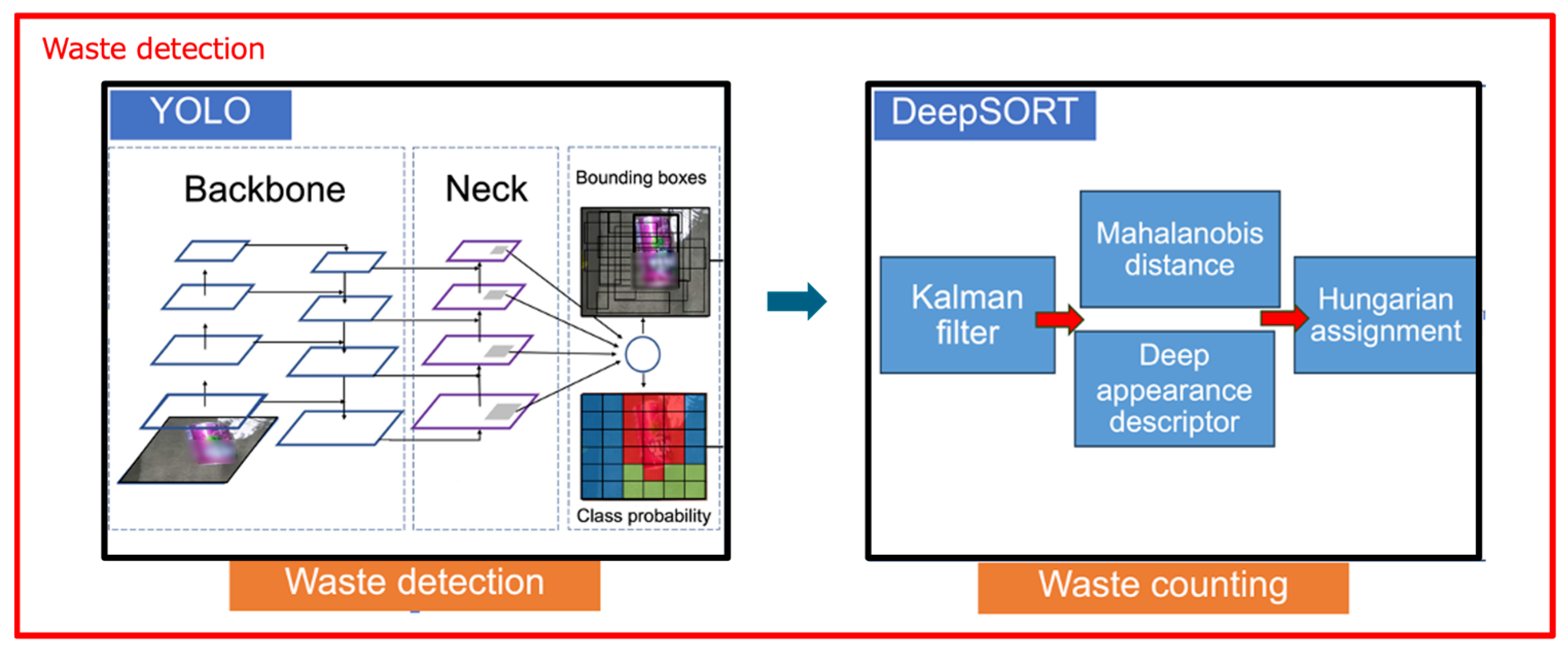

In this study, an automated waste measurement framework was established by integrating YOLO and DeepSORT, forming a unified pipeline for the real-time detection and tracking of floating waste, as shown in Figure 4. This integration enables continuous quantification of waste flow in dynamic river environments, thereby enhancing the accuracy and efficiency of environmental monitoring systems.
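Once a tracker assigns a persistent identity to each object, quantification reduces to counting distinct identities per class. The following is a minimal sketch of that counting step, assuming the tracker output is available as `(frame_index, track_id, class_name)` tuples; the tuple layout, the function name `count_waste`, and the `min_frames` filter are illustrative assumptions rather than details reported in this study.

```python
from collections import defaultdict


def count_waste(tracks, min_frames=3):
    """Count distinct tracked objects per class.

    tracks: iterable of (frame_index, track_id, class_name) tuples.
    A track is counted only if it appears in at least min_frames frames,
    which suppresses short-lived spurious tracks (min_frames is an assumed value).
    """
    frames_seen = defaultdict(set)
    label = {}
    for frame, track_id, cls in tracks:
        frames_seen[track_id].add(frame)
        label.setdefault(track_id, cls)  # keep the first class assigned to the track
    counts = defaultdict(int)
    for track_id, frames in frames_seen.items():
        if len(frames) >= min_frames:
            counts[label[track_id]] += 1
    return dict(counts)


demo = [
    (0, 1, "can"), (1, 1, "can"), (2, 1, "can"),
    (1, 2, "foam"), (2, 2, "foam"), (3, 2, "foam"),
    (2, 3, "can"),  # track 3 appears in a single frame and is ignored
]
print(count_waste(demo))
```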

YOLO was selected as the core detection algorithm because of its strong balance between speed and accuracy, which is particularly effective under the variable conditions encountered in real-world monitoring. Its ability to detect multiple objects concurrently within a single inference allows for real-time responsiveness, while its high precision in identifying diverse waste types aligns well with the objectives of this research. YOLO predicts object classes and bounding boxes through a multi-term loss function that jointly optimizes localization, confidence, and classification performance.
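Schematically, such a multi-term loss can be written as a weighted sum of the three components. The weights $\lambda$ are generic symbols introduced here for illustration; their values are not reported in this section.

```latex
\mathcal{L} = \lambda_{\mathrm{box}}\,\mathcal{L}_{\mathrm{box}}
            + \lambda_{\mathrm{obj}}\,\mathcal{L}_{\mathrm{obj}}
            + \lambda_{\mathrm{cls}}\,\mathcal{L}_{\mathrm{cls}}
```

Here $\mathcal{L}_{\mathrm{box}}$ penalizes bounding-box localization error, $\mathcal{L}_{\mathrm{obj}}$ penalizes confidence (objectness) error, and $\mathcal{L}_{\mathrm{cls}}$ penalizes classification error.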

For the tracking component, DeepSORT was adopted to extend the functionality of the original SORT algorithm through the incorporation of a deep appearance descriptor. This addition allows the system to maintain consistent object identities over time, even under conditions of occlusion or changes in object appearance. DeepSORT combines motion prediction based on Kalman filtering with object association using Mahalanobis distance, ensuring reliable tracking across frames.

To optimize the combined performance of YOLO and DeepSORT, several parameters were tuned. The YOLO model was trained with an initial learning rate of 0.00872 (Table S1), while DeepSORT employed a cosine distance threshold of 0.2 for robust track association. These configurations provided a stable balance between detection precision and tracking consistency in real environments.
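To illustrate how a cosine distance threshold gates track association, the sketch below matches appearance feature vectors of existing tracks to new detections and rejects any pair whose distance exceeds 0.2. It is a deliberate simplification: it uses greedy nearest-first matching instead of an optimal assignment solver and omits the Kalman-filter motion gate. The function names and the toy two-dimensional features are assumptions.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def associate(track_features, detection_features, max_distance=0.2):
    """Greedily pair tracks with detections, closest pairs first.

    Pairs whose cosine distance exceeds max_distance are never matched.
    """
    pairs = sorted(
        (cosine_distance(t, d), ti, di)
        for ti, t in enumerate(track_features)
        for di, d in enumerate(detection_features)
    )
    matches, used_tracks, used_detections = [], set(), set()
    for dist, ti, di in pairs:
        if dist > max_distance:
            break  # all remaining pairs are even farther apart
        if ti in used_tracks or di in used_detections:
            continue
        matches.append((ti, di))
        used_tracks.add(ti)
        used_detections.add(di)
    return matches


# Toy appearance features (real descriptors are much higher-dimensional).
tracks = [[1.0, 0.0], [0.0, 1.0]]
detections = [[0.1, 0.99], [0.98, 0.05], [-1.0, 0.0]]
print(associate(tracks, detections))
```

The third detection matches no track because its distance to both exceeds the threshold; a tracker would typically start a new track for it.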

2.5. Experiments

2.5.1. Experiment I: Application of the Model to a Canal in Thailand

The practical applicability of the proposed waste detection and tracking system was evaluated by applying the integrated YOLOv5 and DeepSORT model to a real-world environment in Thailand.

2.5.2. Experiment II: Application of the Proposed Model with Preprocessing in a Canal in Thailand

The preprocessing component of the proposed automated waste measurement framework is designed to align real-world imagery with the characteristics of laboratory images used during model training. Given the significant variability and complexity of environmental conditions in actual canal settings, effective preprocessing is essential to ensure accurate and consistent performance of the detection and tracking models. Although various preprocessing techniques have been proposed, this study introduces three targeted methods: skew correction, background removal, and object detection using SSD.

2.5.3. Experiment III: Evaluation of the Updated YOLO Model for Automated Waste Measurement

To enhance the performance of the automated waste measurement system, the pipeline was updated to incorporate a YOLOv10-based [25] object detection model. This updated model integrates recent advancements in network architecture and training strategies, including refined anchor box selection, improved data augmentation, and enhanced backbone feature extraction. These improvements are intended to increase detection precision, particularly under the challenging conditions of real-world environments.

2.6. Evaluation

Evaluating the performance of object detection and tracking models is essential for assessing their reliability in real applications. In this study, four standard metrics were used: Precision (P), Recall (R), F1 score [26], and mean Average Precision (mAP) [27].

Precision (P) represents the proportion of correctly detected objects among all detections, whereas Recall (R) indicates the proportion of actual objects that were successfully detected. The F1 score combines Precision and Recall into a single measure to evaluate the balance between accuracy and completeness and is particularly useful for assessing counting and tracking performance. Since the primary objective of this study is to quantify and track the number of floating waste objects, the use of the F1 score provides an appropriate and consistent metric for evaluating both detection and tracking reliability. The mAP summarizes detection accuracy across all waste categories as an overall indicator of model performance.

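These metrics enable consistent evaluation and comparison of detection and tracking models. They were computed following Equations (1)–(4):

$$P = \frac{TP}{TP + FP} \tag{1}$$

$$R = \frac{TP}{TP + FN} \tag{2}$$

$$F1 = \frac{2 \times P \times R}{P + R} \tag{3}$$

$$mAP = \frac{1}{n} \sum_{i=1}^{n} AP_i \tag{4}$$

where $TP$, $FP$, and $FN$ denote true positives, false positives, and false negatives, respectively, $n$ is the number of object categories, and $AP_i$ is the Average Precision of category $i$.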
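The four metrics can be computed directly from detection counts and per-class AP values. The sketch below shows one straightforward way to do so; the counts and AP values in the example are made-up numbers for illustration, not results from this study.

```python
def precision(tp, fp):
    """Fraction of detections that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of actual objects that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def mean_average_precision(ap_per_class):
    """Unweighted mean of per-class Average Precision values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical counts and AP values, for illustration only.
p = precision(tp=80, fp=20)
r = recall(tp=80, fn=20)
f1 = f1_score(p, r)
m_ap = mean_average_precision({"can": 0.9, "foam": 0.8, "paper": 0.7})
print(round(p, 2), round(r, 2), round(f1, 2), round(m_ap, 2))
```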

3. Results

3.1. Experiment I: Application of the Model to a Canal in Thailand

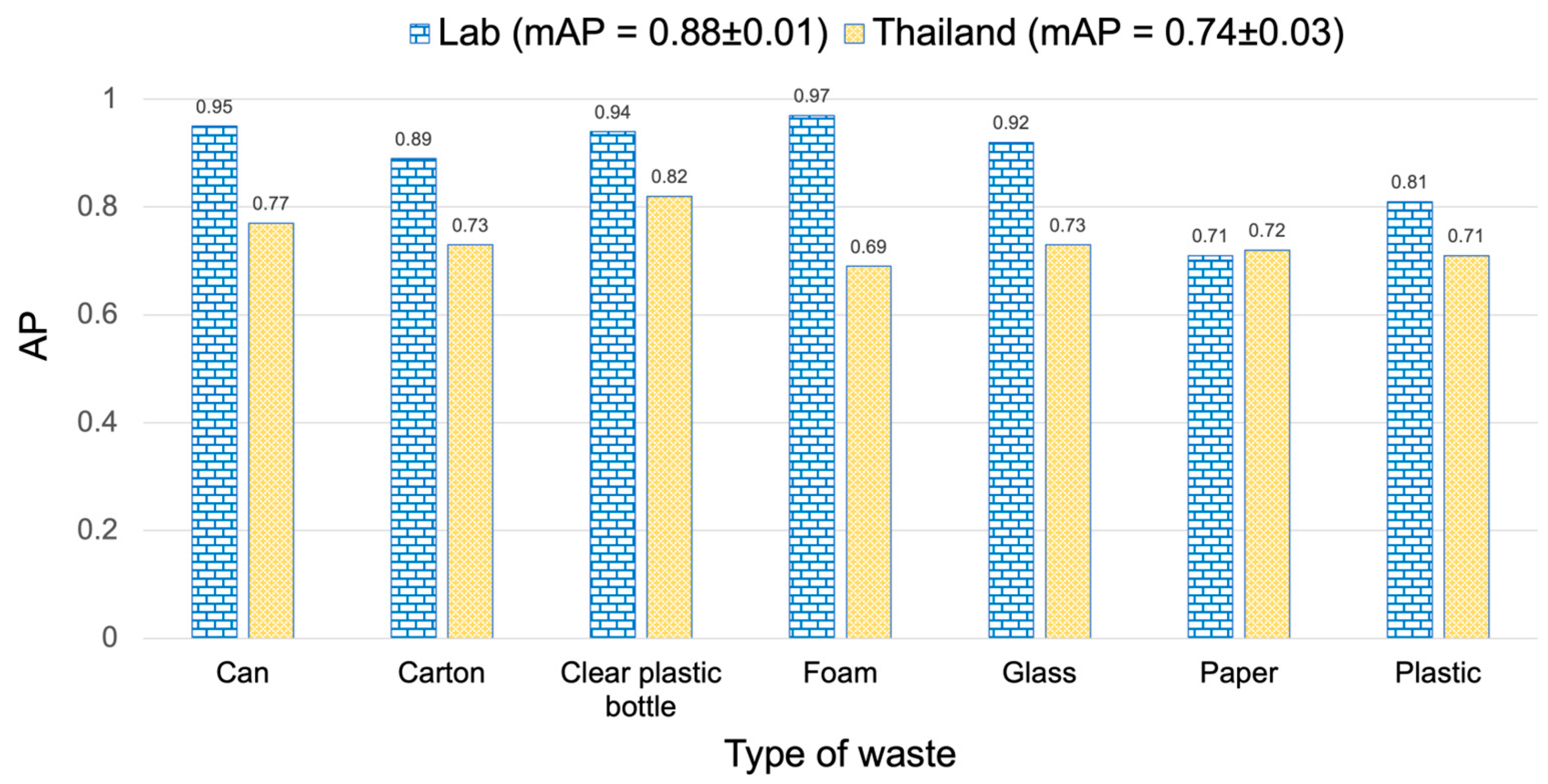

In this experiment, the baseline performance of the automated waste detection system was evaluated by applying a YOLOv5 object detection model integrated with the DeepSORT tracking algorithm to video footage captured in a natural canal setting in Thailand, as shown in Figure 5. The YOLOv5 model had been trained in a controlled laboratory environment using annotated datasets, and no preprocessing or domain adaptation techniques were employed during deployment. The objective was to assess the model’s ability to generalize to real-world conditions without any further modification.

The results, as summarized in Figure 6, indicate an overall mAP of 0.74 ± 0.03. While the system was able to detect and track common objects such as cans, glass, and clear plastic bottles with relatively high AP values, the performance for other categories such as foam and paper was noticeably lower. This can be attributed to several environmental challenges present in the canal setting, including water surface reflections, object occlusions, floating debris, and fluctuating lighting conditions.

These findings suggest that models trained solely on laboratory data face significant limitations when applied directly to unstructured outdoor environments. The high variability in background and object appearance in natural canals severely degrades detection accuracy and consistency, demonstrating the need for preprocessing and domain adaptation in such contexts.

3.2. Experiment II: Application of the Proposed Model with Preprocessing in a Canal in Thailand

To address the domain gap between laboratory and real-world imagery observed in Experiment I, this experiment introduced a preprocessing framework designed to adapt input data before applying the detection model. The preprocessing pipeline consists of three key components: (1) skew correction to normalize image orientation, (2) background removal to reduce visual clutter and isolate floating waste, and (3) object region identification using the Single Shot Multibox Detector (SSD) to guide YOLOv5 in focusing on relevant areas.
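The background removal algorithm is not specified in this section. As one generic illustration of the idea, the sketch below builds a per-pixel temporal median from several frames of a fixed camera and marks pixels that deviate strongly from it as foreground. The technique, the threshold of 30 gray levels, and the function names are assumptions, not the study's method.

```python
from statistics import median


def background_model(frames):
    """Per-pixel temporal median over equally sized grayscale frames."""
    height, width = len(frames[0]), len(frames[0][0])
    return [[median(f[y][x] for f in frames) for x in range(width)]
            for y in range(height)]


def foreground_mask(frame, background, threshold=30):
    """Mark pixels that differ from the background by more than threshold."""
    return [[1 if abs(p - b) > threshold else 0 for p, b in zip(row, bg_row)]
            for row, bg_row in zip(frame, background)]


# Three tiny 2 x 3 grayscale frames: water near 100, one bright object (220).
frames = [
    [[100, 102, 98], [101, 99, 100]],
    [[101, 220, 99], [100, 100, 102]],
    [[99, 101, 100], [102, 98, 101]],
]
background = background_model(frames)
mask = foreground_mask(frames[1], background)
print(mask)
```

A median is used because a floating object occupies any given pixel in only a minority of frames, so it does not contaminate the background estimate.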

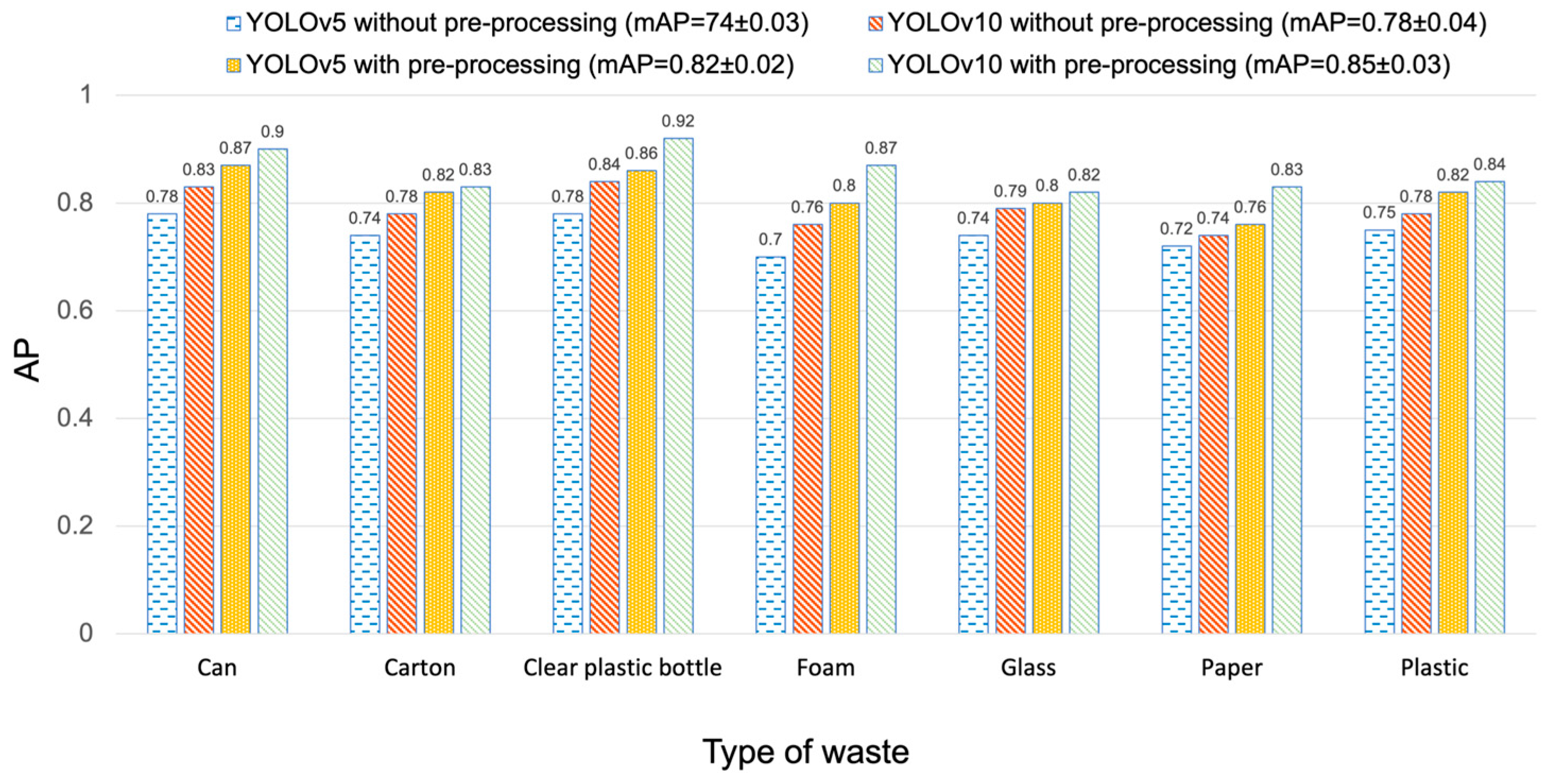

After integrating these preprocessing steps, the YOLOv5 + DeepSORT system was re-applied to the same canal dataset. The performance notably improved, with the mAP increasing from 0.74 ± 0.03 to 0.82 ± 0.03. As illustrated in Figure 7, each waste category exhibited improved AP values, with foam and paper showing the most significant gains (+0.10 and +0.08, respectively). Table 1 (center) visually demonstrates enhanced detection robustness and fewer false positives due to the improved signal-to-noise ratio in the preprocessed imagery.

This experiment highlights the importance of task-specific preprocessing in enabling deep learning models to adapt to complex and variable outdoor conditions. By reducing irrelevant background features and standardizing input image characteristics, the system achieved more stable and accurate detection and tracking performance in the real-world environment.

3.3. Experiment III: Evaluation of the Updated YOLO Model for Automated Waste Measurement

In the final experiment, the system was further enhanced by upgrading the detection backbone from YOLOv5 to YOLOv10. The YOLOv10m variant was adopted for its optimized balance between inference speed and detection accuracy. YOLOv10 introduces several architectural advancements, including a more efficient backbone, refined anchor box selection, improved feature fusion, and robust data augmentation techniques. These enhancements are expected to provide superior detection accuracy, particularly under challenging outdoor conditions such as canal environments.

Initially, the YOLOv10 + DeepSORT system was evaluated without preprocessing, resulting in a mAP of 0.78 ± 0.04, already an improvement over the YOLOv5 baseline, as shown in Table 2. Subsequently, the same preprocessing pipeline from Experiment II was applied, which further elevated the mAP to 0.85 ± 0.03. This was the highest score among all experimental configurations. As shown in Figure 7, the clear plastic bottle class achieved an AP of 0.92, while plastic and glass also showed remarkable accuracy improvements. Table 1 presents a detection scene where the system precisely identified and tracked multiple floating waste items even under sunlight reflection and water motion.

These results confirm that combining advanced detection architectures with domain-aware preprocessing enables robust performance under real-world conditions. The YOLOv10-based system not only enhances detection precision but also ensures consistent tracking, making it well-suited for practical deployment in riverine waste monitoring applications.

3.4. Effectiveness of Preprocessing Steps by Waste Type

To evaluate the individual and combined contributions of the proposed preprocessing components, a stepwise performance analysis was conducted across seven waste types using YOLOv10. Table 3 summarizes the AP improvements observed for each class after applying successive preprocessing stages: Skew Correction (SC), Background Removal (BG), and Object Extraction (OE).

Skew Correction, the first step, resulted in modest but consistent gains across all waste types. Notably, rigid and geometrically distinct objects such as plastic bottles (+0.07) and cans (+0.06) showed the highest sensitivity to skew normalization. This suggests that perspective correction facilitates improved object localization, particularly for cylindrical or elongated items whose orientation heavily influences their appearance.

Background Removal, when added to skew correction, yielded substantial additional improvements for most classes, especially for visually ambiguous or low-contrast materials. Foam exhibited a 0.10 increase (from 0.70 to 0.80), and plastic and paper also improved significantly (+0.08 and +0.06, respectively). These results highlight that eliminating dynamic or reflective water backgrounds helps the model suppress false positives and focus on relevant object features.

The final stage, Object Extraction, further enhanced performance in all categories, though its marginal gains were smaller than previous steps. The improvement was most pronounced for cans (from 0.89 to 0.91) and plastic bottles (0.92 to 0.94), indicating that spatial cropping and focusing on regions-of-interest helped the model refine its predictions by reducing irrelevant spatial context.

Overall, the preprocessing pipeline increased total mAP from 0.74 (raw input) to 0.85 (fully preprocessed), with the most significant individual-class gains observed in:

Foam: +0.13 (0.69 → 0.82);

Plastic bottle: +0.12 (0.82 → 0.94);

Plastic: +0.14 (0.71 → 0.85);

Can: +0.14 (0.77 → 0.91).

These improvements are particularly important for waste types that are prone to environmental degradation or visual confusion, such as foam and plastic. The results demonstrate that task-specific preprocessing not only improves overall accuracy but also selectively enhances detection performance for difficult waste categories.
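The per-class gains listed above can be reproduced from the reported before and after AP values. The short sketch below recomputes the absolute gains and, as an additional view not reported in the text, the gains relative to each class's starting AP.

```python
# (AP on raw input, AP after full preprocessing), as listed in the text.
ap = {
    "foam": (0.69, 0.82),
    "plastic bottle": (0.82, 0.94),
    "plastic": (0.71, 0.85),
    "can": (0.77, 0.91),
}

# Absolute gain in AP for each class.
gains = {name: round(after - before, 2) for name, (before, after) in ap.items()}

# Relative gain in percent of the starting AP (derived here, not reported).
relative = {name: round(100 * (after - before) / before, 1)
            for name, (before, after) in ap.items()}

for name in ap:
    print(f"{name}: +{gains[name]:.2f} AP ({relative[name]}% relative)")
```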

4. Discussion

In recent years, many studies have applied deep learning to the detection of floating waste in riverine environments. A consistent trend in this work has been reliance on dataset-specific retraining or architectural modifications to address environmental variability. For example, as summarized in Table 4, Zailan et al. (2022) [16] reported a mean Average Precision (mAP) of about 89% using an optimized YOLOv4 model fine-tuned with a custom river dataset from Malaysia. Yang et al. (2022) [28] achieved nearly 90% with a tuned YOLOv5, while Jiang et al. (2024) [29] introduced attention-based feature fusion into YOLOv7 and exceeded 91%. Similarly, Li et al. (2020) [30] combined YOLOv5 with Transformer modules to reach about 84% on river imagery. Although these approaches achieved high accuracy, they required large, annotated datasets, computationally demanding retraining, and location-specific adaptation.

In contrast, the present study achieved comparable results (mAP = 0.85 ± 0.03) using a different strategy. Instead of retraining or modifying the detection architecture, a three-stage preprocessing pipeline—skew correction, background removal, and object region extraction—was applied to a pretrained YOLO model originally trained with 5711 flume-based laboratory images. Importantly, no retraining or additional labeling was performed on the canal dataset, which comprised 2000 frames captured from urban canals of the Chao Phraya River in Thailand. These results highlight the effectiveness of data-centric adaptation (i.e., preprocessing-based adaptation), demonstrating that aligning input images prior to detection can achieve performance comparable to more complex and resource-intensive retraining approaches.

The implications of this data-centric adaptation are notable, especially when compared with the standard practices observed in previous studies. Most prior work has addressed real-world variability by collecting annotated imagery from deployment sites and retraining or fine-tuning detection models. While this approach can achieve high accuracy, it poses practical challenges for scalability, particularly in global applications where local datasets are scarce and retraining may not be feasible.

The proposed method overcomes these limitations by using preprocessing to reduce the domain gap between laboratory imagery and field-captured canal images. This enables the reuse of a robust pretrained model without additional annotation or training, making the approach well-suited for regions where data collection is logistically or economically constrained. Although the YOLO + DeepSORT model was trained solely on laboratory images, it achieved accuracy comparable to that of retrained alternatives, demonstrating that carefully designed preprocessing can improve both robustness and transferability.

In addition to detection accuracy, tracking reliability was also evaluated using the F1 score, which balances Precision and Recall of tracked objects over time. Although this study primarily focused on detection performance, the F1-based evaluation confirmed more consistent object identity maintenance after preprocessing, indicating improved temporal stability of tracking results. This suggests that the preprocessing pipeline not only enhances detection accuracy but also contributes to smoother object association in continuous video monitoring.

The ablation study shows how each component of the preprocessing pipeline [31] contributes to detection performance, particularly in addressing the environmental complexity of real-world settings. Skew correction [32] was especially effective for elongated or angular objects, such as bottles and cartons, by normalizing orientation and reducing distortion. This improved consistency with the training set and increased Average Precision (AP) by 0.07–0.09 for plastic bottles and cans. Background removal [33] reduced the visual complexity of river surfaces (e.g., waves, reflections, vegetation), improving the signal-to-noise ratio and detection of low-contrast objects. Foam and paper benefited most, with AP gains of 0.08–0.10. SSD-guided object extraction further improved precision by isolating relevant regions, though its impact was smaller than that of the previous steps.

Unlike end-to-end retraining approaches, where architectural changes obscure the contribution of individual design choices, our modular pipeline provides clarity and reproducibility. It demonstrates how systematic input conditioning can compensate for domain mismatch and environmental variability. Such transparency is particularly valuable in environmental monitoring, where deployment conditions are highly dynamic and stakeholders require confidence in how the system operates.

These findings should also be considered in the broader context of global riverine and marine plastic pollution, as rivers are estimated to contribute up to 80% of ocean-bound plastic waste, much of it from urban river systems in rapidly developing regions. Within this context, automated, scalable, and cost-effective monitoring systems are critical to support evidence-based mitigation strategies. A key strength of our proposed approach is its adaptability to diverse real-world conditions, achieved through preprocessing alone without the need for retraining, and with minimal dependence on manual annotation [34]. This allows rapid deployment across river systems with varying hydrological, lighting, and background characteristics. As the proposed approach eliminates the need for retraining and additional data preparation, it offers a computationally efficient and easily deployable solution for real-world monitoring applications.

Nonetheless, several challenges remain. The system occasionally produces false negatives under conditions of strong reflections, motion blur, or occlusion. Moreover, the current pipeline is limited to detecting floating debris at the water surface and cannot identify submerged or bottom-deposited waste. To overcome these limitations, future work will focus on integrating the vision-based pipeline with hydrodynamic models to estimate and forecast waste transport and accumulation, even when objects are not directly visible. Additionally, system scalability will be evaluated by applying the framework to a broader range of natural rivers with diverse environmental characteristics [35]. These efforts aim to advance the development of robust, field-ready tools for cleanup planning and policy applications.

5. Conclusions

This study proposed a preprocessing-based deep learning framework for detecting and tracking floating plastic waste in the urban canals of the Chao Phraya River Basin. By integrating YOLO + DeepSORT with a three-step preprocessing pipeline (skew correction, background removal, and object extraction), the framework successfully adapted a laboratory-trained model to real canal imagery without retraining. In experiments using 2000 CCTV images, the mean Average Precision (mAP) improved from 0.74 ± 0.03 to 0.82 ± 0.03, and further to 0.85 ± 0.03 with the upgraded YOLOv10m detector, with major gains for visually complex materials such as foam, plastic, and paper. These findings confirm that targeted preprocessing effectively enhances model robustness and stability under varying canal surface and lighting conditions.

The results demonstrate the potential of this preprocessing-based deep learning approach as a scalable and cost-efficient solution for automated canal waste monitoring in data-limited regions. By focusing on preprocessing rather than retraining, the framework reduces annotation requirements and enables rapid deployment for real-world environmental surveillance. Some challenges remain, including reduced accuracy under reflections, motion blur, and partial occlusion, and the current method cannot yet detect submerged debris. Future research will explore multispectral imaging, real-time edge implementation, and integration with cleanup and policy decision systems to advance practical, data-driven river waste management for sustainable environmental monitoring.