Utilization of Augmented Reality Technique for Sewer Condition Visualization

Abstract

1. Introduction

2. Theory and Methods

2.1. Literature Review

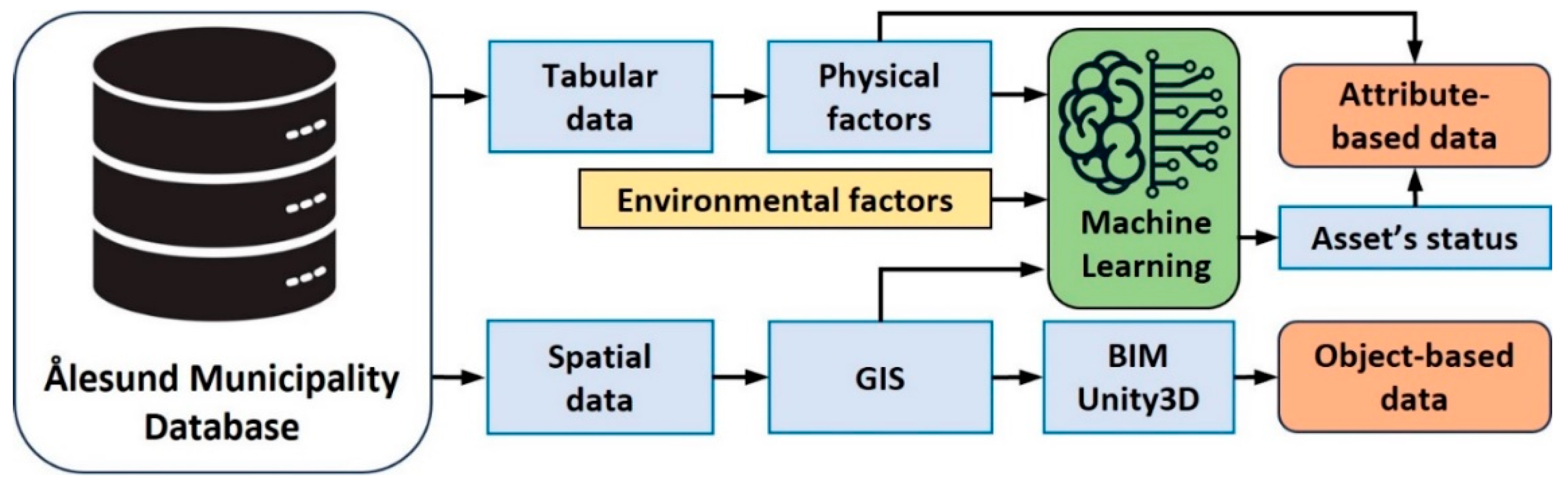

2.2. Data and 3D Model Preparation

2.3. Visualization Platform Development

2.4. System Configuration

2.5. Accuracy Estimation

3. Results and Discussion

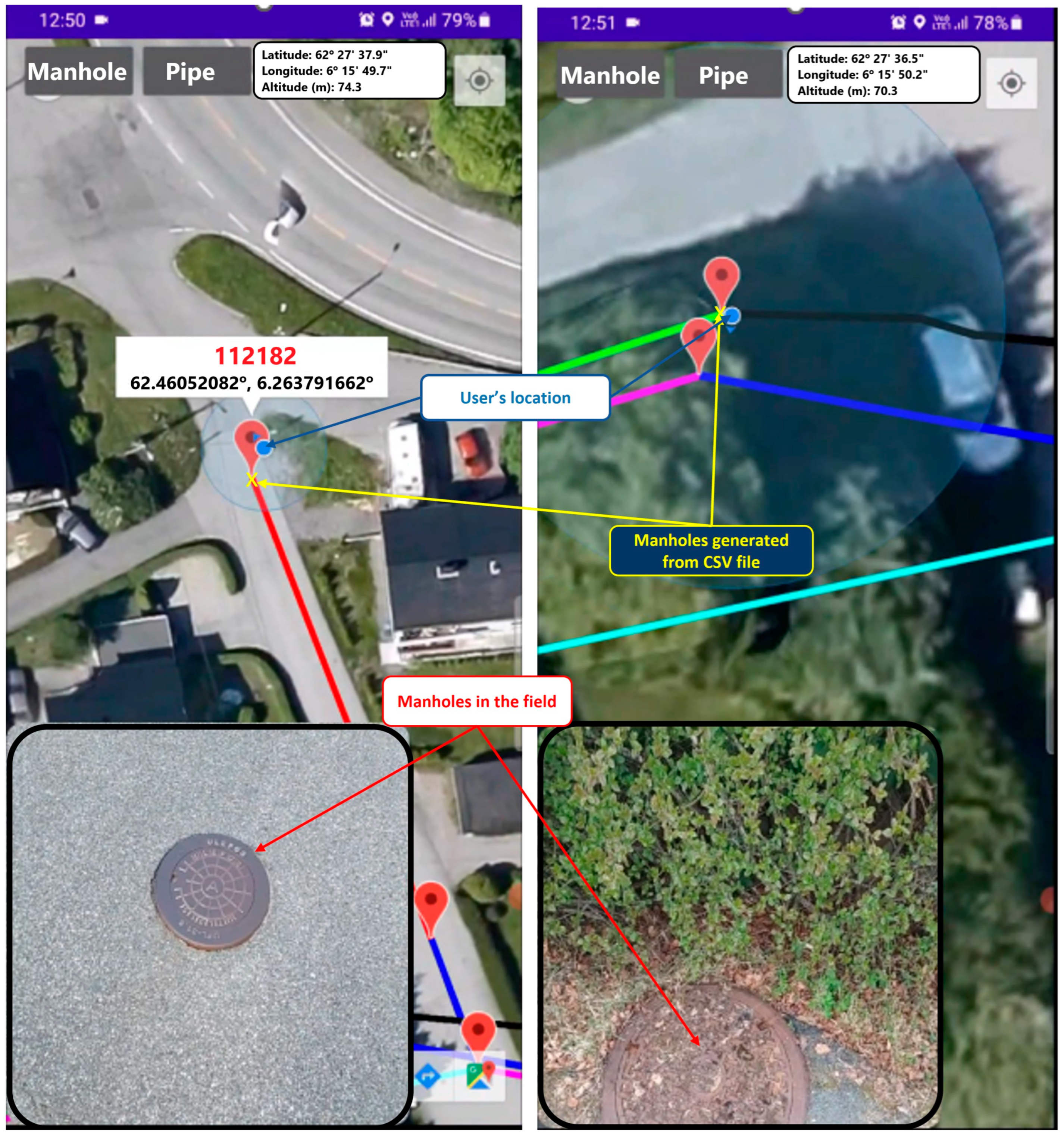

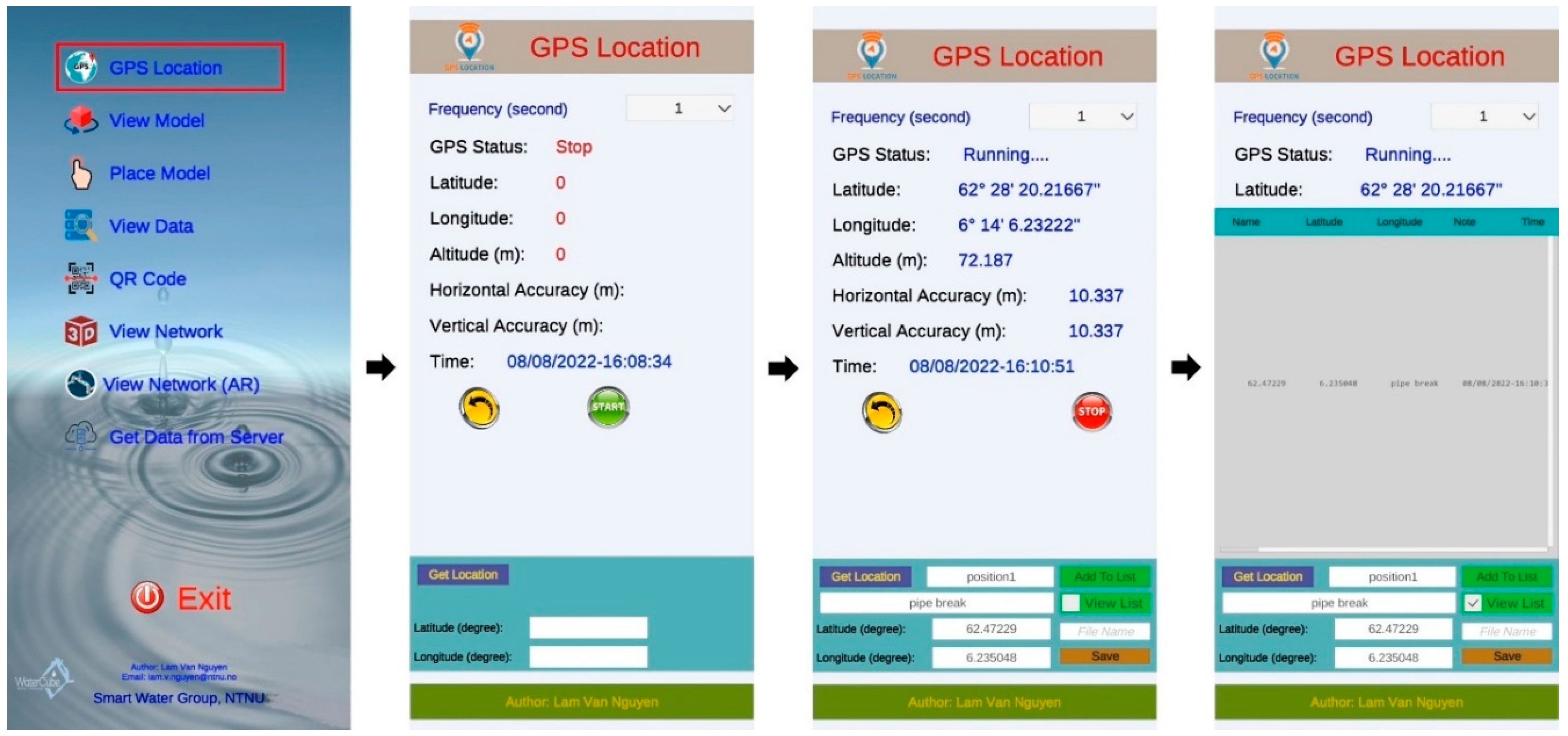

3.1. Visualization of Pipe Conditions with Mobile Application

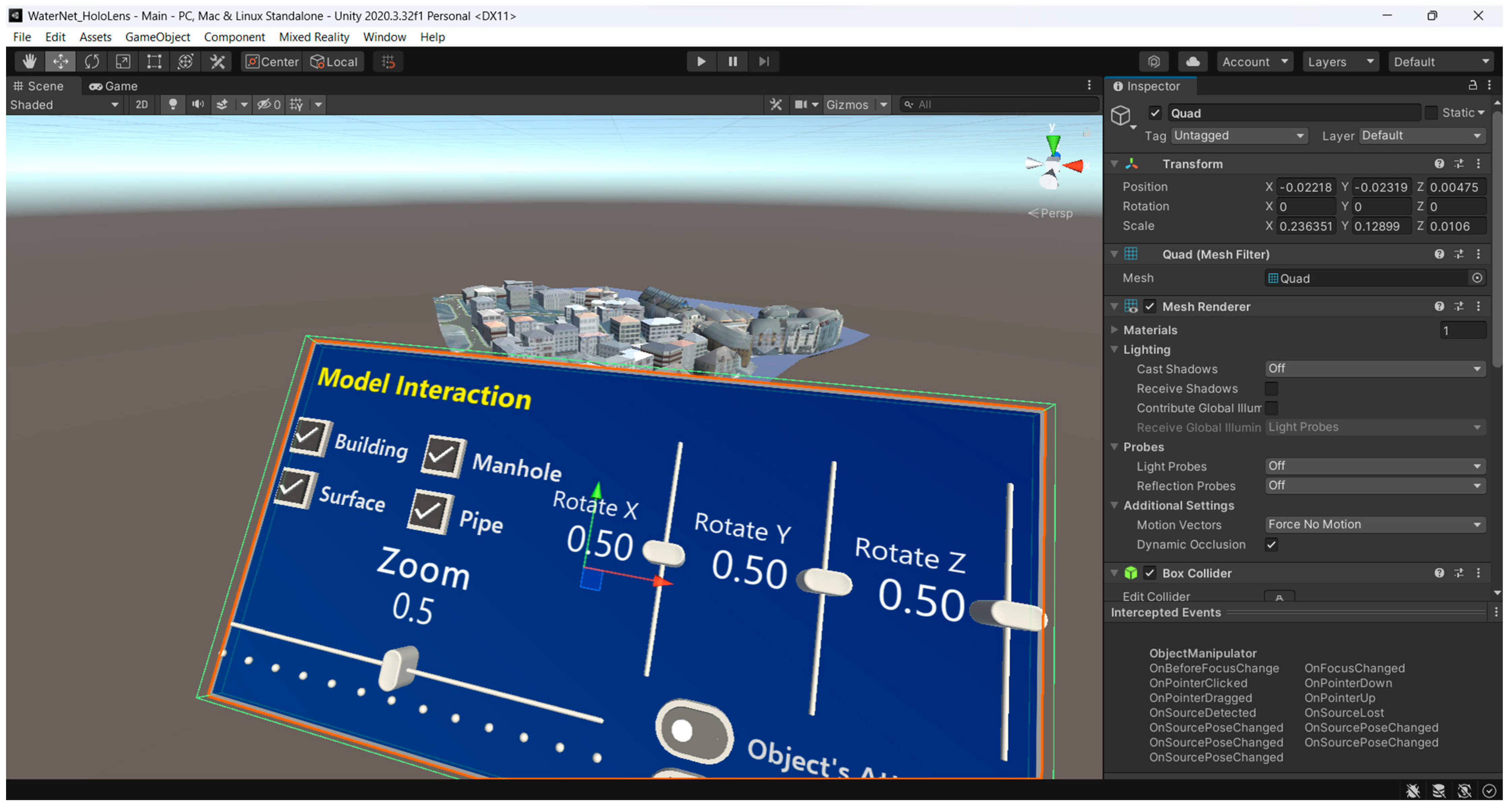

3.2. HoloLens Application

3.3. Accuracy Assessment

4. Conclusions and Outlook

- Allows for the collection of data on pipe and environmental attributes, processing, condition assessment, and visualization of pipe status on a mobile device;

- Can be used by operators for the 3D visualization of buried sewer pipes, including their attributes and conditions;

- Allows for the real-time visualization of dynamic data (e.g., water flow, water temperature) of the pipes through integration of geo-pipe locations and sensor data;

- Can be used in the field for purposes of asset management.
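The integration of pipe locations with sensor data mentioned in the list above can be pictured as a simple join on a pipe identifier. The following is only a minimal sketch under stated assumptions: the class names, field names, identifiers, coordinates, and readings are all hypothetical and are not taken from the system described in this article.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Pipe:
    pipe_id: str      # hypothetical identifier
    lat: float        # WGS-84 latitude, degrees
    lon: float        # WGS-84 longitude, degrees
    condition: str    # hypothetical condition label


@dataclass
class SensorReading:
    pipe_id: str
    timestamp: float  # seconds since an arbitrary epoch
    flow_l_s: float   # water flow, litres per second
    temp_c: float     # water temperature, degrees Celsius


def latest_by_pipe(readings: List[SensorReading]) -> Dict[str, SensorReading]:
    """Keep only the most recent reading for each pipe."""
    latest: Dict[str, SensorReading] = {}
    for r in readings:
        current = latest.get(r.pipe_id)
        if current is None or r.timestamp > current.timestamp:
            latest[r.pipe_id] = r
    return latest


def attach_latest(
    pipes: List[Pipe], readings: List[SensorReading]
) -> List[Tuple[Pipe, Optional[SensorReading]]]:
    """Pair every pipe with its newest reading, or None if it has no sensor data."""
    latest = latest_by_pipe(readings)
    return [(p, latest.get(p.pipe_id)) for p in pipes]


# Made-up sample data, for illustration only.
pipes = [
    Pipe("P-001", 62.4720, 6.1500, "good"),
    Pipe("P-002", 62.4725, 6.1510, "poor"),
]
readings = [
    SensorReading("P-001", 100.0, 12.5, 8.1),
    SensorReading("P-001", 160.0, 13.0, 8.3),
]

joined = attach_latest(pipes, readings)
for pipe, reading in joined:
    if reading is None:
        print(f"{pipe.pipe_id}: condition={pipe.condition}, no sensor data")
    else:
        print(f"{pipe.pipe_id}: condition={pipe.condition}, "
              f"flow={reading.flow_l_s} L/s, temp={reading.temp_c} C")
```

Keeping the join keyed on the pipe identifier means a visualization layer only needs the paired records; pipes without sensors still appear, with their static attributes alone.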

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GIS | Geographic Information System |
| AR | Augmented Reality |
| BIM | Building Information Modeling |
| GPR | Ground Penetrating Radar |
| GPS | Global Positioning System |
| GNSS | Global Navigation Satellite System |
| API | Application Programming Interface |
| ML | Machine Learning |
| ESRI | Environmental Systems Research Institute, Inc. |
| CSV | Comma-Separated Values |
| RMSE | Root Mean Square Error |
| PC | Personal Computer |
| WGS-84 | World Geodetic System 1984 |
| RTK | Real-Time Kinematic |
| RFID | Radio Frequency Identification |
| WCDMA | Wideband Code Division Multiple Access |
| LTE | Long-Term Evolution |
| DGPS | Differential GPS |

| Manhole Name | 112182 | 112760 | 58884 | 112765 | 58886 |
|---|---|---|---|---|---|
| Mean RMSE (m) | 1.15 | 2.19 | 1.80 | 1.59 | 1.56 |
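The table above reports a mean RMSE per manhole, but the text available here does not state how those values were computed. The following is a minimal sketch of one common way to compute a horizontal positional RMSE, assuming planar easting/northing coordinates in metres and a single surveyed reference point per manhole. The function name and the sample offsets are hypothetical; only the five tabulated RMSE values come from the article.

```python
import math
from statistics import mean


def horizontal_rmse(observed, reference):
    """RMSE (metres) of horizontal offsets between observed points and one reference point.

    observed  -- iterable of (easting, northing) pairs in metres
    reference -- a single (easting, northing) pair in metres
    """
    observed = list(observed)
    if not observed:
        raise ValueError("need at least one observed position")
    ref_e, ref_n = reference
    squared_errors = [(e - ref_e) ** 2 + (n - ref_n) ** 2 for e, n in observed]
    return math.sqrt(mean(squared_errors))


# Hypothetical observations: every point lies 5 m from the reference,
# so the RMSE is 5 m as well.
reference_point = (0.0, 0.0)
observed_points = [(3.0, 4.0), (0.0, 5.0), (-5.0, 0.0)]
rmse_example = horizontal_rmse(observed_points, reference_point)

# Mean RMSE values (metres) copied from the table, keyed by manhole name.
table_rmse = {
    "112182": 1.15,
    "112760": 2.19,
    "58884": 1.80,
    "112765": 1.59,
    "58886": 1.56,
}
overall_mean = mean(table_rmse.values())
best_manhole = min(table_rmse, key=table_rmse.get)
worst_manhole = max(table_rmse, key=table_rmse.get)

print(f"example RMSE: {rmse_example:.2f} m")
print(f"mean of tabulated RMSE values: {overall_mean:.3f} m")
print(f"lowest: {best_manhole}, highest: {worst_manhole}")
```

Averaging the five tabulated values gives roughly 1.66 m, with manhole 112182 showing the smallest error and 112760 the largest.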


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nguyen, L.V.; Bui, D.T.; Seidu, R. Utilization of Augmented Reality Technique for Sewer Condition Visualization. Water 2023, 15, 4232. https://doi.org/10.3390/w15244232
