Optimising the Workflow for Fish Detection in DIDSON (Dual-Frequency IDentification SONar) Data with the Use of Optical Flow and a Genetic Algorithm

Abstract

1. Introduction

2. Methods

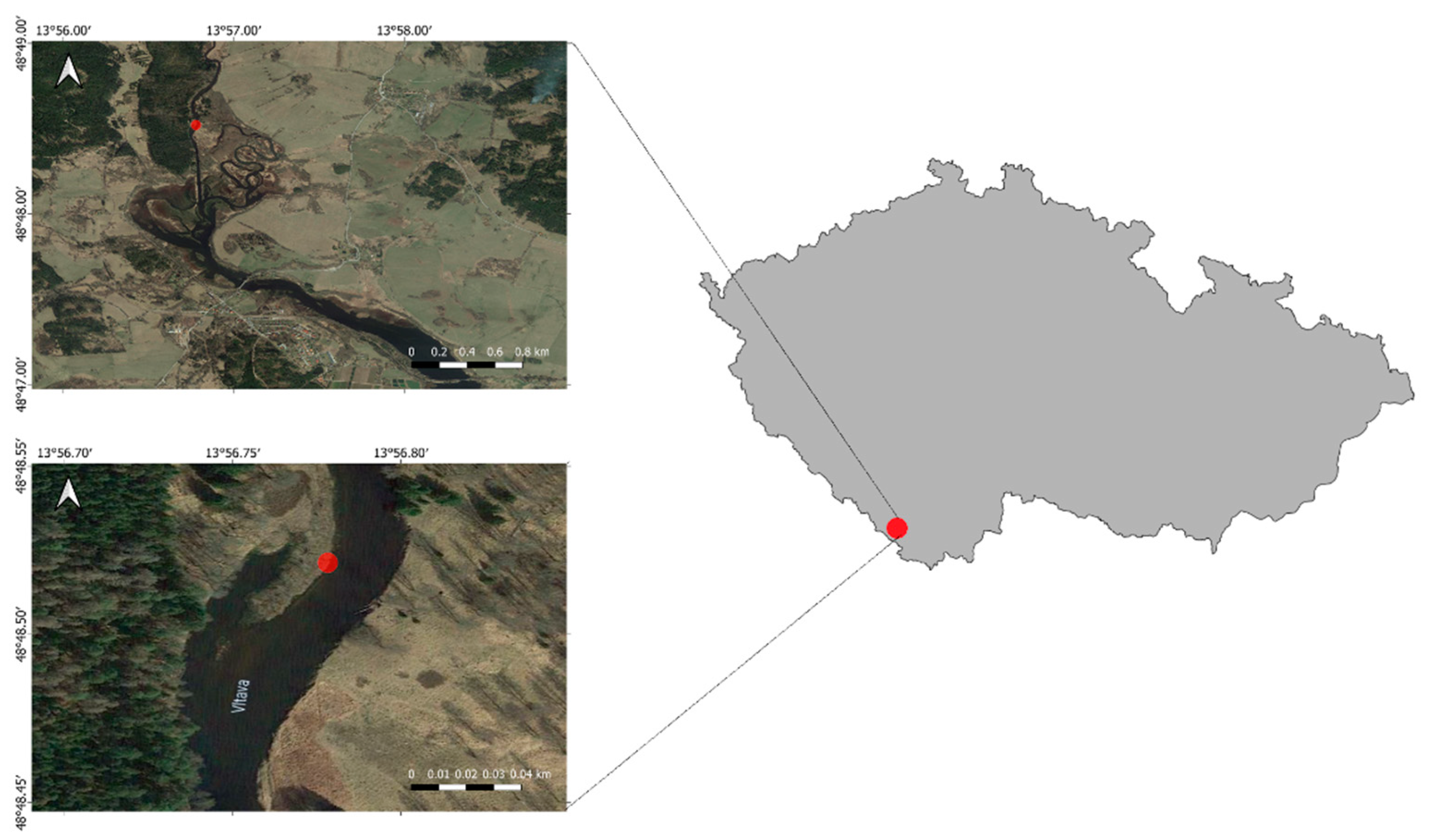

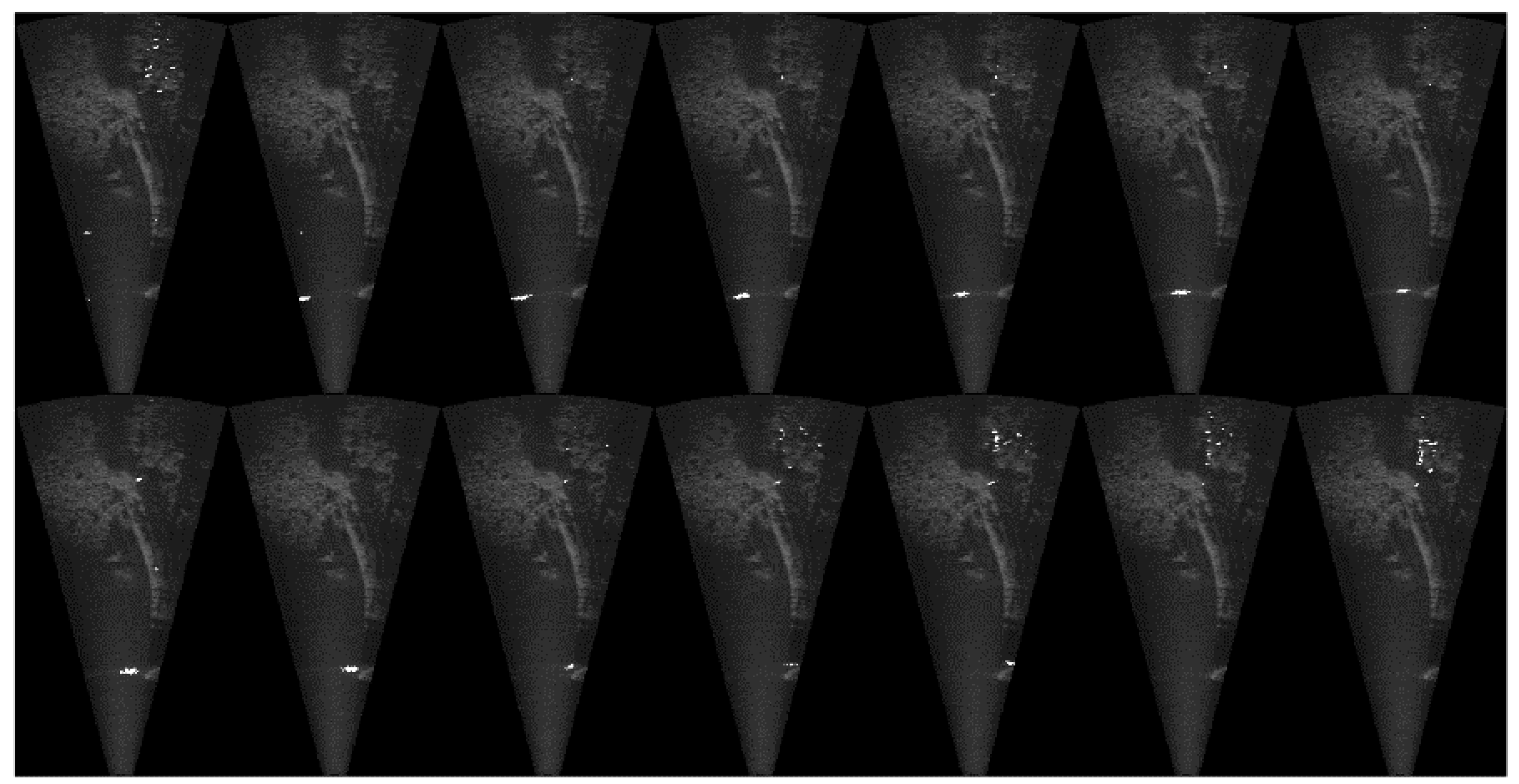

2.1. Data Collection

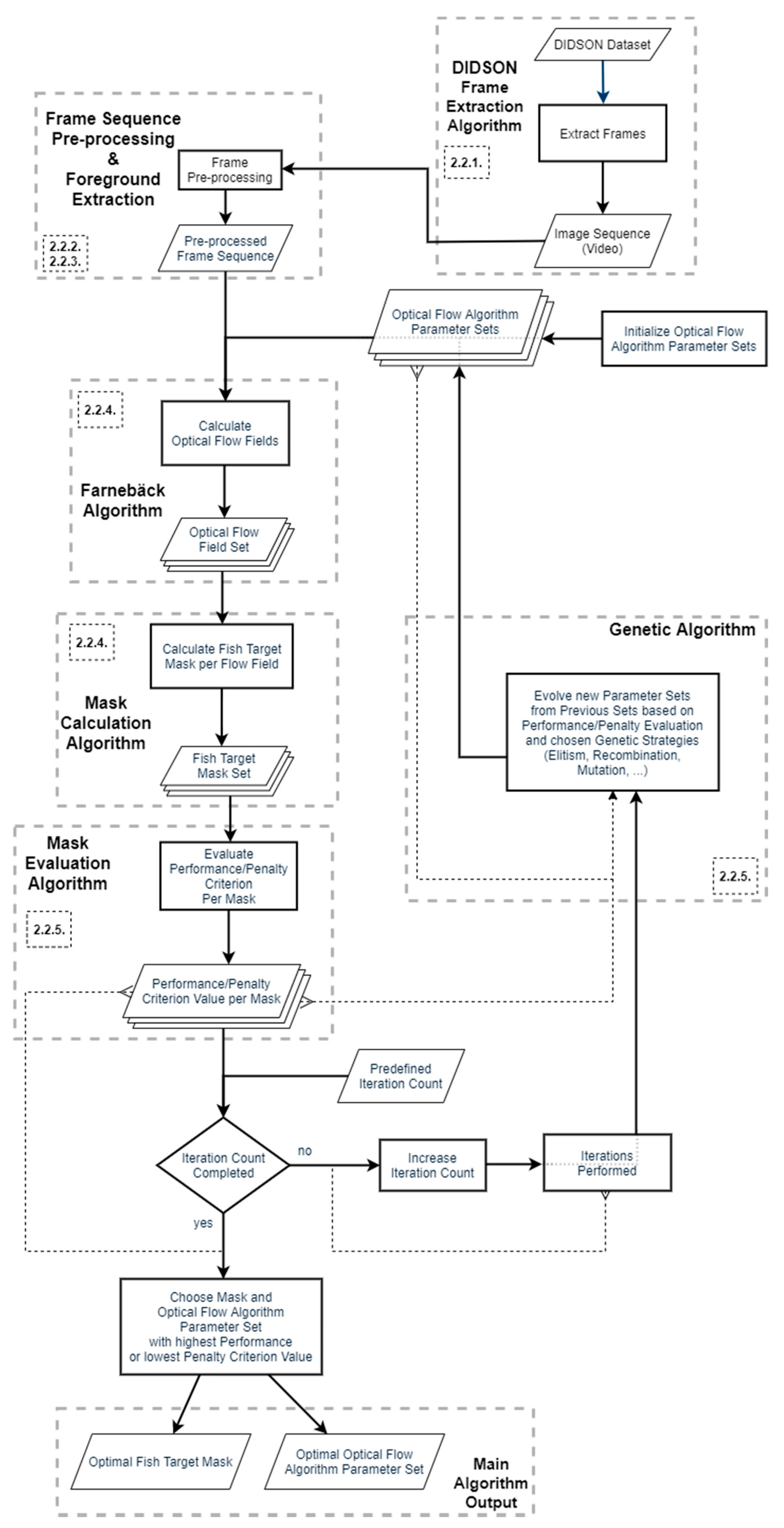

2.2. Workflow

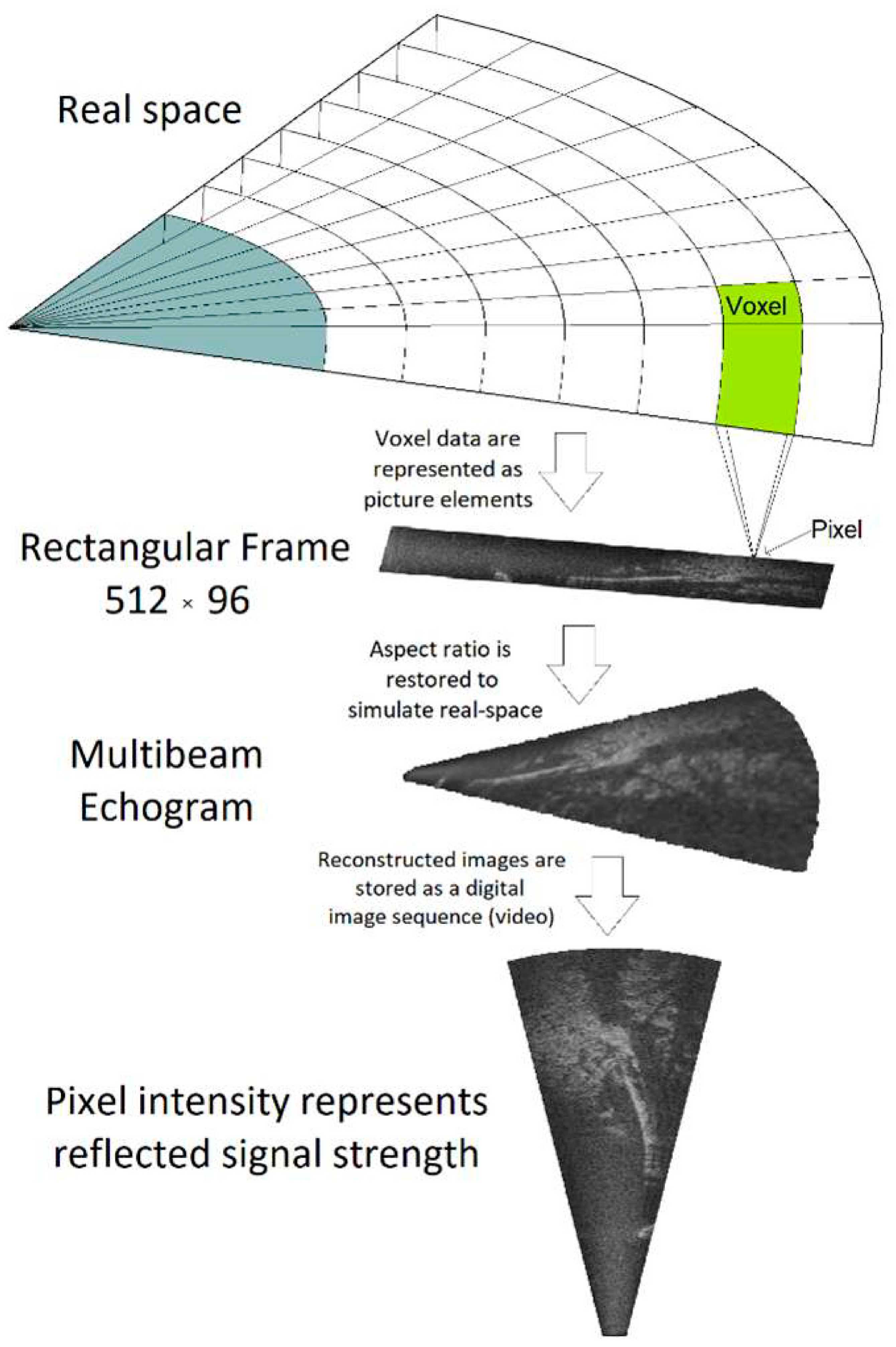

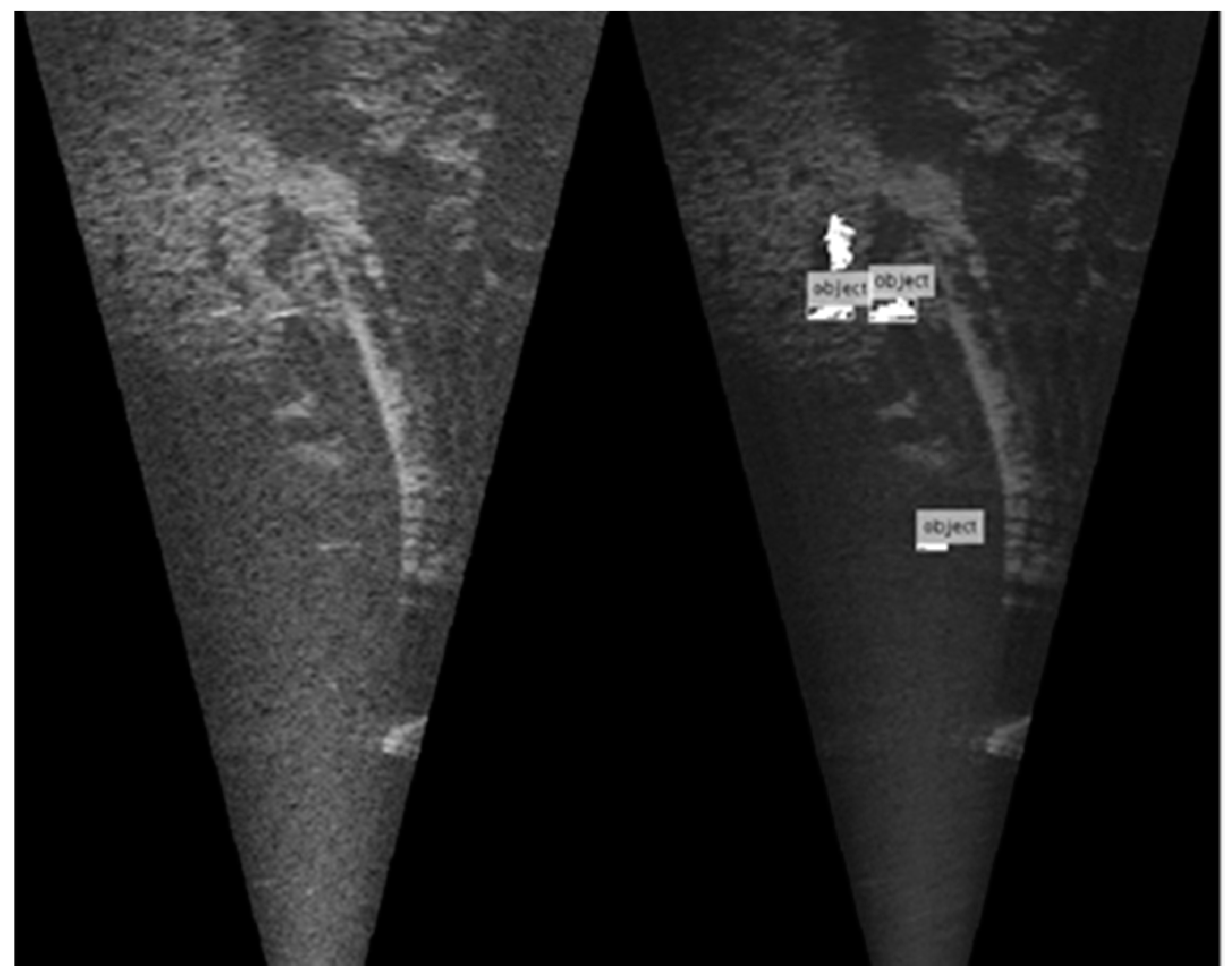

2.2.1. Data Extraction

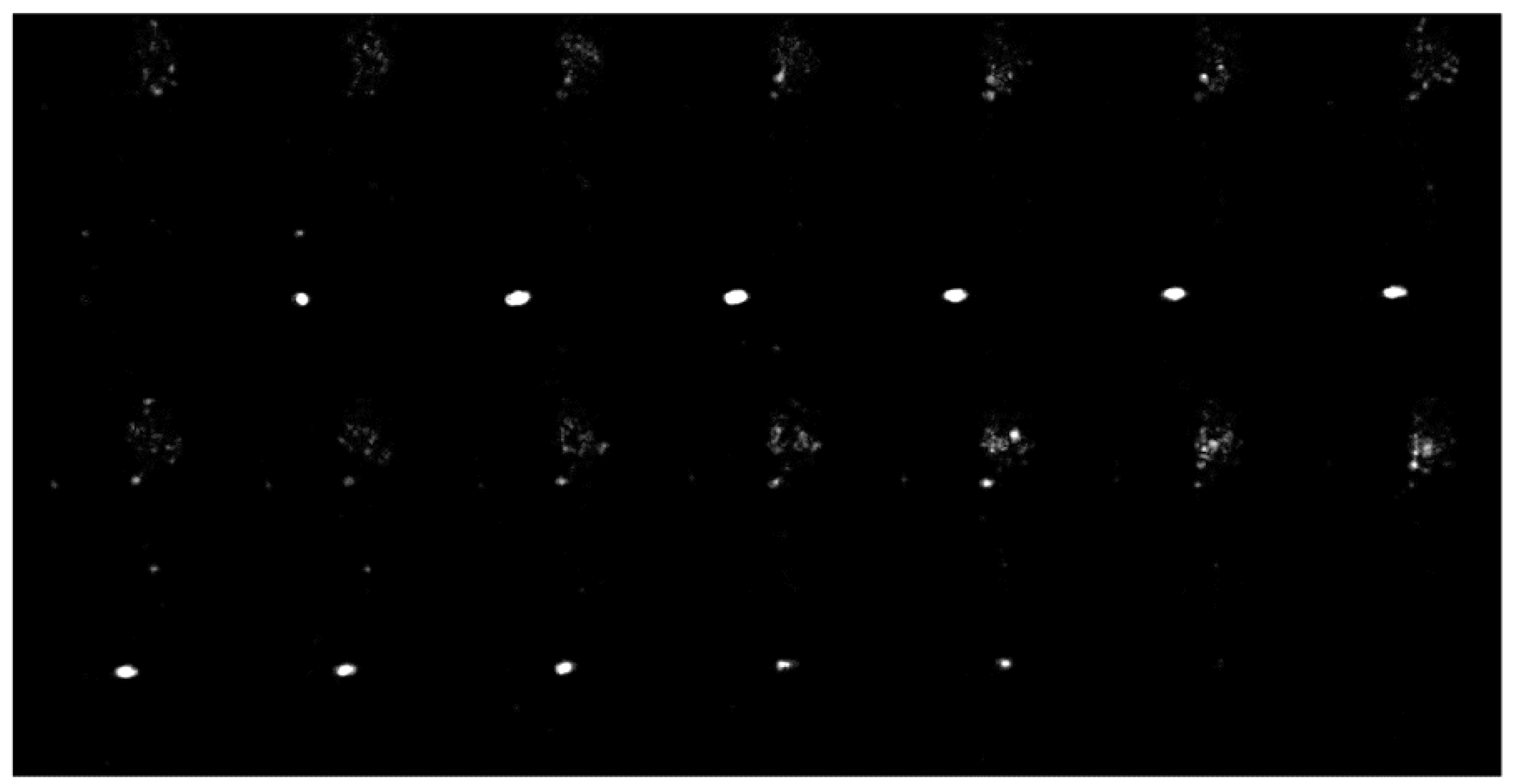

2.2.2. Pre-processing of Reconstructed Frame Sequence

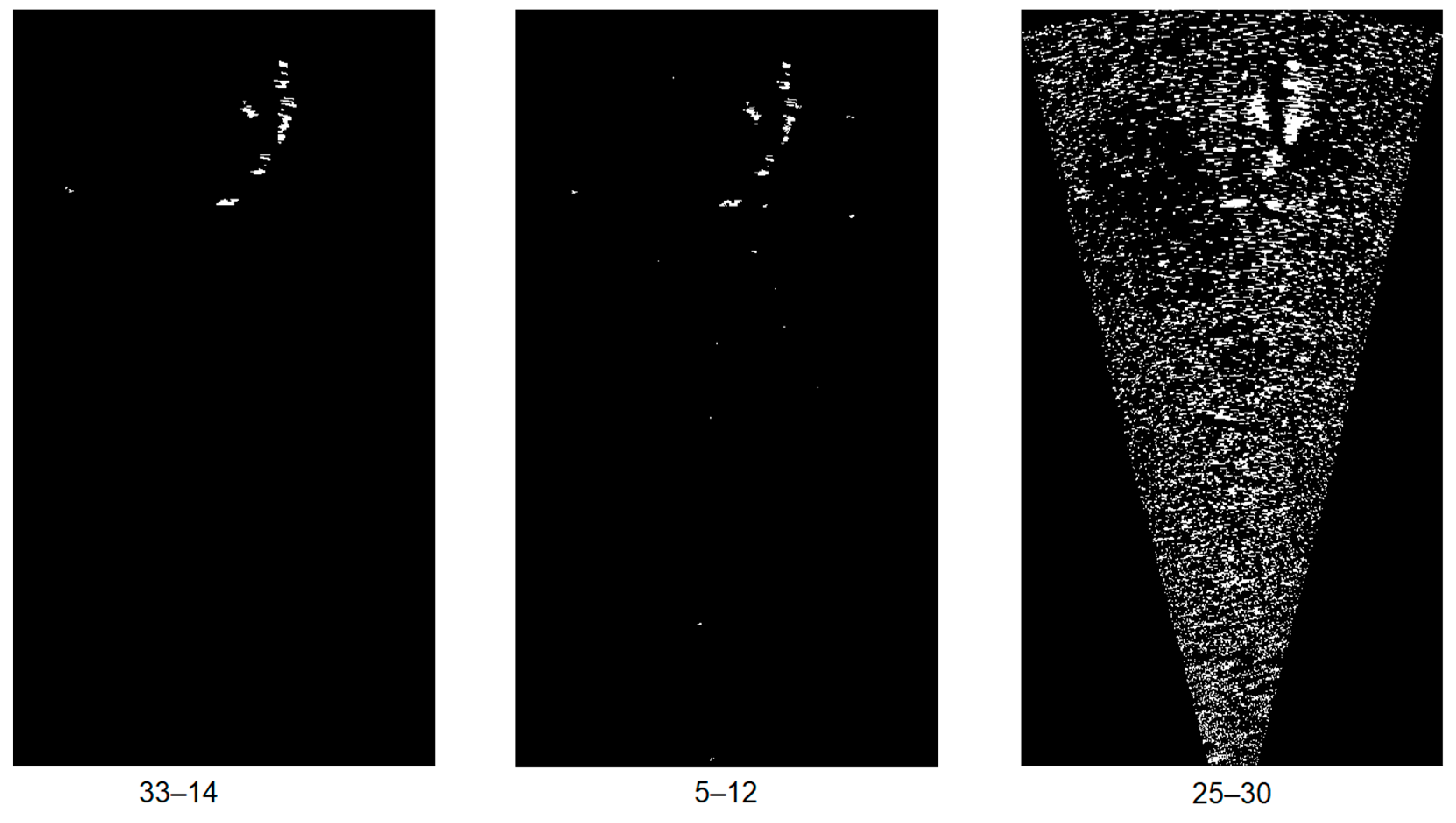

2.2.3. Background Subtraction—Foreground Extraction

2.2.4. Foreground Masking using Optical Flow

- Imbalanced background-to-foreground pixel number ratios.

- High variances in the foreground and background pixel values.

- Small mean difference between foreground and background pixels.

- First, threshold the optical flow field output frame using Otsu’s method to obtain the optical flow mask. The background detected in this step is ignored in all further calculations for that frame.

- Detect connected components of the optical flow mask. Each component corresponds to a location of more pronounced motion on the frame.

- For each connected component, retrieve the corresponding pixel intensity values from the original frame and apply Otsu’s method to those values to threshold the component.

- Taken together, the final segmentations of all connected components of the optical flow mask represent the overall fish target mask for that frame.
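The two-stage thresholding steps above can be sketched as follows. This is a minimal, dependency-free illustration rather than the authors' implementation: it assumes the optical flow field has already been reduced to a per-pixel magnitude, uses 4-connectivity for the connected components, and keeps the brighter Otsu class as foreground. The function names (`otsu_threshold`, `connected_components`, `two_stage_mask`) are hypothetical. In practice a library routine such as OpenCV's `cv2.threshold` with `cv2.THRESH_OTSU` and `cv2.connectedComponents` would likely replace the hand-written loops.

```python
from collections import deque


def otsu_threshold(values):
    """Return the level t that maximises between-class variance (class 0: v <= t)."""
    values = list(values)
    hist = {}
    for v in values:
        hist[v] = hist.get(v, 0) + 1
    levels = sorted(hist)
    if len(levels) < 2:
        # A uniform region has no brighter class; nothing will exceed t.
        return levels[0] if levels else 0
    total, total_sum = len(values), sum(values)
    best_t, best_var = levels[0], -1.0
    w0, sum0 = 0, 0
    for t in levels[:-1]:
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        mu0, mu1 = sum0 / w0, (total_sum - sum0) / w1
        var = w0 * w1 * (mu0 - mu1) ** 2  # unnormalised between-class variance
        if var > best_var:
            best_var, best_t = var, t
    return best_t


def connected_components(mask):
    """Return lists of (row, col) pixels, one list per 4-connected component."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    components = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            queue, comp = deque([(r, c)]), []
            while queue:
                y, x = queue.popleft()
                comp.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            components.append(comp)
    return components


def two_stage_mask(frame, flow_magnitude):
    """Stage 1: Otsu on flow magnitude. Stage 2: Otsu on intensity per component."""
    h, w = len(frame), len(frame[0])
    t_flow = otsu_threshold(v for row in flow_magnitude for v in row)
    flow_mask = [[v > t_flow for v in row] for row in flow_magnitude]
    target = [[False] * w for _ in range(h)]
    for comp in connected_components(flow_mask):
        # Only pixels inside the moving component contribute to this threshold.
        t = otsu_threshold(frame[y][x] for y, x in comp)
        for y, x in comp:
            if frame[y][x] > t:
                target[y][x] = True
    return target
```

Restricting the second threshold to each moving component means that bright but static structures outside the optical flow mask never enter the intensity histogram.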

- The number of scales to use for the multi-scale optical flow component estimation (pyramid levels).

- The down-sampling factor between successive scale levels used in the iterative calculation (pyramid scale).

- The typical size, in pixels, of each neighbourhood that is polynomially approximated at each step.

- The size, in pixels, of the Gaussian filter used to average displacement values estimated from different iterations.
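To make the first two parameters concrete, the sketch below lists the frame size at each level of a multi-scale pyramid for a given number of levels and pyramid scale. It is an illustration under stated assumptions: the pyramid scale is taken as a factor between 0 and 1 applied once per level, level 0 is the original frame, and sizes are rounded to the nearest pixel. The function name `pyramid_shapes` and the frame size in the example are hypothetical, and an actual library may round differently. If OpenCV's `calcOpticalFlowFarneback` is used, these two quantities would plausibly correspond to its `levels` and `pyr_scale` arguments, although the text here does not name the implementation.

```python
def pyramid_shapes(height, width, levels, pyr_scale):
    """Return the (height, width) of every pyramid level, finest level first.

    levels    -- number of scales, counting the original frame as level 0
    pyr_scale -- down-sampling factor between successive levels, 0 < pyr_scale < 1
    """
    if levels < 1:
        raise ValueError("levels must be at least 1")
    if not 0 < pyr_scale < 1:
        raise ValueError("pyr_scale must lie strictly between 0 and 1")
    shapes = []
    for level in range(levels):
        factor = pyr_scale ** level
        # Never let a dimension collapse below one pixel.
        shapes.append((max(1, round(height * factor)), max(1, round(width * factor))))
    return shapes
```

For instance, `pyramid_shapes(512, 96, 3, 0.5)` returns `[(512, 96), (256, 48), (128, 24)]`. The neighbourhood and Gaussian filter sizes are specified in pixels at each level, so they cover a proportionally larger part of the scene at the coarser levels.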

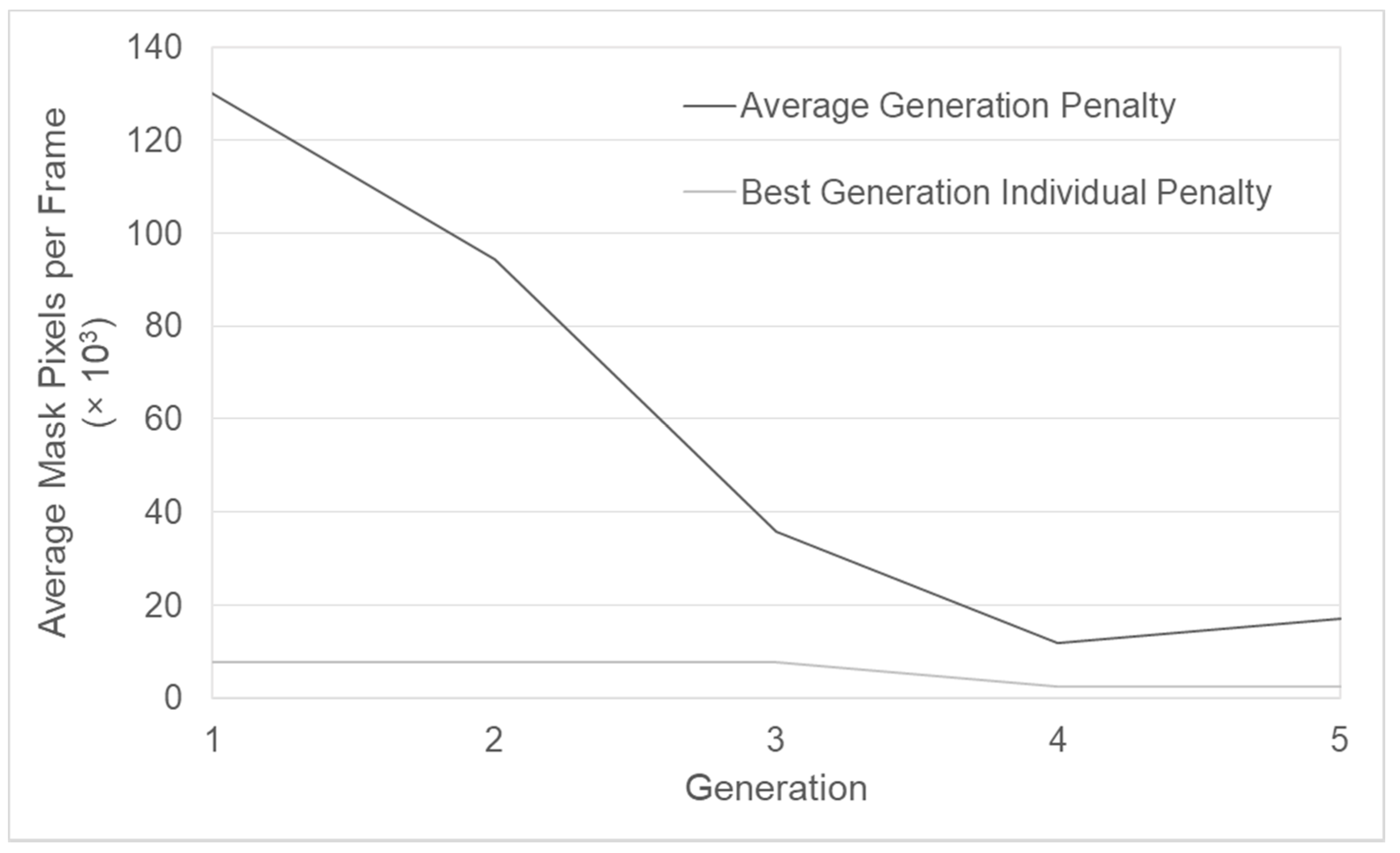

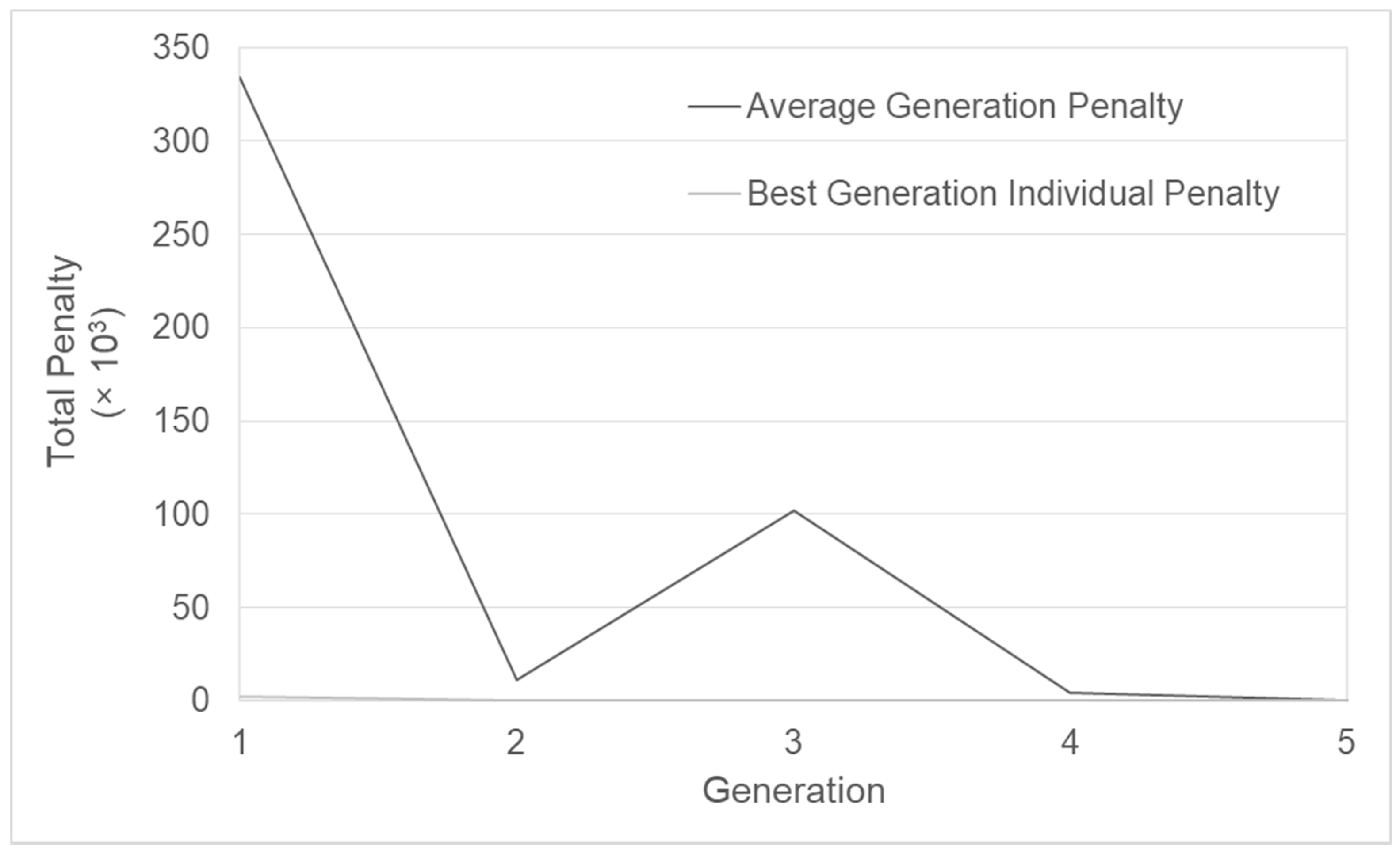

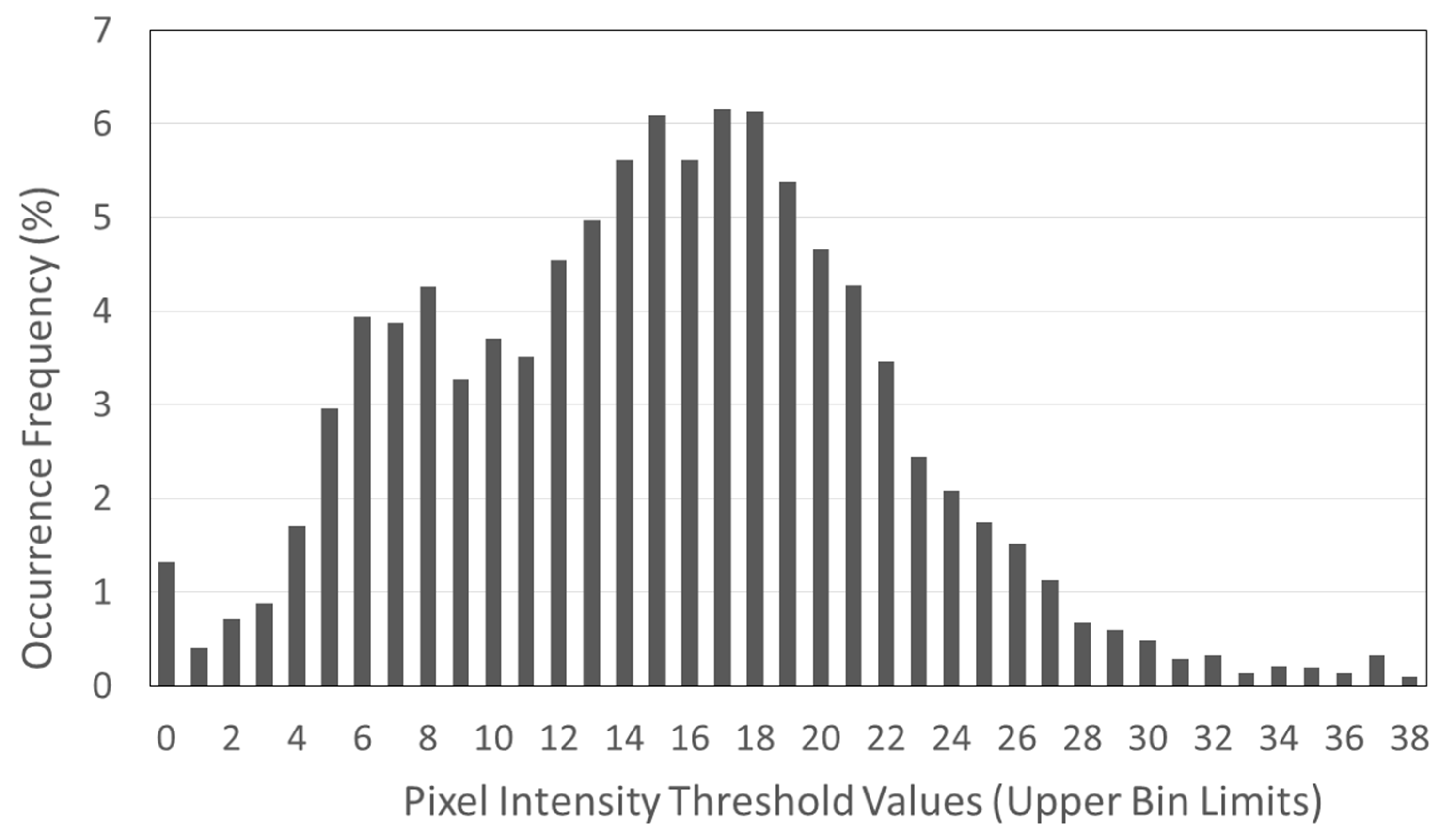

2.2.5. Genetic Algorithm—Conditionally Optimal Mask

- Population size: 6 individuals.

- Generation limit: 5 generations.

- Average number of masked pixels per frame.

- Constant penalty per very small or very large object.
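A genetic algorithm with the population size and generation limit listed above could be organised roughly as in the following sketch. Only the two sizes and the two fitness ingredients (average number of masked pixels per frame, constant penalty per very small or very large object) come from the text. Everything else is an assumption made for illustration: the search ranges and parameter names in `SEARCH_SPACE`, the penalty value, the choice to maximise masked pixels minus penalties, and the selection, crossover, mutation and elitism operators. The `evaluate` callback is hypothetical and stands in for running the masking workflow with one parameter set.

```python
import random

POPULATION_SIZE = 6    # from the text
GENERATION_LIMIT = 5   # from the text
PENALTY = 50.0         # hypothetical constant penalty per rejected object

# Hypothetical search ranges for the four optical flow parameters.
SEARCH_SPACE = {
    "levels": [1, 2, 3, 4],
    "pyr_scale": [0.5, 0.6, 0.7, 0.8],
    "neighbourhood": [5, 7, 9, 11],
    "gaussian_size": [5, 9, 15, 21, 33],
}


def fitness(avg_masked_pixels, n_bad_objects, penalty=PENALTY):
    """Assumed form: reward masked pixels, subtract a constant per bad object."""
    return avg_masked_pixels - penalty * n_bad_objects


def random_individual(rng):
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def crossover(a, b, rng):
    """Uniform crossover: each gene is taken from either parent."""
    return {name: rng.choice((a[name], b[name])) for name in SEARCH_SPACE}


def mutate(individual, rng, rate=0.2):
    """Resample each gene with probability `rate`."""
    return {
        name: rng.choice(SEARCH_SPACE[name]) if rng.random() < rate else value
        for name, value in individual.items()
    }


def run_ga(evaluate, seed=0):
    """evaluate(individual) -> (avg_masked_pixels, n_bad_objects)."""
    rng = random.Random(seed)
    population = [random_individual(rng) for _ in range(POPULATION_SIZE)]
    best, best_score = None, float("-inf")
    for generation in range(GENERATION_LIMIT):
        scores = [fitness(*evaluate(ind)) for ind in population]
        ranked = [
            ind
            for _, ind in sorted(zip(scores, population), key=lambda p: p[0], reverse=True)
        ]
        if max(scores) > best_score:
            best_score, best = max(scores), ranked[0]
        if generation == GENERATION_LIMIT - 1:
            break  # no need to breed after the final evaluation
        parents = ranked[: POPULATION_SIZE // 2]   # truncation selection
        children = []
        while len(children) < POPULATION_SIZE - 1:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = [ranked[0]] + children        # elitism keeps the best
    return best, best_score
```

With six individuals and five generations, the workflow is evaluated at most 30 times per run, which keeps the search cheap when each evaluation means processing a full frame sequence.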

2.3. Output and Evaluation

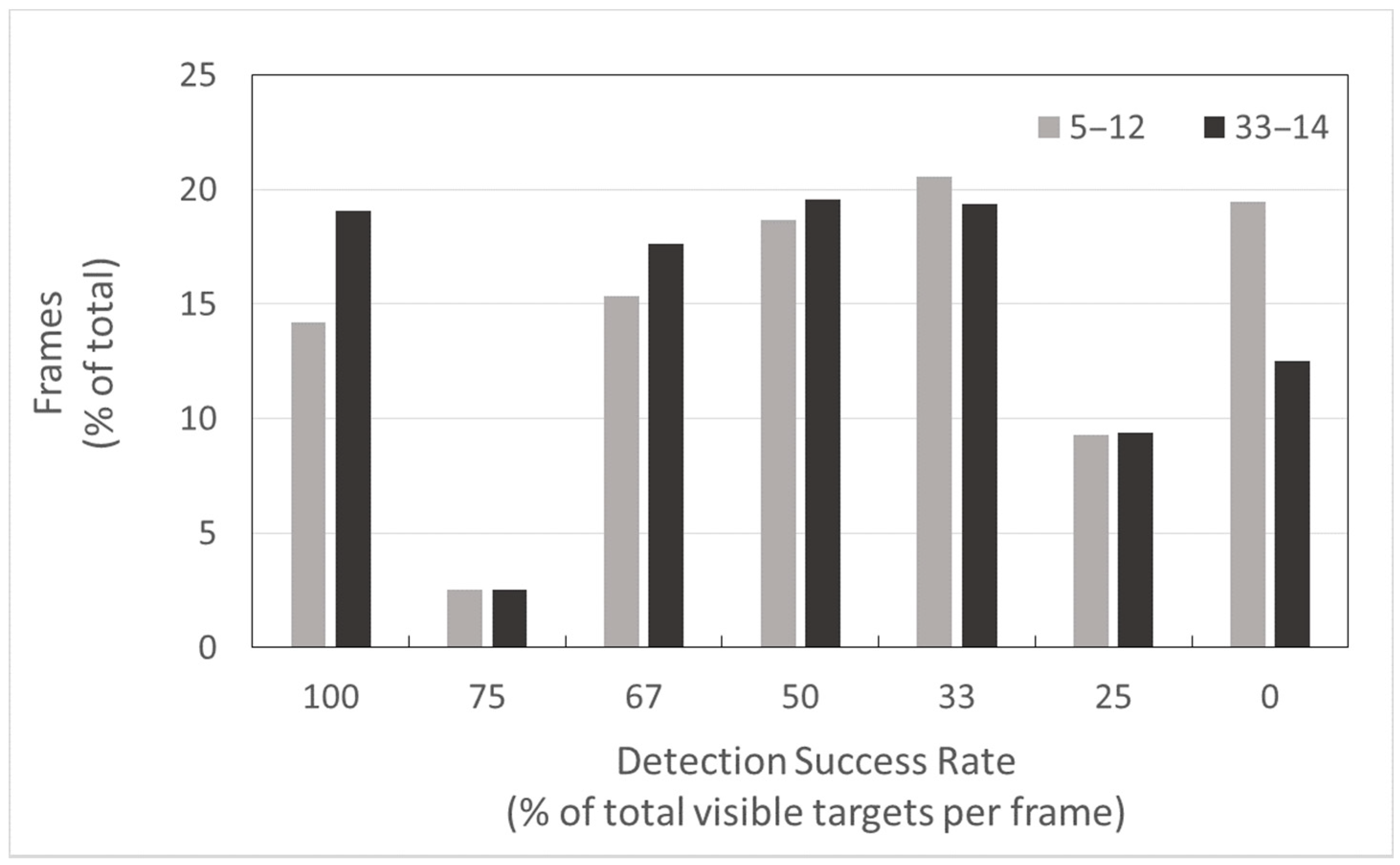

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Comparison | Percentage of Total Frames |
|---|---|
| 33–14 > 5–12 | 22.3% |
| 33–14 < 5–12 | 11.8% |
| 33–14 = 5–12 | 65.9% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Perivolioti, T.-M.; Tušer, M.; Terzopoulos, D.; Sgardelis, S.P.; Antoniou, I. Optimising the Workflow for Fish Detection in DIDSON (Dual-Frequency IDentification SONar) Data with the Use of Optical Flow and a Genetic Algorithm. Water 2021, 13, 1304. https://doi.org/10.3390/w13091304
