1. Introduction

In the face of natural disasters such as flash flooding, prompt information is crucial for establishing a mitigation plan and finding the best route for first responders. Extreme rainfall causes unprecedented flooding, fatalities, and hundreds of billions of US dollars in damages. Such extreme floods not only damage roads and bridges but also cut off evacuation routes and rescue paths. In many parts of the US, occurrences of “rare” extreme precipitation and flooding events are now a new normal [1].

Several types of observations are used to monitor and detect floods in urban areas. Typical measurement methods include in-situ water level sensors in streams, remote sensing from satellites and airborne drones, on-site images from social media, and traffic-monitoring systems. Each observation type helps fill the information gap and contributes to a holistic picture of urban flooding at different spatiotemporal scales. Despite advances in measurement techniques, each approach has known limitations. For instance, in-situ water-level sensors are suited only to stream monitoring, and instrumenting entire hydrological basins, which can cover hundreds of square kilometers, is practically and economically infeasible. Satellites can monitor water levels and flow remotely only at a low temporal frequency. Optical measurements from satellites and drones cover only short periods and are often impossible during floods with severe weather: thick cloud layers block satellite observation, while drones cannot fly in strong wind.

The vertical resolution of current synthetic aperture radars (tens of centimeters), combined with a repeat cycle of several days, is insufficient for the task [2, 3]. Without reliable real-time flooding information, local citizens are exposed to danger. To address these limitations, effective, inexpensive, and reliable approaches are needed that do not require installing additional facilities or equipment to detect flooding or inundation near civil infrastructure (e.g., highways and bridges). On-site images from traffic monitoring systems, which are photographed automatically at regular intervals, provide critical information for citizens and government, sometimes as the only reliable and practical source, to identify the occurrence of flooding under extreme weather conditions in real time, and they spare people the danger of taking pictures in these conditions [4, 5]. Despite these advantages, low-resolution images such as closed-circuit television (CCTV) photos are often corrupted by noise introduced during transmission or capture, by inaccurate equipment, or by the weather (e.g., raindrops or light refraction on camera lenses). Even so, the low-resolution images from traffic monitoring cameras or CCTV are one of the few reliable sources of information about outside conditions during extreme natural disasters [6, 7].

The low resolution creates a dilemma: cameras are installed in many places, such as intersections and highways, but the resolution of CCTV is not fine enough for decision-makers to use the footage to decide whether to evacuate people to a safe place or to guide traffic along a correct route. In addition, it is challenging to detect inundation from CCTV images using only a deep learning approach, a common choice for object detection, because it requires many images taken from the same position under different conditions to form the training database [8]. Unless the rain is heavy enough to quickly accumulate water on the road while the installed equipment is recording, it is difficult to obtain images in which the water level changes noticeably. In terms of the recognition target, detecting inundation from an image also differs from conventional image processing or target tracking. In conventional image processing, the water in an image may be regarded as noise or unnecessary background to be removed in order to better recognize common objects of interest such as cars or humans. For the detection of inundation, on the contrary, the edge of water bodies with changing boundaries and reflectivity is the object of interest, while all objects other than the water need to be filtered out.

De-noising filtering enhances the quality of an image by removing noise mixed in when the image is digitized and by reconstructing the signal of the original image from features extracted in the image [9]. The impact of image noise can be decreased by modifying pixel values to adjust brightness and contrast with de-noising filtering methods (e.g., mean filtering and median filtering) [10]. In recent years, engineering communities have developed further de-noising technologies [9, 11]. Thresholding of discrete wavelet transform (DWT) coefficients has been the main focus of de-noising studies [12, 13, 14]. The wavelet is usually calculated by using spatial Gaussian variables, while different wavelets are derived from different Gaussian multi-order derivative functions [15, 16]. The principle of wavelet coefficient thresholding is to set a processing range by a threshold to achieve de-noising [17]. The wavelet coefficients method has the characteristics of bandpass filtering. Thus, wavelet decomposition and reconstruction make de-noising feasible [18, 19]. Compared with other de-noising filtering techniques that use a fixed threshold (e.g., mean filtering and median filtering), the wavelet coefficients method uses a customized threshold, known as an adaptive threshold, that can separate noise more accurately.

Since wavelet coefficients for de-noising are well studied, many threshold approaches have been proposed. Among them, the wavelet Bayes shrink approach is reported to be the most effective [15]. Based on Bayesian theory, the Bayes shrink threshold changes according to the image information, so it is also called an adaptive threshold [16, 20, 21]. The most important concept of de-noising is that there is no single best method, only the most suitable one, because the noise of each image is different [22]. Thus, it is important to test and choose the most accurate de-noising filter for a CCTV image to enhance image segmentation, which in turn allows estimating the inundated area.

Images affected by the natural environment (e.g., mist, light refraction) may be overexposed or foggy. In addition to de-noising, advanced image processing approaches such as the dark channel prior and dehaze filtering can eliminate the effects of mist and light refraction. The dark channel prior separates the darkest pixels from the image; the remaining pixels form a relatively bright image, called the foggy image. With the dark channel prior, the difference between a foggy image and a fog-free image can be calculated from the light refraction and brightness of the environment. De-hazing obtains the fog-free image by modifying the information of the foggy image and combining it with the dark channel prior. With dehaze filtering calculated from the dark channel and light refraction, the fog can be effectively eliminated [23, 24]. After de-noising the image, the edge and contour of the objects must be determined first in order to identify the inundation or water area. Image segmentation is an effective way to find the edges of the objects in the image.

Image segmentation is one of the most active topics in image processing and computer vision and is the basis for image analysis, feature extraction, and recognition. It refers to dividing the image into several areas based on grayscale, color, texture, and shape. Features assigned to the same area are similar, while there are significant differences between different areas. Image segmentation algorithms share a common principle and can be divided into region-based segmentation, edge detection segmentation, and clustering segmentation. Dijk and Hollander [25] describe each algorithm in unified frameworks that introduce separate clusters and data weight functions. Felzenszwalb and Huttenlocher [26] study two different local neighborhoods in constructing the graph; an important characteristic of their method is its ability to preserve detail. We deploy three different image-segmentation methods: k-means clustering segmentation, Otsu region-based segmentation, and Bayesian threshold segmentation.

A neural network may be another solution to the limitations of CCTV data. It uses a large number of different images of flood and water level conditions to establish a database as a reliable statistical basis for identifying the status of flash floods [27]. However, a neural network for flood monitoring requires many images with different views of the same location. This requirement is hard to meet because, in practice, there are not enough images from the same CCTV location under different flooding conditions to build a suitable database.

Thus, this paper presents an effective image-processing procedure that requires only a single image to detect the inundation area in a CCTV image to overcome limitations on current flooding detection. First, we investigate and propose de-noising approaches to improve the quality of the image. Then we utilize different image segmentation methods, including k-means segmentation, Otsu segmentation, and Bayesian segmentation, to detect flooding areas. The obtained segmentation results are compared to determine which matches the flooded area in the CCTV image best.

2. Methodology

To address the challenges to detect inundation in CCTV images using other approaches including a neural network, the paper proposed a de-noising and image segmentation approach to find the water area in the image by de-noising and image segmentation. The first step is to find the most suitable de-noising method for CCTV images. The second step is to use image segmentation to find the edge further to find the water area in the image. The flowchart of this study is shown in

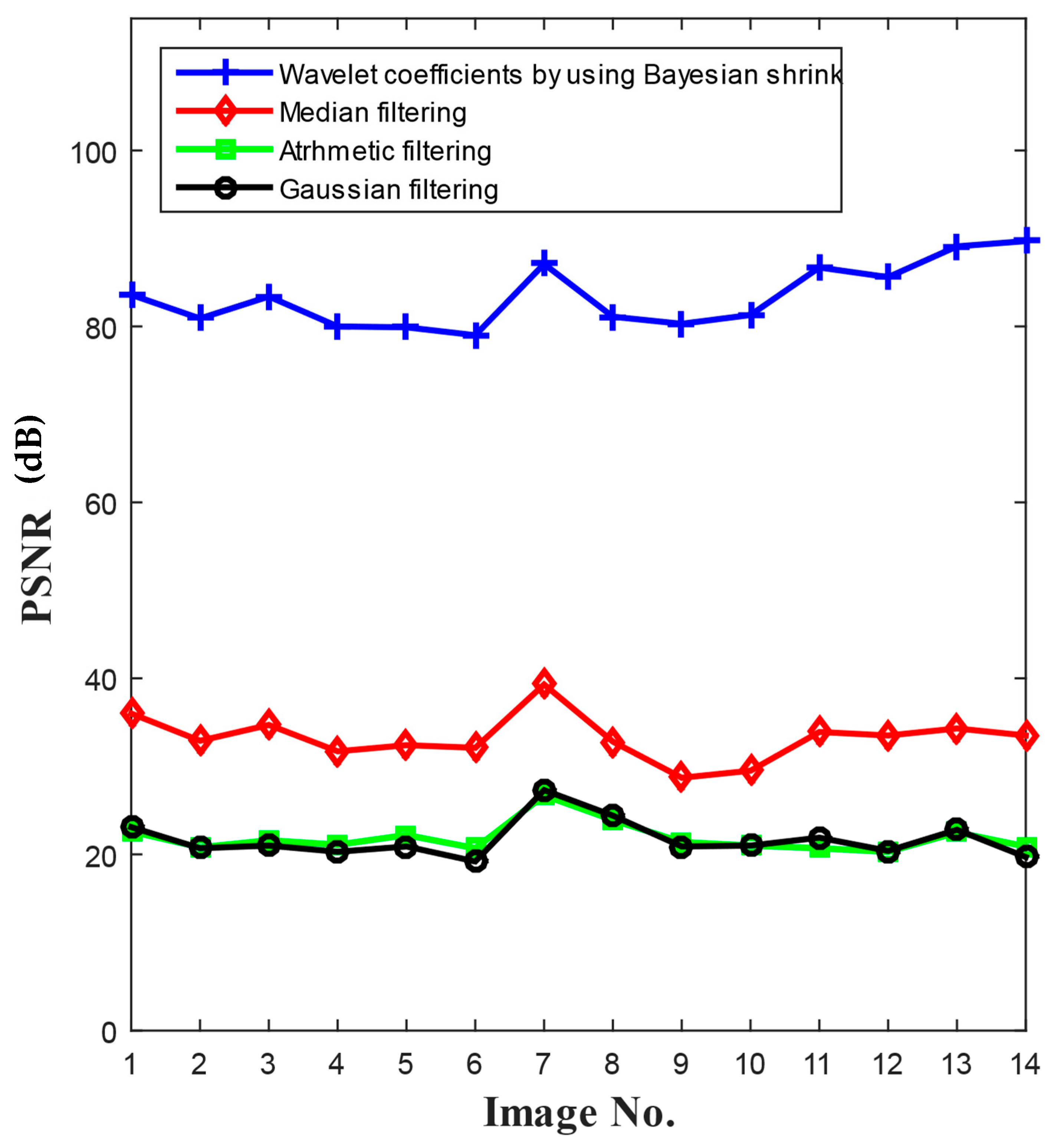

Figure 1. The effectiveness of de-noising is determined by the peak signal-to-noise ratio (PSNR), which is commonly used for image compression and reconstruction after image de-noising. The higher the PSNR, the better the de-noising effect, and the more original image information is retained.

In image processing, we face various random noises: Gaussian noise, impulse noise, and speckle noise. They are distributed across the CCTV image, are caused by digitization, transmission, compression, or the equipment, and affect the performance of image processing. There are two requirements for de-noising filtering: keeping important information intact (e.g., the object edges) and making the image visually clearer so that its information can be clearly seen. We study several de-noising filtering techniques, such as mean filtering and median filtering, which belong to image enhancement. The performance of a de-noising method depends on the type of noise. For example, median filtering is very effective in smoothing impulse noise while keeping the sharp edges of the image. Effective de-noising in turn allows image segmentation to find the edges of inundated areas effectively and accurately.

To find out which type of de-noising method is the most suitable for flood identification from CCTV images, we need to understand the type of noise. During image acquisition, encoding, transmission, and processing, noise always appears in the digital image. Without prior knowledge of filtering techniques, it is difficult to remove noise from digital images. Image noise is a random change in brightness or color information in the captured image, a degradation of the image signal caused by external sources. We can model a noisy image as

$$g(x, y) = f(x, y) + n(x, y)$$

where $g(x, y)$ is the noisy image, $n(x, y)$ is the image noise, and $f(x, y)$ is the original image. Before de-noising, we need to understand which noises are in the image. Image noise is typically divided into three types: Gaussian noise, impulse noise, and speckle noise. Gaussian noise is generated by adding a random Gaussian function to the image, impulse noise is caused by adding random white and black dots to the image, and speckle noise is a granular noise that inherently exists in an image and reduces its quality. An example of adding noise to the image is shown in Figure 2. Due to the wide variety of image noise, it is necessary to test different de-noising methods separately to determine the most suitable one for CCTV images.
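The three noise types can be illustrated with a minimal pure-Python sketch on a grayscale image stored as a list of rows. The function names, the 0 to 255 value range, and the multiplicative form used for speckle noise are assumptions made for illustration; they are not taken from the experiments in this paper.

```python
import random


def clip(value):
    """Round to an integer and keep it inside the 8-bit range 0..255."""
    return max(0, min(255, int(round(value))))


def add_gaussian_noise(img, sigma, rng):
    """Additive noise: every pixel receives a zero-mean Gaussian offset."""
    return [[clip(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def add_impulse_noise(img, prob, rng):
    """Salt-and-pepper noise: with probability `prob` a pixel becomes 0 or 255."""
    return [
        [rng.choice((0, 255)) if rng.random() < prob else p for p in row]
        for row in img
    ]


def add_speckle_noise(img, sigma, rng):
    """Multiplicative noise (assumed model): p * (1 + n), n ~ N(0, sigma^2)."""
    return [[clip(p * (1.0 + rng.gauss(0.0, sigma))) for p in row] for row in img]


rng = random.Random(0)                      # fixed seed for repeatability
image = [[128] * 4 for _ in range(4)]       # flat gray test image
noisy_gaussian = add_gaussian_noise(image, 10.0, rng)
noisy_impulse = add_impulse_noise(image, 0.1, rng)
noisy_speckle = add_speckle_noise(image, 0.2, rng)
```

Only the Gaussian case matches the additive model literally; impulse noise replaces pixel values and the speckle model assumed here scales them, which is one reason the de-noising methods are tested separately.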

In the remainder of Section 2, the first half presents several de-noising methods (mean filtering, median filtering, Gaussian filtering, and wavelet coefficients), and the second half presents the image segmentation methods (k-means segmentation, Otsu segmentation, and Bayesian segmentation).

2.1. De-Noising Method

Image enhancement is performed by changing the pixel values of an image with a convolution approach (e.g., spatial convolution or frequency convolution), a mathematical operation that determines a new pixel value from a linear combination of a pixel value and its neighboring pixels. The spatial convolution is calculated by simple arithmetic on pixel values, such as addition, subtraction, multiplication, and division. The frequency convolution is calculated on the image after the fast Fourier transform (FFT), which converts the information from the spatial domain to the frequency domain [27]. The principle of image enhancement is to modify pixels to change brightness and contrast and to perform simple de-noising [28, 29].

2.1.1. Median Filtering and Arithmetic Filtering

If a signal changes gently, the output value at a pixel can be replaced by the statistical median of the values in a neighborhood of a certain size around that pixel; this neighborhood is called a window in the signal-processing field. The larger the window, the smoother the output, but a large window may also erase useful signal characteristics [30]. To keep the useful signal, the size of the window should be determined according to the signal and noise characteristics. Usually, the window size is odd because an odd number of data points (e.g., pixel values) has a unique median value. The concept of mean filtering is similar to median filtering; the only difference is that mean filtering uses the arithmetic mean of the window instead of the median [31].
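The difference between the two window filters can be sketched as follows. This is a minimal illustration, assuming a grayscale image stored as a list of rows and replicated borders; the helper name `window_filter` is hypothetical.

```python
from statistics import mean, median


def window_filter(img, size, reducer):
    """Replace each pixel by `reducer` of its size x size window.

    Border pixels reuse the nearest valid pixel (replicated border).
    """
    if size % 2 == 0:
        raise ValueError("window size should be odd")
    h, w, r = len(img), len(img[0]), size // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-r, r + 1)
                for dx in range(-r, r + 1)
            ]
            out[y][x] = reducer(window)
    return out


flat = [[100] * 5 for _ in range(5)]
flat[2][2] = 255                      # a single impulse ("salt") pixel

median_result = window_filter(flat, 3, median)
mean_result = window_filter(flat, 3, mean)
```

With a 3 x 3 window the median removes the impulse completely, whereas the mean only spreads it over the neighboring pixels, which is consistent with the statement that median filtering suits impulse noise.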

2.1.2. Gaussian Filtering

Gaussian filtering is a commonly used linear filtering algorithm that smooths an image with a two-dimensional Gaussian function. The principle of Gaussian filtering is a weighted average of the pixel values of the image, with weights given by the Gaussian distribution. More precisely, Gaussian filtering is the result of convolving the image with a Gaussian (normal) distribution [32]. The value of each pixel is obtained by a weighted average of its own value and the values of nearby pixels. The two-dimensional Gaussian function is:

$$G(x, y) = \frac{1}{2\pi\sigma^{2}} \exp\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right)$$

where $x$ and $y$ are the pixel coordinates along the x- and y-axis of the image, respectively, and $\sigma$ is the standard deviation of the Gaussian distribution.
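As a small illustration, the sketch below samples the standard two-dimensional Gaussian, exp(-(x^2 + y^2) / (2 sigma^2)) / (2 pi sigma^2), on a square grid centered on the pixel being filtered and normalizes the weights so that they sum to one. The kernel size and the function name `gaussian_kernel` are assumptions for illustration.

```python
import math


def gaussian_kernel(size, sigma):
    """Return a size x size list of Gaussian weights that sum to 1."""
    r = size // 2
    raw = [
        [
            math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            / (2.0 * math.pi * sigma * sigma)
            for x in range(-r, r + 1)
        ]
        for y in range(-r, r + 1)
    ]
    total = sum(sum(row) for row in raw)
    # Normalizing keeps the overall image brightness unchanged after filtering.
    return [[v / total for v in row] for row in raw]


kernel = gaussian_kernel(5, 1.0)
```

The center weight is the largest and the weights decay with distance, so nearby pixels contribute more to the weighted average than distant ones.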

2.1.3. Wavelet Coefficients

The discrete wavelet transform (DWT) can be interpreted as signal decomposition into a set of independent, spatially oriented frequency channels. Signal decomposition means that the signal passes through two complementary filters (a low-pass and a high-pass filter) and emerges as approximation and detail signals, also known as wavelet coefficients [33]. The approximation and detail signals can be assembled back into the original signal without loss of information; this process is called reconstruction. For decomposition, the image is divided into four sub-bands based on their frequency, as shown in Figure 3a. The four sub-bands arise from the separable application of filters in the vertical and horizontal directions. Each coefficient in the sub-bands represents a spatial area corresponding to approximately a $2 \times 2$ area of the original image. The frequencies $\omega$ can be divided into two ranges, the low-frequency range $0 < |\omega| < \pi/2$ and the high-frequency range $\pi/2 < |\omega| < \pi$, and each sub-band is labeled L or H accordingly. These four sub-bands present image information called details: $HH_1$ is the diagonal detail, $HL_1$ is the vertical detail, $LH_1$ is the horizontal detail, and $LL_1$ is the remaining image detail, where the number 1 denotes the first scale of decomposition [34]. To obtain the next, coarser scale of wavelet coefficients, the sub-band $LL_1$ is further decomposed, as shown in Figure 3b. It is again divided into four sub-bands based on their frequency, where the low-frequency range in the second scale is $0 < |\omega| < \pi/4$ and the high-frequency range in the second scale is $\pi/4 < |\omega| < \pi/2$. Each coefficient in the sub-bands of the second scale, $LL_2$, $LH_2$, $HL_2$, and $HH_2$, represents a spatial area corresponding to approximately a $4 \times 4$ area of the original picture. The decomposition process continues until a final scale is reached at which the degree of matching between the reconstructed signal and the original signal is 90%. The DWT shows that wavelet analysis is a measure of similarity between the basis wavelets and the signal function [35]. The wavelet coefficients method for image de-noising is thus a process of decomposition and reconstruction of details.
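One decomposition level and its lossless reconstruction can be sketched with the Haar wavelet. The Haar basis is chosen here only because it is the simplest case; the text does not state which wavelet family was used, and the function names are hypothetical. The image is assumed to be a list of rows with even height and width.

```python
def haar_dwt2(img):
    """One-level 2-D Haar transform.

    Returns (approx, horiz, vert, diag). Each coefficient summarizes one
    2 x 2 block of the input, so every sub-band has half the width and height.
    """
    approx, horiz, vert, diag = [], [], [], []
    for y in range(0, len(img), 2):
        rows = ([], [], [], [])
        for x in range(0, len(img[0]), 2):
            a, b = img[y][x], img[y][x + 1]
            c, d = img[y + 1][x], img[y + 1][x + 1]
            rows[0].append((a + b + c + d) / 2)  # low-pass in both directions
            rows[1].append((a + b - c - d) / 2)  # change between the two rows
            rows[2].append((a - b + c - d) / 2)  # change between the two columns
            rows[3].append((a - b - c + d) / 2)  # diagonal change
        for band, row in zip((approx, horiz, vert, diag), rows):
            band.append(row)
    return approx, horiz, vert, diag


def haar_idwt2(approx, horiz, vert, diag):
    """Inverse of haar_dwt2: rebuild the image from the four sub-bands."""
    h, w = len(approx), len(approx[0])
    img = [[0.0] * (2 * w) for _ in range(2 * h)]
    for y in range(h):
        for x in range(w):
            s, p, q, r = approx[y][x], horiz[y][x], vert[y][x], diag[y][x]
            img[2 * y][2 * x] = (s + p + q + r) / 2
            img[2 * y][2 * x + 1] = (s + p - q - r) / 2
            img[2 * y + 1][2 * x] = (s - p + q - r) / 2
            img[2 * y + 1][2 * x + 1] = (s - p - q + r) / 2
    return img


image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
bands = haar_dwt2(image)
restored = haar_idwt2(*bands)
```

Applying `haar_dwt2` again to the approximation sub-band gives the second scale, where each coefficient covers a 4 x 4 area of the original image.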

The wavelet threshold is the reference point used to divide the coefficients of the image sub-bands. The image and the noise have different characteristics after the wavelet transform. After the noisy signal is decomposed in the wavelet scale, the information of the image is mainly concentrated in the low-resolution sub-bands [36], while the noise is mainly distributed over the high-frequency sub-bands. Thus, the choice of wavelet threshold directly affects the performance of wavelet de-noising. The wavelet coefficients of each scale are classified according to the threshold algorithm used [35]. If a wavelet coefficient is smaller in magnitude than the threshold, it is set to zero; otherwise, its magnitude is kept (hard thresholding) or slightly decreased (soft thresholding) [34]. Because of this characteristic, wavelet thresholding is very effective in energy compaction and can better preserve important image features such as edges. Finding an optimal threshold is a tedious process: a smaller threshold leaves noise in the image and gives poor de-noising performance, while a larger threshold removes image details as if they were noise [16].
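The two rules described above, keeping the magnitude or slightly decreasing it, correspond to what is usually called hard and soft thresholding. A minimal sketch for a single coefficient follows; the function names are chosen for illustration.

```python
import math


def hard_threshold(coeff, t):
    """Zero coefficients whose magnitude does not exceed t; keep the rest."""
    return coeff if abs(coeff) > t else 0.0


def soft_threshold(coeff, t):
    """Zero small coefficients and shrink the others toward zero by t."""
    return math.copysign(max(abs(coeff) - t, 0.0), coeff)


coefficients = [5.0, -5.0, 1.0, -1.0]
hard = [hard_threshold(c, 2.0) for c in coefficients]
soft = [soft_threshold(c, 2.0) for c in coefficients]
```

Here the two large coefficients survive both rules, unchanged under hard thresholding and reduced in magnitude by 2 under soft thresholding, while the two small ones are set to zero.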

In this paper, Bayes shrink is used for thresholding the wavelet coefficients because it has the best de-noising performance for high-frequency noise [20]. Bayes shrink is known to be effective for images with Gaussian noise. The observation model is expressed as follows:

$$Y = X + V$$

where $Y$ is the wavelet transform of the noisy image, $X$ is the wavelet transform of the original image, and $V$ denotes the wavelet transform of the noise components, which follow the Gaussian distribution $N(0, \sigma_v^{2})$. Since $X$ and $V$ are mutually independent, the variances $\sigma_y^{2}$, $\sigma_x^{2}$, and $\sigma_v^{2}$ of $Y$, $X$, and $V$ are related by:

$$\sigma_y^{2} = \sigma_x^{2} + \sigma_v^{2}$$

It has been shown that the noise variance $\sigma_v^{2}$ can be estimated from the first decomposition level diagonal high-frequency sub-band, $HH_1$, by the robust and accurate median estimator [37]:

$$\sigma_v = \frac{\operatorname{median}\left(|HH_1|\right)}{0.6745}$$

The variance of the degraded image can be estimated as:

$$\sigma_y^{2} = \frac{1}{N} \sum_{n=1}^{N} W_n^{2}$$

where $W_n$ are the wavelet coefficients on every scale and $N$ is the total number of wavelet coefficients. Bayes shrink applies a soft threshold that is sub-band and level dependent and near-optimal:

$$T = \frac{\sigma_v^{2}}{\sigma_x}$$

where

$$\sigma_x = \sqrt{\max\left(\sigma_y^{2} - \sigma_v^{2},\, 0\right)}$$

The basic framework of the wavelet transform-based image de-noising is shown in Figure 4.
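The threshold computation can be sketched in a few lines, assuming the standard Bayes shrink form: the noise level is the median absolute value of the first-level diagonal sub-band divided by 0.6745, and the threshold is the noise variance divided by the estimated signal standard deviation. The function name, the flat-list inputs, and the fallback used when the estimated signal variance is zero are assumptions for illustration.

```python
import math
from statistics import median


def bayes_shrink_threshold(subband, hh1):
    """Bayes shrink threshold for one detail sub-band.

    subband: flat list of the coefficients to be thresholded.
    hh1:     flat list of first-level diagonal coefficients (noise estimate).
    """
    sigma_v = median(abs(c) for c in hh1) / 0.6745        # robust noise estimate
    var_y = sum(c * c for c in subband) / len(subband)    # variance of noisy data
    sigma_x = math.sqrt(max(var_y - sigma_v ** 2, 0.0))   # signal std estimate
    if sigma_x == 0.0:
        # Assumed fallback: the sub-band looks like pure noise, so choose a
        # threshold that removes every coefficient in it.
        return max(abs(c) for c in subband)
    return sigma_v ** 2 / sigma_x


hh1 = [0.6745, -0.6745, 0.6745, -0.6745]   # gives sigma_v = 1
detail = [3.0, -3.0, 3.0, -3.0]            # gives var_y = 9, sigma_x = sqrt(8)
threshold = bayes_shrink_threshold(detail, hh1)
```

Because the threshold is recomputed for every sub-band, strongly textured sub-bands (large signal variance) receive a small threshold and nearly flat ones a large threshold, which is what makes the method adaptive.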

2.2. Image Segmentation

The goal of this paper is to identify the inundation or water area in CCTV images. To achieve this goal, the edge and contour of the object must be determined first using image segmentation, which is an effective way to find the edges of the objects in the image. Image segmentation divides the image into several areas based on grayscale, color, texture, and shape. Features assigned to the same area are similar, while there are significant differences between different areas. This is the basis for image analysis, feature extraction, and object detection. Several image segmentation approaches have been studied (e.g., region-based segmentation and clustering segmentation) [26]. Region-based segmentation divides the image into two regions, the target and the background, by a single threshold; different threshold calculation methods yield different results. Clustering segmentation groups pixels by their features, so that the image is segmented into several clusters, each containing pixels with similar features. A global threshold can effectively segment targets and backgrounds with different grayscales. However, when the grayscale difference in the image is not obvious, a local or adaptive threshold method should be used.

To be able to understand how the computer interprets images to detect an object edge and find which image segmentation has the best performance to detect the water area, we use three different image-segmentation methods, which are k-means clustering segmentation, Otsu region-based segmentation, and Bayesian threshold segmentation.

2.2.1. K-Means Segmentation

Each pixel in a color image is a point in three-dimensional space. K-means segmentation treats the pixels of the image as data points, groups them into a specified number of clusters, and replaces each pixel with its corresponding cluster center to reconstruct the image. K-means clustering minimizes the sum of the squared errors between the data in each cluster and the center of that cluster [38]. The purpose is to find similar clusters in the data so that members of the same subset have similar attributes. Assume there is a set of n-dimensional data:

$$X = \{\, x_i \mid x_i \in \mathbb{R}^{n},\ i = 1, 2, \ldots, N \,\}$$

where $X$ is the set of $N$ data points to be clustered, $n$ is the number of dimensions of the data points $x_i$, and $K$ is the number of clusters formed from the data points. The clusters are defined by minimizing the sum of squared Euclidean distances between the cluster centers and the pixel points $x_i$:

$$\underset{c}{\arg\min} \sum_{k=1}^{K} \sum_{x_i \in C_k} \left\| x_i - c_k \right\|^{2}$$

where $c_k$ is the center of the $k$-th cluster $C_k$, and $\arg\min$ denotes the value of the variable $c$ at which the sum reaches its minimum.

Image segmentation based on k-means uses the pixels as data points, uses Equation (9) to assign them to clusters, and then replaces each pixel with its corresponding cluster center to reconstruct the image. Different clusters present different colors and other characteristics, while the pixel points in the same cluster have similar characteristics.
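The procedure can be sketched with a small, deterministic k-means on RGB pixels. This is an illustrative sketch only: the initial centers are passed in explicitly (real implementations usually choose them randomly), and the function names are hypothetical.

```python
def dist2(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def nearest(p, centers):
    """Index of the cluster center closest to point p."""
    return min(range(len(centers)), key=lambda j: dist2(p, centers[j]))


def kmeans(points, centers, max_iter=50):
    """Alternate assignment and center update until the centers stop moving."""
    centers = [tuple(c) for c in centers]
    for _ in range(max_iter):
        groups = [[] for _ in centers]
        for p in points:
            groups[nearest(p, centers)].append(p)
        updated = [
            tuple(sum(v) / len(g) for v in zip(*g)) if g else centers[j]
            for j, g in enumerate(groups)
        ]
        if updated == centers:
            break
        centers = updated
    labels = [nearest(p, centers) for p in points]
    return centers, labels


pixels = [(10, 10, 10), (12, 12, 12), (200, 200, 200), (202, 202, 202)]
centers, labels = kmeans(pixels, [(0, 0, 0), (255, 255, 255)])
segmented = [centers[k] for k in labels]   # each pixel replaced by its center
```

In this toy example the two dark pixels and the two bright pixels end up in separate clusters, and the reconstructed image contains only the two center colors.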

2.2.2. Otsu Segmentation

The most commonly used threshold segmentation algorithm is the maximum between-class variance method (Otsu), which selects the threshold by maximizing the variance between classes. Based on the grayscale characteristics of the image, Otsu assumes that the image is composed of two parts: the foreground and the background. The between-class variance of the foreground and background is calculated for the segmentation result under each candidate threshold, and the threshold with the largest variance is the Otsu threshold [39]. The larger the between-class variance between the background and foreground, the better the two parts are distinguished. The between-class variance is:

$$\sigma_B^{2} = \omega_0\, \omega_1 \left(\mu_0 - \mu_1\right)^{2}$$

where $\omega_0$ and $\omega_1$ are the proportions of background pixels and foreground pixels in the image, respectively, and $\mu_0$ and $\mu_1$ are the average grayscale values of the background and foreground.
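The exhaustive search over thresholds can be sketched as follows, assuming the common form of the between-class variance, w0 * w1 * (mu0 - mu1)^2, with pixels at or below the threshold treated as background. The function name and the flat list of integer gray values are assumptions for illustration.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level that maximizes the between-class variance."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    count0, sum0 = 0, 0                  # running totals for the background
    for t in range(levels):
        count0 += hist[t]
        sum0 += t * hist[t]
        count1 = total - count0
        if count0 == 0 or count1 == 0:
            continue                     # one class is empty, skip
        mu0 = sum0 / count0
        mu1 = (sum_all - sum0) / count1
        var_between = (count0 / total) * (count1 / total) * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


gray = [10] * 50 + [200] * 50            # two well-separated gray levels
t = otsu_threshold(gray)
mask = [1 if p > t else 0 for p in gray]  # 1 = foreground, 0 = background
```

For this bimodal example every threshold between the two gray levels gives the same variance, and the search returns the first of them.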

2.2.3. Bayesian Segmentation

Similar to Otsu segmentation, Bayesian segmentation divides the image into a foreground and a background. The Bayesian theorem is used to calculate the posterior probability with the smallest Bayesian risk, referred to as Bayesian shrink, which is defined as the probability distribution of the expected values. Image segmentation is treated as a conditional decision problem in which the decisions are usually based on probability [40, 41]. If

,

is selected; if

, then

be chosen where

is a probability,

and

are decisions, and

is the independently distributed Gaussian variables. For an image

, the segmentation by using Bayesian theorem can be presented as:

where

is the Bayesian threshold of image and satisfies the following formula:

Assume that

is the probability density function with the expected Bayesian threshold values of image

, which is defined as the probability distribution based on Equations (11) to (13):

For the image is divided into a background part

and target part

by a threshold, which their probability is

and

, separately. The posterior probability can be presented by the Bayesian theorem:

The threshold with minimum Bayesian risk has the maximum expectation of posterior probability represented by Equation (15), and can be written as:

With the threshold given by Equation (16), which has the maximum expectation under the Bayesian theorem, the segmentation error is minimized, which means that the distortion of the image after segmentation is minor.

2.3. Data Collection and Assessment Approach

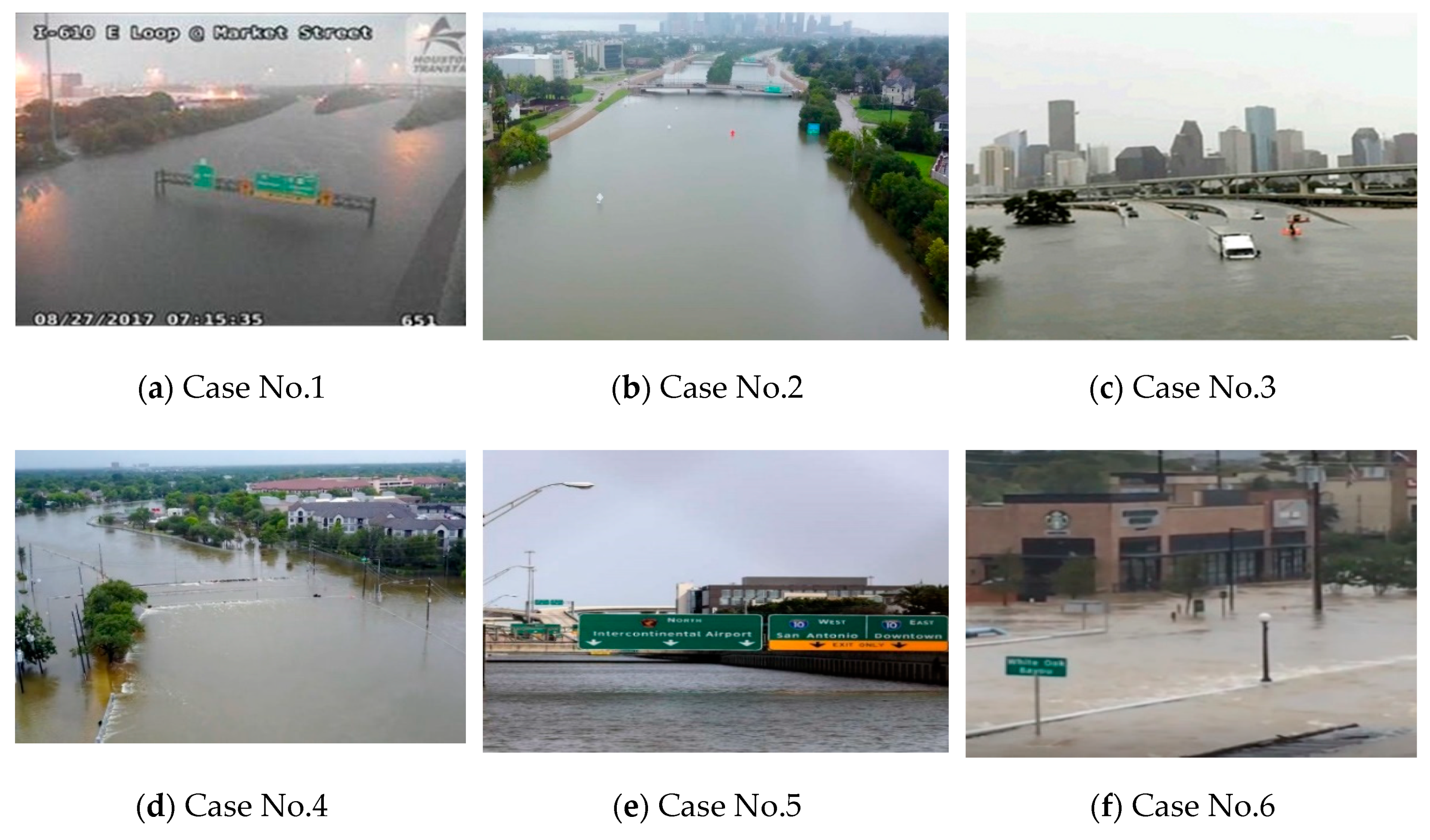

2.3.1. Data Collection

In this study, 14 CCTV images were tested to determine which de-noising method is best for CCTV images. The images were collected from public government transportation information websites such as TranSTAR or downloaded from public social media. Six of the CCTV images are shown in Figure 5.

2.3.2. Mean Squared Error (MSE) and Peak Signal-to-Noise Ratio (PSNR)

The quality and information of an image after compression or reconstruction usually differ from those of the original image. Image de-noising is also a process of compression and reconstruction, which can eliminate most image noise while maintaining image information. However, the performance of de-noising is difficult to judge by the human eye. The quality of de-noising filtering is therefore assessed by the mean squared error (MSE) and the peak signal-to-noise ratio (PSNR). In mathematical statistics, MSE is the expected value of the squared difference between the estimated values and the true value, and it measures the degree of change of the data. The smaller the MSE, the better the accuracy of the experimental data. PSNR quantifies the impact of image processing and is commonly used to assess image compression and reconstruction after image de-noising. The higher the PSNR, the better the de-noising effect, and the more original image information is retained.

$$MSE = \frac{1}{mn} \sum_{i=1}^{m} \sum_{j=1}^{n} \left[ I(i, j) - K(i, j) \right]^{2}$$

$$PSNR = 10 \log_{10}\left( \frac{MAX^{2}}{MSE} \right)$$

where $m \times n$ is the resolution of the image, $I$ is the image after de-noising, $K$ is the noisy image, and $MAX$ is the maximum of the resolution (i.e., for an 8-bit image, $MAX = 2^{8} = 256$). According to the PSNR definition, the better the de-noising method performs, the higher the PSNR, since $MAX$ is a fixed value and $MSE$ is the error between the de-noised image and the noisy image. In theory, an ideal de-noising method removes only the image noise and retains the details of the image, so the reconstructed image after de-noising should be consistent with the original image apart from the removed noise.
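Both criteria can be computed with a few lines of Python. The sketch assumes the usual definitions, the mean of squared pixel differences for MSE and 10 log10(peak^2 / MSE) for PSNR, on two equally sized grayscale images stored as lists of rows. The `peak` parameter defaults to 255, the largest 8-bit gray value and a common convention; the value 256 stated in the text can be passed instead.

```python
import math


def mse(image_a, image_b):
    """Mean squared error between two images of the same size."""
    total, count = 0.0, 0
    for row_a, row_b in zip(image_a, image_b):
        for a, b in zip(row_a, row_b):
            total += (a - b) ** 2
            count += 1
    return total / count


def psnr(image_a, image_b, peak=255):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = mse(image_a, image_b)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / error)


denoised = [[0, 0], [0, 0]]
noisy = [[16, 16], [16, 16]]
error = mse(denoised, noisy)              # 16^2 = 256
score = psnr(denoised, noisy, peak=256)   # 10 * log10(256^2 / 256)
```

Since the peak value is fixed, the PSNR depends only on the MSE: halving the MSE raises the PSNR by about 3 dB.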

4. Conclusions

In this study, we compared image-processing methods, namely de-noising methods and image segmentation methods, for automatically detecting flooded areas in low-resolution images. The inundation detection results indicate that a sequence of methods is necessary to achieve detection. According to this research, the most effective de-noising method for a CCTV image is the Bayes shrink adaptive wavelet threshold. With Bayes shrink and segmentation as a pre-processing procedure, future classification and object detection in CCTV images are expected to be more successful. The key findings are summarized below.

First, among the recently used de-noising methods that were compared, Bayes shrink with adaptive wavelet coefficients shows the best de-noising performance for CCTV images, as indicated by the minimum MSE and maximum PSNR. With the Bayes shrink approach, the PSNR of the CCTV images mostly exceeds 85 dB, which means that at least 85% of the image details are retained after de-noising.

Second, among the image-segmentation techniques, Bayesian segmentation performs best at finding the inundation edge, which is the most important input for the subsequent object detection. Bayesian segmentation identifies the inundation edges correctly in a grayscale image.

Last, the edge obtained from Bayesian segmentation enabled us to calculate the inundated area and thus achieve object detection. We note the importance of the region of interest (ROI), which restricts the analyzed part of the CCTV image to exclude the sky, whose features are similar to those of the inundated area. In this study, the inundation in CCTV images was identified accurately, which is important for follow-up work such as water-level detection using image coordinates.

The image processing presented in this paper estimates the inundation from images to assess flooding risks in the vicinity of local flooding locations. Such information will help traffic engineers take preventive or proactive actions to improve driver safety and to protect and preserve the transportation infrastructure.

For further research, the image-processing concept presented in this paper, which defines the edge of the inundation area, can be extended to calculate the depth of water from the coordinate relationship between the image and the real world. In the future, it may be possible to monitor the inundation status and calculate the water level in real time with a traffic-monitoring camera. This research demonstrates an additional economical option for detecting flooding conditions, such as the location and water level of the inundation area, and for providing people with more and faster information.