1. Introduction

Particulate matter with a diameter smaller than 2.5 μm (PM2.5) is an air pollutant so fine that it is invisible to the naked eye yet can enter the human body through inhalation. These particles can travel deep into the lungs, enter the bloodstream, and cause long-term effects on the cardiovascular and pulmonary systems [1]. According to the World Health Organization (WHO), PM2.5 is a major cause of premature mortality worldwide and is associated with chronic diseases and lung cancer [2]. In some regions, PM2.5 concentrations may rise to levels that pose serious risks to public health [3]. Sources of PM2.5 include both natural phenomena, such as wildfires and desert dust, and anthropogenic activities [4], and pollution episodes intensify under stable atmospheric conditions, such as weak winds, shallow boundary layers, and temperature inversions that trap pollutants near the surface [5]. In rapidly urbanizing areas, combustion processes and atmospheric chemical reactions lead to pollutant accumulation and direct health effects [6].

This problem is particularly pronounced in northern Thailand, where complex mountainous terrain surrounds many urban areas located in basins and valleys [7]. Under stable atmospheric conditions, pollutants can become trapped, especially during the dry season, when haze episodes recur every year [8]. A 2024 study revealed that several provinces experienced more than 50 consecutive days of PM2.5 levels exceeding the national ambient air quality standard of 37.5 µg/m³, with peak hourly concentrations surpassing 200 µg/m³, a level considered extremely hazardous to health [9]. In addition to its respiratory and cardiovascular effects, PM2.5 exposure has been associated with ocular health issues, such as conjunctival redness, tearing, and dryness, with some symptoms showing up to a twelve-fold increase in risk compared with clear-air days [10]. In northern Thailand, biomass burning is dominated by forest fires, which occur intensively during the dry season [11]. This emission source has been identified as a major contributor to transboundary air pollution, underscoring the strategic importance of accurate forecasting for air quality management and environmental policymaking [12].

Climate change is projected to significantly affect air quality-related meteorological conditions in Upper Northern Thailand, particularly during the haze season. Increases in maximum temperature may enhance pollutant emissions, whereas higher minimum temperatures, together with reductions in surface wind speed and planetary boundary layer height, are expected to weaken vertical dispersion and ventilation, creating favorable conditions for PM2.5 accumulation [13]. Meteorological variables, including wind speed, rainfall, humidity, and temperature inversions, have been shown to significantly influence the spatial accumulation of PM2.5 in the basin topography of Chiang Mai, particularly during dry-season stagnation events [14]. Physics-based models, such as WRF-Chem, enable high-resolution simulations of atmospheric chemical processes, which are critical for understanding the formation and transport of air pollutants [15]. However, they are constrained by uncertainties in emission inventories, high computational demands, and grid-related errors in complex terrain [16]. Satellite data extend spatial coverage but suffer from cloud contamination, vertical uncertainty, and nonlinear relationships with ground-level PM2.5 [17]. Ground-based observations are highly accurate but spatially uneven, being concentrated in urban centers and failing to represent rural or forested emission regions [18]. Taken together, these strengths and limitations highlight the need for multi-source data integration to support robust and spatially consistent PM2.5 predictions.

Consequently, data fusion and integration have gained increasing attention. For instance, Joharestani et al. employed machine learning (ML) models, such as random forest (RF), XGBoost, and deep learning, to integrate satellite-derived data, meteorological variables, and ground-based observations in Tehran [19]. Their models achieved an R² of up to 0.81 and reduced the RMSE to 9.93 µg/m³, demonstrating the potential of multi-source integration to substantially enhance PM2.5 prediction accuracy [19]. Similarly, Feng et al. (2022) combined satellite reflectance with meteorological variables in hybrid models (RF, LightGBM), achieving an R² of 0.91 and reducing the RMSE to 11.6 µg/m³ compared with using aerosol optical depth (AOD) alone, further highlighting the benefits of combining multi-source data with advanced ML techniques [17]. In Thailand, particularly in the northern region, where complex terrain and multiple emission sources prevail, research of this nature remains limited, representing a critical gap that this study seeks to address.

At the same time, several studies have highlighted the limitations of physics-based models in complex regions. Lyu et al. (2017) reported that although the Community Multiscale Air Quality (CMAQ) model driven by WRF captured overall PM2.5 patterns, it still exhibited substantial biases in many areas, with accuracy depending on grid resolution and emission inventory completeness [20]. Similarly, Dou et al. (2021) found that WRF-CMAQ failed to fully capture high-concentration pollution episodes, particularly in heavily polluted industrial areas such as the North China Plain [21]. These findings emphasize the need for complementary approaches, such as ML, which can learn complex cross-source relationships, and bias correction techniques, which adjust model outputs toward the observed values. For example, Singh et al. demonstrated that deep learning-based bias correction reduced the RMSE by 25–41% and raised the Index of Agreement (IOA) above 0.70 compared with the original CMAQ outputs [22]. Recent work in Chiang Mai further showed that statistical models, such as the Generalized Additive Model (GAM), incorporating environmental and temporal predictors (temperature, humidity, month, hour), significantly improved agreement with ground-based observations (R² = 0.92, RMSE = 5.08 µg/m³). Comparable performance was observed for Linear Regression (LR) (R² = 0.90, RMSE = 5.49 µg/m³) and for RF when using only environmental variables (R² = 0.89, RMSE = 5.74 µg/m³), while bias correction with GAM reduced the mean absolute percentage error (MAPE) to 17% [23].

The suitability of ML algorithms largely depends on the data structure and spatiotemporal complexity. Tree-based models, such as RF and XGBoost, are well-suited to tabular data with nonlinear characteristics and provide interpretability through variable importance rankings; however, they cannot directly capture temporal sequences [19,24]. In contrast, deep learning models, such as Convolutional Neural Networks (CNNs), excel at extracting spatial features from satellite imagery, whereas Convolutional LSTM (ConvLSTM) combines the strengths of CNNs and long short-term memory (LSTM) networks to jointly capture spatial and temporal dependencies. These models are particularly effective for fine-scale daily PM2.5 forecasting, as shown by Zhang et al. (2020) and Sohrabi and Maleki (2025), who successfully combined satellite imagery and ground-based measurements to achieve accurate spatial predictions [25,26]. Simpler models, such as the Multilayer Perceptron (MLP) and LSTM, can handle temporal sequences but lack explicit spatial feature extraction, which limits their performance in complex spatiotemporal contexts. Mitreska et al. (2023) demonstrated that ConvLSTM significantly outperformed CNN-LSTM [27], while Shi et al. (2024) reported that the performance of LSTM in PM2.5 prediction decreases in regions with high spatial variability [28]. This reflects the difficulty such models have in capturing complex spatial contexts and suggests that hybrid models capable of learning multidimensional patterns may offer greater potential for both short- and long-term PM2.5 prediction.

In this context, the present study had two primary objectives: (1) to analyze and identify suitable input variables for PM2.5 prediction by integrating WRF-Chem outputs, satellite data, and ground-based measurements; and (2) to evaluate the predictive performance of four ML algorithms—two tree-based models (RF and XGBoost) and two deep learning models (CNN and ConvLSTM)—for forecasting PM2.5 concentrations during the haze and biomass burning seasons in Chiang Mai, Thailand. The study period covered the haze season of 2024 (approximately January–May). The dataset includes ground-based PM2.5 measurements, WRF-Chem PM2.5 and meteorological variables (e.g., temperature, humidity, wind, and precipitation), geographical variables (DEM, NDVI, and LULC), and fire-related variables (hotspots and burn scars). Importantly, PM2.5 outputs from WRF-Chem were bias-corrected using ground-based observations before the ML model training. The model performance was assessed through cross-validation using different statistical indicators.

2. Data and Methods

2.1. Study Area

The study area (Figure 1) was Chiang Mai Province and its surrounding regions in northern Thailand, corresponding to Domain 3 of the WRF-Chem model. The domain is geographically located between approximately 18°30′–19°30′ N and 98°30′–99°30′ E, covering Chiang Mai as the central focus and extending into parts of the adjacent provinces, including Lamphun, Lampang, Mae Hong Son, and Chiang Rai. The terrain of this area is diverse, characterized primarily by a central plain encircled by high mountains, resulting in a basin topography. These geographical features promote the accumulation of air pollutants, thereby facilitating the persistence of PM2.5, particularly during the winter and dry seasons.

2.2. Time Period

The study period was from January to May 2024, which corresponds to the haze and biomass-burning seasons in northern Thailand. This period is characterized by the highest annual accumulation of PM2.5 and represents particularly challenging conditions for air quality forecasting. The selection of this timeframe allowed for the evaluation of the performance of the models under highly variable conditions with diverse emission sources.

2.3. Data Preprocessing and Integration

This study employed multi-source datasets to enhance the spatial and temporal coverage of the PM2.5 prediction. The datasets consisted of outputs from the WRF-Chem model, satellite-derived products, and ground-based observations of air quality. Data preprocessing is a crucial step in ensuring the consistency, reliability, and suitability of datasets for machine learning (ML) model development. The details of the datasets are summarized in Table 1, and the main procedures are described as follows.

2.3.1. WRF-Chem Model-Based Data

The Weather Research and Forecasting model coupled with Chemistry (WRF-Chem) has been widely applied in atmospheric chemistry and air quality studies [29]. In this study, it served as the primary source of information on pollutant dispersion and the associated meteorological conditions. The WRF-Chem simulations used the 0.1° × 0.1° EDGAR-HTAP anthropogenic emission inventory [30], while the 3BEM model [31] was applied to generate the biomass-burning emission dataset; both served as primary emission inputs. Both inventories provide consistent global coverage with detailed sectoral classifications and were processed to match the model’s spatial and temporal resolution prior to integration. The WRF-Chem variables employed in this study are listed in Table 1 and include PM2.5 concentrations simulated by WRF-Chem (WRF-PM2.5), near-surface temperature at 2 m above ground (T2), relative humidity at 2 m (RH2), wind speed at 10 m (WS10), wind direction at 10 m (WD10), precipitation (Precip), planetary boundary layer height (PBLH), and venting index (Venting). These variables were simulated on a 99 × 99 grid with a spatial resolution of 1 km and an hourly temporal resolution, covering the period from January to May 2024.
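The venting index is not defined in the text. A common definition, assumed here, is the product of the boundary layer height and a transport wind speed (units of m²/s). The sketch below uses the 10 m wind speed as the transport wind, which may differ from the authors’ WRF-Chem post-processing; the function name is hypothetical.

```python
import numpy as np

def venting_index(pblh_m, ws_ms):
    """Venting (ventilation) index in m^2/s, assumed here to be PBLH x wind speed.

    pblh_m : planetary boundary layer height (m), any array shape
    ws_ms  : wind speed (m/s), same shape as pblh_m
    Assumption: the 10 m wind (WS10) stands in for the transport wind; some
    studies instead use the mean wind within the boundary layer.
    """
    pblh = np.asarray(pblh_m, dtype=float)
    ws = np.asarray(ws_ms, dtype=float)
    if pblh.shape != ws.shape:
        raise ValueError("PBLH and wind-speed grids must have the same shape")
    return pblh * ws

# Illustrative values: a shallow night-time layer vs. a deep afternoon layer
vi = venting_index([150.0, 1800.0], [1.0, 3.0])  # -> 150 and 5400 m^2/s
```

Low values flag hours when pollutants are poorly ventilated, which is why the index is a natural companion to PBLH and WS10 in basin terrain.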

The WRF-Chem outputs were further downscaled to a spatial resolution of 1 km to better represent local heterogeneity and meet the requirements of high-resolution PM2.5 forecasting. Although this downscaling may introduce additional uncertainties and slightly reduce the statistical accuracy of the raw simulations, it provides enhanced spatial detail and ensures consistency when integrating meteorological data. In complex basin terrain, a 1 km grid helps resolve slope and valley winds and cold-air pooling that trap pollutants overnight [32,33], and it reduces the spatial averaging that can smear localized hotspots on coarser grids [34]. The 1 km grid therefore serves as a common geo-temporal backbone for pixel-level fusion of the station, WRF-Chem, satellite fire, and geospatial layers used in this study [35].

2.3.2. Satellite-Based Data

Satellite-derived datasets were integrated to supplement information on topography, vegetation, land use, and biomass burning. Two categories of satellite-based data were used: (1) geographical data and (2) fire-related variables, each contributing to the spatiotemporal representation of PM2.5 dynamics in the study area.

Geographical Data

Digital Elevation Model (DEM): Obtained from NASA’s Shuttle Radar Topography Mission (SRTM) at a resolution of 30 arc-seconds (~1 km). DEM data provide elevation information that is critical for assessing pollutant accumulation in complex terrain [36].

Normalized Difference Vegetation Index (NDVI): Derived from MODIS MOD13Q1, representing vegetation greenness in the study area. The NDVI facilitates the assessment of agricultural and forest changes that influence PM2.5 distribution [37].

Land Use/Land Cover (LULC): Extracted from MODIS MCD12Q1, providing land classification categories such as forests, croplands, and urban areas. These data enhance the interpretation of emission sources across different land use types [38].

Fire Variables Data

Burned Area: Derived from the MODIS MCD64A1 Burned Area Product, with a 1 km spatial resolution and monthly frequency, indicating areas affected by open burning and forest fires during January–May 2024 [39].

Hotspot Density: Obtained from the VIIRS FIRMS product based on the Suomi NPP and NOAA-20 VIIRS sensors. With a spatial resolution of 375 m and daily temporal coverage, this dataset represents fire hotspots per pixel and is crucial for capturing biomass burning activities in regions without monitoring stations [40].
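The text does not state how individual VIIRS detections were converted into a per-pixel density. One simple approach, shown here as an assumption, is to count the detections falling in each cell of a regular latitude/longitude grid with `np.histogram2d`; the grid edges and coordinates below are hypothetical, and a projected WRF-Chem grid would require transforming the coordinates first.

```python
import numpy as np

def hotspot_counts(lat, lon, lat_edges, lon_edges):
    """Count fire detections per grid cell.

    lat, lon  : 1-D arrays of detection coordinates (degrees)
    lat_edges : increasing latitude cell boundaries (n_rows + 1 values)
    lon_edges : increasing longitude cell boundaries (n_cols + 1 values)
    Detections outside the edges are ignored.
    """
    counts, _, _ = np.histogram2d(lat, lon, bins=[lat_edges, lon_edges])
    return counts.astype(int)

# Hypothetical 3 x 3 grid of 0.01-degree cells (roughly 1 km) and four detections
lat_edges = np.linspace(18.70, 18.73, 4)
lon_edges = np.linspace(98.90, 98.93, 4)
det_lat = np.array([18.705, 18.706, 18.725, 18.800])  # the last point lies outside the grid
det_lon = np.array([98.905, 98.907, 98.915, 98.905])
grid = hotspot_counts(det_lat, det_lon, lat_edges, lon_edges)
```

Daily grids produced this way could then be replicated across hours in the same manner as the other daily covariates.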

2.3.3. Ground-Based Observation Data

Ground-based air quality monitoring stations provided highly accurate point-based PM2.5 observations, which were essential for both the bias correction of the WRF-Chem outputs and the validation of the ML models. In this study, hourly PM2.5 concentrations from 54 stations within WRF-Chem Domain 3 were used: four stations operated by the Pollution Control Department of Thailand (PCD) [41] and 50 additional stations from the DustBoy Open API, provided by the Climate Change Data Center at Chiang Mai University (CCDC-CMU) [42]. A comparison between the PCD reference monitors and the DustBoy sensors showed strong agreement, with correlation coefficients (r) of 0.89–0.94 and coefficients of determination (R²) of 80.24–87.41%, supporting the reliability of the DustBoy measurements [43].

2.3.4. Construction of Spatial and Temporal Encoding Variables

In the data preprocessing stage, spatial and temporal encoding variables were generated to enable the ML models to capture spatiotemporal patterns more effectively. The constructed variables included hour, dayofyear, hour_sin, hour_cos, dayofyear_sin, dayofyear_cos, lat_sin, lat_cos, and lon_sin. These variables enhanced the model’s ability to recognize diurnal and seasonal cycles and the spatial distribution of PM2.5 in the environment.

These variables were generated to encode cyclical temporal patterns and spatial coordinates in a form suitable for both tree-based and neural network (NN) algorithms: sine/cosine pairs place the end of a cycle next to its start (e.g., hour 23 next to hour 0) and are bounded in [−1, 1], which also benefits scale-sensitive NN models. All derived spatial and temporal features were included in each variable group to enhance the representation of cyclic temporal dynamics and spatial distributions in the ML input space. The details of these variables are summarized in Table 2.
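A minimal pandas sketch of the encodings listed above follows. It assumes a 24 h period for hour, a 365.25-day period for day of year, and a radian mapping of latitude and longitude; the column names follow this section, but the exact formulas used by the authors are not given in the text.

```python
import numpy as np
import pandas as pd

def add_cyclic_features(df, time_col="time", lat_col="lat", lon_col="lon"):
    """Add hour/day-of-year integers plus sine/cosine encodings (periods are assumptions)."""
    out = df.copy()
    t = pd.to_datetime(out[time_col])
    out["hour"] = t.dt.hour
    out["dayofyear"] = t.dt.dayofyear
    # Diurnal cycle: hour 23 and hour 0 end up close together on the unit circle
    out["hour_sin"] = np.sin(2 * np.pi * out["hour"] / 24)
    out["hour_cos"] = np.cos(2 * np.pi * out["hour"] / 24)
    # Seasonal cycle
    out["dayofyear_sin"] = np.sin(2 * np.pi * out["dayofyear"] / 365.25)
    out["dayofyear_cos"] = np.cos(2 * np.pi * out["dayofyear"] / 365.25)
    # Spatial coordinates mapped through radians (assumed mapping)
    out["lat_sin"] = np.sin(np.radians(out[lat_col]))
    out["lat_cos"] = np.cos(np.radians(out[lat_col]))
    out["lon_sin"] = np.sin(np.radians(out[lon_col]))
    return out

demo = pd.DataFrame({
    "time": ["2024-01-01 00:00", "2024-01-01 23:00", "2024-01-01 12:00"],
    "lat": [18.79] * 3,
    "lon": [98.98] * 3,
})
enc = add_cyclic_features(demo)
```

Tree-based models gain the wrap-around adjacency, while NN models additionally benefit from the bounded range of the encoded values.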

2.3.5. Construction of Lag Variables

To account for the temporal accumulation and persistence of PM2.5 pollution, a set of nine lag variables was generated: PM2.5_lag1, PM2.5_lag2, PM2.5_lag3, PM2.5_lag6, PM2.5_lag12, PM2.5_lag18, PM2.5_lag24, PM2.5_lag48, and PM2.5_lag72. The selection of these lag intervals was motivated by the characteristics of PM2.5 formation and accumulation, which are influenced by biomass burning events and meteorological conditions in the hours preceding the event. Multiple lag periods allowed the models to capture short- and long-term carryover effects, particularly at high PM2.5 concentrations. Therefore, incorporating lagged predictors facilitated the learning of complex temporal dependencies and improved the model’s ability to forecast PM2.5 concentrations over an extended horizon.
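The lag construction can be sketched with pandas `groupby(...).shift(k)`, which offsets values within each location so that lags never leak from one pixel or station to another. The `pixel_id` column and the assumption of a gap-free hourly index are illustrative; with missing hours, `shift` would pair rows that are not exactly k hours apart.

```python
import pandas as pd

LAGS_H = [1, 2, 3, 6, 12, 18, 24, 48, 72]  # lag set listed in Section 2.3.5

def add_pm25_lags(df, group_col="pixel_id", time_col="time", value_col="PM2.5"):
    """Add PM2.5_lag{k} columns holding the value k hours earlier at the same location."""
    out = df.sort_values([group_col, time_col]).copy()
    grouped = out.groupby(group_col)[value_col]
    for k in LAGS_H:
        out[f"{value_col}_lag{k}"] = grouped.shift(k)  # NaN for the first k hours
    return out

# Two hypothetical pixels with 100 hourly values each
times = pd.date_range("2024-01-01", periods=100, freq="60min")
demo = pd.DataFrame({
    "pixel_id": [0] * 100 + [1] * 100,
    "time": list(times) * 2,
    "PM2.5": list(range(100)) + list(range(1000, 1100)),
})
lagged = add_pm25_lags(demo)
```

Rows whose longest lag is still undefined (the first 72 h of each series) would normally be dropped before training.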

After all preprocessing steps, datasets from the different sources, including WRF-Chem outputs, satellite products, and ground-based observations, were integrated using regridding and spatial interpolation techniques to harmonize their spatial resolutions and temporal frequencies. For station-based PM2.5 surfaces, we used ordinary kriging with a spherical variogram on an intermediate 1 km grid and then reprojected and bilinearly resampled the interpolated fields to the reference 1 km WRF-Chem grid. Hourly quality control required (1) station coverage spanning the reference domain and (2) at least three stations with non-constant values; variogram fit diagnostics were reviewed to ensure a plausible spatial structure. For variables available only at daily or monthly frequencies, we performed temporal downscaling by replicating each daily or monthly value across all hours within its period to construct an hourly time series. These step-wise constant series were used as slowly varying covariates rather than hourly targets. To limit artifacts from replication (e.g., step effects, aliasing, and phase lag), we applied monthly standardization, added day-of-year sine/cosine terms to encode smooth seasonality, relied on lagged PM2.5 and hourly meteorology to supply diurnal variability, and applied each product’s quality assurance and quality control masks while avoiding period-boundary edges. Regridding was applied to align the satellite and monitoring station data with the 1 km WRF-Chem grid, and spatial interpolation was employed to fill gaps in areas without monitoring stations. This integration ensured that all datasets were consistent and suitable for subsequent ML modeling.
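To make the interpolation step concrete, the following NumPy sketch solves the ordinary kriging system with a spherical variogram. The nugget, sill, and range are fixed, hypothetical values rather than fitted ones, the coordinates are assumed to be projected (km), and the guard mirrors only the "at least three non-constant stations" rule; the reprojection and bilinear resampling steps are not shown.

```python
import numpy as np

def spherical_variogram(h, nugget, sill, vrange):
    """nugget + (sill - nugget) * (1.5 r - 0.5 r^3) with r = min(h / range, 1)."""
    h = np.asarray(h, dtype=float)
    r = np.minimum(h / vrange, 1.0)
    gamma = nugget + (sill - nugget) * (1.5 * r - 0.5 * r**3)
    return np.where(h == 0.0, 0.0, gamma)  # semivariance is zero at zero lag

def ordinary_kriging(xy_obs, z_obs, xy_new, nugget=0.0, sill=100.0, vrange=20.0):
    """Predict at xy_new from station values z_obs at xy_obs (hypothetical parameters)."""
    xy_obs = np.asarray(xy_obs, dtype=float)
    z_obs = np.asarray(z_obs, dtype=float)
    xy_new = np.asarray(xy_new, dtype=float)
    n = len(z_obs)
    # Hourly QC rule from the text: at least three stations with non-constant values
    if n < 3 or np.ptp(z_obs) == 0.0:
        raise ValueError("need at least three stations with non-constant values")
    # Left-hand side [[Gamma, 1], [1^T, 0]]: the extra row forces weights to sum to 1
    d_obs = np.linalg.norm(xy_obs[:, None, :] - xy_obs[None, :, :], axis=-1)
    lhs = np.ones((n + 1, n + 1))
    lhs[:n, :n] = spherical_variogram(d_obs, nugget, sill, vrange)
    lhs[n, n] = 0.0
    # Right-hand side: station-to-target semivariances plus the constraint value 1
    d_new = np.linalg.norm(xy_obs[:, None, :] - xy_new[None, :, :], axis=-1)  # (n, m)
    rhs = np.ones((n + 1, len(xy_new)))
    rhs[:n, :] = spherical_variogram(d_new, nugget, sill, vrange)
    weights = np.linalg.solve(lhs, rhs)[:n, :]  # drop the Lagrange multiplier row
    return weights.T @ z_obs

# Four hypothetical stations on a 10 km square
stations = [(0, 0), (10, 0), (0, 10), (10, 10)]
pm25 = [30.0, 50.0, 40.0, 60.0]
pred = ordinary_kriging(stations, pm25, [(5, 5), (0, 0)])
```

With a zero nugget, kriging reproduces the observation exactly at a station location, and at the centre of the square the symmetric weights give the plain average.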

2.4. Data Preparation and Splitting

For machine learning model development, the datasets were prepared in two primary formats: tabular and sequence formats, depending on the type of model employed for the PM2.5 prediction. The data preparation process is described below.

Tabular Format: Applied to tree-based models such as RF and XGBoost. In this format, the data were structured in a tabular form, where each column represented an input variable. These variables were selected and grouped based on the results of the variable relationship analysis [44].

Sequence Format: Applied to sequence-based models such as Long Short-Term Memory (LSTM), CNN, and ConvLSTM. In this format, the data were organized into time-series sequences, which enabled the deep learning models to capture the temporal dependencies and dynamic variations in the data [45].

All datasets were divided into three subsets: training, validation, and test sets, which corresponded to model training, performance monitoring during training, and final evaluation, respectively. Data splitting was based on the Air Quality Index (AQI) categories defined by the PCD, which classifies air quality into five levels: very good, good, moderate, unhealthy for sensitive groups (high), and unhealthy (very high) (Table 3).

Test set: Selected from four periods, each consisting of six consecutive days, during which AQI values fell within the unhealthy and unhealthy for sensitive groups categories.

Validation set: Selected from periods with PM2.5 concentrations comparable to those of the test set to ensure consistency in evaluation.

Training set: Composed of the remaining periods, covering all AQI categories to ensure the representation of diverse atmospheric conditions.

This strategy ensured that the training set encompassed the full range of AQI levels, whereas the validation and test sets specifically targeted high-pollution episodes. Such a design allowed the models to learn from diverse input conditions and be tested under the most challenging scenarios for PM2.5 forecasting. The details of the selected training, validation, and test datasets are listed in Table 4.

In addition, prediction experiments were designed to forecast PM2.5 concentrations 24 h ahead, with evaluations conducted across four AQI categories, as defined in the test set (Table 4). Each prediction used the preceding three days (72 h) of data as input to predict the following day (24 h). This setup reflects the practical requirements of short-term air quality prediction and enables the models to capture immediate atmospheric dynamics while being assessed under varying pollution levels. To ensure a like-for-like comparison across model families and to isolate algorithmic effects from window design, the same 72 h input → 24 h prediction window was applied to all models (RF, XGBoost, CNN3D, and ConvLSTM). The 72 h look-back is long enough to encompass both diurnal cycles and the multi-day accumulation and dispersion that characterize high-AQI episodes, yet compact enough to avoid excessive smoothing and training complexity. Independent evidence also indicates that incorporating multi-day context can sustain forecast skill at longer lead times in hourly PM2.5 forecasting [46]. By aligning the prediction windows with the high-pollution episodes identified in the test set, the evaluation framework established a rigorous basis for examining the robustness and responsiveness of the models to rapid changes in air quality.
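The 72 h → 24 h setup can be expressed as sliding-window slicing of the hourly grids. The sketch below assumes arrays ordered as (time, height, width, channels) and a configurable stride between samples, which the text does not specify; for the tabular RF and XGBoost inputs, the same windows would additionally need to be flattened into feature columns.

```python
import numpy as np

def make_windows(X, y, lookback=72, horizon=24, stride=1):
    """Slice hourly arrays into (lookback-hour input, next horizon-hour target) pairs.

    X : array (T, H, W, C) of hourly predictor grids
    y : array (T, H, W) of hourly target PM2.5 grids
    Sample s uses hours [s, s + lookback) as input and
    [s + lookback, s + lookback + horizon) as target, so inputs never overlap targets.
    """
    last_start = X.shape[0] - lookback - horizon
    if last_start < 0:
        raise ValueError("series shorter than lookback + horizon")
    starts = range(0, last_start + 1, stride)
    Xw = np.stack([X[s:s + lookback] for s in starts])                      # (N, 72, H, W, C)
    yw = np.stack([y[s + lookback:s + lookback + horizon] for s in starts])  # (N, 24, H, W)
    return Xw, yw

# Toy series: 100 hours on a 2 x 2 grid with 3 predictors
X = np.arange(100 * 2 * 2 * 3, dtype=float).reshape(100, 2, 2, 3)
y = np.arange(100 * 2 * 2, dtype=float).reshape(100, 2, 2)
Xw, yw = make_windows(X, y)
```

A stride of 24 would yield non-overlapping daily forecasts, whereas a stride of 1 maximizes the number of training samples at the cost of strongly correlated windows.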

2.5. Bias Correction of WRF-Chem PM2.5

After preparing all variables, the next step was to perform bias correction on the PM2.5 outputs from the WRF-Chem model to reduce discrepancies between the simulated and observed concentrations from the ground-based monitoring stations. Ground observations were used as a reference for adjusting the WRF-Chem PM2.5 values, thereby improving the accuracy and reliability of PM2.5 forecasting, particularly in areas with sparse or no monitoring data.

Following previous studies [22,23], this study evaluated multiple bias-correction approaches to assess their effectiveness in reducing model errors and improving predictive accuracy. Bias correction was fitted using the training and validation datasets only; the test set was withheld to avoid overfitting and information leakage. The corrected PM2.5 values were subsequently employed as target variables for ML model training and were validated using the independent test set. Five algorithms were compared: Linear Regression (LR), used to adjust linear discrepancies between WRF-Chem simulations and ground observations; Artificial Neural Network (ANN), designed to capture complex, nonlinear relationships; Random Forest (RF), which aggregates multiple decision trees to improve accuracy and reduce variance; Extreme Gradient Boosting (XGB), which employs an iterative boosting mechanism to refine predictions; and Convolutional Neural Network (CNN), applied for spatial bias correction by learning spatial patterns from WRF-Chem outputs to adjust PM2.5 concentrations at each pixel [23].
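As an illustration of the simplest of the five approaches, the sketch below fits a linear correction obs ≈ a + b × WRF on training/validation pairs and applies it to a full grid. The function names and the clipping of negative values at zero are assumptions, not details from the text; the ANN, RF, XGB, and CNN variants would replace the linear fit with a learned nonlinear mapping.

```python
import numpy as np

def fit_linear_bias_correction(wrf_pm25, obs_pm25):
    """Fit obs = a + b * wrf by ordinary least squares (training/validation pairs only)."""
    wrf = np.asarray(wrf_pm25, dtype=float)
    obs = np.asarray(obs_pm25, dtype=float)
    A = np.column_stack([np.ones_like(wrf), wrf])  # intercept column + WRF-Chem values
    (a, b), *_ = np.linalg.lstsq(A, obs, rcond=None)
    return float(a), float(b)

def apply_linear_bias_correction(wrf_pm25, a, b, floor=0.0):
    """Apply the correction to any WRF-Chem PM2.5 array; clip at `floor` (assumed 0)."""
    return np.maximum(a + b * np.asarray(wrf_pm25, dtype=float), floor)

# Toy pairs in which WRF-Chem underestimates the observations
wrf_train = np.array([10.0, 20.0, 40.0, 80.0])
obs_train = 5.0 + 1.5 * wrf_train
a, b = fit_linear_bias_correction(wrf_train, obs_train)
corrected_grid = apply_linear_bias_correction(np.full((99, 99), 30.0), a, b)  # 99 x 99 domain
```

Because the fit uses station pixels only, the same coefficients are applied to every grid cell, which is the main limitation that the spatial CNN variant aims to address.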

The performance of the bias correction models was assessed using statistical indicators, including the correlation coefficient (R), coefficient of determination (R²), mean absolute error (MAE), and root mean square error (RMSE), to identify the most suitable approach for improving the WRF-Chem PM2.5 estimates [22,23].

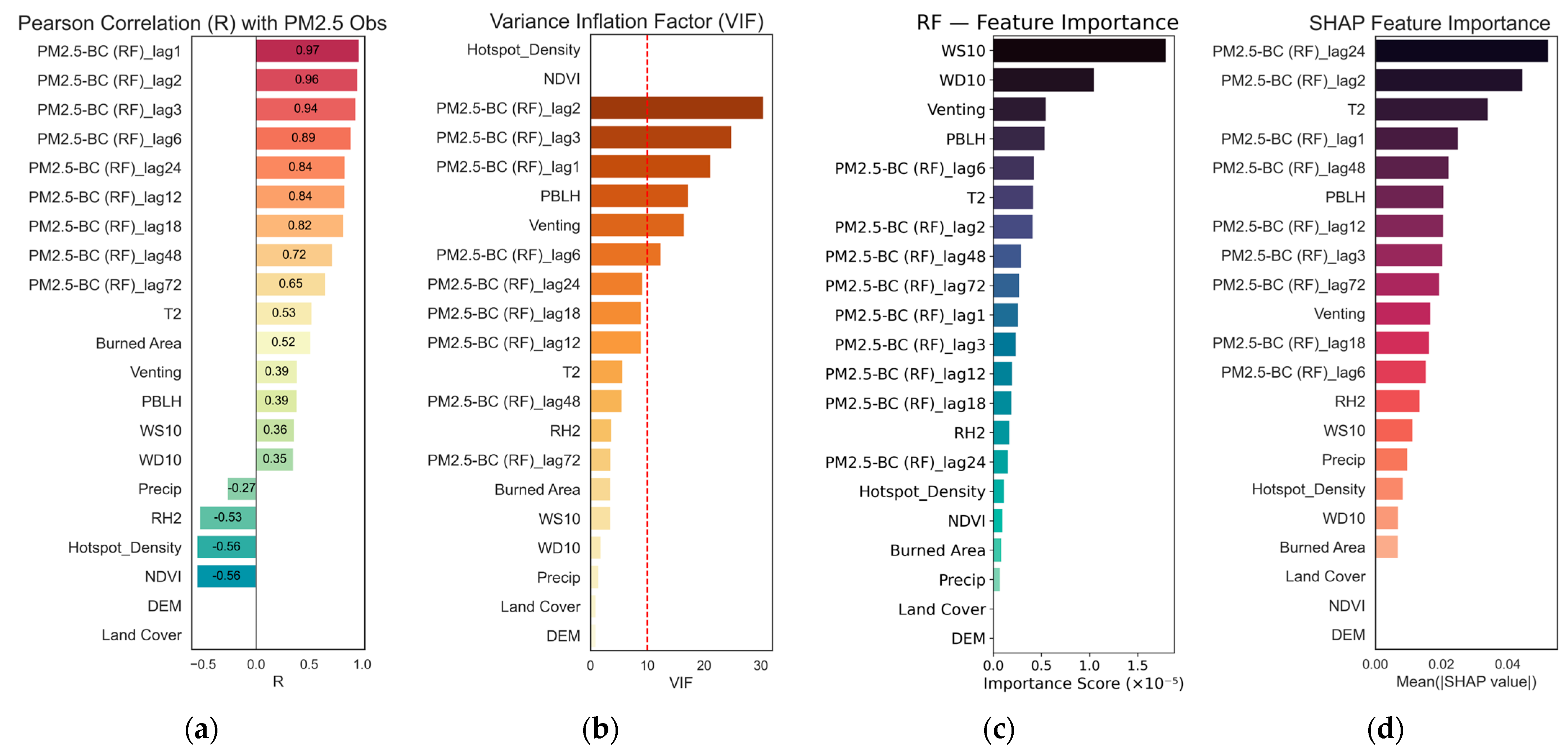

2.6. Variable Relationship Analysis

Variable Relationship Analysis is a critical step that directly influences the performance of PM2.5 forecasting models, with the objective of selecting and grouping input variables appropriately prior to their application in ML model development.

Pearson’s Correlation Coefficient was used to assess linear relationships between variables, with |r| > 0.5 and p < 0.05 serving as the threshold for selecting moderately to strongly correlated predictors [47].

The Variance Inflation Factor (VIF) was employed to detect multicollinearity, where variables with VIF < 5 were considered acceptable, values of 5–10 required caution, and those exceeding 10 were candidates for removal or feature consolidation [48].

Random Forest Feature Importance evaluates variable relevance by measuring impurity reduction across decision trees, with top-ranked or above-average features retained to preserve model performance [49]. In this study, impurity-based (split-based) feature importance was computed from the trained ensemble, averaged across trees, and normalized. Features were ranked and retained if their importance exceeded the median of their group.

SHapley Additive exPlanations (SHAP), a method based on cooperative game theory, was applied to interpret the model outputs. Features with higher mean absolute SHAP values were prioritized, whereas features with near-zero values were considered less relevant [50]. In this study, SHAP (game-theoretic attribution) was applied to the fitted tree ensemble, and importance was summarized by the mean absolute SHAP value over the evaluation split to obtain a stable rank order. A model-specific SHAP implementation for tree ensembles was used, and rankings were interpreted jointly with the correlation and VIF results to mitigate the effects of collinearity.
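The screening rules above can be sketched with SciPy, NumPy, and scikit-learn: a Pearson filter using the stated |r| > 0.5 and p < 0.05 thresholds, a VIF computed by regressing each predictor on the others, and the median rule applied to scikit-learn’s impurity-based `feature_importances_` (averaged over trees and normalized to sum to one). The function names and synthetic data are hypothetical, and the SHAP ranking is omitted because it requires the separate `shap` package.

```python
import numpy as np
import pandas as pd
from scipy import stats
from sklearn.ensemble import RandomForestRegressor

def correlation_screen(df, target, r_min=0.5, p_max=0.05):
    """Keep predictors with |Pearson r| > r_min and p < p_max against the target."""
    keep = []
    for col in df.columns.drop(target):
        r, p = stats.pearsonr(df[col], df[target])
        if abs(r) > r_min and p < p_max:
            keep.append(col)
    return keep

def vif(X):
    """VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing column j on the others."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    out = np.empty(k)
    for j in range(k):
        y = X[:, j]
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])  # intercept + others
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
        out[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return out

def rf_median_selection(X, y, n_estimators=100, random_state=0):
    """Rank by impurity-based RF importance; keep features above the group median."""
    rf = RandomForestRegressor(n_estimators=n_estimators, random_state=random_state).fit(X, y)
    imp = pd.Series(rf.feature_importances_, index=X.columns).sort_values(ascending=False)
    return imp, imp[imp > imp.median()].index.tolist()

# Synthetic data (hypothetical columns, not the study's variables)
rng = np.random.default_rng(0)
n = 500
a, b, d = rng.normal(size=n), rng.normal(size=n), rng.normal(size=n)
c = a + 0.05 * rng.normal(size=n)  # near-duplicate of a, so both get a high VIF
data = pd.DataFrame({"a": a, "b": b, "c": c, "d": d,
                     "y": 2 * a + 0.5 * rng.normal(size=n)})
selected = correlation_screen(data, "y")
vifs = vif(data[["a", "b", "c"]])
imp, keep = rf_median_selection(data[["a", "b", "c", "d"]], data["y"])
```

In this toy case both a and c pass the correlation and importance filters, and only the VIF step reveals that one of them is redundant, which is why the rankings are read jointly.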

2.7. Feature Grouping for Input Machine Learning Algorithms

Following the statistical and model-based assessments described in Section 2.6, all selected predictors were systematically organized into meaningful categories to form the input feature groups used in the ML models. The grouping aimed to ensure that variables with similar physical characteristics or functional roles were evaluated together, thereby facilitating model interpretability and performance comparison under different input scenarios. All sources were co-registered on a common 1 km hourly grid to enable pixel-level fusion and consistent group comparisons.

The classification of variables was guided by both their physical relevance to PM2.5 formation and their statistical independence, as derived from previous analyses. Accordingly, all predictors were divided into four categories.

Lag Variables, representing the temporal persistence and autocorrelation of PM2.5 concentrations.

Meteorological Variables, describing atmospheric and dispersion conditions.

Fire Variables, indicating emission intensity and biomass burning activity.

Geographical Variables, representing surface and land cover characteristics that influence pollutant distribution.

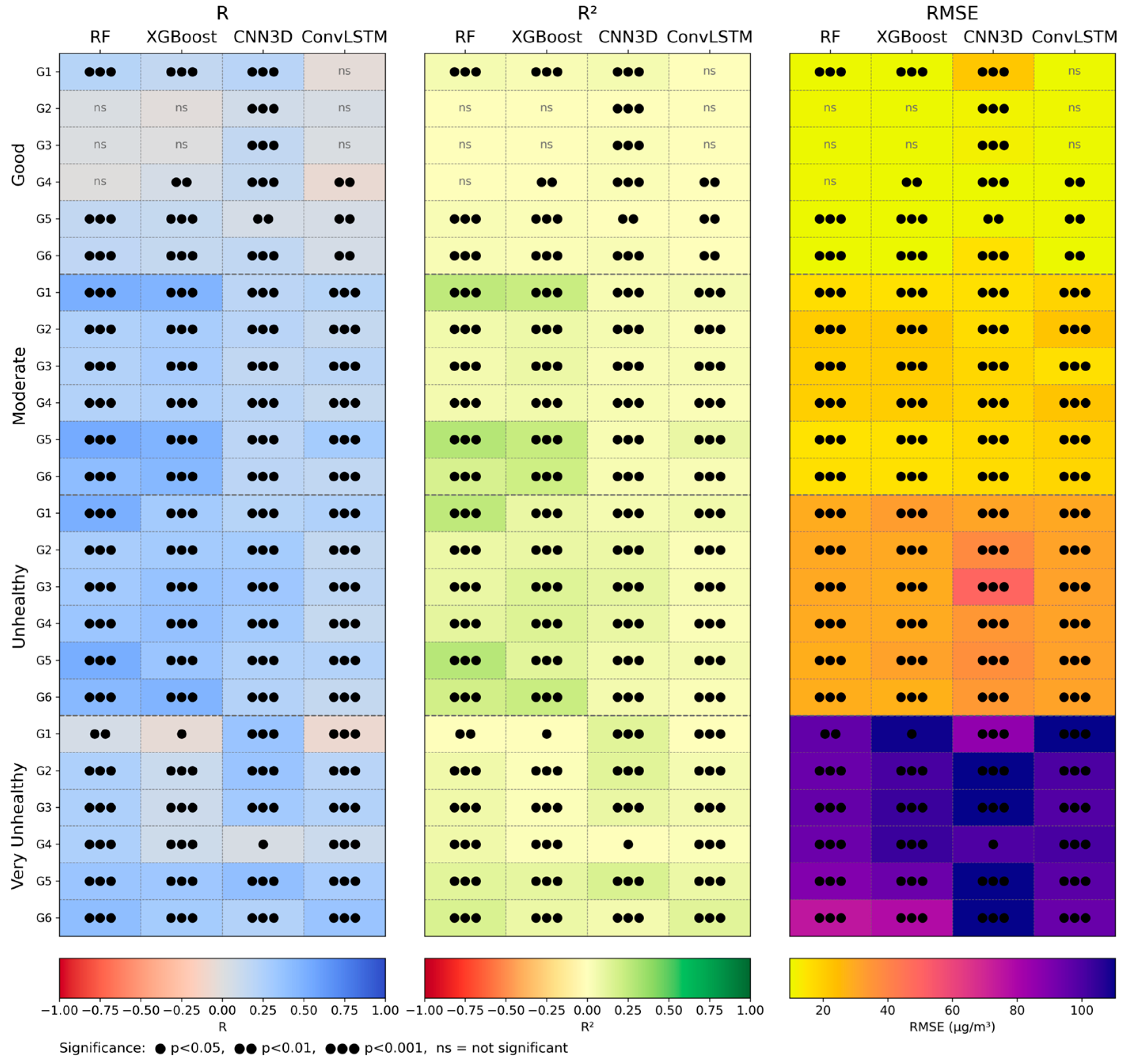

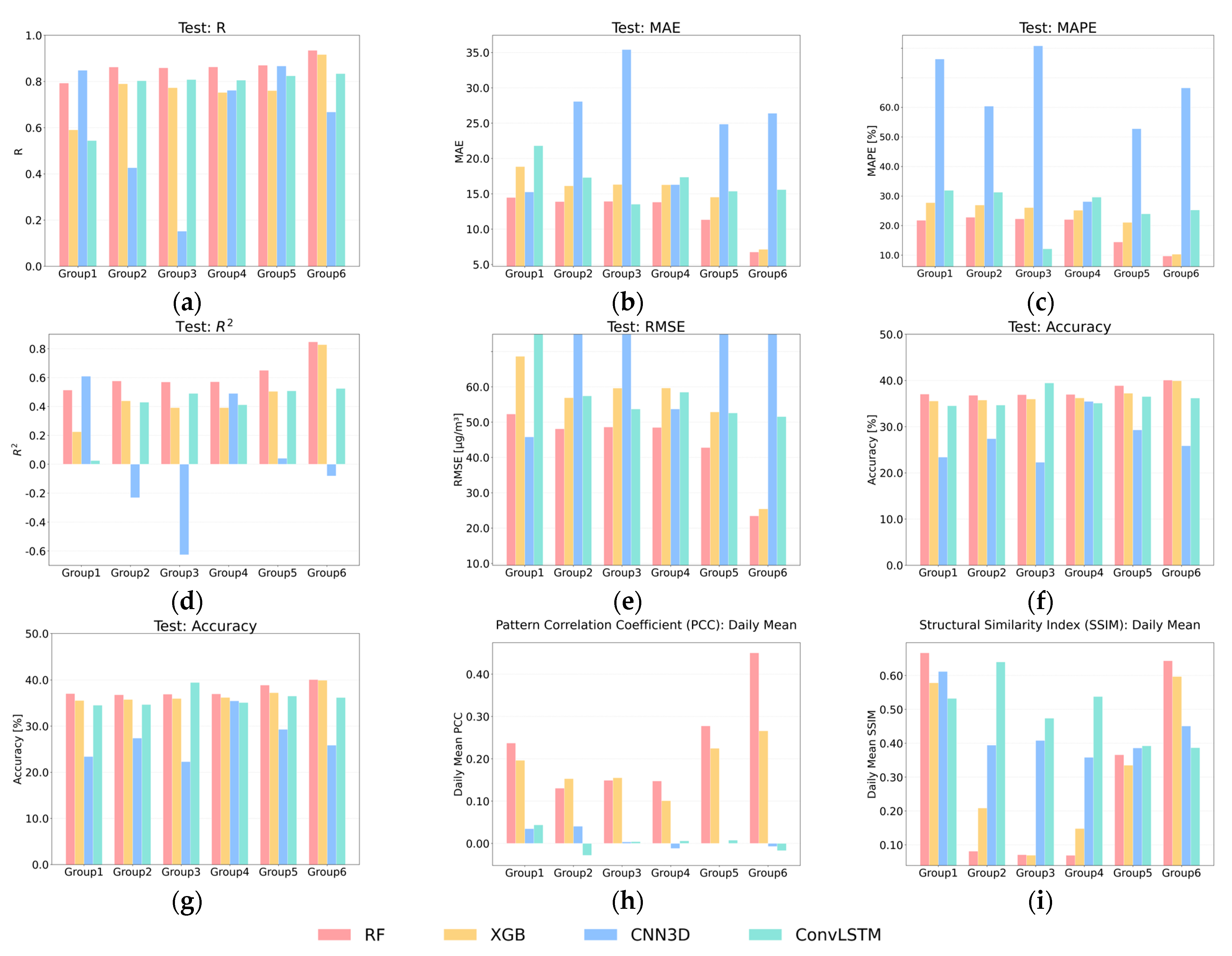

Based on these categories, multiple feature groups were constructed by combining different sets of variables to test the sensitivity and robustness of the ML models. Specifically, we predefined six feature-comparison groups for evaluation on the 1 km grid: (group 1) temporal-only, (group 2) meteorology-only, (group 3) meteorology + fire, (group 4) meteorology + geography, (group 5) all variables (full set), and (group 6) a compact set selected by SHAP and VIF; full variable listings appear in the Results section. This systematic grouping strategy provided a structured framework for examining the incremental contribution of each domain (temporal, meteorological, fire-related, and geographical) to the model’s predictive skill while maintaining parsimony and interpretability.

2.8. Machine Learning Algorithms

In this study, four ML algorithms suitable for PM2.5 forecasting were employed: Random Forest (RF), Extreme Gradient Boosting (XGBoost), Three-Dimensional Convolutional Neural Networks (CNN3D), and Convolutional Long Short-Term Memory (ConvLSTM). Each algorithm was tuned and trained using the pre-processed datasets described in the previous sections. The advantage of selecting these models lies in their ability to handle complex, high-dimensional data and learn effectively from spatiotemporal data.

2.8.1. Random Forest (RF)

Random Forest (RF) is a supervised ensemble learning technique that constructs multiple decision trees from bootstrap samples and aggregates their predictions to improve accuracy and reduce variance [51]. It is particularly suitable for tabular data without explicit temporal structure and provides transparent estimates of feature importance. Key structural hyperparameters, such as the number of trees (n_estimators), maximum depth (max_depth), splitting criterion, and minimum number of samples per leaf (min_samples_leaf), directly influence the model’s ability to capture complex patterns. In this study, grid search optimization was applied using the RMSE on the validation set as the primary evaluation metric. The structural hyperparameters are listed in Table 5.
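Because RF is tuned on a fixed validation split rather than by k-fold cross-validation, the search can be written as a plain loop over the grid instead of scikit-learn’s `GridSearchCV`, which cross-validates by default. The grid values and synthetic data below are hypothetical placeholders for those in Table 5.

```python
import itertools
import numpy as np
from sklearn.ensemble import RandomForestRegressor

def grid_search_validation(X_tr, y_tr, X_val, y_val, grid, random_state=0):
    """Exhaustive search over `grid`, scoring each fit by RMSE on a fixed validation set."""
    keys = sorted(grid)
    best_rmse, best_params, best_model = np.inf, None, None
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        model = RandomForestRegressor(random_state=random_state, **params).fit(X_tr, y_tr)
        rmse = float(np.sqrt(np.mean((model.predict(X_val) - y_val) ** 2)))
        if rmse < best_rmse:
            best_rmse, best_params, best_model = rmse, params, model
    return best_rmse, best_params, best_model

# Hypothetical grid and synthetic data
grid = {"n_estimators": [50, 100], "max_depth": [4, None], "min_samples_leaf": [1, 5]}
rng = np.random.default_rng(1)
X = rng.normal(size=(600, 3))
y = 3 * X[:, 0] + np.sin(X[:, 1]) + 0.1 * rng.normal(size=600)
best_rmse, best_params, best_model = grid_search_validation(X[:400], y[:400], X[400:], y[400:], grid)
```

Keeping the validation period separate from the test episodes means the selected hyperparameters never see the high-pollution test windows.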

2.8.2. Extreme Gradient Boosting (XGBoost)

XGBoost is a boosting algorithm that iteratively refines predictions by fitting each new tree to the residual errors of the preceding ensemble [52]. Its strengths lie in its computational efficiency and built-in regularization mechanisms, which reduce overfitting. Structural hyperparameters, such as the learning rate, number of trees, tree depth, subsampling ratio, and regularization parameters, determine the model’s ability to capture nonlinear relationships effectively. In this study, XGBoost was tuned using a grid search with the RMSE as the evaluation criterion. The full configuration is presented in Table 6.
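The residual-learning idea behind XGBoost can be illustrated with plain gradient boosting for squared error, in which each shallow tree is fitted to the current residuals and added with a shrinkage factor (the learning rate). This sketch deliberately omits XGBoost’s regularized objective, second-order updates, and subsampling, so it explains the mechanism rather than substituting for the library.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

def boost_fit(X, y, n_rounds=100, learning_rate=0.1, max_depth=3):
    """Plain gradient boosting with squared error: each tree fits the current residuals."""
    y = np.asarray(y, dtype=float)
    base = float(y.mean())
    pred = np.full(len(y), base)
    trees = []
    for _ in range(n_rounds):
        residual = y - pred  # negative gradient of 0.5 * (y - pred)^2
        tree = DecisionTreeRegressor(max_depth=max_depth).fit(X, residual)
        pred += learning_rate * tree.predict(X)
        trees.append(tree)
    return {"base": base, "lr": learning_rate, "trees": trees}

def boost_predict(model, X):
    """Sum the shrunken contributions of all trees on top of the constant base value."""
    pred = np.full(len(X), model["base"])
    for tree in model["trees"]:
        pred += model["lr"] * tree.predict(X)
    return pred

rng = np.random.default_rng(2)
X = rng.uniform(-2, 2, size=(500, 2))
y = X[:, 0] ** 2 + np.sin(2 * X[:, 1])
model_10 = boost_fit(X, y, n_rounds=10)
model_100 = boost_fit(X, y, n_rounds=100)
```

A smaller learning rate needs more rounds but usually generalizes better, which is why the learning rate and the number of trees are tuned together.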

2.8.3. Three-Dimensional Convolutional Neural Network (CNN3D)

CNN3D extends conventional CNNs by incorporating the temporal axis into the convolution operations, making it well-suited to dynamic environmental data, such as satellite-derived aerosol fields [53,54]. The core architectural components, including the number of filters, temporal and spatial kernel sizes, dropout rate, and dilated convolutions, shape the model’s capacity to extract long-term spatiotemporal dependencies from the data. The model was trained using the MSE loss and the Adam optimizer, with gradient clipping to stabilize training. It uses a custom CausalConv3D layer with kernel_size = (3, 3, 3) (time × height × width) and causal padding along the time axis to avoid look-ahead. A stride of 1 and “same” spatial padding preserved the 1 km spatial resolution. The convolutions use ReLU activation with Glorot initialization, and optional in-layer dropout is available for regularization. FixedStandardize and LayerNormalization (float32) layers stabilized the optimization. The 3 × 3 × 3 kernels capture hour-to-hour dynamics and local spatial patterns without over-smoothing, while retaining the kilometer-scale detail needed to resolve pollution hotspots. The hyperparameters are listed in Table 7.
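The effect of causal temporal padding can be checked with a small single-channel NumPy implementation: padding only the start of the time axis with kt - 1 frames makes each output frame depend on the current and past frames only, while symmetric spatial padding with stride 1 keeps the grid size. This is an illustrative sketch, not the authors’ Keras CausalConv3D layer, which also handles multiple channels, activations, and dilation.

```python
import numpy as np

def causal_conv3d(x, kernel):
    """Single-channel 3-D cross-correlation that is causal in time.

    x      : array (T, H, W)
    kernel : array (kt, kh, kw) with odd kh and kw
    Output frame t uses input frames t-kt+1 .. t; spatial size is preserved.
    """
    kt, kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    # Pad kt-1 zero frames at the start of time only; pad space symmetrically ("same")
    xp = np.pad(x, ((kt - 1, 0), (ph, ph), (pw, pw)))
    T, H, W = x.shape
    out = np.zeros((T, H, W))
    for dt in range(kt):
        for dh in range(kh):
            for dw in range(kw):
                out += kernel[dt, dh, dw] * xp[dt:dt + T, dh:dh + H, dw:dw + W]
    return out

rng = np.random.default_rng(3)
x = rng.normal(size=(6, 5, 5))
k = rng.normal(size=(3, 3, 3))
y1 = causal_conv3d(x, k)
x_future = x.copy()
x_future[4:] += 100.0  # perturb only frames 4 and 5
y2 = causal_conv3d(x_future, k)
```

Perturbing future frames leaves earlier outputs unchanged, which is exactly the no-look-ahead property the causal padding is meant to guarantee.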

2.8.4. Convolutional Long Short-Term Memory (ConvLSTM)

ConvLSTM extends the standard LSTM by replacing the matrix multiplications in its gates with two-dimensional convolutions, thereby enabling integrated spatiotemporal learning [55]. Critical hyperparameters, such as the number of ConvLSTM layers, filter sizes, kernel dimensions, dropout rates, and normalization strategies, determine the model’s ability to capture both temporal dependencies and spatial structures. The model was trained with the MSE loss and the Adam optimizer, using gradient clipping (clipnorm = 1.0) to prevent exploding gradients. The ConvLSTM model is loaded with the custom FixedStandardize and TakeLastT objects. The 2D convolutions inside the LSTM gates extract local spatial structure, while the recurrent pathway models temporal dependencies, balancing space and time. Inference enables mixed precision when supported and follows the 72→24 setup (X: N × 72 × H × W × C; Y: 24 future hours). A summary of the structural hyperparameters is presented in Table 8.
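To make the idea of convolutions replacing matrix multiplications concrete, the following NumPy sketch implements one ConvLSTM step: the input, forget, and output gates and the candidate cell state are all computed from “same”-padded 2D convolutions of the current input frame and the previous hidden state. It follows the common formulation without peephole connections (as in Keras's ConvLSTM2D). The function names (`conv2d_same`, `convlstm_step`, `run_convlstm`), the gate ordering, and the choice to return only the final hidden state are assumptions for illustration, not the study's implementation.

```python
import numpy as np


def conv2d_same(x, k):
    """'Same'-padded 2D cross-correlation: x (H, W, Cin), k (kh, kw, Cin, Cout), odd kh, kw."""
    kh, kw, _, cout = k.shape
    xp = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2), (0, 0)))
    H, W, _ = x.shape
    out = np.zeros((H, W, cout))
    for i in range(H):
        for j in range(W):
            patch = xp[i:i + kh, j:j + kw, :]            # (kh, kw, Cin)
            out[i, j] = np.tensordot(patch, k, axes=3)   # contract kh, kw, Cin -> (Cout,)
    return out


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def convlstm_step(x_t, h_prev, c_prev, Wx, Wh, b):
    """Hypothetical ConvLSTM step (no peepholes); gate order i, f, g, o is assumed.

    Wx: (kh, kw, Cin, 4F) input kernels; Wh: (kh, kw, F, 4F) recurrent kernels; b: (4F,).
    """
    # Convolutions replace the matrix products W x_t and U h_{t-1} of a standard LSTM.
    z = conv2d_same(x_t, Wx) + conv2d_same(h_prev, Wh) + b
    zi, zf, zg, zo = np.split(z, 4, axis=-1)
    i, f, o = sigmoid(zi), sigmoid(zf), sigmoid(zo)
    g = np.tanh(zg)                    # candidate cell state
    c_t = f * c_prev + i * g           # cell state keeps its H x W x F layout
    h_t = o * np.tanh(c_t)
    return h_t, c_t


def run_convlstm(x_seq, Wx, Wh, b, filters):
    """Scan a (T, H, W, Cin) sequence and return only the final hidden state (H, W, F)."""
    _, H, W, _ = x_seq.shape
    h = np.zeros((H, W, filters))
    c = np.zeros((H, W, filters))
    for x_t in x_seq:
        h, c = convlstm_step(x_t, h, c, Wx, Wh, b)
    return h


rng = np.random.default_rng(1)
T, H, W, C, F = 5, 6, 7, 3, 4
x_seq = rng.normal(size=(T, H, W, C))
Wx = rng.normal(scale=0.1, size=(3, 3, C, 4 * F))
Wh = rng.normal(scale=0.1, size=(3, 3, F, 4 * F))
b = np.zeros(4 * F)
h_last = run_convlstm(x_seq, Wx, Wh, b, F)
print(h_last.shape)
```

Because the hidden and cell states keep their H × W layout, each grid cell's memory is updated only from its spatial neighborhood, which is the sense in which ConvLSTM couples spatial structure with temporal recurrence.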

2.9. Model Evaluation

The performance of the machine learning models was evaluated through quantitative and spatial assessments to comprehensively capture the accuracy and reliability of PM2.5 forecasts.

2.9.1. Quantitative Evaluation

The quantitative assessment employed several statistical metrics. Pearson’s correlation coefficient (R) measures the linear association between the predicted and observed values, with values closer to 1 indicating stronger agreement (Equation (1)) [47]. The coefficient of determination (R²) quantifies the proportion of the observed variance explained by the model, with values approaching 1 reflecting higher predictive capability (Equation (2)) [56]. The Root Mean Squared Error (RMSE) represents the magnitude of the prediction errors in PM2.5 units, weighting large errors more heavily, with lower values denoting greater accuracy (Equation (3)) [57]. Percent bias (PBIAS) evaluates the systematic deviation of predictions from observations, with positive values indicating overestimation and negative values indicating underestimation (Equation (4)). Finally, the Mean Absolute Percentage Error (MAPE) expresses the average prediction error in percentage terms, allowing meaningful comparisons across time series and forecasting methods, and can further be used to derive prediction accuracy (Equations (5) and (6)) [58].

where $O_i$ denotes the observed PM2.5 concentration obtained from ground-based monitoring stations, $P_i$ represents the predicted values from the machine learning model, and $\bar{O}$ is the mean of the observed values. In correlation-based metrics (e.g., Equation (1)), the predicted values $P_i$ and their mean $\bar{P}$ serve as the second variable and its mean. The term $n$ indicates the total number of paired samples considered in the calculation.
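Because Equations (1)–(6) are not reproduced in this text, the following Python sketch computes the metrics using common textbook forms, so the exact definitions may differ from those used in the study. Assumptions: R² is computed as 1 − SS_res/SS_tot (which, unlike the square of Pearson's R, also penalizes systematic bias), PBIAS = 100 Σ(P_i − O_i)/Σ O_i so that positive values indicate overestimation as stated above, and prediction accuracy is taken as 100% − MAPE. MAE, which appears in the evaluation workflow, is included for completeness. The function name `evaluation_metrics` is hypothetical.

```python
import numpy as np


def evaluation_metrics(obs, pred):
    """Hypothetical helper: point-wise skill of predicted (P_i) vs observed (O_i) PM2.5."""
    o = np.asarray(obs, dtype=float)
    p = np.asarray(pred, dtype=float)
    r = np.corrcoef(o, p)[0, 1]                                    # Pearson's R, Eq. (1)
    r2 = 1.0 - np.sum((o - p) ** 2) / np.sum((o - o.mean()) ** 2)  # assumed 1 - SS_res/SS_tot
    rmse = np.sqrt(np.mean((p - o) ** 2))
    mae = np.mean(np.abs(p - o))
    pbias = 100.0 * np.sum(p - o) / np.sum(o)                      # > 0 means overestimation
    mape = 100.0 * np.mean(np.abs((o - p) / o))                    # undefined if any O_i == 0
    accuracy = 100.0 - mape                                        # assumed form of Eq. (6)
    return {"R": r, "R2": r2, "RMSE": rmse, "MAE": mae,
            "PBIAS": pbias, "MAPE": mape, "Accuracy": accuracy}


m = evaluation_metrics([10, 20, 30, 40], [12, 18, 33, 41])
print({k: round(float(v), 3) for k, v in m.items()})
```

In this toy example the errors sum to +4 µg/m³ over a total observed mass of 100 µg/m³, so PBIAS is +4%, consistent with the sign convention described above.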

2.9.2. Spatial Evaluation

The spatial assessment focused on evaluating the consistency of spatial patterns. The Pattern Correlation Coefficient (PCC) applies Pearson’s correlation across grid cells to compare the spatial distributions of the simulated and observed fields, thereby assessing the linear consistency of their spatial patterns (Equation (7)) [59]. In addition, the Structural Similarity Index (SSIM) was used to measure the structural resemblance between the simulated and observed maps in terms of luminance, contrast, and structure. SSIM provides a more perceptually relevant evaluation of spatial outputs than traditional error metrics, with values closer to 1 indicating higher structural similarity (Equation (8)) [60].

where $\mu_x$ and $\mu_y$ denote the spatial means of the modeled and observed data, respectively, and $\sigma_x^2$ and $\sigma_y^2$ denote the spatial variances of both datasets. The term $\sigma_{xy}$ indicates the spatial covariance between the model outputs and observations. In the case of the Structural Similarity Index (SSIM), the constants $C_1$ and $C_2$ are included to stabilize the calculation when the denominator approaches zero.
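The two spatial scores can be sketched in NumPy as follows. `pattern_correlation` applies Pearson's correlation to all grid cells that are valid in both the modeled and observed maps, and `global_ssim` evaluates the SSIM expression once over the whole map using the means, variances, and covariance defined above. Both names are hypothetical. Assumptions: C_1 = (K_1 L)² and C_2 = (K_2 L)² with the conventional K_1 = 0.01, K_2 = 0.03 and L the data range. The widely used windowed SSIM (for example, `skimage.metrics.structural_similarity`) instead averages this quantity over local sliding windows, and this section does not state which variant was used.

```python
import numpy as np


def _paired(model, obs):
    """Flatten two maps and keep grid cells that are valid (non-NaN) in both."""
    m = np.asarray(model, dtype=float).ravel()
    o = np.asarray(obs, dtype=float).ravel()
    ok = ~(np.isnan(m) | np.isnan(o))
    return m[ok], o[ok]


def pattern_correlation(model, obs):
    """Hypothetical helper: Pearson correlation across grid cells (PCC)."""
    m, o = _paired(model, obs)
    return np.corrcoef(m, o)[0, 1]


def global_ssim(model, obs, data_range=None, k1=0.01, k2=0.03):
    """Hypothetical helper: SSIM evaluated once over the whole map (no sliding window)."""
    x, y = _paired(model, obs)       # x: modeled, y: observed
    if data_range is None:
        data_range = max(x.max(), y.max()) - min(x.min(), y.min())
    c1 = (k1 * data_range) ** 2      # assumed C_1 = (K_1 L)^2
    c2 = (k2 * data_range) ** 2      # assumed C_2 = (K_2 L)^2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))


rng = np.random.default_rng(2)
obs_map = rng.gamma(2.0, 20.0, size=(30, 40))   # synthetic PM2.5 field
biased_map = obs_map + 15.0                      # same pattern, higher level
print(pattern_correlation(biased_map, obs_map), global_ssim(biased_map, obs_map))
```

Adding a constant offset leaves PCC unchanged but lowers SSIM through its luminance term, which is why the two scores complement each other when judging spatial maps.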

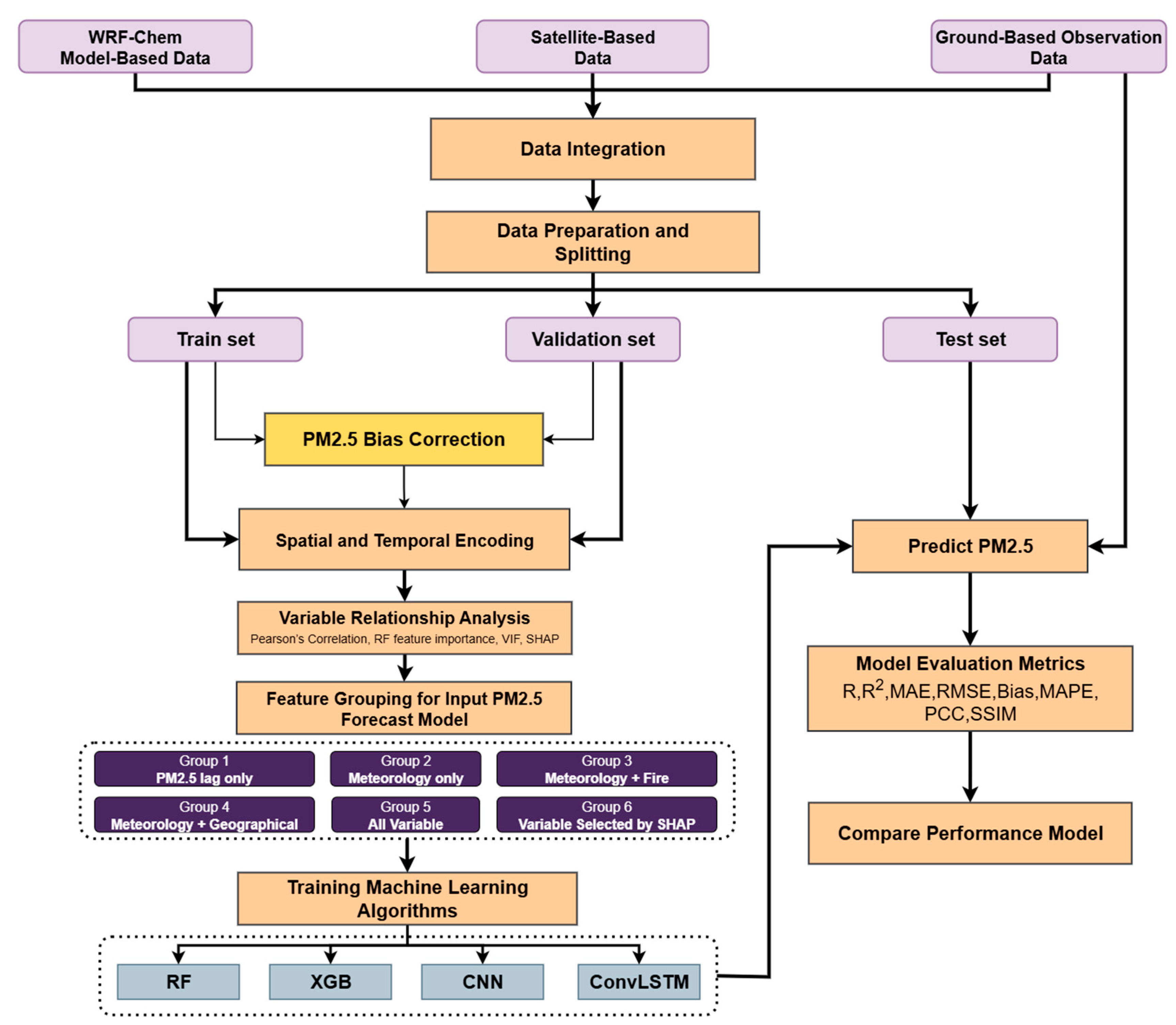

2.10. Flow Chart

The workflow for developing and evaluating the PM2.5 forecasting models integrated multi-source datasets, including numerical model outputs from WRF-Chem (e.g., WRF-PM2.5, T2, RH2, WD10, WS10, Precipitation, PBLH), satellite-derived products (Burned Area, Hotspot Density, DEM, NDVI, Land Cover), and ground-based observations (PM2.5_obs). All datasets were preprocessed and integrated before being split into training, validation, and testing sets. Subsequently, a bias correction procedure was applied to the WRF-Chem PM2.5 outputs using multiple machine learning algorithms, including Linear Regression, Random Forest, XGBoost, and CNN, with model performance evaluated through R, R², MAE, and RMSE. After bias correction, all inputs were integrated into a unified 1 km, hourly grid to align the spatial and temporal resolutions across the numerical model outputs, satellite layers, and ground observations.

Spatial and temporal encoding variables were then generated and incorporated into a variable relationship analysis using Pearson’s correlation, VIF, and SHAP. This analysis guided the grouping of features into six categories based on variable characteristics, including lag-only, meteorological-only, fire-related, geographical, and SHAP-selected groups. Each feature group was used to construct forecasting models with the selected algorithms, namely Random Forest, XGBoost, CNN3D, and ConvLSTM.
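The VIF step in this screening can be sketched with ordinary least squares: each predictor is regressed on all the others (with an intercept), and VIF_j = 1/(1 − R_j²), so strongly collinear predictors receive large values. The helper name is hypothetical, and the VIF cutoff used to form the feature groups is not given in this section; thresholds of 5 or 10 are common rules of thumb.

```python
import numpy as np


def variance_inflation_factors(X):
    """Hypothetical helper: VIF_j = 1 / (1 - R_j^2), regressing column j on the others."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    vifs = np.empty(k)
    for j in range(k):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        A = np.column_stack([np.ones(n), others])      # intercept + remaining predictors
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)   # ordinary least squares fit
        resid = y - A @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
        vifs[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return vifs


rng = np.random.default_rng(3)
a = rng.normal(size=500)
b = rng.normal(size=500)
c = a + 0.05 * rng.normal(size=500)   # nearly a copy of a, e.g. two redundant predictors
vif = variance_inflation_factors(np.column_stack([a, b, c]))
print(np.round(vif, 1))
```

The independent column stays close to 1, while the near-duplicate pair is flagged; in practice one member of such a pair would be dropped and the VIFs recomputed.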

During model training and validation, the model learns a mapping from 72 h predictor sequences (meteorology, fire and geographic layers, temporal encodings, and lagged PM2.5 interpolated from station observations) to the next 24 h of bias-corrected PM2.5, which serves solely as the learning target. During inference on held-out test periods, the same 72 h predictors are supplied (with lagged PM2.5 only up to the forecast start time), and no future PM2.5 is used. The model then produces 24 h PM2.5 predictions that are evaluated against independent station observations. This design clearly separates what is predicted from how predictions are assessed and prevents temporal information leakage.
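The 72→24 sample construction and a leakage-free chronological split can be sketched as below. For a forecast start hour s, the inputs cover hours [s − 72, s) and the targets cover [s, s + 24), so no target hour is visible to the inputs, and training windows are kept only if their targets end before the test period begins. The function names and the array layout (T × H × W × C predictors, T × H × W target) are assumptions consistent with the shapes quoted for the ConvLSTM setup, not the study's code.

```python
import numpy as np


def make_windows(features, target, lookback=72, horizon=24):
    """Hypothetical helper: build (X, Y) forecast samples from hourly gridded arrays.

    features: (T, H, W, C) predictors, where any lagged-PM2.5 channel holds values at or
              before each hour; target: (T, H, W) bias-corrected PM2.5.
    For a forecast start s, X covers hours [s - lookback, s) and Y covers [s, s + horizon).
    """
    T = features.shape[0]
    starts = np.arange(lookback, T - horizon + 1)
    if starts.size == 0:
        raise ValueError("series too short for the requested lookback and horizon")
    X = np.stack([features[s - lookback:s] for s in starts])  # (N, lookback, H, W, C)
    Y = np.stack([target[s:s + horizon] for s in starts])     # (N, horizon, H, W)
    return X, Y, starts


def chronological_split(starts, horizon, test_from):
    """Boolean masks: training windows whose targets end before test_from, test windows at/after it."""
    train = starts + horizon <= test_from   # no training target reaches the test period
    test = starts >= test_from
    return train, test


T, H, W, C = 200, 2, 3, 4
feats = np.arange(T * H * W * C, dtype=float).reshape(T, H, W, C)
pm = np.arange(T * H * W, dtype=float).reshape(T, H, W)
X, Y, starts = make_windows(feats, pm)
train, test = chronological_split(starts, horizon=24, test_from=150)
print(X.shape, Y.shape, int(train.sum()), int(test.sum()))
```

Windows whose targets straddle the boundary fall into neither set and are discarded, which trades a few samples for a clean separation between training targets and the held-out period.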

The forecasts were further evaluated using a comprehensive set of performance metrics, including R, R², MAE, RMSE, PBIAS, MAPE, Pattern Correlation Coefficient (PCC), and Structural Similarity Index (SSIM), to systematically compare the predictive ability of each algorithm. Overall, this workflow connects data acquisition, preprocessing, and bias correction with model development and final evaluation, providing a consistent basis for developing and comparing hourly PM2.5 forecasting models (Figure 2).

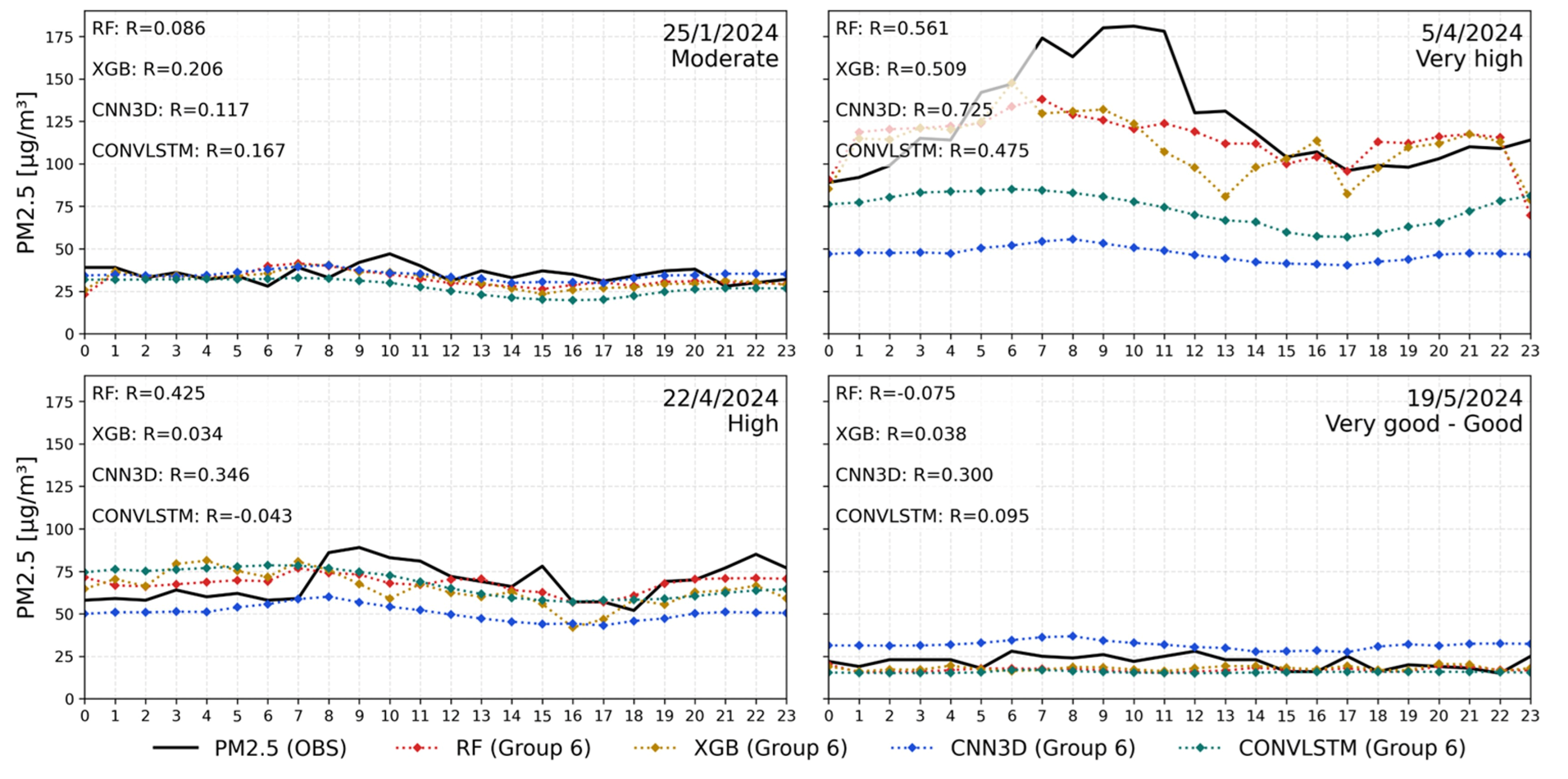

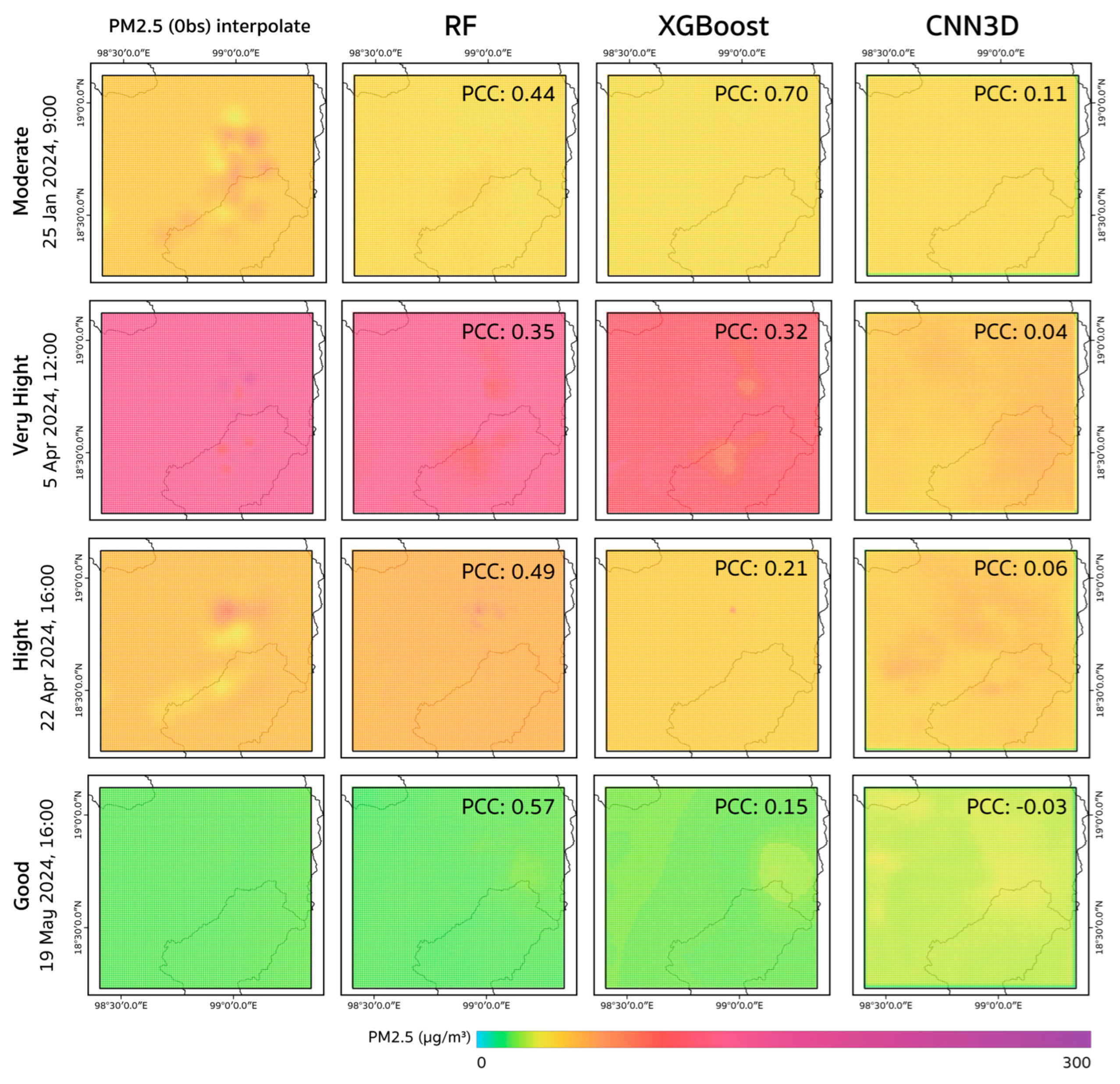

4. Discussion

This study highlights the effectiveness of ML models in predicting hourly PM2.5 concentrations by integrating bias-corrected WRF-Chem model outputs, satellite-based information, and ground-based observations. Among the models tested, tree-based algorithms, particularly RF and XGBoost, demonstrated consistently high accuracy and robustness. These models captured hourly variations in PM2.5 more reliably than the deep learning models, particularly during high-concentration episodes. This finding is consistent with Chen et al. [61], who reported that RF performs well on noisy and heterogeneous datasets, and it extends the results of Ma et al. [62] by applying a fine-resolution (1 km) spatiotemporal framework in northern Thailand.

Deep learning models, including CNN3D and ConvLSTM, exhibited weaker performance in this study despite their theoretical ability to capture spatiotemporal dependencies. Their instability during training and reduced generalizability under real-world conditions are consistent with the findings of Wu et al. [63]. Although Qiu et al. [64] suggested that hybrid CNN-LSTM structures may offer advantages in certain contexts, the results presented here indicate that tree-based models remain more consistent when the data are incomplete, noisy, or influenced by complex topography.

The analysis of variable importance further emphasized the strong contribution of lagged PM2.5 (e.g., lag1, lag24) and meteorological drivers (T2, RH2, and wind speed/direction), consistent with Bi et al. [65], who noted the central role of temporal persistence and meteorological influences in shaping air pollution patterns in Southeast Asia. Fire-related factors, particularly burned area, were especially relevant during high-pollution events. The variable grouping approach applied in this study proved effective in reducing redundancy while retaining predictive skill, which supports earlier findings [61] and extends them by offering a systematic grouping strategy that facilitates fair comparison across algorithms.

From a temporal perspective, RF and XGB successfully captured the diurnal cycles and short-term fluctuations of the observed PM2.5, demonstrating their suitability for real-time early warning systems. Nevertheless, performance decreased under low-to-moderate AQI conditions owing to weaker signals and lower signal-to-noise ratios, as also reported by Zhang et al. [66]. At low PM2.5 levels, prediction accuracy typically declines because (1) the signal-to-noise ratio is lower and instrument/representation errors become comparable to the signal, (2) predictor–target coupling weakens (e.g., fire activity is sparse and meteorology explains less variance), and (3) the variance is small, so correlation-based metrics (R, R²) are limited by the ratio of signal variance to error variance even when absolute errors are modest. In our AQI-stratified evaluation, the models remained statistically significant but showed reduced effect sizes in the “Good” and “Moderate” ranges, consistent with these mechanisms. Practically, this suggests adopting heteroscedasticity-aware training (e.g., variance-stabilizing transforms or quantile losses) and reporting AQI-stratified metrics to reflect use-case performance under clean-air regimes. Spatially, RF and XGB maintained higher consistency with the observed PM2.5 distributions than CNN3D and ConvLSTM, indicating their suitability for regions with complex terrain, such as northern Thailand. The integration of bias-corrected WRF-Chem outputs, as emphasized by Noynoo et al. [67], plays a critical role in stabilizing predictions across both the temporal and spatial dimensions.
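Mechanism (3) and the AQI-stratified reporting suggested above can be illustrated with a short NumPy sketch that computes R and RMSE separately within concentration bands. In the example, the same ±2 µg/m³ error is added to a narrow low-concentration band and a wide high-concentration band: RMSE is identical in both, but R is markedly lower in the low band. The band edge of 25 µg/m³ is a placeholder rather than the AQI breakpoint set used in the study, and `stratified_scores` is a hypothetical name.

```python
import numpy as np


def stratified_scores(obs, pred, edges, labels):
    """Hypothetical helper: Pearson R and RMSE within bands of observed PM2.5.

    edges: increasing band boundaries (µg/m3); labels: len(edges) + 1 band names.
    """
    o = np.asarray(obs, dtype=float)
    p = np.asarray(pred, dtype=float)
    band = np.digitize(o, edges)   # 0 below edges[0], ..., len(edges) at/above edges[-1]
    scores = {}
    for k, name in enumerate(labels):
        mask = band == k
        if mask.sum() < 3:
            continue               # too few pairs for a meaningful correlation
        scores[name] = {
            "n": int(mask.sum()),
            "R": np.corrcoef(o[mask], p[mask])[0, 1],
            "RMSE": np.sqrt(np.mean((p[mask] - o[mask]) ** 2)),
        }
    return scores


# Same +/-2 error in a narrow clean-air band and a wide polluted band.
obs = np.concatenate([np.linspace(0.0, 10.0, 20), np.linspace(50.0, 150.0, 20)])
pred = obs + np.tile([2.0, -2.0], 20)
scores = stratified_scores(obs, pred, edges=[25.0], labels=["low", "high"])  # placeholder edge
for name, s in scores.items():
    print(name, s["n"], round(float(s["R"]), 3), round(float(s["RMSE"]), 3))
```

Reporting the per-band RMSE alongside R makes it visible when a low correlation in clean-air conditions reflects small observed variance rather than large errors.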

Comparative context with related works. Our Random Forest achieved R = 0.93 and R² = 0.85 in Group 6 (variables selected by SHAP and VIF), as shown in Figure 6a,d, which exceeds the R² = 0.81 reported by Joharestani et al. [19] for an urban domain using multisource inputs. The improvement is plausibly attributable to three design choices rather than to model type alone: (1) feature curation, namely a disciplined SHAP+VIF screen that removed weak or redundant predictors while retaining lagged PM2.5 and key meteorology; (2) physics-informed inputs, namely bias-corrected WRF-Chem fields that stabilize the signal and complement observations, rather than reliance on aerosol optical depth (AOD), which did not consistently improve skill; and (3) task framing, namely an hourly, fine-scale (1 km) setup with fire-related covariates suited to the regional biomass-burning regime and evaluation on strictly held-out high-pollution episodes. Taken together, these choices likely increased explanatory power without inflating model complexity, yielding higher R and R² while remaining methodologically comparable to prior multisource RF studies.

Overall, while earlier studies have demonstrated the benefits of multi-source integration [62] and the strong predictive ability of tree-based models [61], this study distinguishes itself by combining both within a fine-resolution experimental framework and applying a systematic variable grouping strategy. This approach strengthens the reliability of ML-based PM2.5 prediction and provides practical insights for operational air quality management and policy support in Southeast Asia, particularly in Thailand.

Limitations and Future Perspectives

This study has several limitations that should be considered when interpreting the results, as well as opportunities for future development that build upon these findings.

First, ground monitoring stations are unevenly distributed, with dense coverage in urban centers but sparse representation in rural and mountainous regions. This imbalance produces non-uniform confidence in the spatial interpolation, with uncertainty increasing with distance from the monitoring stations. As a result, both the bias correction and evaluation processes may be biased toward urban conditions, so the reported model skill likely reflects performance in urbanized zones more faithfully than in rural or topographically complex areas. Model generalization may therefore weaken when the framework is applied to regions with limited observational data.

Second, certain predictors may exert conditional importance under specific meteorological regimes. Although this study incorporated a comprehensive suite of meteorological, geospatial, and fire-related variables (near-surface temperature, relative humidity, hourly precipitation, 10 m wind speed and direction, planetary boundary layer height, ventilation coefficient, burned area, hotspot density, digital elevation, land cover, temporal encodings, and lagged PM2.5), feature selection inevitably removes some of them to reduce redundancy. Predictors such as wind direction and the ventilation coefficient can become particularly influential under strong-wind or nocturnal inversion conditions, so when they are down-selected within specific feature groups, model fidelity may decline during such events.

Third, the training dataset represented only a single haze season in northern Thailand. This limited temporal scope constrains the model’s ability to capture interannual variability, off-season conditions, and extreme events, potentially reducing its robustness when applied to other years or different climatological contexts. Extending the dataset to encompass multiple seasons would provide a more representative basis for training and evaluation.

Finally, computational constraints impose practical limitations. The 1 km, hourly, multivariable framework requires substantial computational power, memory, and storage. These constraints limited the hyperparameter search space and the scope of sensitivity analyses, preventing exhaustive exploration of all model configurations. Despite these restrictions, the modeling system still provides a valuable benchmark for near-real-time PM2.5 prediction and a scalable foundation for future studies.

Several promising pathways for improvement are available. Expanding the temporal and spatial scopes of the training data is crucial. Incorporating multi-year haze seasons will enable the model to better represent interannual variability and long-term trends, while extending the observation network through additional ground sensors and emerging high-resolution satellite platforms can reduce spatial bias in data-sparse regions.

On the methodological front, the application of advanced deep learning architectures, such as spatial attention networks, multi-scale feature fusion, or physics-informed hybrid models, may improve the representation of atmospheric dynamics over complex terrain. Similarly, exploring ensemble learning strategies that integrate tree-based and deep learning models can balance interpretability, adaptability, and computational efficiency.

From an operational perspective, deeper integration with decision-support systems used by environmental agencies and provincial authorities is essential. Linking model outputs with real-time biomass-burning management tools, air quality dashboards, and public alert systems can transform forecast information into actionable strategies. These applications can support daily advisories, optimize the scheduling of fuel-reduction operations, and enhance surveillance during periods of elevated fire risk.

In the long term, these advancements will help transition the current research framework into a fully operational, real-time early warning and policy support system. By combining extended datasets, improved architectures, and institutional collaboration, the modeling framework can evolve into a scalable and adaptive platform that bridges scientific modeling, environmental management, and public policy to support sustainable air quality governance across Southeast Asia.

5. Conclusions

This study demonstrated that integrating bias-corrected WRF-Chem outputs with satellite-based and ground-based observations substantially improved the accuracy of hourly PM2.5 predictions. Among the evaluated models, tree-based algorithms, particularly RF and XGBoost, consistently outperformed deep learning architectures (CNN3D and ConvLSTM), providing more stable and reliable forecasts. Variable importance analyses confirmed the dominant influence of lagged PM2.5 and meteorological variables (e.g., T2 and RH2), while fire-related predictors (burned area and hotspot density) exerted substantial effects during high-pollution episodes. The systematic variable grouping approach, based on correlation, VIF, RF importance, and SHAP, effectively reduced redundancy and emphasized the most predictive features. Together, these findings highlight the value of integrating physical understanding with data-driven optimization to develop robust forecasting models suitable for air quality management in complex terrain, such as northern Thailand and broader Southeast Asia.

Beyond predictive accuracy, the operational application of 24 h PM2.5 forecasts enhances their policy relevance. Forecast outputs were delivered with AQI-aligned thresholds, uncertainty ranges, and machine-readable formats (GeoTIFF/NetCDF), accessible via an hourly dashboard and API. Routine reliability summaries and calibration snapshots accompany each forecast cycle to ensure transparency and reproducibility. These products can support daily public advisories, scheduling of fuel-reduction operations, and targeted patrols or surveillance during elevated-risk periods, demonstrating how predictive analytics can be systematically integrated into biomass-burning management workflows.

Future extensions should focus on expanding the dataset to multi-year horizons to better capture the seasonal dynamics and extreme pollution events. However, this expansion must be balanced against computational demands, necessitating parallel improvements in algorithmic efficiency and infrastructure capacity to ensure optimal performance. With these advancements, the framework presented in this study offers a solid foundation for developing scalable operational PM2.5 early warning systems that bridge scientific modeling and policy implementation.