An Artificial Intelligence-Driven Precipitation Downscaling Method Using Spatiotemporally Coupled Multi-Source Data

Abstract

1. Introduction

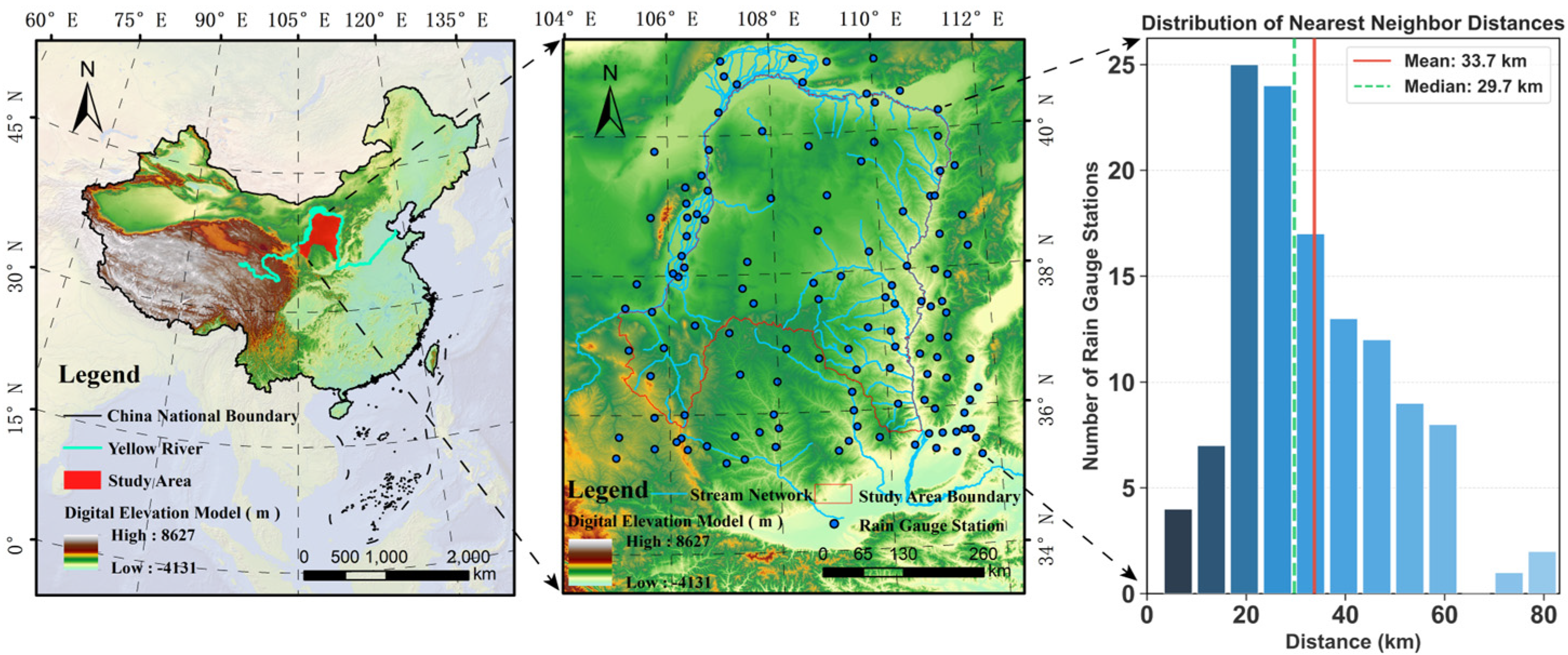

2. Data Sources

2.1. Precipitation Data

2.2. Multi-Source Environmental Features

3. Methodology

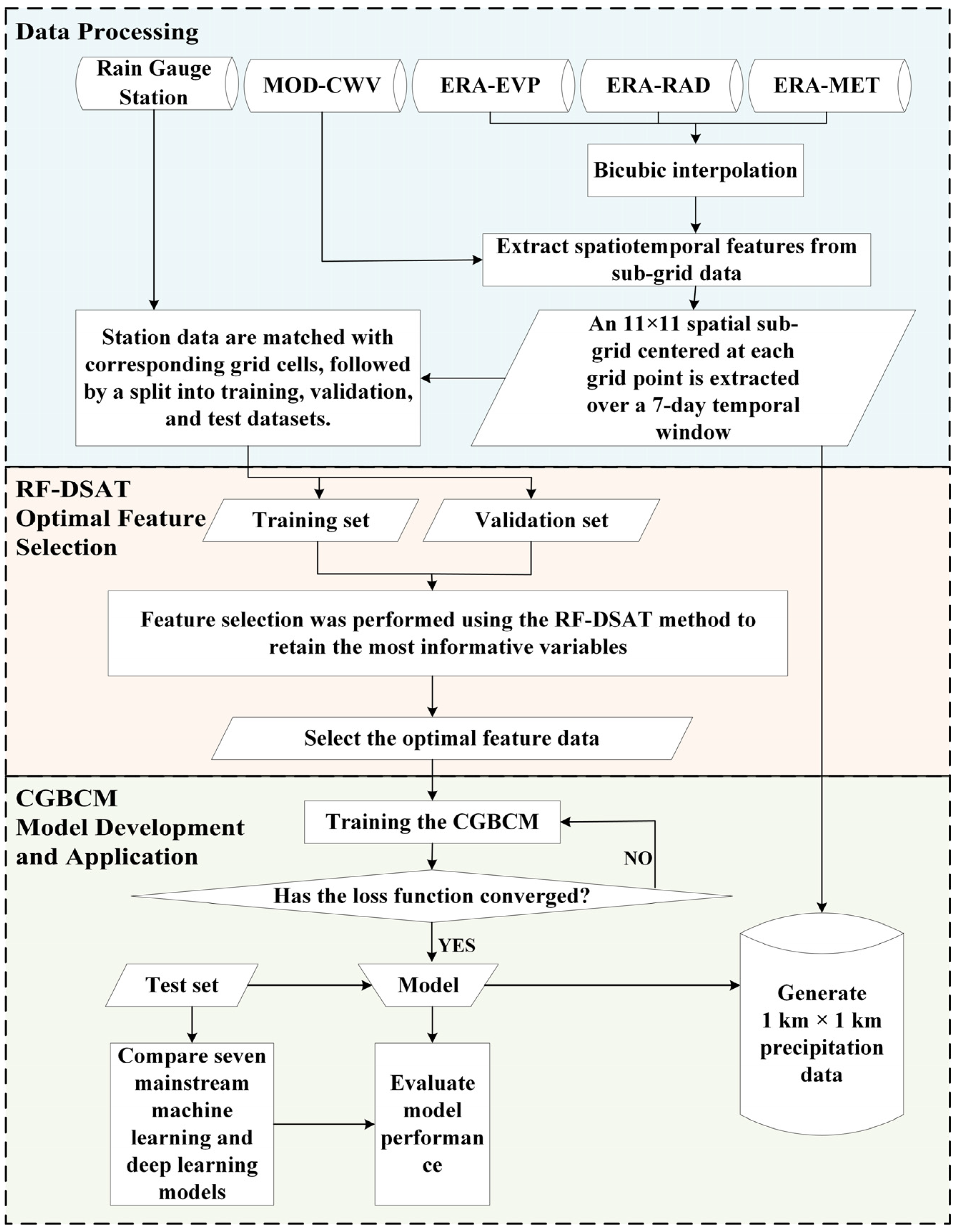

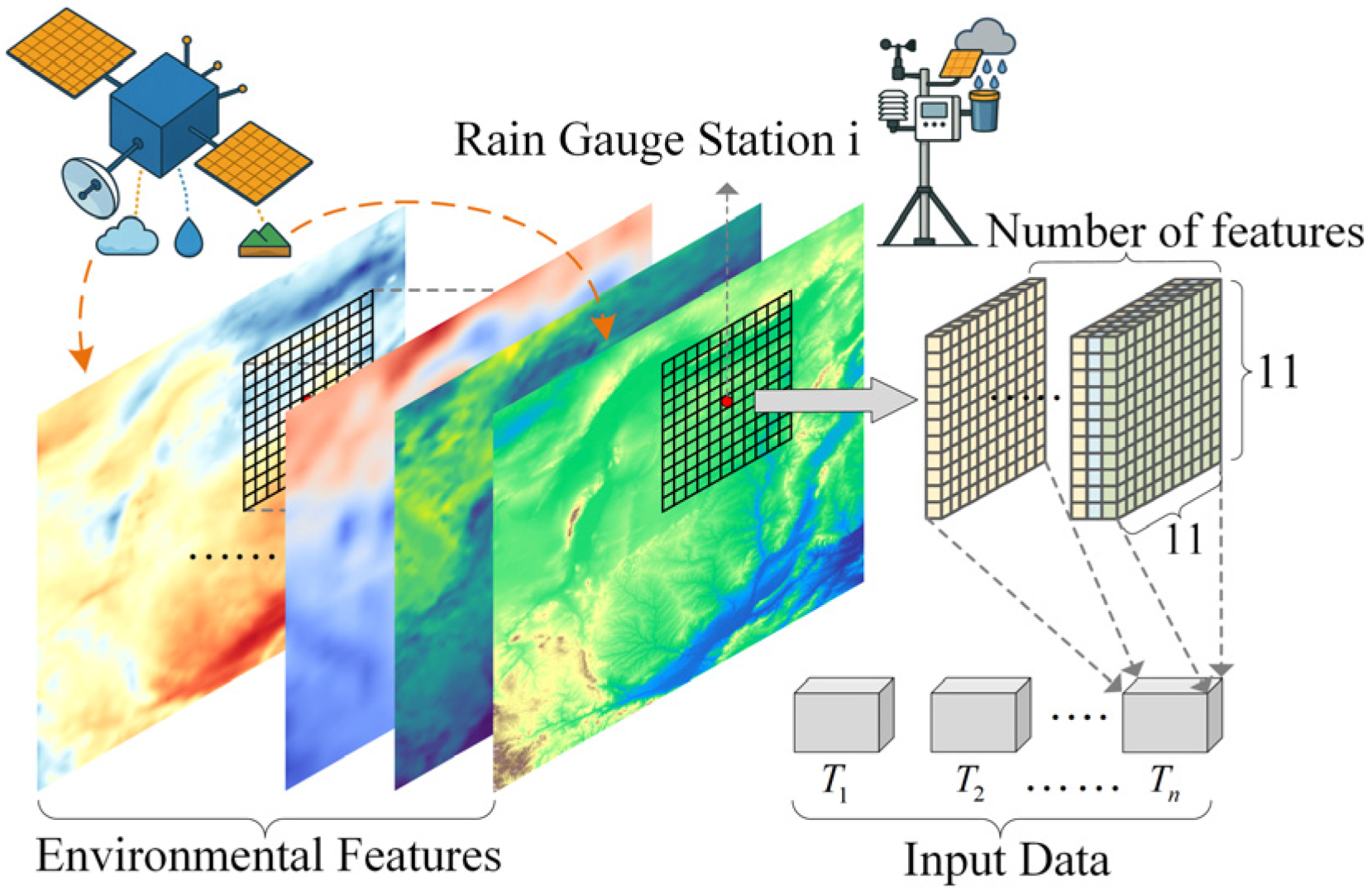

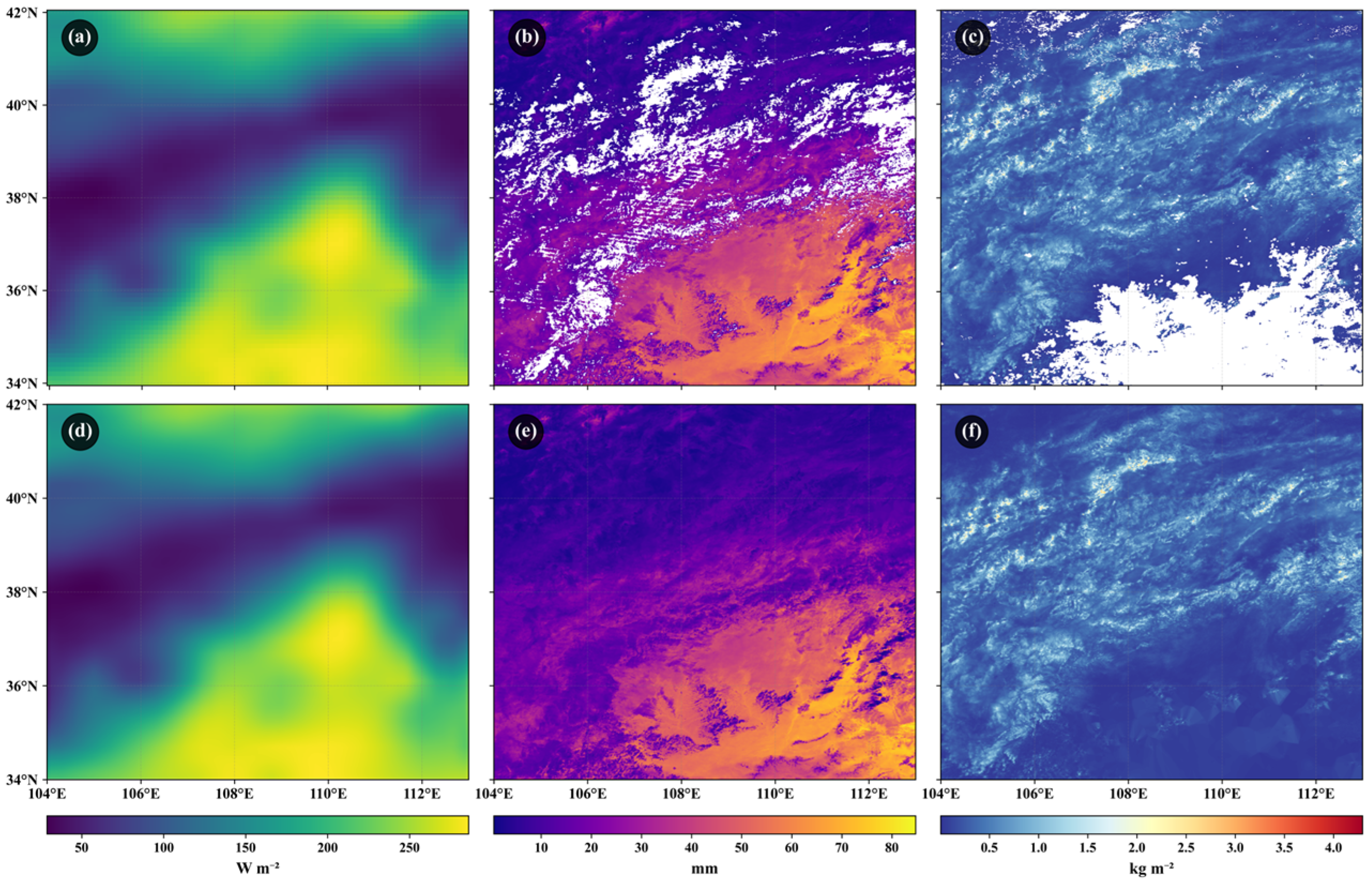

3.1. Data Processing

3.2. Random Forest-Based Dual-Spectrum Adaptive Threshold Feature Selection Algorithm

3.2.1. Process Steps

- (1) Feature importance scores are obtained from a Random Forest model, giving the feature importance vector I, sorted in descending order of importance over all features. Random Forest computes each feature's importance score by averaging the impurity reduction attributable to that feature across its decision trees, which allows it to capture nonlinear feature relationships [45].

- (2) The first-order derivative (difference) of the ranked feature importance is calculated to construct the gradient spectrum, as shown in Equation (1), where each element is the importance difference between adjacent features. To ensure comparability across scales, the gradient is normalized as shown in Equation (2). A local extremum detection algorithm is then used to identify significant change points in the gradient spectrum, as shown in Equation (3). These change points mark significant breakpoints in feature importance and therefore indicate candidate positions for the feature selection threshold.

- (3) The cumulative importance contribution of the features is calculated, where each point on the curve is the share of total importance accounted for by the top-ranked features up to that rank. The elbow method [46] is then applied to detect the inflection point of the cumulative contribution curve, using the KneeLocator class from the kneed library. The inflection point marks where the growth rate of the cumulative contribution drops markedly, i.e., the point beyond which adding further features yields diminishing returns.

- (4) In the objective function of this step, one term represents the explained variance ratio (model performance) and the other represents the feature proportion penalty (model complexity). Squaring the penalty term strengthens control over redundant features, so the objective tends to select more compact feature subsets.
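Steps (1) to (3) can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' code. The importance vector is taken as given (in practice it could be the impurity-based `feature_importances_` of a fitted scikit-learn `RandomForestRegressor`); the adjacent difference is assumed to be `I[i] - I[i+1]`; min-max scaling stands in for the normalization of Equation (2); a simple local-maximum rule stands in for the extremum detection of Equation (3); and the knee is located with a simplified Kneedle-style rule instead of kneed's `KneeLocator`. The function names `gradient_spectrum`, `gradient_change_points`, and `cumulative_knee`, as well as the importance values, are hypothetical.

```python
import numpy as np


def gradient_spectrum(importance):
    """Steps (1)-(2): rank features and build a normalized gradient spectrum.

    Returns the feature order (most important first), the ranked importance
    vector I, and the min-max normalized drops between adjacent ranks.
    """
    importance = np.asarray(importance, dtype=float)
    if importance.size < 2:
        raise ValueError("need at least two features")
    order = np.argsort(importance)[::-1]   # feature indices, descending importance
    ranked = importance[order]             # descending importance vector I
    drops = ranked[:-1] - ranked[1:]       # adjacent differences, >= 0 (assumed sign)
    span = drops.max() - drops.min()
    # Min-max scaling is an assumed stand-in for the normalization step
    norm = (drops - drops.min()) / span if span > 0 else np.zeros_like(drops)
    return order, ranked, norm


def gradient_change_points(norm, min_height=0.0):
    """Step (2): local maxima of the spectrum, returned as candidate k values."""
    candidates = []
    for i, g in enumerate(norm):
        left = norm[i - 1] if i > 0 else -np.inf
        right = norm[i + 1] if i + 1 < len(norm) else -np.inf
        if g > left and g >= right and g >= min_height:
            # A sharp drop between ranks i and i+1 (0-based) suggests
            # keeping the top i+1 features.
            candidates.append(i + 1)
    return candidates


def cumulative_knee(ranked):
    """Step (3): knee of the cumulative contribution curve C_k, k = 1..n.

    Simplified Kneedle-style rule (assumption): scale both axes to [0, 1]
    and return the k at which the curve lies farthest above the diagonal.
    """
    ranked = np.asarray(ranked, dtype=float)
    contrib = np.cumsum(ranked) / ranked.sum()   # C_k, share of total importance
    k = np.arange(1, ranked.size + 1, dtype=float)
    k_n = (k - k[0]) / (k[-1] - k[0])
    c_n = (contrib - contrib[0]) / (contrib[-1] - contrib[0])
    return int(k[np.argmax(c_n - k_n)])


importance = [0.07, 0.40, 0.03, 0.11, 0.27, 0.04, 0.08]  # illustrative values
order, ranked, norm = gradient_spectrum(importance)
print(order.tolist())                                # [1, 4, 3, 6, 0, 5, 2]
print(gradient_change_points(norm))                  # [2, 5]
print(gradient_change_points(norm, min_height=0.2))  # [2]
print(cumulative_knee(ranked))                       # 3
```

With these illustrative values the gradient spectrum flags k = 2 and k = 5 (only k = 2 survives a height cutoff of 0.2), while the cumulative curve bends at k = 3, so the two spectra can yield different candidate thresholds. kneed's `KneeLocator(x, y, curve="concave", direction="increasing")` implements the full Kneedle algorithm, including a sensitivity parameter `S`, and may place the knee differently on noisy curves.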

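Step (4) can be illustrated with a small objective of the form J(k) = R²(k) - λ·(k/n)², where R²(k) is the explained variance ratio obtained with the top-k features, k/n is the feature proportion, and λ is a trade-off weight. This exact form, the default λ = 0.5, and the names `penalized_objective` and `select_k` are assumptions for illustration, and the R² values are made up rather than results of this study. In practice, R²(k) for each candidate threshold would come from, e.g., cross-validated scores of a Random Forest trained on the top-k features.

```python
def penalized_objective(r2, k, n, lam=0.5):
    """Assumed objective J(k) = R2(k) - lam * (k / n) ** 2.

    r2  : explained variance ratio using the top-k features (performance)
    k/n : feature proportion; squaring it makes each additional feature
          cost more than the previous one (complexity penalty)
    lam : trade-off weight (hypothetical default)
    """
    if not 1 <= k <= n:
        raise ValueError("k must lie in [1, n]")
    return r2 - lam * (k / n) ** 2


def select_k(r2_by_k, n, lam=0.5):
    """Return the candidate k with the highest penalized objective."""
    return max(r2_by_k, key=lambda k: penalized_objective(r2_by_k[k], k, n, lam))


# Made-up R2 scores for three candidate thresholds among n = 7 features
r2_by_k = {2: 0.78, 3: 0.85, 5: 0.87}
print(select_k(r2_by_k, n=7, lam=0.0))  # 5: without a penalty the largest R2 wins
print(select_k(r2_by_k, n=7, lam=0.5))  # 3: the squared penalty favors a smaller subset
```

The marginal penalty for growing the subset from k to k + 1 features is λ(2k + 1)/n², which increases with k. A larger subset must therefore deliver a progressively larger R² gain to be selected, which is how the squared term pushes the selection toward compact subsets.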
3.2.2. Experimental Design

3.3. Spatiotemporally Coupled Bias Correction Model (CGBCM)

3.3.1. Spatial Information Processing Module

3.3.2. Temporal Information Processing Module

3.3.3. Spatiotemporal Information Coupling Module

3.3.4. CGBCM Performance Evaluation Module

3.3.5. Experimental Design

4. Results

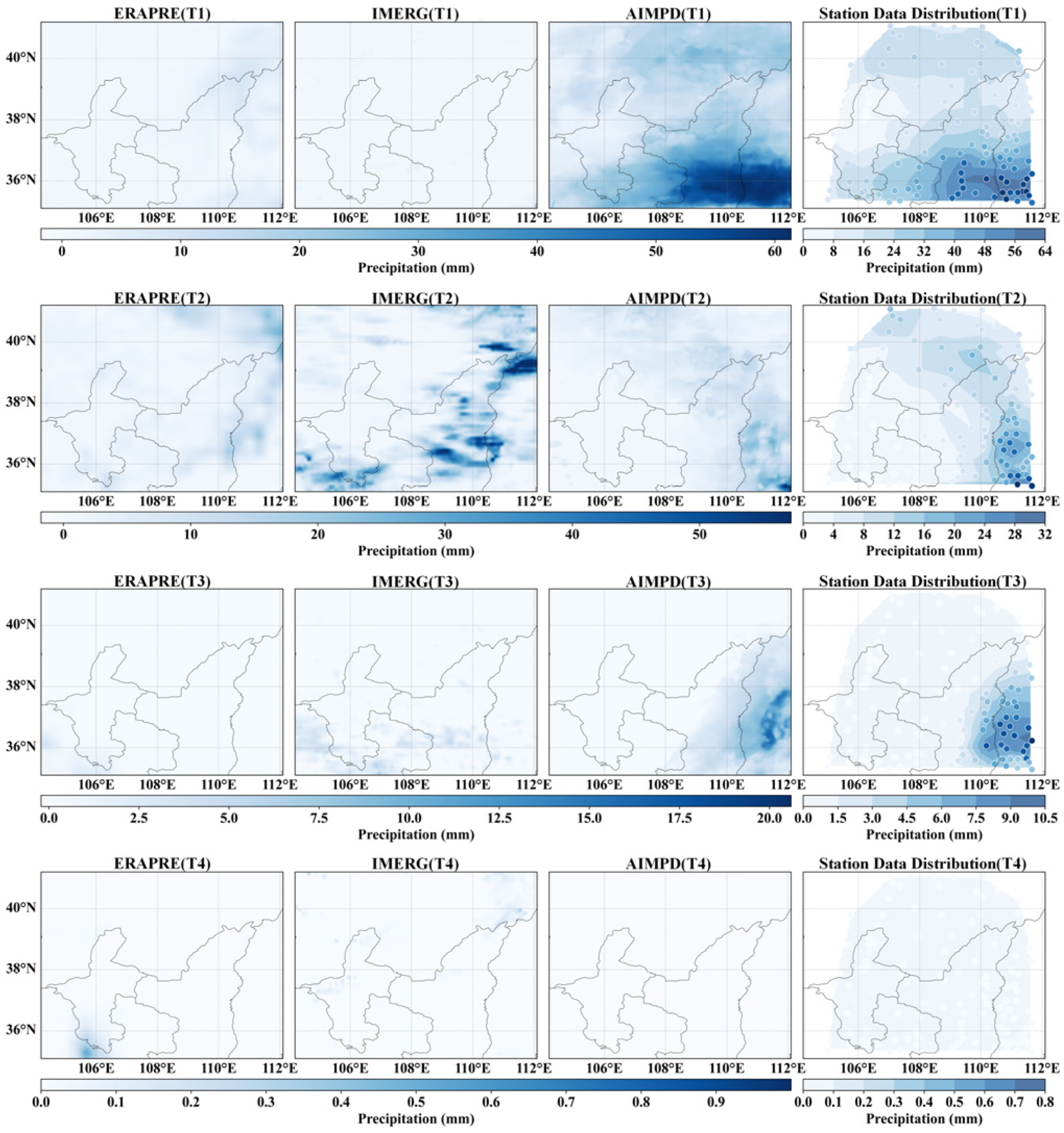

4.1. Data Processing

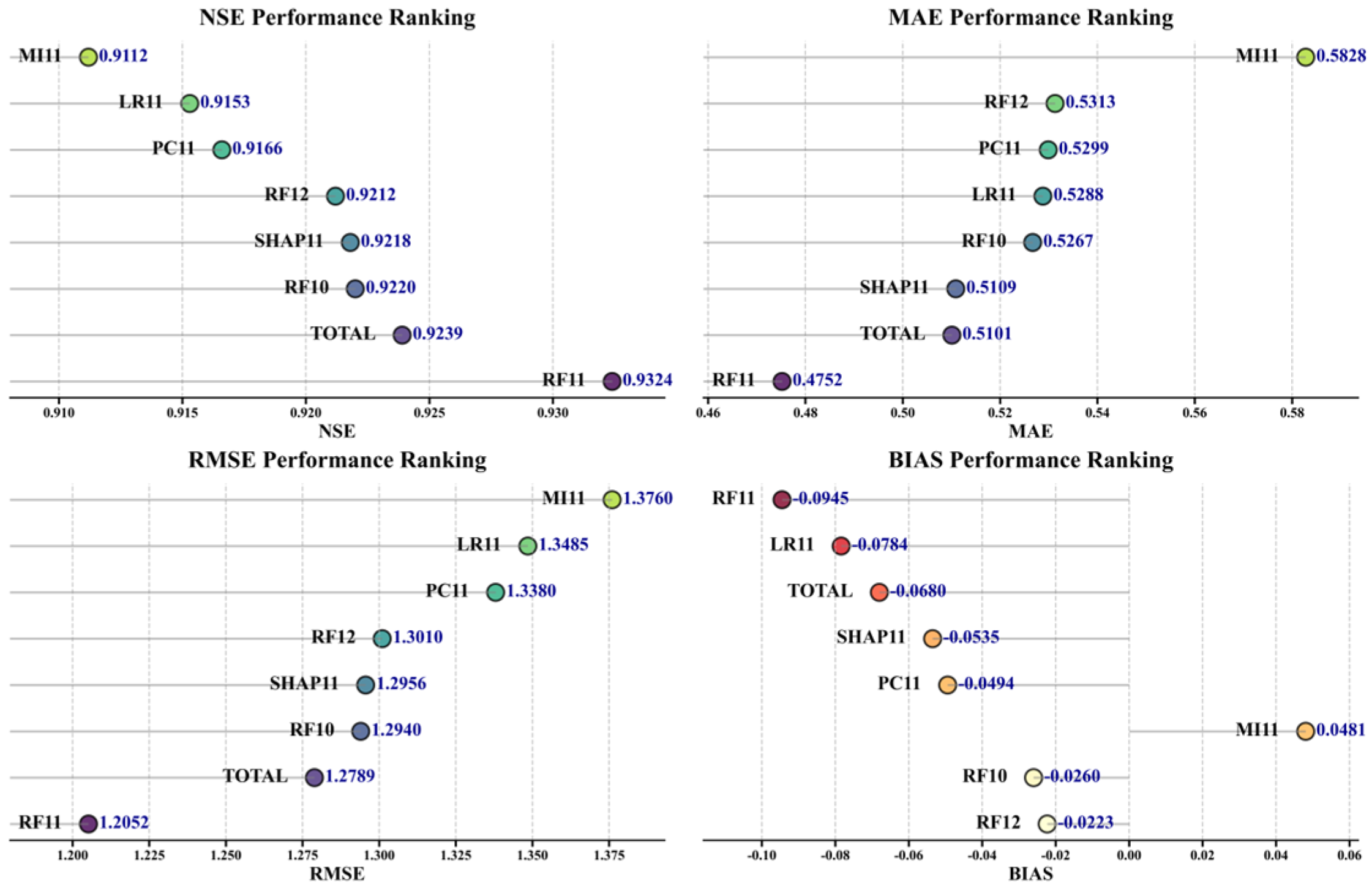

4.2. Feature Selection Algorithm Comparison

4.3. CGBCM Model Configuration and Spatiotemporal Perception Scale

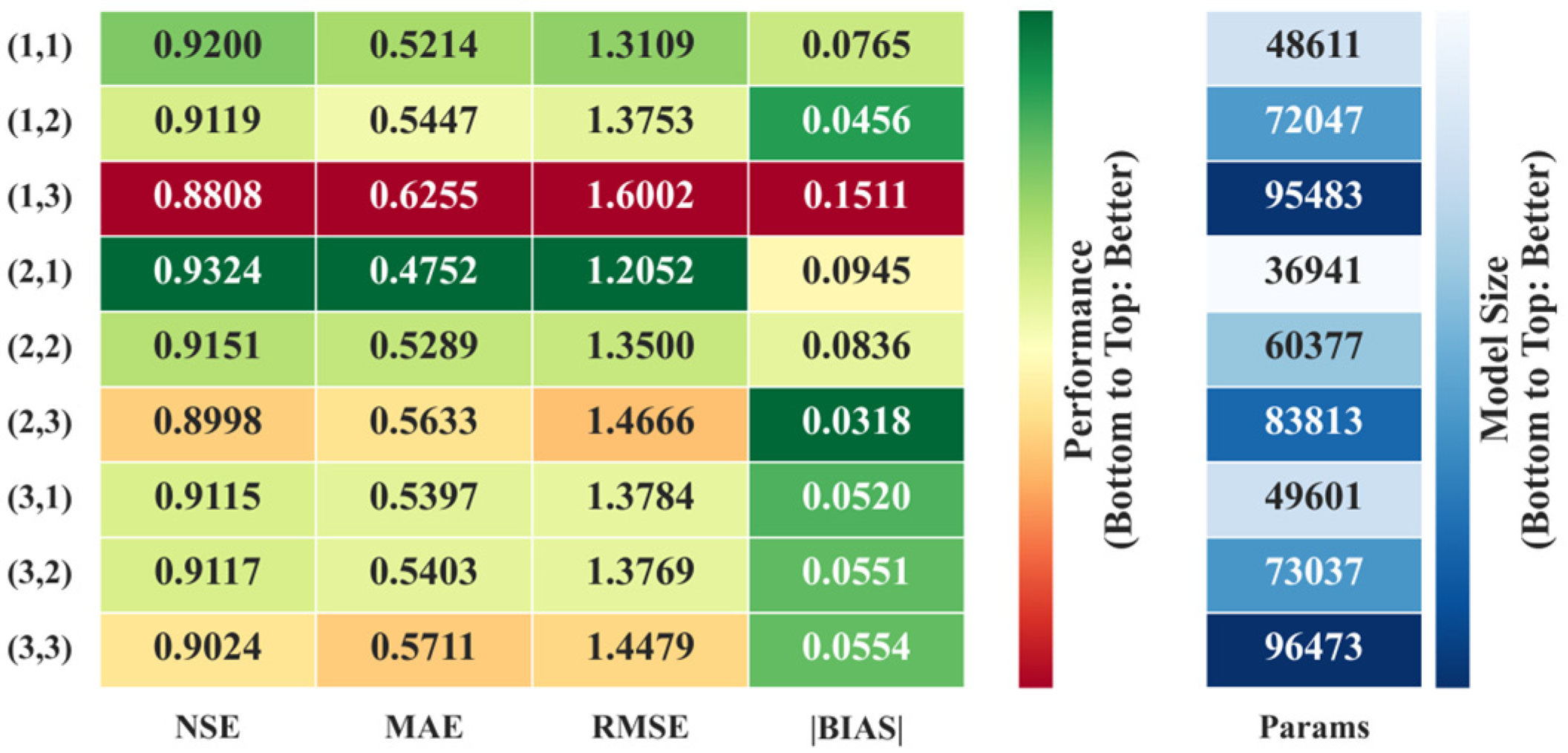

4.3.1. Model Structure and Hyperparameter Configuration

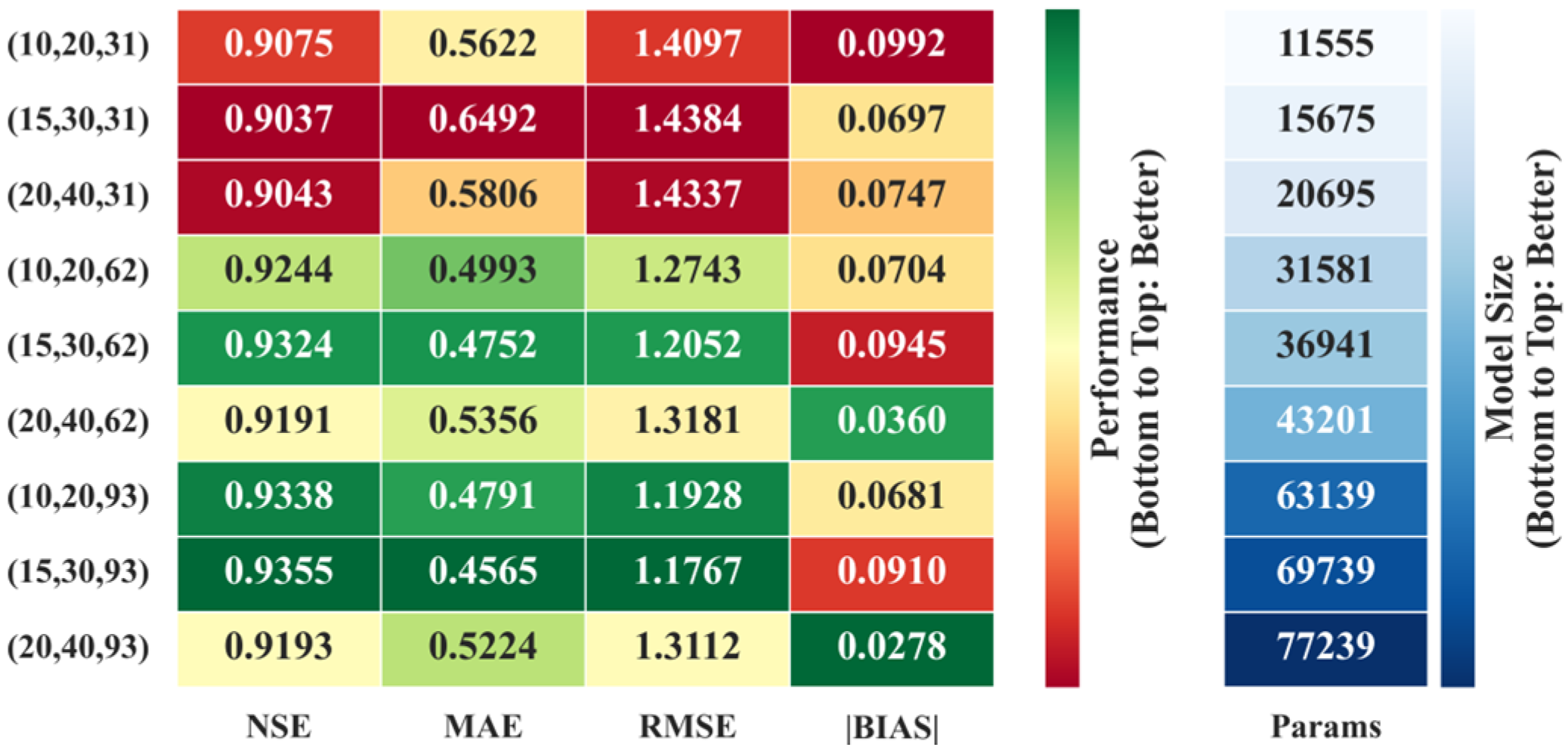

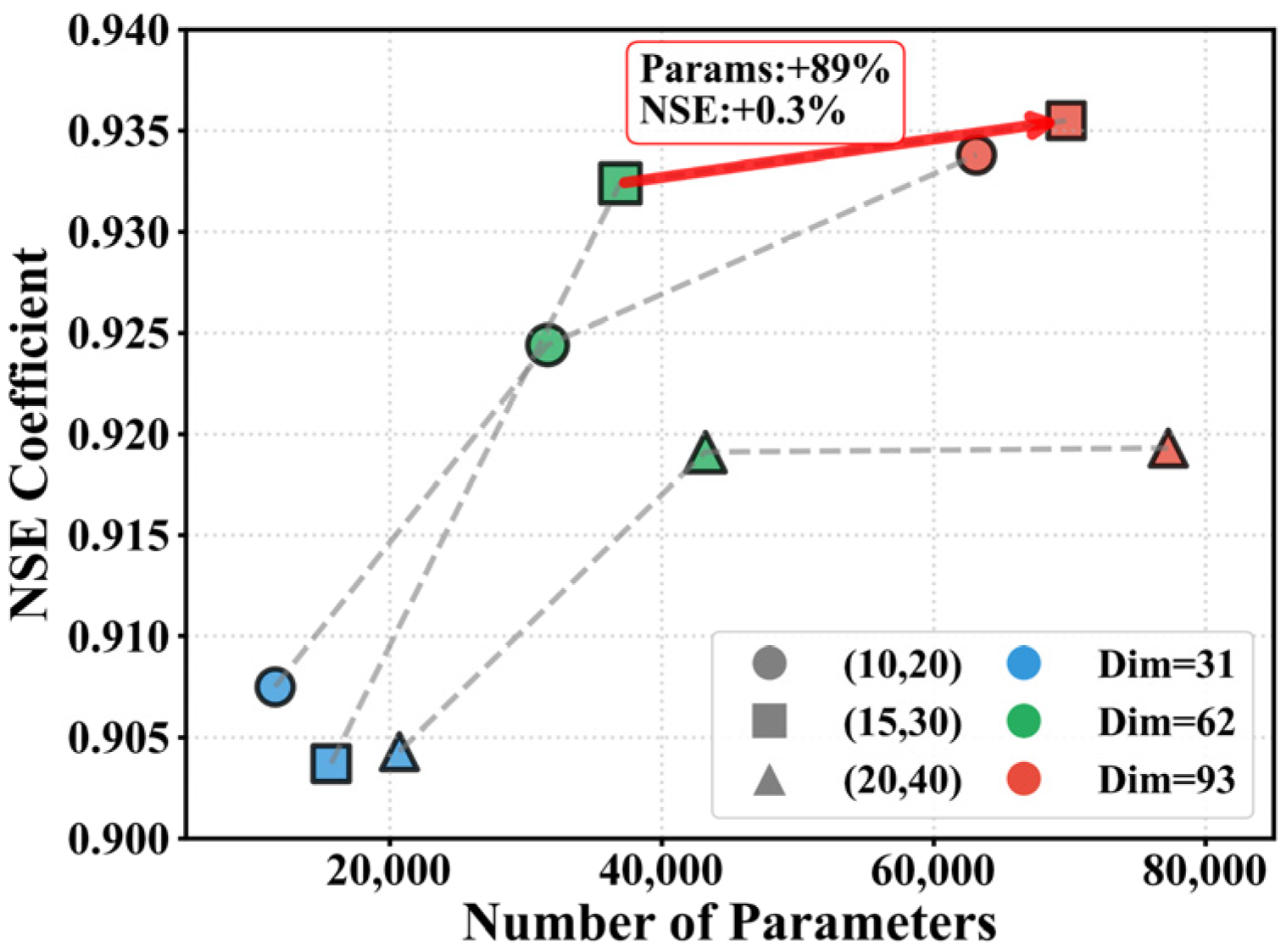

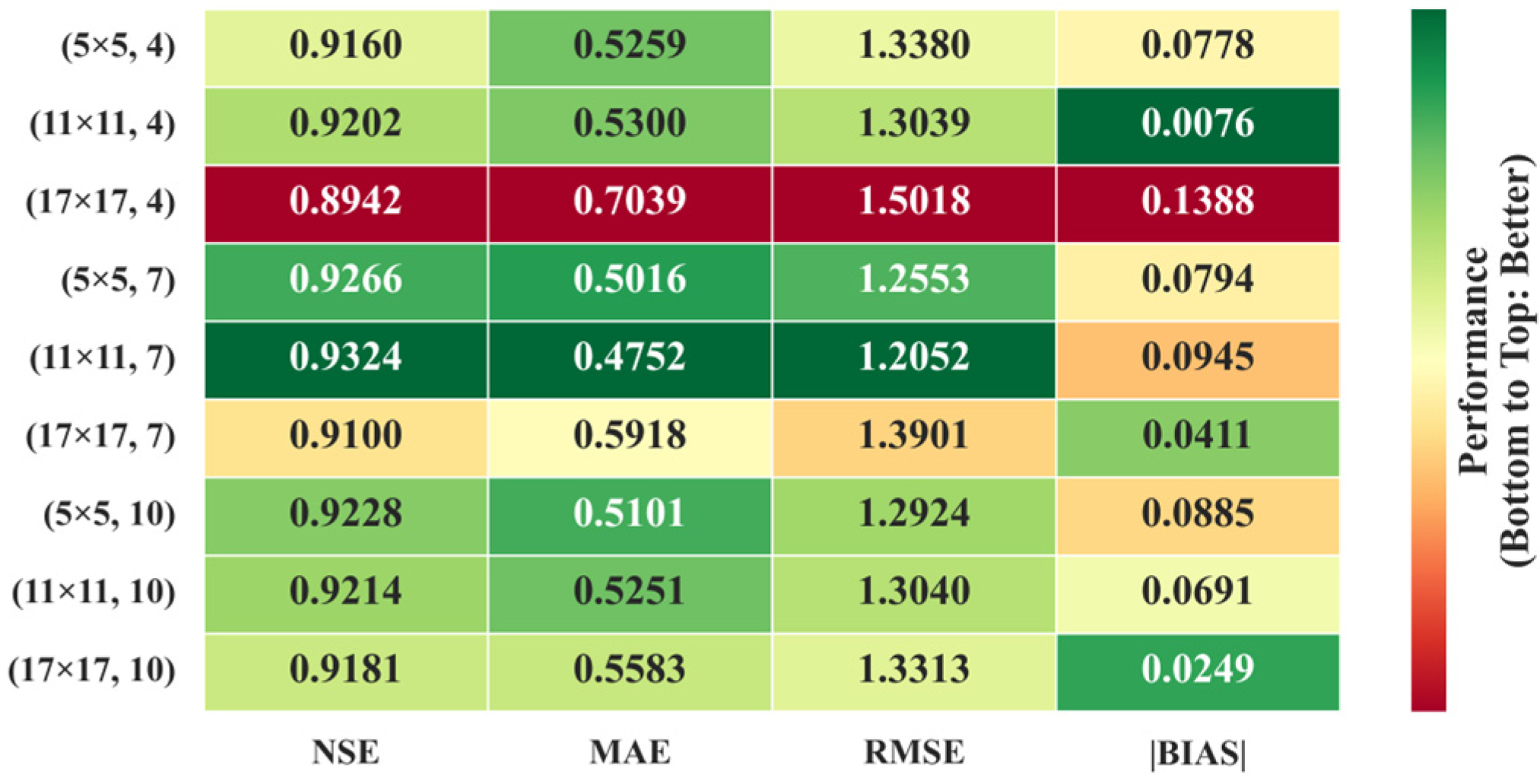

4.3.2. Spatial Perception Scale and Temporal Modeling Span

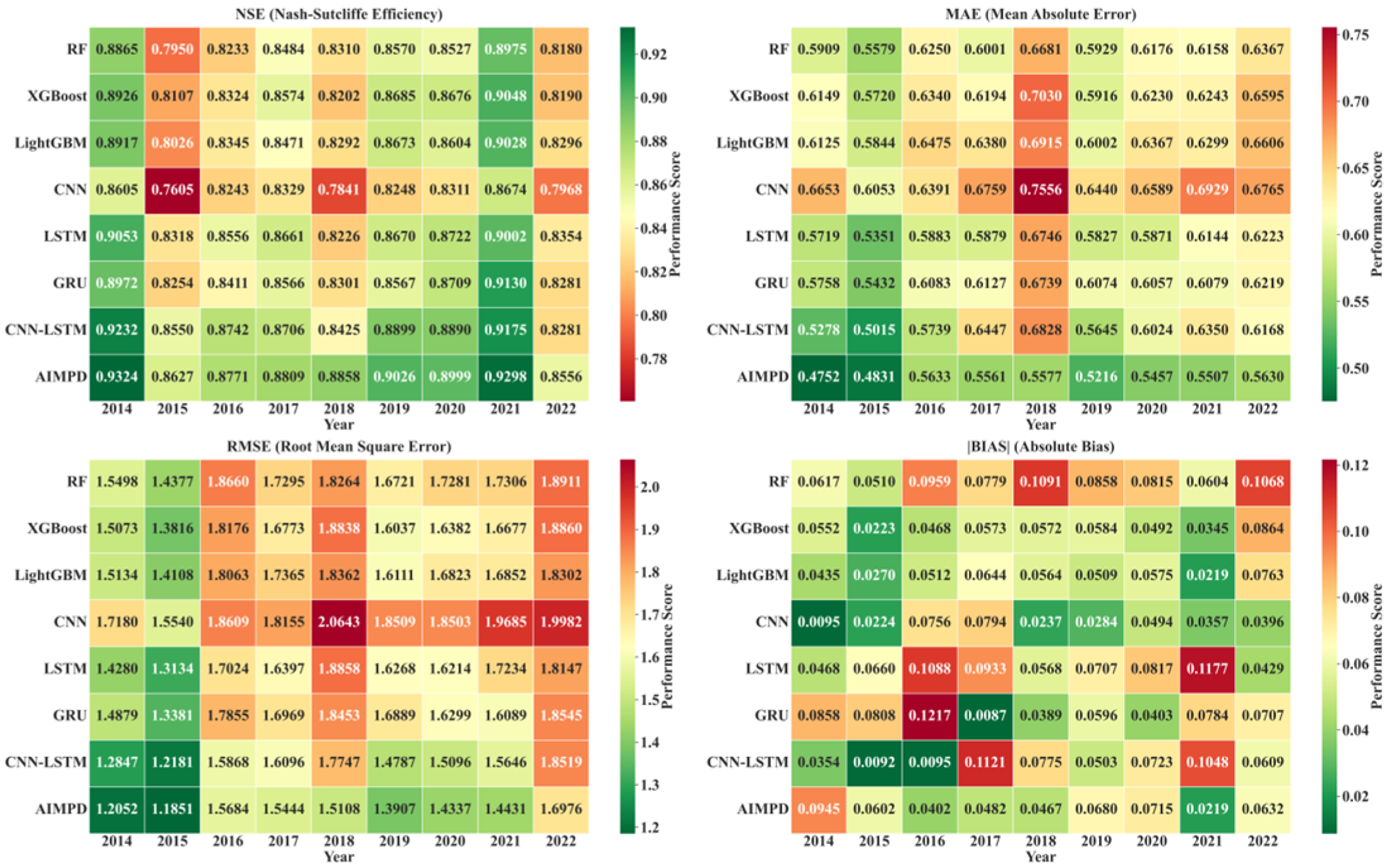

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Vogel, R.M.; Lall, U.; Cai, X.M.; Rajagopalan, B.; Weiskel, P.K.; Hooper, R.P.; Matalas, N.C. Hydrology: The interdisciplinary science of water. Water Resour. Res. 2015, 51, 4409–4430.

- Feldman, A.F.; Feng, X.; Felton, A.J.; Konings, A.G.; Knapp, A.K.; Biederman, J.A.; Poulter, B. Plant responses to changing rainfall frequency and intensity. Nat. Rev. Earth Environ. 2024, 5, 276–294.

- Strohmenger, L.; Ackerer, P.; Belfort, B.; Pierret, M.C. Local and seasonal climate change and its influence on the hydrological cycle in a mountainous forested catchment. J. Hydrol. 2022, 610, 127914.

- Antal, A.; Guerreiro, P.M.P.; Cheval, S. Comparison of spatial interpolation methods for estimating the precipitation distribution in Portugal. Theor. Appl. Climatol. 2021, 145, 1193–1206.

- Xiang, Y.X.; Zeng, C.; Zhang, F.; Wang, L. Effects of climate change on runoff in a representative Himalayan basin assessed through optimal integration of multi-source precipitation data. J. Hydrol.-Reg. Stud. 2024, 53, 101828.

- Baik, J.; Park, J.; Ryu, D.; Choi, M. Geospatial blending to improve spatial mapping of precipitation with high spatial resolution by merging satellite-based and ground-based data. Hydrol. Process. 2016, 30, 2789–2803.

- Lei, H.J.; Zhao, H.Y.; Ao, T.Q. Ground validation and error decomposition for six state-of-the-art satellite precipitation products over mainland China. Atmos. Res. 2022, 269, 106017.

- Moazami, S.; Na, W.Y.; Najafi, M.R.; de Souza, C. Spatiotemporal bias adjustment of IMERG satellite precipitation data across Canada. Adv. Water Resour. 2022, 168, 104300.

- Gebremichael, M.; Bitew, M.M.; Hirpa, F.A.; Tesfay, G.N. Accuracy of satellite rainfall estimates in the Blue Nile Basin: Lowland plain versus highland mountain. Water Resour. Res. 2014, 50, 8775–8790.

- Li, M.; Shao, Q.X. An improved statistical approach to merge satellite rainfall estimates and raingauge data. J. Hydrol. 2010, 385, 51–64.

- Prein, A.F.; Gobiet, A. Impacts of uncertainties in European gridded precipitation observations on regional climate analysis. Int. J. Climatol. 2017, 37, 305–327.

- Bao, J.W.; Feng, J.M.; Wang, Y.L. Dynamical downscaling simulation and future projection of precipitation over China. J. Geophys. Res.-Atmos. 2015, 120, 8227–8243.

- Politi, N.; Vlachogiannis, D.; Sfetsos, A.; Nastos, P.T. High-resolution dynamical downscaling of ERA-Interim temperature and precipitation using WRF model for Greece. Clim. Dyn. 2021, 57, 799–825.

- Gao, S.B.; Huang, D.L.; Du, N.Z.; Ren, C.Y.; Yu, H.Q. WRF ensemble dynamical downscaling of precipitation over China using different cumulus convective schemes. Atmos. Res. 2022, 271, 106116.

- Xue, Y.K.; Janjic, Z.; Dudhia, J.; Vasic, R.; De Sales, F. A review on regional dynamical downscaling in intraseasonal to seasonal simulation/prediction and major factors that affect downscaling ability. Atmos. Res. 2014, 147, 68–85.

- Adachi, S.A.; Tomita, H. Methodology of the Constraint Condition in Dynamical Downscaling for Regional Climate Evaluation: A Review. J. Geophys. Res.-Atmos. 2020, 125, e2019JD032166.

- Maraun, D.; Wetterhall, F.; Ireson, A.M.; Chandler, R.E.; Kendon, E.J.; Widmann, M.; Brienen, S.; Rust, H.W.; Sauter, T.; Themessl, M.; et al. Precipitation Downscaling under Climate Change: Recent Developments to Bridge the Gap between Dynamical Models and the End User. Rev. Geophys. 2010, 48, Rg3003.

- Rastogi, D.; Niu, H.R.; Passarella, L.; Mahajan, S.; Kao, S.C.; Vahmani, P.; Jones, A.D. Complementing Dynamical Downscaling With Super-Resolution Convolutional Neural Networks. Geophys. Res. Lett. 2025, 52, e2024GL111828.

- Vosper, E.; Watson, P.; Harris, L.; McRae, A.; Santos-Rodriguez, R.; Aitchison, L.; Mitchell, D. Deep Learning for Downscaling Tropical Cyclone Rainfall to Hazard-Relevant Spatial Scales. J. Geophys. Res.-Atmos. 2023, 128, e2022JD038163.

- Liu, X.L.; Coulibaly, P.; Evora, N. Comparison of data-driven methods for downscaling ensemble weather forecasts. Hydrol. Earth Syst. Sci. 2008, 12, 615–624.

- Vrac, M.; Drobinski, P.; Merlo, A.; Herrmann, M.; Lavaysse, C.; Li, L.; Somot, S. Dynamical and statistical downscaling of the French Mediterranean climate: Uncertainty assessment. Nat. Hazards Earth Syst. Sci. 2012, 12, 2769–2784.

- Sarhadi, A.; Burn, D.H.; Yang, G.; Ghodsi, A. Advances in projection of climate change impacts using supervised nonlinear dimensionality reduction techniques. Clim. Dyn. 2017, 48, 1329–1351.

- Lima, C.H.R.; Kwon, H.H.; Kim, H.J. Sparse Canonical Correlation Analysis Postprocessing Algorithms for GCM Daily Rainfall Forecasts. J. Hydrometeorol. 2022, 23, 1705–1718.

- Peleg, N.; Molnar, P.; Burlando, P.; Fatichi, S. Exploring stochastic climate uncertainty in space and time using a gridded hourly weather generator. J. Hydrol. 2019, 571, 627–641.

- Wang, F.; Tian, D.; Lowe, L.; Kalin, L.; Lehrter, J. Deep Learning for Daily Precipitation and Temperature Downscaling. Water Resour. Res. 2021, 57, e2020WR029308.

- Ghorbanpour, A.K.; Hessels, T.; Moghim, S.; Afshar, A. Comparison and assessment of spatial downscaling methods for enhancing the accuracy of satellite-based precipitation over Lake Urmia Basin. J. Hydrol. 2021, 596, 126055.

- Zhang, X.; Song, Y.; Nam, W.H.; Huang, T.L.; Gu, X.H.; Zeng, J.Y.; Huang, S.Z.; Chen, N.C.; Yan, Z.; Niyogi, D. Data fusion of satellite imagery and downscaling for generating highly fine-scale precipitation. J. Hydrol. 2024, 631, 130665.

- Zhu, H.L.; Liu, H.Z.; Zhou, Q.M.; Cui, A.H. Towards an Accurate and Reliable Downscaling Scheme for High-Spatial-Resolution Precipitation Data. Remote Sens. 2023, 15, 2640.

- Zhu, H.L.; Zhou, Q.M. Advancing Satellite-Derived Precipitation Downscaling in Data-Sparse Area Through Deep Transfer Learning. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4102513.

- Baño-Medina, J.; Manzanas, R.; Gutiérrez, J.M. Configuration and intercomparison of deep learning neural models for statistical downscaling. Geosci. Model Dev. 2020, 13, 2109–2124.

- Liu, Z.Y.; Yang, Q.L.; Shao, J.M.; Wang, G.Q.; Liu, H.Y.; Tang, X.P.; Xue, Y.H.; Bai, L.L. Improving daily precipitation estimation in the data scarce area by merging rain gauge and TRMM data with a transfer learning framework. J. Hydrol. 2022, 613, 128455.

- Gu, J.J.; Ye, Y.T.; Jiang, Y.Z.; Dong, J.P.; Cao, Y.; Huang, J.X.; Guan, H.Z. A downscaling-calibrating framework for generating gridded daily precipitation estimates with a high spatial resolution. J. Hydrol. 2023, 626, 130371.

- Gavahi, K.; Foroumandi, E.; Moradkhani, H. A deep learning-based framework for multi-source precipitation fusion. Remote Sens. Environ. 2023, 295, 113723.

- Asfaw, T.G.; Luo, J.J. Downscaling Seasonal Precipitation Forecasts over East Africa with Deep Convolutional Neural Networks. Adv. Atmos. Sci. 2024, 41, 449–464.

- Jiang, Y.Z.; Yang, K.; Qi, Y.C.; Zhou, X.; He, J.; Lu, H.; Li, X.; Chen, Y.Y.; Li, X.D.; Zhou, B.R.; et al. TPHiPr: A long-term (1979-2020) high-accuracy precipitation dataset (1/30°, daily) for the Third Pole region based on high-resolution atmospheric modeling and dense observations. Earth Syst. Sci. Data 2023, 15, 621–638.

- Wang, Z.X.; Mao, Y.D.; Geng, J.Z.; Huang, C.J.; Ogg, J.; Kemp, D.B.; Zhang, Z.; Pang, Z.B.; Zhang, R. Pliocene-Pleistocene evolution of the lower Yellow River in eastern North China: Constraints on the age of the Sanmen Gorge connection. Glob. Planet. Change 2022, 213, 103835.

- Zhou, H.W.; Ning, S.; Li, D.; Pan, X.S.; Li, Q.; Zhao, M.; Tang, X. Assessing the Applicability of Three Precipitation Products, IMERG, GSMaP, and ERA5, in China over the Last Two Decades. Remote Sens. 2023, 15, 4154.

- Wu, J.; Gao, X.J.; Giorgi, F.; Chen, D.L. Changes of effective temperature and cold/hot days in late decades over China based on a high resolution gridded observation dataset. Int. J. Climatol. 2017, 37, 788–800.

- Xu, Y.; Gao, X.J.; Shen, Y.; Xu, C.H.; Shi, Y.; Giorgi, F. A Daily Temperature Dataset over China and Its Application in Validating a RCM Simulation. Adv. Atmos. Sci. 2009, 26, 763–772.

- Hastings, D.A.; Dunbar, P.K.; Elphingstone, G.M.; Bootz, M.; Murakami, H.; Maruyama, H.; Masaharu, H.; Holland, P.; Payne, J.; Bryant, N.A. The Global Land One-Kilometer Base Elevation (GLOBE) Digital Elevation Model, Version 1.0; National Oceanic and Atmospheric Administration: Silver Spring, MD, USA; National Geophysical Data Center: Boulder, CO, USA, 1999.

- Jing, Y.H.; Lin, L.P.; Li, X.H.; Li, T.W.; Shen, H.F. An attention mechanism based convolutional network for satellite precipitation downscaling over China. J. Hydrol. 2022, 613, 128388.

- Zhang, H.X.; Huo, S.L.; Feng, L.; Ma, C.Z.; Li, W.P.; Liu, Y.; Wu, F.C. Geographic Characteristics and Meteorological Factors Dominate the Variation of Chlorophyll-a in Lakes and Reservoirs With Higher TP Concentrations. Water Resour. Res. 2024, 60, e2023WR036587.

- Zhang, M.; Wu, B.F.; Zeng, H.W.; He, G.J.; Liu, C.; Tao, S.Q.; Zhang, Q.; Nabil, M.; Tian, F.Y.; Bofana, J.; et al. GCI30: A global dataset of 30 m cropping intensity using multisource remote sensing imagery. Earth Syst. Sci. Data 2021, 13, 4799–4817.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Yuan, X.G.; Liu, S.R.; Feng, W.; Dauphin, G. Feature Importance Ranking of Random Forest-Based End-to-End Learning Algorithm. Remote Sens. 2023, 15, 5203.

- Liu, F.; Deng, Y. Determine the Number of Unknown Targets in Open World Based on Elbow Method. IEEE Trans. Fuzzy Syst. 2021, 29, 986–995.

- Pearson, K. Notes on the history of correlation. Biometrika 1920, 13, 25–45.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B Stat. Methodol. 1996, 58, 267–288.

- Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Iiduka, H. Appropriate Learning Rates of Adaptive Learning Rate Optimization Algorithms for Training Deep Neural Networks. IEEE Trans. Cybern. 2022, 52, 13250–13261.

- Wright, S.J. Coordinate descent algorithms. Math. Program. 2015, 151, 3–34.

- Nesterov, Y. Efficiency of Coordinate Descent Methods on Huge-Scale Optimization Problems. SIAM J. Optimiz. 2012, 22, 341–362.

- Keys, R. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160.

- Chen, T.Q.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In KDD ’16: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 785–794.

- Ke, G.L.; Meng, Q.; Finley, T.; Wang, T.F.; Chen, W.; Ma, W.D.; Ye, Q.W.; Liu, T.Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to forget: Continual prediction with LSTM. Neural Comput. 2000, 12, 2451–2471.

- Shi, X.J.; Chen, Z.R.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. In Advances in Neural Information Processing Systems 28 (NIPS 2015), Proceedings of the 29th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7 December 2015; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28.

- Wu, H.C.; Yang, Q.L.; Liu, J.M.; Wang, G.Q. A spatiotemporal deep fusion model for merging satellite and gauge precipitation in China. J. Hydrol. 2020, 584, 124664.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, C.; Ma, L.; Huang, X.; Wang, C.; Liu, X.; Sun, B.; Zhang, Q. An Artificial Intelligence-Driven Precipitation Downscaling Method Using Spatiotemporally Coupled Multi-Source Data. Atmosphere 2025, 16, 1226. https://doi.org/10.3390/atmos16111226

Li C, Ma L, Huang X, Wang C, Liu X, Sun B, Zhang Q. An Artificial Intelligence-Driven Precipitation Downscaling Method Using Spatiotemporally Coupled Multi-Source Data. Atmosphere. 2025; 16(11):1226. https://doi.org/10.3390/atmos16111226

Chicago/Turabian Style: Li, Chao, Long Ma, Xing Huang, Chenyue Wang, Xinyuan Liu, Bolin Sun, and Qiang Zhang. 2025. "An Artificial Intelligence-Driven Precipitation Downscaling Method Using Spatiotemporally Coupled Multi-Source Data" Atmosphere 16, no. 11: 1226. https://doi.org/10.3390/atmos16111226

APA Style: Li, C., Ma, L., Huang, X., Wang, C., Liu, X., Sun, B., & Zhang, Q. (2025). An Artificial Intelligence-Driven Precipitation Downscaling Method Using Spatiotemporally Coupled Multi-Source Data. Atmosphere, 16(11), 1226. https://doi.org/10.3390/atmos16111226